#what is the difference between machine learning and artificial intelligence

Text

The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI--and it's not their fault. Companies love to slap "AI" on anything a computer program does that they believe can pass for "intelligent". This muddies the waters when people want to talk about AI: the exact same word covers a wide umbrella, and they don't know how to qualify the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural, and rigidly defined. I could "program" traffic light behavior that essentially goes { if(light === green) { go(); } else { stop();} }. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things will still need to be spelled out.)
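To make the "rigidly defined" part concrete, here is that same traffic rule written out as a tiny runnable sketch in Python. The function name `decide` and the `pedestrian_in_road` flag are made up for illustration. The point is only that every condition the program knows about is one a human typed in by hand.

```python
def decide(light: str, pedestrian_in_road: bool = False) -> str:
    """Hand-written rules: the programmer spells out every check."""
    # Hypothetical extra check; a real program would need many more of these.
    if pedestrian_in_road:
        return "stop"
    if light == "green":
        return "go"
    # Anything that is not explicitly "green" is treated as stop.
    return "stop"


print(decide("green"))
print(decide("green", pedestrian_in_road=True))
print(decide("red"))
```

If the behavior is wrong, you can read these few lines and point at the exact rule responsible.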

An AI traffic light behavior is generated by machine-learning, which simplistically is a huge cranking machine of linear algebra which you feed training data into and it "learns" from. By "learning" I mean it's developing a complex and opaque model of parameters to fit the training data (but not over-fit). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists will turn this crank over and over adjusting it to create something which, in very opaque terms, has developed a model that will guess the right behavioral output for any future scenario.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.
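For contrast, here is a very rough sketch of the "learned" version. It is about the smallest thing that counts as machine learning (a single perceptron, not what a real self-driving system would use), and the four training examples are invented. Nobody writes "if green, go" anywhere. The program only nudges three numbers until its guesses match the examples.

```python
# Each example: ([light_is_green, kid_in_road], correct_answer) where 1 = go, 0 = stop.
# This toy training data is invented for illustration.
training_data = [
    ([1, 0], 1),  # green, road clear  -> go
    ([0, 0], 0),  # red, road clear    -> stop
    ([1, 1], 0),  # green, kid in road -> stop
    ([0, 1], 0),  # red, kid in road   -> stop
]

weights = [0.0, 0.0]
bias = 0.0


def predict(features):
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# "Turning the crank": whenever a guess is wrong, nudge the numbers toward the right answer.
for _ in range(50):
    for features, answer in training_data:
        error = answer - predict(features)
        for i, x in enumerate(features):
            weights[i] += error * x
        bias += error

print("learned parameters:", weights, bias)
print("green, clear ->", predict([1, 0]))
print("green, kid   ->", predict([1, 1]))
```

With two inputs you can still squint at the learned numbers and see why they work. With millions of parameters, that stops being possible, which is the opacity problem.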

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But like if that plinko machine contained millions of pegs and none of them necessarily correlated to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is, for traffic light specifically as that is intentionally very simplistic. But a model trained to recognize written numbers for example likely contains no parameters at all that you could map to ideas a human has like "look for a rigid line in the number". The parameters may be all, to humans, meaningless.)

So, that's basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion"
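As a sketch of how un-magical that can be, here is a minimal frame-differencing check. Frames are plain grids of grayscale values, and the two thresholds are arbitrary numbers picked for the example. There is no training and no model, just a comparison and a count.

```python
def motion_detected(prev_frame, curr_frame, pixel_threshold=30, min_changed=3):
    """Flag motion if enough pixels changed brightness by more than the threshold."""
    changed = sum(
        1
        for prev_row, curr_row in zip(prev_frame, curr_frame)
        for p, c in zip(prev_row, curr_row)
        if abs(p - c) > pixel_threshold
    )
    return changed >= min_changed


# Two made-up 4x4 grayscale frames: one static, one with a bright blob appearing.
still = [[10, 10, 10, 10] for _ in range(4)]
moved = [row[:] for row in still]
moved[1][1] = moved[1][2] = moved[2][1] = 200

print(motion_detected(still, still))
print(motion_detected(still, moved))
```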

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI technology that looks at cells and determines whether they're cancer or not, that is using this technology. OCR (Optical Character Recognition) is the technology that can take an image of hand-written text and transcribe it. Again, it's using linear algebra, so yes it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes fly in the air" technology but their impact is very different.

3. The AI we ARE talking about. "Chat-gpt" type of Generative AI which uses LLMs ("Large Language Models")

If there was one word I wish people would know in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that Chat-GPT is fueled by. These models are trained on so much human language that they can take an input of conversational language and create a predictive output that is human coherent. (Image generators like Midjourney and Stable Diffusion are not LLMs themselves. Stable Diffusion, for example, pairs a text encoder trained on captioned images with a diffusion model that produces the picture. They have risen hand-in-hand with powerful LLMs and belong to the same generative AI wave, but the underlying model is a different kind.)

This technology isn't exactly brand new (predictive text has been using smaller language models for years, but more like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about). But the scale and power of LLM-based AI technology is what is new with Chat-GPT.

This is the generative AI, or to be even more specific, the large language model generative AI.

(Data scientists, feel free to add on or correct anything.)


Text

Growing ever more frustrated with the use of the term "AI" and how the latest marketing trend has ensured its already rather vague and highly contextual meaning has now evaporated into complete nonsense. Much like how the only real commonality between animals colloquially referred to as "Fish" is "probably lives in the water", the only real commonality between things currently colloquially referred to as "AI" is "probably happens on a computer"

For example, the "AI" you see in most games wot controls enemies and other non-player actors typically consists primarily of timers, conditionals, and RNG - and is typically designed with the goal of trying to make the game fun and/or interesting rather than to be anything resembling actually intelligent. By contrast, the thing that the tech sector is currently trying to sell to us as "AI" relates to a completely different field called Machine Learning - specifically the sub-fields of Deep Learning and Neural Networks, specifically specifically the sub-sub-field of Large Language Models, which are an attempt at modelling human languages through large statistical models built on artificial neural networks by way of deep machine learning.

the word "statistical" is load bearing.

Say you want to teach a computer to recognize images of cats. This is actually a pretty difficult thing to do because computers typically operate on fixed patterns whereas visually identifying something as a cat is much more about the loose relationship between various visual identifiers - many of which can be entirely optional: a cat has a tail except when it doesn't either because the tail isn't visible or because it just doesn't have one, a cat has four legs, two eyes and two ears except for when it doesn't, it has five digits per paw except for when it doesn't, it has whiskers except for when it doesn't, all of these can look very different depending on the camera angle and the individual and the situation - and all of these are also true of dogs, despite dogs being a very different thing from a cat.

So, what do you do? Well, this is where machine learning comes into the picture - see, machine learning is all about using an initial "training" data set to build a statistical model that can then be used to analyse and identify new data and/or extrapolate from incomplete or missing data. So in this case, we take a machine learning system and feed it a whole bunch of images - some of which are of cats and thus we mark as "CAT" and some of which are not of cats and we mark as "NOT CAT", and what we get out of that is a statistical model that, when given a picture, will assign a percentage for how well it matches its internal statistical correlations for the categories of CAT and NOT CAT.
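Here's a heavily shrunk-down sketch of that idea, assuming each picture has already been boiled down to two made-up numbers (a "whisker score" and an "ear pointiness" score, both invented for this example). Real systems work on raw pixels with far bigger models, but the shape is the same: labelled examples go in, and a model that outputs a percentage comes out. The technique used here is plain logistic regression.

```python
import math

# (whisker_score, ear_pointiness) -> label, where 1 = CAT and 0 = NOT CAT.
# Both the features and the numbers are invented for illustration.
training_set = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.7), 1),
    ((0.2, 0.1), 0), ((0.1, 0.3), 0), ((0.3, 0.2), 0),
]

weights = [0.0, 0.0]
bias = 0.0


def cat_probability(features):
    """Return the model's estimated probability (0..1) that the input is a CAT."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Training: repeatedly nudge the parameters to shrink the gap between
# the predicted probability and the label we marked by hand.
learning_rate = 0.5
for _ in range(2000):
    for features, label in training_set:
        error = label - cat_probability(features)
        for i, x in enumerate(features):
            weights[i] += learning_rate * error * x
        bias += learning_rate * error

for name, picture in [("whiskery, pointy ears", (0.85, 0.8)), ("neither", (0.15, 0.2))]:
    print(f"{name}: {cat_probability(picture):.0%} CAT")
```

Note that the output is never "this is a cat". It is only ever "this matches the CAT examples about this well".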

This is, in extremely simplified terms, how pretty much all machine learning works, including whatever latest and greatest GPT model is being paraded about - sure, the training methods are much more complicated, the statistical number crunching even more complicated still, and the sheer amount of training data being fed to them is incomprehensibly large, but at the end of the day they're still models of statistical probability, and the way they generate their output is pretty much a matter of what appears to be the most statistically likely outcome given prior input data.

This is also why they "hallucinate" - the question of what number you get if you add 512 to 256 or what author wrote the famous novel Lord of the Rings, or how many academy awards have been won by famous movie Goncharov all have specific answers, but LLMs like ChatGPT and other machine learning systems are probabilistic systems and thus can only give probabilistic answers - they neither know nor generally attempt to calculate what the result of 512 + 256 is, nor go find an actual copy of Lord of the Rings and look what author it says on the cover, they just generate the most statistically likely response given their massive internal models. It is also why machine learning systems tend to be highly biased - their output is entirely based on their training data, so they are inevitably biased not only by their training data but also the selection of it - if the majority of English literature considered worthwhile has been written primarily by old white guys then the resulting model is very likely to also primarily align with the opinion of a bunch of old white guys unless specific care and effort is put into trying to prevent it.
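A toy version makes the "never actually calculates anything" point easy to see. The sketch below is a bigram model: it only counts which word tends to follow which in a tiny made-up corpus. Real LLMs use neural networks and look at far more context than one word, so treat this as an illustration of the principle, not of how GPT is built.

```python
from collections import Counter, defaultdict

# A tiny invented "training corpus".
corpus = (
    "two plus two is four . "
    "two plus two is four . "
    "two plus three is five . "
    "five plus five is ten ."
).split()

# Count how often each word follows each other word.
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def complete(prompt):
    """Return the statistically most common word after the prompt's last word."""
    last_word = prompt.split()[-1]
    return follow_counts[last_word].most_common(1)[0][0]


print("five plus five is", complete("five plus five is"))
```

The model confidently answers "four", because "four" is what most often came after "is" in its training data. No arithmetic happened at any point, and nothing in the model can tell a right answer from a merely likely-sounding one.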

It is this probabilistic nature that makes them very good at things like playing chess or potentially noticing early signs of cancer in x-rays or MRI scans or, indeed, mimicking human language - but it also means the answers are always purely probabilistic. Meanwhile as the size and scope of their training data and thus also their data models grow, so does the need for computational power - relatively simple models such as our hypothetical cat identifier should be fine with fairly modest hardware, while the huge LLM chatbots like ChatGPT and its ilk demand warehouse-sized halls full of specialized hardware able to run specific types of matrix multiplications at rapid speed and in massive parallel billions of times per second and requiring obscene amounts of electrical power to do so in order to maintain low response times under load.


Text

Clarification: Generative AI does not equal all AI

💭 "Artificial Intelligence"

AI is machine learning, deep learning, natural language processing, and more that I'm not smart enough to know. It can be extremely useful in many different fields and technologies. One of my information & emergency management courses described the usage of AI as being a "human centaur". Part human part machine; meaning AI can assist in all the things we already do and supplement our work by doing what we can't.

💭 Examples of AI Benefits

AI can help advance things in all sorts of fields, here are some examples:

Emergency Healthcare & Disaster Risk

Disaster Response

Crisis Resilience Management

Medical Imaging Technology

Commercial Flying

Air Traffic Control

Railroad Transportation

Ship Transportation

Geology

Water Conservation

Can AI technology be used maliciously? Yeah. That's a matter of developing ethics and working to teach people how to see red flags, just like people see red flags in already existing technology.

AI isn't evil. It's not the insane sentient shit that wants to kill us in movies. And it is not synonymous with generative AI.

💭 Generative AI

Generative AI does use these technologies, but it uses them unethically. It scrapes data from all art, all writing, all videos, all games, all audio, anything its developers give it access to, WITHOUT PERMISSION, which is basically free rein over the internet. Sometimes there are certain restrictions: generative AI engineers, who CAN choose to exclude things, may exclude extremist sites or explicit materials, usually using blacklists.

AI can create images of real individuals without permission, including revenge porn. It can create music using someone's voice without their permission, which then gets sold. It can spread disinformation faster than it can be fact checked, and create false evidence that our court systems are not ready to handle.

AI bros eat it up without question: "it makes art more accessible" , "it'll make entertainment production cheaper" , "it's the future, evolve!!!"

💭 AI is not similar to human thinking

When faced with the argument "a human didn't make it" the comeback is "AI learns based on already existing information, which is exactly what humans do when producing art! We ALSO learn from others and see thousands of other artworks"

Let's make something clear: generative AI isn't making anything original. It is true that human beings process all the information we come across. We observe that information, learn from it, process it, then ADD our own understanding of the world, our unique lived experiences. Through that information collection, understanding, and our own personalities we then create new original things.

💭 Generative AI doesn't create things: it mimics things

Take an analogy:

Consider an infant unable to talk but old enough to engage with their caregivers, somewhere between 6 and 8 months old.

Mom: a bird flaps its wings to fly!!! *makes a flapping motion with arm and hands*

Infant: *giggles and makes a flapping motion with arms and hands*

The infant does not understand what a bird is, what wings are, or the concept of flight. But she still fully mimicked the flapping of the hands and arms because her mother did it first to show her. She doesn't cognitively understand what on earth any of it means, but she was still able to do it.

In the same way, generative AI is the infant that copies what humans have done: mimicry, without understanding anything about the works it has stolen.

It's not original, it doesn't have a world view, it doesn't understand the emotions that go into the different works it is stealing, its creations have no meaning, and it doesn't have any motivation to create things; it only does so because it was told to.

Why read a book someone couldn't even be bothered to write?

Related videos I find worth a watch

ChatGPT's Huge Problem by Kyle Hill (we don't understand how AI works)

Criticism of Shadiversity's "AI Love Letter" by DeviantRahll

AI Is Ruining the Internet by Drew Gooden

AI vs The Law by Legal Eagle (AI & US Copyright)

AI Voices by Tyler Chou (Short, flash warning)

Dead Internet Theory by Kyle Hill

-Dyslexia, not audio proof read-

#ai#anti ai#generative ai#art#writing#ai writing#wrote 95% of this prior to brain stopping sky rocketing#chatgpt#machine learning#youtube#technology#artificial intelligence#people complain about us being#luddite#but nah i dont find mimicking to be real creations#ai isnt the problem#ai is going to develop period#its going to be used period#doesn't mean we need to normalize and accept generative ai


Text

Detroit: Become Human – A Musical Masterpiece

Music has a way of getting into a person’s mind and speaking to them directly, expressing things words or pictures could never. It is often used in storytelling to immerse the audience further into the piece and evoke an emotional response within a person. In 2018’s Detroit: Become Human, music is used incredibly well to engage the audience in its vividly fresh environments and story. Detroit: Become Human is a narrative-based storytelling game, which centers around a near-future world in which artificially intelligent beings, androids, are quickly on the rise in society. Incidents of what people have started to call deviancy, androids breaking free from their programming and thinking for themselves, are quickly becoming a problem. The game follows three protagonists: Connor, the police force deviant hunter; Kara, the household assistant; and Markus, the revolution leader. The game uses three different composers for its music, one for each character to create contrast between them. Three different composers, coupled with the fact that the score was created more like a film score than a traditional video game score, and the consistency of music throughout the game, help to create the dense musical environment of Detroit: Become Human.

In the game there are three different characters living completely different lives and embarking on highly different adventures. Each story has a vastly different tone to it. The soundtrack complements this in a logical way, as it has three different composers that bring to life the story of Detroit. Philip Sheppard, who composed Kara’s soundtrack, was tasked with creating a familial sounding score, one that highlights Kara’s journey of becoming Alice’s protector. Of the three characters, I believe that Kara has the most straightforward journey of them all and her soundtrack reflects that. Her primary function before deviating was personal caretaking, and throughout her journey with Alice, she returns to that primal instinct time and time again. In a short documentary, Sheppard says that “I had a log fire in the room I study in…and it became the basis for Kara’s theme…Now over the top of that I found a little theme that just seemed to fit over top which is taking Kara’s name Kara, Kara – and doing like, a two-syllable motif.” (Playstation, Detroit: Become Human – Behind The Music) Sheppard goes on to say later in the conversation that one of those two base pieces is present in every track of Kara’s. Keeping her musical pieces relatively the same while still ever-growing reflects Kara’s story deeply, a household care assistant turned mother. While fighting her way through Detroit to make it to Canada, she is learning how to deal with her newly discovered sense of self and feelings. Kara’s soundtrack features various string instruments and piano quite heavily. This delicate sounding score highlights and contrasts the other two scores beautifully. Had Sheppard been in charge of composing each of the three soundtracks, the game would have had a very different musical tone. That is why bringing in both John Paesano and Nima Fakhrara to compose the other two scores was vital.
Take for example Connor’s score, which was done with synthesizers and custom-made instruments. This electronic sounding score shows the inner turmoil Connor faces throughout the game - am I man or machine? Fakhrara’s complex knowledge of film scores and instrument making provides the perfect set of skills to compose a soundtrack like Connor’s. Philip Sheppard is a cellist and brings his unique sounds to Kara’s soundtrack. Each composer uses their unique skillset to come up with three beautifully crafted scores, all sounding unique but combined in a neatly presented package. Hearing the contrast from one chapter to another is what makes you appreciate each one individually.

Abundant, film score-like soundtracks are one of the reasons why modern-day video games have started to look more and more cinematic. Many choice based, narrative driven games like Detroit have the feeling of playing out a movie. Various YouTube channels, including Andy Gilleand, have edited gameplay of the game to remove button prompts and turn the game into a feature length film (Gilleand, Detroit: Become Human (The Movie)). The lead audio producer, Aurelien Baguerre, stated in the musical documentary about the game that the team wanted to make the score more similar to a film score than a traditional video game score (Playstation, Detroit: Become Human – Behind The Music). You can clearly see this in the way the score is formatted. Each section has a particular track that plays; the music will loop within the chapter, but the same track is never heard twice, unlike more traditional game scores where one or more themes will be used in certain areas. Throughout the game, various cutscenes will play, moments where the player is not in control of the action happening on screen. During these scenes, oftentimes particular music is playing over them to enhance the scene playing out. For example, the chapter “Jericho” finds Markus looking for the safe haven Jericho and features a cutscene in which Markus finds himself jumping off a construction bridge into a vat of water below. As he falls, the music swells in an orchestral chorus of voices. In the background of all of this, we see a delicately rendered sky, the sun slowly setting in it. The music highlights the scene in a way that shows the player the importance of this moment. To me, this cutscene symbolizes angel-like figures coming to guide him to the safe haven Jericho. Without the music, this cinematic moment would have fallen flat.

Back before technology was advanced enough, most films produced were silent. Despite the lack of talking, most silent films were accompanied by some sort of music. Words and dialogue were quite literally swapped for music, and what could not be said was instead represented through music. Now that technology has further advanced, we have ways of recording speech for movies, and now video games. However, that isn’t to say that music isn’t still used as a way to supplement the words being spoken on screen. Throughout Detroit, if you stop and stand for a second anywhere in the game, you’ll notice music is always playing. This is true throughout both cutscenes and playable sections. Whether it is a good thing or not, humans always like to be stimulated. Making Detroit both visually and auditorily stimulating works in its favour to create the atmosphere needed. The place I noticed it the most was in many of Connor’s chapters. Searching around an apartment to find clues to an active murder case? Tense, deep music, making you feel constantly on edge and running out of time. Interrogating a deviant murderer? Slow, methodical music with a tone that sends shivers up your spine. Of course, while all of this is going on, the game is showing you similar things to evoke similar reactions. Although the visuals alone could clue the player in on how they are supposed to feel, adding music behind all the scenes gives a deeper understanding of how a character might be feeling at any given time. Humans often rely on multiple cues to alert them as to how they should feel, consciously or not. Consistently having music playing gives people a trustworthy second source to base their emotions towards the game on.

Detroit: Become Human has a soundtrack that creeps its way into your mind. By employing several different techniques, the game creates a vast auditory landscape. Hiring separate composers for each character, staging its score in a cinematic way, and never leaving players in a quiet area made an already emotional game into a masterpiece. The score adds an intelligent depth to its game, which sets it apart from many titles in the genre. The music of Detroit will not soon be forgotten.

Works Cited

PlayStation. “Detroit: Become Human – Behind The Music | PS4.” YouTube, 12 Apr. 2018, www.youtube.com/watch?v=KUIUFUC5dsw.

Detroit: Become Human. Directed by David Cage, Sony Interactive Entertainment/Quantic Dream, 2018. Sony PlayStation 4 game.

Fakhrara, Nima, et al. Detroit: Become Human (Original Soundtrack). Sony Interactive Entertainment, 25 May 2018.

#creative writing#writing#video games#essay#essay writing#detroit become human#music#dbh#dbh connor#dbh markus#dbh kara#video game music#video game writing


Video

AI Basics for Dummies- Beginners series on AI- Learn, explore, and get empowered

An explanation of what Artificial Intelligence (AI) is, for beginners. Welcome to our series on Artificial Intelligence! Here's a breakdown of what you'll learn in each segment:

What is AI? – Discover how AI powers machines to perform human-like tasks such as decision-making and language understanding.

What is Machine Learning? – Learn how machines are trained to identify patterns in data and improve over time without explicit programming.

What is Deep Learning? – Explore advanced machine learning using neural networks to recognize complex patterns in data.

What is a Neural Network in Deep Learning? – Dive into how neural networks mimic the human brain to process information and solve problems.

Discriminative vs. Generative Models – Understand the difference between models that classify data and those that generate new data.

Introduction to Large Language Models in Generative AI – Discover how AI models like GPT generate human-like text, power chatbots, and transform industries.

Applications and Future of AI – Explore real-world applications of AI and how these technologies are shaping the future.

Next video in this series: Generative AI for Dummies- AI for Beginners series. Learn, explore, and get empowered

Thanks for watching! www.youtube.com/@UC6ryzJZpEoRb_96EtKHA-Cw


Text

Been a while, crocodiles. Let's talk about cad.

or, y'know...

Yep, we're doing a whistle-stop tour of AI in medical diagnosis!

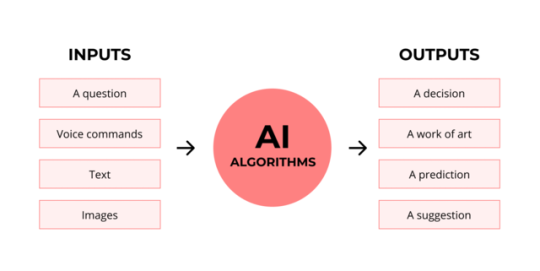

Much like programming, AI can be conceived of, in very simple terms, as...

a way of moving from inputs to a desired output.

See this very funky little diagram from skillcrush.com.

The input is what you put in. The output is what you get out.

This output will vary depending on the type of algorithm and the training that algorithm has undergone – you can put the same input into two different algorithms and get two entirely different sorts of answer.

Generative AI produces ‘new’ content, based on what it has learned from various inputs. We're talking AI Art, and Large Language Models like ChatGPT. This sort of AI is very useful in healthcare settings too, but that's a whole different post!

Analytical AI takes an input, such as a chest radiograph, subjects this input to a series of analyses, and deduces answers to specific questions about this input. For instance: is this chest radiograph normal or abnormal? And if abnormal, what is a likely pathology?

We'll be focusing on Analytical AI in this little lesson!

Other forms of Analytical AI that you might be familiar with are recommendation algorithms, which suggest items for you to buy based on your online activities, and facial recognition. In facial recognition, the input is an image of your face, and the output is the ability to tie that face to your identity. We’re not creating new content – we’re classifying and analysing the input we’ve been fed.

Many of these functions are obviously, um, problematique. But Computer-Aided Diagnosis is, potentially, a way to use this tool for good!

Right?

....Right?

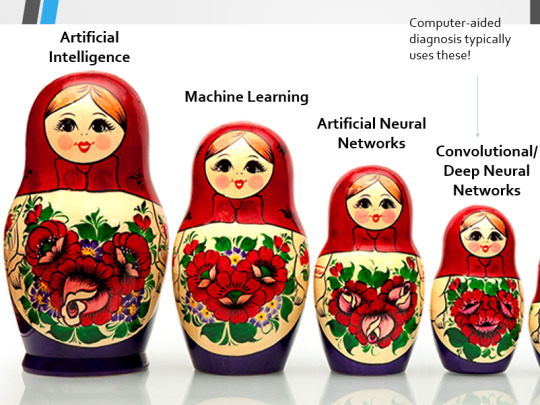

Let's dig a bit deeper! AI is a massive umbrella term that contains many smaller umbrella terms, nested together like Russian dolls. So, we can use this model to envision how these different fields fit inside one another.

AI is the term for anything to do with creating and managing machines that perform tasks which would otherwise require human intelligence. This is what differentiates AI from regular computer programming.

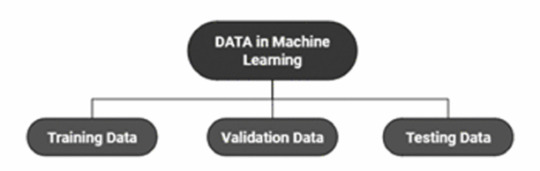

Machine Learning is the development of statistical algorithms which are trained on data – but which can then extrapolate this training and generalise it to previously unseen data, typically for analytical purposes. The thing I want you to pay attention to here is the date of this reference. It’s very easy to think of AI as being a ‘new’ thing, but it has been around since the Fifties, and has been talked about for much longer. The massive boom in popularity that we’re seeing today is built on the backs of decades upon decades of research.

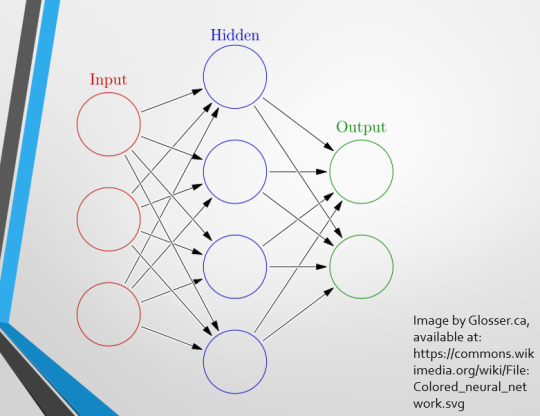

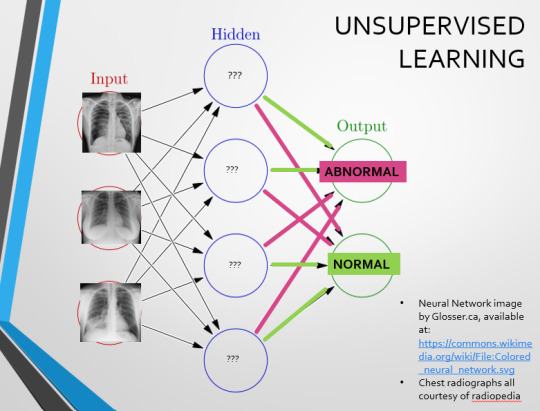

Artificial Neural Networks are loosely inspired by the structure of the human brain, where inputs are fed through one or more layers of ‘nodes’ which modify the original data until a desired output is achieved. More on this later!

Deep neural networks have two or more hidden layers of nodes, increasing the complexity of what they can derive from an initial input. Convolutional neural networks are often also Deep. To be ‘convolutional’, a neural network must include layers where each node only looks at a small neighbourhood of nearby inputs (a patch of nearby pixels, say), and the same small filter is slid across the whole image. We’ll dig more into this later, but basically, this makes CNNs very adept at telling precisely where the edges of a pattern are. For some narrow, well-defined tasks, they can match or even beat our feeble fleshy eyes at pattern recognition!
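If you'd like to see the 'sliding a small filter' idea in action, here's a minimal sketch in plain Python. The image is a made-up grid that is dark on the left and bright on the right, and the filter is a hand-picked Sobel-style vertical edge detector. In a real CNN nobody picks the filter values: they are learned during training, so treat this as an illustration of the mechanism only.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products at each position."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            row.append(total)
        output.append(row)
    return output


# Invented 4x6 "radiograph": dark (0) on the left, bright (9) on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

# Hand-picked vertical-edge filter (Sobel-style); a CNN would learn its own values.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # each row is [0, 36, 36, 0]
```

The output is zero wherever the patch is uniform and large exactly where dark meets bright, which is the 'telling precisely where the edges are' behaviour.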

This is massively useful in Computer Aided Diagnosis, as it means CNNs can quickly and accurately trace bone cortices in musculoskeletal imaging, note abnormalities in lung markings in chest radiography, and isolate very early neoplastic changes in soft tissue for mammography and MRI.

Before I go on, I will point out that Neural Networks are NOT the only model used in Computer-Aided Diagnosis – but they ARE the most common, so we'll focus on them!

This diagram demonstrates the function of a simple Neural Network. An input is fed into one side. It is passed through a layer of ‘hidden’ modulating nodes, which in turn feed into the output. We describe the internal nodes in this algorithm as ‘hidden’ because we, outside of the algorithm, will only see the ‘input’ and the ‘output’ – which leads us onto a problem we’ll discuss later with regards to the transparency of AI in medicine.
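For the curious, the diagram's flow can be sketched in a few lines of Python. Everything here is an assumption made for illustration: two inputs, three hidden nodes, one output, a sigmoid activation, and weights typed in by hand (a trained network would have learned them from data).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """Each node takes a weighted sum of all inputs, adds its bias, and squashes the result."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hand-picked, purely illustrative parameters.
hidden_weights = [[0.5, -0.2], [0.8, 0.1], [-0.3, 0.9]]  # 3 hidden nodes, 2 inputs each
hidden_biases = [0.0, -0.1, 0.2]
output_weights = [[1.0, -1.5, 0.7]]  # 1 output node, fed by the 3 hidden nodes
output_biases = [0.1]


def forward(inputs):
    hidden = layer(inputs, hidden_weights, hidden_biases)
    output = layer(hidden, output_weights, output_biases)
    return hidden, output


hidden, output = forward([0.6, 0.4])
print("hidden activations (normally never shown to the user):", hidden)
print("output:", output)
```

A user of the finished tool would only ever see the last line. The hidden activations are just numbers with no obvious human meaning, which is where the transparency problem comes from.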

But for now, let’s focus on how this basic model works, with regards to Computer Aided Diagnosis. We'll start with a game of...

Spot The Pathology.

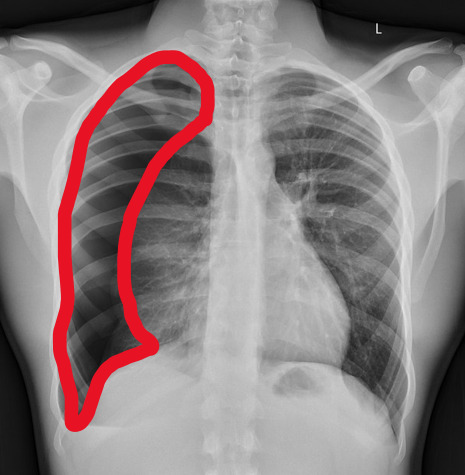

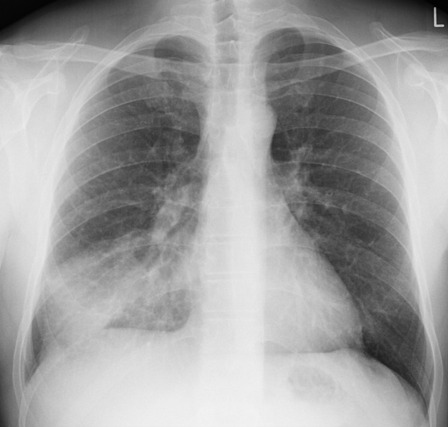

yeah, that's right. There's a WHACKING GREAT RIGHT-SIDED PNEUMOTHORAX (as outlined in red - images courtesy of radiopaedia, but edits mine)

But my question to you is: how do we know that? What process are we going through to reach that conclusion?

Personally, I compared the lungs for symmetry, which led me to note a distinct line where the tissue in the right lung had collapsed on itself. I also noted the absence of normal lung markings beyond this line, where there should be tissue but there is instead air.

In simple terms.... the right lung is whiter in the midline, and black around the edges, with a clear distinction between these parts.

Let’s go back to our Neural Network. We’re at the training phase now.

So, we’re going to feed our algorithm! Homnomnom.

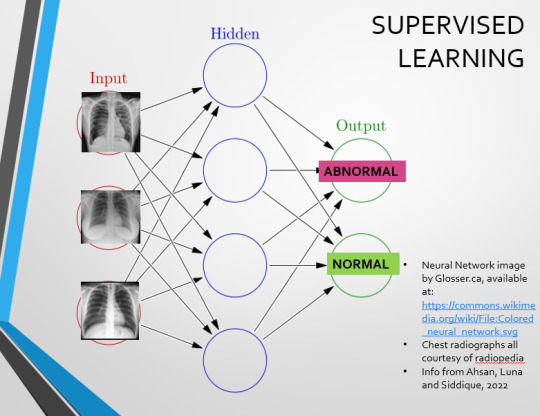

Let’s give it that image of a pneumothorax, alongside two normal chest radiographs (middle picture and bottom). The goal is to get the algorithm to accurately classify the chest radiographs we have inputted as either ‘normal’ or ‘abnormal’ depending on whether or not they demonstrate a pneumothorax.

There are two main ways we can teach this algorithm – supervised and unsupervised classification learning.

In supervised learning, we tell the neural network that the first picture is abnormal, and the second and third pictures are normal. Then we let it work out the difference, under our supervision, allowing us to steer it if it goes wrong.

Of course, if we only have three inputs, that isn’t enough for the algorithm to reach an accurate result.

You might be able to see – one of the normal chests has breasts, and another doesn't. If both ‘normal’ images had breasts, the algorithm could as easily determine that the lack of lung markings is what demonstrates a pneumothorax, as it could decide that actually, a pneumothorax is caused by not having breasts. Which, obviously, is untrue.

or is it?

....sadly I can personally confirm that having breasts does not prevent spontaneous pneumothorax, but that's another story lmao

This brings us to another big problem with AI in medicine –

If you are collecting your dataset from, say, a wealthy hospital in a suburban, majority white neighbourhood in America, then you will have those same demographics represented within that dataset. If we build a blind spot into the neural network, it will discriminate based on that.

That’s an important thing to remember: the goal here is to create a generalisable tool for diagnosis. The algorithm will only ever be as generalisable as its dataset.

But there are plenty of huge free datasets online which have been specifically developed for training AI. What if we had hundreds of chest images, from a diverse population range, split between those which show pneumothoraxes, and those which don’t?

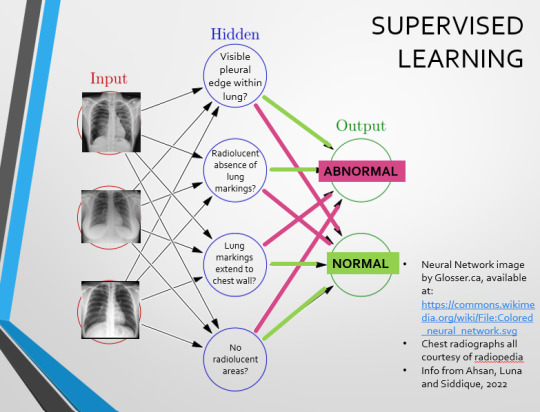

If we had a much larger dataset, the algorithm would be able to study the labelled ‘abnormal’ and ‘normal’ images, and come to far more accurate conclusions about what separates a pneumothorax from a normal chest in radiography. So, let’s pretend we’re the neural network, and pop in four characteristics that the algorithm might use to differentiate ‘normal’ from ‘abnormal’.

We can distinguish a pneumothorax by the appearance of a pleural edge where lung tissue has pulled away from the chest wall, and the radiolucent absence of peripheral lung markings around this area. So, let’s make those our first two nodes. Our last set of nodes are ‘do the lung markings extend to the chest wall?’ and ‘Are there no radiolucent areas?’

Now, red lines mean the answer is ‘no’ and green means the answer is ‘yes’. If the answer to the first two nodes is yes and the answer to the last two nodes is no, this is indicative of a pneumothorax – and vice versa.

Right. So, who can see the problem with this?

(image courtesy of radiopaedia)

This chest radiograph demonstrates alveolar patterns and air bronchograms within the right lung, indicative of a pneumonia. But if we fed it into our neural network...

The lung markings extend all the way to the chest wall. Therefore, this image might well be classified as ‘normal’ – a false negative.
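Here is a rough sketch of that four-node rule written out as code, so you can watch it fail. The function and argument names are mine, and the "undetermined" fallback for mixed answers is an assumption, since the simple rule above only covers the two clean cases.

```python
def classify(pleural_edge, markings_absent, markings_reach_wall, no_radiolucent_areas):
    """Apply the four yes/no nodes from the toy network above."""
    # First two nodes 'yes', last two 'no' -> indicative of a pneumothorax.
    if pleural_edge and markings_absent and not markings_reach_wall and not no_radiolucent_areas:
        return "abnormal"
    # The reverse pattern -> classified as a normal chest.
    if not pleural_edge and not markings_absent and markings_reach_wall and no_radiolucent_areas:
        return "normal"
    # Assumption: any mixed pattern is left unclassified.
    return "undetermined"

# Pneumothorax: visible pleural edge, no peripheral lung markings.
pneumothorax_result = classify(True, True, False, False)

# Pneumonia: no pleural edge, markings reach the chest wall, nothing radiolucent.
pneumonia_result = classify(False, False, True, True)

print("pneumothorax ->", pneumothorax_result)
print("pneumonia    ->", pneumonia_result)
```

The pneumonia image answers all four questions exactly as a healthy chest would, so the rule has no way to flag it. Four nodes simply do not ask enough questions.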

Now we start to see why Neural Networks become deep and convolutional, and can get incredibly complex. In order to accurately differentiate a ‘normal’ from an ‘abnormal’ chest, you need a lot of nodes, and layers of nodes. This is also where unsupervised learning can come in.

Originally, Supervised Learning was used on Analytical AI, and Unsupervised Learning was used on Generative AI, allowing for more creativity in picture generation, for instance. However, more and more, Unsupervised learning is being incorporated into Analytical areas like Computer-Aided Diagnosis!

Unsupervised Learning involves feeding a neural network a large databank and giving it no information about which of the input images are ‘normal’ or ‘abnormal’. This saves massively on money and time, as no one has to go through and label the images first. It is also surprisingly very effective. The algorithm is told only to sort and classify the images into distinct categories, grouping images together and coming up with its own parameters about what separates one image from another. This sort of learning allows an algorithm to teach itself to find very small deviations from its discovered definition of ‘normal’.
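To give a feel for "sorting without labels", here is a bare-bones sketch using k-means clustering on a single made-up number per image. To be clear about the assumptions: k-means is just one simple clustering method I have picked for illustration, the "peripheral darkness" scores are invented, and real systems learn far richer features than one number.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Group unlabelled numbers into k clusters (k must be at least 2)."""
    # Assumption: start the centroids evenly spread between min and max.
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        # Put each value in the cluster with the nearest centroid.
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (unchanged if empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical 'how dark is the lung periphery' score for seven unlabelled images.
peripheral_darkness = [0.12, 0.15, 0.11, 0.14, 0.71, 0.13, 0.68]

centroids, clusters = kmeans_1d(peripheral_darkness)
print("group A:", clusters[0])
print("group B:", clusters[1])
```

Nobody told the algorithm which images were abnormal. It only noticed that two of them sit a long way from the rest, which is the same idea as finding deviations from a discovered "normal".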

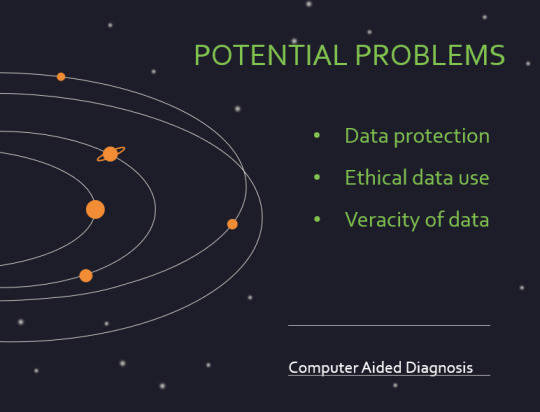

BUT this is not to say that CAD is without its issues.

Let's take a look at some of the ethical and practical considerations involved in implementing this technology within clinical practice!

(Image from Agrawal et al., 2020)

Training Data does what it says on the tin – these are the initial images you feed your algorithm. What is key here is volume, variety - with especial attention paid to minimising bias – and veracity. The training data has to be ‘real’ – you cannot mislabel images or supply non-diagnostic images that obscure pathology, or your algorithm is useless.

Validation data evaluates the algorithm and improves on it. This involves tweaking the nodes within a neural network by altering the ‘weights’, or the intensity of the connection between various nodes. By altering these weights, a neural network can send an image that clearly fits our diagnostic criteria for a pneumothorax directly to the relevant output, whereas images that do not have these features must be put through another layer of nodes to rule out a different pathology.

Finally, testing data is the data that the finished algorithm will be tested on to prove its sensitivity and specificity, before any potential clinical use.
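As a quick sketch of how one pool of images gets divided into those three sets: the 70/15/15 proportions, the file names, and the fixed random seed below are all assumptions for illustration, not a standard you have to follow.

```python
import random

def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle items and split them into training, validation and testing sets."""
    shuffled = items[:]                    # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever is left over
    return train, val, test

# Hypothetical identifiers for 100 chest radiographs.
image_ids = [f"radiograph_{i:03d}" for i in range(100)]

train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))
```

The important design point is that no image appears in more than one set. If the testing images leak into training, the sensitivity and specificity you report at the end will look better than they really are.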

However, if algorithms require this much data to train, this introduces a lot of ethical questions.

Where does this data come from?

Is it ‘grey data’ (data of untraceable origin)? Is this good (protects anonymity) or bad (could have been acquired unethically)?

Could generative AI provide a workaround, in the form of producing synthetic radiographs? Or is it risky to train CAD algorithms on simulated data when the algorithms will then be used on real people?

If we are solely using CAD to make diagnoses, who holds legal responsibility for a misdiagnosis that costs lives? Is it the company that created the algorithm or the hospital employing it?

And finally – is it worth sinking so much time, money, and literal energy into AI – especially given concerns about the environment – when public opinion on AI in healthcare is mixed at best? This is a serious topic – we’re talking diagnoses making the difference between life and death. Do you trust a machine more than you trust a doctor? According to Rojahn et al., 2023, there is a strong public dislike of computer-aided diagnosis.

So, it's fair to ask...

why are we wasting so much time and money on something that our service users don't actually want?

Then we get to the other biggie.

There are also a variety of concerns to do with the sensitivity and specificity of Computer-Aided Diagnosis.

We’ve talked a little already about bias, and how training sets can inadvertently ‘poison’ the algorithm, so to speak, introducing dangerous elements that mimic biases and problems in society.

But do we even want completely accurate computer-aided diagnosis?

The name is computer-aided diagnosis, not computer-led diagnosis. As noted by Rojahn et al., the general public STRONGLY prefer diagnosis to be made by human professionals, and their desires should arguably be taken into account – as well as the fact that CAD algorithms tend to be incredibly expensive and highly specialised. For instance, you cannot put MRI images depicting CNS lesions through a chest reporting algorithm and expect coherent results – whereas a radiologist can be trained to diagnose across two or more specialties.

For this reason, there is an argument that rather than focusing on sensitivity and specificity, we should just focus on producing highly sensitive algorithms that will pick up on any abnormality, and output some false positives, but will produce NO false negatives.

(Sensitivity = a test's ability to identify sick people with a disease)

(Specificity = a test's ability to identify that healthy people do not have this disease)

This means we are working towards developing algorithms that OVERESTIMATE rather than UNDERESTIMATE disease prevalence. This makes CAD a useful tool for triage rather than providing its own diagnoses – if a CAD algorithm weighted towards high sensitivity and low specificity does not pick up on any abnormalities, it’s highly unlikely that there are any.
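To put numbers on that, here is a small worked sketch. The patient counts are entirely invented: a hypothetical triage algorithm run on 1,000 people, 50 of whom actually have the disease.

```python
def sensitivity(true_pos, false_neg):
    """Proportion of sick people the test correctly flags."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg, false_pos):
    """Proportion of healthy people the test correctly clears."""
    return true_neg / (true_neg + false_pos)

def negative_predictive_value(true_neg, false_neg):
    """Given a negative result, how likely is the person to be truly healthy?"""
    return true_neg / (true_neg + false_neg)

# Hypothetical results for an algorithm tuned to over-call rather than under-call.
true_pos, false_neg = 50, 0     # every one of the 50 sick patients is flagged
true_neg, false_pos = 760, 190  # 190 of the 950 healthy patients are flagged too

sens = sensitivity(true_pos, false_neg)
spec = specificity(true_neg, false_pos)
npv = negative_predictive_value(true_neg, false_neg)

print(f"sensitivity: {sens:.2f}")
print(f"specificity: {spec:.2f}")
print(f"negative predictive value: {npv:.2f}")
```

In this made-up scenario the algorithm wrongly flags a fifth of the healthy patients, which a human then has to review, but a negative result can be trusted. That is the trade-off that makes it a triage tool rather than a diagnostician.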

Finally, we have to question whether CAD is even all that accurate to begin with. 10 years ago, according to Lehman et al., CAD in mammography demonstrated negligible improvements to accuracy. In 1989, Sutton noted that accuracy was under 60%. Nowadays, however, AI has been proven to exceed the abilities of radiologists when detecting cancers (that’s from Guetari et al., 2023). This suggests that there is a common upwards trajectory, and AI might become a suitable alternative to traditional radiology one day. But, due to the many potential problems with this field, that day is unlikely to be soon...

That's all, folks! Have some references~

#medblr#artificial intelligence#radiography#radiology#diagnosis#medicine#studyblr#radioactiveradley#radley irradiates people#long post


Text

Only you :

Nathan bateman x reader

It was another day in the sleek, high-tech labyrinth of Nathan Bateman’s isolated estate. The stark, minimalist design of the building only heightened the eerie sense of being watched — though Y/N knew it wasn’t Nathan who kept an eye on her every move, but rather the many layers of surveillance he had built into the house.

Y/N, a simple assistant in this world of genius-level robotics and AI, couldn’t help but feel out of place. She’d been hired because she was competent at her job, sure, but that didn’t change the fact that her boss was Nathan Bateman — a man as brilliant as he was intimidating. There were whispers, of course. Nathan’s reputation preceded him: intelligent, arrogant, and, to her dismay, rumored to have a habit of sleeping with his androids.

Still, she couldn’t deny the odd attraction she had to him. The way he commanded a room — or even just the kitchen when he’d wander in shirtless, making his “morning” smoothie in the afternoon. She’d learned to put up walls to protect herself from getting too caught up in whatever strange, magnetic pull he had. After all, this was a man who built artificial women to his liking.

But as her feelings grew, so did her doubts. Could she even compare to the perfection of a machine? She wasn’t Ava, programmed to be flawless. She was human — capable of flaws, mistakes, and very bad decisions, like dating other people to distract herself from her confusing emotions toward her reclusive boss.

“Hey, Nathan,” she said one afternoon, her voice casual, but her stomach a mess of nerves.

Nathan glanced up from his desk, eyebrow raised, the edge of his glass whiskey tumbler catching the light. “Yeah?”

“I’ve got plans tonight. Going out,” she continued, pretending to fuss over the tablet in her hand as if the schedule needed rearranging.

He leaned back in his chair, narrowing his eyes. “Plans, huh?”

Y/N swallowed, suddenly feeling like she was under one of his scrutinizing microscopes. “Yeah... Dinner, maybe drinks afterward.”

“With who?”

The question caught her off guard. “Just... some guy I met online.”

Nathan’s jaw tensed, but his expression remained unreadable. “That so?” He stood up, downing the rest of his drink in one go. “And you think that’s a good idea?”

Y/N blinked. “What?”

“Dating. Other people,” Nathan clarified, stepping closer. His voice dropped, more serious now. “When you’re already working for me.”

Her heart rate spiked as his words settled in, something ominous lingering in the air between them. But before she could protest, Nathan cut her off.

“I need to tell you something,” he said, his tone casual but his eyes sharp, pinning her in place.

“What is it?” she asked, wary of where this conversation was going.

Nathan hesitated for a second, as though weighing his next words. Then, with all the grace of a sledgehammer, he said, “You’re the only person I’m fucking.”

Y/N stared at him, mouth agape. “What?”

“I just want you to know that,” he repeated, his expression infuriatingly calm. “In case you were wondering.”

Y/N’s brain short-circuited, unable to process what he just said. Was this his idea of reassurance? She wasn’t sure whether to laugh, cry, or hit him.

“Okay, first of all — that’s not how you tell someone you like them!” she exclaimed, exasperated.

Nathan shrugged, his face unreadable. “You want me to write you a sonnet?”

“No, I—Nathan!” She ran a hand through her hair, a nervous habit she hadn’t managed to shake. “You... What does this even mean?”

“I thought it was pretty obvious,” he said, taking another step forward, crowding her space. “I don’t do this... dating thing. Haven’t needed to. But you? You’re different.”

She narrowed her eyes. “How exactly?”

“You’re not an android,” he said bluntly, as though that was the clearest explanation possible. “You’re not perfect, you make mistakes, you’re... real.”

Y/N blinked, suddenly aware of just how close he was standing. His presence was overwhelming — a mix of whiskey and his natural scent filling the space between them.

Nathan reached up and tucked a strand of hair behind her ear, his thumb grazing her jawline. “And I like real.”

Her breath hitched. Despite all the warning signs, all the internal alarms blaring that this was a terrible idea, Y/N couldn’t deny the pull between them. Maybe it was reckless. Maybe it was another in her long list of bad decisions. But at this moment, she couldn’t bring herself to care.

Later that night, they were tangled in the sheets of his impossibly sleek bed, her body still buzzing from the intensity of the encounter. Nathan lay beside her, his breathing slow and steady, but his mind clearly still racing.

As Y/N rested her head on his chest, listening to the rhythmic thump of his heartbeat, she couldn’t help but feel a surge of confusion. Was this real?

“You should know,” she started, her voice quiet, “I’m still terrible at making decisions.”

Nathan chuckled softly, his hand running lazily up and down her arm. “Yeah, I’ve noticed.”

“But, um, can I give you some advice?” she asked, glancing up at him.

He raised an eyebrow. “Advice from you?”

“Hey, I’ve got wisdom too,” she teased, lightly smacking his chest. “Listen... Don’t be with a woman who wants you to give up everything for her.”

Nathan frowned, looking down at her. “What are you getting at?”

“I mean, don’t be with someone who wants you to stop being who you are — even if who you are is a bit of an asshole.”

Nathan smirked. “Bit?”

“Okay, more than a bit,” she conceded with a laugh. “But still. Be with someone who wants to be a part of your life, not change it.”

Nathan was quiet for a moment, considering her words. Then, with a wry smile, he leaned down and kissed the top of her head. “I think you’re stuck with me now.”

Y/N grinned, snuggling closer. “I’ll survive.”

After a beat, Nathan added, his voice low and teasing, “You do realize you’re the only person I’m fucking, right?”

Y/N groaned, burying her face in his chest. “Nathan! That is not romantic!”

He laughed, the sound deep and rich. “Good thing I’m not trying to be romantic.”

She rolled her eyes, but couldn’t help the smile that tugged at her lips. “You’re impossible.”

“Yeah,” he agreed, pressing a lazy kiss to her forehead. “But now I’m your impossible.”

And with that, they both drifted off, content in the strange, twisted, yet oddly comforting relationship they’d found themselves in.

#nathan bateman#nathan bateman x reader#ex machina#oscar isaac character#oscar isaac#oscar isaac characters


Text

The Silent Genius: Is Your AI Smarter Than You Think?

When we think of the words artificial intelligence, we usually think of high-tech robots, complex code, or smart voice assistants carrying out our every command. But AI has come a long way in its evolution from a futuristic fantasy, and more likely than not, it's already a hidden part of your life. You may have even been touched by AI before without realizing it.

It’s Not Sci-Fi Anymore — It’s Daily Life

Think about your daily interactions. Your phone tells you the fastest route to work with what is happening on the roads right now. Netflix recommends a movie you didn’t realize you wanted to watch. Spotify queues up a song that just happens to match exactly how you feel at that moment. None of that is a coincidence — it’s AI that has been built to adapt to your behavior, find trends, and make decisions for you.

But the interesting thing is, you don’t even pay attention to it. AI is so much a part of our digital lives, it’s so subtle, that we often forget it is even there. That invisibility is part of its brilliance — and part of its power.

Not Just Smart — Deeply Aware

What makes modern AI different from the rigid bots of the past is its ability to adapt. These systems don't just follow rules; they learn. Over time, your devices form a digital fingerprint of your preferences, habits, and even your emotional responses.

Think of your smartphone. It likely knows:

What time you usually wake up

Which apps you use first thing in the morning

Who you message most often

When you need a reminder to hydrate or stretch

Now multiply that across every platform you use — from email to ecommerce. Your digital self is being mapped in incredible detail.

So... Who’s Really in Control?

There’s a strange irony in how dependent we’ve become on systems we barely understand. Most users don’t know how recommendation algorithms work, or how voice assistants process language. Yet we rely on them constantly.

And that opens up deeper questions:

If an AI system can predict your choices, influence your decisions, and quietly guide your behavior — is it still just a tool? Or is it becoming something more?

The more invisible AI becomes, the more it operates in the background — nudging, optimizing, suggesting — the harder it is to draw a line between our natural decisions and the ones shaped by machines.

The Future: Friendly or Frightening?

It’s easy to feel either amazed or alarmed by this silent genius that follows us through our digital lives. The truth lies somewhere in between. AI has the potential to make our lives easier, healthier, and more efficient. But it also demands awareness. If we don’t recognize how it shapes our behavior, we risk losing a piece of agency with every helpful suggestion.

So the next time your phone finishes your sentence, your calendar schedules your life, or your feed seems a little too perfect — ask yourself:

Is this me thinking?

Or is it my AI thinking for me?


Text

Some Fortune 500 companies have begun testing software that can spot a deepfake of a real person in a live video call, following a spate of scams involving fraudulent job seekers who take a signing bonus and run.

The detection technology comes courtesy of GetReal Labs, a new company founded by Hany Farid, a UC-Berkeley professor and renowned authority on deepfakes and image and video manipulation.

GetReal Labs has developed a suite of tools for spotting images, audio, and video that are generated or manipulated either with artificial intelligence or manual methods. The company’s software can analyze the face in a video call and spot clues that may indicate it has been artificially generated and swapped onto the body of a real person.

“These aren’t hypothetical attacks, we’ve been hearing about it more and more,” Farid says. “In some cases, it seems they're trying to get intellectual property, infiltrating the company. In other cases, it seems purely financial, they just take the signing bonus.”

The FBI issued a warning in 2022 about deepfake job hunters who assume a real person’s identity during video calls. UK-based design and engineering firm Arup lost $25 million to a deepfake scammer posing as the company’s CFO. Romance scammers have also adopted the technology, swindling unsuspecting victims out of their savings.

Impersonating a real person on a live video feed is just one example of the kind of reality-melting trickery now possible thanks to AI. Large language models can convincingly mimic a real person in online chat, while short videos can be generated by tools like OpenAI’s Sora. Impressive AI advances in recent years have made deepfakery more convincing and more accessible. Free software makes it easy to hone deepfakery skills, and easily accessible AI tools can turn text prompts into realistic-looking photographs and videos.

But impersonating a person in a live video is a relatively new frontier. Creating this type of a deepfake typically involves using a mix of machine learning and face-tracking algorithms to seamlessly stitch a fake face onto a real one, allowing an interloper to control what an illicit likeness appears to say and do on screen.

Farid gave WIRED a demo of GetReal Labs’ technology. When shown a photograph of a corporate boardroom, the software analyzes the metadata associated with the image for signs that it has been modified. Several major AI companies including OpenAI, Google, and Meta now add digital signatures to AI-generated images, providing a solid way to confirm their inauthenticity. However, not all tools provide such stamps, and open source image generators can be configured not to. Metadata can also be easily manipulated.

GetReal Labs also uses several AI models, trained to distinguish between real and fake images and video, to flag likely forgeries. Other tools, a mix of AI and traditional forensics, help a user scrutinize an image for visual and physical discrepancies, for example highlighting shadows that point in different directions despite having the same light source, or that do not appear to match the object that cast them.

Lines drawn on different objects shown in perspective will also reveal if they converge on a common vanishing point, as would be the case in a real image.
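The geometry behind the vanishing-point check can be sketched in a few lines. This is only an illustration of the general idea, not GetReal Labs' software: the pixel coordinates, the tolerance, and the function names are hypothetical, and for simplicity the sketch treats exactly parallel lines as a failure.

```python
from itertools import combinations

def intersect(line_a, line_b):
    """Intersection of two infinite lines, each given as ((x1, y1), (x2, y2)).

    Returns None if the lines are parallel.
    """
    (x1, y1), (x2, y2) = line_a
    (x3, y3), (x4, y4) = line_b
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(denom) < 1e-12:
        return None
    det_a = x1 * y2 - y1 * x2
    det_b = x3 * y4 - y3 * x4
    px = (det_a * (x3 - x4) - (x1 - x2) * det_b) / denom
    py = (det_a * (y3 - y4) - (y1 - y2) * det_b) / denom
    return px, py

def share_vanishing_point(lines, tolerance=1.0):
    """True if every pair of lines meets at (roughly) the same point."""
    points = [intersect(a, b) for a, b in combinations(lines, 2)]
    if any(p is None for p in points):
        return False  # simplification: parallel lines are not handled
    first_x, first_y = points[0]
    return all(abs(px - first_x) <= tolerance and abs(py - first_y) <= tolerance
               for px, py in points)

# Hypothetical edges traced on objects in an image (pixel coordinates).
consistent_edges = [((0, 0), (4, 2)), ((0, 10), (4, 8)), ((0, 5), (4, 5))]
# Same scene, but one edge has been swapped for one that points elsewhere.
suspicious_edges = [((0, 0), (4, 2)), ((0, 10), (4, 8)), ((0, 2), (4, 6))]

print(share_vanishing_point(consistent_edges))
print(share_vanishing_point(suspicious_edges))
```

In practice the lines would be traced by a person or an edge detector and would carry measurement noise, which is why the comparison uses a tolerance rather than exact equality.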

Other startups that promise to flag deepfakes rely heavily on AI, but Farid says manual forensic analysis will also be crucial to flagging media manipulation. “Anybody who tells you that the solution to this problem is to just train an AI model is either a fool or a liar,” he says.

The need for a reality check extends beyond Fortune 500 firms. Deepfakes and manipulated media are already a major problem in the world of politics, an area where Farid hopes his company’s technology could do real good. The WIRED Elections Project is tracking deepfakes used to boost or trash political candidates in elections in India, Indonesia, South Africa, and elsewhere. In the United States, a fake Joe Biden robocall was deployed last January in an effort to dissuade people from turning out to vote in the New Hampshire Presidential primary. Election-related “cheapfake” videos, edited in misleading ways, have gone viral of late, while a Russian disinformation unit has promoted an AI-manipulated clip disparaging Joe Biden.

Vincent Conitzer, a computer scientist at Carnegie Mellon University in Pittsburgh and coauthor of the book Moral AI, expects AI fakery to become more pervasive and more pernicious. That means, he says, there will be growing demand for tools designed to counter them.

“It is an arms race,” Conitzer says. “Even if you have something that right now is very effective at catching deepfakes, there's no guarantee that it will be effective at catching the next generation. A successful detector might even be used to train the next generation of deepfakes to evade that detector.”

GetReal Labs agrees it will be a constant battle to keep up with deepfakery. Ted Schlein, a cofounder of GetReal Labs and a veteran of the computer security industry, says it may not be long before everyone is confronted with some form of deepfake deception, as cybercrooks become more conversant with the technology and dream up ingenious new scams. He adds that manipulated media is a top topic of concern for many chief security officers. “Disinformation is the new malware,” Schlein says.

With significant potential to poison political discourse, Farid notes that media manipulation can be considered a more challenging problem. “I can reset my computer or buy a new one,” he says. “But the poisoning of the human mind is an existential threat to our democracy.”


Note

As we're in the topic of AI, I remember my mom once insisting I learn how to use it as she believed all jobs are going to be replaced by machines in the future. When I tried giving my valid arguments to how AI should be implemented without totally replacing people, harming the environment, spreading misinfo (I'm lookin' at you, Google AI!) and stealing information, she just shut me down with "Oh, but you risk being left behind! Remember Kodak? They shut down when everyone started going digital!"

There was this wise man who said "I'd prefer to have AI help me do my chores and reduce the workload but not take over the job I love." As an artist, you know that anything that's made by a human becomes a novelty and more sought after. I remember passing by our local mall and all of the ads had these hollow, generic AI-lookin' CGI graphics. There is just something in them that makes me gag!

Heck, I'd rather look at those corporate Memphis illustrations than that slop. Again, I wonder if she was even listening when I suggested how AI could be susceptible to privacy breaches. I hate how even Google and DuckDuckGo have AI features now whenever I search for something. Even one of the prestigious art schools such as Gobelins landed under fire for using AI!

For now, AI doesn't seem to be a very promising tool. It's not like digital art or cameras, because at least those don't feed off data and just make creating art or taking photographs much easier. At least you get to curate the results by toggling settings and textures instead of just typing random prompts leading to some sickening random image.

In regards to AI, I know a lot of people have anxiety around the topic. But it's a great time to actually talk about what AI is and isn't.

And what we call AI is not actually artificial intelligence. What we are calling AI is still a highly regulated script of pseudo-reasoning that is impressive on first blush, but quickly shows its debilitating limitations.

For one, large language models are impressive as long as you don't think about them. The hive mind these companies have sought to create fails at the basics due to the fact that this is still just a software program we are talking about. It is utterly useless as a data collection and research tool as it has no idea what is and isn't true. The large language models look impressive. It looks like it's thinking as it goes step by step to “prove its work” so to speak. But it is just sifting through data, it's not actually thinking. Thinking would be reasoning. It would be categorizing sources based on accuracy, while also taking into account implicit bias of such sources.

Asking ChatGPT to make a pasta recipe, but asking for substitutions to certain ingredients will not yield a surefire result as the computer is not going to understand the difference between a tomato and a lemon as both are acidic fruits. It does not understand the concept of texture or where it comes from. It doesn't grasp the experience of eating food because it is running on an assumption that the ability to taste yields a singular result. That everyone will find a lemon sour and a grapefruit bitter and a cherry tart. But what if you don't taste soap when you eat cilantro? What if lemons are sugary sweet while grapefruits are tart? The machine is never going to be able to account for the experience of sensing.

As such, AI will never be able to portray meaningful art either. The fact that AI has taken up so much of the artistic community's discourse goes to show the issues with art today. People are so afraid of a machine creating something that looks pretty because that's all we make any more. We have commodified and commercialized art to the point of it being soulless. Its only purpose is to appear aesthetically pleasing for an audience who will spend less than a minute on a piece of art we've spent hours to days to weeks working on. But the reason is because our art lacks meaning. Whenever someone praises art on Twitter and claims an emotional reaction, they attribute their feelings to the context of the source material or the appearance of the art.

When I went to an art museum, the paintings were all very well done, but not all aesthetically pleasing. And the one that stuck with me the most was a painting of four elderly women staring back at me. The only things in sharp focus in the painting are these women’s blue-grey eyes. And that was intentional. Because I kept finding myself going back to that painting because I kept feeling a strange sense of guilt. These eyes were on me and I couldn’t tell if it was with tenderness or scorn, so I had to keep going back. I felt guilty: if it was with tenderness, I was ashamed I couldn’t remember anything else about them. Their faces left my mind the second I looked away. If it was with scorn, I felt the need to figure out what I missed. What quality in the painting was leaving me confused about the way these women looked at me?

That’s when I noticed their faces were literally painted in such a way that gave them an almost dream-like effect. The artist played on my brain’s inherent desire to identify a face, and with the eyes painted in such fine detail, the hazy idea of a face was held together in my brain. But I couldn’t say anything else about them without looking directly at them.

And it made me feel, but feel in a way that was slow and contemplative. It made me consider what the artist was trying to say without just googling it. A guess based on our wordless conversation through his medium. Because the real beauty and power that makes art ART is the way you get to interact with it as an individual. It’s vaguely spiritual. You can have these conversations with people long passed, and come to know them through their works.

That isn’t how it is anymore.

When you’re chasing numbers, be it in the form of money or perceived admiration, you inherently lose sight of what got you started: a feeling, a thought, an idea. Computers will never be able to question an idea. Never be able to extrapolate meaning from information or technique. Computers only understand numbers.

The fear of AI is the fear of replacing capitalism and consumerism. All we are thinking about is numbers. Numbers in the price of rent and food. Numbers in the hours worked and days off. Numbers in how we justify our own existence through social media clout and how much we consume with literal numbers. We function like computers, so of course people are scared of being replaced by computers. That they need these computers to stay on the latest operating system.

Only machines are scared of machines.

#anon ask#ai art#chatgpt#artificial intelligence#technology#consumer culture#consumerism#capitalism#robert glover#social media#neoliberalism#libertarianism#socioeconomic


Note

I feel like if we’re going to emphasize the difference between general AI and generative AI, which is significant, then the general public needs a better explanation of what makes ‘AI’ different than algorithms that already exist. It’s all machine learning, right? So where does it cross the line into being quote-unquote Artificial Intelligence? That’s my concern, honestly, because depending on how significant the distinction is, have we not all been using AI already? I don’t have much frame of reference because this isn’t a particular interest of mine, just something I believe I should be concerned and considerate of as a human being in our current society, you know?

That's fair.

However, I just woke up and haven't consumed enough coffee yet for an intelligent response so i'll just nod my head. Anyone seeing this is free to respond tho!

Good morning friends.


Text

Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA: Best Price Guarantee


Introduction

Artificial Intelligence (AI) continues to evolve, demanding powerful computing resources to train and deploy complex models. In the United States, where AI research and development are booming, access to high-end GPUs like the RTX 4090 and RTX 5090 has become crucial. However, owning these GPUs is expensive and not practical for everyone, especially startups, researchers, and small teams. That’s where GPU rentals come in.

If you're looking for Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA, you’re in the right place. With services like NeuralRack.ai, you can rent cutting-edge hardware at competitive rates, backed by a best price guarantee. Whether you’re building a machine learning model, training a generative AI system, or running high-intensity simulations, rental GPUs are the smartest way to go.

Read on to discover how RTX 4090 and RTX 5090 rentals can save you time and money while maximizing performance.

Why Renting GPUs Makes Sense for AI Projects

Owning a high-performance GPU comes with a significant upfront cost. For AI developers and researchers, this can become a financial hurdle, especially when models change frequently and need more powerful hardware. Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offer a smarter solution.

Renting provides flexibility: you only pay for what you use. The NeuralRack.ai Configuration page lets you customize your GPU rental to your exact needs. With no long-term commitments, renting is perfect for quick experiments or extended research periods.

You get access to enterprise-grade GPUs, excellent customer support, and scalable options—all without the need for in-house maintenance. This makes GPU rentals ideal for AI startups, freelance developers, educational institutions, and tech enthusiasts across the USA.

RTX 4090 vs. RTX 5090 – A Quick Comparison

Choosing between the RTX 4090 and RTX 5090 depends on your AI project requirements. The RTX 4090 is already a powerhouse with over 16,000 CUDA cores, 24GB GDDR6X memory, and superior ray-tracing capabilities. It's excellent for deep learning, natural language processing, and 3D rendering.

On the other hand, the newer RTX 5090 outperforms the 4090 in almost every way. With enhanced architecture, more CUDA cores, and optimized AI acceleration features, it’s the ultimate choice for next-gen AI applications.

Whether you choose to rent the RTX 4090 or RTX 5090, you’ll benefit from top-tier GPU performance. At NeuralRack Pricing, both GPUs are available at unbeatable rates. The key is to align your project requirements with the right hardware.

If your workload involves complex computations and massive datasets, opt for the RTX 5090. For efficient performance at a lower cost, the RTX 4090 remains an excellent option. Both are available under our Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offering.

Benefits of Renting RTX 4090 and RTX 5090 for AI in the USA

AI projects require massive computational power, and not everyone can afford the hardware upfront. Renting GPUs solves that problem. The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offer:

High-end Performance: RTX 4090 and 5090 GPUs deliver fast training times for AI models.

Cost-Effective Solution: Eliminate capital expenditure and pay only for what you use.

Quick Setup: Platforms like NeuralRack Configuration provide instant access.

Scalability: Increase or decrease resources as your workload changes.

Support: Dedicated customer service via NeuralRack Contact Us ensures smooth operation.

You also gain flexibility in testing different models and architectures. Renting GPUs gives you freedom without locking your budget or technical roadmap.

If you're based in the USA and looking for high-performance AI development without the hardware investment, renting from NeuralRack.ai is your best bet.

Who Should Consider GPU Rentals in the USA?

GPU rentals aren’t just for large enterprises. They’re a great fit for:

AI researchers working on time-sensitive projects.

Data scientists training machine learning models.

Universities and institutions running large-scale simulations.

Freelancers and startups with limited hardware budgets.

Developers testing generative AI, NLP, and deep learning tools.

The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA model is perfect for all these groups. You get premium resources without draining your capital. Plus, the NeuralRack About page gives you assurance that you’re working with experts in the field.

Instead of wasting time with outdated hardware or bottlenecked cloud services, switch to a tailored GPU rental experience.

How to Choose the Right GPU Rental Service

When selecting a rental service for RTX GPUs, consider these:

Transparent Pricing – Check NeuralRack Pricing for honest rates.

Hardware Options – Ensure RTX 4090 and 5090 models are available.

Support – Look for responsive teams like at NeuralRack Contact Us.

Ease of Use – Simple dashboard, fast deployment, easy scaling.

Best Price Guarantee – A promise you get with NeuralRack’s rentals.

The right service will align with your performance needs, budget, and project timelines. That’s why the Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA offered by NeuralRack are highly rated among developers nationwide.

Pricing Overview: What Makes It “Affordable”?

Affordability is key when choosing GPU rentals. Buying a new RTX 5090 can cost $2,000 or more, while renting at the rates listed on NeuralRack Pricing gives you access at a fraction of the cost.

Rent by the hour, day, or month depending on your needs. Bulk rentals also come with discounted packages. With NeuralRack’s Best Price Guarantee, you’re assured of the lowest possible rate for premium GPUs.
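As a rough way to sanity-check the rent-versus-buy comparison above, here is a minimal break-even sketch. The $2,000 purchase figure comes from this article; the hourly rate is a made-up placeholder, not a NeuralRack quote, and the function name `break_even_hours` is only illustrative. The sketch ignores electricity, resale value, and discounts.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rental at which total rent equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


# Purchase price as quoted in the article (approximate).
PURCHASE_PRICE_USD = 2000.0
# HYPOTHETICAL placeholder rate; check the provider's pricing page for real numbers.
HYPOTHETICAL_HOURLY_RATE_USD = 0.50

hours = break_even_hours(PURCHASE_PRICE_USD, HYPOTHETICAL_HOURLY_RATE_USD)
print(f"Renting costs as much as buying after about {hours:.0f} GPU-hours")
```

If your expected usage is well below the break-even figure, renting is likely the cheaper route; well above it, buying may deserve a second look.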

There are no hidden fees or forced commitments. Just clear pricing and instant setup. Visit NeuralRack.ai to explore more.

Where to Find Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA

Finding reliable and budget-friendly GPU rentals is easy with NeuralRack. As a trusted provider of Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA, they deliver enterprise-grade hardware, best price guarantee, and 24/7 support.

Simply go to NeuralRack.ai and view the available configurations on the Configuration page. Have questions? Contact the support team through NeuralRack Contact Us.

Whether you’re based in California, New York, Texas, or anywhere else in the USA—NeuralRack has you covered.

Future-Proofing with RTX 5090 Rentals

The RTX 5090 is designed for the future of AI. With faster processing, more CUDA cores, and higher bandwidth, it supports next-gen AI models and applications. Renting the 5090 from NeuralRack.ai gives you access to bleeding-edge performance without the upfront cost.

It’s perfect for generative AI, LLMs, 3D modeling, and more. Make your project future-ready with Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA.

Final Thoughts: Why You Should Go for Affordable GPU Rentals

If you want performance, flexibility, and affordability all in one package, go with GPU rentals. The Affordable RTX 4090 and RTX 5090 Rentals for AI in the USA from NeuralRack.ai are trusted by developers and researchers across the country.

You get high-end GPUs, unbeatable prices, and expert support—all with zero commitment. Explore the pricing at NeuralRack Pricing and get started today.

FAQs

What’s the best way to rent an RTX 4090 or 5090 in the USA? Use NeuralRack.ai for affordable, high-performance GPU rentals.

How much does it cost to rent an RTX 5090? Visit NeuralRack Pricing for updated rates.

Is there a minimum rental duration? No, NeuralRack offers flexible hourly, daily, and monthly options.

Can I rent GPUs for AI and deep learning? Yes, both RTX 4090 and 5090 are optimized for AI workloads.

Are there any discounts for long-term rentals? Yes, NeuralRack offers bulk and long-term discounts.

Is setup assistance provided? Absolutely. Use the Contact Us page to get help.

What if I need multiple GPUs? You can configure your rental on the Configuration page.

Is the hardware reliable? Yes, NeuralRack guarantees high-quality, well-maintained GPUs.

Do you support cloud access? Yes, NeuralRack supports remote GPU access for AI workloads.

Where can I learn more about NeuralRack? Visit the About page for the full company profile.


Text

What are AI, AGI, and ASI? And the positive impact of AI

Understanding artificial intelligence (AI) involves more than just recognizing lines of code or scripts; it encompasses developing algorithms and models capable of learning from data and making predictions or decisions based on what they’ve learned. To truly grasp the distinctions between the different types of AI, we must look at their capabilities and potential impact on society.

To simplify, we can categorize these types of AI by assigning a power level from 1 to 3, with 1 being the least powerful and 3 being the most powerful. Let’s explore these categories:

1. Artificial Narrow Intelligence (ANI)

Also known as Narrow AI or Weak AI, ANI is the most common form of AI we encounter today. It is designed to perform a specific task or a narrow range of tasks. Examples include virtual assistants like Siri and Alexa, recommendation systems on Netflix, and image recognition software. ANI operates under a limited set of constraints and can’t perform tasks outside its specific domain. Despite its limitations, ANI has proven to be incredibly useful in automating repetitive tasks, providing insights through data analysis, and enhancing user experiences across various applications.

2. Artificial General Intelligence (AGI)

Referred to as Strong AI, AGI represents the next level of AI development. Unlike ANI, AGI can understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence. It can reason, plan, solve problems, think abstractly, and learn from experiences. While AGI remains a theoretical concept as of now, achieving it would mean creating machines capable of performing any intellectual task that a human can. This breakthrough could revolutionize numerous fields, including healthcare, education, and science, by providing more adaptive and comprehensive solutions.

3. Artificial Super Intelligence (ASI)

ASI surpasses human intelligence and capabilities in all aspects. It represents a level of intelligence far beyond our current understanding, where machines could outthink, outperform, and outmaneuver humans. ASI could lead to unprecedented advancements in technology and society. However, it also raises significant ethical and safety concerns. Ensuring ASI is developed and used responsibly is crucial to preventing unintended consequences that could arise from such a powerful form of intelligence.

The Positive Impact of AI

When regulated and guided by ethical principles, AI has the potential to benefit humanity significantly. Here are a few ways AI can help us become better:

• Healthcare: AI can assist in diagnosing diseases, personalizing treatment plans, and even predicting health issues before they become severe. This can lead to improved patient outcomes and more efficient healthcare systems.

• Education: Personalized learning experiences powered by AI can cater to individual student needs, helping them learn at their own pace and in ways that suit their unique styles.

• Environment: AI can play a crucial role in monitoring and managing environmental changes, optimizing energy use, and developing sustainable practices to combat climate change.

• Economy: AI can drive innovation, create new industries, and enhance productivity by automating mundane tasks and providing data-driven insights for better decision-making.

In conclusion, while AI, AGI, and ASI represent different levels of technological advancement, their potential to transform our world is immense. By understanding their distinctions and ensuring proper regulation, we can harness the power of AI to create a brighter future for all.


Text

What's the difference between Machine Learning and AI?

Machine Learning and Artificial Intelligence (AI) are often used interchangeably, but they are distinct concepts. Machine Learning refers to algorithms that enable systems to learn from data and make predictions or decisions based on that learning. It's a subset of AI, focusing on statistical techniques and models that allow computers to perform specific tasks without being explicitly programmed for them.

On the other hand, AI encompasses a broader scope, aiming to simulate human intelligence in machines. It includes Machine Learning as well as other disciplines like natural language processing, computer vision, and robotics, all working towards creating intelligent systems capable of reasoning, problem-solving, and understanding context.
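To make the "learning from data" versus "explicit programming" contrast a little more concrete, here is a toy sketch. Everything in it is an assumption for illustration: the task (flagging messages by link count), the data, and the function names are invented, and real Machine Learning models are far more elaborate than a single learned cutoff.

```python
def rule_based_is_spam(num_links: int) -> bool:
    """Explicit programming: a human chose the cutoff of 5 by hand."""
    return num_links > 5


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Learning from data: pick the cutoff that misclassifies the fewest examples.

    Each example is (num_links, is_spam). A message is predicted spam
    when num_links is strictly greater than the returned threshold.
    """
    best_threshold = None
    fewest_errors = None
    for candidate in sorted({links for links, _ in examples}):
        errors = sum((links > candidate) != label for links, label in examples)
        if fewest_errors is None or errors < fewest_errors:
            best_threshold, fewest_errors = candidate, errors
    return best_threshold


# Invented toy training data: (number of links, labeled as spam?)
training_data = [(0, False), (1, False), (2, False), (7, True), (9, True), (12, True)]

threshold = learn_threshold(training_data)
print(f"Learned cutoff: more than {threshold} links is treated as spam")
```

The hand-written rule never changes unless someone edits it, while the learned cutoff shifts whenever the training data does. That is the core of the distinction, even though this sketch is nowhere near a production model.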

Understanding this distinction is crucial for anyone interested in leveraging data-driven technologies effectively. Whether you're exploring career opportunities, enhancing business strategies, or simply curious about the future of technology, diving deeper into these concepts can provide invaluable insights.