
Why Do Most DevOps Transformations Fail Without Expert Consulting?

DevOps has become the go-to approach for improving coordination between development and operations teams, speeding up software delivery, and raising product quality. However, many companies struggle to implement it successfully. Many DevOps transformations fail, and one key reason is the lack of expert DevOps consulting behind them.

Let’s look at why these transformations fail and how expert consulting can make the difference.

1. Lack of a Clear Strategy

DevOps isn’t just about adopting new tools; it’s also about changing how teams work together. Without a clear roadmap or strategy, teams often jump into DevOps without aligning their goals, processes, or workflows. DevOps consultants help build a structured plan and roadmap tailored to your organization’s needs, helping teams avoid confusion and wasted effort.

2. Poor Tool Integration

Many businesses start using multiple DevOps tools without understanding how they fit together. This leads to workflow issues, data silos, and tool overload. Expert consultants know which tools work best for your tech stack and help integrate them smoothly for end-to-end automation.

3. Resistance to Change

DevOps requires a cultural shift, which can meet pushback from teams used to traditional methods. Consultants play a vital role in guiding this change, training teams, and building a collaborative environment that embraces new ways of working.

4. Inadequate Skills and Experience

Hiring and training in-house DevOps experts can be time-consuming and costly. Many transformations fail because teams lack the right expertise. DevOps consulting brings experienced professionals who know what works and what doesn't — ensuring your transformation is done right the first time.

5. Ignoring Continuous Improvement

DevOps is not a one-time setup; it's an ongoing process of refinement. Without expert guidance, businesses may stop improving after the initial implementation. Consultants help monitor progress, measure outcomes, and continuously optimize pipelines and practices.

DevOps transformation is a complex undertaking, and attempting it alone often leads to delays, rework, and frustration. Investing in DevOps consulting helps organizations adopt best practices, avoid common pitfalls, and achieve faster, more sustainable results. Companies such as Suma Soft, IBM, and Cyntexa offer professional DevOps consulting services that can assess your infrastructure and guide your business toward a successful transformation, efficiently and confidently.

About ThoughtCoders – Leading Software Testing Company

ThoughtCoders is a premier software testing company offering comprehensive end-to-end solutions in Quality Assurance, Test Automation, Low-Code and Codeless Automation, Integration Testing, and Offshore QA Services. With a strong track record of delivering high-quality enterprise solutions, we are trusted by clients across industries. Our team comprises industry leaders, expert consultants, and domain-specific SMEs who are passionate about delivering excellence in software quality.

Established in June 2021, ThoughtCoders is a government-registered entity (registered with the Ministry of Corporate Affairs, MCA) and is officially recognized under the Startup India programme. We operate from a state-of-the-art delivery center in Ballia, Uttar Pradesh, equipped with robust infrastructure to support global delivery models.

Our Core Services

Quality Assurance Services

Test Automation Services

Offshore Quality Assurance & Testing

Remote QA Team Setup

QA Outsourcing

Non-Functional Testing (NFR)

Visual Testing Solutions

Accessibility Testing Services

Why Choose ThoughtCoders?

At ThoughtCoders, we specialize in independent software testing, TestOps, DevOps integration, and the development of custom QA tools that help accelerate release cycles while maintaining top-tier software quality.

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

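Automation and DevOps Friendly: Python supports infrastructure automation through tools such as Ansible (which is written in Python) and Boto3, and Python scripts can also drive other tools such as Terraform, which uses its own configuration language (HCL).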

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
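As a rough illustration of automated provisioning, here is a minimal sketch using Boto3's EC2 client. The AMI ID, instance type, and the Environment tag are placeholder assumptions, and the client is passed in as a parameter so the request can be built and checked without AWS credentials.

```python
"""Minimal sketch: provision an EC2 instance with Boto3 (placeholder values)."""
from typing import Any, Dict


def build_run_instances_request(ami_id: str, instance_type: str, env: str) -> Dict[str, Any]:
    """Build keyword arguments for the EC2 client's run_instances call."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": 1,
        "MaxCount": 1,
        # Tag the instance at launch so cost and ownership reports can group it.
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Environment", "Value": env}],
            }
        ],
    }


def launch_instance(ec2_client: Any, ami_id: str, instance_type: str, env: str) -> str:
    """Launch one instance through the given client and return its instance ID."""
    request = build_run_instances_request(ami_id, instance_type, env)
    response = ec2_client.run_instances(**request)
    return response["Instances"][0]["InstanceId"]
```

In practice you would create the client with boto3.client("ec2", region_name="us-east-1") (region assumed) and call launch_instance with a real AMI ID from that region; keeping the request builder separate makes the launch parameters easy to review and test.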

2. Containerization and Orchestration

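Python applications are straightforward to package as Docker containers, and the Docker SDK for Python and the official Kubernetes Python client let teams script container builds and deployments, making it easier to run scalable containerized applications.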

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.
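Flask and Django both sit on Python's WSGI interface (PEP 3333). To keep this sketch dependency-free, it is written directly against WSGI rather than Flask or FastAPI (FastAPI uses ASGI instead), and the /health and /greet routes are hypothetical. Because the service keeps no per-process state, any number of replicas can run behind a load balancer.

```python
"""Minimal sketch: a stateless JSON microservice on the plain WSGI interface."""
import json
from urllib.parse import parse_qs


def app(environ, start_response):
    """WSGI entry point; the /health and /greet routes are hypothetical."""
    path = environ.get("PATH_INFO", "/")
    if path == "/health":
        status, payload = "200 OK", {"status": "ok"}
    elif path == "/greet":
        # All input comes from the request itself; nothing is stored between
        # requests, so any replica can answer any call.
        query = parse_qs(environ.get("QUERY_STRING", ""))
        name = query.get("name", ["world"])[0]
        status, payload = "200 OK", {"message": f"hello, {name}"}
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

For a quick local check, wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever() serves it on port 8000; production deployments would normally use a dedicated WSGI server such as Gunicorn.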

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
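A minimal sketch of a Python Lambda function, assuming it sits behind an API Gateway REST API with Lambda proxy integration, which expects a response with statusCode, headers, and body. The name query parameter is hypothetical. Each invocation is independent, which is what lets the platform scale by running more copies in parallel.

```python
"""Minimal sketch: an AWS Lambda handler returning an API Gateway proxy response."""
import json


def lambda_handler(event, context):
    # With proxy integration, query parameters arrive in
    # event["queryStringParameters"], which is None when there are none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

Lambda calls whichever function is configured as the handler (for example, app.lambda_handler if the file is app.py), passing the event and a context object.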

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like Amazon SageMaker, Google Cloud Vertex AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python runs well on serverless platforms and auto-scaling infrastructure, where you pay mainly for the compute you actually use, which can lower cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python libraries and tools help with encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute continuous improvements, which keeps the ecosystem current.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: The standard CPython implementation interprets bytecode, so CPU-bound code typically runs slower than in compiled languages like C++ or JIT-compiled runtimes like Java.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.
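On the memory point, one common optimization is to stream data instead of loading it all at once. This sketch counts HTTP status codes in a log, assuming a hypothetical format where the status code is the last whitespace-separated field on each line.

```python
"""Minimal sketch: aggregate a large log as a stream to keep memory flat."""
from collections import Counter
from typing import Iterable, Iterator


def status_codes(lines: Iterable[str]) -> Iterator[str]:
    """Yield the status-code field of each non-empty line, one line at a time."""
    for line in lines:
        fields = line.split()
        if fields:
            yield fields[-1]  # assumed format: status code is the last field


def count_statuses(lines: Iterable[str]) -> Counter:
    # Counter consumes the generator lazily, so only the current line
    # (plus the running counts) is held in memory.
    return Counter(status_codes(lines))
```

Because a Python file object yields one line at a time, passing an open file (for example a hypothetical access.log) to count_statuses processes even a very large log with roughly constant memory.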

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers mature security libraries such as PyCryptodome and cryptography, plus the standard library's ssl module (which wraps OpenSSL), enabling encryption and authentication in secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like PySpark (the Python API for Apache Spark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

At KSoft Technologies, we make your business shine online with a comprehensive suite of services designed to elevate your digital presence. Our expertise spans custom web development, mobile app solutions, and tailored ERP systems, ensuring your business has the tools it needs to thrive. Whether it's creating a stunning website, custom WordPress development, or PHP solutions, we ensure everything fits your unique needs. But we don’t stop there. Our digital marketing consultation services, including SEO strategies and Google Ads management, help drive targeted traffic and maximize ROI, positioning your brand for growth. Additionally, our DevOps services and business process optimization streamline operations, while our manual testing and UAT ensure your software performs flawlessly. From building an e-commerce website to delivering motion graphics and content creation, we provide end-to-end solutions that empower your business to stand out and succeed in the digital world.

🌐 Your Trusted DevOps Partner: SDH Global 🌐

Are you ready to take your infrastructure and workflows to the next level? SDH’s expert DevOps services ensure seamless operations from start to finish.

⚙️ What We Offer:

Infrastructure management to keep your systems robust 💻

CI/CD pipelines for streamlined development 🚀

Automated testing to save time and resources 🕒

Disaster recovery for ultimate peace of mind 🔒

Cost optimization to maximize ROI 💵

🚀 Why Choose SDH? With our experience across various industries, we ensure your organization stays ahead in today’s competitive landscape.

🌟 Let us guide your business to success through innovation and reliability.

GitOps: Automating Infrastructure with Git-Based Workflows

In today’s cloud-native era, automation is not just a convenience—it’s a necessity. As development teams strive for faster, more reliable software delivery, GitOps has emerged as a game-changing methodology. By using Git as the single source of truth for infrastructure and application configurations, GitOps enables teams to automate deployments, manage environments, and scale effortlessly. This approach is quickly being integrated into modern DevOps services and solutions, especially as the demand for seamless operations grows.

What is GitOps?

GitOps is a set of practices that use Git repositories as the source of truth for declarative infrastructure and applications. Any change to the system, whether a configuration update or a new deployment, is made by modifying Git. An automated process then applies the change to the target environment, and GitOps controllers typically keep comparing the live system with what Git declares so that the two stay in sync. This methodology bridges the gap between development and operations, allowing teams to collaborate using the same version control system they already rely on.

With GitOps, infrastructure becomes code, and managing environments becomes as easy as managing your codebase. Rollbacks, audits, and deployments are all handled through Git, ensuring consistency and visibility.
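The core GitOps loop can be sketched as a comparison between the desired state declared in Git and the live state of the system. This is a simplified, hypothetical model: real controllers such as Argo CD or Flux reconcile Kubernetes resources and let you decide whether resources missing from Git are pruned, while this sketch always prunes. The same loop is what restores an accidentally deleted service, since a missing resource simply shows up as a "create" action.

```python
"""Minimal sketch of GitOps reconciliation over a simplified dict-based state."""
from typing import Dict, List, Tuple

State = Dict[str, dict]  # resource name -> declared spec


def plan(desired: State, live: State) -> List[Tuple[str, str]]:
    """Return (action, resource) pairs that move the live state toward Git."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(("create", name))  # missing, e.g. deleted by accident
        elif live[name] != spec:
            actions.append(("update", name))  # drifted from the declared spec
    for name in sorted(live):
        if name not in desired:
            actions.append(("delete", name))  # not declared in Git (pruned)
    return actions


def reconcile(desired: State, live: State) -> State:
    """Apply the plan to a copy of the live state and return the converged result."""
    result = dict(live)
    for action, name in plan(desired, live):
        if action == "delete":
            del result[name]
        else:
            result[name] = dict(desired[name])
    return result
```

Running plan on every Git commit, and also on a timer, is what lets a controller catch both intended changes and out-of-band drift.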

Real-World Example of GitOps in Action

Consider a SaaS company that manages multiple Kubernetes clusters across environments. Before adopting GitOps, the operations team manually deployed updates, which led to inconsistencies and delays. By shifting to GitOps, the team now updates configurations in a Git repo, which triggers automated pipelines that sync the changes across environments. This transition reduced deployment errors by 70% and improved release velocity by 40%.

GitOps and DevOps Consulting Services

For companies seeking to modernize their infrastructure, DevOps consulting services provide the strategic roadmap to implement GitOps successfully. Consultants analyze your existing systems, assess readiness for GitOps practices, and help create the CI/CD pipelines that connect Git with your deployment tools. They ensure that GitOps is tailored to your workflows and compliance needs.

To explore how experts are enabling seamless GitOps adoption, visit DevOps consulting services offered by Cloudastra.

GitOps in Managed Cloud Environments

GitOps fits naturally into DevOps consulting and managed cloud services, where consistency, security, and scalability are top priorities. Managed cloud providers use GitOps to ensure that infrastructure remains in a desired state, detect drift automatically, and restore environments quickly when needed. With GitOps, they can roll out configuration changes across thousands of instances in minutes, without manual intervention.

Understand why businesses are increasingly turning to DevOps consulting and managed cloud services to adopt modern deployment strategies like GitOps.

GitOps and DevOps Managed Services: Driving Operational Excellence

DevOps managed services teams are leveraging GitOps to bring predictability and traceability into their operations. Since all infrastructure definitions and changes are stored in Git, teams can easily track who made a change, when it was made, and why. This kind of transparency reduces risk and improves collaboration between developers and operations.

Additionally, GitOps enables managed service providers to implement automated recovery solutions. For example, if a critical microservice is accidentally deleted, the Git-based controller recognizes the drift and automatically re-deploys the missing component to match the declared state.

Learn how DevOps managed services are evolving with GitOps to support enterprise-grade reliability and control.

GitOps in DevOps Services and Solutions

Modern DevOps services and solutions are embracing GitOps as a core practice for infrastructure automation. Whether managing multi-cloud environments or microservices architectures, GitOps helps teams streamline deployments, improve compliance, and accelerate recovery. It provides a consistent framework for both infrastructure as code (IaC) and continuous delivery, making it ideal for scaling DevOps in complex ecosystems.

As organizations aim to reduce deployment risks and downtime, GitOps offers a predictable and auditable solution. It is no surprise that GitOps has become an essential part of cutting-edge DevOps services and solutions.

As Alexis Richardson, founder of Weaveworks (the team that coined GitOps), once said:

"GitOps is Git plus automation—together they bring reliability and speed to software delivery."

Why GitOps Matters More Than Ever

The increasing complexity of cloud-native applications and infrastructure demands a method that ensures precision, repeatability, and control. GitOps brings all of that and more by shifting infrastructure management into the hands of developers, using tools they already understand. It reduces errors, boosts productivity, and aligns development and operations like never before.

As Kelsey Hightower, a renowned DevOps advocate, puts it:

"GitOps takes the guesswork out of deployments. Your environment is only as good as what’s declared in Git."

Final Thoughts

GitOps isn’t just about using Git for configuration—it’s about redefining how teams manage and automate infrastructure at scale. By integrating GitOps with your DevOps strategy, your organization can gain better control, faster releases, and stronger collaboration across the board.

Ready to modernize your infrastructure with GitOps workflows? Visit Cloudastra DevOps as a Service to read more or explore our services. Our team of experienced DevOps engineers is here to help you turn innovation into reality faster, smarter, and with measurable outcomes.

How Do DevOps Testing Services Improve Time-to-Market and Product Stability?

DevOps Testing Services

In today’s fast-paced software industry, businesses are under constant pressure to deliver high-quality products quickly. Meeting deadlines without compromising stability can be tough. This is where DevOps Testing Services play a critical role: they streamline testing, improve product performance, and significantly reduce the time it takes to bring a product to market.

What Are DevOps Testing Services?

DevOps Testing Services combine software development (Dev) and IT operations (Ops) with continuous testing. Instead of testing software only after it is built, testing is integrated into every stage of the development process. This helps teams identify and fix problems early, saving time and effort later.

1. Faster Time-to-Market

One of the biggest benefits of DevOps Testing Services is speed. Because testing runs continuously alongside development, issues are caught and resolved right away. This reduces the chance of late-stage delays and shortens the development cycle, allowing businesses to launch products faster.

2. Continuous Feedback and Improvement

With DevOps, teams get rapid feedback through automated testing tools and continuous integration pipelines. Developers can make changes, test them immediately, and push updates without waiting for a full release cycle. This makes product development faster, easier, and more responsive.

3. Improved Product Stability

DevOps Testing Services support stability by running frequent and thorough tests, including unit tests, integration tests, performance tests, and security checks. Bugs and glitches are caught early, lowering the risk of failures in production. As a result, businesses deliver more secure, stable, and reliable products to customers.
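To make continuous testing concrete, here is the kind of small unit test suite a CI pipeline might run on every commit, sketched with Python's built-in unittest module. apply_discount is a hypothetical function under test.

```python
"""Minimal sketch: unit tests a CI pipeline could run on every commit."""
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent, rejecting out-of-range input."""
    if price < 0 or not 0 <= percent <= 100:
        raise ValueError("price must be >= 0 and percent within 0-100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 15), 170.0)

    def test_zero_discount(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 120)
```

A pipeline stage would typically run python -m unittest and fail the build on a non-zero exit code, so a regression is caught before it reaches production.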

4. Enhanced Collaboration

DevOps promotes better collaboration and alignment between an organization's development and operations teams. Everyone works together, shares responsibilities, and uses the same tools. This teamwork leads to more efficient processes and fewer mistakes.

Why Does It Matter for Businesses Today?

Customers expect quick and regular updates, smooth performance, and bug-free experiences. DevOps Testing Services help businesses meet those expectations without sacrificing speed or quality.

Companies like Suma Soft, IBM, and Cyntexa offer DevOps testing strategies customized to each business’s needs, helping them stay competitive, reduce downtime, and bring better products to market faster.

How to Choose the Right Tech Stack for Your Web App in 2025

In this article, you’ll learn how to confidently choose the right tech stack for your web app, avoid common mistakes, and stay future-proof. Whether you're building an MVP or scaling a SaaS platform, we’ll walk through every critical decision.

What Is a Tech Stack? (And Why It Matters More Than Ever)

Let’s not overcomplicate it. A tech stack is the combination of technologies you use to build and run a web app. It includes:

Front-end: What users see (e.g., React, Vue, Angular)

Back-end: What makes things work behind the scenes (e.g., Node.js, Django, Laravel)

Databases: Where your data lives (e.g., PostgreSQL, MongoDB, MySQL)

DevOps & Hosting: How your app is deployed and scaled (e.g., Docker, AWS, Vercel)

Why it matters: The wrong stack leads to poor performance, higher development costs, and scaling issues. The right stack supports speed, security, scalability, and a better developer experience.

Step 1: Define Your Web App’s Core Purpose

Before choosing tools, define the problem your app solves.

Is it data-heavy like an analytics dashboard?

Real-time focused, like a messaging or collaboration app?

Mobile-first, for customers on the go?

AI-driven, using machine learning in workflows?

Example: If you're building a streaming app, you need a tech stack optimized for media delivery, latency, and concurrent user handling.

Need help defining your app’s vision? Bluell AB’s Web Development service can guide you from idea to architecture.

Step 2: Consider Scalability from Day One

Most startups make the mistake of only thinking about MVP speed. But scaling problems can cost you down the line.

Here’s what to keep in mind:

Stateless architecture supports horizontal scaling

Choose microservices or modular monoliths based on team size and scope

Go for asynchronous processing (e.g., Node.js, Python Celery)

Use CDNs and caching for frontend optimization

A poorly optimized stack can increase infrastructure costs by 30–50% during scale. So, choose a stack that lets you scale without rewriting everything.
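As one illustration of asynchronous processing, this sketch uses Python's asyncio to run I/O-bound calls concurrently in a single process. fetch_profile is a hypothetical stand-in that simulates a slow database or HTTP call with asyncio.sleep. This is a different tool from Celery, which hands work off to separate worker processes through a message broker.

```python
"""Minimal sketch: concurrent I/O-bound calls with asyncio."""
import asyncio
import time
from typing import Iterable, List, Tuple


async def fetch_profile(user_id: int) -> dict:
    await asyncio.sleep(0.1)  # hypothetical stand-in for a database or HTTP call
    return {"id": user_id}


async def fetch_all(user_ids: Iterable[int]) -> List[dict]:
    # gather() runs the coroutines concurrently on one event loop and returns
    # results in input order; total time is close to one call, not the sum.
    return await asyncio.gather(*(fetch_profile(uid) for uid in user_ids))


def timed_run(user_ids: Iterable[int]) -> Tuple[List[dict], float]:
    start = time.perf_counter()
    results = asyncio.run(fetch_all(user_ids))
    return results, time.perf_counter() - start
```

With ten simulated 100 ms calls, timed_run(range(10)) should finish in roughly 100 ms rather than a full second, because the event loop overlaps the waiting.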

Step 3: Think Developer Availability & Community

Great tech means nothing if you can’t find people who can use it well.

Ask yourself:

Are there enough developers skilled in this tech?

Is the community strong and active?

Are there plenty of open-source tools and integrations?

Example: Choosing Go or Elixir might give you performance gains, but hiring developers can be tough compared to React or Node.js ecosystems.

Step 4: Match the Stack with the Right Architecture Pattern

Do you need:

A Monolithic app? Best for MVPs and small teams.

A Microservices architecture? Ideal for large-scale SaaS platforms.

A Serverless model? Great for event-driven apps or unpredictable traffic.

Pro Tip: Don’t over-engineer. Start with a modular monolith, then migrate as you grow.

Step 5: Prioritize Speed and Performance

In 2025, user patience is thin. Google has reported that 53% of mobile site visits are abandoned if a page takes longer than 3 seconds to load.

To ensure speed:

Use Next.js or Nuxt.js for server-side rendering

Optimize images and use lazy loading

Use Redis or Memcached for caching

Integrate CDNs like Cloudflare

Benchmark early and often. Use tools like Lighthouse, WebPageTest, and New Relic to monitor.
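The caching advice above usually takes the form of the cache-aside pattern: check the cache, and on a miss load from the source and store the result with an expiry. In this sketch a plain dict stands in for Redis or Memcached so it runs without a server; with Redis you would typically use its SET command with the EX expiry option. The loader callable and the injectable clock are assumptions added for illustration and testing.

```python
"""Minimal sketch: cache-aside with a time-to-live, using a dict as the store."""
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested deterministically
        self._data: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit that has not expired
        value = loader(key)  # miss or expired: read from the slow source
        self._data[key] = (now + self.ttl, value)
        return value
```

The TTL bounds how stale a cached value can get; choosing it is a trade-off between load on the database and freshness of what users see.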

Step 6: Plan for Integration and APIs

Your app doesn’t live in a vacuum. Think about:

Payment gateways (Stripe, PayPal)

CRM/ERP tools (Salesforce, HubSpot)

3rd-party APIs (OpenAI, Google Maps)

Make sure your stack supports REST or GraphQL seamlessly and has robust middleware for secure integration.

Step 7: Security and Compliance First

Security can’t be an afterthought.

Use stacks that support JWT, OAuth2, and secure sessions

Make sure your database handles encryption-at-rest

Use HTTPS, rate limiting, and sanitize inputs

Data breaches are expensive: IBM's 2020 Cost of a Data Breach Report put the global average cost of a breach at $3.86 million. Prevention is cheaper than reaction.
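For the "sanitize inputs" point, the most reliable defense against SQL injection is parameterized queries rather than hand-escaping strings. This sketch uses the standard library's sqlite3 as a stand-in for PostgreSQL or MySQL; their common Python drivers (such as psycopg2) follow the same approach with %s placeholders instead of ?. The users table is hypothetical.

```python
"""Minimal sketch: parameterized SQL queries to prevent injection."""
import sqlite3


def find_user(conn: sqlite3.Connection, email: str):
    # The driver sends email as data, never as SQL text, so input such as
    # "x' OR '1'='1" cannot change the meaning of the query.
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchone()


def demo_db() -> sqlite3.Connection:
    """Create an in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)")
    conn.execute("INSERT INTO users (email) VALUES (?)", ("ana@example.com",))
    return conn
```

Building the same query with string formatting would let the injection string above match every row; with a placeholder it simply matches nothing.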

Step 8: Don’t Ignore Cost and Licensing

Open source doesn’t always mean free. Some tools have enterprise licenses, usage limits, or require premium add-ons.

Cost checklist:

Licensing and usage-based pricing (e.g., Firebase can become costly at scale)

DevOps costs (e.g., AWS vs. DigitalOcean)

Developer productivity (fewer bugs = lower costs)

Budgeting for technology should include time to hire, cost to scale, and infrastructure support.

Step 9: Understand the Role of DevOps and CI/CD

Continuous integration and continuous deployment (CI/CD) aren’t optional anymore.

Choose a tech stack that:

Works well with GitHub Actions, GitLab CI, or Jenkins

Supports containerization with Docker and Kubernetes

Enables fast rollback and testing

This reduces downtime and lets your team iterate faster.

Step 10: Evaluate Real-World Use Cases

Here’s how popular stacks perform:

Look at what companies are using, then adapt, don’t copy blindly.

How Bluell Can Help You Make the Right Tech Choice

Choosing a tech stack isn’t just technical, it’s strategic. Bluell specializes in full-stack development and helps startups and growing companies build modern, scalable web apps. Whether you’re validating an MVP or building a SaaS product from scratch, we can help you pick the right tools from day one.

Conclusion

Think of your tech stack like choosing a foundation for a building. You don’t want to rebuild it when you’re five stories up.

Here’s a quick recap to guide your decision:

Know your app’s purpose

Plan for future growth

Prioritize developer availability and ecosystem

Don’t ignore performance, security, or cost

Lean into CI/CD and DevOps early

Make data-backed decisions, not just trendy ones

Make your tech stack work for your users, your team, and your business, not the other way around.

🚀 Red Hat Services Management and Automation: Simplifying Enterprise IT

As enterprise IT ecosystems grow in complexity, managing services efficiently and automating routine tasks has become more than a necessity—it's a competitive advantage. Red Hat, a leader in open-source solutions, offers robust tools to streamline service management and enable automation across hybrid cloud environments.

In this blog, we’ll explore what Red Hat Services Management and Automation is, why it matters, and how professionals can harness it to improve operational efficiency, security, and scalability.

🔧 What Is Red Hat Services Management?

Red Hat Services Management refers to the tools and practices provided by Red Hat to manage system services—such as processes, daemons, and scheduled tasks—across Linux-based infrastructures.

Key components include:

systemd: The default init system on RHEL, used to start, stop, and manage services.

Red Hat Satellite: For managing system lifecycles, patching, and configuration.

Red Hat Ansible Automation Platform: A powerful tool for infrastructure and service automation.

Cockpit: A web-based interface to manage Linux systems easily.

🤖 What Is Red Hat Automation?

Automation in the Red Hat ecosystem primarily revolves around Ansible, Red Hat’s open-source IT automation tool. With automation, you can:

Eliminate repetitive manual tasks

Achieve consistent configurations

Enable Infrastructure as Code (IaC)

Accelerate deployments and updates

From provisioning servers to configuring complex applications, Red Hat automation tools reduce human error and increase scalability.

🔍 Key Use Cases

1. Service Lifecycle Management

Start, stop, enable, and monitor services across thousands of servers with simple systemctl commands or Ansible playbooks.
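Ansible's service and systemd modules are the usual way to manage services across many hosts, but the underlying check is easy to see from Python on a single machine. This sketch calls systemctl is-active, which prints the unit's state and exits with status 0 only when the unit is active. The unit names are examples, and the fallback for hosts without systemctl is an assumption for non-systemd environments such as minimal containers.

```python
"""Minimal sketch: report systemd unit states on one host via systemctl."""
import shutil
import subprocess
from typing import Dict, Iterable


def service_states(units: Iterable[str]) -> Dict[str, str]:
    """Map each unit name to the state printed by `systemctl is-active`."""
    units = list(units)
    if shutil.which("systemctl") is None:
        # Assumption: hosts without systemd are reported, not treated as errors.
        return {unit: "systemctl-unavailable" for unit in units}
    states = {}
    for unit in units:
        try:
            result = subprocess.run(
                ["systemctl", "is-active", unit],
                capture_output=True, text=True, timeout=10, check=False,
            )
            # stdout normally carries the state name (active, inactive, failed, ...).
            states[unit] = result.stdout.strip() or "unknown"
        except subprocess.TimeoutExpired:
            states[unit] = "timeout"
    return states
```

For example, service_states(["sshd", "crond"]) on a RHEL host would return something like a mapping of each unit to "active" or "inactive".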

2. Automated Patch Management

Use Red Hat Satellite and Ansible to automate updates, ensuring compliance and reducing security risks.

3. Infrastructure Provisioning

Provision cloud and on-prem infrastructure with repeatable Ansible roles, reducing time-to-deploy for dev/test/staging environments.

4. Multi-node Orchestration

Manage workflows across multiple servers and services in a unified, centralized fashion.

🌐 Why It Matters

⏱️ Efficiency: Save countless admin hours by automating routine tasks.

🛡️ Security: Enforce security policies and configurations consistently across systems.

📈 Scalability: Manage hundreds or thousands of systems with the same effort as managing one.

🤝 Collaboration: Teams can collaborate better with playbooks that document infrastructure steps clearly.

🎓 How to Get Started

Learn Linux Service Management: Understand systemctl, logs, units, and journaling.

Explore Ansible Basics: Learn to write playbooks and roles, and to use automation controller (formerly Ansible Tower).

Take a Red Hat Course: Prepare for the Red Hat Certified Engineer (RHCE) certification, whose exam focuses on automation with Ansible, to get hands-on training.

Use RHLS: Get access to labs, practice exams, and expert content through the Red Hat Learning Subscription (RHLS).

✅ Final Thoughts

Red Hat Services Management and Automation isn’t just about managing Linux servers—it’s about building a modern IT foundation that’s scalable, secure, and future-ready. Whether you're a sysadmin, DevOps engineer, or IT manager, mastering these tools can help you lead your team toward more agile, efficient operations.

📌 Ready to master Red Hat automation? Explore our Red Hat training programs and take your career to the next level!

📘 Learn. Automate. Succeed. Begin your journey today! Kindly follow: www.hawkstack.com


Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using the AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least-privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
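The least-privilege practice can be made concrete. The following sketch uses Python only to build and print the JSON. It generates an IAM policy document that allows exactly the operations this tutorial uses: listing one bucket, plus putting, getting, and deleting objects in it. The bucket name and key prefix are placeholders. A real setup might need more, for example KMS permissions if objects are encrypted with a customer-managed key.

```python
import json


def s3_object_policy(bucket: str, prefix: str = "") -> dict:
    """Least-privilege IAM policy for one bucket (and an optional key prefix).

    Grants only what upload/download/delete needs: s3:ListBucket on the
    bucket itself, and Put/Get/DeleteObject on the objects under `prefix`.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListTheBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",  # bucket ARN (no /*)
            },
            {
                "Sid": "ReadWriteDeleteObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",  # object ARNs
            },
        ],
    }


if __name__ == "__main__":
    # "my-example-bucket" and "logs/" are placeholders.
    print(json.dumps(s3_object_policy("my-example-bucket", "logs/"), indent=2))
```

This policy deliberately omits `s3:ListAllMyBuckets`. As a result, a bare `aws s3 ls`, which lists every bucket in the account, would be denied. `aws s3 ls s3://<bucket-name>` still works, because it only needs `s3:ListBucket` on that bucket.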

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, so there are no secrets to hardcode in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install and Verify the AWS CLI
- Install the AWS CLI on the EC2 instance if it is not pre-installed (Amazon Linux images typically include it).
- No access keys are needed: the CLI picks up the IAM role's temporary credentials automatically.
- Verify access by running `aws s3 ls` to list available buckets. This requires the s3:ListAllMyBuckets permission. If the role is scoped to a single bucket, run `aws s3 ls s3://<bucket-name>` instead.

Step 5: Perform File Operations
- Upload files: `aws s3 cp <local-file> s3://<bucket-name>/<key>` uploads a file from EC2 to S3.
- Download files: `aws s3 cp s3://<bucket-name>/<key> <local-file>` downloads a file from S3 to EC2.
- Delete files: `aws s3 rm s3://<bucket-name>/<key>` deletes a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
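The same three operations can also be scripted from Python. The following sketch wraps the boto3 S3 client calls `upload_file`, `download_file`, and `delete_object`. The client is passed in as a parameter so the functions can be exercised without AWS. It assumes boto3 is installed on the instance. On an EC2 instance with the role attached, `boto3.client("s3")` needs no access keys, because boto3 falls back to the role's credentials from the instance metadata service.

```python
# Usage on the EC2 instance (assumes boto3 is installed there):
#   import boto3
#   s3 = boto3.client("s3")  # no access keys: boto3 uses the attached role's credentials
#   upload(s3, "app.log", "<bucket-name>", "logs/app.log")


def upload(s3, local_path: str, bucket: str, key: str) -> None:
    """Roughly equivalent to: aws s3 cp <local_path> s3://<bucket>/<key>"""
    s3.upload_file(local_path, bucket, key)


def download(s3, bucket: str, key: str, local_path: str) -> None:
    """Roughly equivalent to: aws s3 cp s3://<bucket>/<key> <local_path>"""
    s3.download_file(bucket, key, local_path)


def delete(s3, bucket: str, key: str) -> None:
    """Roughly equivalent to: aws s3 rm s3://<bucket>/<key>"""
    s3.delete_object(Bucket=bucket, Key=key)
```

Keeping the client as a parameter also makes these helpers easy to unit test with a stand-in object, which fits the automation-friendly goal described above.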

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.


What Is Argo CD, and When Was It Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD has become a popular continuous delivery (CD) tool for deploying applications to Kubernetes. It is built on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and released publicly after Intuit acquired Applatix in 2018. Applatix's founding developers, Hong Wang, Jesse Suen, and Alexander Matyushentsev, had open-sourced the Argo project in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

User-oriented documentation is available for additional features. To upgrade an existing Argo CD installation, see the upgrade guide. Developer-oriented documentation is available for anyone building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool configured as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications. It compares their current, live state against the desired target state, as specified in the Git repository. A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences. It can also sync the live state back to the desired target state, either automatically or manually. Changes made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
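The following is a deliberately simplified Python model of the controller's comparison, not Argo CD's actual code. Resources are plain dictionaries keyed by name. The app is `Synced` only when the live and target states match, and `sync` applies the Git state. The `prune` flag mirrors Argo CD's default behavior: it does not delete resources removed from Git unless pruning is enabled. Everything else, including names and data shapes, is hypothetical.

```python
from typing import Dict, List

State = Dict[str, dict]  # resource name -> simplified spec


def diff(target: State, live: State) -> Dict[str, List[str]]:
    """Compare the desired state from Git with the live cluster state."""
    return {
        "missing": sorted(target.keys() - live.keys()),  # declared in Git, absent in cluster
        "extra": sorted(live.keys() - target.keys()),    # in cluster, no longer in Git
        "changed": sorted(k for k in target.keys() & live.keys() if target[k] != live[k]),
    }


def sync_status(target: State, live: State) -> str:
    return "OutOfSync" if any(diff(target, live).values()) else "Synced"


def sync(target: State, live: State, prune: bool = False) -> State:
    """Return the live state after applying the target state."""
    new_live = dict(live)
    new_live.update(target)  # create or update every declared resource
    if prune:  # delete leftovers only when pruning is enabled
        for name in live.keys() - target.keys():
            del new_live[name]
    return new_live


target = {"deployment/web": {"replicas": 3}, "service/web": {"port": 80}}
live = {"deployment/web": {"replicas": 1}, "configmap/old": {}}
print(sync_status(target, live))                            # OutOfSync
print(sync_status(target, sync(target, live, prune=True)))  # Synced
```

Without `prune=True`, the leftover `configmap/old` keeps the app OutOfSync even after a sync. This matches how a stale resource stays visible until it is explicitly pruned.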

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its responsibilities include:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication and auth delegation to external identity providers

Git webhook event listener/forwarder

Repository Server

The repository server is an internal service that maintains a local cache of the Git repository holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when given the following inputs:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications. It compares their current, live state against the desired target state, as specified in the repository. When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
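In Argo CD, a resource becomes a hook through the `argocd.argoproj.io/hook` annotation (for example, `PreSync`). Resources without that annotation are applied in the Sync phase. The toy Python model below only illustrates the ordering: PreSync resources run first, then Sync, then PostSync, and a failure stops later phases. It leaves out real-world details such as sync waves, health checks, and `SyncFail` hooks. The resource names are invented.

```python
from typing import Callable, Dict, List

# Phase order used by Argo CD sync hooks (SyncFail omitted for brevity).
PHASES = ["PreSync", "Sync", "PostSync"]


def plan_sync(resources: Dict[str, str]) -> List[List[str]]:
    """Group resource names by phase, in execution order.

    `resources` maps a resource name to its phase; ordinary manifests
    (no hook annotation) belong to "Sync".
    """
    return [sorted(n for n, p in resources.items() if p == phase) for phase in PHASES]


def run_sync(resources: Dict[str, str], apply: Callable[[str], bool]) -> str:
    """Apply each phase in order and stop at the first failure."""
    for phase, names in zip(PHASES, plan_sync(resources)):
        for name in names:
            if not apply(name):
                return f"Failed in {phase}: {name}"
    return "Succeeded"


app = {
    "db-migration-job": "PreSync",  # e.g. run schema migrations first
    "deployment/web": "Sync",
    "service/web": "Sync",
    "smoke-test-job": "PostSync",   # e.g. verify the new version afterwards
}
print(plan_sync(app))
# [['db-migration-job'], ['deployment/web', 'service/web'], ['smoke-test-job']]
```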

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in a Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Automated or manual syncing of applications to their desired state

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com


Why You Need DevOps Consulting for Kubernetes Scaling

Scaling Kubernetes clusters has become a challenge for many organizations. As more companies move to containerized applications, running and scaling multiple Kubernetes clusters gets harder. In this article, you’ll learn the main challenges, along with best practices for scaling Kubernetes deployments successfully with expert guidance.

Kubernetes (K8s), the open-source platform for deploying and managing containerized applications, is now the norm in containerized environments. As businesses adopt DevOps services in the USA for their flexibility and scalability, managing Kubernetes clusters at scale has become a core part of operations.

Understanding Kubernetes Clusters

Before getting into the challenges and best practices, let’s start with what a Kubernetes cluster is and why it matters for modern app deployments. A cluster is a set of nodes (physical or virtual machines) that run containerized workloads under the management of a control plane. K8s clusters are highly scalable and dynamic, which makes them well suited to large applications served from multiple locations.

The Growing Complexity Organizations Must Address

Kubernetes has become the default container orchestration solution for many companies. The complexity lies in scaling it: keeping many clusters healthy and consistent is hard. Kubernetes developers face recurring problems with consistency, security, and performance. The most common challenges are described below.

Key Challenges in Managing Large-Scale K8s Deployments

Configuration Management: Configuring many Kubernetes clusters consistently is difficult. Enterprises need uniform policies, security settings, and resource allocations, while keeping the flexibility to support unique workloads.

Resource Optimization: DevOps consulting services often emphasize distributing resources properly, so that clusters are not overprovisioned and applications still run smoothly.

Security and Compliance: Securing distributed Kubernetes clusters requires solid policies and monitoring. Companies have to apply standard security controls while meeting different compliance standards.

Monitoring and Observability: You’ll need advanced monitoring to track the health and performance of many clusters. DevOps services in the USA focus on complete observability tooling for efficient cluster management.

Best Practices for Scaling Kubernetes

Implement Infrastructure as Code (IaC)

Apply GitOps processes to cluster configuration.

Keep all cluster settings under version control.

Automate cluster creation and administration

Adopt Multi-Cluster Management Tools

Modern organizations should:

Use dedicated multi-cluster management software.

Utilize centralized control planes.

Optimize CI/CD Pipelines

Kubernetes is well suited to automating CI/CD pipelines, but the pipelines themselves still need to be optimized. With techniques like blue-green deployments or canary releases, you can roll out updates gradually instead of pushing changes to the whole system at once. This reduces downtime and helps ensure that only stable releases reach full production traffic.
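The canary idea reduces to a simple control loop. Move a growing share of traffic to the new version, and roll back as soon as a health metric crosses a threshold. The following Python sketch assumes hypothetical traffic steps and a 1% error-rate threshold. It takes a caller-supplied function in place of a real monitoring query. In Kubernetes, progressive-delivery tools such as Argo Rollouts or Flagger automate this kind of loop.

```python
from typing import Callable, List


def canary_rollout(
    steps: List[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> str:
    """Progressively shift traffic to a new version, rolling back on bad metrics.

    steps: percentage of traffic sent to the new version at each stage.
    error_rate_at: returns the observed error rate at a given traffic weight
        (a stand-in for a query against your monitoring system).
    """
    for weight in steps:
        observed = error_rate_at(weight)
        if observed > max_error_rate:
            # Abort: send all traffic back to the stable version.
            return f"rolled back at {weight}%"
    return "promoted"  # every step passed; the new version serves the final share


print(canary_rollout([10, 25, 50, 100], lambda w: 0.002))  # promoted
print(canary_rollout([10, 25, 50, 100], lambda w: 0.03 if w >= 50 else 0.002))  # rolled back at 50%
```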

Containerization with Kubernetes also enables faster, more reliable builds, since developers can build and test apps in isolated environments. The build pipeline should be tightly integrated with the Kubernetes clusters so that updates flow through properly.

Establish Standardization

When you hire DevOps developers, always make sure they:

Create standardized templates

Implement consistent naming conventions.

Develop reusable deployment patterns.

Optimize Resource Management

Effective resource management includes:

Implementing auto-scaling policies

Setting resource quotas and limits on resource allocations

Using the Cluster Autoscaler for node management
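For the auto-scaling policies above, the Kubernetes Horizontal Pod Autoscaler (HPA) documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The following Python sketch applies that formula. It skips scaling when the ratio is within a tolerance (the HPA's default tolerance is 0.1) and clamps the result to min/max bounds. The bound values here are illustrative assumptions. The real controller also applies stabilization windows and other behavior this sketch ignores.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # illustrative bound
    max_replicas: int = 10,  # illustrative bound
    tolerance: float = 0.1,
) -> int:
    """Replica count following the HPA formula, with tolerance and min/max clamping."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance: leave the replica count alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```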

Enhance Security Measures

Security best practices involve:

Role-based access control (RBAC): restrict what each user can do based on their role

Network policies: isolate workloads by controlling which pods can communicate with each other

Updates and security audits: run security audits regularly and keep clusters upgraded
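Kubernetes RBAC rules list API groups, resources, and verbs, and permissions are purely additive. A request is allowed if any bound rule matches, and there are no deny rules. The following simplified Python model shows that matching logic, including the `*` wildcard. It ignores bindings, namespaces, and `resourceNames`. The example role is modeled on the "pod-reader" Role from the Kubernetes documentation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PolicyRule:
    """Simplified version of a Kubernetes RBAC rule."""
    api_groups: List[str]  # "" is the core API group (pods, services, ...)
    resources: List[str]
    verbs: List[str]


def _matches(value: str, allowed: List[str]) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: List[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    """Allowed if ANY rule matches all three fields; RBAC has no deny rules."""
    return any(
        _matches(api_group, r.api_groups)
        and _matches(resource, r.resources)
        and _matches(verb, r.verbs)
        for r in rules
    )


# Modeled on the "pod-reader" Role from the Kubernetes RBAC docs.
pod_reader = [PolicyRule(api_groups=[""], resources=["pods"], verbs=["get", "watch", "list"])]

print(is_allowed(pod_reader, "", "pods", "list"))            # True
print(is_allowed(pod_reader, "", "pods", "delete"))          # False
print(is_allowed(pod_reader, "apps", "deployments", "get"))  # False
```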

Leverage DevOps Services and Expertise

Hire dedicated DevOps developers or take advantage of DevOps consulting services like Spiral Mantra to get the best services under one roof. The company has a team of experts who have set up, operated, and optimized Kubernetes at enterprise scale. By hiring DevOps developers or engaging DevOps services in the USA, organizations can be better prepared to address Kubernetes issues efficiently. DevOps consultants can also help automate and standardize K8s alongside existing workflows and processes.

Spiral Mantra DevOps Consulting Services

Spiral Mantra is a DevOps consulting service in the USA specializing in Azure, Google Cloud Platform, and AWS. We are CI/CD integration experts for automated deployment pipelines, and our Kubernetes developers handle containerization and automated container orchestration. We offer everything from the initial assessment through deployment and management, with skilled experts to help your organization achieve the best performance.

Frequently Asked Questions (FAQs)

Q. How can businesses manage security on different K8s clusters?

Businesses can secure multiple clusters through regular security audits, security scanners, and well-defined network policies. With the right DevOps consulting services, you can develop and establish robust security plans.

Q. What is DevOps in Kubernetes management?

For Kubernetes management, it is important to implement DevOps practices like automation, infrastructure as code, continuous integration and deployment, security, compliance, etc.

Q. What are the major challenges developers face when managing clusters at scale?

Security concerns, resource management, and overall complexity are the most common challenges. CI/CD pipeline management is another major source of complexity that developers face.

Conclusion

Scaling Kubernetes clusters takes an integrated strategy with the right tools, methods, and knowledge. Automation, standardization, and security should be the main objectives, and organizations can rely on professional DevOps consulting services to get the most out of their K8s implementations. If companies follow these best practices and partner with skilled Kubernetes developers, they can run containerized applications efficiently and reliably at scale.


Full Stack Testing vs. Full Stack Development: What’s the Difference?

In today’s fast-evolving tech world, buzzwords like Full Stack Development and Full Stack Testing have gained immense popularity. Both roles are vital in the software lifecycle, but they serve very different purposes. Whether you’re a beginner exploring your career options or a professional looking to expand your skills, understanding the differences between Full Stack Testing and Full Stack Development is crucial. Let’s dive into what makes these two roles unique!

What Is Full Stack Development?

Full Stack Development refers to the ability to build an entire software application – from the user interface to the backend logic – using a wide range of tools and technologies. A Full Stack Developer is proficient in both front-end (user-facing) and back-end (server-side) development.

Key Responsibilities of a Full Stack Developer:

Front-End Development: Building the user interface using tools like HTML, CSS, JavaScript, React, or Angular.

Back-End Development: Creating server-side logic using languages and runtimes such as Node.js, Python, Java, or PHP.

Database Management: Handling databases such as MySQL, MongoDB, or PostgreSQL.

API Integration: Connecting applications through RESTful or GraphQL APIs.

Version Control: Using tools like Git for collaborative development.

Skills Required for Full Stack Development:

Proficiency in programming languages (JavaScript, Python, Java, etc.)

Knowledge of web frameworks (React, Django, etc.)

Experience with databases and cloud platforms

Understanding of DevOps tools

In short, a Full Stack Developer handles everything from designing the UI to writing server-side code, ensuring the software runs smoothly.

What Is Full Stack Testing?

Full Stack Testing is all about ensuring quality at every stage of the software development lifecycle. A Full Stack Tester is responsible for testing applications across multiple layers – from front-end UI testing to back-end database validation – ensuring a seamless user experience. They blend manual and automation testing skills to detect issues early and prevent software failures.

Key Responsibilities of a Full Stack Tester:

UI Testing: Ensuring the application looks and behaves correctly on the front end.

API Testing: Validating data flow and communication between services.

Database Testing: Verifying data integrity and backend operations.

Performance Testing: Ensuring the application performs well under load using tools like JMeter.

Automation Testing: Automating repetitive tests with tools like Selenium or Cypress.

Security Testing: Identifying vulnerabilities to prevent cyber-attacks.

Skills Required for Full Stack Testing:

Knowledge of testing tools like Selenium, Postman, JMeter, or TOSCA

Proficiency in both manual and automation testing

Understanding of test frameworks like TestNG or Cucumber

Familiarity with Agile and DevOps practices

Basic knowledge of programming for writing test scripts

A Full Stack Tester plays a critical role in identifying bugs early in the development process and ensuring the software works as intended.

Which Career Path Should You Choose?

The choice between Full Stack Development and Full Stack Testing depends on your interests and strengths:

Choose Full Stack Development if you love coding, creating interfaces, and building software solutions from scratch. This role is ideal for those who enjoy developing creative products and working with both front-end and back-end technologies.

Choose Full Stack Testing if you have a keen eye for detail and enjoy problem-solving by finding bugs and ensuring software quality. If you love automation, performance testing, and working with multiple testing tools, Full Stack Testing is the right path.

Why Both Roles Are Essential

Both Full Stack Developers and Full Stack Testers are integral to software development. While developers focus on creating functional features, testers ensure that everything runs smoothly and meets user expectations. In an Agile or DevOps environment, these roles often overlap, with testers and developers working closely to deliver high-quality software in shorter cycles.

Final Thoughts

Whether you opt for Full Stack Testing or Full Stack Development, both fields offer exciting opportunities with tremendous growth potential. With software becoming increasingly complex, the demand for skilled developers and testers is higher than ever.

At TestoMeter Pvt. Ltd., we provide comprehensive training in both Full Stack Development and Full Stack Testing to help you build a future-proof career. Whether you want to build software or ensure its quality, we’ve got the perfect course for you.

Ready to take the next step? Explore our Full Stack courses today and start your journey toward a successful IT career!


For More Details

Interested in kick-starting your Software Developer/Software Tester career? Contact us today or visit our website for course details, success stories, and more!

🌐 Visit: https://www.testometer.co.in/


Embrace the Future with AI 🚀

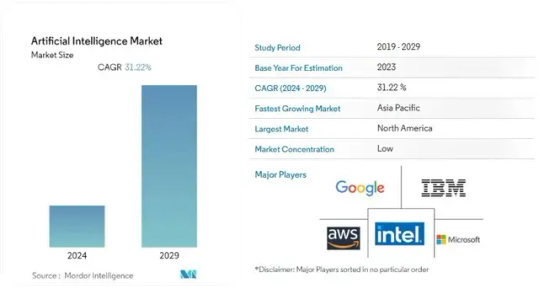

The AI industry is set to skyrocket from USD 2.41 trillion in 2023 to a projected USD 30.13 trillion by 2032, growing at a phenomenal CAGR of 32.4%! The AI market continues to grow rapidly, driven by advances in machine learning, natural language processing, and cloud computing. Key industry players are investing heavily in AI to enhance their product offerings and gain a competitive advantage.
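The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The short Python calculation below uses the post's own figures over the nine years from 2023 to 2032. It only checks that the numbers are internally consistent, not the underlying market estimates.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


rate = cagr(2.41, 30.13, 2032 - 2023)  # 9 compounding years, values in USD trillions
print(f"{rate:.1%}")  # prints 32.4%
```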

Here is a brief analysis of why and how AI can transform businesses to stay ahead in the digital age.

Key Trends:

Predictive Analytics: There’s an increasing demand for predictive analytics solutions across various industries to leverage data-driven decision-making.

Data Generation: Massive growth in data generation due to technological advancements is pushing the demand for AI solutions.

Cloud Adoption: The adoption of cloud-based applications and services is accelerating AI implementation.

Consumer Experience: Companies are focusing on enhancing consumer experience through AI-driven personalized services.

Challenges:

Initial Costs: High initial costs and concerns over replacing the human workforce.

Skill Gap: A lack of skilled AI technicians and experts.

Data Privacy: Concerns regarding data privacy and security.

Vabro is excited to announce the launch of Vabro Genie, one of the most intelligent SaaS AI engines. Vabro Genie helps companies manage projects, DevOps, and workflows with unprecedented efficiency and intelligence. Don’t miss out on leveraging this game-changing tool!

Visit www.vabro.com


What Are DevOps Testing Services and Why Do Modern Teams Need Them?

DevOps Testing Services

As businesses race to release better software faster, development and operations teams are under increasing pressure to deliver high-quality applications quickly and reliably. Traditional testing approaches often create roadblocks in this fast-paced environment. That’s where DevOps Testing services come in, offering a modern solution that integrates testing seamlessly into the development and deployment lifecycle.

What Is DevOps Testing?

DevOps Testing is a practice that embeds continuous testing into the DevOps lifecycle. It ensures that quality assurance (QA) is not a separate, last-minute task but a continuous process, integrated from the early stages of software development through deployment and beyond.

In a DevOps environment, testing is automated, collaborative, and fast-paced. The goal is to detect bugs early, improve code quality, and support frequent software releases without sacrificing the product's performance or user experience.

Key Features of DevOps Testing Services

1. Continuous Testing

Automated tests run at every stage of development, so code changes are validated continuously. This lets teams catch defects early and fix them quickly.

2. Integration with CI/CD Pipelines

DevOps Testing services work in sync with Continuous Integration and Continuous Deployment (CI/CD) tools. Tests run automatically whenever new code is committed, giving developers immediate feedback.

3. Test Automation

Manual testing slows down the software lifecycle. DevOps testing relies heavily on automation frameworks that run unit, integration, regression, and performance tests with minimal human intervention.
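As a minimal illustration of the kind of automated test a CI pipeline runs on every commit, here is a Python `unittest` example. The function under test, `apply_discount`, is hypothetical. The last test is a regression-style check: it pins down expected behavior so that a later change cannot silently break it.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_invalid_percent(self):
        # Regression-style check: invalid input must keep raising an error.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


if __name__ == "__main__":
    unittest.main(exit=False)  # exit=False: report results without calling sys.exit
```

In a pipeline, a command such as `python -m unittest <module>` would run these tests on each commit and fail the build if any test fails.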

4. Shift-Left Approach

Testing is no longer just a QA responsibility. In DevOps, developers are encouraged to write and run tests early in the development process, which reduces the cost and time of bug fixes.

5. Collaboration and Transparency

Testing is collaborative in DevOps. Developers, QA teams, and operations teams share responsibility for quality, improving transparency and communication between teams.

Why Do Modern Teams Need DevOps Testing?

1. Faster Time-to-Market

DevOps Testing helps teams reduce testing time through automation and early bug detection. This directly accelerates product delivery and overall release cycles.

2. Higher Product Quality

By testing at every step, teams ensure that each feature, update, or patch meets the set quality benchmarks. This reduces critical failures after deployment and improves overall user satisfaction.

3. Reduced Costs

Early bug detection and continuous feedback prevent costly post-release fixes. Automated testing also reduces manual effort and repetitive tasks.

4. Scalability

DevOps Testing enables teams to run thousands of test cases in parallel across environments. This makes it easier to scale testing as applications grow in size and complexity.

5. Improved Developer Productivity

With faster feedback loops, developers can focus on building features instead of debugging and reworking late-stage code errors, which boosts productivity.

6. Better Risk Management

Continuous testing enables businesses to identify security vulnerabilities, performance bottlenecks, and compatibility issues before deployment, lowering the risk of failure in production environments.

Use Cases of DevOps Testing in Action

E-commerce platforms running high-volume sales events can rely on continuous load testing to ensure stability during peak traffic.

Financial institutions can integrate security testing tools to check vulnerabilities after every code update.

Healthcare and other regulated industries benefit from continuous compliance and audit checks via automated QA.

What Should You Look for in a DevOps Testing Partner?

When choosing a partner for DevOps Testing services, consider:

Experience with CI/CD pipelines and automation tools

Ability to customize test strategies for your application

Knowledge of cloud-native environments and containerization (Docker, Kubernetes)

Support for various testing types: unit, API, integration, performance, and security

Strong communication and collaboration processes

In a world where speed, reliability, and customer satisfaction drive business success, DevOps Testing has become a necessity, not a luxury. It brings developers, testers, and operations teams together to deliver stable, secure, and high-performing applications faster. Forward-thinking, quality-focused organizations often work with experienced service providers who understand the nuances of testing in a DevOps setup. Suma Soft, for instance, offers complete DevOps Testing services tailored to modern team workflows, helping businesses stay agile, competitive, and future-ready.


Breaking Barriers With DevOps: A Digital Transformation Journey

In today's rapidly evolving technological landscape, the term "DevOps" has become ubiquitous. But what does it actually involve, and why does it matter so much in software development and IT operations? In this guide, we take a closer look at the principles, practices, and substantial advantages that DevOps brings to the table.

Understanding DevOps

DevOps, a fusion of "Development" and "Operations," transcends being a mere collection of practices; it embodies a cultural and collaborative philosophy. At its core, DevOps aims to bridge the historical gap that has separated development and IT operations teams. Through the promotion of collaboration and the harnessing of automation, DevOps endeavors to optimize the software delivery pipeline, empowering organizations to efficiently and expeditiously deliver top-tier software products and services.

Key Principles of DevOps

Collaboration: DevOps champions the concept of seamless collaboration between development and operations teams. This approach dismantles the conventional silos, cultivating communication and synergy.

Automation: Automation is crucial to DevOps. It entails using tools and scripts to automate mundane and repetitive tasks, such as code integration, testing, and deployment. Automation not only reduces errors but also accelerates the software delivery process.

Continuous Integration (CI): Continuous Integration (CI) is the practice of frequently merging code changes into a shared repository, often several times a day, with each change automatically built and tested. This lets teams detect integration issues early in development and resolve them quickly.

Continuous Delivery (CD): Continuous Delivery (CD) extends CI by automating the release process. CD ensures that code changes can be delivered to production or staging environments quickly and reliably.

Monitoring and Feedback: DevOps places a premium on real-time monitoring of applications and infrastructure. This makes it possible to identify issues promptly and to collect feedback for continuous improvement.

Core Practices of DevOps

Infrastructure as Code (IaC): Infrastructure as Code (IaC) encompasses the management and provisioning of infrastructure using code and automation tools. This practice ensures uniformity and scalability in infrastructure deployment.

Containerization: Containerization, implemented with tools like Docker, packages applications and their dependencies into standardized units called containers. Containers simplify deployment across heterogeneous environments.

Orchestration: Orchestration tools, such as Kubernetes, oversee the deployment, scaling, and monitoring of containerized applications, ensuring judicious resource utilization.

Microservices: A microservices architecture breaks applications into smaller, independently deployable services. Teams can build, test, and deploy these services separately, which increases flexibility.

Benefits of DevOps

When an organization embraces DevOps, it doesn't merely adopt a set of practices; it unlocks benefits that can transform its approach to software development and IT operations. Let's look at these advantages in more detail:

1. Faster Time to Market: In today's competitive landscape, speed is of the essence. DevOps expedites the software delivery process, enabling organizations to swiftly roll out new features and updates. This acceleration provides a distinct competitive edge, allowing businesses to respond promptly to market demands and stay ahead of the curve.

2. Improved Quality: DevOps places a premium on automation and continuous testing, and this focus on quality results in better software products. By reducing manual intervention and ensuring thorough testing, DevOps minimizes the likelihood of glitches in production. This in turn improves customer satisfaction and trust.

3. Increased Efficiency: The automation-centric nature of DevOps eliminates the need for laborious manual tasks. This not only saves time but also amplifies operational efficiency. Resources that were once tied up in repetitive chores can now be redeployed for more strategic and value-added activities.

4. Enhanced Collaboration: Collaboration is at the heart of DevOps. By breaking down the traditional silos that often exist between development and operations teams, DevOps fosters a culture of teamwork. This collaborative spirit encourages innovation, joint problem-solving, and a shared sense of accountability. When teams work together seamlessly, they deliver better results.

5. Increased Resilience: The ability to identify and address issues promptly is a hallmark of DevOps. Real-time monitoring and feedback loops provide an early warning system for potential problems. This proactive approach not only prevents issues from escalating but also strengthens system resilience. Organizations become better equipped to weather unexpected challenges.

6. Scalability: As businesses grow, so do their infrastructure and application needs. DevOps practices are inherently scalable. Whether it's expanding server capacity or deploying additional services, DevOps enables organizations to scale up or down as required. This adaptability ensures that resources are allocated optimally, regardless of the scale of operations.

7. Cost Savings: Automation and effective resource management are key drivers of long-term cost reductions. By minimizing manual intervention, organizations can save on labor costs. Moreover, DevOps practices promote efficient use of resources, resulting in reduced operational expenses. These cost savings can be channeled into further innovation and growth.
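The scalability point (item 6) is often implemented with an autoscaling rule. The Python sketch below uses the proportional formula described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, default bounds, and example CPU figures are illustrative assumptions. The real autoscaler also applies a tolerance band and stabilization windows, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric),
    then clamped to the range [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale up.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
    # 4 replicas averaging 30% CPU against a 60% target: scale down.
    print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
    # A large spike is capped by max_replicas.
    print(desired_replicas(4, current_metric=300, target_metric=60))  # 10
```

Scaling down matters as much as scaling up. Releasing idle replicas is where the cost savings in item 7 come from.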

In summary, DevOps is more than a passing trend; it is a transformative approach to software development and IT operations. It champions collaboration, automation, and continuous improvement, enabling organizations to respond to changing markets and customer needs with agility and efficiency.

Whether you want to build your skills, start a new career, or stay current in your existing role, ACTE Technologies can support your ongoing learning and career growth. Enroll today and unlock your potential in the dynamic field of technology. Your journey towards success starts here. Embracing DevOps practices can make software development faster, more reliable, and higher in quality. Join the DevOps revolution today!
