GenAI in Data Governance: Bridging Gaps and Enhancing Compliance

Effective data governance is essential for organizations to maintain data accuracy, ensure security, and achieve compliance in today’s digital landscape. The emergence of Generative AI (Gen AI) has significantly improved the efficiency and reliability of data governance processes.

Cloud platforms offer scalability and flexibility, enabling businesses to store, process, and access vast amounts of data with ease. Unlike traditional on-premises infrastructure, cloud-based solutions eliminate physical limitations, making them indispensable for modern data management. This shift toward cloud technologies is a major driver behind the increasing demand for AI-powered data management solutions.

In this blog, we will delve into the transformative applications of Gen AI in data governance. We will also explore the latest advancements in AI-driven governance tools that have emerged in recent years. Let’s uncover how Gen AI is reshaping the way organizations manage, secure, and utilize their data. But first, we need to understand what data governance is.

Key Takeaways:

GenAI is transforming data governance by introducing intelligent, automated solutions.

GenAI can play many roles in data management, helping businesses automate time-consuming governance tasks.

Integrating GenAI into your data governance brings numerous benefits, which this blog explores.

The integration of GenAI into data governance is paving the way for smarter and more adaptive data management.

What is Data Governance?

Data governance refers to the structured management of data throughout its entire lifecycle, from acquisition to usage and disposal. It is essential for every business. With businesses rapidly embracing digital transformation, data has become their most critical asset.

Senior leaders rely on accurate and timely data to make informed strategic decisions. Marketing and sales teams depend on reliable data to anticipate customer preferences and drive engagement. Similarly, procurement and supply chain professionals need precise data to optimize inventory levels and reduce manufacturing costs.

Furthermore, compliance officers must ensure data is managed in line with internal policies and external regulations. Without effective data governance, achieving these goals becomes challenging, potentially impacting business performance and compliance standards.

By implementing robust data governance practices, organizations can ensure data quality, foster trust, and drive better decision-making across all departments. Transitioning to a data-driven approach empowers businesses to remain competitive and agile in a rapidly evolving market.

Now that we know what data governance is, let’s look at the roles GenAI can play in data management.

The Role of GenAI in Data Governance

Generative AI (Gen AI) is a transformative subset of artificial intelligence that focuses on creating new content such as text, images, audio, and videos. It works by analyzing patterns in existing data and leveraging advanced generative models to produce outputs that closely mimic its training data. This technology enables the creation of diverse content, ranging from creative writing to hyper-realistic visuals.

While primarily recognized for content generation, Gen AI holds significant potential in revolutionizing data governance. Its advanced capabilities can streamline key aspects of data management and compliance processes, delivering accuracy and efficiency.

Automating Data Management Tasks

Gen AI can automate repetitive processes like data labeling, profiling, and classification. These tasks, often prone to human error, become more precise and less time-consuming with automation. By minimizing manual intervention, organizations can strengthen their data governance frameworks and ensure greater consistency. Automating the management of AI-ready data can be a significant step toward staying competitive in the industry.
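To make the classification step concrete, here is a minimal Python sketch that labels table columns as likely PII. It is a simple rule-based stand-in, not a generative model. The regex patterns, the 80% match threshold, and the names classify_column and classify_table are all illustrative assumptions. A GenAI-assisted pipeline might propose labels like these for a data steward to confirm.

```python
import re

# Illustrative patterns only. Order matters: the SSN rule is checked before the
# looser phone rule, because an SSN like 123-45-6789 also fits the phone pattern.
PII_PATTERNS = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+\.[\w.-]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "phone": re.compile(r"^\+?[\d\s().-]{7,20}$"),
}


def classify_column(values, threshold=0.8):
    """Return the first PII label whose pattern matches at least `threshold`
    of the non-empty values, or 'unclassified' if no pattern qualifies."""
    non_empty = [str(v).strip() for v in values if v is not None and str(v).strip()]
    if not non_empty:
        return "unclassified"
    for label, pattern in PII_PATTERNS.items():
        hits = sum(1 for v in non_empty if pattern.match(v))
        if hits / len(non_empty) >= threshold:
            return label
    return "unclassified"


def classify_table(table):
    """Label every column of a table given as {column_name: [values]}."""
    return {column: classify_column(values) for column, values in table.items()}
```

Matching a share of values rather than all of them tolerates a few malformed entries. A real classifier would also weigh column names and context.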

Ensuring High-Quality Data for Decision-Making

Gen AI excels at identifying patterns and detecting anomalies in large datasets. This capability ensures that the data driving business decisions is both reliable and consistent. High-quality data enhances the accuracy of insights, reducing the risks associated with flawed or incomplete information.
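Anomaly detection can be as simple as a statistical outlier test. The sketch below uses a z-score check. This is a classic statistical technique, used here for illustration; it is not a GenAI method. The function name and the default threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values, z_threshold=3.0):
    """Return (index, value) pairs whose z-score magnitude exceeds z_threshold.

    Uses the sample mean and sample standard deviation of the whole series."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > z_threshold]
```

A single extreme value also inflates the standard deviation. A robust alternative based on the median absolute deviation may therefore work better on small or heavily skewed samples.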

Facilitating Transparency

Gen AI promotes transparency by making data governance processes more visible and understandable. It provides clear insights into data handling and processing methods, building trust across teams.

Supporting Regulatory Compliance

Complying with complex regulations like GDPR and CCPA is a critical challenge for organizations. Gen AI can simplify this by performing automated audits and monitoring data handling practices in real time. It can help enforce policies and flag necessary changes as legal standards evolve, reducing compliance risks.
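As one example of an automated audit, the sketch below checks records against a retention policy, in the spirit of GDPR’s storage-limitation principle. The policy table, the record fields (id, category, collected_on), and the function name are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical retention policy: maximum number of days each category may be kept.
RETENTION_DAYS = {"marketing": 365, "support": 730}


def audit_retention(records, today):
    """Return (record_id, finding) pairs for records that break the policy.

    Records whose category has no policy are flagged too, because no rule
    governs how long they may be kept."""
    findings = []
    for rec in records:
        limit = RETENTION_DAYS.get(rec["category"])
        if limit is None:
            findings.append((rec["id"], "no retention policy"))
        elif today - rec["collected_on"] > timedelta(days=limit):
            findings.append((rec["id"], "retention period exceeded"))
    return findings
```

Running a check like this on a schedule turns a written policy into a monitored control. Its findings can feed a review or deletion queue.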

Enabling Better Collaboration

With its intuitive tools, Gen AI fosters better collaboration across teams by providing shared, accessible insights into datasets. This eliminates silos and ensures alignment between business, compliance, and technical teams.

Reducing Manual Workload

By automating routine data management tasks, Gen AI frees up organizational resources. Employees can shift their focus from repetitive activities to strategic initiatives. This transition empowers businesses to prioritize innovation and growth instead of getting entangled in manual processes.

Gen AI’s ability to enhance data reliability, streamline compliance, promote transparency, and improve efficiency makes it a valuable tool for modern businesses. As organizations increasingly deal with vast and complex datasets, integrating Gen AI into data governance strategies will drive operational excellence and foster innovation.

Potential Challenges of Using GenAI in Data Governance

Generative AI, despite its transformative advantages in data governance, also introduces specific challenges that must be addressed. Identifying these challenges and implementing actionable solutions are key to maximizing its potential and ensuring ethical usage. Below are the critical challenges and ways to overcome them:

Data Security and Privacy Risks

Generative AI relies heavily on large datasets, often containing sensitive or personal information. This poses significant risks of unintentional exposure or misuse. Organizations should implement robust data anonymization techniques to mask sensitive information. Additionally, access controls should be enforced to limit unauthorized usage, and encryption must be applied to safeguard data during training and deployment. Proactively adopting these measures ensures both privacy and security throughout the AI lifecycle.
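Here is a minimal sketch of two common masking techniques. It assumes records are plain Python dicts with hypothetical customer_id and email fields. Identifiers are pseudonymized with keyed hashing (HMAC-SHA256), and emails are partially masked. Pseudonymized data can still count as personal data under GDPR, so this complements access controls and encryption rather than replacing them.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed HMAC-SHA256 hex digest.

    The same value and key always give the same token, so joins across tables
    still work, but the token is hard to link back to the original value for
    anyone who lacks the key."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character of the local part and the domain; mask the rest."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return local[0] + "***@" + domain


def anonymize_record(record, secret_key):
    """Return a copy of the record with its direct identifiers transformed."""
    out = dict(record)
    out["customer_id"] = pseudonymize(record["customer_id"], secret_key)
    out["email"] = mask_email(record["email"])
    return out
```

The secret key should live outside the dataset, for example in a key management service. Anyone holding it can re-link tokens by hashing candidate values.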

Bias and Fairness in AI Models

Generative AI can inadvertently amplify biases present in its training data, resulting in skewed or unethical outcomes. To address this, organizations should prioritize using diverse datasets that represent all demographic groups. Regular audits should also be conducted to identify and mitigate biases in AI outputs. By fostering fairness in model design and operation, businesses can promote more equitable outcomes and maintain user trust.
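One way to make a bias audit measurable is to compare outcome rates across groups. This sketch computes the demographic parity gap. That is one of several fairness metrics, chosen here only for illustration. The input shape and function names are assumptions, and what gap counts as acceptable is a policy decision.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected: bool). Returns the positive rate per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(outcomes):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())
```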

Regulatory Compliance Challenges

Adhering to data privacy laws, such as GDPR and HIPAA, is often complex for AI-driven processes. To simplify this, compliance protocols must be embedded during the design phase of AI model development. Regular monitoring of evolving legal standards helps ensure continued alignment with regulations. This proactive approach not only reduces risks but also reinforces accountability in AI usage.

Data Quality and Integrity Issues

AI-generated outputs must be closely monitored to avoid inaccuracies that could impact decision-making. Validating generated data against predefined benchmarks is essential to maintaining accuracy and reliability. Continuous monitoring processes help identify errors early and ensure the integrity of data used for business insights. This prevents flawed information from influencing critical operations.
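Validating generated data against predefined benchmarks can start with explicit rules for each field. The rule set below is a hypothetical example, not a standard schema. Its field names, ranges, and ISO alpha-2 country check are all illustrative.

```python
# Hypothetical benchmark rules: field -> (accepted types, check, failure message).
RULES = {
    "age": ((int,), lambda v: 0 <= v <= 120, "age out of range"),
    "country": ((str,), lambda v: len(v) == 2 and v.isupper(), "country must be an ISO alpha-2 code"),
    "annual_spend": ((int, float), lambda v: v >= 0, "spend must be non-negative"),
}


def validate_record(record):
    """Return a list of rule violations for one generated record (empty means valid)."""
    errors = []
    for field, (types, check, message) in RULES.items():
        if field not in record:
            errors.append(f"{field}: missing")
        elif not isinstance(record[field], types):
            errors.append(f"{field}: wrong type")
        elif not check(record[field]):
            errors.append(f"{field}: {message}")
    return errors


def split_valid(records):
    """Separate records that pass every rule from those that need review."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Rejected records keep their error lists. Reviewers can then see why an output failed instead of silently dropping it.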

Intellectual Property Concerns

Artificial intelligence-generated content might inadvertently infringe on intellectual property rights, raising legal and ethical concerns. To mitigate this, advanced algorithms should screen outputs for potential IP violations. Moreover, developers and users of generative AI must be educated about intellectual property guidelines. Awareness and preventive mechanisms ensure responsible and legally compliant content generation.

Scalability and Integration Challenges

Integrating generative AI into existing systems can be technically demanding and costly. Ensuring seamless adoption requires designing scalable models compatible with current infrastructures. Furthermore, clear integration strategies should be planned to align AI capabilities with business workflows. By addressing scalability proactively, businesses can reduce implementation hurdles and maximize return on investment.

Generative AI’s potential in data governance is immense, but managing its challenges is vital for achieving sustainable success.

Latest Developments for GenAI in Data Governance Solutions

The advancement of GenAI in data governance solutions highlights the integration of AI and ML into modern data management strategies. These developments focus on improving data accuracy, security, compliance, and accessibility. Let’s explore these emerging trends in detail:

Automated Data Processing

AI and machine learning have transformed data processing by automating repetitive tasks like cleansing and preparation. These technologies ensure that data remains accurate, reducing manual errors and saving time. By streamlining these processes, businesses can focus on extracting actionable insights instead of struggling with raw data management.

Predictive Analytics

Businesses are leveraging machine learning models to predict future trends and identify potential risks. Predictive analytics enables proactive decision-making by analyzing historical patterns and forecasting outcomes. This foresight helps businesses stay ahead of market shifts and mitigate risks effectively.

Personalized Insights

AI algorithms now provide insights tailored to individual user preferences and behaviors. This personalization enhances user experiences by delivering relevant data at the right time. For instance, businesses can use these insights to offer customized recommendations, improving customer satisfaction and engagement.

Scalable Data Management

Managing extensive datasets in real time is now achievable through machine learning-powered scalability. These technologies enable organizations to process large volumes of data seamlessly, ensuring timely analysis. This scalability ensures businesses remain agile as their data needs grow.

Compliance with Data Privacy Laws

Adhering to data privacy laws like GDPR and HIPAA has become more streamlined with AI-driven solutions. These tools monitor data handling practices and flag potential non-compliance. By automating policy updates and mitigating risks, organizations can maintain adherence to ever-evolving regulations.

Consistency Across Data Sources

Maintaining uniformity across diverse data sources requires standardized formats and validation rules. AI tools validate data entries and enforce consistency, minimizing discrepancies. This uniformity ensures smoother data integration and enhances overall operational efficiency.
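Standardizing formats is often the first consistency rule. This sketch normalizes dates from several source formats to ISO 8601. The list of known formats is an assumption; for instance, it treats slash-separated dates as day-first. Genuinely ambiguous inputs such as 03/04/2024 are better resolved with a per-source rule.

```python
from datetime import datetime

# Hypothetical set of date formats observed across source systems, tried in order.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y", "%Y%m%d")


def normalize_date(raw):
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD).

    Returns None when no format matches, so the entry can be routed for review."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```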

Ensuring Consumer Data Rights

Regulations increasingly require organizations to honor consumer rights over their personal data. This mandates robust data management practices to ensure compliance. Automated solutions enable businesses to manage data access requests and ensure transparency, reinforcing consumer trust.
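Handling consumer requests, such as GDPR’s rights of access and erasure, starts with finding every record about a person. The sketch below assumes each source system is a list of dicts keyed by a hypothetical subject_id. Real erasure workflows must also respect legal retention obligations and account for backups.

```python
def handle_access_request(subject_id, sources):
    """Collect every record about one data subject.

    `sources` maps a system name to a list of dict records with a 'subject_id' key."""
    report = {}
    for system, records in sources.items():
        matches = [r for r in records if r.get("subject_id") == subject_id]
        if matches:
            report[system] = matches
    return report


def handle_erasure_request(subject_id, sources):
    """Remove the subject's records from every source; return the count removed per system."""
    removed = {}
    for system, records in sources.items():
        kept = [r for r in records if r.get("subject_id") != subject_id]
        removed[system] = len(records) - len(kept)
        records[:] = kept  # mutate in place so callers holding the list see the change
    return removed
```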

Data Enrichment and Transformation

AI enhances data by filling gaps, enriching datasets, and converting them into actionable formats. These processes add value to raw data, making it more meaningful for decision-making. Enriched data allows organizations to uncover deeper insights and gain a competitive edge.

Data Lineage Visualization

Visualization tools now track and display the flow of data across an organization. This transparency helps teams understand how data moves and transforms over time. Such insights are invaluable for troubleshooting, compliance, and optimizing data workflows.
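Behind the visuals, lineage is usually modeled as a directed graph. This minimal sketch uses a hypothetical graph in which each dataset lists its direct inputs. It walks upstream breadth-first to find everything a dashboard depends on, a question that often comes up when troubleshooting or answering an auditor.

```python
from collections import deque

# Hypothetical lineage graph: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "revenue_dashboard": ["sales_mart"],
    "sales_mart": ["orders_clean", "customers_clean"],
    "orders_clean": ["orders_raw"],
    "customers_clean": ["crm_export"],
}


def upstream(dataset, lineage):
    """Return every dataset that `dataset` depends on, directly or transitively,
    in breadth-first order. The `seen` set also guards against cycles."""
    seen, order = set(), []
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        queue.extend(lineage.get(node, []))
    return order
```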

Efficient Metadata Management

Automated tools collect and maintain metadata, ensuring it remains up-to-date and accurate. By minimizing manual efforts, businesses can focus on analyzing metadata for better decision-making. Current and reliable metadata improves data discoverability and governance.
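Here is a sketch of automated technical-metadata collection for a table held as a list of dicts. The output fields (row count, null count, observed types) are illustrative assumptions. Catalogs usually also record owners, descriptions, and refresh times.

```python
def profile_table(name, rows):
    """Build a metadata record for a table given as a list of dict rows.

    A key missing from a row counts as a null for that column."""
    columns = {}
    for row in rows:
        for col, value in row.items():
            stats = columns.setdefault(col, {"non_null": 0, "types": set()})
            if value is not None:
                stats["non_null"] += 1
                stats["types"].add(type(value).__name__)
    return {
        "table": name,
        "row_count": len(rows),
        "columns": {
            col: {"null_count": len(rows) - s["non_null"], "types": sorted(s["types"])}
            for col, s in columns.items()
        },
    }
```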

Cloud Computing Benefits

Cloud-based solutions provide flexibility and scalability without requiring substantial capital investments. These platforms enable organizations to scale operations based on demand while reducing infrastructure costs. Cloud computing also ensures easy access to data, fostering collaboration.

Security and Compliance in the Cloud

Leading cloud providers incorporate robust security features and certifications to support regulatory compliance. These built-in safeguards help keep sensitive data protected. Additionally, they simplify adherence to compliance standards, reducing the burden on businesses.

Read more about cloud security control strategies.

Decentralized Data Governance

Organizations are empowering individual departments with more control over their data. This decentralized approach enhances governance by enabling teams to manage their data efficiently. It also promotes accountability and ensures data governance is more aligned with departmental needs.

AI-powered data governance continues to evolve, driving innovation while addressing complexities in managing modern datasets. By embracing these advancements, organizations can improve data handling, boost compliance, and enhance operational efficiency.

Conclusion

The integration of GenAI into data governance marks a transformative leap toward smarter and more adaptive data management. By addressing critical challenges like security vulnerabilities and the demand for robust cloud governance frameworks, businesses can unlock GenAI’s full potential.

This integration ensures data integrity, safeguarding the accuracy and reliability of information across processes. Moreover, it reinforces compliance by aligning data practices with evolving legal and regulatory requirements.

By streamlining data handling and enabling innovative applications, Gen AI enhances organizational efficiency and accelerates decision-making. It equips leaders with actionable insights, fostering better strategic planning and more informed business decisions.

Incorporating Gen AI into governance strategies not only mitigates risks but also empowers organizations to use their data as a competitive asset. As a result, businesses can achieve greater agility, operational excellence, and sustainable growth.

Your best choice is to go with the best mobile app development company that can make your project a real application.

Source URL: Genai-in-data-governance-bridging-gaps-and-enhancing-compliance

The Role of Outcome-Driven Metrics in Enhancing Cloud Security Control Strategies

As cloud services adoption surges globally, many businesses must evolve their security strategies to address emerging challenges.

The global cloud security market was valued at $28.35 billion in 2022 and is expected to grow at a rate of 13.1% annually from 2023 to 2030. Businesses today face an increasing variety of cyber risks, including advanced malware and ransomware attacks. As companies shift to digital operations and store large amounts of sensitive data in the cloud, they have become key targets for cybercriminals looking to steal or exploit information.

Gartner forecasts that the combined markets for IaaS, PaaS, and SaaS will grow by over 17% annually through 2027. This remarkable expansion underscores the urgency for businesses to transition from traditional security methods to more advanced, cloud-native solutions. Conventional approaches often fall short in safeguarding dynamic cloud environments, emphasizing the need for innovative strategies.

To secure cloud-native and SaaS solutions effectively, organizations must focus on platform configuration and identity risk management. These elements form the cornerstone of modern cloud security. Addressing these areas requires a shift in both security approaches and spending models, ensuring alignment with evolving threats. Furthermore, security metrics must move beyond technical performance to demonstrate their relevance to business outcomes.

The cloud, far from being just a storage solution, represents a sophisticated web of interconnected services. This complexity calls for a refined approach to measuring the impact of security investments. Security and risk leaders should adopt outcome-driven metrics (ODMs) to assess the efficiency of their cloud security measures. ODMs empower leaders to align their efforts with organizational goals, offering actionable insights into their security posture.

By customizing ODMs, businesses can better manage risks, enhance cloud security strategies, and achieve results that support overall objectives. In this blog, we will delve into key ODMs that guide future investments in cloud security, ensuring robust protection and meaningful outcomes.

Key Features and Benefits of Outcome-Driven Metrics

Emphasis on Tangible Results

Outcome-driven metrics prioritize measurable outcomes like fewer incidents, reduced risks, and enhanced operational resilience.

For instance, ODMs don’t just count firewalls; they assess how effectively those firewalls minimize successful cyber attacks. They evaluate key performance indicators, such as shorter threat detection times, faster response times, and lower incident severity.

This approach tracks outcomes like fewer data breaches, quicker recovery times, and lower overall security costs due to efficient controls. ODMs ensure that security efforts produce valuable, actionable results that enhance the organization’s resilience and performance.

Alignment with Business Objectives

ODMs integrate security goals with broader organizational priorities to ensure strategic alignment and meaningful impact.

This connection ensures security efforts support business growth, compliance, and customer trust. For example, safeguarding customer data not only prevents breaches but also strengthens brand reputation and meets regulatory requirements.

By translating technical outcomes into business-centric insights, ODMs bridge the gap between security teams and decision-makers. This alignment also helps justify security investments to executives by highlighting their contributions to achieving business goals.

Maximizing Cost-Value Efficiency

ODMs evaluate the cost-value balance of security measures to ensure optimal resource allocation and impactful investments.

Businesses can prioritize initiatives that offer the highest return on investment in risk reduction and operational benefits. For example, high-impact controls receive more funding, while less effective measures are reassessed.

This approach optimizes security budgets, ensuring every dollar spent maximizes protection and minimizes vulnerabilities. It enables organizations to strengthen their overall security posture with precision and efficiency.

Tailored Cloud Security Metrics

Cloud environments require dynamic, outcome-driven metrics to allocate resources effectively and address unique security needs.

Unlike fixed budgets, ODMs guide spending based on the specific risks and requirements of various cloud services. For instance, mission-critical applications might need stronger encryption and more robust identity management than less sensitive workloads.

Cloud-specific ODMs measure how controls like encryption, access management, and monitoring contribute to achieving desired security outcomes. This ensures cloud assets and data remain well-protected while enabling efficient resource utilization.

How to Implement Outcome-Driven Metrics (ODMs) in Your Business

Implementing outcome-driven metrics requires a systematic approach to ensure security measures align with desired outcomes and organizational objectives. Below is a detailed guide to implementation:

Develop Initial Processes and Supporting Technologies

Begin by defining critical security processes and mapping them to the technologies supporting these functions.

For instance, technologies like XDR and EDR underpin endpoint protection, while vulnerability scanners support vulnerability management. Similarly, IAM systems and directory services play a vital role in authentication.

This structured framework ensures each security process has a robust technological backbone, providing the foundation for precise measurement and management. It also helps streamline efforts, enabling teams to focus on impactful areas.

Identify Business Outcomes and Align ODMs

The next step involves linking security processes to specific business goals and identifying desired results for each process.

For example, in endpoint protection, outcomes may include high deployment coverage and effective threat detection. Metrics could track endpoints actively protected and threats mitigated.

Similarly, in vulnerability management, scan frequency and addressing high-severity risks are critical. Desired outcomes may include percentages of systems scanned and vulnerabilities resolved. This alignment ensures security measures directly support organizational priorities.

Recognize Risks and Dependencies

Understanding risks and dependencies is crucial to managing potential failures and minimizing operational disruptions.

Each process depends on specific technologies, and their failure could jeopardize security efforts. For example, endpoint protection relies on XDR and EDR solutions, while vulnerability management depends on scanners.

Assessing these dependencies enables better contingency planning, ensuring uninterrupted operations and consistent protection against evolving threats. This proactive step mitigates vulnerabilities arising from system failures.

Define ODM for Key Processes

Develop clear and actionable metrics that measure the effectiveness of each security process in achieving its intended outcomes.

For instance, endpoint protection metrics could include the percentage of endpoints actively safeguarded and the average threat detection time. Vulnerability management metrics measure systems scanned, remediation timelines, and resolved high-severity vulnerabilities.

These metrics provide quantifiable insights, enabling organizations to assess progress and refine strategies for improved outcomes.
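The endpoint and vulnerability metrics described above reduce to simple ratios and averages. This sketch assumes hypothetical input fields (protected, detect_minutes, scanned, resolved, days_open). In practice, these values would come from EDR/XDR consoles and vulnerability scanners.

```python
from statistics import mean


def endpoint_metrics(endpoints):
    """endpoints: dicts with 'protected' (bool) and 'detect_minutes'
    (detection times, in minutes, for threats seen on that endpoint)."""
    total = len(endpoints)
    protected = sum(1 for e in endpoints if e["protected"])
    times = [t for e in endpoints for t in e["detect_minutes"]]
    return {
        "pct_protected": 100.0 * protected / total if total else 0.0,
        "avg_detection_minutes": mean(times) if times else None,
    }


def vulnerability_metrics(systems, high_vulns):
    """systems: dicts with 'scanned' (bool). high_vulns: high-severity findings
    as dicts with 'resolved' (bool) and 'days_open' (days taken to fix, or open so far)."""
    scanned = sum(1 for s in systems if s["scanned"])
    resolved = [v for v in high_vulns if v["resolved"]]
    return {
        "pct_systems_scanned": 100.0 * scanned / len(systems) if systems else 0.0,
        "pct_high_resolved": 100.0 * len(resolved) / len(high_vulns) if high_vulns else 100.0,
        "avg_days_to_remediate": mean([v["days_open"] for v in resolved]) if resolved else None,
    }
```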

Evaluate Readiness and Mitigate Risks

Finally, assess the organization’s readiness to adopt outcome-driven metrics and identify risks that could impact implementation.

Ensure the necessary infrastructure, expertise, and resources are in place to monitor and act on ODM insights. Address challenges like data accuracy issues, resistance to change, or integration with existing processes through strategies like phased adoption and training.

This step ensures a smoother transition and maximizes the effectiveness of ODMs in aligning security investments with business objectives.

Implementing outcome-driven metrics transforms security management by focusing on measurable results that directly impact organizational goals. With advancements in technology, AI-driven insights enhance the value of ODMs by automating processes and improving decision-making accuracy.

Organizations leveraging these metrics effectively can achieve superior protection and align security efforts with strategic outcomes. Connect with our experts to explore how ODMs can empower your cybersecurity strategy.

Examples of Outcome-Driven Metrics

Outcome-driven metrics offer measurable insights that demonstrate the real-world impact of security initiatives. Below are some key examples:

Mean Time to Detect (MTTD)

MTTD highlights the average time taken to identify a security threat, focusing on faster detection to mitigate risks.

A reduced MTTD minimizes the damage caused by prolonged threats. For instance, organizations can compare current detection times with targeted benchmarks to monitor improvement.

Regular reporting on this metric may include actionable insights, such as areas needing improvement and how enhanced processes or tools can accelerate detection. Faster identification leads to reduced exposure and a more robust security posture.
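Here is a minimal sketch of computing MTTD and comparing it with a target benchmark. It assumes each incident is recorded as an (occurred_at, detected_at) pair of timestamps. In practice, the occurrence time is often estimated after the fact, which limits MTTD’s precision.

```python
from datetime import datetime, timedelta


def mean_time_to_detect(incidents):
    """incidents: list of (occurred_at, detected_at) datetime pairs."""
    if not incidents:
        raise ValueError("need at least one incident")
    delays = [detected - occurred for occurred, detected in incidents]
    return sum(delays, timedelta()) / len(delays)


def mttd_report(incidents, target):
    """Summarize MTTD in hours and whether it meets the target timedelta."""
    mttd = mean_time_to_detect(incidents)
    return {"mttd_hours": mttd.total_seconds() / 3600, "meets_target": mttd <= target}


incidents = [
    (datetime(2024, 5, 1, 8, 0), datetime(2024, 5, 1, 10, 0)),  # detected after 2 h
    (datetime(2024, 5, 2, 9, 0), datetime(2024, 5, 2, 15, 0)),  # detected after 6 h
]
print(mttd_report(incidents, target=timedelta(hours=6)))  # → {'mttd_hours': 4.0, 'meets_target': True}
```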

Mean Time to Respond (MTTR)

MTTR tracks how quickly an organization contains and resolves incidents, aiming to limit the extent of a breach.

This metric emphasizes operational readiness by showcasing how swift responses can prevent critical disruptions or data losses. Reporting should cover the number of prevented breaches and how internal collaboration or automated solutions can further reduce response times.

Reducing MTTR strengthens resilience by demonstrating the organization’s ability to neutralize threats promptly and efficiently.
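MTTR can be computed from incident timestamps. Definitions vary: some teams stop the clock at containment, others at full resolution. This sketch assumes detection-to-resolution and uses hypothetical field names. Open incidents are counted separately so they do not silently skew the average.

```python
from datetime import datetime, timedelta


def mean_time_to_respond(incidents):
    """incidents: dicts with 'detected_at' and 'resolved_at' (None while open).

    Returns (mean response time as a timedelta or None, number of open incidents)."""
    resolved = [i for i in incidents if i.get("resolved_at") is not None]
    open_count = len(incidents) - len(resolved)
    if not resolved:
        return None, open_count
    total = sum((i["resolved_at"] - i["detected_at"] for i in resolved), timedelta())
    return total / len(resolved), open_count


incidents = [
    {"detected_at": datetime(2024, 5, 1, 10), "resolved_at": datetime(2024, 5, 1, 13)},
    {"detected_at": datetime(2024, 5, 2, 15), "resolved_at": datetime(2024, 5, 2, 16)},
    {"detected_at": datetime(2024, 5, 3, 9), "resolved_at": None},  # still open
]
mttr, still_open = mean_time_to_respond(incidents)
print(mttr, still_open)  # → 2:00:00 1
```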

Phishing Click-Through Rate

This metric evaluates employee susceptibility to phishing attempts, focusing on awareness and preparedness against social engineering attacks.

A lower click-through rate reflects an informed workforce capable of identifying and avoiding malicious links or emails. Organizations can use simulations and trend reports to measure progress and identify vulnerable groups needing additional training.

Implementing regular phishing tests alongside educational programs enhances overall resistance, making the organization less prone to attacks exploiting human errors.
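Phishing simulation results can be summarized per group to spot teams that need extra training. The input shape, department names, and threshold below are hypothetical.

```python
from collections import Counter


def click_through_rates(results):
    """results: iterable of (department, clicked: bool) from one simulation.

    Returns (overall rate, {department: rate}), with rates as percentages."""
    sent, clicked = Counter(), Counter()
    for dept, did_click in results:
        sent[dept] += 1
        clicked[dept] += int(did_click)
    total_sent = sum(sent.values())
    overall = 100.0 * sum(clicked.values()) / total_sent if total_sent else 0.0
    per_dept = {d: 100.0 * clicked[d] / sent[d] for d in sent}
    return overall, per_dept


def groups_needing_training(per_dept, threshold_pct):
    """Departments whose click-through rate exceeds the threshold, sorted by name."""
    return sorted(d for d, rate in per_dept.items() if rate > threshold_pct)
```

Tracking the same numbers across repeated simulations shows whether training is actually lowering the rate.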

Security Return on Investment (ROI)

Security ROI quantifies the financial benefits of cybersecurity measures compared to the costs, offering a clear value assessment.

This metric helps illustrate how investments reduce downtime, decrease customer complaints, and lower insurance premiums. Organizations can highlight these savings alongside tangible improvements, such as fewer breaches or reduced recovery costs.

By presenting ROI data in monetary terms, security teams can effectively communicate their value to business leaders and justify future investments.
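One commonly cited way to express security ROI is the return on security investment (ROSI) formula. Annualized loss expectancy is ALE = single loss expectancy × annual rate of occurrence. ROSI takes the ALE a control avoids, subtracts the control’s annual cost, and divides by that cost. The figures used below are made up; in practice, estimating loss and occurrence rates is the hard part.

```python
def annualized_loss_expectancy(single_loss, annual_rate):
    """ALE = single loss expectancy (cost per incident) x annual rate of occurrence."""
    return single_loss * annual_rate


def security_roi(ale_before, ale_after, annual_cost):
    """Return on security investment as a fraction: (risk reduction - cost) / cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (ale_before - ale_after - annual_cost) / annual_cost
```

For example, suppose a control costs 40,000 per year and cuts ALE from 100,000 (a 200,000 loss expected every other year) to 20,000. It then yields a ROSI of 1.0, that is, 100%.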

Outcome-driven metrics like these ensure that security efforts align with strategic goals while delivering measurable value. They empower organizations to focus on actionable outcomes, building trust and demonstrating the effectiveness of their cybersecurity programs.

Practical Examples of Outcome-Driven Metrics for Cloud Security

Cloud Governance ODM

An accurate inventory of the cloud infrastructure being monitored is vital for robust security. Without detailed tracking of cloud assets, other metrics lose relevance, as hidden risks may lurk outside the organization’s visibility and control. These challenges intensify when cloud adoption is driven primarily by business units rather than IT departments, since these units often hold direct accountability for their own cloud accounts.

For effective cloud governance, visibility into all cloud accounts is crucial. Organizations often monitor only “known cloud accounts,” which may represent only part of their cloud presence. Identifying additional accounts requires compensating controls, such as rigorous approval workflows, expense monitoring, and advanced technical solutions like security service edges and network firewalls. These controls should aim for a holistic view of all active accounts to ensure metric accuracy.

Cloud Account Accountability: Clear ownership ensures accountability for managing account configurations and usage policies.

Cloud Account Usage and Risk: Regular assessments are essential to track account usage and mitigate evolving risks in dynamic cloud environments.
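The account-visibility and ownership metrics above can be sketched as set arithmetic. The sketch assumes three hypothetical inputs. These are the account IDs in the official inventory, the IDs surfaced by compensating controls such as expense monitoring or firewall logs, and a mapping from account to owner.

```python
def governance_metrics(known_accounts, discovered_accounts, owners):
    """known_accounts: IDs in the official inventory.
    discovered_accounts: IDs found through compensating controls.
    owners: mapping of account ID -> accountable owner (empty or missing = none)."""
    known = set(known_accounts)
    all_accounts = known | set(discovered_accounts)
    owned = {a for a in all_accounts if owners.get(a)}
    total = len(all_accounts)
    return {
        "pct_accounts_known": 100.0 * len(known) / total if total else 100.0,
        "unknown_accounts": sorted(all_accounts - known),
        "pct_accounts_with_owner": 100.0 * len(owned) / total if total else 100.0,
    }
```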

Cloud Operation ODM

Operational security metrics play a pivotal role in securing cloud environments, but their relevance varies based on infrastructure setups. These metrics provide insights into the effectiveness of security measures. However, accurate measurements often depend on the availability of advanced tools. Analyzing these metrics account-by-account or by priority level enhances clarity.

Real-Time Cloud Workload Protection: Critical workloads require real-time runtime monitoring for memory, processes, and other dynamic components.

Agentless Cloud Workload Protection: Non-critical workloads can rely on agentless scanning to achieve sufficient security without continuous runtime visibility.

Cloud Identity ODM

Cloud identity management extends beyond user accounts, particularly in IaaS environments, where workloads require their own machine identities and privileges. Effective lifecycle management and governance for these identities are essential. In IaaS environments, identity functions as the primary control for application consumers. Overprivileged identities remain a major concern across cloud providers. Without the right tools, measuring identity risk can be challenging, necessitating specialized solutions.

Workload Access to Sensitive Data: Machine identities often outnumber user accounts, making privileged workloads a critical area for risk mitigation.

Active Multi-Factor Authentication (MFA) Users: MFA serves as a fundamental defense for securing user accounts accessing cloud tenants.
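Here is a minimal sketch of the two identity ODMs, assuming hypothetical user and workload records. The permission names standing in for sensitive-data access are placeholders, not any cloud provider’s real identifiers.

```python
# Placeholder permission names standing in for "can read sensitive data".
SENSITIVE_PERMISSIONS = {"read:pii", "read:payment-data"}


def mfa_coverage(users):
    """Percentage of active users with MFA enabled, plus the active users without it."""
    active = [u for u in users if u["active"]]
    missing = sorted(u["name"] for u in active if not u["mfa_enabled"])
    pct = 100.0 * (len(active) - len(missing)) / len(active) if active else 100.0
    return pct, missing


def workloads_with_sensitive_access(workloads):
    """Machine identities holding any permission in SENSITIVE_PERMISSIONS."""
    return sorted(w["identity"] for w in workloads if w["permissions"] & SENSITIVE_PERMISSIONS)
```

Listing the offending users and workloads, not just the percentages, gives owners something concrete to remediate.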

Conclusion

Understanding and tracking the cloud services used in an organization is key to effective cloud security and developing meaningful metrics. While some on-premises metrics can be adjusted for cloud use, the unique and fast-changing nature of cloud adoption calls for a fresh approach. Cloud-specific outcome-driven metrics (ODMs) focus on achieving specific security results, rather than simply basing investments on a portion of cloud spending.

Automation is vital for managing these controls in the dynamic cloud environment. Automating tasks like tracking, reporting, and configuration management helps ensure efficiency and accuracy. However, many organizations are cautious about automating fixes in live production environments to avoid disrupting operations. Building strong automation capabilities is often necessary to meet many of these cloud security goals effectively.

With TechAhead, you can be the next leader in the industry. We have been taking app development services to another level because we have the most respected and experienced mobile app developers in the market.

Source URL: The-role-of-outcome-driven-metrics-in-enhancing-cloud-security-control-strategies

Empowering SMEs: A Guide to Digital Transformation for Financial Success

Small and medium-sized enterprises (SMEs) often operate under tight constraints, facing challenges that differ significantly from those of large corporations with huge budgets and resources. Despite these limitations, SMEs find themselves under increasing pressure to embrace digital technologies. Transformation is no longer an optional investment; it is a critical step for both survival and growth.

For SMEs, the conversation around digital transformation takes on a distinct perspective. It isn’t simply about integrating the latest technologies; it’s about strategically leveraging these tools to improve efficiency, enhance customer interactions, and fuel innovation. However, the journey toward digital transformation is often fraught with challenges. Limited resources, a lack of expertise, and uncertainty about how to begin can make the process feel overwhelming.

This blog is crafted specifically to address the unique needs of SMEs. It offers actionable insights and practical strategies to help businesses navigate the complexities of digital transformation. From adopting cloud-based solutions to automating workflows and strengthening cybersecurity, each step outlined in the blog aims to empower SMEs. The goal is clear: to enable these enterprises to harness digitalization effectively, driving sustainable growth in an increasingly competitive landscape.

Why Does Digital Transformation Matter for SMEs?

Digital transformation is no longer just a trend; it is a fundamental strategy for staying relevant in today’s dynamic market. For SMEs, embracing digital innovation can revolutionize operations, elevate customer engagement, and drive sustainable growth. Here’s why it holds immense significance.

Boosts Operational Efficiency

Adopting digital tools simplifies complex workflows and eliminates inefficiencies. Automating repetitive tasks like invoicing or inventory management saves time and resources. Employees can then focus on high-value tasks, fostering innovation and productivity. Real-time data insights enable quicker decision-making, improving overall organizational agility.

Reduces Costs and Optimizes Resources

Though the initial investment might seem challenging, the financial returns typically outweigh the costs over time. Cloud-based solutions reduce reliance on costly on-premises hardware, cutting infrastructure expenses significantly. Resource optimization enables SMEs to reinvest in critical growth areas, ensuring better financial health and scalability.

Enhances Customer Experience

Modern customers demand personalized and seamless interactions across channels. Digital tools like CRM platforms provide deep insights into customer preferences and behaviors. SMEs can use this data to deliver tailored services and proactive solutions, building loyalty. Exceptional customer experiences drive retention and amplify revenue streams.

Facilitates Scalability and Business Growth

Digital transformation equips SMEs with scalable solutions tailored to their growth needs. Cloud computing offers flexible storage and computing power, removing many traditional growth barriers. SMEs can expand more easily, responding to increasing demand without significant infrastructure investments.

Maintains Competitiveness in a Digital Era

A study by IDC projects that 65% of global GDP will come from digital products and services by 2025. SMEs embracing digital technologies stay competitive, meeting market demands with agility and innovation. Delaying transformation risks being outpaced by competitors leveraging advanced digital ecosystems.

By integrating digital transformation, SMEs unlock new opportunities, enhance efficiency, and secure their position in a tech-driven economy. While the journey requires commitment and strategic investments, the rewards—greater productivity, higher profitability, and sustained growth—are transformative.

Key Challenges SMEs Encounter in Digital Transformation

Digital transformation offers immense potential, but SMEs often face complex hurdles that can make the journey daunting. Identifying these challenges is the first step to effectively addressing them.

Limited Financial Resources and Budget Constraints

For SMEs, budget limitations remain one of the most significant roadblocks in digital transformation. Unlike larger corporations, SMEs operate on lean margins, making high upfront costs challenging. Expenses for advanced technologies, software subscriptions, and IT infrastructure upgrades can strain financial resources. Creative financing options or incremental adoption strategies can help address these constraints.

Insufficient In-House Digital Expertise

A lack of skilled personnel often prevents SMEs from selecting and managing digital tools effectively. Many SMEs lack professionals well-versed in cloud computing, automation, or analytics. This skills gap creates a dependency on external consultants or service providers, which can be both costly and time-consuming. Upskilling employees through targeted training programs can help bridge this gap.

Organizational Resistance to Change

Digital transformation demands a cultural overhaul, which is particularly challenging for SMEs with traditional practices. Employees may resist adopting new tools, fearing job displacement or struggling to adapt to unfamiliar systems. This resistance delays progress and disrupts workflows. Effective change management strategies and clear communication can ease this transition.

Complexity of Integrating Legacy Systems

Many SMEs rely on outdated systems that are incompatible with modern digital solutions. Integrating these legacy systems with advanced tools can lead to technical complexities and operational disruptions. Migrating data, ensuring platform compatibility, and maintaining business continuity require meticulous planning and execution. Leveraging hybrid solutions can help facilitate smoother transitions.

Increased Exposure to Cybersecurity Risks

Digital transformation makes SMEs more vulnerable to sophisticated cyber threats. Without robust security measures, these businesses face risks such as data breaches and financial theft. Limited awareness of cybersecurity protocols further exacerbates the issue. Investing in strong cybersecurity frameworks and employee training can significantly reduce vulnerabilities.

Ambiguity in Measuring ROI

Determining the ROI from digital transformation efforts remains a challenge for many SMEs. Without clear metrics or immediate benefits, businesses may hesitate to commit fully to these initiatives. Comprehensive tracking mechanisms and realistic benchmarks can help demonstrate tangible outcomes and long-term value.
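One common way to make ROI concrete is the simple formula ROI = (total benefit - total cost) / total cost, paired with a payback period. The sketch below applies both to hypothetical figures; the amounts are illustrative only, and real tracking would also account for running costs and harder-to-quantify benefits.

```python
from typing import Optional


def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Simple ROI: net gain relative to cost, expressed as a percentage."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return 100.0 * (total_benefit - total_cost) / total_cost


def payback_year(upfront_cost: float, yearly_net_benefits: list[float]) -> Optional[int]:
    """First year (1-based) in which cumulative net benefit covers the upfront cost, else None."""
    cumulative = 0.0
    for year, benefit in enumerate(yearly_net_benefits, start=1):
        cumulative += benefit
        if cumulative >= upfront_cost:
            return year
    return None  # cost not recovered within the horizon


if __name__ == "__main__":
    # Hypothetical figures: a 30,000 upfront investment followed by yearly net savings.
    upfront = 30_000
    savings = [8_000, 12_000, 15_000]
    print(f"3-year ROI: {roi_percent(sum(savings), upfront):.1f}%")  # prints 3-year ROI: 16.7%
    print(f"Payback in year {payback_year(upfront, savings)}")      # prints Payback in year 3
```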

While the challenges are real, SMEs can overcome them with strategic planning and expert support. Addressing these obstacles head-on ensures a smoother transition, empowering businesses to unlock the full potential of digital transformation.

Steps to Begin Your Digital Transformation Journey

Starting a digital transformation journey can feel daunting, but breaking it into actionable steps simplifies the process. Here’s a comprehensive roadmap tailored for SMEs:

Conduct a Digital Readiness Assessment

Evaluate existing processes, technologies, and workforce digital skills to identify gaps.

Pinpoint inefficiencies and bottlenecks where digital tools can create maximum impact.

Benchmark your business against industry leaders to understand your digital maturity level.

Use this analysis to map out areas requiring urgent attention, such as outdated workflows or redundant manual tasks.

Understanding where you stand ensures your transformation efforts are targeted and effective, avoiding wasted resources.

Define Clear Goals and Objectives

Set specific and measurable goals aligned with your business strategy. Examples include:

Boosting operational efficiency by 20% through automation.

Enhancing customer retention by leveraging data analytics to understand behaviors.

Cutting manual workload in inventory management by implementing tracking solutions.

Ensure these objectives reflect long-term business growth and adaptability to future needs.

Clear objectives create a roadmap for action and provide benchmarks to measure success.

Prioritize Key Initiatives

Begin with high-impact, low-complexity projects offering quick returns. Examples include:

Deploying cloud-based CRM software for improved customer data management.

Automating repetitive tasks such as invoicing, payroll, or appointment scheduling.

Gradually progress to advanced solutions like AI-driven analytics or IoT integration for deeper insights and efficiency.

Starting small builds momentum, boosts confidence, and minimizes risks during larger implementations.

Choose the Right Technology Partner

Partner with experts specializing in SME digital transformation to guide the journey.

Look for vendors offering holistic solutions, including implementation, ongoing support, and employee training.

Seek recommendations, check client reviews, and request live demos to ensure compatibility.

Prioritize partners who offer scalable solutions that can grow with your business.

A reliable partner ensures a smooth transition and ongoing success with tailored solutions.

Invest in Employee Training and Engagement

Conduct structured training programs to familiarize employees with new tools and systems.

Address concerns proactively and collect feedback to foster a collaborative environment.

Emphasize how digital solutions simplify their workload, reduce errors, and improve productivity.

Recognize employees who champion the change to encourage widespread adoption.

Well-trained and motivated employees drive the success of digital transformation initiatives.

Implement in Phases

Avoid overwhelming your team by introducing technologies gradually.

Begin with smaller departments or specific workflows to pilot new solutions.

Use these pilot programs to identify potential issues, gather insights, and refine strategies.

Scale implementation based on proven success and lessons learned from initial deployments.

Gradual rollouts reduce risks, ensure smoother transitions, and improve team confidence in adopting changes.

Monitor Progress and Adapt

Use KPIs to track progress across areas like cost savings, process efficiency, and customer satisfaction.

Evaluate metrics consistently to identify successes and areas needing adjustments.

Stay informed about emerging technologies to identify opportunities for continuous innovation.

Foster an adaptive mindset to embrace change and refine strategies over time.

Regular assessments keep initiatives on track and ensure alignment with business objectives.
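To illustrate tracking KPIs against measurable goals such as boosting operational efficiency by 20%, the sketch below computes each metric's change from its baseline and flags whether the target is met. The KPI names, baselines, and targets are hypothetical. For "lower is better" metrics such as minutes per invoice, an improvement is a decrease, so the sign is flipped.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float
    current: float
    target_improvement_pct: float  # e.g. 20.0 means "improve by 20%"
    higher_is_better: bool = True


def improvement_pct(kpi: Kpi) -> float:
    """Percentage improvement versus baseline, signed so that positive always means better."""
    if kpi.baseline == 0:
        raise ValueError(f"{kpi.name}: baseline must be non-zero")
    change = (kpi.current - kpi.baseline) / kpi.baseline * 100.0
    return change if kpi.higher_is_better else -change


def review(kpis: list[Kpi]) -> list[tuple[str, float, bool]]:
    """Return (name, improvement %, target met?) for each KPI."""
    results = []
    for kpi in kpis:
        imp = improvement_pct(kpi)
        results.append((kpi.name, round(imp, 1), imp >= kpi.target_improvement_pct))
    return results


if __name__ == "__main__":
    kpis = [
        # Orders processed per staff-hour: higher is better.
        Kpi("orders_per_hour", baseline=50, current=61, target_improvement_pct=20),
        # Minutes spent per invoice: lower is better.
        Kpi("minutes_per_invoice", baseline=12, current=10,
            target_improvement_pct=20, higher_is_better=False),
    ]
    for name, imp, met in review(kpis):
        print(f"{name}: {imp:+.1f}% (target met: {met})")
```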

Communicate Your Successes

Share achievements with employees, stakeholders, and customers to showcase the transformation’s impact.

Highlight success stories, such as improved customer experiences or operational savings, to build momentum.

Foster a culture of innovation by celebrating milestones and encouraging continuous improvement.

Transparent communication boosts morale, strengthens stakeholder confidence, and positions your business as forward-thinking.

By following these structured steps, SMEs can navigate the complexities of digital transformation effectively. Staying adaptable, investing in continuous improvement, and leveraging technology strategically help ensure long-term success and growth.

Tailored Digital Transformation Strategies for SMEs

SMEs can overcome hurdles and unlock the full potential of digital transformation by implementing targeted strategies. A customized approach ensures that solutions align with specific goals and constraints. Here are practical methods to guide SMEs:

Start with Scalable, Incremental Solutions

Focus on small, affordable technologies that address immediate operational pain points. For instance, cloud-based tools like CRM systems simplify customer management without requiring significant investments. Incremental adoption minimizes risk and ensures seamless integration. Instead of an all-encompassing shift, tackle one process at a time to build confidence and efficiency.

Harness the Power of Cloud Computing

Cloud platforms provide cost-effective, scalable solutions tailored to SME needs. Tools like Google Workspace streamline workflows and reduce infrastructure expenses. Cloud storage ensures secure, centralized data access, promoting collaboration across distributed teams. This flexibility is particularly beneficial for SMEs embracing hybrid or remote work environments.

Automate Routine Operations

Automation eliminates repetitive tasks, boosting efficiency and reducing human errors. For example, automating inventory management or email marketing saves time and optimizes performance. Tools like QuickBooks and HubSpot enable SMEs to implement automation without requiring advanced technical skills. Focus on automating processes with the highest manual effort to maximize returns.
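As a small example of automating inventory management, the sketch below applies the classic reorder-point rule (reorder point = average daily demand × supplier lead time + safety stock) and lists the items that need restocking. The item data and safety-stock values are hypothetical, and this is a generic illustration, not how QuickBooks or any other specific tool implements reordering.

```python
from dataclasses import dataclass


@dataclass
class Item:
    sku: str
    on_hand: int
    avg_daily_demand: float
    lead_time_days: int
    safety_stock: int


def reorder_point(item: Item) -> float:
    """Classic reorder point: expected demand during the lead time plus safety stock."""
    return item.avg_daily_demand * item.lead_time_days + item.safety_stock


def items_to_reorder(items: list[Item]) -> list[str]:
    """Return SKUs whose stock has fallen to or below their reorder point."""
    return [i.sku for i in items if i.on_hand <= reorder_point(i)]


if __name__ == "__main__":
    stock = [
        Item("MUG-01", on_hand=40, avg_daily_demand=5, lead_time_days=7, safety_stock=10),
        Item("TEE-02", on_hand=120, avg_daily_demand=8, lead_time_days=5, safety_stock=20),
    ]
    print(items_to_reorder(stock))  # prints ['MUG-01']
```

Run on a schedule, a check like this replaces a manual stock review: only items at or below their threshold are surfaced for a purchase order.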

Strengthen Cybersecurity Measures

Invest in robust yet budget-friendly security solutions like firewalls, antivirus software, and regular vulnerability assessments. Employee training on recognizing phishing attacks and using strong passwords can reduce cybersecurity risks. Managed security services offer affordable, professional protection for SMEs lacking in-house expertise.

Empower Employees Through Upskilling

Digital tools are only as effective as the teams operating them. Invest in training programs to equip employees with the necessary skills. Workshops or hands-on sessions help employees overcome resistance to new technologies. Collaborate with technology vendors offering comprehensive training to ensure a smoother transition.

Embrace a Customer-Centric Approach

Digital tools enable SMEs to gather actionable insights into customer behavior and preferences. Platforms like Power BI analyze customer data, helping SMEs deliver personalized experiences. These insights can be used to create targeted campaigns and improve service quality, fostering loyalty and enhancing revenue streams.

Partner with Digital Transformation Experts

Collaborating with experienced technology consultants simplifies the adoption process. Experts help identify relevant tools, streamline workflows, and address potential challenges. A strategic partnership ensures that SMEs make informed decisions, avoiding costly missteps.

With the right strategies, SMEs can embark on a transformation journey tailored to their unique needs. Focusing on scalable solutions, employee empowerment, and customer-centric practices ensures a sustainable approach. By prioritizing these initiatives, SMEs can achieve long-term success in the digital era.

Tools and Technologies for SMEs

Digital transformation is attainable for SMEs when equipped with the right tools and technologies. Selecting scalable, cost-effective, and user-friendly solutions empowers businesses to enhance operations, customer relationships, and decision-making.

Cloud-Based Solutions

Microsoft Dynamics 365

Integrates ERP and CRM functionalities, enabling efficient management of finances, operations, and customer relationships. Ideal for comprehensive oversight.

Google Workspace

Offers collaborative tools like Gmail, Drive, Docs, and Sheets, fostering seamless teamwork and document sharing.

Zoho One

Features over 40 business applications covering sales, HR, finance, and more, creating a unified digital ecosystem.

Why Choose Cloud-Based Solutions?

Cloud-based platforms simplify workflows, eliminate the need for expensive hardware, and support remote operations with ease.

Customer Relationship Management (CRM)

HubSpot CRM

A free solution offering lead management, email marketing automation, and sales tracking, tailored for growing businesses.

Salesforce Essentials

Designed for SMEs, this tool streamlines customer interactions and automates sales processes for better efficiency.

Why Does CRM Matter?

CRM tools centralize customer data, improve engagement, and enable data-driven decision-making to enhance customer satisfaction.

Project Management and Collaboration

Trello

Uses visual boards to track tasks and project statuses, making it intuitive for team collaboration.

Asana

A versatile platform that helps teams plan, manage, and monitor project progress with clear timelines.

Slack

Enhances communication by integrating with other tools, ensuring seamless collaboration and real-time updates.

Impact of Project Management Tools

Streamlined project management fosters productivity, keeps teams aligned, and ensures timely project delivery.

Marketing and Analytics

Mailchimp

Automates email marketing campaigns, tracks engagement, and provides insights for targeted strategies.

Google Analytics

Delivers in-depth analysis of website traffic, helping SMEs optimize marketing efforts and customer experience.

Canva

A user-friendly design tool for creating professional-grade marketing assets like social media graphics and ads.

How Do These Help?

Marketing tools empower SMEs to reach audiences effectively, analyze performance, and refine strategies for better ROI.

Finance and Accounting

QuickBooks

Simplifies accounting tasks, including invoicing, expense tracking, and financial reporting, suitable for small businesses.

Wave

A free tool for invoicing and expense tracking, with payroll available as a paid add-on, offering an accessible solution for startups.

Value of These Solutions

Efficient financial tools save time, reduce errors, and provide actionable insights into business health.

Cybersecurity

Norton Small Business

Shields devices and data against malware, ensuring robust protection for small business networks.

LastPass

Simplifies password management, enhancing security with features like encrypted storage and auto-login.

Cloudflare

Boosts website performance while protecting against cyberattacks, ensuring uptime and safety.

Significance of Cybersecurity

Strong security measures safeguard sensitive data, protect reputations, and prevent financial losses from breaches.

Data Analytics and Business Intelligence

Microsoft Power BI

Converts raw data into actionable insights using advanced analytics and interactive visualizations.

Tableau

Enables SMEs to create custom dashboards, uncover trends, and make informed decisions.

Why Analytics?

Data-driven insights allow SMEs to identify opportunities, improve processes, and maintain a competitive edge.

E-Commerce Platforms

Shopify

A complete solution for building, managing, and scaling online stores with minimal technical expertise required.

WooCommerce

Turns WordPress websites into feature-rich e-commerce stores, offering flexibility and customizability.

E-Commerce Platforms Impact

These platforms empower SMEs to reach wider markets, manage sales efficiently, and adapt to online consumer behavior.

Why Should You Tailor Your Tools to Your Business?

When selecting tools, SMEs should prioritize solutions that:

Align with business goals: Avoid unnecessary complexities by choosing tools that directly address operational needs.

Offer scalability: Select platforms capable of growing with the business, ensuring long-term viability.

Provide comprehensive support: Opt for tools with accessible training and responsive customer assistance to streamline adoption.

By leveraging these technologies, SMEs can accelerate their digital transformation journey, enhance operational efficiency, and secure a competitive advantage in the evolving market landscape.

Conclusion

Digital transformation is no longer a choice for SMEs—it is an essential pathway to achieving growth, competitiveness, and long-term sustainability. With the right strategies, tools, and execution, SMEs can optimize their operations, elevate customer experiences, and unlock new growth avenues. Although the journey may appear complex, segmenting it into actionable steps and leveraging expert support can significantly ease the transition.

At TechAhead, we empower SMEs to confidently navigate the digital transformation process. Our expertise spans diverse domains, including cloud-based solutions, advanced data analytics, process automation, and robust cybersecurity measures. We craft customized strategies tailored to the specific challenges faced by SMEs, ensuring a practical and impactful approach.

Our services encompass the entire transformation journey—from crafting a strategic roadmap to seamless implementation and employee training. This holistic approach guarantees that businesses experience a smooth shift to a digitally driven operational model. By aligning cutting-edge technologies with SME objectives, we enable sustained progress in an increasingly digital economy.

Don’t let resource constraints or limited expertise hinder your digital transformation journey. Partner with TechAhead to transform your SME into a digitally empowered enterprise equipped for future challenges.

Contact us today or schedule a consultation with our experienced professionals to take the first step toward innovation and growth!

Source URL: Empowering-smes-a-guide-to-digital-transformation-for-financial-success/

AWS Cloud Migration: Benefits, Strategies, and Phases Simplified

Many businesses embark on their cloud migration journey with a strategy known as “lift and shift.” This approach involves relocating existing applications from on-premises environments to the cloud without altering their architecture. It’s an efficient and straightforward starting point, often appealing due to its simplicity and speed.

However, as companies dive deeper into the cloud ecosystem, they uncover a broader spectrum of possibilities. Lift and shift, though effective initially, is only the foundation of cloud migration. Businesses quickly realize that cloud computing offers far more than just infrastructure relocation.

To unlock its true potential, modernization becomes essential. Modernization transforms applications to align with cloud-native architectures. This ensures businesses can harness advanced features like scalability, resilience, and cost efficiency. It’s no longer about merely shifting workloads; it’s about reimagining them for the future.

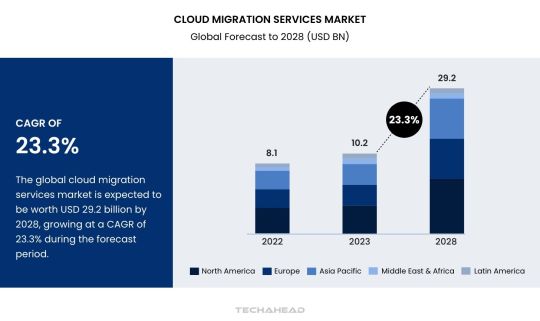

This evolution in approach is also reflected in the market’s staggering growth. According to Gartner, Inc., global end-user spending on public cloud services is projected to grow by 20.4% in 2024, reaching $675.4 billion from $561 billion in 2023. Generative AI (GenAI) and application modernization are major drivers of this surge. This data underscores the strategic importance of not just migrating to the cloud but modernizing applications to stay competitive in an evolving landscape.
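The quoted growth rate can be checked directly from the two spending totals: (675.4 - 561) / 561 ≈ 0.204, or about 20.4%. A minimal check in Python, using only the Gartner figures above:

```python
def growth_pct(previous: float, current: float) -> float:
    """Year-over-year growth as a percentage."""
    return (current - previous) / previous * 100.0


# Gartner figures quoted above, in billions of US dollars.
spend_2023 = 561.0
spend_2024 = 675.4
print(f"{growth_pct(spend_2023, spend_2024):.1f}%")  # prints 20.4%
```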

For instance, early adopters of cloud migration often struggled with limited performance gains post-migration. They soon discovered that while lift and shift addressed immediate needs, it didn’t optimize long-term efficiency. Modernization solved this challenge by enabling applications to utilize the dynamic capabilities of cloud platforms.

This shift in approach isn’t just about technology—it’s about competitiveness. Businesses that embrace modernization gain agility and faster time-to-market, giving them a significant edge. They leverage tools like containerization and serverless computing, allowing seamless adaptation to evolving customer demands.

In this blog, we’ll delve into the reasons why lift and shift is just the starting line. We’ll also explore how modernization drives real value and share best practices for ensuring a smooth transition. By the end, you’ll understand why adapting to the evolving cloud landscape is not just a choice—it’s a necessity.

Benefits of AWS Migration

Transitioning your infrastructure to the AWS cloud unlocks unparalleled scalability and efficiency. AWS provides a suite of advanced computing resources tailored to manage IT operations seamlessly. This enables your business to channel its efforts, resources, and investments into core activities that drive growth and profitability.

By adopting AWS cloud infrastructure, you eliminate the constraints of physical data centers and gain unrestricted, anytime-anywhere access to your data. Global giants like Netflix, Facebook and the BBC leverage AWS for its unmatched reliability and innovation. Let’s explore how AWS helps streamline IT operations while ensuring cost-effectiveness and agility.

Cost Efficiency

Expanding on-premises infrastructure typically requires significant investment in hardware and administrative overhead. AWS replaces these upfront costs with a pay-as-you-go model.

Zero Upfront Investment: Run enterprise applications and systems without the need for large initial capital.

Flexible Scaling: AWS enables businesses to upscale or downscale resources instantly, ensuring that you never pay for unused capacity. This dynamic scaling matches your operational demands and avoids the waste associated with overprovisioning.

Advanced Cost Control: AWS provides tools like Cost Explorer and AWS Budgets, helping businesses track, forecast, and optimize cloud expenses. By analyzing consumption patterns, organizations can minimize waste and ensure maximum return on investment (ROI).

Reduced Maintenance Overheads: Without the need to maintain physical servers, businesses save on administrative and repair costs, redirecting budgets to strategic growth areas.
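To see why paying only for used capacity avoids overprovisioning waste, the sketch below compares a fixed fleet sized for peak demand with idealized on-demand capacity that tracks hourly demand exactly. The hourly rate and demand profile are made-up numbers; real AWS pricing varies by service, Region, and purchase option (for example, Savings Plans or Reserved Instances), and on-demand rates are usually higher per hour than committed pricing.

```python
def fixed_cost(hourly_demand: list[int], rate_per_server_hour: float) -> float:
    """Cost of keeping enough servers for the peak running every hour."""
    peak = max(hourly_demand)
    return peak * rate_per_server_hour * len(hourly_demand)


def on_demand_cost(hourly_demand: list[int], rate_per_server_hour: float) -> float:
    """Cost when capacity is scaled each hour to match demand exactly (idealized)."""
    return sum(hourly_demand) * rate_per_server_hour


if __name__ == "__main__":
    # Hypothetical day (servers needed per hour): quiet night, busy day, short peak, evening.
    demand = [2] * 8 + [6] * 8 + [10] * 4 + [4] * 4
    rate = 0.10  # assumed USD per server-hour
    fixed = fixed_cost(demand, rate)
    elastic = on_demand_cost(demand, rate)
    print(f"fixed: ${fixed:.2f}, on-demand: ${elastic:.2f}, "
          f"saved: {100 * (1 - elastic / fixed):.0f}%")
```

The gap between the two numbers is exactly the idle capacity a peak-sized fleet pays for during quiet hours; tools like Cost Explorer help reveal that pattern in real usage data.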

Enhanced Flexibility

AWS offers unparalleled adaptability, making it suitable for businesses of all sizes, whether they are start-ups, enterprises, or global businesses. Its integration capabilities enable smooth migrations and rapid scaling.

Seamless Compatibility: AWS supports a vast number of programming languages, operating systems, and database types. This ensures that existing applications or software frameworks can integrate effortlessly, eliminating time-consuming reconfigurations.

Rapid Provisioning of Resources: Whether migrating applications, launching new services, or preparing for DevOps, AWS provides the agility to provision resources instantly. For instance, during seasonal demand spikes, businesses can quickly allocate additional capacity and scale back during off-peak times.

Developer Productivity: Developers save time as they don’t need to rewrite codebases or adopt new frameworks. This allows them to focus on building innovative applications rather than troubleshooting compatibility issues.
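The seasonal-spike example can be sketched with a simplified proportional scaling rule: if average CPU utilization is above target, add capacity in proportion; if below, remove it, within minimum and maximum bounds. This approximates the idea behind target-tracking policies in AWS Auto Scaling but is not AWS's exact algorithm; the 50% target and the size bounds are assumptions.

```python
import math


def desired_capacity(current: int, avg_cpu_pct: float, target_cpu_pct: float = 50.0,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Scale instance count so utilization moves toward the target (simplified model)."""
    if current <= 0:
        raise ValueError("current capacity must be positive")
    # Total load is roughly current * avg_cpu; spread it so each instance runs near target.
    needed = math.ceil(current * avg_cpu_pct / target_cpu_pct)
    # Clamp to the configured group size limits.
    return max(min_size, min(max_size, needed))


if __name__ == "__main__":
    print(desired_capacity(4, 90.0))  # seasonal spike: prints 8
    print(desired_capacity(8, 20.0))  # off-peak: prints 4
```

The maximum bound matters as much as the rule itself: it caps spending if a spike is larger than expected.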

Unmatched Security

Security is a cornerstone of AWS’s offerings, ensuring that your data remains protected against internal and external threats. AWS combines global infrastructure standards with customizable tools to meet unique security needs.

Shared Responsibility Model: AWS takes care of the underlying infrastructure, including physical security and global compliance. Customers are responsible for managing access, configuring permissions, and securing their data.

Data Encryption: AWS allows businesses to encrypt data both at rest and in transit, ensuring end-to-end protection. Businesses can leverage services like AWS Key Management Service (KMS) for robust encryption.

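Compliance and Governance: AWS maintains certifications and attestations against internationally recognized standards such as ISO 27001 and SOC, and provides services and contractual terms that support GDPR compliance. This helps businesses meet legal and regulatory requirements, although under the shared responsibility model customers remain responsible for how they configure and use these services.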

Threat Mitigation: AWS offers tools like AWS Shield and GuardDuty to detect and mitigate cybersecurity threats in real time, providing peace of mind in a rapidly evolving threat landscape.

Resilient Disaster Recovery

Disruptions such as power outages, data corruption, or natural disasters can cripple traditional IT systems. AWS equips businesses with robust disaster recovery solutions to maintain operational continuity.

Automated Recovery Processes: AWS simplifies disaster recovery through services like AWS Elastic Disaster Recovery, which automates recovery workflows and reduces downtime significantly.

Global Redundancy: Data can be replicated across multiple Availability Zones and geographic Regions, so that if one Region experiences issues, operations can fail over to another. This minimizes disruptions and maintains business continuity.

Cost-Efficient Solutions: Unlike traditional disaster recovery setups that duplicate hardware, AWS’s cloud-based approach uses on-demand resources. This reduces capital investments while delivering the same level of protection.

Faster Recovery Times: With AWS, businesses can restore systems and data within minutes, ensuring minimal impact on operations and customer experiences.
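Recovery goals are usually expressed as a recovery point objective (RPO, how much data loss is acceptable) and a recovery time objective (RTO, how long restoration may take). The sketch below checks two hypothetical DR plans against both. The worst-case data-loss model (replication interval plus replication lag) and all figures are simplifying assumptions, not measurements of AWS Elastic Disaster Recovery.

```python
from dataclasses import dataclass


@dataclass
class DrPlan:
    replication_interval_min: float  # how often data is copied to the recovery site
    replication_lag_min: float       # typical delay before a copy is usable
    estimated_restore_min: float     # time to bring systems back up


def meets_objectives(plan: DrPlan, rpo_min: float, rto_min: float) -> dict[str, bool]:
    """Check worst-case data loss against RPO and restore time against RTO."""
    worst_case_loss = plan.replication_interval_min + plan.replication_lag_min
    return {
        "rpo_met": worst_case_loss <= rpo_min,
        "rto_met": plan.estimated_restore_min <= rto_min,
    }


if __name__ == "__main__":
    nightly_backup = DrPlan(replication_interval_min=24 * 60, replication_lag_min=30,
                            estimated_restore_min=240)
    continuous = DrPlan(replication_interval_min=1, replication_lag_min=1,
                        estimated_restore_min=15)
    for name, plan in [("nightly backup", nightly_backup), ("continuous replication", continuous)]:
        print(name, meets_objectives(plan, rpo_min=15, rto_min=60))
```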

Cloud migration with AWS is more than just a technological upgrade; it’s a strategic move that empowers businesses to innovate, scale, and thrive in a competitive market. By leveraging AWS, organizations can reduce costs, enhance flexibility, strengthen security, and ensure resilience.

7 Cloud Migration Strategies for AWS

AWS’s updated 7 Rs model for cloud migration builds on Gartner’s original 5 Rs framework. Each strategy caters to unique workloads and business needs, offering a tailored approach for moving to the cloud. Let’s explore these strategies in detail.

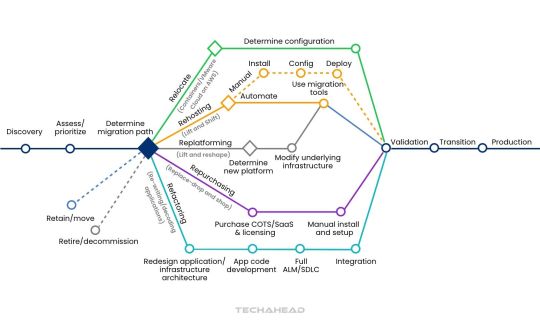

Rehost (Lift and Shift)

The rehost strategy involves moving workloads to the cloud with minimal changes using Infrastructure-as-a-Service (IaaS). Enterprises migrate applications and dependencies as they are, retaining the existing configurations. This approach ensures operational consistency and reduces downtime during migration. It is an easy-to-perform option, especially for businesses with limited in-house cloud expertise. Additionally, rehosting helps businesses avoid extensive re-architecting, making it a cost-effective and efficient solution.

Relocate (Hypervisor-Level Lift and Shift)

Relocating shifts workloads to a cloud-based version of the same platform without altering source code or disrupting ongoing operations. For example, organizations can move VMware-based workloads to VMware Cloud on AWS, or move container workloads from an on-premises Kubernetes platform to Amazon Elastic Kubernetes Service (EKS). This strategy minimizes downtime and ensures seamless business operations during migration. Relocating maintains existing configurations, eliminating the need for staff retraining or new hardware. It also offers predictable migration costs, with clear scalability limits to control expenses.

Replatform (Lift and Reshape)

The replatform approach optimizes workloads by introducing cloud-native features while maintaining the core application architecture. Applications are modernized to leverage automation, scalability, and cloud compliance without rewriting the source code. This strategy enhances resilience and flexibility while preserving legacy functionality. Partial modernization reduces migration costs and time while ensuring minimal disruptions. Teams can manage re-platformed workloads with ease since the fundamental application structure remains intact.

Refactor (Re-architect)

Refactoring involves re-architecting workloads to take full advantage of cloud-native technologies and features. This strategy supports advanced capabilities like serverless computing, autoscaling, and enhanced automation.

Refactored workloads are highly scalable and can adapt to changing demands efficiently. Applications are often broken into microservices, improving availability and operational efficiency. Although refactoring requires significant initial investment, it reduces long-term operational costs by optimizing the cloud framework.

Repurchase (Drop and Shop)

Repurchasing replaces existing systems with third-party solutions available on the cloud marketplace. Organizations adopt a Software-as-a-Service (SaaS) model, eliminating the need for infrastructure management. This approach reduces operational efforts and simplifies regulatory compliance, ensuring efficient governance. Repurchasing aligns IT costs with revenue through consumption-based pricing models. It also accelerates migration timelines, enhancing user experience and performance with minimal downtime.

Retire

The retirement strategy focuses on decommissioning applications that no longer hold business value. Inefficient legacy systems are terminated or downsized to free up resources for more critical functions. Retiring outdated workloads reduces operational costs and simplifies IT management. This strategy also allows businesses to streamline their application portfolio, focusing efforts on modernizing essential systems.

Retain (Revisit)

The retain strategy is used for applications that cannot yet be migrated to the cloud. Some workloads rely on systems that need to be transitioned first, making retention a temporary solution. Businesses may also retain applications while waiting for SaaS versions from third-party providers. Retaining workloads provides flexibility, allowing organizations to revisit migration strategies and align them with long-term objectives.
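Choosing among the seven strategies is a judgment call, but a simple rules-based triage can help sort a large portfolio before detailed review. The sketch below encodes one possible ordering of questions; the `Workload` attributes and the rules are hypothetical simplifications of the descriptions above, not an official AWS decision tree.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    RETIRE = "Retire"
    RETAIN = "Retain"
    REPURCHASE = "Repurchase"
    RELOCATE = "Relocate"
    REHOST = "Rehost"
    REPLATFORM = "Replatform"
    REFACTOR = "Refactor"


@dataclass
class Workload:
    # All attributes are hypothetical inputs an assessment team would fill in.
    name: str
    has_business_value: bool = True
    blocked_by_dependencies: bool = False    # must wait for other systems to move first
    saas_alternative: bool = False           # a suitable SaaS product exists
    needs_cloud_native_scale: bool = False   # justifies re-architecting
    quick_managed_service_win: bool = False  # e.g. swap a self-managed DB for a managed one
    runs_on_vmware: bool = False             # a hypervisor-level move is possible


def triage(w: Workload) -> Strategy:
    """Return a first-pass strategy; results should still be reviewed by architects."""
    if not w.has_business_value:
        return Strategy.RETIRE
    if w.blocked_by_dependencies:
        return Strategy.RETAIN
    if w.saas_alternative:
        return Strategy.REPURCHASE
    if w.needs_cloud_native_scale:
        return Strategy.REFACTOR
    if w.quick_managed_service_win:
        return Strategy.REPLATFORM
    if w.runs_on_vmware:
        return Strategy.RELOCATE
    return Strategy.REHOST  # default: move as-is, modernize later


if __name__ == "__main__":
    portfolio = [
        Workload("legacy-reporting", has_business_value=False),
        Workload("email", saas_alternative=True),
        Workload("checkout", needs_cloud_native_scale=True),
        Workload("intranet"),
    ]
    for w in portfolio:
        print(f"{w.name}: {triage(w).value}")
```

Defaulting to Rehost mirrors the "lift and shift as a starting line" idea from the introduction: anything not flagged for a stronger option moves first and can be revisited for modernization later.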

Cloud Transformation Phases

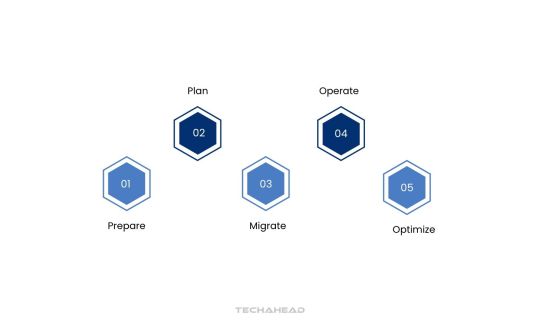

Cloud transformation is a comprehensive process where businesses transition from traditional IT infrastructure to a modern, cloud-centric framework. Below is an in-depth exploration of its critical phases.

Prepare

The preparation phase sets the foundation for a successful migration by assessing feasibility and identifying benefits.

Evaluate current IT infrastructure: Audit existing hardware, software, and networks to confirm whether the cloud aligns with organizational goals. This step establishes how ready the organization is to migrate.

Identify potential risks: Analyze risks like data loss, downtime, or security threats. A detailed mitigation strategy keeps disruptions to a minimum.

Analyze interdependencies: Understand how applications, databases, and systems interact to prevent issues during migration. Dependency mapping is vital for seamless transitions.

Select a migration strategy: Choose from approaches such as rehosting, refactoring, or rebuilding. Tailor the strategy to meet specific organizational needs and ensure efficiency.
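Dependency mapping can be turned directly into a migration order. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to group services into waves, so that each service moves only after everything it depends on has moved. The service names, the dependency map, and the `migration_waves` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the services in its set.
dependencies = {
    "web-frontend": {"orders-api", "auth-service"},
    "orders-api": {"orders-db", "auth-service"},
    "auth-service": {"users-db"},
    "orders-db": set(),
    "users-db": set(),
}


def migration_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group services into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


for i, wave in enumerate(migration_waves(dependencies), start=1):
    print(f"Wave {i}: {', '.join(wave)}")
```

If the map contains a cycle, `prepare()` raises `graphlib.CycleError`, which is itself a useful finding: services that depend on each other may need to move together in a single wave.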

Plan

The planning phase involves creating a structured roadmap for the migration process, ensuring alignment with business objectives.

Define goals and objectives: Establish specific goals like cost reduction, scalability improvement, or enhanced security. This clarity drives project focus.

Select a cloud service provider: Choose a provider that matches your organization’s priorities. Evaluate cost, performance, security, and customer support before finalizing.

Identify required resources and tools: Determine essential resources such as migration tools, management software, and skilled personnel to execute the project effectively.

Migrate

Migration focuses on the actual transfer of IT infrastructure, applications, and data to the cloud.

Configure and deploy cloud resources: Set up virtual machines, storage, and networking components to create a robust cloud environment for workloads.

Migrate data securely: Use data migration tools or replication techniques to ensure secure and accurate data transfer with minimal disruptions.

Test applications in the cloud: Run performance tests to verify that applications meet operational requirements. Address issues before full-scale deployment.
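One simple way to confirm that migrated data arrived intact is to compare cryptographic checksums of the source and the copy. The minimal sketch below uses Python's standard `hashlib` and hashes files in chunks so large exports do not need to fit in memory. The file names are hypothetical, and a local `shutil.copyfile` stands in for whatever transfer tool is actually used.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks and return the hex digest."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(source: Path, target: Path) -> bool:
    """True if the migrated copy is byte-for-byte identical to the source."""
    return sha256_of(source) == sha256_of(target)


# Usage: simulate a transfer locally inside a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "orders.csv"            # hypothetical export file
    src.write_text("id,total\n1,9.99\n")
    dst = Path(tmp) / "orders_migrated.csv"
    shutil.copyfile(src, dst)                 # stands in for the real transfer tool
    print(verify_transfer(src, dst))          # True
```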

Operate

The operation phase emphasizes managing and maintaining the cloud environment for optimal performance.

Monitor and update resources: Continuously monitor cloud infrastructure to identify bottlenecks and ensure resources align with evolving organizational needs.

Perform ongoing maintenance: Proactively resolve infrastructure or application issues to prevent service interruptions and maintain system integrity.

Address security concerns: Implement robust security measures, including encryption, access controls, and regular log reviews, to safeguard data and applications.
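Continuous monitoring usually means judging a resource over a window of samples rather than reacting to a single spike. The sketch below keeps the last few utilization readings per resource and flags those whose average stays above a threshold. The `UtilizationMonitor` class, the resource names, and the 80% threshold are illustrative assumptions, not values from any particular monitoring product.

```python
from collections import deque
from statistics import mean


class UtilizationMonitor:
    """Track the last `window` samples per resource and flag sustained hot spots."""

    def __init__(self, window: int = 5, threshold: float = 80.0):
        self.window = window
        self.threshold = threshold  # percent utilization (illustrative value)
        self.samples: dict[str, deque] = {}

    def record(self, resource: str, utilization: float) -> None:
        # deque(maxlen=...) silently drops the oldest sample once the window is full.
        self.samples.setdefault(resource, deque(maxlen=self.window)).append(utilization)

    def bottlenecks(self) -> list[str]:
        """Resources whose full window of samples averages above the threshold."""
        return sorted(
            name for name, s in self.samples.items()
            if len(s) == self.window and mean(s) > self.threshold
        )


monitor = UtilizationMonitor(window=3, threshold=80.0)
for cpu in (85, 90, 95):
    monitor.record("vm-app-1", cpu)  # sustained load
for cpu in (40, 99, 45):
    monitor.record("vm-app-2", cpu)  # one short spike
print(monitor.bottlenecks())  # ['vm-app-1']
```

Averaging over the window is the design choice here: `vm-app-2` briefly hits 99% but is not flagged, which avoids chasing transient spikes while still surfacing genuine bottlenecks.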

Optimize

Optimization ensures that cloud resources are fine-tuned for maximum performance and cost efficiency.

Monitor performance metrics: Use advanced monitoring tools to track application performance and identify improvement opportunities in real time.

Adjust and fine-tune resources: Scale resources dynamically based on demand to maintain performance without unnecessary cost overheads.

Leverage cost-saving features: Use provider offerings like auto-scaling, reserved instances, and spot instances to minimize operational costs while maintaining quality.
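Dynamic scaling is often expressed as a proportional rule: if each instance is running hotter than a target, add instances in proportion. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × metric / target), clamped to configured bounds. Cloud providers' target-tracking policies work along broadly similar lines, but the function name, bounds, and numbers here are illustrative assumptions rather than any provider's exact algorithm.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int = 1, max_size: int = 10) -> int:
    """Instance count that moves the per-instance metric toward `target`, within bounds."""
    if current <= 0 or target <= 0:
        raise ValueError("current and target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, metric=90, target=60))  # 6: scale out under load
print(desired_capacity(4, metric=20, target=60))  # 2: scale in when idle
print(desired_capacity(8, metric=95, target=50))  # 10: capped at max_size
```

The `max_size` cap is what keeps scaling from turning into unnecessary cost overheads, while `min_size` preserves a baseline for availability.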

By thoroughly understanding and executing each phase of cloud transformation, organizations can achieve a seamless transition to a modern, efficient cloud environment. This structured approach ensures scalability, performance, and long-term success.

Conclusion

Cloud migration is a multifaceted process that demands in-depth analysis of existing challenges and the alignment of strategic changes with business objectives. Selecting the right migration strategy depends on workload complexity, associated costs, and potential disruption to current systems. Each organization must evaluate these factors to ensure a smooth transition while minimizing impact.

While the benefits of a well-planned migration are significant, organizations must address the ongoing risks and effort required for maintenance. Ensuring compatibility and performance in the cloud environment demands continuous oversight and optimization.

For a deeper understanding of how TechAhead can streamline your cloud migration journey, explore our comprehensive guide on migrating enterprise workloads. Our cloud migration case studies also provide insights into the transformative advantages of moving critical business operations to the cloud. Partnering with experts ensures a seamless transition, unlocking agility, scalability, and innovation for your business.

Source URL: https://www.techaheadcorp.com/blog/aws-cloud-migration-benefits-strategies-and-phases-simplified/



Understanding Cloud Outages: Causes, Consequences, and Mitigation Strategies

Cloud computing has transformed business operations, providing unmatched scalability, flexibility, and cost-effectiveness. However, even leading cloud platforms are vulnerable to cloud outages.

Cloud outages can severely disrupt service delivery, jeopardizing business continuity and causing substantial financial setbacks. When a vendor’s servers experience downtime or fail to meet SLA commitments, the consequences can be far-reaching.

During a cloud outage, organizations often lose access to critical applications and data, rendering essential operations inoperable. This unavailability halts productivity, delays decision-making, and undermines customer trust.

Although cloud technology promises high reliability, no system is entirely immune to disruptions. Even the most reputable cloud service providers occasionally face interruptions due to unforeseen issues. These outages highlight the inherent challenges of cloud computing and the necessity for businesses to prepare for such contingencies.

While cloud computing offers transformative benefits, the risks of cloud outages demand proactive strategies. Organizations must adopt robust mitigation plans to ensure resilience and sustain operations during these inevitable disruptions.

Key Takeaways:

Cloud outages occur when services become unavailable. These disruptions impact businesses by affecting operations, causing financial loss, and harming reputation.

Power failures disrupt data centers, cybersecurity threats like DDoS attacks can compromise services, and human errors or technical failures can lead to downtime. Network problems and scheduled maintenance can also cause outages.

Outages have significant consequences; these include financial loss from service interruptions, reputational damage due to loss of customer trust, and legal implications from data breaches or non-compliance.

Distributing workloads across multiple regions, implementing strong security protocols, and continuously monitoring systems help prevent outages. Planning maintenance and having disaster recovery protocols ensure quick recovery from disruptions.

Businesses should focus on minimizing risks to ensure service availability and protect against potential disruptions.

What are Cloud Outages?

Cloud outages are periods when cloud-hosted applications and services become temporarily inaccessible. During these downtimes, users face slow response times, connectivity issues, or complete service disruptions. These interruptions can severely impact businesses across multiple dimensions.

The financial repercussions of cloud outages are immediate and far-reaching. When services go offline, organizations lose revenue as customers are unable to complete transactions. Additionally, businesses cannot track critical performance metrics, which can lead to operational inefficiencies and delayed decision-making.

Beyond monetary losses, cloud outages also cause reputational damage. Frustrated customers often perceive these disruptions as a sign of unreliability. A lack of transparent communication during downtime further exacerbates customer dissatisfaction. Over time, this can erode trust and push clients toward competitors offering more dependable solutions.

Another critical concern during cloud outages is the potential for legal consequences. If an outage leads to data loss, breaches, or compromised privacy, businesses may face litigation, regulatory penalties, and increased scrutiny. The fallout from such incidents can add both financial and reputational burdens.

Long-term consequences of cloud outages include reduced customer satisfaction, loss of client loyalty, and ongoing revenue declines. Organizations may also incur significant costs to restore affected systems and prevent future outages. Inadequate cloud infrastructure increases the risk of repeated disruptions, making businesses more vulnerable to prolonged downtimes.

To mitigate these risks, organizations must proactively invest in robust backup and recovery systems. Reliable disaster recovery plans and redundancies help minimize downtime, ensuring business continuity during unforeseen cloud outages. This strategic approach safeguards revenue streams, protects customer trust, and fortifies operational resilience.

Common Causes of Cloud Outages

Cloud outages can stem from various factors, both within and beyond the control of cloud vendors. These challenges must be addressed to ensure cloud services meet Service Level Agreements (SLAs) with optimal performance and reliability.
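SLA availability targets translate directly into a downtime budget, which helps put any outage in perspective. The sketch below assumes a 30-day month; real SLAs define their own measurement windows, exclusions, and credit rules, so the helper names and figures are illustrative only.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Maximum downtime per period that still meets an availability target."""
    return days * MINUTES_PER_DAY * (1 - sla_percent / 100)


def measured_availability(downtime_minutes: float, days: int = 30) -> float:
    """Availability percentage actually delivered over the period."""
    total = days * MINUTES_PER_DAY
    return 100 * (total - downtime_minutes) / total


for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% -> {allowed_downtime_minutes(sla):.1f} min per 30 days")
# 99.0% -> 432.0 min per 30 days
# 99.9% -> 43.2 min per 30 days
# 99.99% -> 4.3 min per 30 days
```

Each extra "nine" shrinks the budget tenfold, which is why a single one-hour outage is enough to break a 99.9% monthly commitment.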

Power Outages

Power disruptions are one of the most prevalent causes of cloud outages. Data centers operate on an enormous scale, consuming anywhere from tens to hundreds of megawatts of electricity. These facilities often rely on national power grids or third-party-operated power plants.

Consistently maintaining sufficient electricity supply becomes increasingly difficult as demand surges alongside market growth. Limited power scalability can leave cloud infrastructure vulnerable to sudden disruptions, impacting the availability of hosted services. To address this, cloud vendors invest heavily in backup solutions like on-site generators and alternative energy sources.

Cybersecurity Threats

Cyber attacks, such as Distributed Denial of Service (DDoS) attacks, overwhelm data centers with malicious traffic, disrupting legitimate access to cloud services. Despite robust security measures, attackers continuously identify loopholes to exploit. These intrusions may trigger automated protective mechanisms that mistakenly block legitimate users, leading to unexpected downtime.

In severe cases, breaches result in data leaks, service shutdowns, or prolonged outages. Cloud vendors constantly refine their defense systems to combat these evolving threats and ensure service continuity despite rising cybersecurity challenges.
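Automated protection against traffic floods is commonly built on rate limiting, and a token bucket is one widely used form of it. The sketch below gives each client a bucket that allows short bursts and then refuses requests until tokens refill; it also shows how a legitimate user who bursts too quickly can be blocked. The `TokenBucket` class and its parameters are illustrative; the injectable `clock` is only there to make the example deterministic.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last request, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # request throttled


fake_time = [0.0]
bucket = TokenBucket(rate=1.0, capacity=3, clock=lambda: fake_time[0])
print([bucket.allow() for _ in range(5)])  # [True, True, True, False, False]
fake_time[0] = 2.0                         # two seconds later: two tokens refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

In practice one bucket would be kept per client or source address; tuning `rate` and `capacity` is the trade-off between absorbing an attack and accidentally rejecting genuine users.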

Human Error

Human errors, though rare, can have catastrophic effects on cloud infrastructure. A single misconfiguration or incorrect command may trigger a chain reaction, causing widespread outages. Even leading cloud providers have experienced significant disruptions due to human oversight.

For instance, in February 2017 an incorrectly entered command, run while debugging Amazon S3 in AWS's US-EAST-1 region, removed a much larger set of servers than intended and disrupted a large number of websites and online services. Although anomaly detection systems can identify such issues early, complete restoration often requires system-wide restarts, prolonging the recovery period. Cloud vendors mitigate this risk through rigorous protocols, automation tools, and comprehensive staff training.
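Automation tools can reduce the blast radius of a mistyped command by refusing operations that are obviously too broad. The sketch below wraps a hypothetical server-removal step with two guardrails: a cap on the fraction of the fleet removed in one step, and a floor on remaining capacity. The `safe_remove` function, its thresholds, and the node names are assumptions for illustration, not any vendor's actual tooling.

```python
class CapacityGuardError(Exception):
    """Raised when a command would remove too much capacity at once."""


def safe_remove(servers: list[str], to_remove: list[str],
                min_remaining: int, max_fraction: float = 0.1) -> list[str]:
    """Return the fleet after removal, refusing unknown targets or risky steps."""
    removal = set(to_remove)
    unknown = removal - set(servers)
    if unknown:
        raise CapacityGuardError(f"unknown servers: {sorted(unknown)}")
    if len(removal) > max_fraction * len(servers):
        raise CapacityGuardError(
            f"refusing to remove {len(removal)} of {len(servers)} servers in one step")
    remaining = [s for s in servers if s not in removal]
    if len(remaining) < min_remaining:
        raise CapacityGuardError(
            f"only {len(remaining)} servers would remain; minimum is {min_remaining}")
    return remaining


fleet = [f"node-{i}" for i in range(20)]
print(len(safe_remove(fleet, ["node-3", "node-7"], min_remaining=15)))  # 18
try:
    safe_remove(fleet, fleet[:12], min_remaining=15)  # an over-broad command
except CapacityGuardError as err:
    print("blocked:", err)
```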

Software and Technical Glitches

Cloud infrastructure relies on a complex interplay of hardware and software components. Even minor bugs or glitches within this ecosystem can trigger unexpected cloud outages. Technical faults may remain undetected during routine monitoring until they manifest as critical service disruptions. When these incidents occur, identifying and resolving the root cause can take time, leaving end-users unable to access essential services. Cloud vendors implement automated monitoring, rigorous testing, and proactive maintenance to identify vulnerabilities before they impact operations.

Networking Issues

Networking failures are another significant contributor to cloud outages. Cloud vendors often rely on telecommunications providers and government-operated networks for global connectivity. Issues in these external networks, such as damaged infrastructure or cross-border disruptions, are beyond the vendor's direct control. To mitigate these risks, leading cloud providers operate data centers across geographically diverse regions. Dynamic workload balancing allows cloud vendors to shift operations to unaffected regions, ensuring uninterrupted service delivery even during network failures.
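Shifting operations to an unaffected region can be reduced, in its simplest form, to "route to the most preferred region that is currently healthy". The sketch below assumes health-check results are already available as booleans; the `Region` class, the `route` function, and the region names are hypothetical, and production systems typically implement this in DNS or load-balancer failover policies rather than application code.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    priority: int  # lower number = preferred
    healthy: bool  # result of the latest health check (assumed to exist)


def route(regions: list[Region]) -> str:
    """Send traffic to the highest-priority healthy region."""
    healthy = [r for r in regions if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy region available")
    return min(healthy, key=lambda r: r.priority).name


regions = [Region("us-east", 1, True), Region("eu-west", 2, True), Region("ap-south", 3, True)]
print(route(regions))       # us-east
regions[0].healthy = False  # simulate a network failure in the primary region
print(route(regions))       # eu-west
```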

Maintenance Activities

Scheduled and unscheduled maintenance is essential for improving cloud infrastructure performance and cloud security. Cloud vendors routinely conduct upgrades, fixes, and system optimizations to enhance service delivery. However, these maintenance activities may require temporary service interruptions, workload transfers, or full system restarts.

During this period, end-users may experience service disruptions classified as cloud outages. Vendors strive to minimize downtime through well-planned maintenance windows, redundancy systems, and real-time communication with customers.
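Well-planned maintenance windows also help monitoring teams tell announced downtime apart from an unexpected outage. The sketch below checks whether a timestamp falls inside a daily UTC window, including windows that wrap past midnight. The function name and the 23:00 to 02:00 window are hypothetical; it expects timezone-aware datetimes.

```python
from datetime import datetime, time, timezone


def in_maintenance_window(moment: datetime, start: time, end: time) -> bool:
    """True if `moment` (converted to UTC) falls inside a daily [start, end) window."""
    t = moment.astimezone(timezone.utc).time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end  # window wraps past midnight, e.g. 23:00-02:00


window = (time(23, 0), time(2, 0))  # hypothetical nightly window in UTC
print(in_maintenance_window(datetime(2024, 5, 1, 23, 30, tzinfo=timezone.utc), *window))  # True
print(in_maintenance_window(datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc), *window))   # False
```

Downtime detected outside any announced window can then be escalated as a genuine incident, while downtime inside it is checked against the maintenance plan.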

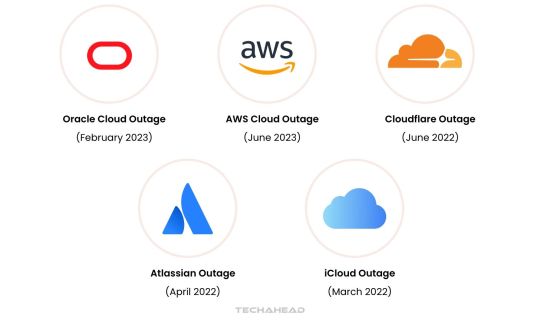

Global Cloud Outage Statistics and Notable Cases