[Applitools v10] Our Enterprise Visual UI Testing Platform: 10,000 Users, 300 Companies, 100 Million Tests, 1 Billion Component Level Results

Guest post by Aakrit Prasad, VP Product @ Applitools

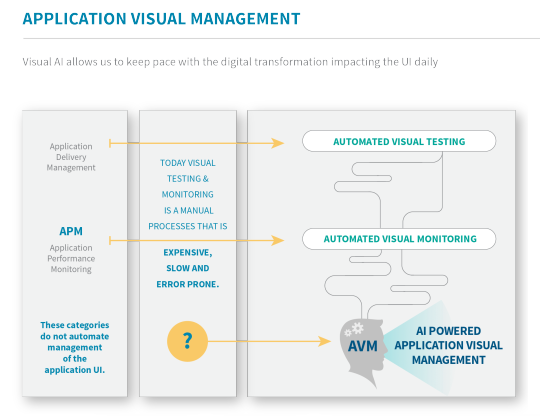

From Visual AI Inception to Application Visual Management (AVM)

Today’s digital era is increasingly dominated by user interactions with our brands and products through visual interfaces on web and mobile devices. It’s all about user convenience, time savings, and in many cases the ease of purchase instead of shopping in-store. As businesses evolve to serve the majority of their brand interactions through digital experiences, digital transformation means their software becomes their brand. For example, an e-commerce application crash or UI bug can be analogous to a negative in-store customer experience. Obviously a scenario any brand owner must avoid, but how?

Automated UI testing for changes in application visual interfaces across browsers, devices, form factors, viewport sizes, and languages continues to be a huge challenge, one exacerbated by the enterprise focus on release agility due to pressure to innovate faster and deliver better customer experiences with each release. To tackle the resulting UI testing challenges, enterprises have historically relied on either manual visual validation, which is prone to repeated human error, or rudimentary pixel-based image comparison tools, which yield many false positives. We know today that neither approach scales. Enter Applitools Visual AI-based technology, which detects UI issues fast, across hundreds of interfaces, with 99.999% accuracy.

Over the past couple of years, we’ve learned from our customers that comprehensive visual UI testing requires additional capabilities. These include baseline management, team collaboration on issues, automated maintenance of tests with intuitive dashboarding & analytics, as well as wider SDK coverage with seamless integrations into ecosystem tools used for CI/CD, ticketing, SCM, and more. In the recent version 10 release, we added all these key capabilities to officially launch our enterprise-grade platform for Automated Visual UI Testing, putting us on the path to Application Visual Management.

Our v10 Enterprise Platform Components Explained

1. The Core Visual AI

Applitools is the first and only enterprise product that gives automation teams the ability to fully manage the visual UI layer of their applications to deliver great customer experiences. Our robust AI-based solution, trained with deep learning on the largest data set of UI validations in the world, is now achieving accuracy of 99.999%. It’s the key component necessary to the delivery of AVM. Our approach to visual testing has been AI-first since inception, and our AI engine continues to evolve through machine learning by analyzing millions of new images daily. Beyond coverage for static and dynamic web content, our AI technology is also used for document validation, with growing use cases to validate all areas where manual human validation is a bottleneck to scalability, quality, and growth.

2. Visual Test Management

Visual content continues to evolve at a faster pace than before, with more dynamic and personalized experiences across various languages and interfaces. Thus, comprehensive visual testing can involve hundreds of test scenarios and use cases. The Applitools visual test management platform is designed for this complexity. With the ability to handle single and component-level UI validations across different browsers, devices, languages, and content types (dynamic, PDF), Applitools provides an intuitive UI to manage thousands of daily tests. Furthermore, the test manager allows a test to cover various UI pages as part of a user journey and offers several automated maintenance features for ease of use, leveraging our AI.

3. Dashboard, Analytics, and Collaboration

With the capability to run many tests at scale comes the need to analyze the results quickly. Applitools offers powerful dashboarding & analytics across all tests to see execution results, distribution by status, and coverage (browsers, devices), and to uncover root causes of any UI issues. Furthermore, issues identified can be assigned to users for review. Often, however, issues detected require collaboration within teams to assess impact. Applitools allows teams to discuss any issue or remark on the screenshot itself, with full visual context, and supports built-in notification management.

4. Baseline Management

Behind all the tests and issues identified is an intelligent baseline management service, which rounds out our Enterprise offering. With baseline management, users can map baselines to tests & apps, view the history of UI versions, compare them, and create new definitions to cover use cases such as cross-browser or cross-device testing. Automated maintenance features allow for updates to several baselines, where necessary, with just the click of a button. In addition, baselining supports branching and merging of tests, and integrates seamlessly with GitHub.

5. Ecosystem Integrations

Applitools also supports many integrations across the ecosystem, including with industry vendors focused on other areas of web & mobile test automation, such as Sauce Labs and Perfecto Mobile. Supporting all continuous integration tools via APIs, Applitools also provides built-in plug-ins for some of the most popular: Jenkins, TeamCity, and Team Foundation Server. In addition, integrations with Jira for ticketing, GitHub for source control, and Slack for collaboration allow users to easily work with Applitools daily.

Summary

As enterprises across every industry continue on their journey to digital transformation, their software has a larger footprint on their brand. The customer experience for any such business starts at the visual layer, where issues found have an instant impact. This makes Application Visual Management a crucial and necessary part of the success for every business. We’re proud of the platform we’ve now built and have all of you to thank for the ideas, the usage, the product feedback. Keep it coming, and expect much more to come in 2018 and beyond!

Yours In Visual Perfection, Aakrit VP Product @ Applitools

Visual Testing as an Aid to Functional Testing

Guest post by Gil Tayar, Senior Architect at Applitools

In a previous blog post of mine "Embracing Agility: A Practical Approach for Software Testers", I discussed how an agile team changes the role of the software tester. Instead of being a gatekeeper that tests the product after it is developed, the software tester is part of the development process.

I also described how the shift-left movement enables the software tester to concentrate on the more interesting tests, and leave the boring tests (“this form field can only accept email addresses”) to the software developer. But those interesting tests, at least the ones we decided to automate, need to be written, and written quickly.

In this blog post I will discuss a technique whereby you can use visual testing to make writing those tests much easier.

Visual Testing vs Functional Testing

Visual tests are usually used to check the visual aspects of your application. Makes sense, no? To automate tests that verify that your application looks OK, you write visual tests, and use visual testing tools such as Applitools Eyes. But visual testing tools can also be used for functional tests.

Really? How can visual testing help us write functional tests, tests that check how the application behaves? First, let’s understand how visual testing and functional testing of applications work.

Functional Testing

A functional test (in this blog post! I am sure that you can find other people that define it differently) is a test that starts an application in a certain state, performs a set of actions, and tests that the application is in the correct state after those actions.

So a functional test looks like this:

Load a specific set of application data

Start the application

Execute a set of actions on the UI (or the REST API!) of the application

Assert that the actual state of the application is the expected one.

Yes, you might argue that it is not the test that starts the application, and that a test should always create the specific set of data using other actions. You could, but that’s bikeshedding. True, no functional tests look exactly like this, but the idea is correct:

action, action, action

assertion, assertion, assertion

action, action, action

assertion, assertion, assertion

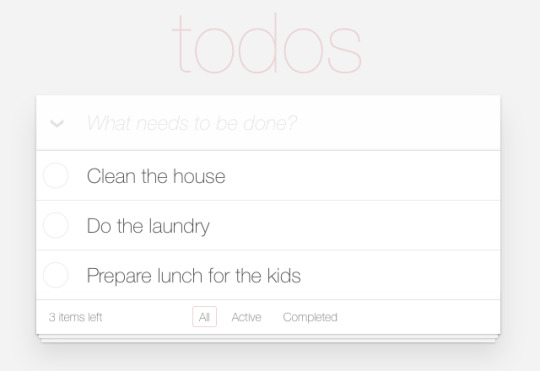

Let’s take an example of a todo list application:

Todo List

One functional test would be:

Action: check a todo

Assertion: it is checked in the “All” tab

Assertion: it is gone from the “Active” tab

Assertion: it is checked in the “Completed” tab

Implementing functional assertions

How would one go about implementing the assertions? If it’s a web application, and we’re using a browser automation library like Selenium WebDriver, then the tester would need to check whether the specific todo’s checkbox is checked, and also check that the other checkboxes are not checked. Also, check that the text of the todo is struck out, while the others are not struck out.

Oh, and don’t forget to check the “2 items left” text in the bottom left of the list.

And each such check is a pain in the butt — the tester needs to determine what the id of the control they are checking is. And sometimes there is no specific id (or class), and so the tester needs to go to the developer and ask them to add an id for testing, or if the tester is in an agile team, maybe add it themselves!

And this needs to be done across hundreds of tests, and thousands of assertions. This is practically impossible, and definitely impossible to maintain. What usually happens is that the developer chooses a subset of assertions, and tests just those. In our example, it would only assert that the checkbox of the todo item is checked, and forgo the other checks.
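To make the cost concrete, here is a minimal sketch of the todo test written with one functional assertion per detail. It assumes a hypothetical in-memory `TodoApp` that stands in for the real UI; none of these names come from Selenium or any other library, and a real browser test would additionally need a locator for every control.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Todo:
    text: str
    done: bool = False


@dataclass
class TodoApp:
    """Hypothetical in-memory stand-in for the todo list UI."""
    todos: List[Todo] = field(default_factory=list)

    def check(self, index: int) -> None:
        self.todos[index].done = True

    def visible(self, tab: str) -> List[Todo]:
        if tab == "Active":
            return [t for t in self.todos if not t.done]
        if tab == "Completed":
            return [t for t in self.todos if t.done]
        return list(self.todos)  # the "All" tab

    def footer(self) -> str:
        left = len(self.visible("Active"))
        return f"{left} item{'' if left == 1 else 's'} left"


def test_checking_a_todo() -> None:
    app = TodoApp([Todo("Buy milk"), Todo("Write tests"), Todo("Ship it")])

    # Action: check the first todo.
    app.check(0)

    # Assertions: one line for every detail we want to protect.
    assert app.visible("All")[0].done
    assert not app.visible("All")[1].done
    assert not app.visible("All")[2].done
    assert all(t.text != "Buy milk" for t in app.visible("Active"))
    assert [t.text for t in app.visible("Completed")] == ["Buy milk"]
    assert app.footer() == "2 items left"


test_checking_a_todo()
```

Six assertions for a single action, and the strike-through styling is still not covered.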

Visual Testing

We’ve seen what functional tests are, and one of the problems associated with them. Let’s move on to visual tests: a visual test (in this blog post! I am sure that you can find other people that define it differently) is a test that starts an application in a certain state, performs a set of actions, and tests that the application looks good after these actions.

See how similar they are? A visual test looks like this:

Load a specific set of application data

Start the application

Execute a set of actions on the UI (or the REST API!) of the application

Assert that the application looks correct

This is exactly like functional tests, except that the assertions are different. So let’s look at how assertions are performed in visual tests. How does a visual test go about testing that an application looks correct?

Implementing visual assertions

How do visual assertions work? Well, first of all, we take a screenshot of the screen — we are doing a visual assertion after all. But this screenshot, alone, is not enough. What we do is compare this to a baseline screenshot that we took in a previous run of the test. If the algorithm comparing the current screenshot to the baseline one tells us that they’re the same, then the assertion passes, otherwise it fails.

Comparing a screenshot against a baseline

And how do we generate the baseline screenshot? In a two-phase process:

We define the screenshots that are created by the assertion of the first test as the baseline screenshots. The tester manually checks each one of those baseline screenshots.

Each time the test fails it could be because there is a bug in the software, but it could also be because the software changed and this is the new baseline. The tester in this case manually checks each one of those new screenshots and declares them as the new baseline.
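The two phases above can be sketched as a small state machine. This is an illustrative model only: `BaselineStore` and its methods are hypothetical names, screenshots are plain bytes, and comparison is exact equality, which is much cruder than what a real visual testing tool does.

```python
from enum import Enum
from typing import Dict


class Result(Enum):
    NEW = "new"        # no approved baseline yet; a tester must review it
    PASSED = "passed"  # identical to the approved baseline
    FAILED = "failed"  # differs: either a bug or an intended change


class BaselineStore:
    """Hypothetical in-memory baseline store, keyed by checkpoint name."""

    def __init__(self) -> None:
        self._approved: Dict[str, bytes] = {}
        self._pending: Dict[str, bytes] = {}

    def check(self, name: str, screenshot: bytes) -> Result:
        baseline = self._approved.get(name)
        if baseline is None:
            # Phase 1: the first run produces the candidate baseline.
            self._pending[name] = screenshot
            return Result.NEW
        if screenshot == baseline:
            return Result.PASSED
        # Phase 2: keep the new screenshot so a tester can reject or accept it.
        self._pending[name] = screenshot
        return Result.FAILED

    def approve(self, name: str) -> None:
        """The tester reviewed the pending screenshot and accepted it."""
        self._approved[name] = self._pending.pop(name)
```

A failed check is not final in this model: calling `approve` on it turns the new screenshot into the baseline for later runs.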

How does visual comparison work?

Visually comparing two screenshots seems easy, but it’s not. Theoretically, it should be as easy as comparing pixels, but in practice different operating systems, browsers, and even different versions of the same browser generate slightly different images. Moreover, what if the screenshot includes the current time? You would need to check everything but that region of the screen.
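A naive comparison that addresses only these two concerns might look like the sketch below. It assumes images are nested lists of RGB tuples; the `ignore` regions and the per-channel `tolerance` are illustrative parameters, and this is not how Applitools Eyes or any specific tool compares images.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]        # (red, green, blue)
Image = List[List[Pixel]]           # rows of pixels
Region = Tuple[int, int, int, int]  # left, top, right, bottom (exclusive)


def in_any_region(x: int, y: int, regions: Sequence[Region]) -> bool:
    return any(l <= x < r and t <= y < b for l, t, r, b in regions)


def images_match(
    current: Image,
    baseline: Image,
    ignore: Sequence[Region] = (),
    tolerance: int = 0,
) -> bool:
    """Compare pixel by pixel, skipping ignored regions (e.g. a clock)."""
    if len(current) != len(baseline):
        return False
    for y, (row_c, row_b) in enumerate(zip(current, baseline)):
        if len(row_c) != len(row_b):
            return False
        for x, (pc, pb) in enumerate(zip(row_c, row_b)):
            if in_any_region(x, y, ignore):
                continue
            # Allow small per-channel differences, such as rendering noise.
            if any(abs(a - b) > tolerance for a, b in zip(pc, pb)):
                return False
    return True
```

Even with a tolerance, a one-pixel layout shift would fail every pixel after it, which is one reason pure pixel comparison produces so many false positives.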

There are even more concerns, which we should outline in a future blog post. Fortunately, tools like Applitools Eyes hide these concerns from us.

Using Visual Assertions in Functional Tests

Now that we understand how functional and visual testing work, we can see that there’s no difference between functional and visual tests in how they are structured. The only difference is in what they test and in how they implement the assertions:

Functional assertions check each and every control on the page to ensure that they have the correct value

Visual assertions compare a screenshot of the page against a manually verified baseline screenshot.

Visual assertions seem pretty easy to use. Just manually verify the first screenshot, and just take screenshots of the pages after the actions in the test. No need to check each control, determine the id of the control, or ask the developers to add ids to controls. Just take a screenshot and let visual testing frameworks like WebDriverCSS or Applitools Eyes compare them.

And we’ve seen how functional assertions are hard. But what if we can use visual assertions in functional tests? Something like this:

Load a specific set of application data

Start the application

Execute a set of actions on the UI (or the REST API!) of the application

Take a screenshot to verify that the data is correct

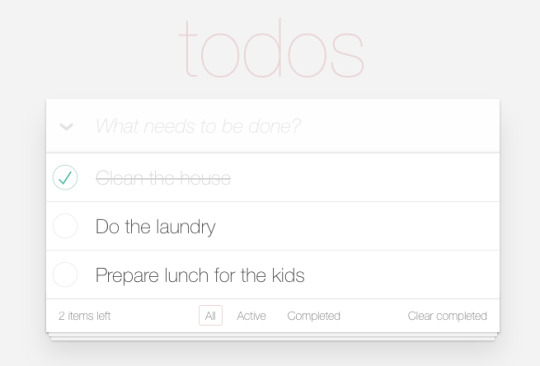

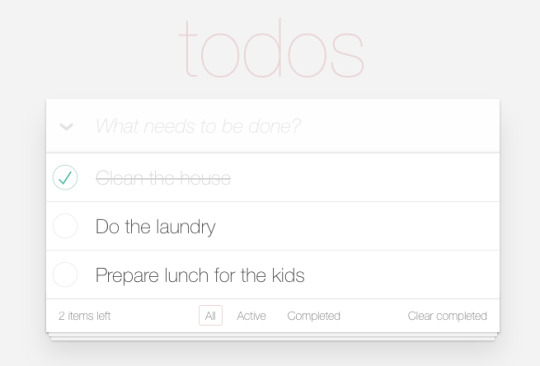

Think of the todos page after checking the todo item:

With one screenshot, we can check that the todo’s checkbox was checked (ouch, too many “check”-s), verify that the other todo items weren’t, that the “2 items left” text is correct, that the todo item is struck out, and probably other stuff which we didn’t even notice.
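As a toy illustration of that point, the sketch below replaces the screenshot with a text rendering of the whole page and makes a single comparison against a stored baseline. `render` and `visual_assert` are hypothetical helpers made up for this example; a real tool would capture and compare actual images.

```python
from typing import Dict, List, Tuple


def render(todos: List[Tuple[str, bool]]) -> str:
    """Hypothetical 'screenshot': a text rendering of the entire page."""
    lines = [f"[{'x' if done else ' '}] {text}" for text, done in todos]
    left = sum(1 for _, done in todos if not done)
    lines.append(f"{left} items left")
    return "\n".join(lines)


def visual_assert(name: str, screen: str, baselines: Dict[str, str]) -> bool:
    """One assertion covering everything that is visible on the page."""
    if name not in baselines:
        baselines[name] = screen  # first run: becomes the baseline to review
        return True
    return screen == baselines[name]


baselines: Dict[str, str] = {}
page = [("Buy milk", True), ("Write tests", False), ("Ship it", False)]
assert visual_assert("after-check", render(page), baselines)

# A regression we never wrote an explicit assertion for is still caught,
# because the rendering of the second item and the footer both change.
regressed = [("Buy milk", True), ("Write tests", True), ("Ship it", False)]
assert not visual_assert("after-check", render(regressed), baselines)
```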

Many frontend developers use this kind of “testing against a baseline”, but what they test is the HTML of the components, and not how they look. The idea is similar, though. The difference is that it is much nicer to check the difference between two images than to read a textual diff of HTML.
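For comparison, this is roughly what the textual variant looks like. It is a sketch using Python’s standard `difflib`, and the HTML fragments are invented for the example.

```python
import difflib
from typing import List


def html_diff(baseline: str, current: str) -> List[str]:
    """Return a unified diff between baseline and current markup."""
    return list(
        difflib.unified_diff(
            baseline.splitlines(),
            current.splitlines(),
            fromfile="baseline",
            tofile="current",
            lineterm="",
        )
    )


BASELINE = """<li class="todo">
  <input type="checkbox">
  <label>Buy milk</label>
</li>"""

CURRENT = """<li class="todo completed">
  <input type="checkbox" checked>
  <label>Buy milk</label>
</li>"""

for line in html_diff(BASELINE, CURRENT):
    print(line)
```

The diff is exact, but the reviewer has to work out from changed attributes what the user would actually see.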

Comparing a screenshot against a baseline

Pleasant side benefit of using visual assertions: you get to check the visual look of your application for free!

Summary

In this agile world, tests need to be written quickly and efficiently, and need to test as many things as possible. There are a variety of tools to do this. One of these is using visual testing assertions in your regular tests.

About the Author: 30 years of experience have not dulled the fascination Gil Tayar has with software development. From the olden days of DOS, to the contemporary world of Software Testing, Gil was, is, and always will be, a software developer. He has in the past co-founded WebCollage, survived the bubble collapse of 2000, and worked on various big cloudy projects at Wix. His current passion is figuring out how to test software, a passion which he has turned into his main job as Evangelist and Senior Architect at Applitools. He has religiously tested all his software, from the early days as a junior software developer to the current days at Applitools, where he develops tests for software that tests software, which is almost one meta layer too many for him. In his private life, he is a dad to two lovely kids (and a cat), an avid reader of Science Fiction, (he counts Samuel Delany, Robert Silverberg, and Robert Heinlein as favorites) and a passionate film buff. (Stanley Kubrick, Lars Von Trier, David Cronenberg, anybody?) Unfortunately for him, he hasn’t really answered the big question of his life - he still doesn't know whether static languages or dynamic languages are best.

Embracing Agility: A Practical Approach for Software Testers

Guest post by Gil Tayar, Senior Architect at Applitools

The Agile Manifesto is 16 years old. On its home page, it defines a very simple set of values:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

These four simple values have sparked a revolution in how we write and deliver software. I like to call them the “cut the bulls**t” values. To understand how these values affect testers, let’s dispense with the ceremony, let’s dispense with lengthy design documents, and, yes, lengthy test plan documents, and focus on understanding the core — writing the software.

And the way to do that is to deliver working software from the start (this is the “Working software over comprehensive documentation” part of the manifesto). The agile manifesto asserts that the product needs to work from the beginning, and not wait for a lengthy and cumbersome “integration phase” when all the work on the product is integrated together. Instead of many team silos, where everybody is working on one piece of the puzzle (and the architect is the only one aware of how everything will be pieced together), we start with a small team that builds an initial piece of working software (MVP, or minimum viable product) and gradually grows it into a bigger product with a bigger team.

This team includes everybody: the customer, product manager, developers, and testers (these are the “Individuals and interactions” and “Customer collaboration” values of the agile manifesto). This integrated team delivers a minimal version of the product in a very short time, and… waits. Waits for what? For the customer to start working with the product and figure out the next step for it. In other words, agile teams don’t build a specification for the whole product, but rather start small and incrementally determine what the product should look like (this is the “Responding to change over following a plan” part).

Yeah, yeah, yeah. Bla, bla, bla. I got that. But how does it affect my work as a software tester? It does. Immensely. I have one sentence for you:

Embrace agility: shift left and shift right.

But, unfortunately, to explain this, and to see how this affects your work as a software tester, I need a bit more explanation, so hang in there.

Testing in the Days of “Release Early, Release Often”

The agile manifesto also says: “Deliver working software frequently”. And today’s software development community has taken it to the extreme. Some companies release every week, some every day, some don’t even have the concept of “release”. They just release anytime a feature is ready.

Moreover, agility forced backend teams to come up with an alternative to the “monolithic backend”: the concept of “microservices”. This concept enables large companies to break up their backend into many small services, each one responsible for a small piece of the puzzle, and to deploy each microservice independently of the others.

These two things mean that in many companies there is no “release”. It’s a fluid set of changes that just happen to the product in an ongoing process.

But if there is no release, how will software testers test? I have one sentence for you:

Embrace agility: shift left and shift right.

Shifting Left

What does shifting left mean?

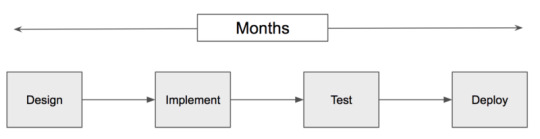

The software development process, in the days before it became agile, looked like this:

The software development model before the agile revolution

Software testers used to be at the right of this process. They used to be the gatekeepers that bar the gate to releases that are not qualified for deployment.

“This release shall not pass!”

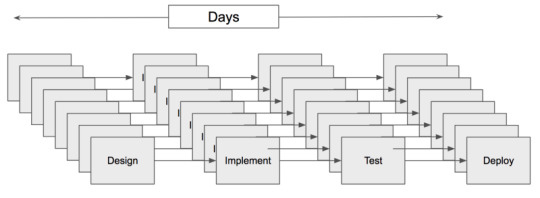

This process actually hasn’t changed much in the days of agile, except that now we have many of these pipelines in parallel, and each takes days, and not months:

The agile software development model

But how can a software tester be a gatekeeper in this model? It’s practically impossible. Once the implementation is done, there is no “time” for testing, as the team has gone on to the next thing it needs to do. In the agile model, delivering software incrementally is paramount. Time for testing is not included.

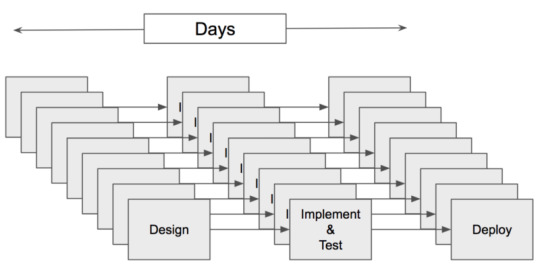

To solve this, the tester needs to shift the testing left. It needs to be part of the implementation, and not a separate step:

Shifting left

This has many practical consequences, consequences that affect you as a software tester:

Software developers need to test too

Shifting left means that a lot of the burden of testing needs to be dealt with by the software developer and not by the tester. A tester should not need to check that a certain form field accepts only numeric values. A tester should not need to check that the value in this table is correct. All those boring tests that occupy a lot of the tester’s time need to sit “close to the code”, and should not be dealt with by the tester. The software developer can use unit testing to perform those boring checks in a much faster and more reliable manner than the tester, and free up the tester’s time for the more interesting and holistic tests, whether manual or automated.

Automated tester tests need to sit close to the code too

As discussed above, agile software is now deployed piecemeal, and not in one big deployment bang. This means that the software tester’s tests need to test not just the whole big product, but also parts of it.

This means that if the microservice your team is writing is a web service that communicates via a REST API, then your tests should check that REST API in isolation from the rest of the product. And if your team is building UI components that other parts of the company use, those components should be tested in isolation from the rest of the product.

In other words, software testers should not only do end to end tests, but should also check parts of the software, and not the whole thing. Which parts? A good rule of thumb is that if your team is building components and microservices that are part of a bigger whole, you should test those components in isolation and not only as parts of the bigger whole.
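Testing a REST API in isolation can be sketched with nothing but the Python standard library. The `/todos` endpoint, its payload, and `TodoHandler` are all hypothetical; the point is only that the test starts the service by itself, on a free local port, with no other part of the product running.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class TodoHandler(BaseHTTPRequestHandler):
    """Hypothetical microservice: GET /todos returns a fixed JSON list."""

    def do_GET(self) -> None:
        if self.path == "/todos":
            body = json.dumps([{"text": "Buy milk", "done": False}]).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args) -> None:
        pass  # keep test output quiet


def get_from_isolated_service(path: str):
    """Start only this service, call it once, and shut it down again."""
    server = HTTPServer(("127.0.0.1", 0), TodoHandler)  # port 0: any free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        conn = http.client.HTTPConnection(
            "127.0.0.1", server.server_address[1], timeout=5
        )
        conn.request("GET", path)
        response = conn.getresponse()
        status, body = response.status, response.read()
        conn.close()
        return status, body
    finally:
        server.shutdown()
        server.server_close()


status, body = get_from_isolated_service("/todos")
assert status == 200
assert json.loads(body) == [{"text": "Buy milk", "done": False}]
```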

Automated tester tests need to be part of the CI process

Software developers that operate as part of a true agile process frequently use CI tools to help them cope with the fantastic pace of software development. For software developers, the CI tool is the gatekeeper for their software change.

What is a CI tool? CI means Continuous Integration, and a CI tool is a tool that listens to changes in the source code, builds it, runs the tests, and, if the tests pass, marks the changes “green”. If they fail, it usually emails the person who submitted the changes. The CI tool helps the software developer ensure that all the tests they write are executed for those specific changes. Examples of CI tools are Jenkins, TeamCity, Travis, and Bamboo.

These are fantastic tools, and are definitely part of the shift-left movement, as the tests run for every code change. A software tester should ensure that not only the software developer tests run, but also their own tests. A code change should be green only if both the developer tests and the tester tests pass.
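The gatekeeping rule can be expressed as a toy model. This is not how Jenkins, TeamCity, or any real CI tool is configured; `run_ci`, the step names, and the `notify` callback are assumptions made for the sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class BuildResult:
    change_id: str
    green: bool
    failed_steps: List[str]


def run_ci(
    change_id: str,
    author: str,
    steps: Dict[str, Callable[[], bool]],
    notify: Callable[[str, str], None],
) -> BuildResult:
    """Run every step for a change; it is green only if all of them pass."""
    failed = [name for name, step in steps.items() if not step()]
    if failed:
        # Stand-in for the email a CI tool sends to whoever submitted the change.
        notify(author, f"Change {change_id} is red: {', '.join(failed)}")
    return BuildResult(change_id, green=not failed, failed_steps=failed)


sent = []
result = run_ci(
    "abc123",
    "dev@example.com",
    {
        "build": lambda: True,
        "developer tests": lambda: True,
        "tester tests": lambda: False,  # tester tests gate the change too
    },
    notify=lambda to, message: sent.append((to, message)),
)
assert not result.green
assert result.failed_steps == ["tester tests"]
```

Registering the tester tests as just another step is what makes the CI tool, rather than a person, the gatekeeper.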

The three rules above are, for me, the essence of the shift-left movement. To be agile, automated tests should not be a separate stage of the development, but should be part and parcel of it. And to implement this should be the responsibility of both the tester and the developer.

And this responsibility brings us to what is for me, the holy grail of the shift-left movement:

Automated tester tests need to be part of the source code

There should be no difference between where the developer tests reside and where the tester tests reside. They should be part of the same source code, and as we said above, run as part of the same CI process. I will dedicate a blog post in the near future to this important idea (“Speaking the language of frontend developers”).

Remember, in an agile process, the tests become as important and integral as the development itself. Embrace agility.

Shifting Right

But if the shift-left movement means that a tester does not check the whole product holistically, then does that mean that it does not need to be checked as a whole?

Of course it has to be checked as a whole. The big question is when? If there is no release, then when do we test the whole product? The answer may surprise you and is part of the shift-right movement.

The reason for the shift-right movement

And the answer is — whenever you want to. Once there is no release, and software changes keep pouring out of the pipe, and they are well-tested due to the shift-left movement, then you can do E2E testing whenever you want to.

Run your end to end tests continuously

If you can run your end to end tests whenever you want, then just run them continuously. Yes, source code keeps pouring in from the left, and deployments keep pouring out from the right, but that doesn’t mean that we should give up and not test. It actually means that we should run them all the time.

No, we will never be testing what is exactly going to be in production in an hour. We will always be testing an approximation. But if this run of e2e tests doesn’t uncover the bug, the next one will. And, yes, it will be too late, because that bug is already in production, but that’s OK — because we’re agile, fixing the bug also takes a very short amount of time.

In an agile world, it’s OK for bugs to sometimes reach production. This is what is meant by Facebook when they say “Move fast and break things”. While an extreme sentence, it does hold the important truth that trying to stop all bugs from reaching production doesn’t help development, it harms it.

Run your end to end tests in production

Some companies take this to the extreme, and have software testers test the full product not in a testing or staging environment, but actually in production, after it’s released! (this is why it’s called “shift right”). And incredibly enough, this is not as dumb as it sounds. Testing in production enables us to:

uncover bugs that are due to bad data that can only be found in production

uncover bugs that are due to the scale and amount of people using the application in production

uncover bugs that are due to the deployment system used for production

uncover bugs in the infrastructure (servers, networks, database, etc) used in production

Embracing Agility

So yes, embrace agility. Agility changes and amplifies the role of the software tester. The shift left movement encourages the software developer to take on the role of the tester, and encourages the software tester to participate in the software development process, while the shift right movement encourages the software tester to take on some of the responsibility of monitoring the application.

And this is a good thing: the tester should not be a gatekeeper, a judge that is aloof and separate from the software development and deployment process. The tester should not be the bad guy saying, “thou shalt not pass” and delaying the release of the product.

What the tester should be is an integral part of the software development process. And embracing agility allows them to do that.

Yes, But How?

Embrace agility. Shift left:

Software developers need to test too: shifting tests to the left means that software developers should test their code too. Educate your consumers. Work with them to make this happen. Fortunately, ongoing education is moving software developers to this understanding.

Automated tester tests need to sit close to the code too: stop thinking as gatekeepers and start thinking as developers. Test parts of the code and not just the final product.

Automated tester tests need to be part of the CI process: the CI tool is the new gatekeeper, so your tests should be part of the CI.

Automated tester tests need to be part of the source code: if you can, have your tests be part of the source code, and not a separate piece.

Embrace agility. Shift right:

Run your end to end tests continuously: there is no release. Continuously test the “current” product, no matter whether it is not yet in production or already there.

Run your end to end tests in production: there is no release. The best place to run your tests is in production, and you will get a ton of added benefits from it.

What’s in it for Applitools?

In the end, I am a developer advocate for Applitools, a company that builds a Visual Testing platform for testers and developers. So, what’s in this movement for Applitools?

Plenty. We believe visual testing tools are crucial for shifting left and shifting right. But that’s for another future blog post.

About the Author: 30 years of experience have not dulled the fascination Gil Tayar has with software development. From the olden days of DOS, to the contemporary world of Software Testing, Gil was, is, and always will be, a software developer. He has in the past co-founded WebCollage, survived the bubble collapse of 2000, and worked on various big cloudy projects at Wix. His current passion is figuring out how to test software, a passion which he has turned into his main job as Evangelist and Senior Architect at Applitools. He has religiously tested all his software, from the early days as a junior software developer to the current days at Applitools, where he develops tests for software that tests software, which is almost one meta layer too many for him. In his private life, he is a dad to two lovely kids (and a cat), an avid reader of Science Fiction, (he counts Samuel Delany, Robert Silverberg, and Robert Heinlein as favorites) and a passionate film buff. (Stanley Kubrick, Lars Von Trier, David Cronenberg, anybody?) Unfortunately for him, he hasn’t really answered the big question of his life - he still doesn't know whether static languages or dynamic languages are best.

[Webinar Recording] “Testing is Not a 9 To 5 Job" - Talk by Industry Executive Mike Lyles

Find your hire power: in this webinar, industry executive Mike Lyles evaluated extracurricular activities and practices that will enable you to grow from a good tester to a great tester.

Being an expert tester is no different. While the art and craft of testing and being a thinking tester is something that is built within you, simply going to work every day and being a tester is not always enough.

Each of us can become “gold medal testers” by practicing, studying, refining our skills, and building our craft.

Listen to this webinar, and enjoy these key takeaways:

Inputs from testing experts on how they improve their skills

Online training and materials, which should be studied

How to leverage social media to interact with the testing community

Contributions you can make to the testing community to build your name as a leading test engineer

Mike's slide-deck can be found here -- and you can watch the full recording here:

BONUS: Mike prepared a blog post where he answered several questions asked during the webinar; you can read it here.

Staying Ahead of the Curve: 4 Trends Changing the Digital Transformation Game for CIOs

Guest post by Gil Sever -- Applitools CEO and Co-founder

For companies of all sizes across all verticals, digital transformation efforts now weigh heavily on the business, and CIOs bear much of the burden of shepherding the organization forward. What are the trends that are reshaping the role of the CIO? How can technology leaders stay ahead of the curve? A recent Enterprisers Project article by Applitools CEO Gil Sever dives deeper into four growing trends for 2018. Here’s a quick synopsis of the takeaways:

Customer experience has always been important when it comes to digital transformations, but CIOs are now tasked with taking this to a new level of thinking about the customer in every development decision by incorporating Application Visual Management (AVM) strategies. Merely understanding your customer is no longer enough and bringing the customer perspective into every phase is now a requirement to achieve true success.

Artificial intelligence has an emerging role as a key business driver. AI and Big Data are no longer fringe tactics for making business moves; they are quickly becoming core functions that drive day-to-day organizational decisions. Visual AI is a prime example: a critical and practical technology innovation focused on UI integrity.

“Fail fast” is the digital transformation battle cry. For years, businesses have focused on minimizing mistakes, even at the cost of moving slowly. In today's hyper-competitive business environment, speed is the name of the game, and being fast often means being first. When second place might mean missing out completely, speed is more important than ever before.

Continuously test, learn, and pivot. CIOs are tapping into Agile methodologies to incorporate more testing into their development pipelines, so that decisions are better informed and better for the customer.

2018 is the year when we need to put the customer experience first and tap into the emergence of Application Visual Management (AVM). How? By using customer behavior metrics to inform business decisions, putting the product in your customers’ hands more quickly, testing your applications more thoroughly, and leveraging Visual AI and other automated techniques. With these in place, being “customer obsessed” becomes a realistic goal that helps drive business success.

To read more about these trends and how you can develop a game plan that will help you gain a competitive edge through these means, including Application Visual Management and Visual AI, check out the full article here.

[Webinar Recording] The Shifting Landscape of Mobile Test Automation and the Future of Appium - presented by Jonathan Lipps

Watch Jonathan Lipps' in-depth overview of the mobile test automation landscape, past, present, and future, including an analysis of current frameworks and what's in store for Appium.

Jonathan Lipps

Five years ago, mobile automation was in its infancy. None of the tools that enabled testing of mobile apps was very comprehensive, but on the other hand, there were a lot of open source options. Nowadays, the players and the playing field are different, and Appium has come to dominate the open source mobile testing scene.

In this talk, expert Jonathan Lipps gives an exposition of the mobile testing landscape. He talks about what writing tests looks like with each of the current tools, and discusses when each might be a good (or bad) choice. In addition, he shares his reflections on increasingly popular modes of testing beyond functional testing (like visual testing, for example), and on the challenges that might lie ahead for testers.

"There’s a lot at stake in how we invest in mobile testing, and this talk will be an exhortation for everyone involved in the industry to participate in shaping a better and more stable future" -- Jonathan Lipps

Key takeaways from Jonathan's session:

History of mobile automation

In-depth overview of the current technology and trends

Set of factors to use when picking the technology that's right for you

All about Appium's vision for the future

Jonathan's slide-deck can be found here -- and you can watch the full recording here:

[Embedded YouTube video]

You are invited to subscribe to Jonathan's AppiumPro Newsletter, where he shares advanced tips, tricks and best practices about Appium and mobile test automation - with code examples!

Testing for Digital Transformation

Guest post by Justin Rohrman

While everyone knows that printed newspaper sales are dwindling, the interesting story is in online subscriptions, which are exploding. Electronic Medical Records (EMR) are becoming the new normal, eliminating lookup, replacement, and a whole lot of printing. The Plain Old Telephone System (POTS), which relies on phone poles and a separate physical wire into the house, is falling into disrepair, but anyone under 30 is unlikely to notice, because they do not have a physical landline phone.

The digital transformation is in full swing and the consequences are everywhere.

Can software testers help smooth digital transformations with Visual Testing?

I think so.

Quick Response to Customer Needs

Several years ago I was working on a software product used by nurses and anaesthesiologists. Our software was meant to be a bridge between the world of paper charting and a completely paperless workflow. Our initial offering was a digital pen solution. Doctors would use a special electronic pen to write on forms that looked no different from what they had used daily for years. At the end of the day, a doctor or nurse would sync the pen to a computer using a USB cable, and all of those forms were magically transferred to our dashboard. The flow from documenting with a pen to having digital patient information was supposed to be as close as possible to what the doctors normally did.

The next stage in this product was something completely new, an iPad app.

The further a software company moves from a workflow people have been using for the last 20 years, the more problems they will encounter. Starting a transformation with a visual testing strategy can help software makers respond to customer needs before the customers realize they have them. Visual testing tools provide instant feedback on the look and feel of your product with every build. Rather than building a new feature and waiting for the market to give feedback, you can build a prototype, get user experience feedback from a customer champion, and make that your visual baseline.

Cross Platform Delivery

Before a digital transformation, companies might deal in one medium. For the doctors we were supporting, that was pen and paper. Our product, in contrast, ran in a few versions of Internet Explorer, as well as on 5 or 6 mobile platforms. Using visual testing, we could have had a better feel for consistency across platforms and devices.

A visual testing strategy simplifies the cross platform testing problem. Start with a complete scan of the elements on each page in the browser you care about the most. This baseline gives you a reference point for every other place you need to test, and is also a way to explore the full feature set in your product. Once you have that baseline, you can run your visual testing suite in Continuous Integration and discover how each platform and operating system (iOS, Android, iPad, iPad mini, Google tablets, and a variety of mobile phones) differs. Your development team will discover that an iPhone 6 running iOS 8 is missing a submit button that displays perfectly everywhere else, and will do so before your customers do.
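To make the baseline idea a little more concrete, here is a minimal sketch of comparing each platform against the baseline scan. It is only an illustration: real visual testing tools compare screenshots, while this sketch uses plain element names, and the data, `missingFromBaseline` and `comparePlatforms` are all hypothetical.

```javascript
// Hypothetical data: which UI elements a scan found on each platform.
// A real visual testing tool compares screenshots; element names are
// used here only to keep the sketch self-contained.
const baseline = ["logo", "patient-form", "submit-button"];

const scans = {
  "iPad / iOS 8": ["logo", "patient-form", "submit-button"],
  "iPhone 6 / iOS 8": ["logo", "patient-form"],
};

// Return the baseline elements that a platform scan is missing.
function missingFromBaseline(baselineElements, scannedElements) {
  const seen = new Set(scannedElements);
  return baselineElements.filter((name) => !seen.has(name));
}

// Build a report that maps each platform to its missing elements.
function comparePlatforms(baselineElements, platformScans) {
  const report = {};
  for (const [platform, elements] of Object.entries(platformScans)) {
    report[platform] = missingFromBaseline(baselineElements, elements);
  }
  return report;
}

const report = comparePlatforms(baseline, scans);
console.log(report);
```

In this made-up data set, the report flags the submit button as missing on the iPhone 6 entry and nothing on the iPad entry, which is the kind of early alert described above.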

Dealing with Humans

Software customers tend to be change averse. Take something that might be clunky, but has worked for them for the past 20 years, and turn it into software. What usually happens here is shelf-ware: products that get no use because people either couldn't figure out how to use them or found the new software more painful than what they had before. This pain can come from software bugs, from bad design, or from familiar ways of working being completely absent from the new software solution. Consistency is one of the most important factors for a successful digital transformation. A visual testing strategy will give your development team an early alert on how your products differ between browsers and platforms. That means an easier digital transformation for your customers.

HAPPY TESTING

About the author:

Justin has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving on the Association for Software Testing Board of Directors as President, helping to facilitate and develop projects like BBST, WHOSE, and the annual conference CAST.

Adding App Visual Management to Mobile Test Automation

Guest post by Gil Sever - Applitools CEO and Co-founder

Today’s mobile app development cycle demands short sprints to enable faster deployments. Time allowed for development is shrinking, with even less time for testing. Testing and development must also be scalable as teams face high pressure deadlines, while focusing on quality throughout the pipeline.

These dynamics are very well known, and the industry now includes a variety of quality offerings to ensure mobile apps perform well while delivering value to the customer. However, as testing and QA teams increasingly test against varying device types, OS versions, phone sizes and languages, visual testing platforms are emerging to help ensure mobile apps look great and provide the best customer experience possible. This new dimension of mobile testing addresses the frustration and impatience end users feel with mobile apps that are badly formatted, wrongly sized, or otherwise unable to present a visually pleasing experience.

Consider These Visual Test Scenarios

For a mobile app, testers and QA teams must determine how they are going to test each case. For example, apps today often support multiple languages. Visual testing helps validate the different local languages to make sure they look correct each time within the mobile app. It ensures that the design fully supports multiple languages by accounting for right-to-left reading in languages such as Arabic or Hebrew, as well as left-to-right like the Western languages. App designs also must support varying lengths of text strings for different languages. If testing is done only in the native language, this can create a visually unpleasant and unusable application for anyone who uses it in a different language.

What also makes visual testing vital is that mobile manufacturers do many things differently. Visual testing can help account for the visual presentation of a single app across each OS version, along with varying resolutions, screen sizes and types of devices, to name a few. If one of these elements is overlooked, glaring issues are going to surface that will negatively impact the user experience. For example, it is easy to ignore the landscape orientation on a mobile device, because portrait is more commonly used and tested. Landscape is overlooked surprisingly often, and some apps are completely unusable in it. This won’t fly in today’s customer experience-driven world.

What are the solutions?

Theoretically, a developer could try a single-threaded manual approach to visual testing, but the amount of effort required in a short time frame makes that neither effective nor efficient, nor fair to the tester. Another option is a multi-threaded manual approach, but that is not going to scale with modern application release demands. This is where automation comes into play, and today there are options for mobile web and native apps. For example, there are visual testing platforms that automatically validate the look, feel and user experience of an app while allowing the tester to run their test locally and scale on a cloud service. This is a developer’s dream: to gain immediate feedback on all code changes, and to confirm that they don’t adversely impact the overall application in terms of “look and feel.”

These solutions, while new to the industry, are designed to integrate with existing automated test tools and platforms, rather than introducing new tests that are not integrated to the overall quality and performance of the application.

Bringing it All Together

To get started with visual testing and monitoring for iOS, for example, the tester would install Xcode, command line tools, and create a simulator or connect a real device. For Android, they would use the Android SDK and create an emulator or connect a real device and enable debugging mode. Then they would install the automation testing framework for visual testing.

The new setup will check a mobile web app to ensure that it functions and looks correct on every single release, on every single responsive design, and it will all be available in a visual format. Some visual testing frameworks come equipped with a variety of SDKs and libraries. The tester can then set up a full-page screenshot, which is sent to a cloud service to run the test. Now, any time a change is made to the application and the visual test runs, it captures the page to validate that it looks correct.

New mobile automation tools with a dashboard allow you to gain visual assertion of where and how the design, copy and images are laid out – and confirm that everything is in the right place. There are dashboards that offer radar features that highlight all the discrepancies that are caught during testing. Then, the tester is able to drill down to the baseline of the visual anomaly and find the issue. For example, perhaps it was caused by a spelling mistake. This automated process aims to catch errors that would be easily overlooked or difficult to find during manual testing. With the technology and tools in place to make this type of testing possible, the next step is to scale.

Some visual test automation platforms can run entirely on a local computer with multiple devices and emulators connected. However, that would require a substantial machine, or multiple machines, to work. Alternatively, the tester can leverage an internal device grid or cloud test services. Cloud services are the future of visual test automation and can minimize or even eliminate the need for running a local test. Instead, everything will run in the cloud. That will offer ease of setup and integration, because essentially that is already done for the tester.

Today, cloud services can be run in parallel with the visual test, offering varying levels of analytics (depending on the service selected). Some cloud services integrate easily with CI services to allow the tester to create a dictionary of different device and OS versions, split them into different threads, and test them, whereas this would be very expensive if done locally.
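The "dictionary of devices split into threads" idea can be sketched roughly as follows. This is not the API of any particular cloud service: the device list, `splitIntoBatches`, `runVisualTest` and `runAll` are hypothetical names, and `runVisualTest` is a stand-in for whatever call actually runs a visual test on one device.

```javascript
// Hypothetical device/OS matrix; the entries are examples only.
const deviceMatrix = [
  { device: "iPhone 6", os: "iOS 8" },
  { device: "iPad mini", os: "iOS 9" },
  { device: "Nexus 5", os: "Android 6" },
  { device: "Galaxy S7", os: "Android 7" },
  { device: "Pixel", os: "Android 8" },
];

// Distribute targets round-robin across a fixed number of workers.
function splitIntoBatches(targets, workerCount) {
  if (!Number.isInteger(workerCount) || workerCount < 1) {
    throw new RangeError("workerCount must be a positive integer");
  }
  const batchCount = Math.min(workerCount, targets.length);
  const batches = Array.from({ length: batchCount }, () => []);
  targets.forEach((target, i) => batches[i % batchCount].push(target));
  return batches;
}

// Stand-in for a real visual test run on one device (always "passes" here).
async function runVisualTest(target) {
  return { ...target, passed: true };
}

// Run the batches concurrently; targets inside a batch run one after another.
async function runAll(targets, workerCount) {
  const batches = splitIntoBatches(targets, workerCount);
  const results = await Promise.all(
    batches.map(async (batch) => {
      const batchResults = [];
      for (const target of batch) {
        batchResults.push(await runVisualTest(target));
      }
      return batchResults;
    })
  );
  return results.flat();
}

// Usage: two parallel workers, one result per target.
runAll(deviceMatrix, 2).then((results) => console.log(results.length));
```

Round-robin distribution is only one simple choice; a real service might balance batches by expected test duration instead.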

As automation helps visual testing teams scale their efforts to meet demanding release pipelines, more testing functionality will continue to shift to the cloud. As testing time decreases, teams must ensure the visual integrity of the mobile app. They must confirm that it delivers an aesthetically pleasing experience to the end user in any environment, language or device. This emerging approach demonstrates the importance of visual testing, as automated validation that the application looks and works correctly becomes more mainstream.

--HAPPY TESTING--

Want to learn more about mobile test automation? Watch this exclusive expert session with Appium Architect and Project Lead Jonathan Lipps, as he walked us through the state of mobile testing and the future of Appium.

The Next Phase Of The Applitools’ Journey

The Rise of Application Visual Management Category (AVM) and Our Enterprise-Ready Applitools Eyes V10

Guest post by Gil Sever, Applitools CEO and Co-founder

When Applitools first launched in 2013, we were united behind a shared vision to make it easier for software developers to confidently release their products with visually perfect UI. In those days, there was a fairly robust ecosystem of open-source and commercial tools helping developers manage applications from a functional standpoint, but the process of testing, monitoring, and managing user experience was either completely manual or totally absent from the development cycle. UI issues often went unnoticed until discovered in production by customers. Not an ideal situation for anyone, as it led to slower development times and a poorer overall application experience.

With this vision of Visual Testing tools in mind, we put together a great team of talented AI visionaries who could push the boundaries of what was previously thought possible in UI management. By leveraging the expertise gathered from decades of experience, we built proprietary Visual AI technology, and trained it with the task of automating the testing of visual UI. Together with innovative customers, we built software that could understand UI visually, understanding how colors worked together, if an element was blocking another element, if items were in the right location, or if a functional component of an application actually appeared correctly in the UI. Moreover, we could do this at scale, removing a critical bottleneck in the test automation chain.

Ultimately, we were fortunate enough to garner the support of hundreds, then thousands, and now tens of thousands of users who innovated in and around the possibilities of our Visual AI technology. Over these past 4 years, we have been working tirelessly to improve our Visual AI platform to make it the best automated UI testing solution in the market. We’ve watched with pride as our technology has changed the way organizations develop, test, monitor, and release software, and have watched as organizations fundamentally changed the software development landscape using Applitools as a key part of their stack. As you can imagine, it’s been a wild (and enjoyable) ride.

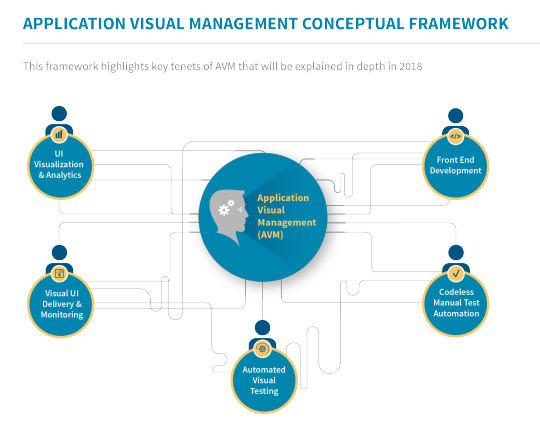

That brings us to today. A whole new category is being created right in front of our eyes. Across businesses of all sizes and verticals we are witnessing an explosion in the recognition of UI as “mission critical” to organizational success and a key tenet of a successful digital transformation. From development teams to the C-suite, companies are making UI a top priority. We found that there is no category that defines what we make possible at Applitools – so we’re taking a shot at defining it together with test automation engineers, manual testers, front-end developers, developer team leads, product people, business people, and DevOps.

Which is why I am excited to announce Application Visual Management (AVM). AVM outlines a new technology category that integrates automated visual management into software development workflows to increase test coverage, accuracy and frequency of user interface releases, and user experience monitoring. AVM is built around what we see as mega-trends in the industry, trends that necessitate the convergence of UI development, production, testing, monitoring, along with Visual AI to deliver a seamless experience across all environments, in real time, even as changes are made both within and outside your own organization. Furthermore, as the velocity of software development increases to meet ever-changing business demands, AVM makes it possible for organizations to stay united across teams and tackle visual issues and UI deficiencies head-on at the speed of CI/CD.

We at Applitools see UI as the “front door” of your digital transformation and believe the way your users experience your product at a visual level is essential to your overall success. AVM is uniquely positioned to help organizations leverage the power, consistency and dependability of Visual AI to make your products better, faster. With AVM, you can reduce costly rollbacks and dev changes, and gain a competitive advantage in your market by releasing more complete and well-tested products at a greater rate of speed.

Beyond this exciting announcement of a new category, we also are thrilled to introduce the latest iteration of our technology – Applitools Eyes Version 10. V10 is Applitools’ latest major release of our ground-breaking technology, and is designed to meet the demands of even the largest and most complex enterprises. Our VP Product & Strategy, Aakrit Prasad, will give more details, including screenshots, in a couple days - so be sure to check back in soon to get the scoop.

It is truly an exciting time to be part of the software development world. As more organizations undergo digital transformations and look to improve their overall product performance, Applitools is honored to be at the forefront of visual UI test automation. This represents an important next step in our journey together, as we begin to explore the fundamentally new, disruptive category of Application Visual Management. We hope you’ll join us as we continue to work to take Applitools to new heights and drive innovation forward.

Bonus: After formally announcing AVM and Version 10 of Applitools Eyes this week, we’ve already been seeing some great reactions from the industry! Check out these stories for more:

DevOps Digest

Business Insider

InfoQ

DevOps.com

And of course I invite you to read our AVM whitepaper: "The Rise of Application Visual Management: Visual AI Powered UI Management in the Era of Digital Transformation".

[Webinar Recording] Advanced Techniques for Testing Responsive Apps and Sites

Responsive web design has become the preferred approach for building sites and apps that provide an optimal viewing and interaction experience on any phone, tablet, desktop or wearable device.

However, automatically testing these responsive sites and apps can be quite a challenge, due to the need to cover all supported layouts, their respective navigation, and visible content.

Watch this advanced hands-on session, and learn how to:

Implement generic tests that work for all the layouts of your app

Control the browser and viewport size in order to accurately target layout transition points

Incorporate layout-specific assertions in your tests

Effectively design responsive page objects

Visually validate the correctness of your app’s layout

Bonus: tips for planning and executing responsive website testing
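As a rough illustration of targeting layout transition points (not taken from the webinar itself), here is a minimal sketch that lists the viewport widths worth testing on either side of each breakpoint. The breakpoint values and the function name `viewportWidthsAround` are assumptions for illustration only.

```javascript
// For each layout breakpoint, test one pixel below it and exactly at it,
// so that both sides of every layout transition are covered.
function viewportWidthsAround(breakpoints) {
  const widths = new Set();
  for (const breakpoint of breakpoints) {
    widths.add(breakpoint - 1);
    widths.add(breakpoint);
  }
  // Sort numerically; the default sort would compare as strings.
  return [...widths].sort((a, b) => a - b);
}

// Hypothetical breakpoints for a phone / tablet / desktop layout.
console.log(viewportWidthsAround([768, 480, 1024]));
// [ 479, 480, 767, 768, 1023, 1024 ]
```

A generic test can then loop over these widths, resize the browser to each one, and run the same assertions.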

Aakrit's slide-deck can be found here -- and you can watch the full recording here:

[Embedded YouTube video]

Visually Testing React Components using the Eyes Storybook SDK

Guest post by Gil Tayar, Senior Architect at Applitools

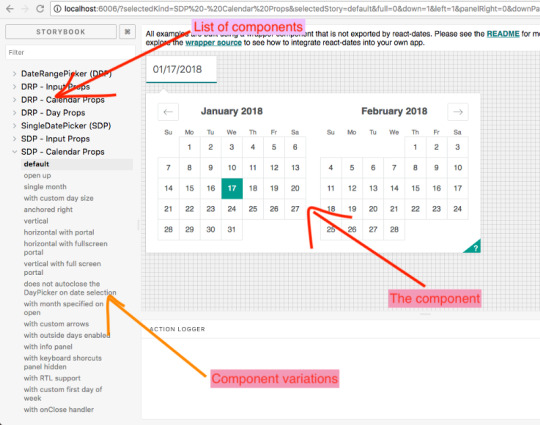

React is, first and foremost, a library for creating reusable components. But until React Storybook came along, reusability of components between applications was very limited. While a library of common components could be created, it was very difficult to use, as there was no useful way of documenting it.

Yes, documenting the common components via a Readme file is possible, even useful. But a component is, in its essence, something visual. A Readme file just doesn’t cut it, not for a developer and definitely not for a designer who wants to browse a library of shared components and choose the one that fits their purpose.

This limited the usefulness of React’s component reusability.

And then React Storybook came along. React Storybook has changed the way many companies approach React by allowing them to create a library of components that can be visually browsed so as to be able to pick and choose the component they want:

React Storybook

Finally, a real library of shared components can be created and reused across the company. And examples abound, some of them open-source: from Airbnb’s set of date components to Wix’s styled components.

But a library of widely used company-wide components brings its own set of problems: how does one maintain the set of components in a reliable way? Components that are internal to one application are easily maintained — they are part of the QA process of that application, whether manual or automated. But how does one change the code of a shared component library in a reliable manner? Any bug introduced into the library will immediately percolate to all the applications in the company. And in these agile days of continuous delivery, this bug will quickly reach production without anybody noticing.

Moreover, everyone in the company assumes that these shared components are religiously tested, but this is not always the case. And even when they are tested, it is usually their functional aspect that is tested, while testing how they look is largely ignored.

Testing a Shared Library

So how does one go about writing tests for a library of shared components? Let’s look at one of the shared component libraries out there: Airbnb’s React Dates. I have forked it for the purpose of this article, so that we can look at a specific version of that library, frozen in time.

Let’s git clone this fork:

$ git clone [email protected]:applitools/eyes-storybook-example.git
$ cd eyes-storybook-example

To run the storybook there, just run npm run storybook, and open your browser to http://localhost:6006.

To run the tests, run the following:

$ npm install
$ npm test

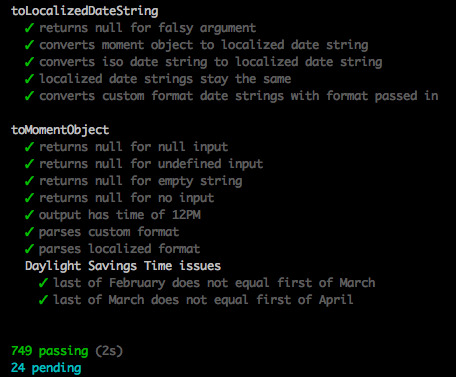

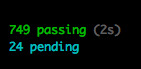

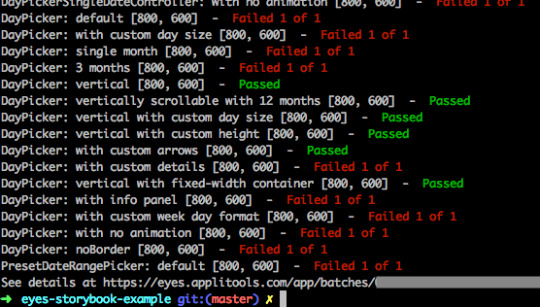

This runs the tests, which generate the following output:

Airbnb React Dates test output

Wow! 750 tests. I have to say that this component library is very well tested. And how do I know that it is very well tested? It’s not just because there are a lot of tests, but also (and mainly!) because I randomly went into some source file, changed something, and ran the tests again.

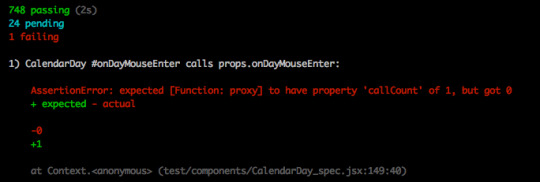

Let’s try it. Change line 77 in file: src/components/CalendarDay.jsx to:

if (!onDayMouseEnter) onDayMouseEnter(day, e);

(I just added the if to the line). Now let’s run the tests again using npm test:

Tests failing due to a change in the code

Perfect! The tests failed, as they should. Really great engineering from the Airbnb team.
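Why does a functional test catch this change? Here is a minimal sketch, using simplified stand-ins rather than the real react-dates code or its test suite. The two handler functions and the `createSpy` helper are hypothetical; the only line taken from the article is the edited `if` statement.

```javascript
// Simplified stand-in for the original behaviour: always notify the callback.
function handleMouseEnterOriginal(onDayMouseEnter, day, e) {
  onDayMouseEnter(day, e);
}

// Stand-in for the edited line: the callback is invoked only when it is
// missing, so a callback that is actually provided is never called.
function handleMouseEnterChanged(onDayMouseEnter, day, e) {
  if (!onDayMouseEnter) onDayMouseEnter(day, e);
}

// Minimal spy that records the arguments of every call.
function createSpy() {
  const spy = (...args) => {
    spy.calls.push(args);
  };
  spy.calls = [];
  return spy;
}

const originalSpy = createSpy();
handleMouseEnterOriginal(originalSpy, "2017-12-01", {});

const changedSpy = createSpy();
handleMouseEnterChanged(changedSpy, "2017-12-01", {});

console.log(originalSpy.calls.length); // 1
console.log(changedSpy.calls.length); // 0
```

A functional test that expects the callback to be called once passes against the first version and fails against the second, which is the kind of failure shown above.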

(If you’re following with your own repository, please undo the change you just made to the file.)

Functional Testing Cannot Catch Visual Problems

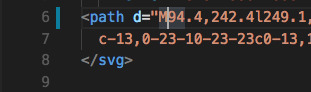

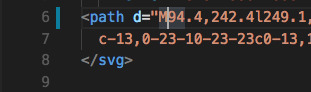

Let’s try again. Edit line 6 in the file src/svg/arrow-right.svg, by changing the first M694 to M94, thus:

Now let’s run the tests again using npm test:

Oops. They’re passing. We’ve changed the source code for the components, and yet the tests pass.

Why did this happen? Because we changed only the visual aspect of the components, without changing any of their functional aspects. And Airbnb does only functional testing.
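The gap can be sketched in a few lines. This is an illustration under stated assumptions, not the react-dates code: the icon objects, the shortened path data and both check functions are hypothetical, and the "visual" check is reduced to comparing path strings where a real tool would compare rendered screenshots.

```javascript
// Hypothetical, shortened path data; the real arrow-right.svg path is longer.
const originalIcon = { path: "M694 10 L700 20", onClick: () => "next-month" };

// The edit from the article: only the drawing changes, not the behaviour.
const editedIcon = { ...originalIcon, path: "M94 10 L700 20" };

// Functional check: only the click behaviour is examined.
function passesFunctionalCheck(icon) {
  return icon.onClick() === "next-month";
}

// Visual check stand-in: compares what would be drawn against the baseline.
function passesVisualCheck(icon, baselineIcon) {
  return icon.path === baselineIcon.path;
}

console.log(passesFunctionalCheck(editedIcon)); // true
console.log(passesVisualCheck(editedIcon, originalIcon)); // false
```

The functional check has no way to notice the edit, because nothing it looks at has changed.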

This is not to denigrate the wonderful engineers at Airbnb who have given us this wonderful library (and other open-source contributions). This library is tested in a way that should make Airbnb proud. And I am sure that manual testing of the components is done from time to time to ensure that the components are OK.

(If you’re following with your own repository, please undo the change you just made to the file.)

Visual Testing of a Shared Library

So how does one go about testing the visual aspects of the components in a shared library? Well, if you’re using React Storybook, you’re in luck (and if you’re building a shared component library, you should be using React Storybook). You are already using React Storybook to visually display the components and all their variants, so testing them automatically is just a simple step (are you listening, Airbnb? 😉).

We’ll be using Applitools Eyes, which is a suite of SDKs that enable Visual Testing inside a variety of frameworks. In this case, we’ll use the Applitools Eyes SDK For React Storybook (yes, I know, a big mouthful…)

(full disclosure— I am a developer advocate and architect for Applitools. But I love the product, so I have no problem promoting it!)

To install the SDK in the project, run:

npm install @applitools/eyes.storybook --save-dev

This will install the Eyes SDK for Storybook (locally for this project). If you’re following with your own repository, please undo the changes you made to the files; otherwise you will get failures. To run the test, just run:

./node_modules/.bin/eyes-storybook

You will get this error:

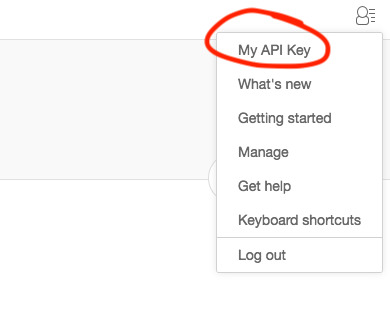

Yup. To use the Eyes SDK, you need an API key, which you can get by registering at the Applitools site, here. Once you have that, log in and go to the Eyes admin tool, here. You will find the API key using the following menu:

Getting the Applitools API Key

To make Eyes Storybook use the API key, set the environment variable APPLITOOLS_API_KEY to the key you have:

// Mac, Linux:
$ export APPLITOOLS_API_KEY=your-api-key

// Windows:
C:\> set APPLITOOLS_API_KEY=your-api-key
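If you want your own scripts to fail early with a clear message when the key is missing, a small Node.js check along these lines could help. This is a sketch, not part of the Eyes SDK: `requireApiKey` is a hypothetical helper, and only the variable name APPLITOOLS_API_KEY comes from the article.

```javascript
// Return the API key from the given environment, or throw a clear error.
// By default the real process environment is used.
function requireApiKey(env = process.env) {
  const key = env.APPLITOOLS_API_KEY;
  if (!key || key.trim() === "") {
    throw new Error(
      "APPLITOOLS_API_KEY is not set; export it before running eyes-storybook."
    );
  }
  return key;
}

// Usage with an explicit environment object, so the example runs anywhere.
console.log(requireApiKey({ APPLITOOLS_API_KEY: "your-api-key" }));
```

Passing the environment as a parameter is a design choice that keeps the helper easy to test without touching the real environment.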

Now run it again:

./node_modules/.bin/eyes-storybook

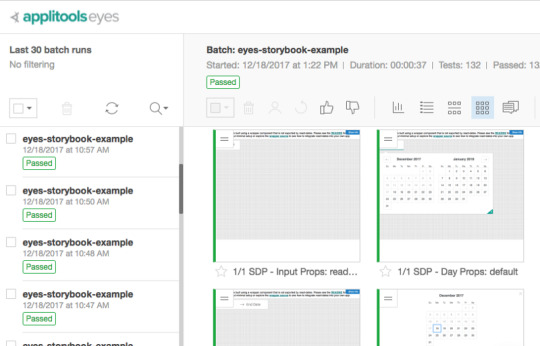

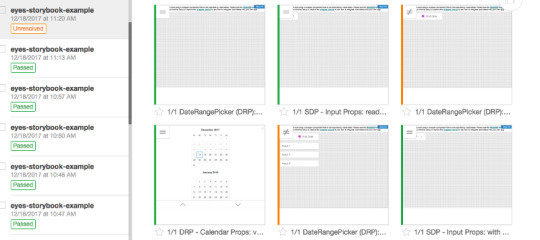

Eyes Storybook will run Chrome headless, open your storybook, take a screenshot of all your components and variants, and send them to the Applitools service. When the test ends, as this is the first test, it will establish these screenshots as the baseline against which all other tests will be checked. You can check the results with the link it adds to the end of the tests, or just go to http://eyes.applitools.com:

Visual testing results
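The baseline idea itself is simple enough to sketch. The following is a conceptual illustration only, not how the Applitools service is implemented: `createBaselineStore`, the status labels and the string "fingerprints" standing in for screenshots are all assumptions.

```javascript
// In-memory stand-in for the baseline storage a visual testing service keeps.
function createBaselineStore() {
  const baselines = new Map();
  return {
    // The first check of a component stores its screenshot as the baseline;
    // later checks are compared against that stored baseline.
    check(componentName, screenshotFingerprint) {
      if (!baselines.has(componentName)) {
        baselines.set(componentName, screenshotFingerprint);
        return "new";
      }
      return baselines.get(componentName) === screenshotFingerprint
        ? "passed"
        : "unresolved";
    },
    // Accepting a change replaces the stored baseline.
    accept(componentName, screenshotFingerprint) {
      baselines.set(componentName, screenshotFingerprint);
    },
  };
}

const store = createBaselineStore();
console.log(store.check("arrow-right", "fingerprint-a")); // new
console.log(store.check("arrow-right", "fingerprint-a")); // passed
console.log(store.check("arrow-right", "fingerprint-b")); // unresolved
```

A difference does not have to be a bug: it stays unresolved until someone either fixes the component or accepts the new screenshot as the baseline.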

Failing the Test

So let’s test if the change we made to the SVG will fail the test. Re-edit line 6 in the file src/svg/arrow-right.svg, by changing the first M694 to M94, thus:

Now let’s run the test again using ./node_modules/.bin/eyes-storybook.

Failing Eyes Storybook test

Success! Or rather failure! The test recognized that some of the component screenshots differed from the baseline ones we created earlier. We can see the result of the diffs in the Applitools Eyes management UI:

Showing diffs of components in Storybook

We can either select these changes as the new baseline (which in this case we don’t want to!) or fix the bug and run the test again, in which case it will be green.

And that is the beauty of Storybook. Because it enables you to create a central repository of components, it feeds three birds with one bird feed (I’m sorry, I can’t kill three birds, even if it is with one stone…):

Creating and documenting a shared React components library, with little effort.

Testing that the functionality of the components works (albeit with lots of effort).

Visually testing the components with literally zero code.

Summary

React Storybook is a game changer for frontend development— it enables the creation and maintenance of a shared component library for the whole company.

And the best thing about React Storybook is that if you’ve already invested the effort to set it up, you get a suite of tests that check the visual look of your components… for free!

About the Author: 30 years of experience have not dulled the fascination Gil Tayar has with software development. From the olden days of DOS, to the contemporary world of Software Testing, Gil was, is, and always will be, a software developer. He has in the past co-founded WebCollage, survived the bubble collapse of 2000, and worked on various big cloudy projects at Wix. His current passion is figuring out how to test software, a passion which he has turned into his main job as Evangelist and Senior Architect at Applitools. He has religiously tested all his software, from the early days as a junior software developer to the current days at Applitools, where he develops tests for software that tests software, which is almost one meta layer too many for him. In his private life, he is a dad to two lovely kids (and a cat), an avid reader of Science Fiction, (he counts Samuel Delany, Robert Silverberg, and Robert Heinlein as favorites) and a passionate film buff. (Stanley Kubrick, Lars Von Trier, David Cronenberg, anybody?) Unfortunately for him, he hasn’t really answered the big question of his life - he still doesn't know whether static languages or dynamic languages are best.


2018 Test Automation Trends: Must-have Tools & Skills Required to Rock 2018

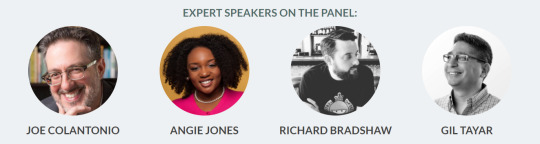

As 2017 comes to a close, it’s a great time to reflect on the year and look ahead to what 2018 might have in store, especially with regard to software testing and automation. To dig a little deeper, we recently hosted a webinar featuring some of the software testing industry’s most prominent experts and thought leaders, who discussed the hottest tools, technologies and trends to look out for in 2018. Our guests included host Joe Colantonio, founder of Automation Guild Conference; Angie Jones, prolific speaker and senior software engineer in test at Twitter; Richard Bradshaw, software tester, speaker and trainer at Friendly Testing; and our very own Gil Tayar, senior architect and evangelist.

During the webinar, they discussed the top test automation strategies for 2018, how AI will affect automation, the new tools and tech you should explore, and much more. Continue reading for some of their top insights that will help prepare you for the year to come:

Colantonio reflects on some of the trends he has seen in 2017: “As I speak to a lot of companies I see a lot of digital transformations going on. I see a lot of studies saying QA and testing investment is going to be really heavy the next five years. Also, what I’ve been seeing is a lot of companies are shifting to the left, so they’re investing more and more in how they can automate their process to make it quicker for the software development.”

With the adoption of automation becoming more widespread, Jones says that companies will be thinking about how to use it more strategically in 2018: “I think in 2018 we’ll see people taking a step back and looking at what is it that they actually want to gain from automation, and how to best do that. I’m also seeing a lot of teams now wanting to embrace DevOps. As we are moving into that space we see testing, automation, development, and everything is moving a lot faster. There’s definitely a need for automation to not just be running on someone’s local machine or running along somewhere on a server, but to actually be gating check-ins and giving teams confidence.”

Bradshaw offers some advice on how to speed up your software delivery in 2018: “I think one of the opportunities to take advantage of in 2018 is to step back a bit, have a reflection of the skills that you’ve developed. How good is your programming now? How many tools are in your tool box? Start to look where we can apply them throughout the software development lifecycle. The things that I’m looking at myself is our automated checks and feedback loops. So, having a look at all the checks you have and reducing some of them. Do you need them all? Are they valuable? And that will help you speed up.”

Tayar hopes that more companies will embrace the shift-left movement and put more of the testing responsibility on developers: “From my perspective, the shift left movement, where the boring parts of testing, the regression testing, is moving towards development and towards developers. I think this is a trend that I’m seeing more and more in 2017 and in mostly advanced, high performing companies. Hopefully in 2018 more companies will be able to do more and more testing on the developer side. That will enable testers to find the more interesting problems to do. Not just regression test, but more interesting things with their testing time.”

Colantonio on the importance of testing at the API level: “As more folks move towards continuous integration and continuous delivery, I think we need integration tests and to give faster feedback that isn’t just about the UI. I think API testing is really a critical piece of that. If the business layer is not in the UI, then we should test that at the API level. So that’s a great opportunity. I’m not sure why more people aren’t getting into it.”

Consider developing skills in web services automation in 2018, advises Jones: “I can probably count on one hand the people who are experts in web services automation, and that’s a problem because there’s a big demand for that right now. So I think this is an opportunity where if you are looking to get into automation, or if you’re already in automation and you’ve been focused very heavily on UI automation, there’s an opportunity here for people to advance their skills and look into web services automation.”

For AI tools to provide value, testers first need to understand the purpose of their test, explains Bradshaw: “The use of AI that I’m seeing advertised at the moment by lots of companies is to help them. They’re using AI to help the tool design automated checks. That sends alarm bells off in me because I don’t think many testers out there even know what they’re doing. Not that they don’t know when they’re testing, and that they don’t know their own thought process. They don’t actually understand what they’re going through. They don’t know why they find bugs. They don’t know why they do the tests that they do, they just do them.”

Simple advice from Tayar is to embrace agility: “Agility is the movement towards faster release cycles, and that fuels the need for developers to create their own tests. Once developers create their own tests that leaves testers to do the really, really important stuff. To test from a thinking perspective, and not so much doing the work that developers should’ve done by themselves. So if there’s one thing to remember, it’s to embrace agility. Use that to do better testing, better automation, better thinking about what you’re testing so that your company will get an advantage from that.”

Don’t just put blind faith into your AI tools, warns Jones: “In 2018, I don’t think we’re going to see a bunch of AI being used to assist us, but I think we could use 2018 to take the opportunity to understand, what is AI? And reveal how it will be used to help us, and if it can really even help us. A lot of times we look at AI as, ‘Oh, it’s this perfect thing.’ And I can tell you that it’s not perfect. I’m working on some applications here, and even before I got here to Twitter, products that are using machine learning, which is a subset of AI. It can’t be a black box that I think works magically. I have to understand what it’s supposed to do and how to test that.”

Bradshaw: “I would like to see some tools come into the market that are specifically designed to support me and my testing. They’re looking at what I’m doing on the screen and they might fire heuristics at me. They might say, ‘Richard, you seem to have done some kind of testing like this many years, or a few months ago, a few releases ago. And then you used this heuristic and found a bug, so why don’t you try that heuristic?’ Or have it as a little bot that’s just there helping me do my job, like take a screenshot for me and it automatically put it on my tickets. But in terms of the AI bit, just having it prompt me to help me think about what I would test based on what I’ve done in the past, I think that is a better use for AI at the moment.”

Will AI be taking over testers’ jobs? A resounding “No,” says Tayar: “I get a lot of questions about, ‘Do I need to worry about my job?’ And the answer is a very, very emphatic, ‘No, you do not.’ We’re very early. We’re in the early, early phases of using AI in testing. And I believe that in 10 years and maybe even more we will be using AI as a tool and not so much as a replacement for testers. A tool in that it will be able to, for example, visually compare stuff so that you will be able to find your bugs in a quicker way, and not check every field that you need to fill, but just holistically check the whole page in one go. It will be able to find lots of changes in lots of pages at one time.”

Bradshaw: “My actionable advice right now would be go back into your office, go through your automated checks that you have now and try and delete five of them. And try and go understand them all, review them, study them, continuously review them, and delete them down to the ones that really matter. Because I can guarantee you probably all have some that are just providing no value at all, but they are taking a few minutes to run every time you do it. So continuously review those checks.”

The full replay of the webinar is available on YouTube.


The 2017 Surprises and 2018 Predictions for Software Delivery, Testing and More!

With 2017 now behind us, we thought this would be a great time to reflect on what the year brought us, and to prepare for what 2018 may bring! From Cloud to DevOps to IoT, there was certainly a lot to learn and still plenty of room for growth. Some of our most shocking revelations were the rise of serverless architecture and seeing companies finally take security head-on, especially with regard to IoT. In 2018, we will see more data collection and analysis as a means to improve security, as well as the proliferation of mobile automation. There’s so much more that we learned and are looking forward to with regard to Cloud, DevOps, IoT, Java and mobile. Continue reading for more insights from Applitools contributors Daniel Puterman, director of R&D; Gil Tayar, senior architect and evangelist; and Ram Nathaniel, head of algorithms.

Cloud

2017 Surprise: The biggest surprise is that after being declared dead in previous years, PaaS has risen from the grave as “serverless architecture” — Gil Tayar

2018 Prediction: Serverless will continue to grow as a paradigm whereby your application doesn’t care where and how it runs — Gil Tayar

DevOps

2017 Surprise: Docker surprised everyone by declaring support for Kubernetes in Docker EE (alongside their own Docker Swarm), thus ceding victory and confirming that Kubernetes will be, for 2018, the industry standard orchestrator for Microservices. — Gil Tayar

2018 Prediction: Kubernetes will continue to grow and secure its place as the leading orchestrator for Microservices. The three big cloud vendors will support managed Kubernetes — Gil Tayar

IoT

2017 Surprise: With the widespread adoption of IoT development, IoT security has evolved from a theoretical problem into an actual issue to deal with — Daniel Puterman

2018 Predictions: With the appearance of cheap deep learning acceleration in hardware, in 2018 we will start seeing smarter IoT devices coming to the market. From computer vision based sensors to voice activated window shades, the world around us will become smarter and more adaptive to our needs — Ram Nathaniel

In addition to security, IoT data collection and analysis will become the next target of companies and entrepreneurs in the data science field — Daniel Puterman

Java

2017 Surprises: Kotlin comes from seemingly nowhere to dethrone the Java emperor, with the backing of two heavyweights of the field: Google and JetBrains — Gil Tayar

It’s a surprise that with the rise of JVM-based alternatives (such as Kotlin for Android development and Scala for data science), Java usage hasn’t actually declined. I expect that 2018 will show the same trend: Java development will continue to account for a large portion of software development — Daniel Puterman

2018 Prediction: Kotlin will continue eating away at Java’s dominance of the non-MS enterprise market. It will be a long while till Java will be dethroned, but it will happen — Gil Tayar

Mobile

2017 Surprise: 2017 was the year mobile automation frameworks entered the mainstream mobile development lifecycle, with the appearance of Espresso for Android and XCUITest for iOS — Daniel Puterman

2018 Predictions: React Native and Progressive Web Apps will start being credible alternatives to native mobile apps, thus continuing the increasing dominance of Web technologies in mobile development — Gil Tayar

2018 will continue to make mobile automation more prevalent, specifically with mobile cloud environments becoming more reliable — Daniel Puterman

What do you think? We’d love to hear some of your predictions and reflections on the world of software delivery and testing. 2018 should be another exciting, fast-paced year — we can’t wait to see what it has in store!


Top News of the Month: Testing & Test Automation Sources Heading into 2018

In case you haven’t heard, test automation is on the up and up! In fact, we recently hosted a webinar with a few of the top minds in the space to discuss the latest trends, opportunities ahead, and the tools and technologies you should familiarize yourself with as we head into the new year. But why stop there? There is a lot being discussed these days, and we want to be a resource that helps people continuously learn and grow.

Below you will find our top 10 test automation articles and posts from the past month. Everything from job skills and practical tips to use cases and tech predictions is covered, and we hope you enjoy them as much as we did. Stay tuned for more industry news shared via @ApplitoolsEyes and be sure to subscribe to our blog for ongoing updates and resources for all things test automation.

Happy Holidays!

1. Why Domain Experts Are Vital For DevOps Test Automation

By: Gerie Owen | Published on: SearchSoftwareQuality http://searchsoftwarequality.techtarget.com/tip/Why-domain-experts-are-vital-for-DevOps-test-automation

Automation is always seen as the way to speed up software testing, but it is more limited than people realize. Expert Gerie Owen argues domain experts must get involved.