BlueChasm is a digital development company focused on building and running open digital and cognitive platforms in partnership with enterprises, service providers, and software partners.

How Exif Data Can Affect Your Image Recognition Training

We apologize for the recent radio silence! We’ve been working on some amazing things for the past few months, and today we would like to share a small discovery we made.

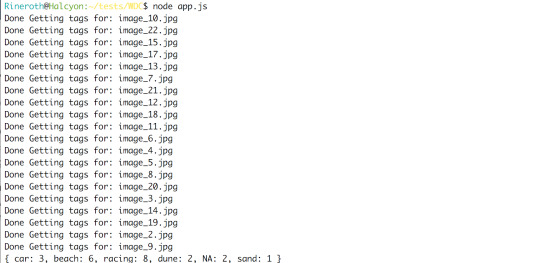

When doing TensorFlow image recognition retraining there are a lot of things to take into consideration. The biggest one we found is image rotation and it might be affecting your training data negatively!

We were playing around with our TensorFlow training tool and wanted to create a soda can recognition model. We handed out different cans to everyone at the office and asked them to take pictures of them in different settings (i.e., different backgrounds, angles, and lighting conditions). For about 20 minutes we were all running around the office taking pictures of soda cans with our phones, and in the end we sent them all to our training tool.

We began training the TensorFlow model using the transfer learning method and then loaded the trained model into our simple testing application. Eager to see the results, the team gathered around and we began testing. In the past, we’ve trained models using images pooled from the internet, but this time we used the pictures on our phones. When taking pictures, some phones add EXIF data, a set of information about the conditions in which the picture was taken. One of these pieces of information is the rotation of the phone when the picture was taken.

The way the phone stores the picture varies with each manufacturer or even OS version. In our case, 5 out of the 6 phones would take the picture and add EXIF data noting that the picture had been taken with a 90º rotation, since we were holding our phones in portrait mode.

The 6th phone was natively taking the pictures in portrait mode and did not add any rotation in the EXIF data. It simply recorded the orientation as “Normal”.

This means that the Diet Coke can was actually being stored in landscape orientation, with the 90º rotation added by the phone as an EXIF flag after the picture was taken, whereas the Coke Zero can was being captured in portrait, so there was no need for a rotation flag in the EXIF data.

What does this mean for TensorFlow Image Recognition training?

When testing the trained model we were getting incorrect results: the Diet Coke was being tagged as Coke Zero with very high confidence. This came as a surprise because the two cans are completely different colors and were pictured against a wide range of backgrounds.

Looking into the issue, we noticed that the Diet Coke images in our data set were being shown sideways, even though we had taken them in portrait mode.

We confirmed our suspicion by pointing the testing app at the can in landscape mode, which did in fact produce the correct tag.

Digging into it, we found out that TensorFlow ignores the EXIF data included in the JPEG encoding when processing images.

We concluded that EXIF data does play a big role when training image recognition models in TensorFlow.

What can you do about it?

There are a lot of ways of dealing with this. You could pre-process the images and apply the exif rotation to them before sending them to TensorFlow or you could also set the flags that add random rotation to the image dataset so that the neural network does not take rotation into consideration when training.
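The first option needs to know which rotation each file carries. Here is a minimal, standard-library-only sketch of that step: it reads the EXIF orientation tag (0x0112) from raw JPEG bytes and maps it to the clockwise rotation needed to show the image upright. The function names are our own for illustration, mirrored orientations are ignored, and this is not the code from our training tool.

```python
import struct

# EXIF orientation values mapped to the clockwise rotation (in degrees) needed
# to display the image upright. Mirrored variants (2, 4, 5, 7) are left out.
ROTATION_FOR_ORIENTATION = {1: 0, 3: 180, 6: 90, 8: 270}


def _orientation_from_tiff(tiff):
    """Read tag 0x0112 from the first IFD of an EXIF TIFF block."""
    order = {b"II": "<", b"MM": ">"}.get(tiff[:2])
    if order is None or len(tiff) < 8:
        return None
    (ifd_offset,) = struct.unpack(order + "I", tiff[4:8])
    (count,) = struct.unpack(order + "H", tiff[ifd_offset:ifd_offset + 2])
    for i in range(count):
        start = ifd_offset + 2 + 12 * i          # each IFD entry is 12 bytes
        entry = tiff[start:start + 12]
        if len(entry) < 12:
            return None
        (tag,) = struct.unpack(order + "H", entry[:2])
        if tag == 0x0112:                        # orientation tag
            (value,) = struct.unpack(order + "H", entry[8:10])
            return value
    return None


def read_exif_orientation(jpeg_bytes):
    """Return the EXIF orientation (1-8) of a JPEG, or None if it has none."""
    if jpeg_bytes[:2] != b"\xff\xd8":            # missing JPEG start marker
        return None
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        if jpeg_bytes[pos] != 0xFF:
            return None
        marker = jpeg_bytes[pos + 1]
        (length,) = struct.unpack(">H", jpeg_bytes[pos + 2:pos + 4])
        segment = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xE1 and segment[:6] == b"Exif\x00\x00":
            return _orientation_from_tiff(segment[6:])
        if marker == 0xDA:                       # image data starts here
            return None
        pos += 2 + length
    return None


def rotation_needed(jpeg_bytes):
    """Clockwise degrees to rotate before training (0 if unknown or normal)."""
    return ROTATION_FOR_ORIENTATION.get(read_exif_orientation(jpeg_bytes), 0)
```

In practice an imaging library can do both steps for you; Pillow's `ImageOps.exif_transpose`, for example, applies the orientation tag and returns an upright image.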

Expect more frequent updates from the BlueChasm team. We are very excited to talk about our new ventures with artificial intelligence. If you have any questions, the best way to contact us is at [email protected] or via twitter @bluechasmco

Using BlueChasm's Cognitive Audio APIs to build a Cognitive Call Center

At BlueChasm, we built a platform using cognitive technologies on IBM Cloud that gives developers access to metadata and analytics on spoken audio. One prominent use case for this technology is helping call centers increase efficiency, improve employee productivity, and make more informed decisions based on near real-time insights.

Most call centers record phone conversations as unstructured data, searchable only by manually entered “tags.” If a conversation is relevant to an audit, it must be transcribed manually, so reports can take weeks, which can decrease productivity and potentially customer satisfaction.

BlueChasm’s Cognitive Call Center platform transforms the traditional call center model by using IBM Cloud and Watson to help agents identify, filter, analyze and take actions on inbound and outbound calls. The platform uses IBM Cloud Object Storage to manage the unstructured data, and it uses Watson APIs, specifically Watson Speech to Text and Watson Tone Analyzer, to automate the transcription and tagging of audio, provide near real-time analytics and actions, and enable deeper analytics for audit situations.

At BlueChasm, we leveraged virtually the entire IBM development-to-deployment stack to create the cloud-based platform with an open API. Developers can quickly and easily integrate this technology into their own products to get the value of the IBM Watson platform.

Components

BlueChasm’s Cognitive Audio APIs provide three main features: single file transcription, batch upload, and streaming. The single file transcription and batch upload APIs are RESTful multipart/form-data POST requests that consist of a binary file and a JSON string containing configuration parameters. Streaming transcription uses WebSockets to keep the connection open.

BlueChasm supports multiple audio formats for transcription. You must specify the audio format, or MIME type, of the data that you pass to the service. The following list describes the supported formats.

Supported Audio Formats

Free Lossless Audio Codec (FLAC), a lossless compressed audio coding format.

MIME type - audio/flac

Linear 16-bit Pulse-Code Modulation (PCM), an uncompressed audio data format. Use this media type to pass a raw PCM file.

MIME type - audio/l16

Waveform Audio File Format (WAV), a standard audio format.

MIME type - audio/wav

Ogg is a free, open container format maintained by the Xiph.org Foundation. You can use audio streams compressed with the following codecs:

· Opus.

· Vorbis.

Both codecs are free, open, lossy audio-compression formats. Opus is the preferred codec. If you omit the codec, the service automatically detects it from the input audio.

MIME Type - audio/ogg, audio/ogg;codecs=opus, audio/ogg;codecs=vorbis
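As a small illustration of picking the MIME type to send, here is a sketch that maps a file name to one of the types listed above. The MIME strings come from the list; the file extensions (in particular `.raw` for 16-bit PCM) and the function name are assumptions made for the example.

```python
import os

# MIME types from the supported-formats list; the extensions are assumptions.
MIME_BY_EXTENSION = {
    ".flac": "audio/flac",
    ".wav": "audio/wav",
    ".ogg": "audio/ogg",
    ".raw": "audio/l16",  # assumed extension for a raw 16-bit PCM file
}


def mime_type_for(filename, codec=None):
    """Return the MIME type for an audio file, optionally naming an Ogg codec."""
    extension = os.path.splitext(filename)[1].lower()
    if extension not in MIME_BY_EXTENSION:
        raise ValueError("unsupported audio format: %r" % extension)
    mime = MIME_BY_EXTENSION[extension]
    if codec is not None:
        # Only Ogg streams take a codec, and only Opus or Vorbis are listed.
        if mime != "audio/ogg" or codec not in ("opus", "vorbis"):
            raise ValueError("codec %r is not valid for %s" % (codec, mime))
        mime += ";codecs=" + codec
    return mime
```

Leaving `codec` out for an Ogg file matches the behavior described above, where the service detects the codec from the input audio.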

To process batch audio, simply upload a zip file containing the audio files. Currently, all files must be in the same format. The Cognitive Audio API will automatically process the zip file and respond with the details of all the files contained in the zip.
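Building the zip for a batch can be sketched with the standard library. The helper below is hypothetical (it is not part of the API); it checks the "same format" rule by comparing file extensions, which is our own simplification, and returns the archive as bytes ready to upload.

```python
import io
import os
import zipfile


def build_batch_zip(files):
    """Zip a {filename: bytes} mapping, refusing batches with mixed formats."""
    extensions = {os.path.splitext(name)[1].lower() for name in files}
    if len(extensions) != 1:
        raise ValueError(
            "a batch must contain files of exactly one format, got: %s"
            % sorted(extensions))
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()
```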

Usage

To access the API, contact BlueChasm to get an access key and application secret as well as your custom URL. For streaming documentation, please contact BlueChasm.

Batch Transcription Endpoint URL

<https://customerURL>/api/v1/batch_transcribe

The Batch Transcribe endpoint returns the data for the audio files contained in the zip file you pass, based on the parameters set in the call.

Required Headers:

Content-Type: multipart/form-data; boundary=BOUNDARY

AccessKey: XXXXXXX

ApplicationSecret: XXXXXXXXXXXX

Body:

--BOUNDARY

Content-Disposition: form-data; name="parameters"

{

"includeTone" : true,

"storeResults" : true,

"includeConcepts" : true,

"includeTimeStamps" : true,

"provideTranscript" : true,

"filename" : "anyfilename"

}

--BOUNDARY

Content-Disposition: form-data; name="audio"; Content-Type: "application/zip"

<zip file data>

--BOUNDARY--

Single Transcription Endpoint URL

<https://customerURL>/api/v1/transcribe

The Transcribe endpoint returns the data for the audio file you pass, based on the parameters set in the call.

Required Headers:

Content-Type: multipart/form-data; boundary=BOUNDARY

AccessKey: XXXXXXX

ApplicationSecret: XXXXXXXXXXXX

Body:

--BOUNDARY

Content-Disposition: form-data; name="parameters"

{

"includeTone" : true,

"storeResults" : true,

"includeConcepts" : true,

"includeTimeStamps" : true,

"provideTranscript" : true,

"filename" : "anyfilename"

}

--BOUNDARY

Content-Disposition: form-data; name="audio"; Content-Type: "audio/wav"

<Audio Data>

--BOUNDARY--
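For readers who prefer code to raw HTTP, here is a rough sketch of assembling either request with Python's standard library. The header names, form field names, and endpoint paths come from the examples above; the function name, the `filename` attribute on the audio part, and placing `Content-Type` on its own line inside the part (the usual multipart layout) are our assumptions. The request is built but not sent.

```python
import json
import urllib.request
import uuid


def build_transcribe_request(base_url, access_key, application_secret,
                             audio_bytes, audio_mime, parameters,
                             endpoint="transcribe"):
    """Build (but do not send) a multipart/form-data POST for the audio API."""
    boundary = uuid.uuid4().hex
    delimiter = b"--" + boundary.encode("ascii")
    # Assumption: the uploaded part reuses the name given in the parameters.
    filename = str(parameters.get("filename", "audio")).encode("utf-8")
    body = b"\r\n".join([
        delimiter,
        b'Content-Disposition: form-data; name="parameters"',
        b"",
        json.dumps(parameters).encode("utf-8"),
        delimiter,
        b'Content-Disposition: form-data; name="audio"; filename="'
        + filename + b'"',
        b"Content-Type: " + audio_mime.encode("ascii"),
        b"",
        audio_bytes,
        delimiter + b"--",  # the closing delimiter ends with two hyphens
        b"",
    ])
    request = urllib.request.Request(
        base_url.rstrip("/") + "/api/v1/" + endpoint, data=body, method="POST")
    # urllib normalizes header capitalization; HTTP header names are
    # case-insensitive, so the server should treat them the same.
    request.add_header("Content-Type",
                       "multipart/form-data; boundary=" + boundary)
    request.add_header("AccessKey", access_key)
    request.add_header("ApplicationSecret", application_secret)
    return request
```

Passing `endpoint="batch_transcribe"` with `audio_mime="application/zip"` gives the batch variant. Sending is then a matter of `urllib.request.urlopen(request)`; the response format is not covered in this post, so it is not shown here.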

Watch our demo video here:

youtube

We are the {IBM} Champions, my friends

Earlier this year, IBM nominated candidates to be named IBM Champions for Cloud. The IBM Champion program recognizes innovative thought leaders in the technical community. We are excited to announce that we have not one but THREE Cloud Champions at BlueChasm!

Definition of an IBM Champion:

“An IBM Champion is an IT professional, business leader, developer, or educator who influences and mentors others to help them make best use of IBM software, solutions, and services. These individuals evangelize IBM solutions, share their knowledge and help grow the community of professionals who are focused on IBM Cloud. IBM Champions spend a considerable amount of their own time, energy and resources on community efforts—organizing and leading user group events, answering questions in forums, contributing wiki articles and applications, publishing podcasts, sharing instructional videos, and more!"

Meet our IBM Champions:

Andy Lin: Co-Founder

Andy Lin is the Co-Founder of BlueChasm where he is responsible for helping to drive strategy and execution for key initiatives company-wide. One of Andy’s focus areas has been leading BlueChasm’s capabilities in supporting digital IT strategies, which include helping clients build tech stacks not only for business-critical core applications, but also for cloud-native, hybrid platforms that revolve around open source, cloud, analytics, mobile, cognitive/AI, and IoT. Andy is an IBM Champion for Cloud and Power Systems, and an IBM Redbooks published author.

Ryan Van Alstine: CTO and Co-Founder

Ryan VanAlstine serves as CTO and Lead Developer for BlueChasm. Ryan is charged with product and technical direction and leads the team of developers in building platforms and prototypes, mostly on the IBM Cloud and Bluemix stacks. Ryan co-founded BlueChasm with Andy in late 2014 and is an IBM Champion for Cloud. He is also an active community member and advocate for Server-side Swift, having worked as an iOS consultant for both large companies and startups before founding BlueChasm.

Robert Rios: Software Developer

Robert Rios serves as a software developer for BlueChasm. Robert grew up coding, having written his first script in middle school, which was designed to automate tasks on a Windows computer. At BlueChasm, Robert has been the inspiration for many of our platforms and prototypes, including creating the first version of VideoRecon. Robert also open sourced the community’s first npm package for IBM Cloud Object Storage (then Cleversafe) and has been active in writing about how to code efficiently on IBM Bluemix, IBM Cloud Object Storage, and on the IBM stack. Robert is an IBM Champion for Cloud and has led sessions at Watson Developer Conference, World of Watson, and InterConnect.

We are honored to be part of such an amazing community of thought leaders!

Press Release: Mark III Systems Launches Cognitive Call Center on IBM Cloud with Watson

May 2, 2017 Armonk, N.Y. —

IBM (NYSE: IBM) today announced that Texas-based IT solutions provider and IBM Business Partner Mark III Systems has built a platform using cognitive technologies on IBM Cloud to help call centers increase efficiency, improve employee productivity and make more informed decisions based on near real-time insights.

Most call centers record phone conversations as unstructured data, only searchable by manually entered “tags.” If a conversation is relevant to an audit, it must be transcribed manually, which means reports can take weeks, which can result in decreased productivity and potentially decreased customer satisfaction.

Mark III Systems’ Cognitive Call Center platform transforms the traditional call center model by using IBM Cloud and Watson to help agents identify, filter, analyze and take actions on inbound and outbound calls. The platform uses IBM Cloud Object Storage to manage the unstructured data, and it uses Watson APIs, specifically Watson Speech to Text and Watson Tone Analyzer, to automate the transcription and tagging of audio, provide near real-time analytics and actions and enable deeper analytics for audit situations.

Mark III’s flagship partner, Cistera Networks, a leading developer and global provider of cloud business communications and collaboration solutions, is already seeing dramatic benefits from the Cognitive Call Center platform built on IBM Cloud. By adding cognitive aspects for near real-time analytics and actions, as well as enabling deeper analytics for audit and compliance situations, institutions are speeding up response times (from weeks to just minutes) without adding costly overhead. Additionally, by automatically transcribing and analyzing calls, then tagging them with specific information for records search, Cistera customers are now able to determine trends that can lead to profitable business improvements or new opportunities in minutes rather than days or weeks -- then measure the outcome utilizing customer sentiments in future calls.

“Leading with Watson on the IBM Cloud has given us a unique way to guide and partner with enterprises like Cistera around their digital, cognitive and analytics strategies,” said Andy Lin, Vice President of Strategy, Mark III Systems. “The clients who have implemented our cognitive platforms have seen success so far, and we now view the platform approach as a future blueprint for our enterprise cognitive and analytics engagements going forward, including in support of enterprise call centers.”

Mark III’s development unit, BlueChasm, leveraged virtually the entire IBM development to deployment stack to create the cloud-based platform with an open API. With its highly repeatable, flexible solution, Mark III is set to revolutionize the call center market by providing cognitive business insights in near real time to its clients.

“Mark III Systems’ Cognitive Call Center is a powerful example of how our IBM Business Partners can create new business opportunities with Watson on IBM Cloud,” said David Wilson, Vice President, IBM Cloud Business Partners and Channel Innovation. “The innovative solution was built on IBM technology across the stack and was recently recognized as an IBM Beacon Award winner for Outstanding Solution Developed on Bluemix.”

IDC estimates that by 2020, global spending on cognitive and AI will be more than $46 billion.[1]

To help its global business partners take advantage of this opportunity, IBM has launched the Watson Build, a new challenge designed to support its channel partners as they bring a cognitive solution to market. The deadline to apply is May 15, 2017, and businesses can join or learn more at:

https://www-356.ibm.com/partnerworld/wps/static/watsonbuild/

The Future of Video Analytics

By Ann Marie Woodburn

Last week, IBM announced a new cloud service that will analyze videos. The tool will utilize the IBM Watson platform to analyze the video for keywords, concepts, images, tone, and emotional context. We are looking forward to the release of this new service at the end of the year as it complements our VideoRecon platform. IBM’s entrance in the market validates what we have believed for the past year and what our users have been testing for the past 6 months. Now that the ecosystem is starting to grow, we have extended our platform to focus on solving specific enterprise use cases.

VideoRecon is a platform that uses deep learning to “watch” and “listen” to videos. It returns “auto-tags” based on objects, audio, and descriptions it identifies in the video content. These tags make the video file easier to search, filter, and analyze. Currently, businesses tag each video by hand, which can lead to human error, bias, and higher headcount, depending on the number of videos and how quickly the data is needed.

VideoRecon uses several of Watson’s APIs, such as Speech to Text, Tone Analyzer, Personality Insights, Natural Language Understanding, and Visual Recognition, along with IBM Cloud Object Storage and our own services.

The announcement discusses the impact this will have on how media and entertainment companies manage their content libraries. The same capability is valuable beyond media and entertainment: in education to analyze classroom videos, for marketing or support videos posted online for clients, and even for internal videos used for training or communications. The value of VideoRecon and IBM’s video service is that they enable users to tag videos more accurately, search and filter with ease, and view analytics more quickly.

Stay tuned as we roll out our new VideoRecon services and integrate with IBM’s video service in the next couple of months. If you would like to learn more about VideoRecon please reach out to us or sign up for beta access to our VideoRecon API or VideoRecon upload portal. If you would like to work with our platform in another way, please let us know!

Turning Visual Recognition into Video Recognition

Today we presented at the Watson Developer Conference and shared the Node.js code that you can use in your web app to automatically recognize video using Watson’s Visual Recognition service.

We pass the video in the parameters to ffmpeg (video.mp4 in this example) and then we slice it into frames which then get sent to Watson’s Visual Recognition one by one. Once we’re done we just tally up the results!

NOTE: Make sure you have ffmpeg installed on your system.
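The code we presented was Node.js and is not reproduced in this copy of the post. As a rough illustration of the same flow (slice the video into frames, classify each frame, tally the labels), here is a Python sketch. The function names and the one-frame-per-second rate are our own choices, and `classify_frame` is a hypothetical callable standing in for the call to Watson's Visual Recognition service.

```python
import subprocess
from collections import Counter


def ffmpeg_frame_command(video_path, output_pattern, frames_per_second=1):
    """Build the ffmpeg command that slices a video into still frames."""
    return ["ffmpeg", "-i", video_path,
            "-vf", "fps=%s" % frames_per_second, output_pattern]


def extract_frames(video_path, output_pattern, frames_per_second=1):
    """Run ffmpeg; raises CalledProcessError if ffmpeg exits with an error."""
    subprocess.run(
        ffmpeg_frame_command(video_path, output_pattern, frames_per_second),
        check=True)


def tally_labels(frame_paths, classify_frame):
    """Count in how many frames each label appears.

    classify_frame is a hypothetical callable that takes a frame path and
    returns the labels the recognition service reported for that frame.
    """
    counts = Counter()
    for path in frame_paths:
        counts.update(set(classify_frame(path)))  # once per frame per label
    return counts
```

For example, `extract_frames("video.mp4", "frames/frame_%04d.jpg")` writes one JPEG per second of video into an existing `frames` directory, and `tally_labels(...).most_common(5)` gives the labels seen in the most frames.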

We’d like to thank the organizers of Watson Developer Conference for putting on such an amazing event and all the attendees for coming out and watching the presentation.

Here is a screenshot of it in action:

Here is the Video that’s being processed:

youtube

If you would like to know more feel free to contact us by commenting, email at [email protected] or tweet me @RobertFromBC

U of H CodeRed Hackathon 2016

The last weekend in October we attended The University of Houston’s CodeRed Curiosity Hackathon as a sponsor. You would think that after so many hackathons both as participants and sponsors we would get tired of them but the truth is we keep wanting more! This was our second CodeRed to sponsor and each time has been a completely new and improved experience. The organizers have done a wonderful job to keep this event fresh and entertaining with each year.

Our challenges this year were:

Best use of IBM Watson

Best use of Blockchain

The winner for our first challenge, Best Use of Watson, was Owl Security from Rice University. The students built an application that used the Watson Visual Recognition service to analyze a live video stream from a camera. It searches for security threats such as fire or weapons, and when one is detected it uses Twilio to notify the user and the local authorities about the situation. The user then receives a screenshot of the threat and a description of the actions taken. The resourceful part is that the application is intended to run on old phones that people have lying around, which puts discarded technology, a growing problem today, back to use.

We’d like to make an honorable mention to 2 other projects that caught our eye in this category:

Hallo Doc was a tool that would help people with illness make their own diagnosis by using Watson’s Conversation Service and in their future plans they would use facial recognition to help with the diagnosis.

The other team, Wapi, used the Alchemy API in a video game engine to analyze any given Wikipedia page about a famous historical character and extract related places and people. The game engine would then generate the places on a map and the NPCs based on the results coming from the Alchemy API. We thought this use of Watson was very creative and out of the box, which is why we love these events.

Our second challenge, Best Use of Blockchain, was a little more complex and challenging! Team Ether Bank built the prototype for a “trust system” that would act in the same way credit score does, but it would be based on cryptocurrencies. That way, just as we do with regular money, people could ask for loans and lenders would be able to keep track of the borrower’s record using a blockchain which is virtually impossible to forge entries on.

We can’t wait for the next hackathon and to get our minds blown by the projects students can come up with in just 24 hours. We really enjoyed seeing people excited about learning from this experience, just as we were when we were participants. It really is a beautiful thing to see what comes out of the chaos of bright minds that is a hackathon.

Here is a video highlighting our weekend at CodeRed 2016:

youtube

The HackTX Hackathon Experience

BlueChasm had a great time at this year’s HackTX Hackathon and we would like to thank the organizers, hackers and sponsors for putting together an amazing event. In this hackathon, we had 2 challenges available for hackers to tackle. We also helped with the overall judging of the projects and we were impressed with what students can accomplish in just 24 hours.

“This was my first time on the other side of the table at a hackathon. When I was a student I attended many hackathons as a participant, but in this case it was my turn to be a judge and a sponsor. I was a little worried about not enjoying it as much as I did when I was a participant, but the experience was just as amazing as it was back then. At one point, I even started working on some code myself and went around the sponsor tables to get my share of stickers. I was happy to see people who attended my alma mater, UTRGV, and even saw some familiar faces who I met when they were underclassmen, but are now about to graduate and are eager to go into the industry. This will definitely not be the last hackathon I attend to.” - Robert Rios

We would like to congratulate the winners of our 2 challenges!

Best Use of Weather and Community:

Congratulations to Team Cast for their customer notification system that would send out important information to customers from their banking institution whenever there was a natural disaster that was going to affect them. They used The Weather Company’s API to poll weather information and trigger the notifications for the users and were awarded Personal BloomSky Weather Stations!

Best Use of Blockchain:

Congratulations to Team Footnote Analytics, which combined a Raspberry Pi with the network tool Aircrack-ng to anonymously track devices via Wi-Fi polling and keep a trusted log of where the users were, verified with IBM’s blockchain, in order to securely keep track of the presence of a device. This system could even be used across multiple unrelated buildings, since it used the decentralized technology of blockchain to maintain the log. This one-man team was awarded a HyperX Cloud Stinger gaming headset!

We had a great time at HackTX and look forward to next year! Watch the video below for a sneak peek into our weekend!

youtube

This weekend we will be sponsoring The University of Houston’s CodeRed Hackathon for a second time. Come visit our table to learn more about our company, our exciting challenges, and to get a FREE t-shirt! We look forward to seeing what amazing ideas the students will come up with at this event!

HackTX Challenges

We are looking forward to HackTX this weekend at The University of Texas! If attending, come visit our table to learn more about BlueChasm, attempt our 2 challenges listed below, and get a FREE t-shirt!

The first challenge is on Weather and Community and you can win a Bloomsky Personal Weather Station!

The second challenge is Best Use of Blockchain and you can win a HyperX Cloud Stinger Gaming Headset!

Challenge #1: Weather and Community

Whether enjoying a sunny day at a water park, or being one of millions evacuating their hometowns during Hurricane Matthew, weather affects our lives on a very personal level. With technology keeping us constantly connected with our family and friends or our favorite sports teams or retailers, develop an application which bridges the gap and connects us closer as a community. Projects will be evaluated and graded by our development team.

Application ideas could include (but should not be limited to):

• Development of personal sentiment around weather & social

• Built-in alerts for family and friends

• Tracking of events and activities affected by weather

• Filtering of social media around local / national weather

Download Weather API Documentation HERE!

Hints: • https://console.ng.bluemix.net/catalog/services/weather-company-data/

Extra Credit: Extra credit will be awarded for any submission connected to a creative use case that also integrates data from government, healthcare, retail/consumer, and/or social media sources. https://console.ng.bluemix.net/catalog/services/alchemyapi/

Prize: Bloomsky Personal Weather Station to showcase on Weather Underground

Help: Email [email protected]!

Challenge #2: Best Use of Blockchain

Blockchain is the open, digital framework that allows you to build trust and efficiency in the exchange of anything in virtually any application or use case.

The challenge is to create a project that uses or is based around Blockchain technology.

Projects will be evaluated and graded by our development team.

Hints:

• We recommend the use of the Hyperledger Project (open source) and/or IBM’s Blockchain service, but it’s not required

• https://www.hyperledger.org/

• https://www.ibm.com/blockchain/

Prize: HyperX Cloud Stinger Gaming Headsets

Help: Email [email protected]!

IBM Watson Developer Conference Giveaway

Hello everyone!

The very first Watson Developer Conference is going to be November 9th-10th in San Francisco, CA and we are giving away 30 free passes to the conference! We are also very excited to announce that we will be speaking at the conference. Our Flash Talk session is called “Turning Visual Recognition Into Video Recognition” on Monday at 11am. Come learn how to get more out of IBM Watson’s Visual Recognition API by integrating it with your app to strategically analyze video. We will be live coding and give tips on how to manipulate videos with Python!

We love developing with IBM Watson and if you do too or want to get to know the Watson services better this is an event you do not want to miss. We are giving away 30 passes for this 2-day conference! All you need to do is send an email to [email protected] with the following details for a chance to win:

State your name, company, and job title

Tell us at least 3 sessions you would be interested in attending at the conference. (Here is the agenda)

Write a short paragraph about why you love developing with IBM Watson or why you want to learn more about the Watson services.

If selected you will be contacted with next steps! Good luck!

Rules:

This is a first-come, first-served competition for the first 30 people who meet the requirements. The prize is non-exchangeable, non-transferable, and is not redeemable for cash or other prizes. The prize is open to people aged 18 and over. Only one entry per person. Entries on behalf of another person will not be accepted and joint submissions are not allowed. We will not cover the costs of travel or related expenses. If you work for a Government Owned Entity you are not eligible to win. The closing date of competition is 23:59 CST on November 1, 2016. Entries received outside this time period will not be considered.

Building and Contributing a Zendesk Integration Pack for StackStorm (DevOps/ChatOps)

By Casey

Recently, we've been doing work with some DevOps tools and decided to contribute to a powerful open-source project called StackStorm. StackStorm is (according to their website):

"...a powerful open-source automation platform that wires together all of your apps, services and workflows. It's extendable, flexible, and built with love for DevOps and ChatOps."

StackStorm enables enterprises and service providers to orchestrate event-based automation (IFTTT) between different common tools and services, including integrations with things like Chef, Puppet, GitHub, Docker, VMware, Jenkins, Slack, Twitter, Splunk, Twilio, Cassandra, and many more. And with the recent acquisition of StackStorm (the company) by Brocade, StackStorm now has the capability to tie its automation framework into networking and systems enterprise hardware, including Brocade.

After we realized there really weren’t any StackStorm integrations for any customer service ticketing software platforms, we decided to create one for Zendesk, which our team uses currently.

The first step I took when architecting a solution for integrating Zendesk into StackStorm's integration system was figuring out how I'd communicate with Zendesk's API. Since StackStorm is written in Python, I searched online for Zendesk Python API wrappers. It turns out that Zendesk has a great list of these wrappers on their developer site. After looking at examples of the top two libraries, I decided to go with Zenpy. Zenpy is a great library that is very Pythonic and implements every essential feature of the Zendesk API. You can see a few examples of how to use Zenpy at the project's GitHub page.

Once I had decided to use Zenpy, I tested it out by making a few manual calls to a test Zendesk environment I had set up. This helped me get a feel for how it handles creating tickets and manipulating API objects. Zenpy has a very straightforward API that makes these kinds of calls very easy. The next step was to begin actually creating my integration pack. I'm going to boil it down to the main points of how I set up my dev environment for creating an integration pack:

Create a fork on GitHub of the st2contrib repo (StackStorm/st2contrib)

Create a branch to work on in my forked repo

Create the base pack structure (guide here)

Start up a VM with StackStorm pre-installed by using Vagrant (guide here)

Once the Vagrant VM is up and running, clone the forked repo into the vagrant folder so that you can work locally and test on the VM

Then set up a Hubot instance so that you can properly test ChatOps aliases (guide here)

Once all those steps were out of the way, it became easy to test out my integration as I was working. The main features of Zendesk that I was aiming to support were: creating tickets, updating tickets, changing ticket statuses, and searching tickets. In addition, I wanted all of those features to be usable through StackStorm's ChatOps feature. Creating the aliases for StackStorm's actions can be a little confusing, but there are great resources available in their online documentation for action aliases, and in the other packs available in their st2contrib repo.
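To give a feel for the kind of Zenpy calls involved, here is a minimal sketch of creating and searching tickets. It is not the code from the pack: the helper names are made up for this example, and it assumes token-based credentials. Zenpy is imported inside the functions so the snippet loads even where the library is not installed.

```python
def zendesk_credentials(subdomain, email, token):
    """Collect the keyword arguments passed to the Zenpy client."""
    return {"subdomain": subdomain, "email": email, "token": token}


def create_ticket(credentials, subject, description):
    """Create a Zendesk ticket and return whatever Zenpy hands back."""
    # Lazy import: only needed when a real call is made.
    from zenpy import Zenpy
    from zenpy.lib.api_objects import Ticket

    client = Zenpy(**credentials)
    return client.tickets.create(
        Ticket(subject=subject, description=description))


def search_tickets(credentials, query):
    """Search tickets matching a free-text query."""
    from zenpy import Zenpy

    client = Zenpy(**credentials)
    return client.search(query, type="ticket")
```

A StackStorm action would wrap calls like these and read the credentials from the pack's configuration rather than taking them as arguments.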

If you'd like to see more on the Zendesk StackStorm integration pack, you can view the pack here.

IBM Watson Visual Recognition V3 Python Tutorial

By Robert

Hello everyone, sorry about the recent radio silence. Here at BlueChasm, we’ve been working hard on a lot of projects and we’re excited about the next couple of months where we’ll be attending many events. More on that later.

Our previous tutorial used the V2 Beta of Visual Recognition, but IBM has since moved to V3, which includes amazing services like face and text detection.

Today, I’m going to write a very simple how-to tutorial on Watson Visual Recognition V3. Let’s get started!

Prerequisites:

First, we need to install the Watson Developer Cloud SDK using pip.

sudo pip install --upgrade watson-developer-cloud

This Python library contains the necessary tools to interact with the Watson services.

You will also need a Visual Recognition API Key. If you don’t have a key, check out this guide to get yours.

Making the calls:

There are three main API calls to identify Images: Classify, Detect Faces and Recognize Text. Here is a simple way to use them.
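A minimal sketch of the three calls, assuming the `watson-developer-cloud` SDK of this period, whose `VisualRecognitionV3` client exposes `classify`, `detect_faces` and `recognize_text` methods that accept an `images_url` keyword. The helper name `analyze_url`, the version date and the placeholder key are ours for illustration only.

```python
def analyze_url(client, image_url):
    """Run the three Visual Recognition calls against one image URL.

    `client` is assumed to be a VisualRecognitionV3 instance, created
    with something like:

        from watson_developer_cloud import VisualRecognitionV3
        client = VisualRecognitionV3('2016-05-20', api_key='YOUR_API_KEY')
    """
    return {
        # General tags describing what is in the image
        'classify': client.classify(images_url=image_url),
        # Faces found in the image
        'faces': client.detect_faces(images_url=image_url),
        # Text found in the image
        'text': client.recognize_text(images_url=image_url),
    }
```

Each value in the returned dictionary is whatever the SDK call returned, so you can pass it to `json.dumps(result, indent=2)` to inspect it.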

NOTE: Watson can also take an image file or a .zip file of images for any of the three APIs. Just do the following:
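A hedged sketch of the file-based variant, assuming the same SDK methods also accept an `images_file` keyword holding a file opened in binary mode. The helper name `analyze_file` is hypothetical, and `client` is again assumed to be a `VisualRecognitionV3` instance.

```python
def analyze_file(client, path):
    """Send a local image (or a .zip of images) to all three calls."""
    results = {}
    for name in ('classify', 'detect_faces', 'recognize_text'):
        # Reopen the file for each call so every upload starts at byte 0.
        with open(path, 'rb') as image_file:
            results[name] = getattr(client, name)(images_file=image_file)
    return results
```

Calling `analyze_file(client, 'photos.zip')` would return one result per call, keyed by the method name.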

Flask Example:

Here is a flask example that sends an uploaded file to Watson and then prints out the resulting JSON from the call. From here, you can use the data for anything you want.

The file structure is as follows:

HTML File:

Server File:

Thank you for reading, and be sure to check us out on Twitter @BluechasmCo and @RobertFromBC for more updates!


Building a Cognitive Drone for the Enterprise with IBM Watson Visual Recognition and BlueChasm

By: Alex

For a recent Developer Day we put together a demo using a custom-made drone running off of a Raspberry Pi with the Pi camera module. Using the drone, we took a snapshot every several seconds, uploaded the images to IBM Cloud Object Storage, tagged them using IBM Watson Visual Recognition, and stored the tags in a Cloudant database.

I left the drone camera pointed at me while I worked. It remotely uploaded a few dozen images with tags.

"classes": [ { "class": "person", "score": 0.999994, "type_hierarchy": "/people" } ]

All of the images the drone takes are uploaded remotely and tagged in real time. We have a lot of opportunities with this method to then act on what the drone is seeing during the flight. And since the tagging is done using Watson and autonomous flight control software is improving, we have reduced the need for a human operator.
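As one illustration of acting on the tags, here is a minimal Python sketch that keeps only the classes Watson is confident about. The function name `confident_tags` and the 0.9 threshold are hypothetical choices, and the input is assumed to be a `classes` list shaped like the sample above.

```python
def confident_tags(classes, min_score=0.9):
    """Return the class names whose score is at least min_score."""
    return [c['class'] for c in classes if c.get('score', 0.0) >= min_score]


# The sample tag shown above
sample = [{"class": "person", "score": 0.999994, "type_hierarchy": "/people"}]

if 'person' in confident_tags(sample):
    # A real system might alert an operator here instead of printing.
    print('Person detected')
```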

Here is a short example of how we are remotely storing and tagging the images. Note that I just assigned each image a random number for a file name. You can use any other method that works well for your use case.

    // `os` (the object storage client) and `visual_recognition` (the Watson
    // client) are assumed to be configured elsewhere in the application.

    // Upload one image to object storage, then tag it.
    function storePic(data, cloudant) {
      console.log('File loaded');
      os.createContainer()
        .then(function () {
          return os.setContainerPublicReadable();
        })
        .then(function () {
          // Random number as the file name
          return os.uploadFileToContainer(Math.random() + '.jpg', 'image/jpeg', data, data.length);
        })
        .then(function (file) {
          console.log('url to uploaded file:', file);
          tagPic(file, cloudant);
          return os.listContainerFiles();
        })
        .then(function (files) {
          console.log('list of files in container:', files);
        })
        .catch(function (err) {
          // Log any failure from the steps above
          console.log(err);
        });
    }

    // Classify the uploaded image and store the result in Cloudant,
    // using the image URL as the document id.
    function tagPic(url, cloudant) {
      console.log('tag called');
      var params = { url: url };
      visual_recognition.classify(params, function (err, res) {
        if (err) {
          console.log(err);
        } else {
          console.log(JSON.stringify(res, null, 2));
          var tagging_demo = cloudant.db.use('tagging_demo');
          tagging_demo.insert(res, url, function (err, body, header) {
            if (err) {
              return console.log('[tagging_demo.insert] ', err.message);
            }
            console.log('You have inserted the doc.');
            console.log(body);
          });
        }
      });
    }

We see a lot of uses for this technology in businesses that traditionally use human monitoring. Some examples include inspecting dangerous industrial equipment and managing inventory in large warehouses or shipyards. Due to the instant response generated by the visual classification there are also some potential uses in emergency response.


Creating Secure Passwords for Multiple Websites

By Robert

It is very important to have different passwords for your different internet accounts. Remember that a chain is only as strong as its weakest link, so you need to make sure that your accounts are not part of the same chain by using different passwords.

If you do have the same password for your accounts and one of them gets compromised, the malicious user will have access to all of your other accounts.

Just this year, there have been massive database breaches and you might have been a victim of these hacks.

It’s true that passwords are hard to remember, especially if you have multiple accounts. Hopefully, this method will help you remember them.

Start with a phrase, quote or song lyric that you know you won’t forget.

In this example I will use the song “Fire Coming Out of the Monkey's Head” by Gorillaz.

Start by turning the song title into an acronym:

fcootmh

Replace some letters with symbols or numbers:

fc0()tmh

Capitalize random letters in the password:

Fc0()tMh

Our password is now 8 characters long and it contains symbols, upper and lowercase letters and numbers. That alone ranks 86% in the Password Strength Checker which labels it as “Very Strong”.

Now we will tailor it for each account we have:

It is easier than it sounds! All you need to do is add the initials of the website that the password is for.

G-Mail:

Fc0()tMhGM

Facebook:

Fc0()tMhFB

Spotify:

Fc0()tMhS

This makes the password different for every account, and each extra character multiplies the number of combinations an attacker would have to try. All you need to do is remember the original password and add the site initials at the end, so it is usable for all of your accounts.
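The steps above can be sketched in Python. This is only an illustration: the function names are ours, the symbol substitution and capitalization steps were done by hand in the example and are not automated here, and the 94-symbol alphabet (printable ASCII letters, digits and punctuation) is an assumption used to estimate how many passwords of a given length exist.

```python
import string

# Letters, digits and punctuation: 52 + 10 + 32 = 94 symbols (assumed alphabet)
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def acronym(phrase):
    """Build the starting acronym: first letter of each word, lowercased."""
    return ''.join(word[0].lower() for word in phrase.split())


def tailor(base_password, site_initials):
    """Append the site's initials to the memorized base password."""
    return base_password + site_initials


def search_space(length, alphabet_size=len(ALPHABET)):
    """Number of possible passwords of this length over the alphabet."""
    return alphabet_size ** length


base = acronym("Fire Coming Out of the Monkey's Head")
print(base)
print(tailor("Fc0()tMh", "GM"))
# Two extra characters multiply the search space by 94 * 94
print(search_space(10) // search_space(8))
```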

If this is still too complex for you, try using a password manager to manage all your accounts safely.

BlueChasm is participating in the National Cyber Security Awareness Month organized by the National Cyber Security Alliance. Be sure to visit their website for more tips about securing your digital information.


It’s REAL: Personalized Advertising From “Minority Report” Comes to Life with IBM Watson, Bluemix, and Cleversafe

by Habeeb

There is a scene in the movie “Minority Report” where Tom Cruise is running (big surprise) through a mall (Click here to see the clip). As he runs, cameras capture his face and show him personalized advertisements. Personalized advertisements would be a dream for advertisers, especially because “many consumers find personalized ads to be more engaging (54%), educational (52%), time-saving (49%) and memorable (45%).” IBM CEO Ginni Rometty said, “I don’t think anybody’s just B2B or B2C anymore. You are B2I: business to individual.”

Now, thanks to IBM Watson's Visual Recognition and Text to Speech APIs, we were able to make this dream a reality through our Visual Communications Platform (VisComm).


The platform focuses on delivering visual messaging to people based on camera input. The service sends the captured photos to Watson and retrieves an estimated age and gender for the faces in the image. It then pulls visual content tailored to that individual from the IBM Cleversafe Object Storage platform, which we are using as a content repository for our advertisements.
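A minimal sketch of the selection step described above, under stated assumptions: the face result is taken to carry an age range and a gender label, and every name here (`pick_content`, the field names, the age brackets and the object keys) is hypothetical rather than VisComm's actual code.

```python
def pick_content(face, catalog, default_key='ads/general.jpg'):
    """Choose which stored object to display for one detected face.

    face:    {'age_min': int, 'age_max': int, 'gender': str}  (assumed shape)
    catalog: maps (gender, age bracket) to an object storage key
    """
    midpoint = (face['age_min'] + face['age_max']) / 2.0
    bracket = 'under_35' if midpoint < 35 else '35_plus'
    # Fall back to a general message when nothing matches this audience
    return catalog.get((face['gender'], bracket), default_key)


# Hypothetical catalog of advertisements kept in object storage
catalog = {
    ('FEMALE', 'under_35'): 'ads/a.jpg',
    ('MALE', '35_plus'): 'ads/b.jpg',
}
```

The returned key would then be used to fetch the matching content from the repository and show it on the display.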

However, VisComm is more than just an advertisement platform. In fact, advertising is just the tip of the iceberg. Because our service leverages IBM Watson, it can actually be trained to recognize people, which makes it even more versatile (and even closer to the original concept introduced in “Minority Report”).

For instance, if a retail store had a loyalty program, it could (assuming it already had pictures of its members) train the service to recognize each person. Then, in that same scenario, the service could show members messages based on their past buying history and known preferences.

Human greeters could also become a thing of the past. For example, someone could make a reservation at a restaurant, be greeted by name on arrival, and be shown a map to their table. At the same time, the system would notify staff so they would be ready to help guests as they arrive.

Visual Communications represents the future of message delivery. Just imagine what it could do!


ATX Hack for Change

By Robert

This June, BlueChasm went to the ATX Hack for Change hackathon as a sponsor and Project Champion.

As a sponsor, we got to support this great cause, which is a part of the National Day of Civic Hacking. This event is about getting great minds together and building something for the civic good. Projects range from mobile apps to websites and even hardware hacks that are meant to help the public.

This hackathon is different from others because there isn’t a “Grand Prize” awarded to the best idea at the end of the event. It is about building something to better our city and the citizens of Austin. Project Champions come with an idea and present it to the crowd of talented programmers and designers at the beginning of the event. Shortly after, teams form, and people join whichever project inspires them most and lend their skills to bring it to life.

At ATX Hack for Change, BlueChasm was selected as a Project Champion. We came up with the idea of a smart sprinkler system that would not only check the weather conditions but also take into account the city’s watering restrictions, which are dictated by the Water Conservation Department.

With this project we hoped to help both the city and its citizens save money and conserve water. Commercial properties, retail properties, multifamily housing, farms, greenhouses and single-family residences, all of which need to irrigate often, would see a big difference in their water consumption, which would help them save money and protect the environment.

We teamed up with an amazing group of engineering students and IT professionals. The team had fun building the software and developing on the hardware.

Most of the Project Champions came with projects that they wanted to develop into full-blown services or products. Our project was more of a way to spread the idea of smart watering devices and to help cities with their water conservation, mainly by developing an API that would make it easier for developers to build water-smart devices.

In the end, the entire team had fun and some even decided to continue working on hardware-related hacks. We had an amazing weekend at St. Edward's University, and it was inspiring to see so many developers and designers work for a weekend in the spirit of civic good. We are definitely planning to attend next year’s ATX Hack for Change event!


Dev Day Houston 2016

Karbach Brewery: 2032 Karbach St, Houston, TX 77092

Click Here to Register!

Come to Dev Day Houston 2016 at the Karbach Brewery on July 14th! It is a meetup and expo for ALL Houston tech professionals who build amazing software for enterprises, organizations, service providers, research institutions, and everything in between.

Why Come to Dev Day Houston 2016?

Network with our extraordinary local developer ecosystem

See some innovative hacks, platforms, and digital apps AND hear how they were built! Click here for a sneak peek!

Learn about some platforms/frameworks in our mini-expo that are playing huge roles in enterprise tech stacks today

Come meet and play with IBM Watson!

Awesome complimentary beer and refreshments from our friends at the Karbach Brewery

Learn more from our great sponsors and featured technology: IBM, Mark III Systems, Avnet, BlueChasm, IBM Bluemix, IBM Watson, OpenStack, Docker, IBM Cleversafe, Ubuntu, StackStorm/Brocade, and OpenPOWER

Enter our drawing to win an Apple Watch or Apple gift card ($25-50)!
