Breaking Down the Benefits of Full Stack Development and Cloud Computing

Full Stack Development and Cloud Computing are two of the most exciting and rapidly evolving areas in the tech industry today. Understanding the benefits of these technologies is essential for anyone looking to stay ahead in the ever-changing world of tech.

In this article, we'll dive into the world of both these domains, exploring the benefits and considerations of these technologies, and discussing why they are becoming increasingly important in today's tech landscape. Whether you're a tech professional or simply a tech enthusiast, you're sure to learn something new and thought-provoking as we dive into this exciting topic!

What is Full Stack Development?

Full Stack Development refers to the practice of developing both the front-end and back-end components of an application. Full Stack Developers possess a broad range of technical skills and knowledge, allowing them to develop, maintain and manage all aspects of an application.

To be a Full Stack Developer, you need to have a strong understanding of front-end technologies like HTML, CSS, and JavaScript, as well as back-end technologies such as servers, databases, and APIs. These technologies work together to create a seamless user experience, from the way a website looks and feels to the way it processes and stores data.

The front-end, or client-side, of an application is responsible for the visual design and user interaction. The back-end, or server-side, is responsible for processing data and communicating with the front-end. Full Stack Developers must have a good understanding of both sides of the application to ensure that they are working together smoothly.
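To make the split between the two sides concrete, here is a minimal sketch. It assumes a hypothetical in-memory product store and two made-up functions, `backend_get_product` and `frontend_render`; a real application would put a web server and a browser between them, but the division of work is the same.

```python
import json

# Hypothetical in-memory "database" standing in for a real data store.
PRODUCTS = {1: {"name": "Notebook", "price": 4.5}}


def backend_get_product(product_id: int) -> str:
    """Back-end: look up the data and return it as a JSON string."""
    product = PRODUCTS.get(product_id)
    if product is None:
        return json.dumps({"error": "not found"})
    return json.dumps(product)


def frontend_render(response_body: str) -> str:
    """Front-end: turn the back-end's JSON into markup a user would see."""
    data = json.loads(response_body)
    if "error" in data:
        return "<p>Product not found</p>"
    return f"<h1>{data['name']}</h1><p>${data['price']:.2f}</p>"


if __name__ == "__main__":
    print(frontend_render(backend_get_product(1)))
```

The JSON string is the contract between the two halves: either side can change its internals as long as that shape stays the same.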

What is Cloud Computing?

Cloud Computing refers to the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet (“the cloud”) to offer faster innovation, flexible resources, and economies of scale.

There are three main categories of cloud computing services:

Infrastructure as a Service (IaaS): provides virtualized computing resources over the internet. This includes virtual machines, storage, and networking.

Platform as a Service (PaaS): provides a platform for developing, running, and managing applications and services.

Software as a Service (SaaS): provides access to software applications over the internet, without the need for installation or maintenance.

One of the biggest advantages of cloud computing is its scalability. You can easily scale your resources up or down depending on your needs, without having to invest in additional hardware. This means that you can quickly respond to changes in demand, without having to worry about the underlying infrastructure.
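As a rough illustration of scaling up or down with demand, the sketch below computes how many server instances a given load would need. The function name, the per-instance capacity, and the minimum and maximum limits are all assumptions made for this example; real cloud providers expose their own autoscaling settings.

```python
import math


def desired_instances(requests_per_second: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Return the instance count needed for the load, kept within limits."""
    # Round up so that a partial instance's worth of load still gets a server.
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # Never go below the floor or above the ceiling.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    for load in (0, 250, 5000):
        print(load, "req/s ->", desired_instances(load, 100), "instances")
```

The upper limit matters as much as the lower one: it caps spending if traffic spikes unexpectedly.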

Benefits of Full Stack Development and Cloud Computing

These two fields are among the most important trends in today's tech landscape. When combined, they bring a range of benefits that can enhance the development process, improve user experience, and reduce costs. Let's look at some of the key benefits of cloud computing and full stack development.

A. Improved Collaboration and Communication

One of the biggest benefits of Full Stack Development is improved collaboration and communication. When developers understand both the front-end and the back-end, they can work together more smoothly across teams. This also leads to better communication between developers and clients, as everyone is on the same page about the development process.

B. Increased Flexibility and Scalability

Full Stack Development allows developers to be flexible in their development processes, allowing them to quickly pivot and make changes as needed. This is especially important in today's fast-paced tech landscape where projects can change quickly.

Cloud Computing also provides scalability, as applications can be easily scaled up or down based on the needs of the project. This means that as the demand for an application grows, it can be easily scaled to meet that demand, without having to worry about the costs associated with purchasing new hardware.

C. Reduced Costs

With cloud computing, organizations can reduce the costs of hardware and infrastructure, as well as the costs associated with maintenance and updates. This is because it allows organizations to pay for only what they use, rather than having to purchase and maintain their own hardware.
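The pay-for-what-you-use idea can be checked with simple arithmetic. The sketch below is only an illustration: the function names are made up, and the prices in the usage example are placeholders, not real vendor rates.

```python
def pay_as_you_go_cost(hours_used: float, hourly_rate: float) -> float:
    """Cloud-style cost: you are billed only for the hours you use."""
    return hours_used * hourly_rate


def owned_hardware_cost(purchase_price: float,
                        monthly_upkeep: float,
                        months: int) -> float:
    """Owned hardware: an upfront purchase plus ongoing maintenance."""
    return purchase_price + monthly_upkeep * months


def break_even_hours(purchase_price: float,
                     monthly_upkeep: float,
                     months: int,
                     hourly_rate: float) -> float:
    """Hours of cloud usage at which both options cost the same."""
    return owned_hardware_cost(purchase_price, monthly_upkeep, months) / hourly_rate


if __name__ == "__main__":
    # Placeholder figures chosen only to show the calculation.
    print(pay_as_you_go_cost(200, 0.5))
    print(owned_hardware_cost(1200.0, 50.0, 12))
    print(break_even_hours(1200.0, 50.0, 12, 0.5))
```

Below the break-even point, paying per hour is cheaper; a workload that runs around the clock can pass that point, which is why usage patterns should be estimated before choosing.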

D. Improved Security

Security is a major concern for organizations in today's tech landscape, and full stack development and cloud computing can help improve security measures. Cloud computing provides enhanced security measures, such as encryption and data backup, to protect sensitive information. Further, full stack development can improve data protection, as developers can build security measures at every stage of the development process.

E. Better User Experience

Both these tech fields can also lead to a better user experience. With full stack development, developers can create applications with enhanced functionality, which can greatly improve the user experience. Also, cloud computing can provide a better user experience by allowing applications to run faster and more smoothly, without the need for expensive hardware.

Challenges and Considerations

While both cloud computing and full stack development offer a plethora of advantages, there are some challenges and considerations to keep in mind when implementing these technologies. Firstly, full stack development can be time-consuming and require a wide range of technical skills. This can make it difficult for developers to keep up with the latest advancements and technologies. Additionally, integrating cloud computing into a full stack development process can also be a challenge, as the technology is constantly evolving and requires a significant investment in terms of time and resources.

Another consideration is choosing the right technology stack and cloud computing services for your organization. It is essential to assess your organization's needs, budget, and goals before making a decision. It's also important to ensure that the technology stack and cloud computing services you choose are compatible and can work seamlessly together.

Summary

We have seen the benefits that Full Stack Development and Cloud Computing offer, including improved collaboration and communication, increased flexibility and scalability, reduced costs, improved security, and a better user experience. However, it's important to weigh these benefits against the challenges before implementing these technologies.

If you're looking to take your skills to the next level and become a Full Stack Developer, Skillslash's Full Stack Developer program is the perfect opportunity. The program provides comprehensive training in Full Stack Development, with a focus on practical hands-on learning. With a curriculum designed by industry experts and flexible online learning options, you'll acquire the skills you need to succeed in the tech industry. The program also provides you with a certificate of completion, making it easier for you to showcase your skills to potential employers.

Skillslash also offers exclusive courses, such as the System Design Course and Best DSA Course, to ensure aspirants in each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in IT and tech with Skillslash, contact the student support team to learn more about the courses and the institute.

Education Using Data Science

Data science has the potential to revolutionize the way we approach education. With the increasing availability of data and the advances in technology, educators have an unprecedented opportunity to use data to improve student outcomes and support student success.

In this article, we will explore the ways in which data science can be used in education to enhance teaching and learning, and ultimately to create a more personalized and effective educational experience for students.

The Power of Predictive Analytics

One of the most exciting applications of data science in education is predictive analytics. Predictive analytics is a statistical technique that uses data, machine learning algorithms, and predictive models to analyze and identify patterns and relationships in data. By using this technology, educators can predict student performance and identify those students who are most at risk of falling behind. This can help schools to intervene early and provide targeted support to help students succeed.

Example - Schools could use predictive analytics to analyze student data such as attendance, grades, and behavior to identify students who are at risk of dropping out. They could then use this information to provide early interventions such as tutoring, mentoring, or counseling to help these students stay on track. This can help to improve student outcomes and increase the overall success rate of students.
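A minimal sketch of this kind of prediction is shown below. It assumes a tiny, entirely made-up data set with two features (attendance rate and average grade, both on a 0 to 1 scale) and uses plain logistic regression trained by gradient descent. The data, feature choice, and function names are illustrative assumptions, not a description of any real school system.

```python
import math

# Made-up training rows: (attendance rate, average grade), label 1 = dropped out.
TRAINING = [
    ((0.95, 0.90), 0),
    ((0.90, 0.75), 0),
    ((0.85, 0.80), 0),
    ((0.60, 0.50), 1),
    ((0.50, 0.40), 1),
    ((0.40, 0.55), 1),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features) -> float:
    """Estimated probability that a student with these features drops out."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(rows, learning_rate=0.5, epochs=2000):
    """Fit logistic-regression weights with batch gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for features, label in rows:
            error = predict(weights, bias, features) - label
            grad_w[0] += error * features[0]
            grad_w[1] += error * features[1]
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(TRAINING)
    for student in [(0.3, 0.3), (1.0, 1.0)]:
        print(student, round(predict(weights, bias, student), 3))
```

In practice the output would be used to prioritize outreach such as tutoring or counseling, and a model trained on six rows is far too small to trust; the point is only to show the shape of the workflow.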

Enhancing Personalization

Another way that data science can be used in education is to enhance personalization. Personalized learning is a teaching approach that provides students with customized instruction based on their individual needs and strengths. By using data, educators can understand each student's unique learning style, strengths, and weaknesses and create a personalized curriculum that best fits each student's needs.

Example - A teacher could use data to analyze student progress and identify those students who are struggling in a particular subject. The teacher could then use this information to tailor lessons and activities that are better suited to these students' learning styles and abilities. This can help to improve student engagement, motivation, and overall performance.
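The first step in that example, finding who is struggling in which subject, can be sketched in a few lines. The student names, scores, and the threshold of 65 below are invented for illustration.

```python
from collections import defaultdict
from statistics import mean

# Made-up records: (student, subject, score out of 100).
SCORES = [
    ("Asha", "math", 48), ("Asha", "math", 55), ("Asha", "reading", 82),
    ("Ben", "math", 90), ("Ben", "reading", 58), ("Ben", "reading", 61),
]


def struggling(scores, threshold=65):
    """Return (student, subject) pairs whose average score is below threshold."""
    by_key = defaultdict(list)
    for student, subject, score in scores:
        by_key[(student, subject)].append(score)
    return sorted(key for key, values in by_key.items() if mean(values) < threshold)


if __name__ == "__main__":
    for student, subject in struggling(SCORES):
        print(f"{student} may need extra support in {subject}")
```

Grouping by student and subject, rather than by student alone, is what lets the teacher tailor lessons to a specific weakness instead of labeling a student as struggling overall.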

Improving Teacher Effectiveness

Data science can also be used to improve teacher effectiveness. By using data, educators can assess their teaching strategies and determine what is working and what is not. This can help teachers to make informed decisions about how to best support student learning and continuously improve their teaching practices.

Example - Teachers could use data to analyze student engagement in the classroom. This would further help them to identify which teaching strategies are most effective in engaging students and which strategies may need to be modified or revised. This can help to improve student engagement and motivation and ultimately to improve student outcomes.

The Importance of Ethics in Data Science in Education

While the use of data science in education can be incredibly powerful, it is also important to consider the ethical implications of this technology. With access to sensitive student information, it is essential that data is used responsibly and that privacy is protected.

Furthermore, data should be used to support and enhance student learning, not to penalize or judge students. It is important that data science is used in a way that promotes equity and fairness, and that all students are given the opportunity to succeed.

Summing it Up

Data science has the potential to revolutionize the way we approach education. By using data to improve student outcomes and support student success, we can create a more personalized and effective educational experience for students.

As we continue to explore the possibilities of data science in education, it is essential that we consider the ethical implications of this technology and use it in a responsible and equitable manner. By doing so, we can help to ensure that all students have the opportunity to succeed and reach their full potential.

Skillslash - Your Go-to Solution for a Thriving Career in Data Science

As we go deep into the topic of data science in the context of education, it is important to highlight the importance of upskilling and staying ahead of the curve. With the ever-evolving advancements in technology, it is crucial for educators and professionals to continuously improve their knowledge and skills in this field. The Advanced Data Science and AI program by Skillslash is a perfect solution for those looking to deepen their expertise in this area.

The program is designed to provide comprehensive and hands-on training in the latest data science techniques and tools. It covers topics such as predictive analytics, machine learning, and deep learning, and is taught by experienced industry professionals. The curriculum is designed to meet the demands of the fast-growing field of data science and is regularly updated to reflect the latest trends and best practices.

The benefits of this program are numerous, from gaining a competitive edge in the job market to being able to use data science to drive decision-making and improve outcomes in education. The program is flexible, allowing participants to study at their own pace and on their own schedule, making it ideal for busy professionals. Additionally, students undertake an internship with top AI startups to gain real-world experience by working on industry-specific projects. Finally, the Skillslash team provides each student with unlimited job referrals to help them get placed in a top MNC. Skillslash also offers exclusive courses, such as the System Design Course and Data Structures Course, to ensure aspirants in each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in IT and tech with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training#software engineering#students

How Data Science is Revolutionizing Marketing and Advertising

Data science is one of the most significant technological advancements of our time.

It's revolutionizing the way businesses approach marketing and advertising. Gone are the days of guessing and assumptions when it comes to reaching and engaging with customers.

Data science has provided us with the ability to understand customer behavior and preferences like never before. With the help of data-driven insights, businesses are now able to craft personalized marketing strategies, improve decision-making and drive better results.

This article will explore the role of data science in marketing and advertising, the benefits it provides, and some of the most impactful case studies of its application. Whether you're a marketer, advertiser, or just curious about the future of marketing, you won't want to miss this article.

Get ready to discover how data science is revolutionizing marketing and advertising and why it matters to you.

The Role of Data Science in Marketing and Advertising

Data science plays a crucial role in the world of marketing and advertising. It's the process of collecting data from various sources, analyzing it, and using the insights gained to make informed decisions. In marketing and advertising, data science helps businesses to understand their customers on a deeper level. With the help of data science, companies can collect data from multiple sources such as social media, email marketing, customer feedback, and more. This data is then analyzed to uncover customer behavior, preferences, and pain points.

Data science also enables marketers to craft personalized marketing strategies. With a better understanding of customers, businesses can create targeted campaigns that resonate with their audience and drive better results. This level of personalization is not only more effective, but it also provides a better customer experience, increasing brand loyalty and trust.

The impact of data science in marketing and advertising extends far beyond just personalized marketing strategies. By analyzing customer data, businesses can make informed decisions about how to allocate their advertising budget. This leads to more efficient use of resources and higher returns on investment.

The Benefits of Data Science in Marketing and Advertising

Data science is a game-changer in the marketing and advertising industry. It provides numerous benefits that help businesses to achieve their goals and drive better results. Some of the most significant benefits include:

Increased efficiency and effectiveness of marketing campaigns

Data science helps businesses to create more effective marketing campaigns by providing insights into the behavior and preferences of target audiences. With this information, marketers can craft campaigns that resonate with customers and drive better results.

A better understanding of target audiences

With data science, businesses can gain a deep understanding of their target audiences. This includes their buying habits, preferences, and behaviors. This information is crucial for creating effective marketing strategies and building relationships with customers.

Optimization of ad spend

Data science provides insights into the effectiveness of advertising campaigns, which helps businesses to optimize their ad spending. Marketers can use this information to focus their ad spend on the channels that deliver the best results and cut back on those that are underperforming.
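One simple way to compare channels is return on ad spend (revenue divided by spend). The sketch below uses made-up channel names and figures, and a break-even threshold of 1.0 as an assumption; real campaigns would also account for margins and attribution.

```python
# Made-up campaign totals per channel.
CHANNELS = {
    "search": {"spend": 1000.0, "revenue": 4000.0},
    "social": {"spend": 1500.0, "revenue": 3000.0},
    "display": {"spend": 500.0, "revenue": 400.0},
}


def roas(stats) -> float:
    """Return on ad spend: revenue earned per unit of money spent."""
    return stats["revenue"] / stats["spend"]


def classify_channels(channels, break_even=1.0):
    """Split channels into those worth keeping and those to cut back."""
    keep = sorted(
        (name for name, stats in channels.items() if roas(stats) >= break_even),
        key=lambda name: roas(channels[name]),
        reverse=True,
    )
    cut = sorted(name for name, stats in channels.items() if roas(stats) < break_even)
    return keep, cut


if __name__ == "__main__":
    keep, cut = classify_channels(CHANNELS)
    print("Focus on:", keep)
    print("Cut back:", cut)
```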

Improved customer engagement

Data science helps businesses to create personalized marketing campaigns that engage customers and build relationships. By understanding their target audiences, businesses can create campaigns that resonate with customers and drive better engagement.

Better measurement and analysis of campaign results

Data science provides businesses with the tools to measure and analyze the results of their marketing campaigns. This information is crucial for understanding what's working and what's not, and for making data-driven decisions about future campaigns.
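A common way to judge whether one campaign variant really outperformed another is a two-proportion z-test on conversion rates. The sketch below is a minimal version using only the standard library; the function name and the visitor counts in the usage example are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of variants A and B.

    Returns the z statistic and the two-sided p-value.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_error
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 100 of 2000 visitors converted on A, 150 of 2000 on B.
    z, p_value = two_proportion_z_test(100, 2000, 150, 2000)
    print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone; it does not say how large or how valuable the difference is, so it should be read alongside the actual conversion rates.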

These benefits are just the tip of the iceberg when it comes to the impact of data science in marketing and advertising. By using data-driven insights, businesses can create more effective campaigns, reach and engage with customers in a meaningful way, and drive better results.

Case Studies of Data Science in Marketing and Advertising

The impact of data science in marketing and advertising is best demonstrated through real-life examples. In this section, we'll look at three companies that have leveraged data science to drive success in their marketing and advertising efforts: Netflix, Amazon, and Google.

Netflix - The streaming giant is a master of personalized marketing. They use data science to analyze customer viewing habits, preferences, and ratings. With this information, they can suggest personalized recommendations for each subscriber, resulting in a highly personalized viewing experience. This has been a major driver of their success, as customers are more likely to stick around and subscribe when they feel like the content they are watching is tailored specifically to them.

Amazon - Amazon's vast amount of customer data provides them with valuable insights into customer behavior and preferences. They use this data to optimize their advertising spending and ensure that their ads reach the right customers at the right time. They are also able to measure the effectiveness of their advertising efforts, making data-driven decisions about where to allocate their advertising budget for maximum impact.

Google - Google has been using data science for years to drive its advertising efforts. Its advertising platform, Google Ads (formerly Google AdWords), uses data science to determine the relevance of an advertisement to a particular search query. This allows advertisers to reach the right customers at the right time, making their advertising efforts more efficient and effective. Google's data-driven approach to advertising has been so successful that it has become the dominant player in the online advertising market.

Companies can craft more effective marketing strategies, reach the right customers, and drive better results by leveraging data-driven insights.
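To give a feel for how viewing data can turn into personalized suggestions, here is a small sketch of user-based collaborative filtering with cosine similarity. This is a textbook technique chosen for illustration, not a description of how Netflix or any other company actually builds recommendations, and the viewers, titles, and ratings are invented.

```python
import math

# Made-up ratings on a 1-5 scale.
RATINGS = {
    "ana": {"Drama A": 5, "Drama B": 4, "Comedy C": 1},
    "raj": {"Drama A": 4, "Drama B": 5},
    "li": {"Comedy C": 5, "Comedy D": 4},
    "target": {"Drama A": 5},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[title] * v[title] for title in shared)
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user: str, ratings: dict, top_n: int = 1) -> list:
    """Rank unseen titles by similarity-weighted ratings from other users."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine(ratings[user], other_ratings)
        for title, rating in other_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + similarity * rating
    ranked = sorted(scores, key=lambda title: scores[title], reverse=True)
    return ranked[:top_n]


if __name__ == "__main__":
    print(recommend("target", RATINGS))
```

Viewers who rated the same titles similarly count for more, so the target viewer, who liked one drama, is steered toward the drama that similar viewers also rated highly.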

Conclusion

Data science has revolutionized the way businesses approach marketing and advertising. With the ability to collect and analyze data from various sources, businesses can now better understand their customers and create targeted marketing strategies. The benefits of data science in marketing and advertising are numerous, including increased efficiency, better customer engagement, and improved return on investment. Companies like Netflix, Amazon, and Google have already capitalized on the power of data science and are reaping the rewards.

The importance of data science in marketing and advertising cannot be overstated. To remain competitive in today's market, businesses must adopt data-driven strategies and techniques.

For those interested in taking their marketing and advertising skills to the next level, Skillslash offers an Advanced Data Science and AI program. This program will equip you with the knowledge and skills needed to harness the power of data science in your marketing and advertising efforts. With a focus on hands-on learning and real-life applications, this program will help you transform your marketing and advertising efforts, delivering better results and increased ROI. So, what are you waiting for? Join the program today and become a leader in the field of data-driven marketing and advertising.

Skillslash also offers exclusive courses, such as the Data Science Course in Bangalore, System Design Course, and Web Development Course in Pune, to ensure aspirants in each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in IT and tech with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training#software engineering

Data Science in Finance: Managing Risk and Improving Profits

Data Science in Risk Management

Data science plays a critical role in identifying, assessing, and managing risks in finance. The goal of risk management is to minimize the potential losses associated with financial investments and operations. In the past, financial institutions relied on traditional methods such as rule-based systems and expert judgment to manage risk. However, these methods have proven to be insufficient in today's complex and rapidly changing financial landscape.

This is where data science comes in. By leveraging large amounts of financial data and advanced analytical techniques, data scientists can develop more accurate risk assessments and make better-informed decisions. Let's take a look at some of the data-driven risk management techniques that are being used in the finance industry today.

Monte Carlo simulations are one of the most widely used data-driven risk management techniques. These simulations use statistical methods to model and analyze the potential outcomes of a particular financial scenario. Monte Carlo simulations can help financial institutions understand the likelihood of different risk scenarios and make more informed decisions about investments and operations.

Challenges and Best Practices for Data Science in Finance

Data science in finance can be a game-changer when it comes to managing risk and improving profits, but implementing these techniques is not without challenges. In this section, we'll discuss some of the most common challenges faced by financial organizations and best practices for overcoming them.

Data Quality: One of the biggest challenges in using data science in finance is ensuring the quality of the data being analyzed. Poor data quality can lead to incorrect results and poor decision-making. To overcome this challenge, financial organizations should focus on data governance, which involves establishing clear policies and procedures for managing data. This includes ensuring that data is complete, accurate, and consistent, as well as regular monitoring and verifying the quality of the data being used.

Ethical Considerations: The use of data science in finance raises important ethical questions, such as privacy and bias. For example, algorithms used to assess risk may inadvertently discriminate against certain populations. To ensure that ethical considerations are taken into account, financial organizations should establish ethical guidelines and train their data scientists in ethical best practices. They should also regularly review their data science processes to identify and address any potential ethical issues.

Best Practices for Overcoming Challenges: To overcome the challenges of using data science in finance, financial organizations should adopt best practices such as:

● Collaborating between IT and business teams to ensure data quality and regulatory compliance
● Establishing clear data governance policies and procedures
● Providing ethics training for data scientists
● Regularly reviewing data science processes to identify and address potential ethical and compliance issues
● Investing in technology and infrastructure to support data science initiatives

Conclusion

Data science has the potential to revolutionize the finance industry. Its applications in risk management and improving profits are vast and diverse and can bring numerous benefits to financial organizations. However, implementing data science in finance is not without its challenges. Ensuring data quality, following ethical considerations, and meeting regulatory compliance are just a few of the challenges that must be overcome to make the most of data science in finance. The best way to overcome these challenges and make the most of data science in finance is to adopt best practices.
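The Monte Carlo simulations described in the risk management section above can be sketched as follows. This is a simplified illustration that assumes daily returns are independent and normally distributed, which real markets often violate; the function names, return and volatility figures, and the use of a 95% value-at-risk summary are all assumptions made for the example.

```python
import random


def simulate_final_values(start_value: float,
                          mean_daily_return: float,
                          daily_volatility: float,
                          days: int,
                          trials: int,
                          seed: int = 42) -> list:
    """Simulate many possible portfolio paths and return each final value."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    finals = []
    for _ in range(trials):
        value = start_value
        for _ in range(days):
            # Draw one day's return from a normal distribution (an assumption).
            value *= 1.0 + rng.gauss(mean_daily_return, daily_volatility)
        finals.append(value)
    return finals


def value_at_risk(finals: list, start_value: float, confidence: float = 0.95) -> float:
    """Loss that is not exceeded in `confidence` of the simulated scenarios."""
    losses = sorted(start_value - final for final in finals)
    index = min(int(confidence * len(losses)), len(losses) - 1)
    return losses[index]


if __name__ == "__main__":
    finals = simulate_final_values(100_000, 0.0005, 0.01, days=20, trials=2000)
    var_95 = value_at_risk(finals, 100_000, 0.95)
    print(f"Estimated 20-day 95% value at risk: {var_95:,.0f}")
```

The estimate is only as good as the assumptions fed into it, which ties back to the data quality point above: poorly estimated volatility produces a confident-looking but misleading risk number.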

The program provides hands-on experience and real-world case studies, which help participants apply their learning to real-world challenges in finance. The program also features an industry-recognized certification, which can help professionals showcase their expertise and advance their careers. Skillslash also offers exclusive courses such as Data Science Course in Jaipur, Best System Design Course, and Web Development Course in Pune to ensure aspirants of each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in the IT and tech field with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training#software engineering#cybersecurity#students


Top 7 NodeJS Libraries And Tools For Machine Learning

NodeJS is an open-source, server-side JavaScript runtime built on Chrome's V8 JavaScript engine.

It is known for its fast and efficient performance, making it an ideal choice for web applications and real-time applications. On the other hand, Machine Learning refers to the process of teaching computers to perform tasks without explicit programming.

It enables computers to learn from data and improve from experience. The integration of NodeJS with Machine Learning has made it possible for developers to build and deploy ML applications on the web.

With NodeJS, developers can leverage the power of JavaScript to build applications with machine learning capabilities. This has opened up new avenues for the development of web applications with advanced features such as speech recognition, natural language processing, and computer vision.

The combination of NodeJS and Machine Learning is providing developers with the necessary tools to create highly sophisticated applications that can perform complex tasks with ease. NodeJS also provides a large community of developers, ensuring that there is always support and resources available to help developers build their applications.

In short, the combination of NodeJS and Machine Learning is a powerful one that is rapidly gaining popularity and is set to transform the way we build web applications.

7 NodeJS Libraries and Tools for Machine Learning

TensorFlow.js

TensorFlow.js is a powerful open-source library for machine learning in JavaScript. It allows developers to implement, train, and deploy machine learning models directly in the browser or on a NodeJS server. TensorFlow.js also supports transfer learning, which means developers can use pre-trained models and fine-tune them for specific tasks, saving time and resources. The library provides a simple and intuitive API that enables developers to quickly build and deploy machine learning models.

TensorFlow.js is highly flexible and scalable, making it an ideal choice for developers who want to build and deploy machine learning applications with NodeJS. Whether you're a beginner or an experienced developer, TensorFlow.js offers a vast array of tools and resources that make machine learning with NodeJS easy and accessible.

Brain.js

Brain.js is one of the most powerful and user-friendly NodeJS libraries for machine learning. It is an open-source library that offers a range of machine learning algorithms, including feedforward neural networks, recurrent neural networks, and long short-term memory (LSTM) networks.

Brain.js is highly optimized for performance, allowing developers to create and train models in real time. This makes it an ideal choice for applications that require real-time prediction and decision-making. It also offers a simple and intuitive API, making it easy for developers to get started with machine learning, even if they have limited experience in the field. Brain.js is the perfect tool for developers looking to create intelligent applications in NodeJS.

Synaptic

Synaptic is an advanced neural network library for NodeJS that is both flexible and user-friendly. It provides developers with a high-level API that makes it easy to build, train, and validate neural networks in a variety of applications. The library's architecture allows for easy integration with other NodeJS tools and libraries, making it a great choice for machine learning projects.

Synaptic supports a wide range of architectures, including multi-layer perceptrons, long short-term memory (LSTM) networks, liquid state machines, and Hopfield networks, making it a versatile tool for a variety of use cases. In addition, Synaptic's modular structure makes it easy to fine-tune and optimize networks for specific applications, delivering improved performance and accuracy.

ConvNetJS

ConvNetJS is a user-friendly JavaScript library for machine learning. It enables developers to build and train deep learning models, including Convolutional Neural Networks (ConvNets), with ease. ConvNetJS is written in plain JavaScript and was designed to run entirely in the browser, though it can also be loaded in NodeJS, which makes it a good fit for web-based machine-learning applications. It is an open-source library, although it is no longer under active development.

ConvNetJS runs on the CPU and does not use GPU acceleration, so it is better suited to small models, demos, and learning than to large-scale machine-learning projects. With its intuitive interface and support for various activation functions, ConvNetJS is a good tool for developers who want to quickly build and train small neural networks.

deeplearn.js

deeplearn.js is a library that enables the development of deep learning algorithms in JavaScript. It allows developers to easily integrate machine learning into their web applications and run neural networks in the browser. The library supports a range of models and algorithms, including convolutional neural networks and recurrent neural networks. deeplearn.js uses WebGL to tap the GPU acceleration capabilities of modern browsers, delivering fast and efficient performance for complex machine-learning tasks. deeplearn.js has since been merged into TensorFlow.js, so new projects will generally want to start there.

Furthermore, the library is designed to be simple and user-friendly, making it accessible to developers with limited machine-learning experience. Whether you are a seasoned developer or just starting, deeplearn.js is a great tool to add to your machine-learning toolkit.

NeuralNets

NeuralNets is a powerful and flexible NodeJS library for creating and training artificial neural networks. It offers a wide range of features for developing complex machine-learning models, including a simple and intuitive API for defining network architecture, various activation functions, and efficient training algorithms.

The library is designed to work seamlessly with other NodeJS libraries and tools, making it an ideal choice for integrating machine learning capabilities into existing applications. With its ease of use, scalability, and high performance, NeuralNets is a great option for developers looking to incorporate machine learning into their projects.

ML.js

ML.js is a popular NodeJS library for machine learning that provides a high-level API for building and training machine learning models. It is designed to be easy to use and accessible to developers with limited experience in machine learning.

ML.js bundles a collection of open-source machine learning packages covering tasks such as regression, clustering, and classification, making it a one-stop shop for developers who want to start using machine learning in their NodeJS applications. With it, developers can build and train machine learning models without having to implement the underlying algorithms themselves.

Its ready-made algorithm implementations make it easy for developers to get started quickly. Whether you are a beginner or an experienced machine learning developer, ML.js is a powerful tool that can help you quickly build and deploy machine learning models in your NodeJS applications.

Conclusion

These libraries and tools give developers what they need to build, train, and deploy machine learning models efficiently and effectively. They matter because they allow machine learning algorithms and models to be implemented quickly in a wide range of NodeJS applications.

For those who want to take their understanding and skills of machine learning to the next level, the Advanced Data Science and AI program by Skillslash is a great opportunity to gain hands-on experience in this field. The program covers the latest techniques and tools used in machine learning and artificial intelligence and provides participants with practical, real-world experience.

Moreover, Skillslash also offers exclusive courses such as Data Science Course in Bangalore, System Design Course, and Web Development Course to ensure aspirants of each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in the IT and tech field with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training#python


DBT: Build and Transform Data Models Faster and Easier

Data modeling is an essential part of any data-driven organization.

It helps to structure and organize data in a way that is easy to understand and use. However, traditional data modeling can be time-consuming and complex, requiring specialized knowledge and expertise.

DBT (Data Build Tool) is a powerful open-source tool that makes it easier and faster to build and transform data models. Models are written as SQL SELECT statements and run from the command line, so anyone who already knows SQL can create complex data models with minimal effort.

It also simplifies the process of transforming existing models into new ones, allowing for rapid prototyping and iteration. With DBT, organizations can quickly develop and deploy data models that meet their needs, saving time and money while improving accuracy.

Benefits of using DBT

Data Build Tool offers a wide range of benefits such as:

a. Increased efficiency

DBT helps organizations increase their data engineering efficiency.

DBT gives data engineers one framework in which to create and maintain data models, transform raw data, and deploy the results to production. It generates the boilerplate SQL needed to build each model as a table or view, which reduces the time spent on manual coding and debugging.

Additionally, it simplifies the process of transforming raw data into useful insights through Jinja templating, reusable macros, and installable packages. This allows data engineers to quickly build models without rewriting the same logic in every query.

Furthermore, DBT simplifies the deployment process by building every model in a project with a single command. This removes manual deployment steps and helps ensure that models are built quickly and in the same way in every environment.

DBT helps businesses become more efficient by automating tasks, making development easier, and reducing deployment time.

b. Improved accuracy

DBT also helps to improve the accuracy of data models and can improve the performance of data warehouses.

It provides an automated, repeatable process for cleaning, transforming, and validating data so that it can be used for analysis and reporting. Because the same transformations run the same way every time, manual errors are reduced.

Additionally, DBT helps to keep data up to date and accurate: checks on data quality and on the freshness of source data can be run alongside the models.

Furthermore, DBT lets teams test and validate data models, which helps to identify potential issues before they become problems. Running these tests automatically enables organizations to quickly identify and correct errors in their data models, leading to improved accuracy.
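To make the testing idea concrete: a data test can be thought of as a SQL query that selects the rows that break a rule, and the test passes when the query returns no rows. The sketch below imitates that pattern with Python's built-in sqlite3 module standing in for the warehouse. The table, the column names, and the `failing_rows` helper are hypothetical, and this is not DBT's own code or API.

```python
import sqlite3


def failing_rows(conn, query):
    """Run a test query; every row it returns counts as a failure."""
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (2, "b@example.com")],
)

# Rule 1: email must never be NULL.
not_null_email = "SELECT customer_id, email FROM customers WHERE email IS NULL"

# Rule 2: customer_id must be unique.
unique_customer_id = """
    SELECT customer_id, COUNT(*) AS n
    FROM customers
    GROUP BY customer_id
    HAVING COUNT(*) > 1
"""

results = {
    "not_null_email": failing_rows(conn, not_null_email),
    "unique_customer_id": failing_rows(conn, unique_customer_id),
}
for name, rows in results.items():
    status = "PASS" if not rows else f"FAIL ({len(rows)} failing row(s))"
    print(f"{name}: {status}")
```

Both checks fail on this sample data, which is the point: the bad rows are surfaced before anyone builds a report on top of them.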

c. Reduced complexity

DBT reduces the complexity of data analysis by expressing transformations as SQL-based code that is simple to read. It cuts down on repetitive manual coding by breaking complex tasks into smaller, more manageable models and automating how they are built. This reduces the time and resources needed to complete a task while improving data accuracy through consistent transformations. In short, DBT simplifies, automates, and improves: the perfect trifecta for success!

How DBT works

DBT (Data Build Tool) is a powerful open-source tool that enables data analysts, engineers, and scientists to transform raw data into meaningful insights. It helps organizations to build better data pipelines and optimize their data management processes. DBT works by allowing users to define and execute data transformations in a consistent, repeatable way.

Modeling Data with SQL

DBT provides a simple yet powerful way to model data using SQL. This allows users to create models that are easy to understand and maintain. Each model is a SQL SELECT statement that defines the structure of the resulting data: its columns and how they are derived from, and related to, other tables or models. Once the model is defined, DBT generates the SQL statements needed to create it in the database as a table or view.
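The idea of turning a model definition into database objects can be sketched in a few lines. The example below wraps a SELECT statement in the DDL needed to create a table or view, using Python's built-in sqlite3 module as a stand-in database. The `materialize` helper and the table names are hypothetical illustrations, not part of DBT.

```python
import sqlite3


def materialize(conn, name, select_sql, kind="view"):
    """Wrap a SELECT statement in DDL so it becomes a view or a table."""
    if kind not in {"view", "table"}:
        raise ValueError("kind must be 'view' or 'table'")
    keyword = kind.upper()
    # Rebuild from scratch so repeated runs give the same result.
    conn.execute(f"DROP {keyword} IF EXISTS {name}")
    conn.execute(f"CREATE {keyword} {name} AS {select_sql}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 10, 25.0), (2, 10, 40.0), (3, 11, 15.0)],
)

# The "model": just a SELECT describing the shape of the result.
customer_totals_sql = """
    SELECT customer_id, SUM(amount) AS total_amount
    FROM raw_orders
    GROUP BY customer_id
"""

materialize(conn, "customer_totals", customer_totals_sql, kind="table")
rows = conn.execute("SELECT * FROM customer_totals ORDER BY customer_id").fetchall()
print(rows)
```

The author of the model only writes the SELECT; the surrounding CREATE and DROP statements are generated, which is what keeps model files short and readable.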

Automating Data Transformations

DBT automates the process of transforming raw data into meaningful insights. Transformations are written in SQL, with reusable macros for common cleansing and aggregation logic. Models can reference one another, so simple steps can be chained together to create complex transformations that are easy to understand and maintain, and DBT runs them in dependency order. Additionally, DBT can generate project documentation that includes a lineage graph, which helps when tracing and debugging transformation pipelines.
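Chaining transformations only works if each step runs after the steps it depends on. A minimal sketch of that ordering problem, using Python's standard `graphlib` module (Python 3.9 or later), is shown below. The model names are hypothetical, and this illustrates the general technique of topological sorting rather than DBT's internal scheduler.

```python
from graphlib import TopologicalSorter

# Each hypothetical model maps to the set of models it reads from.
dependencies = {
    "stg_orders": set(),
    "stg_customers": set(),
    "customer_orders": {"stg_orders", "stg_customers"},
    "customer_lifetime_value": {"customer_orders"},
}

# static_order() yields every model after all of its dependencies.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

If two models depended on each other, `TopologicalSorter` would raise a `CycleError`, which is a useful early warning that the pipeline cannot be built as defined.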

Creating Reliable, Reusable Code

DBT helps users create reliable and reusable code. A DBT project is a set of plain text files, so it works with any code editor, and the hosted dbt Cloud product adds a browser-based integrated development environment (IDE) with features such as syntax highlighting and auto-completion. Because projects are plain text, they also fit naturally into version control systems such as Git, so users can track changes over time and collaborate on projects with other team members. This makes it easier for teams to develop reliable code that is easy to maintain and share across different environments.

Use cases for DBT

DBT is designed to simplify the process of transforming raw data into actionable insights. It is used by data analysts, engineers, and scientists to build and maintain complex data models and can be used for a variety of use cases, including:

Data Warehousing: DBT is an ideal tool for building and maintaining data warehouses. It helps users quickly transform large amounts of raw data into meaningful insights that can be used for decision-making. DBT handles the transformation step after data has been loaded into the warehouse, and features such as templated SQL queries, testing, and version control make it easy to create and manage complex data models. This makes it easier for users to quickly identify trends and anomalies in their datasets.

Data Lakes: DBT is also useful for managing data lakes, which are large repositories of structured and unstructured data that can be used for analytics purposes. Where the lake can be queried through a SQL engine that DBT supports, DBT allows users to transform raw data into meaningful insights that can be used for predictive analytics or machine learning applications, whether the data lives in the cloud or on-premises.

Data Science Projects: DBT is also a great tool for building and managing complex data science projects. It allows users to quickly transform raw data into clean, well-tested datasets that can be used for predictive analytics or machine learning applications. Additionally, although DBT models are written primarily in SQL, recent versions also support models written in Python on some data platforms, which makes it easier to integrate with existing data science code.

Conclusion

DBT (Data Build Tool) is a powerful tool for data engineers and analysts to build and transform data models faster and more easily. With its SQL-based workflow and flexible templating, DBT helps users streamline the data modeling process and increase efficiency. Whether you're a seasoned data professional or just starting out, DBT is a tool worth considering for your data modeling needs.

We at Skillslash believe that staying ahead of the curve in the data science domain is crucial in today's rapidly evolving tech landscape. That's why we've created the Advanced Data Science and AI program, designed to help you level up your skills and become an industry leader.

Highlights of the program include:

100% live interactive sessions

Hands-on learning with real-world projects

Industry-relevant curriculum developed by experts

Personalized mentorship and career support

Unlimited job referrals to get you placed in one of the top MNCs

With our program, you'll have the opportunity to gain practical experience, connect with professionals in your field, and take your career to the next level. Enroll today and start building your expertise in the tech domain!

Moreover, Skillslash also offers exclusive courses such as System Design Course, Web Developer Course in Pune, and Web Development Course to ensure aspirants of each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in the IT and tech field with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training#software engineering#dbt skills


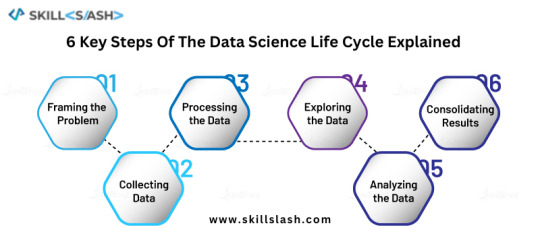

6 Key Steps Of The Data Science Life Cycle Explained

The field of data science is rapidly growing and has become an essential tool for businesses and organizations to make data-driven decisions. The data science life cycle is a step-by-step process that helps data scientists to structure their work and ensure that their results are accurate and reliable. In this article, we will be discussing the 6 key steps of the data science life cycle and how they play a crucial role in the data science process.

The data science life cycle is a cyclical process that starts with defining the problem or research question and ends with deploying the model in a production environment. The 6 key steps of the data science life cycle include problem definition, data collection and exploration, data cleaning and preprocessing, data analysis and modeling, evaluation, and deployment. Each step is crucial in the data science process and must be completed to produce accurate and effective models.

Problem Definition

When it comes to data science, the first and arguably most important step is defining the problem or research question. Without a clear understanding of what you are trying to achieve, it's impossible to move forward in the data science life cycle.

The problem definition step is where you determine the objectives of your project and what you hope to achieve through your analysis. This step is crucial because it lays the foundation for the rest of the project and guides the direction of the data collection, exploration, analysis, and modeling.

For example, if you're working in the retail industry, your problem might be to identify patterns in customer purchase behavior. This would then guide your data collection efforts to focus on customer demographics, purchase history, and other relevant data. On the other hand, if you're working in the healthcare industry, your problem might be predicting patient readmissions. This would then guide your data collection efforts to focus on patient health records, treatment history, and other relevant data.

It's important to note that the problem definition can change as the project progresses. As you explore and analyze the data, you may find that your original problem statement needs to be adjusted. This is normal and is a part of the iterative process of data science.

Data Collection and Exploration

Data collection and exploration are crucial steps in the data science life cycle. The goal of these steps is to gather and analyze the data that will be used to answer the research question or solve the problem defined in the first step.

There are several methods for data collection, including web scraping, APIs, and survey data. Web scraping involves using a program to automatically extract data from websites, while APIs (Application Programming Interfaces) allow for the retrieval of data from a specific source. Survey data is collected by conducting surveys or interviews with a sample of individuals.

Once the data is collected, it is important to explore it to identify patterns, outliers, and missing data. This can be done using tools such as R or Python, which allow for the visualization and manipulation of the data. During this step, it is also important to check for any potential issues with the data, such as missing or duplicate values.
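A first pass at exploration often amounts to a quick profile of the dataset. The sketch below, written in plain Python, counts rows, duplicate keys, and missing values, and reports basic statistics for one numeric field. The `profile` function and the sample records are hypothetical; in practice a library such as pandas would usually do this work.

```python
from collections import Counter
from statistics import mean


def profile(records, key_field, numeric_field):
    """Summarize row count, duplicate keys, missing values, and basic statistics."""
    key_counts = Counter(r[key_field] for r in records)
    duplicate_keys = sorted(k for k, n in key_counts.items() if n > 1)
    fields = sorted({field for r in records for field in r})
    missing = {f: sum(1 for r in records if r.get(f) is None) for f in fields}
    values = [r[numeric_field] for r in records if r.get(numeric_field) is not None]
    return {
        "rows": len(records),
        "duplicate_keys": duplicate_keys,
        "missing": missing,
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
    }


customers = [
    {"customer_id": 1, "age": 34, "city": "Pune"},
    {"customer_id": 2, "age": None, "city": "Jaipur"},
    {"customer_id": 2, "age": 41, "city": "Jaipur"},
    {"customer_id": 3, "age": 29, "city": None},
]
summary = profile(customers, key_field="customer_id", numeric_field="age")
print(summary)
```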

Data exploration can be an iterative process, and it is important to keep the research question or problem in mind while exploring the data. This will help to ensure that the data being analyzed is relevant to the project and that any patterns or insights identified are useful for answering the research question or solving the problem.

Data Cleaning and Preprocessing

Data cleaning and preprocessing are crucial steps in the data science life cycle. These steps help to ensure that the data used in the analysis and modeling stages is accurate, complete, and ready for use. Data cleaning involves identifying and handling duplicates, missing data, and outliers. Data preprocessing involves preparing the data for analysis by converting it into a format that can be used by the chosen analysis and modeling tools.

One of the most important steps in data cleaning is identifying and removing duplicates. Duplicate data can lead to inaccuracies in the analysis and modeling stages, and can also increase the size of the dataset unnecessarily. Duplicates can be found by comparing unique identifiers such as ID numbers or email addresses.
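As a minimal sketch of deduplication by unique identifier, the function below keeps the first record seen for each email address. Treating addresses as equal after trimming whitespace and lowercasing is an assumption made for this example, and the function name and sample records are hypothetical.

```python
def drop_duplicates(records, key):
    """Keep the first record for each key value, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        # Assumption: identifiers match after trimming and lowercasing.
        identifier = record[key].strip().lower()
        if identifier not in seen:
            seen.add(identifier)
            unique.append(record)
    return unique


signups = [
    {"email": "Ana@example.com", "name": "Ana"},
    {"email": "bob@example.com", "name": "Bob"},
    {"email": " ana@example.com ", "name": "Ana M."},
]
unique_signups = drop_duplicates(signups, key="email")
print([s["name"] for s in unique_signups])
```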

Missing data is another issue that must be addressed during data cleaning. Missing data can occur for a variety of reasons, such as survey participants not responding to certain questions or data not being recorded correctly. It can be addressed either by removing the affected records or by imputing the missing values with a suitable substitute.
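The two options, removing and imputing, can be sketched side by side. The example below uses the median as the fill value, which is one common choice among several; the function names and the survey records are hypothetical.

```python
from statistics import median


def drop_missing(rows, field):
    """Option 1: discard every record where the field is missing."""
    return [r for r in rows if r[field] is not None]


def impute_median(rows, field):
    """Option 2: fill missing values with the median of the observed ones."""
    fill = median(r[field] for r in rows if r[field] is not None)
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]


responses = [
    {"respondent": 1, "income": 52000},
    {"respondent": 2, "income": None},
    {"respondent": 3, "income": 61000},
    {"respondent": 4, "income": 48000},
]
dropped = drop_missing(responses, "income")
imputed = impute_median(responses, "income")
print(len(dropped), imputed[1]["income"])
```

Dropping is simpler but shrinks the dataset; imputing keeps every record but introduces values that were never actually observed, so the choice depends on how much data is missing and why.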

Outliers are data points that lie outside the typical range of values. These points can have a significant impact on the results of the analysis and modeling stages, and therefore must be identified and dealt with accordingly. They can be spotted using visualization tools such as box plots or scatter plots.
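The rule a box plot uses to draw its whiskers can also be applied directly in code: values more than 1.5 times the interquartile range beyond the first or third quartile are flagged. The sketch below uses `statistics.quantiles` from the Python standard library. The 1.5 multiplier is the conventional default, and the function name and sample values are hypothetical.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)  # the three quartile cut points
    spread = q3 - q1
    lower, upper = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < lower or v > upper]


order_values = [12, 14, 15, 15, 16, 17, 18, 19, 95]
outliers = iqr_outliers(order_values)
print(outliers)
```

Whether a flagged value should be removed, capped, or kept is a judgment call: an unusually large order may be an error or may be the most interesting row in the dataset.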

Data preprocessing involves converting the data into a format that can be used by the chosen analysis and modeling tools. This can include tasks such as converting categorical variables into numerical values, normalizing data, and dealing with missing data.
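Two of the preprocessing tasks mentioned above, converting categorical variables into numbers and normalizing data, can be sketched in plain Python. One-hot encoding and min-max scaling are used here as common examples of each; other encodings and scalers exist, and the function names are hypothetical.

```python
def one_hot(values):
    """Encode each category as a list of 0s with a single 1."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories


def min_max_scale(values):
    """Rescale numbers linearly so the smallest becomes 0 and the largest 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # avoid dividing by zero on constant data
    return [(v - lo) / (hi - lo) for v in values]


encoded, categories = one_hot(["red", "blue", "red"])
scaled = min_max_scale([10, 20, 30])
print(categories, encoded, scaled)
```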

Data Analysis and Modeling

Data analysis and modeling is the fourth step in the data science life cycle. This step involves using various techniques to uncover insights and patterns in the data that were collected and preprocessed in the previous steps. The goal of data analysis is to understand the underlying structure of the data and extract meaningful information. The most common types of data analysis are descriptive, inferential, and predictive. Descriptive analysis is used to summarize the data, inferential analysis is used to draw conclusions about a wider population from a sample of data, and predictive analysis is used to forecast future or unseen outcomes from patterns and relationships in the data.

Modeling is the process of creating a mathematical representation of the data. The most common types of models used in data science are regression, classification, and clustering. Regression models are used to predict a continuous outcome, classification models are used to predict a categorical outcome, and clustering models are used to group data points into clusters.
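As a small illustration of a regression model, the sketch below fits a straight line to a handful of points using ordinary least squares and then predicts a new value. It covers only the simplest case, one input variable, and the function name and data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend (x) against units sold (y).
spend = [1, 2, 3, 4]
units = [3, 5, 7, 9]

slope, intercept = fit_line(spend, units)
predicted = slope * 5 + intercept  # continuous outcome for a new input
print(slope, intercept, predicted)
```

A classification model would instead return a category, and a clustering model would assign each point to a group without being given labels in advance.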

Data analysis and modeling are important steps in the data science life cycle because they help to uncover insights and patterns in the data that can be used to make predictions and decisions. These insights and patterns can be used to improve business processes, make more informed decisions, and create new products and services.

Evaluation and Deployment

The final two steps in the data science life cycle are evaluating the model and deploying it. These steps are crucial in ensuring that the model is accurate and effective in solving the problem it was designed for.

First, the model's performance is evaluated using various metrics such as accuracy, precision, and recall. This helps to determine if the model is performing well and if any adjustments need to be made. For example, if a model is being used for a binary classification problem, it is important to check the precision and recall rates for both classes.
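The metrics named above follow directly from counting correct and incorrect predictions. Here is a minimal sketch for a binary classifier that reports accuracy together with precision and recall for whichever class is treated as positive. The function name and the label lists are hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, plus precision and recall for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics_class_1 = classification_metrics(y_true, y_pred, positive=1)
metrics_class_0 = classification_metrics(y_true, y_pred, positive=0)
print(metrics_class_1)
print(metrics_class_0)
```

Calling the function once per class, as done here, gives the per-class precision and recall that the binary classification example calls for.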

Once the model has been evaluated and any necessary adjustments have been made, it can be deployed to a production environment. This means that the model is now ready to be used by others to make predictions or decisions. The model can be deployed as an API, a web application, or integrated into an existing system.

It's important to note that the model should be regularly monitored and updated as needed. This is because the data and the problem it is solving may change over time, and the model may need to be retrained to reflect these changes.

Conclusion

The data science life cycle is a crucial process that helps to ensure accurate and effective models are produced. By following the six key steps of problem definition, data collection and exploration, data cleaning and preprocessing, data analysis and modeling, evaluation, and deployment, data scientists can ensure that their models are robust and can be deployed in a production environment.

At Skillslash, we understand the importance of mastering the data science life cycle, which is why our Advanced Data Science and AI program is designed to give you the knowledge and skills you need to become a successful data scientist. Our program covers all of the key steps in the data science life cycle and is taught by industry experts who have years of experience in the field.

By enrolling in our program, you will learn the latest techniques and tools used in data science and gain hands-on experience through real-world projects. Our program will also provide you with the opportunity to network with other like-minded individuals and gain the confidence and skills you need to succeed in this exciting field.

Moreover, Skillslash also offers exclusive courses such as System Design Course and Web Development Course to ensure aspirants of each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in the IT and tech field with Skillslash, contact the student support team to learn more about the courses and the institute.

#datascience#datascientist#fullstacktraining#fullstackdeveloper#machinelearning#data science training


5 Tips To Enhance Customer Service Using Data Science

Data science has become an integral part of customer service in the modern digital age.

Companies are using data-driven insights to better understand their customers, identify trends, and anticipate customer needs. But how can businesses use data science to enhance customer service?

In this article, we will explore 5 tips to enhance customer service using data science. From collecting and analyzing customer data to utilizing machine learning and natural language processing for automated responses, we will discuss how businesses can leverage data science to provide a better customer experience.

Can data science really improve customer service? How can businesses use predictive analytics to anticipate customer needs?

Read on to find out!

Importance of Data Science in Customer Service

Data science is an invaluable asset when it comes to customer service.

It helps companies understand their customers better, anticipate their needs, and offer better services. Data science can be used to analyze customer feedback, identify trends and patterns in customer behavior, and develop insights into customer preferences.

This information can be used to improve customer service by providing personalized experiences and tailored solutions. Companies can also use data science to identify opportunities for improvement in customer service processes, such as streamlining operations or introducing new technologies.

Additionally, data science can help companies better understand their customers' needs and expectations, allowing them to develop more effective strategies for meeting those needs. By leveraging data science, companies can provide a higher level of customer service that is both efficient and effective.

5 Tips to Enhance Customer Service Using Data Science

Data Science can be used to enhance customer service and improve customer experience, and here are 5 tips to get you started.

A. Collect and Analyze Customer Data

By collecting and analyzing customer data, businesses can gain valuable insights into customer behavior and preferences.

This information can then be used to create more personalized experiences for customers, improve customer service, and increase customer loyalty. Data science can also be used to identify potential problems before they arise, allowing businesses to proactively address issues and prevent them from becoming larger issues in the future.

Ultimately, data science can help businesses better understand their customers, leading to improved customer service and satisfaction.

B. Utilize Automation for Repetitive Tasks

One of the best ways to enhance customer service using data science is to utilize automation for repetitive tasks.

Automation can help streamline processes and reduce errors while freeing up customer service agents to focus on more complex tasks. Automation can also provide customers with faster and more accurate responses, leading to improved customer satisfaction.

Automation can also be used to analyze customer data and identify areas where customer service can be improved. By utilizing automation for repetitive tasks, businesses can improve their customer service and ensure a better experience for their customers.

C. Utilize Machine Learning to Identify Trends and Patterns

Utilizing machine learning to identify trends and patterns in customer service data can be a great way to enhance customer service.

Machine learning algorithms can be used to detect patterns in customer data, such as which products customers are buying, how often they make purchases, and what their preferences are. This data can then be used to create targeted marketing campaigns or to develop new products and services that better meet customer needs.

By leveraging machine learning, businesses can better understand their customers and provide more personalized services that lead to improved customer satisfaction.

D. Implement Predictive Analytics to Anticipate Customer Needs

Predictive analytics can be used to anticipate customer needs and provide better customer service.

By analyzing customer data, businesses can identify patterns and trends that can help them predict what customers may need in the future. This can be used to provide personalized offers and services tailored to each customer's needs.

Predictive analytics can also be used to anticipate customer problems before they arise, allowing businesses to proactively address any issues before they become a problem.

E. Use Natural Language Processing for Automated Responses

Natural Language Processing (NLP) is a powerful tool for enhancing customer service.

NLP can be used to create automated responses that are tailored to each customer's specific needs. This can help reduce response time and improve customer satisfaction, as customers can get the answers they need quickly and accurately.

NLP also allows customer service agents to focus on more complex customer inquiries, leading to improved customer service overall.

Conclusion

Data science is a powerful tool to enhance customer service. By collecting and analyzing customer data, utilizing automation for repetitive tasks, leveraging machine learning to identify trends and patterns, implementing predictive analytics to anticipate customer needs, and using natural language processing for automated responses, businesses can increase customer satisfaction and loyalty.

For working professionals looking to upskill in data science, Skillslash's Advanced Data Science and AI program is a perfect choice. The program offers an immersive learning experience with hands-on projects and industry-recognized certifications. It also provides access to a global network of experts and alumni from top universities. With a flexible schedule, interactive live sessions, and personal mentorship from industry experts, the program is designed to help professionals take their careers to the next level. Make sure you don't miss out on this opportunity and enroll today to go on a world-class learning journey.

Moreover, Skillslash also offers exclusive courses such as Data Science Course In Bangalore, System design Course, and Web Development Course in Pune to help aspirants in each domain have a great learning journey and a secure future in these fields. To find out how you can build a career in IT and tech with Skillslash, contact the student support team to learn more about the courses and the institute.


10 Ways Data Analytics Can Help You Generate More Leads

Data analytics has become a powerful tool for businesses to generate leads and increase their sales opportunities.

By leveraging data-driven insights, companies can gain a better understanding of their target markets and tailor their strategies to maximize lead generation. In this article, we will explore 10 ways that data analytics can help you generate more leads.

From understanding customer behavior to optimizing marketing campaigns, businesses can use data analytics to identify the right prospects and create the most effective strategies to convert leads into sales. By using data-driven insights, businesses can ensure they are targeting the right people and improve their lead-generation capabilities.

Definition of data analytics

Data Analytics is the process of collecting, organizing, and analyzing large volumes of data to uncover patterns, trends, and insights.

It is used to make informed decisions in a wide variety of industries such as healthcare, finance, retail, education, and manufacturing. Data Analytics can be used to identify customer preferences, predict future trends, and optimize processes.

For example, in the retail industry, data analytics can be used to analyze customer buying patterns to determine which products are most popular and which should be discounted or removed from shelves. Data Analytics can also be used to identify potential fraud and improve operational efficiency.

10 Ways Data Analytics Can Help Generate More Leads

Data analytics can help businesses generate more leads in several ways.

A. Identifying Target Audiences

Data analytics can help identify target audiences in several ways.

Firstly, data analytics can help to identify the right target audience by analyzing customer behavior and demographics. This can be done by analyzing customer purchase history, website visits, social media interactions, and other data sources.

Secondly, data analytics can be used to understand the needs and preferences of potential customers. This can be done by collecting data from surveys, interviews, focus groups, and other sources.

Thirdly, data analytics can be used to identify trends in customer behavior that can help businesses target their marketing efforts more effectively. Finally, data analytics can help businesses segment their target audiences into smaller groups based on their interests and preferences.

By doing so, businesses can create tailored campaigns that are more likely to generate leads.
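As a rough illustration of the segmentation step, the sketch below groups customers by interest and purchase frequency. The customer records, the field names (`interest`, `orders_last_year`), and the threshold of six orders are all assumptions made up for this example, not values from any real system.

```python
from collections import defaultdict

# Hypothetical customer records; field names and values are illustrative only.
customers = [
    {"id": 1, "interest": "fitness", "orders_last_year": 12},
    {"id": 2, "interest": "fitness", "orders_last_year": 2},
    {"id": 3, "interest": "cooking", "orders_last_year": 7},
    {"id": 4, "interest": "fitness", "orders_last_year": 9},
]

# Assumed cutoff separating frequent buyers from occasional ones.
FREQUENT_ORDER_THRESHOLD = 6


def segment(customer):
    """Return an (interest, purchase tier) pair describing the customer's segment."""
    if customer["orders_last_year"] >= FREQUENT_ORDER_THRESHOLD:
        tier = "frequent"
    else:
        tier = "occasional"
    return (customer["interest"], tier)


# Collect customer ids under each segment so campaigns can target them separately.
segments = defaultdict(list)
for customer in customers:
    segments[segment(customer)].append(customer["id"])

for name, ids in segments.items():
    print(name, ids)
```

In practice the segmentation rules would come from the data itself (for example, from clustering), but a simple rule like this is often enough to start tailoring campaigns to each group.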

B. Analyzing Website Traffic

Data analytics can help analyze web traffic in a variety of ways.

First, it can help identify which pages on a website are generating the most leads and which ones are not. This information can then be used to optimize the website for better lead generation.

Additionally, data analytics can help identify which sources of web traffic are providing the most leads and which are not. This information can be used to focus marketing efforts on the sources that are providing the most leads.

Data analytics can also help identify trends in web traffic, such as what time of day or week is most popular for website visits. This information can be used to optimize marketing campaigns for maximum engagement and lead generation.

Leveraging data analytics, businesses can track customer behavior on their website to uncover potential opportunities for lead generation. By analyzing customer activity, companies can create more effective campaigns and boost their lead-generation efforts.
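To make the page and traffic-source comparison concrete, here is a minimal sketch that computes the share of visits that turned into leads, grouped first by page and then by source. The visit log, its column layout, and the `lead_rate_by` helper are hypothetical and exist only for this example.

```python
from collections import defaultdict

# Hypothetical visit log: (page, traffic_source, became_lead).
visits = [
    ("/pricing", "organic", True),
    ("/pricing", "email", False),
    ("/blog/post-1", "organic", False),
    ("/blog/post-1", "social", False),
    ("/pricing", "organic", True),
    ("/demo", "email", True),
]


def lead_rate_by(rows, key_index):
    """Return {key: leads / visits}, grouping rows by the column at key_index."""
    totals = defaultdict(int)
    leads = defaultdict(int)
    for row in rows:
        key = row[key_index]
        totals[key] += 1
        if row[2]:
            leads[key] += 1
    return {key: leads[key] / totals[key] for key in totals}


by_page = lead_rate_by(visits, 0)    # lead rate per page
by_source = lead_rate_by(visits, 1)  # lead rate per traffic source

# Print pages from best to worst lead rate.
for page, rate in sorted(by_page.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {rate:.0%}")
```

Pages or sources with many visits but a low lead rate are natural candidates for optimization, while high-rate sources may deserve more of the marketing budget.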

C. Understanding Buyer Personas

Data analytics can help businesses understand their buyer personas by providing insights into customer behavior, preferences, and demographics.

This information can be used to create more targeted campaigns and content that will resonate with potential customers. By understanding the buyer persona, businesses can create campaigns that are tailored to their target audience's needs and interests.

Additionally, data analytics can help identify potential leads who may not have been considered before. By analyzing customer data, businesses can identify potential leads that may have been overlooked or missed in the past.

Data analytics can help businesses maximize their lead-generation success by providing valuable insights into which campaigns are most effective. By understanding the buyer persona, businesses can ensure their efforts are as impactful as possible and home in on the strategies that generate the best leads.

D. Tracking Competitor Performance

Data analytics can help track competitor performance in a variety of ways.

By analyzing competitor data, companies can gain insights into their competitors' strategies and how they are performing in the market. Companies can use this data to identify areas where they are outperforming their competitors, as well as areas where they may be falling behind.

Data analytics can empower companies to gain insight into their competitors' pricing and customer acquisition strategies. This understanding can then be leveraged to craft more effective marketing plans and accurately target potential customers.

Data analytics can be a powerful tool for businesses, allowing them to track competitor performance and gain valuable insights into the competitive landscape. It can also help companies identify trends in their competitors' customer base, enabling them to tailor their products and services to better meet the needs of their target audience. Ultimately, data analytics is an invaluable asset for staying ahead of the competition.

E. Optimizing Landing Pages

Data analytics can help optimize landing pages in several ways.

Firstly, it can provide insights into how visitors are interacting with the page and what elements are working best. This information can be used to make changes to the page that will improve its effectiveness.

Secondly, data analytics can be used to identify which keywords are driving the most traffic to the page and which ones are not performing as well. This information can then be used to adjust the content and design of the page to ensure it is optimized for the right keywords.

Finally, data analytics can also be used to track the performance of different versions of the landing page, allowing marketers to determine which version is generating the most leads. By using data analytics to optimize landing pages, marketers can ensure they are creating an effective experience for their visitors and generating more leads in the process.
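One common way to compare two versions of a landing page is a two-proportion z-test on their conversion rates. The sketch below assumes that approach; the visitor and lead counts are invented for illustration, and the article itself does not prescribe a particular test.

```python
import math


def two_proportion_z(leads_a, visitors_a, leads_b, visitors_b):
    """Return the z statistic for the difference in conversion rate (B minus A)."""
    rate_a = leads_a / visitors_a
    rate_b = leads_b / visitors_b
    # Pooled rate under the assumption that both versions convert equally well.
    pooled = (leads_a + leads_b) / (visitors_a + visitors_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    return (rate_b - rate_a) / std_error


# Hypothetical results: version A got 40 leads from 1,000 visitors,
# version B got 60 leads from 1,000 visitors.
z = two_proportion_z(40, 1000, 60, 1000)
print(f"z = {z:.2f}")

# |z| above roughly 1.96 suggests a real difference at the 5% significance level.
significant = abs(z) > 1.96
```

With small samples or very low conversion rates this approximation becomes unreliable, so it is worth collecting enough traffic on both versions before declaring a winner.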

F. Identifying Influencers

Data Analytics can identify influential voices in a market by analyzing their activity data.

It can track their interactions with customers, their content, and other factors. This data can be used to determine which influencers are most likely to generate leads for a company.

Data Analytics can also be used to measure the effectiveness of an influencer's efforts in terms of lead generation. It can provide insights into the types of content that resonate with customers and which influencers are driving more leads than others.

Additionally, Data Analytics can be used to track the performance of an influencer's campaigns and measure the ROI of their efforts. This helps companies make informed decisions about which influencers they should work with to generate more leads.

G. Analyzing Social Media Performance

Data analytics can show how well social media efforts are performing, providing valuable insights into campaign success, content effectiveness, and which leads to target.

It can provide a comprehensive view of user engagement, including likes, comments, shares, and other interactions. Data analytics can also be used to identify trends in user behavior, such as what times of day are most effective for posting content or which topics are resonating with followers.

Additionally, data analytics can be used to determine which posts are driving the most leads and conversions. This information can then be used to refine marketing strategies and target more relevant audiences.

Finally, data analytics can be used to track the ROI of social media campaigns, enabling marketers to make better decisions about their investments in social media marketing.
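ROI is usually calculated as net return divided by cost. The sketch below applies that formula to two made-up campaigns; the campaign names and the revenue and cost figures are assumptions for illustration only.

```python
def campaign_roi(revenue, cost):
    """Return ROI as a fraction: (revenue - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (revenue - cost) / cost


# Hypothetical campaigns: name -> (attributed revenue, total cost).
campaigns = {
    "spring_launch": (5000.0, 2000.0),
    "webinar_series": (1200.0, 1500.0),
}

roi = {name: campaign_roi(revenue, cost) for name, (revenue, cost) in campaigns.items()}

for name, value in roi.items():
    print(f"{name}: {value:+.0%}")
```

A negative value means the campaign cost more than the revenue attributed to it. The hard part in practice is attribution, meaning deciding which revenue actually came from which campaign.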

H. Creating Customized Content

Data analytics can help generate more leads by providing insights into customer behavior and preferences.

This allows marketers to create customized content that is tailored to the interests of their target audience. For example, data analytics can be used to identify which types of content are most effective in driving leads, as well as which topics and keywords are most likely to resonate with potential customers.

Additionally, data analytics can be used to track the performance of content over time, allowing marketers to make adjustments as needed to maximize lead generation. By leveraging data analytics, marketers can create content that is specifically designed to attract and engage their target audience, resulting in more leads and ultimately more conversions.

I. Utilizing Automation Tools

Combined with data analytics, automation tools can help generate more leads by taking over repetitive tasks such as lead capture, email campaigns, and customer segmentation. Automation tools can help marketers identify potential leads and customers quickly and efficiently.

Automation tools can also be used to track customer interactions, analyze customer data, and identify trends in customer behavior. They can also be used to create targeted campaigns that are tailored to the specific needs of each customer segment.

By using automation tools, marketers can save time and resources while generating more leads for their business. Additionally, automation tools can help marketers better understand their customers' needs and preferences, allowing them to create more effective campaigns that will result in increased sales and revenue.

J. Leveraging Lead Scoring Systems

Data analytics can be used to develop and implement lead-scoring systems that help businesses focus on the leads most likely to convert.

Lead scoring is the process of assigning a numerical value to each lead based on their interest, engagement, and other factors. This score can then be used to prioritize leads and determine which ones are more likely to convert into customers.

By using data analytics, businesses can identify key characteristics of leads that are most likely to convert, such as demographics, past purchases, website visits, and other activities. This data can then be used to assign a score to each lead and prioritize them accordingly.

Additionally, data analytics can be used to track the effectiveness of lead-scoring systems and make adjustments as needed for better results. Data analytics can help businesses generate more leads by utilizing lead-scoring systems that are tailored to their specific needs.
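A very simple lead-scoring system can be sketched as a weighted sum of engagement signals. The signal names and point values below are hypothetical; a real system would derive its weights from historical conversion data rather than hard-coding them.

```python
# Assumed point values per signal; these are illustrative, not recommended weights.
WEIGHTS = {
    "visited_pricing": 30,
    "opened_email": 10,
    "past_purchase": 40,
    "requested_demo": 50,
}


def score_lead(lead):
    """Sum the weights of every signal the lead has triggered."""
    return sum(weight for signal, weight in WEIGHTS.items() if lead.get(signal))


# Hypothetical leads with the signals each one has shown.
leads = [
    {"name": "lead_a", "visited_pricing": True, "opened_email": True},
    {"name": "lead_b", "requested_demo": True, "past_purchase": True},
    {"name": "lead_c", "opened_email": True},
]

# Highest-scoring leads come first so sales can prioritize them.
ranked = sorted(leads, key=score_lead, reverse=True)

for lead in ranked:
    print(lead["name"], score_lead(lead))
```

Tracking which scored leads actually convert makes it possible to adjust the weights over time, which is the feedback loop described above.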

Conclusion

Businesses can use data analytics to identify their target audience and tailor their campaigns accordingly to generate more leads.

Additionally, data analytics can be used to track the performance of campaigns and measure the effectiveness of various strategies. Furthermore, data analytics can help businesses understand customer behavior and trends, enabling them to make more informed decisions about their marketing efforts. Finally, data analytics can help businesses identify new opportunities for growth and expansion.

It is an invaluable tool that can help businesses generate more leads by providing them with actionable insights into their target audience's behavior and preferences.