Don't wanna be here? Send us removal request.

Text

Machine Learning for Data Analysis: K-Means Cluster Analysis (python)

SUMMARY

K-Means Cluster Analysis is an unsupervised machine learning method that groups together observations based on the similarity (low variance) of the responses of multiple variables. These groups or clusters, which are subsets of the dataset, group together responses that have low variance within cluster and greater variance between each cluster. The goal is to have little or no overlap between the responses grouped into each cluster. Clustering can be used to determine which variables are similar enough to each other that they can be possible candidates for being combined into a single categorical variable.

For this assignment I used the variable “incomeperperson” that I’ve used in previous lessons for the target variable, as the “clustering assignment” variable to externally validate the clusters created by k-means, using an Analysis of Variance (ANOVA). I used the set of explanatory variables from the previous assignment as the “clustering” variables. These variables were scaled to have a mean of 0 and a standard deviation of 1.

I’m interested to see how clustering assignment variable incomeperperson is related to the clustering variables. In previous assignments the results have varied in terms of which variables are the best predictors of lower or higher income, however life expectancy and urbanrate are the most consistent in having a positive relationship to incomeperson, with and being predictors of higher income when their values are also high. Other variables that some tests have indicated are also positively related to higher income are internetuserate, relectricperperson and polityscore.

I wrote the original python code for this assignment using the 3 cluster model that was included in the code provided by Coursera for the assignment. But after creating the elbow plot and the ANOVO analyses, which both indicated two clusters might be enough, I also ran the analyses for two clusters which is also included below.

All variables are quantitative: Clustering Assigment Validation Variable: incomeperperson Domestic Product per capita in constant 2000 US$.

Clustering Variables used for K-Means Cluster Analysis: armedforcesrate: Armed forces personnel (% of total labor force) alcconsumption: alcohol consumption per adult femaleemployrate: female employees (% of population) hivrate: estimated HIV Prevalence % - (Ages 15-49) internetuserate: Internet users (per 100 people) relectricperperson: residential electricity consumption, per person (kWh) polityscore: Democracy score (Polity) urbanrate: urban population (% of total) lifeexpectancy: 2011 life expectancy at birth (years) employrate: total employees age 15+ (% of population) suicideper100TH: suicide per 100 000 co2emissions: cumulative CO2 emission (metric tons)

RESULTS

The 12 variables above were used in the cluster analysis with a total of 107 observations.

The training set was randomly selected from 70% of the dataset (74 observations) and the test set was the remaining 30% (33 observations).

The k-means cluster analyses was run on the training set using Euclidean distance and 1 to 9 clusters and an elbow curve was plotted for the variance in the clustering variables based on the clusters r-square values.

ELBOW METHOD to determine number of clusters

This “elbow’ plot shows that the average minimum distance (y-axis) of the observations from the cluster centroids for each of the cluster solutions decreases with the increase in the number of clusters (x-axis). The bend in the elbow at cluster 2 indicates that the curve might be leveling off which suggests that 2 clusters may provide an optimum balance of the fewest number of clusters and lowest distance between observations and assigned clusters. There is also a subtle bend at 3 and 8 clusters. 8 clusters may not be much a reduction. Since I already ran the Python code with 3 clusters I started the analysis with 3. However, since the results indicated that 2 might be more optimal and also ran the analysis with 2 variables. CANONICAL DISCRIMINANT ANALYSES to reduce clustering variables

To reduce the number of clustering variables canonical discriminant analyses was used. The two canonical variables used are linear combinations of the 12 clustering variables listed above. Additional canonical variables can be created but most of the variance in the clustering variables is accounted for in the first two canonical variables. The scatterplot graph is created by assigning the y-axis to Canonical Variable 2 and the x-axis to Canonical Variable 1. The scatterplot itself is the result of plotting both the Canonical Variable 1 and 2 for each of the observations in the training set and showing by color the k-means cluster that was assigned to each observation.

Reviewing the scatterplot it appears that there is relatively little overlap between the 2 canonical variables which suggests there is good separation among the three variables but all three are somewhat spread out which indicates less correlation among the observations and higher variance within each cluster than is ideal. It also shows that the teal and blue clusters are mostly clustered with negative values for Canonical variable 1 and the yellow cluster is mostly positive, suggesting that the two cluster might be enough to include most of the variance between observations.

COMPARISON OF CLUSTER VARIABLE MEANS by each cluster

Clustering variable means by cluster index urbanrate employrate lifeexpectancy armedforcesrate \ cluster 0 109.15 0.21 -0.88 0.21 1.45 1 114.97 0.60 -0.14 0.68 -0.18 2 101.21 -0.64 0.47 -0.92 -0.30

polityscore relectricperperson internetuserate femaleemployrate \ cluster 0 -1.13 0.06 -0.07 -1.45 1 0.69 0.59 0.83 0.07 2 -0.23 -0.59 -0.93 0.48

alcconsumption hivrate suicideper100th co2emissions cluster 0 -1.02 -0.37 -0.75 -0.20 1 0.81 -0.32 0.24 0.30 2 -0.52 0.70 -0.28 -0.20

In reviewing the means for all 12 clustering variables by cluster it looks like two clusters, Cluster 1 and 2, include most of the variable values that in past assignments (LASSO, Random Forests, Decision trees, etc.) have been the best predictors for the incomeperperson target/assignment variable, suggesting that two clusters may be more optimal than three.

In Cluster 0, armedforcesrate is the highest value for the variable in all 3 clusters, and employrate, polity score, female employment, alconsumption, hivrate, suicideper100th are at the lowest. In previous assignments most of these variables had little or no significance in predicting income levels. The exceptions were polity score and hivrate which for some models did have some prediction value. It suggests that this cluster is comprised of the values that are the least valuable in predicting incomeperperson, and could possibly be combined in Cluster 2 which includes variable values that in the past predicted lower income.

In Cluster 1, urbanrate, lifeexpectancy, polityscore, relectricperperson, internetuserate, alconsumption, suicideper100th and co2emissions have the highest values of all 3 clusters. All but alconsumption, suicideper100th and co2emissions were significant in predicting incomeperperson in at least one model in previous assignments. Since all of the variables that have been significant in predicting higher income in previous models have their highest value, it suggests that this cluster could be a predictor for observations with higher income per person.

In Cluster 2, urbanrate, lifeexpectancy, armedforcesrate, relectricperperson, internetuserate have the lowest value of all clusters. All but armedforcesrate were significant in predicting lower incomeperperson when the values of the variables were low. Hivrate has its highest values, which in a few previous models predicted income would be lower. Overall, the results for this clusters indicate it includes observations which would predict lower income per person.

ANOVA with Cluster Assignment variable: incomeperson

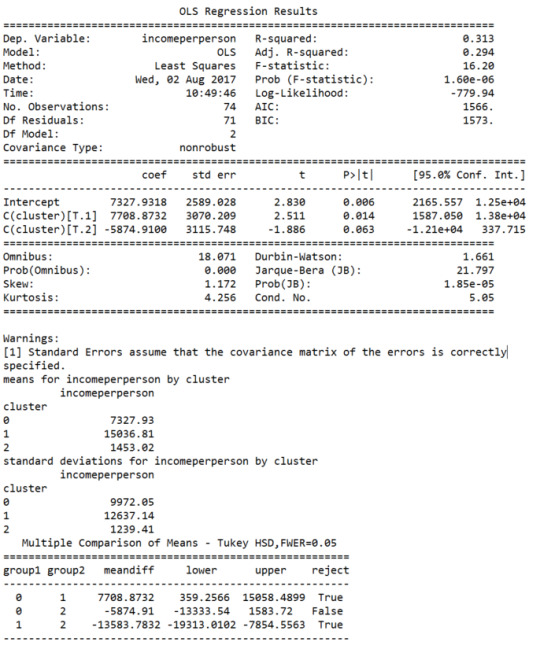

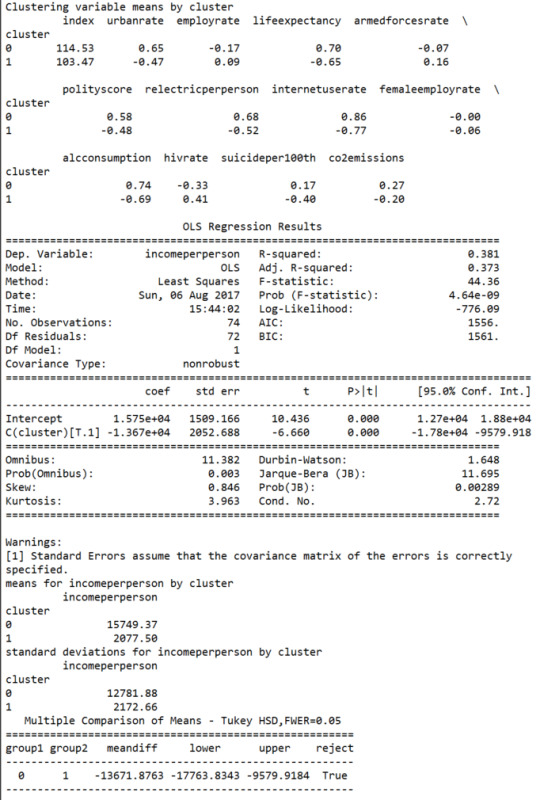

The ANOVA (Analysis of Variance) results above indicate the three clusters have significant variance : -statistic (16.20) and p value very close to 0 (1.60e-06) which suggests significant difference between clusters 0, 1 and 2.

However, the second tukey post hoc comparison test between cluster 0 and cluster 2, where “reject” is False indicates that the “null” hypothesis cannot be rejected. This result again suggests that cluster 0 may not have enough variance from cluster 2, and that two clusters may be more efficient than 3.

Below are all test result for two clusters which I think confirms that two clusters are more optimal.

RESULTS TWO CLUSTERS

The CANONICAL DISCRIMINANT ANALYSES for two clusters indicates less overlap and better separation between clusters than with three.

The F-statistic (44.36) and p-value (4.64e-09) for two clusters indicate greater variance between clusters than for three clusters.

CODE

# -*- coding: utf-8 -*- """ 08-1-17

@author: kbolam """

# -*- coding: utf-8 -*-

from pandas import Series, DataFrame import pandas import numpy as np import matplotlib.pylab as plt from sklearn.cross_validation import train_test_split from sklearn import preprocessing from sklearn.cluster import KMeans

""" Machine Learning for Data Analysis Random Forests """

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert all variables to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce') data['armedforcesrate'] = pandas.to_numeric(data['armedforcesrate'], errors='coerce') data['polityscore'] = pandas.to_numeric(data['polityscore'], errors='coerce') data['relectricperperson'] = pandas.to_numeric(data['relectricperperson'], errors='coerce') data['internetuserate'] = pandas.to_numeric(data['internetuserate'], errors='coerce') data['femaleemployrate'] = pandas.to_numeric(data['femaleemployrate'], errors='coerce') data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce') data['hivrate'] = pandas.to_numeric(data['hivrate'], errors='coerce') data['suicideper100th'] = pandas.to_numeric(data['suicideper100th'], errors='coerce') data['co2emissions'] = pandas.to_numeric(data['co2emissions'], errors='coerce')

data_clean=data.dropna() ''' # comment out for Cluster Assign4 #Change Target variable to binary categorical variable def incomegrp (row): if row['incomeperperson'] <= 3500: return 0 elif row['incomeperperson'] > 3500 : return 1

# added ".loc[:," to "data_clean['armedforcesgrp'] =" to get rid of copy error "Try using .loc[row_indexer,col_indexer] = value instead" # still getting error for another line but all predictors statement seem to be working with update and no more errors data_clean.loc[:,'incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk2 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk2) ''' #select predictor variables and target variable as separate data sets #incomeperperson is sort of target variable and should not be included in cluster or clustervar cluster= data_clean[['urbanrate','employrate','lifeexpectancy','armedforcesrate', 'polityscore','relectricperperson','internetuserate','femaleemployrate','alcconsumption', 'hivrate','suicideper100th','co2emissions']]

# comment out for Cluster Assign4 #target = data_clean.incomegrp

cluster.describe()

clustervar=cluster.copy()

#need to remove incomeperperson from clustervar #clustervar['incomeperperson']=preprocessing.scale(clustervar[['incomeperperson']].astype('float64')) from sklearn.preprocessing import MinMaxScaler min_max=MinMaxScaler() clustervar['urbanrate']=preprocessing.scale(clustervar[['urbanrate']].astype('float64')) clustervar['employrate']=preprocessing.scale(clustervar[['employrate']].astype('float64')) clustervar['lifeexpectancy']=preprocessing.scale(clustervar[['lifeexpectancy']].astype('float64')) clustervar['armedforcesrate']=preprocessing.scale(clustervar[['armedforcesrate']].astype('float64')) clustervar['polityscore']=preprocessing.scale(clustervar[['polityscore']].astype('float64')) clustervar['relectricperperson']=preprocessing.scale(clustervar[['relectricperperson']].astype('float64')) clustervar['internetuserate']=preprocessing.scale(clustervar[['internetuserate']].astype('float64')) clustervar['femaleemployrate']=preprocessing.scale(clustervar[['femaleemployrate']].astype('float64')) clustervar['alcconsumption']=preprocessing.scale(clustervar[['alcconsumption']].astype('float64')) clustervar['hivrate']=preprocessing.scale(clustervar[['hivrate']].astype('float64')) clustervar['suicideper100th']=preprocessing.scale(clustervar[['suicideper100th']].astype('float64')) clustervar['co2emissions']=preprocessing.scale(clustervar[['co2emissions']].astype('float64'))

clustervar=clustervar.dropna()

clustervar.dtypes clustervar.describe()

print(type(clustervar)) #print(type(target))

# split data into train and test sets clus_train, clus_test = train_test_split(clustervar, test_size=.3, random_state=123)

# k-means cluster analysis for 1-9 clusters from scipy.spatial.distance import cdist clusters=range(1,10) meandist=[]

for k in clusters: model=KMeans(n_clusters=k) model.fit(clus_train) clusassign=model.predict(clus_train) meandist.append(sum(np.min(cdist(clus_train, model.cluster_centers_, 'euclidean'), axis=1)) / clus_train.shape[0])

""" Plot average distance from observations from the cluster centroid to use the Elbow Method to identify number of clusters to choose """ ''' plt.plot(clusters, meandist) plt.xlabel('Number of clusters') plt.ylabel('Average distance') plt.title('Selecting k with the Elbow Method') '''

# Interpret 3 cluster solution model3=KMeans(n_clusters=2) model3.fit(clus_train) clusassign=model3.predict(clus_train) # plot clusters

from sklearn.decomposition import PCA pca_2 = PCA(2) plot_columns = pca_2.fit_transform(clus_train) plt.scatter(x=plot_columns[:,0], y=plot_columns[:,1], c=model3.labels_,) plt.xlabel('Canonical variable 1') plt.ylabel('Canonical variable 2') plt.title('Scatterplot of Canonical Variables for 2 Clusters') plt.show()

""" BEGIN multiple steps to merge cluster assignment with clustering variables to examine cluster variable means by cluster """ # create a unique identifier variable from the index for the # cluster training data to merge with the cluster assignment variable clus_train.reset_index(level=0, inplace=True) # create a list that has the new index variable cluslist=list(clus_train['index']) # create a list of cluster assignments labels=list(model3.labels_) # combine index variable list with cluster assignment list into a dictionary newlist=dict(zip(cluslist, labels)) newlist # convert newlist dictionary to a dataframe newclus=DataFrame.from_dict(newlist, orient='index') newclus # rename the cluster assignment column newclus.columns = ['cluster']

# now do the same for the cluster assignment variable # create a unique identifier variable from the index for the # cluster assignment dataframe # to merge with cluster training data newclus.reset_index(level=0, inplace=True) # merge the cluster assignment dataframe with the cluster training variable dataframe # by the index variable merged_train=pandas.merge(clus_train, newclus, on='index') merged_train.head(n=100) # cluster frequencies merged_train.cluster.value_counts()

""" END multiple steps to merge cluster assignment with clustering variables to examine cluster variable means by cluster """

# FINALLY calculate clustering variable means by cluster clustergrp = merged_train.groupby('cluster').mean() print ("Clustering variable means by cluster") print(clustergrp)

# validate clusters in training data by examining cluster differences in income using ANOVA # first have to merge income with clustering variables and cluster assignment data

#may need to scale this variable income_data=data_clean['incomeperperson']

# split income data into train and test sets income_train, income_test = train_test_split(income_data, test_size=.3, random_state=123)

print ('income_train') chk1 = income_train.count() print(chk1)

print ('income_test') chk2 = income_test.count() print(chk2)

#print (income_test) income_train1=pandas.DataFrame(income_train)

#income_train1=income_train1.set_index(['incomeperperson']) income_train1.reset_index(level=0, inplace=True) #print('income_train1') #print (income_train1)

#merged_train_all=pandas.merge(income_train1, merged_train, on='incomeperperson') #THE FOLLOWING IS ORIGINAL merged_train_all=pandas.merge(income_train1, merged_train, on='index')

sub1 = merged_train_all[['incomeperperson', 'cluster']].dropna()

#sub1 = sub1.reindex(level=0, inplace=True)

import statsmodels.formula.api as smf import statsmodels.stats.multicomp as multi

incomemod = smf.ols(formula='incomeperperson ~ C(cluster)', data=sub1).fit() print (incomemod.summary())

print ('means for incomeperperson by cluster') m1= sub1.groupby('cluster').mean() print (m1)

print ('standard deviations for incomeperperson by cluster') m2= sub1.groupby('cluster').std() print (m2)

mc1 = multi.MultiComparison(sub1['incomeperperson'], sub1['cluster']) res1 = mc1.tukeyhsd() print(res1.summary())

0 notes

Text

Machine Learning for Data Analysis: LASSO Regression (python)

SUMMARY

LASSO stands for "Least Absolute Selection and Shrinkage Operator". It is a supervised machine learning method that can be useful for reducing a large set of predictor explanatory variables to a smaller set of the the most accurate predictors of the target variable. For those variables that the LASSO method determines are the least significant predictors, the coefficient is reduced to 0 and the coefficients for the variables that are the better predictors is calculated.

I added the additional explanatory variables of Carbon Dioxide Emissions and Suicide per 100th to those used for the last assignment for a total of 12 explanatory variables. All variables are from the Gapminder dataset provided by Coursera.

Target Variable: incomegrp. Gross Domestic Product per capita in constant 2000 US$. This is a binary categorial variable created from ‘incomeperperson’. 0=lower income, 1 = higher income

Predictor/Explanatory Variables:

For this assignment I used the original quantitative versions of these variables from the Gapminder dataset. I previous assignments I changed them to binary categorical variables.

armedforcesrate: Armed forces personnel (% of total labor force) alcconsumption: alcohol consumption per adult femaleemployrate: female employees (% of population) hivrate: estimated HIV Prevalence % - (Ages 15-49) internetuserate: Internet users (per 100 people) relectricperperson: residential electricity consumption, per person (kWh) polityscore: Democracy score (Polity) urbanrate: urban population (% of total) lifeexpectancy: 2011 life expectancy at birth (years) employrate: total employees age 15+ (% of population) suicideper100TH: suicide per 100 000 co2emissions: cumulative CO2 emission (metric tons)

RESULTS

The results below indicate that the following variables are the least effective predictors because their coefficient was was reduced to “0″: employrate, armedforcesrate, alcconsumption, suicideper100th, co2emissions.

The most effective predictors because they had the highest coefficent are urbanrate, hivrate, lifeexpectancy and internetuserate.

Compared to the results of the last assignment, which used Random Forest, the most effective predictors determined by the Random Forest and LASSO are different, but the least effective predictors that were included in both tests are the same: employrate, armedforcesrate, alccosumption. So it appears that LASSO could be useful when you have a very large set of predictor variables that you want to reduce to the most significant predictors.

The Mean Square Error (MSE) rate for the training data was .092 and for the test data was .061 which is reflected in the R-square values of 0.63 and 0.76, meaning that training data explained 63% and the test data 76% of the variance in predicting lower and higher income. It is preferable to have the MSE and R-square values for training and test data to be more consistent, and I’d like to do more research to determine why they aren’t closer in value.

Coefficients:

{'urbanrate': 0.7209398362572198, 'employrate': 0.0, 'lifeexpectancy': 0.41845865663194542, 'armedforcesrate': 0.0, 'polityscore': 0.14381892362581544, 'relectricperperson': 0.15238876795635295, 'internetuserate': 0.40935103458276811, 'femaleemployrate': -0.047801254916007535, 'alcconsumption': 0.0, 'hivrate': 0.59565425188405363, 'suicideper100th': 0.0, 'co2emissions': 0.0} training data MSE 0.0918224301364 test data MSE 0.0606194488686 training data R-square 0.632441792817 test data R-square 0.75550155623

CODE

# -*- coding: utf-8 -*- """ 07-29-17

@author: kbolam """

# -*- coding: utf-8 -*-

from pandas import Series, DataFrame import pandas import numpy as np import matplotlib.pylab as plt from sklearn.cross_validation import train_test_split from sklearn.linear_model import LassoLarsCV

""" Machine Learning for Data Analysis Random Forests """

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert all variables to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce') data['armedforcesrate'] = pandas.to_numeric(data['armedforcesrate'], errors='coerce') data['polityscore'] = pandas.to_numeric(data['polityscore'], errors='coerce') data['relectricperperson'] = pandas.to_numeric(data['relectricperperson'], errors='coerce') data['internetuserate'] = pandas.to_numeric(data['internetuserate'], errors='coerce') data['femaleemployrate'] = pandas.to_numeric(data['femaleemployrate'], errors='coerce') data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce') data['hivrate'] = pandas.to_numeric(data['hivrate'], errors='coerce') data['suicideper100th'] = pandas.to_numeric(data['suicideper100th'], errors='coerce') data['co2emissions'] = pandas.to_numeric(data['co2emissions'], errors='coerce')

data_clean=data.dropna()

#Change Target variable to binary categorical variable def incomegrp (row): if row['incomeperperson'] <= 3500: return 0 elif row['incomeperperson'] > 3500 : return 1

# added ".loc[:," to "data_clean['armedforcesgrp'] =" to get rid of copy error "Try using .loc[row_indexer,col_indexer] = value instead" # still getting error for another line but all predictors statement seem to be working with update and no more errors data_clean.loc[:,'incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk2 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk2)

#select predictor variables and target variable as separate data sets predvar= data_clean[['urbanrate','employrate','lifeexpectancy','armedforcesrate', 'polityscore','relectricperperson','internetuserate','femaleemployrate','alcconsumption', 'hivrate','suicideper100th','co2emissions']]

target = data_clean.incomegrp

# standardize predictors to have mean=0 and sd=1 predictors=predvar.copy()

''' -- Used "MinMaxScalar" function with "fit_transform" instead of "preprocessing.scale" to get rid of "too large values" error below. MinMax scales value to between -1 and 1, I think.: UserWarning: Numerical issues were encountered when centering the data and might not be solved. Dataset may contain too large values. You may need to prescale your features. -- Added extra set of [] around predictor on right side to stop predictor from being transformed into numpy array AND to avoid getting Deprecation reshape warning https://stackoverflow.com/questions/35166146/sci-kit-learn-reshape-your-data-either-using-x-reshape-1-1 '''

from sklearn.preprocessing import MinMaxScaler min_max=MinMaxScaler() predictors['urbanrate']=min_max.fit_transform(predictors[['urbanrate']].astype('float64')) predictors['employrate']=min_max.fit_transform(predictors[['employrate']].astype('float64')) predictors['lifeexpectancy']=min_max.fit_transform(predictors[['lifeexpectancy']].astype('float64')) predictors['armedforcesrate']=min_max.fit_transform(predictors[['armedforcesrate']].astype('float64')) predictors['polityscore']=min_max.fit_transform(predictors[['polityscore']].astype('float64')) predictors['relectricperperson']=min_max.fit_transform(predictors[['relectricperperson']].astype('float64')) predictors['internetuserate']=min_max.fit_transform(predictors[['internetuserate']].astype('float64')) predictors['femaleemployrate']=min_max.fit_transform(predictors[['femaleemployrate']].astype('float64')) predictors['alcconsumption']=min_max.fit_transform(predictors[['alcconsumption']].astype('float64')) predictors['hivrate']=min_max.fit_transform(predictors[['hivrate']].astype('float64')) predictors['suicideper100th']=min_max.fit_transform(predictors[['suicideper100th']].astype('float64')) predictors['co2emissions']=min_max.fit_transform(predictors[['co2emissions']].astype('float64'))

predictors=predictors.dropna()

predictors.dtypes predictors.describe()

print(type(predictors)) print(type(target))

# split data into train and test sets. pred_train, pred_test, tar_train, tar_test = train_test_split(predictors, target, test_size=.3, random_state=123)

# specify the lasso regression model model=LassoLarsCV(cv=10, precompute=False).fit(pred_train,tar_train)

# print variable names and regression coefficients print (dict(zip(predictors.columns, model.coef_)))

# plot coefficient progression m_log_alphas = -np.log10(model.alphas_) ax = plt.gca() plt.plot(m_log_alphas, model.coef_path_.T) plt.axvline(-np.log10(model.alpha_), linestyle='--', color='k', label='alpha CV') plt.ylabel('Regression Coefficients') plt.xlabel('-log(alpha)') plt.title('Regression Coefficients Progression for Lasso Paths')

# plot mean square error for each fold m_log_alphascv = -np.log10(model.cv_alphas_) plt.figure() plt.plot(m_log_alphascv, model.cv_mse_path_, ':') plt.plot(m_log_alphascv, model.cv_mse_path_.mean(axis=-1), 'k', label='Average across the folds', linewidth=2) plt.axvline(-np.log10(model.alpha_), linestyle='--', color='k', label='alpha CV') plt.legend() plt.xlabel('-log(alpha)') plt.ylabel('Mean squared error') plt.title('Mean squared error on each fold')

# MSE from training and test data from sklearn.metrics import mean_squared_error train_error = mean_squared_error(tar_train, model.predict(pred_train)) test_error = mean_squared_error(tar_test, model.predict(pred_test)) print ('training data MSE') print(train_error) print ('test data MSE') print(test_error)

# R-square from training and test data rsquared_train=model.score(pred_train,tar_train) rsquared_test=model.score(pred_test,tar_test) print ('training data R-square') print(rsquared_train) print ('test data R-square') print(rsquared_test)

0 notes

Text

Machine Learning for Data Analysis: Random Forests (python)

SUMMARY

The random forest assignment expands on last week’s use of decision trees. Random forests is a data mining algorithm that creates multiple decision trees to potentially increase the accuracy of predicting the value of the target variable. The random forest trees are built differently than individual decision trees to reduce bias and over-fitting. For each split or decision point, unlike a the individual decision tree, the random forest tree does not include all predictor variables in making the decision, but rather a “random subset” of the predictor variables is used. The results for each tree are then averaged into one accuracy score and the average importance of each variable (by percentage) in predicting the target is also calculated.

To get a better idea of how random forests work, I added additional explanatory variables to the ones used for the last assignment, for a total of 10 predictor/explanatory variables that are listed below. I used the same target variable, income per person. All variables are from the Gapminder dataset provided by Coursera.

The python code provided for this assignment created 25 random forest trees. I ran the code several times and got very different accuracy rates due the use of random subsets to build the trees. Result 1 below had the highest at about 91% and Result 2 had one of the lowest about 82%.

All variables are categorical binary with “0″ being the lower rate and “1″ the higher rate.

Target Variable: incomegrp (incomeperperson) Gross Domestic Product per capita in constant 2000 US$.

Predictor/Explanatory Variables:

predictors = data2[['armedforcesgrp', 'alcconsumptiongrp', 'femaleemploygrp', 'hivrategrp', 'internetgrp', 'relectricgrp', 'politygrp', 'urbangrp', 'lifegrp','employgrp']]

1. armedforcesgrp (armedforcesrate): Armed forces personnel (% of total labor force) 2. alcconsumptiongrp (alcconsumption): alcohol consumption per adult 3. femaleemploygrp (femaleemployrate): female employees (% of population) 4. hivrategrp (hivrate): estimated HIV Prevalence % - (Ages 15-49) 5. internetgrp (internetuserate): Internet users (per 100 people) 6. relectricgrp (relectricperperson): residential electricity consumption, per person (kWh) 7. politygrp (polityscore): Democracy score (Polity) 8. urbangrp (urbanrate): urban population (% of total) 9. lifegrp (lifeexpectancy): 2011 life expectancy at birth (years) 10. employgrp (employrate): total employees age 15+ (% of population)

RESULT 1

Out of 44 observations, 26 were correctly predicted as Lower Income (0) and 14 were correctly predicted as Higher Income (1) with an accuracy rate of 91%

The Predictors output indicates by percentage, the importance of each variable in predicating the value of the Target Variable, Income per person. The value for each variable is listed in the order the variables are listed in the Summary above. In this Random Forest example “relectricgrp” at 27% was the most important variable in determining the outcome, with “politygrp” (18%), “urbangrp” (10%) and “lifegrp” (11%) also potentially significant.

The graph below includes the accuracy values for each of the 25 decision trees included in the Random Forest calculation (note the y-axis values start at .725)

RESULT 2

Out of 44 observations 19 were correctly predicted as Lower Income (0) and 17 were correctly predicted as Higher Income (1) with an accuracy rate of 82%

The Predictors output indicates by percentage, the importance of each variable in predicating the value of the Target Variable, Income per person. The value for each variable is listed in the order the variables are listed in the Summary above. In this Random Forest example “politygrp” at 23% was the most important variable in determining the outcome, with “internetgrp” (14%), “relectricgrp” (17%) and “lifegrp” (13%) also potentially significant.

The graph below includes the accuracy values for each of the 25 decision trees included in the Random Forest calculation (note the y-axis values start at .70)

CODE

# -*- coding: utf-8 -*- """ 07-22-17

@author: kbolam """

# -*- coding: utf-8 -*-

from pandas import Series, DataFrame import pandas import numpy as np import os import matplotlib.pylab as plt #from sklearn.cross_validation import train_test_split from sklearn.model_selection import train_test_split from sklearn.cross_validation import train_test_split from sklearn.tree import DecisionTreeClassifier from sklearn.metrics import classification_report import sklearn.metrics # Feature Importance from sklearn import datasets from sklearn.ensemble import ExtraTreesClassifier

""" Machine Learning for Data Analysis Random Forests """

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert all variables to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce') data['armedforcesrate'] = pandas.to_numeric(data['armedforcesrate'], errors='coerce') data['polityscore'] = pandas.to_numeric(data['polityscore'], errors='coerce') data['relectricperperson'] = pandas.to_numeric(data['relectricperperson'], errors='coerce') data['internetuserate'] = pandas.to_numeric(data['internetuserate'], errors='coerce') data['femaleemployrate'] = pandas.to_numeric(data['femaleemployrate'], errors='coerce') data['alcconsumption'] = pandas.to_numeric(data['alcconsumption'], errors='coerce') data['hivrate'] = pandas.to_numeric(data['hivrate'], errors='coerce')

data_clean=data.dropna()

#Change all variables into binary categorical variables

def armedforcesgrp (row): if row['armedforcesrate'] <= 1: return 0 elif row['armedforcesrate'] > 1 : return 1

# added ".loc[:," to "data_clean['armedforcesgrp'] =" to get rid of copy error "Try using .loc[row_indexer,col_indexer] = value instead" # still getting error for another line but all predictors statement seem to be working with update and no more errors data_clean.loc[:,'armedforcesgrp'] = data_clean.apply (lambda row: armedforcesgrp (row),axis=1)

chk1g = data_clean['armedforcesgrp'].value_counts(sort=False, dropna=False) print(chk1g)

def alcconsumptiongrp (row): if row['alcconsumption'] <= 7: return 0 elif row['alcconsumption'] > 7 : return 1

data_clean.loc[:,'alcconsumptiongrp'] = data_clean.apply (lambda row: alcconsumptiongrp (row),axis=1)

chk1f = data_clean['alcconsumptiongrp'].value_counts(sort=False, dropna=False) print(chk1f)

def femaleemploygrp (row): if row['femaleemployrate'] <= 47: return 0 elif row['femaleemployrate'] > 47 : return 1

data_clean.loc[:,'femaleemploygrp'] = data_clean.apply (lambda row: femaleemploygrp (row),axis=1)

chk1e = data_clean['femaleemploygrp'].value_counts(sort=False, dropna=False) print(chk1e)

def hivrategrp (row): if row['hivrate'] <= .25: return 0 elif row['hivrate'] > .25 : return 1

data_clean.loc[:,'hivrategrp'] = data_clean.apply (lambda row: hivrategrp (row),axis=1)

chk1d = data_clean['hivrategrp'].value_counts(sort=False, dropna=False) print(chk1d)

def internetgrp (row): if row['internetuserate'] <= 32: return 0 elif row['internetuserate'] > 32 : return 1

data_clean.loc[:,'internetgrp'] = data_clean.apply (lambda row: internetgrp (row),axis=1)

chk1c = data_clean['internetgrp'].value_counts(sort=False, dropna=False) print(chk1c)

def relectricgrp (row): if row['relectricperperson'] <= 550: return 0 elif row['relectricperperson'] > 550 : return 1

data_clean.loc[:,'relectricgrp'] = data_clean.apply (lambda row: relectricgrp (row),axis=1)

chk1b = data_clean['relectricgrp'].value_counts(sort=False, dropna=False) print(chk1b)

def politygrp (row): if row['polityscore'] <= 7: return 0 elif row['polityscore'] > 7 : return 1

data_clean.loc[:,'politygrp'] = data_clean.apply (lambda row: politygrp (row),axis=1)

chk1a = data_clean['politygrp'].value_counts(sort=False, dropna=False) print(chk1a)

def lifegrp (row): if row['lifeexpectancy'] <= 73.3: return 0 elif row['lifeexpectancy'] > 73.3 : return 1

data_clean.loc[:,'lifegrp'] = data_clean.apply (lambda row: lifegrp (row),axis=1)

chk1 = data_clean.loc[:,'lifegrp'].value_counts(sort=False, dropna=False) print(chk1)

def incomegrp (row): if row['incomeperperson'] <= 3500: return 0 elif row['incomeperperson'] > 3500 : return 1

data_clean.loc[:,'incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk2 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk2)

def urbangrp (row): if row['urbanrate'] <= 61 : return 0 elif row['urbanrate'] > 61 : return 1

data_clean.loc[:,'urbangrp'] = data_clean.apply (lambda row: urbangrp (row),axis=1)

chk3 = data_clean['urbangrp'].value_counts(sort=False, dropna=False) print(chk3)

def employgrp (row): if row['employrate'] <= 58 : return 0 elif row['employrate'] > 58 : return 1

data_clean.loc[:,'employgrp'] = data_clean.apply (lambda row: employgrp (row),axis=1)

chk4 = data_clean['employgrp'].value_counts(sort=False, dropna=False) print(chk4)

data2=data_clean.dropna()

data2.dtypes data2.describe()

print(type(data2))

#predictors for random forest

predictors = data2[['armedforcesgrp', 'alcconsumptiongrp', 'femaleemploygrp', 'hivrategrp', 'internetgrp', 'relectricgrp', 'politygrp', 'urbangrp', 'lifegrp','employgrp']]

print(type(predictors))

#Rename response (target) variable "incomegrp" to "targets" targets = data2.incomegrp

pred_train, pred_test, tar_train, tar_test = train_test_split(predictors, targets, test_size=.4)

p_train=pred_train.shape print("p_train") print(p_train) p_test=pred_test.shape print("p_test") print(p_test) t_train=tar_train.shape print("t_train") print(t_train) t_test=tar_test.shape print("t_test") print(t_test) #Build model on training data from sklearn.ensemble import RandomForestClassifier

classifier=RandomForestClassifier(n_estimators=25) classifier=classifier.fit(pred_train,tar_train)

predictions=classifier.predict(pred_test)

# create and print confusion matrix and accuracy score my_conf_matrix = sklearn.metrics.confusion_matrix(tar_test,predictions) my_accuracy_score = sklearn.metrics.accuracy_score(tar_test, predictions)

print("Confusion Matrix") print(my_conf_matrix) print("Accuracy Score") print(my_accuracy_score)

# fit an Extra Trees model to the data model = ExtraTreesClassifier() model.fit(pred_train,tar_train) # display the relative importance of each attribute #print("Predictors") #print("1. armed forces rate 2. alcohol consumption rate 3. female employment rate 4. HIV rate 5. internet use rate 6. residential electricity consumption per person 7. polity (democracy rate) 8. urban population percentage 9. life expectancy rate 10. employment rate") #print("Importance of Predictors(percentage) listed in same order as above.") print(model.feature_importances_)

""" Running a different number of trees and see the effect of that on the accuracy of the prediction """

trees=range(25) accuracy=np.zeros(25)

for idx in range(len(trees)): classifier=RandomForestClassifier(n_estimators=idx + 1) classifier=classifier.fit(pred_train,tar_train) predictions=classifier.predict(pred_test) accuracy[idx]=sklearn.metrics.accuracy_score(tar_test, predictions)

plt.cla() plt.plot(trees, accuracy)

plt.savefig("graph.png")

0 notes

Text

Machine Learning for Data Analysis: Running a Classification Tree

Summary

A classification tree uses explanatory/predictor variables to predict the value of the response/target variable. Below are the target and predictor variables used to generate the classification tree included below. All variables are binary and are the same variables used in the previous blog for Course 3 (Regression Modeling in Practice), Assignment 4.

Variable Description

Datasource: Coursera Gapminder dataset

Target Variable

The target variable is assigned in the following python statement: targets: “targets = data2.incomegrp”

Target Variable = incomegrp (incomeperperson)

1 = Higher Income (Income per Person > $3500)

0 = Lower Income (Income per Person =< $3500)

Predictor Variables

The variables names of X[0], X[1], X[2] are assigned according to how they are listed in the following python statement: “predictors = data2[['urbangrp', 'lifegrp','employgrp']]”

Predictor X[0] is urbangrp (urbanization rate)

X[0] = 1 Higher Urban Rate (> 50%)

X[0] = 0 Lower Urban Rate (=< 50%)

Predictor X[1] is lifegrp (life expectancy)

X[1] = 1 Longer Life Expectancy (Years lived > 73.3 years)

X[1] = 0 Shorter Life Expectancy (Years lived =< 73.3 years)

Predictor X[1] is employgrp (employment rate)

X[2] = 1 High Employment Rate (> 50%)

X[2] = 0 Low Employment Rate (=< 50%)

Classification Tree and Confusion Matrix

Python Confusion Matrix and Accuracy:

Confusion Matrix with labels:

Accuracy Score: The accuracy score of approximately 0.78 indicates that the decision tree model has classified 78% of the test dataset sample correctly as either Higher or Lower Income.

Classification Tree

Overview of classification tree

Far Right Nodes: For the 99 sample/observation countries in the top node, the first split is on life expectancy (lifegrp). The far right nodes, indicate that out of these 99 countries those with a higher life expectancy (1st node), higher urbanization rate (2nd node), and higher employment rate (3rd node), 3 countries are classified as having a lower income and 31 are classified as having a higher income.

Far Left Nodes: Of the 99 countries with a lower life expectancy(1st node) and lower urbanization rate (2nd node), 35 countries are classified as having a lower income rate and 0 countries are classified as having a higher income rate.

Left interior nodes: Out of the 99 countries with a lower life expectancy (1st node), higher urbanization rate (2nd node), and higher employment rate (3rd node), 11 countries are classified as having alower income and 1 with a higher income.

Detailed interpretation of the splits of the far right nodes:

Top or 1st Node

The top node of the classification tree above splits on the X[1] (lifegrp) variable

X[1] <=0.5 -- initial criteria used to split the 99 samples

gini = 0.4853 -- the gini is a statistical measure of uncertainty. A lower gini index indicates a more uniform dataset with a gini of 0.0 indicating all values correspond to one of the target variables.

Samples = 99 – indicates the number of observations included in the split.

Value (58, 41) indicates 58 out of 99 samples have a Target variable = 0 (lower income) and the remaining 41 have Target variable = 1 (higher income) .

The 51 samples where X[1] = 0 and are less than 0.5 are classified as “True” and move to the left side of the split.

The 48 samples where X[1] = 1 and are greater than 0.5 and are classified as “False” they move to the left side of the split

2nd Node on far right

The 48 samples where X[1] <= 0.5) = False move down to the 2nd Node on far right and split on X[0] = urbangrp.

X[0] <=0.5 -- criteria used to split the 48 samples.

gini = 0.3299 -- the gini is a statistical measure of uncertainty used as the criteria for the split. The gini value of 0.3299 is less than the 1st node indicating that this classification has less uncertainty.

Samples = 48 – indicates the number of observations included in the split.

Value (10, 38) indicate 10 out of 38 samples have a Target variable = 0 (lower income) and the remaining 38 have Target variable = 1 (higher income).

The 6 samples where X[0] = 0 and are less than 0.5 are classified as “True” and move to the left side of the split.

The 42 samples where X[0] = 1 and are greater than 0.5 and are classified as “False” they move to the left side of the split

3rd Node on far right

The 42 samples where (X[0] <= 0.5) = False move down to the node on the right and split on X[2] = employgrp.

X[2] <=0.5 -- criteria used to split the 42 samples

gini = 0.2449 -- the gini is a statistical measure of uncertainty used as the criteria for the split. The gini value of 0.2449 is less than the 2nd node indicating that this classification has less uncertainty.

Samples = 42 – indicates the number of observations included in the split

Value (6, 36) indicates 6 out of 42 samples have a Target variable = 0 (lower income) and the remaining 36 have Target variable = 1 (higher income) .

The 8 samples where X[2] = 0 and are less than 0.5 are classified as “True” and move to the left side of the split.

The 34 samples where X[2] = 1 and are greater than 0.5 are classified as “False” an move to the left side of the split

4th Node on far right

The 34 samples where (X[2] <= 0.5) = False move down to the next node on the far right with no further splits. This is a terminal node.

gini = 0.1609 -- the gini is a statistical measure of uncertainty used as the criteria for the split. The gini value of 0.1609 is less than the 3rd node indicating that this classification has less uncertainty.

Samples = 34 – indicates the number of observations included in the terminal node

Value (3, 31) indicates 3 out of 34 samples have a Target variable = 0 (lower income) and the remaining 31 have Target variable = 1 (higher income).

References: http://chrisstrelioff.ws/sandbox/2015/06/08/decision_trees_in_python_with_scikit_learn_and_pandas.html

http://bhaskarjitsarmah.github.io/Implementing_Decision_Tree_in_Python

http://www3.nccu.edu.tw/~jthuang/Gini.pdf

http://www.dataschool.io/simple-guide-to-confusion-matrix-terminology/

http://dsdeepdive.blogspot.com/2015/09/decision-tree-with-python.html

Code

# -*- coding: utf-8 -*- """ 07-16-17

@author: kbolam """

# -*- coding: utf-8 -*-

from pandas import Series, DataFrame import pandas import numpy as np import os import matplotlib.pylab as plt #from sklearn.cross_validation import train_test_split from sklearn.model_selection import train_test_split from sklearn.tree import DecisionTreeClassifier from sklearn.metrics import classification_report import sklearn.metrics

#os.chdir("C:\TREES")

""" Data Engineering and Analysis """

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce')

data_clean=data.dropna()

#Need to change incomeperperson, urbanrate, employrate and lifeexpectancy # into binary categorical variables

def lifegrp (row): if row['lifeexpectancy'] <= 73.3: return 0 elif row['lifeexpectancy'] > 73.3 : return 1

#data_clean=data.dropna()

data_clean['lifegrp'] = data_clean.apply (lambda row: lifegrp (row),axis=1)

chk1 = data_clean['lifegrp'].value_counts(sort=False, dropna=False) print(chk1)

def incomegrp (row): if row['incomeperperson'] <= 3500: return 0 elif row['incomeperperson'] > 3500 : return 1

data_clean['incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk2 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk2)

def urbangrp (row): if row['urbanrate'] <= 50 : return 0 elif row['urbanrate'] > 50 : return 1

data_clean['urbangrp'] = data_clean.apply (lambda row: urbangrp (row),axis=1)

chk3 = data_clean['urbangrp'].value_counts(sort=False, dropna=False) print(chk3)

def employgrp (row): if row['employrate'] <= 50 : return 0 elif row['employrate'] > 50 : return 1

data_clean['employgrp'] = data_clean.apply (lambda row: employgrp (row),axis=1)

chk4 = data_clean['employgrp'].value_counts(sort=False, dropna=False) print(chk4)

data2=data_clean.dropna()

data2.dtypes data2.describe()

print(type(data2))

#predictors = data2[['incomegrp','urbangrp', 'lifegrp','employgrp']]

predictors = data2[['urbangrp', 'lifegrp','employgrp']]

print(type(predictors))

#Rename response (target) variable "incomegrp", "targets" targets = data2.incomegrp

pred_train, pred_test, tar_train, tar_test = train_test_split(predictors, targets, test_size=.4)

p_train=pred_train.shape print("p_train") print(p_train) p_test=pred_test.shape print("p_test") print(p_test) t_train=tar_train.shape print("t_train") print(t_train) t_test=tar_test.shape print("t_test") print(t_test)

#Build model on training data classifier=DecisionTreeClassifier() classifier=classifier.fit(pred_train,tar_train)

predictions=classifier.predict(pred_test)

my_conf_matrix = sklearn.metrics.confusion_matrix(tar_test,predictions) my_accuracy_score = sklearn.metrics.accuracy_score(tar_test, predictions)

print("Confusion Matrix") print(my_conf_matrix) print("Accuracy Score") print(my_accuracy_score)

#Displaying the decision tree from sklearn import tree #from StringIO import StringIO from io import StringIO #from StringIO import StringIO from IPython.display import Image out = StringIO() tree.export_graphviz(classifier, out_file=out) import pydotplus graph=pydotplus.graph_from_dot_data(out.getvalue()) my_image = Image(graph.create_png())

#Write image to file so it can be rotated and expanded

graph.write_png('my_graph.png')

0 notes

Text

Basics of Linear Regression: Logistic Regression (python)

Hypothesis

The Hypothesis is that higher Income Per Person (Response Variable) is significantly associated with higher Urban Rate (Primary Explanatory Variable) for the observations of the Countries included in the Gapminder dataset provided for this course. The possible confounding explanatory variables included in the model were Life Expectancy and Employment Rate.

Variable Description

Datasource: Coursera Gapminder dataset

Response Variable: Income Per Person (incomeperperson)

Changed to Binary Categorical variable “incomegrp”:

1 = High Income (Income per Person > $3500)

0 = Low Income (Income per Person =< $3500)

Primary Explanatory Variable: Urban Rate (urbanrate)

Changed to Binary Categorical variable “urbangrp”:

1 = High Urban Rate ( > 50%)

0 = Low Urban Rate ( =< 50% )

Possible confounding Explanatory Variables:

Life Expectancy (lifeexpectancy)

Changed to Binary Categorical variable “lifegrp”:

1 = Longer Life Expectancy (Years lived > 73.3 years)

0 = Shorter Life Expectancy (Years lived =< 73.3 years)

Employment Rate (employrate)

Changed to Binary Categorical variable “employgrp”:

1 = High Employment Rate ( > 50%)

0 = Low Employment Rate ( =< 50%)

Results

In the Logit regression model that includes only the Response variable of Income Per Person, changed into the binary variable “incomegrp” and the Primary Expanatory variable of Urban Rate changed into the binary variable “urbangrp”, the odds of having a higher income were more than 17 times higher in countries with a higher urban rate (greater than 50%) than in countries with a lower urban rate (50% or less): OR=17.15, 95% CI= 6.74 – 43.67. (Output Test 1 below)

After adding the possible confounding variables of Life Expectancy and Employment Rate, the odds of having a higher income were still significant but reduced to 8.35 times higher in countries with a higher urban rate (OR=8.35, 95% CI = 2.80 - 24.93, p=.0000). The decrease in odds from 17.15 to 8.35 was due to the variable Life Expectancy, acting as a confounding variable that was even more significantly associated with higher income than Urban Rate (OR= 16.45, 95% CI=6.44 – 41.99, p=.0000). The other possible confounding variable, Employment Rate, was not significantly associated with Income Per Person in this model as indicated by the p-value greater than 0.05 (OR=2.59, 95% CI=0.88 – 7.68, p=0.086). (Output Test 2 below)

The results of this regression test supports the hypothesis that Urban Rate is significantly associated with Income Per Person, but indicate that other variables, such as Life Expectancy, could be confounding factors that have a greater association with Income Per Person than Urban Rate.

NOTE on why all variables are Categorical Binary: In the first logistic regression test I used quantitative versions of “urbanrate”, “lifeexpectancy” and “employrate” but the results indicated there was no association or a negative association between these explanatory variables and “incomegrp” which was the binary categorical version of “incomeperperson”. Since this reversed the results of the regression tests I did in Assignment 3 which showed a significant association with the Income Per Person and these variables, I decided to change all the explanatory variables into binary categorical variables so that they would be more similar to the examples used in the Lesson 4 videos, which also used binary explanatory variables. I don’t recall that this was a requirement for the assignment, so I thought I would mention why I changed all of the explanatory variables to binary.

Output

1) Regression Test Variables:

Binary Categorical Response: Incomegrp

Binary Categorical Primary Explanatory: Urbangrp

2) Regression Test Variables:

Binary Categorical Response: Incomegrp

Binary Categorical Primary Explanatory: Urbangrp

Binary Categorical Possible Confounder: Lifegrp

Binary Categorical Possible Confounder: Employgrp

Code

# -*- coding: utf-8 -*-

""" Created July 1, 2017 @author: kb """ import numpy import pandas import matplotlib.pyplot as plt import statsmodels.api as sm import statsmodels.formula.api as smf import seaborn

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce')

#Need to change incomeperperson, urbanrate, employrate and lifeexpectancy # into binary categorical variables

def lifegrp (row): if row['lifeexpectancy'] <= 73.3: return 0 elif row['lifeexpectancy'] > 73.3 : return 1

data_clean=data.dropna()

data_clean['lifegrp'] = data_clean.apply (lambda row: lifegrp (row),axis=1)

chk1 = data_clean['lifegrp'].value_counts(sort=False, dropna=False) print(chk1)

def incomegrp (row): if row['incomeperperson'] <= 3500: return 0 elif row['incomeperperson'] > 3500 : return 1

data_clean['incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk2 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk2)

def urbangrp (row): if row['urbanrate'] <= 50 : return 0 elif row['urbanrate'] > 50 : return 1

data_clean['urbangrp'] = data_clean.apply (lambda row: urbangrp (row),axis=1)

chk3 = data_clean['urbangrp'].value_counts(sort=False, dropna=False) print(chk3)

def employgrp (row): if row['employrate'] <= 50 : return 0 elif row['employrate'] > 50 : return 1

data_clean['employgrp'] = data_clean.apply (lambda row: employgrp (row),axis=1)

chk4 = data_clean['employgrp'].value_counts(sort=False, dropna=False) print(chk4)

data2=data_clean.dropna()

# listwise deletion of missing values sub1 = data2[['incomegrp','urbangrp', 'lifegrp','employgrp']].dropna()

############################################################################## # LOGISTIC REGRESSION ##############################################################################

# logistic regression with social phobia lreg1 = smf.logit(formula = 'incomegrp ~ urbangrp', data = sub1).fit() print (lreg1.summary()) # odds ratios print ("Odds Ratios") print (numpy.exp(lreg1.params))

# odd ratios with 95% confidence intervals params = lreg1.params conf = lreg1.conf_int() conf['OR'] = params conf.columns = ['Lower CI', 'Upper CI', 'OR'] print (numpy.exp(conf))

# logistic regression with social phobia and depression lreg2 = smf.logit(formula = 'incomegrp ~ urbangrp + lifegrp', data = sub1).fit() print (lreg2.summary())

# odd ratios with 95% confidence intervals params = lreg2.params conf = lreg2.conf_int() conf['OR'] = params conf.columns = ['Lower CI', 'Upper CI', 'OR'] print (numpy.exp(conf))

# logistic regression with social phobia and depression lreg3 = smf.logit(formula = 'incomegrp ~ urbangrp + lifegrp + employgrp', data = sub1).fit() print (lreg3.summary())

# odd ratios with 95% confidence intervals params = lreg3.params conf = lreg3.conf_int() conf['OR'] = params conf.columns = ['Lower CI', 'Upper CI', 'OR'] print (numpy.exp(conf))

0 notes

Text

Basics of Linear Regression: Multiple Regression (python)

Hypothesis

Datasource: Coursera Gapminder dataset

Response Variable: Income Per Person (incomeperperson)

Primary Explanatory Variable: Urbanization (urbanrate)

The Hypothesis is that Income Per Person is significantly associated with Urbanization for the observations for the Countries included in the Gapminder dataset provided for this course. The Regression test below indicates there is significant association (R-squared = .42, p-value = 0.000) between the Response and Primary Explanatory Variable (see results below). However, additional Multiple Regression tests indicate there are other Explanatory variables that are also significantly associated with Income Per Person and Explanatory variables AND some that proved to be Confounding Variables (see Confounding Variable Rejected below) .

Regression Results: Income Per Person and Urbanization

Multiple Regression Test Results

The Multiple Regression Test results indicate there are other variables that are also significantly associated with Income Per Person.

Response Variable: Income Per Person (incomeperperson)

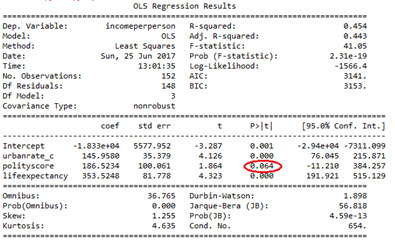

Primary Explanatory Variable: Urbanization (urbanrate: Beta=173.72, p=0.000)

Additional Explanatory variables included in model:

Life Expectancy (lifeexpectancy: Beta 577.816, p=0.000)

Employment rate (employrate: Beta=273.979, p=0.000)

After adjusting for the Potential Confounding Explanatory Variables listed above, Urban Rate, Life Expectancy and Employment Rate were found to be significantly and positively associated with Response Variable, Income Per Person. There does not appear to be evidence of confounding between the Response and Primary Explanatory variable of Urbanization..

Multiple Regression Test Results: Response: incomeperson; Explanatory: urbanrate, lifeexpectancy, employrate

Q-Q Plot:

Response: incomeperson; Explanatory: urbanrate, lifeexpectancy, employrate

The q-q plot indicates that the residuals are close to following a straight line but not close enough to perfectly estimate the variability of the income per person. The curve indicates it’s possible a quadratic variable might improve the distribution of the residuals, however when I included Employment rate as an Explanatory variable, the p-value of the quadratic urbanrate**2 variable increased to more than .05 and I removed it (see Confounding Variables Rejected section below). However, possibly adding other quadratic explanatory variables might improve the distribution.

Residual Plots:

Response: incomeperson; Explanatory: urbanrate, lifeexpectancy, employrate

The standarized residual distribution for the 4 outliers with absolute value of greater than 2.5 is 2.6%, which indicates that this model is possibly provides a good explanation of the variability in income per person.

Regression Plots

Explanatory: urbanrate, lifeexpectancy, employrate

The Regression plots indicate there are significant outliers and residuals however the Partial Regression Plots indicate a basic linear association. Also, the Residual Plot above indicates that when all three explanatory variables are included in the Multiple Regression Tests the outlier residual values are less than 5%.

Leverage Plot

Response: incomeperson; Explanatory: urbanrate, lifeexpectancy, employrate

The Leverage plot indicates, as does the Residual plot (included in blog above), that the outliers do not have significant influence on the estimation of the regression model. One value may have greater influence but it is not an outlier (178 with leverage value greater than .10)

Confounding Variables Rejected

Democracy Score (polityscore)(Calculated by subtracting an autocracy score from a democracy score. The summary measure of a country's democratic and free nature. -10 is the lowest value, 10 the highest.)

Rejected because p-value increased to .064 when Life Expectancy added.

Urbanrate Squared (urbanrate_c**2)

Rejected because p-value increased to .048 when Employment rate added.

CODE

# -*- coding: utf-8 -*- """ Created June 25, 2017 @author: kbolam """ import numpy import pandas import matplotlib.pyplot as plt import statsmodels.api as sm import statsmodels.formula.api as smf import seaborn

# bug fix for display formats to avoid run time errors pandas.set_option('display.float_format', lambda x:'%.2f'%x)

data = pandas.read_csv('gapminderorig.csv')

# convert to numeric format data['incomeperperson'] = pandas.to_numeric(data['incomeperperson'], errors='coerce') data['urbanrate'] = pandas.to_numeric(data['urbanrate'], errors='coerce') data['employrate'] = pandas.to_numeric(data['employrate'], errors='coerce') data['lifeexpectancy'] = pandas.to_numeric(data['lifeexpectancy'], errors='coerce')

# listwise deletion of missing values sub1 = data[['urbanrate', 'incomeperperson', 'employrate', 'lifeexpectancy']].dropna()

# center quantitative IVs for regression analysis sub1['urbanrate_c'] = (sub1['urbanrate'] - sub1['urbanrate'].mean()) sub1['employrate_c'] = (sub1['employrate'] - sub1['employrate'].mean()) sub1['lifeexpectancy_c'] = (sub1['lifeexpectancy'] - sub1['lifeexpectancy'].mean()) sub1[["urbanrate_c", "employrate_c", "lifeexpectancy_c"]].describe()

#Best fit model # adding life expetancy, employrate reg3 = smf.ols('incomeperperson ~ urbanrate_c + lifeexpectancy_c + employrate_c', data=sub1).fit() print (reg3.summary())

#Q-Q plot for normality fig4=sm.qqplot(reg3.resid, line='r')

# simple plot of residuals stdres=pandas.DataFrame(reg3.resid_pearson) plt.plot(stdres, 'o', ls='None') l = plt.axhline(y=0, color='r') plt.ylabel('Standardized Residual') plt.xlabel('Observation Number')

# additional regression diagnostic plots # NEEDED TO ADD "=" AFTER FIGSIZE IN LINE BELOW fig2 = plt.figure(figsize=(12,8)) fig2 = sm.graphics.plot_regress_exog(reg3, "employrate_c", fig=fig2)

# additional regression diagnostic plots # NEEDED TO ADD "=" AFTER FIGSIZE IN LINE BELOW fig2 = plt.figure(figsize=(12,8)) fig2 = sm.graphics.plot_regress_exog(reg3, "lifeexpectancy_c", fig=fig2)

# additional regression diagnostic plots # NEEDED TO ADD "=" AFTER FIGSIZE IN LINE BELOW fig2 = plt.figure(figsize=(12,8)) fig2 = sm.graphics.plot_regress_exog(reg3, "urbanrate_c", fig=fig2)

# leverage plot fig3=sm.graphics.influence_plot(reg3, size=8) print(fig3)

0 notes

Text

Basics of Linear Regression

Regression Modeling in Practice, Assignment 2

1) Code

# -*- coding: utf-8 -*- """ Created on 5/21/17

@author: kbolam """

import numpy as numpy import pandas as pandas import seaborn import statsmodels.api import statsmodels.formula.api as smf import scipy import matplotlib.pyplot as plt

# Needed to change to 'latin-1" encoding so csv would load data2 = pandas.read_csv('WB_Aid_Income_growth_2015new2.csv', encoding='latin-1', low_memory=False)

#setting variables you will be working with to numeric data2['income2015'] = data2['income2015'].convert_objects(convert_numeric=True) data2['aid2015'] = data2['aid2015'].convert_objects(convert_numeric=True) #data['Urban_pop_growth'] = data['Urban_pop_growth'].convert_objects(convert_numeric=True)

#remove blank values data2['income2015']=data2['income2015'].replace(' ', numpy.nan) data2['aid2015']=data2['aid2015'].replace(' ', numpy.nan)

data2=data2.dropna()

#group income2015 into 2 catgorical variables def incomegrp (row): if row['income2015'] <= 3200: return 0 elif row['income2015'] > 3200: return 1

data2['incomegrp'] = data2.apply (lambda row: incomegrp (row),axis=1)

#print frequency table for incomegrp variable print ('Income Group: 1 = HIGH INCOME; 0 = LOW INCOME') chk1 = data2['incomegrp'].value_counts(sort=False, dropna=False) print(chk1)

#run OLS regression with fit statistics and print summary print ("OLS regression model for the association between Aid Received and Income Group") reg1 = smf.ols('aid2015 ~ incomegrp', data=data2).fit() print (reg1.summary())

2) Output

The quantitative income per capita variable (income2015) was recoded as a binary Categorical Explanatory variable (incomegrp) :

Frequency table for Income Group: 0 = LOW INCOME, 1 = HIGH INCOME:

0 65

1 66

3) Summary

F-Statistic = 1.243

P-Value = 0.267

Coefficient/beta for 'incomegrp' = 98.0134

Intercept = 108.5436

The F-Statistic is close to 1.0, which is a indicator that the null hypothesis is true, and the P-Value is over .05 which is also an indicator that the null hypothesis is true.

The linear regression model results indicate that aid received per capita is not significantly associated with the per capita income of a county.

0 notes

Text

Writing About Your Data

Regression Modeling in Practice, Assignment 1

Sample

The sample is from data available on the World Bank website. The World Bank was established in 1944 and is a partnership that works to reduce poverty and support development worldwide. The World Bank Group is headquartered in Washington, D.C., and provides countries around the world with loans and grants to support education, health, financial, agricultural and other private and public sector development. The sample includes data gathered from 218 countries.

http://www.worldbank.org/

Procedure

The World Bank collects data reported by official sources of member countries around the world to track information relating to development. Information also comes from the Organisation for Economic Co-operation and Development (OECD) National Accounts data files; the Official Development Assistance disbursement information; the United Nations World Urbanization Prospects data; and other data sources that were not provided. [Ideally I’d like to find out more information about the sources of the World Bank information and how the data is collected.]

For more information:

http://data.worldbank.org/about

Measures

Three separate World Bank data sets are used and are described below. The ODA and GDP per capita datasets are measured using “current US dollars” as opposed to “constant US dollars (see NOTE below for more information.) Current United States Dollars are used because the responses from the three datasets used to compare the relationship between Aid Received, Income and Urban Population Growth are from for one year (2015) .

Response Variable - Aid received per capita: Net Official Development Assistance (ODA) received per capita (current US $): Consists of disbursements of loans and grants by official agencies of the members of the Development Assistance Committee (DAC) and also by non-DAC countries. Not all specific data sources were provided.

Moderating Variable - Income received per capita : GDP per capita (current US $): Gross Domestic Product (GDP) per capita is is the sum of gross value added by all resident producers in the economy plus any product taxes and minus any subsidies not included in the value of the products. The data source was the World Bank national accounts data and the Organisation for Economic Co-operation and Development (OECD) data files.

Explanatory Variable: Urban population growth (annual %): Urban population refers to people living in urban areas as defined by national statistical offices. It is calculated using World Bank population estimates and urban ratios from the United Nations World Urbanization Prospects.

NOTE:

Current US Dollars: The actual value of US dollars for the year specified that is not adjusted for inflation.

Constant US Dollars: The value of US dollars adjusted to use a “constant” value for the dollar for all years included in the sample. Usually the measure used is the value of the dollar for a specific year such as 2000 or 2010. This results in a measure that is adjusted for inflation and allows for comparison of economic responses across various years.

0 notes

Text

Assignment 4 - GAPMinder

1) CODE (python)

# -*- coding: utf-8 -*- """ Created on 5/21/17

@author: kbolam """

import pandas import numpy import seaborn import scipy import matplotlib.pyplot as plt

# Needed to change to 'latin-1" encoding so csv would load data = pandas.read_csv('income_and_aid_received_WB_2010_d.csv', encoding='latin-1', low_memory=False)

#setting variables you will be working with to numeric data['Income_pp'] = data['Income_pp'].convert_objects(convert_numeric=True) data['Aid_received_pp'] = data['Aid_received_pp'].convert_objects(convert_numeric=True) data['Urban_pop_growth'] = data['Urban_pop_growth'].convert_objects(convert_numeric=True)

data['Income_pp']=data['Income_pp'].replace(' ', numpy.nan)

# Apparently can only run one scatter plot at a time. # MAYBE TRY CHANGING X AND Y -- I'M NOT SURE WHICH SHOULD BE RESPONSE AND WHICH EXPLANATORY # I THINK THAT Y IS RESPONSE AND X IS EXPLANATORY BUT CHECK NOTES IN SUMMARY FOR THIS ASSIGNMENT data_clean=data.dropna()

print ('association between Urban_pop_growth and Aid_received_pp') print (scipy.stats.pearsonr(data_clean['Urban_pop_growth'], data_clean['Aid_received_pp']))

def incomegrp (row): if row['Income_pp'] <= 660: return 1 elif row['Income_pp'] <= 2300 : return 2 elif row['Income_pp'] > 2300: return 3

data_clean['incomegrp'] = data_clean.apply (lambda row: incomegrp (row),axis=1)

chk1 = data_clean['incomegrp'].value_counts(sort=False, dropna=False) print(chk1)

sub1=data_clean[(data_clean['incomegrp']== 1)] sub2=data_clean[(data_clean['incomegrp']== 2)] sub3=data_clean[(data_clean['incomegrp']== 3)]

print ('association between urban population growth and aid received per person for LOW income countries') print (scipy.stats.pearsonr(sub1['Urban_pop_growth'], sub1['Aid_received_pp'])) print (' ') print ('association between urban population growth and aid received per person for MIDDLE income countries') print (scipy.stats.pearsonr(sub2['Urban_pop_growth'], sub2['Aid_received_pp'])) print (' ') print ('association between urban population growth and aid received per person for HIGH income countries') print (scipy.stats.pearsonr(sub3['Urban_pop_growth'], sub3['Aid_received_pp']))

''' scat1 = seaborn.regplot(x="Urban_pop_growth", y="Aid_received_pp", data=sub1) plt.xlabel('Urban Population Growth') plt.ylabel('Aid Received Per Person') plt.title('Scatterplot for the Association Between Urban Population Growth and Aid Received Per Person Rate for LOW income countries') print (scat1)

scat2 = seaborn.regplot(x="Urban_pop_growth", y="Aid_received_pp", fit_reg=False, data=sub2) plt.xlabel('Urban Population Growth') plt.ylabel('Aid Received Per Person') plt.title('Scatterplot for the Association Between Urban Population Growth and Aid Received Per Person Rate for MIDDLE income countries') print (scat2) ''' scat3 = seaborn.regplot(x="Urban_pop_growth", y="Aid_received_pp", data=sub3) plt.xlabel('Urban Population Growth') plt.ylabel('Aid Received Per Person') plt.title('Scatterplot for the Association Between Urban Population Growth and Aid Received Per Person Rate for HIGH income countries') print (scat3)

2) OUTPUT/RESULTS

For this assignment I used the Pearson R Correlation Coefficient for the inferential test using a categorical Moderating variable because both the Response and Explanatory variables are quantitative.

The quantitative variable Income Per Person was broken down into three categories to create the categorical Moderating Variable of Income Group: Low, Medium and High Income. The Pearson R Correlation Coefficient was run for each group using the following Response and Explanatory variables:

Response Variable: Aid Received Per Person (Aid_received_pp) Explanatory Variable: Urban Population Growth (Urban_pop_growth) Moderating Variable: Income Group (incomegrp) created from Income Per Person (income_pp)

R and P values WITHOUT the Moderating variable: The R value is close to -.20 and the P value is about .026 which is less than .05. The values do not indicate a very strong correlation but do indicate that there is a possible negative relationship between the two variables: R value: -0.19824330900330461 P value: 0.026065670618157444

R and P values WITH the Moderating variable:

LOW Income R value: 0.094315436858260077 P value: 0.55243551756607689) The R value at .01 is close to 0 and the P value is high at .55, so for the countries with the lowest income there is no indication of a correlation between Urban Population Growth and Aid Received Per Person.

MIDDLE Income R value: -0.21125721901189495 P value: 0.17927184082898076 The R value at -0.21 is low enough to suggest a possible negative relationship but the P value is high at .17 which indicates there is not a relationship between Urban Population Growth and Aid Received Per Person for Middle Income Countries.

HIGH Income R value: -0.28051984252791295 P value: 0.07194985469995091 The R value at -0.28 suggests a possible negative relationship but the P value is higher than 0.05 at 0.07 which indicates there is not a relationship between Urban Population Growth and Aid Received Per Person for High Income countries.

3) SUMMARY

The results of the Pearson R Correlation Coefficient tests using a Moderating Variable derived from Income Per Person indicate that there is not a relationship between Aid Received and Urban Growth, however, as I mentioned in the previous blog, the GapMinder dataset has blank values for Aid Received Per Person for the 30 countries with the highest Income Per Person. This missing data may result in misleading results. I would be interested to see if there is any significant change to the results after adding more complete Aid Received Per Person information to the dataset .

0 notes

Text

Assignment 3 - GAPMinder

1) CODE (python)

# -*- coding: utf-8 -*- """ Created on 5/21/17

@author: kbolam """

import pandas import numpy import seaborn import scipy import matplotlib.pyplot as plt

# Needed to change to 'latin-1" encoding so csv would load data = pandas.read_csv('income_and_aid_received_WB_2010.csv', encoding='latin-1', low_memory=False)

# Chose quantitative variables from GAPMINDER database # Because Pearson Correleation tool requires quantitative variables # setting quantitative variables to numeric

data['Income_pp'] = data['Income_pp'].convert_objects(convert_numeric=True)

data['Aid_received_pp'] = data['Aid_received_pp'].convert_objects(convert_numeric=True) data['Urban_pop_growth'] = data['Urban_pop_growth'].convert_objects(convert_numeric=True)

data['Income_pp']=data['Income_pp'].replace(' ', numpy.nan)

# Apparently can only run one scatter plot at a time. # Y IS RESPONSE AND X IS EXPLANATORY ''' scat1 = seaborn.regplot(x="Income_pp", y="Aid_received_pp", fit_reg=True, data=data) plt.xlabel('Income_pp') plt.ylabel('Aid_received_pp') plt.title('Scatterplot for the Association Between Income_pp and Aid_received_pp') ''' scat2 = seaborn.regplot(x="Urban_pop_growth", y="Aid_received_pp", fit_reg=True, data=data) plt.xlabel('Urban_pop_growth') plt.ylabel('Aid_received_pp') plt.title('Scatterplot for the Association Between Urban_pop_growth and Aid_received_pp')

data_clean=data.dropna()

print ('association between Income_pp and Aid_received_pp') print (scipy.stats.pearsonr(data_clean['Income_pp'], data_clean['Aid_received_pp']))

print ('association between Urban_pop_growth and Aid_received_pp') print (scipy.stats.pearsonr(data_clean['Urban_pop_growth'], data_clean['Aid_received_pp']))

2) OUTPUT and SUMMARY

Assignment 3 covers the Pearson Correlation tool for quantitative variables. For previous assignments I used the MUDF dataset that has mostly categorical variables so for this assignment I decided to use quantitative variables from the Gapminder dataset. I was interested in the following two questions and used the indicated Response and Explanatory quantitative variables to generate a correlation coefficient (R-value) using the Pearson Correlation tool:

1. Is more aid received per person in countries that have a higher income per person?

Response Variable: Aid received per person (Aid_received_pp) Explanatory Variable: Income per person (Income_pp)

R value: 0.027092283689885945 P value: 0.76331186580458943

Summary: Since the R value is close to zero and P value is greater than 0.05 the results indicate there is little or no relationship between these two variables. However, in looking at the dataset description for the “Aid received per person” variable, the aid amount included is foreign aid and does not include domestic aid received per person in more affluent countries. The 30 Countries with the highest per capita income did not report any aid received, including the U.S., Norway, Sweden, Canada and UK. Most of these 30 countries have domestic programs that provide aid to residents and this aid is not included in the Gapminder data. It would be interesting to see if the R and P values change if the data included both foreign and domestic aid received per person.

2. Does Aid received per person increase if urban population growth increases?

Response Variable: Aid received per person (Aid_received_pp) Explanatory Variable: Urban Population Growth (Urban_pop_growth)

R value: -0.19824330900330464 P value: 0.026065670618157444