Fizzy | she/her | I like dirt | 18+

Photo

could i offer you some round bears in these trying times?

161K notes

Text

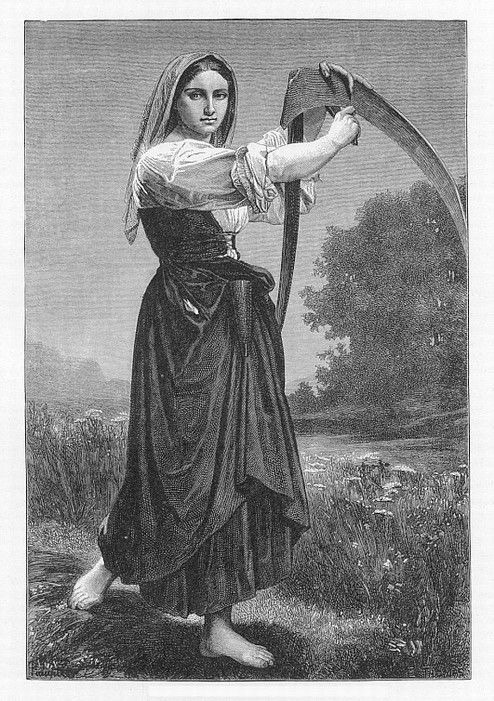

Feminist fantasy is funny sometimes in how much it wants to shit on femininity for no goddamned reason. Like the whole “skirts are tools of the patriarchy made to cripple women into immobility, breeches are much better” thing.

(Let’s get it straight: Most societies over history have defaulted to skirts for everyone because you don’t have to take anything off to relieve yourself, you just have to squat down or lift your skirts and go. The main advantage of bifurcated garments is they make it easier to ride horses. But Western men wear pants so women wearing pants has become ~the universal symbol of gender equality~)

The book I’m reading literally just had its medievalesque heroine declare that peasant women wear breeches to work in the field because “You can’t swing a scythe in a skirt!”

Hm yes story checks out

peasant women definitely never did farm labour in skirts

skirts definitely mean you’re weak and fragile and can’t accomplish anything

skirts are definitely bad and will keep you from truly living life

no skirts for anyone, that’s definitely the moral of the story here

100K notes

Text

i say this in all seriousness, a great way to resist the broad cultural shift of devaluing curiosity and critical thinking is to play my favorite game, Hey What Is That Thing

you play it while walking around with friends and if you see something and don't know what it is or wonder why its there, you stop and point and say Hey What Is That Thing. and everyone speculates about it. googling it is allowed but preferably after spending several minutes guessing or asking a passerby about it

weird structures, ambiguous signs, unfamiliar car modifications, anything that you can't immediately understand its function. eight times out of ten, someone in the group actually knows, and now you know!

a few examples from me and my friends the past few weeks: "why is there a piece of plywood sticking out of that pond in a way that looks intentional?" (its a ramp so squirrels that fall in to the pond can climb out) • "my boss keeps insisting i take a vacation of nine days or more, thats so specific" (you work at a bank, banks make employees take vacation in long chunks so if youre stealing or committing fraud, itll be more obvious) • "why does this brick wall have random wooden blocks in it" (theres actually several reasons why this could be but we asked and it was so you could nail stuff to the wall) • "most of these old factories we drive past have tinted windows, was that just for style?" (fun fact the factory owners realized that blue light keeps people awake, much like screen light does now, so they tinted the windows blue to keep workers alert and make them work longer hours)

been playing this game for a long time and ive learned (and taught) a fuckton about zoning laws, local history, utilities (did you know you can just go to your local water treatment plant and ask for a tour and if they have a spare intern theyll just give you a tour!!!) and a whole lot of fun trivia. and now suddenly you're paying more attention when youre walking around, thinking about the reasons behind every design choice in the place you live that used to just be background noise. and it fuckin rules.

61K notes

Text

One of the coolest things to remember is that because prey animals have eyes on the side of their head, they are looking at you when they're in profile, not facing you! Hot tip for artists and animal lovers!

57K notes

Text

When you accidentally say the q word (quest) and your knight starts gently clanking from their happy wiggles like now you've done it, you have to send them into the dragon's lair or their helmet ploom will droop and they'll start waxing sad poetic in the moonlight

24K notes

Text

AI Models and Energy Use: Google Search vs ChatGPT

In today's fast-evolving digital landscape, Google Search and OpenAI's ChatGPT model have dramatically changed how we access information. The phrase "I'll Google that" is now practically part of the English language.

While both tools offer knowledge, they work in fundamentally different ways, and that difference has significant environmental implications. Google is a traditional search engine: it retrieves and ranks existing content. ChatGPT is powered by a large language model (LLM): it generates original responses based on patterns learned from extensive datasets.

It is only a matter of time until we say, "Let me ChatGPT that." As LLMs become more common, it's crucial to understand their environmental impact, including both the energy used during regular operation and the substantial resources required to train these models.

1. Energy Consumption and Carbon Emissions

The main environmental concern with digital tools is energy consumption. Google Search has optimized its energy use to about 0.3 watt-hours per query. That may sound small, but with billions of daily searches, efficiency at scale is essential. Google's data centers use custom-built processors and serve pre-cached results, contributing to very low energy overhead per search. A typical individual's Google use for an entire year may produce about the same amount of CO₂ as just a single load of washing.
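
Because the per-query figure scales linearly, annual search energy is simply queries per day × days × energy per query. A minimal Python sketch, assuming the 0.3 Wh per query cited above and a 365-day year; the daily query counts (10 for one person, 1 billion fleet-wide) are hypothetical inputs for illustration, not Google data.

```python
# Energy per search query as cited in the article (about 0.3 Wh).
WH_PER_QUERY = 0.3
DAYS_PER_YEAR = 365  # ignores leap years


def annual_search_energy_kwh(queries_per_day: float,
                             wh_per_query: float = WH_PER_QUERY) -> float:
    """Return yearly energy in kWh for a steady daily query volume."""
    return queries_per_day * DAYS_PER_YEAR * wh_per_query / 1000


if __name__ == "__main__":
    # Hypothetical individual making 10 searches a day.
    print(f"Individual: {annual_search_energy_kwh(10):.3f} kWh/year")
    # Hypothetical fleet-wide volume of 1 billion queries a day, shown in GWh.
    print(f"Fleet: {annual_search_energy_kwh(1e9) / 1e6:,.1f} GWh/year")
```

Under these assumptions one person's searching comes to roughly 1.1 kWh a year, which is why the per-query figure only matters at fleet scale.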

By contrast, ChatGPT runs on OpenAI's GPT architecture, which requires real-time processing of billions of parameters for each user query. Instead of fetching a pre-written result, the system must compute each answer live, using intensive GPU resources. Some estimates suggest each ChatGPT query emits 2 to 3 grams of CO₂, while a Google search emits a fraction of a gram, although these per-query figures are hotly debated in the AI field.

What is less debatable is that training LLMs carries a significant energy cost. GPT-3, for instance, is estimated to have used 1,287 megawatt-hours of electricity and released roughly 552 metric tons of CO₂, roughly equivalent to the annual emissions of 112 gasoline-powered cars.
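
Two sanity checks fall out of the GPT-3 figures: dividing emissions by energy gives the implied grid carbon intensity, and dividing emissions by the car count gives the per-car annual emissions the comparison assumes. A minimal Python sketch using only the numbers quoted above; the function names are hypothetical.

```python
# GPT-3 training figures as quoted in the article.
TRAINING_ENERGY_MWH = 1_287
TRAINING_EMISSIONS_T = 552  # metric tons of CO2
CAR_EQUIVALENTS = 112       # gasoline cars driven for one year


def implied_intensity_kg_per_kwh(energy_mwh: float, emissions_t: float) -> float:
    """Implied grid carbon intensity; t/MWh and kg/kWh are numerically equal."""
    return emissions_t / energy_mwh


def tonnes_per_car(emissions_t: float, cars: float) -> float:
    """Annual emissions per car implied by the car-equivalent comparison."""
    return emissions_t / cars


if __name__ == "__main__":
    intensity = implied_intensity_kg_per_kwh(TRAINING_ENERGY_MWH, TRAINING_EMISSIONS_T)
    per_car = tonnes_per_car(TRAINING_EMISSIONS_T, CAR_EQUIVALENTS)
    print(f"Implied intensity: {intensity:.3f} kg CO2/kWh")
    print(f"Implied per-car emissions: {per_car:.2f} t CO2/year")
```

The results, roughly 0.43 kg CO₂ per kWh and roughly 4.9 t per car per year, are what the quoted figures imply; they are not independent measurements.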

GPT-4, presumed to be significantly larger, is likely to have required far more energy, though OpenAI has not published figures. These emissions are a "front-loaded" environmental cost: they occur once, during model development. However, they are massive, and they recur more often as newer models are developed.

As LLMs scale in size (e.g., GPT-4, Claude, Gemini), so does their energy intensity. The arms race to create bigger, more innovative models has generated concerns about AI's escalating carbon footprint. This is a trade-off between computational power and environmental sustainability.

The $500 billion Project Stargate is mind-boggling. It is a recently announced AI data center project for OpenAI, with partners including Oracle and NVIDIA. Each data center is expected to draw 100 megawatts of electricity, enough to power a city of 100,000.
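
The "city of 100,000" comparison works out to about 1 kW of continuous demand per resident. A minimal Python sketch, assuming a site drawing its full 100 MW around the clock (a capacity factor of 1.0 is a hypothetical simplification) and a 365-day year:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h; ignores leap years


def per_capita_kw(site_mw: float, population: int) -> float:
    """Average continuous power per resident if the site's draw were shared out."""
    return site_mw * 1000 / population


def annual_energy_gwh(site_mw: float, capacity_factor: float = 1.0) -> float:
    """Yearly energy in GWh; capacity_factor is the fraction of time at full draw."""
    return site_mw * HOURS_PER_YEAR * capacity_factor / 1000


if __name__ == "__main__":
    print(f"{per_capita_kw(100, 100_000):.1f} kW per resident")
    print(f"{annual_energy_gwh(100):,.0f} GWh per year at full draw")
```

Under those assumptions a single 100 MW site would use about 876 GWh a year; a lower capacity factor scales that down proportionally.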

2. Water Usage and Cooling Needs

Behind the scenes of every LLM-powered AI model and search engine are vast data centers. Many of these data centers rely on water-based cooling systems. These facilities must manage enormous thermal loads to keep GPUs and servers from overheating.

Google has acknowledged this environmental impact and committed to becoming water-positive by 2030. It publishes detailed reports and data on water consumption and replenishment efforts.

ChatGPT uses Microsoft Azure's infrastructure. While Microsoft has improved water efficiency, OpenAI hasn't disclosed specific water usage figures for training LLMs like GPT-3 and GPT-4, but the associated cooling needs and water usage are likely significant.

Water consumption is becoming an increasingly important part of the climate discussion around AI. A 2023 study by the University of California, Riverside estimated that training OpenAI's GPT-3 model for two weeks may have consumed over 700,000 liters of fresh water. This is about the same amount of water used to manufacture approximately 370 BMW cars or 320 Tesla electric vehicles. This consumption was primarily for cooling during compute-heavy training runs.
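
The car comparison implies a fixed amount of water per manufactured vehicle. A minimal Python sketch dividing the 700,000-liter estimate by the car counts quoted above; it only restates the article's numbers and says nothing about how the study derived them.

```python
WATER_LITRES = 700_000  # estimated fresh water for GPT-3 training, as quoted
COMPARISONS = {"BMW cars": 370, "Tesla electric vehicles": 320}


def litres_per_unit(total_litres: float, units: int) -> float:
    """Water per manufactured vehicle implied by the comparison."""
    return total_litres / units


if __name__ == "__main__":
    for label, count in COMPARISONS.items():
        print(f"{label}: ~{litres_per_unit(WATER_LITRES, count):,.0f} L each")
```

The implied figures are roughly 1,900 L per BMW and 2,200 L per Tesla.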

3. Renewable Energy and Sustainability Commitments

Google is a leader in green infrastructure. It has been carbon neutral since 2007, and it has matched 100% of its annual electricity use with renewable energy purchases since 2017. The company aims for 24/7 carbon-free energy by 2030, so that all of its data centers and products, including Google Search, run on clean power around the clock.

OpenAI does not currently operate its own data centers (though Project Stargate suggests it eventually will); instead, it runs ChatGPT on Microsoft Azure. Microsoft has committed to 100% renewable energy use by 2025. While this is promising, OpenAI does not publish its own detailed climate or sustainability reports, so we must rely on Microsoft's broader commitments to infer progress.

With the rise of LLMs, energy demands are expected to increase dramatically, not just for training but also for frequent, casual use. Deploying an LLM like GPT-4 to millions of daily users, for instance, requires real-time GPU inference at a scale previously unseen in consumer tech. The environmental sustainability of this model depends on how quickly providers can shift to low-carbon and renewable energy sources.

4. Transparency and Public Accountability

Google and OpenAI differ notably in transparency. Google releases annual sustainability reports with detailed data on carbon emissions, water usage, and energy consumption, along with research on its climate strategies.

This openness invites public scrutiny and sets an industry standard: it makes a company's environmental impact easier for the public to understand and helps hold the company accountable for it.

OpenAI, by contrast, is relatively opaque. It has not disclosed key metrics such as the exact energy used to train GPT-4 or the operational impact per user query. For researchers, journalists, and environmentally conscious LLM users, this lack of openness about LLMs' environmental performance is concerning, especially as AI systems are integrated into more products and services.

Without better transparency, it is difficult to evaluate how responsibly LLMs are being deployed or to hold developers accountable for their environmental impact.

5. Innovation and Long-Term Solutions

Both companies are investing in technologies to reduce the long-term environmental impact of their services. Google is exploring advanced energy storage and cooling systems, as well as small modular nuclear reactors to help power AI workloads sustainably. It is also a major player in carbon offset and capture initiatives.

Microsoft, OpenAI's cloud provider, is pursuing similar goals, including investments in sustainable AI infrastructure, advanced clean energy, and green software design. However, OpenAI itself has not set specific climate targets or emissions reduction pathways, which remains a gap.

More broadly, there is a growing call for "green AI": developing and deploying LLMs with efficiency, transparency, and sustainability as priorities. Some researchers have suggested caps on model size or incentives for lower-carbon training techniques. Whether industry leaders will adopt such practices remains an open question.

Conclusion

Large language models like ChatGPT are becoming central to how we work, learn, and communicate, and we cannot ignore their environmental footprint. Google Search is a mature, highly optimized system with a clear path toward zero-carbon operation. ChatGPT and the LLMs that power it are still in the early stages of balancing performance with sustainability.

ChatGPT is more energy-intensive, more water-dependent, and less transparent, particularly in its training phase, but it also offers remarkable utility and innovation. With proper investment and leadership, LLMs can evolve toward a greener future. That future, though, will require more than technical solutions: it will also require greater accountability and environmental awareness from developers and users alike.

The era of large-scale AI is unfolding, and the challenge we now face is to ensure that our most advanced technologies don't harm the planet.

Chris Garrod, May 12, 2023

Source: AI Models and Energy Use: Google Search vs ChatGPT

490 notes

Text

I copy pasted parts of this but I do hand letter everything, because while I'm trying to work easier as I'm chronically ill, I am still chronically stupid

30K notes

Text

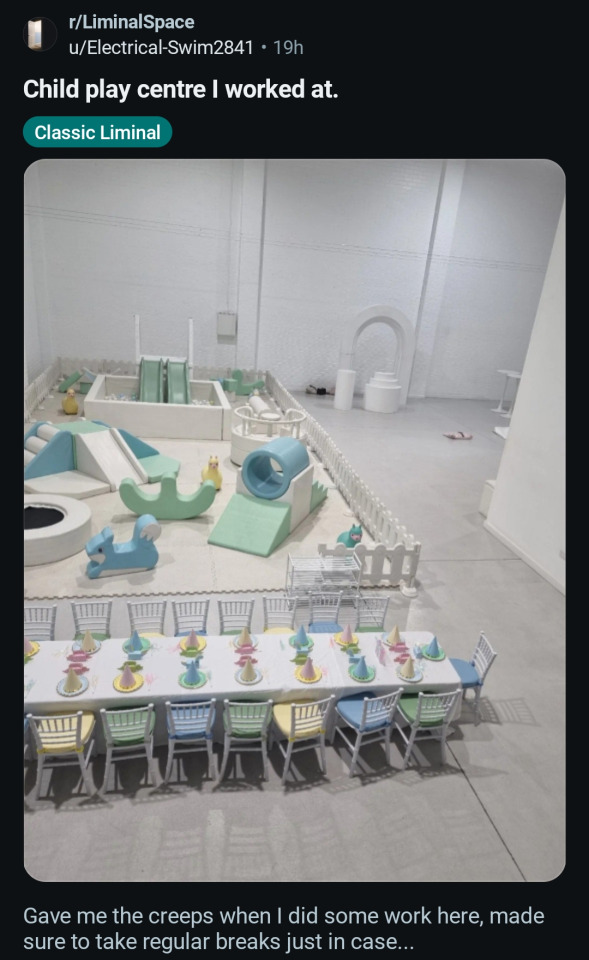

This shit literally looks like those evil underground science labs in movies where they do unethical experiments on children

59K notes

Photo

Spirit: Stallion of The Cimarron & the Indian Boarding Schools/Residential Schools allegory

#and people wonder why I deeply love allegory as an adult#I knew this movie was special when I watched it obsessively as an undiagnosed four year old

93K notes

Text

I feel like in the rush of “throw out etiquette who cares what fork you use or who gets introduced first” we actually lost a lot of social scripts that the younger generations are floundering without.

91K notes

Text

11K notes

Text

sometimes you just need to summon this flowchart as an overlay of your vision

20K notes
