
MaxwellChu-Self-evaluation

Over the course of IGME 480, I learned JavaScript art production, AI data analysis, how to create an un-drawing machine, and a basic understanding of how OpenAI works in Python. The units I completed were Training Camp (skill-building); Chance, Indeterminacy & Rule-based Systems; Un-Drawing Machine; AI & ML I: Augmented Body; AI/ML II: Image Synthesis & Classification; and the Self-directed Final Project. The first involved programming art in p5.js on the website OpenProcessing. The second focused on generative art theory and études. The third explored machines that try to replicate human drawing styles but fall short because of their creative limitations. Augmented Body showed how a user interface can extend across the entire body. Image Synthesis & Classification studied how AI can analyze data and images to produce different outputs. Finally, the self-directed final project focused on daily generative AI programming.

The new ideas I have been considering came from the final project: I would like to explore what else OpenAI can generate and post on social media in the same way as in that project. The assignment that most stimulated my art activities was the one where I used poseNet in p5.js to create a filter. It made me think about facial tracking in a different way, since it is so common in social media apps. My weakest area was the image synthesis assignment, where I encountered too many errors while trying to complete it. I don't believe anything else about my experiences is relevant.

If I were to give myself a letter grade based on the labor contract, it would be a B, because I missed two additional projects, as seen in Missed Deliverables II. I don’t believe I have had three or more unexcused absences, so that should not affect my grade. All in all, I believe I have done a satisfactory job in this course.


MaxwellChu-Deliverables 8D

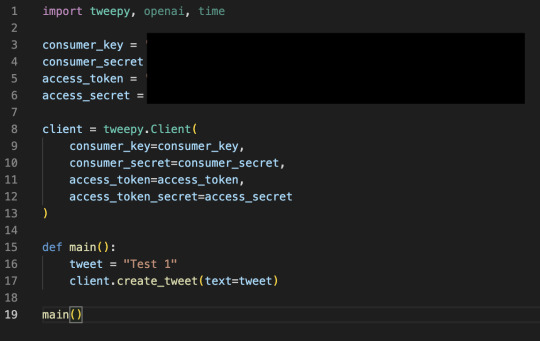

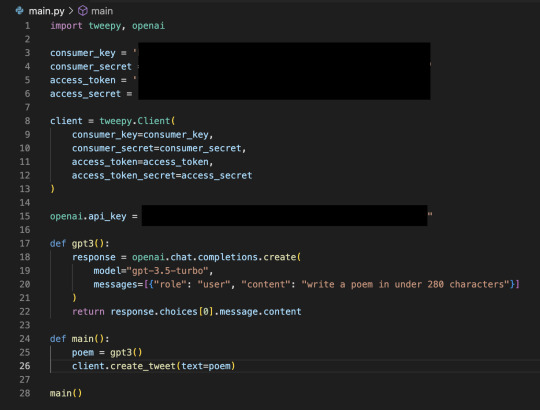

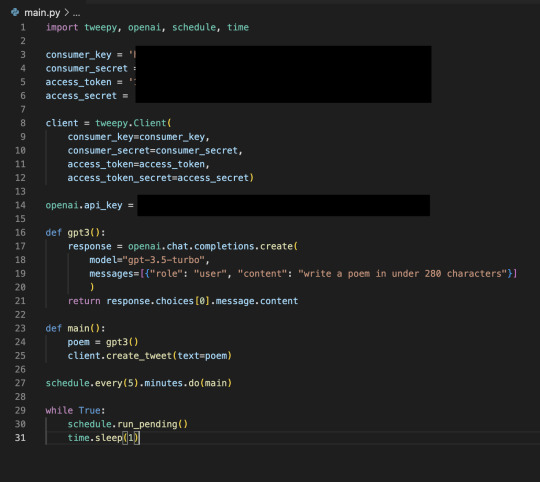

For the final project, the goal was to create a daily generative poetry Twitter bot. Several skills and tools were needed to finish the product, and I followed a step-by-step process to ensure a quality result.

To start, I sketched a concept drawing to get a clear image of what the bot should look like. The idea was that it would look like any other bot on that platform, so most of the effort would go into the content of each post. Next, I researched references to better understand how to manage the bot. Multiple sources agreed on writing the program in Python using the Twitter API, with ChatGPT generating the poems.

Then came programming the bot itself. I wrote the Python code in Visual Studio Code and used the terminal to install the Python library “tweepy” so that the code could interact with the Twitter API. A first test confirmed that whatever was coded in VS Code would be posted by the bot account. The next step was to make the content of each post generative poetry. The approach was to use the OpenAI API, even though it cost a few dollars. A Python function calls the “gpt-3.5-turbo” model and asks it to “write a poem in under 280 characters”, since that is Twitter's character limit. This step was complete once I confirmed that each post generated through OpenAI differed in content.

The last piece of code was the automation. I imported the Python libraries schedule and time, and the trial run posted every 5 minutes. Once that succeeded, I changed the code to run every day at 5:20 PM EST. In conclusion, I created a Twitter bot in Python in VS Code, using a few select libraries, that relies on AI to generate one post a day.
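The generation and posting steps described above might look roughly like the following sketch. It is not the code from the screenshots: the helper names (`fit_to_tweet`, `generate_poem`, `post_poem`) are hypothetical, and `generate_poem` assumes version 1.0 or later of the `openai` package with an `OPENAI_API_KEY` environment variable set.

```python
TWEET_LIMIT = 280  # character limit cited in the post
PROMPT = "write a poem in under 280 characters"  # prompt quoted in the post


def fit_to_tweet(text, limit=TWEET_LIMIT):
    """Trim whitespace and cut the text to the limit, in case the model overshoots."""
    text = text.strip()
    if len(text) <= limit:
        return text
    # Cut at the last line break or space before the limit so no word is split.
    cut = max(text.rfind("\n", 0, limit), text.rfind(" ", 0, limit))
    return text[:cut if cut > 0 else limit].rstrip()


def generate_poem(prompt=PROMPT):
    """Ask the chat model for a poem (assumes openai>=1.0 and OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so the other helpers work without it

    client = OpenAI()
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


def post_poem(twitter_client, generate=generate_poem):
    """Generate one poem and post it; the client needs a create_tweet(text=...) method."""
    poem = fit_to_tweet(generate())
    twitter_client.create_tweet(text=poem)
    return poem
```

With tweepy installed, `post_poem` could be given a `tweepy.Client` built from the developer account's keys and tokens, since that class provides `create_tweet(text=...)`. Python's `len()` only approximates how Twitter counts characters, so the trim is a safety net rather than a guarantee.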
The first struggle I came across in this project was the paywall for OpenAI’s API, since I had originally thought it was entirely free. It was a small hurdle, since I just paid $5 for it. Another struggle was making sure that exactly one Twitter post went out each day. I was not sure whether the program would keep running if my laptop was off. I was also unsure whether it would even be possible to run this without leaving VS Code open for days. In the end, the post times were fine. My successes include signing up for a Twitter Developer account and installing the necessary libraries so they could be imported in VS Code. There were no hiccups there. Looking at the bigger picture, I believe that this project was an overall success. I managed to somewhat automate a Twitter account using Python programming and AI. This is a link to the Twitter page: https://twitter.com/PoetryBot198374

The link to the Twitter bot

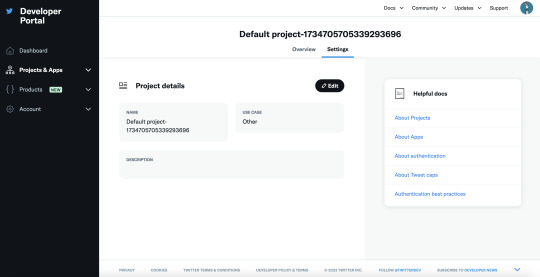

Signing up for a Twitter Developer Account under the Bot account

The "first draft" of my Python code, which tests whether the Twitter API works

The result of the "first draft"

Purchasing $5 of OpenAI API usage

The "second draft" of the code, which uses GPT-3.5 Turbo with the query "write a poem in under 280 characters"

The result of the "second draft" after running the program twice to see the variation between poems

The "third draft" uses the schedule and time libraries to post on Twitter every 5 minutes

The current Python code, which makes a single post every day at 5:20 PM
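The daily schedule can be sketched with the standard library alone. The post used the `schedule` and `time` libraries, where `schedule.every().day.at("17:20").do(job)` followed by a loop calling `schedule.run_pending()` does the same work; the version below swaps in `datetime` so it runs without extra installs. `next_run` and `run_daily` are hypothetical names, and 17:20 is read in the computer's local time zone.

```python
import time
from datetime import datetime, timedelta


def next_run(now, hour=17, minute=20):
    """Return the next datetime at hour:minute strictly after `now`."""
    target = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if target <= now:
        target += timedelta(days=1)  # today's slot has passed, so use tomorrow's
    return target


def run_daily(job, hour=17, minute=20, sleep=time.sleep, now=datetime.now, max_runs=None):
    """Call job() once a day at hour:minute; max_runs=None keeps looping forever."""
    runs = 0
    while max_runs is None or runs < max_runs:
        current = now()
        # Sleep until the next scheduled time, then run the job once.
        sleep((next_run(current, hour, minute) - current).total_seconds())
        job()
        runs += 1
```

Either way, the loop lives inside a running Python process, so the computer has to stay on for the posts to go out. VS Code itself does not need to stay open if the script is started from a terminal.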


Maxwell Chu-Deliverables 8C

I've been researching different ways to create this kind of bot. Below are some links that relate to the subject of my final project. A few sources used a combination of the Python language, ChatGPT, and the Twitter API. One of these links is for an actual Twitter bot that posts poetry multiple times a day.

youtube


Maxwell Chu-Deliverables 8B

For my final project, I would like to create a daily generative poetry bot for Twitter/X. Once a day, the bot will generate and post a poetic blurb of some sort on that platform. Using the Twitter API, the bot account will post poetry written by AI. It'll look like any other bot on Twitter/X, and any user will be able to interact with it like any other bot on that platform. The theme that I will be applying in this project is software like AI. Below is a diagram of what the bot might look like.


MaxwellChu-Deliverables 8A

For the final project in this class, I would like to pursue the Twitter/X daily generative poetry bot idea. It would be considered a tool-focused topic. To start off, I would need to sign up for a free Twitter developer account to create a bot account. Then, with some programming, the goal would be to have the bot post a poetic blurb only once a day.


MaxwellChu-Deliverables 7B

These tutorials covered data compilation, image analysis, and machine learning and training. These are only some of the outputs I gathered from the tutorials found on the website. In each one, the computer gathers specific data, then analyzes it and detects the components it needs to complete what the program intends it to do.


MaxwellChu-Deliverables 7A

The results I gathered after attempting to complete the Colab notebook were disappointing. There were many issues throughout the process, and even after I tried to solve them, the final results still had errors. The set of images intended to be put through this generative adversarial network was "fraser_river.zip". After I uploaded it to my Google Drive, the Colab program was able to run through these files successfully. However, training the model did not seem to work as intended for me. I could not locate the media needed for interpolation. Nevertheless, based on the in-class lecture, notes, and example image shown in the instructions, I understand what was supposed to happen in this assignment.


MaxwellChu-Deliverables 6B

This project was dedicated to creating a facial filter that attaches to a face and moves along with it in a video. The software grabs key points in each frame of the video that it recognizes as a face. The first point grabbed is the one where the image is displayed. The image is slightly displaced and resized so that it covers the face entirely and acts as a filter. In the video shown, as the body and face move around the frame, the image filter follows that specific point and displays in front of the face.
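The placement logic described above can be sketched independently of the tracking library. The project itself was written in p5.js with poseNet; the sketch below is in Python only to show the arithmetic, and `filter_rect`, `follow`, and their parameters are hypothetical names, not taken from the original code.

```python
def filter_rect(keypoint, image_size, scale=1.0, offset=(0.0, 0.0)):
    """Return (x, y, width, height) for drawing an image centered on a tracked point.

    keypoint   -- (x, y) of the tracked point in video-frame pixels
    image_size -- (width, height) of the filter image
    scale      -- resize factor applied to the image
    offset     -- (dx, dy) displacement of the image center from the keypoint
    """
    kx, ky = keypoint
    width, height = image_size[0] * scale, image_size[1] * scale
    # Shift by half the scaled size so the image is centered, then apply the offset.
    x = kx - width / 2 + offset[0]
    y = ky - height / 2 + offset[1]
    return x, y, width, height


def follow(keypoints_per_frame, image_size, scale=1.0, offset=(0.0, 0.0)):
    """Recompute the rectangle for every frame so the filter follows the face."""
    return [filter_rect(kp, image_size, scale, offset) for kp in keypoints_per_frame]
```

Because the rectangle is recomputed from the keypoint on every frame, the image moves with the face; the scale and offset are the only values that would need tuning by eye.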

Throughout this project there were both successes and struggles. The success was the project itself: having an image follow the face as it moves in a video. One struggle was providing a link to the actual code via GitHub. I ran into issues that kept me from uploading my code to GitHub. The next best option I could think of was to screenshot the p5.js code in Visual Studio Code and paste it into the blog post. Overall, I believe that this was a success, based on the intended goal of creating a facial filter that follows a specific point.


MaxwellChu-Deliverables6A

In "Appropriating New Technologies", I found interesting the claim that the development of visual colors in human evolution is connected to empathy. Each color can represent a certain mood or emotion. For example, red can be anger and blue can be sadness.

In "How I'm fighting bias in algorithms", I found intriguing the overall premise that an algorithm can fail to detect certain ranges of skin tones and facial structures. This points to real issues in the software, and it's excellent that they were brought up. Being able to include everyone is a progressive step forward in this development.

In "Enactive-performative perspectives on cognition and the arts", I found the connection between cognition and experience thought-provoking. One's thinking and behavior would not exist without at least a hint of what they have been through. Their sense of self could not form if not for the choices they have made in the past.


MaxwellChu-FieldReport04

The "Invisible Cities" project, by Gene Kogan, uses machine learning to develop artwork in which a hidden message can be recognized within a view of a city. A neural network is able to create an aerial view of a computer-drawn city. The city is then shaped so that it forms an illusion of the user's desired words. I found this project interesting because I have seen this before on social media. It was recognizable to me, and learning how it works behind the scenes was intriguing.


MaxwellChu-Deliverables 4B

This project was meant to show different natural atmospheres. By switching between the 'a', 's', and 'd' keys, the user can view how the outdoors can look from very different perspectives. The first scene is a plain day with clear skies. The next scene is a dark and stormy snowstorm. Another scene shows the sun rising and falling.

I believe this was a successful project. One struggle was figuring out what the exact concept would be and how to implement it.


MaxwellChu-Deliverables4A

The first project, "Crayon Physics Deluxe", expands on the idea of drawing by giving creative properties to the user. The crayon aspect presents a child-like theme, as if someone were actually drawing with crayons on a piece of paper. There is an imaginative factor that is explored through this project. The second project, "Drawing Operations", analyzes how the artistic techniques of humans and robots differ. A human is able to break limits that a robot cannot in mechanical movement, thought, and creativity. With this in mind, a robot can only do so much without the help of a human.


MaxwellChu-Deliverables 3C

By flipping a coin, a line on the canvas will be drawn with either a pen or pencil. Heads was to use a pen, and tails was to use a pencil.

The working process was comparatively simpler than other processes in my usual line of work. A flip of a coin meant that there were only two possible outcomes. With this limited number of choices, there were not many options for conducting the work. However, the experience of chance decisions was enjoyable and interesting to watch unfold. Leaving the choice up to chance was fascinating. I believe that if I had different colors of pens or pencils, the result would have been more aesthetically pleasing. This assignment, compared to the previous one, was more low-tech. In conclusion, this experience was unique.


MaxwellChu-Deliverables 3B

https://openprocessing.org/sketch/2026895

https://openprocessing.org/sketch/2026896

https://openprocessing.org/sketch/2026898

The random walk starts from a chosen point on the canvas and then uses random offsets to displace each following point. The DLA (diffusion-limited aggregation) sketch uses an initial seed to grow a web-like structure and pattern of multiple points across the canvas. The L-system constructs a symmetrical layout that continuously splits into patterns.
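The three techniques described above can be sketched in a few lines each. The linked sketches are p5.js; the version below is in Python only to show the underlying rules, and every function name, grid size, and rule set in it is an assumption chosen for illustration, not taken from those sketches.

```python
import random


def random_walk(start, steps, step_size=5, rng=random):
    """Return the points visited, each displaced randomly from the previous one."""
    x, y = start
    path = [(x, y)]
    for _ in range(steps):
        x += rng.uniform(-step_size, step_size)
        y += rng.uniform(-step_size, step_size)
        path.append((x, y))
    return path


def dla(size, particles, rng=random):
    """Grow a cluster on a size x size wrap-around grid from a single center seed."""
    stuck = {(size // 2, size // 2)}
    moves = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    while len(stuck) < particles + 1:
        x, y = rng.randrange(size), rng.randrange(size)
        if (x, y) in stuck:
            continue  # never spawn on top of the cluster
        # Wander until the particle touches the cluster, then freeze it in place.
        while not any(((x + dx) % size, (y + dy) % size) in stuck for dx, dy in moves):
            dx, dy = rng.choice(moves)
            x, y = (x + dx) % size, (y + dy) % size
        stuck.add((x, y))
    return stuck


def l_system(axiom, rules, iterations):
    """Rewrite every symbol in parallel with its rule, repeated `iterations` times."""
    current = axiom
    for _ in range(iterations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current
```

For example, `l_system("A", {"A": "AB", "B": "A"}, 3)` returns `"ABAAB"`. A drawing sketch would then read the resulting string as turtle commands, which is where the repeated branching comes from.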


MaxwellChu-Deliverables3A

1A. An example of something that exhibits effective complexity could be the human nervous system. There is a degree of method to this madness that humans can view. By meeting in the middle of order and disorder on the chart, this biological function is able to fulfill its purpose by transmitting signals throughout the body to keep the body well.

1B. The Problem of Authenticity discusses what exactly should qualify as art in this day and age. In my opinion, as long as someone views something as art, who's to say it shouldn't be? There can't be these kinds of rules when discussing something as creative as art. In conclusion, generative art should be recognized as a form of art.


MaxwellChu-FieldReport02

I selected the Land Lines project, made by Zachary Lieberman, because I found the concept of connecting a simple line to a specific location on this planet extremely interesting.

There are noticeable similarities and differences between John Cage's legacy and Zachary Lieberman's "Land Lines" project. They are alike in that both explore newfound innovations in their fields to provide an in-depth idea of what people can use daily. The difference lies in what exactly those fields are. Cage dives into the artistry of music, while Lieberman researches computation in a more poetic area. To summarize, they are the same in that both explore art. However, Cage focuses on music and Lieberman focuses on poetry.


MaxwellChu-FieldReport01

An interactive and computational project that I found intriguing was “The Bumper Engine”, created by SuperSonic68. This software was created for developing one’s own 3D Sonic the Hedgehog levels in Unity. It was based on another piece of software called “HedgePhysics”, created by LakeFeperd. The audience was anyone who had an interest in making their own Sonic the Hedgehog games. This project was based upon both custom software/scripts and commercial software. The overall experience that was created was enjoyment. The opportunities this project points to lie in the amount of potential level design the software makes possible. What I admire about this project is the creative possibilities of 3D level design within Sonic the Hedgehog that it exploits, compared to the linear level design used at that time for the series overall. It gives a fresh perspective on how these kinds of games can be played.

BumperEngine_MasterFolder.zip - SuperSonic68

youtube
