As a Web Programmer / Application Developer, my objective is to make a positive impact on clients, co-workers, and the Internet using my skills and experience to design & develop compelling and attractive websites, web apps & mobile apps. I enjoy working on projects that involve a mix of web and graphic design, web development, mobile application development, database management and programming.

Don't wanna be here? Send us removal request.

Text

Scope and Closures in JavaScript – Explained with Examples

You may have come across or written code similar to this when writing JavaScript:

function sayWord(word) { return () => console.log(word); } const sayHello = sayWord("hello"); sayHello(); // "hello"

This code is interesting for a couple of reasons. First, we can access word in the function returned from sayWord. Second, we have access to word’s value when we call sayHello – even though we call sayHello where we do not otherwise have access to word.

In this article, we’ll learn about scope and closures, which enable this behavior.

Introducing Scope in JavaScript

Scope is the first piece that will help us understand the previous example. A variable’s scope is the part of a program where it is available for use.

JavaScript variables are lexically scoped, meaning that we can determine a variable’s scope from where it is declared in the source code. (This is not entirely true: var variables are not lexically scoped, but we will discuss that shortly.)

Take the following example:

if (true) { const foo = "foo"; console.log(foo); // "foo" }

The if statement introduces a block scope by using a block statement. We say that foo is block-scoped to the if statement. This means it can only be accessed from within that block.

If we try to access foo outside of the block, we get a ReferenceError because it is out of scope:

if (true) { const foo = "foo"; console.log(foo); // "foo" } console.log(foo); // Uncaught ReferenceError: foo is not defined

Block statements in other forms, such as for and while loops, will also create a scope for block-scoped variables. For instance, foo is scoped within a function body below:

function sayFoo() { const foo = "foo"; console.log(foo); } sayFoo(); // "foo" console.log(foo); // Uncaught ReferenceError: foo is not defined

Nested Scopes and Functions

JavaScript allows nested blocks and therefore nested scopes. Nested scopes create a scope tree or scope chain.

Consider the code below, which nests multiple block statements:

if (true) { const foo = "foo"; console.log(foo); // "foo" if (true) { const bar = "bar"; console.log(foo); // "foo" if (true) { console.log(foo, bar); // "foo bar" } } }

JavaScript also allows us to nest functions:

function foo(bar) { function baz() { console.log(bar); } baz(); } foo("bar"); // "bar"

As expected, we can access variables from their direct scope (the scope where they get declared). We can also access variables from their inner scopes (the scopes that nest within their direct scope). That is, we can access variables from the scope they get declared in and from every inner scope.

Before we go further, we should clarify the difference in this behavior between variable declaration types.

Scope of let, const, and var in JavaScript

We can create variables with the let, const, and var declarations. For let and const, block scoping works as explained above. However, var behaves differently.

let and const

let and const create block-scoped variables. When declared within a block, they are only accessible within that block. This behavior was demonstrated in our previous examples:

if (true) { const foo = "foo"; console.log(foo); // "foo" } console.log(foo); // Uncaught ReferenceError: foo is not defined

var

Variables created with var are scoped to their nearest function or the global scope (which we will discuss shortly). They are not block scoped:

function foo() { if (true) { var foo = "foo"; } console.log(foo); } foo(); // "foo"

var can create confusing situations, and this information is only included for completeness. It is best to use let and const when possible. The rest of this article will pertain only to let and const variables.

If you’re interested in how var behaves in the example above, you should check out my article on hoisting.

Global and Module Scope in JavaScript

In addition to block scopes, variables can be scoped to the global and module scope.

In a web browser, the global scope is at the top level of a script. It is the root of the scope tree that we described earlier, and it contains all other scopes. Thus, creating a variable in the global scope makes it accessible in every scope:

<script> const foo = "foo"; </script> <script> console.log(foo); // "foo" function bar() { if (true) { console.log(foo); } } bar(); // "foo" </script>

Each module also has its own scope. Variables declared at the module level are only available within that module – they are not global:

<script type="module"> const foo = "foo"; </script> <script> console.log(foo); // Uncaught ReferenceError: foo is not defined </script>

Closures in JavaScript

Now that we understand scope, let’s go back to the example that we saw in the introduction:

function sayWord(word) { return () => console.log(word); } const sayHello = sayWord("hello"); sayHello(); // "hello"

Recall that there were two interesting points about this example:

The returned function from sayWord can access the word parameter

The returned function maintains the value of word when sayHello is called outside the scope of word

The first point can be explained by lexical scope: the returned function can access word because it exists in its outer scope.

The second point is because of closures: A closure is a function combined with references to the variables defined outside of it. Closures maintain the variable references, which allow functions to access variables outside of their scope. They “enclose” the function and the variables in its environment.

Examples of Closures in JavaScript

You have probably encountered and used closures frequently without being aware of it. Let’s explore some more ways to use closures.

Callbacks

It is common for a callback to reference a variable declared outside of itself. For example:

function getCarsByMake(make) { return cars.filter(x => x.make === make); }

make is available in the callback because of lexical scoping, and the value of make is persisted when the anonymous function is called by filter because of a closure.

Storing state

We can use closures to return objects from functions that store state. Consider the following makePerson function which returns an object that can store and change a name:

function makePerson(name) { let _name = name; return { setName: (newName) => (_name = newName), getName: () => _name, }; } const me = makePerson("Zach"); console.log(me.getName()); // "Zach" me.setName("Zach Snoek"); console.log(me.getName()); // "Zach Snoek"

This example illustrates how closures do not just freeze the values of variables from a function’s outer scope during creation. Instead, they maintain the references throughout the closure’s lifetime.

Private methods

If you’re familiar with object-oriented programming, you might have noticed that our previous example closely resembles a class that stores private state and exposes public getter and setter methods. We can extend this object-oriented parallel further by using closures to implement private methods:

function makePerson(name) { let _name = name; function privateSetName(newName) { _name = newName; } return { setName: (newName) => privateSetName(newName), getName: () => _name, }; }

privateSetName is not directly accessible to consumers and it can access the private state variable _name through a closure.

React event handlers

Lastly, closures are common in React event handlers. The following Counter component is modified from the React docs:

function Counter({ initialCount }) { const [count, setCount] = React.useState(initialCount); return ( <> <button onClick={() => setCount(initialCount)}>Reset</button> <button onClick={() => setCount((prevCount) => prevCount - 1)}> - </button> <button onClick={() => setCount((prevCount) => prevCount + 1)}> + </button> <button onClick={() => alert(count)}>Show count</button> </> ); } function App() { return <Counter initialCount={0} />; }

Closures make it possible for:

the reset, decrement, and increment button click handlers to access setCount

the reset button to access initialCount from Counter's props

and the “Show count” button to display the count state.

Closures are important in other parts of React, such as props and hooks. Discussion about these topics is out of scope for this article. I recommend reading this post from Kent C. Dodds or this post from Dan Abramov to learn more about the role that closures play in React.

Conclusion

Scope refers to the part of a program where we can access a variable. JavaScript allows us to nest scopes, and variables declared in outer scopes are accessible from all inner ones. Variables can be globally-, module-, or block-scoped.

A closure is a function enclosed with references to the variables in its outer scope. Closures allow functions to maintain connections with outer variables, even outside the scope of the variables.

There are many uses of closures, from creating class-like structures that store state and implement private methods to passing callbacks to event handlers.

Let's connect

If you’re interested in more articles like this, subscribe to my newsletter and connect with me on LinkedIn and Twitter!

Acknowledgements

Thanks to Bryan Smith for providing feedback on drafts of this post.

Cover photo by Karine Avetisyan on Unsplash.

via freeCodeCamp.org https://ift.tt/M4sX1a6

0 notes

Text

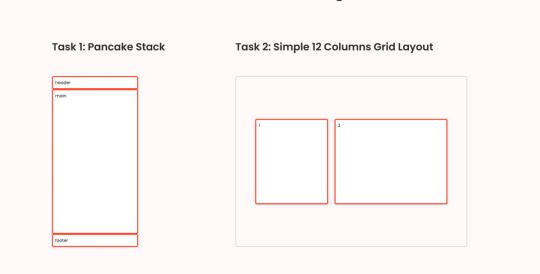

Demystifying Grids For Developers and Designers

Designers and developers each have their own individual definition and use of grids, making the concept a relatively nebulous and unclear concept to all. To some, grids could mean layout and structure, while to others grids refer to interactive tables that manage data. Understanding the target audience is key here because there might not be a universally understandable direction which can lead designers to be misguided during the cross-collaboration process. When given the time, developers and designers can fully evaluate the user story and create a thoughtful user experience together through the use of grids. But first, we need to find common ground to work from.

Identifying a Grid

As we mentioned, it is important to know your audience when talking about Grids. If you come from a typical design background, the word “Grid” instantly brings you to think about layout (either print or online). The term has even penetrated CSS with the Grid Layout. As you can see in this article, that demonstrates the use of the term “Grid” generically for design layout purposes.

via DZone Web Dev Zone https://ift.tt/zOXYu6qIs

1 note

·

View note

Text

How Web Workers Work in JavaScript – With a Practical JS Example

In this article, I will walk you through an example that will show you how web workers function in JavaScript with the help of WebSockets.

I think it's helpful to work with a practical use case because it is much simpler to understand the concepts when you can relate them to real life.

So in this guide, you will be learning what web workers are in JavaScript, you'll get a brief introduction to WebSockets, and you'll see how you can manage sockets in the proper way.

This article is quite application/hands-on oriented, so I would suggest trying the example out as you go along to get a much better understanding.

Let’s dive in.

Table of contents

Prerequisites

Before you start reading this article, you should have a basic understanding of the following topics:

What are web workers in JavaScript?

A web worker is a piece of browser functionality. It is the real OS threads that can be spawned in the background of your current page so that it can perform complex and resource-intensive tasks.

Imagine that you have some large data to fetch from the server, or some complex rendering needs to be done on the UI. If you do this directly on your webpage then the page might get jankier and will impact the UI.

To mitigate this, you can simply create a thread – that is a web worker – and let the web worker take care of the complex stuff.

You can communicate with the web worker in a pretty simple manner which can be used to transfer data to and fro from the worker to the UI.

Common examples of web workers would be:

Dashboard pages that display real-time data such as stock prices, real-time active users, and so on

Fetching huge files from the server

Autosave functionality

You can create a web worker using the following syntax:

const worker = new Worker("<worker_file>.js");

Worker is an API interface that lets you create a thread in the background. We need to pass a parameter, that is a <worker_file>.js file. This specifies the worker file the API needs to execute.

NOTE: A thread is created once a Worker call is initiated. This thread only communicates with its creator, that is the file which created this thread.

A worker can be shared or used by multiple consumers/scripts. These are called shared workers. The syntax of the shared worker is very similar to that of the above mentioned workers.

const worker = new SharedWorker("<worker_file>.js");

You can read more about SharedWorkers in this guide.

History of web workers

Web workers execute in a different context, that is they do not execute in a global scope such as window context. Web workers have their own dedicated worker context which is called DedicatedWorkerGlobalScope.

There are some cases where you can't use web workers, though. For example, you can't use them to manipulate the DOM or the properties of the window object. This is because the worker does not have the access to the window object.

Web workers can also spawn new web workers. Web workers communicate with their creator using certain methods like postMessage, onmessage, and onerror. We will look into these methods closely in the later sections of this article.

Brief Introduction to Web Sockets

A web socket is a type of communication that happens between two parties/entities using a WebSocket protocol. It actually provides a way to communicate between the two connected entities in a persistent manner.

You can create a simple web socket like below:

const socket = new WebSocket("ws://example.com");

Over here we have created a simple socket connection. You'll notice that we have passed a parameter to the WebSocket constructor. This parameter is a URL at which the connection should be established.

You can read more about web sockets by referring to the Websockets link in the prerequisites.

Use Case Description

NOTE: Context, Container, and Class diagrams drawn in this blog post don't accurately follow the exact conventions of these diagrams. They're approximated here so that you can understand the basic concepts.

Before we start, I would suggest reading up on c4models, container diagrams, and context diagrams. You can find resources about them in the prerequisites section.

In this article, we are going to consider the following use case: data transfer using web workers via socket protocol.

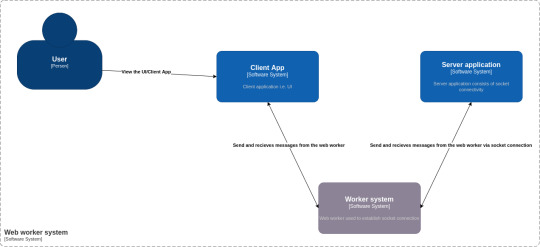

We are going to build a web application which will plot the data on a line chart every 1.5 seconds. The web application will receive the data from the socket connection via web workers. Below is the context diagram of our use case:

Container Diagram

As you can see from the above diagram, there are 4 main components to our use case:

Person: A user who is going to use our application

Software system: Client App – This is the UI of our application. It consists of DOM elements and a web worker.

Software system: Worker system – This is a worker file that resides in the client app. It is responsible for creating a worker thread and establishing the socket connection.

Software system: Server application – This is a simple JavaScript file which can be executed by node to create a socket server. It consists of code which helps to read messages from the socket connection.

Now that we understand the use case, let's dive deep into each of these modules and see how the whole application works.

Project Structure

Please follow this link to get the full code for the project that I developed for this article.

Our project is divided into two folders. First is the server folder which consists of server code. The second is the client folder, which consists of the client UI, that is a React application and the web worker code.

Following is the directory structure:

├── client │ ├── package.json │ ├── package-lock.json │ ├── public │ │ ├── favicon.ico │ │ ├── index.html │ │ ├── logo192.png │ │ ├── logo512.png │ │ ├── manifest.json │ │ └── robots.txt │ ├── README.md │ ├── src │ │ ├── App.css │ │ ├── App.jsx │ │ ├── components │ │ │ ├── LineChartSocket.jsx │ │ │ └── Logger.jsx │ │ ├── index.css │ │ ├── index.js │ │ ├── pages │ │ │ └── Homepage.jsx │ │ ├── wdyr.js │ │ └── workers │ │ └── main.worker.js │ └── yarn.lock └── server ├── package.json ├── package-lock.json └── server.mjs

To run the application, you first need to start the socket server. Execute the following commands one at a time to start the socket server (assuming you are in the parent directory):

cd server node server.mjs

Then start the client app by running the following commands (assuming you are in the parent directory):

cd client yarn run start

Open http://localhost:3000 to start the web app.

Client and Server Application

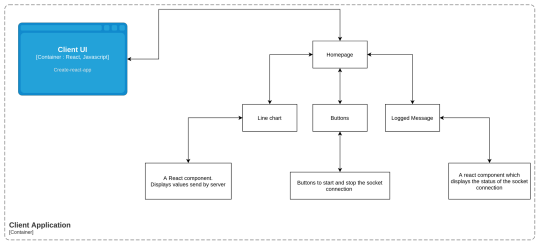

The client application is a simple React application, that is CRA app, which consists of a Homepage. This home page consists of the following elements:

Two buttons: start connection and stop connection which will help to start and stop the socket connection as required.

A line chart component - This component will plot the data that we receive from the socket at regular intervals.

Logged message - This is a simple React component that will display the connection status of our web sockets.

Below is the container diagram of our client application.

Container Diagram: Client Application

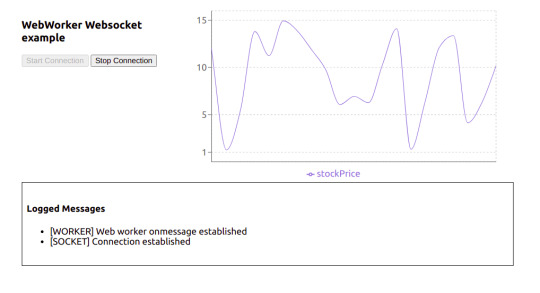

Below is how the UI will look:

Actual UI

To check out the code for the client UI, go to the client folder. This is a regular create-react-app, except that I have removed some boilerplate code that we don't need for this project.

App.jsx is actually the starter code. If you check this out, we have called the <Homepage /> component in it.

Now let's have a look at the Homepage component.

const Homepage = () => { const [worker, setWorker] = useState(null); const [res, setRes] = useState([]); const [log, setLog] = useState([]); const [buttonState, setButtonState] = useState(false); const hanldeStartConnection = () => { // Send the message to the worker [postMessage] worker.postMessage({ connectionStatus: "init", }); }; const handleStopConnection = () => { worker.postMessage({ connectionStatus: "stop", }); }; //UseEffect1 useEffect(() => { const myWorker = new Worker( new URL("../workers/main.worker.js", import.meta.url) ); //NEW SYNTAX setWorker(myWorker); return () => { myWorker.terminate(); }; }, []); //UseEffect2 useEffect(() => { if (worker) { worker.onmessage = function (e) { if (typeof e.data === "string") { if(e.data.includes("[")){ setLog((preLogs) => [...preLogs, e.data]); } else { setRes((prevRes) => [...prevRes, { stockPrice: e.data }]); } } if (typeof e.data === "object") { setButtonState(e.data.disableStartButton); } }; } }, [worker]); return ( <> <div className="stats"> <div className="control-panel"> <h3>WebWorker Websocket example</h3> <button id="start-connection" onClick={hanldeStartConnection} disabled={!worker || buttonState} > Start Connection </button> <button id="stop-connection" onClick={handleStopConnection} disabled={!buttonState} > Stop Connection </button> </div> <LineChartComponent data={res} /> </div> <Logger logs={log}/> </> ); };

As you can see, it's just a regular functional component that renders two buttons – a line chart, and a custom component Logger.

Now that we know how our homepage component looks, let's dive into how the web worker thread is actually created. In the above component you can see there are two useEffect hooks used.

The first one is used for creating a new worker thread. It's a simple call to the Worker constructor with a new operator as we have seen in the previous section of this article.

But there are some difference over here: we have passed an URL object to the worker constructor rather than passing the path of the worker file in the string.

const myWorker = new Worker(new URL("../workers/main.worker.js", import.meta.url));

You can read more about this syntax here.

If you try to import this web worker like below, then our create-react-app won’t be able to load/bundle it properly so you will get an error since it has not found the worker file during bundling:

const myWorker = new Worker("../workers/main.worker.js");

Next, we also don’t want our application to run the worker thread even after the refresh, or don’t want to spawn multiple threads when we refresh the page. To mitigate this, we'll return a callback in the same useEffect. We use this callback to perform cleanups when the component unmounts. In this case, we are terminating the worker thread.

We use the useEffect2 to handle the messages received from the worker.

Web workers have a build-in property called onmessage which helps receive any messages sent by the worker thread. The onmessage is an event handler of the worker interface. It gets triggered whenever a message event is triggered. This message event is generally triggered whenever the postMessage handler is executed (we will look more into this in a later section).

So in order for us to send a message to the worker thread, we have created two handlers. The first is handleStartConnection and the second is handleStopConnection. Both of them use the postMessage method of the worker interface to send the message to the worker thread.

We will talk about the message {connectionStatus: init} in our next section.

You can read more about the internal workings of the onmessage and postMessage in the following resources:

Since we now have a basic understanding about how our client code is working, then let's move on to learn about the Worker System in our context diagram above.

Worker System

To understand the code in this section, make sure you go through the file src/workers/main.worker.js.

To help you understand what's going on here, we will divide this code into three parts:

A self.onmessage section

How the socket connection is managed using the socketManagement() function

Why we need the socketInstance variable at the top

How self.onmessage works

Whenever you create a web worker application, you generally write a worker file which handles all the complex scenarios that you want the worker to perform. This all happens in the main.worker.js file. This file is our worker file.

In the above section, we saw that we established a new worker thread in the useEffect. Once we created the thread, we also attached the two handlers to the respective start and stop connection buttons.

The start connection button will execute the postMessage method with message: {connectionStatus: init} . This triggers the message event, and since the message event is triggered, all the message events are captured by the onmessage property.

In our main.worker.js file, we have attached a handler to this onmessage property:

self.onmessage = function (e) { const workerData = e.data; postMessage("[WORKER] Web worker onmessage established"); switch (workerData.connectionStatus) { case "init": socketInstance = createSocketInstance(); socketManagement(); break; case "stop": socketInstance.close(); break; default: socketManagement(); } }

So whenever any message event is triggered in the client, it will get captured in this event handler.

The message {connectionStatus: init} that we send from the client is received in the event e. Based on the value of connectionStatus we use the switch case to handle the logic.

NOTE: We have added this switch case because we need to isolate some part of the code which we do not want to execute all the time (we will look into this in a later section).

How the socket connection is managed using the socketManagement() function

There are some reasons why I have shifted the logic of creating and managing a socket connection into a separate function. Here is the code for a better understanding of the point I am trying to make:

function socketManagement() { if (socketInstance) { socketInstance.onopen = function (e) { console.log("[open] Connection established"); postMessage("[SOCKET] Connection established"); socketInstance.send(JSON.stringify({ socketStatus: true })); postMessage({ disableStartButton: true }); }; socketInstance.onmessage = function (event) { console.log(`[message] Data received from server: ${event.data}`); postMessage( event.data); }; socketInstance.onclose = function (event) { if (event.wasClean) { console.log(`[close] Connection closed cleanly, code=${event.code}`); postMessage(`[SOCKET] Connection closed cleanly, code=${event.code}`); } else { // e.g. server process killed or network down // event.code is usually 1006 in this case console.log('[close] Connection died'); postMessage('[SOCKET] Connection died'); } postMessage({ disableStartButton: false }); }; socketInstance.onerror = function (error) { console.log(`[error] ${error.message}`); postMessage(`[SOCKET] ${error.message}`); socketInstance.close(); }; } }

This is a function that will help you manage your socket connection:

For receiving the message from the socket server we have the onmessage property which is assigned an event handler.

Whenever a socket connection is opened, you can perform certain operations. To do that we have the onopen property which is assigned to an event handler.

And if any error occurs or when we are closing the connection then, we use onerror and onclose properties of the socket.

For creating a socket connection there is a separate function altogether:

function createSocketInstance() { let socket = new WebSocket("ws://localhost:8080"); return socket; }

Now all of these functions are called in a switch case like below in the main.worker.js file:

self.onmessage = function (e) { const workerData = e.data; postMessage("[WORKER] Web worker onmessage established"); switch (workerData.connectionStatus) { case "init": socketInstance = createSocketInstance(); socketManagement(); break; case "stop": socketInstance.close(); break; default: socketManagement(); } }

So based on what message the client UI sends to the worker the appropriate function will be executed. It is pretty self-explanatory on what message which particular function should be triggered, based on the above code.

Now consider a scenario where we placed all the code inside self.onmessage.

self.onmessage = function(e){ console.log("Worker object present ", e); postMessage({isLoading: true, data: null}); let socket = new WebSocket("ws://localhost:8080"); socket.onopen = function(e) { console.log("[open] Connection established"); console.log("Sending to server"); socket.send("My name is John"); }; socket.onmessage = function(event) { console.log(`[message] Data received from server: ${event.data}`); }; socket.onclose = function(event) { if (event.wasClean) { console.log(`[close] Connection closed cleanly, code=${event.code} reason=${event.reason}`); } else { // e.g. server process killed or network down // event.code is usually 1006 in this case console.log('[close] Connection died'); } }; socket.onerror = function(error) { console.log(`[error] ${error.message}`); }; }

This would cause the following problems:

On every postMessage call made by the client UI, there would have been a new socket instance.

It would have been difficult to close the socket connection.

Because of these reasons, all the socket management code is written in a function socketManagement and catered using a switch case.

Why we need the socketInstance variable at the top

We do need a socketInstance variable at the top because this will store the socket instance which was previously created. It is a safe practice since no one can access this variable externally as main.worker.js is a separate module altogether.

Communication between the UI and the socket via web worker

Now that we understand which part of the code is responsible for which section, we will take a look at how we establish a socket connection via webworkers. We'll also see how we respond via socket server to display a line chart on the UI.

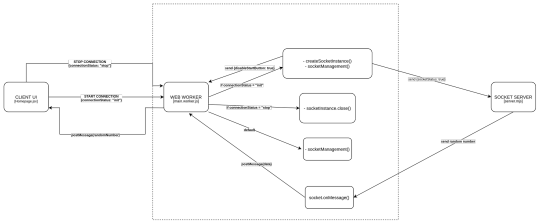

End-to-end flow of the application

NOTE: Some calls are purposefully not shown in the diagram since it will make the diagram cluttered. Make sure you refer to the code as well while referring to this diagram.

Now let's first understand what happens when you click on the start connection button on the UI:

One thing to notice over here is that our web worker thread is created once the component is mounted, and is removed/terminated when the component is unmounted.

Once the start connection button is clicked, a postMessage call is made with {connectionStatus: init}

The web worker’s onmessage event handler which is listening to all the message events comes to know that it has received connectionStatus as init. It matches the case, that is in the switch case of main.worker.js. It then calls the createSocketInstance() which returns a new socket connection at the URL: ws://localhost:8080

After this a socketManagement() function is called which checks if the socket is created and then executes a couple of operations.

In this flow, since the socket connection is just established therefore, socketInstance’s onpen event handler is executed.

This will send a {socketStatus: true} message to the socket server. This will also send a message back to the client UI via postMessage({ disableStartButton: true}) which tells the client UI to disable the start button.

Whenever the socket connection is established, then the server socket’s on('connection', ()=>{}) is invoked. So in step 3, this function is invoked at the server end.

Socket’s on('message', () => {}) is invoked whenever a message is sent to the socket. So at step 6, this function is invoked at the server end. This will check if the socketStatus is true, and then it will start sending a random integer every 1.5 seconds to the client UI via web workers.

Now that we understood how the connection is established, let's move on to understand how the socket server sends the data to the client UI:

As discussed above, socket server received the message to send the data, that is a random number every 1.5 second.

This data is recieved on the web worker’s end using the onmessage handler.

This handler then calls the postMessage function and sends this data to the UI.

After receiving the data it appends it to an array as a stockPrice object.

This acts as a data source for our line chart component and gets updated every 1.5 seconds.

Now that we understand how the connection is established, let's move on to understand how the socket server sends the data to the client UI:

As discussed above, socket server recieved the message to send the data, that is a random number, every 1.5 seconds.

This data is recieved on the web worker’s end using the socket's onmessage handler.

This handler then calls the postMessage function of the web worker and sends this data to the UI.

After receiving the data via useEffect2 it appends it to an array as a stockPrice object.

This acts as a data source for our line chart component and gets updated every 1.5 seconds.

NOTE: We are using recharts for plotting the line chart. You can find more information about it at the official docs.

Here is how our application will look in action:

Working Example

Summary

So this was a quick introduction to what web workers are and how you can use them to solve complex problems and create better UIs. You can use web workers in your projects to handle complex UI scenarios.

If you want to optimize your workers, read up on the below libraries:

Thank you for reading!

Follow me on twitter, github, and linkedIn.

If you read this far, tweet to the author to show them you care.

1 note

·

View note

Text

5 Big Web Design Predictions for 2022

Every year, at this time, blogs like this one like to try and predict what’s going to happen in the year ahead. It’s a way of drawing a line under the archive and starting afresh. A rejuvenation that, as humans, we find life-affirming.

Ten years ago, I would have had high confidence in these predictions — after all I was eventually right about SVG adoption, even if it took a decade. But the last few years have shown that web design is tightly interwoven with the muggle world, and that world is anything but predictable.

So as we look at what might occur in the next year (or five), think of it less as a set of predictions and more as a wishlist.

Last Year’s Predictions

When I write this post every January, I like to keep myself honest by glancing back at the previous year’s predictions to gauge how accurate (or not) my predictions have been.

Last year I predicted the long-term trend for minimalism would end, WordPress would decline, cryptocurrency would go mainstream, and then hedged my bets by saying we’d make both more and fewer video calls.

Gradients, maximalism, and the nineties revival pulled us away from minimalism. It’s still popular, just not as dominant.

WordPress is still the biggest CMS in the world and will continue to be for some time. But the relentless grind of no-code site builders at the low end, and being outperformed by better CMS at the high end, mean that WordPress has passed its peak.

Over-inflated predictions for BitCoin reaching $100k by December 2021 turned out to be a damp squib. In the end, Bitcoin only tripled in value in 2021. However, with micro-tipping and major tech companies moving into the arena, it’s clear digital currency arrived in the public consciousness in 2021.

And how could I be wrong about more but also fewer video calls? So I’m calling that my first clean sweep ever. With that heady boast, let’s take a look at the next twelve months.

What Not to Expect in 2022

Do not expect the Metaverse to be significant in anything but marketing speak. Yes, the hardware is slowly becoming more available, but the Metaverse in 2022 is like playing an MMORPG on PS5: theoretically, great fun, until you discover that absolutely none of your friends can get their hands on a console.

Ignore the blog posts predicting a noughties-era retro trend. All those writers have done is looked at the nineties-era trend and added a decade. Fashions aren’t mathematical; they’re poetic. Retro happens when people find a period that rhymes with present-day hopes and fears. After the last couple of years, if we revisit a decade, it’s likely to be the late-forties.

Finally, don’t expect seismic change. Material design, parallax scrolling, and jQuery are still with us and are still valid choices under the right circumstances. Trends aren’t neat; they don’t start in January and conclude in December.

5 Web Design Predictions for 2022

Predictions tend to be self-fulfilling. So we’ve limited ourselves to five trends that we believe are either positive or, at worst harmless. Of course, there are no guarantees, but if these come to pass, we’ll be in good shape for 2023.

1. The Blockchain is Coming

Underpinning the cryptocurrency industry are blockchains. In simple terms, they’re a set of data that can be appended to but can’t be edited or deleted. Think of it as version control for data.

As with most technology, the first wave has been a way to make a fast buck. However, the exciting development is blockchain technology itself and the transformative nature of the approach. For example, Médecins Sans Frontières reportedly stores refugees’ medical records on the blockchain.

Imagine the Internet as a set of data, editable for a micro-fee, and freely accessed by anyone anywhere. Instead of millions of sites, a single, secure, autonomous source of truth. Someone somewhere’s working on it.

2. Positivity & Playfulness & A11y

Even before world events descended into an endless tirade of grim news, time was running out for dull, corporate, geometric sans-serif design.

We added gradients, we added personality, we embraced humor. And contrary to the established business logic, we still make money. Over the past few years, there have been extraordinary efforts by designers and developers to examine, test, and champion accessibility, and thanks to them, inclusive design is no longer reliant on the lowest common denominator.

In 2022 you can get experimental without obstructing 10%+ of your users.

3. Everything Green

Green is a fascinating color, the primary that isn’t (except in RGB, when it is).

Green has the same visual weight as blue, is substantially more flexible, and yet to date, has been radically underutilized in digital design.

Green has a prominent cultural association with the environment. At a time when tech companies are desperate to emphasize their ethical credentials, marketing companies will inevitably begin promoting a brand color shift to green as a quick fix for all those dumped chemicals, strip mines, and plastic-filled seas.

We’ve already seen earthy hues acquire popular appeal. At the other end of the vibrancy scale, neons are popular. Green spans both approaches with everything from calm sages to acidic neons.

In 2022, if you’re looking for a color to capture the moment, look to green.

4. Hero Text

A picture is supposed to be worth 1000 words, although I’m not sure anyone has actually tried to measure it. The problem is that sites increasingly rely on stock images, so the 1000 words that we’re getting may or may not accurately reflect 100% of our message.

In 2022, a handful of well-chosen words will be worth more than an image, with hero images taking a back seat to large hero text. This is aided by a number of minor trends, the most notable of which is the willingness of businesses to look beyond the geometric sans-serif to a more expressive form of typography.

Reading through the prediction posts on sites other than this, almost everyone agrees on large hero text replacing images, which virtually guarantees it won’t happen. Still, at the start of 2022, this seems to be the direction we’re taking.

5. Bring the Noise

One of the unexpected consequences of the past couple of years has been a renewed connection with nature. The effortless complexity in nature is endlessly engaging.

We’ve already begun to popularise gradients — there are no flat colors in nature — and the next logical step is the addition of noise.

In visual terms, noise is the grainy texture that sits so beautifully in vector illustrations. Noise has dipped in and out of trends for years, hampered a little by the leap in file size it creates. However, with WebP and Avif file types, noise is now usable on production sites.

Designing in 2022, when in doubt, throw some noise at it.

Featured image via Unsplash.

Source

The post 5 Big Web Design Predictions for 2022 first appeared on Webdesigner Depot.

via Webdesigner Depot https://ift.tt/gPuemDhTy

0 notes

Text

Creating a Typography Motion Trail Effect with Three.js

Framebuffers are a key feature in WebGL when it comes to creating advanced graphical effects such as depth-of-field, bloom, film grain or various types of anti-aliasing and have already been covered in-depth here on Codrops. They allow us to “post-process” our scenes, applying different effects on them once rendered. But how exactly do they work?

By default, WebGL (and also Three.js and all other libraries built on top of it) render to the default framebuffer, which is the device screen. If you have used Three.js or any other WebGL framework before, you know that you create your mesh with the correct geometry and material, render it, and voilà, it’s visible on your screen.

However, we as developers can create new framebuffers besides the default one and explicitly instruct WebGL to render to them. By doing so, we render our scenes to image buffers in the video card’s memory instead of the device screen. Afterwards, we can treat these image buffers like regular textures and apply filters and effects before eventually rendering them to the device screen.

Here is a video breaking down the post-processing and effects in Metal Gear Solid 5: Phantom Pain that really brings home the idea. Notice how it starts by footage from the actual game rendered to the default framebuffer (device screen) and then breaks down how each framebuffer looks like. All of these framebuffers are composited together on each frame and the result is the final picture you see when playing the game:

youtube

So with the theory out of the way, let’s create a cool typography motion trail effect by rendering to a framebuffer!

Our skeleton app

Let’s render some 2D text to the default framebuffer, i.e. device screen, using threejs. Here is our boilerplate:

const LABEL_TEXT = 'ABC' const clock = new THREE.Clock() const scene = new THREE.Scene() // Create a threejs renderer: // 1. Size it correctly // 2. Set default background color // 3. Append it to the page const renderer = new THREE.WebGLRenderer() renderer.setClearColor(0x222222) renderer.setClearAlpha(0) renderer.setSize(innerWidth, innerHeight) renderer.setPixelRatio(devicePixelRatio || 1) document.body.appendChild(renderer.domElement) // Create an orthographic camera that covers the entire screen // 1. Position it correctly in the positive Z dimension // 2. Orient it towards the scene center const orthoCamera = new THREE.OrthographicCamera( -innerWidth / 2, innerWidth / 2, innerHeight / 2, -innerHeight / 2, 0.1, 10, ) orthoCamera.position.set(0, 0, 1) orthoCamera.lookAt(new THREE.Vector3(0, 0, 0)) // Create a plane geometry that spawns either the entire // viewport height or width depending on which one is bigger const labelMeshSize = innerWidth > innerHeight ? innerHeight : innerWidth const labelGeometry = new THREE.PlaneBufferGeometry( labelMeshSize, labelMeshSize ) // Programmaticaly create a texture that will hold the text let labelTextureCanvas { // Canvas and corresponding context2d to be used for // drawing the text labelTextureCanvas = document.createElement('canvas') const labelTextureCtx = labelTextureCanvas.getContext('2d') // Dynamic texture size based on the device capabilities const textureSize = Math.min(renderer.capabilities.maxTextureSize, 2048) const relativeFontSize = 20 // Size our text canvas labelTextureCanvas.width = textureSize labelTextureCanvas.height = textureSize labelTextureCtx.textAlign = 'center' labelTextureCtx.textBaseline = 'middle' // Dynamic font size based on the texture size // (based on the device capabilities) labelTextureCtx.font = `${relativeFontSize}px Helvetica` const textWidth = labelTextureCtx.measureText(LABEL_TEXT).width const widthDelta = labelTextureCanvas.width / textWidth const fontSize = relativeFontSize * widthDelta labelTextureCtx.font = `${fontSize}px Helvetica` labelTextureCtx.fillStyle = 'white' labelTextureCtx.fillText(LABEL_TEXT, labelTextureCanvas.width / 2, labelTextureCanvas.height / 2) } // Create a material with our programmaticaly created text // texture as input const labelMaterial = new THREE.MeshBasicMaterial({ map: new THREE.CanvasTexture(labelTextureCanvas), transparent: true, }) // Create a plane mesh, add it to the scene const labelMesh = new THREE.Mesh(labelGeometry, labelMaterial) scene.add(labelMesh) // Start out animation render loop renderer.setAnimationLoop(onAnimLoop) function onAnimLoop() { // On each new frame, render the scene to the default framebuffer // (device screen) renderer.render(scene, orthoCamera) }

This code simply initialises a threejs scene, adds a 2D plane with a text texture to it and renders it to the default framebuffer (device screen). If we are execute it with threejs included in our project, we will get this:

See the Pen Step 1: Render to default framebuffer by Georgi Nikoloff (@gbnikolov) on CodePen.0

Again, we don’t explicitly specify otherwise, so we are rendering to the default framebuffer (device screen).

Now that we managed to render our scene to the device screen, let’s add a framebuffer (THEEE.WebGLRenderTarget) and render it to a texture in the video card memory.

Rendering to a framebuffer

Let’s start by creating a new framebuffer when we initialise our app:

const clock = new THREE.Clock() const scene = new THREE.Scene() // Create a new framebuffer we will use to render to // the video card memory const renderBufferA = new THREE.WebGLRenderTarget( innerWidth * devicePixelRatio, innerHeight * devicePixelRatio ) // ... rest of application

Now that we have created it, we must explicitly instruct threejs to render to it instead of the default framebuffer, i.e. device screen. We will do this in our program animation loop:

function onAnimLoop() { // Explicitly set renderBufferA as the framebuffer to render to renderer.setRenderTarget(renderBufferA) // On each new frame, render the scene to renderBufferA renderer.render(scene, orthoCamera) }

And here is our result:

See the Pen Step 2: Render to a framebuffer by Georgi Nikoloff (@gbnikolov) on CodePen.0

As you can see, we are getting an empty screen, yet our program contains no errors – so what happened? Well, we are no longer rendering to the device screen, but another framebuffer! Our scene is being rendered to a texture in the video card memory, so that’s why we see the empty screen.

In order to display this generated texture containing our scene back to the default framebuffer (device screen), we need to create another 2D plane that will cover the entire screen of our app and pass the texture as material input to it.

First we will create a fullscreen 2D plane that will span the entire device screen:

// ... rest of initialisation step // Create a second scene that will hold our fullscreen plane const postFXScene = new THREE.Scene() // Create a plane geometry that covers the entire screen const postFXGeometry = new THREE.PlaneBufferGeometry(innerWidth, innerHeight) // Create a plane material that expects a sampler texture input // We will pass our generated framebuffer texture to it const postFXMaterial = new THREE.ShaderMaterial({ uniforms: { sampler: { value: null }, }, // vertex shader will be in charge of positioning our plane correctly vertexShader: ` varying vec2 v_uv; void main () { // Set the correct position of each plane vertex gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0); // Pass in the correct UVs to the fragment shader v_uv = uv; } `, fragmentShader: ` // Declare our texture input as a "sampler" variable uniform sampler2D sampler; // Consume the correct UVs from the vertex shader to use // when displaying the generated texture varying vec2 v_uv; void main () { // Sample the correct color from the generated texture vec4 inputColor = texture2D(sampler, v_uv); // Set the correct color of each pixel that makes up the plane gl_FragColor = inputColor; } ` }) const postFXMesh = new THREE.Mesh(postFXGeometry, postFXMaterial) postFXScene.add(postFXMesh) // ... animation loop code here, same as before

As you can see, we are creating a new scene that will hold our fullscreen plane. After creating it, we need to augment our animation loop to render the generated texture from the previous step to the fullscreen plane on our screen:

function onAnimLoop() { // Explicitly set renderBufferA as the framebuffer to render to renderer.setRenderTarget(renderBufferA) // On each new frame, render the scene to renderBufferA renderer.render(scene, orthoCamera) // 👇 // Set the device screen as the framebuffer to render to // In WebGL, framebuffer "null" corresponds to the default // framebuffer! renderer.setRenderTarget(null) // 👇 // Assign the generated texture to the sampler variable used // in the postFXMesh that covers the device screen postFXMesh.material.uniforms.sampler.value = renderBufferA.texture // 👇 // Render the postFX mesh to the default framebuffer renderer.render(postFXScene, orthoCamera) }

After including these snippets, we can see our scene once again rendered on the screen:

See the Pen Step 3: Display the generated framebuffer on the device screen by Georgi Nikoloff (@gbnikolov) on CodePen.0

Let’s recap the necessary steps needed to produce this image on our screen on each render loop:

Create renderTargetA framebuffer that will allow us to render to a separate texture in the users device video memory

Create our “ABC” plane mesh

Render the “ABC” plane mesh to renderTargetA instead of the device screen

Create a separate fullscreen plane mesh that expects a texture as an input to its material

Render the fullscreen plane mesh back to the default framebuffer (device screen) using the generated texture created by rendering the “ABC” mesh to renderTargetA

Achieving the persistence effect by using two framebuffers

We don’t have much use of framebuffers if we are simply displaying them as they are to the device screen, as we do right now. Now that we have our setup ready, let’s actually do some cool post-processing.

First, we actually want to create yet another framebuffer – renderTargetB, and make sure it and renderTargetA are let variables, rather then consts. That’s because we will actually swap them at the end of each render so we can achieve framebuffer ping-ponging.

“Ping-ponging” in WebGl is a technique that alternates the use of a framebuffer as either input or output. It is a neat trick that allows for general purpose GPU computations and is used in effects such as gaussian blur, where in order to blur our scene we need to:

Render it to framebuffer A using a 2D plane and apply horizontal blur via the fragment shader

Render the result horizontally blurred image from step 1 to framebuffer B and apply vertical blur via the fragment shader

Swap framebuffer A and framebuffer B

Keep repeating steps 1 to 3 and incrementally applying blur until desired gaussian blur radius is achieved.

Here is a small chart illustrating the steps needed to achieve ping-pong:

So with that in mind, we will render the contents of renderTargetA into renderTargetB using the postFXMesh we created and apply some special effect via the fragment shader.

Let’s kick things off by creating our renderTargetB:

let renderBufferA = new THREE.WebGLRenderTarget( // ... ) // Create a second framebuffer let renderBufferB = new THREE.WebGLRenderTarget( innerWidth * devicePixelRatio, innerHeight * devicePixelRatio )

Next up, let’s augment our animation loop to actually do the ping-pong technique:

function onAnimLoop() { // 👇 // Do not clear the contents of the canvas on each render // In order to achieve our ping-pong effect, we must draw // the new frame on top of the previous one! renderer.autoClearColor = false // 👇 // Explicitly set renderBufferA as the framebuffer to render to renderer.setRenderTarget(renderBufferA) // 👇 // Render the postFXScene to renderBufferA. // This will contain our ping-pong accumulated texture renderer.render(postFXScene, orthoCamera) // 👇 // Render the original scene containing ABC again on top renderer.render(scene, orthoCamera) // Same as before // ... // ... // 👇 // Ping-pong our framebuffers by swapping them // at the end of each frame render const temp = renderBufferA renderBufferA = renderBufferB renderBufferB = temp }

If we are to render our scene again with these updated snippets, we will see no visual difference, even though we do in fact alternate between the two framebuffers to render it. That’s because, as it is right now, we do not apply any special effects in the fragment shader of our postFXMesh.

Let’s change our fragment shader like so:

// Sample the correct color from the generated texture // 👇 // Notice how we now apply a slight 0.005 offset to our UVs when // looking up the correct texture color vec4 inputColor = texture2D(sampler, v_uv + vec2(0.005)); // Set the correct color of each pixel that makes up the plane // 👇 // We fade out the color from the previous step to 97.5% of // whatever it was before gl_FragColor = vec4(inputColor * 0.975);

With these changes in place, here is our updated program:

See the Pen Step 4: Create a second framebuffer and ping-pong between them by Georgi Nikoloff (@gbnikolov) on CodePen.0

Let’s break down one frame render of our updated example:

We render renderTargetB result to renderTargetA

We render our “ABC” text to renderTargetA, compositing it on top of renderTargetB result in step 1 (we do not clear the contents of the canvas on new renders, because we set renderer.autoClearColor = false)

We pass the generated renderTargetA texture to postFXMesh, apply a small offset vec2(0.002) to its UVs when looking up the texture color and fade it out a bit by multiplying the result by 0.975

We render postFXMesh to the device screen

We swap renderTargetA with renderTargetB (ping-ponging)

For each new frame render, we will repeat steps 1 to 5. This way, the previous target framebuffer we rendered to will be used as an input to the current render and so on. You can clearly see this effect visually in the last demo – notice how as the ping-ponging progresses, more and more offset is being applied to the UVs and more and more the opacity fades out.

Applying simplex noise and mouse interaction

Now that we have implemented and can see the ping-pong technique working correctly, we can get creative and expand on it.

Instead of simply adding an offset in our fragment shader as before:

vec4 inputColor = texture2D(sampler, v_uv + vec2(0.005));

Let’s actually use simplex noise for more interesting visual result. We will also control the direction using our mouse position.

Here is our updated fragment shader:

// Pass in elapsed time since start of our program uniform float time; // Pass in normalised mouse position // (-1 to 1 horizontally and vertically) uniform vec2 mousePos; // <Insert snoise function definition from the link above here> // Calculate different offsets for x and y by using the UVs // and different time offsets to the snoise method float a = snoise(vec3(v_uv * 1.0, time * 0.1)) * 0.0032; float b = snoise(vec3(v_uv * 1.0, time * 0.1 + 100.0)) * 0.0032; // Add the snoise offset multiplied by the normalised mouse position // to the UVs vec4 inputColor = texture2D(sampler, v_uv + vec2(a, b) + mousePos * 0.005);

We also need to specify mousePos and time as inputs to our postFXMesh material shader:

const postFXMaterial = new THREE.ShaderMaterial({ uniforms: { sampler: { value: null }, time: { value: 0 }, mousePos: { value: new THREE.Vector2(0, 0) } }, // ... })

Finally let’s make sure we attach a mousemove event listener to our page and pass the updated normalised mouse coordinates from Javascript to our GLSL fragment shader:

// ... initialisation step // Attach mousemove event listener document.addEventListener('mousemove', onMouseMove) function onMouseMove (e) { // Normalise horizontal mouse pos from -1 to 1 const x = (e.pageX / innerWidth) * 2 - 1 // Normalise vertical mouse pos from -1 to 1 const y = (1 - e.pageY / innerHeight) * 2 - 1 // Pass normalised mouse coordinates to fragment shader postFXMesh.material.uniforms.mousePos.value.set(x, y) } // ... animation loop

With these changes in place, here is our final result. Make sure to hover around it (you might have to wait a moment for everything to load):

See the Pen Step 5: Perlin Noise and mouse interaction by Georgi Nikoloff (@gbnikolov) on CodePen.0

Conclusion

Framebuffers are a powerful tool in WebGL that allows us to greatly enhance our scenes via post-processing and achieve all kinds of cool effects. Some techniques require more then one framebuffer as we saw and it is up to us as developers to mix and match them however we need to achieve our desired visuals.

I encourage you to experiment with the provided examples, try to render more elements, alternate the “ABC” text color between each renderTargetA and renderTargetB swap to achieve different color mixing, etc.

In the first demo, you can see a specific example of how this typography effect could be used and the second demo is a playground for you to try some different settings (just open the controls in the top right corner).

Further readings:

How to use post-processing in threejs

Filmic effects in WebGL

Threejs GPGPU flock simulation

The post Creating a Typography Motion Trail Effect with Three.js appeared first on Codrops.

via Codrops https://ift.tt/3BshHiM

0 notes

Text

How to Scrape Websites with Node.js and Cheerio

There might be times when a website has data you want to analyze but the site doesn't expose an API for accessing those data.

To get the data, you'll have to resort to web scraping.

In this article, I'll go over how to scrape websites with Node.js and Cheerio.

Before we start, you should be aware that there are some legal and ethical issues you should consider before scraping a site. It's your responsibility to make sure that it's okay to scrape a site before doing so.

The sites used in the examples throughout this article all allow scraping, so feel free to follow along.

Prerequisites

Here are some things you'll need for this tutorial:

You need to have Node.js installed. If you don't have Node, just make sure you download it for your system from the Node.js downloads page

You need to have a text editor like VSCode or Atom installed on your machine

You should have at least a basic understanding of JavaScript, Node.js, and the Document Object Model (DOM). But you can still follow along even if you are a total beginner with these technologies. Feel free to ask questions on the freeCodeCamp forum if you get stuck

What is Web Scraping?

Web scraping is the process of extracting data from a web page. Though you can do web scraping manually, the term usually refers to automated data extraction from websites - Wikipedia.

What is Cheerio?

Cheerio is a tool for parsing HTML and XML in Node.js, and is very popular with over 23k stars on GitHub.

It is fast, flexible, and easy to use. Since it implements a subset of JQuery, it's easy to start using Cheerio if you're already familiar with JQuery.

According to the documentation, Cheerio parses markup and provides an API for manipulating the resulting data structure but does not interpret the result like a web browser.

The major difference between cheerio and a web browser is that cheerio does not produce visual rendering, load CSS, load external resources or execute JavaScript. It simply parses markup and provides an API for manipulating the resulting data structure. That explains why it is also very fast - cheerio documentation.

If you want to use cheerio for scraping a web page, you need to first fetch the markup using packages like axios or node-fetch among others.

How to Scrape a Web Page in Node Using Cheerio

In this section, you will learn how to scrape a web page using cheerio. It is important to point out that before scraping a website, make sure you have permission to do so – or you might find yourself violating terms of service, breaching copyright, or violating privacy.

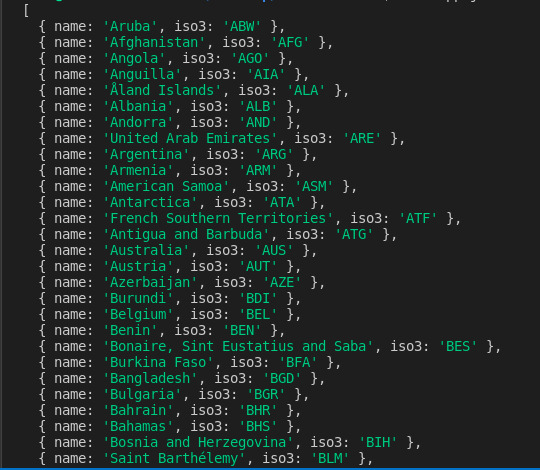

In this example, we will scrape the ISO 3166-1 alpha-3 codes for all countries and other jurisdictions as listed on this Wikipedia page. It is under the Current codes section of the ISO 3166-1 alpha-3 page.

This is what the list of countries/jurisdictions and their corresponding codes look like:

You can follow the steps below to scrape the data in the above list.

Step 1 - Create a Working Directory

In this step, you will create a directory for your project by running the command below on the terminal. The command will create a directory called learn-cheerio. You can give it a different name if you wish.

mkdir learn-cheerio

You should be able to see a folder named learn-cheerio created after successfully running the above command.

In the next step, you will open the directory you have just created in your favorite text editor and initialize the project.

Step 2 - Initialize the Project

In this step, you will navigate to your project directory and initialize the project. Open the directory you created in the previous step in your favorite text editor and initialize the project by running the command below.

npm init -y

Successfully running the above command will create a package.json file at the root of your project directory.

In the next step, you will install project dependencies.

Step 3 - Install Dependencies

In this step, you will install project dependencies by running the command below. This will take a couple of minutes, so just be patient.

npm i axios cheerio pretty

Successfully running the above command will register three dependencies in the package.json file under the dependencies field. The first dependency is axios, the second is cheerio, and the third is pretty.

axios is a very popular http client which works in node and in the browser. We need it because cheerio is a markup parser.

For cheerio to parse the markup and scrape the data you need, we need to use axios for fetching the markup from the website. You can use another HTTP client to fetch the markup if you wish. It doesn't necessarily have to be axios.

pretty is npm package for beautifying the markup so that it is readable when printed on the terminal.

In the next section, you will inspect the markup you will scrape data from.

Step 4 - Inspect the Web Page You Want to Scrape

Before you scrape data from a web page, it is very important to understand the HTML structure of the page.

In this step, you will inspect the HTML structure of the web page you are going to scrape data from.

Navigate to ISO 3166-1 alpha-3 codes page on Wikipedia. Under the "Current codes" section, there is a list of countries and their corresponding codes. You can open the DevTools by pressing the key combination CTRL + SHIFT + I on chrome or right-click and then select "Inspect" option.

This is what the list looks like for me in chrome DevTools:

In the next section, you will write code for scraping the web page.

Step 5 - Write the Code to Scrape the Data

In this section, you will write code for scraping the data we are interested in. Start by running the command below which will create the app.js file.

touch app.js

Successfully running the above command will create an app.js file at the root of the project directory.

Like any other Node package, you must first require axios, cheerio, and pretty before you start using them. You can do so by adding the code below at the top of the app.js file you have just created.

const axios = require("axios"); const cheerio = require("cheerio"); const pretty = require("pretty");

Before we write code for scraping our data, we need to learn the basics of cheerio. We'll parse the markup below and try manipulating the resulting data structure. This will help us learn cheerio syntax and its most common methods.

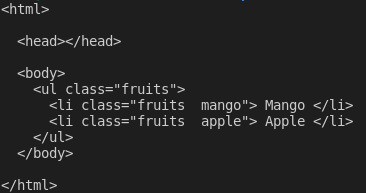

The markup below is the ul element containing our li elements.

const markup = ` <ul class="fruits"> <li class="fruits__mango"> Mango </li> <li class="fruits__apple"> Apple </li> </ul> `;

Add the above variable declaration to the app.js file

How to Load Markup in Cheerio

You can load markup in cheerio using the cheerio.load method. The method takes the markup as an argument. It also takes two more optional arguments. You can read more about them in the documentation if you are interested.

Below, we are passing the first and the only required argument and storing the returned value in the $ variable. We are using the $ variable because of cheerio's similarity to Jquery. You can use a different variable name if you wish.

Add the code below to your app.js file:

const $ = cheerio.load(markup); console.log(pretty($.html()));

If you now execute the code in your app.js file by running the command node app.js on the terminal, you should be able to see the markup on the terminal. This is what I see on my terminal:

How to Select an Element in Cheerio

Cheerio supports most of the common CSS selectors such as the class, id, and element selectors among others. In the code below, we are selecting the element with class fruits__mango and then logging the selected element to the console. Add the code below to your app.js file.

const mango = $(".fruits__mango"); console.log(mango.html()); // Mango

The above lines of code will log the text Mango on the terminal if you execute app.js using the command node app.js.

How to Get the Attribute of an Element in Cheerio

You can also select an element and get a specific attribute such as the class, id, or all the attributes and their corresponding values.

Add the code below to your app.js file:

const apple = $(".fruits__apple"); console.log(apple.attr("class")); //fruits__apple

The above code will log fruits__apple on the terminal. fruits__apple is the class of the selected element.

How to Loop Through a List of Elements in Cheerio

Cheerio provides the .each method for looping through several selected elements.

Below, we are selecting all the li elements and looping through them using the .each method. We log the text content of each list item on the terminal.

Add the code below to your app.js file.

const listItems = $("li"); console.log(listItems.length); // 2 listItems.each(function (idx, el) { console.log($(el).text()); }); // Mango // Apple

The above code will log 2, which is the length of the list items, and the text Mango and Apple on the terminal after executing the code in app.js.

How to Append or Prepend an Element to a Markup in Cheerio

Cheerio provides a method for appending or prepending an element to a markup.

The append method will add the element passed as an argument after the last child of the selected element. On the other hand, prepend will add the passed element before the first child of the selected element.

Add the code below to your app.js file:

const ul = $("ul"); ul.append("<li>Banana</li>"); ul.prepend("<li>Pineapple</li>"); console.log(pretty($.html()));

After appending and prepending elements to the markup, this is what I see when I log $.html() on the terminal:

Those are the basics of cheerio that can get you started with web scraping.

To scrape the data we described at the beginning of this article from Wikipedia, copy and paste the code below in the app.js file:

// Loading the dependencies. We don't need pretty // because we shall not log html to the terminal const axios = require("axios"); const cheerio = require("cheerio"); const fs = require("fs"); // URL of the page we want to scrape const url = "https://en.wikipedia.org/wiki/ISO_3166-1_alpha-3"; // Async function which scrapes the data async function scrapeData() { try { // Fetch HTML of the page we want to scrape const { data } = await axios.get(url); // Load HTML we fetched in the previous line const $ = cheerio.load(data); // Select all the list items in plainlist class const listItems = $(".plainlist ul li"); // Stores data for all countries const countries = []; // Use .each method to loop through the li we selected listItems.each((idx, el) => { // Object holding data for each country/jurisdiction const country = { name: "", iso3: "" }; // Select the text content of a and span elements // Store the textcontent in the above object country.name = $(el).children("a").text(); country.iso3 = $(el).children("span").text(); // Populate countries array with country data countries.push(country); }); // Logs countries array to the console console.dir(countries); // Write countries array in countries.json file fs.writeFile("coutries.json", JSON.stringify(countries, null, 2), (err) => { if (err) { console.error(err); return; } console.log("Successfully written data to file"); }); } catch (err) { console.error(err); } } // Invoke the above function scrapeData();

Do you understand what is happening by reading the code? If not, I'll go into some detail now. I have also made comments on each line of code to help you understand.

In the above code, we require all the dependencies at the top of the app.js file and then we declared the scrapeData function. Inside the function, the markup is fetched using axios. The fetched HTML of the page we need to scrape is then loaded in cheerio.

The list of countries/jurisdictions and their corresponding iso3 codes are nested in a div element with a class of plainlist. The li elements are selected and then we loop through them using the .each method. The data for each country is scraped and stored in an array.

After running the code above using the command node app.js, the scraped data is written to the countries.json file and printed on the terminal. This is part of what I see on my terminal:

Conclusion

Thank you for reading this article and reaching the end! We have covered the basics of web scraping using cheerio. You can head over to the cheerio documentation if you want to dive deeper and fully understand how it works.

Feel free to ask questions on the freeCodeCamp forum if there is anything you don't understand in this article.

Finally, remember to consider the ethical concerns as you learn web scraping.

If you read this far, tweet to the author to show them you care.

0 notes

Text

Beginner JavaScript Notes

Wes has a heck of a set of “notes” for learning JavaScript. It’s organized like a curriculum, meaning if you teach JavaScript, you could do a lot worse. It’s actually more like 85 really fleshed-out blog posts organized into sections and easily navigable. If you want to be walked through it via video, then buy the course. Smart.

If you’re looking for other curriculum for JavaScript, your best bets are:

Frontend Masters bootcamp (free) and other great courses (paid)

MDN Guides

Vets Who Code have an open source curriculum getting into JavaScript by Week 6.

Like any other learning experience in life, the best way to learn is multiple angles. If HTML & CSS are more your target, we have a bunch of suggestions there.

Direct Link to Article — Permalink

The post Beginner JavaScript Notes appeared first on CSS-Tricks. You can support CSS-Tricks by being an MVP Supporter.

via CSS-Tricks https://ift.tt/3uK7Foo

0 notes

Text

Microservices Architecture – Explained in Plain English

Over the last few years, microservices have gone from an overhyped buzzword to something you should understand as a software engineer.

According to an O'Reilly developer survey in 2020:

61% of companies have been using microservices in the last year

29% say at least half of their company systems are built using microservices

74% say their teams own the build/test/deploy phases of their applications

These numbers will only continue to increase over time as the ecosystem around microservices matures and makes adoption even easier.

This doesn't mean you need to be an expert on microservices to get a job, but it is definitely a bonus to at least understand the basic fundamentals.

The truth is, microservices aren't that hard to understand when you boil it down to the basics. The biggest problem is that most of the resources available are written to impress readers instead of actually educating them.

Another reason is that there isn’t even a true concrete definition about what a microservice is. The result is that there are tons of overlapping definitions and jargon which leads to confusion for people trying to learn about microservices.

In this article I will cut through all the chaff and focus on the core concepts of what microservices actually are. I'll use a variety of real world examples and metaphors to make abstract concepts and ideas easier to understand.

Here's what we'll cover:

Brief history of software design

Benefits and downsides of monoliths

Benefits and downsides of microservices

4 Minute Microservice Summary

If you prefer a quick introduction to microservices, you can watch this video first:

youtube

How to Understand Microservices with an Analogy of Starting Your Own Business

Let's say you are a software engineer and decide to start freelancing to earn some money. At the beginning you have a few clients and things go smoothly. You spend most of your time writing code and clients are happy.

But over time you start to slow down as the business grows. You spend more and more of your time doing customer service, answering emails, making minor changes for past customers, and other tasks that don't move the needle for you in terms of revenue.

You realize that you aren't optimizing your time as a software engineer so you hire a dedicated employee to handle customer service.

As you continue to grow you add more employees with specialized skills. You hire a marketer to focus on attracting new customers. You add project managers, more software engineers, and eventually an HR department to help with all these employees.

This was all necessary for your business to grow beyond what you could do by yourself as a single person, but there are of course growing pains.

Sometimes there are miscommunications between teams or departments and clients get upset when details slip through the cracks. There is the direct cost of having to pay employee salaries, internal rivalries between teams, and numerous other issues that arise when a company grows larger.

This example is somewhat representative of how a software company might move from a monolith to a microservice type architecture. What starts out with one person doing all the work gradually becomes a collection of specialized teams working together to achieve a common goal for the company.

This is very similar to how tech companies with monoliths have migrated to microservice architectures. While these examples aren't a perfect 1-1 match for microservices, the general problems are the same:

Scaling – Ideally you want to be able to quickly hire new employees and linearly scale how productive your company is.

Communication - Adding more employees adds the overhead of needing to coordinate and communicate across the organization. There are numerous strategies that companies try to use to make this efficient, especially in this era of remote work.

Specialization - Allowing certain groups in the organization to have autonomy to solve problems the most efficient way possible rather than trying to enforce a standard protocol for all situations. Certain customers might have different needs than others, so it makes sense to allow teams to have some flexibility in how they handle things.

How to Go from a Monolith to Microservices

To understand the present it helps to understand the past. Traditionally, software was designed in a monolithic style, and everything ran together as a single application. Like everything else in life, there are some pros and cons to this style of application.

Monoliths aren't inherently bad – and many microservice advocates actually recommend starting out with a monolith and sticking with it until you start running into problems. You can then break your monolith into microservices naturally over time.

Advantages of a Monolith Architecture

Faster development time initially

With a small team, development speed can be extremely fast when you're just starting off.

This is because the project is small, everybody understands the entire app, and things move smoothly. The members of the team know exactly how everything works together and can rapidly implement new features.

Simple deployment

Because monoliths work as a single unit, things like testing and logging are fairly simple. It's also easier to build and deploy a single monolith compared to a bunch of separate microservices.

Disadvantages of a Monolith Architecture

Despite the early benefits of monoliths, as companies grow they often encounter several problems on organizational and technical levels as a result of their monolithic application.

Tight-coupling of modules

Most companies with monolithic applications try to logically break the monolith into functional modules by use case to keep things organized. Think things like Authentication, Comments, Users, and Blog posts.

The problem is that this requires extreme engineering discipline to maintain long term. Established rules often get thrown out the window when a deadline approaches. This results in shortcuts being taken during a crunch and tangled interconnected code that accumulates as technical debt over time.

Real world example - Trying to stay disciplined with monoliths is a lot like sticking to an exercise routine or diet. Many people get excited and can stay disciplined with their diet for a few weeks, but eventually life gets in the way and you revert back to your normal routine. Trying to enforce loose coupling with monoliths is like that – there's just too much temptation to cut corners when you get in a time crunch.

Onboarding new hires becomes hard