AI and Data Privacy in the Insurance Industry: What You Need to Know

The insurance industry is no stranger to the requirements and challenges that come with data privacy and usage. By nature, insurers handle large amounts of Personal Information (PI), including names, phone numbers, Social Security numbers, financial information, and health information. Because insurance companies use so many categories of PI, they must take deliberate measures to protect individuals’ privacy.

Further, in recent months, landmark cases of privacy violations and AI misuse affecting insurance pricing and claims have alarmed the industry. Embracing new technology like AI is clearly necessary to stay profitable and competitive going forward, but it raises important data privacy questions. Let’s look at three main considerations for those in the insurance industry: recent landmark cases, applicable regulations, and AI governance.

Recent noteworthy cases of privacy violations and AI misuse

One important way to understand the actions of enforcement agencies and anticipate changes in the regulatory landscape is to look at similar, noteworthy enforcement cases. In recent months, two cases have stood out.

First is the case of General Motors (G.M.), the American automaker. An investigation by journalist Kashmir Hill found that G.M. vehicles were collecting data about their drivers without the drivers’ knowledge and sharing it with data brokers such as LexisNexis, whose “Risk Solutions” division caters to the auto insurance industry and keeps tabs on car accidents and tickets. The data G.M. collected and shared included detailed driving habits that influenced customers’ insurance premiums. When questioned, G.M. confirmed that certain information was shared with LexisNexis and other data brokers.

Another significant case involves health insurance giant Cigna, which reportedly used a computer algorithm to reject patient claims en masse. An investigation found that claims handled this way received an average of just 1.2 seconds of review each, and that over 300,000 payment claims were rejected over a two-month period in 2022. A class-action lawsuit was filed in federal court in Sacramento, California.

Applicable Regulations and Guidelines

The Gramm-Leach-Bliley Act (GLBA) is a U.S. federal law aimed at reforming and modernizing the financial services industry. One of its key objectives is consumer protection. It requires financial institutions that offer consumers loans, financial or investment advice, and/or insurance to fully explain their information-sharing practices to their customers. Such institutions must develop privacy policies and give their customers notice of them at least annually.

The ‘Financial Privacy Rule’ under this law requires financial institutions to give customers and consumers the right to opt out of having their information shared with non-affiliated third parties, before any such sharing takes place. Further, financial institutions are required to develop and maintain appropriate data security measures.
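The opt-out requirement above works like a gate that runs before any disclosure. The Python sketch below shows one way an institution might encode it. The `Customer` and `Recipient` types, their fields, and the 30-day waiting period are assumptions made for illustration; the Privacy Rule asks for a “reasonable opportunity” to opt out and contains exceptions (for example, for service providers) that this sketch does not model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumed waiting period between delivering the opt-out notice and sharing.
OPT_OUT_WINDOW = timedelta(days=30)


@dataclass
class Customer:
    customer_id: str
    notice_sent_on: Optional[date]  # date the privacy/opt-out notice was delivered
    opted_out: bool = False


@dataclass
class Recipient:
    name: str
    affiliated: bool  # True if the recipient is an affiliate of the institution


def may_share(customer: Customer, recipient: Recipient, today: date) -> bool:
    """Simplified check before sharing nonpublic personal information."""
    if recipient.affiliated:
        # This sketch models only the non-affiliated third-party opt-out,
        # so affiliate sharing passes through unchecked here.
        return True
    if customer.opted_out:
        return False
    if customer.notice_sent_on is None:
        # Without a notice, the customer never had a chance to opt out.
        return False
    # Share only once the customer has had time to exercise the opt-out.
    return today - customer.notice_sent_on >= OPT_OUT_WINDOW


if __name__ == "__main__":
    customer = Customer("c-001", notice_sent_on=date(2024, 1, 1))
    broker = Recipient("Example Data Broker", affiliated=False)
    print(may_share(customer, broker, today=date(2024, 1, 15)))  # window still open
    print(may_share(customer, broker, today=date(2024, 2, 1)))   # 31 days have passed
```

Checking the opt-out flag before the waiting period means a customer who opts out early is never shared, regardless of how much time has passed.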

This law also prohibits pretexting: tricking or manipulating an individual into providing non-public information. Under the GLBA, a person may not obtain, or attempt to obtain, customer information about another person by making a false or fictitious statement or representation to an officer or employee of a financial institution. The GLBA also prohibits a person from knowingly using forged, counterfeit, or fraudulently obtained documents to obtain customer information.

National Association of Insurance Commissioners (NAIC) Model Bulletin: Use of Artificial Intelligence Systems by Insurers

The NAIC adopted the bulletin in December 2023 as an initial regulatory effort to understand and gain insight into AI. It sets out guidelines covering governance, risk management and internal controls, and controls on the acquisition and/or use of third-party AI systems and data. Under the bulletin, insurers are expected to develop and maintain a written program for the responsible use of AI systems. At the time of writing, seven states had adopted it (Alaska, Connecticut, New Hampshire, Illinois, Vermont, Nevada, and Rhode Island), and others are expected to follow suit.

Notably, the NAIC addressed insurers’ use of AI in its 2024 Strategic Priorities, which include adopting the Model Bulletin, proposing a framework for monitoring third-party data and predictive models, completing the development of the Cybersecurity Event Response Plan, and enhancing consumer data privacy through the Privacy Protections Working Group.

State privacy laws and financial institutions

In the U.S., there are over 15 individual state privacy laws. Some were only recently enacted, some go into effect in the next two years, and others, like the California Consumer Privacy Act (CCPA) and the Virginia Consumer Data Protection Act (VCDPA), are already in effect. This is where some confusion exists. Most state privacy laws, such as those of Virginia, Connecticut, Utah, Tennessee, Montana, Florida, Texas, Iowa, and Indiana, provide entity-level exemptions for financial institutions. This means that, as regulated entities, these businesses fall outside the scope of these state laws. In other words, entities regulated by the GLBA are exempt from the state laws listed above.

Some states, like California and Oregon, instead provide data-level exemptions for consumer financial data regulated by the GLBA. For example, under the CCPA, Personal Information (PI) that is not subject to the GLBA falls within the scope of the CCPA. Further, financial institutions are not exempt from the CCPA’s private right of action for data breaches.

As for the Oregon Consumer Privacy Act (OCPA), only ‘financial institutions’ as defined under §706.008 of the Oregon Revised Statutes (ORS) receive a full exemption. This definition of a ‘financial institution’ is narrower than the GLBA’s. This means that an organization regulated by the GLBA, which would be fully exempt in most other states, may still fall within the scope of the OCPA. We can expect other states with upcoming privacy laws to take their own approaches to regulating financial institutions’ data.
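The three exemption patterns described above (entity-level, data-level, and Oregon’s narrower entity-level exemption) can be expressed as a small decision function. This is a simplified sketch: the state table covers only a few of the states mentioned, the parameter names are hypothetical, and it encodes only the patterns summarized here rather than each statute’s exact text.

```python
from enum import Enum


class Exemption(Enum):
    ENTITY_LEVEL = "entity"          # GLBA-regulated entities fall outside the law
    DATA_LEVEL = "data"              # only GLBA-covered data falls outside the law
    NARROW_ENTITY = "narrow_entity"  # entity exemption limited to a narrower definition


# Partial, illustrative table built from the states discussed above.
STATE_EXEMPTIONS = {
    "VA": Exemption.ENTITY_LEVEL,
    "CT": Exemption.ENTITY_LEVEL,
    "TX": Exemption.ENTITY_LEVEL,
    "CA": Exemption.DATA_LEVEL,  # the CCPA breach private right of action still applies
    "OR": Exemption.NARROW_ENTITY,
}


def in_scope(state: str, glba_regulated: bool,
             ors_706_008_institution: bool, glba_covered_data: bool) -> bool:
    """Return True if a record would likely fall within the state law's scope."""
    model = STATE_EXEMPTIONS[state]
    if model is Exemption.ENTITY_LEVEL:
        return not glba_regulated
    if model is Exemption.DATA_LEVEL:
        return not glba_covered_data
    # Oregon: a full exemption only for ORS 706.008 financial institutions;
    # other organizations rely on the data-level exemption for GLBA data.
    if ors_706_008_institution:
        return False
    return not glba_covered_data


if __name__ == "__main__":
    # A GLBA-regulated company (not an ORS 706.008 institution) holding
    # marketing data that the GLBA does not cover.
    for state in ("VA", "CA", "OR"):
        print(state, in_scope(state, glba_regulated=True,
                              ors_706_008_institution=False,
                              glba_covered_data=False))  # VA False, CA True, OR True
```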

AI and Insurance

Developments in Artificial Intelligence (AI) technology have been a game changer for the insurance industry. Generative AI can ingest vast amounts of information and determine the contextual relationships between words and data points. With AI, insurers can automate claims processing and enhance fraud detection, both of which require AI models to use PI. Integrating AI brings multiple benefits, including more precise predictions, automated customer interactions, and greater overall accuracy and speed. In fact, a recent KPMG report found that insurance CEOs are actively using AI technology to modernize their organizations, increase efficiency, and streamline their processes. This includes not only claims and fraud detection but also general business uses such as HR, hiring, marketing, and sales, each of which likely relies on different models with their own types of data and PI.

However, the insurance industry’s understanding of Generative AI-related risk is still in its infancy. According to Aon’s Global Risk Management Survey, AI is likely to become a top-20 risk within the next three years. As Sandeep Dani, Senior Risk Management Leader at KPMG Canada, puts it, “The Chief Risk Officer now has one of the toughest roles, if not THE toughest role, in an insurance organization.”

In the race to maximise the benefits of AI, consumers’ data privacy cannot take a backseat, especially when it comes to PI and sensitive information. As of 2024, there is no federal AI law in the U.S., and we are only starting to see state AI laws such as the Colorado AI Act and the Utah AI Policy Act. Waiting for regulations is not an effective approach. Instead, proactive AI governance can act as a key competitive differentiator, especially in an industry like insurance where consumer trust is essential.

Here are some things to keep in mind when integrating AI:

Transparency is key: Consumers need visibility into how AI models use their data, including which models are being used, how those models use the data, and for what purposes. In insurance especially, where the outcomes of AI models have serious implications, consumers need to be kept in the loop about their data.

Taking Inventory: To ensure the accuracy and quality of outputs, it is important to take inventory of the AI systems in use and understand their training data sources, the nature of that training data, their inputs and outputs, and the other components in play. This inventory is the basis for understanding potential threats and risks.

Performing Risk Assessments: Different laws treat different activities as high-risk. For example, biometric identification and surveillance is considered high-risk under the EU AI Act, whereas the NIST AI Risk Management Framework, a voluntary framework, does not designate fixed high-risk categories. As new AI laws are introduced in the U.S., we can expect many of them to adopt a risk-based approach. This makes it important to understand the jurisdiction and the kind of data in question, then categorize and rank risks accordingly.

Regular audits and monitoring: Internal reviews should be maintained to evaluate AI systems for errors, issues, and biases in the pre-deployment stage. Regular AI audits should also check for accuracy, robustness, fairness, and compliance. Post-deployment audits and assessments are just as important to ensure that systems continue to function as required. Regular monitoring of risks and biases helps identify emerging risks and those that may have been missed previously. It is also beneficial to assign team members responsibility for overseeing risk management efforts.
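The inventory, risk-assessment, and monitoring steps above can be sketched together as a small AI governance register in Python. Everything here is an assumption for illustration: the `AISystem` fields, the 1 to 5 likelihood and impact scales, the jurisdiction table (which lists only two EU AI Act high-risk categories as examples), the hypothetical group labels, and the use of the “four-fifths” ratio, a heuristic borrowed from U.S. employment-selection guidance, as the bias threshold.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Illustrative only: activities each framework treats as high-risk. The EU AI Act
# lists biometric identification and life/health insurance pricing among high-risk
# uses; the NIST AI RMF is voluntary and does not assign fixed risk tiers.
HIGH_RISK_BY_JURISDICTION = {
    "EU": {"biometric_identification", "life_health_insurance_pricing"},
    "US-NIST-RMF": set(),
}


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory."""
    name: str
    activity: str
    training_data_sources: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    likelihood: int = 1  # 1 (rare) .. 5 (almost certain), assumed scale
    impact: int = 1      # 1 (minor) .. 5 (severe), assumed scale


def inventory_gaps(systems: list[AISystem]) -> dict[str, list[str]]:
    """Taking inventory: report which descriptive fields are still empty."""
    gaps = {}
    for s in systems:
        missing = [f for f in ("training_data_sources", "inputs", "outputs")
                   if not getattr(s, f)]
        if missing:
            gaps[s.name] = missing
    return gaps


def rank_risks(systems: list[AISystem], jurisdiction: str) -> list[tuple[str, int, bool]]:
    """Risk assessment: score = likelihood x impact. Systems whose activity is
    high-risk in the jurisdiction sort first, then by descending score."""
    high_risk = HIGH_RISK_BY_JURISDICTION.get(jurisdiction, set())
    scored = [(s.name, s.likelihood * s.impact, s.activity in high_risk) for s in systems]
    return sorted(scored, key=lambda item: (not item[2], -item[1]))


def disparity_flags(decisions: list[tuple[str, bool]],
                    threshold: float = 0.8) -> dict[str, float]:
    """Monitoring: compare each group's approval rate with the highest group's
    rate and return the groups whose ratio falls below `threshold`."""
    totals: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    rates = {g: approved[g] / totals[g] for g in totals}
    best = max(rates.values(), default=0)
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    systems = [
        AISystem("claims-triage", "claims_processing", ["claims_2019_2023"],
                 ["claim_form"], ["approve", "refer"], likelihood=4, impact=3),
        AISystem("health-pricing", "life_health_insurance_pricing",
                 likelihood=2, impact=4),
    ]
    print(inventory_gaps(systems))
    print(rank_risks(systems, "EU"))
```

Sorting legally high-risk systems first, regardless of score, reflects the point above that the jurisdiction shapes which risks must be addressed; a team could just as reasonably weight the two factors differently.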

Conclusion

People care about their data and their privacy, and for insurance consumers and customers, trust is paramount. Explainability is the term commonly used for the ability to describe what an AI system is meant to do, what outputs it is expected to produce, and how it arrives at them. Fostering explainability when governing AI helps stakeholders make informed decisions while protecting privacy, confidentiality, and security. Consumers and customers need to trust both the data collection and sharing practices and the AI systems involved. That requires transparency, so they can understand those practices, how their data is used, the AI systems involved, and how those systems reach their decisions.

About Us

Meru Data designs, implements, and maintains data strategy across several industries, based on their specific requirements. Our combination of best-in-class data mapping and automated reporting technology, along with decades of expertise in best practices around training, data management, AI governance, and law, gives Meru Data the unique advantage of being able to help insurance organizations secure, manage, and monetize their data while preserving customer trust and regulatory compliance.

Better Understand DataMaps – A Google Maps Analogy

The shift from physical maps to dynamic digital applications has transformed how we navigate the world, both physically and digitally. Paper maps, like those by Rand McNally, were essential for decades, but they had inherent limitations. Static and fixed in time, they required constant updates to remain useful. Changes to roads and terrain after printing meant extra work (and time) for drivers. Seeing the bigger picture, such as comparing multiple routes or zooming into specific locations, required several resources. Similarly, early DataMaps offered static representations of data: lists of system inventories, isolated database schemas, or sporadic architecture diagrams that provided snapshots of information without integration, interaction, or real-time updates.

With the advent of digital mapping tools like Google Maps and Apple Maps, navigation became intuitive, accessible, and dynamic. These tools combine static data, like street maps and landmarks, with real-time information like traffic conditions, construction updates, and weather. This evolution from static to dynamic mapping tools offers a direct analogy for understanding the transformation of DataMaps in organizations.

DataMaps represent the organization’s data ecosystem, detailing where data resides, how it flows between systems, and where it interacts with internal and external entities. Early DataMaps, much like paper maps, often existed as disconnected resources: a list of databases here, a flowchart there, or perhaps a spreadsheet outlining access permissions. These were useful but far from comprehensive or user-friendly. Modern DataMaps, on the other hand, integrate diverse data sources, reflect real-time updates, and provide a clear visual interface that adapts to user needs.

Consider Google Maps. Beyond navigation, it allows users to explore their surroundings, find services, and even navigate indoors. Similarly, modern DataMaps should enable stakeholders to drill down into specific data pipelines, understand connections, and monitor data integrity and security in real time.
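Drilling down into a pipeline amounts to walking a graph of systems and data flows. The sketch below models a hypothetical DataMap as an adjacency list and uses a breadth-first traversal to find every system a given source feeds, optionally restricted to one data category. The system names and categories are invented for illustration.

```python
from collections import deque
from typing import Optional

# Hypothetical DataMap: system -> list of (downstream system, data categories sent).
DATAMAP = {
    "web_form": [("crm", {"email", "phone"})],
    "crm": [("data_warehouse", {"email", "phone", "purchase_history"}),
            ("email_vendor", {"email"})],
    "data_warehouse": [("analytics_vendor", {"purchase_history"})],
    "email_vendor": [],
    "analytics_vendor": [],
}


def downstream(start: str, category: Optional[str] = None) -> list[str]:
    """Breadth-first walk listing every system reachable from `start`.
    If `category` is given, follow only flows that carry that category."""
    seen = {start}
    order: list[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for target, categories in DATAMAP.get(node, []):
            if category is not None and category not in categories:
                continue
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


if __name__ == "__main__":
    print(downstream("web_form"))                          # every system fed by the form
    print(downstream("crm", category="purchase_history"))  # where purchase data travels
```

The `seen` set keeps the walk from looping if two systems exchange data in both directions, which real data ecosystems often do.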

Building a DataMap manually is akin to creating a map of the world by hand: technically possible, but time-intensive and prone to errors. Automation revolutionizes this process, much as satellite imagery and AI have advanced digital cartography. Modern DataMaps leverage automation to ingest and reconcile data from disparate sources. This ensures accuracy, reduces manual effort, and allows organizations to focus on interpreting and acting upon insights rather than gathering basic information.

Yet automation alone isn’t enough. Just as mapping tools allow user corrections, like reporting a closed road or a new business location, DataMaps require user input for fine-tuning. This symbiotic relationship between automation and manual refinement ensures that the DataMap remains accurate, relevant, and useful across diverse use cases.
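The split between automated discovery and user refinement can be sketched as a merge step in which manual corrections always take precedence. The scanner outputs, attribute names, and the `verified_by_user` marker below are hypothetical; a real DataMap product would have its own schema and conflict rules.

```python
from typing import Any

Attributes = dict[str, Any]


def reconcile(scans: list[dict[str, Attributes]],
              corrections: dict[str, Attributes]) -> dict[str, Attributes]:
    """Merge automated scan results into one record per system, then apply
    user corrections, which always take precedence over automated findings."""
    merged: dict[str, Attributes] = {}
    for scan in scans:  # later scans overwrite earlier values for the same field
        for system, attrs in scan.items():
            merged.setdefault(system, {}).update(attrs)
    for system, fixes in corrections.items():
        merged.setdefault(system, {}).update(fixes)
        merged[system]["verified_by_user"] = True  # record that a person confirmed it
    return merged


if __name__ == "__main__":
    cloud_scan = {"bucket_a": {"location": "us-east-1", "contains_pi": False}}
    db_scan = {"crm_db": {"location": "on-prem", "contains_pi": True}}
    # A reviewer knows bucket_a holds scanned customer documents the scanner missed.
    user_fixes = {"bucket_a": {"contains_pi": True}}
    print(reconcile([cloud_scan, db_scan], user_fixes))
```

Because corrections are applied last, the next automated refresh would need to re-apply them; keeping corrections in a separate store, as here, is what makes that possible.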

Much like navigation apps, which continuously evolve to incorporate new technologies, modern DataMaps are not static tools. Once implemented, they often expand beyond their original purpose. Initially created to map data flows for compliance or operational oversight, they can quickly become invaluable for risk management, strategic planning, and even innovation.

For instance, in a healthcare organization, a DataMap might start as a tool to track patient data flows for regulatory compliance. Over time, it could evolve to optimize data sharing across departments, support research initiatives by identifying valuable datasets, or even enhance patient care through predictive analytics.

This adaptability is key. Organizations that adopt DataMaps often discover new applications, from identifying inefficiencies in data transfer to supporting AI model training by ensuring clean, well-structured data pipelines.

The success of apps like Google Maps offers key lessons for DataMaps:

Data Integration: Digital maps combine multiple data layers (like geographic, traffic, and business location data) into a cohesive user experience. Similarly, effective DataMaps must integrate diverse data sources, from cloud storage to on-premise systems, providing a unified view.

User-Friendly Interfaces: A key strength of mapping apps is their intuitive design, enabling users to navigate effortlessly. DataMaps should aim for the same simplicity, offering clear visualizations that allow stakeholders to explore data relationships without needing technical expertise.

Continuous Improvement: Mapping tools constantly evolve, adding features like real-time traffic updates or indoor navigation. DataMaps should similarly grow, incorporating new datasets, improving analytics capabilities, and adapting to organizational needs.

Just as the mapping world has expanded to include augmented reality (AR) for visualizing complex datasets in real-world environments, the future of DataMaps lies in integrating advanced technologies like AI. Unlike AR, which enhances spatial visualization, AI enables DataMaps to predict potential bottlenecks or vulnerabilities proactively. By leveraging machine learning algorithms, these tools can provide automated recommendations to optimize workflows or secure sensitive information, offering predictive and prescriptive insights beyond traditional data mapping.

As organizations increasingly rely on data to drive decisions, the demand for robust, dynamic DataMaps will only grow. These tools will not just document the data ecosystem but actively shape it, providing the insights and foresight needed to stay ahead in a competitive landscape. DataMaps provide foundational insights into how information flows within an organization, unlocking multiple applications across different tasks. They can be used to identify and address potential data bottlenecks, ensure compliance with privacy regulations like GDPR or HIPAA, optimize resource allocation, and enhance data security by pinpointing vulnerabilities. Additionally, DataMaps are invaluable for streamlining workflows in complex systems, supporting audits, and enabling better decision-making by providing a clear visual representation of interconnected data processes. This versatility demonstrates their importance across industries and operational needs.

The analogy between digital maps and DataMaps highlights a fundamental truth: tools that integrate diverse data sources, provide dynamic updates, and prioritize user experience are transformative. Just as Google Maps redefined navigation, DataMaps are redefining how organizations understand and leverage their data. By embracing automation, fostering adaptability, and prioritizing ease of use, DataMaps empower organizations to navigate their data ecosystems with clarity and confidence. In a world driven by data, the journey is just as important as the destination—and a well-designed DataMap ensures you reach it effectively.

Third-Party Governance: Ensuring hygienic vendor data handling practices

When we collect, store, and use data on a daily basis, we must comply with a number of regulatory requirements. These include, among others:

Obtaining informed consent from users,

Keeping privacy notices on websites up to date and easy to understand,

Putting appropriate security measures in place for the data collected, and

Handling DSARs efficiently and in a timely manner.

Complying with these requirements isn’t always straightforward. Most companies deal with multiple third parties (service providers, vendors, contractors, suppliers, partners, and other external entities), and we are required by law to ensure that these third parties also comply with the applicable regulatory requirements.

Governance of third parties, vendors, and service providers is a crucial component of a strong and sustainable privacy program. In August 2024, the Dutch Data Protection Authority imposed a €290 million fine on Uber for transferring personal data to a third country, the U.S., where its headquarters are located, without an appropriate transfer mechanism. Under Article 44 of the EU General Data Protection Regulation (GDPR), data controllers and processors must comply with the data transfer provisions of Chapter V when transferring personal data to a third country outside the EEA. These include Article 46, which requires controllers and processors to implement appropriate safeguards when transferring data to a country that has not received an EU adequacy decision. In the U.S., the Federal Trade Commission (FTC) brought action against General Motors (GM) and its subsidiary OnStar for collecting sensitive information and sharing it with third parties without consumers’ consent.

These are just examples of companies knowingly selling and sharing data with third parties. In other cases, third parties collect data through Software Development Kits (SDKs) and pixels embedded on company websites without the first-party company’s full and proper knowledge. Some websites are built using third-party service providers, which also collect data from website visitors without the first party’s knowledge. The AdTech ecosystem in general is complex: data changes hands among so many parties that it is difficult to understand how the data gets used and which third parties are actually involved.

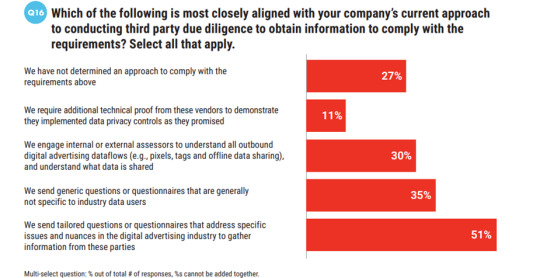

The CCPA and other state regulations require businesses to conduct due diligence on their service providers and third parties to avoid potential liability for those parties’ non-compliance. However, a recent survey report by the Interactive Advertising Bureau (IAB) found that 27% of companies had not yet finalized an approach to meeting these third-party due diligence requirements.

In an already complex and evolving legal landscape, how do we ensure that our third-party governance is adequate?

This is where DataMapping comes in. A comprehensive and effective DataMap provides clear insight into what data is collected, its source, its storage location, the security measures in place, which third parties it is shared with and how, points of contact, and security measures during data transfers. A relation map within a DataMap provides an overview of the different systems within the organisation and its third parties, showing what data passes between them, how frequently, under what security measures, and so on. All this information is key when sharing data with third parties.
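A relation map can be represented as a list of flow records. This hypothetical sketch (the `Flow` fields and example systems are assumptions) shows two questions such a map makes easy to answer: which third parties receive a given data category, and which third-party transfers lack encryption in transit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One edge in a hypothetical relation map."""
    source: str
    target: str
    data_categories: frozenset[str]
    frequency: str               # e.g. "hourly", "daily", "weekly"
    encrypted_in_transit: bool
    external: bool               # True when `target` is a third party


RELATION_MAP = [
    Flow("crm", "email_vendor", frozenset({"email"}), "daily", True, True),
    Flow("crm", "data_warehouse", frozenset({"email", "purchase_history"}),
         "hourly", True, False),
    Flow("data_warehouse", "analytics_vendor", frozenset({"purchase_history"}),
         "weekly", False, True),
]


def third_party_recipients(category: str) -> list[tuple[str, str, bool]]:
    """Third parties receiving `category`, with transfer frequency and encryption."""
    return sorted((f.target, f.frequency, f.encrypted_in_transit)
                  for f in RELATION_MAP
                  if f.external and category in f.data_categories)


def unencrypted_third_party_flows() -> list[Flow]:
    """Transfers to third parties that are not encrypted in transit."""
    return [f for f in RELATION_MAP if f.external and not f.encrypted_in_transit]


if __name__ == "__main__":
    print(third_party_recipients("purchase_history"))
    print([f.target for f in unencrypted_third_party_flows()])
```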

It’s important to be aware of the complexities of DataMapping when third parties are involved. Often, the contracts and documentation provided by third parties place much of the responsibility on the business to ensure the data is collected and managed in a privacy-compliant way. Further, when Privacy Impact Assessments (PIAs) are conducted, business owners are sometimes unable to provide accurate and complete information, as they themselves might not understand all the nuances of the data the third party collects and processes.

There is a need for automation and audits to capture detailed information about the data collected by third parties.

This information is also vital for ensuring that third parties handle Data Subject Access Requests (DSARs) and opt-out requests in a timely and efficient manner, as regulations require. Depending on the requirement, requests are sometimes passed through to service providers and processors, while in other cases we are required to disclose the third party and provide its contact information. The same vendor can fall into both cases.

When there is a clear understanding of what data has been shared with which third party, DSARs and opt-out requests can be handled effectively. Whenever an opt-out request is received or an opt-out signal is detected, the company should be able to automatically communicate the request to the third parties involved so that they honor it as well. A DSAR or opt-out request is not effectively and completely honored until the third parties involved also comply with it.
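The propagation step described above can be sketched as follows. One widely used opt-out signal is Global Privacy Control, which browsers send as the HTTP request header `Sec-GPC: 1`. The `SHARED_WITH` lookup (standing in for the DataMap’s record of which third parties received a user’s data) and the `notify` callback are hypothetical placeholders for real integrations.

```python
from typing import Callable

# Hypothetical relation-map lookup: which third parties received each user's data.
SHARED_WITH: dict[str, set[str]] = {
    "user-123": {"ad_network_x", "analytics_vendor"},
    "user-456": {"analytics_vendor"},
}


def gpc_opt_out(headers: dict[str, str]) -> bool:
    """True if the request carries a Global Privacy Control signal (`Sec-GPC: 1`).
    HTTP header names are case-insensitive, so normalize them first."""
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def propagate_opt_out(user_id: str, notify: Callable[[str, str], None]) -> list[str]:
    """Forward the opt-out to every third party that received the user's data.
    `notify` stands in for whatever channel each third party supports."""
    recipients = sorted(SHARED_WITH.get(user_id, set()))
    for party in recipients:
        notify(party, user_id)
    return recipients


if __name__ == "__main__":
    incoming_headers = {"Sec-GPC": "1", "User-Agent": "example"}
    if gpc_opt_out(incoming_headers):
        propagate_opt_out("user-123", lambda party, uid: print("notify", party, uid))
```

Returning the list of notified parties gives the business a record it can keep as evidence that the request was passed on.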

The Interactive Advertising Bureau (IAB) has provided a way to reach hundreds of third parties in the AdTech ecosystem. Companies can register with the IAB and set up the IAB Global Privacy Platform (GPP), which communicates users’ preferences to the third parties connected to the IAB.

In the case of SDKs, which are used in mobile apps and smart devices, maintaining a privacy-compliant app environment is vital. Periodically auditing SDKs is a best practice, since SDKs may change their privacy and data collection policies, especially when they are upgraded. Developers are responsible for ensuring that any SDKs they integrate are privacy compliant and for thoroughly studying the documentation provided, as the specifications for maintaining compliance are generally included there.
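A periodic SDK audit can compare what an upgraded SDK version collects against the previously audited version and the organization’s approved data types. The audit table, SDK name, and data-type labels below are hypothetical; in practice they would come from documentation review or network-traffic inspection.

```python
# Hypothetical audit results: data types observed for each (SDK, version) pair.
AUDITS: dict[tuple[str, str], set[str]] = {
    ("analytics_sdk", "4.2"): {"device_id", "app_events"},
    ("analytics_sdk", "5.0"): {"device_id", "app_events", "precise_location"},
}


def new_collection(sdk: str, old: str, new: str) -> set[str]:
    """Data types the upgraded version collects that the audited version did not."""
    return AUDITS[(sdk, new)] - AUDITS[(sdk, old)]


def review_upgrade(sdk: str, old: str, new: str, approved: set[str]) -> list[str]:
    """Newly collected data types that are not on the approved list."""
    return sorted(new_collection(sdk, old, new) - approved)


if __name__ == "__main__":
    approved = {"device_id", "app_events"}
    print(review_upgrade("analytics_sdk", "4.2", "5.0", approved))  # ['precise_location']
```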

Appropriate security measures are also necessary when data is being transferred. Privacy-enhancing technologies (PETs) can be used to anonymize or pseudonymize data so that it is less vulnerable to data breaches and bad actors during the transfer. Differential privacy, a privacy-enhancing technology used in data analytics, can also be applied. The National Institute of Standards and Technology (NIST) recently published guidelines for evaluating differential privacy guarantees.
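To illustrate the two techniques named above, here is a minimal Python sketch of keyed pseudonymization (HMAC-SHA256 from the standard library) and the Laplace mechanism, a textbook differential privacy technique for counting queries. It is a teaching sketch, not a production implementation: real deployments should rely on a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses, and pseudonymized data is generally still personal data under the GDPR.

```python
import hashlib
import hmac
import random


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with an HMAC-SHA256 token. The same input and
    key always yield the same token, so datasets can still be joined, but a
    recipient without the key cannot recompute the token from the identifier."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query. Adding or removing one person
    changes a count by at most 1 (sensitivity 1), so the noise scale is 1/epsilon."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    key = b"store-this-key-separately"
    print(pseudonymize("customer-0042", key))
    rng = random.Random(7)
    print(dp_count(1280, epsilon=0.5, rng=rng))  # 1280 plus noise of scale 2
```

Smaller values of `epsilon` add more noise and give stronger privacy. The pseudonymization key must be stored separately from the tokenized data, or the protection is lost.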

Finally, third parties and service providers need to be audited and assessed regularly. Third-party data handling processes are often overlooked in favor of other matters. However, third parties need to be audited to ensure that they are complying with their contracts and meeting their Service Level Agreements (SLAs) and Master Service Agreements (MSAs). In fact, the contracts with third parties, service providers, and vendors must themselves first be assessed and audited to ensure they meet industry standards and compliance requirements.

In an environment where companies work very closely with one another, whether to build websites, integrate SDKs, run AdTech, or provide additional tech support, the risk of facing regulatory heat for noncompliance is high. When substantial efforts are being made to ensure that our own data handling practices comply with regulations, we should also ensure that we don’t face heat for the data handling practices of the third parties we interact with. In fact, it is safe to say that a privacy program is not complete and sustainable until third-party governance is also strong. However, there is often a lack of technical talent and expertise to meet the demands of third-party governance in the complex AdTech ecosystem. As the IAB survey report found, 30% of companies require internal and external assessors to fully understand the scope of the data being shared. Sustainable solutions require deep technical knowledge and skill in multiple areas, which is often hard to find.

How AI will change AdTech and Data Privacy

Artificial Intelligence and Machine Learning models have impacted nearly every industry, and we can expect them to make a noteworthy impact on the Ad Tech industry too. In fact, a Forrester report found that more than 60% of U.S. advertising agencies are already using generative AI, while 31% are exploring use cases for the technology. Over the years, data privacy requirements have greatly affected Ad Tech and its processes. In the EU, for example, Apple and Meta are facing heat under the Digital Markets Act, with Meta accused of forcing users into a restrictive “pay or consent” model for ads on Instagram and Facebook.

Ad Tech companies now have to strike a balance between employing beneficial AI technologies and meeting data privacy compliance requirements. What does this balance look like? In this article, we take a close look at the changes AI is likely to bring to Ad Tech and how these changes might affect users’ data privacy.

How will Ad Tech benefit from AI?

At first glance, we’re seeing AI change Ad Tech in these ways:

AI can analyze vast datasets and automatically categorize users into specific groups based on their likes, interests, and online activities, enabling more relevant, higher-quality advertising.

AI and ML can help forecast customer behavior by predicting which individuals might convert, unsubscribe, or engage with the advertisements.

AI can power programmatic advertising, in which it analyzes user behavior, bids on the most effective ad placements, and serves targeted ads to relevant audiences in real time.
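The forecasting and bidding steps listed above often come together in an expected-value calculation per impression. The sketch below is a simplified illustration under stated assumptions: the predicted click and conversion rates are taken as given from some upstream model, prices are per impression rather than per thousand impressions, and the 20% bid-shading margin is arbitrary.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    name: str
    predicted_ctr: float  # assumed output of a click-prediction model
    predicted_cvr: float  # assumed P(conversion | click)
    floor_price: float    # minimum accepted bid, per impression (assumption)


def expected_value(p: Placement, value_per_conversion: float) -> float:
    """Expected value of one impression: P(click) * P(convert | click) * value."""
    return p.predicted_ctr * p.predicted_cvr * value_per_conversion


def choose_bids(placements: list[Placement], value_per_conversion: float,
                margin: float = 0.2) -> dict[str, float]:
    """Bid on placements whose shaded expected value clears the floor price.
    Bidding below expected value by `margin` leaves room for profit."""
    bids: dict[str, float] = {}
    for p in placements:
        bid = expected_value(p, value_per_conversion) * (1 - margin)
        if bid >= p.floor_price:
            bids[p.name] = round(bid, 4)
    return bids


if __name__ == "__main__":
    inventory = [
        Placement("news_top_banner", 0.02, 0.05, 0.01),
        Placement("blog_sidebar", 0.005, 0.02, 0.01),
    ]
    print(choose_bids(inventory, value_per_conversion=40.0))
```

Here the banner’s expected value is 0.02 × 0.05 × 40 = 0.04 per impression, so it receives a shaded bid, while the sidebar’s 0.004 falls below the floor and is skipped.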

Overall, one of the biggest impacts AI is expected to have on Ad Tech is personalization, which is shifting from demographic-based insights to individually tailored interactions based on searches, preferences, and context. Generative AI solutions can deliver hyper-personalized ad experiences at scale, whether in email marketing or website interactions. Further, predictive analytics can anticipate customer needs and place content, offers, and experiences accordingly.

But while AI is increasing the effectiveness of personalized and targeted advertising, it will also affect data privacy. So far, however, we expect AI’s influence on data privacy in the Ad Tech industry to be largely positive.

Will AI replace cookies?

AI will most likely replace cookies. One way this will happen is through contextual advertising, in which ads are placed on websites based on the content of the webpages rather than the activity of users. Another is probabilistic and cohort-based modelling, which essentially means using AI to predict user behaviour.
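Contextual targeting can be illustrated with a deliberately simple keyword-overlap matcher that reads only the page text. The ad inventory and keyword sets are hypothetical, and production systems typically use content classifiers and topic taxonomies rather than raw keyword matching.

```python
import re

# Hypothetical ad inventory keyed by the topics each ad is relevant to.
ADS = {
    "running_shoes": {"marathon", "running", "training", "shoes"},
    "mortgage_offer": {"mortgage", "home", "interest", "rates"},
    "travel_insurance": {"travel", "flight", "trip", "insurance"},
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def contextual_match(page_text: str, min_overlap: int = 2) -> list[str]:
    """Rank ads by how many of their topic keywords appear on the page.
    Only the page's content is used; no user identifier or history is read."""
    words = tokenize(page_text)
    scored = [(len(topics & words), ad) for ad, topics in ADS.items()]
    return [ad for score, ad in sorted(scored, key=lambda s: (-s[0], s[1]))
            if score >= min_overlap]


if __name__ == "__main__":
    page = "Ten training tips for your first marathon, plus how to pick running shoes."
    print(contextual_match(page))  # ['running_shoes']
```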

However, this approach still requires data to be fed into the AI and ML models. Where does this data come from? How can we ensure that it is accurate? Have users consented to their data being used by AI and ML models? These concerns call into question the effectiveness of completely replacing cookies.

A new type of cookie

The good news is that AI can be used to power a new type of cookie. Agentic cookies act as intermediaries between users and websites. They observe how users act online, including their data sharing preferences, location sharing preferences, and interests. These agentic cookies then act as a liaison between the user and the website, negotiating what data gets shared based on the user’s past behaviour. What differentiates agentic cookies from traditional ones is that they continually learn users’ behaviours and preferences. This also means that users won’t constantly have to deal with pop-up cookie banners: having learned the user’s behaviour, the cookie will make the choice on their behalf.

The cookie’s ability to constantly learn about the user’s behaviour helps it distinguish between normal activity and potential data privacy threats. For example, if a user visits a frequently used shopping website, the cookie will ensure the user gets signed in without hassle. However, if the user clicks a link to a website they haven’t visited before, the cookie will allow that website’s consent preference banner to be shown to the user.
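Agentic cookies are an emerging concept rather than a standardized technology, so the following is only a hypothetical sketch of the decision logic described above. The `ConsentAgent` class, the three-visit threshold for a “frequently used” site, and the returned action strings are all assumptions.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class ConsentAgent:
    """Hypothetical 'agentic cookie' that remembers a user's choices per site."""
    familiar_after: int = 3  # visits before a site counts as frequently used (assumed)
    visits: dict[str, int] = field(default_factory=dict)
    preferences: dict[str, str] = field(default_factory=dict)  # host -> choice

    def record_choice(self, url: str, choice: str) -> None:
        """Remember the choice the user made on a site's own consent banner."""
        self.preferences[urlparse(url).netloc] = choice

    def on_visit(self, url: str) -> str:
        """Decide whether to apply a stored preference or show the site's banner."""
        host = urlparse(url).netloc
        self.visits[host] = self.visits.get(host, 0) + 1
        if host in self.preferences and self.visits[host] >= self.familiar_after:
            # Familiar site with a known preference: apply it silently.
            return f"apply:{self.preferences[host]}"
        # New or rarely visited site: let its consent banner be shown.
        return "show_banner"


if __name__ == "__main__":
    agent = ConsentAgent()
    shop = "https://shop.example.com/cart"
    print(agent.on_visit(shop))      # first visit: banner shown
    agent.record_choice(shop, "reject_ads")
    for _ in range(2):
        print(agent.on_visit(shop))  # banner on visit 2, preference applied on visit 3
```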

This type of cookie also benefits advertisers: while they will receive less data, the data they do receive comes directly from the user (zero-party data) and will be far more usable than first- or third-party data. Further, this data will be up to date, so advertisers won’t have to spend time combing through irrelevant datasets.

AI can also be used to follow competitors’ ad campaigns and pull insights from their processes. AI techniques can also predict which ad position would generate the best return per placement, reducing advertisers’ spending. AI can also provide key insights such as the performance of prior campaigns, current trends, and changes in supply and demand.

Another interesting way in which AI is changing Ad Tech is by testing the creative effectiveness of ads. The creative testing company DAIVID uses an AI-driven platform, trained on millions of human responses to ads, that lets advertisers understand the emotional impact of their ads and predict their business outcomes.

Employing AI, of course, comes with its challenges. Ad Tech companies are collaborating to deal with the rising cost of tech innovation and privacy compliance, and with the demand for integrated, compliant solutions. The digital media and marketing ecosystem saw a 13% year-over-year increase in overall mergers and acquisitions. For example, The Trade Desk recently acquired Sincera, a data management company.

It should be noted that, however effective and beneficial these AI technologies may be, it will take a while before most of them are fully functional and ready to use. In the meantime, companies need to focus on maintaining hygienic data collection, processing, and selling practices. Cookie banners must comply with privacy regulations and be free of dark patterns. AI will undoubtedly revolutionize the Ad Tech industry, which means lawmakers will be quick to ensure that data privacy is not compromised as a result. While companies adopt newer, more efficient technologies, they also need to adopt strong, sustainable privacy programs that ensure compliance.
