Software Testing | Quality Assurance Testing | Automation & Manual Testing

Java Exception Handling Cheat Sheet

Quality assurance testing is not the final development phase.

Quality assurance testing is not the last phase of the software development process. In an agile development environment it is one step in an ongoing cycle: testing takes place in every iteration, before the components built in that iteration are released. Software quality assurance testing should therefore be a regular, ongoing part of everyday development.

How to handle iFrames in Selenium WebDriver?

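In WebDriver, use driver.switchTo().defaultContent(); to leave a frame and return to the top-level page. To move up only one level of nesting, use driver.switchTo().parentFrame(); instead.

Because switchTo().frame() looks for the frame relative to the document you are currently in, first return to the main page, then switch into the desired frame. frame() accepts the frame's index, its name or id, or a WebElement that locates it.

driver.switchTo().defaultContent(); // back to the top-level page, outside all frames

driver.switchTo().frame("your frame id comes here"); // switch into the desired frame by name or id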

What is a timer in JMeter and what are the different types?

By default, a JMeter thread sends requests continuously, without any pause. Timers are used to add a pause between requests.

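Commonly used timers include:

Constant Timer - pauses each thread for the same fixed time before each request

Gaussian Random Timer - pauses for a random time that varies around a constant offset following a normal (Gaussian) distribution

Synchronizing Timer - holds threads until a set number of them are waiting, then releases them together to produce simultaneous requests

Uniform Random Timer - pauses for a constant offset plus a random time with a uniform distribution

Other timers, such as the Constant Throughput Timer and the Poisson Random Timer, are also available.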
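The pause added by the constant and random timers can be sketched as a fixed offset plus an optional random component. The Java below is a minimal, simplified model, not JMeter's own code: the class, method and parameter names are hypothetical, and clamping a negative Gaussian result to zero is an assumption about how such values are handled. The Synchronizing Timer is left out because it holds threads until a set number have arrived rather than computing a pause; java.util.concurrent.CyclicBarrier is the closest standard-library analogue.

```java
import java.util.Random;

// Simplified model of the delay each timer type adds before a request.
// Names (offsetMs, rangeMs, deviationMs) are illustrative, not JMeter's API.
class TimerSketch {
    // Constant Timer: the same pause every time.
    static long constantDelay(long delayMs) {
        return delayMs;
    }

    // Uniform Random Timer: fixed offset plus a value drawn uniformly from [0, rangeMs).
    static long uniformRandomDelay(long offsetMs, long rangeMs, Random rnd) {
        return offsetMs + (long) (rnd.nextDouble() * rangeMs);
    }

    // Gaussian Random Timer: fixed offset plus a normally distributed deviation.
    // Assumption: negative results are clamped to zero so the pause is never negative.
    static long gaussianRandomDelay(long offsetMs, double deviationMs, Random rnd) {
        long delay = offsetMs + Math.round(rnd.nextGaussian() * deviationMs);
        return Math.max(0L, delay);
    }

    public static void main(String[] args) {
        Random rnd = new Random(42); // fixed seed so runs are repeatable
        for (int i = 0; i < 3; i++) {
            System.out.printf("constant=%d uniform=%d gaussian=%d%n",
                    constantDelay(300),
                    uniformRandomDelay(100, 200, rnd),
                    gaussianRandomDelay(300, 100.0, rnd));
        }
    }
}
```

With an offset of 100 ms and a range of 200 ms, the uniform delay always falls between 100 and 299 ms, while the Gaussian delay clusters around its offset.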

What is the execution order of test elements in a JMeter test plan?

Test plan elements are executed in the following order:

Configuration elements.

Pre-processors.

Timers.

Samplers.

Post-processors.

Assertions.

Listeners.
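Because this order depends on the element type rather than on where the element sits in the test plan tree, it can be modeled as an enum whose declaration order matches the list above. The sketch below is a hypothetical illustration, not JMeter code; it ignores JMeter's scoping rules and the fact that post-processors, assertions and listeners are skipped when a sampler produces no result.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Element kinds declared in the order JMeter applies them around a sampler.
enum ElementKind {
    CONFIG_ELEMENT, PRE_PROCESSOR, TIMER, SAMPLER, POST_PROCESSOR, ASSERTION, LISTENER
}

class ExecutionOrderSketch {
    // Returns the given kinds sorted into execution order.
    static List<ElementKind> inExecutionOrder(List<ElementKind> kinds) {
        List<ElementKind> sorted = new ArrayList<>(kinds);
        Collections.sort(sorted); // enums compare by declaration order
        return sorted;
    }

    public static void main(String[] args) {
        // Elements in the order they might appear in a hypothetical test plan tree.
        List<ElementKind> planTree = Arrays.asList(
                ElementKind.LISTENER, ElementKind.ASSERTION, ElementKind.SAMPLER,
                ElementKind.TIMER, ElementKind.CONFIG_ELEMENT);
        System.out.println(inExecutionOrder(planTree));
    }
}
```

Running main prints [CONFIG_ELEMENT, TIMER, SAMPLER, ASSERTION, LISTENER]: the timer still runs before the sampler even though it was placed after it in the tree.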

What is the difference between driver.close() and driver.quit()?

Be very clear on the difference between these two methods.

close(): WebDriver's close() method closes the browser window that currently has WebDriver's focus. If that is the last open window, the browser is shut down as well. It takes no parameters and returns no value.

quit(): Unlike close(), quit() closes every window opened by the WebDriver session and ends the session. Like close(), it takes no parameters and returns no value.

Are test plans built with JMeter OS-dependent?

No. Test plans are saved in XML format (.jmx files), so they are not tied to any particular OS and can be run on any OS where JMeter runs.

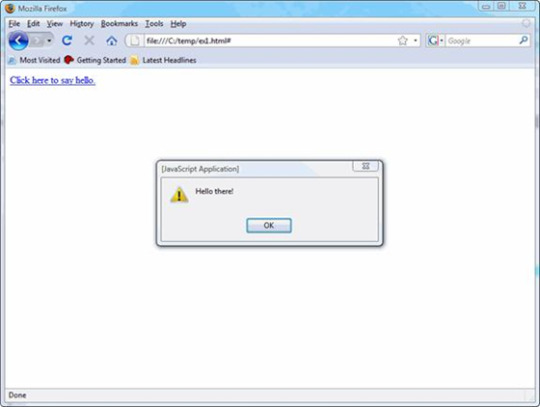

How do you automate elements on a web-based pop-up?

WebDriver handles browser pop-ups (JavaScript alert, confirm and prompt dialogs) through the Alert interface, which provides four methods:

void dismiss() - clicks the "Cancel" button of the alert currently displayed.

void accept() - clicks the "OK" button of the alert currently displayed.

String getText() - returns the text displayed in the alert box.

void sendKeys(String keysToSend) - types the given string into the input field of a prompt dialog.

Example:

Alert alert = driver.switchTo().alert(); // switch focus to the open alert
alert.accept(); // click "OK"

What are the advantages of an automation framework?

Testers sometimes implement a framework without knowing why frameworks are used. These are the main reasons to implement one:

Reusable code

Maximum test coverage

Recovery scenarios (the run can recover from an unexpected failure and continue)

Low-cost maintenance

Minimal manual intervention

Easy reporting

Types of Performance Testing

Performance testing focuses on checking a software application's:

Speed - whether the application responds quickly

Scalability - the maximum user load the application can handle

Stability - whether the application remains stable under varying loads

Below are the types of performance tests you can perform to measure these factors:

Load testing - checks the application's ability to perform under anticipated user loads. The objective is to identify performance bottlenecks before the software application goes live.

Stress testing - involves testing an application under extreme workloads to see how it handles high traffic or heavy data processing. The objective is to identify the application's breaking point.

Endurance testing - is done to make sure the software can handle the expected load over a long period of time.

Spike testing - tests the software's reaction to sudden large spikes in the load generated by users.

Volume testing - a large volume of data is populated in the database and the overall system's behavior is monitored. The objective is to check the application's performance under varying database volumes.

Scalability testing - The objective of scalability testing is to determine the software application's effectiveness in "scaling up" to support an increase in user load. It helps plan capacity addition to your software system.
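Several of these test types differ mainly in the shape of the user load over time. The Java below is a minimal sketch of those shapes, using hypothetical numbers throughout: an anticipated load of 100 virtual users, a 10-minute ramp-up, a 10x spike between minutes 10 and 12, and the run lengths are all assumptions. In practice a load tool such as JMeter generates the load; volume testing is not modeled because it varies the amount of data rather than the number of users.

```java
// Target concurrent virtual users at a given minute, per test type.
// All numbers are hypothetical and only illustrate the shape of each test.
class LoadProfileSketch {
    static final int EXPECTED_USERS = 100; // assumed anticipated production load

    enum TestType { LOAD, STRESS, SPIKE, ENDURANCE }

    static int targetUsers(TestType type, int minute) {
        switch (type) {
            case LOAD:
                // Ramp up over 10 minutes, then hold at the anticipated load.
                return Math.min(EXPECTED_USERS, EXPECTED_USERS * minute / 10);
            case STRESS:
                // Start at the anticipated load and add 50 users every minute
                // until the application reaches its breaking point.
                return EXPECTED_USERS + 50 * minute;
            case SPIKE:
                // Normal load, except a sudden 10x burst between minutes 10 and 12.
                return (minute >= 10 && minute < 12) ? EXPECTED_USERS * 10 : EXPECTED_USERS;
            case ENDURANCE:
                // Anticipated load held steadily; the run length is what differs.
                return EXPECTED_USERS;
            default:
                throw new IllegalArgumentException("unknown type: " + type);
        }
    }

    // Hypothetical planned run lengths in minutes; stress runs until failure.
    static int plannedMinutes(TestType type) {
        switch (type) {
            case LOAD: return 60;
            case SPIKE: return 30;
            case ENDURANCE: return 12 * 60;
            default: return -1; // STRESS: open-ended
        }
    }
}
```

The endurance profile uses the same user count as the load test; what makes it an endurance test is that it is held for hours (720 minutes here versus 60) to expose problems such as memory leaks that only appear over time.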

Automation Testing - Process

To perform automation testing, follow the process below. This is a commonly asked interview question.

Test tool selection - identify and select the tool for automation testing (refer to the earlier post on how to choose a test automation tool).

Define the scope of automation - decide what you are going to automate and what you won't (refer to the earlier post on defining the scope of automation).

Planning, design and development - once you have identified the test cases to be automated, design and develop the automation suite using a suitable design pattern and framework.

Test execution - execute the automated test cases and record the test results.

Maintenance - update the existing automated test cases as the Application Under Test changes.

The picture in this post illustrates the process.

What are the different types of testing that can be automated?

This is another common interview question. When answering, explain why and how you automated each of these types, with an example from your own experience.

Smoke Testing

Unit Testing

Integration Testing

Functional Testing

Keyword Testing

Regression Testing

Data Driven Testing

Black Box Testing

These test types are the best candidates for automation.
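As an illustration of data-driven testing, the sketch below runs one test routine against several rows of input and expected output. The function under test (isValidUsername) and its rules are hypothetical, and plain Java is used instead of a test framework so the example stays self-contained; in a real suite the rows would usually be read from a CSV file, spreadsheet or database.

```java
import java.util.ArrayList;
import java.util.List;

// Data-driven testing: one test routine, many rows of data.
class DataDrivenSketch {
    // Hypothetical function under test: a username is valid if it is
    // 3 to 12 characters long and contains only letters and digits.
    static boolean isValidUsername(String name) {
        return name != null && name.matches("[A-Za-z0-9]{3,12}");
    }

    // Each row: input value and expected result.
    static final Object[][] ROWS = {
        {"alice", true},
        {"ab", false},            // too short
        {"user_01", false},       // underscore not allowed
        {"abcdefghijklm", false}, // 13 characters, too long
        {"Tester42", true},
    };

    // Runs the same check against every row and collects descriptions of failures.
    static List<String> runAll() {
        List<String> failures = new ArrayList<>();
        for (Object[] row : ROWS) {
            String input = (String) row[0];
            boolean expected = (Boolean) row[1];
            boolean actual = isValidUsername(input);
            if (actual != expected) {
                failures.add("input=" + input + " expected=" + expected + " actual=" + actual);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<String> failures = runAll();
        System.out.println(failures.isEmpty() ? "all rows passed" : failures);
    }
}
```

Adding a new case means adding a row, not writing a new test, which is what makes this style cheap to extend.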

Automation Test Cases - Suitable vs. Unsuitable

What test cases are suitable for automation?

Test cases to be automated can be selected using the following criteria to increase automation ROI:

High-risk, business-critical test cases.

Test Cases that are executed repeatedly.

Test Cases that are very tedious or difficult to perform manually.

Test Cases which are time consuming.

What test cases are NOT suitable for automation?

The following test cases are not suitable for automation:

Test Cases that are newly designed and have not been executed manually at least once.

Test Cases for which the requirements are changing frequently.

Test Cases which are executed on an ad-hoc basis.
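The two lists above can be read as a simple decision rule: any "not suitable" condition rules a test case out, and otherwise at least one of the ROI criteria should apply. The Java class below is a hypothetical sketch of that reading; the field names and the rule that a disqualifier always wins are assumptions, not a fixed standard.

```java
// Hypothetical encoding of the suitable / not-suitable criteria for automation.
class AutomationCandidate {
    // Criteria that increase automation ROI.
    boolean businessCritical;
    boolean runRepeatedly;
    boolean tediousOrDifficultManually;
    boolean timeConsuming;

    // Conditions that make a test case unsuitable.
    boolean executedManuallyAtLeastOnce;
    boolean requirementsChangeFrequently;
    boolean adHocOnly;

    boolean shouldAutomate() {
        // Assumption: any disqualifying condition rules the case out.
        if (!executedManuallyAtLeastOnce || requirementsChangeFrequently || adHocOnly) {
            return false;
        }
        // Otherwise automate when at least one ROI criterion applies.
        return businessCritical || runRepeatedly || tediousOrDifficultManually || timeConsuming;
    }
}
```

For example, a regression test that has already been run manually and is executed every release qualifies, but the same test stops qualifying once its requirements start changing frequently.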

Define the scope of Automation

The following question is usually asked in interviews, "How do you identify test cases for automation?" or "What are the test cases that you will automate?" or "On what basis do you choose the test cases to be automated?"

Scope of automation is the area of your Application Under Test which will be automated. The following points help determine scope:

Features that are important for the business

Scenarios which have large amounts of data

Common functionalities across applications

Technical feasibility

Extent to which business components are reused

Complexity of test cases

Ability to use the same test cases for cross browser testing

Automation Testing - Best Practices

To get the maximum ROI from automation, observe the following:

Scope of automation needs to be determined in detail before the start of the project. This sets the right expectations for automation.

Select the right automation tool: a tool should be selected not for its popularity but for how well it fits the automation requirements.

Choose an appropriate framework.

Scripting standards - standards have to be followed while writing automation scripts, such as a uniform script structure, comments and consistent code indentation.

Adequate exception handling - define how errors are handled when the system fails or the application behaves unexpectedly.

User-defined messages should be coded or standardized for error logging so that testers can understand them.

Measure metrics - the success of automation cannot be determined only by comparing manual effort with automation effort; also capture the following metrics:

Percent of defects found

Time required for automation testing for each and every release cycle

Minimal Time taken for release

Customer satisfaction Index

Productivity improvement

Following these guidelines can greatly help make your automation successful.
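The exception-handling and error-logging practices above can be illustrated with a small Java sketch. StepRunner, its runStep method and the [PASS]/[FAIL] message format are hypothetical, not part of any framework: each step is wrapped in try/catch so a failure is logged with a standardized, readable message instead of an unexplained stack trace, and the run can continue to the next step.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical step runner showing exception handling with standardized messages.
class StepRunner {
    private final List<String> log = new ArrayList<>();

    // Runs one test step; on failure, records a user-defined message and returns false
    // instead of letting the exception stop the whole run.
    boolean runStep(String stepName, Runnable step) {
        try {
            step.run();
            log.add("[PASS] " + stepName);
            return true;
        } catch (RuntimeException e) {
            log.add("[FAIL] " + stepName + " - " + e.getClass().getSimpleName() + ": " + e.getMessage());
            return false;
        }
    }

    List<String> log() {
        return log;
    }

    public static void main(String[] args) {
        StepRunner runner = new StepRunner();
        runner.runStep("Open login page", () -> { /* step succeeds */ });
        runner.runStep("Read order total", () -> {
            throw new IllegalStateException("Order total field not found");
        });
        runner.log().forEach(System.out::println);
    }
}
```

Running main prints one [PASS] line for "Open login page" and one [FAIL] line naming the "Read order total" step and its cause. The sketch catches RuntimeException, which also covers Selenium's WebDriverException and its subclasses; whether a failed step should abort the test or let it continue is a design decision for each framework.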
