
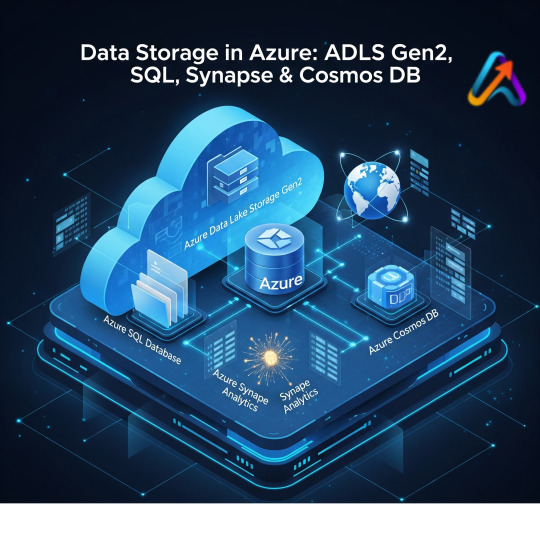

Azure Data Engineer Online Training | Azure Data Engineer Online Course

Master Azure Data Engineering with AccentFuture. Get hands-on training in data pipelines, ETL, and analytics on Azure. Learn online at your pace with real-world projects and expert guidance.

🚀 Enroll Now: https://www.accentfuture.com/enquiry-form/

📞 Call Us: +91–9640001789

📧 Email Us: [email protected]

🌐 Visit Us: AccentFuture

#Azure data engineer course#Azure data engineer course online#Azure data engineer online training#Azure data engineer training


Snowflake data cloud | Snowflake data integration

Unlock the power of Snowflake with AccentFuture! Explore real-world Snowflake use cases in finance, healthcare, and retail. Learn data cloud, analytics, integration & warehousing with experts.

#Benefits of Snowflake#Real-world Snowflake examples#Snowflake analytics use cases#Snowflake data cloud#Snowflake data integration#Snowflake data warehouse#Snowflake for finance#Snowflake for healthcare#Snowflake for retail#Snowflake use cases.


Databricks Training

Master Databricks with AccentFuture! Learn data engineering, machine learning, and analytics using Apache Spark. Gain hands-on experience with labs, real-world projects, and expert guidance to accelerate your journey to data mastery.


Boost your career with AccentFuture's Databricks online training. Learn from industry experts, master real-time data analytics, and get hands-on experience with Databricks tools. Flexible learning, job-ready skills, and certification support included.

#course training#databricks online training#databricks training#databricks training course#learn databricks


How Databricks is Changing the Future of Data Engineering

Introduction

Imagine you run an online store that sells electronics and clothes. Every second, your website records large volumes of data about customer activity: what people view, what they add to their carts, and what they buy. That dataset is an opportunity to make smarter decisions, such as predicting which products will sell next.

This is where Databricks comes in. It is a data platform that lets businesses collect, clean, and analyze data, then use it for real-time insights, reports, and machine learning. Built on Apache Spark, Databricks changes how data engineers work and makes them more productive.

Let’s explore how.

From Chaos to Clarity: The Unified Lakehouse

Many firms keep raw data in a data lake and cleaned, structured data in a separate data warehouse. Running two systems with different rules causes confusion and duplicated work.

Databricks offers a unified Lakehouse architecture that combines the strengths of data lakes and data warehouses in one environment. With the Lakehouse, raw and cleaned data live in a single storage layer, so you no longer need to copy data back and forth between systems.

Example:

Back to the online store—let’s say you store website click logs (raw data) and also clean sales data (structured data). With Databricks, you can keep them together in one system and build reports or run machine learning models on both at the same time.

Cloud-Native and Super Scalable

Databricks runs in the cloud on AWS, Azure, and Google Cloud. You don't need to buy servers or install software, and capacity scales up or down with your data volume.

You pay only for the resources you use, while Databricks takes care of maintaining the platform.

Built on Apache Spark, But Better

Apache Spark is a fast data processing engine used by data engineers worldwide. Databricks builds on Spark with performance optimizations and an intuitive user interface.

You can process real-time or batch data in Python, SQL, R, or Scala. Notebooks show results right next to the code, a bit like Google Docs for code.

Real-life analogy:

It’s like Spark is a powerful sports car. Databricks adds GPS, a comfy interior, and automatic gears—making it fast and smooth to drive even for beginners.

Delta Lake: The Data Guardian

Data engineers constantly deal with corrupted data, missing files, and mismatched data types. Delta Lake, the storage layer in Databricks, keeps data reliable and consistent and helps you recover when errors occur.

Delta Lake tracks changes to your data, lets you restore old versions, and blocks bad data from entering your tables.

Use case:

If someone accidentally uploads a wrong sales file, Delta Lake allows you to go back in time and undo the damage—like using Ctrl+Z on your data.
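To make the "Ctrl+Z" idea concrete, here is a toy, in-memory sketch in plain Python. It is not the Delta Lake API: the `VersionedTable` class and its methods are hypothetical names, used only to illustrate how keeping every committed version makes it possible to read or restore an earlier state.

```python
from typing import Dict, List


class VersionedTable:
    """Toy model of a table that keeps every committed version (illustration only)."""

    def __init__(self) -> None:
        # Version 0 is the empty table; each commit appends a full snapshot.
        self._versions: List[List[Dict]] = [[]]

    def append(self, rows: List[Dict]) -> int:
        """Commit a new version containing the old rows plus the new ones."""
        snapshot = self._versions[-1] + list(rows)
        self._versions.append(snapshot)
        return len(self._versions) - 1  # the new version number

    def read(self, version: int = -1) -> List[Dict]:
        """Read the latest version by default, or any earlier version."""
        return list(self._versions[version])

    def restore(self, version: int) -> int:
        """Undo later changes by committing an old snapshot as the newest version."""
        self._versions.append(list(self._versions[version]))
        return len(self._versions) - 1


sales = VersionedTable()
v1 = sales.append([{"order_id": 1, "amount": 40.0}])
v2 = sales.append([{"order_id": 2, "amount": -999.0}])  # the "wrong sales file"
v3 = sales.restore(v1)  # go back to the state before the bad upload
```

Note that the restore is itself recorded as a new version, so the bad upload stays in the history and can still be inspected later.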

Collaboration Made Easy

Databricks gives data engineers, analysts, and data scientists a shared notebook environment. Team members can view code, leave comments, run queries, and build visualizations at the same time.

Projects ship faster because teams spend less time emailing files back and forth and waiting on each other.

Built-in Machine Learning Tools

Databricks goes beyond data pipelines. It includes MLflow and other tools for building, training, tracking, and deploying machine learning models.

This integration helps data engineers support data scientists more effectively and learn to build their own ML models.

A Real-World Example

Let’s say your online store wants to reduce cart abandonment. You collect user behavior data—pages visited, items added, and when they drop off. With Databricks, you:

Ingest that data from your website in real time

Clean and transform it using Spark

Store it in Delta Lake

Share it with the data science team via a notebook

Train a machine learning model to predict abandonment risk

Automatically send alerts or discount coupons to users likely to leave

All this happens within one platform—Databricks.
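The first steps of that flow can be sketched in plain Python. This is only a small stand-in under stated assumptions: the event fields (`user_id`, `action`, `item`) are hypothetical, the `clean` function takes the place of a Spark job, and a simple rule replaces the machine learning model.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical raw click events, as they might arrive from the website.
RAW_EVENTS = [
    {"user_id": "u1", "action": "add_to_cart", "item": "phone"},
    {"user_id": "u1", "action": "purchase", "item": "phone"},
    {"user_id": "u2", "action": "add_to_cart", "item": "jacket"},
    {"user_id": "u2", "action": "view", "item": "shoes"},
    {"user_id": None, "action": "add_to_cart", "item": "laptop"},  # bad record
    {"user_id": "u3", "action": "view", "item": "phone"},
]


def clean(events: Iterable[Dict]) -> List[Dict]:
    """Drop records without a user ID (stand-in for the Spark cleaning step)."""
    return [e for e in events if e.get("user_id")]


def abandoned_carts(events: Iterable[Dict]) -> Dict[str, List[str]]:
    """Return, per user, the items added to the cart but never purchased."""
    added = defaultdict(set)
    bought = defaultdict(set)
    for e in events:
        if e["action"] == "add_to_cart":
            added[e["user_id"]].add(e["item"])
        elif e["action"] == "purchase":
            bought[e["user_id"]].add(e["item"])
    result = {}
    for user, items in added.items():
        left = sorted(items - bought[user])
        if left:
            result[user] = left
    return result


# Users in this dictionary would be candidates for an alert or a coupon.
at_risk = abandoned_carts(clean(RAW_EVENTS))
```

In a real pipeline the rule in `abandoned_carts` would be replaced by a trained model that scores each session, but the shape of the flow (ingest, clean, aggregate, act) stays the same.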

Conclusion

Databricks is more than just another tool. It is a complete, modern data engineering platform that is simple, powerful, and built for the future. It gives you what you need to process real-time streaming data and develop ML models in a single platform.

By combining cloud infrastructure, real-time processing, reliable storage, and collaboration features, Databricks helps businesses turn their data into fast, actionable decisions.

As more organizations become data-driven, they need tools like Databricks to turn raw data into real business value.

#databricks course#databricks online course#databricks online course training#databricks online training#databricks training#databricks training course#learn databricks


Real-time Data Processing with Azure Stream Analytics

Introduction

In today's fast-moving digital world, organizations need to respond to events as they happen. Real-time data processing lets them detect fraudulent financial activity, monitor sensor readings, and track user activity on websites, which leads to faster and smarter business decisions.

Azure Stream Analytics is Microsoft's real-time analytics service, built to analyze streaming data at high speed. This article covers its architecture and key features, and shows how to build simple real-time data pipelines.

What is Azure Stream Analytics?

Azure Stream Analytics (ASA) is a fully managed, serverless engine for real-time stream processing. It lets organizations ingest data from multiple sources, process it, and visualize the results using simple SQL-like queries.

Through connectors to other Azure data services, ASA sits between streaming sources and destinations such as dashboards, alerts, and storage. It offers the throughput and low latency needed to handle millions of IoT device messages or to monitor application transactions.

Core Components of Azure Stream Analytics

A Stream Analytics job typically involves three major components:

1. Input

Data can be ingested from one or more sources including:

Azure Event Hubs – for telemetry and event stream data

Azure IoT Hub – for IoT-based data ingestion

Azure Blob Storage – for batch or historical data

2. Query

The core of ASA is its SQL-like query engine. You can use the language to:

Filter, join, and aggregate streaming data

Apply time-window functions

Detect patterns or anomalies in motion

3. Output

The processed data can be routed to:

Azure SQL Database

Power BI (real-time dashboards)

Azure Data Lake Storage

Azure Cosmos DB

Blob Storage, and more

Example Use Case

Suppose an IoT system sends temperature readings from multiple devices every second. You can use ASA to calculate the average temperature per device every five minutes by grouping the stream on the device ID over a five-minute tumbling window.

A simple query like this delivers aggregated metrics in real time, which can then be displayed on a dashboard or sent to a database for further analysis.
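As a rough illustration of what that aggregation computes, here is a plain Python sketch of a five-minute tumbling-window average. The device IDs, field names, and sample readings are assumptions, and the query in the comment is only an approximate Stream Analytics equivalent with assumed input and output names. Boundary handling is simplified: a reading exactly on a five-minute mark is assigned to the window that starts there.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

# Roughly equivalent Stream Analytics query (input, output, and field names are assumed):
#   SELECT DeviceId, AVG(Temperature) AS AvgTemperature, System.Timestamp() AS WindowEnd
#   INTO [output]
#   FROM [input] TIMESTAMP BY EventTime
#   GROUP BY DeviceId, TumblingWindow(minute, 5)

Reading = Tuple[str, datetime, float]  # (device_id, event_time, temperature)


def window_start(ts: datetime) -> datetime:
    """Return the start of the five-minute tumbling window that contains ts."""
    return ts.replace(minute=ts.minute - ts.minute % 5, second=0, microsecond=0)


def average_per_window(readings: List[Reading]) -> Dict[Tuple[str, datetime], float]:
    """Average temperature per (device, window start)."""
    sums = defaultdict(lambda: [0.0, 0])  # key -> [running total, count]
    for device_id, ts, temperature in readings:
        key = (device_id, window_start(ts))
        sums[key][0] += temperature
        sums[key][1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


readings = [
    ("dev-1", datetime(2024, 1, 1, 12, 0, 10), 20.0),
    ("dev-1", datetime(2024, 1, 1, 12, 4, 50), 22.0),
    ("dev-1", datetime(2024, 1, 1, 12, 6, 0), 30.0),
    ("dev-2", datetime(2024, 1, 1, 12, 1, 0), 18.0),
]
averages = average_per_window(readings)
```

Each reading lands in exactly one window, which is what distinguishes a tumbling window from overlapping window types.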

Key Features

Azure Stream Analytics offers several benefits:

Serverless architecture: No infrastructure to manage; Azure handles scaling and availability.

Real-time processing: Supports sub-second latency for streaming data.

Easy integration: Works seamlessly with other Azure services like Event Hubs, SQL Database, and Power BI.

SQL-like query language: Low learning curve for analysts and developers.

Built-in windowing functions: Supports tumbling, hopping, and sliding windows for time-based aggregations.

Custom functions: Extend queries with JavaScript or C# user-defined functions (UDFs).

Scalability and resilience: Can handle high-throughput streams and recovers automatically from failures.
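The difference between the window types mentioned above is easier to see with numbers. The sketch below is a simplified model in plain Python, not ASA code: times are integer seconds, the function names are hypothetical, and window boundaries are treated as half-open intervals for simplicity.

```python
from typing import List, Tuple

Window = Tuple[int, int]  # (start, end) in seconds


def tumbling_window(t: int, size: int) -> Window:
    """Return the single [start, end) window of length `size` that contains t."""
    start = t - t % size
    return (start, start + size)


def hopping_windows(t: int, size: int, hop: int) -> List[Window]:
    """Return every [start, end) window of length `size`, starting every `hop`
    seconds, that contains t. Windows overlap when hop < size."""
    windows = []
    start = t - t % hop  # latest window start at or before t
    while start > t - size:
        if start >= 0:
            windows.append((start, start + size))
        start -= hop
    return sorted(windows)


def sliding_lookback(t: int, size: int) -> Window:
    """Return the look-back interval of length `size` that ends at the event."""
    return (t - size, t)


event_time = 130
one_window = tumbling_window(event_time, size=60)
overlapping = hopping_windows(event_time, size=60, hop=30)
lookback = sliding_lookback(event_time, size=60)
```

With a 60-second window, the event at second 130 belongs to one tumbling window, to two hopping windows when the hop is 30 seconds, and to a sliding interval anchored on the event itself.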

Common Use Cases

Azure Stream Analytics supports real-time data solutions across multiple industries:

Retail: Track customer interactions in real time to deliver dynamic offers.

Finance: Detect anomalies in transactions for fraud prevention.

Manufacturing: Monitor sensor data for predictive maintenance.

Transportation: Analyze traffic patterns to optimize routing.

Healthcare: Monitor patient vitals and trigger alerts for abnormal readings.

Power BI Integration

Integration with Power BI is one of ASA's most useful features. Azure Stream Analytics can push data directly to Power BI dashboards that update in near real time. Operations teams, managers, and analysts can watch key metrics continuously and act immediately when a threshold is breached.

Best Practices

To get the most out of Azure Stream Analytics:

Use partitioned input sources like Event Hubs for better throughput.

Keep queries efficient by limiting complex joins and filtering early.

Avoid UDFs unless necessary; they can increase latency.

Use reference data for enriching live streams with static datasets.

Monitor job metrics using Azure Monitor and set alerts for failures or delays.

Prefer direct output integration over intermediate storage where possible to reduce delays.
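Two of these practices, filtering early and enriching with reference data, can be sketched together in plain Python. This is an illustration only: the `DEVICE_LOCATIONS` lookup, the field names, and the threshold are assumptions, and in ASA itself the enrichment would be expressed as a join against a reference data input.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical static reference data: device ID -> location.
DEVICE_LOCATIONS: Dict[str, str] = {"dev-1": "warehouse", "dev-2": "storefront"}


def hot_readings(events: Iterable[Dict], threshold: float) -> Iterator[Dict]:
    """Filter first, then enrich only the events that survive the filter."""
    for event in events:
        if event["temperature"] < threshold:
            continue  # filter early: skip the lookup for uninteresting events
        location = DEVICE_LOCATIONS.get(event["device_id"], "unknown")
        yield {**event, "location": location}


stream = [
    {"device_id": "dev-1", "temperature": 21.5},
    {"device_id": "dev-2", "temperature": 35.0},
    {"device_id": "dev-9", "temperature": 40.0},
]
alerts = list(hot_readings(stream, threshold=30.0))
```

Putting the filter before the lookup means the more expensive enrichment step only runs for the small share of events that matter.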

Getting Started

Setting up a simple ASA job is easy:

Create a Stream Analytics job in the Azure portal.

Add inputs from Event Hub, IoT Hub, or Blob Storage.

Write your SQL-like query for transformation or aggregation.

Define your output—whether it’s Power BI, a database, or storage.

Start the job and monitor it from the portal.

Conclusion

Organizations of all sizes use Azure Stream Analytics to process real-time data at business scale. Its tight integration with other Azure services, declarative SQL-like queries, and serverless architecture make it a strong choice for building streaming systems.

With Stream Analytics, organizations can process continuous data and act on it in real time, improving operational intelligence, customer satisfaction, and market position.

#azure data engineer course#azure data engineer course online#azure data engineer online course#azure data engineer online training#azure data engineer training#azure data engineer training online#azure data engineering course#azure data engineering online training#best azure data engineer course#best azure data engineer training#best azure data engineering courses online#learn azure data engineering#microsoft azure data engineer training


Future in Data Analytics: Best Databricks Online Course to Get You Started 🚀

Are you ready to supercharge your data skills and launch your career in data analytics? If you've heard of Databricks but aren’t sure where to start, we’ve got you covered. At AccentFuture, we offer the best Databricks online course for beginners and professionals alike!

Whether you're a data enthusiast, data engineer, or aspiring data scientist, our Databricks training course is designed to help you learn Databricks from the ground up.

✅ What You’ll Learn:

Unified data analytics with Databricks

Real-time data processing with Apache Spark

Building scalable data pipelines

Machine learning integrations

Hands-on projects for real-world experience

Our Databricks online training is 100% flexible and self-paced. Whether you prefer weekend sessions or deep-dive weekday learning, we’ve got options to suit every schedule.

Why Choose Our Databricks Course?

Industry-recognized certification

Expert trainers with real-world experience

Affordable pricing

Lifetime access to resources

👉 Perfect for beginners, our Databricks online course training is tailored to make you job-ready in just a few weeks.

If you’re looking for the best Databricks course online, start your journey with us at AccentFuture.

🔗 Explore our full Databricks training program and level up your data game today!


#Databricks#DatabricksCourse#DatabricksOnlineTraining#LearnDatabricks#DataAnalytics#Spark#BigData#DatabricksOnlineCourse#AccentFuture


Master Databricks with AccentFuture – Online Training


Introduction to AWS Data Engineering: Key Services and Use Cases

Introduction

Businesses today generate huge datasets that require significant processing. Handling that data efficiently is essential for decision-making and growth. Amazon Web Services (AWS) offers a range of cloud services for building secure, scalable, and cost-effective data pipelines. With AWS data engineering services, organizations can ingest and store data, run analytics, and support machine learning. These services help businesses reduce costs, improve operational efficiency, and maintain security and regulatory compliance. This article introduces the key AWS data engineering services along with their practical use cases.

What is AWS Data Engineering?

AWS data engineering involves designing, building, and maintaining data pipelines using AWS services. It includes:

Data Ingestion: Collecting data from sources such as IoT devices, databases, and logs.

Data Storage: Storing structured and unstructured data in a scalable, cost-effective manner.

Data Processing: Transforming and preparing data for analysis.

Data Analytics: Gaining insights from processed data through reporting and visualization tools.

Machine Learning: Using AI-driven models to generate predictions and automate decision-making.

With AWS, organizations can streamline these processes, ensuring high availability, scalability, and flexibility in managing large datasets.

Key AWS Data Engineering Services

AWS provides a comprehensive range of services tailored to different aspects of data engineering.

Amazon S3 (Simple Storage Service) – Data Storage

Amazon S3 is a scalable object storage service that allows organizations to store structured and unstructured data. It is highly durable, offers lifecycle management features, and integrates seamlessly with AWS analytics and machine learning services.

Supports unlimited storage capacity for structured and unstructured data.

Allows lifecycle policies for cost optimization through tiered storage.

Provides strong integration with analytics and big data processing tools.

Use Case: Companies use Amazon S3 to store raw log files, multimedia content, and IoT data before processing.
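As an illustration of the lifecycle policies mentioned above, the sketch below builds a lifecycle configuration as a Python dictionary in the shape accepted by boto3's `put_bucket_lifecycle_configuration`. The rule ID, prefix, bucket name, and day counts are hypothetical values chosen for the example, not recommendations.

```python
import json

# Hypothetical rule: the ID, prefix, and day counts are illustrative only.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-raw-logs",
            "Filter": {"Prefix": "raw-logs/"},
            "Status": "Enabled",
            # Move objects to cheaper storage tiers as they age.
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            # Delete objects after one year.
            "Expiration": {"Days": 365},
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))

# With boto3 installed and credentials configured, the same dictionary could be applied with:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=lifecycle_configuration)
```

The script only prints the configuration, so it runs without an AWS account; the commented call shows where it would be sent.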

AWS Glue – Data ETL (Extract, Transform, Load)

AWS Glue is a fully managed ETL service that simplifies data preparation and movement across different storage solutions. It enables users to clean, catalog, and transform data automatically.

Supports automatic schema discovery and metadata management.

Offers a serverless environment for running ETL jobs.

Uses Python and Spark-based transformations for scalable data processing.

Use Case: AWS Glue is widely used to transform raw data before loading it into data warehouses like Amazon Redshift.

Amazon Redshift – Data Warehousing and Analytics

Amazon Redshift is a cloud data warehouse optimized for large-scale data analysis. It enables organizations to perform complex queries on structured datasets quickly.

Uses columnar storage for high-performance querying.

Supports Massively Parallel Processing (MPP) for handling big data workloads.

Integrates with business intelligence tools like Amazon QuickSight.

Use Case: E-commerce companies use Amazon Redshift for customer behavior analysis and sales trend forecasting.

Amazon Kinesis – Real-Time Data Streaming

Amazon Kinesis allows organizations to ingest, process, and analyze streaming data in real-time. It is useful for applications that require continuous monitoring and real-time decision-making.

Supports high-throughput data ingestion from logs, clickstreams, and IoT devices.

Works with AWS Lambda, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service) for analytics.

Enables real-time anomaly detection and monitoring.

Use Case: Financial institutions use Kinesis to detect fraudulent transactions in real-time.
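One detail that helps when designing high-throughput ingestion is how records are spread across shards. Kinesis Data Streams hashes each record's partition key with MD5 and routes the record to the shard whose hash key range contains the result. The sketch below imitates that routing in plain Python, assuming the 128-bit hash space is split evenly across shards; the function names and the device key are hypothetical.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def hash_key(partition_key: str) -> int:
    """Map a partition key to an integer in the 128-bit hash space."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Pick a shard index, assuming the hash space is split evenly across shards."""
    range_size = HASH_SPACE // shard_count
    # min() keeps keys at the very top of the hash space inside the last shard.
    return min(hash_key(partition_key) // range_size, shard_count - 1)


shard = shard_for("device-42", shard_count=4)
```

Because the same partition key always hashes to the same value, all records for one device land on the same shard, which preserves their order but can create a hot shard if one key dominates the traffic.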

AWS Lambda – Serverless Data Processing

AWS Lambda enables event-driven serverless computing. It allows users to execute code in response to triggers without provisioning or managing servers.

Executes code automatically in response to AWS events.

Supports seamless integration with S3, DynamoDB, and Kinesis.

Charges only for the compute time used.

Use Case: Lambda is commonly used for processing image uploads and extracting metadata automatically.
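A minimal sketch of such a function is shown below. It assumes the function is triggered by an S3 event notification and reads the bucket, key, and size from the event payload; the bucket name and object key in the sample event are made up, and a real handler would typically go on to open the object and inspect its contents.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def handler(event: Dict[str, Any], context: Any) -> List[Dict[str, Any]]:
    """Collect basic metadata for each object named in an S3 event notification."""
    results = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        key = unquote_plus(s3_info["object"]["key"])  # keys arrive URL-encoded
        results.append({
            "bucket": s3_info["bucket"]["name"],
            "key": key,
            "size_bytes": s3_info["object"].get("size"),
            "extension": key.rsplit(".", 1)[-1].lower() if "." in key else "",
        })
    return results


# Hypothetical event, trimmed to the fields the handler reads.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-uploads"},
                "object": {"key": "photos/summer+trip.JPG", "size": 204800},
            }
        }
    ]
}
metadata = handler(sample_event, None)
```

Calling the handler locally with a hand-built event, as above, is a convenient way to test the logic before deploying it.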

Amazon DynamoDB – NoSQL Database for Fast Applications

Amazon DynamoDB is a managed NoSQL database that delivers high performance for applications that require real-time data access.

Provides single-digit millisecond latency for high-speed transactions.

Offers built-in security, backup, and multi-region replication.

Scales automatically to handle millions of requests per second.

Use Case: Gaming companies use DynamoDB to store real-time player progress and game states.

Amazon Athena – Serverless SQL Analytics

Amazon Athena is a serverless query service that allows users to analyze data stored in Amazon S3 using SQL.

Eliminates the need for infrastructure setup and maintenance.

Runs queries on a Presto/Trino-based engine, with Hive-compatible DDL for table definitions.

Charges only for the amount of data scanned.

Use Case: Organizations use Athena to analyze and generate reports from large log files stored in S3.
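Because billing follows the amount of data scanned, how the data is laid out in S3 matters. The back-of-the-envelope sketch below compares scanning a whole table with scanning a single daily partition. Every number is an assumption: the partition sizes are invented, the terabyte is taken as a decimal terabyte for simplicity, and `PRICE_PER_TB` is a placeholder that should be replaced with the current price for your region.

```python
from typing import Dict

BYTES_PER_TB = 10 ** 12  # decimal terabyte, assumed here for simplicity


def scan_cost(bytes_scanned: int, price_per_tb: float) -> float:
    """Estimate the cost of a query from the bytes it scans."""
    return bytes_scanned / BYTES_PER_TB * price_per_tb


# Hypothetical table laid out in S3 as one prefix per day, with sizes in bytes.
partition_sizes: Dict[str, int] = {
    "dt=2024-01-01": 400 * 10 ** 9,
    "dt=2024-01-02": 350 * 10 ** 9,
    "dt=2024-01-03": 250 * 10 ** 9,
}

PRICE_PER_TB = 5.0  # placeholder value; check current pricing for your region

full_scan = scan_cost(sum(partition_sizes.values()), PRICE_PER_TB)
one_day = scan_cost(partition_sizes["dt=2024-01-03"], PRICE_PER_TB)
```

With these made-up sizes, a query restricted to one day scans a quarter of the data and costs a quarter as much, which is why partitioning and columnar file formats are common ways to keep query costs down.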

AWS Data Engineering Use Cases

AWS data engineering services cater to a variety of industries and applications.

Healthcare: Storing and processing patient data for predictive analytics.

Finance: Real-time fraud detection and compliance reporting.

Retail: Personalizing product recommendations using machine learning models.

IoT and Smart Cities: Managing and analyzing data from connected devices.

Media and Entertainment: Streaming analytics for audience engagement insights.

These services empower businesses to build efficient, scalable, and secure data pipelines while reducing operational costs.

Conclusion

AWS provides a comprehensive ecosystem of data engineering tools that streamline data ingestion, storage, transformation, analytics, and machine learning. Services like Amazon S3, AWS Glue, Redshift, Kinesis, and Lambda allow businesses to build scalable, cost-effective, and high-performance data pipelines.

Selecting the right AWS services depends on the specific needs of an organization. For those looking to store vast amounts of unstructured data, Amazon S3 is an ideal choice. Companies needing high-speed data processing can benefit from AWS Glue and Redshift. Real-time data streaming can be efficiently managed with Kinesis. Meanwhile, AWS Lambda simplifies event-driven processing without requiring infrastructure management.

Understanding these AWS data engineering services allows businesses to build modern, cloud-based data architectures that enhance efficiency, security, and performance.

By leveraging AWS data engineering services, organizations can transform raw data into valuable insights, enabling better decision-making and competitive advantage.


#aws cloud data engineer course#aws cloud data engineer training#aws data engineer course#aws data engineer course online#Youtube
