Revolutionizing Machine Learning at Scale: DevOps + AI Integration

Over the years, DevOps consulting methodologies and AI solutions have transformed how organizations develop, deploy, and maintain machine learning models at scale. The landscape of machine learning operations (MLOps) continues to evolve rapidly, and new platforms and tools are emerging even faster to resolve the complex challenges of operationalizing AI solutions. This guide explores the most impactful MLOps tools and how they are reshaping the industry by enabling seamless integration between operations and development teams.

The Evolution of MLOps in 2025

MLOps is the practice of managing machine learning projects across their lifecycle, and it has matured well beyond basic model deployment. Today it encompasses comprehensive lifecycle management, intelligent monitoring, and automated governance, which together form the foundation for operationalizing AI. From model training through deployment, monitoring, and governance, it allows DevOps consulting organizations such as Spiral Mantra to improve productivity and deliver reliable AI solutions for their clients. MLOps tools, in turn, are software programs that help machine learning engineers, IT operations teams, and data scientists streamline their workflows by integrating DevOps-driven AI solutions effectively.

MLOps Tools by Category

Workflow Orchestration and Pipeline Management

Apache Airflow remains a dominant orchestrator, used to author, schedule, and monitor workflows. It is versatile enough to handle complex dependencies, and its extensive plugin ecosystem makes it indispensable for organizations with diverse ML pipeline requirements.

Metaflow is another strong option for workflow management. It allows machine learning practitioners to create, execute, and deploy workflows into production. It runs on multiple clouds, including GCP, Azure, and AWS, and works with major Python packages such as TensorFlow and scikit-learn. It also offers an API for the R language.

Prefect brings modern workflow orchestration with an improved developer experience, featuring dynamic workflow generation and robust error handling. Its hybrid execution model supports flexible deployment strategies, from cloud-native to on-premises environments. It comes in two main variants:

- Prefect Cloud, a hosted service for viewing and managing flows and deployments.
- The self-hosted server (introduced under the name Orion), which lets you run the orchestration API, engine, and UI locally.

Kubeflow has evolved into a comprehensive ML platform built on Kubernetes, offering end-to-end pipeline management with cloud-native scalability. Its integration with container orchestration makes it a natural fit for organizations adopting microservices architectures for their AI solutions.
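At its core, every DAG-based orchestrator (Airflow, Prefect, Kubeflow Pipelines) resolves task dependencies and runs each task only after its upstream tasks have finished. The minimal sketch below makes that idea concrete. It uses Python's standard-library `graphlib`, not any orchestrator's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical ML pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "ingest_data": set(),
    "validate_data": {"ingest_data"},
    "build_features": {"validate_data"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model"},
    "register_model": {"evaluate_model"},
    "publish_report": {"evaluate_model"},
}

def run_pipeline(dag):
    """Run tasks in dependency order and return the order they ran in."""
    order = []
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    while sorter.is_active():
        # Every task returned here has all dependencies satisfied, so a real
        # orchestrator could dispatch these tasks in parallel.
        for task in sorter.get_ready():
            print(f"running {task}")
            order.append(task)
            sorter.done(task)
    return order

if __name__ == "__main__":
    run_pipeline(PIPELINE)
```

Independent branches such as `register_model` and `publish_report` become ready at the same time. That is where orchestrators gain parallelism.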

Continuous Integration and Deployment

Jenkins remains a stalwart in the continuous integration and continuous delivery (CI/CD) space. Meanwhile, tools like GitLab CI/CD and CircleCI are gaining adoption for automated testing, integration, and deployment of machine learning models.

Modern Jenkins deployments often add ML-specific plugins or pipeline stages for model validation and automated testing. GitLab CI/CD offers integrated MLOps capabilities with a built-in container registry and deployment tools. Its tight integration with version control makes it ideal for teams following GitOps practices for ML model deployment.

Argo CD and Tekton are gaining traction for Kubernetes-native CI/CD, providing declarative deployment strategies and advanced rollback capabilities essential for production ML systems.

Data and model versioning, by contrast, is handled by open-source tools such as DVC and CML (Continuous Machine Learning). These integrate with Git to version data, pipelines, and models. They can track experiment parameters and model metrics, support model registration, and, through CML, drive ML deployment from CI.
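In practice, the model-validation stage described above is often a small script that a CI job runs after training. The job fails the build when the candidate model underperforms the current baseline. The sketch below assumes metrics arrive as plain dictionaries. The metric names and thresholds are hypothetical policy choices, not defaults of Jenkins, GitLab, or any other tool.

```python
# Hypothetical quality gate run by a CI job after training.

# Assumed policy: accuracy may not drop by more than 1 point versus the
# baseline, and p95 latency may not exceed 200 ms.
MAX_ACCURACY_DROP = 0.01
MAX_P95_LATENCY_MS = 200.0

def validate_candidate(candidate, baseline):
    """Return a list of human-readable failures (an empty list means pass)."""
    failures = []
    drop = baseline["accuracy"] - candidate["accuracy"]
    if drop > MAX_ACCURACY_DROP:
        failures.append(f"accuracy dropped by {drop:.3f}")
    if candidate["p95_latency_ms"] > MAX_P95_LATENCY_MS:
        failures.append(
            f"p95 latency {candidate['p95_latency_ms']} ms exceeds {MAX_P95_LATENCY_MS} ms")
    return failures

def main():
    # In a real pipeline these would be read from the training job's artifacts.
    baseline = {"accuracy": 0.91, "p95_latency_ms": 120.0}
    candidate = {"accuracy": 0.92, "p95_latency_ms": 135.0}
    failures = validate_candidate(candidate, baseline)
    for failure in failures:
        print(f"FAIL: {failure}")
    return 1 if failures else 0

if __name__ == "__main__":
    exit_code = main()
    print(f"exit code: {exit_code}")  # a CI wrapper would pass this to sys.exit()
```

Returning a non-zero exit code is what lets any CI system treat a regressed model exactly like a failing unit test.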

Data Versioning and Model Management

MLflow offers robust experiment tracking, model versioning, and deployment capabilities for DevOps-driven AI solutions. Its open-source nature and extensive integrations make it ideal for teams moving from traditional development practices to machine learning-centric workflows. Its centralized model registry also lets teams collaborate efficiently while maintaining strict version control.

DVC (Data Version Control) and lakeFS bring Git-like versioning to datasets and ML experiments. Their integration with cloud storage systems and their ability to handle large files make them essential for reproducible ML workflows. lakeFS in particular is designed for data lake architectures, enabling branch-and-merge workflows for data and atomic operations for large-scale data management.
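The core idea behind Git-like data versioning in tools such as DVC is content addressing. A large file is identified by a hash of its bytes. Git tracks only a small pointer containing that hash, and the file itself lives in a separate cache or remote store. The sketch below illustrates the idea with the standard library only. It is a simplification: the `.ptr` pointer format and cache layout are hypothetical, not DVC's actual file format.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path

def file_md5(path):
    """Hash a file in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()

def track(data_path, cache_dir):
    """Copy a file into a content-addressed cache and write a small pointer
    file (the part you would commit to Git). Returns the pointer path."""
    data_path, cache_dir = Path(data_path), Path(cache_dir)
    md5 = file_md5(data_path)
    cached = cache_dir / md5[:2] / md5[2:]
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():  # identical content is stored only once
        shutil.copyfile(data_path, cached)
    pointer = data_path.with_name(data_path.name + ".ptr")  # hypothetical extension
    pointer.write_text(json.dumps({"path": data_path.name, "md5": md5}))
    return pointer

def checkout(pointer_path, cache_dir):
    """Restore the data file version recorded in a pointer file."""
    pointer_path = Path(pointer_path)
    meta = json.loads(pointer_path.read_text())
    md5 = meta["md5"]
    target = pointer_path.with_name(meta["path"])
    shutil.copyfile(Path(cache_dir) / md5[:2] / md5[2:], target)
    return target

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_text("id,label\n1,0\n")
        ptr = track(data, Path(tmp) / "cache")
        data.write_text("id,label\n1,1\n")  # the dataset changes
        checkout(ptr, Path(tmp) / "cache")  # roll back to the tracked version
        print(data.read_text())
```

Because the pointer file is tiny and deterministic, Git diffs and branches on it behave just like they do on code. Meanwhile, the heavy data stays out of the repository.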

Cloud-Native MLOps Platforms

Among cloud-native platforms, Amazon SageMaker continues to evolve. It offers MLOps capabilities such as a model registry and model monitoring, backed by managed storage. It integrates with other AWS services, making it ideal for organizations already invested in the AWS ecosystem.

Google Vertex AI is a unified platform that combines AutoML with robust pipeline orchestration. Its integration with Google Cloud's data services creates seamless workflows from data ingestion to model deployment.

Azure Machine Learning, meanwhile, integrates closely with Microsoft's developer ecosystem. This makes it particularly appealing for organizations using .NET and Azure DevOps.

Organizations that succeed with MLOps adopt it gradually. They start with experiment tracking and progressively add advanced features such as automated monitoring and deployment. The key is to select tools that align with existing DevOps practices while providing growth paths toward more advanced ML capabilities.

Looking Forward: Secure DevOps-Driven AI Solutions with Spiral Mantra

As a leading IT consulting firm, we bring together deep technical expertise and strategic insight. Our team implements robust MLOps pipelines, automates deployment workflows, and optimizes your AI infrastructure for maximum efficiency. From cloud-native architectures to intelligent automation, we partner with forward-thinking organizations to unlock the full potential of their digital transformation initiatives.

GitOps for Kubernetes: The Future of Infrastructure as Code

GitOps automation, Kubernetes, and Infrastructure as Code (IaC) have become pillars of the modern DevOps landscape. Their convergence, often called “GitOps for Kubernetes,” has emerged as a transformative approach to operations. IaC refers to managing and provisioning an organization’s infrastructure through machine-readable definition files. Kubernetes is a container orchestration platform used to deploy and scale containerized applications.

As companies increasingly adopt cloud-native operations, demand for DevOps Consulting Services is growing as they seek reliable and scalable infrastructure management. GitOps represents a paradigm shift: it uses Git repositories as the single source of truth for declarative infrastructure and application definitions. This has reshaped the software deployment process. Tools like Helm and Terraform support GitOps for Kubernetes by streamlining the application delivery lifecycle across development, testing, and production.

Understanding GitOps in the Kubernetes Ecosystem

GitOps has gained wide adoption among DevOps services companies because it applies version control practices to infrastructure management. GitOps treats IaC configurations as code stored in Git repositories. An automated process then continuously synchronizes Kubernetes clusters with the desired state declared there. This replaces the traditional push-based deployment model with a pull-based one, in which an agent continuously monitors the Git repositories and applies their changes.

In terms of workflow, GitOps follows a powerful pattern. Developers make infrastructure changes by committing them to Git, and an automated process validates, tests, and deploys those changes to the Kubernetes clusters.
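The pull-based pattern can be sketched as a small polling loop. The agent runs next to the cluster, asks Git for the latest commit, and acts only when that commit changes. The sketch assumes two hypothetical callables supplied by the caller: `fetch_head_commit()`, which returns the latest commit SHA on the tracked branch, and `apply_manifests(commit)`, which syncs the cluster to that commit. Real tools such as Flux and Argo CD add webhooks, retries, and health checks on top of a loop like this.

```python
import time

def run_agent(fetch_head_commit, apply_manifests, poll_seconds=30.0, max_polls=None):
    """Poll the Git repository and sync the cluster whenever HEAD moves.

    fetch_head_commit() -> str and apply_manifests(commit) are hypothetical
    callables. max_polls bounds the loop for demos and tests (None = forever).
    Returns the list of commits that were applied, in order.
    """
    last_applied = None
    applied = []
    polls = 0
    while max_polls is None or polls < max_polls:
        head = fetch_head_commit()
        if head != last_applied:
            # The desired state changed in Git: pull it into the cluster.
            apply_manifests(head)
            last_applied = head
            applied.append(head)
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(poll_seconds)
    return applied

if __name__ == "__main__":
    # Simulated repository history: the same commit is seen twice, then a new one.
    history = iter(["a1b2c3", "a1b2c3", "d4e5f6"])
    applied = run_agent(lambda: next(history),
                        lambda sha: print(f"syncing cluster to {sha}"),
                        poll_seconds=0.0, max_polls=3)
    print(applied)
```

No credentials for the cluster ever leave it. The agent only needs read access to the repository, which is the security property GitOps relies on.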

The Technical Architecture of GitOps Automation

GitOps automation depends on several key components working together. The starting point is a Git repository containing Helm charts, YAML manifests, and custom configurations that define the application’s infrastructure and desired state. A GitOps tool such as Flux, Argo CD, or Jenkins X then continuously applies that state to the cluster.

Whenever it detects a discrepancy between the cluster and the repository, the GitOps automation system starts a reconciliation process. It fetches the desired state from the repository and brings the cluster back in line with it. As a result, changes made directly to the cluster outside Git can be reverted automatically. This keeps the containerized applications consistent and prevents configuration drift.
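The reconciliation step can be sketched as a diff between the desired state, which Git declares, and the live state, which the cluster reports. Here both are simplified to dictionaries keyed by a resource name. Real controllers compare full Kubernetes objects and call the Kubernetes API, which this sketch does not do.

```python
def plan_reconciliation(desired, live):
    """Compare desired (Git) and live (cluster) state.

    Both arguments map a resource name, e.g. "Deployment/web", to its spec.
    Returns (to_create, to_update, to_delete) as sorted lists of names.
    """
    to_create = sorted(name for name in desired if name not in live)
    to_update = sorted(name for name in desired
                       if name in live and desired[name] != live[name])
    # Resources that exist only in the cluster are drift (e.g. manual kubectl
    # changes); pruning them is an optional setting in real GitOps tools.
    to_delete = sorted(name for name in live if name not in desired)
    return to_create, to_update, to_delete

def reconcile(desired, live):
    """Return the live state after applying the plan (in memory only)."""
    create, update, delete = plan_reconciliation(desired, live)
    new_live = dict(live)
    for name in create + update:
        new_live[name] = desired[name]
    for name in delete:
        del new_live[name]
    return new_live

if __name__ == "__main__":
    desired = {"Deployment/web": {"replicas": 3, "image": "web:1.4"},
               "Service/web": {"port": 80}}
    # Someone scaled the deployment by hand and added an unmanaged ConfigMap.
    live = {"Deployment/web": {"replicas": 5, "image": "web:1.4"},
            "ConfigMap/debug": {"level": "trace"}}
    print(plan_reconciliation(desired, live))
    print(reconcile(desired, live) == desired)
```

Running the same comparison again after reconciling yields an empty plan. That idempotence is what lets the controller loop forever without side effects.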

Modern GitOps implementations also integrate monitoring and observability, providing real-time insight into deployment status, system performance, and application health. These capabilities help DevOps engineers identify and resolve issues quickly. Meanwhile, the Git history maintains an audit trail of every infrastructure change.

Transforming DevOps Practices with GitOps

In recent years, GitOps adoption has marked a significant evolution in DevOps practices, addressing many of the challenges associated with software deployment. DevOps consulting providers like Spiral Mantra recommend GitOps to improve their clients’ deployment reliability and operational efficiency. Because GitOps is declarative, it largely removes the need for complex deployment scripts. It also reduces the risks associated with manual intervention in critical operations.

GitOps also improves collaboration between operations and development teams by making infrastructure management visible. Developers can propose changes through familiar Git workflows such as pull requests, code reviews, and merges. This democratizes infrastructure management while maintaining appropriate security and governance controls.

Combining GitOps automation for Kubernetes with existing CI/CD pipelines creates a comprehensive automation framework that extends from code commit to production deployment. DevOps services companies apply DevOps consulting best practices to build such end-to-end CI/CD pipelines using the latest technologies.

Security and Compliance Advantages

Managing infrastructure and application configurations through Git inherently strengthens the security of the deployment process:

- Traditional push-based deployment methods often require CI systems to hold credentials for production environments, which creates potential security vulnerabilities. In the pull-based GitOps model, the agent runs inside the cluster boundary and pulls changes from external repositories, so cluster access tokens do not need to be shared outside the cluster.
- The Git-based approach provides a comprehensive audit trail that documents every change and the specific modifications it made.
- It makes policy-as-code practices easier to adopt. Security policies are defined declaratively and enforced automatically across all deployments.
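Policy-as-code means expressing rules as code that runs automatically against every manifest before it is applied. Production setups typically use a dedicated engine such as Open Policy Agent/Gatekeeper or Kyverno. The sketch below only illustrates the idea in plain Python. The three rules are examples chosen for illustration, and the manifest is assumed to be already parsed into a dictionary.

```python
def check_deployment(manifest):
    """Return policy violations for a Deployment-like manifest (a dict)."""
    violations = []
    containers = (manifest.get("spec", {})
                  .get("template", {})
                  .get("spec", {})
                  .get("containers", []))
    for c in containers:
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        # Rule 1: pin image versions; ":latest" or a missing tag makes
        # deployments irreproducible. Only the last path segment is checked,
        # so a registry port such as "registry:5000/web" is not a tag.
        if ":" not in image.rsplit("/", 1)[-1] or image.endswith(":latest"):
            violations.append(f"{name}: image '{image}' must use a pinned tag")
        # Rule 2: every container must declare resource limits.
        if not c.get("resources", {}).get("limits"):
            violations.append(f"{name}: missing resource limits")
        # Rule 3: privileged containers are not allowed.
        if c.get("securityContext", {}).get("privileged", False):
            violations.append(f"{name}: privileged mode is not allowed")
    return violations

if __name__ == "__main__":
    manifest = {
        "kind": "Deployment",
        "spec": {"template": {"spec": {"containers": [
            {"name": "web", "image": "registry.example.com/web:latest",
             "securityContext": {"privileged": True}},
        ]}}},
    }
    for violation in check_deployment(manifest):
        print("DENY:", violation)
```

Running such checks in CI on every pull request, and again in the cluster at admission time, gives two layers of the automatic enforcement described above.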

Best Practices and Considerations for Kubernetes

Businesses should start by establishing a clear repository structure that separates application code from infrastructure configurations. This allows each team to manage its own domain while keeping the overall system coherent.

Environment promotion strategies have also become crucial for Kubernetes and IaC implementations. Many companies use a repository-based approach to move changes through and manage different deployment stages. The right strategy depends on organizational structure, security requirements, and deployment pipeline complexity.
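One common repository-based promotion strategy keeps a shared base configuration plus a small overlay per environment, the pattern Kustomize popularized. A release is promoted by copying the tested image tag from one environment's overlay to the next, through a pull request. The sketch below models this with dictionaries. The environment names, keys, and merge rule are illustrative assumptions.

```python
import copy

# Shared base configuration (hypothetical keys).
BASE = {"image": "web", "tag": None, "replicas": 1, "log_level": "info"}

# Hypothetical per-environment overlays, e.g. envs/dev, envs/staging and
# envs/prod directories in the GitOps repository.
OVERLAYS = {
    "dev": {"tag": "1.6.0", "log_level": "debug"},
    "staging": {"tag": "1.5.0", "replicas": 2},
    "prod": {"tag": "1.4.2", "replicas": 6},
}

PROMOTION_ORDER = ["dev", "staging", "prod"]

def render(env, overlays=OVERLAYS):
    """Merge the base config with one environment's overlay (overlay wins)."""
    config = copy.deepcopy(BASE)
    config.update(overlays[env])
    return config

def promote(source, overlays=OVERLAYS):
    """Return new overlays with source's tag copied to the next stage.

    In a GitOps repository this would be a commit or pull request that the
    GitOps agent then picks up, not a direct deployment.
    """
    idx = PROMOTION_ORDER.index(source)
    if idx + 1 >= len(PROMOTION_ORDER):
        raise ValueError(f"{source} is the final stage")
    target = PROMOTION_ORDER[idx + 1]
    updated = copy.deepcopy(overlays)
    updated[target]["tag"] = overlays[source]["tag"]
    return updated

if __name__ == "__main__":
    promoted = promote("staging")
    print(render("prod", promoted))  # prod now runs 1.5.0 with its own 6 replicas
```

Only the tag moves between environments. Environment-specific settings such as replica counts stay in their own overlays, so a promotion diff is a one-line, easily reviewed change.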

Modern solutions integrate with secrets management systems to retrieve sensitive data securely during the deployment phase, so secrets do not have to be stored in Git. Companies like Spiral Mantra have developed approaches that maintain this security while preserving the benefits of Kubernetes automation.
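The underlying pattern is that manifests in Git hold only references to secrets, and the real values are resolved from a secrets manager at deployment time. Tools such as External Secrets Operator implement variations of this. The sketch below is a simplified illustration under stated assumptions. The `${secret:NAME}` placeholder syntax is hypothetical, and a dictionary lookup stands in for a real secrets manager.

```python
import re

# Hypothetical placeholder syntax: ${secret:NAME}
PLACEHOLDER = re.compile(r"\$\{secret:([A-Za-z0-9_.-]+)\}")

class MissingSecretError(KeyError):
    """Raised when a manifest references a secret the store doesn't have."""

def resolve_secrets(text, lookup):
    """Replace every ${secret:NAME} in text with lookup(NAME).

    lookup is any callable returning the secret value or None; in practice it
    would query a secrets manager rather than anything stored in Git.
    """
    def substitute(match):
        name = match.group(1)
        value = lookup(name)
        if value is None:
            raise MissingSecretError(name)
        return value
    return PLACEHOLDER.sub(substitute, text)

if __name__ == "__main__":
    manifest = "env:\n  - name: DB_PASSWORD\n    value: ${secret:db-password}\n"
    fake_store = {"db-password": "s3cr3t"}  # stand-in for a real secrets manager
    rendered = resolve_secrets(manifest, fake_store.get)
    # The rendered text is applied to the cluster but never committed to Git.
    print("${secret:" not in rendered)
```

Failing loudly on a missing secret is deliberate. A deployment with an empty password is usually worse than a deployment that never starts.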

The Future Landscape of Infrastructure as Code

Kubernetes and Infrastructure as Code represent more than a deployment methodology. Together they exemplify automated infrastructure management aligned with cloud-native principles. With advanced capabilities such as multi-cluster management and progressive delivery, IaC practices are being adopted by more and more companies.

Incorporating ML and AI into infrastructure-as-code workflows promises further gains: additional automation, predictive deployment strategies, and automated performance optimization that detects and resolves issues in time.

Hi all, we’re a team working in mobile app development services, and we’ve been noticing some interesting shifts in client expectations lately, especially around timelines, tech stacks, and post-launch support.

I’m curious to hear from others here—either as developers or clients:

What do you prioritize when choosing a development partner or building an app?

Are you leaning more toward cross-platform solutions like Flutter/React Native or still going native for iOS/Android?

How important is scalability or backend integration in your recent projects?

Would love to exchange insights and see how others are navigating this fast-moving space.

Top Use Cases of AI/ML in Modern DevOps Pipelines

The integration of AI/ML into DevOps workflows represents a transformative approach to modern software development and operations. This convergence is often discussed under the labels “AIOps” and “MLOps.” It is reshaping how teams build, deploy, and maintain applications by adding predictive capabilities, automating tasks, and optimizing resource allocation, including within Azure DevOps.

The cloud consulting team at Spiral Mantra uses AI/ML technologies to address the challenges and complexity of modern software systems. By incorporating intelligent algorithms into CI/CD pipelines, we have helped our clients reach new levels of efficiency. AI in DevOps workflows integrates closely with machine learning solutions, allowing teams to build intelligent automation into their software development lifecycle.

The Role of AI ML in DevOps

The application of AI in DevOps consulting goes beyond simple automation: ML models can analyze patterns in available historical data and predict potential failures. This predictive capability also helps optimize adaptive testing strategies and prioritize work items intelligently. Above all, it lets businesses and teams shift from reactive to proactive operations, addressing problems before they affect end users.

AI/ML has already transformed, and continues to transform, several key areas of DevOps:

Automated code review and quality assurance

Intelligent test selection and execution

Predictive infrastructure scaling

Anomaly detection and automated incident response

Release risk assessment and deployment optimization
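As an illustration of intelligent test selection, the sketch below picks only the tests whose covered files intersect a change set. It then orders them by historical failure rate so that likely failures surface first. The coverage map and failure history are hypothetical inputs. ML-based tools learn richer signals, but the select-then-prioritize idea is the same.

```python
def select_tests(changed_files, coverage, failure_history):
    """Return tests affected by a change, most failure-prone first.

    changed_files: iterable of file paths touched by the commit.
    coverage: dict test_name -> set of source files the test exercises.
    failure_history: dict test_name -> (failures, runs) from past CI runs.
    """
    changed = set(changed_files)
    affected = [t for t, files in coverage.items() if files & changed]

    def failure_rate(test):
        failures, runs = failure_history.get(test, (0, 0))
        # Laplace smoothing: a brand-new test (0 runs) gets a rate of 0.5,
        # so it is ranked ahead of tests with a long record of passing.
        return (failures + 1) / (runs + 2)

    # Highest failure rate first; test name breaks ties deterministically.
    return sorted(affected, key=lambda t: (-failure_rate(t), t))

if __name__ == "__main__":
    coverage = {
        "test_login": {"auth.py", "session.py"},
        "test_checkout": {"cart.py", "payment.py"},
        "test_profile": {"auth.py", "profile.py"},
    }
    history = {"test_login": (1, 100), "test_profile": (12, 100)}
    print(select_tests(["auth.py"], coverage, history))  # ['test_profile', 'test_login']
```

Skipping `test_checkout` entirely is where the time savings come from. The ordering adds fast feedback, because the test most likely to break is reported first.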

At Spiral Mantra, our certified Azure DevOps team provides a comprehensive ecosystem in which these AI-powered capabilities can be integrated seamlessly. This ranges from Azure Pipelines enhanced with ML-based optimization to intelligent classification of work items in Azure Boards. The platform serves as an ideal foundation for AI-driven cloud strategies, including on GCP and AWS.

Get the Most Out of Real-Time Monitoring

Artificial intelligence excels at spotting patterns, which makes it well suited to real-time monitoring. GCP and AWS DevOps engineers can apply AI algorithms to monitor deployments and report anomalies as soon as they are detected or forecast. This keeps professionals alert whenever issues arise and helps them catch and prevent small problems early. AI is also useful for scrutinizing resource allocation, helping engineers scale their automation.
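A simple baseline for this kind of anomaly detection is a rolling z-score. Each new metric sample is compared with the mean and standard deviation of a recent window, and samples that deviate too far are flagged. The window size, threshold, and minimum history below are illustrative assumptions. Production AIOps tools typically use more sophisticated, seasonality-aware models.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(samples, window=20, threshold=3.0, min_samples=5):
    """Yield (index, value, z_score) for samples that deviate strongly from
    the rolling window of preceding samples."""
    recent = deque(maxlen=window)
    for i, value in enumerate(samples):
        # Wait for enough history so early noise isn't flagged
        # (stdev needs at least 2 points).
        if len(recent) >= max(min_samples, 2):
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0:
                z = (value - mu) / sigma
                if abs(z) > threshold:
                    yield i, value, round(z, 1)
        # In this simple version, anomalous samples also enter the window.
        recent.append(value)

if __name__ == "__main__":
    # Hypothetical p95 latency in ms, one sample per minute; spike at the end.
    latency = [120, 118, 125, 122, 119, 121, 124, 117, 123, 120, 410]
    for idx, value, z in detect_anomalies(latency, window=10):
        print(f"minute {idx}: {value} ms (z={z}) -> raise alert")
```

The `min_samples` guard matters. With only two or three points of history, ordinary jitter can produce z-scores above 3 and trigger false alerts.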

Helping Developers Write Better Code

AI is becoming more prominent every day, helping developers refine their existing code. LLMs have become a handy tool for analyzing code, pointing out errors, and suggesting replacements. They can also interpret natural-language prompts and suggest matching code, cutting out much of the trial and error.

AI-Driven Automation in Routine Tasks

AI and machine learning have already taken the tech industry by storm. They automate repetitive tasks such as testing, code deployment, and monitoring, so that teams can focus on strategic work. ML algorithms can learn from past processes to improve accuracy and efficiency over time. The benefits include consistency, speed, and scalability: fewer errors, and the ability to grow projects and scale operations without adding headcount.

Automate Incident Management

AI/ML in DevOps services streamlines incident management. It detects anomalies automatically and helps the Azure DevOps team classify incidents and trigger responses on time. Capabilities include automated resolution workflows, automated alerts, and the rapid application of known fixes.

The benefits include:

- Faster resolution, reflected in a lower mean time to resolution (MTTR) thanks to swift identification and response.
- Improved accuracy, since continuous learning sharpens incident classification and improves responses over time.
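MTTR is the average time from detection to resolution across incidents. That makes it straightforward to track before and after AI-assisted triage is introduced. The sketch below computes it from incident timestamps. The incident records are made-up examples, not real data.

```python
from datetime import datetime, timedelta

def mean_time_to_resolve(incidents):
    """Average of (resolved - detected) over all incidents, as a timedelta."""
    if not incidents:
        raise ValueError("no incidents")
    total = sum((i["resolved"] - i["detected"] for i in incidents), timedelta())
    return total / len(incidents)

if __name__ == "__main__":
    fmt = "%Y-%m-%d %H:%M"
    incidents = [  # illustrative records only
        {"detected": datetime.strptime("2025-03-01 10:00", fmt),
         "resolved": datetime.strptime("2025-03-01 10:45", fmt)},  # 45 min
        {"detected": datetime.strptime("2025-03-04 22:10", fmt),
         "resolved": datetime.strptime("2025-03-05 00:25", fmt)},  # 135 min
        {"detected": datetime.strptime("2025-03-09 08:30", fmt),
         "resolved": datetime.strptime("2025-03-09 08:45", fmt)},  # 15 min
    ]
    print(mean_time_to_resolve(incidents))  # 1:05:00
```

Since a single long outage can dominate the mean, teams often report the median resolution time alongside MTTR.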

Why Spiral Mantra Believes in Practical AI ML Solutions in DevOps Consulting

Here at Spiral Mantra, we’ve implemented AI-assisted workflows for clients across industries ranging from finance and healthcare to retail and manufacturing, freeing our cloud developers to focus on:

Deploying AI-powered features that reduce manual overhead and complexity.

Aligning each ML use case with real business goals to achieve faster resolution and stronger collaboration.

Conclusion

Because AI/ML is so versatile, knowing exactly where to start is a challenge for many companies. As organizations race to adopt AI in DevOps practices, truly intelligent software delivery pipelines are emerging. They do more than automate routine tasks: they continuously improve the operational experience. This guide has highlighted that transformation and offered practical insights for bringing AI solutions into your cloud workflows.

Top Trends in 2025: AI, Cloud, and Beyond

Big data engineering is the new normal for every organization. According to Exploding Topics, about 402.74 million terabytes of data are generated every day, pushing companies to keep pace with the latest tools and trends. In 2025, much will revolve around cloud data engineering, AI, big data technologies, and machine learning. Multi-cloud strategies, hybrid cloud solutions, serverless computing, and generative AI may sound like buzzwords today, but they will shape the competitive IT landscape.

This article skips the hype and goes straight to the cloud data engineering and big data engineering trends for 2025 that should prompt CIOs, CTOs, and IT leaders to rethink their approach to digital transformation.

Did You Know?

Businesses lose an estimated $3.1 trillion annually due to poor data quality. In 2024, a single data breach cost organizations $4.88 million on average. Losses like these can be painful for any business. Spiral Mantra helps by offering hybrid cloud solutions combined with leading ML techniques to forecast and improve productivity. Reach out to our machine learning experts to discuss your next move in the market.

Advanced Analytics

With tools like Azure Synapse Analytics, predictive and prescriptive analytics will increasingly drive decision-making, turning raw information into strategic insights.

Why it matters: Companies that use advanced analytics tend to outperform their competitors because they can identify opportunities earlier and mitigate risks sooner.

Technical insight: Azure Machine Learning allows you to build predictive models that integrate with Synapse Analytics for real-time analytics.

Example: An insurance company was able to reduce fraudulent claims by 42% by enabling predictive analytics with Azure Synapse and ML algorithms.

Graph Databases for Complex Relationships

Graph databases, such as Azure Cosmos DB through its Gremlin API, play a key role in analyzing connected datasets. This is increasingly important for fraud detection, recommendation systems, and social network research.

Why it matters: Relational databases struggle with deeply connected data, because every additional hop in a relationship requires another join. A graph database is often the better fit for such scenarios.

Example: Azure Cosmos DB graph queries improved a social network’s user recommendation engine by 50%.
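To show why graph models suit recommendations, here is a friends-of-friends suggestion computed over an adjacency list in plain Python. Candidates are people two hops away, ranked by how many mutual connections they share. A graph database runs this kind of multi-hop traversal natively, whereas a relational schema needs a self-join per hop. The data and function name are hypothetical, and this is not a Cosmos DB query.

```python
from collections import Counter

def recommend_friends(graph, user, limit=3):
    """Suggest users two hops away, ranked by number of mutual friends.

    graph: dict user -> set of directly connected users (undirected).
    Returns a list of (candidate, mutual_friend_count) pairs.
    """
    direct = graph.get(user, set())
    mutual_counts = Counter()
    for friend in direct:
        for candidate in graph.get(friend, set()):
            if candidate != user and candidate not in direct:
                mutual_counts[candidate] += 1
    # Sort by mutual-friend count (descending), then by name for a stable order.
    ranked = sorted(mutual_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:limit]

if __name__ == "__main__":
    social = {
        "ana": {"ben", "caro"},
        "ben": {"ana", "dev", "eli"},
        "caro": {"ana", "dev"},
        "dev": {"ben", "caro"},
        "eli": {"ben"},
    }
    print(recommend_friends(social, "ana"))  # [('dev', 2), ('eli', 1)]
```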

Data Fabric Architecture

Among data engineering trends, data fabric architecture provides seamless access to distributed data, speeding up integration and analytics in hybrid environments.

Why it matters: Breaking down data silos gives companies more flexibility and speed in implementing their data strategies.

Technical insight: Consolidate data management with Azure Purview, and use Azure Synapse Link for near real-time analytics on operational data.

Example: A retail giant reduced data integration time by 60% after implementing a data fabric model with Azure tools.

Trends in AI Solutions

Generative AI

Azure OpenAI Service and other generative AI tools have led many industries to automate content generation and create more engaging customer experiences with AI solutions.

Why it matters: It saves a lot of time and allows companies to scale their content strategy.

Technical insight: Integrating generative AI model APIs into a CRM system can help generate automatic responses and customized marketing materials.

Example: One marketing company increased campaign throughput by 45% by automating content creation with Azure OpenAI.

Explainable AI

Among machine learning trends, explainable AI (XAI) plays a key role in trust-dependent industries like healthcare and finance, where transparent decision-making is required.

Why it matters: Regulatory compliance and user trust depend on understanding how AI models reach their conclusions.

Technical insight: Use Azure Machine Learning Interpretability to understand how your models behave and whether they comply with ethical guidelines.

Example: After deploying the latest AI models to support clinical decision-making, a healthcare organization saw a 22% increase in diagnostic accuracy.
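Permutation importance is one widely used, model-agnostic explainability technique. You shuffle one feature's values and measure how much the model's accuracy drops. The bigger the drop, the more the model relies on that feature. The sketch below implements it in plain Python for any `predict` function. It illustrates the technique only. It is not the Azure Machine Learning interpretability API, and the toy model and data are hypothetical.

```python
import random

def accuracy(predict, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(predict, rows, labels, n_features, repeats=10, seed=0):
    """Return the mean accuracy drop per feature when that feature is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

if __name__ == "__main__":
    # Hypothetical model: flags a claim when feature 0 (a risk score) is low;
    # feature 1 is present in the data but ignored by the model.
    def predict(row):
        return row[0] < 0.5

    data_rng = random.Random(42)
    rows = [[data_rng.random(), data_rng.randint(0, 1)] for _ in range(200)]
    labels = [predict(r) for r in rows]
    print(permutation_importance(predict, rows, labels, n_features=2))
```

Feature 1 scores exactly zero because the model never reads it. Surfacing that kind of fact is what makes a model auditable for regulators and users.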

Conclusion

In the technology world of 2025, it’s no longer enough to keep up with information; you have to stay ahead of it. Organizations differ in how they respond to new machine learning trends, balancing innovation against cost reduction in a dynamic marketplace. Each of the sections above offers actionable insights, combined with Microsoft technologies like Azure, to help you adjust your strategy and make informed decisions.

Why You Need DevOps Consulting for Kubernetes Scaling

In today’s fast-moving technology landscape, scaling Kubernetes clusters has become a challenge for many organizations. The more companies move toward containerized applications, the harder it gets to scale multiple Kubernetes clusters. In this article, you’ll learn about the main challenges and the best practices for scaling Kubernetes deployments successfully with expert guidance.

Kubernetes (K8s), the open-source platform for deploying and managing applications, is now the norm in containerized environments. As businesses adopt DevOps services in the USA for their flexibility and scalability, managing Kubernetes clusters at scale has become a fundamental part of operations.

Understanding Kubernetes Clusters

Before turning to the challenges and best practices, let’s start with what Kubernetes clusters are and why they matter for modern app deployments. A Kubernetes cluster is a set of nodes (physical or virtual machines) that run containerized applications under the management of a shared control plane. K8s clusters are highly scalable and dynamic, which makes them ideal for large applications accessed from multiple locations.

The Growing Complexity Organizations Must Address

Kubernetes is becoming the default container orchestration solution for many companies, but the complexity lies in scaling it: keeping many clusters in working order is hard. Kubernetes developers face problems with consistency, security, and performance. The most common challenges are listed below.

Key Challenges in Managing Large-Scale K8s Deployments

Configuration Management: Configuring many different Kubernetes clusters can be a nightmare. Enterprises need uniform policies, security settings, and resource allocations, while keeping the flexibility to support unique workloads.

Resource Optimization: DevOps consulting services routinely emphasize distributing resources properly to avoid overprovisioning while keeping applications running smoothly.

Security and Compliance: Securing distributed Kubernetes clusters requires solid policies and monitoring. Companies have to apply standard security controls while meeting different compliance standards.

Monitoring and Observability: You’ll need advanced monitoring solutions to track the health and performance of many clusters. DevOps services in the USA focus on complete observability tooling for efficient cluster management.

Best Practices for Scaling Kubernetes

Implement Infrastructure as Code (IaC)

Apply GitOps processes to configuration.

Use version control for all cluster settings.

Automate cluster creation and administration.

Adopt Multi-Cluster Management Tools

Modern organizations should:

Use dedicated software for multi-cluster management.

Utilize centralized control planes.

Optimize CI CD Pipelines

Kubernetes is well suited to automating CI/CD pipelines, but the pipelines themselves need to be optimized. Two techniques avoid pushing changes to the whole system at once. Canary releases roll out an update gradually to a small share of users. Blue-green deployments switch traffic over to a fully tested new environment. Both reduce downtime and ensure that only stable releases reach production.
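A canary release routes a small share of traffic to the new version and compares its health with the stable version. It then either widens the rollout step by step or rolls back. Controllers such as Argo Rollouts or Flagger automate this on Kubernetes. The sketch below shows only the decision logic. The step weights and error-rate tolerance are hypothetical values chosen for illustration.

```python
# Hypothetical rollout plan: percentage of traffic sent to the canary per step.
CANARY_STEPS = [5, 25, 50, 100]
# Assumed tolerance: the canary may be at most 1 point worse than stable.
MAX_ERROR_RATE_INCREASE = 0.01

def next_canary_weight(current_weight, canary_error_rate, stable_error_rate):
    """Return the next traffic weight for the canary, or 0 to roll back."""
    if canary_error_rate - stable_error_rate > MAX_ERROR_RATE_INCREASE:
        return 0  # unhealthy: send all traffic back to the stable version
    for step in CANARY_STEPS:
        if step > current_weight:
            return step
    return 100  # already fully promoted

if __name__ == "__main__":
    weight = 0  # rollout has not started yet
    # Simulated (canary, stable) error rates observed after each step.
    observations = [(0.002, 0.003), (0.004, 0.003), (0.003, 0.003), (0.002, 0.003)]
    for canary_err, stable_err in observations:
        weight = next_canary_weight(weight, canary_err, stable_err)
        print(f"canary weight -> {weight}%")
        if weight in (0, 100):
            break
```

Comparing against the stable version's live error rate, rather than a fixed number, keeps the gate meaningful even when overall traffic is having a bad day.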

Containerization with Kubernetes also enables faster, more reliable builds, since developers can build and test applications in isolated environments. These build environments should be tightly integrated with the Kubernetes clusters so that updates flow through properly.

Establish Standardization

When you hire DevOps developers, always make sure they:

Create standardized templates.

Implement consistent naming conventions.

Develop reusable deployment patterns.

Optimize Resource Management

Effective resource management includes:

Implementing auto-scaling policies.

Setting quotas and limits on resource allocations.

Using the Cluster Autoscaler for node management.
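The Kubernetes Horizontal Pod Autoscaler documentation gives its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance of 1.0, which is 0.1 by default. The sketch below reproduces that arithmetic so you can sanity-check autoscaling policies before applying them. It omits HPA details such as stabilization windows and multiple metrics, and the min/max bounds are hypothetical defaults.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count given by the HPA formula, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))

if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> ratio 1.5 -> 6 pods.
    print(desired_replicas(4, 90, 60))  # 6
```

The tolerance band prevents flapping: small fluctuations around the target never trigger a scale event.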

Enhance Security Measures

Security best practices involve:

Role-based access control (RBAC): restrict what each user can do based on their role.

Network policy isolation: use network policies to restrict traffic between pods and namespaces.

Updates and security audits: run security audits and apply upgrades on an ongoing basis.
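Kubernetes RBAC grants permissions as combinations of API group, resource, and verb in a Role. A RoleBinding then attaches that Role to users or groups within a namespace. The plain-Python sketch below mirrors that model to show how a request is allowed or denied. It is not the Kubernetes API. It ignores API groups and cluster-wide roles, and the roles and users are hypothetical.

```python
# Roles map to allowed (resource, verb) pairs, loosely mirroring a Role's rules.
ROLES = {
    "viewer": {("pods", "get"), ("pods", "list"), ("deployments", "get")},
    "deployer": {("deployments", "get"), ("deployments", "update"),
                 ("deployments", "patch")},
}

# Bindings map (namespace, user) to role names, like namespaced RoleBindings.
BINDINGS = {
    ("payments", "alice"): {"deployer"},
    ("payments", "bob"): {"viewer"},
}

def is_allowed(user, namespace, resource, verb):
    """Deny by default: allow only if some bound role grants (resource, verb)."""
    roles = BINDINGS.get((namespace, user), set())
    return any((resource, verb) in ROLES.get(role, set()) for role in roles)

if __name__ == "__main__":
    print(is_allowed("alice", "payments", "deployments", "patch"))  # True
    print(is_allowed("bob", "payments", "deployments", "patch"))    # False
    print(is_allowed("alice", "billing", "deployments", "patch"))   # False
```

The deny-by-default rule is the important design property. Anything not explicitly granted is refused, which is why RBAC audits focus on what the bindings grant rather than on what they omit.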

Leverage DevOps Services and Expertise

Hire dedicated DevOps developers or take advantage of DevOps consulting services like Spiral Mantra to get a full range of services under one roof. The company has a team of experts who have set up, operated, and optimized Kubernetes at enterprise scale. By employing DevOps developers or DevOps services in the USA, organizations can be confident that they are prepared to address Kubernetes issues efficiently. DevOps consultants can also help automate and standardize K8s operations within existing workflows and processes.

Spiral Mantra DevOps Consulting Services

Spiral Mantra is a DevOps consulting company in the USA specializing in Azure, Google Cloud Platform, and AWS. Our CI/CD integration experts build automated deployment pipelines, and our Kubernetes developers handle containerization and automated container orchestration. We offer everything from the initial evaluation through deployment and management, with skilled experts making sure your organization achieves the best performance.

Frequently Asked Questions (FAQs)

Q. How can businesses manage security on different K8s clusters?

Businesses can secure their clusters by conducting annual security audits, using security scanners, and enforcing network policies. With the right DevOps consulting services, you can develop and establish robust security plans.

Q. What role does DevOps play in Kubernetes management?

For Kubernetes management, it is important to implement DevOps practices like automation, infrastructure as code, continuous integration and deployment, security, compliance, etc.

Q. What are the major challenges developers face when managing clusters at scale?

Security concerns, resource management, and overall complexity are the most common challenges. CI/CD pipeline management is another major source of complexity for developers.

Conclusion

Scaling Kubernetes clusters takes an integrated strategy with the right tools, methods, and knowledge. Organizations should make automation, standardization, and security their main objectives, and professional DevOps consulting services can help them get the most out of their K8s implementations. By following these best practices and partnering with skilled Kubernetes developers, companies can run containerized applications efficiently and reliably at scale.

Is Your Business Ready for DevOps?

This is exactly the question many companies have been asking, and Google is flooded with mixed answers promoting different companies’ skills and professionalism. With 2025 focused on digital transformation, businesses are turning to DevOps tools and technologies to stay competitive.

Why Businesses Need DevOps Consulting Services

Finding the best DevOps services company in the USA can be tricky given the large pool of talent. However, with the right direction, adopting DevOps practices can significantly:

Improve collaboration between teams

Enhance software development processes

Boost overall operational efficiency

Key Services Offered by Azure DevOps Consultants

Transitioning to a cloud like AWS, Azure, or GCP requires expertise and the right skill set, so outsourcing DevOps consulting services in the USA to professionals like Spiral Mantra gives you hands-on access to the right tools, expertise, and culture.

Additionally, top providers of Azure DevOps consulting services offer a wide spectrum of services, including automation, CI/CD pipeline build and integration, cloud infrastructure management, and performance monitoring. These professionals can also help integrate DevOps into your existing workflows, ensuring effective team collaboration.

How to improve software development with Azure DevOps consulting services?

With the advancement of technology, Azure DevOps consulting services have taken the internet by storm. Because the service provides end-to-end automation and improves development efficiency with tools like Azure Pipelines, Repos, and Artifacts, businesses can achieve smooth CI/CD workflows. Consultants like us help implement cloud-based infrastructure, automate deployments, and improve security, ensuring rapid software releases with minimal downtime across optimized cloud and on-premises environments.

Leveraging AI and Automation in Azure DevOps Consulting for Smarter Workflows

Azure DevOps consulting is evolving with the integration of Artificial Intelligence (AI) and automation, enabling organizations to optimize their CI/CD pipelines and enhance predictive analytics. Spiral Mantra, your strategic DevOps consultant in the USA, helps businesses harness AI-powered DevOps tools, ensuring seamless deployment and increased efficiency across software development lifecycles. Backed by certified experts, we help businesses stay ahead by adopting AI-driven Azure DevOps consulting services for smarter, faster, and more reliable software delivery.

Hire DevOps Engineers for CI/CD & Cloud Automation

Looking to hire DevOps engineers for cloud automation? Reach out to professionals like Spiral Mantra, who offer services such as DevOps pipeline management, DevOps configuration management, and managed DevOps services for enterprises. Whether you need AWS DevOps consulting or Azure DevOps consulting services, we provide tailored solutions to improve the efficiency of your DevOps tools and platforms.

A Comprehensive Guide to Deploy Azure Kubernetes Service with Azure Pipelines

In the continuously evolving landscape of cloud-native technologies, Azure Kubernetes Service (AKS) offers a powerful orchestration solution for containerized applications. Pair it with Azure Pipelines for consistent CI/CD workflows that help accelerate the DevOps process. This guide dives into deploying to Azure Kubernetes Service with Azure Pipelines and shares tips that will help engineers build container deployments that work. We also discuss how DevOps consulting services can help you automate this process.

Understanding the Foundations

Nowadays, Kubernetes is the preferred tool for deploying and running containerized apps in today's high-speed software development environment. AKS builds on it to provide high-performance scaling, monitoring, and orchestration of containerized workloads. However, before anything else, let's dive into the fundamentals.

Azure Kubernetes Service: A managed Kubernetes platform that simplifies container orchestration. It takes on the hassle of Kubernetes cluster management so that developers can focus on building applications instead of infrastructure. By leveraging AKS, organizations can:

Deploy and scale containerized applications on demand

Implement robust infrastructure management

Reduce operational overhead

Ensure high availability and fault tolerance

Azure Pipelines: The CI/CD Backbone

Azure Pipelines automates code building, testing, and deployment. Combined with Azure Kubernetes Service, it helps teams build high-end deployment pipelines in line with the modern DevOps mindset. Azure Pipelines integrates easily with repositories (GitHub, Azure Repos, etc.) and automates application builds and deployments.

Spiral Mantra DevOps Consulting Services

So, if you're a beginner in DevOps or want to scale your organization's capabilities, DevOps consulting services by Spiral Mantra can be a game changer. Our skilled professionals can help businesses implement CI/CD pipelines and provide guidance on containerization and cloud-native development.

Now let’s move on to creating a deployment pipeline for Azure Kubernetes Service.

Prerequisites You Will Need

Before starting, make sure you meet the following prerequisites:

Azure Subscription: To run an AKS cluster, you need an Azure subscription. Create one if you don't already have one.

Azure CLI: The Azure CLI lets you administer resources such as AKS clusters from the command line.

A Professional Team: You will need a team with the technical knowledge to set up the pipeline. Hire DevOps developers from us if you don't have one yet.

Kubernetes Cluster: Deploy an AKS cluster with the Azure Portal or an ARM template. This is the cluster your pipeline will deploy to.

Docker: Since you're deploying containers, you need Docker installed locally to build container images and push them to a registry.

Step-by-Step Deployment Process

Step 1: Begin with Creating an AKS Cluster

Begin by setting up an AKS cluster with the Azure CLI or the Azure Portal. Once that is done, containerize your application: create a Dockerfile that specifies your application's runtime environment. This step ensures that the same code runs consistently across different environments.

Step 2: Setting Up Your Pipelines

You can set up pipelines either in a new project or in an existing one, as described below.

Create a New Project

Begin by signing in to your Azure DevOps account and selecting the drop-down icon on the start screen.

Next, click Create New Project, or select an existing project to use instead.

In the final step, add all the required repositories (you can select them either from GitHub or from Azure Repos) containing your application code.

For an Existing Project

From your existing project, go to Pipelines and then select Create Pipeline.

From the next available screen, select the repository containing the code of the application.

Next, choose either an existing YAML pipeline file or the starter pipeline template. (Note: YAML pipelines are flexible and are recommended for advanced workflows.)

Finally, define the pipeline configuration by editing your YAML file in Azure DevOps.

Step 3: Set Up Your Automatic Continuous Deployment (CD)

Next, automate the deployment process to speed up your CI/CD workflows. The easiest and most common approach is to create a manifest file, such as deployment.yaml, that defines the main Kubernetes resources: deployments, the pods they manage, and services.

Once the manifest is in place, the pipeline triggers the Kubernetes deployment automatically whenever code is pushed.
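To make the manifest concrete, here is a minimal sketch, assuming a single stateless web app. It builds a Kubernetes Deployment (whose pod template tells Kubernetes how to create the pods) and a Service that routes traffic to those pods, then prints both as one JSON `List`, since `kubectl apply -f` accepts JSON as well as YAML. The app name, the image path `myregistry.azurecr.io/myapp:latest`, the replica count, and the ports are hypothetical placeholders; Python is only used here to show the structure a deployment.yaml would contain.

```python
import json


def build_manifests(app: str, image: str, replicas: int = 2, port: int = 80) -> list[dict]:
    """Return a Deployment and a Service manifest for one containerized app."""
    labels = {"app": app}  # shared label ties the Service to the Deployment's pods
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {  # pod template: Kubernetes creates each replica's pod from this
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": app, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            "type": "LoadBalancer",  # on AKS this provisions an Azure load balancer
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


if __name__ == "__main__":
    items = build_manifests("myapp", "myregistry.azurecr.io/myapp:latest")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

You could redirect the output to a file (for example, a hypothetical deployment.json) and apply it with `kubectl apply -f deployment.json`, or write the same structure by hand in YAML.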

Step 4: Automate the CI/CD Workflow

In the final step, make sure the pipeline runs smoothly every time new code is pushed. With proper CI/CD integration, each push triggers continuous building, testing, and deployment, keeping applications up to date in every AKS environment.

Best Practices for AKS and Azure Pipelines Integration

1. Infrastructure as Code (IaC)

- Utilize Terraform or Azure Resource Manager templates

- Version control infrastructure configurations

- Ensure consistent and reproducible deployments

2. Security Considerations

- Implement container scanning

- Use private container registries

- Regular security patch management

- Network policy configuration

3. Performance Optimization

- Implement horizontal pod autoscaling

- Configure resource quotas

- Use node pool strategies

- Optimize container image sizes
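Horizontal pod autoscaling follows a simple proportional rule. The Kubernetes documentation gives the core HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no scaling while the ratio stays within a tolerance (0.1 by default). The sketch below applies that rule and clamps the result to the `minReplicas`/`maxReplicas` bounds; it is a simplification that ignores details the real controller handles, such as stabilization windows and pods that are not ready yet, and the default bounds chosen here are arbitrary.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: scale in proportion to current/target metric, within bounds."""
    ratio = current_metric / target_metric
    if abs(1.0 - ratio) <= tolerance:
        proposed = current_replicas  # close enough to target: leave the replica count alone
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Respect the minReplicas/maxReplicas bounds from the HPA spec.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```

For example, 3 replicas averaging 90% CPU against a 60% target scale to ceil(3 × 1.5) = 5, while a reading of 63% stays within the tolerance and leaves the count at 3.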

Common Challenges and Solutions

Network Complexity

Utilize Azure CNI for advanced networking

Implement network policies

Configure a service mesh for complex microservices

Persistent Storage

Use Azure Disks or Azure Files

Configure persistent volume claims

Implement storage classes for dynamic provisioning

Conclusion

Deploying to Azure Kubernetes Service through effective pipelines provides a clear, repeatable path to application delivery. By embracing these practices, DevOps consulting companies like Spiral Mantra offer transformative solutions that foster agile and scalable approaches. Our expert DevOps consulting services modernize technological infrastructure with comprehensive cloud strategies, Kubernetes containerization, and advanced CI/CD integration.

Let’s connect and talk about your cloud migration needs

Implementing DevOps Practices in Azure, AWS, and Google Cloud

Nowadays, in the fast-paced digital world, companies are using DevOps techniques to automate their software development and release activities. Spiral Mantra is one of the best providers of DevOps services in the USA, and we help enterprises build effective solutions on all the leading cloud platforms: Microsoft Azure, Google Cloud Platform, and AWS. This guide outlines how to migrate to and use these platforms and the benefits each one brings.

Looking at the numbers, the utility computing industry is expected to surge from $6.78 billion to $14.97 billion by the end of 2026. Each provider's services differ:

CodeDeploy and CodePipeline from AWS make it easier and faster to release software.

Azure DevOps Services reduce team overhead and provide detailed reports that support predictive decision-making.

GCP's Cloud Build and its integration with Google Kubernetes Engine help DevOps teams handle large computing workloads professionally.

Master Cloud Computing (Azure, AWS, GCP) with DevOps

Combining DevOps methodology with cloud technologies has changed how companies develop and deliver software. Every major cloud vendor offers specific tools and services for operational workflows, so DevOps engineers should know what each one can and can't do.

Microsoft Azure DevOps

Azure DevOps provides services that enable organizations to run end-to-end DevOps practices effectively. Key features include:

1. Azure Pipelines

Automated CI/CD pipeline creation

Multiple languages and platform compatibility

Compatibility with GitHub and Azure Repos

2. Azure Boards

Agile planning and project management

Work item tracking

Sprint planning capabilities

3. Azure Artifacts

Package management

Compatibility with popular package formats

Secure artifact storage

We are a leading DevOps company and consulting firm that can help startups and enterprises take advantage of these features to create scalable and agile cloud processes that serve their business goals.

AWS DevOps Consulting Solutions

Amazon Web Services is a top-tier cloud provider with a complete DevOps toolkit that enables organizations to automate their workflows through the following services:

AWS CodePipeline: A fully managed continuous delivery service that automates the build, test, and deploy phases of your release process.

AWS CodeBuild: A fully managed, scalable build service that compiles source code, runs tests, and produces deployable packages.

AWS CodeDeploy: Automates code deployments to compute targets such as EC2 instances, on-premises servers, and Lambda functions.

Infrastructure as Code (IaC): With AWS CloudFormation, you can create and provision infrastructure by using templates, making it repeatable and version-controlled.

Spiral Mantra's AWS DevOps consulting experts help businesses implement these solutions wisely, making full use of AWS capabilities.

Google Cloud Platform DevOps

Google Cloud Platform takes a modern approach to DevOps by offering integrated services and tools. Its orchestration capabilities are claimed to reduce operational overhead by around 75%.

Google Cloud Platform offers a wide range of cloud tools with many benefits:

Cloud Deployment Manager: An infrastructure-as-code service that creates and manages Google Cloud resources from declarative templates.

Stackdriver (now part of Google Cloud's operations suite): Integrated with GKE, it provides monitoring, logging, and diagnostics, giving you a comprehensive application performance dashboard.

Cloud Pub/Sub: Supports asynchronous messaging, which is critical for scaling and decoupling in microservices architectures.

Key GCP DevOps services include:

1. Cloud Build

Serverless CI/CD platform

Container and application building

Integration with popular repositories

2. Cloud Deploy

Managed continuous delivery service

Progressive delivery support

Environment management

3. Container Registry

Secure container storage

Vulnerability scanning

Integration with CI/CD pipelines

Cloud-Native DevOps: Making The Most of Utility Computing Services

Cloud-native DevOps is all about using cloud services to automate, scale, and optimize your operations. You can take advantage of AWS, Azure, and Google Cloud cloud-native services in the following ways:

Automated Serverless Architectures: Serverless computing, available on all three major platforms (AWS Lambda, Azure Functions, and Google Cloud Functions), lets you execute code without provisioning or managing servers. This enables autoscaling, high availability, and low operational overhead.

Event-Driven Workflows: Create custom workflows that respond to real-time events by using cloud services like AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows. These can automate pipelines, CI/CD, data processing, and more.

Container Orchestration: Implement container orchestration tools such as Amazon EKS, Azure Kubernetes Service (AKS), or Google Kubernetes Engine (GKE) to scale and automate microservice deployments.
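As an illustration of the serverless, event-driven model, here is a minimal AWS Lambda handler in Python. Lambda calls a Python function with the signature `handler(event, context)`; this sketch assumes the function is subscribed to S3 object-created notifications, whose events carry the bucket name and object key under `Records[].s3`. The processing step is only a placeholder comment, and the handler's behavior is hypothetical.

```python
import json
import urllib.parse


def lambda_handler(event, context):
    """Entry point Lambda invokes per event; here, S3 object-created notifications."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications (spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"s3://{bucket}/{key}")
        # Placeholder: a real workflow would start a build, transform the file, etc.
    return {"statusCode": 200, "body": json.dumps({"processed": processed})}
```

Because the handler is a plain function, you can exercise it locally by passing a sample event dictionary and `None` for the context before deploying it.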

Implementing DevOps on Azure, AWS, or GCP takes planning and expertise. Spiral Mantra's complete DevOps services can help your organization with all this and more, enabling you to implement and maximize DevOps practices on all the leading cloud providers.

Spiral Mantra’s DevOps Consulting Services

We are a renowned DevOps company in the USA that provides one-stop consulting services for all major cloud infrastructures. Our expertise includes:

Estimation and Strategy Development

Cloud readiness assessment

Custom implementation roadmap creation

Implementation Services

CI/CD pipeline setup and optimization

Infrastructure automation

Container orchestration

Security integration

Monitoring and logging implementation

Platform-Specific Services

1. Azure DevOps Services in the USA

Azure pipeline optimization

Integration with existing tools

Custom workflow development

Security and compliance implementation

2. AWS Consulting Services in the USA

AWS infrastructure setup

CodePipeline implementation

Containerization strategies

Cost optimization

3. GCP Services in the USA

Cloud Build configuration

Kubernetes implementation

Monitoring and logging setup

Performance optimization

We provide customized solutions tailored to business requirements, with the security, scalability, and performance organizations need to adopt or automate their DevOps activities.

Conclusion

Understanding cloud computing systems and methodology is important for today’s companies. Whether using AWS, Azure, or the Google Cloud Platform, getting things right takes experience, planning, and continual improvement.

Spiral Mantra's complete DevOps services in the USA help organizations navigate the complexity of cloud migration and integration. Our AWS DevOps consulting services and extensive experience across all the leading cloud platforms help businesses reach their digital transformation objectives on time and effectively.

Call Spiral Mantra now to learn how our cloud computing expertise can help your company meet its technology objectives. We are the trusted AWS consulting and DevOps company that makes sure your organization takes full advantage of cloud computing and DevOps practices.

See how Spiral Mantra leverages Azure Data Pipelines for real-time analytics, ETL, and machine learning workflows.

Best Data Pipeline Tools for Streamlining Your Workflow (2025 Edition)

This post lists the best data pipeline tools for streamlining a company's workflow and supporting its growth. For help with your big data analytics, opt for Spiral Mantra's budget-friendly data engineering services.

Any business will agree that effective data management is a vital part of the entire operation today. A smoothly running data operation moves raw data from collection to delivery without disrupting workflows. But what exactly is a data pipeline, and which tools are most effective? Those are the biggest questions to answer.

A data pipeline is a series of automated steps that moves raw data from one system to another through extraction, transformation (cleansing, filtering, etc.), and loading, whether the data is received from sensors, pulled from an unstructured source, or logged in real time. The objective of a data pipeline is to move data from its origin to its final destination, such as a data warehouse or analytics platform. With this process, businesses can handle large amounts of data and make sound decisions based on accurate, real-time information.

Selecting the right tools is essential for businesses, which is why we have covered the best data pipeline tools for your raw data and how they streamline workflows.

A note to remember: If you are searching for a trusted data engineering service provider in the USA, you can stop searching and reach out to us. We offer top services in big data analytics, DevOps consulting, IT outsourcing, and web and mobile app development. We understand the needs of businesses and provide the right solutions within your budget, as we have for years.
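The extract, transform, and load steps above can be sketched in a few lines of standard-library Python. This is an illustrative toy, not a production tool: the CSV feed and the `orders` table are hypothetical, the transform step trims and normalizes fields and filters out rows with missing amounts, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw feed: stray whitespace, inconsistent casing, and a missing amount.
RAW_CSV = """order_id,region,amount
1001,us-east, 250.00
1002,US-WEST,
1003,us-east,99.5
"""


def extract(text: str) -> list[dict]:
    """Extract: parse the raw CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows without an amount, trim whitespace, normalize casing, cast types."""
    clean = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # filtering step: incomplete rows never reach the warehouse
        clean.append((int(row["order_id"]), row["region"].strip().lower(), float(amount)))
    return clean


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text: str, conn: sqlite3.Connection) -> None:
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    run_pipeline(RAW_CSV, warehouse)
    print(warehouse.execute("SELECT region, SUM(amount) FROM orders GROUP BY region").fetchall())
    # [('us-east', 349.5)]
```

Keeping each stage a separate function mirrors how pipeline tools treat extraction, transformation, and loading as distinct, individually testable steps.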

The Best Data Pipeline Tools List You Must Know

Data drives the growth of every business. Whether you represent the healthcare sector, finance, or the edtech industry, raw data is a significant factor in business operations that you cannot avoid.

Now, we know you are reading this page to find effective tools for managing pipelines for your raw data. So, without wasting your time, let's get into the top data pipeline tools from AWS, Apache, and more.

#1 Apache Airflow: №1 Tool for Workflow Monitoring

Apache Airflow is among the most frequently used platforms for programmatically creating data pipelines. Businesses use it to create, schedule, and monitor the workflows that move data. As a flexible tool, Apache Airflow offers a wide spectrum of customization options for various workflows. It provides a comprehensive framework for integrating different sources and platforms, making it a common choice for businesses that work with many types of data.

For example, Apache Airflow is especially useful for running periodic data collection, conversion, and delivery processes, sequencing and coordinating those tasks, and keeping errors traceable.
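Airflow pipelines are defined in Python as DAGs (directed acyclic graphs) of tasks, wired together with syntax such as `extract >> transform >> load`. To keep the example dependency-free, the sketch below does not use Airflow at all; it uses Python's standard `graphlib` module to illustrate the underlying idea that each task runs only after all of its upstream tasks finish. The task names are hypothetical, and this is not Airflow's scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks, each mapped to the set of upstream tasks that must finish first.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "validate": {"transform"},
    "load_warehouse": {"validate"},
}


def run_dag(dependencies: dict[str, set[str]]) -> list[str]:
    """Run tasks in an order that respects every upstream dependency."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    completed = []
    while sorter.is_active():
        ready = sorter.get_ready()  # tasks whose upstreams are done; these could run in parallel
        for task in sorted(ready):
            completed.append(task)  # a real scheduler would execute (and retry) the task here
            sorter.done(task)
    return completed


if __name__ == "__main__":
    print(run_dag(dag))
    # ['extract_customers', 'extract_orders', 'transform', 'validate', 'load_warehouse']
```

The two extract tasks become ready together, which is where a scheduler like Airflow can run independent work in parallel.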

Key Highlights For You:

⦁ This open-source platform is ideal for developers and other advanced users.

⦁ Robust architecture for efficiently managing large-scale data.

⦁ Extensive documentation and a large community ecosystem with comprehensive support resources.

#2 AWS Glue: A Popular Choice Across the USA

AWS Glue earns its place on the list as a serverless extract, transform, and load (ETL) service from Amazon Web Services that helps users build and manage big data pipelines. ETL development collects raw data from multiple sources and usually modifies it to fit the intended production systems and users. Before AWS Glue, this was a highly technical endeavor. AWS Glue offers pre-built connectors to many data sources while also automating data discovery, so nontechnical users can build big data pipelines without spending too much time on highly technical tasks.

Integration with AWS Services

AWS Glue integrates with other AWS services, such as Amazon Redshift and Amazon S3, allowing businesses to build reliable big data pipelines at scale. Its key highlights include:

Cost-effective, serverless integration that scales with your AWS environment.

Automated data cataloging with robust schema discovery.

Integration with multiple AWS services.
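Schema discovery is easier to picture with a small example. Glue crawlers use classifiers to infer column types and record them in the Data Catalog; the sketch below is a much simpler, hypothetical stand-in (not Glue's actual algorithm) that samples rows of string values and picks the narrowest type each column fits, using Hive-style type names (`bigint`, `double`, `string`) like those in the Data Catalog.

```python
def infer_type(values: list[str]) -> str:
    """Return the narrowest Hive-style type that every non-empty sample value fits."""
    non_empty = [v for v in values if v.strip() != ""]

    def all_parse(cast) -> bool:
        try:
            for v in non_empty:
                cast(v)
        except ValueError:
            return False
        return True

    if not non_empty:
        return "string"  # nothing to go on: fall back to the most general type
    if all_parse(int):
        return "bigint"
    if all_parse(float):
        return "double"
    return "string"


def infer_schema(rows: list[dict[str, str]]) -> dict[str, str]:
    """Infer a column -> type mapping from sample rows (e.g. parsed CSV records)."""
    columns = list(rows[0].keys()) if rows else []
    return {col: infer_type([row.get(col, "") for row in rows]) for col in columns}


if __name__ == "__main__":
    sample = [
        {"id": "1", "price": "9.99", "name": "pen"},
        {"id": "2", "price": "12", "name": "ink"},
    ]
    print(infer_schema(sample))  # {'id': 'bigint', 'price': 'double', 'name': 'string'}
```

Trying the strictest type first and widening only on failure is what keeps `id` as an integer column while `price` becomes a floating-point column.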

#3 Jenkins: Best for Implementing CI/CD Pipelines

Next on the list is Jenkins, an open-source automation server often described as a Swiss Army knife. Although it is primarily a CI/CD tool, Jenkins can also orchestrate data workflows that cover integration, quality checks, and operations: scheduled jobs can pull data from different sources, transform it into the desired format, and load it into data warehouses or other systems. In other words, it automates everything from building to final deployment, which makes it ideal for implementing CI/CD pipelines.

Jenkins is especially useful if you're working on a big data pipeline that involves a large flow of data. Spiral Mantra, as the best data engineering service provider and DevOps consulting company in the USA, uses it to make enterprise integrations with big data cloud platforms and on-premises systems smoother.

#4 Azure Data Factory: All-In-One Data Platform

Azure Data Factory (ADF) is another prominent tool that helps businesses synchronize their data stores and warehouses with their other sources. Instead of building large data feeds with their own resources (which can be time-consuming and requires a team to manage), businesses can rely on ADF to build, deploy, and maintain their data pipelines. Rather than being connected manually, a company's database sources and data warehouses are connected through APIs, which Azure handles and updates when necessary.

Real-Time Information Movement

Real-time data synchronization across systems is only one of Azure's capabilities. If your company also needs a data pipeline that combines applications with real-time synchronization, ADF might be a suitable option.

#5 Apache Kafka: Prominent Data Streaming Tool

Apache Kafka is a distributed event streaming platform that breaks vast amounts of data into small records and organizes them into topics and partitions, so they can be stored and processed in parallel. Kafka focuses on real-time scenarios that require continuous data flows, such as system and transaction logs. With Kafka, users can capture data as it is produced, process it, and then send the results to a database or analytical platform.

Kafka excels at streaming large volumes of data at high throughput, which makes it particularly well suited to capturing data in real time.
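Kafka's model of splitting a stream into partitions can be illustrated with a toy, in-memory topic. `ToyTopic` is a hypothetical class, not the Kafka client API: records with the same key always land in the same partition, so their relative order is preserved, and each record gets an offset that a consumer can use to resume reading. Kafka's default partitioner hashes keys with murmur2; this sketch swaps in `zlib.crc32` purely for simplicity.

```python
import zlib


class ToyTopic:
    """In-memory stand-in for a Kafka topic: a fixed number of append-only partition logs."""

    def __init__(self, num_partitions: int):
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Same key -> same partition. Kafka uses murmur2 here; crc32 is a stand-in.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return (partition, offset)."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # offset = position in that partition's log

    def consume(self, partition: int, from_offset: int = 0) -> list[tuple[str, str]]:
        """Read records in order from a saved offset, as a resuming consumer would."""
        return self.partitions[partition][from_offset:]
```

Because different keys spread across partitions, several consumers can each read one partition in parallel while every account's events still arrive in order.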

Another tool worth knowing:

Besides Kafka, Apache NiFi is another major tool: a framework for automating the movement of data between systems. It supports diverse data formats, and its drag-and-drop interface makes it easier to develop data pipelines for IoT devices, cloud services, or on-premises systems.

How Spiral Mantra Helps Implement Data Pipeline Tools in Your Business Project

Building infrastructure to collect and manage raw data can be challenging, and putting the right processes in place usually takes days, but not with us.

At Spiral Mantra, our passion lies in developing and implementing tools that help businesses change their processes, which is why we count ourselves among the best providers of Data Engineering Services and DevOps Consulting Services. Spiral Mantra does the following for your business by leveraging big data analytics:

1. End-to-End Pipeline Management

We build and monitor the entire pipeline lifecycle following the ETL process, using industry-leading tools like Apache Airflow, Kafka, and AWS Glue to keep data flowing smoothly from source to destination, so businesses can make timely, data-driven decisions.

2. Customization Based on Business Needs

Every business has different requirements. Spiral Mantra will help you figure out how to meet yours with proven results, and our team will design your integration pipeline around your business needs.

3. Real-Time Information Flow Processing

Spiral Mantra implements tools such as Apache Kafka, together with automation tools like Jenkins, so that raw data can be processed and analyzed accurately in real time, letting companies harness the potential of their data without the delays of traditional processing methods.

4. Integration with Existing Systems

Since many businesses already have solid information systems set up, Spiral Mantra assists in developing a data pipeline with pre-built tools that can integrate into existing systems so they run uninterrupted.

Conclusion

Data pipelines play a crucial role in converting raw data into structured information that leads to better business decisions. Regardless of the size of your business and the complexity of your data, whether small or big, the tool you choose will make all the difference.

Count on us for top-notch Data Engineering Services, with premium solutions for big data analytics, AI/ML integration, and mobile app development.

Data Engineering Guide to Build Strong Azure Data Pipeline

This data engineering guide explains how to build a strong data pipeline in Azure. Data is now a key input to decision-making, business practice, and analysis, but the main challenge is collecting, processing, and maintaining it effectively. Azure's data engineering services help businesses design pipelines that move massive amounts of data in a structured, secure way. In this guide, we shed light on how to build an effective data pipeline with Azure Data Engineering services, even if this is your first time in this area.

Explain the concept of a data pipeline

A data pipeline is an automated system that moves data from a source to a designated endpoint where it can be stored and analyzed. Data is collected from different sources, such as applications, files, archives, databases, and services, and may be converted into a common format, processed, and then transferred to the endpoint. Pipelines keep data flowing smoothly, and automating the process keeps the system under control by minimizing manual intervention while processing data either in real time or in batches at set intervals. A well-built pipeline can handle very high data volumes while tracking workflow actions comprehensively and reliably, which is essential for data-driven business processes.

Why Use Azure Data Engineering Services for Building Data Pipelines?

Azure provides many services and tools for building strong pipelines. Azure services are cloud-based, which means they are available anytime, anywhere, and they scale. Anything from a small one-line task to a complex workflow can be implemented without worrying about hardware resources. The cloud also makes it easy to scale in the future, and it provides security for all your data. Now, let's break down the steps involved in creating a strong pipeline in Azure.

Steps to Building a Pipeline in Azure

Step 1: Understand Your Information Requirements

The first step towards building your pipeline is to figure out your needs. Where does the data that needs to be moved come from? Does it come from a database, an API, files, or a different system? Second, what will be done to the data once it is extracted? Will it need to be cleaned, transformed, or aggregated? Finally, where will the processed data end up? Once you have identified your needs, you can move on to the second step.

Step 2: Choose the Right Azure Services

Azure offers many services that you can use to compose pipelines. The most important are:

Azure Data Factory (ADF): This service allows you to construct and monitor pipelines. It orchestrates operations both on-premises and in the cloud and runs workflows on demand.

Azure Blob Storage: Provides storage for unstructured data, such as business data collected in raw form from many sources.

Azure SQL Database: Eventually, when the information is sufficiently processed, it could be written to a relational database, such as Azure SQL Database, for the ultimate in structured storage and ease of querying.

Azure Synapse Analytics: This service is suited to big-data analytics.

Azure Logic Apps: It allows the automation of workflows, integrating various services and triggers.

Each of these services offers different functionalities depending on your pipeline requirements.

Step 3: Setting Up Azure Data Factory

Having picked the services, the next activity is to set up an Azure Data Factory (ADF). ADF serves as the central management engine to control your pipeline and directs the flow of information from source to destination.

Create an ADF instance: In the Azure portal, start by creating an Azure Data Factory instance. The name you choose must be globally unique.

Set up linked services: Linked services hold the connection information ADF needs to reach your sources, such as databases and APIs, and your destinations, such as storage services.

Datasets: Datasets describe the data coming into or going out of the pipeline. They define the shape, type, and location of the data within a linked service.

Pipelines and activities: A pipeline is a logical grouping of activities, and each activity performs one task. Together, the activities define how data flows through the system. You can add multiple steps, such as copy, transform, and validate operations, on the incoming data.
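The sketch below shows how these pieces reference each other, using Python dictionaries that mirror the JSON ADF uses for linked services, datasets, and pipelines. All names (`BlobStorageLS`, `RawSalesCsv`, `SalesTable`, `CopySalesPipeline`) and the connection string are hypothetical placeholders. The `unresolved_datasets` helper is an illustrative check, not an ADF feature: it reports any dataset an activity references that has not been defined yet.

```python
# Hypothetical names throughout; the shape mirrors ADF's JSON authoring format.
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<placeholder>"},
    },
}

dataset = {
    "name": "RawSalesCsv",
    "properties": {
        "type": "DelimitedText",
        # A dataset points at the linked service that knows how to connect.
        "linkedServiceName": {"referenceName": "BlobStorageLS", "type": "LinkedServiceReference"},
        "typeProperties": {
            "location": {"type": "AzureBlobStorageLocation", "container": "raw", "fileName": "sales.csv"}
        },
    },
}

pipeline = {
    "name": "CopySalesPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyRawSales",
                "type": "Copy",
                "inputs": [{"referenceName": "RawSalesCsv", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}


def unresolved_datasets(pipeline: dict, known: set[str]) -> set[str]:
    """Return dataset names referenced by activities but missing from `known`."""
    refs = set()
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            refs.add(ref["referenceName"])
    return refs - known


print(unresolved_datasets(pipeline, {dataset["name"]}))  # {'SalesTable'}
```

Here the check flags `SalesTable`, the sink dataset that would still need its own definition pointing at an Azure SQL linked service.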

Step 4: Data Ingestion

Collecting the data you need is called ingestion. In Azure Data Factory, you can ingest data from many different sources, including databases, flat files, APIs, and more. After ingestion, it’s important to validate that the data is complete and correct. Triggers let you automate ingestion on a schedule or in response to events, and monitoring confirms that each run succeeded. For near real-time ingestion, options include Azure Event Hubs and Azure Stream Analytics, which are best suited to continuous data streams.
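Validation rules depend on your data, but a minimal post-ingestion check might confirm that a batch is not empty and that required fields are populated. The function and column names below are hypothetical. In ADF, a check like this could run in a later activity (for example, a Databricks notebook or an Azure Function); that placement is an assumption about your setup, not a built-in step.

```python
def validate_batch(rows: list[dict], required: list[str], min_rows: int = 1) -> list[str]:
    """Return the problems found in an ingested batch; an empty list means it passed."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for i, row in enumerate(rows):
        # Treat absent keys, None, and empty strings as missing values.
        missing = [col for col in required if row.get(col) in (None, "")]
        if missing:
            problems.append(f"row {i} missing {', '.join(missing)}")
    return problems


batch = [
    {"order_id": "A1", "amount": "19.99"},
    {"order_id": "", "amount": "5.00"},
]
print(validate_batch(batch, required=["order_id", "amount"]))  # ['row 1 missing order_id']
```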

Step 5: Transformation and Processing

After ingestion, the data might need to be cleansed or transformed before further processing. In Azure, this can be done through Mapping Data Flows built into ADF, or through the more advanced capabilities of Azure Databricks. For instance, if your data needs cleaning (to remove duplicates, say, or to align related datasets), you’ll design transformation tasks as part of the pipeline. Once processed, the data is ready for analysis or reporting.
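The two cleaning tasks mentioned above, removing duplicates and aligning related datasets, can be illustrated in plain Python. This sketches the logic only and assumes small in-memory lists of records. In a real pipeline, the same steps would typically be expressed in Mapping Data Flows or Azure Databricks. Field names are hypothetical.

```python
def deduplicate(rows: list[dict], key: str) -> list[dict]:
    """Keep the first row seen for each key value, preserving input order."""
    seen = set()
    unique = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique


def align(left: list[dict], right: list[dict], key: str) -> list[dict]:
    """Inner-join two datasets on a shared key; right-side fields win on name clashes."""
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]


orders = [
    {"order_id": 1, "customer_id": 10, "total": 40},
    {"order_id": 1, "customer_id": 10, "total": 40},  # duplicate record
    {"order_id": 2, "customer_id": 20, "total": 15},
]
customers = [{"customer_id": 10, "region": "EU"}, {"customer_id": 20, "region": "US"}]

cleaned = align(deduplicate(orders, "order_id"), customers, "customer_id")
print(cleaned)
```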

Step 6: Loading the Information

The final step is to load the processed data into a storage system that you can query and retrieve data from later. For structured data, common destinations are Azure SQL Database or Azure Synapse Analytics. If you’ll be storing files or unstructured data, the location of choice is Azure Blob Storage or Azure Data Lake Storage. You can set up schedule triggers in ADF to automate the pipeline so that it ingests and stores new data regularly without human input.
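Scheduling is usually done with a schedule trigger attached to the pipeline. The helper below builds a trigger definition as a Python dictionary in the JSON shape ADF uses for schedule triggers. The trigger name, pipeline name, and start time are hypothetical, so confirm the properties against the current ADF documentation before deploying.

```python
def schedule_trigger(name: str, pipeline_name: str, frequency: str, interval: int, start_time: str) -> dict:
    """Build a ScheduleTrigger definition in ADF's JSON shape (names are hypothetical)."""
    allowed = {"Minute", "Hour", "Day", "Week", "Month"}
    if frequency not in allowed:
        raise ValueError(f"frequency must be one of {sorted(allowed)}")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,  # unit of the recurrence
                    "interval": interval,    # run every `interval` units
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            # The pipeline(s) this trigger starts.
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"}}
            ],
        },
    }


trigger = schedule_trigger("NightlyLoad", "CopySalesPipeline", "Day", 1, "2025-01-01T02:00:00Z")
```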

Step 7: Monitoring and Maintenance

Once your pipeline is built and processing data, the main engineering decisions are behind you, and your job becomes making sure everything keeps working. You can use Azure Monitor and the ADF monitoring dashboard to track how data moves through the pipeline: which activities ran, in what order, and where a run failed. You’ll also tweak the flow as data changes, new queries come in, and unexpected problems appear. Regular maintenance keeps things running smoothly, and as your data volume grows, you will need to adjust the pipeline to handle larger loads.
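Automated monitoring often comes down to polling a pipeline run until it finishes. The sketch below is SDK-agnostic: `get_status` is any function that returns a run status string. With the `azure-mgmt-datafactory` package, it could wrap `client.pipeline_runs.get(resource_group, factory_name, run_id).status`, but treat that wiring as an assumption to verify. The set of terminal statuses is likewise assumed from ADF's documented run states.

```python
import time
from typing import Callable

# Assumed terminal run states; intermediate states include "Queued" and "InProgress".
TERMINAL = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(
    get_status: Callable[[], str],
    poll_seconds: float = 30,
    timeout_seconds: float = 3600,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a run-status function until it reports a terminal state or the timeout elapses."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"run still '{status}' after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Usage with a fake status source instead of a real Azure client.
statuses = iter(["Queued", "InProgress", "InProgress", "Failed"])
result = wait_for_run(lambda: next(statuses), poll_seconds=1, sleep=lambda s: None)
print(result)  # Failed
```

The `sleep` parameter is injected so the loop can be tested without real waiting; in production you would keep the default `time.sleep`.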

Conclusion

Designing an Azure pipeline can be daunting, but if you follow these steps, you will have a system capable of efficiently processing large amounts of data. Knowing your domain, using the right Azure data engineering services, and monitoring the system regularly will help you build a strong and reliable pipeline.

Spiral Mantra’s Data Engineering Services

Spiral Mantra specializes in building production-grade pipelines and managing complex workflows. Our work includes collecting, processing, and storing vast amounts of information with cloud services such as Azure to create purpose-built pipelines that meet your business needs. If you want to build pipelines or workflows, whether it is real-time processing or a batch workflow, Spiral Mantra delivers reliable and scalable services to meet your information needs.

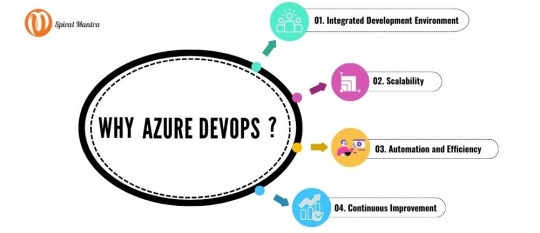

Choose the Right Azure DevOps Services For Your Needs

To compete in today's business landscape, you need the right tools and a firm grasp of major technology trends to achieve excellence and growth. Azure DevOps provides a solid, programmable framework that eases software development. Whether you are managing a small project or an elaborate enterprise-scale workflow, choosing the right Azure DevOps services can mean the difference between running your operations successfully and reaching a deadlock. In this article, let’s take a deeper look at what Azure DevOps offers and how Spiral Mantra DevOps consulting services can make your workflow seamless.

Azure DevOps offers a set of tools for building, testing, and launching applications. These processes are integrated into a common environment, which improves collaboration and shortens time-to-market. Because it is a cloud-based service that can be accessed anytime from anywhere, it promotes teamwork and supports a better quality of work. It provides a shared environment for day-to-day work without the need to replicate physical infrastructure.

At Spiral Mantra, you can hire DevOps engineers who concentrate on delivering top-notch Azure DevOps services in the USA. We are best known for providing services and a specialized workforce to accelerate your work.

Highlighting Components of Azure DevOps Services at Spiral Mantra

Spiral Mantra is your most trusted DevOps consulting company, ready to discuss and meet the unique requirements of our clients. Our expertise in DevOps consulting services is not limited to delivering faster results; it also lies in offering high-performing, scalable solutions. You can outsource to our team to get an edge over the competition, as we work with the following core components:

1. Azure Pipelines: Automates building, testing, and deploying code to targets such as VMs, containers, and cloud services. CI/CD helps developers automate the deployment process, which reduces errors and results in higher-quality releases.

2. Azure Boards: A powerful project management tool for planning, tracking, and discussing work throughout the development process. Our DevOps engineers are skilled at customizing Azure Boards to fit your project requirements.

3. Azure Repos: Provides version control tools that help teams manage code effectively. Whether you prefer Git or TFVC, our team implements version control best practices to reduce risk.

4. Azure Test Plans: Quality assurance is a crucial part of any software or application development lifecycle. Azure Test Plans provides comprehensive testing tools focused on application quality. As a top Azure DevOps consulting company, we help our clients develop robust testing strategies that meet their quality standards.

Want to accelerate your application development process? Connect with us to boost your business productivity.

Identifying Your Needs for Azure DevOps Services

It might be tempting to dive right into what Azure DevOps services you’ll need once you start using it, but there are some important things to be done beforehand. To start with, you’ll have to assess your needs:

Project scale: Is this a small project or an enterprise one? Small teams might want a lightweight combination of Azure Repos and Azure Pipelines, while larger teams will need the complete stack, including Azure Boards and Test Plans.

Development language: What language are you developing in? The platform supports many different languages, but some services pair better with specific setups.

CI/CD frequency: How often do you need to publish updates and make changes? If you want to release new builds quickly, use Azure Pipelines, while for automated testing, go with Azure Test Plans.

Collaboration: How large is your development team? Do many people contribute to your project? If so, use Azure Repos for version control and Azure Boards for tracking team tasks.
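The rules of thumb above can be written down as a small decision helper. The function below is hypothetical and simply encodes this article's guidance (Repos and Pipelines as the baseline, Boards for larger or collaborative teams, Test Plans for enterprise projects or dedicated test management); it is not an official sizing tool.

```python
def recommend_services(large_project: bool, many_contributors: bool, needs_test_management: bool) -> list[str]:
    """Hypothetical helper encoding the article's rules of thumb for Azure DevOps services."""
    services = ["Azure Repos", "Azure Pipelines"]  # lightweight baseline for any team
    if large_project or many_contributors:
        services.append("Azure Boards")  # task tracking for bigger or shared projects
    if large_project or needs_test_management:
        services.append("Azure Test Plans")  # structured testing for the full stack
    return services


print(recommend_services(large_project=False, many_contributors=True, needs_test_management=False))
```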

Choosing the Right DevOps Services in the USA

Deciding on the best company for your DevOps requirements can be a daunting task, especially if you don't know where to begin. The following sections walk through the needs to consider when selecting the right agency for your projects.

1. For Version Control

If your project has more than one developer, you’ll probably need enterprise-grade version control so your team can work on the same code smoothly. Azure Repos supports both Git and TFVC as the basis for your version control system. If you have a distributed team, Git is the best choice. If your team prefers a centralized workflow, TFVC may be a little easier. If you’re new to version control, Git is still a good place to start, because its distributed nature makes it easy for anyone to get up and running.

2. For Automated Tasks (Builds and Deployments)

Building, testing, and deploying your code automatically keeps your team fast and in sync. Azure Pipelines runs on multiple platforms, so it can provide CI/CD for almost any project. It also gives your team the flexibility to deploy applications to various environments (cloud, containers, virtual machines) so you can release your software early and continuously.
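One common way to deploy to different environments is to choose the target from the branch being built. The sketch below is a hypothetical Python script that a pipeline step could run. It reads the `BUILD_SOURCEBRANCH` environment variable, which is how Azure Pipelines exposes the predefined `Build.SourceBranch` variable, and emits a `##vso[task.setvariable]` logging command so later steps can read `targetEnv`. The branch-to-environment mapping is an assumption; adapt it to your own branching model.

```python
import os


def target_environment(branch: str) -> str:
    """Hypothetical branch-to-environment mapping."""
    if branch == "main":
        return "production"
    if branch.startswith("release/"):
        return "staging"
    return "dev"


def main() -> None:
    # Build.SourceBranch is exposed as BUILD_SOURCEBRANCH, e.g. "refs/heads/main".
    ref = os.environ.get("BUILD_SOURCEBRANCH", "refs/heads/local")
    branch = ref.removeprefix("refs/heads/")
    env = target_environment(branch)
    # This logging command sets a pipeline variable that later steps can read.
    print(f"##vso[task.setvariable variable=targetEnv]{env}")


if __name__ == "__main__":
    main()
```

The script uses `Build.SourceBranch` rather than `Build.SourceBranchName` because the latter holds only the last path segment, which would turn `release/1.2` into `1.2`.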

3. For Project Management

Getting the work done is another matter, because a project isn’t just about writing code. Preparing release notes, for example, usually requires at least one meeting to understand what was fixed and what broke. Tracking bugs, features, and tasks helps keep your project on track. Azure Boards provides Kanban boards, Scrum boards, and backlogs for managing all your project work, along with ways to track the work planned for a specific release. You can even adjust it to match the workflow your team already uses, such as Agile or Scrum.
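Work items can also be created programmatically, for example from a script that files bugs automatically. The Azure DevOps REST API creates work items from a JSON Patch document sent with the `application/json-patch+json` content type to an endpoint of the form `https://dev.azure.com/{organization}/{project}/_apis/wit/workitems/${type}`. The sketch below only builds that request body. The helper name is hypothetical, and the endpoint and API version should be checked against the current REST reference.

```python
from typing import Optional


def work_item_patch(title: str, description: str = "", tags: Optional[list[str]] = None) -> list[dict]:
    """Build a JSON Patch body for creating a work item (hypothetical helper)."""
    ops = [{"op": "add", "path": "/fields/System.Title", "value": title}]
    if description:
        ops.append({"op": "add", "path": "/fields/System.Description", "value": description})
    if tags:
        # Azure DevOps stores tags as one semicolon-separated string.
        ops.append({"op": "add", "path": "/fields/System.Tags", "value": "; ".join(tags)})
    return ops


body = work_item_patch("Login fails on Safari", tags=["auth", "p1"])
```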

4. For Testing

Testing is another crucial step for making sure your application behaves as expected under predetermined conditions. Azure Test Plans supports manual testing, exploratory testing, and test automation. This lets you integrate testing into your CI/CD pipeline and keep your code base healthy, delivering a more predictable experience to your users.
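To run automated tests as part of CI/CD, a pipeline step can execute a test suite and publish the results. The example below is a minimal `unittest` suite for a hypothetical `apply_discount` function. One possible setup, assumed here rather than prescribed, is a step that runs `python -m pytest --junitxml=test-results.xml` (pytest also collects `unittest` test cases), followed by the `PublishTestResults@2` task with the JUnit format so results appear in the pipeline run.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(50.0, 10), 45.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```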

5. For Package Management

Many development projects use one or more external libraries or packages. Azure Artifacts lets your team host, share, and consume these packages when building complex projects. It greatly reduces the effort of maintaining a package repository that your developers can access during development.
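For Python projects, consuming an Azure Artifacts feed usually means pointing pip at the feed's index URL. The helper below builds that URL for organization-scoped and project-scoped feeds. The organization, project, and feed names are hypothetical, and the URL pattern is an assumption you should confirm on the feed's "Connect to feed" page in Azure DevOps, which also explains how to authenticate.

```python
from typing import Optional


def pypi_feed_index_url(organization: str, feed: str, project: Optional[str] = None) -> str:
    """Build the assumed pip index URL for an Azure Artifacts feed."""
    # Project-scoped feeds include the project name in the path; org-scoped feeds do not.
    scope = f"{organization}/{project}" if project else organization
    return f"https://pkgs.dev.azure.com/{scope}/_packaging/{feed}/pypi/simple/"


url = pypi_feed_index_url("contoso", "shared-libs", project="web")
```

You could then install from the feed with `pip install --index-url <url> <package>`.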

How Does Spiral Mantra Assist? Best DevOps Consulting Company

Spiral Mantra’s expert DevOps services will help you speed up your development process so you can get the most out of it. Whether it’s setting up continuous integration or a full CI/CD pipeline, configuring version control, or optimizing project tracking through Azure Boards or any other aspect of your software process, call Spiral Mantra to make it happen.

Partner with Spiral Mantra, and our team, proficient in using Azure DevOps for projects of every size, from start-ups to large enterprise teams, will help you ensure success for your development projects.

Getting the Most from Azure DevOps

Having found the relevant services, you then need to bring these together. How can you get maximum value out of the service? Here's the process you might consider.

⦁ Start with Azure Repos and Pipelines: Set up version control and automated pipelines first to boost the development process.

⦁ Add Boards for Big Projects: Once the team has grown, add Azure Boards to track tasks, bugs, and feature requests, and link them to pull requests.

⦁ Test Early: Use Test Plans to start testing at the beginning of the CI/CD pipeline and catch bugs early.

⦁ Manage Packages with Azure Artifacts: If your project depends on more than one library, set up Azure Artifacts.

Conclusion

The process of developing software becomes easier when you choose the right DevOps consulting company to bring innovation to your business. Spiral Mantra is here to support you with the best DevOps services in the USA, tailored to your growing business requirements, and to guide you in improving your development operations workflows.

Best Practices to Build a CI CD Pipeline on Azure Kubernetes Service