
WWDC Wish List

I don’t often do this, but this year I think it’s important; Apple is more open & receptive to feedback today than it has ever been. With iOS 9 and iPad Pro, iOS on iPad has made a tremendous leap in the past year. With that in mind, I wanted to note down all the things in my head that I really want to see the iOS computing platform grow to cover.

What follows is an unordered list of things I’d like to see from Apple over the next few years, starting with the easy & obvious things upfront. Most of these have Radars filed against them, but since they’re more often than not dupes of existing Radars I won’t post the numbers here. Most of this is about iOS, but not all - I’ll say upfront that I don’t think OS X has a future the way it’s currently going; it has been running on fumes for most of iOS’ lifetime. Even if you disagree with where I’m coming from, perhaps there’s still something for you here. 😁

• Split-screen letting you spawn multiple windows for the same app

This is a really easy evolution to see - right now, split-screen in iOS only enables two different apps side by side. The next obvious step is to enable an app to say it’s capable of handling a second window, so that you could have two web pages or documents side-by-side.

• OpenURL screen-side hinting

Another tiny tweak, but this would allow an app to say how it prefers opened links/apps to open - either side by side, or replacing the current app. This would allow e.g. URLs in Mail or Messages to automatically open Safari side-by-side instead of kicking you out of the app you’re using. Twitter on iOS has some clever logic to simulate this - if Twitter is pinned at the side of the screen, it will open URLs directly in Safari (side-by-side).

• Custom View Controller Extension Providers

With Extensions, Apple has created an ideal way to present a view controller from one app inside another app. This is used throughout the OS, but most obviously with Safari View Controllers. Apple has already identified key areas where this is useful - this is how photo editing Extensions work, right now. I would love to see this expanded upon such that apps can register view controller providers for all kinds of different functionality, which could then be presented inside other apps.

• QuickLook generators

In a similar vein, QuickLook seems like a really obvious system Extension point to add. OS X apps can register ‘QuickLook generators’ that create thumbnail previews of their documents, so that other apps can display them. As document handling becomes more pervasive on iOS, no doubt QuickLook will expand to include this.

• System-level drawing/markup views

Very simple one - Apple has, in Notes, created one of the best drawing/markup views of any app on the platform, ideal for Apple Pencil. This would be great to offer to developers in some standardized, customizable fashion so that they can implement it in their apps without having to reinvent the wheel - a drawing engine like that is an incredibly complex OpenGL/Metal renderer, which is difficult to reimplement.

• ⌘ key for iPad keyboard

With UIKeyCommand and keyboard shortcut support on iOS, I would really like to see a command key added to the iPad on-screen keyboard. Understandably it would only work when the keyboard is visible (i.e. in a text editor), but it would enable editing shortcuts beyond what fits in the bar atop the software keyboard. It would also help train developers that iOS apps should have keyboard shortcuts by default - in an era where most iPads support hardware keyboards, I think this is an important step forward.

• Drag & drop

With the addition of split-screen multitasking, much has been said about drag & drop on iOS. It seems like an obvious thing to add, on the surface, but when you think it through there are a lot of ways it could be detrimental to the OS. Finding a way to enable drag & drop without screwing over all the existing gestures in the OS, whilst still making it faster than copy/paste - that’s not as easy as you think. Despite that, I do think it’s worth figuring out, and makes so much sense on a touchscreen with its direct manipulation model.

• UIKit native apps on watchOS

WatchKit was an amazing stopgap, enabling a competent app platform on watchOS and all of the apps you use today. Sadly, third-party apps on watchOS suck, and ‘native’ WatchKit in watchOS 2.0 hasn’t really helped here. If watchOS is to succeed as an app platform, I think it will need the ability to run real (read: UIKit) apps. As someone who’s dabbled in this already, I’m not convinced the first-generation hardware is good enough to enable this without serious compromises. My wish, therefore, is that future hardware makes it possible. Third-party apps need to be as capable as the fantastic first-party apps. WatchKit is a shit sandwich.

• AppleTV gamepad situation

A very easy thing to fix: right now, a game on tvOS cannot require a gamepad (unless, of course, it’s Activision requiring a hardware accessory because that’s clearly so different. /s). All games must support the Siri Remote: the problem with that is the Siri Remote is awful for games, and means any developer attempting to make anything even remotely complex (read: good) on tvOS has to include some ridiculous, often-patronizing, and mostly-unplayable Remote-only mode. This is a policy issue - I understand where Apple marketing is coming from, but the godawful experiences it’s resulted in are worse than the alternative, and reflect terribly on the platform.

• iBooks Author for iOS

iBooks Author is an offshoot of the iWork suite that seems like it would be perfectly suited to running on iOS. For book-writers, iBooks Author on iOS could mean a fully-integrated writing & publishing solution that doesn’t require a desktop computer. One could even build multi-touch ‘enhanced’ iBooks on-device. To me, firmly rooted in the iOS camp, it seems like a no-brainer to bring to iOS.

• All system iOS apps should support split-screen

I’m surprised this is still the case, but a bunch of system apps on iPad outright refuse to implement split-screen multitasking. My guess is that there are some concerns about security - iBooks, for example, tells you to make the app fullscreen if you want to see the iBookstore. Obviously the App Store, iTunes Store and Apple Music should support split-screen. It’s very hard to justify any (non-game) app not doing so (though I do know there are reasons some third-party apps have opted-out). Windowing support shouldn’t be optional, especially for system apps.

Now that the easy stuff is out of the way…

• Unified App Platform between iOS and OS X

Right now, I really believe that OS X is a dead platform. It’s been coasting in iOS’ wake for years, picking up features often long after they’ve been implemented on iOS. Apple needs to create a unified app platform between the two OSes.

This doesn’t mean a desktop would just run iOS apps, much like tvOS doesn’t ‘just run’ iOS apps. The same ideas should apply: a shared codebase, with minor platform-specific elements, and an optimized UI for the OS’ primary interaction model.

I could see this being UIKit-based. After tvOS, it is no longer valid to say that UIKit just flat-out wouldn’t work on a non-touchscreen - we know that’s just not the case. It’s all built on CoreAnimation, so it would make sense that you would be able to interleave legacy AppKit views/layers inside a (hybrid) UIKit Mac app, at least for the time being. AppKit itself should have a protracted deprecation period, like Carbon before it, as new functionality gradually gets built into UIKit-based frameworks instead. AppKit would remain on the desktop and not come to iOS, and eventually fade out as it gets replaced inside hybrid apps piece by piece.

In this way, iOS (primarily iPad) and OS X could grow together. Functionality built for one would much more easily translate to the other. iPad apps would have a migration path to the desktop, and legacy desktop apps to iPad - both platforms would evolve & grow as one, and not one at the expense of the other.

• Xcode for iPad

A longstanding request of mine, but software development remains a key artform woefully under-represented on iOS. When I say I want ‘Xcode for iPad’, I mean ‘a means to write, debug & deploy Cocoa Touch apps on iPad without having to use a Mac’. It’s very likely that a project like this from Apple would look nothing like Xcode does on the desktop. It most probably would be Swift-only (something that makes me very sad). I imagine it would also involve Swift Playgrounds. Nonetheless, a complete software development toolchain is a huge missing piece of the iOS software ecosystem.

There are some great apps on iOS that have managed to pull something similar off - Pythonista is a prime example: a fully on-device Python IDE with bridges to C and ObjC code, powerful enough to let you interface with and rewrite its own UI using Cocoa (in Python), but there’s a learned unease that Apple will decide something like that is not allowed and remove it from the Store. This is a terrible situation to be in - things that push the boundaries of what people think iOS is capable of should be embraced.

• File & disk management for iOS

From the start, iOS has tried to do the ‘right thing’ regarding file management. However, nine years on, this imaginary era where physical filesystems don’t exist hasn’t come to pass. Finally, we have an iCloud Drive app and third-party document providers, but we can’t interface with files on external storage, beyond importing photos to the camera roll. I think it’s time to implement this at the system level: allow document pickers to open files in place from external storage, and allow apps to copy files to external storage. On OS X, document pickers provide sandbox exceptions to the folders you, as a user, choose to give an app access to. Build on this model - maintain security, but stop pretending filesystems don’t exist.

• Terminal environment for iOS

Worth a shot, huh? 😛 I would be so happy to see a Terminal/BSD environment on iOS, even if it were limited to its own sandbox and not the OS filesystem. Let technical users build the kinds of things that technical users need, that can’t be addressed in any other way by a GUI iOS. The only way I see Apple committing to this is if it were completely jailed from the rest of the OS - but even that would be a major step forward (or, backward, depending on your point of view).

• iOS to pick up the remaining OS X apps

As iPad grows to replace more of what the Mac used to be for, it makes a lot of sense (to me) that Apple should close the gap between the system apps on both platforms. I would like to see (interpretations of) TextEdit, Automator, Font Book, Keychain Access, and, with external storage support, Disk Utility. TextEdit on the Mac seems like a trivial one, but there is no built-in text editor on iOS that can access TextEdit documents on iCloud Drive - personally, I think that’s crazy. Automator is one of those tools that few people use, but those who do realize how incredibly powerful and useful it is. In fact, Workflow, one of the best third-party iOS apps on the platform, is pretty much an expanded Automator. Font management & keychain support are other areas that do not really have third-party equivalents on iOS, despite being essential for certain kinds of users.

• iOS devices to be able to install the latest OS from Recovery mode without iTunes

Right now, one of the last remaining reasons to connect an iOS device to a desktop computer is to install the OS. Fixing this would be difficult, no doubt, but NetBoot & Internet Recovery have long existed on the Mac. It’s been a while, so I may be misremembering, but I think the first-generation (x86) Apple TV could redownload its OS from the internet in recovery mode if something went wrong. Eventually, I think iOS needs an expanded Recovery environment that can do this for itself.

• ‘AppleScript’ for iOS

Perhaps a legacy of a forgotten age, but with AppleScript gaining brand-new JavaScript language support lately, perhaps AppleScript has a place in the iOS ecosystem. That AppleScript still exists is fascinating in and of itself - it was one of the first environments in which I learned to program, back in System 7.

• Expanded USB device support for iOS

A hard sell, especially when the Made for iPhone program is such a big thing for Apple, but there are all kinds of devices I’d like to be able to use on iOS through the USB adapter beyond audio, keyboard & mass storage devices. I’d like developers to be able to write user-mode driver apps to talk to existing hardware - in my case: capture cards, TV tuners, serial adapters, external cameras, input devices, etc. Having every single USB device require an MFi authentication chip and certified accessory apps really hurts. You could buy a pre-existing iPhone MFi RS232 adapter and use their already-approved SDK to make an app that talked to (/synced with) e.g. a Newton, Raspberry Pi, or Arduino. You can’t use Apple’s USB adapter to do the same with a non-MFi USB serial adapter. I don’t expect this to change, but I wish it did.

• Fix Mac App Store

Finally, this is an important one: it’s become very clear that the Mac App Store is not fit for purpose. To be reductive, sandboxing restrictions & monetization issues have driven out so many longtime, respected Mac developers. Those that stay often have MAS and non-MAS versions of the same app - the non-MAS generally being the one with the full featureset. Third parties have resorted to coercing MAS customers to crossgrade to non-MAS versions of the apps. This never should have been allowed to happen - it’s bad for developers, and it’s bad for users. It shouldn’t be crazy to think that all Mac software should be available through the Mac App Store. Microsoft Office, Creative Cloud, etc - every effort should have been made such that the MAS is the only place you’d even consider selling your Mac apps. Even today, Apple provides software through the MAS that does not play by its own self-imposed sandboxing rules, the same restrictions that drove everybody else out. This was so fixable, and perhaps still is. Right now it’s a travesty; there is no leadership here.


As an internal demo, I put together a set of RTÉ channels for tvOS - quickly thrown together using official public HD streams. It's nice having one 'app'/icon per channel - though switching between them is slower than channel switching on a television.

I prefer the generic chromeless approach to each network having its own crummy special snowflake portal/live TV/VOD experience. What we really need is a singular UI from Apple to switch between all available channels in an integrated streaming system with EPG. Buy channels à la carte - not limited to network bundles.

(Won’t be open-sourcing this one; it’s only a couple of lines of code anyway, and I’m sure RTÉ wants to work on their own [special snowflake? 😜] app)


Native UIKit apps on Apple Watch

Security

This is not a jailbreak. This will not make a jailbreak possible. This is no less secure than regular, sandboxed iOS apps. It's not a way to bypass the security systems in place on your device. It does, however, let you build/prototype the kinds of apps you can do on iOS, but can't on watchOS right now. Thought it important to say this up front 😜.

Update 21 Sep 2015

I've updated one of my open-source projects to use the methods here to build a UIKit watchOS 2 app. You can check it out here, for a concrete example of how to do this.

Preamble

Now that watchOS 2 and iOS 9 have GMed I figured it was a good time to write up one of the methods we came to for running ‘real’ native apps, i.e. ones that can use UIKit, on watchOS.

All of watchOS is based on the same frameworks we use on iOS - system apps use a framework called PepperUICore, which subclasses a bunch of UIKit classes. However, as developers, we’re not given the same kind of access to the system. We can’t use UIKit directly, or any high level graphics frameworks like OpenGLES, SpriteKit, SceneKit, CoreAnimation, etc. WatchKit apps are handcuffed, as it were, only able to display UI through the elements WatchKit provides. They cannot get the position of touches onscreen, or use swipe gestures, or multitouch.

I did not have an Apple Watch at launch, but I was convinced UIKit apps were possible with the existing SDKs. However, WWDC came and went, and still nobody had revealed a method to use UIKit on watchOS. I roped @b3ll into a torturous day & night of testing things, he in a cafe across from WWDC (with @saurik for much-needed moral support!), me across the Atlantic.

At the time, watchOS did not provide a system console log of any kind. It would provide crash reports, when it felt like it, and usually an hour after they happened. I had no hardware to test on here, so you can imagine how much pain & frustration this was. We were relying on the visual cues of different kinds of app crashes to debug - an endless spinner & black screen meant our code didn’t load; an instant crash back to Carousel meant any one of three possible linker errors.

Eventually, a crashlog confirmed what I was hoping: watchOS was actually trying to load our modified binary, and our native code!

So… spent a night hacking with @b3ll (& @saurik!) - we got UIKit (& SceneKit) apps running on Apple Watch 🎉 (video) pic.twitter.com/khcpHgVsZo
(Steve T-S, @stroughtonsmith, June 12, 2015)

My [Nano]FileBrowser app running on Apple Watch natively 😁 (as modeled by @b3ll) pic.twitter.com/KRhvkJBMox
(Steve T-S, @stroughtonsmith, June 13, 2015)

After getting something reproducible, and porting over some UIKit apps, I celebrated by ordering an Apple Watch of my own - I did want to see if the same method worked on watchOS 1, after all. With a few tweaks, it did!

Method

The method I stumbled upon is far from elegant, but it works across watchOS 1.x and watchOS 2.x. There are a half-dozen similar ways to do it on watchOS 2, but I haven’t seen others for watchOS 1 thus far. I’ve used Objective-C here to sidestep the complexity of embedded Swift libraries.

What follows is a brain dump of things necessary to get this working. I don't have any easy 'just download this sample project' method right now. If you don't understand Xcode targets, mach-o linking, and codesigning - turn back now! Xcode 7 (with the watchOS 2 SDK) is required.

Key Elements

• Modified WatchKit Stub
• WatchOS Framework target
• Build script to copy your framework binary to a dylib inside your WatchKit app
• (Ideally) the missing iOS headers and frameworks, copied into the WatchOS SDK

Desired Result

You want to end up with an iOS app, with a WatchKit 1 Extension, with an embedded WatchKit 1 App, with “MyApp.dylib” inside it. The WatchKit 1 App binary needs to have a linker reference to MyApp.dylib instead of SockPuppetGizmo (check this with otool -l), and the same goes for _WatchKitStub/WK inside the WatchKit 1 App bundle. You want to make sure each element is signed properly, too.

The steps are pretty similar for watchOS 2-only apps, though the layout on disk changes (you have to place your dylib in the WatchKit App's embedded frameworks directory).

Missing Headers & Frameworks

watchOS is iOS; most of the frameworks you expect to be there are actually on-disk. However, the SDK will not include them, or have complete headers for them. Fortunately, Xcode 7 makes it ridiculously easy to link to frameworks through new text-based .tbd files that list all the symbols, and the supported architectures, for a framework. For the most part, you just need to copy the tbd files from the iPhoneOS SDK into the appropriate places in the WatchOS SDK, as well as their headers, then edit the tbd files to include ‘armv7k’. I’m sure somebody will automate this repetitive and thankless job ;-p
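Until somebody does automate it, the ‘add armv7k’ step is simple string surgery. The C sketch below assumes the Xcode 7-era .tbd layout, where each architecture list sits on a single `archs:` line as a bracketed, comma-separated list (e.g. `archs: [ armv7, armv7s, arm64 ]`); the function name `add_armv7k` is hypothetical, and a real tool would also need to cope with lists that wrap across lines.

```c
#include <stdio.h>
#include <string.h>

/* Copy one .tbd line into out, appending armv7k to a single-line
   "archs: [ ... ]" list. Lines without such a list, or that already
   mention armv7k, are copied unchanged. Returns 1 if armv7k was added. */
static int add_armv7k(const char *line, char *out, size_t outsize)
{
    const char *key = strstr(line, "archs:");
    const char *close = key ? strchr(key, ']') : NULL;

    if (key == NULL || close == NULL || strstr(line, "armv7k") != NULL) {
        snprintf(out, outsize, "%s", line);
        return 0;
    }

    /* Step back over spaces before ']' so the new entry follows the last arch. */
    const char *end = close;
    while (end > key && end[-1] == ' ')
        end--;

    /* An empty list ("[ ]") needs no leading comma. */
    const char *sep = (end[-1] == '[') ? " " : ", ";

    snprintf(out, outsize, "%.*s%sarmv7k%s", (int)(end - line), line, sep, end);
    return 1;
}
```

Feed it each line of a copied .tbd file and write out the result; lines without an `archs:` list (install names, symbol lists) pass through untouched.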

Modified WatchKit Stub

The trick is to use install_name_tool to change the WatchKit stub’s dependency on SockPuppetGizmo (the framework that boots up WatchKit) to your framework. As SockPuppetGizmo is a non-vital framework for a UIKit app, you don’t have to worry about losing it. Now, when the WatchKit stub app is loaded, instead of loading up WatchKit, it will load your framework/dylib.

N.B. apps that include a modified stub will not be accepted by iTunes Connect, so make sure to make a backup before changing it, and switch back to the original when you’re done with UIKit apps and need to actually ship things.

```sh
# Repoint the WatchKit stub from SockPuppetGizmo to your dylib (back up WK first).
PLATFORMSDIR="$(xcode-select -p)/Platforms"
install_name_tool -change /System/Library/PrivateFrameworks/SockPuppetGizmo.framework/SockPuppetGizmo @rpath/MyApp.dylib "$PLATFORMSDIR/iPhoneOS.platform/Developer/SDKs/iPhoneOS.sdk/Library/Application Support/WatchKit/WK"
```

New in watchOS 2 - you cannot modify the stub's rpath, but ./Frameworks works as an existing library location, though the validator will now trip if you have an actual .framework bundle in there. On watchOS 1, you can modify the rpaths to include custom locations inside the WatchKit app bundle.

WatchOS Framework Target

Effectively, all your app’s code will be in a WatchOS framework target, instead of an app. Your resources can be added to the WatchKit app’s assets bundle, and accessed through regular APIs (imageNamed, etc).

Then, all you need to do is call UIApplicationMain in your constructor, like regular UIKit apps. You can use __attribute__((constructor)) or linker flags to achieve this.

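```objc
#import <UIKit/UIKit.h>

// Runs when the stub loads MyApp.dylib, before the stub's own main().
void __attribute__((constructor)) injected_main(void)
{
    @autoreleasepool {
        // NativeAppDelegate is your app's own delegate class; this never returns.
        UIApplicationMain(0, NULL, @"UIApplication", @"NativeAppDelegate");
    }
}
```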

You'll want to take the compiled binary from your framework as a dynamic library (as per 'MyApp.dylib').
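The constructor trick is plain C/Clang behaviour rather than anything watchOS-specific: the loader runs every function marked `__attribute__((constructor))` when the image containing it is loaded, which for a dylib the stub links against happens before the stub's own `main()` is reached. Because `UIApplicationMain` never returns, your code takes over the process from there. A minimal, platform-neutral sketch of that ordering, with a hypothetical flag (`constructor_ran`) standing in for the UIKit call:

```c
/* Set by the constructor; stands in for the UIApplicationMain call. */
static int constructor_ran = 0;

/* GCC/Clang run this when the image containing it is loaded, before main(). */
__attribute__((constructor))
static void injected_main(void)
{
    constructor_ran = 1;
}

/* Lets main() (or a test) confirm the constructor already ran. */
int did_constructor_run(void)
{
    return constructor_ran;
}
```

By the time any code in `main()` calls `did_constructor_run()`, it already returns 1.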

Build Script

You might notice at this point that WatchKit app targets can’t have build scripts, according to Xcode. Here’s where a little knowledge of the xcodeproj format might help - you can add a build script to the iOS target, then hand-edit the pbxproj file to add the reference for that shell script to the WatchKit App target. Or you could manually piece together your app with dylib and sign it all manually. There are many less clunky ways to do this than mine, so this part I’ll leave up to you. It took hours of trial and error to get something that ‘worked’, and I’m still not happy with it.

Next Steps

If you want to start building native apps that look like system apps, you'll want to class-dump PepperUICore and investigate some of the apps in the Simulator. PepperUICore classes are prefixed PUIC and are mostly subclasses of UIKit classes. Your root UIApplication subclass should be PUICApplication. To implement a Force Touch menu on a view controller, you override -canProvideActionController and -actionController. There's also an ORBTapGestureRecognizer if you want direct usage of Force Touch. Writing native PepperUICore apps deserves its own post, sometime…

Misc Notes

watchOS can be so incredibly fickle when installing binaries. I’ve seen this with non-hacked regular WatchKit apps too, but you can get into a state where the OS refuses to install a binary and gives you random error messages. Sometimes rebooting fixes this, sometimes removing the app from your phone helps. Sometimes the exact same binary won’t install one minute, and works the next.

watchOS 1’s ABI is incompatible with Xcode 7’s compiler, for various reasons that I’m sure Apple want to keep to themselves. While you can build working apps with the toolchain, all kinds of things crash inexplicably. Ideally, unless you have a specific reason to try this on watchOS 1, stick to watchOS 2.

Most importantly - SockPuppet apps have strict entitlements that disallow all kinds of things, like networking. URL loading will only work with local files. You can’t touch networking frameworks, or AirDrop, or seemingly anything of use. While that is a pain, all kinds of other frameworks work fine like OpenGLES, SpriteKit, SceneKit, UIKit, etc, so it lets you prototype things impossible with WatchKit apps. With luck, WatchKit apps will grow those features over time, and make hacks like this irrelevant.

Addendum

Adam has an addendum on his side of the process, and where he took it from here. Check it out! 🍕


Redux: When is a Smartphone OS not a Smartphone OS?

On the eve of Windows 10 and the reveal of Continuum for Phones, I thought it was worth revisiting something I posted three years ago.

With Windows Phone, many posit that Microsoft is moving everything to the Windows RT kernel (aka Windows 8); the phone could be as much Windows 8 as the tablet or desktop. Microsoft have been pretty candid about their duelling Metro/Desktop environments in Windows 8, but it starts to paint a familiar picture once all the information is in play. Your Windows Phone, which may run on ARM or perhaps x86, could dock and power a desktop display, with a mouse, keyboard, and even legacy Windows apps. In the future, I see no distinction between Windows Phone and Windows; for Windows Phone to fail, the entirety of Windows has to go down with it.

Indeed, with Windows 10 on phones Microsoft is delivering just that - Windows Phones will be able to power monitors, keyboards & mice and run Universal Windows apps just like you can on your Windows 10 PC. They see it as a huge opportunity for all the people whose first & only computer is their smartphone.

A whole new generation of computing, where everything can be powered by one device; that's where Microsoft and Google are positioning themselves. They are platform makers, and this is the most obvious and inevitable platform play. If you extrapolate a little, it's quite clear that this goes beyond devices; if we're talking about dumb desktop shells that you dock into, why not the same for phones and tablets? What if the computer itself is something you always wear - a wristband, perhaps? Wirelessly, it could beam its display to a blank shell of a smartphone in your pocket, or the blank shell of a tablet, or a desktop PC, or augmented reality glasses.

Microsoft intends for Continuum to power 2-in-1 laptop/tablet-looking devices with no internals, wirelessly - your entire computing experience beamed from your smartphone.

Eventually the physical connectors will go away, and the docking will all be wireless - at that point, for how long will we still need distinct devices, CPUs, memory and data connections for each display and input mechanism in our lives?

The future present is kinda awesome, huh?


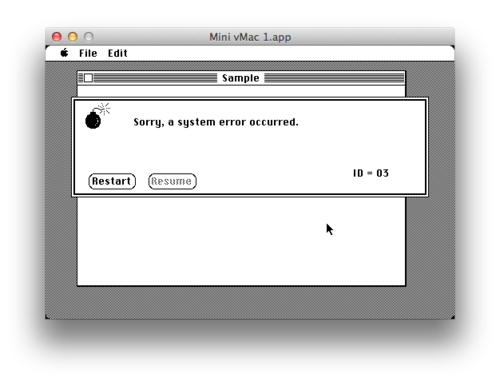

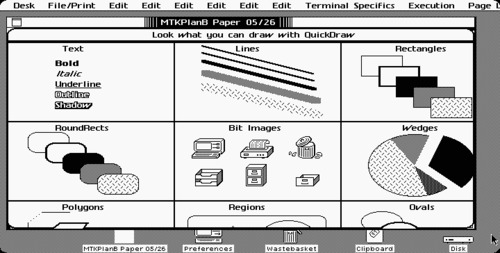

MPW, Carbon and building Classic Mac OS apps in OS X

MPW

In 2014 I came across a project on Github described as "Macintosh Programmer's Workshop (mpw) compatibility layer".

There has never been a good way to compile Classic Mac OS apps on modern OS X - for the most part, you were stuck using ancient tools, either Apple's MPW or CodeWarrior, running in a VM of some sort. CodeWarrior, of course, is not free, and MPW only runs on Classic Mac OS, which is unstable at the best of times and downright nightmarish when trying to use it for development in an emulator like SheepShaver.

Enter 'mpw' (which I will refer to in lowercase throughout as something distinct from Apple's MPW toolset).

mpw is an m68k binary translator/emulator whose sole purpose is to try and emulate enough of Classic Mac OS to run MPW's own tools directly on OS X. MPW is unique in that it provided a shell and set of commandline tools on Classic Mac OS (an OS which itself has no notion of shells or commandlines) - this makes it particularly suited to an emulation process like mpw attempts to provide, as emulating a commandline app is a lot easier than one built for UI.
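To make ‘emulate enough of Classic Mac OS’ concrete: 68k Mac code called the Toolbox and OS through A-line traps, instruction words whose top nibble is 0xA, so an emulator hosting MPW's tools has to recognise those words and service them on the host side. The sketch below shows only that classification and dispatch step; it is not mpw's actual code, and `classify_trap`, `dispatch_trap` and the handler tables are hypothetical. It assumes the standard encoding in which bit 11 set marks a Toolbox trap (number in the low 10 bits) and bit 11 clear marks an OS trap (number in the low 8 bits, with the remaining bits used as flags); for example, `_InitGraf` is 0xA86E and `_NewPtr` is 0xA11E.

```c
#include <stddef.h>
#include <stdint.h>

typedef enum { NOT_A_TRAP, OS_TRAP, TOOLBOX_TRAP } TrapKind;

/* Classify a 68k instruction word and extract its trap number. */
static TrapKind classify_trap(uint16_t opcode, uint16_t *trap_number)
{
    if ((opcode & 0xF000) != 0xA000)
        return NOT_A_TRAP;              /* ordinary instruction */
    if (opcode & 0x0800) {
        *trap_number = opcode & 0x03FF; /* Toolbox trap: low 10 bits */
        return TOOLBOX_TRAP;
    }
    *trap_number = opcode & 0x00FF;     /* OS trap: low 8 bits */
    return OS_TRAP;
}

/* Hypothetical host-side implementation of a trap; returns 0 on success. */
typedef int (*TrapHandler)(uint16_t opcode);

static TrapHandler toolbox_handlers[0x400]; /* indexed by Toolbox trap number */
static TrapHandler os_handlers[0x100];      /* indexed by OS trap number */

/* Route an A-line word to its registered handler; -1 if it isn't a trap
   or nothing is registered for it yet. */
static int dispatch_trap(uint16_t opcode)
{
    uint16_t n = 0;
    TrapHandler handler = NULL;

    switch (classify_trap(opcode, &n)) {
    case TOOLBOX_TRAP: handler = toolbox_handlers[n]; break;
    case OS_TRAP:      handler = os_handlers[n];      break;
    case NOT_A_TRAP:   return -1;
    }
    return handler ? handler(opcode) : -1;
}
```

An emulator built this way would fill the handler tables with host implementations and call `dispatch_trap` whenever its CPU core hits an A-line instruction.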

At the time I came across the project, the author himself had never attempted using mpw to build a Classic Mac OS app - only commandline tools and Apple II-related stuff. Naturally, building a UI app was the first thing I'd try.

The Experiment

I started off by writing code just to see how well mpw emulated the MPW compilers, and over time managed to write a working shim of an app that could run on System 1.1g. This in itself was a learning process, not only in code but in piecing together the build process. All the sample code and documentation of the time was in Pascal, so I had to translate that to C - not so difficult, it turns out (technically, first I had to transcribe it from a PDF…).

Eventually I had something that worked, built a few sample projects, uploaded some to Github and left the classic Mac stuff for a while.

More recently, towards the end of 2014, mpw added support for the PowerPC tools, so I immediately set out to update my build processes to support that - a trivial effort.

However, now that it was possible, I really wanted to try Carbonization.

Why Carbon?

I vaguely knew what Carbon was from having lived through the OS 9 -> OS X transition, and that knowledge came with a certain amount of bias. "Carbon is that thing that badly ported OS 9 apps used, right?" It always felt 'off' in OS X, in the same way that cross-platform UI toolkits invariably feel off.

I knew I wanted to understand the process better, however, and see what would be involved in porting my sample projects to Carbon (and thusly, OS X). I read some books, and set to work.

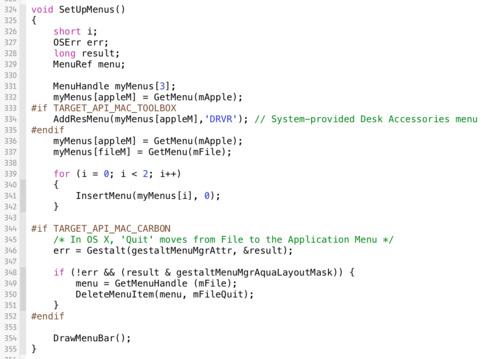

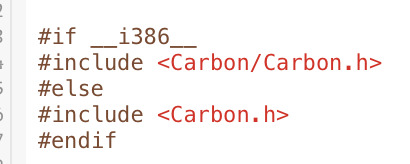

The actual porting process didn't take much time at all, and for the most part I ended up with fewer lines of code than where I started. Most of the changes involved #ifdef-ing out lines of code that weren't necessary anymore, and changing anything that directly accessed system structs to using accessor functions - a trivial amount of work (for an admittedly trivial set of projects).
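To show what ‘using accessor functions’ means in practice: Classic code read fields such as a window's `portRect` straight out of Toolbox structs, whereas Carbon made many of those structs opaque and supplied accessors instead (for example `GetPortBounds`). The sketch below uses hypothetical mock types (`MockRect`, `MockWindow`, `mock_get_port_bounds`) so it compiles anywhere; only the `TARGET_API_MAC_CARBON` conditional is borrowed from Apple's headers, and it selects between the two styles the way the #ifdefs in a dual-target source file might.

```c
/* Hypothetical stand-ins for Toolbox types, so the sketch compiles anywhere. */
typedef struct { short top, left, bottom, right; } MockRect;

typedef struct MockWindow {
    MockRect portRect;   /* under Carbon, treat this layout as private */
} MockWindow, *MockWindowRef;

/* Accessor in the style Carbon introduced: copies the bounds out instead of
   letting the caller reach into the system's struct. */
static MockRect *mock_get_port_bounds(MockWindowRef w, MockRect *bounds)
{
    *bounds = w->portRect;
    return bounds;
}

static short window_width(MockWindowRef w)
{
#if TARGET_API_MAC_CARBON
    /* Carbon build: go through the accessor. */
    MockRect r;
    mock_get_port_bounds(w, &r);
    return (short)(r.right - r.left);
#else
    /* Classic build: read the struct field directly. */
    return (short)(w->portRect.right - w->portRect.left);
#endif
}
```

Both branches compute the same value; the Carbon branch simply never depends on the struct's layout, which is what let Apple change it underneath.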

What interested me the most is how so much of the API remained identical - I was still using only functions that existed on System 1.0 in my app, but they were working just the same as ever in a Carbonized version. The single built binary ran on OS 8.1 all the way to 10.6 (care of Rosetta).

My mind wandered to Carbon as it exists in 10.10. While Apple decided not to port it to 64-bit (for all the right reasons), the 32-bit version of Carbon is still here in the latest release of OS X - I wondered how much of it was intact.

Turns out the answer is: all of it.

The only change I had to make was to point my header includes at the right place, but after that the whole app came to life exactly as it did on Classic Mac OS.

With the same source file, and only a handful of #ifdefs, I could build the same app for 1984's System 1.0 all the way up to the current release of OS X, Yosemite.

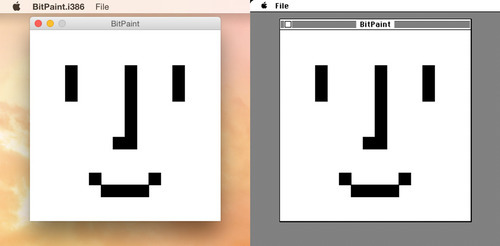

The Sample Project

Just to provide an example for this post, I put together a trivial drawing app called BitPaint. It isn't very interesting, but it should illustrate a few things:

• What's involved in bringing a trivial classic Mac app to Carbon
• How the Classic Mac OS build process works
• How much source compatibility exists between 1984's Toolbox and Carbon today

Carbon, redux

The more I dug into it, the more I came to the conclusion that Carbon was probably one of the most important things Apple did in building OS X. Even today it provides source compatibility for a huge chunk of the classic Mac OS software base. It kept the big companies from ditching Apple outright when they were needed the most, and gave them a huge runway - 16 years to port perhaps millions of lines of code to OS X while still being able to iterate and improve without spending thousands of man-years upfront starting from scratch. Over time, of course, Carbon has improved a lot and you can mix/match Carbon & Cocoa views/code to the point where you can't realistically tell which is which. I appreciate what a monumental effort Carbon was, from a technical standpoint. That Cocoa apps always felt 'better' is more to Cocoa's credit than Carbon being a bad thing - it's a lot easier to see that in hindsight.

Final Thoughts

I am incredibly psyched about mpw. Its developer, ksherlock, has been very responsive to everything I've come up against as I stress test it against various tools and projects.

Right now it's a fully usable tool that makes Classic Mac OS compilation possible and easy to do on modern versions of OS X, without requiring emulators or ancient IDEs or the like. To my knowledge, this is the first time this has been possible (excluding legacy versions of CodeWarrior).

I have used this toolset to build all kinds of things, including fun ports of my own apps. I'm sure I'll be coming back to it for a long time to come.

I'm hoping I'm not the only person who'll ever get to use it :-)

Misc Gotchas

I ran into a few things along the way that are worth noting, mostly because information about them either doesn't exist or is difficult to find on the web - check BitPaint's makefile for context on any of these:

Pascal Strings

You want to tell clang to enable Pascal-style strings (-fpascal-strings).
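For anyone who never met them: a Pascal string is a single length byte followed by up to 255 bytes of text, with no terminating NUL, and the Toolbox expects them throughout. With -fpascal-strings, clang accepts literals such as "\pHello" in C. The following is a minimal Python sketch of that byte layout only; the helper names are hypothetical and nothing here is part of the Toolbox.

```python
def to_pascal(text: bytes) -> bytes:
    """Encode bytes as a Pascal string: one length byte, then the data, no NUL."""
    if len(text) > 255:
        raise ValueError("a Pascal string holds at most 255 bytes")
    return bytes([len(text)]) + text


def from_pascal(data: bytes) -> bytes:
    """Decode a Pascal string, ignoring anything after the counted bytes."""
    return data[1:1 + data[0]]


print(to_pascal(b"Hello"))  # b'\x05Hello'
```

The counted bytes of a C literal "\pHello" follow this same layout: a length of 5, then the five characters.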

-mmacosx-version-min=10.4

If you specify -mmacosx-version-min=10.4, your Intel binary will work all the way back to 10.4; otherwise, it will crash on launch trying to use invalid instructions.

PICTv1

Systems 1-6 support only one picture format for resources, and that's PICTv1. Helpfully, it seems like nothing on Earth supports the creation of PICTv1 files anymore, so I wrote a very suboptimal converter (but it works well): https://github.com/steventroughtonsmith/image2pict1
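To give a feel for what a PICTv1 resource contains, here is a minimal Python sketch that hand-assembles one: a 16-bit picSize, the picFrame rectangle, then one-byte version 1 opcodes (picVersion 0x11, clipRgn 0x01, paintRect 0x31) ending with EndOfPicture 0xFF. It draws a single black rectangle rather than a bitmap, so it is not how image2pict1 works; a real converter would emit a bitmap opcode such as PackBitsRect instead. The opcode values come from my reading of the QuickDraw picture format, so treat this as an unverified sketch.

```python
import struct


def rect(top: int, left: int, bottom: int, right: int) -> bytes:
    """QuickDraw Rect: four big-endian signed 16-bit values (top, left, bottom, right)."""
    return struct.pack(">4h", top, left, bottom, right)


def minimal_pict1(width: int, height: int) -> bytes:
    """Build a tiny PICTv1 resource body that paints one rectangle in the pen pattern."""
    frame = rect(0, 0, height, width)
    body = b"".join([
        b"\x11\x01",                               # picVersion opcode, version 1
        b"\x01" + struct.pack(">h", 10) + frame,   # clipRgn: rectangular region (size 10 + bbox)
        b"\x31" + frame,                           # paintRect using the default (black) pen pattern
        b"\xff",                                   # EndOfPicture
    ])
    # picSize counts the whole picture, including itself and picFrame.
    pic_size = 2 + len(frame) + len(body)
    return struct.pack(">H", pic_size & 0xFFFF) + frame + body
```

A standalone PICT file would additionally start with a 512-byte header, which resource data omits.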

OS X Packages

When you package a Carbon binary into a .app folder structure, as necessary for OS X, you'll find it can no longer locate its resource fork, even though running it from the command line works fine. Instead, you can put the resource fork into a data file inside the bundle's Resources folder and it will work as expected.
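The bundle layout described above can be sketched as follows (Python, purely illustrative). The resource fork's bytes are written to an ordinary data file in Contents/Resources. Naming that file after the executable with a .rsrc extension is my assumption about what Carbon looks for, and the function names are hypothetical; compare with BitPaint's makefile before relying on it.

```python
import os
import plistlib


def bundle_layout(exe_name: str, binary: bytes, rsrc: bytes) -> dict[str, bytes]:
    """Map bundle-relative paths to file contents for a minimal Carbon .app."""
    info = {
        "CFBundleExecutable": exe_name,
        "CFBundleName": exe_name,
        "CFBundlePackageType": "APPL",
    }
    return {
        f"Contents/MacOS/{exe_name}": binary,
        # Assumption: the resource fork's bytes as a plain data file named after the executable.
        f"Contents/Resources/{exe_name}.rsrc": rsrc,
        "Contents/Info.plist": plistlib.dumps(info),
    }


def write_bundle(app_dir: str, exe_name: str, layout: dict[str, bytes]) -> None:
    """Write the layout to disk and mark the executable as runnable."""
    for rel_path, data in layout.items():
        path = os.path.join(app_dir, rel_path)
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "wb") as f:
            f.write(data)
    os.chmod(os.path.join(app_dir, "Contents", "MacOS", exe_name), 0o755)
```

On OS X, the resource fork of a built binary can be read as ordinary bytes by opening the path with /..namedfork/rsrc appended.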

'SIZE'

If you accidentally omit your SIZE resource, your app will launch on OS X but appear to hang, unresponsive, in the background. I ran into this more than once.

'carb'

From what I can tell, including a 'carb' resource in your binary will stop it from launching on System 7.x, while it runs fine on Systems 1-6 and 8-9.2.2. I'm not sure if this is an MPW problem or a me problem, but I lost quite a bit of time to "This version of MPW is not compatible with your system" alerts from my apps before realizing this.

Packaging!

Those who knew Classic Mac OS will be well accustomed to type/creator codes and resource forks; those who did not will be absolutely baffled by trying to figure out why they can't open their files/disk images/binaries. I run SetFile on my disk images after creation so that DiskCopy will be able to see/open them, and I BinHex-encode the disk images so I can safely transfer them to a real Mac using Internet Explorer without losing the resource fork. Neither Samba (as used in VMware's Shared Folders) nor FAT32 supports resource forks, so they will get stripped and render your files unusable. SheepShaver's external folder does preserve resource forks, so you're totally fine there.
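Type and creator codes are four-character codes kept in a file's 32-byte Finder info: the type in the first four bytes, the creator in the next four. As a minimal Python sketch of that blob (the helper is hypothetical; 'dImg'/'dCpy' is the type/creator pair I'd expect DiskCopy 4.2 images to use, so verify it for your own images):

```python
def finder_info(file_type: str, creator: str) -> bytes:
    """Build a 32-byte FinderInfo blob: type in bytes 0-3, creator in 4-7, rest zeroed."""
    codes = [code.encode("mac_roman") for code in (file_type, creator)]
    for raw in codes:
        if len(raw) != 4:
            raise ValueError(f"{raw!r} is not a four-character code")
    # Finder flags, icon location and extended info are left as zeros in this sketch.
    return codes[0] + codes[1] + bytes(24)


blob = finder_info("dImg", "dCpy")
print(blob[:8])  # b'dImgdCpy'
```

SetFile -t dImg -c dCpy sets the same two fields; alternatively, the hex from blob.hex() should be writable with xattr -wx com.apple.FinderInfo on OS X.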

Rez

MPW includes a version of the Rez tool (which compiles your resource forks for you), but currently mpw is unable to emulate it successfully. Fortunately, Xcode still ships with Rez and today's Rez seems almost unchanged from the version included with MPW all those years ago. Pass it the Classic Mac OS set of includes and it's happy to spit out resource forks compatible with System 1.0.

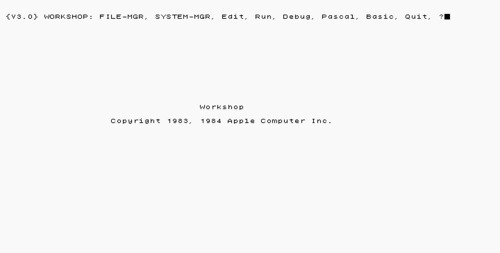

Lisa Pascal Development in Lisa Workshop

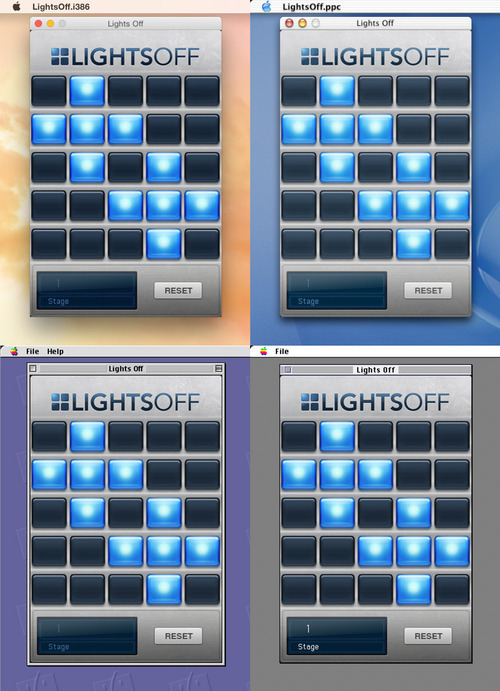

Tonight's project: learn how to write code that runs on Apple's LisaOS. In this piece, I am using Lisa Office System 3.1, with Workshop 3.0.

As you can imagine, there hasn't been any kind of documentation on this in decades, so it was all learned through painful trial and error, and scouring old manuals for information. Fun!

Getting Started

Lisa's development environment is the Workshop. If you insert its disks into your running Lisa (or emulator), you'll find the OS can't read them. This is no mistake: you have to boot from Workshop disk 1 and install it to your hard disk, effectively creating a dual-boot environment.

Boot into Workshop, and you're greeted with a text-based UI. Virtually everything you do in this environment works like this (except for the text editor, thankfully).

You will have to become very well acquainted with these tools to get your own code up and running. Pressing a letter on the keyboard will open the corresponding named option onscreen. Open Edit with 'E'.

The Sample App

Let's write a simple 'Hello World' Pascal app - just about the only program code I can fit in a single screenshot. In Edit's File menu, choose 'Tear Off Stationery', and hit enter for the next prompt. Use the following code, then File -> Save & Continue (name it LisaTest - the .TEXT extension is automatically added).

Exit the editor to drop back to the shell.

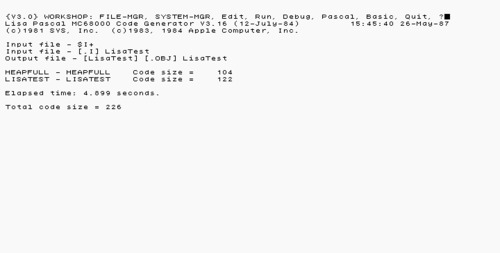

Compiling

Next up, the Pascal compiler! Press 'P'. Type the name of the file (extension automatically implied), give it nothing for a list file, and you can leave the output file (.OBJ) as-is. If all goes well, you should get the following.

Linking

Now, you have an object file, but to run the app you will need to link it first. Press 'L' at the Workshop prompt (it's a hidden option) to enter the Linker. Here's where it gets complicated - not only do you have to pass it your OBJ file, but you also need to know which libraries to link (good luck finding any documentation online!). The Lisa Pascal 3.0 Reference Manual has a complete listing & description of all the Workshop files & libraries as an appendix if you run into issues finding the right libraries to link. In this example, you need three: 'sys1lib', 'iospaslib', and 'qd/support'.

Completing the Installation

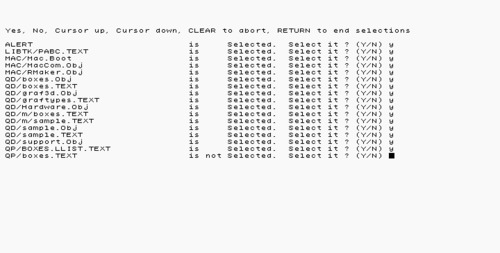

There is some bad news: the qd/support (QuickDraw) interface is not installed on the hard disk by default. I recommend copying all the files from disks 7-9 using the FILE-MGR.

This is a convoluted process; you need to insert each disk, copy everything, then open the Editor to use the File menu option to eject the disk (!).

The copy syntax is as follows: the from option should be '-#13-' and the to option should be '='.

You will then be asked to confirm (Y/N) every single file on the disk before the copy starts.

After each disk has finished copying, eject it from the Editor. Once you have everything, you're ready to continue.

Back to Linking!

Give Linker the input as shown above (note the output file name - you don't want it to accidentally overwrite your compiled Pascal OBJ), and you should end up with a compiled, runnable app.

Running

Let's test this theory!

Press 'R' at the Workshop prompt, give it your app's name (LisaTestApp, in this case - and the .OBJ extension is implied), and hit enter.

Success! You now have a running app that uses QuickDraw to display text. Getting this installed to the Office System (the regular LisaOS) is a whole other hassle.

Installing

You need to copy your LisaTestApp.OBJ to {T*}Obj, replacing * with a high number. This is your 'Tool' number, and you don't want it to overwrite or conflict with any existing Tool numbers, system or otherwise. In my case, {T1337}Obj, of course.

From the Run prompt, use InstallTool. This is pretty straightforward, and should be easy to navigate. Give it your chosen Tool ID and it will do the rest.

With that, quit and reboot into the Lisa Office System. You should have a new icon on your hard drive that runs your code when double-clicked!

MASSIVE DISCLAIMER

…and at this point, you realize your app has locked up, and the only thing you can do is reboot LisaOS. Welp.

Lisa's Office System environment needs a lot more boilerplate code to actually make a running app, with windows and menus. This is so much more complicated that it's well outside the scope of this piece. There is a very helpful set of tutorials and labs (Lisa ToolKit Self-Paced Training, published by Apple) from 1984 that will help you get up and running with a ToolKit stationery app. There's also plenty of QuickDraw sample code on the Workshop drive from which you can learn and explore.

Sadly, as I mentioned at the start, virtually no documentation for any of this exists online. If you're lucky (like I was), you might be able to glean enough information from ancient PDFs and manuals.

As a parting gift, I leave you with some QuickDraw sample code ported to a working object-oriented Pascal (Clascal) ToolKit app in Lisa Office System:

UPDATE

Rather than try and tutorialize it, I've posted my complete ToolKit sample code on Github. Perhaps someday it will be useful to somebody. :-)

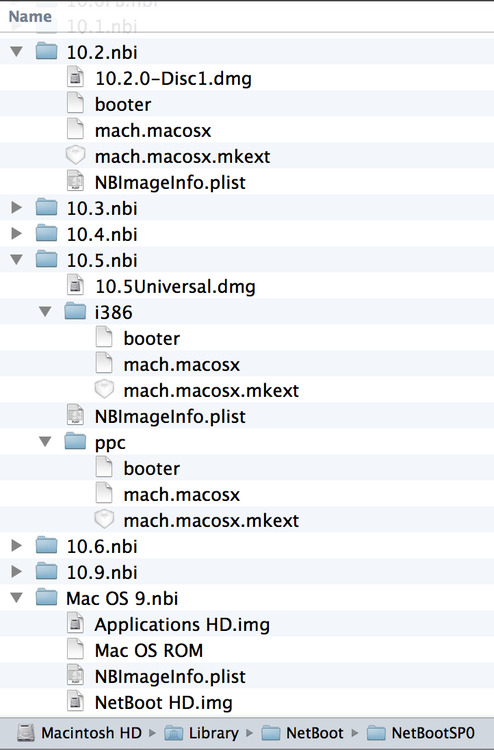

NetBoot PowerPC & Intel Macs from Mavericks Server

As part of some maintenance here, I did a little research as to how to set up NetBoot for various different Macs. For this piece, interchange 'NetBoot' with 'NetInstall' if you're being pedantic - I'm NetBooting the install disc for a particular OS. NetBooting a full install should also be possible using the same techniques.

Mavericks Server (an app free to all developers) has a built-in NetBoot (NetInstall) server GUI, but it only supports a handful of modern versions of OS X. Thankfully, if you follow the instructions in the bootpd manpage you can manually build NetBoot images supporting both PowerPC and Intel Macs going back to OS X v10.2.

It should be noted that 10.0, 10.1, and even Mac OS 9 NetBoot images are also 'supported' in theory; however, the OSes themselves will not actually mount the OS over the network (presumably due to changes in OS X, AppleTalk, AFP, etc. over time, more so than intentional deprecation), so none of them will boot as intended. If you want to hack together a custom Mac OS ROM to boot Classic over NetBoot, be my guest!

Reasons to use NetBoot

You regularly need an easy, fast, way of installing OS X on multiple machines. NetBoot ALL the things!

You may not have a functional disc drive anymore (it happens!)

Installing over Ethernet is much faster than from the CD-ROM drives in older Macs

It works over WiFi on modern Macs

You do custom kernel or OS development, and need a faster way of booting PPC or x86 Macs

Gigabit Ethernet is quite likely faster than any SSD you could install in an older Mac

Directory Structure

NetBoot images are folders in your NetBoot storage location (typically /Library/NetBoot/NetBootSP0) with a .nbi extension and a handful of files inside - typically a single disk image, a settings plist, a bootloader, a kernel and a kernel extension cache. You can provide both PowerPC and x86 versions of the latter three items for OSes that support both PowerPC and x86, like 10.4 or 10.5.

Building a NetBoot Install Image

First thing to do is make or find a disk image of the OS you want to install. Be warned that a Mac usually comes with the minimum OS that will boot it, so if you make, e.g., a 10.2.0 install disc image, it will not work on a first-generation Power Mac G5, because that G5 needs at least 10.2.7, the version it shipped with. As updated retail discs are generally hard to come by, you may want to make images of the discs that came with your Mac when you bought it. Common sense applies, YMMV.

You will need some things from the disk image to build the NetBoot image in the first place; these files are architecture-specific if you're making a Universal image, so provide copies with the right arch in the corresponding ppc or i386 folder. If you don't use an architecture subfolder, it will assume the files are ppc-only.

Disk Image

Make a disk image of your chosen install disc; various versions can also be downloaded from Apple's Developer Site going back to 10.2 Server. You will want to convert any ISOs to a UDRW dmg first (hdiutil convert -format UDRW image.iso -o output.dmg).

Bootloader

The bootloader, BootX on PowerPC (the CHRP Open Firmware Mac OS X booter) or boot.efi on Intel, is typically renamed 'booter' inside the NetBoot image. You can find it on the install disc of your choice at the following paths:

/usr/standalone/ppc/bootx.bootinfo

/usr/standalone/i386/boot.efi

This file is what shows the initial Apple logo or Happy Mac while it loads the kernel & kextcache over the network (you'll know the kernel is loaded successfully when you see the spinner or rainbow wheel).

Kernel & Kextcache

The OS X kernel is /mach_kernel on your install disc. Copy this to your NetBoot image as 'mach.macosx'. Similarly, the kext cache is at /System/Library/Extensions.mkext; copy it as 'mach.macosx.mkext'. You may find you need or want to 'lipo' the specific architecture you want out of both of these binaries into the corresponding subfolder.
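The file shuffling in the last few sections can be summarised as a copy plan. This Python sketch only computes source/destination pairs for a Universal image (source paths relative to a mounted install disc; the function and constant names are hypothetical). Doing the actual copies, and thinning the fat kernel and mkext with something like lipo <file> -thin ppc -output <dest>, is left to you.

```python
import os

# Bootloader per architecture on the install disc; it becomes 'booter' in the .nbi.
BOOTERS = {
    "ppc": "usr/standalone/ppc/bootx.bootinfo",
    "i386": "usr/standalone/i386/boot.efi",
}
# Files shared by both architectures (fat binaries) and their names in the .nbi.
SHARED = {
    "mach_kernel": "mach.macosx",
    "System/Library/Extensions.mkext": "mach.macosx.mkext",
}


def nbi_copy_plan(disc_root: str, nbi_dir: str, archs: list[str]) -> list[tuple[str, str]]:
    """Return (source, destination) pairs, one arch subfolder per architecture."""
    plan = []
    for arch in archs:
        dest = os.path.join(nbi_dir, arch)
        plan.append((os.path.join(disc_root, BOOTERS[arch]), os.path.join(dest, "booter")))
        for src, name in SHARED.items():
            plan.append((os.path.join(disc_root, src), os.path.join(dest, name)))
    return plan
```

Remember that files placed outside an architecture subfolder are treated as ppc-only.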

NBImageInfo.plist

Refer to the bootpd manpage for detailed instructions as to how to build this settings file. For reference, here's the one I use for my Universal 10.5 image. 'NFS' seems to be the best supported Type for older versions of OS X, from what I've found. You must use a unique 'Index' for each image.
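For illustration, here is a minimal Python sketch that writes an NBImageInfo.plist with plistlib. The key names (Name, Index, Type, Kind, IsEnabled, IsInstall, IsDefault, BootFile, RootPath, Architectures, EnabledSystemIdentifiers) are from my recollection of the bootpd manpage and the values are assumptions for an NFS install image; it is not the plist I use, so verify every key against man bootpd.

```python
import plistlib
from typing import Optional


def nbimageinfo(name: str, index: int, root_path: str, archs: list[str],
                enabled_models: Optional[list[str]] = None) -> bytes:
    """Serialize a minimal NBImageInfo.plist for an NFS install image."""
    info = {
        "Name": name,
        "Index": index,              # must be unique for each image
        "Type": "NFS",               # best supported type for older OS X, in my experience
        "Kind": 1,                   # assumption: 1 means Mac OS X (0 would be Mac OS 9)
        "IsEnabled": True,
        "IsInstall": True,           # an install (NetInstall) image, not a diskless boot
        "IsDefault": False,
        "BootFile": "booter",        # the renamed BootX / boot.efi
        "RootPath": root_path,       # disk image filename inside the .nbi folder
        "Architectures": archs,
    }
    if enabled_models:
        # Restrict the image to specific models, e.g. so only one PPC image is visible.
        info["EnabledSystemIdentifiers"] = enabled_models
    return plistlib.dumps(info)
```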

Booting

Once you have a properly-built image, have restarted your NetBoot server instance (toggle the switch in Mavericks Server), and have your target Mac connected via Ethernet, you should see a NetBoot icon if you hold alt/option while booting. At this point, you can select it and hold down the usual modifiers when booting (v for verbose, for instance).

Limitations

Intel Macs support multiple NetBoot sources, with names, and modern devices will NetBoot happily over WiFi.

PowerPC Macs have certain limitations where NetBoot is involved; they seem to only show a single NetBoot disk, and do not show its name. This can cause problems if you have more than one PPC NetBoot volume, so you can either use a whitelist of supported device models/MAC addresses or disable the other PPC images. Typically they are only supported over Ethernet, and are far more finicky.

Misc Notes

At the time of this writing, OS 9, 10.0 and 10.1 do not boot completely anymore. If this changes in the future, it's worth noting the following:

10.0's install disc does not have an Extensions.mkext. You can build one yourself using mkextcache on a machine (or VM) running 10.1.

Mac OS 9 NetBoot images are crafted in a different way (documented in the manpage!), but fortunately Apple still provides a pre-made image on its website. You can use the excellent Pacifist to extract its contents on a modern machine.

Earlier versions of OS X, like the Public Beta or Developer Previews have a very different disc layout, so they couldn't be NetBooted in the same way anyway. That's not to say it can't be done… ;-)

The Quest for A Perfect Lotto Machine Simulation

Today, Lotto Machine went live on Google Play for the first time, 23 months after the launch of the original on iOS.

Why did it take so long? Actually, it didn't! This new version is the result of a project I've been working on for [just] a week that is a complete rewrite of the app - in 3D, with 3D physics.

How did we get here?

I've always maintained that the physics simulation is my inspiration for making this app - originally built in Cocos2D, then later ported to SpriteKit for the iOS 7 launch. Both of these, however, are 2D simulations (since apps are generally 2D, this seemed the obvious choice).

After launching the first wave of High Caffeine Content's iOS 7 ports, I turned my attention to Unity3D as my next learning project. After spending some months learning how to build things with it, and after the launch of Unity3D's 2D workflow, it suddenly became clear that one could build a 2D app relatively easily in it.

Lotto Machine seemed like the perfect choice. In fact, after a few hours I had a running prototype that provided a much better simulation than I had on iOS, and all without a single line of code.

Of course, the primary benefit of using Unity3D over pretty much anything else is the support for virtually any platform out there. Web? Check. Desktop? Check. iOS, Android, Windows Phone? Check.

Not only could I use Unity to build a better app for iOS, but I could also use it to bring the app to a whole host of new platforms.

Fast-forward to now, and we have one finished Android app. As the Google Play store process is the fastest of the lot, it made sense for Android to be the first port of call: if there were problems, I could push 240 updates (one per hour) to the app on Google Play before the first binary would even have hit the App Store or Windows Phone Marketplace. If all goes well, there can be a Windows Phone release before long, and eventually it will make its way full-circle to iOS.

The quest for a perfect simulation has taken me through many engines and rewrites, and has ended up with me rebuilding the app in a 3D game engine tool, with 3D physics and lighting. It's still not perfect, by any means, but it feels like an achievement.

2007's pre-M3 version of Android: the Google Sooner

When Google first showed off Android, they showed it running on a device very similar to the BlackBerry or Nokia E-series devices of the time. This device was the Google Sooner - an OMAP850 device built by HTC, with no touchscreen or WiFi. This was the Android reference device, the device they originally built the OS on.

Recently, I got access to a Google Sooner running a very early version of Android. With all the recent information coming out of the Oracle vs Google trial, I thought it would be interesting to take you on a brief tour of the OS. The build of Android this is running was built on May 15th 2007 - four months after the iPhone was announced; the first M3 version of Android was announced in November 2007, and Android 1.0 didn't come 'till a year later.

Hardware

The Google Sooner, aka the HTC EXCA 300, runs on an OMAP850 with 64MB RAM, and comes in two colors: black, and white. It has a 320x240 LCD screen (non touch) and a 1.3 megapixel camera sensor on the back, which supports video recording. Its curvy profile is surprisingly light and has a certain quality to it. It has a full QWERTY keyboard, a four-way d-pad, four system buttons (menu, back, home, and favourites) and call/end call buttons. Inside is a 2G radio, which is capable of EDGE speeds, but no WiFi or 3G. It has a mini-SD slot (not micro-SD), and a mini-USB port.

Software

This device runs build htc-2065.0.8.0.0, and was built on May 15th of 2007. This means it's much earlier than any previous look we've had at Android to date - a good six months before the milestone 3 (M3) version of Android, the initial release, was announced.

Home Screen

This is the primary interface to Android. You get a handful of Gadgets (a Clock, for example, and applications can provide their own), and a Google Search bar (that pops up when you hit the down arrow). There is no conventional homescreen with widgets - this is literally all you get when you turn on the device. It was an OS designed to search Google from the very start.

Apps

Hit the Home button and a drawer of apps shows up. This appears to be the shortcuts bar - any time you're inside an app you can hit the menu key and add the app to this. You can also add specific activities in an app to the favorites bar - for example Bluetooth settings, similar to those allowed on Windows Phone 7. You can also access your notifications and Cell/Battery settings from the shortcuts bar.

Hit the down arrow and the shortcuts bar expands to show all applications installed on the system. This acts just as you'd expect from a 2006-era non-touch device. There are no sorting or view options; what you see is what you get. The applications drawer appears as an overlay, so you can access it from any app without navigating back to a home screen.

Funnily enough, there's a second 'All Applications' screen, this time housed inside an app. It has a slightly different look and feel, but works exactly the same.

Future home screen

If you remember the M3 version of Android, as shown in the original announcement video, it had a very different home screen. This homescreen actually exists on this Sooner's build of the OS, but as an app. I imagine it wasn't finished yet, and as they prototyped this new homescreen they just left it as an app you can launch (similar to how you can have multiple launchers on Android today). Here you have the shortcut dock across the bottom of the home screen. Eventually, by the time Android was released, this became the traditional homescreen we know today.

Browser

The browser on the Sooner is based on WebKit [ Mozilla/5.0 (Macintosh ; U; Intel Mac OS X; en) AppleWebKit/522+ (KHTML, like Gecko) Safari/419.3 ] and seems to pretend to be a Mac (perhaps to help mask itself, since this is many months before Android was announced). Browsing is a painful and slow experience, even though rendering isn't too bad.

Gmail

A rudimentary Gmail app is included, with basic access to your email. This is a far cry from Gmail on Android today.

Google Talk

Google Talk is present and seems to work great (if you like green…).

Other Apps

Here are just a few of the included apps. Some work, some don't, and some work partially. All are very rudimentary at this stage. I'm not sure if this was before or after The Astonishing Tribe [re]designed Android, but I'm betting before. Although Maps, YouTube and Google Earth come on this device, I wasn't able to get any of them working to show you (Maps and YouTube launch, but neither seems to be able to access content; it's quite possible that the server endpoints they used for testing no longer resolve).

Note Pad

Address Book

Calculator

Calendar

This doesn't quite work in my build, but here is what the error looks like.

Camera

Text Messages

Wrap-up

It's quite clear that Android was being designed to a completely different target before the iPhone was released. What we see here would have fitted in perfectly with the world of Symbian and BlackBerry. This early build of Android is in fact even less capable and mature than the 2004 release of Symbian Series 90 (Hildon), the OS that runs on the Nokia 7700 and 7710 - Nokia's first, and only, pre-iPhone touchscreen smartphones. It's not hard to see that iPhone really changed the thinking across the entire industry, and caused everybody to start from scratch. Android, webOS, Windows Phone 7, Windows 8, BlackBerry 10 - all of these exist because of the iPhone, and standing on its shoulders they have made some amazing and unique contributions to the ecosystem.

As I mentioned in my Úll talk last week, the moment we saw the iPhone for the first time it was so clear that everything beyond this point would be completely different - it wasn't just about smartphones, it was about the future of computing. We live in a world that would have seemed distantly futuristic only 5 years ago, thanks to all these OSes. It's amazing how far we've come in such a short time, and I can't wait to see what comes next.

When is a Smartphone OS not a Smartphone OS?

If you're thinking purely in terms of 'smartphones' whenever you think of iOS, Android and Windows Phone, you've blown it. It's so incredibly short sighted to think of these OSes as a smartphone play - they are all so much more than that.

These are the three OSes going to power consumer devices (phones, tablets, laptops, desktops, TVs, etc) for the next 20-30 years, or be the template for such. Google recently bought Motorola, so many assume they'll be making smartphones and tablets under a Google brand. But a key thing being overlooked is that Motorola's smartphones are also PCs; starting with the Atrix, every flagship Motorola phone has had the capability to turn into a laptop or desktop PC, or media center with the requisite dock. You drop the phone in the dock, start using the keyboard and mouse on your big screen LCD, and you have an instant computer - one that was in your pocket seconds earlier.

This is as much a part of Google's strategy as devices; Google is poised to take on the 'PC' market, and Android is finally their vehicle to let them do this.

Many originally called ChromeOS a shot across Microsoft's bow; today, several years later, it's unclear where ChromeOS fits into an Android-everywhere strategy. Maybe the two will merge in the future, or maybe ChromeOS is more a research project for 'future disruption', to steal a term from Nokia. I don't think we're ready for a browser-only platform just yet, and I don't think the browser is the final evolution of the web by any stretch. Apps are as much part of the web as websites are; the web won't always mean HTML/JavaScript. Some key problems to solve are compatibility and search across this new web, of course, but that's a whole other article.

With Windows Phone, many posit that Microsoft is moving everything to the Windows RT kernel (aka Windows 8); the phone could be as much Windows 8 as the tablet or desktop. Microsoft have been pretty candid about their duelling Metro/Desktop environments in Windows 8, but it starts to paint a familiar picture once all the information is in play. Your Windows Phone, which may run on ARM or perhaps x86, could dock and power a desktop display, with a mouse, keyboard, and even legacy Windows apps. In the future, I see no distinction between Windows Phone and Windows; for Windows Phone to fail, the entirety of Windows has to go down with it.

A whole new generation of computing, where everything can be powered by one device; that's where Microsoft and Google are positioning themselves. They are platform makers; this is the most obvious and inevitable possible platform play. If you extrapolate a little, it's quite clear that this goes beyond devices; if we're talking about dumb desktop shells that you dock into, why not the same for phones and tablets? What if the computer itself is something you always wear - a wristband perhaps. Wirelessly, it could beam its display to a blank shell of a smartphone in your pocket, or the blank shell of a tablet, or a desktop PC, or augmented reality glasses.

Of course, this won't happen tomorrow, but it could easily happen in the next five years. It's not something on the distant horizon, either. We will start to see more docking phones like Motorola's, or phone/tablet devices like Padfone, or Google's Glasses technology. Eventually the physical connectors will go away, and the docking will all be wireless - at that point, for how long will we still need distinct devices, CPUs, memory and data connections for each display and input mechanism in our lives?

Apple, unlike the other companies, is a device maker, and completely opaque. I'm not sure a single-device strategy would make sense for them, at least in the short term, but I do expect iOS to spread to more devices and form factors. We've already seen it with iPad and AppleTV, and I still feel that iOS and OS X are on a collision course for the desktop. All of these OSes are the mainstream personal computer platforms of tomorrow.

The personal computer hasn't yet come. One day we will be the personal computer. Current generation smartphone OSes are only the tip of the iceberg, and the big players understand this. It's a completely different game being played now. And this, more than anything else, is why companies who think they're just making smartphones (RIM, for example) will have no place in the world of tomorrow.

Return AirPrint sharing to Mac OS X v10.6.5

Today, Apple released Mac OS X v10.6.5, which was supposed to bring AirPrint to any printer connected to your Mac. The only problem is that a last-minute issue (due to patent trolling?) caused Apple to pull AirPrint support from OS X.

Don’t panic! You can return it, but you’re going to have to pull some files from a prerelease version of 10.6.5.

The files you need are:

• /usr/libexec/cups/filter/urftopdf

• /usr/share/cups/mime/apple.convs

• /usr/share/cups/mime/apple.types

If you migrate those from a 10.6.5 prerelease build (there seem to be many floating around torrent sites and file sharing sites - build 10H542 works; naturally I can’t link you to the files themselves, sorry!) to your machine you’re just one step away from having AirPrint working.

The final key thing is you have to remove and re-add your printer in the Print & Fax preferences pane. Once you do that (and share your printer in the Sharing preferences pane) it should show up on any iOS devices that support AirPrint.

Nerdy extra info: Basically, doing this should add the image/urf mimetype to your shared printer, and a new Bonjour field ‘URF’. Once you have those, it should work. Theoretically, there’s no reason someone can’t write a server application that broadcasts said Bonjour info and prints for you, so you don’t have to use files from a prerelease build. I would expect something like that to appear over the next few weeks.
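The Bonjour side can be illustrated with a small Python sketch of the DNS-SD TXT record wire format, in which each key=value pair is one length byte followed by its text. Only the URF key and the image/urf type come from this post; the other keys and every value (including URF=none) are placeholders I've assumed, not a verified AirPrint record.

```python
def txt_record(fields: dict[str, str]) -> bytes:
    """Encode key=value pairs as DNS-SD TXT record data (length byte + text each)."""
    out = bytearray()
    for key, value in fields.items():
        entry = f"{key}={value}".encode("utf-8")
        if len(entry) > 255:
            raise ValueError(f"TXT entry for {key!r} is too long")
        out.append(len(entry))
        out += entry
    return bytes(out)


# Hypothetical values: only 'URF' and 'image/urf' are taken from the post.
airprint_txt = txt_record({
    "txtvers": "1",
    "rp": "printers/MyPrinter",
    "pdl": "application/pdf,image/urf",
    "URF": "none",
})
```

As I understand it, such a record would be advertised on an _ipp._tcp service (AirPrint clients browse its _universal subtype), which a home-grown server could register with a tool like dns-sd -R.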

Thanks to Patrick McCarron for helping me debug this method

Good luck!

Apple's iBooks Dynamic Page Curl

A week ago at NSConference I decided to try and figure out how Apple performed the page curl animation shown in the iPad announcement keynote.

It ended up being pretty easy to implement, and the page curl code has been in the OS since the beginning (although it’s currently a private class; I’ve filed a Radar asking for the API to be opened, rdar://problem/7616859, which you can dupe if you’d like). Basically, you have to add the finger angle tracking code and work out some basic physics to make it believable. My code is pretty rough, but it gives you a basic idea of how to get this working.
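Tracking the finger comes down to turning a drag into two numbers: the direction of the drag and how far across the page it has gone. The Python sketch below shows that arithmetic under my own assumptions (a drag that starts near the right edge, progress clamped to 0-1); it is not the code from the sample project, and the names are hypothetical.

```python
import math


def curl_params(start: tuple[float, float], current: tuple[float, float],
                page_width: float) -> tuple[float, float]:
    """Turn a drag into (angle, progress) inputs for a page-curl effect.

    angle: direction of the drag in radians, measured from the positive x axis.
    progress: leftward travel across the page as a fraction, clamped to 0..1.
    """
    dx = current[0] - start[0]
    dy = current[1] - start[1]
    angle = math.atan2(dy, dx)
    progress = min(max(-dx / page_width, 0.0), 1.0)
    return angle, progress
```

With UIKit's y-down coordinates, a drag that moves up the screen gives a negative dy, so flip its sign if your curl expects y-up angles.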

Here’s hoping it’s opened up because if it’s not, then the iBooks app (distributed through the App Store) will be provably using private APIs which doesn’t help anyone.

Here’s a sample project that you should be able to build and run which shows the effect in action; the sample code currently works for iPhone 3.0 and above.

Download Sample Code

Nook v1.0 on Android Emulator

I decided to check out the Nook earlier today; naturally it’s not available in Ireland so I downloaded the firmware update from Barnes and Noble and set to work on getting it to run on something.

I didn’t expect to get very far, but after a little hacking it was actually working.

To get it to run I had to:

• grab the firmware update using the tools at http://code.nookdevs.com/

• unpack the system folder out of the firmware update using said tools and gunzip

• replace lib/libaudioflinger.so with that from a clean Cupcake build of Android (took it from the emulator)

• disassemble the classes.dex file inside framework/services.jar using baksmali

• modify ‘ServerThread.smali’ to remove the line "if-lt v0, v1, :cond_483" (it looped waiting for the eInk display)

• recompile the dex file using smali and re-insert it into the jar

• create a system.img from the system folder using mkyaffs2image

• replace the system.img of my Cupcake emulator build with the Nook one just created

• boot the emulator with a resolution of 480x944 (the highest I could get it; it’s about 120px too thin for the eInk display)
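The smali edit in the steps above is easy to script. A minimal Python sketch (the function name is hypothetical) that removes the matching line and fails loudly if it isn't there, so a different firmware build doesn't get silently 'patched':

```python
PATCH_TARGET = "if-lt v0, v1, :cond_483"


def drop_smali_line(source: str, target: str = PATCH_TARGET) -> str:
    """Remove every line whose stripped text equals `target`; raise if none matched."""
    lines = source.splitlines(keepends=True)
    kept = [line for line in lines if line.strip() != target]
    if len(kept) == len(lines):
        raise ValueError(f"{target!r} not found; is this the same firmware build?")
    return "".join(kept)
```

Run it over ServerThread.smali from the baksmali output before reassembling with smali.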

This is by no means a guide, but it should hopefully point more capable Android hackers in the right direction if they want to get this booting. I’m pretty sure that’s all that was required.

Networking works fine, and the arrow keys on the keyboard control page turning.

Here are some screenshots!

UPDATE:

A video for you non-believers ;-)

Nook on Android Emulator from Steven Troughton-Smith on Vimeo.

Speech Synthesis on iPhone 3GS

Posted this on Twitter a week back, but maybe it’s of some interest to blog readers; here’s how to do simple voice synthesis on the iPhone 3GS (3GS-only, I’m afraid). It’s a private API, but hopefully if we file enough Radars they’ll make it a public one.

To enable the following code to work you’ll need to link the VoiceServices.framework (from the PrivateFrameworks folder of the SDK) in your app.

NSObject *v = [[NSClassFromString(@"VSSpeechSynthesizer") alloc] init];
[v startSpeakingString:@"All your base are belong to us"];

Apologies for lazily typing it as ‘NSObject’ above, but you get the idea :-)

With that, you have simple speech synthesis in your application. Obviously you can’t include this in an App Store submission, as it links against a private framework, but you can use it in your internal applications. It requires the 3GS because the speech components just aren’t in the firmware for the older devices.


Using Dynamic Library Injection with the iPhone Simulator [REDUX]

I previously blogged about developing dynamic libraries / SpringBoard plugins using the iPhone Simulator included with the SDK.

Unfortunately, starting with iPhone OS 2.2, the old method of using a shell script to bootstrap SpringBoard no longer works. The long-way-round gdb method is still usable, but I wanted my plugins to load automatically again, so I had to find another way.

I devised a simple ‘Foundation Tool’ to bootstrap as before, in place of my previous shell script. The code is available here.

I’m not sure what the issue is, or whether it was intentional obfuscation (I’m watching you, Apple!), but I can confirm that this new method works fine.

Simply edit the source file linked above, compile it, rename your original SpringBoard binary to something else (“SpringBoard_b” in the example) and save your newly compiled bootstrapper in place of the original SpringBoard binary.

Now when you launch the iPhone Simulator, it should insert your library as before, so you get your quick development turnaround time back.


How to enable Emoji systemwide

As an addendum to my last post, I decided to figure out how to enable Emoji systemwide. My findings were first posted on Twitter, but I’m putting them here for persistence.

You need to edit the file /User/Library/Preferences/com.apple.Preferences.plist on the device; whether you use a jailbreak or just an iTunes backup editor to do this is up to you. Add a boolean key named KeyboardEmojiEverywhere and set it to true.

Then just go to the Keyboards section of the Settings app, hit Japanese, and turn on Emoji. It will work in any text field or view in the OS, including on websites, AND in the titles of items on SpringBoard (e.g. if you save a bookmark to the home screen).

Have fun!


Using Dynamic Library Injection with the iPhone Simulator

UPDATE: This method no longer works with iPhone OS SDK 2.2; please see my new article for an updated method.

Many developers who aren’t targeting the App Store are building extensions or plugins for SpringBoard, the iPhone’s shell, using a piece of software called MobileSubstrate, which simplifies loading your own code into SpringBoard. For development and debugging, though, this eats up valuable time: each time you build your plugin you have to delete the old version from the phone, sign the new one, push it to the phone, and restart SpringBoard. All these steps take a little time off your life, but if you have access to the iPhone Simulator included with the SDK, things are a bit easier!

You can set up automatic loading of your dynamic library by replacing the SpringBoard binary with a shell script that launches the original SpringBoard with the DYLD_INSERT_LIBRARIES environment variable set to the path of your library. Or, if you want a quick one-off test, you can use gdb as below:

• Run the iPhone Simulator

• Get the PID of the running SpringBoard

• Use gdb to attach to said PID

• Use the following gdb commands to load your code

call (int)dlopen("/path/to/MyPlugin.dylib", 2)

continue

Your code should now inject itself into SpringBoard on the iPhone Simulator, so you can test on the desktop, take screenshots, check for leaks, or simply stress-test your application against the different builds of the iPhone OS (the Simulator allows version switching on the fly). I find this very useful (I use the DYLD_INSERT_LIBRARIES method), as it lets me iterate very quickly when developing Stack. You can also see SpringBoard’s console output by launching the iPhone Simulator from Terminal, which is invaluable for debugging. Hope this post is of some use.
