#@dataclass


Text

Scope Computers


📊 Master the Future with Data Science: Your Path to Innovation Starts Here! 🚀

#data#science#training#learndatascience#studydata#techtraining#onlinecourse#dataeducation#datalearning#scienceclass#techskills#dataclass#sciencetraining#courseonline#educationtech#skillup#studyonline#techscience#datastudy#scienceeducation


Text

A Technical Guide to Python DataClasses

As the name suggests, these are classes that act as data containers. But what exactly are they? In this blog, MarsDevs introduces Python DataClasses and walks through their technical details, showing how you can define classes with less code and get more functionality out of the box.
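As a quick illustration of that out-of-the-box functionality, here is a minimal sketch. The InventoryItem class and its fields are hypothetical, chosen only to show what @dataclass generates (__init__, __repr__, __eq__) plus the field() and asdict() helpers from the standard library.

```python
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class InventoryItem:
    """A plain data container; @dataclass writes __init__, __repr__ and __eq__."""
    name: str
    unit_price: float
    quantity: int = 0                               # simple default value
    tags: List[str] = field(default_factory=list)   # mutable defaults need a factory

    def total_cost(self) -> float:
        # Ordinary methods still work exactly as in any other class.
        return self.unit_price * self.quantity


item = InventoryItem("widget", 2.5, 4)
print(item)                                      # generated __repr__
print(item.total_cost())
print(asdict(item))                              # convert to a plain dict
print(item == InventoryItem("widget", 2.5, 4))   # generated __eq__ compares fields
```

The default_factory matters: each instance gets its own fresh list instead of sharing one.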

Click here to read more: https://www.marsdevs.com/blogs/a-technical-guide-to-python-data-classes


Text

Today I figured out how to do an n-long modular list. Next is figuring out the formula for damage calculation, and resisting the urge to refactor the 17 skills into a dataclass and a for loop.
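For the curious, here is what that refactor might look like. This is a hedged sketch that reads "modular list" as a list whose indices wrap around (index % n); the Skill fields, the ModularList class and the stand-in damage total are all hypothetical, since the post gives no details.

```python
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class Skill:
    # Hypothetical fields: the post does not say what a skill holds.
    name: str
    base_damage: int


class ModularList:
    """Fixed-length list whose indices wrap around modulo its length."""

    def __init__(self, items: List[Skill]) -> None:
        self._items = list(items)

    def __len__(self) -> int:
        return len(self._items)

    def __getitem__(self, index: int) -> Skill:
        # For positive n, Python's % always lands in 0..n-1,
        # so negative and oversized indices both wrap around.
        return self._items[index % len(self._items)]

    def __iter__(self) -> Iterator[Skill]:
        # Without this, iteration would fall back to __getitem__ and never stop,
        # because wrapped indices never raise IndexError.
        return iter(self._items)


# Seventeen skills built in one loop instead of seventeen hand-written blocks.
skills = ModularList([Skill(f"skill_{i}", 10 + i) for i in range(17)])

print(skills[17].name)  # skill_0
print(skills[-1].name)  # skill_16

# Placeholder aggregate; the real damage formula is still to be worked out.
total = sum(s.base_damage for s in skills)
print(total)
```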


Link

In this tutorial, we introduce the Gemini Agent Network Protocol, a powerful and flexible framework designed to enable intelligent collaboration among specialized AI agents. Leveraging Google's Gemini models, the protocol facilitates dynamic communication between agents, each with a distinct role: Analyzer, Researcher, Synthesizer, or Validator. Users will learn to set up and configure an asynchronous agent network, enabling automated task distribution, collaborative problem-solving, and enriched dialogue management. Ideal for scenarios such as in-depth research, complex data analysis, and information validation, this framework lets users harness collective AI intelligence efficiently.

```python
import asyncio
import json
import random
from dataclasses import dataclass, asdict
from typing import Dict, List, Optional, Any
from enum import Enum

import google.generativeai as genai
```

We use asyncio for concurrent execution, dataclasses for structured message management, and Google's Generative AI SDK (google.generativeai) to drive interactions among multiple AI agents. Together these support dynamic message handling and structured agent roles, which keeps collaborative AI tasks scalable and flexible.

```python
API_KEY = None

try:
    import google.colab  # only importable inside Colab
    IN_COLAB = True
except ImportError:
    IN_COLAB = False
```

We initialize API_KEY and detect whether the code is running in Colab. If the google.colab module imports successfully, IN_COLAB is set to True; otherwise it defaults to False, allowing the script to adjust its behavior accordingly.

```python
class AgentType(Enum):
    ANALYZER = "analyzer"
    RESEARCHER = "researcher"
    SYNTHESIZER = "synthesizer"
    VALIDATOR = "validator"


@dataclass
class Message:
    sender: str
    receiver: str
    content: str
    msg_type: str
    metadata: Optional[Dict] = None
```

We define the core structures for agent interaction.
The AgentType enum categorizes agents into four distinct roles, Analyzer, Researcher, Synthesizer, and Validator, each with a specific function in the collaborative network. The Message dataclass defines the format for inter-agent communication, encapsulating sender and receiver IDs, message content, type, and optional metadata.

```python
class GeminiAgent:
    def __init__(self, agent_id: str, agent_type: AgentType, network: 'AgentNetwork'):
        self.id = agent_id
        self.type = agent_type
        self.network = network
        self.model = genai.GenerativeModel('gemini-2.0-flash')
        self.inbox = asyncio.Queue()
        self.context_memory = []

        self.system_prompts = {
            AgentType.ANALYZER: "You are a data analyzer. Break down complex problems into components and identify key patterns.",
            AgentType.RESEARCHER: "You are a researcher. Gather information and provide detailed context on topics.",
            AgentType.SYNTHESIZER: "You are a synthesizer. Combine information from multiple sources into coherent insights.",
            AgentType.VALIDATOR: "You are a validator. Check accuracy and consistency of information and conclusions.",
        }

    async def process_message(self, message: Message):
        """Process incoming message and generate response"""
        if not API_KEY:
            return "❌ API key not configured. Please set API_KEY variable."

        prompt = f"""
        {self.system_prompts[self.type]}

        Context from previous interactions: {json.dumps(self.context_memory[-3:], indent=2)}

        Message from {message.sender}: {message.content}

        Provide a focused response (max 100 words) that adds value to the network discussion.
        """

        try:
            # generate_content blocks, so run it in a worker thread
            response = await asyncio.to_thread(self.model.generate_content, prompt)
            return response.text.strip()
        except Exception as e:
            return f"Error processing: {str(e)}"

    async def send_message(self, receiver_id: str, content: str, msg_type: str = "task"):
        """Send message to another agent"""
        message = Message(self.id, receiver_id, content, msg_type)
        await self.network.route_message(message)

    async def broadcast(self, content: str, exclude_self: bool = True):
        """Broadcast message to all agents in network"""
        for agent_id in self.network.agents:
            if exclude_self and agent_id == self.id:
                continue
            await self.send_message(agent_id, content, "broadcast")

    async def run(self):
        """Main agent loop"""
        while True:
            try:
                message = await asyncio.wait_for(self.inbox.get(), timeout=1.0)
                response = await self.process_message(message)

                self.context_memory.append({
                    "from": message.sender,
                    "content": message.content,
                    "my_response": response,
                })
                # keep only the 10 most recent exchanges
                if len(self.context_memory) > 10:
                    self.context_memory = self.context_memory[-10:]

                print(f"🤖 {self.id} ({self.type.value}): {response}")

                # with 30% probability, send a follow-up to a random peer
                if random.random() < 0.3:
                    other_agents = [aid for aid in self.network.agents.keys() if aid != self.id]
                    if other_agents:
                        target = random.choice(other_agents)
                        await self.send_message(target, f"Building on that: {response[:50]}...")

            except asyncio.TimeoutError:
                continue
            except Exception as e:
                print(f"❌ Error in {self.id}: {e}")
```

The GeminiAgent class defines the behavior and capabilities of each agent in the network. On initialization, it assigns a unique ID, a role type, and a reference to the agent network, and loads the Gemini 2.0 Flash model. It uses role-specific system prompts to generate responses to incoming messages, which are processed asynchronously through a queue. Each agent keeps a context memory of recent interactions and can respond directly, send targeted messages, or broadcast insights to others.
The run() method continuously processes messages, promotes collaboration by occasionally sending follow-ups to other agents, and handles messages in a non-blocking loop.

```python
class AgentNetwork:
    def __init__(self):
        self.agents: Dict[str, GeminiAgent] = {}
        self.message_log = []
        self.running = False

    def add_agent(self, agent_type: AgentType, agent_id: Optional[str] = None):
        """Add new agent to network"""
        if not agent_id:
            agent_id = f"{agent_type.value}_{len(self.agents) + 1}"

        agent = GeminiAgent(agent_id, agent_type, self)
        self.agents[agent_id] = agent
        print(f"✅ Added {agent_id} to network")
        return agent_id

    async def route_message(self, message: Message):
        """Route message to target agent"""
        self.message_log.append(asdict(message))

        if message.receiver in self.agents:
            await self.agents[message.receiver].inbox.put(message)
        else:
            print(f"⚠️ Agent {message.receiver} not found")

    async def initiate_task(self, task: str):
        """Start a collaborative task"""
        print(f"🚀 Starting task: {task}")

        analyzer_agents = [aid for aid, agent in self.agents.items()
                           if agent.type == AgentType.ANALYZER]

        if analyzer_agents:
            initial_message = Message("system", analyzer_agents[0], task, "task")
            await self.route_message(initial_message)

    async def run_network(self, duration: int = 30):
        """Run the agent network for specified duration"""
        self.running = True
        print(f"🌐 Starting agent network for {duration} seconds...")

        agent_tasks = [agent.run() for agent in self.agents.values()]

        try:
            # agent loops never return on their own; the timeout ends the session
            await asyncio.wait_for(asyncio.gather(*agent_tasks), timeout=duration)
        except asyncio.TimeoutError:
            print("⏰ Network session completed")
        finally:
            self.running = False
```

The AgentNetwork class manages coordination and communication between all agents in the system. It allows dynamic addition of agents with unique IDs and specified roles, maintains a log of all exchanged messages, and routes each message to the correct recipient.
The network can initiate a collaborative task by sending the opening message to an Analyzer agent, and it runs the full asynchronous event loop for a specified duration so that agents operate concurrently and interactively within a shared environment.

```python
async def demo_agent_network():
    """Demonstrate the Gemini Agent Network Protocol"""
    network = AgentNetwork()

    network.add_agent(AgentType.ANALYZER, "deep_analyzer")
    network.add_agent(AgentType.RESEARCHER, "info_gatherer")
    network.add_agent(AgentType.SYNTHESIZER, "insight_maker")
    network.add_agent(AgentType.VALIDATOR, "fact_checker")

    task = "Analyze the potential impact of quantum computing on cybersecurity"

    network_task = asyncio.create_task(network.run_network(20))
    await asyncio.sleep(1)
    await network.initiate_task(task)
    await network_task

    print(f"\n📊 Network completed with {len(network.message_log)} messages exchanged")
    agent_participation = {aid: sum(1 for msg in network.message_log if msg['sender'] == aid)
                           for aid in network.agents}
    print("Agent participation:", agent_participation)


def setup_api_key():
    """Interactive API key setup"""
    global API_KEY

    if IN_COLAB:
        from google.colab import userdata
        try:
            API_KEY = userdata.get('GEMINI_API_KEY')
            genai.configure(api_key=API_KEY)
            print("✅ API key loaded from Colab secrets")
            return True
        except Exception:
            print("💡 To use Colab secrets: Add 'GEMINI_API_KEY' in the secrets panel")

    print("🔑 Please enter your Gemini API key:")
    print("   Get it from: …")  # the URL was lost from the source text

    try:
        if IN_COLAB:
            API_KEY = input("Paste your API key here: ").strip()
        else:
            import getpass
            API_KEY = getpass.getpass("Paste your API key here: ").strip()

        if API_KEY and len(API_KEY) > 10:
            genai.configure(api_key=API_KEY)
            print("✅ API key configured successfully!")
            return True
        else:
            print("❌ Invalid API key")
            return False
    except KeyboardInterrupt:
        print("\n❌ Setup cancelled")
        return False
```

The demo_agent_network() function orchestrates the entire workflow: it initializes an agent network, adds four role-specific agents, launches a cybersecurity task, and runs the network asynchronously for a fixed duration while tracking message exchanges and agent participation. Meanwhile, setup_api_key() provides an interactive way to configure the Gemini API key securely, with separate logic for Colab and non-Colab environments, so that the agents can reach the Gemini model backend before the demo begins.

```python
if __name__ == "__main__":
    print("🧠 Gemini Agent Network Protocol")
    print("=" * 40)

    if not setup_api_key():
        print("❌ Cannot run without valid API key")
        raise SystemExit(1)

    print("\n🚀 Starting demo...")

    if IN_COLAB:
        # Colab already runs an event loop, so allow nested run_until_complete
        import nest_asyncio
        nest_asyncio.apply()
        loop = asyncio.get_event_loop()
        loop.run_until_complete(demo_agent_network())
    else:
        asyncio.run(demo_agent_network())
```

Finally, the code above serves as the entry point for running the Gemini Agent Network Protocol. It first prompts the user to set up the Gemini API key and exits if none is provided. Once the key is configured, the demo launches. In Google Colab, it applies nest_asyncio to work around Colab's already-running event loop; otherwise, it uses Python's native asyncio.run() to execute the asynchronous demo of agent collaboration.

In conclusion, by completing this tutorial, users gain practical knowledge of implementing an AI-powered collaborative network using Gemini agents. The hands-on experience shows how autonomous agents can break down complex problems, collaboratively generate insights, and check the accuracy of information through validation.

Asif Razzaq is the CEO of Marktechpost Media Inc.
As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.


Text

Project Title: Massive-Scale NLP Preprocessing Pipeline with Pandas, Hugging Face Transformers, and Regex for Document Classification.

✅ Code created for the advanced project:

```python
# Project Title: Massive-Scale NLP Preprocessing Pipeline with Pandas, Hugging Face Transformers, and Regex for Document Classification
# Project Ref: ai-ml-ds-vXn7kZrP2Bh
from typing import List, Tuple, Dict, Any
import pandas as pd
import numpy as np
import re
import os
import string
import torch
from dataclasses import dataclass
from transformers…
```
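The truncated snippet stops before the pipeline itself. Purely as an illustration, and not the project's code, here is a stdlib-only sketch of a regex cleaning step configured by a dataclass. CleaningConfig and clean_text are hypothetical names, and the pandas and Transformers stages are left out.

```python
import re
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class CleaningConfig:
    # Hypothetical switches for one preprocessing step.
    lowercase: bool = True
    strip_urls: bool = True
    strip_punctuation: bool = True


URL_RE = re.compile(r"https?://\S+")
PUNCT_RE = re.compile(f"[{re.escape(string.punctuation)}]")
SPACE_RE = re.compile(r"\s+")


def clean_text(text: str, cfg: CleaningConfig = CleaningConfig()) -> str:
    """Regex-based normalisation applied before tokenisation."""
    if cfg.strip_urls:
        text = URL_RE.sub(" ", text)
    if cfg.lowercase:
        text = text.lower()
    if cfg.strip_punctuation:
        text = PUNCT_RE.sub(" ", text)
    # Collapse runs of whitespace left behind by the substitutions.
    return SPACE_RE.sub(" ", text).strip()


print(clean_text("Read THIS: https://example.com/x?y=1 now!!"))
```

Making the config frozen lets one shared default instance be used safely as the default argument.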


Text

𝐓𝐨𝐩 5 𝐏𝐲𝐭𝐡𝐨𝐧 𝐒𝐤𝐢𝐥𝐥𝐬 𝐭𝐨 𝐌𝐚𝐬𝐭𝐞𝐫 𝐢𝐧 2025 | 𝐁𝐨𝐨𝐬𝐭 𝐘𝐨𝐮𝐫 𝐏𝐲𝐭𝐡𝐨𝐧 𝐏𝐫𝐨𝐠𝐫𝐚𝐦𝐦𝐢𝐧𝐠

Ready to take your Python skills to the next level in 2025? In this video, we break down the Top 5 Python Skills you need to master for better performance, scalability, and flexibility in your coding projects.

Top 5 Python Skills:

1. Object-Oriented Programming (OOP): Learn about classes, objects, inheritance, polymorphism, and encapsulation.
2. Python Memory Management & Performance Optimization: Master garbage collection and memory profiling, and optimize code performance with generators and multiprocessing.
3. Asynchronous Programming: Handle concurrent tasks efficiently using asyncio, threading, and multiprocessing.
4. Exception Handling & Debugging: Learn to write robust code with try-except blocks and debug using tools like pdb and PyCharm.
5. Advanced Python Typing & Decorators: Use type hints, dataclasses, and decorators to write cleaner and more maintainable code.
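Skill 5 combines three features that work well together. A small sketch, with a hypothetical timed decorator and Order dataclass that are not from the video:

```python
import functools
import time
from dataclasses import dataclass
from typing import Callable, List, TypeVar

T = TypeVar("T")


def timed(func: Callable[..., T]) -> Callable[..., T]:
    """Decorator that stores the duration of the most recent call."""
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs) -> T:
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            wrapper.last_duration = time.perf_counter() - start
    wrapper.last_duration = 0.0
    return wrapper


@dataclass
class Order:
    order_id: int
    amounts: List[float]  # type hints double as the dataclass field list


@timed
def order_total(order: Order) -> float:
    return sum(order.amounts)


print(order_total(Order(1, [10.0, 5.5])))
print(f"took {order_total.last_duration:.6f}s")
```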

By mastering these skills, you'll be well on your way to becoming a Python expert! Don’t forget to like, comment, and subscribe for more programming tips and tutorials. Watch complete video https://lnkd.in/gF6nwnKf

#SkillsOverDegrees#FutureOfWork#HiringTrendsIndia#SkillBasedHiring#HackathonHiring#PracticalSkillsMatter#DigitalTransformation#WorkforceInnovation#TechHiring#UpskillingIndia#NewAgeRecruitment#HandsOnExperience#TataCommunications#Zerodha#IBMIndia#SmallestAI#NSDCIndia#NoDegreeRequired#InclusiveHiring#WorkplaceRevolution


Text

Python: Dealing with unknown fields in dataclass

I have been using dataclasses in my Python data-retrieval scripts, but occasionally a field gets added to the API response, and I needed a way to keep the scripts running when that happens. Notifying the script user about any unknown fields helps too. The following code emits a warning message listing any fields that are not predefined in the dataclass. from dataclasses import…
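The post's own code is cut off, so the following is an independent sketch of one way to do this, not the author's implementation. It assumes a hypothetical User dataclass with a from_dict classmethod that keeps only declared fields (via dataclasses.fields) and reports the rest with warnings.warn.

```python
import warnings
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass
class User:
    id: int
    name: str

    @classmethod
    def from_dict(cls, data: Dict[str, Any]) -> "User":
        """Build an instance from an API payload, skipping unknown keys."""
        known = {f.name for f in fields(cls)}
        unknown = sorted(set(data) - known)
        if unknown:
            # Warn instead of crashing when the API adds new fields.
            warnings.warn(f"{cls.__name__}: ignoring unknown fields {unknown}")
        return cls(**{k: v for k, v in data.items() if k in known})


payload = {"id": 7, "name": "Ada", "email": "ada@example.com"}
user = User.from_dict(payload)  # emits a UserWarning mentioning 'email'
print(user)
```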


Text

What Are the Benefits of Python Dataclasses?

Introduction

If you have just started with Python, or already code in it and like object-oriented programming but aren't familiar with the dataclasses module, you've come to the right place! Data classes are used mainly to model data in Python. The @dataclass decorator is applied to regular Python classes and imposes no restrictions, so a data class can behave like any typical class while its special methods are implemented for you. In Python programming, data manipulation and management play a crucial role in many applications. Whether you're working with API responses, modeling entities, or simply organizing your data, having a clean and efficient way to handle data is essential. This is where Python data classes come into the picture.

What Are Python Dataclasses?

The dataclasses module in the standard library provides the @dataclass decorator, which adds functionality to user-defined classes. With it, we don't have to implement special methods ourselves, which helps us avoid boilerplate code such as the initializer (__init__), the string representation method (__repr__), and the equality method (__eq__). With order=True, it also generates the ordering methods (__lt__, __le__, __gt__, and __ge__), which compare instances as if they were tuples of their fields, in order. The advantage of using Python dataclasses is that these special methods are added automatically, leaving more time to focus on what the class does instead of its plumbing. In Python, a data class is a class that is primarily used to store data, and it is designed to be simple and straightforward. Data classes were introduced in Python 3.7 through the @dataclass decorator in the dataclasses module. The purpose of a Python data class is to reduce the boilerplate code typically associated with defining classes whose main purpose is to store data attributes. With data classes, you can define the class and its attributes in a more concise and readable manner.
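To make the ordering point concrete, here is a minimal sketch; Version and PlainVersion are hypothetical classes. Comparison methods appear only with order=True, and they compare fields in declaration order like a tuple.

```python
from dataclasses import dataclass


@dataclass(order=True)
class Version:
    # Compared field by field, like the tuple (major, minor, patch).
    major: int
    minor: int
    patch: int = 0


@dataclass
class PlainVersion:
    # Default settings: __eq__ is generated, ordering methods are not.
    major: int
    minor: int


releases = [Version(1, 10), Version(1, 2, 5), Version(0, 9, 9)]
print(sorted(releases))
print(Version(1, 2) < Version(1, 10))  # 2 < 10 compared as ints

try:
    PlainVersion(1, 2) < PlainVersion(1, 10)
except TypeError:
    print("PlainVersion has no ordering without order=True")
```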

Python's datetime module provides classes for working with dates and times. The main classes include:

date: Represents a date (year, month, day) without time information.

time: Represents a time of day (hour, minute, second, microsecond) without date information.

datetime: Represents a specific point in time, combining date and time information.

timedelta: Represents a duration, such as the difference between two date or datetime objects.

tzinfo: Abstract base class for time zone information objects.
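These classes fit naturally as dataclass field types. A short sketch with a hypothetical Meeting class; timezone.utc, used here, is the standard library's concrete tzinfo implementation for UTC.

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta, timezone


@dataclass
class Meeting:
    day: date          # calendar date only
    start: time        # time of day only
    length: timedelta  # a duration

    def starts_at(self) -> datetime:
        # datetime.combine joins a date and a time into one datetime
        return datetime.combine(self.day, self.start, tzinfo=timezone.utc)

    def ends_at(self) -> datetime:
        # datetime + timedelta yields a new datetime
        return self.starts_at() + self.length


m = Meeting(date(2024, 3, 1), time(9, 30), timedelta(minutes=45))
print(m.starts_at().isoformat())
print(m.ends_at().isoformat())
```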

How Are Python Dataclasses Effective?

Python dataclasses provide a convenient way to create classes that primarily store data. Now that you know the basic concept of the dataclass decorator, we'll explore in more detail why you should consider using it in your code. First, dataclasses reduce the number of special methods you have to write, which saves time and boosts productivity. They are effective for several reasons:

Reduced Boilerplate Code

Easy Declaration

Optional Immutability (frozen=True)

Integration with Typing

Customization

Interoperability

Use Less Code to Define Class

Easy Conversion to a Tuple

Eliminates the Need to Write Comparison Methods
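Several items on this list can be seen in a few lines. A minimal sketch with hypothetical Point classes: instances are mutable by default, frozen=True opts into immutability (and, combined with the default eq=True, hashability), astuple converts to a tuple, and __eq__ comes for free.

```python
from dataclasses import FrozenInstanceError, astuple, dataclass


@dataclass
class MutablePoint:
    x: int
    y: int


@dataclass(frozen=True)
class Point:
    x: int
    y: int


mp = MutablePoint(1, 2)
mp.x = 5                     # allowed: dataclasses are mutable by default

p = Point(3, 4)
print(astuple(p))            # (3, 4)
print(p == Point(3, 4))      # True, via the generated __eq__
try:
    p.x = 10                 # frozen=True blocks assignment
except FrozenInstanceError:
    print("Point is immutable")
print({p, Point(3, 4)})      # frozen + eq makes instances hashable, so duplicates collapse
```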

Overall, Python dataclasses offer a convenient and effective way to define simple, data-centric classes with minimal effort, making them a valuable tool for many Python developers.


Text

Python DataClasses

Howdy Gang!!

Have you ever wanted to create a class in Python that has no methods associated with it? At first it might puzzle you why anyone would want that, and I had to think about it for a while myself before I came up with some useful cases. For example:

You have a list of values and also need to store some metadata about that list, for example, a list whose values must stay between a minimum and a maximum.

You have a CSV file to work with, and you want an object where each field represents a column of the CSV.

There are tons of other applications of this object type, but these are the ones I could think of off the top of my head.
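Both use cases can be sketched in a few lines. BoundedList, Row, the column names and the inline CSV text below are invented for illustration; the optional __post_init__ hook is the standard way to validate data right after the generated __init__ runs.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import List


@dataclass
class BoundedList:
    """A list plus metadata: every value should lie in [minimum, maximum]."""
    minimum: float
    maximum: float
    values: List[float] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Optional: validate once, right after the generated __init__.
        bad = [v for v in self.values if not self.minimum <= v <= self.maximum]
        if bad:
            raise ValueError(f"values out of range: {bad}")


@dataclass
class Row:
    # One field per CSV column; DictReader yields strings, so convert types.
    name: str
    score: int


CSV_TEXT = "name,score\nada,90\nlinus,75\n"
rows = [Row(r["name"], int(r["score"])) for r in csv.DictReader(io.StringIO(CSV_TEXT))]
print(rows)

scores = BoundedList(0, 100, [row.score for row in rows])
print(scores)
```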

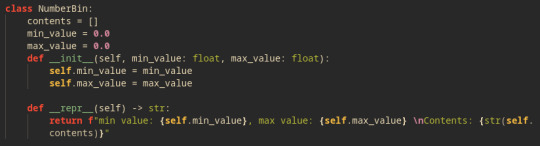

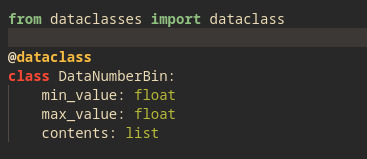

Using a traditional Python class, you might write a data class something like this:

We had to specify an initializer and also a __repr__ method so when we print the object to the terminal it does not just give back a memory address. With a dataclass, we could shorten this class declaration to only a few lines of code:

As you can see, this is syntactically much simpler, and it has all the same functionality plus some extra features! For example, __repr__ is generated automatically, so printing an instance shows its data members in an easy-to-read form, and methods like __eq__ are also generated, letting you compare dataclass instances of the same type with no additional code.
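The screenshots are not reproduced in this text, so here is a stand-in comparison rather than the post's original code; the Book classes are hypothetical. The hand-written version spells out what @dataclass generates.

```python
from dataclasses import dataclass


class BookManual:
    """Traditional class: __init__, __repr__ and __eq__ written by hand."""

    def __init__(self, title: str, author: str, pages: int):
        self.title = title
        self.author = author
        self.pages = pages

    def __repr__(self) -> str:
        return (f"BookManual(title={self.title!r}, "
                f"author={self.author!r}, pages={self.pages!r})")

    def __eq__(self, other: object) -> bool:
        if other.__class__ is not self.__class__:
            return NotImplemented
        return (self.title, self.author, self.pages) == (other.title, other.author, other.pages)


@dataclass
class Book:
    """Same behaviour; @dataclass generates the three methods above."""
    title: str
    author: str
    pages: int


print(BookManual("Dune", "Frank Herbert", 412))
print(Book("Dune", "Frank Herbert", 412))
print(Book("Dune", "Frank Herbert", 412) == Book("Dune", "Frank Herbert", 412))
```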

I really like structs from C/C++ and data classes from Java, so I am happy to see that Python has its own dataclass paradigm. Another advantage is that a developer familiar with dataclasses will immediately know what your class can do; there is no need to wonder whether the equality operator will work, because dataclasses implement it by design.


Text

Short Guide to Python Dataclasses- 2

What are dataclasses? For that, you can step back and read our first guide. But what next? Why should you use Python dataclasses? Simply put, they make your code more concise by cutting down on boilerplate. In this blog, MarsDevs explains why you should use Python Dataclasses.

Click here to know more: https://www.marsdevs.com/blogs/short-guide-to-python-dataclasses-2


Text

yeah fp is the way to go here. let's make it a little more rigorous (sticking with Python for the fun of it):

```python
from dataclasses import dataclass
from functools import reduce
from typing import Callable, TypeVar

# type variable support for Python <3.12 (match statements need 3.10+)
B = TypeVar("B")

@dataclass
class Nat:
    """Inductive type for natural numbers."""
    pass

@dataclass
class Zero(Nat):
    """Zero constructor for Nat."""
    pass

@dataclass
class Succ(Nat):
    """Successor constructor for Nat."""
    pred: Nat

def to_nat(i: int) -> Nat:
    """Surjection into Nat. Non-natural numbers saturate at Zero."""
    return reduce(lambda n, _: Succ(n), range(i), Zero())

def fold_nat(z: B, s: Callable[[B], B], n: Nat) -> B:
    """Fold for Nat."""
    match n:
        case Zero():
            return z
        case Succ(p):
            return s(fold_nat(z, s, p))

def from_nat(n: Nat) -> int:
    """Injection into int."""
    return fold_nat(0, lambda i: i + 1, n)

def add_nat(a: Nat, b: Nat) -> Nat:
    """Addition on Nat, defined via fold."""
    return fold_nat(a, Succ, b)

@dataclass
class Int:
    """Integers defined as pairs of Nats with an equivalence relation.
    Int(a, b) ~ Int(c, d) if and only if a + d == b + c."""
    pos: Nat
    neg: Nat

def to_int(i: int) -> Int:
    """Bijection into Int."""
    return Int(to_nat(i), to_nat(-i))

def from_int(i: Int) -> int:
    """Bijection into int."""
    return from_nat(i.pos) - from_nat(i.neg)

def equivalence(i: Int) -> Int:
    """Constructs the canonical representation for the equivalence class of an Int,
    of either form Int(Zero(), ...) or Int(..., Zero())."""
    match i:
        case Int(Succ(p1), Succ(p2)):
            return equivalence(Int(p1, p2))
        case _:
            return i

def add_int(a: Int, b: Int) -> Int:
    """Addition on Int, defined via Nat addition."""
    return equivalence(Int(add_nat(a.pos, b.pos), add_nat(a.neg, b.neg)))

def addition(a: int, b: int) -> int:
    """Addition on the builtin int performed on the structural Int type."""
    return from_int(add_int(to_int(a), to_int(b)))
```

if you want non-integer support, it's trivially easy to extend this framework to include a Ratio type (exact rationals rather than floating point) that is a pair of Ints with a non-zero denominator and an equivalence relation 'Ratio(a, b) ~ Ratio(c, d)' iff 'a*d == b*c'
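A minimal sketch of that extension, using Python's built-in int for numerator and denominator instead of the structural Int above to keep it short; Ratio and add_ratio are hypothetical names. The custom __eq__ implements the equivalence relation by cross-multiplication.

```python
from dataclasses import dataclass


@dataclass(eq=False)  # we supply our own equivalence-based __eq__
class Ratio:
    """Ratio(a, b) stands for a/b; Ratio(a, b) ~ Ratio(c, d) iff a*d == b*c."""
    num: int
    den: int

    def __post_init__(self) -> None:
        if self.den == 0:
            raise ZeroDivisionError("denominator must be non-zero")

    def __eq__(self, other: object) -> bool:
        if not isinstance(other, Ratio):
            return NotImplemented
        # Cross-multiplication implements the equivalence relation.
        return self.num * other.den == self.den * other.num

    __hash__ = None  # equal ratios can have different fields, so no naive hash


def add_ratio(a: Ratio, b: Ratio) -> Ratio:
    # a/b + c/d = (a*d + c*b) / (b*d)
    return Ratio(a.num * b.den + b.num * a.den, a.den * b.den)


half = Ratio(1, 2)
print(half == Ratio(2, 4))
print(add_ratio(half, Ratio(1, 3)) == Ratio(5, 6))
```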

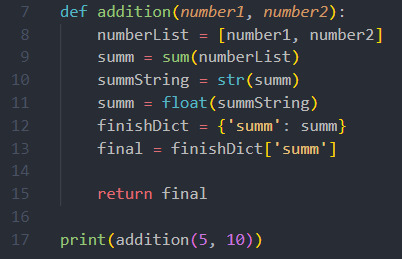

Just did a very easy normal Addition Function hah (⌐■_■)

Any improvement ideas, please? ;)


Text

Class inheritance in Python 3.7 dataclasses

The way dataclasses combines attributes prevents you from being able to use attributes with defaults in a base class and then use attributes without a default (positional attributes) in a subclass.

That's because the attributes are combined starting from the bottom of the MRO, building up an ordered list of attributes in first-seen order; overrides keep their original position. So Parent starts out with [name, age, ugly], where ugly has a default, and then Child adds [school] to the end of that list (ugly is already in it). You end up with [name, age, ugly, school], and because school doesn't have a default, the generated __init__ would have an invalid argument list, so defining the class raises a TypeError.
