#AJAX and XML

Explore tagged Tumblr posts

Text

Why is Childe's name Ajax (Asynchronous JavaScript and XML) 😭?

#genshin impact fatui#genshin impact#capitano#childe tartagalia#childe#childe tartaglia ajax#zhongli

8 notes


Text

Btw if you're looking for a cool sounding computer-themed name for your character you can call them Ajax. It's an acronym that stands for Asynchronous JavaScript and XML (Extensible Markup Language) and in extremely simple terms is a method for getting/handling incoming data in a web application. I just think it would be really neat as a character name

#yes programmers put acronyms inside of acronyms..... yes i hate it as much as you do.... no i cant do anything about it#they also seem to really like using the letter X as shown by XML and XSS (cross site scripting) and probably some others im forgetting#though with the ungodly amount of acronyms being thrown around in this profession i really cant blame them for wanting something unique#rambling#anyway sometimes programmers have really cool acronyms and sometimes they have CRUD. cant win em all

6 notes


Text

How To Kahoot Bot Spammer Unblocked | WUSCHOOL

If you are looking for a website with all the information you need to unblock Kahoot bot spammers, WUSCHOOL is one of the best options for you. We cover many tech-related topics, including HTML, CSS, JavaScript, Bootstrap, PHP, Python, AngularJS, JSON, SQL, React.js, Sass, Node.js, jQuery, XQuery, AJAX, XML, Raspberry Pi, C++, and more, and we aim to provide complete information. Our goal is to solve web-related issues worldwide.

#kahoot bots unblocked#kahoot bot spammer unblocked#benefits of playing video games#timestamp on text messages#how to remove timestamps from text messages android mobile#how do i check my bvn number

2 notes


Note

heyooo is it possible to get mituna themed names thank you

2ure, here'2 2ome name 2ugge2tiion2 for you.

(sure, here's some name suggestions for you.)

Xanthe, meaning golden or yellow.

Elio, meaning the sun.

Zesiro, meaning first born of twins.

Abaddon, meaning doom or destruction.

Ajax, from asynchronous javascript and XML.

Corbin, meaning crow, raven, a popular gemini name.

hope 2ome of the2e 2uiit you. ii 2tuck wiith generally ma2culiine name2 ju2t iin ca2e.

(hope some of these suit you. i stuck with generally masculine names just in case.)

2 notes


Text

Exploring HTMX: Revolutionizing Interactive Web Development

In the fast-paced realm of web development, new technologies are constantly emerging to enhance user experiences and streamline coding processes. HTMX is one such innovation that has gained significant attention for its ability to transform the way we build interactive web applications.

What is HTMX?

HTMX, standing for HyperText Markup eXtension, is a cutting-edge library that empowers developers to create dynamic web pages with minimal effort. It achieves this by combining the principles of AJAX (Asynchronous JavaScript and XML) and HTML, allowing developers to update parts of a webpage in real time without the need for complex JavaScript code. HTMX Official Site: https://htmx.org/

The Power of HTMX

Seamless User Experience

HTMX facilitates a seamless and fluid user experience by enabling developers to update specific portions of a webpage without triggering a full page reload. This translates to faster load times and reduced server load, enhancing overall performance.

Simplified Development Workflow

Gone are the days of writing extensive JavaScript code to achieve interactive features. With HTMX, developers can leverage their existing HTML skills to add dynamic behavior to their web applications. This not only streamlines the development process but also makes the codebase more maintainable and easier to understand.

Accessibility Compliance

In today's digital landscape, accessibility is paramount. HTMX shines in this aspect by promoting accessibility best practices. Since HTMX relies on standard HTML elements, it naturally aligns with accessibility guidelines, helping web applications built with HTMX remain usable by individuals with disabilities.

SEO-Friendly

One of the concerns with traditional single-page applications is their impact on SEO. HTMX addresses this by rendering content on the server side while still providing a dynamic and interactive frontend. This means search engines can easily crawl and index the content, contributing to better SEO performance.

How HTMX Works

HTMX operates by adding special attributes to HTML elements, known as HX attributes. These attributes define the behavior that should occur when a certain event takes place. For example, the hx-get attribute triggers a GET request to fetch new content from the server, the hx-target attribute names the element to update, and the hx-swap attribute controls how the retrieved content is swapped into that element. This declarative approach eliminates the need for intricate JavaScript code and promotes a more intuitive development experience.

Getting Started with HTMX

- Installation: To begin, include the HTMX library in your project by referencing the HTMX CDN or installing it through a package manager.

- Adding HX Attributes: Identify the elements you want to make dynamic and add the appropriate HX attributes. For instance, you can use hx-get to fetch data from the server when a button is clicked.

- Defining Server-Side Logic: HTMX requires server-side endpoints to handle requests and return updated content. Set up these endpoints using your preferred server-side technology.

- Enhancing Interactions: Leverage various HX attributes like hx-swap or hx-trigger to define how different parts of your page interact with the server.

Using HTMX to enhance the interactivity of your web application involves a few simple steps:

- Include HTMX Library: Begin by including the HTMX library in your project. You can do this by adding the HTMX CDN link to the <head> section of your HTML file or by installing HTMX using a package manager like npm or yarn.

- Add HTMX Attributes: HTMX works by adding special attributes to your HTML elements. These attributes instruct HTMX on how to handle interactions. Some common HTMX attributes include:

  - hx-get: Triggers a GET request to fetch content from the server.

  - hx-post: Triggers a POST request to send data to the server.

  - hx-swap: Controls how the fetched content is swapped into the target (for example, innerHTML or outerHTML).

  - hx-target: Specifies the element to update with the fetched content.

  - hx-trigger: Defines the event that triggers the HTMX action (e.g., "click").

  For example, a "Fetch Data" button that carries an hx-get attribute fetches content from the server when it is clicked.

- Create Server-Side Endpoints: HTMX requires server-side endpoints to handle requests and provide data. Set up these endpoints using your preferred server-side technology (e.g., Node.js, Python, PHP). These endpoints should return the desired content, typically an HTML fragment, in response to HTMX requests.

- Use Data Attributes: HTMX attributes can also be written with a data- prefix (for example, data-hx-get). To pass additional information to the server when an action is triggered, use attributes such as hx-vals or hx-include.

- Leverage HTMX Features: HTMX offers additional features like animations, history management, and more. You can explore the documentation to discover how to implement these features according to your needs.

- Test and Iterate: As with any development process, it's essential to test your HTMX-enhanced interactions thoroughly. Make sure the interactions work as expected and provide a seamless user experience.

Remember that HTMX aims to simplify web development by allowing you to achieve dynamic behaviors with minimal JavaScript code. It's important to familiarize yourself with the HTMX documentation to explore its full potential and capabilities. For more detailed examples and guidance, you can refer to the official HTMX documentation at https://htmx.org/docs/.
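The steps above can be sketched end to end with nothing but the Python standard library. This is a minimal illustration, not the official HTMX setup: the `/fragment` path, the `result` element id, the `render_fragment` and `serve` helpers, and the `HTMX_SCRIPT_URL` placeholder (replace it with the script URL from the HTMX documentation) are all assumptions made for the example.

```python
from html import escape
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical page: the button asks the server for /fragment and HTMX
# swaps the response into #result. HTMX_SCRIPT_URL is a placeholder.
PAGE = """<!doctype html>
<html>
  <head>
    <script src="HTMX_SCRIPT_URL"></script>
  </head>
  <body>
    <button hx-get="/fragment" hx-target="#result" hx-swap="innerHTML">Fetch Data</button>
    <div id="result"></div>
  </body>
</html>"""


def render_fragment(items):
    """Return an HTML fragment (not a full page) for HTMX to swap in."""
    rows = "".join(f"<li>{escape(item)}</li>" for item in items)
    return f"<ul>{rows}</ul>"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/":
            body = PAGE
        elif self.path == "/fragment":
            body = render_fragment(["first", "second"])
        else:
            self.send_error(404)
            return
        data = body.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8000):
    """Start the demo server; call this manually to try the page."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Calling `serve()` and opening http://127.0.0.1:8000/ should show the button; the point of the sketch is that the endpoint returns a fragment of HTML rather than JSON, so no client-side rendering code is needed.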

Conclusion

In a digital landscape where speed, accessibility, and user experience are paramount, HTMX emerges as a game-changer. By combining the best of AJAX and HTML, HTMX empowers developers to create highly interactive and responsive web applications without the complexity of traditional JavaScript frameworks. As you embark on your journey with HTMX, you'll find that your development process becomes smoother, your codebase more efficient, and your user experiences more delightful than ever before. Read the full article

2 notes


Text

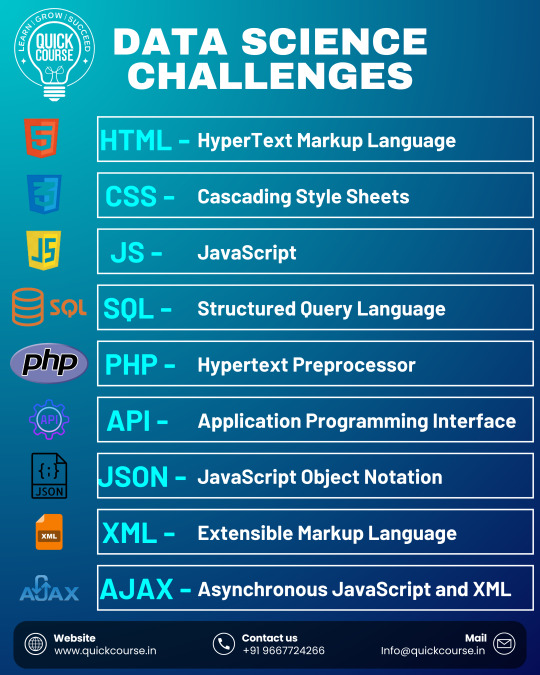

Master the Foundations of Data Science! At Quick Course, we believe a strong foundation in key technologies is the first step toward becoming a successful Data Scientist. 🚀

💻 Here are the essential tools you’ll need to understand and conquer:

🔹 HTML – Structure your web content

🔹 CSS – Style and design like a pro

🔹 JavaScript – Add interactivity

🔹 SQL – Manage and query databases

🔹 PHP – Build server-side logic

🔹 API – Connect and communicate between systems

🔹 JSON & XML – Structure and transport data

🔹 AJAX – Enable seamless user experiences

🎯 Learn | Grow | Succeed with Quick Course — your go-to platform for mastering tech skills that matter.

📞 Contact: +91 9667724266 🌐 Website: www.quickcourse.in 📧 Email: [email protected]

0 notes

Text

Performance testing is essential for evaluating the response time, scalability, reliability, speed, and resource utilization of applications and web services under their expected workloads. The software market currently offers a variety of performance testing tools. Nevertheless, when we talk about performance testing tools, Apache JMeter and Micro Focus LoadRunner (formerly HP LoadRunner) are the two names that routinely come to mind. Both tools work well for detecting bugs and finding the limits of software applications by increasing their load. In this article we would also like to tell you about our in-house tool, Boomq.io. A smart way to find out which tool suits your business needs is to compare the main features of JMeter, LoadRunner, and Boomq, so this article discusses the main differences among the three.

JMeter Features in Performance and Load Testing

Apache JMeter has the following key features.

- GUI Design and Interface

- Result Analysis and Caching

- Highly Extensible Core

- 100% pure Java

- Pluggable Samplers

- Multithreading Framework

- Data Analysis and Visualization

- Dynamic Input

- Compatible with HTTP, HTTPS, SOAP / REST, FTP, databases through JDBC, LDAP, message-oriented middleware (MOM), POP3, IMAP, and SMTP

- Scriptable Samplers (JSR223-compatible languages, BSF-compatible languages, and BeanShell)
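To make the idea behind a multithreaded load test concrete, here is a minimal sketch of what such a tool does at its core: run a target from several threads at once and collect latencies. It is not how JMeter is implemented; `run_load`, `timed_call`, and `fake_request` are hypothetical names, and `fake_request` stands in for a real HTTP call.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Callable, List


def timed_call(target: Callable[[], None]) -> float:
    """Run the target once and return how long it took, in seconds."""
    start = time.perf_counter()
    target()
    return time.perf_counter() - start


def run_load(target: Callable[[], None], users: int, requests_per_user: int) -> List[float]:
    """Simulate `users` concurrent users, each issuing several requests."""
    def one_user(_user_index: int) -> List[float]:
        return [timed_call(target) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(one_user, range(users)))
    # Flatten the per-user lists into one list of latencies.
    return [latency for user in per_user for latency in user]


def fake_request() -> None:
    """Stand-in for a real request; sleeps to imitate server latency."""
    time.sleep(0.01)


latencies = run_load(fake_request, users=5, requests_per_user=4)
print(f"{len(latencies)} requests, mean {mean(latencies):.3f}s, max {max(latencies):.3f}s")
```

Raising `users` while watching the mean and maximum latency is the basic experiment that dedicated tools automate, with reporting and protocol support added on top.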

Pros and Cons of the JMeter Application

JMeter is a robust performance testing tool with several excellent features. However, the application has its pros as well as its cons.

JMeter Advantages

Here are a few key advantages that stand out the most.

- Available free of charge

- Data extraction in popular response formats, including JSON, XML, HTML, and others

Although Apache JMeter has several advantages, it also has some shortcomings, which are listed below:

- Doesn't support JavaScript, and so, by extension, doesn't support AJAX requests

- Memory consumption can be high when the application is used in GUI mode

- Beyond a certain limit, high memory consumption causes errors for a large number of users

- It can be hard to test complex applications that use JavaScript or dynamic content, such as CSRF tokens

- Less capable than paid performance testing tools such as LoadRunner

What is LoadRunner?

HP LoadRunner (now Micro Focus LoadRunner) is a highly sophisticated software performance testing tool that detects and prevents performance issues in web applications. It focuses on detecting bottlenecks before the application enters the implementation or deployment phase. Similarly, the tool is extremely useful for detecting performance gaps before a new system is implemented or upgraded. However, LoadRunner isn't limited to testing web applications or services. The application is also optimized for testing ERP software and legacy system applications, as well as Web 2.0 technologies. LoadRunner gives software testers full visibility into their system's end-to-end performance. As a result, these users are able to examine each component individually before it goes live.

At the same time, LoadRunner offers its users highly advanced capabilities for forecasting the cost of scaling up application capacity. By precisely forecasting costs related to both software and hardware, it becomes easier to improve the capacity and scalability of your application. LoadRunner isn't open source; it is proprietary software that was long owned by the tech giant Hewlett Packard (now by Micro Focus). Therefore, the code of the application isn't available to users. However, because the application already offers many advanced, high-level testing features, it isn't necessary to customize existing features.

LoadRunner Features in Performance and Load Testing

#software testing training#unicodetechnologies#automation testing training in ahmedabad#manual testing

0 notes

Text

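What Are the Methods for Optimizing a Spider Pool? TG@yuantou2048

A spider pool is an SEO technique used to improve a website's indexing and ranking: it simulates the behavior of search engine crawlers to increase how often the site is crawled. Here are several commonly used spider pool optimization methods:

1. High-quality content: Make sure your website's content is high-quality, original, and valuable to users. Search engines prefer to crawl and index sites that offer valuable information.

2. A sensible internal link structure: A good internal link structure helps search engines understand the site's structure and content. Well-chosen anchor text links guide crawlers to fetch the site's content more effectively.

3. Regular content updates: Updating content regularly attracts crawlers to visit your site more often. Fresh content draws more crawlers, which raises the site's indexing rate.

4. Faster page loads: Slow pages hurt both the user experience and crawl efficiency. Optimizing images, compressing files, and using a CDN can noticeably speed up page loading.

5. Use a robots.txt file: Configure robots.txt correctly to tell search engines which pages should and should not be crawled. This helps crawlers fetch important pages more efficiently.

6. Use a sitemap: Submit a sitemap to search engines to help crawlers discover new or updated content faster.

7. Backlink building: Quality external links raise the site's authority and also lead crawlers to visit your site more often.

8. Meta tag optimization: Optimize the title tag, the meta description, and keyword placement so that search engines can understand and crawl your pages more easily.

9. Mobile-first design: With mobile devices now in widespread use, make sure your site also loads quickly on mobile, improving both the user experience and search engine friendliness.

10. Regularly check for and fix dead links: Dead links harm the user experience and reduce crawler efficiency. Check for and fix them regularly so that all links work.

11. Use friendly URL structures: Short, clear URLs help crawlers fetch and index your pages more efficiently.

12. Keep robots.txt up to date: Make sure the rules in robots.txt are current, avoiding unnecessary crawl requests while letting important pages be crawled first.

13. Use canonical tags: Avoid duplicate-content problems by using a canonical tag to tell search engines which version is the primary one.

14. Keep dynamic pages healthy: When updating content, especially on dynamically generated pages, make sure there are no broken links, which improves crawl efficiency.

15. Use redirects: Set up 301 redirects correctly to avoid duplicate content and reduce the time crawlers waste on invalid pages.

16. Check robots.txt for blocked pages: Make sure robots.txt does not block crawlers from key pages.

17. Handle JavaScript and AJAX with care: Search engine crawlers may not parse content generated by JavaScript and AJAX well, so make sure that such content can still be indexed correctly.

18. Use an XML sitemap: Create and submit an XML sitemap to help crawlers fetch and index your pages more efficiently.

19. Analyze log files regularly: Analyze server logs to understand crawler behavior and to identify and resolve potential problems.

20. Verify robots.txt directives: Make sure the directives in robots.txt are accurate so that crawlers do not waste resources on unimportant pages.

21. Use structured data: Structured data markup, such as Schema.org, helps search engines better understand your site's structure.

22. Monitor crawl frequency: Use tools such as Google Search Console to monitor crawl frequency and confirm that crawlers can reach your latest content.

23. Guide crawlers with robots.txt: Set up robots.txt sensibly to direct how crawlers fetch your site and keep them away from low-quality or duplicate content.

24. Review and optimize regularly: Review and tune robots.txt periodically so that crawlers do not spend time on non-essential pages.

25. Use a caching strategy: A sensible caching strategy reduces crawling of low-quality or duplicate content and improves crawl efficiency.

26. Use internal links: Well-placed internal links help crawlers fetch and index your site more efficiently.

27. Keep robots.txt rules correct: Make sure the rules in robots.txt are correct so that crawlers skip useless pages, improving crawl efficiency.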

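Since so many of the points above come back to robots.txt, here is a small sketch of how its rules can be checked before deployment, using Python's standard `urllib.robotparser`. The rules, the example.com URLs, and the `build_parser` helper are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block the admin area, allow everything else,
# and advertise the XML sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
print(parser.can_fetch("*", "https://example.com/blog/post"))    # crawlable page
print(parser.can_fetch("*", "https://example.com/admin/login"))  # blocked page
print(parser.site_maps())                                        # declared sitemaps
```

Running a check like this against a list of key URLs is one way to confirm that an edit to robots.txt has not accidentally blocked important pages.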

Telegram: @yuantou2048

Negative content removal

Crypto promotion

0 notes

Text

Website Scraping Tools TG@yuantou2048

Website scraping tools are essential for extracting data from websites. These tools can help automate the process of gathering information, making it easier and faster to collect large amounts of data. Here are some popular website scraping tools that you might find useful:

1. Beautiful Soup: This is a Python library that makes it easy to scrape information from web pages. It provides Pythonic idioms for iterating, searching, and modifying parse trees built with tools like HTML or XML parsers.

2. Scrapy: Scrapy is an open-source and collaborative framework for extracting the data you need from websites. It’s fast and can handle large-scale web scraping projects.

3. Octoparse: Octoparse is a powerful web scraping tool that allows users to extract data from websites without writing any code. It supports both visual and code-based scraping.

4. ParseHub: ParseHub is a cloud-based web scraping tool that allows users to extract data from websites. It is particularly useful for handling dynamic websites and has a user-friendly interface.

5. Scrapy: Scrapy is a Python-based web crawling and web scraping framework. It is highly extensible and can be used for a wide range of data extraction needs.

6. SuperScraper: SuperScraper is a no-code web scraping tool that enables users to scrape data from websites by simply pointing and clicking on the elements they want to scrape. It's great for those who may not have extensive programming knowledge.

7. ParseHub: ParseHub is a cloud-based web scraping tool that offers a simple yet powerful way to scrape data from websites. It is ideal for large-scale scraping projects and can handle JavaScript-rendered content.

8. Apify: Apify is a platform that simplifies the process of scraping data from websites. It supports automatic data extraction and can handle complex websites with JavaScript rendering.

9. Diffbot: Diffbot is a web scraping API that automatically extracts structured data from websites. It is particularly good at handling dynamic websites and can handle most websites out-of-the-box.

10. Data Miner: Data Miner is a web scraping tool that allows users to scrape data from websites and APIs. It supports headless browsers and can handle dynamic websites.

11. Import.io: Import.io is a web scraping tool that turns any website into a custom API. It is particularly useful for extracting data from sites that require login credentials or have complex structures.

12. ParseHub: ParseHub is another cloud-based tool that can handle JavaScript-heavy sites and offers a variety of features including form filling, CAPTCHA solving, and more.

13. Bright Data (formerly Luminati): Bright Data provides a proxy network that helps in bypassing IP blocks and CAPTCHAs.

14. ParseHub: ParseHub is a cloud-based web scraping tool that can handle JavaScript-heavy sites and offers a range of features such as form filling, AJAX-driven content, and deep web scraping.

16. ScrapeStorm: ScrapeStorm is a cloud-based web scraping tool that offers automatic data extraction and can handle JavaScript-heavy sites.

17. Scrapinghub: Scrapinghub (now Zyte) is a cloud-based web scraping platform that offers automatic data extraction and can handle JavaScript-heavy sites.

Each of these tools has its own strengths and weaknesses, so it's important to choose the one that best fits your specific requirements.

Telegram: @yuantou2048

Wang Teng SEO

Spider pool rental

0 notes

Text

The Rise of Automation Applications: Revolutionizing Workflows Through Web Development

In today’s fast-paced digital landscape, automation has become a cornerstone of efficiency, enabling businesses to streamline operations, reduce costs, and enhance productivity. Automation applications, powered by advanced web development technologies, are transforming industries by handling repetitive tasks, optimizing workflows, and enabling data-driven decision-making. From backend scripting to frontend interactivity, the integration of automation into web development is reshaping how applications are built, deployed, and maintained. This article delves into the key aspects of developing automation applications, highlighting the technologies, trends, and challenges that define this rapidly evolving field.

At the core of automation applications lies the ability to eliminate manual effort and minimize human error. Server-side languages and platforms such as Python, Node.js, and Ruby on Rails are commonly used to automate tasks such as data processing, report generation, and system monitoring. For instance, an e-commerce platform can use cron jobs to automatically update inventory levels, generate sales reports, and send email notifications to customers. Similarly, webhooks enable real-time automation by triggering actions in response to specific events, such as processing a payment or updating a database. These automated workflows not only save time but also improve accuracy, helping systems run smoothly and efficiently.
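As a rough illustration of the webhook idea, the sketch below maps incoming event types to handler functions. The event name `payment.succeeded`, the payload shape, and the helpers `on`, `mark_order_paid`, and `handle_webhook` are all hypothetical; a real provider defines its own event format and should also have its requests authenticated.

```python
import json
from typing import Callable, Dict

Handler = Callable[[dict], str]
HANDLERS: Dict[str, Handler] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a function as the handler for an event type."""
    def register(func: Handler) -> Handler:
        HANDLERS[event_type] = func
        return func
    return register


@on("payment.succeeded")
def mark_order_paid(payload: dict) -> str:
    # A real handler would update a database; here we only describe the action.
    return f"order {payload['order_id']} marked as paid"


def handle_webhook(raw_body: str) -> str:
    """Parse the JSON body of a webhook request and dispatch it by event type."""
    event = json.loads(raw_body)
    handler = HANDLERS.get(event.get("type"))
    if handler is None:
        return "ignored"  # unknown events are acknowledged but not acted on
    return handler(event.get("data", {}))


print(handle_webhook('{"type": "payment.succeeded", "data": {"order_id": 42}}'))
```

Keeping the dispatch table separate from the handlers means a new automation can be added by registering one more function, without touching the request-handling code.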

The rise of APIs (Application Programming Interfaces) has further expanded the possibilities of automation in web development. APIs allow different systems and applications to communicate with each other, enabling seamless integration and data exchange. For example, a CRM system can integrate with an email marketing platform via an API, automatically syncing customer data and triggering personalized email campaigns based on user behavior. Payment gateway APIs, such as those provided by Stripe or PayPal, automate the processing of online transactions, reducing the need for manual invoicing and reconciliation. By leveraging APIs, developers can create interconnected ecosystems that operate efficiently and cohesively.

Frontend automation is another area where web development is driving innovation. Modern JavaScript frameworks like React, Angular, and Vue.js enable developers to build dynamic, interactive web applications that respond to user inputs in real-time. Features like form autofill, input validation, and dynamic content loading automate routine tasks, enhancing the user experience and reducing the burden on users. For example, an online booking system can use AJAX (Asynchronous JavaScript and XML) to automatically update available time slots as users select dates, eliminating the need for page reloads and providing a smoother experience. These frontend automation techniques not only improve usability but also increase user engagement and satisfaction.
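For the booking example, the server side of such an AJAX call might look like the following sketch: the page sends the selected date and receives the free time slots as JSON, which the frontend then renders without a reload. The slot data and the names `BOOKED`, `ALL_SLOTS`, `available_slots`, and `slots_response` are invented for illustration.

```python
import json
from datetime import date
from typing import List

# Hypothetical in-memory data; a real system would query a database.
ALL_SLOTS = ["09:00", "10:00", "11:00", "14:00"]
BOOKED = {date(2030, 1, 15): {"10:00", "14:00"}}


def available_slots(day: date) -> List[str]:
    """Return the slots on the given day that are not already booked."""
    taken = BOOKED.get(day, set())
    return [slot for slot in ALL_SLOTS if slot not in taken]


def slots_response(iso_day: str) -> str:
    """Build the JSON body an AJAX request for the given date would receive."""
    day = date.fromisoformat(iso_day)
    return json.dumps({"date": iso_day, "slots": available_slots(day)})


print(slots_response("2030-01-15"))
```

The browser-side code only needs to request this JSON whenever the date changes and redraw the slot list from the response.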

The integration of artificial intelligence (AI) and machine learning (ML) into web development has taken automation to new heights. AI-powered tools can automate complex decision-making processes and deliver personalized experiences at scale. For instance, an e-commerce website can use recommendation engines to analyze user behavior and suggest products tailored to individual preferences. Similarly, chatbots powered by natural language processing (NLP) can handle customer inquiries, provide support, and even process orders, reducing the workload on human agents. These technologies not only enhance efficiency but also enable businesses to deliver more value to their customers.

DevOps practices have also been revolutionized by automation, particularly in the context of continuous integration and continuous deployment (CI/CD). CI/CD pipelines automate the testing, integration, and deployment of code updates, ensuring that new features and bug fixes are delivered to users quickly and reliably. Tools like Jenkins, GitLab CI, and GitHub Actions enable developers to automate these processes, reducing the risk of human error and accelerating the development lifecycle. Additionally, containerization technologies like Docker and orchestration platforms like Kubernetes automate the deployment and scaling of applications, ensuring optimal performance even during peak usage periods.

Security is another critical area where automation is making a significant impact. Automated security tools can monitor web applications for vulnerabilities, detect suspicious activities, and respond to threats in real-time. For example, web application firewalls (WAFs) can automatically block malicious traffic, while SSL/TLS certificates can be automatically renewed to ensure secure communication. Additionally, automated penetration testing tools can identify potential security weaknesses, allowing developers to address them before they can be exploited. These automated security measures not only protect sensitive data but also help businesses comply with regulatory requirements and build trust with their users.

In conclusion, automation is transforming web development by enabling businesses to operate more efficiently, deliver better user experiences, and stay ahead in a competitive market. From backend scripting and API integration to frontend interactivity and AI-driven decision-making, automation is reshaping how applications are built and maintained. As web technologies continue to evolve, the potential for automation will only grow, paving the way for smarter, more responsive, and more secure digital ecosystems. By embracing automation, developers can unlock new levels of efficiency and innovation, driving progress and success in the digital age.

Make order from us: @Heldbcm

Our portfolio: https://www.linkedin.com/company/chimeraflow

0 notes

Text

Mastering QA Automation: Top Courses to Boost Your Testing Skills

Introduction

Software testing and quality assurance play a crucial role in software development. With the increasing demand for robust applications, businesses rely on skilled Quality Assurance (QA) professionals to ensure software meets industry standards. Mastering automation testing can elevate your career, making you a valuable asset in the software industry.

In this guide, we’ll explore some of the best software testing and quality assurance courses to help you build a strong foundation in automation testing. Whether you are a beginner or a seasoned tester, these courses provide comprehensive knowledge and practical insights.

Why QA Automation Matters

With rapid advancements in technology, businesses aim to deliver software faster without compromising on quality. This is where QA automation comes into play. Here’s why learning automation testing is crucial:

Faster Testing Cycles: Automated tests execute faster than manual testing, improving software release speed.

Higher Accuracy: Reduces human error in repetitive checks, giving more consistent test results.

Cost Efficiency: Reduces the need for repetitive manual testing, saving time and resources.

Better Coverage: Automation allows running multiple test cases, covering various scenarios.

Essential Skills for QA Automation

Before diving into the best QA software testing courses, it’s important to understand the core skills you need to succeed in QA automation.

Understanding Software Testing Principles – Knowledge of manual testing, test plans, and test cases.

Programming Basics – Familiarity with programming languages like Java, Python, or JavaScript.

Automation Tools – Experience with Selenium, Appium, JUnit, TestNG, and more.

CI/CD Integration – Understanding of Jenkins, Git, and Docker for continuous testing.

API Testing – Experience with Postman, REST Assured, and SoapUI.

Performance Testing – Knowledge of JMeter or LoadRunner.

By enrolling in a software quality assurance course, you can gain expertise in these key areas and build a rewarding career in QA automation.

Top QA Automation Courses to Enhance Your Skills

1. Comprehensive QA Automation Training

A well-rounded QA software testing course should cover both manual and automation testing. The course should introduce fundamental concepts and gradually progress to advanced automation techniques.

Key Topics Covered:

Introduction to Software Testing Life Cycle (STLC)

Manual Testing vs. Automation Testing

Selenium WebDriver with Java/Python

TestNG and JUnit Frameworks

Behavior-Driven Development (BDD) with Cucumber

API Testing with Postman and REST Assured

Performance Testing using JMeter

Real-World Application:

Companies like Google and Amazon use Selenium for automation testing. Learning these skills can open doors to various job opportunities.

2. Advanced Selenium WebDriver Training

Selenium is one of the most popular automation tools. This course focuses on advanced Selenium features for robust test automation.

Key Topics Covered:

Selenium WebDriver Architecture

Locators and Web Elements

Handling Dynamic Elements and AJAX Requests

Cross-Browser Testing with Selenium Grid

Integration with Jenkins for CI/CD

Headless Browser Testing

Practical Example:

A banking application needs to test its login functionality across multiple browsers. Selenium WebDriver can run the same test on Chrome, Firefox, and Edge, and Selenium Grid lets those runs execute in parallel.

3. API Testing and Automation

API testing is a critical part of QA automation. This course teaches how to test RESTful and SOAP APIs effectively.

Key Topics Covered:

Fundamentals of API Testing

Automating API Tests with Postman

REST Assured Framework

Handling JSON and XML Responses

API Security Testing

Real-World Application:

E-commerce platforms extensively use APIs to process payments. API testing ensures seamless transactions across payment gateways.

4. Performance Testing with JMeter

Performance testing ensures applications can handle heavy traffic without crashes. JMeter is widely used for load testing.

Key Topics Covered:

Introduction to Performance Testing

JMeter Test Plan and Thread Groups

Parameterization and Correlation

Stress Testing and Load Testing

Integrating JMeter with CI/CD Pipelines

Case Study:

A social media platform anticipated high traffic during a product launch. Load testing with JMeter helped optimize server capacity.

5. QA Automation with H2K Infosys

H2K Infosys offers an extensive software quality assurance course, covering end-to-end automation testing. Their curriculum is industry-focused, ensuring practical exposure to real-world projects.

Key Topics Covered:

Software Testing Fundamentals

Selenium WebDriver with Java

API and Database Testing

CI/CD Integration

Agile and Scrum Practices

H2K Infosys provides hands-on training and live projects, helping learners gain job-ready skills.

Benefits of Enrolling in a QA Software Testing Course

Industry-Relevant Curriculum: Gain practical insights and hands-on experience with real-world projects.

Certification Opportunities: Earn industry-recognized certifications to boost your resume.

Career Growth: QA automation testers are in high demand with competitive salaries.

Flexible Learning: Online courses offer flexibility to learn at your own pace.

Job Assistance: Some training programs, like those offered by H2K Infosys, provide job placement support.

Choosing the Right QA Automation Course

When selecting a software quality assurance course, consider these factors:

Course Content: Ensure it covers automation testing fundamentals and advanced techniques.

Hands-On Practice: Practical experience is crucial for mastering automation tools.

Instructor Expertise: Choose courses taught by experienced professionals.

Student Reviews: Check feedback to ensure quality training.

Certification Options: Certifications add credibility to your skillset.

Future of QA Automation

The QA industry continues to evolve with advancements in AI and machine learning. Some future trends include:

AI-Powered Testing: AI-driven tools like Testim and Applitools enhance automation efficiency.

Shift-Left Testing: Early testing integration in the development cycle reduces defects.

Codeless Automation: Tools like Katalon and TestComplete enable automation with minimal coding.

Cloud-Based Testing: Scalable cloud platforms improve testing speed and efficiency.

By staying updated with the latest trends, you can remain competitive in the QA automation field.

Conclusion

Mastering QA automation is essential for a successful career in software testing. By enrolling in a well-structured QA software testing course, you can gain in-demand skills and improve job prospects. Courses offered by H2K Infosys provide hands-on training and industry insights to help you excel in automation testing.

Take the next step in your career—enroll in a software quality assurance course today and become a QA automation expert!

0 notes

Text

DET-Sitefinity Senior Developer - GDSN02

, DEVOPS deployment. PHP, PHP Frameworks like Symfony JavaScript, CSS, Ajax, jQuery MySQL 5.x, Git, XML/XSLT Object… Apply Now

0 notes

Text

Staff Software Engineer (UI/Frontend)

, Bootstrap, React, Redux, Node.js, Vue.js Knowledge of AJAX, JSON, HTML, XML, CSS, SOAP, REST and associated frameworks… Apply Now

0 notes

Text

How to Utilize jQuery's ajax() Function for Asynchronous HTTP Requests

In the dynamic world of web development, user experience is paramount. Asynchronous HTTP requests play a critical role in creating responsive applications that keep users engaged. One of the most powerful tools for achieving this in JavaScript is jQuery's ajax() function. With its straightforward syntax and robust features, ajax() simplifies the process of making asynchronous requests, allowing developers to fetch and send data without refreshing the entire page. In this blog, we'll explore how to effectively use the ajax() function to enhance your web applications.

Understanding jQuery's ajax() Function

At its core, the ajax() function in jQuery is a method that allows you to communicate with remote servers using the XMLHttpRequest object. This function can handle various HTTP methods like GET, POST, PUT, and DELETE, enabling you to perform CRUD (Create, Read, Update, Delete) operations efficiently.

Basic Syntax

The basic syntax for the ajax() function is as follows:

```javascript
$.ajax({
  url: 'your-url-here',
  type: 'GET', // or 'POST', 'PUT', 'DELETE'
  dataType: 'json', // expected data type from server
  data: { key: 'value' }, // data to be sent to the server
  success: function(response) {
    // handle success
  },
  error: function(xhr, status, error) {
    // handle error
  }
});
```

Each parameter in the ajax() function is crucial for ensuring that your request is processed correctly. Let’s break down some of the most important options.

Key Parameters

url: The endpoint where the request is sent. It can be a relative or absolute URL.

type: Specifies the type of request, which can be GET, POST, PUT, or DELETE.

dataType: Defines the type of data expected from the server, such as JSON, XML, HTML, or script.

data: Contains data to be sent to the server, formatted as an object.

success: A callback function that runs if the request is successful, allowing you to handle the response.

error: A callback function that executes if the request fails, enabling error handling.

Making Your First AJAX Request

To illustrate how to use jQuery’s ajax() function, let’s create a simple example that fetches user data from a placeholder API. You can replace the URL with your API endpoint as needed.

```javascript
$.ajax({
  url: 'https://jsonplaceholder.typicode.com/users',
  type: 'GET',
  dataType: 'json',
  success: function(data) {
    console.log(data); // Log the user data
  },
  error: function(xhr, status, error) {
    console.error('Error fetching data: ', error);
  }
});
```

In this example, when the request is successful, the user data will be logged to the console. You can manipulate this data to display it dynamically on your webpage.
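As one possible way to display the fetched data, the sketch below turns the array returned by the placeholder API into an HTML list. The `renderUserList` and `escapeHtml` helpers are hypothetical names introduced for illustration, and the code assumes each user object has `name` and `email` fields, as the jsonplaceholder `/users` response does.

```javascript
// Hypothetical helper: escape text before placing it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Hypothetical helper: build a <ul> from an array of user objects.
// Assumes each user has `name` and `email` fields.
function renderUserList(users) {
  const items = users.map(function (user) {
    return '<li>' + escapeHtml(user.name) + ' (' + escapeHtml(user.email) + ')</li>';
  });
  return '<ul>' + items.join('') + '</ul>';
}
```

Inside the success callback this could be used as `$('#users').html(renderUserList(data));`, where `#users` is an assumed container element on the page.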

Sending Data with AJAX

In addition to fetching data, you can also send data to the server using the POST method. Here’s how you can submit a form using jQuery’s ajax() function:

```javascript
$('#myForm').on('submit', function(event) {
  event.preventDefault(); // Prevent the default form submission
  $.ajax({
    url: 'https://your-api-url.com/submit',
    type: 'POST',
    dataType: 'json',
    data: $(this).serialize(), // Serialize form data
    success: function(response) {
      alert('Data submitted successfully!');
    },
    error: function(xhr, status, error) {
      alert('Error submitting data: ' + error);
    }
  });
});
```

In this snippet, when the form is submitted, the data is sent to the specified URL without refreshing the page. The use of serialize() ensures that the form data is correctly formatted for transmission.
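To make the serialize() step more concrete: it produces a URL-encoded string from the form's fields. The sketch below approximates that output without jQuery, using the standard `URLSearchParams` class. The `encodeFormFields` helper and the field names are assumptions for illustration, not part of jQuery.

```javascript
// Hypothetical helper: encode name/value pairs as a URL-encoded string,
// the same general format that serialize() sends to the server.
function encodeFormFields(fields) {
  const params = new URLSearchParams();
  for (const [name, value] of Object.entries(fields)) {
    params.append(name, value);
  }
  return params.toString();
}

// Example field names are assumptions for illustration.
const body = encodeFormFields({ name: 'Ada Lovelace', email: 'ada@example.com' });
console.log(body); // name=Ada+Lovelace&email=ada%40example.com
```

Spaces become `+` and reserved characters such as `@` are percent-encoded, which is why the server can split the string back into fields safely.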

Benefits of Using jQuery's ajax() Function

Simplified Syntax: The ajax() function abstracts the complexity of making asynchronous requests, making it easier for developers to write and maintain code.

Cross-Browser Compatibility: jQuery handles cross-browser issues, ensuring that your AJAX requests work consistently across different environments.

Rich Features: jQuery provides many additional options, such as setting request headers, handling global AJAX events, and managing timeouts.
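As a rough sketch of the extra options mentioned in the list above, the helper below merges per-request settings with shared defaults for `timeout` and `headers`, both of which are $.ajax() settings. The `buildAjaxSettings` helper, the default values, and the endpoint are assumptions for illustration.

```javascript
// Assumed defaults shared by every request in this sketch.
const DEFAULT_SETTINGS = {
  dataType: 'json',
  timeout: 5000, // milliseconds before the request is reported as timed out
  headers: { Accept: 'application/json' }
};

// Hypothetical helper: combine defaults with per-request overrides.
// Headers are merged separately so one override does not drop the rest.
function buildAjaxSettings(overrides) {
  const merged = Object.assign({}, DEFAULT_SETTINGS, overrides);
  merged.headers = Object.assign({}, DEFAULT_SETTINGS.headers, overrides.headers);
  return merged;
}

const settings = buildAjaxSettings({
  url: '/api/items', // assumed endpoint
  headers: { 'Accept-Language': 'en' }
});
// In a page with jQuery loaded, this object could be passed as $.ajax(settings).
```

Keeping the defaults in one object means a timeout or header change is made once rather than in every call site.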

Cost Considerations for AJAX Development

When considering AJAX for your web application, it’s important to think about the overall development costs. Using a mobile app cost calculator can help you estimate the budget required for implementing features like AJAX, especially if you’re developing a cross-platform app. Knowing your costs in advance allows for better planning and resource allocation.

Conclusion

The ajax() function in jQuery is a powerful tool that can significantly enhance the user experience of your web applications. By enabling asynchronous communication with servers, it allows developers to create dynamic and responsive interfaces. As you delve deeper into using AJAX, you’ll discover its many advantages and how it can streamline your web development process.

Understanding the difference between AJAX and jQuery is also vital as you progress. While AJAX is a technique for making asynchronous requests, jQuery is a library that simplifies this process, making it more accessible to developers. By mastering these concepts, you can elevate your web applications and provide users with the seamless experiences they expect.

0 notes

Text

5 Most Common Google Indexing Issues on Large Websites

Even the best digital marketing agency in the game will agree that running large websites with thousands of pages and URLs can be tricky. Site growth comes with its own set of SEO challenges, and page indexing often ranks at the top.

A poorly indexed website is a bit like sailing in the dark—you might be out there, but no one can spot you.

Google admits it, too. To them, the web is infinite, and proper indexing gives the search engine a compass to navigate. Of course, since the web is boundless, not every page can be indexed. So, when traffic dips, an indexing issue could be the culprit. From duplicate content to poorly made sitemaps, here’s the lowdown on Google’s most common indexing issues, with insights from our very own SEO expert.

Duplicate Content

It’s one of the most common Google indexing issues on larger sites. “In simple words, it’s content that’s often extremely similar or identical on several pages within a website, sometimes across different domains,” says First Page SEO Expert Selim Goral. Take an e-commerce website, for instance; with countless product pages and similar descriptions, getting indexed can be a real headache.

The fix? Use tools like canonical tags. They help indicate specific or preferred pages. “Add meta no-index tags to the pages with thin content and ensure your taxonomy pages have no-index tags. Adding rel nofollow tags to faceted navigations will also show search engine bots whether you care about faceted pages or not,” suggests Goral. It also helps to merge your content, making it concise enough to fill one page.
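One way to picture what a canonical tag points at: many faceted or tracking URLs collapse to a single preferred URL. The sketch below derives that URL by dropping query parameters that only filter or track. The `canonicalUrl` and `canonicalTag` helpers and the parameter list are hypothetical, and which parameters are safe to drop depends on the site.

```javascript
// Assumed list of parameters that create near-duplicate pages.
const IGNORED_PARAMS = ['color', 'size', 'sort', 'utm_source', 'utm_medium'];

// Hypothetical helper: strip ignored parameters to get the preferred URL.
function canonicalUrl(rawUrl) {
  const url = new URL(rawUrl);
  for (const name of IGNORED_PARAMS) {
    url.searchParams.delete(name);
  }
  url.hash = '';
  return url.toString();
}

// Hypothetical helper: the tag to place in the page's <head>.
function canonicalTag(rawUrl) {
  return '<link rel="canonical" href="' + canonicalUrl(rawUrl) + '">';
}

console.log(canonicalTag('https://shop.example.com/shoes?color=red&sort=price'));
```

Parameters that change the actual content, such as a page number, are left in place so distinct pages keep distinct canonical URLs.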

Crawl Budget Limitations

What exactly is a crawl budget? “It’s just the number of pages a search engine crawls and indexes on a website within a given timeframe,” explains Goral. Larger websites need more resources to achieve a 100% indexing rate, making an efficient crawl budget critical. When your crawl budget is drained, some essential pages, especially those deeper in the site’s structure, might not get indexed.

So, how do you tackle this? For starters, use robots.txt to guide bots to crawl specific pages. Block pages that are not critical for search using robots.txt; this keeps bots from spending crawl budget on them and lowers the chance of them being indexed. Goral suggests monitoring your log files to make sure search engine bots are not getting stuck on particular pages while they crawl your website.
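The log monitoring idea can be sketched as a small script that counts crawler requests per path, so pages that absorb a disproportionate share of the crawl stand out. This is only an illustration: the `countBotHits` helper is hypothetical, and it assumes access log lines that contain a quoted request such as `"GET /path HTTP/1.1"` and a user agent that includes "Googlebot".

```javascript
// Hypothetical helper: count Googlebot requests per path.
// Assumes each log line contains a quoted request such as "GET /path HTTP/1.1"
// and that the crawler identifies itself with "Googlebot" in the user agent.
function countBotHits(logLines) {
  const hits = new Map();
  for (const line of logLines) {
    if (!line.includes('Googlebot')) continue;
    const match = line.match(/"(?:GET|HEAD|POST) (\S+) HTTP\/[\d.]+"/);
    if (!match) continue;
    const path = match[1];
    hits.set(path, (hits.get(path) || 0) + 1);
  }
  // Sort so the most-crawled paths come first.
  return Array.from(hits.entries()).sort((a, b) => b[1] - a[1]);
}
```

User agent strings can be spoofed, so a count like this is a starting point for investigation rather than proof of what the real crawler did.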

Quality of Content

Google’s Gary Illyes says the final step in indexing is ‘index selection,’ which relies heavily on the site’s quality based on collected signals. “These signals vary, and there is not one simple formula that works for every SERP (Search Engine Result Page). Adding information to a service page can sometimes improve rankings, but it can also backfire. Managing this balance is a key responsibility of an SEO tech”, says Goral.

One of Google’s priorities this year is to crawl content that “deserves” to be crawled and deliver only valuable content to users, which is why focusing on the quality of your site’s content is critical.

XML Sitemap Issues

We cannot emphasize this enough: sitemaps are essential to SEO success, so it’s important to execute them well. Google says XML sitemaps work best for larger websites, but with frequently changing URLs and constant content modifications, incomplete sitemaps are inevitable and can mean missing pages in search results.

The fix? “Sitemap issues are one of the most common Google indexing issues. If your sitemap is too big, try breaking it into smaller, more organized sitemaps. It makes it easier for search engines and their bots to process your page”, suggests Goral.
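Breaking up a large sitemap can be sketched as follows. The sitemaps.org protocol caps a single sitemap file at 50,000 URLs, so the code splits a URL list into chunks of that size and builds a sitemap index pointing at each chunk. The helper names and the `sitemap-N.xml` file naming are assumptions for illustration.

```javascript
const MAX_URLS_PER_SITEMAP = 50000; // limit from the sitemaps.org protocol

// Hypothetical helper: split a long URL list into sitemap-sized chunks.
function chunkUrls(urls, size = MAX_URLS_PER_SITEMAP) {
  const chunks = [];
  for (let i = 0; i < urls.length; i += size) {
    chunks.push(urls.slice(i, i + size));
  }
  return chunks;
}

// Hypothetical helper: build the sitemap index that lists each chunk's file.
// The sitemap-N.xml naming scheme is an assumption.
function buildSitemapIndex(baseUrl, chunkCount) {
  const entries = [];
  for (let n = 1; n <= chunkCount; n++) {
    entries.push('  <sitemap><loc>' + baseUrl + '/sitemap-' + n + '.xml</loc></sitemap>');
  }
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    '</sitemapindex>'
  ].join('\n');
}
```

In practice the chunks are often grouped by section (products, categories, articles) rather than by raw count, which also makes indexing reports easier to read per section.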

JavaScript and AJAX Issues

Many large websites rely on JavaScript and AJAX because they’re crucial for creating dynamic web content and interactions. However, using these technologies can sometimes lead to indexing issues, especially with new content.

For example, search engines might not immediately render and execute JavaScript, inevitably delaying indexing. Also, if search engines can’t interpret or access AJAX dynamic content, it might not get indexed at all.

This insightful information is brought to you by Prowess Marketing. With a strong history of enhancing online visibility, they are the experts you can rely on for cutting-edge digital marketing strategies.

0 notes

Text

Selenium WebDriver Automation Testing

In today’s competitive software development landscape, ensuring the quality and functionality of web applications is critical. Automation testing has emerged as the most efficient way to verify applications across browsers and devices, and Selenium WebDriver stands out as one of the most powerful tools for this task. Its flexibility, open-source nature, and support for a wide range of programming languages have made Selenium WebDriver a favorite among developers and testers alike. In this article, we will explore the capabilities, features, and best practices of Selenium WebDriver automation testing to help you master it.

What is Selenium WebDriver?

Selenium WebDriver is a tool designed for automating the testing of web applications. It enables developers and testers to simulate user interactions with a web browser to validate whether the application performs as expected. Unlike Selenium’s earlier versions, WebDriver interacts directly with the browser, offering more reliable testing by mimicking a real user’s behavior. It supports multiple browsers, including Chrome, Firefox, Edge, and Safari, and can be used with several programming languages like Java, Python, C#, JavaScript, and Ruby.

Key Features of Selenium WebDriver

Cross-Browser Compatibility: Selenium WebDriver supports all major browsers, making it a valuable tool for cross-browser testing. This capability ensures that your web application functions smoothly on different browsers without issues.

Support for Multiple Languages: One of Selenium WebDriver’s strengths is its support for numerous programming languages. Whether you are proficient in Java, Python, or C#, WebDriver allows you to write test scripts in the language you’re most comfortable with.

Scalability: Selenium WebDriver can be integrated with other tools like Selenium Grid for parallel execution of test scripts, which significantly reduces testing time, especially for large-scale applications.

Direct Browser Interaction: WebDriver drives each browser through its native driver rather than injecting JavaScript through a proxy server as Selenium RC did, which makes tests faster and more reliable.

Open-Source: Being open-source, Selenium WebDriver is free to use, and it has a thriving community of developers who continuously contribute to its improvement.

Why Selenium WebDriver for Automation Testing?

There are numerous automation testing tools available, but Selenium WebDriver is widely regarded as the go-to solution for several reasons:

Ease of Use: Selenium WebDriver is relatively easy to set up and use, especially if you are familiar with basic programming.

Robustness: Its ability to handle complex testing scenarios, including dynamic elements and AJAX-based applications, makes it a powerful choice.

Integration with CI/CD Pipelines: Selenium integrates seamlessly with continuous integration/continuous deployment (CI/CD) tools like Jenkins, allowing automated tests to be run as part of the deployment process.

Community Support: Selenium’s large community offers extensive resources, tutorials, and plugins that facilitate easier learning and faster troubleshooting.

Setting Up Selenium WebDriver for Testing

To get started with Selenium WebDriver, follow these steps:

Step 1: Install WebDriver for the Desired Browser

First, you need to install the WebDriver specific to the browser you intend to automate. For instance:

ChromeDriver for Chrome: Download it from the official ChromeDriver downloads page maintained by the Chromium project, which the Selenium documentation links to.

GeckoDriver for Firefox: Available from Mozilla’s repository.

```bash
# Install the Selenium WebDriver bindings for Node.js
# (browser drivers such as ChromeDriver are a separate download)
npm install selenium-webdriver
```

Step 2: Configure the Development Environment

Once you have the WebDriver downloaded, set up the programming environment. For example, in Java, you would create a new Maven or Gradle project and include Selenium dependencies:

```xml
<dependency>
    <groupId>org.seleniumhq.selenium</groupId>
    <artifactId>selenium-java</artifactId>
    <version>4.0.0</version>
</dependency>
```

Step 3: Writing Test Scripts

The next step is to write test scripts that interact with the application under test. In this example, we’ll use Java to automate a simple login page test:

```java
import org.openqa.selenium.By;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.chrome.ChromeDriver;

public class LoginTest {
    public static void main(String[] args) {
        // Set the path of the ChromeDriver
        System.setProperty("webdriver.chrome.driver", "path/to/chromedriver");
        WebDriver driver = new ChromeDriver();
        driver.get("https://example.com/login");

        // Find the username and password fields
        driver.findElement(By.id("username")).sendKeys("testuser");
        driver.findElement(By.id("password")).sendKeys("password123");

        // Submit the login form
        driver.findElement(By.id("loginButton")).click();

        // Check if login was successful
        String expectedUrl = "https://example.com/dashboard";
        if (driver.getCurrentUrl().equals(expectedUrl)) {
            System.out.println("Login Successful");
        } else {
            System.out.println("Login Failed");
        }
        driver.quit();
    }
}
```

Step 4: Running the Tests

After writing the script, execute the test to see whether the application functions as expected. Selenium WebDriver automatically launches the browser, performs the actions defined in the script, and then closes the browser.

Step 5: Generating Test Reports

You can use test frameworks like TestNG or JUnit to generate detailed reports that offer insights into pass/fail status, execution times, and any errors encountered.

Best Practices for Selenium WebDriver Automation Testing

1. Use Explicit Waits Over Implicit Waits

When testing dynamic web applications, using explicit waits is crucial for ensuring that your test script waits for a specific condition to be met before proceeding. This is much more reliable than implicit waits, which may cause unnecessary delays.

```java
// Selenium 4 takes a java.time.Duration for the timeout
WebDriverWait wait = new WebDriverWait(driver, Duration.ofSeconds(10));
wait.until(ExpectedConditions.visibilityOfElementLocated(By.id("elementID")));
```

2. Modularize Your Code

Keep your test scripts clean and maintainable by dividing your code into modules. Use Page Object Model (POM) to separate the logic of the test from the UI elements.
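A minimal sketch of the Page Object Model idea, written here in JavaScript: the page class owns the locators and actions, and the test only calls `login`. It assumes a driver whose `findElement`, `sendKeys`, and `click` are asynchronous, in the style of the selenium-webdriver Node.js bindings, and it uses `{ id: ... }` locator hashes, which those bindings accept as shorthand for `By.id(...)`. The `LoginPage` class and the stand-in `createFakeDriver` are hypothetical names for illustration.

```javascript
// Page object: keeps locators and page actions in one place.
class LoginPage {
  constructor(driver) {
    this.driver = driver;
    // Element IDs taken from the login example; adjust to the real page.
    this.usernameField = { id: 'username' };
    this.passwordField = { id: 'password' };
    this.loginButton = { id: 'loginButton' };
  }

  async login(username, password) {
    await (await this.driver.findElement(this.usernameField)).sendKeys(username);
    await (await this.driver.findElement(this.passwordField)).sendKeys(password);
    await (await this.driver.findElement(this.loginButton)).click();
  }
}

// Minimal stand-in driver so the sketch runs without a browser.
// It only records calls; a real test would pass a WebDriver instance.
function createFakeDriver(log) {
  return {
    async findElement(locator) {
      return {
        async sendKeys(text) { log.push('type ' + locator.id + '=' + text); },
        async click() { log.push('click ' + locator.id); }
      };
    }
  };
}

const calls = [];
new LoginPage(createFakeDriver(calls))
  .login('testuser', 'password123')
  .then(() => console.log(calls.join('\n')));
```

If an element ID changes, only the page object needs updating; every test that calls `login` stays as it is.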

3. Perform Cross-Browser Testing

One of the key strengths of Selenium WebDriver is its support for cross-browser testing. Always verify your application across multiple browsers to ensure consistent behavior.

4. Run Tests in Parallel

Utilize Selenium Grid to run multiple tests in parallel across different browsers and environments. This approach saves time, especially when dealing with large-scale applications.

5. Integrate with CI/CD Tools

Integrating Selenium WebDriver tests with CI/CD tools like Jenkins or GitLab ensures that your test scripts are automatically executed with every code commit, allowing for continuous testing and faster releases.

Challenges in Selenium WebDriver Testing

Although Selenium WebDriver is a powerful tool, it comes with certain challenges:

Handling Dynamic Elements: Web elements that frequently change their IDs or classes can make testing difficult. In such cases, use XPath or CSS selectors to locate elements more reliably.

Pop-up Windows and Alerts: Selenium can handle browser alerts, but handling multiple pop-ups or system-level alerts may require additional handling.

Captchas and Two-Factor Authentication: Automated testing cannot bypass captchas or two-factor authentication, and you’ll need to collaborate with developers to create a test environment with these features disabled.

Conclusion

Mastering Selenium WebDriver Automation Testing can significantly improve the speed, accuracy, and efficiency of your web application testing efforts. With its cross-browser capabilities, support for multiple languages, and integration with CI/CD pipelines, it is the ideal choice for modern software testing. Following best practices and understanding the challenges that come with automation will set you on the path to becoming a Selenium WebDriver expert.

0 notes