#AzureKubernetesServices


Advanced Container Networking Services Features Now In AKS

With Advanced Container Networking Services, now generally available, you can improve the operational and security capabilities of your Azure Kubernetes Service (AKS) clusters.

Containers and Kubernetes are now the foundation of modern application deployments, driven by the growing popularity of cloud-native technologies. Containerized, microservices-based workloads are more portable, resource-efficient, and easy to scale. By using Kubernetes to manage these workloads, organizations can deploy cutting-edge AI and machine learning applications across a variety of compute resources and greatly increase operational productivity at scale. As application design evolves, deep observability and built-in granular security controls are in high demand, but the ephemeral nature of containers makes them hard to achieve. Azure Advanced Container Networking Services can help with that.

Advanced Container Networking Services for Azure Kubernetes Service (AKS), a cloud-native solution designed specifically to improve security and observability for Kubernetes and containerized environments, is now generally available. Its main goal is to deliver a smooth, integrated experience that lets you maintain a strong security posture and gain comprehensive insights into your network traffic and application performance. This ensures that your containerized apps are not only secure but also meet your performance and reliability goals, so you can manage and scale your infrastructure with confidence.

Let’s examine this release’s observability and container network security features.

Container Network Observability

Kubernetes excels at orchestrating and managing diverse workloads, but one significant challenge remains: how do you gain meaningful insight into the interactions between these services? Ensuring reliability and security requires monitoring the network traffic of microservices, tracking performance, and understanding the dependencies between components. Without this level of insight, performance problems, outages, and even potential security threats can go unnoticed.

To fully evaluate how well your microservices are performing, you need more than virtual network logs and basic cluster-level metrics. Thorough network observability requires granular network metrics, including node-level, pod-level, and Domain Name System (DNS)-level insights. Teams can use these metrics to track the health of each cluster service, troubleshoot problems, and locate bottlenecks.

To address these challenges, Advanced Container Networking Services offers observability features designed specifically for Kubernetes and containerized environments. It provides real-time, comprehensive insights spanning node-level, pod-level, Transmission Control Protocol (TCP), and DNS-level metrics, so that no part of your network goes unexamined. These metrics are essential for locating performance bottlenecks and fixing network problems before they affect workloads.

The network observability features of Advanced Container Networking Services include:

Node-level metrics: These metrics report traffic volume, connection counts, dropped packets, and more, broken down by node. The metrics are stored in Prometheus format and can be viewed in Grafana.

Hubble metrics (DNS and pod-level metrics): Advanced Container Networking Services uses Hubble to collect data and enriches it with Kubernetes context, such as source and destination pod names and namespace information, which makes it possible to pinpoint network-related problems more precisely. The metrics cover traffic volume, dropped packets, TCP resets, L4/L7 packet flows, and more. DNS metrics covering DNS errors and unanswered DNS requests are also included.

Hubble flow logs: Flow logs offer insight into workload communication, which makes it easier to understand how microservices talk to each other. Flow logs also help answer questions such as: Did the server receive the client's request? How long did the server take to respond to the client's request?

Service dependency map: Hubble UI is another tool for visualizing this traffic flow; it displays flow logs for the chosen namespace and builds a service-connection graph from the flow logs.
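As a rough illustration of what "metrics in Prometheus format" means in practice, the sketch below parses a small sample of the Prometheus text exposition format and totals dropped packets per node. The metric name `example_node_dropped_packets_total` and its labels are hypothetical placeholders, not the names the service actually exports, and the parser is deliberately simplified (labeled samples only, no escaped quotes or timestamps).

```python
import re
from collections import defaultdict

# Hypothetical sample in Prometheus text exposition format. The metric and
# label names are illustrative assumptions, not real exported names.
SAMPLE = """\
# HELP example_node_dropped_packets_total Packets dropped per node.
# TYPE example_node_dropped_packets_total counter
example_node_dropped_packets_total{node="node-a",direction="ingress"} 12
example_node_dropped_packets_total{node="node-a",direction="egress"} 3
example_node_dropped_packets_total{node="node-b",direction="ingress"} 0
"""

# Matches lines of the form: metric_name{label="value",...} number
LINE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$'
)
LABEL = re.compile(r'(\w+)="([^"]*)"')


def parse_samples(text):
    """Return (metric_name, labels_dict, value) tuples, skipping comments."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE.match(line)
        if match is None:
            continue  # simplified parser: ignore anything it does not understand
        labels = dict(LABEL.findall(match.group("labels")))
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def drops_per_node(samples, metric="example_node_dropped_packets_total"):
    """Sum the chosen counter across all label combinations, grouped by node."""
    totals = defaultdict(float)
    for name, labels, value in samples:
        if name == metric:
            totals[labels.get("node", "unknown")] += value
    return dict(totals)


if __name__ == "__main__":
    print(drops_per_node(parse_samples(SAMPLE)))
```

In a real deployment Grafana would query Prometheus directly; the point here is only that each sample is a metric name, a set of labels (such as the node), and a numeric value.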

Container Network Security

One of the main challenges in container security is that Kubernetes permits all communication between endpoints by default, which poses significant security risks. Advanced Container Networking Services with Azure CNI powered by Cilium enables fine-grained network controls based on Kubernetes identities, so that only authorized traffic is permitted and endpoints are secured.

Typical network policies use IP-based rules to regulate external traffic, yet external services regularly change IP addresses. This makes it challenging to enforce consistent security for workloads that communicate outside the cluster. With the fully qualified domain name (FQDN) filtering and security agent DNS proxy in Advanced Container Networking Services, network policies are insulated from IP address changes.

FQDN filtering and security agent DNS proxy

The Cilium Agent and the security agent DNS proxy are the two primary parts of the solution. When combined, they provide for more effective and controllable management of external communications by easily integrating FQDN filtering into Kubernetes clusters.
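The idea behind FQDN filtering can be sketched as a small conceptual model: a policy lists allowed domain names, a DNS proxy observes the answers to DNS lookups, and the set of permitted destination IPs is refreshed from those answers instead of being hard-coded. This is only an illustration under stated assumptions, not the product's implementation: the class, the function names, and the example domains are hypothetical, and the wildcard semantics are those of Python's `fnmatch`, which may differ from the real policy engine.

```python
from fnmatch import fnmatchcase

# Hypothetical allow-list; the domain patterns are placeholders.
ALLOWED_FQDN_PATTERNS = ["api.example.com", "*.storage.example.com"]


def normalize(name):
    """Lower-case a DNS name and drop the trailing root dot, if any."""
    return name.rstrip(".").lower()


def fqdn_allowed(name, patterns=ALLOWED_FQDN_PATTERNS):
    """Return True if the name matches any allowed pattern."""
    host = normalize(name)
    return any(fnmatchcase(host, normalize(pattern)) for pattern in patterns)


class DnsAwareAllowList:
    """Toy model of a policy that follows DNS answers instead of fixed IPs."""

    def __init__(self, patterns):
        self.patterns = patterns
        self.allowed_ips = set()

    def observe_dns_answer(self, name, ips):
        # Called for each DNS response seen by the (modelled) DNS proxy.
        # Only answers for allowed names contribute permitted IPs.
        if fqdn_allowed(name, self.patterns):
            self.allowed_ips.update(ips)

    def egress_permitted(self, dest_ip):
        return dest_ip in self.allowed_ips


if __name__ == "__main__":
    policy = DnsAwareAllowList(ALLOWED_FQDN_PATTERNS)
    policy.observe_dns_answer("api.example.com.", ["203.0.113.10"])
    # The external service moves to a new address; the policy follows the name.
    policy.observe_dns_answer("api.example.com.", ["203.0.113.42"])
    print(policy.egress_permitted("203.0.113.42"))
```

A production implementation would also expire IPs when DNS records time out; that is omitted here to keep the sketch short.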

Read more on Govindhtech.com


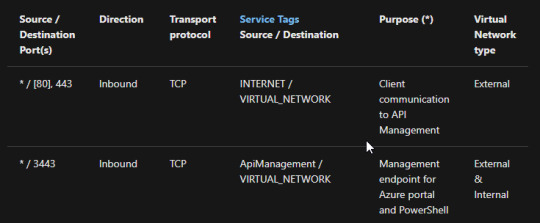

How to install AKS with internal ingress controller and API Management – Part III https://www.devopscheetah.com/how-to-install-aks-with-internal-ingress-controller-and-api-management-part/?feed_id=900&_unique_id=60e3b4e62bd72


Sample K8s ARM Templates for Infrastructure as Code (IaC) Deployments

I recently rolled off a customer engagement where I generated a lot of sample infrastructure as code ARM Templates for Kubernetes clusters in Azure (a.k.a. AKS, which is short for Azure Kubernetes Service). These templates mixed the AKS clusters with networking resources that fall on the PaaS side of things (namely Application Gateways and API Management Gateways). Ultimately it was a bit of a learning curve even for me, and I have become fairly proficient in cranking out ARM Templates. Some of the hurdles I had to overcome are as follows: I had to figure out how to pass an SSL cert to an Application Gateway, how to stand up an echo service within a Kubernetes cluster using an internal NGINX ingress controller so the Application Gateway would pass traffic, and how to build an API Management Gateway with the right health check API + health check probe to successfully pass traffic.

The easiest thing to do next is to examine all the code that I've presented to the customer as example code: Reference Architecture with ARM Templates - Azure Kubernetes Service

The code itself is outlined in the following way (folder by folder - note the ReadMe's go into this detail as well):

1) setup folder - walks through how to set up a local environment (or the Azure Cloud Shell) with PowerShell, the Azure CLI, the Kubernetes CLI, the Helm CLI, enabling Windows Subsystem for Linux (if required), and how to generate a Service Principal for RBAC permissioning on the cluster.

2) keyVault folder - walks through how to set up a Key Vault via code, plus input secrets into the Key Vault for retrieval during the ARM Templates. Using Key Vault means your passwords are never stored in the code you generate, which is a must for all of us in this software defined world.

3) k8s folder - this folder sets up a K8s cluster on Azure using your Service Principal password stored as a Key Vault secret, has the K8s cluster report to an existing Log Analytics workspace, and walks you through how to set up an internal ingress controller. All of this can be adjusted to fit your deployment needs, meaning you can add more pods, increase the node size, etc.

4) appGw folder - this folder sets up a K8s cluster the same way as the k8s folder template files and adds an Application Gateway into the mix. I will call out the specifics for this folder down below related to what I learned over the course of deploying a cluster behind an Application Gateway.

5) apim-appGw folder - this folder sets up a K8s cluster the same way as the k8s folder template files and adds an Application Gateway + API Management Gateway in front of the cluster. Like with the Application Gateway folder, I'll call out specifics for this deployment down below.

From there, I wanted to call out the few learnings here in greater detail:

1) Application Gateways require .pfx certs, which in turn require a password. After stumbling through various deployments using .pem, .cer, etc. for certificate file formats, I found a short sentence on Stack Overflow (that I can't find now), which stated Application Gateways need .pfx certs. Ultimately that makes sense to me, given .pfx certs are used for public and private encryption in most deployments I've supported in former roles. I still wound up getting an error about the password being passed incorrectly whenever I'd try to deploy the Application Gateway. I then did a lot of reading in tech forums and noticed someone tried a deployment where they passed the password through as a regular string (not secure string). I plugged that into my Application Gateway ARM Templates and that worked for each deployment going forward. The password is still not stored in code; it's listed in Key Vault and passed through as a regular string at deployment time. That's not called out too clearly, so hopefully that saves someone some cycles in the future.

2) In order for the Application Gateway's backend health to report healthy using just the K8s cluster, an echo service needed to be deployed on a pod within the cluster. From there, I created a custom probe for the echo API service. I stumbled across the internal NGINX GitHub and altered the following yaml service file to fit my needs: Echo API Service - NGINX

The basic gist is that I took out the authorization components of the echo API service yaml definition. Surprisingly, that worked for purposes of standing up baseline K8s infrastructure where all requests hitting the Application Gateway will be sent to the internal ingress controller based upon the rules I configured for this specific deployment. So at the end of this deployment, you will have a 3 node K8s cluster, 1 pod with the echo API service, an internal ingress controller, an Application Gateway, and custom probes that point to the echo API service on the K8s cluster.

3) For the API Management Gateway, I configured a health check API service on the gateway and pointed the Application Gateway to the health check with a custom probe.

Note, there are a few things in public preview at the moment related to WAF, Managed Identities for AKS, and making the WAF your internal ingress controller. Since my customer needed a production ready solution, this sample code is current as of July 2019 (and will probably get some revisions as time unfolds). I also anticipate going into detail about the Application Gateway and APIM deployments in a future blog post. For now, bookmark this blog post, clone/save the repo, and give it a whirl!
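To make the first learning a little more concrete, here is a minimal sketch of what the relevant pieces of such a template might look like, built as Python dictionaries and printed as JSON. It is not taken from the repo: the parameter names `certData` and `certPassword`, the certificate name, and the wrapper key `applicationGatewayProperties` are hypothetical. The only points it illustrates are the ones described above, namely that the .pfx content is supplied as base64 text and the password parameter is declared as a plain `string` rather than a `securestring`.

```python
import base64
import json


def pfx_to_base64(pfx_bytes: bytes) -> str:
    """Encode raw .pfx bytes as base64 text for the certificate data field."""
    return base64.b64encode(pfx_bytes).decode("ascii")


def build_template_fragment(cert_name: str = "exampleCert") -> dict:
    """Build an illustrative fragment: parameter declarations plus the
    sslCertificates entry that consumes them. All names are hypothetical."""
    return {
        "parameters": {
            "certData": {"type": "string"},
            # Declared as a regular string, as described in the post.
            "certPassword": {"type": "string"},
        },
        "applicationGatewayProperties": {
            "sslCertificates": [
                {
                    "name": cert_name,
                    "properties": {
                        "data": "[parameters('certData')]",
                        "password": "[parameters('certPassword')]",
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template_fragment(), indent=2))
    # Placeholder bytes only; a real deployment would read an actual .pfx file.
    print(pfx_to_base64(b"not-a-real-pfx"))
```

The actual values would still come from Key Vault at deployment time, so nothing sensitive needs to live in the template itself.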


Deploy Docker image to Kubernetes Cluster | CI-CD for Azure Kubernetes Service http://ehelpdesk.tk/wp-content/uploads/2020/02/logo-header.png Learn how to configure CI/CD pip...


Azure Kubernetes Service (AKS) makes it simple to deploy and manage a Kubernetes cluster in Azure. Microsoft has announced the general availability of support for running Windows applications in containers on AKS.


Advanced Network Observability: Hubble for AKS Clusters

Advanced Container Networking Services

Advanced Container Networking Services is a new offering from Microsoft’s Azure Container Networking team, following the successful open sourcing of Retina: A Cloud-Native Container Networking Observability Platform. It is a suite of services built on top of the existing networking solutions for Azure Kubernetes Service (AKS) and designed to address difficult observability, security, and compliance challenges. Advanced Network Observability, the first feature in this suite, is currently available in public preview.

Advanced Container Networking Services: What Is It?

Advanced Container Networking Services is a suite of services designed to greatly improve the operational capabilities of your Azure Kubernetes Service (AKS) clusters. The suite is comprehensive and built to handle the complex and varied requirements of modern containerized applications. With capabilities designed specifically for security, compliance, and observability, customers can unlock a new way of managing container networking.

The primary goal of Advanced Container Networking Services is to provide a smooth, integrated experience that gives you the ability to uphold strong security postures, guarantee thorough compliance, and obtain insightful information about your network traffic and application performance. This lets you grow and manage your infrastructure with confidence knowing that your containerized apps meet or surpass your performance and reliability targets in addition to being safe and compliant.

Advanced Network Observability: What Is It?

Advanced Network Observability, the first feature of the Advanced Container Networking Services suite, brings the power of Hubble’s control plane to both Cilium and non-Cilium Linux data planes. It gives you deep insights into your containerized workloads by unlocking Hubble metrics, the Hubble user interface (UI), and the Hubble command line interface (CLI) on your AKS clusters. With Advanced Network Observability, users can pinpoint and identify the root cause of network-related problems within a Kubernetes cluster.

This feature leverages extended Berkeley Packet Filter (eBPF) technology to collect data in real time from the Linux Kernel and offers network flow information at the pod-level granularity in the form of metrics or flow logs. It now provides detailed request and response insights along with network traffic flows, volumetric statistics, and dropped packets, in addition to domain name service (DNS) metrics and flow information.

eBPF-based observability powered by Cilium or Retina.

A Container Network Interface (CNI)-agnostic experience.

Track network traffic in real time with Hubble metrics to find bottlenecks and performance problems.

Trace packet flows throughout your cluster on demand with Hubble command line interface (CLI) network flows, which can help you diagnose and understand complex networking behaviors.

Visualize network dependencies and interactions between services with an unmanaged Hubble UI to ensure optimal configuration and performance.

Produce comprehensive metrics and records to improve security postures and satisfy compliance requirements.


CNI-agnostic Hubble

Advanced Network Observability extends the Hubble control plane beyond Cilium. In Cilium-based clusters, Hubble receives eBPF events from Cilium. In non-Cilium clusters, Microsoft Retina acts as the data plane that surfaces deep insights to Hubble, giving users a seamless interactive experience.

Visualizing Hubble metrics with Grafana

Advanced Network Observability supports two integration options for visualizing Hubble metrics with Grafana:

Azure Managed Prometheus and Grafana.

Bring your own (BYO) Prometheus and Grafana, if you’re an advanced user who can handle more administration overhead.

With the Azure-managed Prometheus and Grafana option, Azure provides integrated services that streamline the setup and maintenance of monitoring and visualization. Azure Monitor offers a managed instance of Prometheus, which collects and stores metrics from several sources, including Hubble.

Hubble CLI querying network flows

Customers can query for all or filtered network flows across all nodes using the Hubble command line interface (CLI) while using Advanced Network Observability.

Through a single pane of glass, users will be able to discern if flows have been discarded or forwarded from all nodes.
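The kind of question this answers ("which flows were dropped, and on which node?") can be sketched with a few lines of Python over flow records. The record shape below (`node`, `source`, `destination`, `verdict`) and the sample values are assumptions made for illustration; they are not the exact schema emitted by the Hubble CLI.

```python
from collections import Counter

# Hypothetical flow records; field names and values are illustrative only.
FLOWS = [
    {"node": "node-a", "source": "shop/frontend", "destination": "shop/cart", "verdict": "FORWARDED"},
    {"node": "node-a", "source": "shop/frontend", "destination": "shop/payments", "verdict": "DROPPED"},
    {"node": "node-b", "source": "shop/cart", "destination": "data/redis", "verdict": "FORWARDED"},
    {"node": "node-b", "source": "ops/scanner", "destination": "shop/cart", "verdict": "DROPPED"},
]


def filter_flows(flows, verdict=None, namespace=None):
    """Return flows matching the verdict and touching the namespace, if given."""
    selected = []
    for flow in flows:
        if verdict is not None and flow["verdict"] != verdict:
            continue
        if namespace is not None:
            # Endpoints are written as "namespace/pod" in this sketch.
            namespaces = {
                flow["source"].split("/", 1)[0],
                flow["destination"].split("/", 1)[0],
            }
            if namespace not in namespaces:
                continue
        selected.append(flow)
    return selected


def verdicts_by_node(flows):
    """Count flows per (node, verdict) pair for a cluster-wide summary."""
    return Counter((flow["node"], flow["verdict"]) for flow in flows)


if __name__ == "__main__":
    for flow in filter_flows(FLOWS, verdict="DROPPED"):
        print(flow["node"], flow["source"], "->", flow["destination"])
    print(verdicts_by_node(FLOWS))
```

Filtering by verdict and namespace across records from every node is what gives the single-pane view of dropped versus forwarded traffic.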

Hubble UI service dependency graph

To visualize service dependencies, customers can install Hubble UI on clusters that have Advanced Network Observability enabled. Customers can choose a namespace and view network flows between various pods within the cluster using Hubble UI, which offers an on-demand view of all flows throughout the cluster and surfaces detailed information about each flow.
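A service dependency graph of this kind is essentially an aggregation of flow records into edges. The sketch below shows one way to do that aggregation for a chosen namespace; the flow record fields and the sample workloads are hypothetical, and this is not how Hubble UI itself is implemented.

```python
from collections import defaultdict

# Hypothetical flow records written as "namespace/workload"; illustrative only.
FLOWS = [
    {"source": "shop/frontend", "destination": "shop/cart", "verdict": "FORWARDED"},
    {"source": "shop/frontend", "destination": "shop/cart", "verdict": "FORWARDED"},
    {"source": "shop/cart", "destination": "shop/db", "verdict": "FORWARDED"},
    {"source": "shop/frontend", "destination": "shop/payments", "verdict": "DROPPED"},
    {"source": "ops/scanner", "destination": "ops/registry", "verdict": "FORWARDED"},
]


def build_service_graph(flows, namespace):
    """Aggregate flows that touch the namespace into {source: {destination: count}}."""
    graph = defaultdict(lambda: defaultdict(int))
    for flow in flows:
        src_ns = flow["source"].split("/", 1)[0]
        dst_ns = flow["destination"].split("/", 1)[0]
        if namespace not in (src_ns, dst_ns):
            continue
        graph[flow["source"]][flow["destination"]] += 1
    # Convert nested defaultdicts to plain dicts for easy printing and comparison.
    return {src: dict(dsts) for src, dsts in graph.items()}


if __name__ == "__main__":
    for src, dsts in build_service_graph(FLOWS, "shop").items():
        for dst, count in dsts.items():
            print(f"{src} -> {dst} ({count} flows)")
```

Each edge in the resulting dictionary corresponds to a line one would expect to see between two services in a dependency view, with the count indicating how many flows were observed.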

Advantages

Increased network visibility

Unmatched network visibility is made possible by Advanced Network Observability, which delivers detailed insights into network activity down to the pod level. Administrators can keep an eye on traffic patterns, spot irregularities, and get a thorough grasp of network behavior inside their Azure Kubernetes Service (AKS) clusters thanks to this in-depth insight. Advanced Network Observability offers real-time metrics and logs that reveal traffic volume, packet drops, and DNS metrics by utilizing eBPF-based data collecting from the Linux Kernel. The improved visibility guarantees that network managers can quickly detect and resolve possible problems, preserving the best possible network security and performance.

Cross-node network flow tracking

With Advanced Network Observability, customers can monitor network flows across multiple nodes in their Kubernetes clusters. This makes it possible to trace packet flows precisely and to understand complex networking behaviors and node-to-node interactions. Hubble CLI lets users query network flows and filter and examine specific traffic patterns. The ability to trace packets across nodes and see dropped and forwarded packets in a single pane of glass makes cross-node tracking a valuable tool for troubleshooting network problems.

Monitoring performance in real time

Customers can monitor performance in real time using Advanced Network Observability. By integrating Hubble metrics powered by Cilium or Retina, customers can track network traffic in real time and spot performance problems and bottlenecks as they arise. This immediate feedback loop is essential for maintaining high performance and for making sure that any decline in network performance is quickly detected and fixed. The continuous, in-depth insights into network operations provided by Hubble metrics and flow logs enable proactive management and quick troubleshooting.

Multi-cluster historical analysis

When combined with Azure Managed Prometheus and Grafana, the benefits of Advanced Network Observability extend to multi-cluster environments. These include historical analysis, which is crucial for long-term network management and optimization. By archiving and examining past data from several clusters, administrators can find trends, patterns, and recurring problems that may affect future network performance and reliability. This historical perspective is essential for capacity planning, performance benchmarking, and compliance reporting. The ability to review historical network data shows how network performance has changed over time and informs future decisions about network configuration and design.



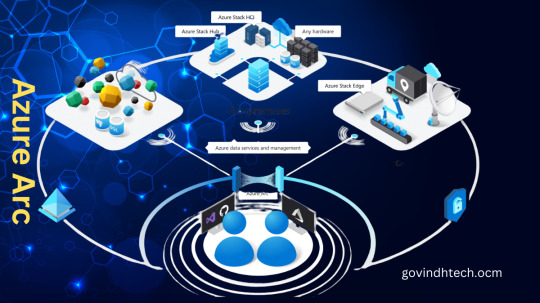

Azure Arc: Bridging the Gap Between On-Premises & the Cloud

Utilize Azure to innovate anywhere

With the help of Azure Arc, you can expand the Azure platform and create services and applications that can operate in multicloud environments, at the edge, and across datacenters. Create cloud-native apps using a unified approach to development, management, and security. Azure Arc is compatible with virtualization and Kubernetes platforms, hardware that is both new and old, Internet of Things gadgets, and integrated systems. Utilize your current investments to modernize with cloud-native solutions, and accomplish more with less.

A consistent development and management experience for running cloud-native applications on any Kubernetes platform, anywhere.

Deployment of cloud-native data services, such as SQL and PostgreSQL, in the environment of your choice for data insights.

Azure governance and security for infrastructure, data, and apps in a variety of settings.

Adaptable connectivity and infrastructure choices to satisfy your latency and regulatory needs.

Create cloud-native applications and use them anywhere

Create and update Kubernetes-based cloud-native applications.

Complement your DevOps toolkit with Azure security, compliance, and monitoring.

GitOps and policy-driven deployment and configuration across environments help decrease errors and boost innovation.

Launch right away using your current workflow and tools, such as Visual Studio, Terraform, and GitHub.

Write to the same application service APIs so they can be used consistently in edge environments with any version of Kubernetes, on-premises, and across multiple clouds.

Reduce costs with Azure Hybrid Benefit, which provides Azure Stack HCI and Azure Kubernetes Service on Windows Server at no additional cost for customers with Windows Server Software Assurance or CSP subscriptions.

Utilize data insights from the edge to the cloud

With an end-to-end solution that includes local data collection, storage, and real-time analysis, you can create applications more quickly.

Integrate data security and governance tools to lower risk exposure and management overhead.

Boost operational effectiveness by using AI tools, services, automations, and consistent data.

Install PostgreSQL (in preview) or an Azure Arc-enabled SQL Managed Instance on any cloud or Kubernetes distribution.

With Azure Machine Learning’s one-click managed machine learning add-on deployment, you can get started in minutes and train models on any Kubernetes cluster.

Protect and manage infrastructure, data, and apps in a variety of settings

Make use of Microsoft Defender for Cloud to get threat detection, response, and analytics on the cloud.

Manage a variety of resources centrally, such as SQL Server, Windows and Linux servers running on Azure, Azure Kubernetes Service, and Azure Arc-enabled data services.

Manage the virtual machine (VM) lifecycle for your VMware and Azure Stack HCI environments from one central location.

Use role-based access control (RBAC) and Azure Lighthouse to manage security policies and delegate access to resources.

Utilize the Azure portal to manage your various environments in order to streamline multicloud administration and increase operational effectiveness.

Adapt to changing regulatory and connectivity requirements

Meet requirements for residency and sovereignty using a range of infrastructure solutions, such as Azure Stack HCI.

Utilize Azure Policy to adhere to governance and compliance standards for data, infrastructure, and apps.

Get low-latency applications with a streamlined edge computing infrastructure.

Operate with full, intermittent, or no internet connectivity.

Services enabled by Azure Arc

Azure Kubernetes Service (AKS)

Install containerized Windows and Linux applications in datacenters and at the edge, and run AKS on customer-managed infrastructures that are supported. To maintain Kubernetes cluster synchronization and automate updates for both new and old deployments, create GitOps configurations. Give your workloads access to features like traffic management, policy, resiliency, security, strong identity, and observability with service mesh.

Application services

Azure App Service, Azure Functions, Azure Logic Apps, Azure API Management, Azure Event Grid, and Azure Container Apps are just a few of the application services available for selection.

Data services

Install essential Arc-enabled data services on-premises, in multicloud environments, or on any Kubernetes distribution, such as SQL managed instances and PostgreSQL.

Machine learning

Train machine learning models and achieve reliability with service-level objectives using Azure Machine Learning training. Use Azure Arc-enabled machine learning to deploy trained models with Azure Machine Learning.

Azure Arc-enabled infrastructure

Servers

Use bare-metal servers, virtual machines (VMs) running Linux and Windows, and other clouds with a consistent server management experience across platforms. You can view and search for noncompliant servers thanks to built-in Azure policies for servers.

Kubernetes

Tag and organize Kubernetes clusters on the container platform of your choice, and add inventory and built-in policies. Use GitOps to deploy applications and configuration as code, with out-of-the-box support for most CNCF (Cloud Native Computing Foundation)-certified Kubernetes distributions.

Azure Stack HCI

Connect your datacenter to the cloud, install cloud-native applications and computational resources at your remote locations, and control everything through the Azure portal. Reuse hardware that satisfies validation requirements, or select from over 25 hardware-validated partners.

VMware

Manage the entire lifecycle of your VMware virtual machines (VMs), and use Azure RBAC to provision and manage them on demand through the Azure portal. Using Azure VMware Solution, Kubernetes clusters, VMware Tanzu Application Service, or your own datacenters, you can access governance, monitoring, update management, and security at scale for VMware virtual machines.

System Center Virtual Machine Manager (SCVMM)

Using Virtual Machine Manager (VMM), configure and oversee the components of your datacenter as a single fabric. Hosts and clusters for VMware and Hyper-V virtualization can be added, configured, and maintained. Find, categorize, provision, assign, and distribute local and remote storage. To build and launch virtual machines (VMs) and services on virtualization hosts, use VMM fabric.

Comprehensive security and compliance, built in

Every year, Microsoft spends more than $1 billion on research and development related to cybersecurity.

More than 3,500 security professionals who are committed to data security and privacy work for us.

Azure Arc cost

Azure Arc is offered at no additional cost for managing Azure Arc-enabled servers and Azure Arc-enabled Kubernetes, although additional Azure management services are charged. Azure Arc-enabled SQL Managed Instance is generally available for an additional fee. Other data and application services are currently in preview and provided at no additional cost.

Start by registering for a free Azure account

Start for free. Receive a $200 credit to use within 30 days. While you have your credit, get free amounts of many of our most popular services, plus free amounts of over 55 other services that are always free.

After your credit, move to pay as you go to keep building with the same free services. You pay only if your monthly usage exceeds your free amounts.

After a year, you’ll still receive over 55 always-free services, and you’ll pay only for what you use beyond your free monthly amounts.



Learning Azure’s GPU Future Strategy

Azure’s GPU future strategy is confidential

Microsoft Azure continually innovates to improve security. One pioneering effort is its collaboration with hardware partners to create a silicon-based foundation that protects data in memory using confidential computing.

Data is created, computed on, stored, and moved. Customers already encrypt their data at rest and in transit, but they have not had the means to protect data in use at scale. Confidential computing is the missing third stage: it protects data in use inside hardware-based trusted execution environments (TEEs), securing data throughout its lifecycle.

Microsoft co-founded the Confidential Computing Consortium (CCC) in September 2019 to protect data in use with hardware-based TEEs. These TEEs protect data at all times by preventing unauthorized access to or modification of applications and data during computation. TEEs guarantee data integrity, data confidentiality, and code integrity. Attestation and a hardware-based root of trust prove the system’s integrity and prevent administrators, operators, and hackers from accessing it.

For workloads that want extra security in the cloud, confidential computing is a foundational defense in depth capability. Verifiable cloud computing, secure multi-party computation, and data analytics on sensitive data sets can be enabled by confidential computing.

Confidential computing has recently become available for CPUs, but it is also needed for GPU-based scenarios that require high-performance computing and parallel processing, such as 3D graphics and visualization, scientific simulation and modeling, and AI and machine learning. Confidential computing can be applied to GPU scenarios that process sensitive data and code in the cloud, in sectors including healthcare, finance, government, and education.

Azure has worked with NVIDIA for years to bring confidential computing to GPUs. This is why Azure previewed confidential VMs with NVIDIA H100-PCIe Tensor Core GPUs at Microsoft Ignite 2023. These virtual machines, together with the growing number of Azure confidential computing (ACC) services, will enable more public cloud innovations that use sensitive and restricted data.

GPU confidential computing unlocks use cases that involve highly restricted datasets and model protection. Scientific simulation and modeling can use confidential computing to run simulations and models on sensitive data such as genomic, climate, and nuclear data without exposing the data or code (including model weights) to unauthorized parties. This can help scientists collaborate and innovate while protecting data.

Image processing, such as medical image analysis, is another use case for confidential computing. Confidential computing allows healthcare professionals to analyze medical images like X-rays, CT scans, and MRI scans using advanced image processing methods like deep learning without exposing patient data or proprietary algorithms. Keeping data private and secure can improve the accuracy and efficiency of diagnosis and treatment. For example, confidential computing can help detect tumors, fractures, and anomalies in medical images.

Given AI’s massive potential, confidential AI refers to a set of hardware-based technologies that provide cryptographically verifiable protection of data and models throughout their lifecycle, including while they are in use. Confidential AI covers several scenarios across the AI lifecycle:

Confidential inferencing. Protects model IP, and protects inferencing requests and responses from the model developer, service operations, and the cloud provider.

Confidential multi-party computation. Organizations can train models and run inference together without sharing their models or data, and can enforce policies on how outcomes are shared.

Confidential training. Model builders can hide model weights and intermediate data, such as checkpoints and gradient updates exchanged between nodes during training. Confidential AI can also encrypt data and models to protect sensitive information during inference.

Confidential computing building blocks

A robust platform with confidential computing capabilities is needed to meet global data security and privacy demands. It starts with innovative hardware and extends to core infrastructure service layers built on Virtual Machines and containers. This foundation is essential for services to move to confidential AI. These building blocks will enable a confidential GPU ecosystem of applications and AI models in the coming years.

Confidential Virtual Machines

Confidential Virtual Machines encrypt data in use, keeping sensitive data safe while it is being processed. Azure was the first major cloud to offer confidential Virtual Machines powered by AMD SEV-SNP based CPUs, with memory encryption that protects data during processing and meets the Confidential Computing Consortium (CCC) standard.

The DCe and ECe virtual machine series offer Intel TDX-powered confidential Virtual Machines that protect data in use. These virtual machines use 4th Gen Intel Xeon Scalable processors to boost performance and enable seamless application onboarding without code changes.

Confidential GPUs extend Azure’s confidential virtual machines. Azure is the sole provider of confidential virtual machines with 4th Gen AMD EPYC processors, SEV-SNP technology, and NVIDIA H100 Tensor Core GPUs, in its NCC H100 v5 series. An encrypted and verifiable connection between the CPU and GPU, together with memory protection mechanisms on both, protects data during processing, so that it is visible only as ciphertext outside CPU and GPU memory.

Confidential containers

Containers are essential for confidential AI scenarios because they are modular, accelerate development/deployment, and reduce virtualization overhead, making AI/machine learning workloads easier to deploy and manage.

Azure has introduced CPU-based confidential containers:

Serverless confidential containers in Azure Container Instances take infrastructure management off organizations’ hands. This lowers the entry barrier for burstable CPU-based AI workloads and protects data privacy with container group-level isolation and AMD SEV-SNP-encrypted memory.

Azure now also offers confidential containers in Azure Kubernetes Service (AKS) to meet customer needs. Organizations can use pod-level isolation and security policies to protect their container workloads while benefiting from Kubernetes’ cloud-native standards. Azure’s hardware partners AMD, Intel, and now NVIDIA have invested in the open-source Kata Confidential Containers project, which has a growing community.

These innovations will eventually need to extend to GPU-based confidential AI.

Road ahead

Hardware innovations take time to mature and replace existing infrastructure. Azure aims to integrate confidential computing seamlessly across its platform, including all virtual machine SKUs and container services. This includes data-in-use protection for confidential GPU workloads in more data and AI services.

Pervasive memory encryption across Azure’s infrastructure will let organizations verify cloud data protection throughout the data lifecycle, eventually making confidential computing the norm.

Read more on Govindhtech.com

#Azure#GPU#MicrosoftAzure#Microsoft#CPUs#MachineLearning#NVIDIAH100#AI#Intel#VirtualMachines#AzureKubernetesService#AMD#4thGenIntelXeonScalableprocessors#technews#technology#govindhtech


Photo

How to install AKS with internal ingress controller and API Management – Part III https://www.devopscheetah.com/how-to-install-aks-with-internal-ingress-controller-and-api-management-part/?feed_id=1498&_unique_id=6125524f0cd52

#azurekubernetesservicepricing#azurekubernetesservices#azurestackkubernetes#microsoftazurekubernetes
