#Computer Vision Applications in 2022


AI in the Fertilizer Industry: Revolutionizing Agriculture with Smart Technology

Artificial Intelligence (AI) is transforming industries worldwide, and the fertilizer sector is no exception. As global populations grow and arable land becomes scarcer, optimizing fertilizer use has become critical for sustainable agriculture. AI technologies are helping fertilizer companies and farmers make smarter decisions, reduce environmental impact, and increase crop yields.

How AI Benefits the Fertilizer Industry

AI brings numerous advantages to the fertilizer sector. Its key applications include:

Precision Formulation: AI algorithms optimize nutrient combinations based on soil data and crop requirements

Smart Manufacturing: Machine learning improves production efficiency and predictive maintenance

Supply Chain Optimization: AI models forecast demand and optimize logistics

Quality Control: Computer vision systems detect product inconsistencies

Field Application: AI-powered equipment enables precision fertilization

Source: Fertilizer Industry AI Adoption Report 2023
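Whatever model recommends a blend, precision formulation ends in a simple step: turning nutrient targets into product rates. The minimal sketch below shows only that arithmetic, not an AI model. It assumes hypothetical per-hectare targets on the usual N-P2O5-K2O basis and three common grades (urea 46-0-0, DAP 18-46-0, MOP 0-0-60); the function name is hypothetical.

```python
# Sketch: convert per-hectare nutrient targets into product rates for a
# three-product blend. Targets are hypothetical; grades use the usual
# N-P2O5-K2O percentages for urea (46-0-0), DAP (18-46-0) and MOP (0-0-60).
UREA_N = 0.46
DAP_N, DAP_P2O5 = 0.18, 0.46
MOP_K2O = 0.60


def blend_rates(n_kg, p2o5_kg, k2o_kg):
    """Return kg/ha of urea, DAP and MOP meeting the N, P2O5 and K2O targets."""
    dap = p2o5_kg / DAP_P2O5            # DAP is the only phosphate source
    mop = k2o_kg / MOP_K2O              # MOP is the only potash source
    n_from_dap = dap * DAP_N            # DAP also contributes nitrogen
    remaining_n = max(n_kg - n_from_dap, 0.0)
    urea = remaining_n / UREA_N         # urea supplies the remaining nitrogen
    return {"urea": urea, "dap": dap, "mop": mop}


rates = blend_rates(n_kg=120, p2o5_kg=60, k2o_kg=60)
for product, kg in rates.items():
    print(f"{product}: {kg:.1f} kg/ha")
```

If the DAP needed for phosphate already exceeds the nitrogen target, this sketch sets urea to zero and accepts the surplus. An optimizing system would instead weigh cost, surplus, and alternative products.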

Emerging Trends in AI for Fertilizers

The fertilizer industry is witnessing several exciting AI developments:

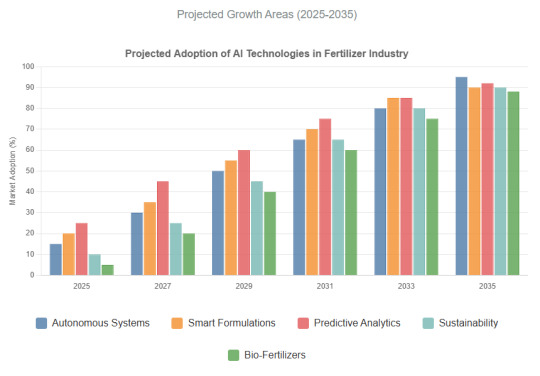

Key Findings:

Overall AI adoption in the fertilizer industry is projected to reach 78% by 2030 (up from 32% in 2023)

Precision Formulation will maintain leadership with 85% adoption expected by 2030

Field Application will be the fastest-growing segment, at a 28% CAGR

2026 will be the tipping point with over 50% of fertilizer companies deploying AI solutions

AI-driven yield optimization could increase fertilizer efficiency by 30-40% by 2030

Hyper-localized Recommendations: AI systems combining satellite imagery, soil sensors, and weather data to provide field-specific fertilizer advice

Autonomous Application Systems: Self-driving equipment that applies precisely the right amount of fertilizer at the optimal time

Carbon Footprint Reduction: AI models helping reduce nitrogen losses and greenhouse gas emissions from fertilizer use

Biological Fertilizer Development: Machine learning accelerating the discovery of new microbial-based fertilizers
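A common input to hyper-localized recommendations is a vegetation index computed from satellite bands. The sketch below computes the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), for each field cell and flags low-vigor cells. The 0.4 threshold, the sample reflectances, and the function names are hypothetical; a production system would also fold in soil-sensor and weather data.

```python
# Sketch: per-cell NDVI from near-infrared (NIR) and red reflectance,
# then flag low-vigor cells as candidates for extra fertilizer.
# Threshold and sample values are hypothetical.

def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def low_vigor_cells(nir_grid, red_grid, threshold=0.4):
    """Return (row, col) positions whose NDVI falls below the threshold."""
    flagged = []
    for r, (nir_row, red_row) in enumerate(zip(nir_grid, red_grid)):
        for c, (nir, red) in enumerate(zip(nir_row, red_row)):
            if ndvi(nir, red) < threshold:
                flagged.append((r, c))
    return flagged


nir = [[0.50, 0.45], [0.30, 0.55]]
red = [[0.08, 0.10], [0.20, 0.06]]
print(low_vigor_cells(nir, red))
```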

The Future of AI in Fertilizers

As AI technologies mature, we can expect even more transformative changes:

Data Source: Market Research & Industry Projections (2023)

Key Emerging Trends

1. Autonomous Fertilization Systems

AI-powered robotic systems that autonomously monitor fields and apply precise amounts of fertilizer based on real-time plant needs, reducing waste by 30-40%.

2. Dynamic Nutrient Formulations

Machine learning algorithms that create customized fertilizer blends adapting to changing soil conditions and crop growth stages throughout the season.

3. Predictive Nutrient Management

Advanced analytics predicting soil nutrient depletion weeks in advance, enabling proactive fertilization strategies.

4. Emission-Reducing Solutions

AI models that optimize application methods to minimize nitrogen losses and greenhouse gas emissions by 25-35%.

5. Next-Gen Bio-Fertilizers

AI-assisted discovery of novel microbial combinations that enhance nutrient absorption while improving soil health.
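Predictive nutrient management can be as simple or as sophisticated as the data allow. As a deliberately simple stand-in for the machine learning models described in the list, the sketch below fits a straight-line trend to soil-nitrate readings and estimates when the level will cross a threshold. The readings, units (mg/kg), threshold, and function names are hypothetical.

```python
# Sketch: fit a least-squares line to periodic soil-nitrate readings and
# estimate the day on which the trend reaches a threshold.
# Needs at least two distinct sampling days. All values are hypothetical.

def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def day_threshold_reached(days, levels, threshold):
    """Day on which the fitted trend reaches the threshold, or None if not declining."""
    slope, intercept = fit_line(days, levels)
    if slope >= 0:
        return None
    return (threshold - intercept) / slope


days = [0, 7, 14, 21]
nitrate = [40.0, 36.5, 31.8, 28.2]
print(day_threshold_reached(days, nitrate, threshold=20.0))
```

A linear trend ignores rainfall, crop uptake curves, and mineralization; it only illustrates the "forecast, then act early" idea.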

Industry experts predict that by 2030, AI could help reduce global fertilizer overuse by 40%, significantly decreasing environmental pollution while maintaining food production levels. The integration of AI with other emerging technologies like blockchain for supply chain transparency and CRISPR for crop optimization will create a new era of precision agriculture.



Why Choose Heriot-Watt University?

Heriot-Watt University stands out as a premier destination for students seeking quality education, innovative research, and a vibrant campus life. Located in Edinburgh, Scotland—one of the most student-friendly cities in the world—Heriot-Watt offers a supportive environment for both local and international students.

Heriot-Watt University: Admission Requirements, Top Departments, Student Enrollments, and Merit Scholarships

Are you considering Heriot-Watt University for your higher education journey? As one of the UK’s leading public universities, Heriot-Watt is renowned for its academic excellence, state-of-the-art facilities, and strong industry connections. If you are searching for the best consultancy in Hyderabad for studying abroad, Edwest Global International Educational Consultants is your trusted partner for end-to-end support. In this blog, we’ll explore everything you need to know about Heriot-Watt University, including admission requirements, top departments, student enrollments, master’s programs, and merit scholarships.

Admission Requirements

Heriot-Watt University offers a streamlined admission process for both undergraduate (UG) and postgraduate (PG) aspirants. The university has two main intakes, September (Fall) and January (Spring), and some programs also offer a May intake. Application deadlines vary by intake.

Key Admission Requirements:

International Students:

Copy of passport (picture page)

Degree certificate or equivalent

Evidence of English language proficiency (IELTS, PTE, or equivalent)

CV and academic references

Statement of purpose

Application Portal:

UG: UCAS

PG: University’s official website

Application Fee:

UG: GBP 22.50 (single course) or GBP 28.50 (multiple choices)

Acceptance Rate: Around 60% (unofficial sources)

Heriot-Watt is recognized for its inclusive environment, with 60% of students reporting no discrimination. The university is also known for its cost-effectiveness and supportive faculty.

Top Departments

Heriot-Watt University is highly regarded for its programs across various disciplines. Some of the top-rated departments include:

Business and Economics

Management

Engineering and Technology

Psychology

Arts and Humanities

Mathematics

Biological Sciences

These departments offer a wide range of undergraduate, postgraduate, and research programs, making Heriot-Watt a preferred choice for students from around the globe.

Student Enrollments

Heriot-Watt’s enrollment statistics reflect its growing reputation and international appeal:

Foundation Programs: Steady growth, indicating strong preparatory support.

Undergraduate (UG) Enrollments: Slight decline in recent years, despite a peak in new admissions in 2022-23.

Postgraduate Taught (PGT) Programs: Overall growth.

Postgraduate Research Courses: Stable, but with fewer students progressing.

Exchange and Non-Graduate Students: Increasing numbers, signaling more international interest and alternative study routes.

The total student population has remained relatively stable, with fluctuations reflecting global trends and the university’s evolving academic offerings.

Master’s Programs: Duration and Highlights

Heriot-Watt offers a diverse portfolio of master’s programs, both on-campus and online. Most on-campus master’s programs are one year in duration, but some, like the MSc Computing, are two years and are designed for students from any academic background.

Popular Master’s Programs Include:

MSc Global Sustainability Engineering

MSc Imaging, Vision and High Performance Computing

MSc Renewable Energy Engineering

MSc Robotics

MSc Toxicology

Applied Cyber Security

Artificial Intelligence (2 years)

Data Science (2 years)

MSc Actuarial Management

Advanced Computer Science

Heriot-Watt Online also offers 25 online master’s degrees in business and STEM subjects, providing flexibility for working professionals and international students.

Merit Scholarships

Heriot-Watt University offers several merit-based scholarships to support outstanding students:

Postgraduate UK Merit Scholarship:

Award Value: 20% tuition fee discount

Eligibility: UK fee status, 2:1 undergraduate degree or higher, full-time enrollment in eligible programs (e.g., MSc Real Estate, MSc Brewing & Distilling, MSc Investment Management)

Application: Submit transcript/award certificate with proof of 2:1 degree

Closing Date: 29 August 2025

Students can only receive one Heriot-Watt funded scholarship per program. These scholarships make studying at Heriot-Watt even more accessible and affordable.

Why Choose Edwest Global International Educational Consultants?

If you are looking for study-abroad or overseas education consultants in Hyderabad, Edwest Global International Educational Consultants stands out as a trusted advisor. With expert guidance on university applications, visa processing, and scholarship opportunities, we help you navigate every step of your journey to Heriot-Watt University and beyond.

Start your international education journey with Edwest Global International Educational Consultants and unlock your potential at Heriot-Watt University!


The Rise of Laser Applications Across Sectors – Market Insights Revealed

Laser Technology Market Growth & Trends

According to a recent report published by Grand View Research, Inc., the global laser technology market is poised for robust growth, with its market size projected to reach USD 32.69 billion by 2030. This market is expected to expand at a compound annual growth rate (CAGR) of 7.8% from 2023 to 2030. Key drivers of this growth include rapid advancements in medical infrastructure and the rising number of cosmetic procedures being performed globally. In particular, the increasing demand for aesthetic laser treatments in emerging economies such as China and India is significantly contributing to the market expansion.

For instance, in November 2022, Apollo Hospitals Enterprise Ltd. in Bengaluru, India, unveiled the country's first Moses 2.0 laser technology system. This advanced system enables bloodless and painless treatments for conditions such as prostate enlargement and large kidney stones, offering a highly effective solution for high-risk patients.

Laser technology also plays a critical role in the evolution of Industry 4.0, which emphasizes smart manufacturing and automation. The integration of laser systems into industrial processes has led to enhanced productivity, cost efficiency, better quality control, and greater scalability. Laser technology supports a wide range of mechanical applications, including laser printing, cutting, welding, engraving, and brazing. Moreover, its convergence with modern technologies—such as the Internet of Things (IoT), cloud computing, artificial intelligence (AI), machine learning, and cyber-physical systems—further broadens its industrial application scope.

A notable example of this trend is the October 2022 launch of HIGHvision by Coherent Corp., a prominent U.S.-based manufacturer of semiconductors and optical components. This smart machine vision system, integrated with Industry 4.0 frameworks, enhances the precision and efficiency of laser welding heads. It is particularly effective in the manufacturing of electric vehicle (EV) motors and batteries, where speed and accuracy are essential.

In the medical field, laser technology has witnessed transformative growth. Medical lasers are non-invasive, high-intensity light sources that can target tissue accurately without causing discoloration or scarring. These devices are now routinely used across various disciplines, including urology, dermatology, ophthalmology, and dentistry. The increasing preference for non-invasive treatments and the rising number of laser-based procedures for disease management are expected to significantly boost market demand.

For example, in October 2021, Quantel Medical, a leading manufacturer of ophthalmic medical devices, launched a dedicated website focused on laser therapies for retinal disorders. The platform serves as a centralized resource, offering access to the latest clinical information, research, and advancements in retinal laser treatments, further demonstrating the growing application of laser technology in healthcare.

Laser Technology Market Report Highlights

North America is witnessing strong market growth due to increased healthcare investment, the expansion of medical infrastructure, and technological advancements across multiple sectors.

The rising adoption of laser technologies in augmented and virtual reality (AR/VR) as well as in LiDAR (light detection and ranging) applications is propelling growth across both consumer electronics and automotive industries.

The implementation of 3D laser technologies has significantly lowered the cost and improved the efficiency of producing prosthetic devices. These technologies offer high precision, minimal contamination, and faster manufacturing times.

The market is further driven by increased demand in healthcare, particularly for non-invasive procedures, and the expanding use of lasers in the fabrication of nanodevices and microdevices—key components in next-generation electronics and medical technologies.


Laser Technology Market Segmentation

Grand View Research has segmented the global laser technology market based on type, product, application, vertical, and region:

Laser Technology Type Outlook (Revenue, USD Million, 2017 - 2030)

Solid-state Lasers

Fiber Lasers

Ruby Lasers

YAG Lasers

Thin-Disk Lasers

Gas Lasers

CO2 Lasers

Excimer Lasers

He-Ne Lasers

Argon Lasers

Chemical Lasers

Liquid Lasers

Semiconductor Lasers

Laser Technology Product Outlook (Revenue, USD Million, 2017 - 2030)

Laser

System

Laser Technology Application Outlook (Revenue, USD Million, 2017 - 2030)

Laser Processing

Macroprocessing

Cutting

Drilling

Welding

Microprocessing

Optical Communications

Optoelectronic Devices

Other Applications

Laser Technology Vertical Outlook (Revenue, USD Million, 2017 - 2030)

Telecommunications

Industrial

Semiconductor & Electronics

Commercial

Aerospace & Defence

Automotive

Healthcare

Other End Users

Laser Technology Regional Outlook (Revenue, USD Million, 2017 - 2030)

North America

US

Canada

Mexico

Europe

Germany

UK

France

Asia Pacific

China

India

Japan

Central & South America

Brazil

Middle East and Africa (MEA)

List of Key Players of the Laser Technology Market

Coherent, Inc.

TRUMPF GmbH + Co. KG

Han's Laser Technology Industry Group Co., Ltd.

Lumentum Holdings Inc.

JENOPTIK AG

Novanta Inc.

LUMIBIRD

Gravotech Marking

Corning Incorporated

Bystronic Laser AG



RFID Sensor Demand Surging Across Global Retail and Healthcare Sectors

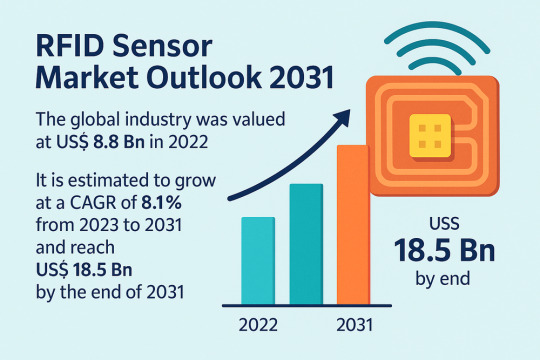

The global Radio Frequency Identification (RFID) Sensor Market is poised for a significant growth trajectory, with market value projected to more than double from US$ 8.8 billion in 2022 to US$ 18.5 billion by the end of 2031. Registering a robust compound annual growth rate (CAGR) of 8.1% during the forecast period (2023–2031), the market is driven by increasing demand across logistics, healthcare, retail, and sports industries.
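Growth figures like these can be sanity-checked with the standard formula CAGR = (end / start)^(1 / years) - 1. The minimal sketch below applies it to the two endpoints quoted above. Note that the report's 8.1% covers 2023–2031 while the US$ 8.8 billion figure is for 2022, so the rate implied by the endpoints need not match exactly. The helper names are hypothetical.

```python
# Sketch: compound annual growth rate (CAGR) and forward projection.
# CAGR = (end / start) ** (1 / years) - 1

def cagr(start, end, years):
    """Constant annual rate that grows `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def project(start, rate, years):
    """Value after compounding `start` at `rate` for `years`."""
    return start * (1 + rate) ** years


# US$ 8.8 billion (2022) to US$ 18.5 billion (2031): nine compounding years
implied = cagr(8.8, 18.5, 2031 - 2022)
print(f"implied CAGR 2022-2031: {implied:.1%}")
print(f"2031 value at 8.1% from a 2022 base: US$ {project(8.8, 0.081, 9):.1f} billion")
```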

Market Overview: RFID sensors are electronic devices that use radio waves to identify and track tags attached to objects. These sensors, which include a tag and a reader, are revolutionizing data collection and real-time tracking. Their ability to reduce manual errors and optimize operations makes them highly valuable in modern digital ecosystems.
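A reader can report the same tag many times while it stays in range, so tracking systems typically collapse repeated reads into events before updating inventory. The sketch below assumes reads arrive as (timestamp, tag ID) pairs sorted by time; the tag IDs, the 5-second window, and the function name are hypothetical and not part of any vendor's API.

```python
# Sketch: collapse repeated RFID reads into distinct "seen" events.
# A read starts a new event only if the same tag was not read within the
# preceding `window` seconds. Tag IDs and timestamps are hypothetical.

def dedupe_reads(reads, window=5.0):
    """reads: (timestamp_seconds, tag_id) pairs sorted by time.
    Returns the (timestamp, tag_id) events kept after de-duplication."""
    last_seen = {}
    events = []
    for ts, tag in reads:
        previous = last_seen.get(tag)
        if previous is None or ts - previous > window:
            events.append((ts, tag))
        last_seen[tag] = ts  # a tag continuously in range extends its event
    return events


reads = [(0.0, "TAG-A"), (0.5, "TAG-A"), (1.0, "TAG-B"), (2.0, "TAG-A"), (9.0, "TAG-A")]
events = dedupe_reads(reads)
print(events)
print(len({tag for _, tag in events}), "distinct tags")
```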

From healthcare to warehouse automation and sports analytics, RFID technology is enhancing performance monitoring, improving patient safety, and boosting inventory visibility globally.

Market Drivers & Trends

1. Logistics & Healthcare Boom: Post-pandemic globalization and the e-commerce surge have amplified demand for efficient supply chain solutions. RFID sensors are being increasingly adopted to streamline warehouse management, reduce operational delays, and enhance real-time tracking.

Healthcare applications—such as medication authentication, patient tracking, and vaccine logistics—have demonstrated RFID’s potential in boosting safety and efficiency. The growing aging population and demand for digital healthcare systems further fuel this trend.

2. Sports Analytics Integration: Athletic performance tracking has embraced RFID sensors for their precision and real-time data capabilities. Embedded in sportswear or equipment, these sensors are particularly advantageous in indoor sports, where GPS signals may falter.

Leagues such as the NHL are deploying RFID systems to track puck and player movements, offering deeper game insights and improving training analytics.

Latest Market Trends

IoT & AI Integration: RFID sensors are increasingly being merged with Internet of Things (IoT) and Artificial Intelligence (AI) technologies. This fusion enhances smart decision-making and predictive analytics in retail, manufacturing, and transportation.

Smart Packaging & Wearables: Companies are designing RFID tags for emerging uses such as smart packaging in retail and embedded sensors in healthcare wearables.

Self-service Retail Innovations: In 2023, Amazon integrated RFID into its Just Walk Out technology, allowing customers to shop and exit without traditional checkout processes. This trend highlights RFID’s transformative potential in enhancing customer experience and operational efficiency.

Key Players and Industry Leaders

The RFID sensor market is characterized by fierce competition and continuous innovation. Leading companies include:

Alien Technology, LLC

Applied Wireless

Avery Dennison Corporation

CAEN RFID S.R.L.

Honeywell International Inc.

Checkpoint Systems, Inc.

Impinj, Inc.

Invengo Information Technology Co. Ltd

Motorola Solutions, Inc.

NXP Semiconductors

Savi Technology

These players are investing in R&D to enhance sensor accuracy, expand RFID reader capabilities, and explore new applications in agriculture, defense, and advertisement sectors.


Recent Developments

Amazon’s Retail Revolution (Sep 2023): The tech giant deployed RFID sensors across its physical retail outlets, integrating AI and computer vision to enable seamless checkout, driving customer convenience and cost reduction.

Expansion in RFID-based Sports Solutions: Wearable RFID devices and clothing-integrated sensors are now commercially available for use in athlete monitoring and training optimization.

Collaborations & Mergers: Companies are engaging in strategic partnerships to strengthen their market position. For instance, partnerships between RFID tech firms and logistics providers are on the rise to build robust, end-to-end tracking solutions.

Market Opportunities

Emerging Economies: Asia Pacific and Latin America present untapped potential due to increasing digitization, supportive government policies, and growth in e-commerce and healthcare sectors.

AI-Powered RFID Systems: Integrating RFID with AI algorithms opens opportunities for predictive supply chain analytics, real-time threat detection in security, and automated inventory audits.

Defense and Aerospace Expansion: RFID is gaining traction in military logistics and equipment tracking, particularly for real-time asset monitoring in critical missions.

Future Outlook

The future of the RFID sensor market is heavily influenced by digitization and automation trends. By 2031, RFID systems are expected to play a foundational role in smart cities, precision agriculture, and autonomous logistics.

Technological advancements such as energy harvesting passive tags and integration with blockchain for data security will shape the next decade. Manufacturers focusing on reducing costs, improving battery life, and enhancing reader-tag communication range will lead the market.

Market Segmentation

The RFID sensor market can be segmented as follows:

By Type:

Active

Passive

By Frequency Range:

Low Frequency (LF)

High Frequency (HF)

Ultra High Frequency (UHF)

By Application:

Access Control

Livestock Tracking

Ticketing

Cashless Payment

Inventory Management

Others

By End-use Industry:

Transportation

Logistics & Supply Chain

Manufacturing

Retail

Healthcare

Aerospace & Defense

Agriculture

Others (Sports, Advertisement, etc.)
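The LF, HF, and UHF labels in the frequency segmentation refer to standard radio bands. As a small illustration, the function below maps a carrier frequency to its ITU band name. The band edges are the standard ITU definitions and the typical RFID frequencies appear in comments; the table and function names are hypothetical.

```python
# Sketch: map a carrier frequency (Hz) to its ITU band name.
BANDS = [
    ("LF", 30e3, 300e3),    # low frequency; RFID commonly 125-134 kHz
    ("MF", 300e3, 3e6),
    ("HF", 3e6, 30e6),      # high frequency; RFID/NFC commonly 13.56 MHz
    ("VHF", 30e6, 300e6),
    ("UHF", 300e6, 3e9),    # ultra high frequency; RFID commonly 860-960 MHz
]


def band_for(freq_hz):
    """Return the band name containing freq_hz, or None if outside the table."""
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return None


for f in (125e3, 13.56e6, 915e6):
    print(f"{f:,.0f} Hz -> {band_for(f)}")
```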

Regional Insights

North America held the largest market share in 2022, driven by technological maturity, regulatory frameworks, and high adoption in healthcare and logistics. The U.S. government’s cyber-security budget (US$ 12.7 Bn in FY2024) is indirectly supporting RFID growth as these sensors are critical to secure and traceable systems.

Asia Pacific is expected to witness the highest growth during the forecast period, propelled by rapid urbanization, digitization policies in countries like India and China, and rising RFID usage in retail and agriculture.

Europe follows closely, supported by stringent regulations around product tracking and data transparency.

Why Buy This Report?

Comprehensive Analysis: Includes detailed insights on market segmentation, regional dynamics, and competitive landscape.

Strategic Forecasting: Reliable market projections and future opportunity mapping to support investment and business decisions.

Innovation Spotlight: Highlights recent technological advancements and key industry developments.

Competitive Intelligence: In-depth profiles of leading players and emerging companies.

Custom Data Models: Access to Excel-based quantitative datasets for tailored analysis.

Frequently Asked Questions

Q1. What is the projected size of the RFID Sensor Market by 2031? A1. The global RFID sensor market is estimated to reach US$ 18.5 billion by 2031.

Q2. Which industries are major adopters of RFID sensors? A2. Logistics, healthcare, retail, sports, and manufacturing are leading industries utilizing RFID sensors.

Q3. What factors are driving market growth? A3. The key drivers include expansion in logistics and healthcare, increasing need for real-time tracking, and the rise of smart retail and sports analytics.

Q4. Who are the key players in the RFID sensor industry? A4. Some top players are Honeywell, Avery Dennison, NXP Semiconductors, Impinj, and Alien Technology.

Q5. Which region leads the global RFID sensor market? A5. North America currently holds the largest market share, with Asia Pacific projected to grow rapidly.


About Transparency Market Research

Transparency Market Research, a global market research company registered in Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of analysts, researchers, and consultants uses proprietary data sources and various tools and techniques to gather and analyze information.

Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact:

Transparency Market Research Inc.

CORPORATE HEADQUARTER DOWNTOWN,

1000 N. West Street,

Suite 1200, Wilmington, Delaware 19801 USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453

Website: https://www.transparencymarketresearch.com



Everything You Need to Know About B.Tech in AI & ML in 2025

Artificial Intelligence (AI) and Machine Learning (ML) are no longer just buzzwords; they are at the forefront of technological innovation, revolutionizing industries across the globe. For aspiring engineers, pursuing a B.Tech in AI and ML offers a pathway to engage with these transformative technologies. Mohan Babu University (MBU) in Andhra Pradesh, India, provides a robust program designed to equip students with the necessary skills and knowledge.

Program Overview

MBU's Department of Artificial Intelligence and Machine Learning, established in 2022, offers a B.Tech in Computer Science and Engineering (CSE) with a specialization in AI and ML. This undergraduate program integrates core computer science principles with advanced AI techniques, preparing students for the challenges of the digital age.

In this B.Tech program, students not only learn the fundamentals of computer science but also dive into the intricacies of AI and ML and their real-world applications.

Curriculum Highlights

The curriculum for the B.Tech Artificial Intelligence and Machine Learning program is meticulously designed to provide both theoretical foundations and practical applications:

Core Subjects: Data Structures, Algorithms, Operating Systems, and Database Management Systems.

Specialized AI & ML Topics: Machine Learning, Deep Learning, Natural Language Processing, Computer Vision, and Robotics.

Hands-on Experience: Students take part in capstone projects, workshops, and seminars that apply their learning to real-world scenarios.

The B.Tech CSE (AI and ML) program at MBU ensures that students are well equipped with the knowledge and skills needed to excel in artificial intelligence and machine learning.

Infrastructure & Research Facilities

MBU boasts state-of-the-art infrastructure to support AI & ML education:

IBM Centre of Excellence: Collaborative programs with IBM provide students with industry-relevant training and exposure to cutting-edge technologies.

Machine Learning Lab: Equipped with the latest hardware and software tools, enabling students to conduct experiments and research in AI & ML.

Research Collaborations: The department has established the Center for Research in Machine Learning in collaboration with Central Connecticut State University, USA, focusing on smart applications to solve real-world problems.

Industry Collaborations

MBU's B.Tech AI and ML program emphasizes industry partnerships to enhance learning:

IBM Collaboration: Students undergo specialized training programs and workshops aligned with IBM's curriculum, ensuring industry readiness.

Industry Visits & Internships: Regular industry visits and internship opportunities provide students with practical exposure and networking prospects.

Career Prospects

Graduates of the B.Tech in AI and ML program are well equipped to pursue careers in various domains:

AI Research Scientist: Conducting research to develop new AI algorithms and models.

Machine Learning Engineer: Designing and implementing machine learning models to solve complex problems.

Data Scientist: Analyzing large datasets to extract meaningful insights and inform decision-making.

Robotics Engineer: Developing intelligent robotic systems for automation and other applications.

Conclusion

A B.Tech in AI and ML offers an exciting opportunity to dive into the world of cutting-edge technology. With demand for AI and ML professionals increasing, the program equips students with the skills needed for a successful career in AI, machine learning, and related fields. Its comprehensive curriculum, industry collaborations, and hands-on experience ensure graduates are well prepared for the future. If you're passionate about innovation and technology, a B.Tech in AI and ML is a step towards a promising career.


OQC Sets 2034 Goal for 50,000 Logical Qubits In Quantum Plan

Oxford Quantum Circuits (OQC), a UK quantum computing company, has announced an ambitious roadmap for fault-tolerant quantum computers, positioning itself at the forefront of the global effort to build commercial quantum machines.

Vision and Milestones of OQC

OQC has set out a bold quantum computing vision with explicit logical-qubit targets. The company aims to reach:

200 logical qubits by 2028: At this scale, quantum computers could revolutionise essential applications such as vulnerability analysis, fraud detection, arbitrage, and cyber threat identification. OQC predicts that by 2028, quantum computers with this capability will surpass supercomputers on certain workloads.

50,000 logical qubits by 2034: This target is more than ten times the highest goal in other quantum computing roadmaps, making it extremely ambitious. Machines of this size are expected to advance applications including decryption, drug discovery, and quantum chemistry. Gerald Mullally, OQC's interim CEO, calls the initiative a “landmark for quantum computing, in the UK and globally,” and says quantum computing is “closer than many realise” to changing lives. He stresses that enterprises, notably in financial services and national security, must prepare for a “quantum-transformed world”.

Transition to the “Logical Era” and OQC's Main Advantage

The shift from the “physical era” to the “logical era” of quantum computing is central to OQC's roadmap.

In the “physical era”, machines are built from noisy, imperfect physical qubits that require error correction.

In the “logical era”, a quantum computer's capabilities depend on the number of error-corrected logical qubits it provides. Because physical qubits are fragile and error-prone, logical qubits are needed to build useful quantum computers.

OQC's patented technology gives it an edge in this transition. Its approach uses 10 times fewer physical qubits than current approaches to produce each error-corrected logical qubit: fewer than 100 physical qubits per logical qubit, compared with up to 1,000 for other approaches. This more efficient “resource ratio” lets OQC scale better.
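To see what the resource ratio means at scale, the back-of-envelope sketch below multiplies each logical-qubit target by the two ratios quoted in the paragraph (about 100 versus up to 1,000 physical qubits per logical qubit). These are illustrative multiplications, not engineering estimates, and the function name is hypothetical.

```python
# Back-of-envelope: physical qubits = logical qubits x resource ratio.
# Ratios of 100 and 1,000 are the figures quoted in the text; OQC describes
# its own ratio as "fewer than 100", so those results are upper bounds.

def physical_qubits(logical, ratio):
    return logical * ratio


for logical in (200, 50_000):
    for ratio in (100, 1_000):
        total = physical_qubits(logical, ratio)
        print(f"{logical:>6,} logical x {ratio:>5,} ratio = {total:>10,} physical")
```

At the 2034 target of 50,000 logical qubits, the difference is 5 million versus 50 million physical qubits.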

Exclusive Technology: 3D Superconducting Transmon Circuits

OQC's technology is built on superconducting transmon circuits developed at the University of Oxford and arranged in a 3D architecture unique to its design. This 3D architecture offers performance and scaling advantages:

Easier control and readout: The design simplifies qubit manipulation and measurement, which are otherwise difficult.

Reduced crosstalk: By limiting unwanted interactions between qubits, the design preserves quantum coherence and reduces errors in larger arrays.

Error detection: OQC's qubit architecture detects errors and where they occur, and this location information makes them easier to correct. In particular, the design can identify when a qubit decays from a higher-energy state to a lower-energy one, the main source of errors in this architecture.
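Why does knowing where an error occurred help? A classical analogy, not OQC's actual scheme, is a bit stored in several copies. Without location information, errors must be out-voted by a majority; when the faulty copies are flagged (so-called erasures), they can simply be ignored. The function names and sample data below are hypothetical.

```python
# Classical analogy (not OQC's scheme): a logical bit stored in n copies.
# Unlocated errors must be out-voted; located errors ("erasures") are discarded.

def decode_majority(copies):
    """Majority vote over all copies (ties resolve to 0)."""
    return int(sum(copies) > len(copies) / 2)


def decode_with_erasures(copies, erased):
    """Ignore copies flagged as erased and vote over the rest."""
    kept = [bit for bit, bad in zip(copies, erased) if not bad]
    if not kept:
        return None  # every copy was lost
    return int(sum(kept) > len(kept) / 2)


# Logical bit 0 stored in 5 copies; 3 copies have flipped to 1.
copies = [1, 1, 1, 0, 0]
erased = [True, True, True, False, False]
print(decode_majority(copies))               # 1: the vote is outnumbered
print(decode_with_erasures(copies, erased))  # 0: flagged copies ignored
```

With five copies, majority voting tolerates at most two unlocated errors, while up to four located errors still leave one trustworthy copy.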

Beyond architecture, OQC is also working to improve physical error rates. It aims to bring them below 0.1% by carefully tuning qubits and by improving chip materials to extend qubit coherence.

The accuracy and speed of OQC's gates demonstrate the technology's capability: its two-qubit gate achieves 99.8% fidelity in under 25 ns, making it one of the fastest and most precise gates reported. Fast gates are essential for running more complex algorithms efficiently and for scaling quantum machines to deliver economic benefits.
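A back-of-envelope calculation shows why both fidelity and speed matter. The sketch assumes, for simplicity, that every gate has the quoted 99.8% fidelity and that gate errors are independent; both are simplifying assumptions, and the function names are hypothetical.

```python
import math

FIDELITY = 0.998      # quoted two-qubit gate fidelity
GATE_TIME_S = 25e-9   # quoted upper bound on gate duration (25 ns)


def circuit_success(gates, fidelity=FIDELITY):
    """Probability that no gate fails, assuming independent errors."""
    return fidelity ** gates


def gates_until(success, fidelity=FIDELITY):
    """Largest gate count that keeps the success probability >= `success`."""
    return math.floor(math.log(success) / math.log(fidelity))


print(f"success after 100 gates: {circuit_success(100):.3f}")
print(f"gates before success drops below 50%: {gates_until(0.5)}")
print(f"{1 / GATE_TIME_S:,.0f} sequential gates per second at 25 ns")
```

Even at 99.8% fidelity, a few hundred unprotected gates make failure more likely than success, which is why the roadmap centres on error-corrected logical qubits.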

Leadership, Funding, and Strategic Partnerships

OQC's ambitious plan relies on strategic partnerships and ongoing fundraising.

OQC partners with Riverlane, which develops quantum error correction technology for fault-tolerant quantum computers. Riverlane CEO Steve Brierley called OQC's strategy a “bold vision” and a “clear statement of intent” that places the UK at the forefront of quantum computing.

OQC's leadership has also changed recently. Gerald Mullally replaced founding CEO Ilana Wisby as interim CEO last year, and in April, Jack Boyer became chairman of the board.

In 2022, OQC raised £38 million in a Series A round, the largest for a UK quantum computing company. A Series B round, estimated at $100 million, is now underway. It is led by Japan's SBI Investment and backed by Oxford Science Enterprises (OSE), University of Tokyo Edge Capital Partners (UTEC), Lansdowne Partners, and OTIF.

As part of its global expansion, OQC will install its first quantum computer in New York City with a data centre partner later this year, having signed its first quantum computing co-location agreement with a data centre.

OQC's roadmap also includes an Application Optimised Compute strategy, which designs quantum computing systems for applications where quantum technology has a clear advantage. The aim is for OQC's systems to benefit businesses in national security and financial services right away. Google, IBM, Rigetti, and Finland's IQM receive only a brief mention, but OQC claims its 50,000-logical-qubit goal surpasses their roadmaps.

#OxfordQuantumCircuits#logicalqubits#drugdiscovery#physicalqubits#quantummachines#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


Exploring the Spiritual Wellness Apps Market: Detailed Industry Insights

The global spiritual wellness apps market is expected to reach USD 4.84 billion by 2030, growing at a CAGR of 14.6% from 2025 to 2030, according to a new report by Grand View Research, Inc. Apps for yoga training, meditation management, and spiritual wellness help maintain an individual's overall mental and emotional health, promote a better lifestyle, and reduce stress. Growing awareness of the importance of meditation and yoga, and of their benefits, is boosting demand for these apps. For instance, in February 2024, Mindvalley, Inc., a personal growth platform, launched an immersive meditation and personal development app for Apple's Vision Pro spatial computing headset. Users can experience immersive meditations in natural environments such as deserts, forests, and mountains.
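The growth figure can be sanity-checked with the compound-growth relation V_end = V_start × (1 + r)^n. The sketch below backs out the market size implied for 2025 from the USD 4.84 billion figure for 2030, assuming the 14.6% rate compounds over five annual steps (2025 to 2030). The result is an implied value, not a number reported by Grand View Research.

```python
def implied_base_value(future_value: float, cagr: float, years: int) -> float:
    """Starting value implied by compound annual growth: V_end = V_start * (1 + r) ** n."""
    return future_value / (1.0 + cagr) ** years


# Assumption: 14.6% CAGR compounding over the five years from 2025 to 2030.
base_2025 = implied_base_value(future_value=4.84, cagr=0.146, years=5)
print(f"Implied 2025 market size: USD {base_2025:.2f} billion")
```

Running it gives roughly USD 2.45 billion for 2025, meaning the forecast implies the market roughly doubles over the period.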

Technological advancements, the integration of artificial intelligence (AI) into apps, and a growing number of meditation and mindfulness app launches are driving market growth. For instance, in March 2024, Gwyneth Paltrow, an American actress, businesswoman, and the founder of Goop, launched Moments Of Space, an AI-powered meditation app that guides users through a gentle meditation approach designed to fit easily into a busy lifestyle.

According to GSMA's Mobile Economy 2023 report, smartphone adoption stood at around 76% in 2022 and is expected to reach 92% by 2030, which should drive market growth over the forecast period. The global adoption of 5G also fuels growth: the same report estimates that 5G penetration will reach 54% globally by the end of 2030.

Some of the key players in the market are Headspace Inc., Sattva, Insight Network Inc., Breethe, Simple Habit, Muse, Mindbliss Inc., Enso Meditation, Flowtime, and Calm. Players are adopting key strategic initiatives to expand their business footprint and grow their clientele. For instance, in March 2024, Headspace Inc., a digital mental health company, introduced Headspace XR. This mindfulness app employs mixed and virtual reality to aid users in strengthening their mind-body connection through breathwork and movement.


Spiritual Wellness Apps Market Report Highlights

Based on platform, the Android segment dominated the spiritual wellness apps market in 2024 with a revenue share of 47.8%, owing to the high adoption of Android devices among customers.

Based on device, the smartphones segment dominated the spiritual wellness apps market in 2024 and accounted for the largest revenue share, 57.1%, owing to increasing global smartphone penetration and the availability of reliable internet connections.

Based on subscription, the paid (in-app purchase) segment dominated the spiritual wellness apps market in 2024. Features not available in free apps, such as yoga, meditation, and spiritual videos, drive this segment’s growth.

Based on type, the meditation and mindfulness apps segment dominated the spiritual wellness apps market in 2024, owing to growing awareness regarding mental health and meditation practices and their benefits.

North America dominated the global market due to the presence of a large number of major market players, government initiatives and the launch of a diverse range of meditation and yoga training apps.

#SpiritualWellnessApps#WellnessTech#MindBodyApps#HolisticHealth#MentalWellnessMarket#HealthAndWellnessApps#DigitalWellness#MeditationApps#MindfulnessApps#HealthTechMarket#MobileAppTrends#EmergingTechMarkets


A new way to create realistic 3D shapes using generative AI

New Post has been published on https://thedigitalinsider.com/a-new-way-to-create-realistic-3d-shapes-using-generative-ai/


Creating realistic 3D models for applications like virtual reality, filmmaking, and engineering design can be a cumbersome process requiring lots of manual trial and error.

While generative artificial intelligence models for images can streamline artistic processes by enabling creators to produce lifelike 2D images from text prompts, these models are not designed to generate 3D shapes. To bridge the gap, a recently developed technique called Score Distillation leverages 2D image generation models to create 3D shapes, but its output often ends up blurry or cartoonish.

MIT researchers explored the relationships and differences between the algorithms used to generate 2D images and 3D shapes, identifying the root cause of lower-quality 3D models. From there, they crafted a simple fix to Score Distillation, which enables the generation of sharp, high-quality 3D shapes that are closer in quality to the best model-generated 2D images.

These examples show two different 3D rotating objects: a robotic bee and a strawberry. Researchers used text-based generative AI and their new technique to create the 3D objects.

Image: Courtesy of the researchers; MIT News

Some other methods try to fix this problem by retraining or fine-tuning the generative AI model, which can be expensive and time-consuming.

By contrast, the MIT researchers’ technique achieves 3D shape quality on par with or better than these approaches without additional training or complex postprocessing.

Moreover, by identifying the cause of the problem, the researchers have improved mathematical understanding of Score Distillation and related techniques, enabling future work to further improve performance.

“Now we know where we should be heading, which allows us to find more efficient solutions that are faster and higher-quality,” says Artem Lukoianov, an electrical engineering and computer science (EECS) graduate student who is lead author of a paper on this technique. “In the long run, our work can help facilitate the process to be a co-pilot for designers, making it easier to create more realistic 3D shapes.”

Lukoianov’s co-authors are Haitz Sáez de Ocáriz Borde, a graduate student at Oxford University; Kristjan Greenewald, a research scientist in the MIT-IBM Watson AI Lab; Vitor Campagnolo Guizilini, a scientist at the Toyota Research Institute; Timur Bagautdinov, a research scientist at Meta; and senior authors Vincent Sitzmann, an assistant professor of EECS at MIT who leads the Scene Representation Group in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and Justin Solomon, an associate professor of EECS and leader of the CSAIL Geometric Data Processing Group. The research will be presented at the Conference on Neural Information Processing Systems.

From 2D images to 3D shapes

Diffusion models, such as DALL-E, are a type of generative AI model that can produce lifelike images from random noise. To train these models, researchers add noise to images and then teach the model to reverse the process and remove the noise. The models use this learned “denoising” process to create images based on a user’s text prompts.
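In the standard DDPM formulation, the noising step is usually written as x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, with eps drawn from a standard normal distribution. The stdlib-only sketch below implements that forward process with a linear noise schedule. It also shows that, given a perfect prediction of eps, the clean image can be recovered exactly; a trained diffusion model's job is to approximate that prediction. The schedule values (1e-4 to 0.02 over 1,000 steps) are common DDPM defaults chosen here as assumptions, not details of DALL-E or of the MIT work.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta (noise) schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, eps, alpha_bar):
    """Forward process: blend the clean image with Gaussian noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * e for x, e in zip(x0, eps)]


def estimate_x0(xt, eps_pred, alpha_bar):
    """Invert the forward process using a (predicted) noise estimate."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * e) / a for x, e in zip(xt, eps_pred)]


rng = random.Random(0)
x0 = [0.1, 0.5, 0.9]                          # a tiny stand-in "image"
eps = [rng.gauss(0.0, 1.0) for _ in x0]
alpha_bar = linear_alpha_bars()[499]          # a mid-way timestep
xt = add_noise(x0, eps, alpha_bar)
recovered = estimate_x0(xt, eps, alpha_bar)   # a perfect noise predictor recovers x0
```

In practice the model only sees x_t (plus the timestep and text prompt) and must predict eps itself, which is what training teaches it to do.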

But diffusion models underperform at directly generating realistic 3D shapes because there are not enough 3D data to train them. To get around this problem, researchers developed a technique called Score Distillation Sampling (SDS) in 2022 that uses a pretrained diffusion model to combine 2D images into a 3D representation.

The technique involves starting with a random 3D representation, rendering a 2D view of a desired object from a random camera angle, adding noise to that image, denoising it with a diffusion model, then optimizing the random 3D representation so it matches the denoised image. These steps are repeated until the desired 3D object is generated.
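The SDS loop just described can be sketched in a deliberately tiny setting. Here the "3D representation" is just a list of pixel values, the renderer is the identity (so there is no camera angle), and the pretrained diffusion model is replaced by the exact noise predictor for Gaussian data, which has a closed form. These are all simplifying assumptions. The update itself follows the usual SDS form, stepping the parameters along (predicted noise − sampled noise).

```python
import math
import random


def toy_sds(mu, sigma=0.1, steps=2000, lr=0.05, seed=0):
    """Toy Score Distillation Sampling on a 'scene' that is just a list of pixels.

    Illustrative assumptions:
      * the renderer is the identity, so the rendered view equals the parameters;
      * the 'pretrained diffusion model' is the exact noise predictor for
        per-pixel Gaussian data N(mu, sigma^2), available in closed form;
      * the SDS weighting w(t) is 1.
    """
    rng = random.Random(seed)
    theta = [0.0] * len(mu)                      # start from an arbitrary representation
    for _ in range(steps):
        alpha_bar = rng.uniform(0.05, 0.95)      # random noise level (timestep)
        a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        var_t = alpha_bar * sigma ** 2 + 1.0 - alpha_bar  # variance of x_t under the data model
        grads = []
        for x, m in zip(theta, mu):
            eps = rng.gauss(0.0, 1.0)            # SDS samples this noise at random
            x_t = a * x + b * eps                # "render", then add noise
            eps_hat = b * (x_t - a * m) / var_t  # model's noise prediction (Tweedie)
            grads.append(eps_hat - eps)          # SDS gradient; d(render)/d(theta) = 1
        theta = [x - lr * g for x, g in zip(theta, grads)]
    return theta


mu = [0.2, 0.8, 0.5, 0.1]                        # the toy "target image"
theta = toy_sds(mu)
print([round(x, 3) for x in theta])              # lands close to mu
```

Expanding eps_hat − eps for this toy model gives a term that pulls each pixel toward mu, plus a leftover term proportional to the randomly sampled eps. That leftover jitter is only a toy analogue of the noise term the MIT team identified. Their fix, inferring the term from the current rendering instead of sampling it, is not implemented here.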

However, 3D shapes produced this way tend to look blurry or oversaturated.

“This has been a bottleneck for a while. We know the underlying model is capable of doing better, but people didn’t know why this is happening with 3D shapes,” Lukoianov says.

The MIT researchers explored the steps of SDS and identified a mismatch between a formula that forms a key part of the process and its counterpart in 2D diffusion models. The formula tells the model how to update the random representation by adding and removing noise, one step at a time, to make it look more like the desired image.

Since part of this formula involves an equation that is too complex to be solved efficiently, SDS replaces it with randomly sampled noise at each step. The MIT researchers found that this noise leads to blurry or cartoonish 3D shapes.

An approximate answer

Instead of trying to solve this cumbersome formula precisely, the researchers tested approximation techniques until they identified the best one. Rather than randomly sampling the noise term, their approximation technique infers the missing term from the current 3D shape rendering.

“By doing this, as the analysis in the paper predicts, it generates 3D shapes that look sharp and realistic,” he says.

In addition, the researchers increased the resolution of the image rendering and adjusted some model parameters to further boost 3D shape quality.

In the end, they were able to use an off-the-shelf, pretrained image diffusion model to create smooth, realistic-looking 3D shapes without the need for costly retraining. The 3D objects are similarly sharp to those produced using other methods that rely on ad hoc solutions.

“Trying to blindly experiment with different parameters, sometimes it works and sometimes it doesn’t, but you don’t know why. We know this is the equation we need to solve. Now, this allows us to think of more efficient ways to solve it,” he says.

Because their method relies on a pretrained diffusion model, it inherits the biases and shortcomings of that model, making it prone to hallucinations and other failures. Improving the underlying diffusion model would enhance their process.

In addition to studying the formula to see how they could solve it more effectively, the researchers are interested in exploring how these insights could improve image editing techniques.

This work is funded, in part, by the Toyota Research Institute, the U.S. National Science Foundation, the Singapore Defense Science and Technology Agency, the U.S. Intelligence Advanced Research Projects Activity, the Amazon Science Hub, IBM, the U.S. Army Research Office, the CSAIL Future of Data program, the Wistron Corporation, and the MIT-IBM Watson AI Laboratory.

#2022#3d#3D object#3d objects#ADD#ai#ai model#Algorithms#Amazon#Analysis#applications#artificial#Artificial Intelligence#artists#Arts#Augmented and virtual reality#author#bee#bridge#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#Computer vision#conference#creators#dall-e#data#data processing#defense


Nvidia & Google Invest $150M in SandboxAQ to Propel Quantum-AI Innovation

In a noteworthy advancement highlighting the growing significance of quantum technology, SandboxAQ has successfully raised $150 million in funding from industry leaders Nvidia and Google. This substantial investment not only emphasizes the increasing interest in quantum computing and artificial intelligence (AI) but also signals their potential to transform various sectors. By backing SandboxAQ, these tech giants reinforce their commitment to advancing technologies that promise to reshape our world.

Quantum computing is often described as the next major leap in computing. It offers extraordinary processing capabilities that traditional computers cannot match. As industries explore how quantum technology can optimize their operations, the backing from prominent players like Nvidia and Google reflects confidence in SandboxAQ's vision and innovations.

Understanding SandboxAQ: A Brief Overview

Founded in 2021, SandboxAQ is dedicated to leveraging quantum technology and AI to solve some of the most pressing business challenges. The company's aim is to harness the strengths of both fields to unlock solutions once thought impossible.

SandboxAQ operates where quantum computing meets traditional software development, striving to create practical applications that can bolster security, streamline supply chains, and enhance data analytics processes, among other uses. For instance, in 2022, their collaboration with a leading pharmaceutical firm resulted in a 30% reduction in drug discovery timelines using quantum algorithms.

The Significance of the Investment

The recent $150 million investment is not only a financial achievement but also an affirmation of SandboxAQ's potential to impact future technological advancements. Both Nvidia and Google are renowned for their innovation in technology, providing invaluable expertise and resources through this partnership.

Nvidia’s Role in Quantum Development

Nvidia's reputation stems from its revolutionary graphics processing units (GPUs), which have immensely influenced the computing landscape. Given its emphasis on AI and machine learning, Nvidia stands as a key figure in the quantum realm. The investment in SandboxAQ will likely enhance its quantum computing capabilities, maintaining its market leadership as technology evolves.

Nvidia's partnership could foster the creation of groundbreaking algorithms and technologies. For instance, integrating quantum processing with Nvidia's existing AI frameworks could lead to a 50% improvement in the efficiency of machine learning tasks, allowing organizations to make decisions faster and more accurately.

Google’s Commitment to Quantum Technology

Google has long been a leader in quantum research, famously achieving quantum supremacy in 2019. By investing in SandboxAQ, Google reaffirms its dedication to accelerating advancements in quantum computing. The collaboration could significantly enhance Google's existing quantum projects within its Quantum AI lab.

Additionally, Google’s involvement may improve access to critical resources and infrastructure, essential for developing quantum technology applications relevant to real-world situations. For example, by pooling resources with SandboxAQ, they aim to expedite the rollout of quantum-driven solutions that can enhance cloud security and data processing capabilities.

The Impact of Quantum Technology on Industries

The backing from Nvidia and Google acts as a catalyst for growth across multiple sectors as organizations actively seek to integrate quantum solutions.

Healthcare

Quantum computing can fundamentally shift how healthcare approaches drug discovery and personalized medicine. For instance, researchers using quantum algorithms can analyze complex biological data much more quickly. A recent study suggested that integrating quantum computing into drug discovery could reduce the time needed to bring new therapies to market by up to 40%.

Financial Services

In finance, quantum technologies promise to refine trading strategies, bolster risk management practices, and strengthen fraud detection. Institutions like JPMorgan Chase are exploring quantum solutions to improve their predictive analytics, aiming for a 20% increase in the accuracy of their financial models over the next five years.

Cybersecurity

The field of cybersecurity could benefit greatly from quantum technology advancements. With cyber threats on the rise, quantum encryption methods may provide unprecedented data protection. Research indicates that quantum encryption could decrease the likelihood of successful breaches by an estimated 90%, enhancing the security of sensitive information.

The Future of SandboxAQ and Quantum Technology

With significant financial backing, SandboxAQ is positioned to accelerate its research and development initiatives, bringing its quantum solutions to market more effectively.

Scaling Innovations

One of SandboxAQ’s main goals will be to scale these avant-garde innovations to serve a broader spectrum of industries. Collaborating with Nvidia and Google offers insights that push quantum applications from theoretical concepts into accessible, practical solutions. Such efforts could allow businesses to adopt quantum technology faster, ensuring they remain competitive in their respective fields.

Fostering Collaboration

Partnerships among SandboxAQ, Nvidia, and Google can cultivate an ecosystem ripe for innovation. Collaborating with academic institutions and research organizations helps to unite experts in various related fields. By facilitating dialogues and projects that include blockchain developers and researchers, they can uncover fresh approaches to quantum technology.

Talent Development

To capitalize on growing interest in quantum technology, SandboxAQ is likely to prioritize talent development. By investing in education and training initiatives, the company could help build a workforce ready to implement quantum applications, further entrenching itself as a leader in the sector.

Market Implications of the Investment

The influx of $150 million into SandboxAQ has implications that can reshape the competitive fabric of the quantum technology market. Companies, both new and established, will need to be vigilant regarding trends and advancements stemming from this partnership.

Competitive Landscape

This investment reflects a broader rise in funding for quantum technology across the tech sector. Competing firms may feel compelled to accelerate their own quantum strategies or pursue alternative investments to remain relevant.

Future Forward

As developments unfold from SandboxAQ in the upcoming months and years, expect the quantum technology market to become more dynamic. This funding round is likely to drive innovations that catalyze competition and continuous progression within the sector.

Challenges Ahead

While the investment opens numerous avenues for opportunity, challenges remain. Quantum technology is still evolving, and significant technical obstacles exist.

Technological Barriers

Overcoming challenges related to qubit stability, error rates, and computational complexity is paramount. Addressing these issues will demand substantial time and resources, and firms will need effective strategies to tackle them.

Regulatory Concerns

As with any groundbreaking technology, questions around regulations and ethical implications arise, particularly regarding data security and privacy. SandboxAQ and its partners must carefully navigate these regulatory landscapes while striving for innovation.

Forward-Looking Perspective

The $150 million investment from Nvidia and Google signifies a transformative moment for both SandboxAQ and the broader field of quantum technology. With this level of support, SandboxAQ is set to propel advancements that could redefine technology across various sectors.

As the company forges ahead, it stresses the importance of fostering innovation and collaboration in the fast-evolving tech world. Market responses, competitor initiatives, and regulatory developments will significantly influence the successful realization of these advancements.

The journey into quantum technology is just beginning, and with pioneers like SandboxAQ leading the way, the future appears filled with extraordinary possibilities.

#quantumcomputing#ai#sandboxaq#nvidia#google#futuretech#deeplearning#quantumai#startups#techinvestment#cybersecurity#healthtech#fintech#quantumencryption#machinelearning#quantumfuture#datasecurity#drugdiscovery#cloudsecurity#innovation#fraoula


A new way to make graphs more accessible to blind and low-vision readers

New Post has been published on https://sunalei.org/news/a-new-way-to-make-graphs-more-accessible-to-blind-and-low-vision-readers/


Bar graphs and other charts provide a simple way to communicate data, but are, by definition, difficult to translate for readers who are blind or low-vision. Designers have developed methods for converting these visuals into “tactile charts,” but guidelines for doing so are extensive (for example, the Braille Authority of North America’s 2022 guidebook is 426 pages long). The process also requires understanding different types of software, as designers often draft their chart in programs like Adobe Illustrator and then translate it into Braille using another application.

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have now developed an approach that streamlines the design process for tactile chart designers. Their program, called “Tactile Vega-Lite,” can take data from something like an Excel spreadsheet and turn it into both a standard visual chart and a touch-based one. Design standards are hardwired as default rules within the program to help educators and designers automatically create accessible tactile charts.
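For readers unfamiliar with Vega-Lite, a chart is described by a declarative JSON specification made up of a data source, a mark type, and encodings that map data fields to visual channels. The sketch below builds a standard Vega-Lite v5 bar-chart spec in Python for a chart like the minimum-wage example in this post. The file name minimum_wages.csv and the field names state and min_wage are hypothetical, and the tactile-specific options that Tactile Vega-Lite adds are not shown.

```python
import json


def bar_chart_spec(data_url: str, x_field: str, y_field: str, y_title: str) -> dict:
    """Build a standard (visual) Vega-Lite v5 bar-chart specification as a dict."""
    return {
        "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
        "data": {"url": data_url},               # hypothetical CSV exported from a spreadsheet
        "mark": "bar",
        "encoding": {
            "x": {"field": x_field, "type": "nominal"},
            "y": {"field": y_field, "type": "quantitative", "title": y_title},
        },
    }


spec = bar_chart_spec("minimum_wages.csv", "state", "min_wage", "Minimum wage (USD/hour)")
print(json.dumps(spec, indent=2))  # paste into the Vega-Lite online editor to render
```

Keeping the spec declarative is what lets a tool generate a second, tactile rendering from the same description of the data.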

The tool could make it easier for blind and low-vision readers to understand many graphics, such as a bar chart comparing minimum wages across states or a line graph tracking countries’ GDPs over time. To bring your designs to the real world, you can tweak your chart in Tactile Vega-Lite and then send its file to a Braille embosser (which prints text as readable dots).

This spring, the researchers will present Tactile Vega-Lite in a paper at the Association for Computing Machinery Conference on Human Factors in Computing Systems. According to lead author Mengzhu “Katie” Chen SM ’25, the tool strikes a balance between the precision that design professionals want for editing and the efficiency educators need to create tactile charts quickly.

“We interviewed teachers who wanted to make their lessons accessible to blind and low-vision students, and designers experienced in putting together tactile charts,” says Chen, a recent CSAIL affiliate and master’s graduate in electrical engineering and computer science and the Program in System Design and Management. “Since their needs differ, we designed a program that’s easy to use, provides instant feedback when you want to make tweaks, and implements accessibility guidelines.”

Data you can feel

The researchers’ program builds on their 2017 visualization tool Vega-Lite, automatically encoding both a flat, standard chart and a tactile one. Senior author and MIT postdoc Jonathan Zong SM ’20, PhD ’24 points out that the program makes intuitive design decisions so users don’t have to.

“Tactile Vega-Lite has smart defaults to ensure proper spacing, layout, and texture and Braille conversion, following best practices to create good touch-based reading experiences,” says Zong, who is also a fellow at the Berkman Klein Center for Internet and Society at Harvard University and an incoming assistant professor at the University of Colorado. “Building on existing guidelines and our interviews with experts, the goal is for teachers or visual designers without a lot of tactile design expertise to quickly convey data in a clear way for tactile readers to explore and understand.”

Tactile Vega-Lite’s code editor allows users to customize axis labels, tick marks, and other elements. Different features within the chart are represented by abstractions — or summaries of a longer body of code — that can be modified. These shortcuts allow you to write brief phrases that tweak the design of your chart. For example, if you want to change how the bars in your graph are filled out, you could change the code in the “Texture” section from “dottedFill” to “verticalFill” to replace small circles with upward lines.
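The texture edit just described amounts to changing one value in the chart specification. The sketch below shows that idea as a small Python function. Only the two texture values, dottedFill and verticalFill, come from the article; the top-level "texture" key and the helper with_texture are hypothetical, since Tactile Vega-Lite's actual schema is not given here.

```python
import copy

# The two fill textures named in the article; other valid values are not listed there.
KNOWN_TEXTURES = {"dottedFill", "verticalFill"}


def with_texture(spec: dict, texture: str) -> dict:
    """Return a copy of a chart spec with a different bar-fill texture.

    HYPOTHETICAL: the "texture" key placement is an assumption, not
    Tactile Vega-Lite's documented schema.
    """
    if texture not in KNOWN_TEXTURES:
        raise ValueError(f"unknown texture: {texture!r}")
    new_spec = copy.deepcopy(spec)  # leave the original chart untouched
    new_spec["texture"] = texture
    return new_spec


base = {"mark": "bar", "texture": "dottedFill"}   # small circles
edited = with_texture(base, "verticalFill")       # upward lines instead
```

Returning a copy rather than editing in place makes it easy to compare the before and after versions of a chart.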

To understand how these abstractions work, the researchers added a gallery of examples. Each one includes a phrase and what change that code leads to. Still, the team is looking to refine Tactile Vega-Lite’s user interface to make it more accessible to users less familiar with coding. Instead of using abstractions for edits, you could click on different buttons.

Chen says she and her colleagues are hoping to add machine-specific customizations to their program. This would allow users to preview how their tactile chart would look before it’s fabricated by an embossing machine and make edits according to the device’s specifications.

While Tactile Vega-Lite can streamline the many steps it usually takes to make a tactile chart, Zong emphasizes that it doesn’t replace an expert doing a final check-over for guideline compliance. The researchers are continuing to incorporate Braille design rules into their program, but caution that human review will likely remain the best practice.

“The ability to design tactile graphics efficiently, particularly without specialized software, is important for providing equal access of information to tactile readers,” says Stacy Fontenot, owner of Font to Dot, who wasn’t involved in the research. “Graphics that follow current guidelines and standards are beneficial for the reader as consistency is paramount, especially with complex, data-filled graphics. Tactile Vega-Lite has a straightforward interface for creating informative tactile graphics quickly and accurately, thereby reducing the design time in providing quality graphics to tactile readers.”

Chen and Zong wrote the paper with Isabella Pineros ’23, MEng ’24 and MIT Associate Professor Arvind Satyanarayan. The researchers’ work was supported by a National Science Foundation grant.

The CSAIL team also incorporated input from Rich Caloggero from MIT’s Disability and Access Services, as well as the Lighthouse for the Blind, which let them observe technical design workflows as part of the project.
