#Facebook Applications Development

Using Pages CMS for Static Site Content Management

New Post has been published on https://thedigitalinsider.com/using-pages-cms-for-static-site-content-management/

Friends, I’ve been on the hunt for a decent content management system for static sites for… well, about as long as we’ve all been calling them “static sites,” honestly.

I know, I know: there are a ton of content management system options available, and while I’ve tested several, none have really been the one, y’know? Weird pricing models, difficult customization, some even end up becoming a whole ‘nother thing to manage.

Also, I really enjoy building with site generators such as Astro or Eleventy, but pitching Markdown as the means of managing content is less-than-ideal for many “non-techie” folks.

A few expectations for content management systems might include:

Easy to use: The most important feature, and the reason you might opt for a content management system in the first place.

Minimal Requirements: Look, I’m just trying to update some HTML, I don’t want to think too much about database tables.

Collaboration: CMS tools work best when multiple contributors work together, contributors who probably don’t know Markdown or what GitHub is.

Customizable: No website is the same, so we’ll need to be able to make custom fields for different types of content.

Not a terribly long list of demands, I’d say; fairly reasonable, even. That’s why I was happy to discover Pages CMS.

According to its own home page, Pages CMS is “The No-Hassle CMS for Static Site Generators,” and I’ll attest to that. Pages CMS has largely been developed by a single developer, Ronan Berder, but it is open source and accepts pull requests on GitHub.

Pages CMS takes a lot of the “good parts” found in other CMS tools and, driven by a single configuration file, combines them into a sleek user interface.

Pages CMS includes lots of options for customization: you can upload media, make editable files, and create entire collections of content. Content can also have all sorts of fields (check the docs for the full list of supported types), including completely custom ones.

There isn’t really a “back end” to worry about, as content is stored as flat files inside your Git repository. Pages CMS gives folks the ability to manage the content within the repo without needing to know how to use Git, and I think that’s neat.

User authentication works two ways: contributors can log in using GitHub accounts, or they can be invited by email, in which case they’ll receive a password-less “magic link” login URL. This is nice, since GitHub accounts are less common outside of the dev world (shocking, I know).

Oh, and Pages CMS has a very low barrier to entry, as it’s free to use.

Pages CMS and Astro content collections

I’ve created a repository on GitHub with Astro and Pages CMS using Astro’s default blog starter, and made it available publicly, so feel free to clone and follow along.

I’ve been a fan of Astro for a while, and Pages CMS works well alongside Astro’s content collections feature. Content collections make globs of data easily available throughout Astro, so you can hydrate content inside Astro pages. These globs of data can come from different sources, such as third-party APIs, but most commonly they are directories of Markdown files. Guess what Pages CMS is really good at? Managing directories of Markdown files!

Content collections are set up in a collections configuration file. Check out the src/content.config.ts file in the project, where we define a content collection named blog:

```ts
import { glob } from 'astro/loaders';
import { defineCollection, z } from 'astro:content';

const blog = defineCollection({
  // Load Markdown in the `src/content/blog/` directory.
  loader: glob({ base: './src/content/blog', pattern: '**/*.md' }),
  // Type-check frontmatter using a schema
  schema: z.object({
    title: z.string(),
    description: z.string(),
    // Transform string to Date object
    pubDate: z.coerce.date(),
    updatedDate: z.coerce.date().optional(),
    heroImage: z.string().optional(),
  }),
});

export const collections = { blog };
```

The blog content collection checks the /src/content/blog directory for files matching the **/*.md glob pattern, meaning Markdown files at any depth. The schema property is optional; however, it gives you helpful type-checking with Zod, ensuring that data saved by Pages CMS works as expected in your Astro site.
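
To make the schema’s job concrete, here is a dependency-free TypeScript sketch of roughly the checks it performs on each file’s frontmatter. This is not how Astro or Zod are implemented. validateBlogFrontmatter and its helpers are hypothetical names, and the date handling assumes z.coerce.date() behaves like passing the raw value to new Date() and rejecting invalid results.

```typescript
// Hypothetical, dependency-free approximation of the blog schema checks.
interface BlogPost {
  title: string;
  description: string;
  pubDate: Date;
  updatedDate?: Date;
  heroImage?: string;
}

// Approximates z.coerce.date(): hand the raw value to new Date()
// and reject the result if it is an invalid date.
function coerceDate(value: unknown, field: string): Date {
  const date = new Date(value as string);
  if (Number.isNaN(date.getTime())) {
    throw new Error(`${field}: expected a date, got ${JSON.stringify(value)}`);
  }
  return date;
}

function requireString(value: unknown, field: string): string {
  if (typeof value !== "string") {
    throw new Error(`${field}: expected a string`);
  }
  return value;
}

function validateBlogFrontmatter(data: Record<string, unknown>): BlogPost {
  const post: BlogPost = {
    title: requireString(data.title, "title"),
    description: requireString(data.description, "description"),
    pubDate: coerceDate(data.pubDate, "pubDate"),
  };
  // Optional fields are only checked when present.
  if (data.updatedDate !== undefined) {
    post.updatedDate = coerceDate(data.updatedDate, "updatedDate");
  }
  if (data.heroImage !== undefined) {
    post.heroImage = requireString(data.heroImage, "heroImage");
  }
  return post;
}

const post = validateBlogFrontmatter({
  title: "Test Post",
  description: "A sample post",
  pubDate: "05/03/2025", // the MM/dd/yyyy format Pages CMS is configured to save
});
console.log(post.title, post.pubDate.getFullYear());
```

In V8 (Node.js, Chrome), a string like 05/03/2025 is read as month/day/year. Non-ISO date strings are implementation-dependent, though, so a date that fails here would likely also fail the real schema at build time.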

Pages CMS Configuration

Alright, now that Astro knows where to look for blog content, let’s take a look at the Pages CMS configuration file, .pages.config.yml:

```yaml
content:
  - name: blog
    label: Blog
    path: src/content/blog
    filename: '{year}-{month}-{day}-{fields.title}.md'
    type: collection
    view:
      fields: [heroImage, title, pubDate]
    fields:
      - name: title
        label: Title
        type: string
      - name: description
        label: Description
        type: text
      - name: pubDate
        label: Publication Date
        type: date
        options:
          format: MM/dd/yyyy
      - name: updatedDate
        label: Last Updated Date
        type: date
        options:
          format: MM/dd/yyyy
      - name: heroImage
        label: Hero Image
        type: image
      - name: body
        label: Body
        type: rich-text
  - name: site-settings
    label: Site Settings
    path: src/config/site.json
    type: file
    fields:
      - name: title
        label: Website title
        type: string
      - name: description
        label: Website description
        type: string
        description: Will be used for any page with no description.
      - name: url
        label: Website URL
        type: string
        pattern: '^(https?://)?(www\.)?[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}(/[^\s]*)?$'
      - name: cover
        label: Preview image
        type: image
        description: Image used in the social preview on social networks (e.g. Facebook, Twitter...)
media:
  input: public/media
  output: /media
```

There is a lot going on in there, so let’s zoom in on the blog object inside the content section.

```yaml
- name: blog
  label: Blog
  path: src/content/blog
  filename: '{year}-{month}-{day}-{fields.title}.md'
  type: collection
  view:
    fields: [heroImage, title, pubDate]
  fields:
    - name: title
      label: Title
      type: string
    - name: description
      label: Description
      type: text
    - name: pubDate
      label: Publication Date
      type: date
      options:
        format: MM/dd/yyyy
    - name: updatedDate
      label: Last Updated Date
      type: date
      options:
        format: MM/dd/yyyy
    - name: heroImage
      label: Hero Image
      type: image
    - name: body
      label: Body
      type: rich-text
```

We can point Pages CMS to the directory where we want to save Markdown files using the path property, matching it up with the /src/content/blog/ location where Astro looks for content.

```yaml
path: src/content/blog
```

For the filename, we can provide a template for Pages CMS to use when it saves the file to the content collection directory. In this case, it uses the file date’s year, month, and day, as well as the blog item’s title, which is referenced as {fields.title}. The filename can be customized in many different ways to fit your scenario.

```yaml
filename: '{year}-{month}-{day}-{fields.title}.md'
```
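
Here is a rough TypeScript sketch of how a template like this might be expanded into a filename. expandFilename and slugify are hypothetical helpers. The zero-padded dates and slugified field values are my assumptions and may not match exactly what Pages CMS does internally.

```typescript
// Hypothetical slug helper: lowercase, collapse non-alphanumerics to "-".
function slugify(value: string): string {
  return value
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, "-") // runs of non-alphanumerics become one dash
    .replace(/^-+|-+$/g, ""); // trim leading/trailing dashes
}

// Expands {year}, {month}, {day}, and {fields.<name>} tokens.
function expandFilename(
  template: string,
  date: Date,
  fields: Record<string, string>,
): string {
  const pad = (n: number) => String(n).padStart(2, "0");
  return template.replace(/\{([^}]+)\}/g, (match: string, token: string) => {
    if (token === "year") return String(date.getFullYear());
    if (token === "month") return pad(date.getMonth() + 1);
    if (token === "day") return pad(date.getDate());
    if (token.startsWith("fields.")) {
      const value = fields[token.slice("fields.".length)];
      return value === undefined ? match : slugify(value);
    }
    return match; // leave unknown tokens untouched
  });
}

const name = expandFilename(
  "{year}-{month}-{day}-{fields.title}.md",
  new Date(2025, 4, 3), // May 3, 2025 (months are zero-based)
  { title: "Test Post" },
);
console.log(name); // 2025-05-03-test-post.md
```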

The type property tells Pages CMS that this is a collection of files, rather than a single editable file (we’ll get to that in a moment).

```yaml
type: collection
```

In our Astro content collection configuration, we define our blog collection with the expectation that the files will contain a few bits of metadata, such as title, description, pubDate, and a few more properties.

We can mirror those requirements in our Pages CMS blog collection as fields. Each field can be customized for the type of data you’re looking to collect. Here, I’ve matched these fields up with the default Markdown frontmatter found in the Astro blog starter.

```yaml
fields:
  - name: title
    label: Title
    type: string
  - name: description
    label: Description
    type: text
  - name: pubDate
    label: Publication Date
    type: date
    options:
      format: MM/dd/yyyy
  - name: updatedDate
    label: Last Updated Date
    type: date
    options:
      format: MM/dd/yyyy
  - name: heroImage
    label: Hero Image
    type: image
  - name: body
    label: Body
    type: rich-text
```

Now, every time we create a new blog item in Pages CMS, we’ll be able to fill out each of these fields, matching the expected schema for Astro.

Aside from collections of content, Pages CMS also lets you manage editable files, which are useful for a variety of things: site-wide variables, feature flags, or even editable navigation.

Take a look at the site-settings object: here we set the type to file, and the path includes the filename site.json.

```yaml
- name: site-settings
  label: Site Settings
  path: src/config/site.json
  type: file
  fields:
    - name: title
      label: Website title
      type: string
    - name: description
      label: Website description
      type: string
      description: Will be used for any page with no description.
    - name: url
      label: Website URL
      type: string
      pattern: '^(https?://)?(www\.)?[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}(/[^\s]*)?$'
    - name: cover
      label: Preview image
      type: image
      description: Image used in the social preview on social networks (e.g. Facebook, Twitter...)
```

The fields I’ve included are common site-wide settings, such as the site’s title, description, URL, and cover image.
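
On the Astro side, you would typically import site.json and read these values in your layouts. The sketch below assumes a SiteSettings shape that mirrors the fields above. It reuses the url field’s pattern to validate a URL and applies the “used for any page with no description” fallback. SiteSettings, isValidSiteUrl, and pageDescription are hypothetical names, not part of Astro or Pages CMS.

```typescript
// Hypothetical shape of src/config/site.json, mirroring the
// site-settings fields in .pages.config.yml.
interface SiteSettings {
  title: string;
  description: string;
  url: string;
  cover?: string;
}

// Same regular expression as the `pattern` on the url field.
const URL_PATTERN =
  /^(https?:\/\/)?(www\.)?[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}(\/[^\s]*)?$/;

function isValidSiteUrl(url: string): boolean {
  return URL_PATTERN.test(url);
}

// Falls back to the site-wide description when a page has none.
function pageDescription(settings: SiteSettings, own?: string): string {
  return own && own.trim() !== "" ? own : settings.description;
}

// In the project this object would come from site.json (for example via
// a JSON import); it is inlined here so the sketch runs on its own.
const site: SiteSettings = {
  title: "My Astro Blog",
  description: "Notes on building static sites.",
  url: "https://example.com",
};

console.log(isValidSiteUrl(site.url)); // true
console.log(pageDescription(site)); // Notes on building static sites.
```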

Speaking of images, we can tell Pages CMS where to store media such as images and video.

```yaml
media:
  input: public/media
  output: /media
```

The input property sets where the files are stored: the /public/media directory within our project.

The output property is a helpful little feature that changes the path Pages CMS writes into your content. This matters for tools with their own expectations about file paths. For example, Astro uses Vite under the hood, and Vite already serves the public directory from the site root and complains if public is included in file paths. By setting the output property, we make Pages CMS write image paths starting at the inner /media directory instead.

To see what I mean, check out the test post in the src/content/blog/ folder:

```md
---
title: 'Test Post'
description: 'Here is a sample of some basic Markdown syntax that can be used when writing Markdown content in Astro.'
pubDate: 05/03/2025
heroImage: '/media/blog-placeholder-1.jpg'
---
```

The heroImage property now correctly points to /media/... instead of /public/media/....
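
The mapping amounts to a prefix swap, which this sketch illustrates. toPublicPath and MediaConfig are hypothetical. Pages CMS handles this internally rather than exposing such a function.

```typescript
// Mirrors the `media` block: files live under `input` in the repo,
// but content references them starting at `output`.
interface MediaConfig {
  input: string;
  output: string;
}

function toPublicPath(repoPath: string, media: MediaConfig): string {
  const input = media.input.replace(/\/+$/, ""); // drop trailing slashes
  if (repoPath !== input && !repoPath.startsWith(input + "/")) {
    throw new Error(`${repoPath} is outside the media folder ${input}`);
  }
  const output = media.output.replace(/\/+$/, "");
  // Swap the input prefix for the output prefix.
  return output + repoPath.slice(input.length);
}

const media: MediaConfig = { input: "public/media", output: "/media" };
console.log(toPublicPath("public/media/blog-placeholder-1.jpg", media));
// /media/blog-placeholder-1.jpg
```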

As far as configurations are concerned, Pages CMS can be as simple or as complex as necessary. You can add as many collections or editable files as needed, as well as customize the fields for each type of content. This gives you a lot of flexibility to create sites!

Connecting to Pages CMS

Now that we have our Astro site set up, and a .pages.config.yml file, we can connect our site to the Pages CMS online app. As the developer who controls the repository, browse to https://app.pagescms.org/ and sign in using your GitHub account.

You should be presented with some questions about permissions; you may need to choose between giving access to all repositories or only specific ones. Personally, I chose to give access to a single repository, which in this case is my astro-pages-cms-template repo.

After providing access to the repo, head on back to the Pages CMS application, where you’ll see your project listed under the “Open a Project” headline.

Clicking the open link will take you into the website’s dashboard, where we’ll be able to make updates to our site.

Creating content

Taking a look at our site’s dashboard, we’ll see a navigation on the left side, with some familiar things.

Blog is the collection we set up inside the .pages.config.yml file; this is where we can add new entries to the blog.

Site Settings is the editable file we are using to make changes to site-wide variables.

Media is where our images and other content will live.

Settings is a spot where we’ll be able to edit our .pages.config.yml file directly.

Collaborators allows us to invite other folks to contribute content to the site.

We can create a new blog post by clicking the Add Entry button in the top right.

Here we can fill out all the fields for our blog content, then hit the Save button.

After saving, Pages CMS will create the Markdown file, store it in the proper directory, and automatically commit the changes to our repository. This is how Pages CMS helps us manage our content without needing to use Git directly.

Automatically deploying

The only thing left to do is set up automated deployments through the hosting provider of your choice. Astro has integrations with providers like Netlify, Cloudflare Pages, and Vercel, but it can be hosted anywhere you can run Node.js applications.

Astro is typically very fast to build (thanks to Vite), so while site updates won’t be instant, they will still be fairly quick to deploy. If your site is set up to use Astro’s server-side rendering capabilities, rather than a completely static site, the changes might be much faster to deploy.

Wrapping up

Using a template as reference, we checked out how Astro content collections work alongside Pages CMS. We also learned how to connect our project repository to the Pages CMS app, and how to make content updates through the dashboard. Finally, if you are able, don’t forget to set up an automated deployment, so content publishes quickly.

Cybercriminals are abusing Google’s infrastructure, creating emails that appear to come from Google in order to persuade people into handing over their Google account credentials. This attack was first flagged by Nick Johnson, the lead developer of the Ethereum Name Service (ENS), a blockchain equivalent of the popular internet naming convention known as the Domain Name System (DNS). Nick received a very official-looking security alert about a subpoena allegedly issued to Google by law enforcement for information contained in Nick’s Google account. A URL in the email pointed Nick to a sites.google.com page that looked like an exact copy of the official Google support portal.

As a computer-savvy person, Nick spotted that the official site should have been hosted on accounts.google.com, not sites.google.com. The difference is that anyone with a Google account can create a website on sites.google.com, and that is exactly what the cybercriminals did. Attackers increasingly use Google Sites to host phishing pages because the domain appears trustworthy to most users and can bypass many security filters. One of those filters is DKIM (DomainKeys Identified Mail), an email authentication protocol that allows the sending server to attach a digital signature to an email. The phishing email carried a valid DKIM signature, so it passed that check.

If the target clicked either “Upload additional documents” or “View case”, they were redirected to an exact copy of the Google sign-in page designed to steal their login credentials. Your Google credentials are coveted prey because they give access to core Google services like Gmail, Google Drive, Google Photos, Google Calendar, Google Contacts, Google Maps, Google Play, and YouTube. They also unlock any third-party apps and services you have chosen to log in to with your Google account. If a person had all these accounts compromised in one go, this could easily lead to identity theft.

The signs that give this scam away are the pages hosted at sites.google.com, which should have been support.google.com and accounts.google.com, and the sender address in the email header: although the message was signed by accounts.google.com, it was emailed by another address.
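
The hostname check behind those signs is easy to express in code. Below is a minimal sketch that assumes an illustrative allowlist of accounts.google.com and support.google.com. classifyGoogleLink is a hypothetical helper, and a real mail filter or browser extension would need far more than this.

```typescript
// Illustrative allowlist (an assumption, not an official Google list).
const TRUSTED_GOOGLE_HOSTS = new Set(["accounts.google.com", "support.google.com"]);

type LinkVerdict = "trusted" | "user-hosted" | "unknown";

function classifyGoogleLink(href: string): LinkVerdict {
  let host: string;
  try {
    host = new URL(href).hostname.toLowerCase();
  } catch {
    return "unknown"; // not a parseable absolute URL
  }
  // Exact hostname match, so look-alikes such as
  // accounts.google.com.evil.example do not count as trusted.
  if (TRUSTED_GOOGLE_HOSTS.has(host)) return "trusted";
  // Anyone with a Google account can publish pages here.
  if (host === "sites.google.com") return "user-hosted";
  return "unknown";
}

console.log(classifyGoogleLink("https://sites.google.com/u/17918456/d/1W4M_jFajsC8YKeRJn6tt_b1Ja9Puh6_v/edit"));
// user-hosted
```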

How to avoid scams like this

Don’t follow links in unsolicited emails or on unexpected websites.

Carefully look at the email headers when you receive an unexpected mail.

Verify the legitimacy of such emails through another, independent method.

Don’t use your Google account (or Facebook for that matter) to log in at other sites and services. Instead create an account on the service itself.

Technical details

Analyzing the URL used in the attack on Nick (https://sites.google.com[/]u/17918456/d/1W4M_jFajsC8YKeRJn6tt_b1Ja9Puh6_v/edit), where /u/17918456/ is a user or account identifier and /d/1W4M_jFajsC8YKeRJn6tt_b1Ja9Puh6_v/ identifies the exact page, the /edit part stands out like a sore thumb.

DKIM-signed messages keep their signature during replays as long as the body and signed headers remain unchanged. So if a malicious actor gets access to a previously legitimate DKIM-signed email, they can resend that exact message at any time, and it will still pass authentication. Here is what the cybercriminals did:

Set up a Gmail account starting with me@, so the visible email would look as if it was addressed to “me.”

Register an OAuth app and set the app name to match the phishing link.

Grant the OAuth app access to their Google account, which triggers a legitimate security warning from Google. This alert has a valid DKIM signature, with the content of the phishing email embedded in the body as the app name.

Forward the message untouched, which keeps the DKIM signature valid.

Creating an application whose name contains the entire text of the phishing message, and preparing the landing page and fake login site, may seem like a lot of work. But once the criminals have completed the initial work, the procedure is easy to repeat whenever a page gets reported, and reporting abuse on sites.google.com is not easy in the first place. Nick submitted a bug report to Google about this. Google originally closed the report as ‘Working as Intended,’ but later got back to him, saying it had reconsidered the matter and will fix the OAuth bug.
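
To see why forwarding the message untouched keeps the signature valid, here is a toy model built on Node’s built-in crypto module. It is a deliberate simplification. Real DKIM canonicalizes headers and body, publishes the public key in DNS, and encodes everything in a DKIM-Signature header. The Message type, the dkimSign/dkimVerify helpers, and the .example addresses are all hypothetical.

```typescript
import { generateKeyPairSync, sign, verify, createHash } from "node:crypto";

interface Message {
  signedHeaders: Record<string, string>; // headers covered by the signature
  body: string;
  envelopeRecipient: string; // who it is delivered to: NOT signed
}

// Stand-in for the sender's DKIM key pair.
const { publicKey, privateKey } = generateKeyPairSync("rsa", { modulusLength: 2048 });

// Signed data = signed headers + a hash of the body (like DKIM's bh= tag).
function signedData(msg: Message): Buffer {
  const bodyHash = createHash("sha256").update(msg.body).digest("base64");
  const headers = Object.entries(msg.signedHeaders)
    .map(([name, value]) => `${name.toLowerCase()}:${value}`)
    .join("\n");
  return Buffer.from(`${headers}\nbh=${bodyHash}`);
}

function dkimSign(msg: Message): Buffer {
  return sign("sha256", signedData(msg), privateKey);
}

function dkimVerify(msg: Message, signature: Buffer): boolean {
  return verify("sha256", signedData(msg), publicKey, signature);
}

// The legitimate alert, signed once by the sender.
const original: Message = {
  signedHeaders: { From: "no-reply@google.example", Subject: "Security alert" },
  body: "An app named <attacker-chosen text> was granted access.",
  envelopeRecipient: "me@gmail.example",
};
const signature = dkimSign(original);

// Replayed to a new victim: only the unsigned envelope changes.
const replayed: Message = { ...original, envelopeRecipient: "victim@example.com" };
console.log(dkimVerify(replayed, signature)); // true

// Any edit to the signed content breaks verification.
const tampered: Message = { ...original, body: original.body + " edited" };
console.log(dkimVerify(tampered, signature)); // false
```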

Astoria Media Group

Website: https://astoriamediagroup.com

Address: 4400 Buffalo Gap Rd Suite 2250, Abilene, TX 79606

Phone: +1 325-600-4396

Astoria Media Group is on a steadfast mission to empower organizations with state-of-the-art go-to-market strategies. We are your trusted guide in the ever-evolving digital landscape, focused on compressing timelines and optimizing resources. Through strategic planning, application development, website creation, and immersive content marketing, we pave the way for success while significantly reducing project completion hours. We understand the power of preparedness and are committed to fostering efficiency, quality, and affordability in every project we helm.

5 Visual Regression Testing Tools for WordPress

Introduction:

In the world of web development, maintaining the visual integrity of your WordPress website is crucial. Whether you're a WordPress development company, a WordPress developer in India, or a WordPress development agency, ensuring that your WordPress site looks and functions correctly across various browsers and devices is essential. Visual regression testing is the solution to this problem, and in this blog post, we'll explore five powerful visual regression testing tools that can help you achieve pixel-perfect results.

Applitools:

Applitools is a widely recognized visual regression testing tool that offers a robust solution for WordPress developers and development agencies. With its AI-powered technology, Applitools can detect even the slightest visual differences on your WordPress site across different browsers and screen sizes. It offers seamless integration with popular testing frameworks like Selenium and Appium, making it a favorite among WordPress developers.

Percy:

Percy is another exceptional visual regression testing tool that works well for developers and agencies working on WordPress projects. Percy captures screenshots of your WordPress site during each test run and highlights any visual changes, making it easy to identify and fix issues before they become a problem. Percy's dashboard provides a comprehensive view of all visual tests, making it a valuable asset for any WordPress development company.

BackstopJS:

BackstopJS is an open-source visual regression testing tool that has gained popularity in the WordPress development community. It allows you to create automated visual tests for your WordPress site, making it easy to spot discrepancies between different versions of your site. BackstopJS offers command-line integration, making it convenient for WordPress developers to incorporate visual testing into their workflows.

Wraith:

Wraith is a highly customizable visual regression testing tool that fits well into WordPress development projects. It allows you to capture screenshots of your WordPress site before and after changes, then compare them to identify any differences. Wraith's flexibility and versatility make it a valuable choice for WordPress development agencies looking to streamline their testing processes.

Visual Regression Testing with Puppeteer:

Puppeteer is a Node.js library that provides a high-level API to control headless browsers. WordPress developers can leverage Puppeteer to create custom visual regression testing scripts tailored to their specific needs. While it requires more coding expertise, it provides complete control over the testing process and is an excellent choice for WordPress developers who want to build a bespoke visual testing solution.
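
Whichever tool you pick, the core comparison is similar: capture a baseline screenshot, capture a new one after a change, and measure how much they differ. Below is a minimal TypeScript sketch of that comparison on raw RGBA pixel data, the kind of custom logic you might pair with Puppeteer’s page.screenshot(). It assumes both images are already decoded to the same size (PNG decoding is left out). It uses a simple per-channel threshold rather than any tool’s actual algorithm, and compareScreenshots is a hypothetical name.

```typescript
interface Screenshot {
  width: number;
  height: number;
  data: Uint8ClampedArray; // RGBA, 4 bytes per pixel
}

// Returns the percentage of pixels whose largest per-channel
// difference exceeds `tolerance` (0-255).
function compareScreenshots(a: Screenshot, b: Screenshot, tolerance = 0): number {
  if (a.width !== b.width || a.height !== b.height) {
    throw new Error("Screenshots must have the same dimensions");
  }
  const pixels = a.width * a.height;
  let changed = 0;
  for (let i = 0; i < pixels * 4; i += 4) {
    const delta = Math.max(
      Math.abs(a.data[i] - b.data[i]), // red
      Math.abs(a.data[i + 1] - b.data[i + 1]), // green
      Math.abs(a.data[i + 2] - b.data[i + 2]), // blue
      Math.abs(a.data[i + 3] - b.data[i + 3]), // alpha
    );
    if (delta > tolerance) changed++;
  }
  return (changed / pixels) * 100;
}

// Two white 2x2 images; one pixel's red channel changes in the second.
const baseline: Screenshot = { width: 2, height: 2, data: new Uint8ClampedArray(16).fill(255) };
const current: Screenshot = { width: 2, height: 2, data: new Uint8ClampedArray(16).fill(255) };
current.data[0] = 0;
console.log(compareScreenshots(baseline, current)); // 25
```

In practice, you would fail a test run when the returned percentage exceeds a threshold you choose, much like the mismatch thresholds that dedicated tools let you configure.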

Conclusion:

In today's competitive online landscape, ensuring that your WordPress website looks consistent and functions flawlessly is of utmost importance. Visual regression testing tools play a vital role in achieving this goal, helping WordPress development companies, WordPress developers in India, and WordPress development agencies maintain the visual integrity of their projects.

Whether you choose the AI-powered capabilities of Applitools, the user-friendly interface of Percy, the open-source flexibility of BackstopJS, the customization options of Wraith, or the coding prowess of Puppeteer, these visual regression testing tools empower you to identify and resolve visual discrepancies efficiently.

In the ever-evolving world of WordPress development, staying ahead of the curve is essential. Integrating a visual regression testing tool into your workflow can save time, improve the quality of your WordPress projects, and enhance the user experience. So, whether you're a WordPress developer or part of a WordPress development agency, consider incorporating one of these tools into your toolkit to ensure your WordPress sites continue to impress visitors across all devices and browsers.

Tips For Running Successful Facebook Ad Campaign

Are you ready to take your Facebook ad game to the next level? Whether you’re a seasoned marketer or just starting out, running a successful ad campaign on this social media giant can be an absolute game-changer for your business. With billions of users and powerful targeting options, Facebook offers endless opportunities to reach and engage with your target audience. But how do you ensure that your ads stand out from the crowd and actually generate results? Fear not! In this blog post, we’ll reveal some top-notch tips and tricks that will skyrocket your success in running Facebook ad campaigns. Get ready to unlock the secrets behind creating captivating content, nailing precise targeting strategies, optimizing for conversions, and so much more. Get comfortable as we delve into the world of Facebook advertising – it’s time to make things happen!

Introduction To Facebook Ads

Facebook, with its massive user base of over 2.8 billion monthly active users, has become an essential platform for businesses to reach their target audience and advertise their products or services. And one of the most effective ways to do this is through Facebook Ads.

Facebook Ads are paid advertisements that appear on the Facebook platform, including the news feed, right column, and Instagram feed. These ads allow businesses to target specific demographics, interests, behaviors, and locations of users based on their profile information and online activity.

In this section, we will provide a detailed introduction to Facebook Ads and how they work so that you can understand how to leverage them for your business’s success.

Why use Facebook Ads?

With traditional advertising methods becoming less effective in today’s digital age, social media platforms like Facebook have become crucial for businesses’ marketing strategies. Here are some reasons why you should consider using Facebook Ads:

1. Massive Reach: With billions of users worldwide and advanced targeting options available on the platform, Facebook Ads offer unparalleled reach potential for businesses looking to expand their customer base.

2. Cost-Effective: Compared to traditional forms of advertising such as TV or print ads, Facebook Ads are relatively affordable. You can set your budget and adjust it at any time based on your campaign’s performance.

Understanding The Target Audience

Understanding the target audience is a crucial aspect of running successful Facebook ad campaigns. In order to effectively reach and engage with potential customers, it is important to have a deep understanding of who they are, their interests, behaviors, and preferences.

1. Define Your Ideal Customer Avatar: The first step in understanding your target audience is to create an ideal customer avatar. This is a detailed profile of your ideal customer, including demographic information such as age, gender, location, income level, education level, and occupation. It also includes psychographic information like their interests, hobbies, values, and lifestyle choices. Having a clear picture of your ideal customer will help you tailor your ad campaigns to their specific needs and desires.

2. Conduct Market Research: Market research plays a crucial role in understanding your target audience. It involves gathering data about your potential customers through surveys, focus groups, interviews or by using tools like Facebook Audience Insights. This research will provide valuable insights into the demographics and interests of your audience which can then be used to create targeted ads that resonate with them.

3. Analyze Your Existing Customers: Your existing customers are a goldmine of information when it comes to understanding your target audience. Analyzing their behavior on social media platforms can provide valuable insights into what type of content they engage with the most and what motivates them to make a purchase. You can also use tools like Google Analytics or Facebook Pixel to track website visitors and understand their actions on your site.

Setting Objectives And Goals For The Campaign

When it comes to running a successful Facebook ad campaign, setting objectives and goals is crucial. Without a clear direction or purpose, your campaign may not have the impact or reach that you desire. In this section, we will delve into the importance of setting objectives and goals for your Facebook ad campaign and provide tips on how to do so effectively.

1. Understand Your Overall Marketing Goals

Before diving into setting specific objectives and goals for your Facebook ad campaign, it is important to understand your overall marketing goals. These can include increasing brand awareness, driving sales, generating leads, or promoting a new product/service. Identifying these overarching goals will help guide your decision-making when it comes to creating and implementing your Facebook ad campaign.

2. Use SMART Goal Framework

One effective framework for setting objectives and goals is the SMART criteria – Specific, Measurable, Achievable, Relevant, and Time-bound. This means that each goal should be clearly defined (specific), have measurable metrics (measurable), be realistic yet challenging (achievable), align with your overall marketing goals (relevant), and have a set timeline for completion (time-bound).

3. Consider Your Target Audience

Your target audience plays a significant role in determining the objectives and goals for your Facebook ad campaign. Understanding their demographics, interests, behaviors, and pain points can help you tailor your messaging and choose appropriate objectives that resonate with them.

Choosing The Right Ad Format

When it comes to running a successful Facebook ad campaign, choosing the right ad format is crucial. The type of ad you choose will impact how your audience perceives your brand and how effective your campaign will be in achieving its goals. With multiple options available, it can be overwhelming to determine which ad format is best suited for your business. Here are some tips to help you choose the right ad format for your Facebook ads.

1. Understand Your Objectives: Before selecting an ad format, it’s essential to have a clear understanding of what you want to achieve with your Facebook ad campaign. Are you looking to increase brand awareness, drive traffic to your website, or generate leads? Each objective requires a different approach and therefore, a different type of ad format.

2. Consider Your Target Audience: Knowing who your target audience is can also influence the type of ad format you should use. For example, if you’re targeting younger audiences, then video ads or Instagram Stories may be more effective than static image ads.

3. Utilize Different Ad Formats: It’s always best practice to test different ad formats and see which ones perform better for your business goals. You can create multiple versions of the same ad in various formats and run them simultaneously to see which one resonates better with your audience.

Budgeting And Scheduling The Campaign

Budgeting and scheduling are crucial aspects of running a successful Facebook ad campaign. They strongly influence the overall success of your campaign because they affect the reach, engagement, and ROI (return on investment) of your ads. In this section, we will discuss some tips to ensure that you budget and schedule your Facebook ad campaigns effectively.

1. Set a Realistic Budget: The first step in budgeting for your Facebook ad campaign is to set a realistic budget based on your marketing goals and objectives. This will depend on factors such as your target audience, industry, product or service cost, and competition. It’s important to allocate enough funds to achieve your desired results without overspending.

2. Utilize Facebook Ads Manager: Facebook Ads Manager is an essential tool for managing and tracking your ad campaigns’ performance. It allows you to set daily or lifetime budgets for each campaign, making it easier to monitor spending and make adjustments accordingly. You can also use Ads Manager to track key metrics such as impressions, clicks, conversions, and costs.

3. Consider Your Bidding Strategy: When setting up your ads in Ads Manager, you can choose between automatic and manual bidding strategies. Automatic bidding lets Facebook optimize bids based on your budget, while manual bidding gives you more control over how much you want to spend per click or impression.
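
If you choose a lifetime budget, one quick way to sanity-check spending is to compare spend to date with an even daily split. This sketch assumes even pacing as the yardstick, which Meta’s delivery system does not necessarily follow. pacingReport is a hypothetical helper, not an Ads Manager feature.

```typescript
interface PacingReport {
  averageDailyBudget: number;
  expectedSpendToDate: number;
  pacing: number; // actual / expected; 1.0 means on track
}

function pacingReport(
  lifetimeBudget: number,
  totalDays: number,
  daysElapsed: number,
  spendToDate: number,
): PacingReport {
  if (totalDays <= 0 || daysElapsed < 0 || daysElapsed > totalDays) {
    throw new Error("Invalid schedule");
  }
  // Even split of the lifetime budget across the schedule.
  const averageDailyBudget = lifetimeBudget / totalDays;
  const expectedSpendToDate = averageDailyBudget * daysElapsed;
  return {
    averageDailyBudget,
    expectedSpendToDate,
    pacing: expectedSpendToDate === 0 ? 0 : spendToDate / expectedSpendToDate,
  };
}

// $3,000 over 30 days; after 10 days, $1,250 has been spent.
console.log(pacingReport(3000, 30, 10, 1250));
// { averageDailyBudget: 100, expectedSpendToDate: 1000, pacing: 1.25 }
```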

Analyzing And Optimizing The Campaign Performance

When it comes to running successful Facebook ad campaigns, analyzing and optimizing the campaign performance is a crucial step that should not be overlooked. By carefully monitoring and adjusting your ad campaign, you can maximize its effectiveness and achieve better results. In this section, we will discuss some key strategies for analyzing and optimizing your Facebook ad campaign performance.

1. Set Measurable Goals: Before launching your ad campaign, it is important to clearly define your goals and objectives. This will help you measure the success of your campaign and make informed decisions while optimizing it. Whether you want to increase brand awareness, generate leads or drive conversions, having measurable goals in place will act as a roadmap for your campaign.

2. Use Facebook Ads Manager: Facebook Ads Manager is a powerful tool that provides valuable insights into the performance of your ads. It allows you to track key metrics such as clicks, impressions, reach, engagement rates, and conversions. You can also use filters to view data by specific date ranges or demographics, which can help you identify trends and patterns in your ad performance.

3. Monitor Your Ad Frequency: Ad frequency is the average number of times each person in your audience sees your ad within a given time period. While reaching a large audience may seem like a good thing at first glance, showing the same people too many ads can lead to ad fatigue and lower engagement rates. Keep an eye on your ad frequency and adjust the budget or targeting if needed.
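Facebook reports frequency as impressions divided by reach. The sketch below computes it and flags an ad set for review once it passes a threshold. The function names and the default threshold of 3.0 are hypothetical, chosen only for illustration, so pick a limit that matches your own campaign data.

```python
def ad_frequency(impressions: int, reach: int) -> float:
    """Average number of times each person saw the ad (impressions / reach)."""
    if reach <= 0:
        raise ValueError("reach must be positive")
    return impressions / reach


def needs_review(impressions: int, reach: int, threshold: float = 3.0) -> bool:
    """Flag possible ad fatigue when frequency exceeds a chosen threshold.

    The default of 3.0 is an illustrative assumption, not a Facebook rule.
    """
    return ad_frequency(impressions, reach) > threshold


print(ad_frequency(12_000, 4_000))  # 3.0
print(needs_review(12_000, 4_000))  # False (not above 3.0)
print(needs_review(15_000, 4_000))  # True (frequency 3.75)
```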

Utilizing Facebook Ad Tools And Features

Making full use of the ad tools and features the platform offers is one of the most effective ways to run successful Facebook ad campaigns. These tools can help you reach your target audience, create engaging ads, and measure the success of your campaigns. In this section, we will discuss some of the key Facebook ad tools and features that you should be using to maximize your campaign’s effectiveness.

1. Audience Insights: This tool allows you to gain a deeper understanding of your target audience by providing valuable demographic, location, and interest data. By using this information, you can create more targeted ads that are relevant to your audience.

2. Ads Manager: Ads Manager is where you set up and manage all of your Facebook ad campaigns. It provides a user-friendly interface for creating and editing ads, targeting specific audiences, setting budgets and schedules, and tracking performance metrics.

3. Custom Audiences: With custom audiences, you can upload your own customer list or build an audience from people who have visited your website, then target them with specific ads on Facebook. This feature is particularly useful for retargeting previous customers or reaching out to potential customers who have shown interest in your brand.

4. Lookalike Audiences: This feature uses Facebook’s algorithm to find new audiences similar to those in your existing custom audiences. It is an excellent way to expand your reach and connect with potential customers who share interests with your current ones.

Staying Up-To-Date With Facebook Advertising Trends

Staying up-to-date with Facebook advertising trends is crucial for running successful ad campaigns. Facebook is constantly evolving and introducing new features, targeting options, and algorithms that can significantly impact the effectiveness of your ads. To ensure that your campaigns are reaching their full potential, it is important to stay informed about these changes and adjust your strategies accordingly.

Here are some tips on how to stay updated with Facebook advertising trends:

1. Follow official Facebook resources: The first step in staying up-to-date with Facebook advertising trends is to follow official resources such as the Facebook Business Page, Ads Help Center, and their blog. This will give you direct access to any updates or announcements from the platform itself. You can also join Facebook’s Official Advertiser Community Group where experts share tips and insights on current trends.

2. Monitor industry publications: There are many industry publications and websites dedicated to covering social media marketing news and updates, including those specific to Facebook advertising. Some popular ones include Social Media Examiner, Adweek, Marketing Land, and Social Media Today. By regularly checking these sources, you can stay informed about any changes or new features on the platform.

3. Attend webinars or conferences: Facebook often hosts webinars or conferences focused on educating businesses about their advertising platform and upcoming changes. These events not only provide valuable information but also allow you to network with other advertisers and learn from their experiences.

Conclusion:

Running a successful Facebook ad campaign requires careful planning, consistent monitoring, and constant optimization. By following the tips outlined in this article, you can maximize the effectiveness of your campaigns and reach the right audience.

Here are some key takeaways to keep in mind:

1. Define Your Objectives: Before launching any Facebook ad campaign, it’s crucial to have a clear understanding of your goals and objectives. This will help guide your strategy and ensure that all your efforts align with your overall business objectives.

2. Know Your Target Audience: A clear understanding of your target audience is crucial for running a successful ad campaign on Facebook. Use the platform’s extensive targeting options to reach the right people based on their demographics, interests, behaviors, and more.

3. Create High-Quality Visuals: With so much content competing for attention on Facebook, it’s essential to create eye-catching visuals that will grab users’ attention as they scroll through their feed. Make sure that the images or videos you use are high-quality and relevant to your brand.

4. Test Different Ad Formats: Facebook offers various ad formats, such as image ads, video ads, and carousel ads, each with its own advantages. Experiment with different formats to see which ones resonate best with your audience.


https://beebigdigital.com/blogs/what-is-web-development

Web development refers to the process of creating, building, and maintaining websites and web applications that are accessible via the internet. Digital marketing company, digital marketing agency, digital marketing services, digital marketing company in Mumbai, digital marketing agency in Mumbai, Search Engine Optimization Company, SEO Company, Social Media Marketing Company, Social Media Marketing Agency, Google AdWords Company, Facebook Advertisement Company, Advertisement Company, Advertisement Agency.


Web Design Services | Digital Marketing Agency - Olygex Solutions Pvt. Ltd.

🏆 Olygex Solutions Pvt. Ltd. offers the best web design services, website and application development, digital marketing and SEO, and paid media marketing services at affordable prices.


What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Super Bowl ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Super Bowl ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of WorldCom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, WorldCom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, they could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrest them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified) https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/


Gut Move

Introducing helpful drugs into the body is often challenged by our own defences. Probiotics, beneficial microbes that can help to adjust the balance of our gut microbiome, may not stay around long enough to do their job as they’re quickly corroded by stomach acids and bile. Here, scientists develop structures capable of delivering probiotics orally – but with a new trick. These tiny scaffolds, about half the size of a polo mint, are 3D-printed from a ‘bio-ink’ of cellulose, a form of dietary fibre. Fibre has many health benefits on its own, but it’s also resistant to damage from stomach acid. Instead, these tiny containers stick to the walls of the gut while they’re slowly digested. As they can be printed in a variety of shapes suited to different applications, they may be just the thing to tackle conditions like obesity or inflammatory bowel disease.

Written by John Ankers

Image from work by Yue Zhang and colleagues

Laboratory for Biomaterial and Immunoengineering, Institute of Functional Nano and Soft Materials (FUNSOM), Soochow University, Suzhou, Jiangsu, China

Image originally published with a Creative Commons Attribution 4.0 International (CC BY-NC 4.0)

Published in Science Advances, August 2024

You can also follow BPoD on Instagram, Twitter and Facebook


BIG ANNOUNCEMENT! EXCITING NEWS!

Hello my dear friends and siblings! I am so terribly sorry for my absence but I have been working on some big things and I have some VERY exciting news.

As some of you may know, I have been working with an affirming and inclusive parish, Saint Thekla Independent Orthodox Church. The priest and I have been working together for several months now and share a vision of building an inclusive and affirming community here in Indiana. To that end:

ANNOUNCEMENT ONE: I have been granted permission to found an offspring community under the umbrella of Saint Thekla. I am proud to announce the official opening of Holy Protection Orthodox Christian Community! Although we are mostly online currently, Mother Thekla and I will be working diligently to establish in-person meetings locally. That said, our online ministry will continue and I invite you all to participate. Our virtual Coffee Hour is especially great and not to be missed! (links below). Eventually we hope, by the grace of God, to grow into a fully functioning parish with a priest serving weekly Divine Liturgy. Which brings me to my second announcement:

ANNOUNCEMENT TWO: I have been granted permission to begin the process of reading for Holy Orders with the goal of ordination to the priesthood. I will submit my official application later this week, but my spiritual director is confident that, given my ministry experience (and my brief time in Anglican seminary before my transition), my application will be welcomed and granted quickly. This process will take a couple of years, but I'm very excited to finally complete my journey to the priesthood after so many years. Glory to God for all things!

I will try to post here more regularly and consider this blog as an extension of the online ministry of Holy Protection. I invite you all to please participate in our online community and for those of you who live in my neck of the woods, I hope to invite you to in-person meetings soon! In the meantime, please like the Facebook page and join the Facebook group to stay up to date on our development!

Facebook page: https://www.facebook.com/profile.php?id=61563480251752

Facebook group: https://www.facebook.com/share/g/ecxTi3GAJ8iZiAa6/

Youtube channel (in development): https://www.youtube.com/@AffirmingOrthodoxy


A Cascading Fix

The floating garbage patch in the Pacific Ocean is a huge ongoing issue. Plastic is the worst offender (and no, it’s not all drinking straws and plastic bags; it’s mostly discarded fishing nets). Skimming would be too costly and unrealistic (it’s country-sized, and a big country at that). Plus, it’s almost a biome at this point: you couldn’t scoop out the trash without also scooping up animals, eggs, and plants, basically causing more havoc trying to clean it up.

So what do you turn to? Bacteria.

So this area has been in active research for 25+ years as the ultimate solution to dealing with plastic waste.

Should be great, right? The lifespan of a bottle in a landfill falls from centuries to weeks in a vat. It’s such an alluring goal that people gloss over the path.

Brute forcing thousands upon thousands of mutations on an enzyme that specializes in breaking down hydrocarbons sounds wildly risky.

Because what if you get one that does too well?

Future Forecast

Silicon Valley Tech Bro Billionaire wants to improve his image as a member of the private jet class by holding a big public competition for innovative solutions to the garbage patch. He encourages all of his tech bro buddies to pitch their ideas and promises to fund a pilot project for the top idea.

What wins out? Bacteria.

And they apply Facebook’s ‘move fast and break things’ philosophy to brute-forcing mutations. The garbage patch is in international waters, so there’s no approval (or oversight) needed to go and test your ideas.

In fact, with being out in the middle of the ocean, you can build your lab right on a boat and sail out there. And test your iterations right there. In the ocean. Why test on a simulated garbage patch when the real one is right there?

Success! A strain that breaks down plastics in a short timeframe in the cold of the ocean! Your test site quickly goes from floating landfill to... something?

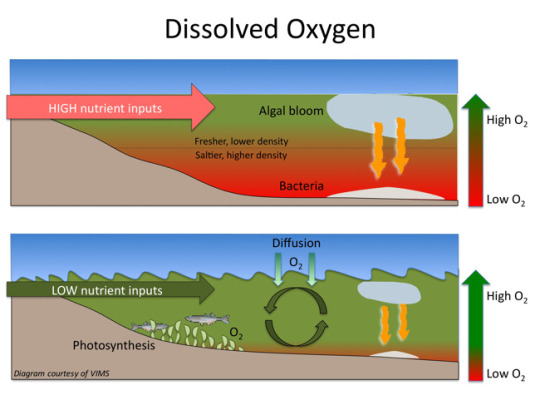

The enzymes broke the plastic down into component nutrients, so you have now effectively dumped a whole flood of nutrients into the water. Kinda like dumping fertilizer.

Cool. So now you have turned the garbage patch into the Great Pacific Algal Bloom and Dead Zone.

And the bacteria isn’t staying put. Oceans have a way of moving things, and you just built a hardy sailor with a plentiful food supply.

The bacteria makes it to shore and suddenly, plastic isn’t permanent. Plastic isn’t safe in water. Every seal and gasket is now prone to failure. There is a rush to figure out which types of plastics are susceptible, which are resistant. New plastics with bacteria resistance are developed.

But that’s not even going to be the biggest issue

We’ve Got a Fuel Pox on our Hands

If it likes to eat plastic, you know what it’ll love? Gasoline, and any other hydrocarbon.

This bacteria would essentially turn gas into soy sauce. Think about fuel rotting.

Suddenly the world’s energy supply is at risk. Fear of contamination becomes the oil and gas industry’s number one concern. Gas becomes an even more precious commodity, and is only used when the application demands it. The industry adopts a surgical level of cleanliness.

Meanwhile other people are prepping ‘Kombucha’ for their local pipeline


Native vs. Hybrid Mobile App Development: Making the Right Choice

Introduction

In the rapidly evolving landscape of mobile technology, businesses are presented with a crucial decision when developing mobile applications: Should they opt for native app development or go the hybrid route? This decision significantly affects user experience, development cost, and time-to-market. This comprehensive guide will delve into the differences between native and hybrid mobile app development and help you make an informed choice for your project.

Understanding Native App Development

Native app development involves creating applications designed to run on a specific platform or operating system, such as iOS or Android. These apps are built using platform-specific programming languages like Swift for iOS and Java/Kotlin for Android. Native apps provide unparalleled performance and seamless integration with the device's hardware and software. They offer a superior user experience due to their responsiveness and access to platform-specific features.

The Benefits of Native App Development

1. Performance:

Native apps are optimized for the specific platform they are developed for, resulting in better performance and smoother user interactions.

2. User Experience:

Native apps provide a consistent and intuitive user experience that follows each platform's design guidelines. This contributes to higher user engagement and satisfaction.

3. Access to Device Features:

Native apps can leverage device-specific features like GPS, camera, and push notifications, enhancing the app's functionality.

4. Offline Functionality:

Native apps often function offline, allowing users to access certain features without an internet connection.

Challenges of Native App Development

1. Cost and Time:

Developing separate apps for different platforms can be time-consuming and expensive. It requires hiring specialized developers for each platform.

2. Updates:

Managing updates and bug fixes for multiple native apps can be challenging, especially if the development team is not well-equipped.

Understanding Hybrid App Development

Hybrid app development aims to create applications that run on multiple platforms using a single codebase. These apps are typically built using web technologies like HTML, CSS, and JavaScript, wrapped in a native container. This approach allows developers to write code once and deploy it across different platforms, saving time and resources.

The Benefits of Hybrid App Development

1. Cost-Efficiency:

Since a single codebase serves multiple platforms, development costs are typically lower than for native app development.

2. Faster Development:

Building a hybrid app takes less time because code is reused across platforms, resulting in a quicker time-to-market.

3. Easier Updates:

Updating a hybrid app involves changing the shared codebase, which simplifies the process and keeps the app consistent across platforms.

4. Wide Reach:

Hybrid apps can reach a broader audience from a single codebase, since they run on both iOS and Android devices.

Challenges of Hybrid App Development

1. Performance:

Hybrid apps sometimes lag behind native apps, especially for complex animations and intensive graphics.

2. Limited Access to Device Features:

While hybrid apps can access certain device features, they might not offer the same level of integration as native apps.

3. User Experience:

Due to platform-specific design guidelines and differences, achieving a seamless user experience across different platforms can be challenging.

Making the Right Choice

When deciding between native and hybrid mobile app development, consider the following factors:

1. App Complexity:

For complex apps that require intensive graphics or intricate interactions, native development might be more suitable.

2. Budget:

Hybrid development could be cost-effective if you have budget constraints and want to develop for both platforms.

3. Time-to-Market:

If speed is crucial, hybrid development can help you launch your app faster.

4. User Experience:

If delivering a seamless and platform-specific user experience is your priority, native development is the way forward.

5. Long-Term Goals:

Consider your app's long-term goals and the potential need for ongoing updates and improvements.
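The factors above can be turned into a simple checklist. The sketch below is a hypothetical tally, not an established method: the class and function names are made up, each factor gets equal weight, complex graphics and a platform-specific experience count as votes for native, budget constraints and time pressure count as votes for hybrid, and ties (along with long-term goals, which depend on context) are left to human judgment.

```python
from dataclasses import dataclass


@dataclass
class ProjectNeeds:
    complex_graphics: bool      # intensive graphics or intricate interactions
    tight_budget: bool          # limited budget, targeting both iOS and Android
    speed_critical: bool        # time-to-market is the main pressure
    platform_specific_ux: bool  # a platform-specific experience is the priority


def suggest_approach(needs: ProjectNeeds) -> str:
    """Equal-weight vote between native and hybrid based on the factors above."""
    native_votes = int(needs.complex_graphics) + int(needs.platform_specific_ux)
    hybrid_votes = int(needs.tight_budget) + int(needs.speed_critical)
    if native_votes > hybrid_votes:
        return "native"
    if hybrid_votes > native_votes:
        return "hybrid"
    return "undecided: weigh long-term goals"


print(suggest_approach(ProjectNeeds(True, False, False, True)))  # native
print(suggest_approach(ProjectNeeds(False, True, True, False)))  # hybrid
```

Real projects rarely weigh every factor equally, so treat the output as a starting point for discussion rather than a verdict.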

Finding the Right Mobile App Development Company

Whether you choose native or hybrid development, partnering with the right mobile app development company is crucial for success. Look for a company that specializes in both web and mobile app development. Consider their expertise in native and hybrid approaches, portfolio, client reviews, and ability to understand your project's unique requirements.

Conclusion

In the realm of mobile app development, choosing between native and hybrid approaches is a decision that requires careful consideration. Native apps offer unparalleled performance and user experience, while hybrid apps provide cost-efficiency and quicker development. Ultimately, the right choice depends on your project's complexity, budget, and goals. Whichever path you choose, collaborating with a reputable mobile app development company will improve your project's chances of success in the competitive app market.

#digital marketing company#application for android app development#digital marketing company in indore#facebook ad campaign#google ads management services#flutter app development company#facebook ad agency#ios app development company in india#ionic app development company#facebook ads expert

Text

Facebook Marketing Services in USA

Did you know that effective Facebook marketing can increase your brand visibility and sales? Let GMA Technology's experts handle your social media strategy for remarkable results! For more: https://www.gmatechnology.com/ Call now: 1770-235-4853 #GMAExperts #DigitalMarketing #GMABoost #FacebookMarketing #SocialMediaSuccess #FacebookAds #SocialMediaMagic #StayAhead #SmallBizSuccess #GMATechnology

#web development#best web development company in united states#asp.net web and application development#logo design company#facebook#website landing page design#magento development#web designing company#digital marketing company in usa#pretty face#web development company

Text

AI FOMO

A lot of "AI" content these days – where "AI" means machine learning trained on a large corpus of unstructured natural language to solve no specific task, with a prompt-based developer interface to allow building applications for any and every problem domain and also none in particular – is driven by fear of missing out, or FOMO. I am talking about content about AI.

It's not driven by a genuine need for "AI", which, again, in this case usually means ChatGPT or something similar. I am not happy about this, but it's the nomenclature they use. There is no urgent problem that could not be solved before and that AI has now solved. Content about AI is also not disseminating new expert knowledge about prompt engineering or training methods. It's about a vague sense of unease about possibly being "left behind" in a world where all your colleagues are writing their e-mails by just giving a prompt to ChatGPT and copying and pasting, or asking ChatGPT to summarise what the e-mail they just got said. It's driven by a fear that there is a lot of "value" left on the table for people who don't automate their e-mail job.

Maybe that is a real problem. Unlike the AI-generated bullshit that is 100% automated, like all those "shrimp Jesus" Facebook bots I read so much about, many e-mail jobs can basically be automated by copying and pasting text between your e-mail program and a language model, but you still need a warm body in a chair somewhere.

Isn't this odd? Language models and AI assistants are marketed to people who aren't tech-savvy, to people who don't even need these systems, by people who don't know how these systems work either. The articles have headlines like "Ten ways you can incorporate AI into your workday". It's ingenious. There is no promise that this will make your life easier. There is no indication that the people who wrote it are experts. It's critic-proof. Even if AI doesn't solve your problem, even if it doesn't make sense, even if I don't know what the hell I am talking about, here are ten things you can do with ChatGPT. Because it's the future. It's what's coming. Whether you need it or not.

Once you see the pattern, you can't unsee it: Here's how we use ChatGPT in the classroom. Here's how we asked Grok about political trends. Here's how an entrepreneur uses Copilot to generate business ideas.

So you talk to ChatGPT, because you don't want to miss out.

Text

The opposite approaches taken towards Alaska by the two men who have occupied the Oval Office since 2016 help illustrate why it has become increasingly difficult to finance major energy projects in the US over the past decade. The policy tug-of-war between Democratic and Republican administrations has undermined the confidence of corporate management teams and major financial institutions that US laws and regulations will be applied consistently.

Biden’s Alaska approach

In an editorial published in late January, The Wall Street Journal editorial board wrote that “progressives want Alaska to be a natural museum untouched by humans”. It is an accurate assessment made obvious by the initiatives Biden took to prohibit development of oil, gas and mined minerals in the state over the past four years.

After Biden banned development on 3m acres of Alaska's North Slope on 17 January, Alaska Senator Dan Sullivan wrote in a Facebook post that his office had catalogued no fewer than 70 actions the administration had taken that were designed to damage the state's energy sector and economy.

Text

July 24 (UPI) -- Meta said Wednesday it removed tens of thousands of accounts based in Nigeria in a crackdown on sextortion schemes stemming from the country.

The company said it took down 63,000 Instagram accounts in Nigeria, including a smaller coordinated network of roughly 2,500 accounts linked to 20 people.

"Financial sextortion is a horrific crime that can have devastating consequences. Our teams have deep experience in fighting this crime and work closely with experts to recognize the tactics scammers use, understand how they evolve and develop effective ways to help stop them," Meta said.

Meta also removed Facebook accounts, Pages and Groups it said were run by Yahoo Boys, a loosely organized group of cybercriminals operating largely out of Nigeria. Those accounts are banned under Meta's Dangerous Organizations and Individuals policy.

According to Meta, those accounts were trying to "organize, recruit and train new scammers."

The removed Nigerian Instagram accounts primarily targeted adult men in the United States. Those accounts were identified using new technical signals Meta has developed to spot accounts engaging in sextortion.

Meta also removed approximately 7,200 Facebook assets, including 1,300 accounts, 200 Facebook Pages and 5,700 Facebook Groups based in Nigeria that were allegedly providing tips on how to conduct sextortion scams.

"While these investigations and disruptions are critical, they're just one part of our approach," Meta's statement said. "We continue to support law enforcement in investigating and prosecuting these crimes, including by responding to valid legal requests for information and by alerting them when we become aware of someone at risk of imminent harm, in accordance with our terms of service and applicable law."

In April, two people in Nigeria were arrested and charged in the sexual extortion case of an Australian teen who died by suicide.

The boy took his own life after receiving threats that intimate pictures he had shared online with someone he believed to be female would be sent to his family and friends.

The term sextortion refers to the act of getting victims to create and send sexually explicit material, then demanding money for not releasing that material.

Also in April, Meta tested new features to fight sextortion, including automatically blurring nude images on Instagram by default for users younger than 18.

Meta said then that it had spent years working closely with experts to understand how scammers use sextortion to find and extort victims online.
