#JSON constructs for data querying

Explore tagged Tumblr posts

Text

Data Analyst Interview Questions: A Comprehensive Guide

Preparing for an interview as a Data Analyst is difficult, given the broad skills needed. Technical skill, business knowledge, and problem-solving abilities are assessed by interviewers in a variety of ways. This guide will assist you in grasping the kind of questions that will be asked and how to answer them.

By mohammed hassan on Pixabay

General Data Analyst Interview Questions

These questions help interviewers assess your understanding of the role and your basic approach to data analysis.

Can you describe what a Data Analyst does? A Data Analyst collects, processes, and analyzes data to help businesses make data-driven decisions and identify trends or patterns.

What are the key responsibilities of a Data Analyst? Responsibilities include data collection, data cleaning, exploratory data analysis, reporting insights, and collaborating with stakeholders.

What tools are you most familiar with? Say tools like Excel, SQL, Python, Tableau, Power BI, and describe how you have used them in past projects.

What types of data? Describe structured, semi-structured, and unstructured data using examples such as databases, JSON files, and pictures or videos.

Technical Data Analyst Interview Questions

Technical questions evaluate your tool knowledge, techniques, and your ability to manipulate and interpret data.

What is the difference between SQL's inner join and left join? The inner join gives only the common rows between tables, whereas a left join gives all rows of the left table as well as corresponding ones of the right.

How do you deal with missing data in a dataset? Methods are either removing rows, mean/median imputation, or forward-fill/backward-fill depending on context and proportion of missing data.

Can you describe normalization and why it's significant? Normalization minimizes data redundancy and enhances data integrity by structuring data effectively between relational tables.

What are some Python libraries that are frequently used for data analysis? Libraries consist of Pandas for data manipulation, NumPy for numerical computations, Matplotlib/Seaborn for data plotting, and SciPy for scientific computing.

How would you construct a query to discover duplicate values within a table? Use a GROUP BY clause with a HAVING COUNT(*) > 1 to find duplicate records according to one or more columns.

Behavioral and Situational Data Analyst Interview Questions

These assess your soft skills, work values, and how you deal with actual situations.

Describe an instance where you managed a challenging stakeholder. Describe how you actively listened, recognized their requirements, and provided insights that supported business objectives despite issues with communication.

Tell us about a project in which you needed to analyze large datasets. Describe how you broke the dataset down into manageable pieces, what tools you used, and what you learned from the analysis.

Read More....

0 notes

Text

Amazon Braket SDK Architecture And Components Explained

A comprehensive framework that abstracts the complexity of quantum hardware and simulators, Amazon Braket SDK is gradually becoming a major tool for quantum computing. It aims to give developers a consistent interface for using a variety of quantum resources and inspire creativity in the fast-growing field of quantum computing. Its multilayered construction.

SDK Architecture Amazon Braket

Abstraction for Comfort and Flexibility The SDK's core is its powerful device abstraction layer. This vital portion provides a single interface to Oxford Quantum Circuits, IonQ, Rigetti, and Xanadu quantum backends as well as simulators. This layer largely safeguards developers from understanding quantum processor details by turning user-defined quantum circuits into backend-specific instruction sets and protocols.

Quantum programs are portable and interoperable thanks to standardised quantum circuit representations and backend-specific adapters. Quantum computing is dynamic, therefore its modular architecture lets you add new backends without disrupting the core functionality. The main quantum development modules are: Braket.circuits: Hub Quantum Circuit The Braket SDK's main module, braket.circuits, offers comprehensive tools for building, altering, and refining quantum circuits. This module's DAG model of quantum circuits permits complicated optimisations like subexpression elimination and gate cancellation. Ability to construct bespoke gates and allow many quantum gate sets provides it versatility. Quantum computing frameworks like PennyLane and Qiskit allow developers to use current tools and knowledge. Compatible quantum computing platforms benefit from OpenQASM compliance. Braket.jobs: Management of Quantum Execution Braket.jobs controls quantum circuits on simulators and hardware. It tracks the Braket service's job submission process and receives results. This module is crucial for error handling, prioritisation, and job queue management. Developers can customise the execution environment by setting parameters like shots, random number seed, and experiment duration. The module supports synchronous and asynchronous execution, so developers can choose the right one. It also tracks resource use and cost to optimise quantum processes. Braket.devices: Hardware Optimisation and Access The braket.devices module is essential for accessing quantum processors and simulators. Developers can query qubit count, connection, and gate integrity. This module gives methods for selecting the optimum equipment for a task based on cost and performance. A device profile system that uniformly describes each device's capabilities allows the SDK to automatically optimise quantum circuits for the chosen device, enhancing efficiency and reducing errors. Device characterisation and calibration are also possible with the module, ensuring peak efficiency.

Amazon Braket SDK Parts

Smooth Amazon S3 Integration: The SDK's seamless interface with Amazon S3, a scalable and affordable storage alternative, is key to its architecture. Quantum circuits are usually saved in S3 as JSON files for easy sharing and version management. A persistent calculation record is established by saving job results in S3. The SDK's S3 APIs simplify data analysis and visualisation. The SDK can use AWS Lambda and Amazon SageMaker using this interface to construct more complex quantum applications. A Solid Error Mitigation Framework: Due to quantum hardware noise and defects, the Amazon Braket SDK includes a robust error mitigation system. This framework includes crucial error detection, correction, and noise characterisation algorithms. These procedures can be set up and implemented using SDK APIs, allowing developers to customise error mitigation. It helps developers improve their error mitigation strategy with tools to analyse approaches. As methods and algorithms become available, the framework will be updated. Security for Enterprises with AWS IAM Enterprise-grade security is possible with AWS IAM. The SDK's architecture relies on AWS IAM, making security crucial. IAM's fine-grained access control lets developers set policies that restrict quantum resource access to users and programs. Data in transit and at rest is encrypted by the SDK to prevent unauthorised access to sensitive quantum data. The SDK protects quantum data and meets enterprise clients' high security standards. Connects to AWS CloudTrail and GuardDuty for complete security monitoring and auditing. In conclusion

The Amazon Braket SDK provides a customised, secure quantum computing framework. Abstraction of hardware difficulties, powerful circuit design and execution tools, integration with scalable AWS services, and prioritisation of security and error prevention lower the barrier to entry, allowing developers to fully explore quantum computing's possibilities.

#AmazonBraketSDK#SDKArchitecture#IonQ#QuantumCircuitHub#AmazonS3#AWSLambda#AmazonSageMaker#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

0 notes

Text

A Deep Dive into Modern Backend Development for Web Application

Creating seamless and dynamic web experiences often comes down to what happens behind the scenes. While front-end design captures initial attention, the backend is where much of the magic unfolds — handling data, security, and server logic. This article explores the core concepts of modern backend development, examines how evolving practices can fuel growth, and offers insights on selecting the right tools and partners.

1. Why the Backend Matters

Imagine a beautifully designed website that struggles with slow loading times or frequent errors. Such issues are typically rooted in the server’s logic or the infrastructure behind it. A robust backend ensures consistent performance, efficient data management, and top-tier security — elements that collectively shape user satisfaction.

Moreover, well-designed backend systems are better equipped to handle sudden traffic spikes without sacrificing load times. As online platforms scale, the backend must keep pace, adapting to increased user demands and integrating new features with minimal disruption. This flexibility helps businesses stay agile in competitive environments.

2. Core Components of Backend Development

Server

The engine running behind every web application. Whether you choose shared hosting, dedicated servers, or cloud-based virtual machines, servers host your application logic and data endpoints.

Database

From relational systems like MySQL and PostgreSQL to NoSQL options such as MongoDB, databases store and manage large volumes of information. Choosing the right type hinges on factors like data structure, scalability needs, and transaction speed requirements.

Application Logic

This code handles requests, processes data, and sends responses back to the front-end. Popular languages for writing application logic include Python, JavaScript (Node.js), Java, and C#.

API Layer

Application Programming Interfaces (APIs) form a communication bridge between the backend and other services or user interfaces. RESTful and GraphQL APIs are two popular frameworks enabling efficient data retrieval and interactions.

3. Emerging Trends in Backend Development

Cloud-Native Architectures

Cloud-native applications leverage containerization (Docker, Kubernetes) and microservices, making it easier to deploy incremental changes, scale specific components independently, and limit downtime. This modular approach ensures continuous delivery and faster testing cycles.

Serverless Computing

Platforms like AWS Lambda, Google Cloud Functions, and Azure Functions allow developers to run code without managing servers. By focusing on logic rather than infrastructure, teams can accelerate development, paying only for the computing resources they actually use.

Microservices

Rather than constructing one large, monolithic system, microservices break an application into smaller, independent units. Each service manages a specific function and communicates with others through lightweight protocols. This design simplifies debugging, accelerates deployment, and promotes autonomy among development teams.

Real-Time Communication

From chat apps to collaborative tools, real-time functionality is on the rise. Backend frameworks increasingly support WebSockets and event-driven architectures to push updates instantly, boosting interactivity and user satisfaction.

4. Balancing Performance and Security

The need for speed must not compromise data protection. Performance optimizations — like caching and query optimization — improve load times but must be paired with security measures. Popular practices include:

Encryption: Safeguarding data in transit with HTTPS and at rest using encryption algorithms.

Secure Authentication: Implementing robust user verification, perhaps with JWT (JSON Web Tokens) or OAuth 2.0, prevents unauthorized access.

Regular Audits: Scanning for vulnerabilities and patching them promptly to stay ahead of evolving threats.

Organizations that neglect security can face breaches, data loss, and reputational harm. Conversely, a well-fortified backend can boost customer trust and allow businesses to handle sensitive tasks — like payment processing — without fear.

5. Making the Right Technology Choices

Selecting the right backend technologies for web development hinges on various factors, including project size, performance targets, and existing infrastructure. Here are some examples:

Node.js (JavaScript): Known for event-driven, non-blocking I/O, making it excellent for real-time applications.

Python (Django, Flask): Valued for readability, a large ecosystem of libraries, and strong community support.

Ruby on Rails: Emphasizes convention over configuration, speeding up development for quick MVPs.

Java (Spring): Offers stability and scalability for enterprise solutions, along with robust tooling.

.NET (C#): Integrates deeply with Microsoft’s ecosystem, popular in enterprise settings requiring Windows-based solutions.

Evaluating the pros and cons of each language or framework is crucial. Some excel in rapid prototyping, while others shine in large-scale, enterprise-grade environments.

6. Customizing Your Approach

Off-the-shelf solutions can help businesses get started quickly but may lack flexibility for unique requirements. Customized backend development services often prove essential when dealing with complex workflows, specialized integrations, or a need for extensive scalability.

A tailored approach allows organizations to align every feature with operational goals. This can reduce technical debt — where one-size-fits-all solutions require extensive workarounds — and ensure that the final product supports long-term growth. However, custom builds do require sufficient expertise, planning, and budget to succeed.

7. The Role of DevOps

DevOps practices blend development and operations, boosting collaboration and streamlining deployment pipelines. Continuous Integration (CI) and Continuous Deployment (CD) are common components, automating tasks like testing, building, and rolling out updates. This not only reduces human error but also enables teams to push frequent, incremental enhancements without risking application stability.

Infrastructure as Code (IaC) is another DevOps strategy that uses configuration files to manage environments. This approach eliminates the guesswork of manual setups, ensuring consistent conditions across development, staging, and production servers.

8. Measuring Success and Ongoing Improvement

A robust backend setup isn’t a one-time project — it’s a continuous journey. Monitoring key performance indicators (KPIs) like uptime, response times, and error rates helps identify problems before they escalate. Logging tools (e.g., ELK stack) and application performance monitoring (APM) tools (e.g., New Relic, Datadog) offer deep insights into system performance, user behavior, and resource allocation.

Regular reviews of these metrics can inform incremental improvements, from refactoring inefficient code to scaling up cloud resources. This iterative process not only keeps your application running smoothly but also maintains alignment with evolving market demands.

Conclusion

From blazing-fast load times to rock-solid data integrity, a well-crafted backend paves the way for exceptional digital experiences. As businesses continue to evolve in competitive online spaces, adopting modern strategies and frameworks can yield remarkable benefits. Whether leveraging serverless architectures or working with microservices, organizations that prioritize performance, security, and scalability remain better positioned for future growth.

0 notes

Text

Maximizing Business Insights with Dynamics 365 & Elasticsearch-Py

In a data-driven world, businesses that effectively leverage customer insights and manage large datasets stand out in competitive markets. Microsoft Dynamics 365 Customer Insights provides businesses with the tools to centralize and analyze customer data, while Elasticsearch-Py empowers them to search and manage vast datasets efficiently.

This article explores how these technologies, often implemented by innovators like Bluethink Inc., can transform operations, optimize decision-making, and elevate customer experiences.

Dynamics 365 Customer Insights: Centralizing Customer Data

Microsoft Dynamics 365 Customer Insights is a robust Customer Data Platform (CDP) designed to consolidate data from multiple sources into unified customer profiles. It equips businesses with AI-powered analytics to personalize interactions and enhance customer engagement.

Key Features of Dynamics 365 Customer Insights

Unified Customer Profiles The platform aggregates data from CRM, ERP, marketing tools, and external sources to create a single source of truth for each customer.

AI-Driven Analytics Advanced machine learning models uncover trends, segment customers, and predict behaviors, enabling data-driven decisions.

Customizable Insights Tailor dashboards and reports to meet the specific needs of sales, marketing, and customer support teams.

Real-Time Updates Customer profiles are dynamically updated, ensuring teams have the most accurate information at their fingertips.

Benefits for Businesses

Personalized Customer Experiences Leverage detailed profiles to deliver targeted offers, tailored recommendations, and relevant communication.

Proactive Engagement Predict churn risks, identify upselling opportunities, and optimize customer journeys using AI insights.

Operational Efficiency Eliminate data silos and align cross-departmental strategies through centralized data management.

Use Case

A retail company using Dynamics 365 Customer Insights can analyze purchasing trends to segment customers. High-value shoppers might receive exclusive loyalty rewards, while occasional buyers are targeted with personalized offers to boost engagement.

Elasticsearch-Py: Managing and Searching Large Datasets

Elasticsearch is a powerful, open-source search and analytics engine designed to handle large datasets efficiently. Elasticsearch-Py, the official Python client for Elasticsearch, simplifies integration and enables developers to build advanced search functionalities with ease.

Why Use Elasticsearch-Py?

Efficient Data Handling Elasticsearch-Py provides a Pythonic way to index, search, and analyze large datasets, ensuring optimal performance.

Advanced Query Capabilities Developers can construct complex queries for filtering, sorting, and aggregating data using JSON syntax.

Real-Time Search Elasticsearch delivers near-instant search results, even for massive datasets.

Scalability and Reliability Its distributed architecture ensures high availability and fault tolerance, making it ideal for large-scale applications.

Getting Started with Elasticsearch-Py

Install Elasticsearch-Py Use pip to install the library:

Connect to Elasticsearch Establish a connection to your Elasticsearch instance:

Index Data Add documents to your Elasticsearch index for future searches.

Search Data Query your data to retrieve relevant results

Refine Queries

Use advanced search features like filters, faceted search, and aggregations to enhance results.

Use Case

An e-commerce platform can integrate Elasticsearch-Py to power its search bar. Customers can find products using filters like price, brand, or category, with results displayed in milliseconds.

Synergizing Dynamics 365 Customer Insights and Elasticsearch

When combined, Dynamics 365 Customer Insights and Elasticsearch-Py can create a powerhouse for managing customer data and delivering exceptional user experiences.

Integration Benefits

Enhanced Search for Customer Insights Elasticsearch-Py can index customer data from Dynamics 365 Customer Insights, providing advanced search and filtering capabilities for internal teams.

Personalized Recommendations Use insights from Dynamics to tailor search results for customers, boosting satisfaction and sales.

Scalable Data Management With Elasticsearch, businesses can handle expanding datasets from Dynamics, ensuring consistent performance as they grow.

Real-World Example

A travel agency uses Dynamics 365 Customer Insights to understand customer preferences and Elasticsearch-Py to provide lightning-fast searches for vacation packages. Customers receive curated results based on their past bookings, enhancing satisfaction and increasing conversion rates.

Bluethink Inc.: Empowering Businesses with Cutting-Edge Solutions

Bluethink Inc. specializes in integrating advanced technologies like Dynamics 365 Customer Insights and Elasticsearch-Py to optimize operations and elevate customer experiences.

Why Choose Bluethink Inc.?

Expertise in Integration: Seamlessly connect Dynamics 365 with Elasticsearch for a unified platform.

Tailored Solutions: Customize implementations to meet unique business needs.

Comprehensive Support: From consultation to deployment, Bluethink ensures a smooth transition to advanced systems.

Success Story

A logistics company partnered with Bluethink Inc. to centralize customer data using Dynamics 365 and implement Elasticsearch for real-time tracking and search capabilities. The result was improved operational efficiency and enhanced customer satisfaction.

Conclusion

Dynamics 365 Customer Insights and Elasticsearch-Py are transformative tools that enable businesses to harness the power of data. By unifying customer profiles and leveraging advanced search capabilities, organizations can drive personalized engagement, streamline operations, and scale effectively. With Bluethink Inc.’s expertise, businesses can seamlessly implement these technologies, unlocking their full potential and achieving long-term success in a competitive marketplace.

0 notes

Text

Price: [price_with_discount] (as of [price_update_date] - Details) [ad_1] Week 1: Foundations of Programming in C#Day 1: Boolean Expressions - Importance in handling user data.Day 2: Variable Scope & Logic Control - Understanding code blocks.Day 3: Switch Constructs - Creating branching logic.Day 4: For Loops - Iterating code.Day 5: While & Do-While Loops - Controlling execution flow.Day 6: String Built-in Methods - Extracting information.Day 7: Advanced String Methods - IndexOfAny() and LastIndexOf().Week 2: Intermediate Topics and Data HandlingDay 8: Exception Handling - Patterns and practices.Day 9: Null Safety - Nullable context in C# projects.Day 10: File Paths - Built-in methods for handling paths.Day 11: Array Helper Methods - Sort, Reverse, Clear, Resize.Day 12: Azure Functions - Serverless applications.Day 13: ConfigureAwait(false) - Avoiding deadlock in async code.Day 14: Limiting Concurrent Async Operations - Improving performance.Day 15: Lazy Initialization - Using Lazy class.Week 3: Advanced Techniques and Performance OptimizationDay 16: In-Memory Caching - Enhancing application performance.Day 17: Interlocked Class - Reducing contention in multi-threaded applications.Day 18: AggressiveInlining Attribute - Influencing JIT compiler behavior.Day 19: Stack vs. Heap Allocation - Understanding memory usage.Day 20: Task vs. ValueTask - Optimizing resource usage in async code.Day 21: StringComparison - Efficient string comparison.Day 22: Array Pool - Reducing garbage collection cycles.Day 23: Span over Arrays - Optimizing memory manipulation.Day 24: Avoiding Exceptions in Flow Control - Enhancing readability and performance.Day 25: Exception Filters - Improving readability.Week 4: Mastering C# and .NETDay 26: Loop Unrolling - Enhancing loop performance.Day 27: Query vs. Method Syntax - Writing LINQ queries.Day 28: Stackalloc - Using stack memory for performance.Day 29: Generics & Custom Interfaces - Avoiding unnecessary boxing.Day 30: XML vs. JSON Serialization - Improving efficiency and effectiveness. ASIN : B0DC12RQYJ Publisher : Independently Published (1 August 2024) Language : English Paperback : 80 pages ISBN-13 : 979-8334726963 Item Weight : 118 g Dimensions : 15.24 x 0.46 x 22.86 cm [ad_2]

0 notes

Text

Technical Issues with e-Invoice Portals: Causes and Fixes

Another enhancement of tax compliance in India was introduced by the e-Invoice system that substantially optimizes the invoicing system and enhances transparency. It has reduced the extent of manual input and errors, and has also made a positive impact in the overall administration of invoices and especially the monitoring of compliance with GST. But, organizations encounter various technical issues with e-Invoice portals including slow response time, system crashes or freezes, and sometimes difficulties in integrating with other business applications.

These types of problems can be time consuming, interfere with the normal flow of business, and most of all create annoyance. They commonly result from high levels of traffic on the portal, system constraints or inexperience in implementing the digital support structure. In the light of these challenges, appropriate measures that organizations should take include; enhancing it systems, training employees, and applying GST compatible software for integration. Updates and enhanced capability from the government also lessen interruptions, as can redesigns from the external environment. If properly implemented these barriers can be breached and the full advantages of the e-Invoice system realised for business.

Common Technical Issues with e-Invoice Portals

Portal Downtime:

The downtime show due to server overload, especially during peak filing time like month-end or tax deadlines.

Due to the normal planned workouts or technical glitches, sometimes the portal may become a little inaccessible.

Slow Portal Performance:

Traffic volumes during business hours flood the portal, making it challenging to generate, verify, or download invoices promptly.

Inefficient tuning of your system can, however, worsen these latencies to the detriment of your user, making it challenging to generate, verify, or download invoices promptly.

Login Issues:

It is not unusual for the user to have problems with their login credentials, passwords, expired passwords, or two-factor authentication issues.

System failures may also interfere with the ability to reset passwords or access specific accounts they hold, including expired passwords or two-factor authentication failures.

API Integration Failures:

Companies that have integrated their ERP or accounting software with the e-Invoice portal may encounter connectivity issues, including timeouts or incorrect data transmission.

API version changes can also pose problems to integration, always necessitating further debugging.

Error Codes During Submission:

Specific errors come up from invoices, and they include "GSTIN not valid," "Invoice date mismatch," "Duplicate IRN," and "Item details missing." Such mistakes are often caused by differences in data representation, template non-conformity with portal standards, and they frequently occur during invoice uploads.

These errors are typically caused by variations in the data presented or partial adherence to the requirements of the portal.

Data Upload Problems:

Occasionally, there is a failure in JSON data uploads because of poor format or missing one or more mandatory fields or the file size is too big to be handled by the system. This triggers failed uploads, corrupt or incompatible files, as well as missing records regarding invoices, which may only be partially created if the file size is too large to be processed by the system.

Incompatible Another problematic could be incompatible or corrupt files may be in uploading documents or queried records that have to do with invoices may not be fully constructed.

Security Concerns:

Some of the problematic that can arose from having spoofing links that resemble the official e-Invoice link include.

Information prompts that include messages that relate to the navigation of web browsers and lead to insecure connections or expired SSL certificates also dissuades the users from visiting the portal.

Inaccurate Validation Rules:

The most common issue occurs when the validation rules in the portal fail to match actual GST regulations, causing correct invoices to be rejected.

Incomplete Documentation and Support:

This then means that users cannot get enough technical documentations or else have been provided with insufficient notes whenever there are faults, it takes time to work on them.

Lack of, or even worse, delayed customer service, or slow methods of addressing a customer’s concern just introduces more frustration.

Session Expiry Issues:

Short session timeouts disrupt productivity by forcing repeated logins, wasting time, and interrupting workflow.

Causes Behind These Issues

High User Traffic:

At points like end of the month or before tax due dates more users visit the portal, in the process they put pressure on the servers by slowing them or pausing them.

This can be made worse by a lack of available server space or no possibility of load balancing.

System Maintenance and Updates:

Scheduled It may get temporarily locked during scheduled maintenance, which may include updates, patches, or other changes.

Frequent updates or back-end alterations can result in functionality problems or undesirable bugs that interfere with operations during core tasks.

Outdated Browsers or Software:

The major problems are as follows: Using older versions of browsers or incompatible operating systems on a computer makes it impossible for key elements, such as form submission or invoice creation, to function properly.

Sparing it rarely may lead to insecure environment or maybe the user will miss the opportunities introduced in that portal.

Improper Data Entry:

Incorrect or misplaced GSTIN, wrong HSN Codes, wrong invoice dates or section numbers can cause failure while furnishing statement or display error messages.

Misformatted JSON files or, worse, JSON files that do not include mandatory fields cause rejection or validation errors when uploading invoices.

Technical Glitches:

There may be a temporary stoppage of service due to back-end errors encountered either in the portal or the API.

These issues may include timeouts, data synchronization problems, or improper error management, all of which affect the normal functioning of the portal.

Connectivity Problems:

The exchange rate can also impact the cost of consumables used for entering data into the e-Invoice portal.

At the same time, while using the network, especially in areas where broadband connection is not very stable, users will often come across certain rate limits, which make the uploads partial, the waiting time rather long and the submissions fail.

User Training and Preparedness:

Lapses, arising from inadequate training or prior experience with the e-Invoice portal, may lead to misunderstandings of error codes or incorrect data being entered in wrong fields.

Failure to understand what is expected by the system may cause issues like poor data matching or non-compliance with portal standards.

System Configuration Issues:

Issues with integration between the business's internal systems, such as ERP and accounting software, and the e-Invoice portal result in data transfer errors or format discrepancies.

Mistakes, such as an incorrect API key or wrong endpoint, can lead to an inability to generate IRNs or transmit data.

Excessive Load on Third-Party Services:

Users with third-party software solutions for creating invoices or interfacing with the e-Invoice system may be negatively affected by these services' output or availability, exacerbating the invoicing issue.

Security Concerns:

An erroneous approach to portal protection, with weak or outdated encryption methods, exposes the portal to cyberattacks and subsequent outages or compromises in system security.

Fixes and Best Practices

Monitor Portal Notifications:

Refer to the official e-Invoice portal for information on regularly scheduled services, temporary shutdowns, or updates to the systems.

Make use of the portal by setting up alerts or subscribing to the channels through which it communicates in the event of a change.

Optimize Usage Timing:

Do not handle transactions close to the end of the month or near tax season, as this will expose the portal to high traffic, leading to instability.

Decide which invoices to submit at any given time and do so during less busy periods.

Update Systems and Software:

Make sure that your browser, operating system, and integrated software match the technical requirements of the e-Invoice portal.

Frequently update these systems to ensure compatibility, better protection, and avoid technical challenges.

Verify Data Accuracy:

Cross-check all the elements of the invoice, including GSTIN, HSN codes, invoice date, and item descriptions before submitting it.

Use your ERP or accounting software to install automated validation tools to help minimize the risk of incorrect data.

Strengthen API Integrations:

Consult technical professionals to ensure interoperability between your internal system and the e-Invoice system (ERP/accounting software).

Conduct frequent testing on your system to ensure that no connectivity or data transmission issues affect invoicing.

Use Reliable Internet Connections:

When accessing the portal or submitting invoices, ensure you are connected to a stable and reliable internet connection to avoid interruptions.

Do not use public or unreliable connections that may compromise the bandwidth required by you and the portal.

Contact Support:

For recurring problems, immediately communicate with the GST helpdesk or consult tax professionals.

When seeking help to solve an error or issue, avoid providing generalized information that may cause your problem to go in circles and waste valuable resources.

Implement Security Measures:

Only log in to the e-Invoice portal from the official website link to avoid being redirected to a phishing site.

Never use a simple password for your accounts. Incorporate two-factor authentication and ensure your network security includes an encryption system to protect your data.

Prepare for System Failures:

Develop a backup plan, such as using a secondary portal or manually storing invoice data, in case of system downtimes.

Regularly back up your invoicing data to ensure business continuity during unforeseen disruptions.

Train Staff and Users:

Provide training to employees managing the invoicing process to ensure they understand the system and can troubleshoot common issues effectively.

Ensure that your team is always informed about any changes in the portal or new features that may help increase their preparedness.

Conclusion

Technical issues with e-Invoice portals are bound to occur, but with proper user awareness and corporate measures in place, these problems can be effectively managed. By analyzing these difficulties and applying the suggested solutions, interruptions can be minimized, leading to improved operations and compliance. Staying updated, using effective systems, and adhering to standard practices will ensure a smoother, more compliant invoicing process.

For organizations like The Legal Dost, where timely and efficient tax filing is crucial, implementing these strategies is essential to avoid disruptions in operations. With adequate preparation and foresight before adopting e-Invoicing, The Legal Dost and other related businesses can navigate the process smoothly, with minimal complications.

0 notes

Text

Mastering Elasticsearch Query DSL Ultimate Guide and Tutorial

=========================================================== Introduction Elasticsearch is a powerful search and analytics engine that uses the Conceptual Query Language (CQL) for building complex search queries. The Query DSL (Domain Specific Language) is a JSON-based query language that enables you to construct sophisticated queries to retrieve data from Elasticsearch indexes. Mastering the…

0 notes

Text

Integrating Address Lookup API: Step-by-Step Guide for Beginners

Address Lookup APIs offer businesses a powerful tool to verify and retrieve address data in real-time, reducing the risk of errors and improving the user experience. These APIs are particularly valuable in e-commerce, logistics, and customer service applications where accurate address information is essential. Here’s a step-by-step guide to help beginners integrate an Address Lookup API seamlessly into their systems.

1. Understanding Address Lookup API Functionality

Before integrating, it’s crucial to understand what an Address Lookup API does. This API connects with external databases to fetch validated and standardized addresses based on partial or full input. It uses auto-completion features, suggesting addresses as users type, and provides accurate, location-specific results.

2. Choose the Right API Provider

Several providers offer address lookup services, each with unique features, pricing, and regional coverage. When selecting an API, consider factors like reliability, data accuracy, response speed, ease of integration, and support for international addresses if needed. Some popular options include Google Maps API, SmartyStreets, and Loqate.

3. Obtain API Credentials

Once you've chosen a provider, sign up on their platform to get your API credentials, usually consisting of an API key or token. These credentials are necessary for authorization and tracking API usage.

4. Set Up the Development Environment

To start integrating the API, set up your development environment with the necessary programming language and libraries that support HTTP requests, as APIs typically communicate over HTTP/HTTPS.

5. Make a Basic API Request

Construct a basic API request to understand the structure and response. Most address lookup APIs accept GET requests, with parameters that include the API key, address input, and preferred settings. By running a basic test, you can see how the API responds and displays potential address matches.

6. Parse the API Response

When the API returns address suggestions, parse the response to format it into user-friendly options. Typically, responses are in JSON or XML formats. Extract the needed data fields, such as street name, postal code, city, and state, to create a clean, organized list of suggestions.

7. Implement Error Handling and Validation

Errors can occur if the API service is unavailable, if the user enters incorrect information, or if there are connectivity issues. Implement error-handling code to notify users of any problems. Also, validate address entries to ensure they meet any specific format or regional requirements.

8. Test and Optimize Integration

Once you’ve integrated the API, test it thoroughly to ensure it works seamlessly across different devices and platforms. Pay attention to response times, as this affects user experience. Some API providers offer caching options or allow for request optimization to improve speed.

9. Monitor API Usage and Costs

Most address lookup APIs charge based on the number of requests, so monitor your usage to avoid unexpected charges. Optimize your API calls by limiting requests per session or using caching to reduce redundant queries.

10. Keep Up with API Updates

API providers often update their services, offering new features or making changes to endpoints. Regularly check for updates to keep your integration running smoothly and utilize any new functionalities that enhance the user experience.

By following these steps, businesses can integrate Address Lookup APIs effectively, providing a smoother, more reliable user experience and ensuring accurate address data collection.

youtube

SITES WE SUPPORT

Mail PO Box With API – Wix

0 notes

Text

How to Set Up Postman to Call Dynamics 365 Services

Overview

A wide range of setup postman to call d365 services to allow developers and administrators to work programmatically with their data and business logic. For calling these D365 services, Postman is an excellent tool for testing and developing APIs. Your development process can be streamlined by properly configuring Postman to call D365 services, whether you're integrating third-party apps or running regular tests. You may ensure seamless and effective API interactions by following this guide, which will help you through the process of configuring Postman to interface with D365 services.

How to Set Up Postman Step-by-Step to Call D365 Services

Set up and start Postman:

Install Postman by downloading it from the official website.

For your D365 API interactions, open Postman, create a new workspace, or use an existing one.

Obtain Specifics of Authentication:

It is necessary to use OAuth 2.0 authentication in order to access D365 services. If you haven't previously, start by registering an application in Azure Active Directory (Azure AD).

Go to "Azure Active Directory" > "App registrations" on the Azure portal to register a new application.

Make a note of the Application (Client) ID and the Directory (Tenant) ID. From the "Certificates & Secrets" area, establish a client secret. For authentication, these credentials are essential.

Set up Postman's authentication:

Make a new request in Postman and choose the "Authorization" tab.

After selecting "OAuth 2.0" as the type, press "Get New Access Token."

Complete the necessary fields:

Name of Token: Assign a moniker to your token.

Type of Grant: Choose "Client Credentials."

URL for Access Token: For your tenant ID, use this URL: https://login.microsoftonline.com/oauth2/v2.0/token Client ID: From Azure AD, enter the Application (Client) ID.

Client Secret: Type in the secret you made for the client.

Format: https://.crm.dynamics.com/.default is the recommended one.

To apply the token to your request, select "Request Token" and then "Use Token."

Construct API Requests:

GET Requests: Use the GET technique to retrieve data from D365 services. To query client records, for instance:

.crm.dynamics.com/api/data/v9.0/accounts is the URL.

POST Requests: POST is used to create new records. Provide the information in the request body in JSON format. Creating a new account, for instance:

.crm.dynamics.com/api/data/v9.0/accounts is the URL.

JSON body: json

Copy the following code: {"telephone1": "123-456-7890", "name": "New Account"}

PATCH Requests: Use PATCH together with the record's ID to update already-existing records:

.crm.dynamics.com/api/data/v9.0/accounts() is the URL.

JSON body: json

Code {"telephone1": "987-654-3210"} should be copied.

DELETE Requests: Utilize DELETE together with the record's ID: .crm.dynamics.com/api/data/v9.0/accounts()

Add the parameters and headers:

In the "Headers" tab, make sure to include:

Bearer is authorized.

Application/json is the content type for POST and PATCH requests.

For filtering, sorting, or pagination in GET requests, use query parameters as necessary. As an illustration, consider this URL: https://.crm.dynamics.com/api/data/v9.0/accounts?$filter=name eq 'Contoso'

Submit Requests and Evaluate Answers:

In order to send your API queries, click "Send."

Check if the response in Postman is what you expected by looking at it. The answer will comprise status codes, headers, and body content, often in JSON format.

Deal with Errors and Issues:

For further information, look at the error message and status code if you run into problems. Authentication failures, misconfigured endpoints, or badly formatted request data are typical problems.

For information on specific error codes and troubleshooting techniques, consult the D365 API documentation.

Summary

Getting Postman to make a call A useful method for testing and maintaining your D365 integrations and API interactions is to use Dynamics 365 services. Through the configuration of Postman with required authentication credentials and D365 API endpoints, you may effectively search, create, update, and remove records. This configuration allows for smooth integration with other systems and apps in addition to supporting thorough API testing. Developing, testing, and maintaining efficient integrations will become easier with the help of Postman for D365 services, which will improve data management and operational effectiveness in your Dynamics 365 environment.

0 notes

Text

5 Essential Tips for Mastering the MEAN Stack Development

Mastering the MEAN stack (MongoDB, Express.js, Angular, and Node.js) offers up new possibilities in modern web development. This strong combination of technologies enables developers to create dynamic, robust, and scalable online applications. However, both novice and experienced engineers may find it difficult to get started with MEAN stack development. Here are five vital ideas to help you traverse this new technology stack and become a professional MEAN stack developer.

Strengthen your JavaScript skills.

The MEAN stack is built around JavaScript, which is used at all tiers of the application, including the client-side (Angular) and server-side (Node.js). To master the MEAN stack, you must have a solid understanding of JavaScript principles, including ES6+ features like arrow functions, promises, async/await, and modules. Take the time to learn more about JavaScript ideas and best practices, as these will serve as the foundation for your MEAN stack development skills.

Learn MongoDB and Mongoose.

MongoDB is a NoSQL database that stores data as flexible JSON-style documents. As a MEAN stack developer, you'll often work with MongoDB to store and retrieve data for your applications. Familiarize yourself with MongoDB's querying language, indexing, and aggregation capabilities. In addition, learn Mongoose, an attractive MongoDB object modeling tool for Node.js that offers a simple schema-based solution for modeling your application data.

Master Express.js for Server-Side Development

Express.js is a Node.js-based lightweight web framework that makes it easier to construct strong server-side apps and APIs. Learn Express.js by exploring its middleware design, routing system, request handling, and integration with other Node.js modules. Learn how to use Express.js to build RESTful APIs that interface with your MongoDB database and serve data to your Angular frontend.

Dive Deep into Angular

Angular is a robust frontend framework used in MEAN stack development to create dynamic and interactive single-page applications (SPAs). Invest time in understanding Angular principles like components, services, modules, data binding, routing, and dependency injection. Explore sophisticated features such as RxJS for handling asynchronous activities, Angular forms for validating user input, and Angular CLI for quick project construction and management.

Build full-stack projects.

The greatest method to solidify your understanding of the MEAN stack is through hands-on experience. Begin with creating full-stack projects that incorporate MongoDB, Express.js, Angular, and Node.js. Begin with basic apps and progress to more complicated tasks as you develop confidence. Implement user authentication, data persistence, real-time updates using WebSockets, and deployment to cloud platforms such as Heroku or AWS.

conclusion

The world of web development is continuously changing, and the MEAN stack is no exception. Following blogs, attending webinars, and engaging in online communities such as GitHub, Stack Overflow, and developer forums will keep you up to date on the newest breakthroughs, best practices, and tools in MEAN stack development. Engage with other developers, share your experiences, and seek advice when you meet difficulties.

By following these vital guidelines and constantly improving your skills, you'll be well on your way to mastering the MEAN stack and creating innovative web applications that use MongoDB, Express.js, Angular, and Node.js. Accept the learning process, try new ideas, and enjoy the gratifying experience of becoming a skilled MEAN stack developer.The three-month mean stack course offered by Zoople Technologies is a good option if you'd want to learn mean stack.

To read more content like this visit https://zoople.in/blog/

Visit our website https://zoople.in/

#python#programming#digital marketing#kerala#kochi#seo#artificial intelligence#software engineering#machine learning#google

1 note

·

View note

Text

Prefab Cloud Spanner And PostgreSQL: Flexible And Affordable

Prefab’s Cloud Spanner with PostgreSQL: Adaptable, dependable, and reasonably priced for any size

PostgreSQL is a fantastic OLTP database that can serve the same purposes as Redis for real-time access, MongoDB for schema flexibility, and Elastic for data that doesn’t cleanly fit into tables or SQL. It’s like having a Swiss Army knife in the world of databases. PostgreSQL manages everything with elegance, whether you need it for analytics queries or JSON storage. Its transaction integrity is likewise flawless.

NoSQL databases, such as HBase, Cassandra, and DynamoDB, are at the other end of the database spectrum. Unlike PostgreSQL’s adaptability, these databases are notoriously difficult to set up, comprehend, and work with. However, their unlimited scalability compensates for their inflexibility. NoSQL databases are the giants of web-scale databases because they can handle enormous amounts of data and rapid read/write performance.

However, is there a database that can offer both amazing scale and versatility?

It might have it both ways after its experience with Spanner.

Why use the PostgreSQL interface from Spanner?

At Prefab, Google uses dynamic logging, feature flags, and secrets management to help developers ship apps more quickly. To construct essential features, including evaluation charts, that aid in it operations, scaling, and product improvement, it employ Cloud Spanner as a data store for its customers’ setups, feature flags, and generated client telemetry.

The following are some of the main features that attracted to Spanner:

99.99% uptime by default (multi-availability zone); if you operate in many regions, you can reach up to 99.999% uptime.

Robust ACID transactions

Scaling horizontally, even for writes

Clients, queries, and schemas in PostgreSQL

To put it another way, Spanner offers the ease of use and portability that make PostgreSQL so alluring, along with the robustness and uptime of a massively replicated database on the scale of Google.

How Spanner is used in Prefab

Because Prefab’s architecture is divided into two sections, it made perfect sense for us to have a separate database for each section. This allowed us to select the most appropriate technology for the task. The two aspects of its architecture are as follows:

Using Google’s software development kits (SDKs), developers can leverage its core Prefab APIs to serve their clients.

Google Cloud clients utilize a web application to monitor and manage their app settings.

In addition to providing incredibly low latency, Google’s feature flag services must be scalable to satisfy the needs of the developers’ downstream clients. With Spanner’s support, Java and the Java virtual machine (JVM) are the ideal options for this high throughput, low latency, and high scalability sector. Although it has a much lower throughput, the user interface (UI) of its program must still enable us to provide features to its clients quickly. It uses PostgreSQL, React, and Ruby on Rails for this section of its architecture.

Spanner in operation

The backend for Google Cloud’s dynamic logging’s volume tracking is one functionality that currently makes use of Cloud Spanner. Its SDK transmits the volume for each log level and logger to Spanner after detecting log requests in its customers’ apps. Then, using the Prefab UI, Google Cloud leverages this information to assist users in determining how many log statements will be output to their log aggregator if they enable logging at different settings.

It need a table with the following shape in order to enable this capture:

CREATE TABLE logger_rollup ( id varchar(36) NOT NULL, start_at timestamptz NOT NULL, end_at timestamptz NOT NULL, project_id bigint NOT NULL, project_env_id bigint NOT NULL, logger_name text NOT NULL, trace_count bigint NOT NULL, debug_count bigint NOT NULL, info_count bigint NOT NULL, warn_count bigint NOT NULL, error_count bigint NOT NULL, fatal_count bigint NOT NULL, created_at spanner.commit_timestamp, client_id bigint, api_key_id bigint, PRIMARY KEY (project_env_id, logger_name, id) );

As clients provide the telemetry for Google Cloud’s dynamic logging, this table scales really quickly and erratically. Yes, a time series database or some clever windowing and data removal techniques might potentially be used for this. However, for the sake of this post, this is a simple method to show how Spanner aids in performance management for a table with a large amount of data.

Get 100X storage with no downtime for ⅓ of the cos

It must duplicate Prefab’s database among several zones during production. Because feature flags and dynamic configuration systems are single points of failure by design, reliability is crucial.

Here, Google adopts a belt and suspenders strategy, but its “belt” is robust with Spanner’s uptime SLA and multi-availability zone replication. You would need to treble the cost of a single instance of PostgreSQL to accomplish this. However, replication and automatic failover are included in Cloud Spanner pricing right out of the box. Additionally, you only pay for the bytes you use, and each node has a ton of storage space up to 10TB with Spanner’s latest improvements. This gives the comparison the following appearance for:

The best practice of having a database instance for each environment can become exorbitantly costly at small scales. This was a problem when I initially looked into Spanner a few years back because the least instance size was 1,000 PUs, or one node. Spanner’s scale has since been modified to scale down to less than a whole node, which makes our selection much simpler. Additionally, it allows us to scale up anytime we need to without having to restructure our apps or deal with outages.

Recent enhancements to the Google Cloud ecosystem with Spanner

When we first started using the PostgreSQL interface for Spanner, we encountered several difficulties. Nonetheless, we are thrilled that the majority of the first issues we ran into have been resolved because Google Cloud is always developing and enhancing its goods and services.

Here are a few of our favorite updates:

Query editor: Having a query editor in the Google Cloud console is quite handy as it enables us to examine and optimize any queries that perform poorly.

Key Visualizer: Understanding row keys becomes crucial when examining large-volume NoSQL databases with HBase. It can identify typical problems that lead to hotspots and examine Cloud Spanner data access trends over time with the Key Visualizer.

In brief

Although it has extensive prior experience with HBase and PostgreSQL, it is quite with its choice to use Spanner as Prefab’s preferred horizontally scalable operational database. For its requirements, it has found it to be simple to use, offering all the same scaling capabilities as HBase without the hassles of developing it yourself. It saves time and money when there are fewer possible points of failure and fewer items to manage.

Consider broadening your horizons if you’re afraid of large tables but haven’t explored options other than PostgreSQL. Spanner’s PostgreSQL interface combines the dependable and scalable nature of Cloud Spanner and Google Cloud with the portability and user-friendliness of PostgreSQL.

Start Now

Spanner is available for free for the first ninety days or for as low as $65 a month after that. Additionally, it would be delighted to establish a connection with you and would appreciate it if you could learn more about its Feature Flags, Dynamic Logging, and Secret Management, which are components of the solution built on top of Cloud Spanner.

Read more on Govindhtech.com

#Prefab#CloudSpanner#PostgreSQL#database#SQL#DynamoDB#SDK#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

1 note

·

View note

Text

Unlocking Business Potential with Dynamics 365 Customer Insights and Elasticsearch-Py

In a data-driven world, businesses that effectively leverage customer insights and manage large datasets stand out in competitive markets. Microsoft Dynamics 365 Customer Insights provides businesses with the tools to centralize and analyze customer data, while Elasticsearch-Py empowers them to search and manage vast datasets efficiently.

This article explores how these technologies, often implemented by innovators like Bluethink Inc., can transform operations, optimize decision-making, and elevate customer experiences.

Dynamics 365 Customer Insights: Centralizing Customer Data

Microsoft Dynamics 365 Customer Insights is a robust Customer Data Platform (CDP) designed to consolidate data from multiple sources into unified customer profiles. It equips businesses with AI-powered analytics to personalize interactions and enhance customer engagement.

Key Features of Dynamics 365 Customer Insights

Unified Customer Profiles The platform aggregates data from CRM, ERP, marketing tools, and external sources to create a single source of truth for each customer.

AI-Driven Analytics Advanced machine learning models uncover trends, segment customers, and predict behaviors, enabling data-driven decisions.

Customizable Insights Tailor dashboards and reports to meet the specific needs of sales, marketing, and customer support teams.

Real-Time Updates Customer profiles are dynamically updated, ensuring teams have the most accurate information at their fingertips.

Benefits for Businesses

Personalized Customer Experiences Leverage detailed profiles to deliver targeted offers, tailored recommendations, and relevant communication.

Proactive Engagement Predict churn risks, identify upselling opportunities, and optimize customer journeys using AI insights.

Operational Efficiency Eliminate data silos and align cross-departmental strategies through centralized data management.

Use Case

A retail company using Dynamics 365 Customer Insights can analyze purchasing trends to segment customers. High-value shoppers might receive exclusive loyalty rewards, while occasional buyers are targeted with personalized offers to boost engagement.

Elasticsearch-Py: Managing and Searching Large Datasets

Elasticsearch is a powerful, open-source search and analytics engine designed to handle large datasets efficiently. Elasticsearch-Py, the official Python client for Elasticsearch, simplifies integration and enables developers to build advanced search functionalities with ease.

Why Use Elasticsearch-Py?

Efficient Data Handling Elasticsearch-Py provides a Pythonic way to index, search, and analyze large datasets, ensuring optimal performance.

Advanced Query Capabilities Developers can construct complex queries for filtering, sorting, and aggregating data using JSON syntax.

Real-Time Search Elasticsearch delivers near-instant search results, even for massive datasets.

Scalability and Reliability Its distributed architecture ensures high availability and fault tolerance, making it ideal for large-scale applications.

Getting Started with Elasticsearch-Py

Install Elasticsearch-Py Use pip to install the library:

Connect to Elasticsearch Establish a connection to your Elasticsearch instance:

Index Data Add documents to your Elasticsearch index for future searches.

Search Data Query your data to retrieve relevant results

Refine Queries

Use advanced search features like filters, faceted search, and aggregations to enhance results.

Use Case

An e-commerce platform can integrate Elasticsearch-Py to power its search bar. Customers can find products using filters like price, brand, or category, with results displayed in milliseconds.

Synergizing Dynamics 365 Customer Insights and Elasticsearch

When combined, Dynamics 365 Customer Insights and Elasticsearch-Py can create a powerhouse for managing customer data and delivering exceptional user experiences.

Integration Benefits

Enhanced Search for Customer Insights Elasticsearch-Py can index customer data from Dynamics 365 Customer Insights, providing advanced search and filtering capabilities for internal teams.

Personalized Recommendations Use insights from Dynamics to tailor search results for customers, boosting satisfaction and sales.

Scalable Data Management With Elasticsearch, businesses can handle expanding datasets from Dynamics, ensuring consistent performance as they grow.

Real-World Example

A travel agency uses Dynamics 365 Customer Insights to understand customer preferences and Elasticsearch-Py to provide lightning-fast searches for vacation packages. Customers receive curated results based on their past bookings, enhancing satisfaction and increasing conversion rates.

Bluethink Inc.: Empowering Businesses with Cutting-Edge Solutions

Bluethink Inc. specializes in integrating advanced technologies like Dynamics 365 Customer Insights and Elasticsearch-Py to optimize operations and elevate customer experiences.

Why Choose Bluethink Inc.?

Expertise in Integration: Seamlessly connect Dynamics 365 with Elasticsearch for a unified platform.

Tailored Solutions: Customize implementations to meet unique business needs.

Comprehensive Support: From consultation to deployment, Bluethink ensures a smooth transition to advanced systems.

Success Story

A logistics company partnered with Bluethink Inc. to centralize customer data using Dynamics 365 and implement Elasticsearch for real-time tracking and search capabilities. The result was improved operational efficiency and enhanced customer satisfaction.

Conclusion

Dynamics 365 Customer Insights and Elasticsearch-Py are transformative tools that enable businesses to harness the power of data. By unifying customer profiles and leveraging advanced search capabilities, organizations can drive personalized engagement, streamline operations, and scale effectively. With Bluethink Inc.’s expertise, businesses can seamlessly implement these technologies, unlocking their full potential and achieving long-term success in a competitive marketplace.

0 notes

Text

Why Must You Choose Mean Stack Development For Your Next Project?

Mean stack application development requires innovative planning and forward-thinking. The main goal of Mean Stack application development is to prioritize its structure so that it meets the needs of its users and can adapt to changing conditions as needed. Developers can use repositories and libraries to design mean-stack applications that provide a simple user experience and efficient backup.

With the right tools, however, you can optimize development timing and strategic resources.

This blog emphasizes how choosing a mean stack will be a great idea for your project.

You will learn about mean stack technology and its benefits in designing an application by reading the following article: To use this tool, you will need to approach the MEAN Stack Development Company.

What Is Mean Stack Application Development?

MEAN (MongoDB, Express.js, Angular.js, and Node.js) is an open-source framework and a collection of Javascript technology. Each technology is compatible with the others and useful in designing executable and dynamic middle-stack applications.

Further, it provides developers with rapid and organized methods for prototyping MEAN-based Web apps.

A key advantage of creating MEAN stack applications lies in its language utilization. JavaScript is employed throughout all stages of MEAN stack application development.

Additionally, this is highly beneficial in improving the efficiency and continuous nature of web and app development strategies.

Further, it provides valuable insights and practical guidance to enhance the quality and efficiency of the development process, resulting in better outcomes and a more satisfying user experience.

1. MongoDB

MongoDB is a JSON query that interacts with the backend of a middle-stack application. Furthermore, MongoDB is used in many stack applications to store data. By using JavaScript in both the database and application, it does not require any transition when objects go from application to database.

Further, a large amount of data is easily managed with it thanks to its foolproof management.

Additionally, it allows you to insert files into the database without having to reload the table.

2. Express.Js

Exprеss.js is a popular web framework for Nodе.js. It streamlines the web application design process by providing a set of powerful features and tools.

Further, Express.js allows developers to build a web application with minimal code and effort. It provides robust routing, middleware support, and easy integration with other libraries.

Exprеss.js is popular for building APIs, Web servers, and single-page applications. Its flexibility and scalability make it an excellent choice for both small and large-scale projects.

3. Angular.Js

Angular.js is a Javascript framework designed by Google. It is useful for constructing dynamic Web applications.

Further, HTML can be used as a standard language and extended to create content.

Additionally, Angular is the key component of most stack applications. Angular provides a front-end framework for dynamic and interactive web applications. Angular developers build single-page applications. It also provides exceptional features and tools for complex user interface development, statistics management, and user experience management.

Overall, Angular.js plays a crucial role in medium-stack application development by providing a powerful front-end framework for building modern web applications.

4. Node.Js

Nodе.js is a critical component of the MEAN stack framework. It is the Javascript runtime environment that establishes server-side scripting, making it possible to build scalable and high-performance applications.

Nodе.js uses an event-driven,non-blocking I/O module, making it an ideal option for making real-time applications that require a constant exchange of data.

With nodе.js, developers can design sеrvеr-sidе Javascript code, which promotes code reuse and streamlines the development process. Additionally, nodе.js provides access to a vast library of open-source modules, making it easier to integrate third-party functionality into an application.

Advantages In The Mean Stack Development

One of the critical advantages of MEAN Stack is that it allows developers to use Javascript on both the client and server sides.

One of the critical advantages of MEAN Stack is that it allows developers to use JavaScript on both the client and server sides. Further, this eliminates the need to switch between different programming languages, which can save time and complexity.

The cost of MEAN stack development companies or Mеan Web application development services is lower than LAMP stacks because libraries and public repositories are used instead of in-house developers.

With Mean Stack-Based Web App Development, you can transfer your code to another platform with less effort.

MeanStack is highly scalable and is useful for building applications that handle a large volume of traffic and users.

MEAN stack is known for its rapid development capabilities due to the use of Nodе.js, which facilitates the MEAN stack application development process.

Its main advantage is that it is open source, so it uses JavaScript for all the code development and uses Node.js to make it scalable and fast.

In addition, MEANStack's components are updated frequently and available for both backend and front-end developers. MVC architecture provides quality user interfaces for backend and front-end developers.

The JavaScript modules make stack application development easier and faster.

MongoDB, an enterprise-level database framework, stores all its data in JSON format. Additionally, the MEAN stack allows JSON to be used everywhere within it.

The MeanStack has a modular architecture that helps developers add or remove modules according to their needs. Additionally, it makes the development process more flexible and efficient.

Why Should You Choose Mean Stack Application Development?

Here are a few reasons to opt for custom web app development:

1. Scalability:

MeanStack is highly scalable, making it a great option for building large, scalable applications. Further, it uses NOSQL databases like MongoDB, allowing it to scale horizontally, and adding more servers to accommodate increased traffic and data without compromising performance.

2. Agile Development:

Mean stack modular architecture facilitates agile development. Further, developers can make quick changes to applications without affecting the entire process. Additionally, it makes it easier to adapt to changing business needs.

3. Community:

As a popular technology, Mean Stack has a lot of community support from developers. Further, they provide immense support and share knowledge. Additionally, this makes it easier to find solutions to problems and get help from them.

4. One Language for Both the Client and Server Sides:

It allows developers to design MEAN stack applications in a single language, Javascript. Additionally, it eliminates the need to learn multiple frameworks, making the workflow simpler and faster.

5. Cost-Effective:

MEAN stack is a cost-effective web development technology stack as all of its components are open source and free to use. Further, it eliminates the need for expensive licenses or subscriptions. Thus, it is a great option for businesses of all sizes looking to build MEAN stack applications.

6. High-Speed and Reusable:

MeanStack application development is known for its high speed and reusability. Further, it allows developers to reuse codes and components across different projects. Additionally, it is a great option for businesses looking to design scalable and responsive Web applications.

7. Facilitates Isomorphic Coding:

MEAN stack application development is advantageous for isomorphic coding. Developers use a uniform language (javascript) in both front-end and back-end development. Further, it enables the sharing of codes between clients and suppliers, leading to more efficient and effective products.

8. Flexibility:

Mean stack web development offers a high level of flexibility due to the use of Javascript throughout the entire development process. Further, the application’s front and back sides can switch quickly. They can make changes to database schemas without having to learn new technology frameworks and languages. Additionally, its modular structure simplifies easy customisation and scalability.

Why do companies use Mean Stack technology?

Many prominent companies use MEAN stack application development for their businesses, including Walmart, Ubеr, LinkеdIn, Accenture, UNIQLO, Fivеrr, Sisеnsе, AngularClass, and many more, because it offers full-stack solutions for web development using open source technologies.

Further, it offers a robust and scalable platform for building dynamic Web applications.

MEAN stack application development provides a standard development process, reduced development time and cost, and flexibility for building complex applications.

Additionally, it uses open-source technologies, making MEAN stack an affordable and accessible option for companies of all sizes.

Conclusion

The MEAN stack application development process continues to evolve and improve as developers find new ways to optimize its capabilities.

With a focus on modern applications, the MEAN stack allows for greater flexibility, scalability, and ease of use, making it an ideal choice for custom dynamic app development. Developers can take advantage of the latest technologies and technologies to create more powerful and efficient applications.

0 notes

Text

CHEAT SHEET TO

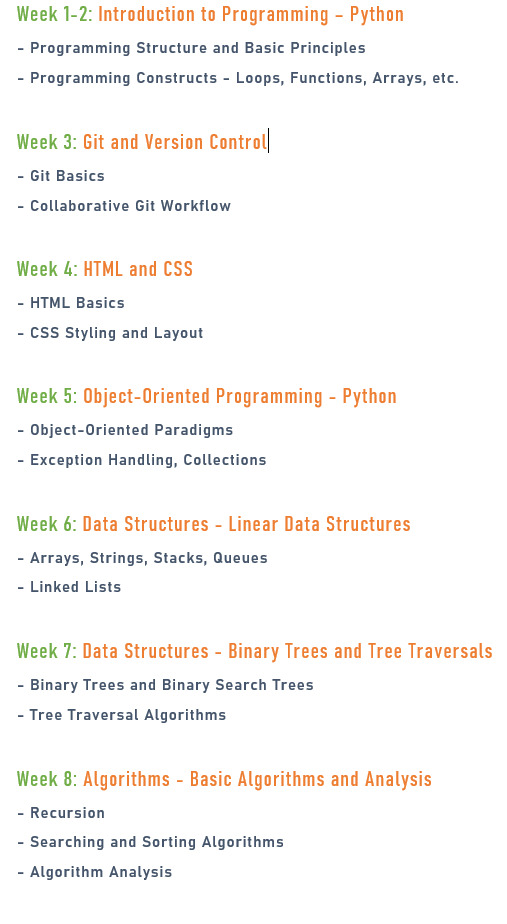

FULL STACK SOFTWARE DEVELOPMENT (IN 21 WEEKS)

Below is a more extended schedule to cover all the topics we've listed for approximately 2-3 months. This schedule assumes spending a few days on each major topic and sub-topic for a comprehensive understanding. Adjust the pace based on your comfort and learning progress:

Week 1-2: Introduction to Programming – Python

- Programming Structure and Basic Principles

- Programming Constructs - Loops, Functions, Arrays, etc.

Week 3: Git and Version Control

- Git Basics

- Collaborative Git Workflow

Week 4: HTML and CSS

- HTML Basics

- CSS Styling and Layout

Week 5: Object-Oriented Programming - Python

- Object-Oriented Paradigms

- Exception Handling, Collections

Week 6: Data Structures - Linear Data Structures

- Arrays, Strings, Stacks, Queues

- Linked Lists

Week 7: Data Structures - Binary Trees and Tree Traversals

- Binary Trees and Binary Search Trees

- Tree Traversal Algorithms

Week 8: Algorithms - Basic Algorithms and Analysis

- Recursion

- Searching and Sorting Algorithms

- Algorithm Analysis

Week 9: Algorithms - Advanced Algorithms and Evaluation

- Greedy Algorithms

- Graph Algorithms

- Dynamic Programming

- Hashing

Week 10: Database Design & Systems

- Data Models

- SQL Queries

- Database Normalization

- JDBC

Week 11-12: Server-Side Development & Frameworks

- Spring MVC Architecture

- Backend Development with Spring Boot

- ORM & Hibernate

- REST APIs

Week 13: Front End Development - HTML & CSS (Review)

- HTML & CSS Interaction

- Advanced CSS Techniques

Week 14-15: Front-End Development - JavaScript

- JavaScript Fundamentals

- DOM Manipulation

- JSON, AJAX, Event Handling

Week 16: JavaScript Frameworks - React

- Introduction to React

- React Router

- Building Components and SPAs

Week 17: Linux Essentials

- Introduction to Linux OS

- File Structure

- Basic Shell Scripting

Week 18: Cloud Foundations & Containers

- Cloud Service Models and Deployment Models

- Virtual Machines vs. Containers

- Introduction to Containers (Docker)

Week 19-20: AWS Core and Advanced Services

- AWS Organization & IAM

- Compute, Storage, Network

- Database Services (RDS, DynamoDB)

- PaaS - Elastic BeanStalk, CaaS - Elastic Container Service

- Monitoring & Logging - AWS CloudWatch, CloudTrail

- Notifications - SNS, SES, Billing & Account Management

Week 21: DevOps on AWS

- Continuous Integration and Continuous Deployment

- Deployment Pipeline (e.g., AWS CodePipeline, CodeCommit, CodeBuild, CodeDeploy)

- Infrastructure as Code (Terraform, CloudFormation)

Please adjust the schedule based on your individual learning pace and availability. Additionally, feel free to spend more time on topics that particularly interest you or align with your career goals. Practical projects and hands-on exercises will greatly enhance your understanding of these topics.

0 notes

Text

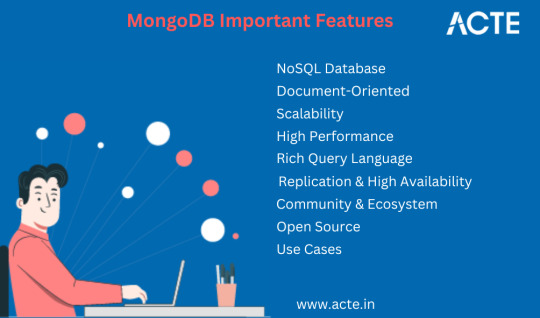

"Mastering MongoDB: Comprehensive Course for NoSQL Database Mastery"

I'm thrilled to address your query concerning MongoDB. My life underwent a transformation upon acquainting myself with MongoDB. MongoDB stands out as one of the most renowned and widely employed database management systems.

MongoDB is a prevalent open-source NoSQL database management system meticulously crafted for the storage, retrieval, and administration of substantial data volumes in a manner that's both flexible and capable of high-performance scalability. Its name, "MongoDB," is a portmanteau of "humongous," underscoring its proficiency in handling immense data sets.

Here are several key attributes and reasons that underscore the importance of MongoDB.

1. NoSQL Database: MongoDB belongs to the NoSQL (Not Only SQL) database family, distinguishing itself from traditional relational databases by its schema-less nature, which obviates the need for predefined data schemas. This flexibility accommodates the storage of unstructured or semi-structured data.

2. Document-Oriented: MongoDB is categorized as a document-oriented database, given its storage of data in a JSON-like BSON (Binary JSON) format. Each piece of data takes the form of a document, capable of containing nested arrays and subdocuments, making it ideal for housing intricate and hierarchical data structures.

3. Scalability: MongoDB is engineered for horizontal scaling, enabling the addition of more servers to manage burgeoning data volumes and increased user traffic. This scalability feature caters effectively to applications with rapidly expanding data requirements, such as those found in the realms of web and mobile technologies.

4. High Performance: MongoDB prioritizes high performance, leveraging internal memory-mapped storage engines for expedited data access. It further supports diverse indexing techniques to optimize query execution speed.