#Mysql drop database


Structured Query Language (SQL): A Comprehensive Guide

Structured Query Language, commonly called SQL (pronounced "ess-cue-ell" or sometimes "sequel"), is the standard language for managing and manipulating relational databases. Developed in the early 1970s by IBM researchers Donald D. Chamberlin and Raymond F. Boyce, SQL has since become the dominant language for database systems around the world.

Structured query language commands with examples

Today, virtually every major relational database management system (RDBMS), including MySQL, PostgreSQL, Oracle, SQL Server, and SQLite, uses SQL as its core query language.

What is SQL?

SQL is a domain-specific language used to:

Retrieve data from a database.

Insert, update, and delete data.

Create and modify database structures (tables, indexes, views).

Manage access permissions and security.

Perform data analytics and reporting.

In simple terms, SQL lets users communicate with databases to store and retrieve structured data.

Key Characteristics of SQL

Declarative Language: SQL focuses on what to do, not how to do it. For instance, when you write SELECT * FROM users, you don't need to tell the database how to fetch the data; it figures that out.

Standardized: SQL has been standardized by organizations such as ANSI and ISO, with most database systems implementing the core language and adding their own extensions.

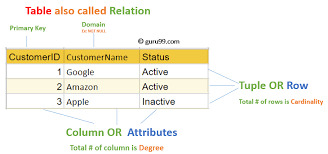

Relational Model-Based: SQL is designed to work with tables (also called relations), in which data is organized in rows and columns.

Core Components of SQL

SQL can be broken down into several main categories of commands, each with a specific purpose.

1. Data Definition Language (DDL)

DDL commands are used to define or modify the structure of database objects such as tables, schemas, and indexes.

Common DDL commands:

CREATE: To create a new table or database.

ALTER: To modify an existing table (for example, to add or remove columns).

DROP: To delete a table or database.

TRUNCATE: To delete all rows from a table but keep its structure.

Example:

CREATE TABLE employees (
    id INT PRIMARY KEY,
    name VARCHAR(100),
    salary DECIMAL(10,2)
);
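To see CREATE, ALTER, and DROP working together, here is a minimal sketch in Python using the standard-library `sqlite3` module. It assumes an in-memory SQLite database as a stand-in for any RDBMS, and the `dept_id` column is a hypothetical addition for illustration. SQLite has no TRUNCATE statement, so that command is not shown.

```python
import sqlite3

# In-memory SQLite database, used here only so the sketch runs anywhere.
conn = sqlite3.connect(":memory:")

# CREATE: define a new table.
conn.execute("""
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name VARCHAR(100),
        salary DECIMAL(10,2)
    )
""")

# ALTER: add a (hypothetical) column to the existing table.
conn.execute("ALTER TABLE employees ADD COLUMN dept_id INTEGER")
columns = [row[1] for row in conn.execute("PRAGMA table_info(employees)")]

# DROP: remove the table together with all of its data.
conn.execute("DROP TABLE employees")
remaining = conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"
).fetchall()
conn.close()
```

After the script runs, `columns` holds the column names as they were after the ALTER, and `remaining` is empty because the table no longer exists.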

2. Data Manipulation Language (DML)

DML commands are used for data operations such as inserting, updating, or deleting records.

Common DML commands:

SELECT: Retrieve data from one or more tables.

INSERT: Add new records.

UPDATE: Modify existing records.

DELETE: Remove records.

Example:

INSERT INTO employees (id, name, salary)
VALUES (1, 'Alice Johnson', 75000.00);
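The four DML commands can be run end to end with a small sketch like the following. It assumes an `employees` table with `id`, `name`, and `salary` columns in an in-memory SQLite database; the second employee is made-up sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")

# INSERT: add new records (placeholders keep values separate from the SQL text).
conn.executemany(
    "INSERT INTO employees (id, name, salary) VALUES (?, ?, ?)",
    [(1, "Alice Johnson", 75000.00), (2, "Bob Smith", 52000.00)],
)

# UPDATE: modify an existing record.
conn.execute("UPDATE employees SET salary = ? WHERE id = ?", (80000.00, 1))

# DELETE: remove a record.
conn.execute("DELETE FROM employees WHERE id = ?", (2,))

# SELECT: read back what is left.
rows = conn.execute("SELECT id, name, salary FROM employees").fetchall()
conn.close()
```

Only the updated first record remains in `rows` at the end.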

3. Data Query Language (DQL)

Some experts separate SELECT from DML and treat it as its own category: DQL.

Example:

SELECT name, salary FROM employees WHERE salary > 60000;

This command retrieves names and salaries of employees earning more than 60,000.

4. Data Control Language (DCL)

DCL commands handle permissions and access control.

Common DCL commands:

GRANT: Give access to users.

REVOKE: Remove access.

Example:

GRANT SELECT, INSERT ON employees TO john_doe;

5. Transaction Control Language (TCL)

TCL commands manage transactions to ensure data integrity.

Common TCL commands:

BEGIN: Start a transaction.

COMMIT: Save changes.

ROLLBACK: Undo changes.

SAVEPOINT: Set a savepoint inside a transaction.

Example:

BEGIN;
UPDATE employees SET salary = salary * 1.10;
COMMIT;
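The interplay of BEGIN, SAVEPOINT, ROLLBACK, and COMMIT is easier to follow when run. The sketch below assumes SQLite through Python's `sqlite3` module; the savepoint name `before_cut` and the sample row are hypothetical.

```python
import sqlite3

# isolation_level=None puts the sqlite3 module in autocommit mode, so the
# BEGIN / COMMIT / ROLLBACK statements below are sent exactly as written.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice Johnson', 75000.00)")

conn.execute("BEGIN")
conn.execute("UPDATE employees SET salary = 80000.00 WHERE id = 1")
conn.execute("SAVEPOINT before_cut")
conn.execute("UPDATE employees SET salary = 0 WHERE id = 1")  # a mistake
conn.execute("ROLLBACK TO SAVEPOINT before_cut")  # undo only the mistake
conn.execute("COMMIT")  # the first update is made permanent

salary = conn.execute("SELECT salary FROM employees WHERE id = 1").fetchone()[0]
conn.close()
```

Rolling back to the savepoint discards only the second update, so the committed salary is the value set by the first one.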

SQL Clauses and Syntax Elements

WHERE: Filters rows.

ORDER BY: Sorts results.

GROUP BY: Groups rows that share a value.

HAVING: Filters groups.

JOIN: Combines rows from two or more tables.
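The clauses above are evaluated in a fixed logical order, which a single query can illustrate. This is a sketch with made-up sample data and a hypothetical `dept` column, run against in-memory SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [
        ("Alice", "Engineering", 90000),
        ("Bob", "Engineering", 70000),
        ("Carol", "Sales", 50000),
        ("Dave", "Sales", 40000),
        ("Eve", "Support", 30000),
    ],
)

rows = conn.execute("""
    SELECT dept, AVG(salary) AS avg_salary
    FROM employees
    WHERE salary > 35000          -- filter rows before grouping
    GROUP BY dept                 -- one group per department
    HAVING AVG(salary) > 50000    -- filter the groups themselves
    ORDER BY avg_salary DESC      -- sort the final result
""").fetchall()
conn.close()
```

WHERE removes the Support row, GROUP BY leaves Engineering (average 80000) and Sales (average 45000), and HAVING then keeps only Engineering.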

Example with JOIN:

SELECT employees.name, departments.name
FROM employees
JOIN departments ON employees.dept_id = departments.id;

Types of Joins in SQL

INNER JOIN: Returns records with matching values in both tables.

LEFT JOIN: Returns all records from the left table, and the matched records from the right.

RIGHT JOIN: Opposite of LEFT JOIN.

FULL JOIN: Returns all records when there is a match in either table.

SELF JOIN: Joins a table to itself.
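The difference between INNER JOIN and LEFT JOIN shows up as soon as one row has no match. The sketch below uses hypothetical sample data in in-memory SQLite, where one employee has no department.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE employees (name TEXT, dept_id INTEGER)")
conn.executemany("INSERT INTO departments VALUES (?, ?)", [(1, "Engineering"), (2, "Sales")])
conn.executemany("INSERT INTO employees VALUES (?, ?)", [("Alice", 1), ("Bob", None)])

# Same query text, only the join type changes.
query = """
    SELECT employees.name, departments.name
    FROM employees
    {join} departments ON employees.dept_id = departments.id
    ORDER BY employees.name
"""
inner_rows = conn.execute(query.format(join="INNER JOIN")).fetchall()
left_rows = conn.execute(query.format(join="LEFT JOIN")).fetchall()
conn.close()
```

`inner_rows` contains only Alice, while `left_rows` also keeps Bob with `None` in place of the missing department name.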

Subqueries and Nested Queries

A subquery is a query nested inside another query.

Example:

SELECT name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);

This finds employees who earn more than the average salary.
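Running the subquery against a few sample rows makes the two evaluation steps concrete. This sketch assumes an `employees` table with `name` and `salary` columns; the three rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Alice", 90000), ("Bob", 60000), ("Carol", 30000)],
)

# The inner query computes one value (the average, 60000 here);
# the outer query compares each row against it.
names = [
    row[0]
    for row in conn.execute(
        "SELECT name FROM employees "
        "WHERE salary > (SELECT AVG(salary) FROM employees)"
    )
]
conn.close()
```

Bob earns exactly the average, so only Alice satisfies the strict `>` comparison.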

Functions in SQL

SQL includes built-in functions for performing calculations and formatting:

Aggregate Functions: SUM(), AVG(), COUNT(), MAX(), MIN()

String Functions: UPPER(), LOWER(), CONCAT()

Date Functions: NOW(), CURDATE(), DATEADD() (names and availability vary by database system)

Conversion Functions: CAST(), CONVERT()

Indexes in SQL

An index is used to speed up searches.

Example:

CREATE INDEX idx_name ON employees(name);

Indexes help improve the performance of queries on large datasets.
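One way to check whether an index is actually used is to ask the database for its query plan. The sketch below uses SQLite's `EXPLAIN QUERY PLAN`; other systems expose a similar `EXPLAIN` command with different output. The helper `plan` is a hypothetical name introduced here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
lookup = "SELECT id, salary FROM employees WHERE name = 'Alice Johnson'"


def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable step.
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(lookup)  # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_name ON employees(name)")
plan_after = plan(lookup)   # the planner can now search via idx_name
conn.close()
```

The exact wording of the plan text differs between SQLite versions, so it is safer to look for the index name in it than to compare whole strings.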

Views in SQL

A view is a virtual table defined by a query.

Example:

CREATE VIEW high_earners AS
SELECT name, salary FROM employees WHERE salary > 80000;

Views are beneficial for:

Security (hide certain columns)

Simplifying complex queries

Reusability
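A short sketch can show two of these points: a view exposes only the columns it selects, and it always reflects the current table contents. It assumes in-memory SQLite and made-up rows, including a hypothetical `ssn` column that the view hides.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL, ssn TEXT)")
conn.execute("INSERT INTO employees VALUES (1, 'Alice', 95000, '000-00-0001')")
conn.execute("INSERT INTO employees VALUES (2, 'Bob', 60000, '000-00-0002')")

conn.execute("""
    CREATE VIEW high_earners AS
    SELECT name, salary FROM employees WHERE salary > 80000
""")
before = conn.execute("SELECT * FROM high_earners").fetchall()

# The view stores the query, not the data, so later changes show up in it.
conn.execute("UPDATE employees SET salary = 85000 WHERE id = 2")
after = conn.execute("SELECT * FROM high_earners ORDER BY name").fetchall()
conn.close()
```

Neither result includes the `id` or `ssn` columns, and Bob appears in `after` once his salary crosses the threshold.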

Normalization in SQL

Normalization is the process of organizing data to reduce redundancy. It involves breaking a database into multiple related tables and defining foreign keys to link them.

1NF: No repeating groups.

2NF: No partial dependency.

3NF: No transitive dependency.
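As an illustration of the idea, the sketch below stores each department's location once in its own table and links employees to it with a foreign key, instead of repeating the location on every employee row. Table and column names are hypothetical, and SQLite is used as a stand-in.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT, location TEXT)")
conn.execute("""
    CREATE TABLE employees (
        id INTEGER PRIMARY KEY,
        name TEXT,
        dept_id INTEGER REFERENCES departments(id)
    )
""")
conn.execute("INSERT INTO departments VALUES (1, 'Engineering', 'Berlin')")
conn.executemany("INSERT INTO employees VALUES (?, ?, ?)", [(1, "Alice", 1), (2, "Bob", 1)])

# The foreign key rejects a row that points at a department that does not exist.
try:
    conn.execute("INSERT INTO employees VALUES (3, 'Carol', 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

locations = conn.execute("""
    SELECT employees.name, departments.location
    FROM employees JOIN departments ON employees.dept_id = departments.id
    ORDER BY employees.id
""").fetchall()
conn.close()
```

Because the location lives in one row of `departments`, changing it there updates what every employee in that department joins to.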

SQL in Real-World Applications

Web Development: Most web apps use SQL to manage users, sessions, orders, and content.

Data Analysis: SQL is widely used in data analytics platforms like Power BI, Tableau, and even Excel (through Power Query).

Finance and Banking: SQL handles transaction logs, audit trails, and reporting systems.

Healthcare: Managing patient data, treatment records, and billing.

Retail: Inventory systems, sales analysis, and customer data.

Government and Research: For storing and querying large datasets.

Popular SQL Database Systems

MySQL: Open-source and widely used in web apps.

PostgreSQL: Advanced features and standards compliance.

Oracle DB: Commercial, highly scalable, enterprise-grade.

SQL Server: Microsoft's relational database.

SQLite: Lightweight, file-based database used in mobile and desktop apps.

Limitations of SQL

SQL can be verbose and complicated for certain operations.

Not ideal for unstructured data (NoSQL databases like MongoDB are better suited).

Vendor-specific extensions can reduce portability.


Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau has been adopted across many industries to make data science more impactful and accessible. Here are some real-life scenarios:

A. Analytics for Health Care

Hospitals and research institutions use Tableau to:

Monitor patient recovery rates and predict outbreaks of diseases

Analyze hospital occupancy and resource allocation

Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.

#data science course#top data science course online#top data science institute online#artificial intelligence course#deepseek#tableau


Backend update

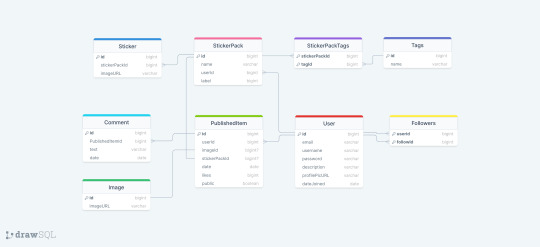

Had the most horrible time working with Sequelize today! As I usually do whenever I work with Sequelize! Sequelize is an SQL ORM - instead of writing raw SQL, an ORM gives you the option to code it in a way that looks much more like OOP, which is arguably simpler if you are used to programming that way. So to explain my project a little bit, it's a full stack web app - an online photo editor for dragging and dropping stickers onto a canvas/picture. Here is the diagram.

I'm doing it with Next which I've never used before, I only did vanilla js, React and a lil bit of Angular before. The architecture of a Next project immediately messed me up so much, it's way different from the ones I've used before and I often got lost in the folders and where to put stuff properly (this is a huge thing to me because I always want it to be organized by the industry standard and I had no reference Next projects from any previous jobs/college so it got really overwhelming really soon :/). The next problem was setting up my MySQL database with Sequelize because I know from my past experience that Sequelize is very sensitive to where you position certain files/functions and what order they are in. I made all the models (Sequelize equivalent of tables) and when it was time to sync, it would sync only two models out of nine. I figured it was because the other ones weren't called anywhere. Btw a fun fact

So I imported them to the index.js file I made in my database folder. It was reporting a "db.define() is not a function" error now. That was weird because it didn't report that for the first two tables that went through. To make a really long story short - because I was used to a server/client architecture, I didn't properly run the index.js file, but just did an "npm run dev" and was counting on all of the files to run in the order I am used to, that was not the case tho. After about an hour, I figured I just needed to run index.js solo first. The only reason those first two tables went through in the beginning is because of the test api calls I made to them in a separate file :I I cannot wait to finish this project, it is for my bachelors thesis or whatever it's called...wish me luck to finish this by 1.9. XD

Also if you have any questions about any of the technologies I used here, feel free to message me c: <3 Bye!

#codeblr#code#programming#webdevelopment#mysql#nextjs#sequelize#full stack web development#fullstackdeveloper#student#computer science#women in stem#backend#studyblr


TYPO3 v13 Roadmap Announcement - What Awaits?

Hello there, lovely TYPO3 enthusiasts! In the previous article, we explored the TYPO3 roadmap for version 13. Doesn't it sound exciting? The announcement is just about to drop, and TYPO3 has four more upcoming releases scheduled for 2024. Want to know more? Keep reading this insightful article.

If there's one thing to highlight about the TYPO3 v13 release series, it would be characterized as "reducing repetitive tasks and streamlining TYPO3 user experience in the backend." It's not just about enhancing the TYPO3 user experience; the main goal of TYPO3 v13 is to improve the TYPO3 backend user experience and streamline recurring actions. This involves introducing frontend presets, improving backend usability, enhancing external system integration, and much more.

In this blog, we will explore the upcoming plans for TYPO3 v13, providing comprehensive insights into what's in store for users in TYPO3 v13.

TYPO3 v13 Roadmap Goals - Making Things Easier for Everyone

Frontend Rendering Presets

Based on feedback from the TYPO3 community and numerous discussions at the TYPO3 Conference 2023, TYPO3 has elevated its standards to announce TYPO3 v13. During these conversations, TYPO3 discovered that many users face repetitive challenges in performing certain actions. A primary example is the integrator's focus on creating sites, establishing backend layouts, configuring user permissions, and preparing a new empty TYPO3 instance for TYPO3 editors.

To address this issue, TYPO3 plans to simplify these processes and minimize the daily efforts required by users in TYPO3 v13. TYPO3 also aims to make these processes configurable and duplicatable for integrators. Additionally, the implementation of import and export functions, along with an improved duplication process for content options, is on the agenda.

Enhancing Backend User Experience and Accessibility

TYPO3 continuously works on updating the TYPO3 backend with the latest technologies and implementing user requirements in the best possible way. TYPO3 v12 LTS is already equipped with extensive features in this regard. To meet and exceed the strict requirements of the public sector, TYPO3 will continue to implement new modernized techniques in the backend.

Upcoming Goals of TYPO3 v13

The upcoming plans for TYPO3 v13 include external system integrations, simplified workspaces, and improvements in image rendering and content blocks.

Required Database Compatibility

MySQL version 8.0.17 or higher

MariaDB version 10.4.3 or higher

PostgreSQL version 10.0 or higher

SQLite version 3.8.3 or higher
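A quick way to check a server against these minimums is to compare version numbers as tuples rather than as strings. The sketch below is only an illustration: the function names are hypothetical, and it assumes plain dotted version strings such as "8.0.17" without vendor suffixes.

```python
# Minimum versions as listed above.
MINIMUM_VERSIONS = {
    "mysql": (8, 0, 17),
    "mariadb": (10, 4, 3),
    "postgresql": (10, 0),
    "sqlite": (3, 8, 3),
}


def parse_version(text):
    """Turn '8.0.17' into (8, 0, 17) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def is_supported(engine, version):
    """Return True if the given engine version meets the listed minimum."""
    return parse_version(version) >= MINIMUM_VERSIONS[engine.lower()]
```

Comparing tuples matters because a string comparison would rank "8.0.9" above "8.0.17"; with tuples, `is_supported("mysql", "8.0.9")` is correctly false.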

Support Timeline: TYPO3 will support each TYPO3 sprint release, including TYPO3 v13.0 and TYPO3 v13.3, until the next minor version is published. TYPO3 v13 LTS will receive fixes until April 30, 2026.

Upcoming Plans for TYPO3 v13:

We will keep an eye on the release of TYPO3 v13 in January 2024. Initially, the release will follow new standard practices, including the removal of outdated components and APIs. See you soon, and happy TYPO3 reading!


Data Engineering Concepts, Tools, and Projects

Organizations around the world hold large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data ready for analysis. Data engineering is the process of developing, operating, and maintaining software systems that collect, analyze, and store an organization's data. In modern data analytics, data engineers build data pipelines, which form the underlying infrastructure.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL and PostgreSQL, and with NoSQL databases such as MongoDB or Apache Cassandra. Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques, is also valuable.

Data engineering tools recommendations:

Data engineering uses a variety of languages and tools to accomplish its objectives. These tools allow data engineers to carry out tasks like creating pipelines and algorithms in a much easier and more effective manner.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

Amazon Redshift is one of the most popular tools used for business purposes and provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make it easier to interpret results. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions for data engineering tasks.

2. BigQuery: Just like Redshift, BigQuery is a cloud data warehouse, fully managed by Google. It's especially favored by companies that have experience with the Google Cloud Platform. BigQuery not only scales but also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential piece of BI software, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, which is a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles is a newly released tool that assists in deploying the LookML layer, ensuring non-technical personnel have a much simpler time when using company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It also distributes work well and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning.

6. Airflow: With Airflow, workflows can be programmed and scheduled quickly and accurately, and users can keep an eye on them through the built-in UI. It is the most used workflow solution, as 25% of data teams reported using it.

7. Apache Hive: Another data warehouse project built on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. This language enables MapReduce tasks to be executed on Hadoop and is mainly used for data summarization, analysis, and querying.

8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and also makes the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake's unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science.

10. dbt: A command-line tool that uses SQL to transform data, dbt is a strong choice for data engineers and analysts. dbt streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering Projects:

Data engineering is an important process for businesses to understand and use to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they are running optimally. There are many tools available for data engineers to use in their work, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it's important to research each one thoroughly before recommending which ones should be used for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data ingested at high velocity is growing at an alarming rate. This creates challenges for companies regarding storage, analysis, and visualization.

Data Ingestion:

Data ingestion is moving data from one or more sources to a target destination for further preparation and analysis. This target is generally a data warehouse, a specialized database designed for efficient reporting.
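A minimal sketch of this source-to-target movement, using only the Python standard library: the CSV text is a hypothetical source, and an in-memory SQLite database stands in for the data warehouse. Real pipelines would add batching, error handling, and scheduling.

```python
import csv
import io
import sqlite3

# Hypothetical source: in practice this could be a file, an API, or a message queue.
source = io.StringIO("order_id,amount\n1,19.99\n2,5.00\n3,42.50\n")

# Target: an in-memory SQLite database standing in for a data warehouse.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")

# Ingest: read rows from the source, convert types, and load them into the target.
records = [(int(r["order_id"]), float(r["amount"])) for r in csv.DictReader(source)]
warehouse.executemany("INSERT INTO orders VALUES (?, ?)", records)
warehouse.commit()

# Once loaded, the data is ready for reporting queries.
row_count, total = warehouse.execute(
    "SELECT COUNT(*), SUM(amount) FROM orders"
).fetchone()
warehouse.close()
```

The type conversion step is where many ingestion problems surface first, since source systems often deliver everything as text.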

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
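As a small illustration of such checks, the sketch below validates a batch of records for missing required fields and duplicate keys. The function name, field names, and sample batch are all hypothetical; dedicated data quality tools cover far more cases.

```python
def check_quality(rows, required, unique_key):
    """Return a list of human-readable problems found in a batch of records."""
    problems = []
    seen = set()
    for index, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append(f"row {index}: missing {field}")
        # Uniqueness: the key must not repeat within the batch.
        key = row.get(unique_key)
        if key in seen:
            problems.append(f"row {index}: duplicate {unique_key}={key}")
        seen.add(key)
    return problems


batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 5.00},
]
problems = check_quality(batch, required=["order_id", "amount"], unique_key="order_id")
```

Running checks like these before loading lets a pipeline reject or quarantine a bad batch instead of polluting the target tables.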

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.

Conclusion:

Data engineers use these tools to build data systems. Working with MySQL, SQL Server, and Oracle RDBMS involves collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies including databases, programming languages, machine learning algorithms, and more to create powerful applications that help businesses make better decisions based on their collected data.


The ways to develop a website

There are various ways to develop a website, depending on your goals, technical skills, and resources. Below is an overview of the primary methods:

1. Using website builders

Website builders let you create a website without coding knowledge on a user-friendly platform. They offer pre-designed templates and drag-and-drop interfaces.

• Popular tools: WordPress.com, Shopify (for eCommerce), Wix, Squarespace, etc.

• How it works:

° Choose the template you want.

° Customize the design, layout, and content using the platform's visual editor.

° Add forms, eCommerce, or blogs through plugins or built-in tools.

° Publish the site using the hosting provided by the platform.

• Pros:

° No coding required; beginner friendly.

° Fast setup and development.

° Hosting, security, and updates are included.

• Cons:

° Limited customisation compared to coding.

° Monthly subscription costs.

° Migrating can be challenging because of platform dependency.

2. Content management system (CMS)

A CMS requires minimal coding and lets you manage website content efficiently with customizable themes and plugins.

• Popular tools: WordPress.org, Joomla, Drupal

• How it works:

° Install the CMS on a web server.

° Select a theme and customize it with basic coding or the built-in tools.

° Use plugins to add functionality.

° Manage content through the dashboard.

• Pros:

° Flexible and scalable, with thousands of themes and plugins.

° Community support and resources.

° Useful for portfolios, blogs, and complex sites.

• Cons:

° Requires some technical knowledge for setup and maintenance.

° Hosting and domain costs.

° Needs security updates and backups.

3. Coding from scratch (custom development)

Building a website from raw code gives you complete control over design and functionality.

• Technologies:

° Frontend: HTML, CSS, JavaScript.

° Backend: Python, PHP, Ruby, and Node.js.

° Database: MySQL, MongoDB, PostgreSQL.

° Tools: code editor, hosting, version control.

• How it works:

° Design the site structure and visuals using HTML, CSS, and JavaScript.

° Build backend logic for dynamic features.

° Connect to a database for data storage.

• Pros:

° Offers full customisation and flexibility.

° Unique functionality and optimized performance.

° No platform restrictions.

• Cons:

° It requires coding expertise.

° Costly and time intensive.

° Requires ongoing maintenance such as security fixes and updates.

4. Static site generator

A static site generator creates fast and secure websites by pre-rendering HTML files. It is useful for blogs or portfolios.

• Popular tools: Hugo, Jekyll, Next.js.

• How it works:

° Write content in Markdown or a similar format.

° Use templates to generate static HTML, CSS, and JavaScript files.

° Deploy to a hosting platform like Netlify or Vercel.

• Pros:

° Fast and secure, with no server-side processing.

° Free or low-cost hosting.

° Easy to scale.

• Cons:

° Limited functionality.

° Requires some technical knowledge.
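The pre-rendering step at the heart of a static site generator can be sketched in a few lines. This is a toy illustration, not how Hugo or Jekyll work internally: the template, the `build_page` function, and the plain-text input format are assumptions, and a real generator would parse Markdown and write the result to `.html` files.

```python
import html
from string import Template

# A hypothetical page template; $title and $body are filled in at build time.
PAGE = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1>$body</body></html>"
)


def build_page(title, text):
    """Pre-render one page: plain-text paragraphs in, a complete HTML string out."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    body = "".join(f"<p>{html.escape(p)}</p>" for p in paragraphs)
    return PAGE.substitute(title=html.escape(title), body=body)


page = build_page("Hello", "First paragraph.\n\nSecond & last.")
```

Because the HTML is produced once at build time, the hosting platform only has to serve files, which is where the speed and security benefits come from.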

5. Headless CMS with frontend frameworks

A headless CMS provides a backend for content management, paired with a separate frontend for flexibility.

• Popular tools:

° Headless CMS: Strapi, Contentful, etc.

° Frontend framework: React, Vue.js, etc.

• How it works:

° Use a headless CMS to manage content via APIs.

° Build a custom frontend with a JavaScript framework.

° Connect both via APIs to display the dynamic content.

• Pros:

° Very flexible and scalable.

° Allows content reuse across platforms such as web and mobile.

° Modern and performant.

• Cons:

° Requires coding skills and familiarity with APIs.

° The setup can be complex.

6. Hiring a developer or agency

If you don't have the time or the technical skills, you can hire a developer or an agency.

• How it works:

° Hire freelancers or agencies for custom development.

° Provide your requirements, such as design, features, and budget.

° Collaborate on design and functionality, then launch the website.

• Pros:

° Professional results tailored to your needs.

° Saves time.

• Cons:

° Cost can be higher.

° You depend on third parties for maintenance.

7. Low code platforms

Low-code platforms fill the gap between no-code builders and custom coding. They offer visual development with the flexibility of code.

• Popular tools: Bubble, Adalo, OutSystems.

• How it works:

° Use the visual interface to design and configure the site.

° Add custom code for specific features.

° Use built-in or external hosting.

• Pros:

° Faster than coding from scratch.

° More customizable than website builders.

• Cons:

° Learning curve for advanced features.

° Requires subscription or hosting costs.

Choosing the right method

• Beginners: start with website builders or WordPress.

• Budget conscious: use static site generators or free CMS options.

• Developers: opt for custom coding or a headless CMS for full control.

• Businesses: consider hiring professionals or using a scalable CMS platform.

If you are looking for website development with design, it is smart to work with an experienced agency. HollyMinds Technologies is a great choice, because they are the best website development company in Pune, and they make websites that are perfectly coded and structured to stand the test of time. The algorithms are set to bring visitors from across the globe. A structured website with the right content can bring more visitors to your business.


Text

How to Drop Tables in MySQL Using dbForge Studio: A Simple Guide for Safer Table Management

Learn how to drop a table in MySQL quickly and safely using dbForge Studio for MySQL — a professional-grade IDE designed for MySQL and MariaDB development. Whether you’re looking to delete a table, use DROP TABLE IF EXISTS, or completely clear a MySQL table, this guide has you covered.

In the article “How to Drop Tables in MySQL: A Complete Guide for Database Developers” we explain:

✅ How to drop single and multiple tables: Use simple SQL commands or the intuitive UI in dbForge Studio to delete one or several tables at once, with no need to write multiple queries manually.

✅ How to use the DROP TABLE command properly: Learn how to avoid errors by using DROP TABLE IF EXISTS and specifying table names correctly, especially when working with multiple schemas.

✅ What happens when you drop a table in MySQL: Understand the consequences. The table structure and all its data are permanently removed and can’t be recovered unless you’ve backed up beforehand.

✅ Best practices for safe table deletion: Back up first, check for dependencies like foreign keys, and use IF EXISTS to avoid runtime errors if the table doesn’t exist.

✅ How dbForge Studio simplifies and automates this process: With dbForge Studio, dropping tables becomes a controlled and transparent process, thanks to:

- Visual Database Explorer: right-click any table to drop it instantly or review its structure before deletion.

- Smart SQL Editor: get syntax suggestions and validation as you type DROP TABLE commands.

- Built-in SQL execution preview: see what will happen before executing destructive commands.

- Schema and data backup tools: create instant backups before making changes.

- SQL script generation: the tool auto-generates DROP TABLE scripts, which you can edit and save for future use.

- Role-based permissions and warnings: help prevent accidental deletions in shared environments.
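As a rough illustration of the IF EXISTS behaviour described above, here is a minimal sketch. It makes some assumptions: it uses Python's built-in SQLite module as a stand-in for a MySQL server, and the table names (staging_orders, missing_table) and the table_exists helper are invented for the example. The single-table DROP TABLE and DROP TABLE IF EXISTS statements shown are written the same way in MySQL.

```python
import sqlite3

# SQLite in memory stands in for MySQL here; table names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging_orders (id INTEGER PRIMARY KEY, total REAL)")


def table_exists(name):
    """Hypothetical helper: look the table up in SQLite's catalog."""
    row = conn.execute(
        "SELECT 1 FROM sqlite_master WHERE type = 'table' AND name = ?", (name,)
    ).fetchone()
    return row is not None


# A plain DROP TABLE raises an error when the table is missing ...
try:
    conn.execute("DROP TABLE missing_table")
    plain_drop_failed = False
except sqlite3.OperationalError:
    plain_drop_failed = True

# ... while IF EXISTS turns the same situation into a no-op.
conn.execute("DROP TABLE IF EXISTS missing_table")

# Dropping an existing table removes its structure and all of its rows.
conn.execute("DROP TABLE IF EXISTS staging_orders")

print(plain_drop_failed, table_exists("staging_orders"))
```

MySQL additionally accepts a comma-separated list of tables in one statement (for example DROP TABLE IF EXISTS a, b;), which SQLite does not, so that form is left out of the sketch.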

💡 Whether you’re cleaning up your database or optimizing its structure, this article helps you do it efficiently — with fewer risks and more control.

🔽 Try dbForge Studio for MySQL for free and experience smarter MySQL development today: 👉 https://www.devart.com/dbforge/mysql/studio/download.html


Text

StoreGo SaaS Nulled Script 6.7

StoreGo SaaS Nulled Script – Build Powerful Online Stores with Zero Cost If you're looking for a professional, cost-effective, and user-friendly way to launch an online store, the StoreGo SaaS Nulled Script is your ultimate solution. This dynamic script offers a fully-featured eCommerce experience, empowering businesses of all sizes to create stunning digital storefronts without investing in expensive software or subscriptions. What is StoreGo SaaS Nulled Script? StoreGo SaaS Nulled Script is a premium online store builder that allows users to create, manage, and scale eCommerce businesses with ease. The nulled version provides all premium features for free, making it accessible for entrepreneurs, freelancers, and agencies who want to launch professional web stores without financial barriers. Unlike traditional eCommerce platforms that require hefty licensing fees, this script is a fully customizable and open-source alternative. With zero limitations, it’s a fantastic option for users who want complete control over their store’s design, layout, payment methods, and more. Technical Specifications Script Type: PHP Laravel Framework Database: MySQL Frontend Framework: Bootstrap 4 Server Requirements: PHP 7.3+, Apache/Nginx, cURL, OpenSSL Payment Gateways Supported: Stripe, PayPal, Razorpay, Paystack, and more Multi-Store Capability: Yes Top Features and Benefits Multi-Tenant SaaS Architecture: Each user can have a fully independent online store under your main platform. Drag-and-Drop Store Builder: Design pages effortlessly without coding. Real-Time Analytics: Get insights into orders, revenue, and customer behavior. Integrated Payment Solutions: Accept online payments globally with multiple secure gateways. Subscription Packages: Create various pricing plans for your users. Product & Inventory Management: Manage stock, variants, SKUs, and more from one dashboard. 
Use Cases Whether you're starting a new eCommerce business or offering SaaS services to clients, the StoreGo SaaS Nulled Script adapts perfectly to different business models. Ideal for: Freelancers offering eCommerce development services Agencies launching SaaS-based online store platforms Small business owners looking to sell online without third-party dependencies Digital marketers creating customized product landing pages Installation Guide Setting up the StoreGo SaaS Nulled Script is quick and seamless: Download the script package from our website. Upload it to your server via cPanel or FTP. Create a MySQL database and user. Run the installer and follow the on-screen instructions. Log in to the admin panel and start customizing your platform! For advanced configurations, detailed documentation is included within the script package, ensuring even beginners can get started with minimal technical effort. Why Choose StoreGo SaaS Nulled Script? By choosing the StoreGo SaaS Nulled Script, you're unlocking enterprise-level features without paying a premium. Its modern UI, powerful backend, and full customization capabilities make it a must-have tool for building eCommerce platforms that perform. Plus, when you download from our website, you get a clean, secure, and fully functional nulled version. Say goodbye to restrictive licensing and hello to freedom, flexibility, and profitability. FAQs Is the StoreGo SaaS Nulled Script safe to use? Yes, the script available on our website is thoroughly scanned and tested to ensure it’s clean, stable, and ready for production. Can I use this script for multiple clients? Absolutely! The multi-tenant feature allows you to offer customized storefronts to various clients under your own domain. Does this script include future updates? While this is a nulled version, we frequently update our repository to include the latest stable releases with new features and security patches. How do I get support if I face issues? 
Our community forum and documentation are excellent resources. Plus, you can always reach out to us via our contact form for guidance.

Download Now and Start Selling Online Today! Get started with the StoreGo SaaS and experience unmatched control and performance for your eCommerce venture. Download it now and build your digital empire—no license needed, no hidden costs. Need more tools to supercharge your WordPress experience? Don’t forget to check out our other offerings like WPML pro NULLED. Looking for a stylish WordPress theme? Get Impreza NULLED for free now.


Text

💡 DID YOU KNOW?

When it comes to handling large transactions and user data in Smart Gold Chit Software, not all databases are created equal! 🤔

Can you guess which one is preferred?

🔘 A) MySQL or PostgreSQL

🔘 B) Flat file systems

🔘 C) Spreadsheet software

🔘 D) None of the above

👇 Drop your answer in the comments!


Text

How a Professional Web Development Company in Udaipur Builds Scalable Websites

In today’s fast-paced digital world, your website isn’t just a digital brochure; it’s your 24/7 storefront, marketing engine, and sales channel. But not every website is built to last or grow. This is where scalable web development comes into play.

For businesses in Rajasthan’s growing tech hub, choosing the right web development company in Udaipur can make or break your digital success. In this article, we’ll explore how WebSenor, a leading player in the industry, helps startups, brands, and enterprises build websites that scale, not just technically but strategically.

Why Scalability Matters in Modern Web Development

As more Udaipur tech startups and businesses go digital, a scalable website ensures your online presence grows with your company — without needing a complete rebuild every year.

Scalability means your website:

Handles increasing traffic without slowing down

Adapts to new features or user demands

Remains secure, fast, and reliable as you grow

Whether you’re launching an eCommerce website in Udaipur, building an app for services, or developing a customer portal, scalable architecture is the foundation.

Meet WebSenor – Your Web Development Partner in Udaipur

WebSenor Technologies, a trusted Udaipur IT company, has been delivering custom website development in Udaipur and globally since 2008. Known for their strategic thinking, expert team, and client-first mindset, they’ve earned their spot among the best web development firms in Udaipur. What sets them apart? Their ability to not just design beautiful websites, but to engineer platforms built for scale — from traffic to features to integrations.

What Exactly Is a Scalable Website?

A scalable website is one that grows with your business — whether that’s more visitors, more products, or more complex workflows.

Business Perspective:

Can handle thousands of users without performance drops

Easily integrates new features or plugins

Offers seamless user experience across devices

Technical Perspective:

Built using flexible frameworks and architecture

Cloud-ready with load balancing and caching

Optimized for security, speed, and modularity

Think of it like constructing a building with space to expand: it may start with one floor, but the foundation is strong enough to add five more later.

WebSenor’s Proven Process for Building Scalable Websites

Here’s how WebSenor, one of the top-rated Udaipur developers, delivers scalability from the first step:

1. Discovery & Requirement Analysis

They begin by deeply understanding your business needs, user base, and long-term goals. This early strategy phase ensures the right roadmap for future growth is in place.

2. Scalable Architecture Planning

WebSenor uses:

Microservices for independent functionality

Modular design for faster updates

Cloud-first architecture (AWS, Azure)

Database design that handles scaling easily (NoSQL, relational hybrid models)

3. Technology Stack Selection

They work with the most powerful and modern stacks:

React.js, Vue.js (for the frontend)

Node.js, Laravel, Django (for the backend)

MongoDB, MySQL, PostgreSQL (for data)

These tools are selected based on project needs: no bloated tech, only what works best.

4. Agile Development & Code Quality

Their agile methodology allows flexible development in sprints. With Git-based version control, clean and reusable code, and collaborative workflows, they ensure top-quality software every time.

5. Cloud Integration & Hosting

WebSenor implements:

Auto-scaling cloud environments

CDN for global speed

Load balancing for high-traffic periods

This ensures your site performs reliably — whether you have 100 or 100,000 users.

6. Testing & Optimization

From speed tests to mobile responsiveness to security audits, every site is battle-tested before launch. Performance is fine-tuned using tools like Lighthouse, GTmetrix, and custom scripts.

Case Study: Scalable eCommerce for a Growing Udaipur Business

One of WebSenor’s recent projects involved an eCommerce website development in Udaipur for a local fashion brand.

Challenge: The client needed a simple store for now but anticipated scaling to 1,000+ products and international orders in the future.

Solution: WebSenor built a modular eCommerce platform using Laravel and Vue.js, integrated with AWS hosting. With scalable product catalogs and multilingual support, the store handled:

4x growth in traffic in 6 months

2,000+ monthly transactions

99.99% uptime during sale events

Client Feedback:

“WebSenor didn't just build our website — they built a system that grows with us. The team was responsive, transparent, and truly professional.”

Expert Insights from WebSenor’s Team

“Too often, startups focus only on what they need today. We help them plan 1–3 years ahead. That’s where real savings and growth come from.”

He also shared this advice:

Avoid hardcoded solutions

Always design databases for growth

Prioritize speed and user experience from Day 1

WebSenor’s team includes full-stack experts, UI/UX designers, and cloud engineers who are passionate about future-proof development.

Why Trust WebSenor – Udaipur’s Web Development Experts

Here’s why businesses across India and overseas choose WebSenor:

15+ years of development experience

300+ successful projects

A strong team of local Udaipur development experts

Featured among the best web development companies in Udaipur

Client base in the US, UK, UAE, and Australia

Ready to Build a Scalable Website? Get Started with WebSenor

Whether you're a startup, SME, or growing brand, WebSenor offers tailored web development services in Udaipur built for growth and stability.

👉 View Portfolio | Book a Free Consultation | Explore Custom Website Development

From eCommerce to custom applications, they’ll guide you every step of the way.

Conclusion

Scalability isn’t just a feature, it’s a necessity. As your business grows, your website should never hold you back. Partnering with a professional and experienced team like WebSenor means you’re not just getting a website. You’re investing in a future-ready digital platform, backed by some of the best web development minds in Udaipur. WebSenor is more than a service provider — they're your growth partner.


Text

This SQL Trick Cut My Query Time by 80%

How One Simple Change Supercharged My Database Performance

If you work with SQL, you’ve probably spent hours trying to optimize slow-running queries — tweaking joins, rewriting subqueries, or even questioning your career choices. I’ve been there. But recently, I discovered a deceptively simple trick that cut my query time by 80%, and I wish I had known it sooner.

Here’s the full breakdown of the trick, how it works, and how you can apply it right now.

🧠 The Problem: Slow Query in a Large Dataset

I was working with a PostgreSQL database containing millions of records. The goal was to generate monthly reports from a transactions table joined with users and products. My query took over 35 seconds to return, and performance got worse as the data grew.

Here’s a simplified version of the original query:

sql

-- Total spent per user for January 2024
SELECT
    u.user_id,
    u.name,
    SUM(t.amount) AS total_spent
FROM transactions t
JOIN users u ON t.user_id = u.user_id
WHERE t.created_at >= '2024-01-01'
  AND t.created_at < '2024-02-01'
GROUP BY u.user_id, u.name;

No complex logic. But still painfully slow.

⚡ The Trick: Use a CTE to Pre-Filter Before the Join

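The major inefficiency here? The join appeared to be happening before the filtering. Even though we were only interested in one month’s data, the database seemed to scan and join millions of rows first and only then apply the WHERE clause. Query planners normally push such filters down on their own, so confirm this with EXPLAIN before rewriting anything.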

✅ Solution: Filter early using a CTE (Common Table Expression)

Here’s the optimized version:

sql

-- Filter transactions to January 2024 first, then join to users
WITH filtered_transactions AS (
    SELECT user_id, amount  -- only the columns the outer query needs
    FROM transactions
    WHERE created_at >= '2024-01-01'
      AND created_at < '2024-02-01'
)
SELECT
    u.user_id,
    u.name,
    SUM(t.amount) AS total_spent
FROM filtered_transactions t
JOIN users u ON t.user_id = u.user_id
GROUP BY u.user_id, u.name;

Result: Query time dropped from 35 seconds to just 7 seconds.

That’s an 80% improvement — with no hardware changes or indexing.
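Before relying on a rewrite like this, it is worth checking that both forms return the same rows. The following is a minimal sketch under stated assumptions: it runs on an in-memory SQLite database rather than PostgreSQL, the sample users and amounts are invented, and it says nothing about timing. It only shows that the joined-then-filtered query and the CTE version agree.

```python
import sqlite3

# In-memory SQLite stands in for the PostgreSQL database; the rows are made up.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE transactions (
    id INTEGER PRIMARY KEY, user_id INTEGER, amount REAL, created_at TEXT
);
INSERT INTO users VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO transactions (user_id, amount, created_at) VALUES
    (1, 10.0, '2024-01-05'),
    (1, 15.0, '2024-01-20'),
    (2, 7.5,  '2024-01-31'),
    (2, 99.0, '2024-02-01'),
    (1, 50.0, '2023-12-31');
""")

# Join first, filter in the WHERE clause.
original = """
SELECT u.user_id, u.name, SUM(t.amount) AS total_spent
FROM transactions t
JOIN users u ON t.user_id = u.user_id
WHERE t.created_at >= '2024-01-01' AND t.created_at < '2024-02-01'
GROUP BY u.user_id, u.name
ORDER BY u.user_id
"""

# Filter inside a CTE, then join the reduced set.
with_cte = """
WITH filtered_transactions AS (
    SELECT user_id, amount
    FROM transactions
    WHERE created_at >= '2024-01-01' AND created_at < '2024-02-01'
)
SELECT u.user_id, u.name, SUM(t.amount) AS total_spent
FROM filtered_transactions t
JOIN users u ON t.user_id = u.user_id
GROUP BY u.user_id, u.name
ORDER BY u.user_id
"""

rows_original = conn.execute(original).fetchall()
rows_cte = conn.execute(with_cte).fetchall()
print(rows_original)
print(rows_original == rows_cte)
```

The two February and December rows fall outside the half-open January range, so each query sums only the three January transactions.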

🧩 Why This Works

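Databases (especially PostgreSQL and MySQL) optimize join order internally, but sometimes they fail to push filters deep into the query plan. Note that PostgreSQL 12 and later inline a simple CTE like this one into the outer query by default, so the rewritten query may end up with the same plan as the original; writing WITH filtered_transactions AS MATERIALIZED (...) forces the CTE to be computed separately.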

By isolating the filtered dataset before the join, you:

Reduce the number of rows being joined

Shrink the working memory needed for the query

Speed up sorting, grouping, and aggregation

This technique is especially effective when:

You’re working with time-series data

Joins involve large or denormalized tables

Filters eliminate a large portion of rows

🔍 Bonus Optimization: Add Indexes on Filtered Columns

To make this trick even more effective, add an index on created_at in the transactions table:

sql

CREATE INDEX idx_transactions_created_at ON transactions(created_at);

This allows the database to quickly locate rows for the date range, making the CTE filter lightning-fast.
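To see whether an index like this is actually picked up, compare the query plan before and after creating it. The sketch below is an assumption-laden illustration: it uses SQLite's EXPLAIN QUERY PLAN because it runs without a server, whereas on PostgreSQL or MySQL you would read the output of EXPLAIN instead, and the plan wording differs between engines and versions. The plan helper is invented for the example.

```python
import sqlite3

# In-memory SQLite stands in for the production database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "amount REAL, created_at TEXT)"
)

query = (
    "SELECT SUM(amount) FROM transactions "
    "WHERE created_at >= '2024-01-01' AND created_at < '2024-02-01'"
)


def plan(sql):
    """Hypothetical helper: join the readable detail column of each plan row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


plan_before = plan(query)  # no index yet, so a full table scan is expected
conn.execute("CREATE INDEX idx_transactions_created_at ON transactions(created_at)")
plan_after = plan(query)   # the range filter can now use the index

print(plan_before)
print(plan_after)
```

If the second plan does not mention the index, the filter is probably not selective enough for the planner to consider it worthwhile.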

🛠 When Not to Use This

While this trick is powerful, it’s not always ideal. Avoid it when:

Your filter is trivial (e.g., matches 99% of rows)

The CTE becomes more complex than the base query

Your database’s planner is already optimizing joins well (check the EXPLAIN plan)

🧾 Final Takeaway

You don’t need exotic query tuning or complex indexing strategies to speed up SQL performance. Sometimes, just changing the order of operations — like filtering before joining — is enough to make your query fly.

“Think like the database. The less work you give it, the faster it moves.”

If your SQL queries are running slow, try this CTE filtering trick before diving into advanced optimization. It might just save your day — or your job.



Text

10 Data Science Tools You Should Master in 2025

If you're looking to become a data scientist in 2025, mastering the right tools is a must. With the constant evolution of data science, there are a ton of tools out there. But which ones should you focus on to get hired and excel in the field?

Here’s a list of 10 must-know data science tools that will not only boost your skills but also make you stand out in the job market.

1️⃣ Python

Python is the go-to programming language for data science. It’s beginner-friendly, powerful, and has a huge library of packages for data manipulation, machine learning, and visualization (like Pandas, NumPy, Matplotlib, and Scikit-learn).

Why learn it?

Easy syntax and readability

Extensive library support

Community-driven growth

2️⃣ R

R is a statistical computing language that shines in the world of data analysis and visualization. It’s widely used in academia and research fields but is increasingly gaining traction in business as well.

Why learn it?

Specialized for statistical analysis

Strong visualization packages (like ggplot2)

Great for hypothesis testing

3️⃣ Jupyter Notebooks

Jupyter is the ultimate tool for interactive data science. It lets you create and share documents that combine live code, equations, visualizations, and narrative text.

Why learn it?

Interactive coding environment

Perfect for experimentation and visualization

Widely used for machine learning and data exploration

4️⃣ SQL

SQL (Structured Query Language) is essential for managing and querying large databases. It’s the backbone of working with relational databases and is often a skill employers look for in data science roles.

Why learn it?

Helps you manipulate large datasets

Works with most relational databases (MySQL, PostgreSQL)

Crucial for data extraction and aggregation

5️⃣ Tableau

When it comes to data visualization, Tableau is a favorite. It allows you to create interactive dashboards and compelling visualizations without needing to be a coding expert.

Why learn it?

Intuitive drag-and-drop interface

Makes complex data easy to understand

Helps with business decision-making

6️⃣ TensorFlow

If you’re diving into deep learning and neural networks, TensorFlow by Google is one of the best open-source libraries for building AI models.

Why learn it?

Powerful library for deep learning models

High scalability for large datasets

Used by companies like Google and Uber

7️⃣ Scikit-learn

For general-purpose machine learning, Scikit-learn is one of the most important Python libraries. It simplifies implementing algorithms for classification, regression, and clustering.

Why learn it?

Easy-to-use interface for beginners

Implements popular ML algorithms (e.g., decision trees, SVM)

Great for prototyping and testing models

8️⃣ BigQuery

Google Cloud’s BigQuery is a data warehouse that allows you to run super-fast SQL queries on large datasets. It’s cloud-based, scalable, and integrates well with other Google Cloud services.

Why learn it?

Big data processing made easy

Fast and scalable queries

Great for real-time data analysis

9️⃣ Hadoop

If you're working with big data, you’ll need to know Hadoop. It’s an open-source framework that stores and processes large datasets across distributed computing clusters.

Why learn it?

Handles petabytes of data

Works well with large datasets

Essential for big data analytics

🔟 GitHub

Version control is key for collaborative projects, and GitHub, a hosting platform built on the Git version control system, is the go-to place for managing code. It helps you track changes, collaborate with other data scientists, and showcase your projects.

Why learn it?

Essential for collaboration and team projects

Keeps track of code versions

Great for building a portfolio

How to Learn These Tools:

Mastering these tools is a journey, and the best way to start is with a structured Data Science course that covers them in-depth. Whether you're a beginner or want to level up your skills, Intellipaat’s Data Science course provides hands-on training with real-world projects. It will guide you through these tools and help you build a solid foundation in data science.

🎓 Learn Data Science with Intellipaat’s course here

By mastering these 10 tools, you’ll be well on your way to landing a data science role in 2025. The key is to focus on hands-on practice and real-world projects that showcase your skills. If you want to take the next step and start learning these tools from scratch, check out Intellipaat’s Data Science course and kickstart your career today!


Text

Batch Address Validation Tool and Bulk Address Verification Software

When businesses manage thousands—or millions—of addresses, validating each one manually is impractical. That’s where batch address validation tools and bulk address verification software come into play. These solutions streamline address cleansing by processing large datasets efficiently and accurately.

What Is Batch Address Validation?

Batch address validation refers to the automated process of validating multiple addresses in a single operation. It typically involves uploading a file (CSV, Excel, or database) containing addresses, which the software then checks, corrects, formats, and appends with geolocation or delivery metadata.

Who Needs Bulk Address Verification?

Any organization managing high volumes of contact data can benefit, including:

Ecommerce retailers shipping to customers worldwide.

Financial institutions verifying client data.

Healthcare providers maintaining accurate patient records.

Government agencies validating census or mailing records.

Marketing agencies cleaning up lists for campaigns.

Key Benefits of Bulk Address Verification Software

1. Improved Deliverability

Clean data ensures your packages, documents, and marketing mailers reach the right person at the right location.

2. Cost Efficiency

Avoiding undeliverable mail means reduced waste in printing, postage, and customer service follow-up.

3. Database Accuracy

Maintaining accurate addresses in your CRM, ERP, or mailing list helps improve segmentation and customer engagement.

4. Time Savings

What would take weeks manually can now be done in minutes or hours with bulk processing tools.

5. Regulatory Compliance

Meet legal and industry data standards more easily with clean, validated address data.

Features to Expect from a Batch Address Validation Tool

When evaluating providers, check for the following capabilities:

Large File Upload Support: Ability to handle millions of records.

Address Standardization: Correcting misspellings, filling in missing components, and formatting according to regional norms.

Geocoding Integration: Assigning latitude and longitude to each validated address.

Duplicate Detection & Merging: Identifying and consolidating redundant entries.

Reporting and Audit Trails: For compliance and quality assurance.

Popular Batch Address Verification Tools

Here are leading tools in 2025:

1. Melissa Global Address Verification

Features: Supports batch and real-time validation, international formatting, and geocoding.

Integration: Works with Excel, SQL Server, and Salesforce.

2. Loqate Bulk Cleanse

Strengths: Excel-friendly UI, supports uploads via drag-and-drop, and instant insights.

Ideal For: Businesses looking to clean customer databases or mailing lists quickly.

3. Smarty Bulk Address Validation

Highlights: Fast processing, intuitive dashboard, and competitive pricing.

Free Tier: Great for small businesses or pilot projects.

4. Experian Bulk Address Verification

Capabilities: Cleans large datasets with regional postal expertise.

Notable Use Case: Utility companies and financial services.

5. Data Ladder’s DataMatch Enterprise

Advanced Matching: Beyond address validation, it detects data anomalies and fuzzy matches.

Use Case: Enterprise-grade data cleansing for mergers or CRM migrations.

How to Use Bulk Address Verification Software

Using batch tools is typically simple and follows this flow:

Upload Your File: Use CSV, Excel, or database export.

Map Fields: Match your columns with the tool’s required address fields.

Validate & Clean: The software standardizes, verifies, and corrects addresses.

Download Results: Export a clean file with enriched metadata (ZIP+4, geocode, etc.)

Import Back: Upload your clean list into your CRM or ERP system.
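The flow above can be sketched in a few lines. This is only an illustration under several assumptions: the column names, the FIELD_MAP mapping, the standardize function and the sample rows are all invented, and the code only normalizes formatting and removes duplicates. Real verification needs a postal reference dataset from a provider, which this sketch does not attempt.

```python
import csv
import io

# Step 1: a hypothetical uploaded file whose columns differ from the target schema.
raw_csv = """Street,Town,State,Zip
 12 main st ,springfield,il,62701
12 Main St,Springfield,IL,62701
1 Beacon St,Boston,ma,2108
"""

# Step 2: map source columns to the fields the cleaner expects.
FIELD_MAP = {"Street": "address", "Town": "city", "State": "state", "Zip": "postal_code"}


def standardize(row):
    """Step 3: normalize whitespace, casing, and ZIP padding (formatting only)."""
    rec = {FIELD_MAP[key]: (value or "").strip() for key, value in row.items()}
    rec["address"] = " ".join(rec["address"].split()).title()
    rec["city"] = rec["city"].title()
    rec["state"] = rec["state"].upper()
    rec["postal_code"] = rec["postal_code"].zfill(5)  # restore lost leading zeros
    return rec


seen, cleaned = set(), []
for row in csv.DictReader(io.StringIO(raw_csv)):
    rec = standardize(row)
    key = tuple(rec.values())
    if key not in seen:  # drop exact duplicates after standardization
        seen.add(key)
        cleaned.append(rec)

# Step 4: write the cleaned records back out as CSV for re-import.
out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=list(FIELD_MAP.values()))
writer.writeheader()
writer.writerows(cleaned)
print(out.getvalue())
```

The first two input rows collapse into one record once casing and spacing are normalized, which is the kind of duplicate a batch tool is expected to catch.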

Integration Options for Bulk Address Validation

Many vendors offer APIs or direct plugins for:

Salesforce

Microsoft Dynamics

HubSpot

Oracle and SAP

Google Sheets

MySQL / PostgreSQL / SQL Server

Whether you're cleaning one-time datasets or automating ongoing data ingestion, integration capabilities matter.

SEO Use Cases: Why Batch Address Tools Help Digital Businesses

In the context of SEO and digital marketing, bulk address validation plays a key role:

Improved Local SEO Accuracy: Accurate NAP (Name, Address, Phone) data ensures consistent local listings and better visibility.

Better Audience Segmentation: Clean data supports targeted, geo-focused marketing.

Lower Email Bounce Rates: Often tied to postal address quality in cross-channel databases.

Final Thoughts

Batch address validation tools and bulk verification software are essential for cleaning and maintaining large datasets. These platforms save time, cut costs, and improve delivery accuracy—making them indispensable for logistics, ecommerce, and CRM management.

Key Takeaways

Use international address validation to expand globally without delivery errors.

Choose batch tools to clean large datasets in one go.

Prioritize features like postal certification, coverage, geocoding, and compliance.

Integrate with your business tools for automated, real-time validation.

Whether you're validating a single international address or millions in a database, the right tools empower your operations and increase your brand's reliability across borders.



Text

Effortless Reporting: Connect Power BI with MySQL Using Konnectify (No Code!)

In today’s data-driven world, access to live, visual insights can mean the difference between thriving and falling behind. And when you’re managing your data with MySQL and your reports in Power BI, integration becomes more than convenient—it's critical.

But here’s the good news: You don’t need to be a developer to make these tools talk to each other.

Meet Konnectify, the no-code platform that lets anyone sync MySQL with Power BI in just a few steps.

Why Integrate Power BI and MySQL?

Power BI: Best-in-class data visualization.

MySQL: Reliable, open-source data storage.

Together: Real-time dashboards + automated workflows = data clarity.

Businesses that connect their data pipelines can automate reporting, reduce manual errors, and make decisions faster. Konnectify makes that possible without coding.

How to Connect MySQL to Power BI (The Easy Way)

Using Konnectify, you can skip the messy technical setup and connect your systems in minutes:

Log into Konnectify

Choose MySQL as your data source

Set your trigger (like new database entry)

Select your action in Power BI (create/update dashboard row)

Hit Activate – and watch the automation magic begin

No ODBC drivers. No code. Just instant connection.

5 Use Cases to Try Today

Want to see real impact? Here are 5 workflows where Power BI + MySQL + Konnectify shine:

Automated Financial Reports

Get real-time finance dashboards fed directly from your MySQL database.

Sales Performance Dashboards

Visualize acquisition, revenue, and churn instantly.

Real-Time Inventory Monitoring

Trigger alerts in Power BI when stock levels drop.

Customer Support Metrics

Track ticket resolution times and satisfaction ratings with live data.

Project Management Oversight

Watch team progress with synced task data, timelines, and status updates.

Why Konnectify?

100% No-Code

150+ App Integrations

Visual Workflow Builder

Secure and SOC2 Compliant

Konnectify is built to help teams like yours connect tools, automate data flows, and save time.

TL;DR

Power BI + MySQL = amazing insights. Konnectify = the easiest way to connect them.

No developers needed. Just drag, drop, and automate.

Ready to try it? Get started with Konnectify for free and make your data work harder for you.

#it services#saas#saas platform#saas development company#information technology#saas technology#b2b saas#software#ipaas#software development


Text

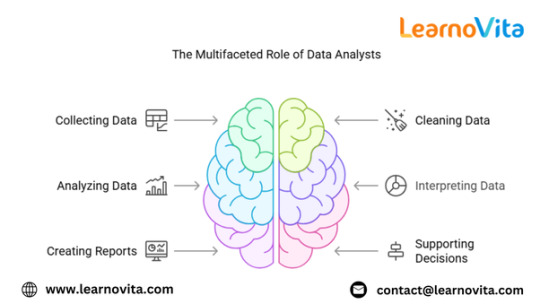

The Essential Tools Every Data Analyst Must Know

The role of a data analyst requires a strong command of various tools and technologies to efficiently collect, clean, analyze, and visualize data. These tools help transform raw data into actionable insights that drive business decisions. Whether you’re just starting your journey as a data analyst or looking to refine your skills, understanding the essential tools will give you a competitive edge in the field, and the best Data Analytics Online Training can help you learn them.

SQL – The Backbone of Data Analysis

Structured Query Language (SQL) is one of the most fundamental tools for data analysts. It allows professionals to interact with databases, extract relevant data, and manipulate large datasets efficiently. Since most organizations store their data in relational databases like MySQL, PostgreSQL, and Microsoft SQL Server, proficiency in SQL is a must. Analysts use SQL to filter, aggregate, and join datasets, making it easier to conduct in-depth analysis.
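As a small illustration of filtering, aggregating, and joining in one query, here is a minimal sketch. The assumptions are that it runs on an in-memory SQLite database through Python's standard library, and that the customers and orders tables and their rows are invented for the example.

```python
import sqlite3

# In-memory SQLite database with hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL, status TEXT
);
INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
INSERT INTO orders (customer_id, amount, status) VALUES
    (1, 100.0, 'paid'),
    (2, 40.0,  'paid'),
    (3, 60.0,  'paid'),
    (1, 25.0,  'refunded');
""")

# Join orders to customers, keep only paid orders, then total them per region.
report = conn.execute("""
    SELECT c.region, COUNT(*) AS paid_orders, SUM(o.amount) AS revenue
    FROM orders o
    JOIN customers c ON c.customer_id = o.customer_id
    WHERE o.status = 'paid'
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()

print(report)
```

The refunded order is excluded by the WHERE clause before grouping, so it never reaches the regional totals.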

Excel – The Classic Data Analysis Tool

Microsoft Excel remains a powerful tool for data analysis, despite the rise of more advanced technologies. With its built-in formulas, pivot tables, and data visualization features, Excel is widely used for quick data manipulation and reporting. Analysts often use Excel for smaller datasets and preliminary data exploration before transitioning to more complex tools. If you want to learn more about Data Analytics, consider enrolling in one of the Best Online Training & Placement programs. They often offer certifications, mentorship, and job placement opportunities to support your learning journey.

Python and R – The Power of Programming

Python and R are two of the most commonly used programming languages in data analytics. Python, with libraries like Pandas, NumPy, and Matplotlib, is excellent for data manipulation, statistical analysis, and visualization. R is preferred for statistical computing and machine learning tasks, offering packages like ggplot2 and dplyr for data visualization and transformation. Learning either of these languages can significantly enhance an analyst’s ability to work with large datasets and perform advanced analytics.

Tableau and Power BI – Turning Data into Visual Insights

Data visualization is a critical part of analytics, and tools like Tableau and Power BI help analysts create interactive dashboards and reports. Tableau is known for its ease of use and drag-and-drop functionality, while Power BI integrates seamlessly with Microsoft products and allows for automated reporting. These tools enable business leaders to understand trends and patterns through visually appealing charts and graphs.

Google Analytics – Essential for Web Data Analysis

For analysts working in digital marketing and e-commerce, Google Analytics is a crucial tool. It helps track website traffic, user behavior, and conversion rates. Analysts use it to optimize marketing campaigns, measure website performance, and make data-driven decisions to improve user experience.

BigQuery and Hadoop – Handling Big Data

With the increasing volume of data, analysts need tools that can process large datasets efficiently. Google BigQuery and Apache Hadoop are popular choices for handling big data. These tools allow analysts to perform large-scale data analysis and run queries on massive datasets without compromising speed or performance.

Jupyter Notebooks – The Data Analyst’s Playground

Jupyter Notebooks provide an interactive environment for coding, data exploration, and visualization. Data analysts use them to write and execute Python or R scripts, document their findings, and present results in a structured manner. They are widely used in data science and analytics projects due to their flexibility and ease of use.

Conclusion

Mastering the essential tools of data analytics is key to becoming a successful data analyst. SQL, Excel, Python, Tableau, and other tools play a vital role in every stage of data analysis, from extraction to visualization. As businesses continue to rely on data for decision-making, proficiency in these tools will open doors to exciting career opportunities in the field of analytics.



MySQL Assignment Help

Are you a programming student? Are you looking for help with programming assignments and homework? Are you nervous because the deadline is approaching and you are unable to understand how to complete the boring and complex MySQL assignment? If the answer is yes, then don’t freak out as we are here to help. We have a team of nerdy programmers who provide MySQL assignment help online. If you need an A Grade in your entire MySQL coursework then you need to reach out to our experts who have solved more than 3500 projects in MySQL. We will not only deliver the work on time but will ensure that your university guidelines are met completely, thus ensuring excellent solutions for the programming work.

However, before you take MySQL help from our experts, you should read the content below in detail to understand more about the subject:

About MySQL

MySQL is an open-source relational database management system that lets us create databases and use them from the various programming languages used to build both online and offline software. It serves as a backend for applications, allowing them to maintain large databases and store the information the software collects.

In today’s education system around the globe, knowing the theory you have read is no longer enough; there is now a demand for practical application of that theory. Grades improve only when students are able to implement what they have learned in their studies.

Finally, the complete implementation will be explained to the student in a step-by-step manner.

Since we are a global tutor and also the best online assignment help provider, we have people who know every education system throughout the world. We are not limited to the US or the UK; we are here to help each and every student around the world.

In conclusion, you will not regret choosing All Assignments Experts, because we assure you of the best MySQL assignment service, delivered on time. So what are you waiting for? If you need MySQL assignment help, sign up today with All Assignments Experts. You can email your requirements to us at [email protected]

Popular MySQL Programming topics for which students come to us for online assignment help are:

MySQL Assignment help

Clone Tables

Create Database

Drop Database

Introduction to SQL

Like Clause

MySQL - Connection

MySQL - Create Tables

MySQL - Data Types

Database Import and Export

MySQL - Database Info

MySQL - Handling Duplicates

Insert Query & Select Query

MySQL - Installation

NULL Values

SQL Injection

MySQL - Update Query and Delete Query

MySQL - Using Sequences

PHP Syntax

Regexps

Relational Database Management System (RDBMS)

Select Database

Temporary Tables

WAMP and LAMP

Where Clause
