#Node4

Text

Node4 Acquires ThreeTwoFour To Strengthen Its Cybersecurity Offering And Expand In The Finance And Banking Sector

Node4, a cloud-led digital transformation Managed Services Provider (MSP), has today announced the acquisition of ThreeTwoFour, an award-winning information security and technology risk specialist.

Link

#cloudcomputing#cloudmigration#CostOptimization#datasovereignty#digitaltransformation#Enterprisetechnology#hybridcloud#ITInfrastructure

Text

Node4 names Richard Moseley as new CEO

http://securitytc.com/TGkFr6

Text

Patriot Missile Floating Point Software Problem Led to Deaths of 28 Americans

https://www.cs.unc.edu/%7Esmp/COMP205/LECTURES/ERROR/lec23/node4.html

Text

Node4 Goes Live with First Customers on VOSS-4-UC, Best-in-Class Collaboration Solution for Medium Enterprises and SMEs

July 25, 2018 – Reading, UK – Today, VOSS Solutions announced that Node4 has become the latest organisation to go live on the VOSS-4-UC management platform.

Launched in 2004, Node4 is a leading UK-based Managed Service Provider, offering scalable end-to-end IT solutions, all fully managed and deployed over its own infrastructure. Node4’s competencies include colocation, managed hosting, cloud…

Text

Kubernetes, abbreviated as K8s, is an open-source tool used to orchestrate containerized workloads on a cluster of hosts. It automates the deployment, scaling, and management of containerized applications. Kubernetes distributes workloads across the cluster, automates container networking, allocates storage and persistent volumes, and works continuously to maintain the desired state of containerized applications.

There are several tools you can use to set up a Kubernetes cluster, including Minikube, Kubeadm, Kubernetes on AWS (Kube-AWS), and Amazon EKS. In this guide, we will walk through how to deploy a highly available (HA) Kubernetes cluster on Rocky Linux 8 using RKE2.

What is RKE2?

RKE stands for Rancher Kubernetes Engine. RKE2, also known as RKE Government, combines RKE1 and K3s: it inherits usability, ease of operations, and the deployment model from K3s, and close alignment with upstream Kubernetes from RKE1. RKE2 does not rely on Docker; it launches the control plane components as static pods managed by the kubelet.

RKE2 ships a number of open-source components, including:

- K3s Helm Controller
- K8s API Server
- Controller Manager
- Kubelet
- Scheduler
- Proxy
- etcd
- containerd/cri
- runc
- Helm
- Metrics Server
- NGINX Ingress Controller
- CoreDNS
- CNI: Canal (Calico & Flannel), Cilium or Calico

System Requirements

Use systems that meet the following requirements:

- RAM: 4GB minimum (we recommend at least 8GB)
- CPU: 2 minimum (we recommend at least 4 CPUs)
- 3 Rocky Linux 8 nodes
- Zero or more agent nodes designated to run your apps and services
- A load balancer to direct front-end traffic to the three nodes
- A DNS record to map a URL to the load balancer

Step 1 – Set up Rocky Linux 8 Nodes

For this guide, we will use 3 Rocky Linux server nodes, a load balancer, and one or more RKE2 agents.
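The requirements call for three server nodes, and Step 2 ties that number to quorum. etcd, which runs on each RKE2 server node, keeps working only while a majority of its members, floor(N/2) + 1 out of N, can reach each other. The bash arithmetic below is a worked illustration of that majority rule, not an RKE2 command; `quorum` and `fault_tolerance` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Worked example of etcd's majority quorum rule (illustration only, not an RKE2 tool).
# A cluster of N voting members needs floor(N/2) + 1 of them reachable,
# so it can survive N - quorum member failures.

# quorum N: print the number of members that must be reachable
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: print how many members can fail without losing quorum
fault_tolerance() {
  echo $(( $1 - $(quorum "$1") ))
}

for n in 1 2 3 4 5; do
  printf '%d server nodes: quorum %d, tolerates %d failure(s)\n' \
    "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```

The table it prints shows that three servers tolerate one failure, while a fourth server raises the quorum to three and still tolerates only one, which is why an odd number of server nodes is recommended.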
| Task | Hostname | IP address |
|---|---|---|
| Server Node 1 | server1.computingpost.com | 192.168.205.2 |
| Server Node 2 | server2.computingpost.com | 192.168.205.3 |
| Server Node 3 | server3.computingpost.com | 192.168.205.33 |
| Load Balancer | rke.computingpost.com | 192.168.205.9 |
| Agent Node 1 | agent1.computingpost.com | 192.168.205.43 |
| Agent Node 2 | agent2.computingpost.com | 192.168.205.44 |

Set the hostnames as shown:

    ##On Node1
    sudo hostnamectl set-hostname server1.computingpost.com
    ##On Node2
    sudo hostnamectl set-hostname server2.computingpost.com
    ##On Node3
    sudo hostnamectl set-hostname server3.computingpost.com
    ##On Load balancer (Node4)
    sudo hostnamectl set-hostname rke.computingpost.com
    ##On Node5
    sudo hostnamectl set-hostname agent1.computingpost.com
    ##On Node6
    sudo hostnamectl set-hostname agent2.computingpost.com

Add the hostnames to /etc/hosts on each node:

    $ sudo vim /etc/hosts
    192.168.205.2 server1.computingpost.com
    192.168.205.3 server2.computingpost.com
    192.168.205.33 server3.computingpost.com
    192.168.205.43 agent1.computingpost.com
    192.168.205.44 agent2.computingpost.com
    192.168.205.9 rke.computingpost.com

Configure the firewall on all the nodes as shown:

    sudo systemctl stop firewalld
    sudo systemctl disable firewalld
    sudo systemctl start nftables
    sudo systemctl enable nftables

Step 2 – Configure the Fixed Registration Address

To achieve high availability, you need an odd number of server nodes (these run etcd, the Kubernetes API, and other control plane services). The odd number maintains quorum, so the cluster can lose connection with one of the nodes without losing functionality. The other server nodes and the agent nodes need a URL they can register against. This can be the IP address or domain name of one of the server nodes, but an address that does not depend on a single node can be provided using one of the following:

- A layer 4 (TCP) load balancer
- Round-robin DNS
- Virtual or elastic IP addresses

In this guide, we will configure NGINX as a layer 4 (TCP) load balancer that forwards connections to the RKE2 server nodes. Install and configure NGINX on Node4:

    sudo yum install nginx

Back up the default configuration and create a new config file:

    sudo mv /etc/nginx/nginx.conf /etc/nginx/nginx.conf.bak
    sudo vim /etc/nginx/nginx.conf

Add the lines below, replacing values where required:

    user nginx;
    worker_processes 4;
    worker_rlimit_nofile 40000;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 8192;
    }

    stream {
        upstream backend {
            least_conn;
            server :9345 max_fails=3 fail_timeout=5s;
            server :9345 max_fails=3 fail_timeout=5s;
            server :9345 max_fails=3 fail_timeout=5s;
        }

        # This server accepts all traffic to port 9345 and passes it to the upstream.
        # Notice that the upstream name and the proxy_pass need to match.
        server {
            listen 9345;
            proxy_pass backend;
        }

        upstream rancher_api {
            least_conn;
            server :6443 max_fails=3 fail_timeout=5s;
            server :6443 max_fails=3 fail_timeout=5s;
            server :6443 max_fails=3 fail_timeout=5s;
        }

        server {
            listen 6443;
            proxy_pass rancher_api;
        }

        upstream rancher_http {
            least_conn;
            server 192.168.205.2:80 max_fails=3 fail_timeout=5s;
            server 192.168.205.3:80 max_fails=3 fail_timeout=5s;
            server 192.168.205.33:80 max_fails=3 fail_timeout=5s;
        }

        server {
            listen 80;
            proxy_pass rancher_http;
        }

        upstream rancher_https {
            least_conn;
            server 192.168.205.2:443 max_fails=3 fail_timeout=5s;
            server 192.168.205.3:443 max_fails=3 fail_timeout=5s;
            server 192.168.205.33:443 max_fails=3 fail_timeout=5s;
        }

        server {
            listen 443;
            proxy_pass rancher_https;
        }
    }

Save the file, switch SELinux to permissive mode (setenforce 0 lasts until the next reboot), and restart NGINX:

    sudo setenforce 0
    sudo systemctl restart nginx

Step 3 – Download the Installer Script on the Rocky Linux 8 Nodes

All the Rocky Linux 8 nodes used in this setup need to be configured with the RKE2 repositories that provide the required packages.
Install the curl tool (along with vim and wget) on your system:

    sudo yum -y install curl vim wget

Use curl to download the script that installs RKE2 on your Rocky Linux 8 servers:

    curl -sfL https://get.rke2.io --output install.sh

Make the script executable:

    chmod +x install.sh

To see the script's usage options, run:

    less ./install.sh

Once the script is downloaded, you can use it to install and configure both the RKE2 server and the RKE2 agent on the desired nodes.

Step 4 – Set up the First Server Node (Master Node)

Install the RKE2 server:

    sudo INSTALL_RKE2_TYPE=server ./install.sh

Expected output:

    [INFO]  finding release for channel stable
    [INFO]  using 1.23 series from channel stable
    Rocky Linux 8 - AppStream           19 kB/s | 4.8 kB     00:00
    Rocky Linux 8 - AppStream           11 MB/s | 9.6 MB     00:00
    Rocky Linux 8 - BaseOS              18 kB/s | 4.3 kB     00:00
    Rocky Linux 8 - BaseOS              11 MB/s | 6.7 MB     00:00
    Rocky Linux 8 - Extras              13 kB/s | 3.5 kB     00:00
    Rocky Linux 8 - Extras              41 kB/s |  11 kB     00:00
    Rancher RKE2 Common (stable)       1.7 kB/s | 1.7 kB     00:00
    Rancher RKE2 1.23 (stable)         4.8 kB/s | 4.6 kB     00:00
    Dependencies resolved.
    ================================================================================
    .......
    Transaction Summary
    ================================================================================
    Install  5 Packages

    Total download size: 34 M
    Installed size: 166 M
    Downloading Packages:
    .....

Once installed, you need to create a config file manually. The config file contains the tls-san parameter, which avoids certificate errors when nodes connect through the fixed registration address. Create the config file with:

    sudo vim /etc/rancher/rke2/config.yaml

Add the lines below to the file, replacing values where required:

    write-kubeconfig-mode: "0644"
    tls-san:
      - rke.computingpost.com
      - 192.168.205.9

Replace rke.computingpost.com with your fixed registration address and 192.168.205.9 with its IP address. Save the file, then start and enable the service:

    sudo systemctl start rke2-server
    sudo systemctl enable rke2-server

Confirm the status of the service after starting it:

    $ systemctl status rke2-server
    ● rke2-server.service - Rancher Kubernetes Engine v2 (server)
       Loaded: loaded (/usr/lib/systemd/system/rke2-server.service; disabled; vendor preset: disabled)
       Active: active (running) since Sat 2022-08-27 10:17:17 UTC; 1min 32s ago
         Docs: https://github.com/rancher/rke2#readme
      Process: 3582 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)
      Process: 3576 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)
      Process: 3573 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service (code=exited, status=0/SUCCESS)
     Main PID: 3587 (rke2)
        Tasks: 163
       Memory: 1.8G
       CGroup: /system.slice/rke2-server.service
               ├─3587 /usr/bin/rke2 server
    ....

Install kubectl:

    curl -LO "https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl"
    chmod +x kubectl
    sudo mv kubectl /usr/local/bin

Export the config:

    $ vim ~/.bashrc
    # Add the lines below
    export PATH=$PATH:/var/lib/rancher/rke2/bin
    export KUBECONFIG=/etc/rancher/rke2/rke2.yaml

    # Source the bashrc file
    $ source ~/.bashrc

After some time, check whether the node and pods are up:

    kubectl get nodes
    kubectl get pods -A

Obtain the token:

    $ sudo cat /var/lib/rancher/rke2/server/node-token
    K1079187d01ac73b1a17261a475cb1b8486144543fc59a189e0c4533ef252a26450::server:33f5c1a2b7721992be25e340ded19cac

Accessing the Cluster from Outside with kubectl

Copy /etc/rancher/rke2/rke2.yaml to ~/.kube/config on a machine located outside the cluster, then replace 127.0.0.1 with the IP or hostname of your RKE2 server. kubectl can now manage your RKE2 cluster.

    scp /etc/rancher/rke2/rke2.yaml user@ipaddress:~/.kube/config

Step 5 – Set up Additional Server Nodes (Master Nodes)

Now install RKE2 on the other two server nodes:

    curl -sfL https://get.rke2.io --output install.sh
    chmod +x install.sh
    sudo INSTALL_RKE2_TYPE=server ./install.sh

Once installed, create the config file:

    sudo vim /etc/rancher/rke2/config.yaml

Add the lines below to the file:

    server: https://rke.computingpost.com:9345
    token: [token from /var/lib/rancher/rke2/server/node-token on server node 1]
    write-kubeconfig-mode: "0644"
    tls-san:
      - rke.computingpost.com

If you don't have a DNS server, map an A record for the load balancer in the /etc/hosts file:

    $ sudo vi /etc/hosts
    192.168.205.9 rke.computingpost.com

Save the file, then start and enable rke2-server on each node, one node at a time:

    sudo systemctl start rke2-server
    sudo systemctl enable rke2-server

After some time, check the status of the nodes; we now have 3 master nodes configured.

Step 6 – Set up Agent Nodes (Worker Nodes)

To set up an agent node, install the RKE2 agent package using the commands below:

    curl -sfL https://get.rke2.io --output install.sh
    chmod +x install.sh
    sudo INSTALL_RKE2_TYPE=agent ./install.sh

If you don't have a DNS server, map an A record for the load balancer in the /etc/hosts file:

    $ sudo vi /etc/hosts
    192.168.205.9 rke.computingpost.com

Create the configuration file and modify it to suit your setup:

    $ sudo vim /etc/rancher/rke2/config.yaml
    server: https://rke.computingpost.com:9345
    token: [token from /var/lib/rancher/rke2/server/node-token on server node 1]

Start and enable the service:

    sudo systemctl start rke2-agent
    sudo systemctl enable rke2-agent

Check the nodes: the output shows one agent node added to the cluster. Then check the pods: the output lists all the available pods, including the RKE2 ingress and metrics pods deployed by default in the kube-system namespace.

Step 7 – Deploy an Application

Once the above configuration is complete, deploy an application on your cluster. For this guide, we will deploy a demo NGINX application:

    kubectl apply -f -
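Steps 5 and 6 write nearly the same /etc/rancher/rke2/config.yaml by hand on every joining node: agents get only `server` and `token`, while additional servers also get `write-kubeconfig-mode` and `tls-san`. If you have several nodes to join, a small generator can keep those files consistent. The bash sketch below is a hypothetical helper (`render_join_config` is not part of RKE2 or its installer); it only prints the same keys used in this guide, and you are assumed to have copied the token value from server node 1 yourself.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of RKE2): prints the config.yaml contents that
# Step 5 (extra server nodes) and Step 6 (agent nodes) write by hand.
#
# Usage: render_join_config ROLE SERVER_URL TOKEN [TLS_SAN...]
#   ROLE       "server" or "agent"
#   SERVER_URL fixed registration address, e.g. https://rke.computingpost.com:9345
#   TOKEN      contents of /var/lib/rancher/rke2/server/node-token on server node 1
#   TLS_SAN    extra certificate names; only written for server nodes
render_join_config() {
  if (( $# < 3 )); then
    echo "usage: render_join_config ROLE SERVER_URL TOKEN [TLS_SAN...]" >&2
    return 1
  fi
  local role=$1 server_url=$2 token=$3
  shift 3

  case "$role" in
    server|agent) ;;
    *) echo "unknown role: $role (expected server or agent)" >&2; return 1 ;;
  esac

  printf 'server: %s\n' "$server_url"
  printf 'token: %s\n' "$token"

  # Agents only need the registration address and token; servers also get
  # the kubeconfig mode and any tls-san entries, as in Step 5.
  if [[ "$role" == "server" ]]; then
    printf 'write-kubeconfig-mode: "0644"\n'
    if (( $# > 0 )); then
      printf 'tls-san:\n'
      local san
      for san in "$@"; do
        printf '  - %s\n' "$san"
      done
    fi
  fi
}

# Example (on server2/server3, with TOKEN set to the value copied from server 1):
#   sudo mkdir -p /etc/rancher/rke2
#   render_join_config server https://rke.computingpost.com:9345 "$TOKEN" \
#     rke.computingpost.com | sudo tee /etc/rancher/rke2/config.yaml
```

Printing to stdout and piping through `sudo tee` keeps the function free of root-only side effects, so you can inspect the output before writing it to the node.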

Text

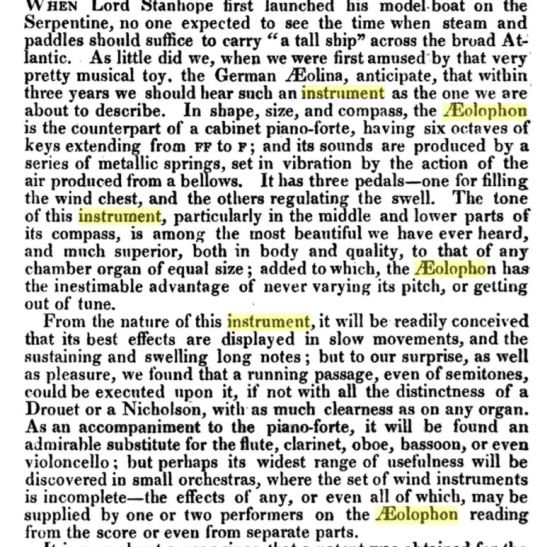

So here’s a typical evening for me. A post of a weird instrument goes by on my dash. Says it’s a “solophon”. “What the hell is that?”. I Google and fail to find any immediate information, but one article mentions a “colophon”. This amuses me so I am sidetracked to look it up. I find this article from “ARCANA OF SCIENCE AND ARTS: OR AN ANNUAL REGISTER OF USEFUL INVENTIONS AND IMPROVEMENTS” (1831).

No mention of a colophon. Must be confusion from the diphthong AE. But what the hell is this Aeolophon thing? And for that matter, what is this “pretty music toy, the German Aeolina”?

The German Aeolina...I find a snippet about this in “The Anglo-German Concertina: A Social History, Volume 1.” (Volume 1! There are more!!)

So...this Aeolina is a kind of precursor to a mouth organ/harmonica. Ok. Satisfaction. Now - what was this Aeolophon thing the first article was so excited about?

Finally...FINALLY I finally find a great (obscure) page and this very useful paragraph from “REED ORGANS IN ENGLAND - A comprehensive study of reed organs in England, Scotland and Wales”. http://tardis.dl.ac.uk/FreeReed/organ_book/node4.html

“In 1814, Bernhard Eschenbach of Königshoven in Bavaria invented a keyboard with vibrators, called the ‘Organo-Violine’. In 1816, Johan Caspar Schlimbach of Ohrdurf improved it and called it the ‘AEoline’. [...] Many other builders started to make similar instruments, adding their own improvements and inventions to them, and called them by a large variety of names, such as Aeolidon (which had bent tongues), Adelphone, Adiaphonon, Harmonikon, Harmonine, Melodium, Aeolian, Panorgue, Poikolorgue, Seraphine.”

and a little further down...this line: “Around 1827, several people in England and Europe turned their attention to the production of musical instruments on this [reed organ] principle. The first successful was possibly the Eolophon of Mr. Day.”

So...the thrilling new invention from the old article is a reed organ. The time periods work, the description works, and the article even mentions the fellow Day as the patent holder (I cut the text off that says that, sorry!). It’s just a different spelling Aeolophon/Eolophon. And that “pretty music toy, the German Aeolina” MUST be this Aeoline in this article - another sort of reed organ (thanks for misspelling all these, writer from 1831!) and not an Aeolina, the mouth organ thing (though that too is a reed instrument!).

Along the way in this journey, I discovered that I don’t know the difference between a pipe organ and a reed organ, and so I had to go read about this. (I think I will make a separate post about that when it’s not so very late.)

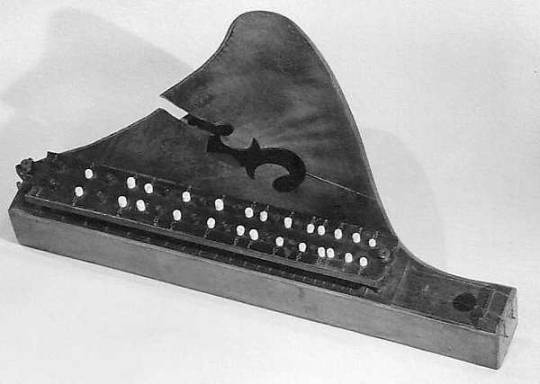

And...at last...because I must...back to the first...a solophon. It’s a German version of a bowed zither. Here is a plain one (or maybe it lost the ‘button box’ the second pic has?) and then one with buttons that I guess make it easier to learn to make “chords” (intervals really...only two strings!) by pressing the buttons. Good night! Z_Z

(last pic from: https://www.metmuseum.org/art/collection/search/505185)

#solophon#aeololphon#Reed organ#organ#bowed zither#No one will even read this!#I have a problem#I'm a triviaholic

Text

Maritime connectivity specialist Marlink finds another private equity buyer

https://sciencespies.com/space/maritime-connectivity-specialist-marlink-finds-another-private-equity-buyer/

TAMPA, Fla. — U.S. private equity giant Providence Equity Partners is in exclusive talks to buy a majority stake in Marlink, the maritime connectivity provider.

Apax Partners, a French private equity firm that bought Marlink from Airbus in 2016 for an undisclosed sum, confirmed the talks in a brief statement.

Industry sources told SpaceNews in March that Apax had put Marlink up for sale.

The timing surprised some in the financial community, according to a private equity source at the time, because two of Marlink’s main competitors — and therefore potential suitors — were occupied with their restructuring processes.

Speedcast exited Chapter 11 bankruptcy protection March 11 while Global Eagle Entertainment, now called Anuvu, emerged from its process March 23.

Marlink acquired customer contracts and assets from Anuvu after it exited Chapter 11, including more than 450 VSAT sites serving humanitarian, oil & gas, embassy and mining customers.

In April, Marlink further diversified its operations by completing its acquisition of satellite solutions provider ITC Global, which serves maritime, energy and enterprise markets.

With headquarters in France and Norway, Marlink says it serves more than 130 countries and employs more than 1,000 people.

Providence has about $45 billion in aggregate capital commitments, specializing in the media, communications, education, software and services industries.

Between 2001 and 2021, the private equity firm invested in European technology, media, and telecom businesses including Node4, MasMovil, Mach, MobilServ, M7 Group, Ono, Comhem, TDC, Eircom, Bite, Kabel Deutschland and Casema.

“Any transaction would be subject to customary and regulatory approvals, including the completion of necessary consultations with the Company’s works council,” Providence stated in a news release.

The companies declined to disclose the transaction’s financial details.

#Space

Text

Node4 expands Microsoft expertise with TNP buy

July 21, 2021 at 06:00PM

Text

MySQL NDB Cluster Replication Topologies (Part – II)

In the previous blog, we set up MySQL NDB Cluster replication between one source and one replica cluster. In this blog, we will discuss replication between one source and three replica clusters.

Note: With MySQL version 8.0.21, we have started changing the term “master” to “source” and the term “slave” to “replica”, so in this blog we will use the terms ‘source’ and ‘replica’ wherever applicable.

The main advantage of this topology is that it provides ‘local’ reads in geographically distant areas as well as increased redundancy in case of issues.

Let’s create four MySQL NDB Clusters with the following environment; one will be the ‘source’ cluster and the rest will be ‘replica’ clusters:

- MySQL NDB Cluster version (latest GA version)
- 1 management node
- 4 data nodes
- 1 MySQLD
- Configuration slots for up to 4 additional API nodes

Replication schema diagram:

    MySQL (test01) ------------------------> MySQL (test05)
    (CLUSTER 1, Source1)                     (CLUSTER 2, Replica1)
          |
          |------------------------------> MySQL (test09)
          |                                (CLUSTER 3, Replica2)
          |
          |------------------------------> MySQL (test13)
                                           (CLUSTER 4, Replica3)

- test01: MySQL server host name of the source cluster, IP: 100.103.21.66
- test05: MySQL server host name of the replica1 cluster, IP: 100.103.21.65
- test09: MySQL server host name of the replica2 cluster, IP: 100.103.21.70
- test13: MySQL server host name of the replica3 cluster, IP: 100.103.21.61

Step 1: Start the ‘source’ cluster, then start each ‘replica’ cluster one by one: the first, the second, and the third. Now all the clusters are up and running.

Step 2:

a) Let’s clean up the old binary log files, if any exist. From the source MySQL server, delete all existing binary log files (if any) with the ‘reset master’ command.
The status of the binary log file can be checked with the ‘show master status’ command:

    mysql> reset master;
    mysql> show master status;

b) Log in as the ‘root’ user on the source MySQL server. Then update the root user so that other hosts can connect to this source MySQL server as ‘root’:

    $ bin/mysql -u root -P"13010"
    mysql> UPDATE mysql.user SET host='%' where user='root' and host='localhost';
    mysql> flush privileges;

On the first replica’s MySQL server, point replication at the source’s MySQL server:

    $ bin/mysql -u root -P"13010"
    mysql> stop replica;
    mysql> reset replica all;
    mysql> CHANGE MASTER TO MASTER_HOST='100.103.21.66', MASTER_USER='root', MASTER_PORT=13010;
    mysql> start replica;

Now check the state of the replication:

    mysql> show replica status\G

The status shows that we have successfully created replication between the source and the first replica NDB Cluster.

Step 3: Now let’s create a database ‘test1’ and a table ‘t1’, run transactions from the source cluster, and check the results from the replica’s MySQL server. We will run transactions on the table ‘t1’ continuously, with a high number of rows. The idea is to add the other two replicas at intervals while transactions are ongoing, and then see whether all the replicas end up with the same row count. Here, I am inserting rows through an internal tool; this can also be done with any script.

Let’s check from the replica’s MySQL server whether all the database objects and rows are being populated on the first replica cluster. The database ‘test1’ and the table ‘t1’ appear on the replica, with rows being populated from the source cluster. Checking the replication status shows that rows are being applied to the table ‘t1’ in batches.

Let’s start the second replica server.
Here, we need to log in as root and issue the change master command:

    $ bin/mysql -u root -P"13010"
    mysql> CHANGE MASTER TO MASTER_HOST='100.103.21.66', MASTER_USER='root', MASTER_PORT=13010;
    mysql> start replica;

Check the status of the second replica server:

    mysql> show replica status\G

Let’s check whether the database, table, and rows are being populated on the second replica MySQL server. Rows are being populated in table ‘t1’.

Let’s start the third replica MySQL server. Again, log in as root and issue the change master command:

    $ bin/mysql -u root -P"13010"
    mysql> CHANGE MASTER TO MASTER_HOST='100.103.21.66', MASTER_USER='root', MASTER_PORT=13010;
    mysql> start replica;

Let’s check the replication status. Since replication has started, let’s check the status of the database, table, and rows: rows have started populating table ‘t1’ on the third replica MySQL server. So replication has been established successfully between the source MySQL server and all three replica MySQL servers.

At the end, let’s check the final row count on the source MySQL server and then on all three replica MySQL servers. On the source MySQL server, the final row count for table ‘t1’ is ‘3458910’. On the first replica MySQL server, the replica has read all the relay logs and the final row count is ‘3458910’, the same as the source. The second replica MySQL server has the same row count, ‘3458910’, and so does the third replica MySQL server, ‘3458910’.
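The final row-count comparison above was done by hand on each server; it can also be scripted. The bash sketch below assumes the host IPs and port 13010 from this setup, that the `mysql` client is on PATH, and that root can connect to every host over TCP without a password (the post lets root connect from any host and passes no password to CHANGE MASTER, but treat this as an assumption about your environment). `row_count`, `counts_match`, and `compare_row_counts` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: compare COUNT(*) of test1.t1 on the source against each replica.
# Assumptions (not from the original post): the mysql client is on PATH and
# root can connect to every host over TCP on port 13010 without a password.

SOURCE_HOST=100.103.21.66                                  # test01
REPLICA_HOSTS=(100.103.21.65 100.103.21.70 100.103.21.61)  # test05, test09, test13
PORT=13010

# row_count HOST: print the number of rows in test1.t1 on HOST
row_count() {
  mysql -h "$1" -P "$PORT" -u root --connect-timeout=5 -N -B \
    -e 'SELECT COUNT(*) FROM test1.t1'
}

# counts_match EXPECTED ACTUAL...: succeed only if every ACTUAL equals EXPECTED
counts_match() {
  local expected=$1 actual
  shift
  for actual in "$@"; do
    [[ "$actual" == "$expected" ]] || return 1
  done
}

# compare_row_counts: query the source, then every replica, and report
compare_row_counts() {
  local expected host count
  local -a counts=()
  expected=$(row_count "$SOURCE_HOST") || return 1
  echo "source  $SOURCE_HOST: $expected"
  for host in "${REPLICA_HOSTS[@]}"; do
    count=$(row_count "$host") || return 1
    echo "replica $host: $count"
    counts+=("$count")
  done
  if counts_match "$expected" "${counts[@]}"; then
    echo "all replicas match the source"
  else
    echo "row counts differ; a replica may still be applying relay logs" >&2
    return 1
  fi
}
```

Source the file and call `compare_row_counts` once inserts on the source have stopped; it returns non-zero if any replica's count differs from the source's. Keeping the comparison in `counts_match` separate from the queries makes the check itself easy to reuse with counts gathered some other way.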
So all rows of table ‘t1’ from the source have been replicated successfully to all three replica MySQL servers. In this demo, I have only shown row insertion; you can run any kind of transaction, such as updates, deletes, and DDL operations, on the source MySQL server, and the same changes will be available on all the replica MySQL servers.

This concludes our discussion of the second replication topology in NDB Cluster.

https://clustertesting.blogspot.com/2021/05/mysql-ndb-cluster-replication_18.html

Text

Node4 teams up with Elastio to fight ransomware

http://i.securitythinkingcap.com/TC8RBj

Text

Providence leads race to buy £300m IT group Node4 - Sky News

The buyout giant Providence Equity Partners is leading a £300m race to buy Node4, one of Britain’s army of fast-growing IT services providers, in a deal that will net another big windfall for its founder. Sky News understands that Providence has emerged in recent weeks as the frontrunner to buy the Derby-based company. It would be the latest in a series of so-called pass-the-parcel deals…

Text

Node4 achieves PCI compliance certification for physical controls at its data centres

Managed service provider successfully attains PCI compliance for ensuring a continually high standard of physical security at three data centre locations.

Derby, UK – 24 September 2019 – Node4, the cloud, data centre and communications solutions provider, has today announced the completion of its Payment Card Industry (PCI) compliance for physical controls 9 and 12, across its three data centres.…

Text

BrandPost: Node4: Where Exceptional Service Is More than a Motto

https://bit.ly/3d2VXMT

Photo

#7 links for paying the diya (blood money) of #خالد_نقا_العازمي (Khaled Naqa Al-Azmi). I ask God to accept it from everyone; whoever guides others to good is like the one who does it. Total donations so far. alawazm-kw.com node1.alawazm-kw.com node2.alawazm-kw.com node3.alawazm-kw.com node4.alawazm-kw.com node5.alawazm-kw.com node6.alawazm-kw.com https://www.instagram.com/p/Bw1vCdSg11W/?utm_source=ig_tumblr_share&igshid=pyvrl21brqac

Text

BrandPost: Node4: Where Exceptional Service Is More than a Motto

“Exceptional Service as a Standard is our motto, but it’s not just a buzzword,” says Marc Woosnam, technical director of U.K.-based Node4. “It’s actually fully ingrained into our culture. The entire company has been through exceptional service training and it’s what our customers expect in every interaction and at every phase in our work with them.”

As one of the fastest-growing technology services and solutions providers in the U.K., Node4 has a lot going in its favor. Woosnam cites a few of the things that set it apart.

“The fact that we control our entire portfolio ourselves is really important,” he says. “We own all of our own data centers and have a low-latency, high-performance core network running through them, with which we can provide our cloud services and our own hosted enterprise-grade SIP and collaboration platforms. In this way, we’re able to maintain stringent control over how available and how reliable the services and solutions we offer are, which in turn gives our customers the peace of mind that comes with having a truly trusted partner.”

From CIO: https://www.cio.com/article/3562360/node4-where-exceptional-service-is-more-than-a-motto.html#tk.rss_all
