#Offloaded MySQL Service

Explore tagged Tumblr posts

Text

🚀 Explore the Horizon of Virtualization with VPSDime! 🚀

Embark on a seamless journey through the realms of high-performance hosting without burning a hole in your pocket! With VPSDime, you unlock a treasure trove of features tailored to meet the evolving demands of developers and businesses alike. Here’s a sneak peek into what awaits you: 🖥️ Diverse Linux Distribution Support: Dive into a versatile hosting environment with a broad spectrum of…

View On WordPress

#Affordable Hosting#Developer-Friendly Hosting#Global Hosting Solutions#linux hosting#Offloaded MySQL Service#Virtual Private Server#VPSDime

0 notes

Text

Cloud Database and DBaaS Market in the United States entering an era of unstoppable scalability

Cloud Database And DBaaS Market was valued at USD 17.51 billion in 2023 and is expected to reach USD 77.65 billion by 2032, growing at a CAGR of 18.07% from 2024-2032.

Cloud Database and DBaaS Market is experiencing robust expansion as enterprises prioritize scalability, real-time access, and cost-efficiency in data management. Organizations across industries are shifting from traditional databases to cloud-native environments to streamline operations and enhance agility, creating substantial growth opportunities for vendors in the USA and beyond.

U.S. Market Sees High Demand for Scalable, Secure Cloud Database Solutions

Cloud Database and DBaaS Market continues to evolve with increasing demand for managed services, driven by the proliferation of data-intensive applications, remote work trends, and the need for zero-downtime infrastructures. As digital transformation accelerates, businesses are choosing DBaaS platforms for seamless deployment, integrated security, and faster time to market.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6586

Market Keyplayers:

Google LLC (Cloud SQL, BigQuery)

Nutanix (Era, Nutanix Database Service)

Oracle Corporation (Autonomous Database, Exadata Cloud Service)

IBM Corporation (Db2 on Cloud, Cloudant)

SAP SE (HANA Cloud, Data Intelligence)

Amazon Web Services, Inc. (RDS, Aurora)

Alibaba Cloud (ApsaraDB for RDS, ApsaraDB for MongoDB)

MongoDB, Inc. (Atlas, Enterprise Advanced)

Microsoft Corporation (Azure SQL Database, Cosmos DB)

Teradata (VantageCloud, ClearScape Analytics)

Ninox (Cloud Database, App Builder)

DataStax (Astra DB, Enterprise)

EnterpriseDB Corporation (Postgres Cloud Database, BigAnimal)

Rackspace Technology, Inc. (Managed Database Services, Cloud Databases for MySQL)

DigitalOcean, Inc. (Managed Databases, App Platform)

IDEMIA (IDway Cloud Services, Digital Identity Platform)

NEC Corporation (Cloud IaaS, the WISE Data Platform)

Thales Group (CipherTrust Cloud Key Manager, Data Protection on Demand)

Market Analysis

The Cloud Database and DBaaS Market is being shaped by rising enterprise adoption of hybrid and multi-cloud strategies, growing volumes of unstructured data, and the rising need for flexible storage models. The shift toward as-a-service platforms enables organizations to offload infrastructure management while maintaining high availability and disaster recovery capabilities.

Key players in the U.S. are focusing on vertical-specific offerings and tighter integrations with AI/ML tools to remain competitive. In parallel, European markets are adopting DBaaS solutions with a strong emphasis on data residency, GDPR compliance, and open-source compatibility.

Market Trends

Growing adoption of NoSQL and multi-model databases for unstructured data

Integration with AI and analytics platforms for enhanced decision-making

Surge in demand for Kubernetes-native databases and serverless DBaaS

Heightened focus on security, encryption, and data governance

Open-source DBaaS gaining traction for cost control and flexibility

Vendor competition intensifying with new pricing and performance models

Rise in usage across fintech, healthcare, and e-commerce verticals

Market Scope

The Cloud Database and DBaaS Market offers broad utility across organizations seeking flexibility, resilience, and performance in data infrastructure. From real-time applications to large-scale analytics, the scope of adoption is wide and growing.

Simplified provisioning and automated scaling

Cross-region replication and backup

High-availability architecture with minimal downtime

Customizable storage and compute configurations

Built-in compliance with regional data laws

Suitable for startups to large enterprises

Forecast Outlook

The market is poised for strong and sustained growth as enterprises increasingly value agility, automation, and intelligent data management. Continued investment in cloud-native applications and data-intensive use cases like AI, IoT, and real-time analytics will drive broader DBaaS adoption. Both U.S. and European markets are expected to lead in innovation, with enhanced support for multicloud deployments and industry-specific use cases pushing the market forward.

Access Complete Report: https://www.snsinsider.com/reports/cloud-database-and-dbaas-market-6586

Conclusion

The future of enterprise data lies in the cloud, and the Cloud Database and DBaaS Market is at the heart of this transformation. As organizations demand faster, smarter, and more secure ways to manage data, DBaaS is becoming a strategic enabler of digital success. With the convergence of scalability, automation, and compliance, the market promises exciting opportunities for providers and unmatched value for businesses navigating a data-driven world.

Related reports:

U.S.A leads the surge in advanced IoT Integration Market innovations across industries

U.S.A drives secure online authentication across the Certificate Authority Market

U.S.A drives innovation with rapid adoption of graph database technologies

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Mail us: [email protected]

#Cloud Database and DBaaS Market#Cloud Database and DBaaS Market Growth#Cloud Database and DBaaS Market Scope

0 notes

Text

Integrating ROSA Applications with AWS Services (CS221)

As cloud-native architectures become the backbone of modern application deployments, combining the power of Red Hat OpenShift Service on AWS (ROSA) with native AWS services unlocks immense value for developers and DevOps teams alike. In this blog post, we explore how to integrate ROSA-hosted applications with AWS services to build scalable, secure, and cloud-optimized solutions — a key skill set emphasized in the CS221 course.

🚀 What is ROSA?

Red Hat OpenShift Service on AWS (ROSA) is a managed OpenShift platform that runs natively on AWS. It allows organizations to deploy Kubernetes-based applications while leveraging the scalability and global reach of AWS, without managing the underlying infrastructure.

With ROSA, you get:

Fully managed OpenShift clusters

Integrated with AWS IAM and billing

Access to AWS services like RDS, S3, DynamoDB, Lambda, etc.

Native CI/CD, container orchestration, and operator support

🧩 Why Integrate ROSA with AWS Services?

ROSA applications often need to interact with services like:

Amazon S3 for object storage

Amazon RDS or DynamoDB for database integration

Amazon SNS/SQS for messaging and queuing

AWS Secrets Manager or SSM Parameter Store for secrets management

Amazon CloudWatch for monitoring and logging

Integration enhances your application’s:

Scalability — Offload data, caching, messaging to AWS-native services

Security — Use IAM roles and policies for fine-grained access control

Resilience — Rely on AWS SLAs for critical components

Observability — Monitor and trace hybrid workloads via CloudWatch and X-Ray

🔐 IAM and Permissions: Secure Integration First

A crucial part of ROSA-AWS integration is managing IAM roles and policies securely.

Steps:

Create IAM Roles for Service Accounts (IRSA):

ROSA supports IAM Roles for Service Accounts, allowing pods to securely access AWS services without hardcoding credentials.

Attach IAM Policy to the Role:

Example: An application that uploads files to S3 will need the following permissions:{ "Effect": "Allow", "Action": ["s3:PutObject", "s3:GetObject"], "Resource": "arn:aws:s3:::my-bucket-name/*" }

Annotate OpenShift Service Account:

Use oc annotate to associate your OpenShift service account with the IAM role.

📦 Common Integration Use Cases

1. Storing App Logs in S3

Use a Fluentd or Loki pipeline to export logs from OpenShift to Amazon S3.

2. Connecting ROSA Apps to RDS

Applications can use standard drivers (PostgreSQL, MySQL) to connect to RDS endpoints — make sure to configure VPC and security groups appropriately.

3. Triggering AWS Lambda from ROSA

Set up an API Gateway or SNS topic to allow OpenShift applications to invoke serverless functions in AWS for batch processing or asynchronous tasks.

4. Using AWS Secrets Manager

Mount secrets securely in pods using CSI drivers or inject them using operators.

🛠 Hands-On Example: Accessing S3 from ROSA Pod

Here’s a quick walkthrough:

Create an IAM Role with S3 permissions.

Associate the role with a Kubernetes service account.

Deploy your pod using that service account.

Use AWS SDK (e.g., boto3 for Python) inside your app to access S3.

oc create sa s3-access oc annotate sa s3-access eks.amazonaws.com/role-arn=arn:aws:iam::<account-id>:role/S3AccessRole

Then reference s3-access in your pod’s YAML.

📚 ROSA CS221 Course Highlights

The CS221 course from Red Hat focuses on:

Configuring service accounts and roles

Setting up secure access to AWS services

Using OpenShift tools and operators to manage external integrations

Best practices for hybrid cloud observability and logging

It’s a great choice for developers, cloud engineers, and architects aiming to harness the full potential of ROSA + AWS.

✅ Final Thoughts

Integrating ROSA with AWS services enables teams to build robust, cloud-native applications using best-in-class tools from both Red Hat and AWS. Whether it's persistent storage, messaging, serverless computing, or monitoring — AWS services complement ROSA perfectly.

Mastering these integrations through real-world use cases or formal training (like CS221) can significantly uplift your DevOps capabilities in hybrid cloud environments.

Looking to Learn or Deploy ROSA with AWS?

HawkStack Technologies offers hands-on training, consulting, and ROSA deployment support. For more details www.hawkstack.com

0 notes

Video

youtube

Boost Database Performance with Amazon RDS Read Replicas NOW!

Enhance your database performance and scalability with Amazon RDS Read Replicas, a powerful feature designed to improve the read performance of your Amazon RDS databases. Ideal for applications with high read traffic or complex queries, Read Replicas distribute database load and provide a scalable solution for handling increased workloads.

Key Features:

- Read Traffic Distribution: Offload read-heavy database queries to Read Replicas, reducing the load on the primary instance and improving overall application performance. - Automatic Replication: Enjoy seamless data replication from the primary database to Read Replicas with minimal configuration, ensuring data consistency across your environment. - Scalable Architecture: Easily add or remove Read Replicas as needed to accommodate changes in read traffic and database workloads, ensuring your database infrastructure scales with your application demands. - Backup and Recovery: Benefit from continuous data replication for disaster recovery and backup purposes, with Read Replicas providing an additional layer of data protection. - Low Latency: Reduce query response times and improve user experience by utilizing Read Replicas in different geographic regions to serve local read requests more efficiently.

Use Cases:

- High Read Traffic Applications: Ideal for applications with high read-to-write ratios, such as reporting tools, analytical applications, and content management systems. - Global Applications: Enhance performance for globally distributed applications by deploying Read Replicas in different AWS regions, reducing latency for users worldwide. - Data Analytics: Improve the performance of data analytics and business intelligence workloads by offloading read queries to dedicated Read Replicas, ensuring faster query execution.

Key Benefits:

1. Enhanced Performance: Boost application performance by distributing read traffic across multiple instances, reducing latency and improving response times. 2. Scalability: Easily scale your database environment to handle increased read traffic and growing workloads with the flexibility to add or remove Read Replicas as needed. 3. Improved User Experience: Deliver a faster and more responsive user experience by offloading complex read queries and serving data from geographically optimized replicas. 4. Data Protection: Utilize Read Replicas as part of your backup and disaster recovery strategy, providing an additional layer of data durability and redundancy. 5. Cost Efficiency: Optimize your database costs by balancing the load between the primary instance and Read Replicas, reducing the need for more expensive scaling solutions.

Conclusion:

Amazon RDS Read Replicas offer an effective solution for boosting database performance and scalability, allowing you to manage high read traffic and complex queries with ease. By distributing read loads, improving response times, and enhancing data protection, Read Replicas help you build a more resilient and efficient database infrastructure. Explore how this feature can elevate your database capabilities, ensuring your applications run smoothly and efficiently.

amazon rds read replicas,aws rds read replica,read replicas,aws,aws cloud,amazon web services,cloud computing,amazon rds,aws tutorial,aws rds,What Is Amazon RDS Read Replicas?,What Is Amazon RDS Read Replicas Features?,What Is Amazon RDS Read Replicas Use Cases?,What Is Amazon RDS Read Replicas Benefits?,How it Work What Is Amazon RDS Read Replicas?,Boost Database Performance with Amazon RDS Read Replicas NOW!,multi az,aws rds mysql,cloudolus,free,aws bangla

amazon rds,rds multi az deployment,rds,mysql rds aws,cloud computing,aws rds como instalar,rds mysql workbench,aws cloud,amazon web services,cloudolus,ClouDolusPro,rds mysql configuration,mysql database tutorial,read replicas,aws tutorial,high availability,What Is Amazon RDS Multi-AZ?,What Is Amazon RDS Multi-AZ Key Features?,What Is Amazon RDS Multi-AZ Use Cases?,What Is Amazon RDS Multi-AZ Benefits?,How it Work What Is Amazon RDS Multi-AZ?,aws rds

#youtube#amazon rds read replicas#amazon rds read replicasaws rds read replicaread replicasawsaws cloudamazon web servicescloud computingamazon rdsaws tutorialaws rdsWhat Is#amazon rdsrds multi az deploymentrdsmysql rds awscloud computingaws rds como instalarrds mysql workbenchaws cloudamazon web servicescloudolu#cloudolus#cloudoluspro

0 notes

Text

Comparing Amazon RDS and Aurora: Key Differences Explained

When it comes to choosing a database solution in the cloud, Amazon Web Services (AWS) offers a range of powerful options, with Amazon Relational Database Service (RDS) and Amazon Aurora being two of the most popular. Both services are designed to simplify database management, but they cater to different needs and use cases. In this blog, we’ll delve into the key differences between Amazon RDS and Aurora to help you make an informed decision for your applications.

If you want to advance your career at the AWS Course in Pune, you need to take a systematic approach and join up for a course that best suits your interests and will greatly expand your learning path.

What is Amazon RDS?

Amazon RDS is a fully managed relational database service that supports multiple database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. It automates routine database tasks such as backups, patching, and scaling, allowing developers to focus more on application development rather than database administration.

Key Features of RDS

Multi-Engine Support: Choose from various database engines to suit your specific application needs.

Automated Backups: RDS automatically backs up your data and provides point-in-time recovery.

Read Replicas: Scale read operations by creating read replicas to offload traffic from the primary instance.

Security: RDS offers encryption at rest and in transit, along with integration with AWS Identity and Access Management (IAM).

What is Amazon Aurora?

Amazon Aurora is a cloud-native relational database designed for high performance and availability. It is compatible with MySQL and PostgreSQL, offering enhanced features that improve speed and reliability. Aurora is built to handle demanding workloads, making it an excellent choice for large-scale applications.

Key Features of Aurora

High Performance: Aurora can deliver up to five times the performance of standard MySQL databases, thanks to its unique architecture.

Auto-Scaling Storage: Automatically scales storage from 10 GB to 128 TB without any downtime, adapting to your needs seamlessly.

High Availability: Data is automatically replicated across multiple Availability Zones for robust fault tolerance and uptime.

Serverless Option: Aurora Serverless automatically adjusts capacity based on application demand, ideal for unpredictable workloads.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the AWS Online Training.

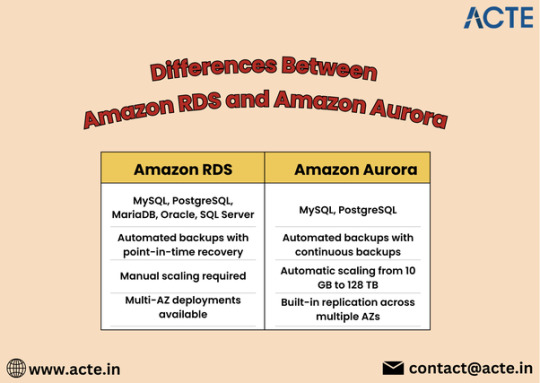

Key Differences Between Amazon RDS and Aurora

1. Performance and Scalability

One of the most significant differences lies in performance. Aurora is engineered for high throughput and low latency, making it a superior choice for applications that require fast data access. While RDS provides good performance, it may not match the efficiency of Aurora under heavy loads.

2. Cost Structure

Both services have different pricing models. RDS typically has a more straightforward pricing structure based on instance types and storage. Aurora, however, incurs costs based on the volume of data stored, I/O operations, and instance types. While Aurora may seem more expensive initially, its performance gains can result in cost savings for high-traffic applications.

3. High Availability and Fault Tolerance

Aurora inherently offers better high availability due to its design, which replicates data across multiple Availability Zones. While RDS does offer Multi-AZ deployments for high availability, Aurora’s replication and failover mechanisms provide additional resilience.

4. Feature Set

Aurora includes advanced features like cross-region replication and global databases, which are not available in standard RDS. These capabilities make Aurora an excellent option for global applications that require low-latency access across regions.

5. Management and Maintenance

Both services are managed by AWS, but Aurora requires less manual intervention for scaling and maintenance due to its automated features. This can lead to reduced operational overhead for businesses relying on Aurora.

When to Choose RDS or Aurora

Choose Amazon RDS if you need a straightforward, managed relational database solution with support for multiple engines and moderate performance needs.

Opt for Amazon Aurora if your application demands high performance, scalability, and advanced features, particularly for large-scale or global applications.

Conclusion

Amazon RDS and Amazon Aurora both offer robust solutions for managing relational databases in the cloud, but they serve different purposes. Understanding the key differences can help you select the right service based on your specific requirements. Whether you go with the simplicity of RDS or the advanced capabilities of Aurora, AWS provides the tools necessary to support your database needs effectively.

0 notes

Text

What Are Examples of AWS Managed Services?

In today's fast-paced digital world, businesses are increasingly relying on cloud services to optimize their operations and deliver exceptional user experiences. Amazon Web Services (AWS) has emerged as a dominant player in the cloud computing space, providing a wide array of services to cater to diverse business needs. AWS-managed services offer a remarkable advantage by offloading the burden of managing infrastructure and allowing organizations to focus on their core competencies. In this blog, we will explore some prominent examples of AWS-managed services and delve into their benefits, highlighting how Flentas, an AWS consulting partner, also offers managed services to help businesses thrive in the cloud era.

Amazon RDS (Relational Database Service)

One of the most widely used managed services offered by AWS is Amazon RDS. It simplifies the management of relational databases such as MySQL, PostgreSQL, Oracle, and SQL Server. Amazon RDS handles essential database tasks like provisioning, patching, backup, recovery, and scaling, allowing developers to focus on their applications rather than database administration. With features like automated backups, automated software patching, and easy replication, Amazon RDS streamlines database management and improves availability, durability, and performance.

Amazon DynamoDB

For those in need of a NoSQL database, Amazon DynamoDB is an excellent choice. It is a fully managed, highly scalable, and secure database service that supports both document and key-value data models. DynamoDB takes care of infrastructure provisioning, software patching, and database scaling, ensuring high availability and performance. With its seamless integration with other AWS services, such as Lambda and API Gateway, DynamoDB becomes an ideal choice for building serverless applications and microservices that require low-latency data access.

Amazon Elastic Beanstalk

Amazon Elastic Beanstalk provides a platform as a service (PaaS) for deploying and managing applications without worrying about infrastructure details. It supports popular programming languages like Java, .NET, Python, PHP, Ruby, and more. Elastic Beanstalk handles the deployment, capacity provisioning, load balancing, and auto-scaling of your applications, allowing developers to focus on writing code. It integrates with other AWS services, enabling easy access to services like RDS, DynamoDB, and S3. With Elastic Beanstalk, developers can quickly deploy and manage their applications, reducing time-to-market and enhancing productivity.

Amazon Redshift

When it comes to data warehousing, Amazon Redshift is a powerful managed service offered by AWS. It is specifically designed for big data analytics and provides high-performance querying and scalable storage. Redshift takes care of infrastructure management, including provisioning, patching, and backups, while also delivering automatic data compression and encryption. With its columnar storage technology, parallel query execution, and integration with popular business intelligence tools like Tableau, Redshift enables businesses to analyze vast amounts of data efficiently and derive valuable insights.

AWS Lambda

AWS Lambda is a serverless computing service that allows you to run your code without provisioning or managing servers. It executes your code in response to events, such as changes to data in an S3 bucket or updates to a DynamoDB table. AWS Lambda scales automatically, ensuring that your code runs smoothly even under high loads. By utilizing Lambda, businesses can reduce costs by paying only for the actual compute time consumed by their applications. It seamlessly integrates with other AWS services, enabling developers to build highly scalable and event-driven architectures easily.

In the realm of cloud computing, AWS-managed services provide businesses with a competitive edge by alleviating the complexities of infrastructure management. This blog has explored a few notable examples of AWS-managed services, including Amazon RDS, Amazon S3, Amazon EC2, Amazon Athena, and AWS Lambda. These services enable organizations to organizations to optimize their operations, reduce costs, and rapidly innovate in the cloud. It's worth noting that Flentas, as an AWS consulting partner, also offers AWS cloud managed services to assist businesses in harnessing the full potential of AWS. By partnering with Flentas, organizations can leverage our expertise in cloud technology.

For more details about our services please visit our website – Flentas Services

0 notes

Text

What is Amazon Aurora?

Explore in detail about Amazon aurora and its features.

Amazon Aurora is a fully managed relational database service offered by Amazon Web Services (AWS). It is designed to provide high performance, availability, and durability while being compatible with MySQL and PostgreSQL database engines.

Amazon Aurora, a powerful and scalable database solution provided by AWS, is a fantastic choice for businesses looking to streamline their data management. When it comes to optimizing your AWS infrastructure, consider leveraging professional AWS consulting services. These services can help you make the most of Amazon Aurora and other AWS offerings, ensuring that your database systems and overall cloud architecture are finely tuned for performance, reliability, and security.

Here are some of the key features of Amazon Aurora:

1. Compatibility: Amazon Aurora is compatible with both MySQL and PostgreSQL database engines, which means you can use existing tools, drivers, and applications with minimal modification.

2. High Performance: It offers excellent read and write performance due to its distributed, fault-tolerant architecture. Aurora uses a distributed storage engine that replicates data across multiple Availability Zones (AZs) for high availability and improved performance.

3. Replication: Aurora supports both automated and manual database replication. You can create up to 15 read replicas, which can help offload read traffic from the primary database and improve read scalability.

4. Global Databases: You can create Aurora Global Databases, allowing you to replicate data to multiple regions globally. This provides low-latency access to your data for users in different geographic locations.

5. High Availability: Aurora is designed for high availability and fault tolerance. It replicates data across multiple AZs, and if the primary instance fails, it automatically fails over to a replica with minimal downtime.

6. Backup and Restore: Amazon Aurora automatically takes continuous backups and offers point-in-time recovery. You can also create manual backups and snapshots for data protection.

7. Security: It provides robust security features, including encryption at rest and in transit, integration with AWS Identity and Access Management (IAM), and support for Virtual Private Cloud (VPC) isolation.

8. Scalability: Aurora allows you to scale your database instances up or down based on your application's needs, without impacting availability. It also offers Amazon Aurora Auto Scaling for automated instance scaling.

9. Performance Insights: You can use Amazon RDS Performance Insights to monitor the performance of your Aurora database, making it easier to identify and troubleshoot performance bottlenecks.

10. Serverless Aurora: AWS also offers a serverless version of Amazon Aurora, which automatically adjusts capacity based on actual usage. This can be a cost-effective option for variable workloads.

11. Integration with AWS Services: Aurora can be integrated with other AWS services like AWS Lambda, AWS Glue, and Amazon SageMaker, making it suitable for building data-driven applications and analytics solutions.

12. Cross-Region Replication: You can set up cross-region replication to have read replicas in different AWS regions for disaster recovery and data locality.

These features make Amazon Aurora a powerful and versatile database solution for a wide range of applications, from small-scale projects to large, mission-critical systems.

0 notes

Text

☄️ Pufferfish, please scale the site!

We created Team Pufferfish about a year ago with a specific goal: to avert the MySQL apocalypse! The MySQL apocalypse would occur when so many students would work on quizzes simultaneously that even the largest MySQL database AWS has on offer would not be able to cope with the load, bringing the site to a halt.

A little over a year ago, we forecasted our growth and load-tested MySQL to find out how much wiggle room we had. In the worst case (because we dislike apocalypses), or in the best case (because we like growing), we would have about a year’s time. This meant we needed to get going!

Looking back on our work now, the most important lesson we learned was the importance of timely and precise feedback at every step of the way. At times we built short-lived tooling and process to support a particular step forward. This made us so much faster in the long run.

🏔 Climbing the Legacy Code Mountain

Clear from the start, Team Pufferfish would need to make some pretty fundamental changes to the Quiz Engine, the component responsible for most of the MySQL load. Somehow the Quiz Engine would need to significantly reduce its load on MySQL.

Most of NoRedInk runs on a Rails monolith, including the Quiz Engine. The Quiz Engine is big! It’s got lots of features! It supports our teachers & students to do lots of great work together! Yay!

But the Quiz Engine has some problems, too. A mix of complexity and performance-sensitivity has made engineers afraid to touch it. Previous attempts at big structural change in the Quiz Engine failed and had to be rolled back. If Pufferfish was going make significant structural changes, we would need to ensure our ability to be productive in the Quiz Engine codebase. Thinking we could just do it without a new approach would be foolhardy.

⚡ The Vengeful God of Tests

We have mixed feelings about our test suite. It’s nice that it covers a lot of code. Less nice is that we don’t really know what each test is intended to check. These tests have evolved into complex bits of code by themselves with a lot of supporting logic, and in many cases, tight coupling to the implementation. Diving deep into some of these tests has uncovered tests no longer covering any production logic at all. The test suite is large and we didn’t have time to dive deep into each test, but we were also reluctant to delete test cases without being sure they weren’t adding value.

Our relationship with the Quiz Engine test suite was and still is a bit like one might have with an angry Greek god. We’re continuously investing effort to keep it happy (i.e. green), but we don’t always understand what we’re doing or why. Please don’t spoil our harvest and protect us from (production) fires, oh mighty RSpec!

The ultimate goal wasn’t to change Quiz Engine functionality, but rather to reduce its load on MySQL. This is the perfect scenario for tests to help us! The test suite we want is:

fast

comprehensive, and

not dependent on implementation

includes performance testing

Unfortunately, that’s not the hand we were given:

The suite takes about 30 minutes to run in CI and even longer locally.

Our QA team finds bugs that sneaked past CI in PRs with Quiz Engine changes relatively frequently.

Many tests ensure that specific queries are performed in a specific order. Considering we might replace MySQL wholesale, these tests provide little value.

And because a lot of Quiz Engine code is extremely performance-sensitive, there’s an increased risk of performance regressions only surfacing with real production load.

Fighting with our tests meant that even small changes would take hours to verify in tests, and then, because of unforeseen regressions not covered by the tests, take multiple attempts to fix, resulting in multiple-day roll-outs for small changes.

Our clock is ticking! We needed to iterate faster than that if we were going to avert the apocalypse.

🐶 I have no idea what I’m doing 🧪

Reading complicated legacy Rails code often raises questions that take surprising amounts of effort to answer.

Is this method dead code? If not, who is calling this?

Are we ever entering this conditional? When?

Is this function talking to the database?

Is this function intentionally talking to the database?

Is this function only reading from the database or also writing to it?

It isn’t even clear what code was running. There are a few features of Ruby (and Rails) which optimize for writing code over reading it. We did our best to unwrap this type of code:

Rails provides devs the ability to wrap functionality in hooks. before_ and after_ hooks let devs write setup and tear-down code once, then forget it. However, the existence of these hooks means calling a method might also evaluate code defined in a different file, and you won’t know about it unless you explicitly look for it. Hard to read!

Complicating things further is Ruby’s dynamic dispatch based on subclassing and polymorphic associations. Which load_students am I calling? The one for Quiz or the one for Practice? They each implement the Assignment interface but have pretty different behavior! And: they each have their own set of hooks🤦. Maybe it’s something completely different!

And then there’s ActiveRecord. ActiveRecord makes it easy to write queries — a little too easy. It doesn’t make it easy to know where queries are happening. It’s ergonomic that we can tell ActiveRecord what we need, and let it figure how to fetch the data. It’s less nice when you’re trying to find out where in the code your queries are happening and the answer to that question is, “absolutely anywhere”. We want to know exactly what queries are happening on these code paths. ActiveRecord doesn’t help.

🧵 A rich history

A final factor that makes working in Quiz Engine code daunting is the sheer size of the beast. The Quiz Engine has grown organically over many years, so there’s a lot of functionality to be aware of.

Because the Quiz Engine itself has been hard to change for a while, APIs defined between bits of Quiz Engine code often haven’t evolved to match our latest understanding. This means understanding the Quiz Engine code requires not just understanding what it does today, but also how we thought about it in the past, and what (partial) attempts were made to change it. This increases the sum of Quiz Engine knowledge even further.

For example, we might try to refactor a bit of code, leading to tests failing. But is this conditional branch ever reached in production? 🤷

Enough complaining. What did we do about it?

We knew this was going to be a huge project, and huge projects, in the best case, are shipped late, and in the average case don’t ever ship. The only way we were going to have confidence that our work would ever see the light of day was by doing the riskiest, hardest, scariest stuff first. That way, if one approach wasn’t going to work, we would find out about it sooner and could try something new before we’d over-invested in a direction.

So: where is the risk? What’s the scariest problem we have to solve? History dictates: The more we change the legacy system, the more likely we’re going to cause regressions.

So our first task: cut away the part of the Quiz Engine that performs database queries and port this logic to a separate service. Henceforth when Rails needs to read or change Quiz Engine data, it will talk to the new service instead of going to the database directly.

Once the legacy-code risk has been minimized, we would be able to focus on the (still challenging) task of changing where we store Quiz Engine data from single-database MySQL to something horizontally scalable.

⛏️ Phase 1: Extracting queries from Rails

🔪 Finding out where to cut

Before extracting Quiz Engine MySQL queries from our Rails service, we first needed to know where those queries were being made. As we discussed above this wasn’t obvious from reading the code.

To find the MySQL queries themself, we built some tooling: we monkey-patched ActiveRecord to warn whenever an unknown read or write was made against one of the tables containing Quiz Engine data. We ran our monkey-patched code first in CI and later in production, letting the warnings tell us where those queries were happening. Using this information we decorated our code by marking all the reads and writes. Once code was decorated, it would no longer emit warnings. As soon as all the writes & reads were decorated, we changed our monkey-patch to not just warn but fail when making a query against one of those tables, to ensure we wouldn’t accidentally introduce new queries touching Quiz Engine data.

🚛 Offloading logic: Our first approach

Now we knew where to cut, we decided our place of greatest risk was moving a single MySQL query out of our rails app. If we could move a single query, we could move all of them. There was one rub: if we did move all queries to our new app, we would add a lot of network latency. because of the number of round trips needed for a single request. Now we have a constraint: Move a single query into a new service, but with very little latency.

How did we reduce latency?

Get rid of network latency by getting rid of the network — we hosted the service in the same hardware as our Rails app.

Get rid of protocol latency by using a dead-simple protocol: socket communication.

We ended up building a socket server in Haskell that took data requests from Rails, and transformed them into a series of MySQL queries, which rails would use to fetch the data itself.

🛸 Leaving the Mothership: Fewer Round Trips

Although co-locating our service with rails got us off the ground, it required significant duct tape. We had invested a lot of work building nice deployment systems for HTTP services and we didn’t want to re-invent that tooling for socket-based side-car apps. The thing that was preventing the migration was having too many round-trip requests to the Rails app. How could we reduce the number of round trips?

As we moved MySQL query generation to our new service, we started to see this pattern in our routes:

MySQL Read some data ┐ Ruby Do some processing │ candidate 1 for MySQL Read some more data ┘ extraction Ruby More processing MySQL Write some data ┐ Ruby Processing again! │ candidate 2 for MySQL Write more data ┘ extraction

To reduce latency, we’d have to bundle reads and writes: In addition to porting reads & writes to the new service, we’d have to port the ruby logic between reads and writes, which would be a lot of work.

What if instead, we could change the order of operations and make it look like this?

MySQL Read some data ┐ candidate 1 for MySQL Read some more data ┘ extraction Ruby Do some processing Ruby More processing Ruby Processing again! MySQL Write some data ┐ candidate 2 for MySQL Write more data ┘ extraction

Then we’d be able to extract batches of queries to Haskell and leave the logic behind in Rails.

One concern we had with changing the order of operations like this was the possibility of a request handler first writing some data to the database, then reading it back again later. Changing the order of read and write queries would result in such code failing. However, since we now had a complete and accurate picture of all the queries the Rails code was making, we knew (luckily!) we didn’t need to worry about this.

Another concern was the risk of a large refactor like this resulting in regressions causing long feedback cycles and breaking the Quiz Engine. To avoid this we tried to keep our refactors as dumb as possible: Specifically: we mostly did a lot of inlining. We would start with something like this

class QuizzesControllller 9000 :super_saiyan else load_sub_syan_fun_type # TODO: inline me end end end end

These are refactors with a relatively small chance of changing behavior or causing regressions.

Once the query was at the top level of the code it became clear when we needed data, and that understanding allowed us to push those queries to happen first.

e.g. from above, we could easily push the previously obscured QuizForFun query to the beginning:

class QuizzesControllller 9000 :super_saiyan else load_sub_syan_fun_type # TODO: inline me end end end

You might expect our bout of inlining to introduce a ton of duplication in our code, but in practice, it surfaced a lot of dead code and made it clearer what the functions we left behind were doing. That wasn’t what we set out to do, but still, nice!

👛 Phase 2: Changing the Quiz Engine datastore

At this point all interactions with the Quiz Engine datastore were going through this new Quiz Engine service. Excellent! This means for the second part of this project, the part where we were actually going to avert the MySQL apocalypse, we wouldn’t need to worry about our legacy Rails code.

To facilitate easy refactoring, we built this new service in Haskell. The effect was immediately noticeable. Like an embargo had been lifted, from this point forward we saw a constant trickle of small productive refactors get mixed in the work we were doing, slowly reshaping types to reflect our latest understanding. Changes we wouldn’t have made on the Rails side unless we’d have set aside months of dedicated time. Haskell is a great tool to use to manage complexity!

The centerpiece of this phase was the architectural change we were planning to make: switching from MySQL to a horizontally scalable storage solution. But honestly, figuring the architecture details here wasn’t the most interesting or challenging portion of the work, so we’re just putting that aside for now. Maybe we’ll return to it in a future blog post (sneak peek: we ended up using Redis and Kafka). Like in step 1, the biggest question we had to solve was “how are we going to make it safe to move forward quickly?”

One challenge was that we had left most of our test suite behind in Rails in phase one, so we were not doing too well on that front. We added Haskell test coverage of course, including many golden result tests which are worth a post on their own. Together with our QA team we also invested in our Cypress integration test suite which runs tests from the browser, thus integration-testing the combination of our Rails and Haskell code.

Our most useful tool in making safe changes in this phase however was our production traffic. We started building up what was effectively a parallel Haskell service talking to Redis next to the existing one talking to MySQL. Both received production load from the start, but until the very end of the project only the MySQL code paths’ response values were used. When the Redis code path didn’t match the MySQL, we’d log a bug. Using these bug reports, we slowly massaged the Redis code path to return identical data to MySQL.

Because we weren’t relying on the output of the Redis code path in production, we could deploy changes to it many times a day, without fear of breaking the site for students or teachers. These deploys provided frequent and fast feedback. Deploying frequently was made possible by the Haskell Quiz Engine code living in its own service, which meant deploys contained only changes by our team, without work from other teams with a different risk profile.

🥁 So, did it work?

It’s been about a month since we’ve switched entirely to the new architecture and it’s been humming along happily. By the time we did the official switch-over to the new datastore it had been running at full-load (but with bugs) for a couple of months already. Still, we were standing ready with buckets of water in case we overlooked something. Our anxiety was in vain: the roll-out was a non-event.

Architecture, plans, goals, were all important to making this a success. Still, we think the thing most crucial to our success was continuously improving our feedback loops. Fast feedback (lots of deploys), accurate feedback (knowing all the MySQL queries Rails is making), detailed feedback (lots of context in error reports), high signal/noise ratio (removing errors we were not planning to act on), lots of coverage (many students doing quizzes). Getting this feedback required us to constantly tweak and create tooling and new processes. But even if these processes were sometimes short-lived, they've never been an overhead, allowing us to move so much faster.

3 notes

·

View notes

Text

Get file path filter to filter csv nifi

#GET FILE PATH FILTER TO FILTER CSV NIFI HOW TO#

#GET FILE PATH FILTER TO FILTER CSV NIFI ZIP#

The default is oldest first, but it can be configured to pull the newest first, largest first, or another custom scheme.

#GET FILE PATH FILTER TO FILTER CSV NIFI HOW TO#

Prioritized Queuing - Apache NiFi allows setting one or more prioritization schemes for how to retrieve data from a queue.Data Buffering / Back Pressure and Pressure Release - Apache NiFi supports buffering of all queued data as well as the ability to provide back pressure as those lines reach specified limits or to an age of data as it reaches a specified age (its value has perished).It is achievable through the efficient use of a purpose-built persistent write-ahead log and content repository. Guaranteed Delivery - A core philosophy of NiFi has been that guaranteed delivery is a must, even at a very high scale.Extremely Scalable, extensible platform.Support both Standalone and Cluster mode.Want to accelerate your adoption of Apache NiFi to be more productive? Get expert advice from Apache NiFi Expert What are the benefits of Apache NiFi? These links help transfer the data to any storage or processor even after the failure by the processor. Each processor in NiFi has some relationships like success, retry, failed, invalid data, etc., which we can use while connecting one processor to another. It is a UI-based platform where we need to define our source from where we want to collect data, processors for converting the data, a destination where we want to store the data. The process can also do some data transformation. NiFi helps to route and processing data from any source to any destination. What is Apache NiFi and its Architecture?Īpache NiFi provides an easy-to-use, powerful, and reliable system to process and distribute the data over several resources. Data Discovery, Prototyping, and experimentation.Data Governance with a clear distinction of roles and responsibilities.Tracking measurements with alerts on failure or violations.Testing Setup for experimenting with new technologies and data.Reduce costs by offloading analytical systems and archiving cold data.A Central Repository for Big Data Management.What are the Data Lake Objectives for Enterprise?īelow are the objectives of the enterprise data lake The Data Collection and Ingestion from Text/CSV Files.

#GET FILE PATH FILTER TO FILTER CSV NIFI ZIP#

Data Collection and Ingestion from Zip Files.The Data Collection and Ingestion from RDBMS (e.g., MySQL).Twitter Data using Apache Nifi and in Coming Blogs, we will be Sharing Data Collection and Ingestion from Below Sources. We are sharing how to Ingest, Store, and Process. Patterns that emerged have been discussed and articulated extensively in this Blog. The problems and solution The problems and solution patterns that emerged have been discussed and articulated extensively. In Today’s World, Enterprises are generating data from different sources and building a Real-Time Data lake we need to Integrate various sources of Data into One Stream. What is Big Data Analytics and Ingestion?ĭata Collection and Data Ingestion are the processes of fetching data from any data source which we can perform in two ways. Source: Data Ingestion Platform Using Apache Nifi It is a UI-based platform where we need to define our source from where we want to collect data, processors for converting the data, a destination where we want to store the data.Īpache Nifi is an open source for distributing and processing data supporting data routing and transformation. The process can also do some Data Transformation. Apache NiFi is used for routing and processing data from any source to any destination. NiFi works in both standalone mode and cluster mode. Apache NiFi for Data Lake delivers easy-to-use, powerful, and reliable solutions to process and distribute the data over several resources. Moreover, it is easy and self-service management that requires little to no coding. If you are looking for an enterprise-level solution to reduce the cost and complexity of big data, using Data Lake services is the best and most cost-efficient solution.

0 notes

Text

Understanding MySQL’s New Heatwave

Data Analytics is important in any company as you can see what happened in the past to be able to make smart decisions or even predict future actions using the existing data. Analyze a huge amount of data could be hard and you should need to use more than one database engine, to handle OLTP and OLAP workloads. In this blog, we will see what is HeatWave, and how it can help you on this task. What is HeatWave? HeatWave is a new integrated engine for MySQL Database Service in the Cloud. It is a distributed, scalable, shared-nothing, in-memory, columnar, query processing engine designed for fast execution of analytic queries. According to the official documentation, it accelerates MySQL performance by 400X for analytics queries, scales out to thousands of cores, and is 2.7X faster at around one-third the cost of the direct competitors. MySQL Database Service, with HeatWave, is the only service for running OLTP and OLAP workloads directly from the MySQL database. How HeatWave Works A HeatWave cluster includes a MySQL DB System node and two or more HeatWave nodes. The MySQL DB System node has a HeatWave plugin that is responsible for cluster management, loading data into the HeatWave cluster, query scheduling, and returning query results to the MySQL DB System. HeatWave nodes store data in memory and process analytics queries. Each HeatWave node contains an instance of HeatWave. The number of HeatWave nodes required depends on the size of your data and the amount of compression that is achieved when loading the data into the HeatWave cluster. We can see the architecture of this product in the following image: As you can see, users don’t access the HeatWave cluster directly. Queries that meet certain prerequisites are automatically offloaded from the MySQL DB System to the HeatWave cluster for accelerated processing, and the results are returned to the MySQL DB System node and then to the MySQL client or application that issued the query. How to use it To enable this feature, you will need to access the Oracle Cloud Management Site, access the existing MySQL DB System (or create a new one), and add an Analitycs Cluster. There you can specify the type of cluster and the number of nodes. You can use the Estimate Node Count feature to know the necessary number based on your workload. Loading data into a HeatWave cluster requires preparing tables on the MySQL DB System and executing table load operations. Preparing Tables Preparing tables involves modifying table definitions to exclude certain columns, define string column encodings, add data placement keys, and specify HeatWave (RAPID) as the secondary engine for the table, as InnoDB is the primary one. To define RAPID as the secondary engine for a table, specify the SECONDARY_ENGINE table option in a CREATE TABLE or ALTER TABLE statement: mysql> CREATE TABLE orders (id INT) SECONDARY_ENGINE = RAPID; or mysql> ALTER TABLE orders SECONDARY_ENGINE = RAPID; Loading Data Loading a table into a HeatWave cluster requires executing an ALTER TABLE operation with the SECONDARY_LOAD keyword. mysql> ALTER TABLE orders SECONDARY_LOAD; When a table is loaded, data is sliced horizontally and distributed among HeatWave nodes. After a table is loaded, changes to a table's data on the MySQL DB System node are automatically propagated to the HeatWave nodes. Example For this example, we will use the table orders: mysql> SHOW CREATE TABLE ordersG *************************** 1. row *************************** Table: orders Create Table: CREATE TABLE `orders` ( `O_ORDERKEY` int NOT NULL, `O_CUSTKEY` int NOT NULL, `O_ORDERSTATUS` char(1) COLLATE utf8mb4_bin NOT NULL, `O_TOTALPRICE` decimal(15,2) NOT NULL, `O_ORDERDATE` date NOT NULL, `O_ORDERPRIORITY` char(15) COLLATE utf8mb4_bin NOT NULL, `O_CLERK` char(15) COLLATE utf8mb4_bin NOT NULL, `O_SHIPPRIORITY` int NOT NULL, `O_COMMENT` varchar(79) COLLATE utf8mb4_bin NOT NULL, PRIMARY KEY (`O_ORDERKEY`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin You can exclude columns that you don’t want to load to HeatWave: mysql> ALTER TABLE orders MODIFY `O_COMMENT` varchar(79) NOT NULL NOT SECONDARY; Now, define RAPID as SECONDARY_ENGINE for the table: mysql> ALTER TABLE orders SECONDARY_ENGINE RAPID; Make sure that you have the SECONDARY_ENGINE parameter added in the table definition: ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin SECONDARY_ENGINE=RAPID And finally, load the table into HeatWave: mysql> ALTER TABLE orders SECONDARY_LOAD; You can use EXPLAIN to check if it is using the correct engine. You should see something like this: Extra: Using where; Using temporary; Using filesort; Using secondary engine RAPID On the MySQL official site, you can see a comparison between a normal execution and using HeatWave: HeatWave Execution mysql> SELECT O_ORDERPRIORITY, COUNT(*) AS ORDER_COUNT FROM orders WHERE O_ORDERDATE >= DATE '1994-03-01' GROUP BY O_ORDERPRIORITY ORDER BY O_ORDERPRIORITY; +-----------------+-------------+ | O_ORDERPRIORITY | ORDER_COUNT | +-----------------+-------------+ | 1-URGENT | 2017573 | | 2-HIGH | 2015859 | | 3-MEDIUM | 2013174 | | 4-NOT SPECIFIED | 2014476 | | 5-LOW | 2013674 | +-----------------+-------------+ 5 rows in set (0.04 sec) Normal Execution mysql> SELECT O_ORDERPRIORITY, COUNT(*) AS ORDER_COUNT FROM orders WHERE O_ORDERDATE >= DATE '1994-03-01' GROUP BY O_ORDERPRIORITY ORDER BY O_ORDERPRIORITY; +-----------------+-------------+ | O_ORDERPRIORITY | ORDER_COUNT | +-----------------+-------------+ | 1-URGENT | 2017573 | | 2-HIGH | 2015859 | | 3-MEDIUM | 2013174 | | 4-NOT SPECIFIED | 2014476 | | 5-LOW | 2013674 | +-----------------+-------------+ 5 rows in set (8.91 sec) As you can see, there is an important difference in the time of the query, even in a simple query. For more information, you can refer to the official documentation. Conclusion A single MySQL Database can be used for both OLTP and Analytics Applications. It is 100% compatible with MySQL on-premises, so you can keep your OLTP workloads on-premises and offload your analytics workloads to HeatWave without changes in your application, or even use it directly on the Oracle Cloud to improve your MySQL performance for Analytics purposes. Tags: MySQLMySQL HeatwaveHeatWave clustermysql deployment https://severalnines.com/database-blog/understanding-mysql-s-new-heatwave

0 notes

Text

Walk in Interview's in Dubai For Any Nationality Fresher & Experience

Walk in Interview's in Dubai For Any Nationality Fresher & Experience Company Name: Sankari Investment LLC Experience: 1 year UAE experience in Retail Fashion industry Interview Date: Sunday, 27th October 2019 Timing For Russian Speaking: 10:00 AM – 01:00 PM Timing For Chinese Speaking: 02:00 PM – 05:00 PM Location: ABIDOS Hotel, Behind Mall of Emirates, Al Barsha, Dubai. Contact #056 9930429 Call Center Agent

Walk in Interview's in Dubai For Any Nationality Fresher & Experience Company Name: Tafaseel Business Services Education: Graduate in any discipline Experience: 1 year similar experience Core Skills: Communication & Telephonic Etiquette Language Skills: English & Arabic Package: Salary + Labor Card + Medical Insurance + 30 Days Annual Leave Annual Flight Ticket + Overtime + Bonus Interview Date: Thursday, 24th October 2019 Timing: 10:00 AM – 01:00 PM Location: Tafaseel Business Services, Ground Floor, Conqueror Tower, Near Galleria Mall, Ajman. Mech/Civil/E&I Company Name: Galfar Engineering & Contracting LLC Education: Graduate Engineer (JP Approval From ADNOC) Joining Status: Immediately Interview Date: Thursday, 24th October 2019 Timing: 02:00 PM (Onwards) Location: Galfar Engineering & Contracting WLL- Emirates Corporate Office, MW4, Opposite of ADSB, Industrial Area, Mussafah, Abu Dhabi. Content Writer Company Name: HHS Corporate Services Provider Experience: Content Writing experience Knowledge: SEO, Branding & Product Promotion Well Versed: MS Suite, Word, PowerPoint, Outlook, Excel & Google Docs Core Skills: Interpersonal & Communication Skills Language Skills: English (Written & Verbal) Interview Date: Thursday, 24th October 2019 Timing: 10:00 AM – 02:00 PM Location: Office #501, 5th Floor, Saheel 1, Al Nahda 1, Dubai. Contact Person: Sanchit Jain Contact #056 3987222 Thrifty Car Rental Hiring (7 Vacancies) 1) Car Cleaners 2) Auto Painters 3) Auto Denters 4) Light Duty Drivers 5) Service Coordinator 6) Fleet Coordinator 7) Workshop Supervisor Experience: 1 year commercial driving experience License: UAE valid driving license Salary Range: 1900 – 4000 AED /- Interview Date: Thursday, 24th October 2019 Timing: 09:00 AM – 02:00 PM Location: Thrifty Auto Repair Office, Mussafa 33, Abu Dhabi. Driver (Loading & Offloading) Group Name: Universal Group Of Companies Experience: 10 years similar experience License: UAE valid driving license #3&6 Language Skills: English Monthly Salary: 2000 AED /- Interview Date: 21st Oct – 24th Oct 2019 Timing: 10:00 AM – 04:00 PM Location: Office #110, KML Building, Al Meydan Street, Al Quoz 1, Dubai. Cleaner (Male) Group Name: Universal Group Of Companies Nationality: Nepali, Indian & Filipino Experience: 2 years similar experience Language Skills: English Monthly Salary: 900 AED /- Interview Date: 21st Oct – 24th Oct 2019 Timing: 10:00 AM – 04:00 PM Location: Office #110, KML Building, Al Meydan Street, Al Quoz 1, Dubai. Customer Service Agent (Strictly No Visit Visa) Company Name: 6thStreet.com Job Type: 2 Months (Project) Experience: 1 year call center experience Language Skills: Bilingual – English & Arabic (Written & Verbal) Visa Type: Parents/Student Visa Working Hours: 6 days a week Age Limit: Below 35 years old Salary Range: 4000 – 4500 AED /- Interview Date: 21st Oct – 24th Oct 2019 Timing: 10:00 AM – 03:00 PM Location: 6thstreet (Apparel LLC), Building #27, Street #14b, Opposite Dubai Source Advertising LLC, Al Quoz Industrial Area 4, Dubai. Must Bring: CV, Passport & Visa Copy Company Name: Shaun Technologies General Trading LLC Experience: 1 year retail sales experience Core Skills: Communication Age Limit: Below 30 years old Gender: Female Monthly Salary: 2500 AED /- Benefits: Incentives Interview Date: 21st Oct – 23rd Oct 2019 Timing: 10:00 AM – 11:00 AM Location: Office #206, 2nd Floor, Al Fajer Complex Building, (Home R Us Same Building), Near Oud Metha Metro Station Exit #1, Dubai. On The Wood Restaurant Hiring (4 Vacancies) 1) Waiters (Filipino/Nepali) Salary Package: 1450 – 1500 AED + Accommodation + Transportation 2) Kitchen Helpers (Filipino, Nepali & Nigerian) Salary Package: 1450 – 1500 AED + Accommodation + Transportation 3) Restaurant Supervisors (Filipino) Salary Package: 3750 AED /- (Including Benefits) 4) Bike Delivery Riders Experience: 1 year similar experience Language Skills: English (Written & Verbal) Visa Type: Visit/Cancelled Visa Working Hours: 12 hours a day Age Limit: 21-35 years old Interview Date: 21st Oct – 23rd Oct 2019 Timing: 11:30 AM – 03:00 PM Location: Good Mood Cafeteria, Beside Falcon Pack Sales Office, Industrial Area #15, Sharjah. Karamna Al Khaleej Hiring (2 Vacancies) 1) Front Of House 2) Back Of House Interview Date: 15th, 16th, 22nd & 23rd Oct 2019 Timing: 12:00 PM – 06:00 PM Location: The Address, Downtown, Sheikh Mohammed Bin Rashid, Boulevard, Near Dubai Mall, Dubai. Email Contact #[email protected] Contact #04 4580899/04 5148344 Warehouse Assistant Consultancy Name: Innovation Direct Employment Services Industry: eCommerce Job Type: 2.5 Months (Extendable) Nationality: Indian/Pakistani/Nepali/Sri Lankan/Filipino Language Skills: English (Written & Verbal) Visa Type: Residence Visa Joining Status: Immediately Monthly Salary: 1500 AED /- Benefits: Transportation + Accommodation Interview Date: Tuesday, 22nd October 2019 Timing: 10:00 AM – 01:00 PM Location: Office #2301, 23rd Floor, Tiffany Towers, Cluster “W”, Jumeirah Lake Towers, Nearest Damac Properties Metro Station, P.O.Box 4452, Dubai. Must Bring: Passport Size Photograph (White Background), Passport Copy, Visa Copy, Emirates ID Copy, NOC Letter, Current Trade License Copy & Labor Card Copy Key Accounts Manager Consultancy Name: Innovations Direct Employment Services LLC Experience: UAE experience in consumer electronics Monthly Salary: 12000 AED /- Interview Date: Tuesday, 22nd October 2019 Timing: 10:00 AM – 01:00 PM Location: Innovations cluster, 23rd Floor, Cluster – W, artist Tower, urban center dock JLT, DAMAC Properties railroad line Station, Exit-1, Dubai. Sales Executive (Female Filipino) Tour Agency: Pinoy Tours & Travels LLC Experience: Freshers & Experienced Language Skills: English (Proficient) Monthly Salary: 2500 AED /- Interview Date: Tuesday, 22nd October 2019 Timing: 12:00 PM – 02:00 PM Location: Pinoy Tourism & Travels LLC, Office #803, Abu Dhabi Plaza, Hamdan Street, Abu Dhabi. Contact #02 6350007 Outdoor Sales Executive Company Name: Telecommunication Experience: 2-4 years UAE FMCG experience License: UAE valid driving license (Either 2 Wheeler/4 Wheeler Vehicle) Interview Date: Tuesday, 22nd October 2019 Timing: 09:00 AM – 03:00 PM Location: 050 Telecom, Head Office, Behind Dynatrade Center, Near Noor Bank Metro Station, Al Quoz Industrial Area, Dubai. ERP Developer Company Name: Cosmos Sports LLC Experience: 3 years software development experience Language Skills: English (Written & Verbal) Core Skills: Facilitation, Negotiation, Presentation & Teamwork Knowledge: MySQL, JDE Modules – Finance (AP, AR, FA & GA) Salary Range: 2000 – 2500 AED /- Interview Date: Tuesday, 22nd October 2019 Timing: 11:30 AM – 01:30 PM Location: Cosmos Sports LLC, Zabeel Street, Opposite Karama Post Office, Karama, Dubai. Finance Executive (Senior) Company Name: ASA Ventures Education: BS in Finance, Accounting or Economics & CFA/CPA (Plus) Experience: 5 years Financial Analyst & 3 years Auditing experience Expertise: MS Excel Well Versed: Finance Database, Analytical, Demonstrable Strategic etc. Salary Range: 7000 – 8000 AED /- Interview Date: Tuesday, 22nd October 2019 Timing: 03:00 PM – 06:00 PM Location: ASA Ventures, 28th Floor, Armada Tower #2, Cluster P, Jumeirah Lake Towers, Dubai. Sales Officer (Multiproducts) Consultancy Name: PACT Employment Services Job Location: Dubai/Abu Dhabi Nationality: Arabs Only Education: Fresh Graduate Experience: UAE outdoor sales experience License: UAE valid driving license Core Skills: Selling, Communication & Relationship Building Skills Salary Range: 5000 – 6000 AED /- Benefits: Incentives Interview Date: 21st Oct – 22nd Oct 2019 Timing: 10:30 AM – 02:00 PM Location: PACT Employment Services, Office #209, Carrera Building, Karama, Dubai. Email CV: [email protected] Pathway Global HR Consultancy (3 Vacancies) 1) Bike Drivers (Any Nationality) Job Location: Abu Dhabi Monthly Salary: 2000 AED /- Benefits: Meal + Bike + Tranportation + Accommodation 2) Light Vehicle Drivers (Any Nationality) Job Location: Abu Dhabi Monthly Salary: 2000 AED /- Benefits: Meal + Car + Transportation + Accommodation 3) Helpers (Any Nationality) Job Location: Abu Dhabi Salary Range: 1000 – 1200 AED /- Benefits: Meal + Transportation + Accommodation Experience: 1 year similar experience Visa Type: Own Visa Holder (4 Months) Interview Date: 20th Oct – 22nd Oct 2019 Timing: 08:30 AM – 02:00 PM Location: Office #207, Makateb Building, Greenhouse, Deira, Dubai. Contact #04 2555620 Security Guard (Male) Group Name: Universal Group Of Companies Nationality: Indian, Nepali, Pakistani & Sri Lankan License: SIRA Experience: 4 year similar experience Language Skills: English (Proficient) Salary Range: 1500 – 1700 AED /- Interview Date: Monday, 21st October 2019 Timing: 10:00 AM – 04:00 PM Location: Office #110, KML Building, Al Meydan Street, Al Quoz 1, Dubai. Driver Company Name: Transcorp International LLC Experience: 1 year similar experience License: UAE valid manual driving license Knowledge: UAE routes & location Language Skills: English Salary Range: 2000 – 3000 AED /- Interview Date: Tomorrow Onward Timing: 10:00 AM – 11:00 PM/02:00 PM – 03:00 PM Location: Warehouse #24, Union Properties, Al Quoz Industrial Area #1, Dubai. Beautician (Female Filipino) Company Name: Coral Beauty Lounge Experience: Manicure, Pedicure, Nail Extensions, Acrylic, Hair Treatments, Body Treatments etc. Interview Date: Saturday – Thursday 2019 Timing: 02:00 PM – 06:00 PM Location: Al Zaabi Group, M Floor, Pink Building, Besides Royal Rose Hotel, Electra Street, Abu Dhabi. Lady Worker (Filipino & Nepali) Company Name: Your Own Cleaning Services Experience: House cleaning experience Visa Type: Cancelled Visa (Finished Contract) Language Skills: English Age Limit: 28-40 years old Joining Status: Immediately Salary Range: 2000 – 2200 AED /- Benefits: Overtime + Transportation + Live Out Interview Day: Saturday – Thursday 2019 Timing: (Contact For Timing) Location: Office #1809, Metropolis Tower, Business Bay, Dubai. Relationship Officer (Personal Finance & Credit Cards) Company Name: Zeegles Financing Nationality: Indian, Pakistani & Filipino Experience: 6 Months or 1 year UAE/Home Country experience Core Skills: Time Management Skills Language Skills: English (Written & Verbal) Salary Range: 2000 – 4000 AED /- Interview Day: Sunday – Thursday 2019 Timing: 02:00 PM – 05:00 PM Location: Zeegles Financing Broker LLC, M23, Atrium Center, Bank Street, Bur Dubai, Dubai. Bike Riders For Food Delivery (Any Nationality) Company Name: Accelerate Delivery Services Job Location: Dubai & Abu Dhabi Experience: Food delivery experience Visa Type: AzadResidence/Freelance Visa (Valid For 3 Months) Salary Range: 2400 – 2700 AED /- Package: Fixed Salary + Overtime + Incentives + Petrol Card + Salik + Mobile Data + Uniform + Vehicle + Accessories Interview Day: Sunday – Thursday 2019 Timing: 10:00 AM – 01:00 PM Location: Room #1006/1007, Le Solarium Tower, Dubai Silicon Oasis, Dubai. Whatsapp #056 5339030 Must Bring: CV, Emirates ID (Front & Back), Driving License (Front & Back), Passport & Visa Copy Taxi Driver Company Name: Arabia Taxi LLC Nationality: Selective Education: 10th Grade or O Level Passed Experience: Knowledge of UAE Roads License: UAE valid driving license Language Skills: English (Written & Verbal) Gender: Male/Female Age Limit: 23-48 years old Package: pay + Commission + Visa + Accommodation + insurance Interview Day: Every Sunday – Thursday (Except Friday & Saturday) Timing: 08:00 AM – 05:00 PM Location: peninsula Taxi Transportation LLC, P.O Box #111126, Umm Al Nar, Abu Dhabi. Contact: 02 5588099, 055 8007908 Email CV: [email protected] Read the full article

0 notes

Text

Top Software Development Trends in 2020 - 10 Key Trends in Software Development

Are you searching for top software trends in 2020? This is the perfect place for you! Here you will get the best software development trends that will rule the year 2020.

No industry doesn't witness change over time. When it comes to technology, change is more frequent than many other industries. Over the years, numerous software development trends are growing in terms of potential, implementation and acceptance. In the IT sector, there occurred disruptive fluctuations in ideologies. In each year, a new trend ranges tech circles, from SDLC to Agile to modern-day IoT and blockchain. Everybody makes forecasts about innovation, top innovation patterns, and future business patterns and these three are interconnected to each other.

The demand for developers will be different in 2020. It is the year where we see a flood of excitement for the changes and trends in tech. The latest trends like edge computing, machine learning, and artificial intelligence go mainstream and gain larger adoption. However, blockchain and artificial intelligence will have a result beyond our expectation. 2020 will be an exciting year for software, and developers will play a pivotal role in it. Here are 10 software development trends that will rule 2020.

Mixed Reality (MR): Larger possibilities in enterprise solutions

A combination of Augmented Reality (AR) and Virtual Reality (VR) has important possibilities in enterprise applications. AR combines digital content with the physical environment of users, while VR creates a vast experience for users. Organisations such as defence, tourism, architecture, construction, gaming, healthcare, etc. are using this technology to understand key business value. The demand for MR is growing rapidly hence you are required to consider several MR use cases for implementation on the type of your enterprise. Businesses, government organizations, and even non-profit organizations can practice AR and VR to guide their employees in difficult jobs. Manufacturing businesses can improve their design processes and overall engineering by using 3D modelling in a VR environment.

Blockchain: providing transparency, efficiency and security

Blockchain is a peer to peer (P2P) network, it provides decentralizations, a distributed ledger, security features, and transparency. Smart contracts working on blockchain networks are tamper-proof and transparent, hence they improve trust. Its execution is irreversible, that helps contract administration much easier. Business and governments are intensely examining the blockchain. As a result, blockchain's global market is developing rapidly. Blockchain can add value to various sectors including, supply chain management, supply chain management is a difficult method having a lot of paperwork, manual processes, and inefficiencies. Blockchain can simply streamline this process. Blockchain uses for better analytics, analytics software requires reliable data for giving the right insights and forecasts.

Artificial Intelligence (AI): Leading in intelligent enterprise systems

Artificial Intelligence (AI) is a multi-disciplinary branch of computer science, which is designed for performing tasks that only undertaken by human beings earlier. It is a huge field and some of the parts of it have been commercialized, research and development remain on its other dimensions. It integrates several capabilities such as machine learning (ML), deep learning, vision, natural language processing, speech, etc. This discipline of computer science helps computer systems to improve upon their capabilities of executing human-like tasks. AI can benefit your business through a wide range of use cases. AI's image and video recognition capabilities could assist law enforcement and security agencies. It can create efficient and scalable processing of patient data which will help healthcare delivery organizations. Its powered predictive maintenance could enhance the operational capability in the construction industry.

The Internet of Things (IoT)

As technology grows, the Internet of Things (IoT) has become an inevitable part of every business. It is a network, contain physical objects such as gadgets, devices, vehicles, appliances, etc., and these devices give sensors. These devices use application programming interfaces for transferring data over the internet. IoT network has developed with the collaboration of various technologies including sensors, Big Data, AI, ML, Radio-Frequency Identification (RFID), and APIs. The demand for IoT is experiencing significant growth and it will go beyond our expectations. IoT can be used for various circumstances including predictive maintenance. Its predictive maintenance capabilities help various industries like oil & gas, manufacturing to keep their expensive machinery running at an optimal level. IoT allows monitoring energy consumption in real-time that is essential for managing energy distribution and making relevant pricing adjustments. Smart meters equipped with IoT make this for energy companies.

Language and framework trends to develop enterprise apps

As technology advances, you are required to decide what languages and frameworks should use for developing your enterprise apps. JavaScript is the most popular language, with HTML /CSS, SQL, Python, and Java following it. jQuery is the most popular web framework followed by React.js, Angular, and ASP.NET. .NET following js as it is the most popular among libraries and other frameworks. There are three most popular databases such as MySQL, PostgreSQL, and Microsoft SQL Server. Both Kotlin and Swift are the popular languages use to native development on Android and iOS respectively.

Cybersecurity: A Key consideration when building enterprise solutions

While developing an enterprise app, it is important to consider cybersecurity. The lack of cybersecurity will cause trillions of dollars in losses. Cybercriminals are combined, and also they are continuously upgrading their capabilities. Cyber-attackers turn to various kind of attacks like phishing attacks, distributed-denial-of-services (DDoS), etc. Market observers state that cyber-attacks will cause $6 trillion in losses in 2021. Adhering to IT architecture and coding guidelines will help you reduce application security risks and can manage the projects as well.

Key applications security risks are as follows:

Injection;

Ineffective authentication;

Exposure of sensitive data;

XML external entities (XXE);

Incorrect implementation of identity and access management;

Inadequate security configurations;

Cross-site scripting (XSS);

Deserialization without adequate security;

Using outdated software with known vulnerabilities;

Sub-optimal logging and monitoring processes.

Progressive Web Applications (PWAs)

Native apps provide the best user experience and performance to the users. For developing native apps, you are required to develop apps for Android and iOS separately. You also have to consider the long-term maintenance cost for two different codebases. Progressive web apps are web apps, it is similar to native apps as it delivers the best user-experience to the users. It gives many features including responsive, speedy, and secure, moreover, it works offline. Installing a PWA on the home screen can support push notifications. You don't need to search it in app stores, you can find it on the web. PWAs are linkable, and they are always up to date. Nowadays, various businesses are used PWAs to improve customer engagement.

Low-code development

Low-code development is one of the main key trends that have emerged in recent years. With this approach, businesses can improve software development efficiency, and they try to minimize hand-coding for this. Low-code development platforms provide GUIs, which allows developers to draw flowcharts depicting the business logic. The platform then creates code to implement that business logic. You can use low-code platforms to make your digital transformation. Moreover, using low-code platforms can reduce the backlog of IT development projects. These platforms will help users reduce their dependency on niche technical skills.

Code quality

Code quality has an important role in software development, therefore, you need to understand, and provide more priority to this. Readability, consistency, predictability, reliability, robustness, maintainability are several aspects of code qualities. If software development teams maintain a high-quality code, then it enables their organizations to maintain the code easily. As they write code with lower complexity, the team doesn‘t need to do too many bugs.

Outsourcing

As the global business environment progresses more and more complicated, businesses are trying to make the best use of the capabilities they have. An IT company might have developed various software solutions over the years, but this is not enough to maintain a good portfolio in this field. Businesses have realised that it is important to focus on their core competencies and offloading the peripheral services to partners that have core competencies there. This realization leads to drive the trend of IT outsourcing. As per the reports, in large business organizations, there is a drastic change occurred in the IT budget spent on outsourcing. It also influenced in medium-sized businesses. You need to outsource software development for the growth of your business. You can focus on the right priorities like delivering quality products to the customers.

Understanding the top software development trends help, you will still need to do your development successfully. It will be difficult when the project is complex. You will have to consider a trusted software development company to execute such projects.

0 notes

Text

Will Cheap Hosting Plans

Who File Hosting Providers