#Role-based access control (RBAC)


Implementing Role-Based Access Control in Django

Learn how to implement Role-Based Access Control (RBAC) in Django. This guide covers defining roles, assigning permissions, and enforcing access control in views and templates.

Introduction

Role-Based Access Control (RBAC) is a method of regulating access to resources based on the roles of individual users within an organization. Implementing RBAC in Django involves defining roles, assigning permissions, and enforcing these permissions in your views and templates. This guide will walk you through the process of implementing RBAC in Django, covering user roles,…

#Django#Django templates#Django Views#permissions#Python#RBAC#role-based access control#Security#user roles#web development


HIPAA Compliance Solutions: Protecting Healthcare Data with Confidence

In today’s healthcare landscape, protecting patient data is not just a regulatory obligation but also a fundamental trust-building measure. The Health Insurance Portability and Accountability Act (HIPAA) sets the standards for safeguarding sensitive patient information, and non-compliance can lead to significant financial penalties and reputational damage. As cyber threats targeting healthcare organizations continue to rise, having robust HIPAA compliance solutions in place is critical to ensuring that patient data remains safe and secure.

What is HIPAA Compliance?

HIPAA is a U.S. federal law that governs how healthcare organizations must handle and protect personal health information (PHI). The law applies to healthcare providers, health plans, and healthcare clearinghouses, as well as business associates that manage or process PHI on behalf of healthcare entities.

HIPAA compliance is crucial for healthcare organizations because it ensures that patient data is protected from unauthorized access, misuse, or breach. Additionally, HIPAA compliance helps healthcare providers reduce the risks associated with data breaches, including fines, legal action, and the loss of patient trust.

Why HIPAA Compliance is Essential

The healthcare industry is a prime target for cybercriminals due to the wealth of sensitive data it handles. A breach in healthcare data not only exposes patient information but can also lead to significant financial loss. Under HIPAA, healthcare organizations are required to implement various safeguards to protect patient data, including administrative, physical, and technical protections.

HIPAA's civil penalty tiers, as set by the HITECH Act, range from $100 to $50,000 per violation, with an annual cap of $1.5 million for repeated violations of the same provision; these amounts are adjusted periodically for inflation. Penalties on this scale can severely damage an organization's financial standing and reputation.

Moreover, HIPAA compliance helps healthcare organizations foster patient trust. When patients know that their personal health information is handled securely, they are more likely to choose and stay with providers who prioritize privacy and data protection.

Key HIPAA Compliance Solutions for Healthcare Providers

1. Risk Assessment and Management

Regular risk assessments are a cornerstone of HIPAA compliance. A thorough risk assessment identifies potential vulnerabilities in your systems and processes and allows you to address them proactively. It also helps you ensure that any changes to your systems or practices don’t inadvertently expose PHI to unauthorized access.

2. Data Encryption

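Encrypting sensitive patient data is one of the most effective ways to protect it from unauthorized access. Both data in transit (e.g., during communications) and data at rest (e.g., stored data) should be encrypted. The HIPAA Security Rule classifies encryption as an "addressable" safeguard, so an organization that chooses not to encrypt must document why and implement an equivalent alternative.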

3. Access Controls

Access to PHI should be limited to authorized personnel only. Implementing role-based access controls (RBAC) ensures that staff members only access the data they need to perform their jobs. Additionally, multi-factor authentication (MFA) adds an extra layer of security to user logins.
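As a rough illustration of the role-based model described here, the following Python sketch maps roles to permissions and denies anything not explicitly granted. The role names, permission names, and the `is_allowed` helper are hypothetical and chosen only for the example; a real system would load its policy from configuration and tie it to authenticated identities.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_PHI = auto()
    WRITE_PHI = auto()
    VIEW_BILLING = auto()


# Hypothetical role-to-permission mapping, hard-coded only for illustration.
ROLE_PERMISSIONS = {
    "physician": {Permission.READ_PHI, Permission.WRITE_PHI},
    "nurse": {Permission.READ_PHI},
    "billing_clerk": {Permission.VIEW_BILLING},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Return True only if the role is known and explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Unknown roles fall through to an empty permission set, so the default is to deny access.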

4. Employee Training

Employees must be regularly trained on HIPAA rules and data security best practices. Staff should be aware of how to properly handle, store, and transmit PHI to avoid inadvertent breaches.

5. Audit Trails and Monitoring

Implementing detailed audit trails and continuous monitoring can help detect unauthorized access to PHI in real time. This ensures that any suspicious activity is identified and mitigated promptly.

6. Incident Response Plan

An incident response plan outlines the steps to take in the event of a data breach. HIPAA's Breach Notification Rule requires organizations to notify affected individuals without unreasonable delay, and no later than 60 calendar days after the breach is discovered, so having a plan in place is crucial to meeting this requirement.
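An incident response plan can track the notification deadline automatically. The sketch below assumes the 60-day window is counted in calendar days from the date the breach was discovered; the function names are illustrative only, and the 60 days is an outer limit, not a target.

```python
from datetime import date, timedelta

# Outer limit for notifying affected individuals, in calendar days.
NOTIFICATION_WINDOW_DAYS = 60


def notification_deadline(discovered_on: date) -> date:
    """Latest date by which affected individuals should be notified."""
    return discovered_on + timedelta(days=NOTIFICATION_WINDOW_DAYS)


def days_remaining(discovered_on: date, today: date) -> int:
    """Days left before the deadline (negative if the deadline has passed)."""
    return (notification_deadline(discovered_on) - today).days
```

For example, a breach discovered on 10 January 2025 would have a deadline of 11 March 2025 under these assumptions.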

How Securify Can Help with HIPAA Compliance

For healthcare organizations, maintaining HIPAA compliance can be overwhelming. Fortunately, Securify provides expert HIPAA compliance solutions to guide healthcare providers through every step of the compliance process. From risk assessments and encryption strategies to developing comprehensive incident response plans, Securify has the tools and expertise to ensure your organization meets all HIPAA standards.

With Securify’s HIPAA compliance solutions, you can rest assured that your patient data is secure, and you are meeting all necessary regulatory requirements. Our team of experts works closely with you to create customized compliance strategies that address your specific needs and risks.

By partnering with Securify, you protect not just your organization but also the trust and well-being of your patients.


Why You Need DevOps Consulting for Kubernetes Scaling

As technology advances and the landscape shifts quickly, scaling Kubernetes clusters has become a challenge for almost every organization. The more companies move toward containerized applications, the harder it gets to scale multiple Kubernetes clusters. In this article, you’ll learn about the growing challenges of scaling Kubernetes deployments, along with best practices for scaling them successfully with expert guidance.

Kubernetes (K8s), the open-source platform used to deploy and manage containerized applications, is now the norm in containerized environments. As businesses adopt DevOps services in the USA for their flexibility and scalability, managing Kubernetes clusters at scale has become a fundamental part of the business.

Understanding Kubernetes Clusters

Before moving on to the challenges and best practices, let’s start with what Kubernetes clusters are and why they are necessary for modern app deployments. A Kubernetes cluster is a set of connected nodes (physical or virtual machines) that run containerized software. K8s clusters are scalable and dynamic, which makes them well suited to large applications accessed from multiple locations.

The Growing Complexity Organizations Must Address

Kubernetes is becoming the default container orchestration solution for many companies. The complexity lies in scaling: keeping many clusters in working order is challenging. Teams face problems with consistency, security, and performance, and the most common challenges are listed below.

Key Challenges in Managing Large-Scale K8s Deployments

Configuration Management: Configuring many different Kubernetes clusters can be a nightmare. Enterprises need uniform policies, security settings, and resource allocations, with enough flexibility for unique workloads.

Resource Optimization: DevOps consulting services often emphasize that resources should be distributed properly, so that clusters are not overprovisioned and applications still run smoothly.

Security and Compliance: Securing distributed Kubernetes clusters requires solid policies and monitoring. Companies have to apply standard security controls while meeting different compliance standards.

Monitoring and Observability: You’ll need advanced monitoring solutions to see the health and performance of many clusters. DevOps services in the USA focus on complete observability tooling for efficient cluster management.

Best Practices for Scaling Kubernetes

Implement Infrastructure as Code (IaC)

Apply GitOps processes to cluster configuration.

Use version control for all cluster settings.

Automate cluster creation and administration.

Adopt Multi-Cluster Management Tools

Modern organizations should:

Adopt dedicated cluster management tools.

Utilize centralized control planes.

Optimize CI/CD Pipelines

K8s is well suited to automating CI/CD pipelines, but the pipelines themselves need to be optimized. With a technique like blue-green deployments or canary releases, you can validate a new version before it serves all traffic instead of updating the whole system in place. This reduces downtime and helps ensure that only stable releases reach production.
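To make the canary idea concrete, here is a minimal Python sketch of percentage-based routing. It assumes requests carry a stable user identifier and that the routing decision is made in application code; in practice this is usually handled by an ingress controller or service mesh. The function name and hashing scheme are illustrative choices, not part of Kubernetes.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary release.

    Each user hashes to a fixed bucket in 0..99, so the same user always
    sees the same version, and raising canary_percent only adds users.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < canary_percent
```

Because the bucket depends only on the user ID, widening the rollout from 5% to 25% keeps the original 5% of users on the canary.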

Also, containerization with Kubernetes can enable faster and more reliable builds, since developers can build and test apps in isolated environments. The pipeline should be tightly integrated with your Kubernetes clusters so that updates flow properly.

Establish Standardization

When you hire DevOps developers, always make sure they:

Create standardized templates

Implement consistent naming conventions.

Develop reusable deployment patterns.

Optimize Resource Management

Effective resource management includes:

Implementing auto-scaling policies

Adopting quotas and limits on resource allocations.

Using the cluster autoscaler for node management
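For the auto-scaling policies in the list above, the Kubernetes Horizontal Pod Autoscaler documentation gives the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces only that arithmetic, with a min/max clamp; the function name and default bounds are assumptions, and the real controller also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified HPA-style replica calculation (no tolerance or stabilization)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as an autoscaling policy would.
    return max(min_replicas, min(max_replicas, raw))
```

With 4 replicas at 90% average CPU against a 60% target, the calculation asks for 6 replicas; at 30% it asks for 2.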

Enhance Security Measures

Security best practices involve:

Role-based access control (RBAC): Restrict what users can do based on their role.

Network policies: Isolate workloads by restricting network traffic between them.

Updates and security audits: Apply upgrades and run security audits on an ongoing basis.

Leverage DevOps Services and Expertise

Hire dedicated DevOps developers or take advantage of DevOps consulting services like Spiral Mantra to get a full range of services under one roof. The company comprises a team of experts who have set up, operated, and optimized Kubernetes at enterprise scale. By employing DevOps developers or DevOps services in the USA, organizations can be sure that they are prepared to address Kubernetes issues efficiently. DevOps consultants can also help automate and standardize K8s alongside existing workflows and processes.

Spiral Mantra DevOps Consulting Services

Spiral Mantra is a DevOps consulting service in the USA specializing in Azure, Google Cloud Platform, and AWS. We are experts in CI/CD integration for automated deployment pipelines and in containerization, with Kubernetes developers for automated container orchestration. We offer services from the initial evaluation through deployment and management, with skilled experts to make sure your organization achieves the best performance.

Frequently Asked Questions (FAQs)

Q. How can businesses manage security on different K8s clusters?

Businesses can manage security by conducting annual security audits, running security scanners, and reviewing network policies. With the right DevOps consulting services, you can develop and establish robust security plans.

Q. What is DevOps in Kubernetes management?

In Kubernetes management, DevOps means applying practices such as automation, infrastructure as code, continuous integration and deployment, security, and compliance.

Q. What are the major challenges developers face when managing clusters at scale?

Security concerns, resource management, and complexity are the most common challenges. In addition, CI/CD pipeline management is another major source of complexity for developers.

Conclusion

Scaling Kubernetes clusters takes an integrated strategy with the right tools, methods, and knowledge. Automation, standardization, and security should be the main objectives, and professional DevOps consulting services can help organizations get the most out of their K8s implementations. If companies follow these best practices and partner with skilled Kubernetes developers, they can run containerized applications efficiently and reliably on a large scale.


Safeguarding Privacy and Security in Fast-Paced Data Processing

In the current era of data-centric operations, rapid data processing is essential across many industries, fostering innovation, improving efficiency, and offering a competitive advantage.

However, as the velocity and volume of data processing increase, so do the challenges related to data privacy and security. This article explores the critical issues and best practices in maintaining data integrity and confidentiality in the era of rapid data processing.

The Importance of Data Privacy and Security

Data privacy ensures that personal and sensitive information is collected, stored, and used in compliance with legal and ethical standards, safeguarding individuals' rights. Data security, on the other hand, involves protecting data from unauthorized access, breaches, and malicious attacks. Together, they form the foundation of trust in digital systems and processes.

Challenges in Rapid Data Processing

Volume and Velocity: The sheer amount of data generated and processed in real-time poses significant security risks.

Complex Data Environments: Modern data processing often involves distributed systems, cloud services, and multiple third-party vendors, creating a complex ecosystem that is challenging to secure comprehensively.

Regulatory Compliance: With stringent regulations like GDPR, CCPA, and HIPAA, organizations must ensure that their rapid data processing activities comply with data privacy laws.

Anonymization and De-identification: Rapid data processing systems must implement robust anonymization techniques to protect individual identities.

Best Practices for Ensuring Data Privacy and Security

Data Encryption: Encrypting data at rest and in transit is crucial to prevent unauthorized access.

Access Controls: Role-based access controls (RBAC) and multi-factor authentication (MFA) are effective measures.

Regular Audits and Monitoring: Continuous monitoring and regular security audits help identify and mitigate vulnerabilities in data processing systems.

Data Minimization: Collecting and processing only the necessary data reduces exposure risks.

Compliance Management: Staying updated with regulatory requirements and integrating compliance checks into the data processing workflow ensures adherence to legal standards.

Robust Anonymization Techniques: Employing advanced anonymization methods and regularly updating them can reduce the risk of re-identification.
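The data minimization practice in the list above can be enforced at the point of ingestion. The Python sketch below keeps only an explicit allowlist of fields and drops everything else; the field names are hypothetical and stand in for whatever a given study actually needs.

```python
# Hypothetical allowlist: only the fields the analysis genuinely requires.
ALLOWED_FIELDS = frozenset({"respondent_id", "age_band", "region", "answers"})


def minimize(record: dict, allowed: frozenset = ALLOWED_FIELDS) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in allowed}
```

An allowlist is used here (and not a blocklist) so that a newly added sensitive field is excluded by default until someone decides it is needed.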

Conclusion

As organizations leverage rapid data processing for competitive advantage, prioritizing data privacy and security becomes increasingly critical. By adopting best practices and staying vigilant against evolving threats, businesses can safeguard their data assets, maintain regulatory compliance, and uphold the trust of their customers and stakeholders.

To know more: project management service company

data processing services

Also read: https://stagnateresearch.com/blog/data-privacy-and-security-in-rapid-data-processing-a-guide-for-market-research-professionals/

#onlineresearch#marketresearch#datacollection#project management#survey research#data collection company


Ensuring Data Security in Online Market Research while using AI to Collect Data

In the realm of online market research, the integration of Artificial Intelligence (AI) has revolutionized data collection processes, offering unparalleled efficiency and insights. However, alongside these advancements, ensuring robust data security measures is paramount to safeguarding sensitive information.

Encryption Protocols: Implementing robust encryption is the first line of defense in protecting data confidentiality. Using industry-standard algorithms to encrypt data both in transit and at rest mitigates the risk of unauthorized access.

Access Controls: Implementing strict access controls ensures that only authorized personnel can access sensitive data. Role-based access controls (RBAC) limit access to data based on predefined roles and responsibilities, minimizing the potential for data breaches.

Anonymization and Pseudonymization: Employing techniques such as anonymization and pseudonymization reduces the risk of exposing personally identifiable information (PII). By replacing identifiable information with artificial identifiers, researchers can analyze data while preserving individual privacy.

Data Minimization: Adhering to the principle of data minimization involves collecting only the necessary data required for research purposes. By reducing the volume of sensitive information stored, organizations can minimize the potential impact of a data breach.

Secure Data Transmission: Using encrypted connections such as TLS (the successor to the now-deprecated SSL protocol) keeps data transmitted between clients and servers confidential. TLS encrypts and authenticates traffic between the two endpoints of a connection, safeguarding against eavesdropping and tampering in transit.

Regular Security Audits: Conducting regular security audits and assessments helps identify vulnerabilities and areas for improvement within existing security frameworks. By proactively addressing security gaps, organizations can enhance their resilience to potential threats.

Compliance with Regulations: Adhering to relevant data protection regulations such as GDPR, CCPA, and HIPAA ensures legal compliance and fosters trust among participants. Compliance frameworks outline specific requirements for data handling, storage, and processing, guiding organizations in implementing robust security measures.

Continuous Monitoring and Response: Implementing real-time monitoring systems allows organizations to detect and respond to security incidents promptly. Automated alerting mechanisms notify administrators of suspicious activities, enabling swift intervention to mitigate potential risks.

Employee Training and Awareness: Educating employees about data security best practices and the importance of safeguarding sensitive information is critical in maintaining a secure environment. Training programs raise awareness about common security threats and equip staff with the knowledge to identify and respond appropriately to potential risks.

Vendor Due Diligence: When outsourcing data collection or processing tasks to third-party vendors, conducting thorough due diligence is essential. Assessing vendor security practices and ensuring compliance with data protection standards mitigate the risk of data breaches arising from external sources.
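The pseudonymization item in the list above, replacing identifiable information with artificial identifiers, can be sketched with a keyed hash. The example below uses HMAC-SHA256 from the Python standard library; the function name and the choice to normalize case and whitespace are assumptions made for illustration. Whoever holds the key can re-link pseudonyms to the original identifiers, so this is pseudonymization, not anonymization.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier (e.g., an email) with a stable keyed pseudonym."""
    # Normalize so that trivially different spellings map to the same pseudonym.
    normalized = identifier.strip().lower()
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
```

The same identifier and key always yield the same pseudonym, so records can still be joined for analysis without exposing the underlying value.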

By implementing these comprehensive strategies, organizations can uphold the integrity and confidentiality of data collected through AI-powered online market research. Prioritizing data security not only fosters trust with participants but also mitigates the risk of reputational damage and regulatory non-compliance.

Also read:

The Right Approach to Designing & Conducting Online Surveys

Know more: Online Community Management Software

panel management platform

Online Project Management Platform

#market research#onlineresearch#samplemanagement#panelmanagement#communitypanel#datacollection#datainsights


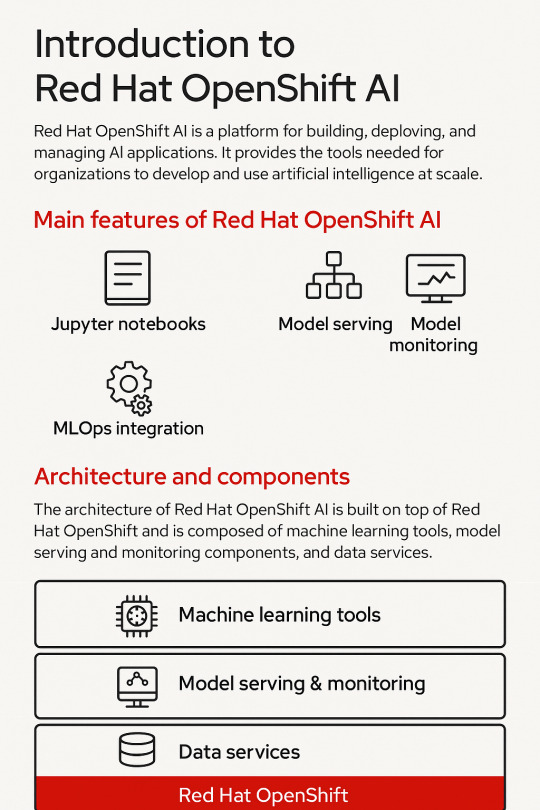

Introduction to Red Hat OpenShift AI: Features, Architecture & Components

In today’s data-driven world, organizations need a scalable, secure, and flexible platform to build, deploy, and manage artificial intelligence (AI) and machine learning (ML) models. Red Hat OpenShift AI is built precisely for that. It provides a consistent, Kubernetes-native platform for MLOps, integrating open-source tools, enterprise-grade support, and cloud-native flexibility.

Let’s break down the key features, architecture, and components that make OpenShift AI a powerful platform for AI innovation.

🔍 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly known as OpenShift Data Science) is a fully supported, enterprise-ready platform that brings together tools for data scientists, ML engineers, and DevOps teams. It enables rapid model development, training, and deployment on the Red Hat OpenShift Container Platform.

🚀 Key Features of OpenShift AI

1. Built for MLOps

OpenShift AI supports the entire ML lifecycle—from experimentation to deployment—within a consistent, containerized environment.

2. Integrated Jupyter Notebooks

Data scientists can use Jupyter notebooks pre-integrated into the platform, allowing quick experimentation with data and models.

3. Model Training and Serving

Use Kubernetes to scale model training jobs and deploy inference services using tools like KServe and Seldon Core.

4. Security and Governance

OpenShift AI integrates enterprise-grade security, role-based access controls (RBAC), and policy enforcement using OpenShift’s built-in features.

5. Support for Open Source Tools

Seamless integration with open-source frameworks like TensorFlow, PyTorch, Scikit-learn, and ONNX for maximum flexibility.

6. Hybrid and Multicloud Ready

You can run OpenShift AI on any OpenShift cluster—on-premise or across cloud providers like AWS, Azure, and GCP.

🧠 OpenShift AI Architecture Overview

Red Hat OpenShift AI builds upon OpenShift’s robust Kubernetes platform, adding specific components to support the AI/ML workflows. The architecture broadly consists of:

1. User Interface Layer

JupyterHub: Multi-user Jupyter notebook support.

Dashboard: UI for managing projects, models, and pipelines.

2. Model Development Layer

Notebooks: Containerized environments with GPU/CPU options.

Data Connectors: Access to S3, Ceph, or other object storage for datasets.

3. Training and Pipeline Layer

Open Data Hub and Kubeflow Pipelines: Automate ML workflows.

Ray, MPI, and Horovod: For distributed training jobs.

4. Inference Layer

KServe/Seldon: Model serving at scale with REST and gRPC endpoints.

Model Monitoring: Metrics and performance tracking for live models.

5. Storage and Resource Management

Ceph / OpenShift Data Foundation: Persistent storage for model artifacts and datasets.

GPU Scheduling and Node Management: Leverages OpenShift for optimized hardware utilization.

🧩 Core Components of OpenShift AI

| Component | Description |
| --- | --- |
| JupyterHub | Web-based development interface for notebooks |
| KServe/Seldon | Inference serving engines with auto-scaling |
| Open Data Hub | ML platform tools including Kafka, Spark, and more |
| Kubeflow Pipelines | Workflow orchestration for training pipelines |
| ModelMesh | Scalable, multi-model serving |
| Prometheus + Grafana | Monitoring and dashboarding for models and infrastructure |
| OpenShift Pipelines | CI/CD for ML workflows using Tekton |

🌎 Use Cases

Financial Services: Fraud detection using real-time ML models

Healthcare: Predictive diagnostics and patient risk models

Retail: Personalized recommendations powered by AI

Manufacturing: Predictive maintenance and quality control

🏁 Final Thoughts

Red Hat OpenShift AI brings together the best of Kubernetes, open-source innovation, and enterprise-level security to enable real-world AI at scale. Whether you’re building a simple classifier or deploying a complex deep learning pipeline, OpenShift AI provides a unified, scalable, and production-grade platform.

For more info, Kindly follow: Hawkstack Technologies


HIPAA Compliance in Software Development: A Complete Guide for Developers

In the healthcare industry, protecting sensitive patient data is not just good practice—it's a legal requirement. For software developers building healthcare applications or platforms, ensuring HIPAA compliance (Health Insurance Portability and Accountability Act) is essential. Non-compliance can lead to serious consequences, including hefty fines, loss of trust, and even legal action.

This article outlines what HIPAA compliant software means for software development, the key requirements, and best practices to follow throughout the development lifecycle.

What Is HIPAA and Why Does It Matter?

HIPAA is a U.S. federal law enacted in 1996 to safeguard patient health information. It applies to any entity that handles Protected Health Information (PHI)—this includes healthcare providers, insurance companies, and their business associates, such as software developers.

If you're developing an app or software that stores, processes, or transmits PHI, HIPAA compliance is mandatory.

Key HIPAA Rules Developers Must Know:

1. Risk Analysis and Assessment

Conduct a thorough risk assessment to identify vulnerabilities.

Regularly update the analysis as the software evolves.

2. Data Encryption

Encrypt data at rest and in transit using industry-standard algorithms and protocols such as AES and TLS.

Avoid storing PHI on mobile devices unless absolutely necessary—and if you do, encrypt it.

3. Access Controls

Implement role-based access controls (RBAC).

Use strong authentication mechanisms (e.g., multi-factor authentication).

4. Audit Logs and Monitoring

Maintain logs of who accessed PHI, when, and what changes were made.

Monitor access patterns for unusual activity.
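One way to record who accessed PHI, when, and what they did is an append-only log in which each entry includes a hash of the previous one, so later edits become detectable. The Python sketch below illustrates that idea only; the entry fields and function names are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """Hash every field of the entry except its own stored hash."""
    payload = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, action: str, record_id: str, timestamp: str = "") -> dict:
    """Append an audit entry chained to the previous entry's hash."""
    entry = {
        "user": user,
        "action": action,
        "record_id": record_id,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return False if any entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any field of an earlier entry breaks that entry's hash, and removing an entry breaks the link from the one that follows it.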

5. Secure Hosting

Choose cloud providers that support HIPAA workloads and will sign a BAA (e.g., AWS, Azure, Google Cloud).

Sign Business Associate Agreements (BAAs) with all third-party vendors.

6. Data Backup and Disaster Recovery

Ensure data is regularly backed up.

Develop and test disaster recovery plans.

HIPAA Compliance Testing

During QA and security testing, ensure you:

Perform vulnerability scanning

Conduct penetration testing

Validate encryption and data isolation mechanisms

Review all third-party tools and libraries for compliance risks

Final Thoughts

HIPAA compliant software development is more than a checklist—it's a continuous commitment to data security and patient privacy. By integrating HIPAA requirements into the development lifecycle, teams can build trustworthy, secure, and legally compliant healthcare software solutions.

Whether you're building a patient portal, telemedicine platform, or health tracking app, compliance from day one is the safest and smartest approach.


Master Production-Grade Kubernetes with Red Hat OpenShift Administration III (DO380)

When you're ready to take your OpenShift skills to the next level, Red Hat OpenShift Administration III (DO380) is the course that delivers. It’s designed for system administrators and DevOps professionals who want to master managing and scaling OpenShift clusters in production environments.

At HawkStack, we’ve seen first-hand how this course transforms tech teams—helping them build scalable, secure, and resilient applications using Red Hat’s most powerful platform.

Why DO380 Matters

OpenShift isn’t just another Kubernetes distribution. It’s an enterprise-ready platform built by Red Hat, trusted by some of the biggest organizations around the world.

But managing OpenShift in a live production environment requires more than just basic knowledge. That's where DO380 steps in. This course gives you the skills to:

Configure cluster scaling

Automate management tasks

Secure and monitor applications

Handle multi-tenant workloads

Optimize performance and availability

In short, it equips you to keep production clusters running smoothly under pressure.

What You’ll Learn in DO380

Red Hat OpenShift Administration III dives deep into advanced cluster operations, covering:

✅ Day 2 Operations: Learn to troubleshoot and tune OpenShift clusters for maximum reliability.

✅ Performance Optimization: Get hands-on with tuning resource limits, autoscaling, and load balancing.

✅ Monitoring & Logging: Set up Prometheus, Grafana, and EFK (Elasticsearch, Fluentd, Kibana) stacks for full-stack observability.

✅ Security Best Practices: Configure role-based access control (RBAC), network policies, and more to protect sensitive workloads.

Who Should Take DO380?

This course is ideal for:

Red Hat Certified System Administrators (RHCSAs)

OpenShift administrators with real-world experience

DevOps professionals managing container workloads

Anyone aiming for Red Hat Certified Specialist in OpenShift Administration

If you're managing containerized applications and want to run them securely and at scale—DO380 is for you.

Learn with HawkStack

At HawkStack Technologies, we offer expert-led training for DO380, along with access to Red Hat Learning Subscription (RHLS), hands-on labs, and mentoring from certified professionals.

Why choose us?

🔴 Red Hat Certified Instructors

📘 Tailored learning plans

💼 Real-world project exposure

🎓 100% exam-focused support

Our students don’t just pass exams - they build real skills.

Final Thoughts

Red Hat OpenShift Administration III (DO380) is more than just a training course; it’s a gateway to high-performance DevOps and production-grade Kubernetes. If you're serious about advancing your career in cloud-native technologies, this is the course that sets you apart.

Let HawkStack guide your journey with Red Hat. Book your seat today and start building the future of enterprise IT.

For more details www.hawkstack.com


What to Look for in a Secure and Scalable Mail API

In today’s fast-paced digital economy, businesses are constantly looking for ways to streamline communications while ensuring data privacy and delivery accuracy. A Mail API is one of the most effective tools for automating transactional and marketing messages, offering integration, reliability, and performance at scale. But with numerous providers and technologies available, how do you choose a Mail API that’s both secure and scalable?

Selecting the right Mail API isn’t just about sending emails or physical documents—it’s about ensuring sensitive data is protected, messages are delivered efficiently, and the platform grows with your business. In this comprehensive guide, we’ll break down the key features, compliance requirements, and performance benchmarks you should evaluate when choosing a Mail API for long-term success.

Prioritizing End-to-End Data Security

Security should be the foremost consideration when evaluating a Mail API, especially if your business handles sensitive or regulated information. Look for APIs that offer end-to-end encryption for data at rest and in transit. This ensures that your documents, personal details, and transactional content are protected from unauthorized access.

Secure token-based authentication and OAuth 2.0 support are also essential to prevent unauthorized API usage. Additionally, platforms that perform regular penetration testing and follow secure development practices offer added assurance against vulnerabilities.

Evaluating Compliance and Industry Certifications

If you operate in industries such as healthcare, finance, or legal services, regulatory compliance is non-negotiable. A trustworthy Mail API should comply with key standards such as SOC 2 Type II, HIPAA (for U.S. healthcare data), GDPR (for European users), and PCI DSS (for payment data).

Beyond compliance checkboxes, consider whether the provider offers audit trails, access logs, and data residency options. These features not only help you meet legal requirements but also strengthen internal governance.

Assessing API Reliability and Uptime Guarantees

A secure platform is only as good as its availability. Downtime or API latency can disrupt time-sensitive communications and erode customer trust. When selecting a Mail API, review the provider’s service-level agreements (SLAs), uptime guarantees, and historical performance data.

Top-tier providers offer 99.99% or higher uptime and operate across multiple availability zones to ensure redundancy. Look for platforms that provide detailed status dashboards and real-time monitoring so that your engineering and operations teams are always in the loop.
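An uptime percentage is easier to judge once it is converted into a downtime budget. The short Python sketch below does that conversion, assuming a 365-day year; the function name is illustrative, and actual SLAs define their own measurement periods and exclusions.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a 365-day year


def allowed_downtime_minutes(uptime_percent: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime, in minutes, that still satisfies the uptime guarantee."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (100 - uptime_percent) / 100 * period_minutes
```

Under these assumptions, 99.99% uptime allows roughly 52.6 minutes of downtime per year, while 99.9% allows roughly 525.6 minutes (about 8.8 hours).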

Checking for Granular Access Controls

Role-based access control (RBAC) is a must-have feature, especially for teams that handle different facets of your mail workflow. Whether it's developers managing API keys, marketing teams executing campaigns, or compliance officers reviewing logs—each user should have the appropriate level of access.

An ideal Mail API platform supports fine-tuned permission settings, API usage policies, and the ability to restrict IP addresses, helping minimize insider risk and improve operational transparency.
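As a rough illustration of how role permissions and IP restrictions combine, here is a minimal sketch. The role names, permission names, and network range are all hypothetical; a real provider defines its own roles and enforces them on its side.

```python
import ipaddress

# Hypothetical roles and permissions for a mail workflow.
ROLE_PERMISSIONS = {
    "developer": {"manage_api_keys", "send_mail"},
    "marketer": {"send_mail", "view_reports"},
    "compliance": {"view_logs", "view_reports"},
}

# Hypothetical allowlist of office networks (a documentation-only range).
ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]


def is_allowed(role: str, permission: str, client_ip: str) -> bool:
    """Grant access only if the IP is allowlisted and the role holds the permission."""
    ip = ipaddress.ip_address(client_ip)
    ip_ok = any(ip in network for network in ALLOWED_NETWORKS)
    return ip_ok and permission in ROLE_PERMISSIONS.get(role, set())
```

An unknown role maps to an empty permission set, so the check denies by default rather than granting access by accident.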

Understanding Customization Capabilities

Flexibility is critical to ensuring your Mail API fits your workflow—not the other way around. Look for an API that allows for custom document templates, dynamic variables, and logic-based routing. Whether you’re sending marketing messages, invoices, or legal notices, the ability to tailor messages per recipient is essential.
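A template with dynamic variables can be sketched with Python's standard `string.Template`. The template text and field names below are invented for illustration; a Mail API would typically offer its own template syntax.

```python
from string import Template

# Hypothetical invoice template; the variable names are illustrative only.
INVOICE_TEMPLATE = Template(
    "Dear $name, your invoice $invoice_id for $amount is due on $due_date."
)


def render(recipient: dict) -> str:
    """Fill the template for one recipient.

    substitute() raises KeyError when a variable is missing, which surfaces
    incomplete recipient data before anything is sent.
    """
    return INVOICE_TEMPLATE.substitute(recipient)
```

Failing loudly on a missing variable is a deliberate choice here: a half-filled legal notice or invoice is usually worse than a rejected one.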

Also, consider if the platform supports international characters, multi-language content, and custom branding, which are especially useful for global businesses and customer segmentation.

Analyzing Delivery Infrastructure and Throughput

Scalability is about more than handling volume—it’s about delivering large volumes without sacrificing speed or accuracy. A robust Mail API must be capable of handling bursts of traffic without queuing delays, failed deliveries, or IP reputation issues.

Check the API’s throughput rates (messages per second or per minute) and ask if the infrastructure is designed for horizontal scaling. For physical mail APIs, inquire about print facility redundancies, geographic distribution, and logistics partnerships to ensure reliable delivery.

Exploring Real-Time Tracking and Reporting Features

For any organization, visibility into mail performance is essential for making informed decisions. A quality Mail API offers real-time tracking capabilities—whether it's delivery status, open rates, or bounce data—directly within your dashboard or via webhooks.

Advanced platforms also include reporting tools that let you monitor usage, segment results by campaign, and export data for analysis. These insights can help you optimize timing, content, and targeting for future sends.

Looking Into Global Delivery Support

If your audience spans multiple countries, your Mail API must support international delivery. This includes handling different address formats, postal standards, and customs requirements. For physical mail, check whether the provider operates or partners with facilities in different regions to reduce delivery times and shipping costs.

For digital mail delivery, ensure that international text is supported through Unicode (for example, UTF-8 encoding) and that local data laws are respected. A provider that handles global compliance helps you avoid regulatory pitfalls in cross-border communications.

Evaluating Ease of Integration

A Mail API should fit seamlessly into your existing technology stack. Look for platforms that provide comprehensive developer documentation, SDKs in multiple languages, and prebuilt integrations with common platforms like Salesforce, HubSpot, and Zapier.

The API should support RESTful architecture and standard formats such as JSON or XML. Well-structured endpoints and intuitive naming conventions also reduce developer ramp-up time and simplify long-term maintenance.

Considering Onboarding and Support Options

Even the most powerful API is only as useful as the support behind it. Evaluate the onboarding process, availability of dedicated success managers, and training resources. Does the provider offer guided implementation? Do they have sandbox environments for testing? Are there real-world tutorials and best practices?

Reliable support channels—email, live chat, or even a dedicated Slack—can make a big difference, especially during technical incidents or high-priority launches. 24/7 customer support is crucial if you operate in multiple time zones or industries with critical messaging timelines.

Reviewing API Rate Limits and Fair Usage Policies

Many Mail APIs impose rate limits or quotas to prevent abuse and ensure fair access. Before committing to a provider, understand their API usage policies—what are the daily or hourly limits, and what happens when you exceed them?

Providers that allow for burst traffic, with options for custom SLAs or dedicated throughput lanes, offer more flexibility for high-volume senders. Rate limiting should be transparent, adjustable, and aligned with your projected growth.
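One common way to allow bursts while capping the sustained rate is a token bucket. The sketch below is a client-side limiter written under that assumption; it is not any particular provider's algorithm, and the class and parameter names are illustrative.

```python
import time


class TokenBucket:
    """Client-side limiter: `rate` requests per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the behavior can be tested deterministically
        self.tokens = float(capacity)
        self.updated = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; return False when the caller should wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Throttling on the client like this keeps you under the provider's published limit instead of discovering it through rejected requests.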

Monitoring Webhook Stability and Customization

Webhooks are essential for real-time event updates—like delivery confirmations, message failures, or address corrections. A high-quality Mail API should provide robust webhook support with custom triggers, payload formats, and retry logic.

Ensure that the webhook architecture supports HMAC signature validation, delivers only to secure HTTPS endpoints, and is resilient to downtime. The ability to filter or suppress certain events can also reduce noise and improve integration with your internal systems.
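HMAC validation means the receiver recomputes a keyed hash of the raw request body and compares it with the signature the provider sent. The sketch below assumes HMAC-SHA256 with a hex-encoded signature; the actual algorithm, encoding, and header name vary by provider, so treat these as assumptions.

```python
import hashlib
import hmac


def sign(secret: bytes, payload: bytes) -> str:
    """Compute a hex HMAC-SHA256 signature over the raw payload bytes."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def is_valid_signature(secret: bytes, payload: bytes, received_signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = sign(secret, payload)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(expected.encode(), received_signature.encode())
```

The comparison must run on the exact bytes received, before any JSON parsing or re-serialization, or a valid signature may fail to match.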

Evaluating Cost Structures and Predictability

Pricing should be transparent and aligned with your usage patterns. Some APIs offer pay-as-you-go models, while others rely on tiered pricing or enterprise agreements. Consider whether the platform charges based on volume, pages, regions, or additional features like address verification.

Hidden fees can quickly erode your ROI, so look for providers that provide detailed invoices and usage dashboards. Predictable billing allows finance teams to forecast costs more accurately and avoid unpleasant surprises.

Checking SLA Enforcement and Penalty Clauses

It’s not enough for a provider to claim reliability—they need to back it up. Ask for a copy of the service-level agreement and evaluate the guarantees around delivery timelines, uptime, and support responsiveness.

Penalty clauses—such as credits for downtime or failed deliveries—demonstrate the provider’s confidence in their infrastructure. This accountability is especially critical for industries where timing and compliance are business-critical.

Planning for Future Innovations

Technology is always evolving, and your Mail API should evolve with it. Choose a provider with a clear product roadmap, history of regular updates, and a commitment to innovation. Are they investing in AI-powered optimization, smart routing, or document automation?

Being aligned with a forward-looking provider ensures that your communications strategy will stay ahead of the curve, rather than being limited by outdated technology.

Final Thoughts

A secure and scalable Mail API can transform how your organization communicates—driving operational efficiency, improving customer experience, and reducing risk. But not all APIs are created equal. The right solution will strike a balance between security, performance, flexibility, and usability.

When evaluating a provider, look beyond surface-level features and dig into the architectural, compliance, and support details. Focus on how the platform will integrate with your unique needs today and scale as your business evolves.

Ultimately, investing in the right Mail API isn’t just about sending messages—it’s about building a smarter, safer, and more sustainable communication infrastructure that supports growth for years to come.

0 notes

Text

Flawless HR Data Integration: Oracle Fusion HCM Breakthrough Strategies

Introduction

In today's fast-paced digital world, businesses are rapidly transitioning to cloud-based solutions to streamline HR operations. Oracle Fusion HCM (Human Capital Management) stands out as a game-changer, offering robust data integration strategies that help organizations unify and optimize workforce management. Whether you're migrating from legacy systems or integrating third-party applications, Oracle Fusion HCM is designed to support HR data integration with minimal disruption.

For those aiming to master this cutting-edge HR technology, Oracle Fusion HCM Cloud Online Training is the gateway to unlocking your career potential. This article delves into the breakthrough strategies that make Oracle Fusion HCM a leader in seamless data integration. It also underscores the indispensable role of Oracle Fusion HCM Online Training for HR and IT professionals, offering them the opportunity to gain hands-on experience and expertise in this field.

Understanding HR Data Integration in Oracle Fusion HCM

HR data integration is critical in synchronizing employee records, payroll details, benefits, and performance analytics across multiple platforms. Traditional HR systems often struggle with fragmented data, leading to inefficiencies, errors, and compliance risks. Oracle Fusion HCM Training equips professionals with the knowledge to effectively integrate HR data while ensuring security, accuracy, and compliance.

Key Features of Oracle Fusion HCM Data Integration

Prebuilt Connectors: Oracle Fusion HCM offers prebuilt connectors that allow seamless integration with third-party applications such as payroll, benefits providers, and talent management tools.

HCM Data Loader (HDL): A powerful tool that enables bulk data loading, reducing the time and effort required for data migration.

Application Programming Interfaces (APIs): Oracle Fusion HCM provides robust REST and SOAP APIs for real-time data exchange across multiple systems.

Fast Formula & Business Intelligence: Advanced data transformation capabilities ensure high-quality data reporting and insights.

Role-Based Security (RBAC): Secure integration ensures only authorized users can access sensitive HR data.

Breakthrough Strategies for Flawless HR Data Integration

1. Plan & Standardize Data Migration

Before initiating any data migration process, defining a clear integration roadmap is essential. Oracle HCM Course modules emphasize the importance of:

Identifying data sources and cleansing inconsistencies before migration.

Using standard data formats to avoid compatibility issues.

Creating a phased migration plan to minimize downtime and disruptions.

2. Leverage Oracle HCM Data Loader (HDL) for Bulk Processing

Oracle Fusion HCM's HDL tool is a game-changer for handling bulk data imports. This tool simplifies:

Importing employee records, payroll data, and performance reports efficiently.

Mapping data fields accurately to ensure smooth migration.

Validating errors before committing changes, reducing rework.
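The idea of validating before committing can be sketched generically. The code below does not use HCM Data Loader or its file format; it only illustrates splitting records into loadable and rejected sets before a bulk import, and the field names are hypothetical.

```python
from datetime import date

# Hypothetical required fields; a real load defines its own attributes.
REQUIRED_FIELDS = ("person_number", "last_name", "hire_date")


def validate_record(record: dict) -> list:
    """Return a list of problems found in one record (empty if it is clean)."""
    errors = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or not str(value).strip():
            errors.append(f"missing {field}")
    hire_date = record.get("hire_date")
    if isinstance(hire_date, str) and hire_date.strip():
        try:
            date.fromisoformat(hire_date)
        except ValueError:
            errors.append(f"hire_date is not a valid ISO date: {hire_date!r}")
    return errors


def split_valid_invalid(records: list) -> tuple:
    """Separate records that can be loaded from those that need rework."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected
```

Collecting every error per record, rather than stopping at the first, lets the data owner fix a file in one pass.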

3. Utilize Prebuilt Integration Tools for Seamless Connectivity

Organizations using multiple HR systems can take advantage of Oracle Fusion HCM Cloud Online Training to master prebuilt integration tools such as:

Oracle Integration Cloud (OIC) for automated workflows.

HCM Extracts for structured data exporting.

REST and SOAP APIs for real-time HR data synchronization.

4. Enhance Security with Role-Based Access Control (RBAC)

Data security is a top concern for HR professionals. Oracle Fusion HCM ensures that only authorized personnel have access to sensitive information. Best practices include:

Assigning user roles based on job function.

Enforcing multi-factor authentication (MFA) to add extra layers of security.

Maintaining audit trails to monitor integration activities.

5. Automate HR Processes for Increased Efficiency

HR teams can significantly reduce manual workload by automating key HR processes. This includes:

Automating payroll processing with third-party financial applications.

Syncing attendance and leave data with workforce management systems.

Triggering notifications for compliance-related updates automatically.

6. Monitor & Optimize Integration Performance

Continuous monitoring is crucial for a successful HR data integration strategy. Oracle Fusion HCM offers powerful analytics tools to:

Track integration success rates and identify potential errors.

Generate real-time reports for HR and IT decision-makers.

Optimize system performance by analyzing usage patterns.

Why Enroll in Oracle Fusion HCM Online Training?

Professionals must acquire hands-on experience with Oracle Fusion HCM tools to successfully implement these integration strategies. Enrolling in Oracle Fusion HCM Cloud Online Training offers:

Comprehensive Course Modules covering data migration, integration techniques, and security best practices.

Expert-led sessions by industry professionals with real-world implementation experience.

Hands-on Practice Labs for mastering HCM Data Loader (HDL), APIs, and Oracle Integration Cloud (OIC).

Certification Guidance to boost career growth and job opportunities in the HR technology domain.

Final Thoughts

Oracle Fusion HCM's breakthrough integration strategies allow businesses to manage HR data more efficiently, securely, and seamlessly. Organizations can achieve a unified HR system that drives productivity and compliance by leveraging tools like HCM Data Loader, REST APIs, and Oracle Integration Cloud.

Enrolling in an Oracle Fusion HCM Training program is the best step forward if you're ready to take your career to the next level. Gain hands-on data migration, automation, and integration expertise, and become a sought-after HR tech professional.

Looking for expert training? Join an Oracle HCM Course today and transform how HR data integration works for your organization!

#OracleFusionHCMOnlineTraining #OracleHCMCourse #OracleFusionHCMTraining #OracleHCMCloudTraining #OracleHCMCertification

0 notes

Text

How to Create a High-Performance and Secure Website Using Craft CMS

Businesses need platforms that empower them to scale, secure, and streamline their web presence. One platform that stands out is Craft CMS, a flexible, user-focused content management system well suited to custom-built websites. At Maven Group, we believe that a high-performing and secure website is not only about technology; it is about building digital experiences that convert, engage, and last. This blog offers a fresh approach to using Craft CMS to build future-proof websites that are aligned with best practices in performance, security, and customization.

Why Craft CMS is the Backbone of Modern Website Development

Craft CMS is not like standard CMS platforms, which tend to carry unnecessary bloat or impose strict frameworks. It gives developers full control over front-end styling, yet remains simple enough for content managers to handle on the back end. For a Website Development Company, Craft CMS is a treasure trove: it permits developing exactly what a client requires without pushing them into pre-designed templates or restrictive workflows.

Architectural Flexibility: Build Without Constraints

One of the best things about Craft CMS is that it is “structure-less.” It makes no assumptions about what type of website you are creating, so whether you are building a complex eCommerce site or a minimal landing page, it allows you to design your architecture from scratch. This is necessary for a Website Development Company like ours in Hyderabad that has clients with different business objectives.

With Matrix fields, custom entry types, and multisite functionality, Craft CMS enables a development team to build reusable blocks of content, build multi-language sites, or host multiple domains out of a single control panel, all without any loss of performance.

Performance at the Core: Speed-First Development

Today's users will not wait more than a few seconds for a page to load. Craft CMS, being clean-coded and lightweight, gives developers a solid foundation for building fast websites. Here is how we achieve fast load times with Craft CMS:

Twig Templating Engine: Enables lean front-end code with little overhead.

Custom Query Control: Developers write their own queries, minimizing the risk of fetching more data than a page needs.

Efficient Asset Management: Integrated image transformation and caching provide optimized media delivery.

For a Website Development Company, this level of control over performance is a huge benefit: it allows fine-grained tuning of speed-critical components such as search, mobile responsiveness, and interactive UI elements.

Uncompromising Security Standards

Security isn’t an afterthought with Craft CMS; it’s baked deep into its DNA. Whether you’re dealing with user data, payment systems, or admin access, Craft includes secure defaults and the ability to customize them. We augment these with best practices like:

Secure Admin URL Routing to conceal login pages from bots.

Role-based Access Controls (RBAC) to specify who can read or modify what.

Automatic Updates and Plugin Security Audits for continuous prevention of threats.

In cybersecurity, prevention is always more economical than a cure. That is why a Website Development Company in Hyderabad has to focus first on secure coding practices, particularly when serving local industries such as fintech, healthcare, and education, where user information is sensitive.

Developer-First, Client-Focused: The Craft Advantage

Craft CMS not only empowers developers; it also frees content editors. With its Live Preview, element-based design, and intuitive content matrix, clients are able to build rich pages without having to worry about design consistency or disrupting the layout.

This harmony between developer and client is important. We make sure our clients aren’t just given a mighty website. They’re trained, mentored, and given the tools to get the most out of it. To any Website Development Company, the ultimate goal isn’t launch, it’s longevity.

Future-Proofing with Integrations and Extensibility

Craft CMS works well with contemporary stacks. Whether it is being integrated with headless front ends such as React or Vue, or with CRMs, ERPs, or analytics tools, its API-first approach helps keep it future-proof. This fits the changing needs of businesses in Hyderabad and elsewhere.

As a Website Development Company in Hyderabad, we understand how businesses now need to integrate with cloud tools, marketing platforms, and mobile apps seamlessly. Craft CMS enables us to achieve this without rewriting the underlying system.

Why Maven Group Chooses Craft CMS

We don’t subscribe to cookie-cutter answers. Each client is different, and each digital problem calls for a bespoke solution. Craft CMS gives us the canvas; we bring the vision, code, and strategy.

As a forward-thinking Website Development Company in Hyderabad, we’re committed to pairing the newest tools with proven development practices. Our adoption of Craft CMS is just one reflection of that commitment to bring quicker, more secure, and more conscious digital experiences.

Final Thoughts

In an era where website speed and security directly affect business success, Craft CMS is a strong choice for high-performance custom web development. It’s no longer just about making websites; it’s about building digital infrastructure that can adapt and expand alongside your brand.

If you are looking for a Website Development Company in Hyderabad that understands the nuances of Craft CMS and can create a website tailored to your specific needs, Maven Group is the company to hire.

#SEO Services in Hyderabad#SEO Company in Hyderabad#web development company in Hyderabad#APP Development company in Hyderabad#ERP Company in Hyderabad#Digital Marketing Company in Hyderabad#Digital Marketing Agency in Hyderabad

0 notes

Text

Security Best Practices in Custom Website Development

In today’s digital age, your website is more than just a virtual business card — it’s a powerful platform that interacts with users, stores sensitive data, and supports business operations. As a leading custom website development company, Kwebmaker understands the critical importance of security in every phase of website development.

Custom websites are tailored to meet specific business needs, but with customization comes the responsibility of ensuring airtight security. Below are the top security best practices that Kwebmaker implements in custom website development projects to protect businesses and their users.

1. Secure Code Practices

The foundation of any secure website lies in its code. Our developers follow secure coding guidelines and frameworks to prevent common vulnerabilities like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). Regular code reviews and automated scanning tools help us identify and fix security issues early in the development cycle.

2. HTTPS and SSL Encryption

Every website developed by Kwebmaker comes with HTTPS enabled by default, secured with an SSL/TLS certificate. This ensures that all data transferred between the user and the server is encrypted in transit and protected from eavesdropping and tampering.

3. Authentication and Authorization Controls

We implement strong user authentication protocols such as two-factor authentication (2FA), password strength enforcement, and session timeout mechanisms. Role-based access control (RBAC) is also configured to ensure users only access the data and features they are authorized to use.

4. Regular Security Updates and Patch Management

As a proactive custom website development company, Kwebmaker ensures that all third-party libraries, CMS platforms, and frameworks are regularly updated. Security patches are applied promptly to avoid any known vulnerabilities being exploited.

5. Data Validation and Sanitization

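User inputs are validated and sanitized on both the client and server sides. Client-side checks give users faster feedback but can be bypassed, so server-side validation is what actually protects the system. Combined with parameterized queries and output escaping, this helps prevent injection attacks and preserves data integrity throughout the application.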
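The server-side half of this practice can be sketched with the Python standard library: an allowlist pattern for input, a parameterized query so input stays data, and escaping on output. The table layout, function names, and username rule are assumptions for illustration, not Kwebmaker's implementation.

```python
import html
import re
import sqlite3

# Hypothetical rule: usernames are 3-30 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,30}")


def validate_username(raw: str) -> str:
    """Reject anything outside the allowlist instead of trying to clean it up."""
    if not USERNAME_PATTERN.fullmatch(raw):
        raise ValueError("username must be 3-30 letters, digits, or underscores")
    return raw


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user; the ? placeholder keeps input from being parsed as SQL."""
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchone()


def render_comment(comment: str) -> str:
    """Escape user text before placing it in HTML to blunt XSS."""
    return f"<p>{html.escape(comment)}</p>"
```

Each function guards a different boundary: validation at input, parameterization at the database, and escaping at output. None of them substitutes for the others.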

6. Database Security

Kwebmaker secures databases through encryption, access control, and optimized query design to prevent data leaks and minimize risks. Sensitive data such as passwords and personal information is stored using robust hashing and encryption techniques.
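For passwords specifically, the usual approach is a salted, deliberately slow hash rather than reversible encryption. The sketch below uses PBKDF2-HMAC-SHA256 from the standard library; the iteration count is an assumption to tune against current guidance and your hardware, and the source does not say which algorithm Kwebmaker uses.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 600_000  # assumed work factor; adjust to current guidance


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest); a fresh random salt is generated when none is given."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Only the salt and digest are stored, so a leaked database does not directly reveal passwords, and the per-user salt keeps identical passwords from producing identical digests.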

7. Secure File Uploads

File uploads are common in many custom websites, but they pose a security risk if not handled properly. We enforce strict file validation, limit file types, and scan for malware to ensure only safe files are stored on the server.
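A minimal version of strict file validation checks three things: the extension is on an allowlist, the size is bounded, and the leading bytes match the claimed type. The allowlist and size limit below are hypothetical, and malware scanning is a separate step not shown here.

```python
from pathlib import Path

# Hypothetical allowlist: extension -> leading "magic" bytes of that format.
ALLOWED_TYPES = {
    ".png": b"\x89PNG\r\n\x1a\n",
    ".pdf": b"%PDF-",
    ".jpg": b"\xff\xd8\xff",
}
MAX_BYTES = 5 * 1024 * 1024  # assumed 5 MiB upload limit


def is_safe_upload(filename: str, content: bytes) -> bool:
    """Accept a file only if extension, size, and leading bytes all check out."""
    suffix = Path(filename).suffix.lower()
    magic = ALLOWED_TYPES.get(suffix)
    if magic is None or len(content) > MAX_BYTES:
        return False
    # The extension alone is user-controlled, so confirm the content agrees.
    return content.startswith(magic)
```

Checking the content as well as the name stops the simplest trick, a script renamed with an image extension, though it is not a complete defense on its own.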

8. Backup and Disaster Recovery

Our websites are built with disaster recovery in mind. Regular automated backups and secure storage allow us to recover quickly from data loss, breaches, or system failures — giving our clients peace of mind.

9. Security Testing and Monitoring

Before launch, every custom website undergoes rigorous security testing, including penetration testing and vulnerability scanning. Post-launch, continuous monitoring tools are used to detect suspicious activity in real time.

Conclusion

Security is not a one-time task — it’s an ongoing commitment. At Kwebmaker, we go beyond just building custom websites. As a trusted custom website development company, we build secure digital experiences that empower businesses to grow confidently in the digital landscape.

Looking to build a secure, scalable website tailored to your business? Contact Kwebmaker, your reliable custom website development company, today!

To Know More: https://kwebmaker.com/services/best-custom-website-design-development-services-agency-in-india/

0 notes

Text

Cyber Security for Companies: Essential Strategies to Protect Your Business in the Digital Age

In today’s digital-driven economy, cyber security is no longer optional; it is a fundamental necessity for companies of all sizes. As cyber threats become more frequent and sophisticated, businesses face increasing risks of data breaches, financial loss, and reputational damage. From ransomware attacks to insider threats, the consequences of poor security can be devastating. Implementing a comprehensive cybersecurity strategy is essential to protect sensitive information, maintain customer trust, and ensure business continuity. In this blog, we’ll explore key cybersecurity strategies every company should adopt to safeguard its operations, stay compliant with regulations, and thrive securely in an increasingly connected and vulnerable digital world.

Assessing Your Company’s Cybersecurity Risk

The first step in building a strong cybersecurity framework is understanding your company’s unique risk profile. Conducting a thorough risk assessment helps identify potential vulnerabilities, including outdated systems, unprotected endpoints, and weak access controls. It also considers the value and sensitivity of your data, employee practices, and industry-specific threats. A risk assessment allows businesses to prioritize their security efforts and allocate resources efficiently. By knowing where your weaknesses lie, you can proactively address them before they’re exploited. Regular assessments ensure your cybersecurity plan evolves with emerging threats and changing technologies, forming the foundation for effective, long-term digital protection.

Implementing Multi-Layered Defense Systems

A single security solution isn’t enough to protect a company in today’s complex threat environment. A multi-layered defense strategy combines various tools and technologies to create overlapping protection. This includes firewalls, antivirus software, intrusion detection systems, encryption, and secure cloud platforms. Each layer addresses different types of threats, making it harder for cybercriminals to find vulnerabilities. For example, while a firewall blocks unauthorized access, antivirus software detects and removes malware. Together, these tools significantly strengthen your cybersecurity posture. Layered security ensures that even if one line of defense fails, others remain active—minimizing damage and maintaining operational continuity.

Employee Education and Cyber Awareness

Employees are often both the first line of defense and the weakest link when it comes to cybersecurity. Human error, such as clicking on phishing emails or using weak passwords, can lead to severe breaches. Implementing regular training sessions helps staff recognize threats, practice safe online behavior, and understand the importance of data protection. Cybersecurity awareness should be part of company culture, with updates on the latest threats and policies. Empowered and educated employees are more likely to report suspicious activity and follow security protocols. A well-informed team reduces risk significantly and turns your workforce into a powerful asset in your cybersecurity strategy.

Enforcing Strong Access Controls and Authentication

Limiting access to sensitive data is a crucial step in protecting your business. Role-based access control (RBAC) ensures that employees can only access the information necessary for their job. Additionally, implementing multi-factor authentication (MFA) adds an extra layer of security by requiring more than just a password to log in. Monitoring user activity and regularly reviewing access permissions helps detect unusual behavior and prevents unauthorized access. Companies should also enforce policies around strong password creation and rotation. Effective access control minimizes the chances of insider threats and unauthorized breaches, safeguarding your critical systems and confidential business information.
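One widely used second factor is a time-based one-time password (TOTP), as specified in RFC 6238 and used by many authenticator apps. The sketch below implements the SHA-1 variant with the standard library to show how the code is derived; in production a maintained library and secure secret storage would be the safer choice.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive the one-time code for the time window containing `unix_time`."""
    counter = unix_time // step  # number of 30-second steps since the epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte slice.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare the submitted code with the expected one in constant time."""
    return hmac.compare_digest(totp(secret, unix_time).encode(), submitted.encode())
```

Because the code depends on both a shared secret and the current time, a stolen password alone is not enough to log in.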

Securing Mobile Devices and Remote Work Environments

With the rise of remote work and mobile device usage, securing off-site access to company resources is more important than ever. Unsecured devices and public Wi-Fi networks can expose sensitive data to cyber threats. Businesses must implement mobile device management (MDM) systems, require secure VPN usage, and enforce strong authentication for remote access. Ensuring that devices are encrypted and updated regularly reduces vulnerabilities. Employee training on secure remote practices is also vital. By extending cybersecurity protocols beyond the office, companies can protect their networks and data, regardless of where employees are working—making remote operations safer and more reliable.

Regular Backups and Disaster Recovery Planning

Data loss can cripple a business, especially during a cyberattack like ransomware. Regularly backing up data ensures that your company can quickly recover critical files in the event of an incident. Backup systems should be automated, encrypted, and stored both on-site and in the cloud for redundancy. Additionally, every company should have a disaster recovery plan outlining how to respond to various cyber threats. This includes assigning roles, establishing communication protocols, and testing the plan regularly. A solid backup and recovery strategy minimizes downtime, reduces financial losses, and ensures business continuity even in the face of severe cyber disruptions.

Staying Compliant with Cybersecurity Regulations

Many industries are subject to strict cybersecurity regulations designed to protect consumer data and ensure ethical handling of digital information. From GDPR and HIPAA to PCI DSS and ISO standards, staying compliant isn’t just about avoiding penalties; it’s about building trust. Compliance requires regular audits, secure data handling practices, and detailed documentation. Working with cybersecurity professionals can help your company meet these regulatory demands efficiently. Compliance does not guarantee security, but meeting these standards usually means a solid baseline of controls is already in place. By aligning your cybersecurity strategy with legal standards, your business not only avoids legal trouble but also demonstrates a commitment to privacy and data protection.

Conclusion

In today’s digital age, cyber security is not just a technical concern; it is a vital component of a company’s overall strategy. With the rise in cyber threats, businesses must take a proactive approach to protect sensitive data, ensure compliance, and maintain customer trust. By implementing layered security measures, educating employees, securing remote access, and staying updated with evolving threats and regulations, companies can significantly reduce their vulnerability. Cybersecurity is an ongoing commitment that requires vigilance, investment, and adaptability. Prioritizing it not only safeguards your business assets but also positions your organization for long-term success in an increasingly connected and risky digital world.

0 notes

Text

GitOps for Kubernetes: Automating Deployments the Git Way

In the world of cloud-native development and Kubernetes orchestration, speed and reliability are essential. That's where GitOps comes in—a modern approach to continuous deployment that leverages Git as a single source of truth. It's a powerful method to manage Kubernetes clusters and applications with clarity, control, and automation.

🔍 What is GitOps?

GitOps is a set of practices that uses Git repositories as the source of truth for declarative infrastructure and applications. It applies DevOps best practices—like version control, collaboration, and automation—to infrastructure operations.

In simpler terms:

You declare what your system should look like (using YAML files).

Git holds the desired state.

A GitOps tool (like Argo CD) continuously syncs the actual system with what’s defined in Git.

💡 Why GitOps for Kubernetes?

Kubernetes is complex. Managing its resources manually or with ad hoc scripts often leads to configuration drift and inconsistent environments. GitOps brings order to the chaos by:

Enabling consistency across environments (dev, staging, prod)

Reducing errors through version-controlled infrastructure

Automating rollbacks and change tracking

Speeding up deployments while increasing reliability

🔧 Core Principles of GitOps

Declarative Configuration: All infrastructure and app definitions are written in YAML or JSON files and stored in Git repositories.

Version Control with Git: Every change is committed, tracked, and reviewed using Git. This means full visibility and easy rollbacks.

Automatic Synchronization: GitOps tools ensure the actual state in Kubernetes matches the desired state in Git, automatically and continuously.

Pull-Based Deployment: Instead of pushing changes to Kubernetes, GitOps agents pull the changes, reducing attack surfaces and increasing security.
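The synchronization principle boils down to a reconciliation loop: compare the desired state with the live state and compute what must change. The toy model below represents both states as plain dictionaries; it is a sketch of the idea only, not how any GitOps tool is implemented, and the function names are invented.

```python
def plan_sync(desired: dict, live: dict) -> dict:
    """Compare desired state (from Git) with live state (from the cluster)."""
    return {
        "create": sorted(desired.keys() - live.keys()),
        "update": sorted(
            name for name in desired.keys() & live.keys() if desired[name] != live[name]
        ),
        "delete": sorted(live.keys() - desired.keys()),
    }


def reconcile(desired: dict, live: dict) -> dict:
    """Return the live state after applying the plan; the inputs are not mutated."""
    plan = plan_sync(desired, live)
    new_live = dict(live)
    for name in plan["create"] + plan["update"]:
        new_live[name] = desired[name]
    for name in plan["delete"]:
        del new_live[name]
    return new_live
```

A real agent runs this comparison repeatedly, which is what corrects configuration drift: any manual change to the cluster shows up as a difference on the next pass. Whether resources missing from Git are actually deleted is typically a configurable policy.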

🚀 Enter Argo CD: GitOps Made Easy

Argo CD (short for Argo Continuous Delivery) is an open-source tool designed to implement GitOps for Kubernetes. It works by:

Watching a Git repository

Detecting any changes in configuration

Applying those changes to the Kubernetes cluster (automatically when automated sync is enabled, otherwise on a manual sync)

🧩 Key Concepts of Argo CD

Without diving into code, here are the essential building blocks:

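Applications: These are the units Argo CD manages. Each application points at a source in Git (repository, revision, and path) that defines Kubernetes manifests, and at a destination cluster and namespace to deploy them to.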

Repositories: Git locations Argo CD connects to for pulling app definitions.

Syncing: Argo CD compares the live state with what's declared in Git. It flags drift by marking the application OutOfSync and, with self-healing enabled, can correct it automatically.

Health & Status: Argo CD shows whether each application is healthy and in sync—providing visual dashboards and alerts.
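For readers who want something concrete, these building blocks come together in a single Argo CD `Application` resource. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `argo_application`, the repository URL, the path, and the namespaces are placeholders. The `argocd` namespace in particular is an assumption based on a default install and may differ in your setup.

```python
import json


def argo_application(name: str, repo_url: str, path: str, namespace: str) -> dict:
    """Build an Argo CD Application manifest as a plain dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumption: Argo CD is installed in the "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            # Repository: where the manifests live in Git.
            "source": {
                "repoURL": repo_url,
                "targetRevision": "HEAD",
                "path": path,
            },
            # Destination: the cluster and namespace to deploy into.
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Syncing: apply changes automatically and correct drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


manifest = argo_application(
    name="demo-app",
    repo_url="https://example.com/org/demo-config.git",  # placeholder URL
    path="overlays/prod",
    namespace="demo",
)
print(json.dumps(manifest, indent=2))
```

Leaving out the `syncPolicy.automated` block gives an application that reports drift but waits for a manual sync.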

🔴 Red Hat OpenShift GitOps

Red Hat OpenShift brings GitOps to enterprise Kubernetes with OpenShift GitOps, a supported Argo CD integration. It enhances Argo CD with:

Seamless integration into the OpenShift Console

Secure GitOps pipelines for clusters and applications

RBAC (Role-Based Access Control) tailored to enterprise needs

Scalability and lifecycle management for GitOps workflows

No need to write code—just define your app configurations in Git, and OpenShift GitOps takes care of syncing, deploying, and managing across your environments.

✅ Benefits of GitOps with OpenShift

🔐 Secure by design – Pull-based delivery reduces attack surface

📈 Scalable operations – Manage hundreds of clusters and apps from a single Git repo

🔁 Reproducible environments – Easy rollback, auditing, and cloning of full environments

💼 Enterprise-ready – Backed by Red Hat’s support and OpenShift integrations

🔚 Conclusion

GitOps is not just a buzzword—it's a fundamental shift in how we manage and deliver applications on Kubernetes. With tools like Argo CD and Red Hat OpenShift GitOps, teams can automate deployments, ensure consistency, and scale operations—all using Git.

Whether you're managing a single cluster or many, GitOps gives you control, traceability, and peace of mind.

For more info, kindly follow Hawkstack Technologies.


Why Cloud Compliance and Governance Is Critical for Secure and Scalable Cloud Operations

As businesses shift operations to the cloud, managing regulatory risk and data control becomes a growing challenge. That’s where cloud compliance and governance comes in—ensuring that your cloud infrastructure is secure, accountable, and aligned with global standards.

GrupDev offers comprehensive cloud compliance and governance services tailored to help organizations meet legal, industry, and internal requirements. Whether you're operating in finance, healthcare, retail, or SaaS, staying compliant with frameworks like GDPR, HIPAA, ISO 27001, and SOC 2 is no longer optional—it’s essential.

Their approach starts with a detailed compliance audit that identifies gaps, misconfigurations, and policy violations across your cloud architecture. From there, GrupDev develops a custom compliance roadmap that includes automated controls, documentation practices, and risk mitigation strategies.

One of the key benefits of effective cloud governance is visibility. GrupDev helps businesses implement centralized dashboards that offer real-time monitoring of access controls, policy enforcement, cost usage, and asset management. This provides full transparency and accountability across your cloud resources.

Security and access management are also critical components. GrupDev uses role-based access control (RBAC), encryption, identity federation, and automated audits to ensure only the right users have access to the right data—at the right time.
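The core of role-based access control fits in a few lines: users are assigned roles, roles carry permissions, and a request is allowed only if one of the user's roles grants it. The sketch below is a generic illustration, not GrupDev's implementation. Every role, user, and permission name in it is hypothetical.

```python
from typing import Dict, Set

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "auditor": {"logs:read", "policies:read"},
    "operator": {"logs:read", "deployments:read", "deployments:write"},
    "admin": {
        "logs:read", "policies:read", "policies:write",
        "deployments:read", "deployments:write",
    },
}

# Hypothetical user-to-role assignments.
USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"admin"},
    "bob": {"auditor"},
    "carol": {"auditor", "operator"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Allow only if one of the user's roles grants the permission."""
    roles = USER_ROLES.get(user, set())  # unknown users have no roles
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed("bob", "policies:read"))      # True
print(is_allowed("bob", "deployments:write"))  # False
print(is_allowed("mallory", "logs:read"))      # False
```

The check denies by default: an unknown user or an unknown role grants nothing, which is usually the safer starting point for access policies.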

Additionally, GrupDev’s services include ongoing support and compliance automation. With evolving regulations and increasingly complex cloud ecosystems, their cloud policy enforcement tools make it easier to stay compliant without slowing down operations.

By embedding governance into your cloud framework, you not only reduce legal and financial risks but also gain better control over performance, security, and cost.
