#SQL Server temp tables


The Performance Trade-offs Between SELECT * INTO and SELECT THEN INSERT in T-SQL

In the realm of SQL Server development, understanding the intricacies of query optimization can drastically impact the performance of your applications. A common scenario that developers encounter involves deciding between using SELECT * INTO to create and populate a temporary table at the beginning of a stored procedure versus first creating a temp table and then populating it with a SELECT…
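The two patterns being compared can be sketched side by side. The snippet below is only an analogy, run against SQLite from Python rather than SQL Server: SQLite has no SELECT ... INTO, so CREATE TEMP TABLE ... AS SELECT stands in for it. The orders table, its columns, and the temp table names are hypothetical. It shows the shape of each approach, not their relative performance in T-SQL.

```python
import sqlite3

# In-memory database with a small, hypothetical source table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany("INSERT INTO orders (amount) VALUES (?)", [(10.0,), (25.5,), (99.0,)])

# Pattern 1: create and populate the temp table in one statement.
# (SQLite analog of T-SQL's SELECT ... INTO #temp FROM ...)
conn.execute(
    "CREATE TEMP TABLE big_orders_a AS "
    "SELECT id, amount FROM orders WHERE amount > 20"
)

# Pattern 2: declare the temp table first, then fill it.
# (Analog of CREATE TABLE #temp (...) followed by INSERT INTO #temp SELECT ...)
conn.execute(
    "CREATE TEMP TABLE big_orders_b (id INTEGER PRIMARY KEY, amount REAL NOT NULL)"
)
conn.execute(
    "INSERT INTO big_orders_b (id, amount) "
    "SELECT id, amount FROM orders WHERE amount > 20"
)

rows_a = conn.execute("SELECT id, amount FROM big_orders_a ORDER BY id").fetchall()
rows_b = conn.execute("SELECT id, amount FROM big_orders_b ORDER BY id").fetchall()
```

Both temp tables end up with the same rows. The practical difference visible here is that the second pattern lets you state column types and constraints explicitly, while the first takes the table's shape from the SELECT.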


AX 2012 Interview Questions and Answers for Beginners and Experts

Microsoft Dynamics AX 2012 is a powerful ERP solution that helps organizations streamline their operations. Whether you're a beginner or an expert, preparing for an interview on AX 2012 requires a thorough knowledge of its core concepts, functionality, and technical aspects. Below is a list of commonly asked AX 2012 interview questions together with their answers.

Basic AX 2012 Interview Questions

What is Microsoft Dynamics AX 2012?

Microsoft Dynamics AX 2012 is an enterprise resource planning (ERP) solution developed by Microsoft. It is designed for large and mid-sized organizations to manage finance, supply chain, manufacturing, and customer relationship management.

What are the key features of AX 2012?

Role-based user experience

Strong financial management capabilities

Advanced warehouse and supply chain management

Workflow automation

Enhanced reporting with SSRS (SQL Server Reporting Services)

What is the difference between AX 2009 and AX 2012?

AX 2012 introduced a new data model with the introduction of surrogate keys.

Development in Visual Studio was added alongside the MorphX IDE, which remained the main environment for X++.

Improved workflow and role-based access control.

What is the AOT (Application Object Tree) in AX 2012?

The AOT is a hierarchical structure used to store and manage objects such as tables, forms, reports, classes, and queries in AX 2012.

Explain the use of the Data Dictionary in AX 2012.

The Data Dictionary contains the definitions of tables, data types, relations, and indexes used in AX 2012. It ensures data integrity and consistency across the system.

Technical AX 2012 Interview Questions

What are the different types of tables in AX 2012?

Regular tables

Temporary tables

InMemory tables

System tables

What is the difference between InMemory and TempDB tables?

InMemory tables hold data in memory on the tier where they are instantiated (client or server) and are not persistent.

TempDB tables store temporary data in the SQL Server tempdb database and are session-specific.

What is X++ and how is it used in AX 2012?

X++ is an object-oriented programming language used in AX 2012 for developing business logic, creating custom modules, and automating processes.

What is the purpose of CIL (Common Intermediate Language) in AX 2012?

X++ code can be compiled into .NET CIL, which improves performance by allowing it to execute on the .NET runtime.

How do you debug X++ code in AX 2012?

Debugging can be done using the X++ Debugger or by enabling the Just-In-Time Debugging feature in Visual Studio.

Advanced AX 2012 Interview Questions

What is a Query Object in AX 2012?

A Query Object is used to retrieve data from tables using joins, ranges, and sorting.

What are Services in AX 2012, and what types are available?

Document Services (for exchanging data)

Custom Services (for exposing X++ logic as a service)

System Services (metadata, query, and user session services)

Explain the concept of Workflows in AX 2012.

Workflows allow the automation of business processes, such as approvals, by defining steps and assigning tasks to users.

What is the purpose of the SysOperation Framework in AX 2012?

It is a replacement for the RunBaseBatch framework, used for running processes asynchronously with better scalability.

How do you optimize performance in AX 2012?

Using indexes effectively

Optimizing queries

Implementing caching strategies

Using batch processing for large data operations

Conclusion

By understanding these AX 2012 interview questions, candidates can prepare effectively for interviews. Whether you're a beginner or an experienced professional, mastering these topics will boost your confidence and help you secure a role in Microsoft Dynamics AX 2012 projects.


SQL TEMP TABLE

SQL Server provides temporary tables, which help developers in many ways:

Temporary tables can be created at run time and support the same kinds of operations as a normal table. They are created in the tempdb database.

There are two types of temp tables, distinguished by behavior and scope:

Local temp tables (names prefixed with #)

Global temp tables (names prefixed with ##)
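In SQL Server the two kinds are told apart by the name prefix: a single # marks a local temp table, visible only to the session that created it, and ## marks a global temp table, visible to all sessions. A minimal Python sketch of that naming rule follows; the function name and the sample table names are hypothetical, chosen only for illustration.

```python
def temp_table_scope(table_name: str) -> str:
    """Classify a SQL Server table name by its temp-table prefix."""
    # Check the two-character prefix first, since "##x" also starts with "#".
    if table_name.startswith("##"):
        return "global"   # visible to every session
    if table_name.startswith("#"):
        return "local"    # visible only to the creating session
    return "regular"      # an ordinary (non-temp) table name


# Hypothetical names used purely as examples.
scopes = {name: temp_table_scope(name) for name in ("#Staging", "##SharedLookup", "Orders")}
```

The corresponding T-SQL would be along the lines of CREATE TABLE #Staging (...) for the local case and CREATE TABLE ##SharedLookup (...) for the global one.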


Part 4: How to use EF Core with MongoDb in Blazor Server Web Application

In part 4 of the series, I'm going to show you how to use EF Core with MongoDb in Blazor Server Web Application.

Articles in this series:

Part 1: Getting started with Blazor Server Web App Development using .NET 8

Part 2: How to implement Microsoft Entra ID Authentication in Blazor Server Web App in .NET 8

Part 3: How to implement Multilanguage UI in Blazor Server Web App in .NET 8

Part 4: How to use EF Core with MongoDb in Blazor Server Web Application

Part 5: How to show Dashboard with Radzen Bar and Pie Chart controls in Blazor Server Web App

Part 6: How to support Authorization in Blazor server web app when using Microsoft Entra ID authentication

Part 7: How to implement Radzen Grid Control with dynamic paging, filtering, sorting in Blazor Server Web App

Part 8: How to implement Data Entry form in Blazor server web app

Part 9: How to use SignalR to show real time updates in Blazor server web app

Entity Framework Core (EF Core) is a popular Object-Relational Mapper (ORM) framework for .NET applications. While primarily designed for relational databases, it has expanded its capabilities to support NoSQL databases, including MongoDB. This article will guide you through the process of using EF Core with MongoDB in your .NET 8 projects.

Introduction

Before diving into the code, it's crucial to understand the nuances of using EF Core with MongoDB. Unlike relational databases, MongoDB is a NoSQL database, meaning it doesn't adhere to the traditional table-row structure. This introduces certain limitations and differences in how EF Core operates with MongoDB compared to relational databases.

In Part 1 of this series, I explained the development environment setup for this article. I repeat it here for readers who have not gone through it.

Before we start

To follow along with this article, you will need:

.NET 8 SDK and .NET 8 Runtime installation

If you are planning to use Visual Studio 2022, make sure you have all latest updates installed (v17.8.2)

Install MongoDb Community Server, MongoDb Compass, and Mongodb version of Northwind database (Covered in this blog)

You may need Microsoft Azure (trial will also work) to setup Entra ID App in Azure and configure it to use

If you are a fan of command-line tools or want to know more about the tooling, see ASP.NET Core Blazor Tooling

How to set up MongoDB for development on Windows

If you are not familiar with setting up MongoDB for development on Windows, here is a quick walkthrough of what you will need:

Go to the MongoDB download center, download the MongoDB Community Server MSI package, and install it.

Next, download the MongoDB Compass MSI and install it. You will need this tool to connect to your MongoDB Community Server and carry out database administration. If you are familiar with the Microsoft SQL Server world, MongoDB Compass is the SQL Server Management Studio of the MongoDB world.

Next, download the MongoDB Command Line Database Tools, which we will need to import an existing sample database.

We will be using an existing sample database called Northwind that I've downloaded from here

Import Northwind Mongodb Database

Open MongoDB Compass and connect to localhost:27017, which is the default port when you install MongoDB Server on Windows.

Click on Databases in the left navigation and create a new database named northwind with a collection named sample.

Go to the GitHub repository here in your browser, click Code in the top right, and then choose Download ZIP to download the zip file.

Extract the MongoDB command line database tools zip file.

Open mongo-import.sh inside the root folder and replace the mongoimport keyword with the full path of mongoimport.exe, which is part of the MongoDB command line database tools zip file. The resulting line will look like this: "C:\temp\import\mongodb-database-tools\bin\mongoimport" -d "$1" -c "$collection" --type json --file "$file"

Open a command prompt that can run shell scripts (for example Git Bash on Windows), go to the root folder where you have the mongo-import.sh file, and run the command ./mongo-import.sh northwind

If everything is done correctly, you will see console messages that display each JSON file name and the number of documents imported.

If you switch back to MongoDB Compass, select the northwind database, and click refresh at the top right corner of the sidebar, you will see collections like customers, employees, etc.

We are going to use this sample database to build something interesting that makes sense and has real-life use cases, instead of just a sample table with a few fields.
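What the import script does for each file can be sketched in Python. This is an illustration, not the contents of mongo-import.sh: it assumes one JSON file per collection and that each collection is named after its file, and the function name is hypothetical. The mongoimport flags are the ones shown in the edited script line above.

```python
from pathlib import Path


def build_import_commands(mongoimport_path, database, folder):
    """Build one mongoimport argument list per *.json file in folder."""
    commands = []
    for json_file in sorted(Path(folder).glob("*.json")):
        # Assumption: the collection takes its name from the file (customers.json -> customers).
        collection = json_file.stem
        commands.append([
            mongoimport_path,
            "-d", database,
            "-c", collection,
            "--type", "json",
            "--file", str(json_file),
        ])
    return commands
```

Each returned list could then be passed to subprocess.run(command, check=True), with mongoimport_path set to the full path of mongoimport on your machine.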

Setting Up the Project

Create ASP.NET Core Blazor Server Web Application

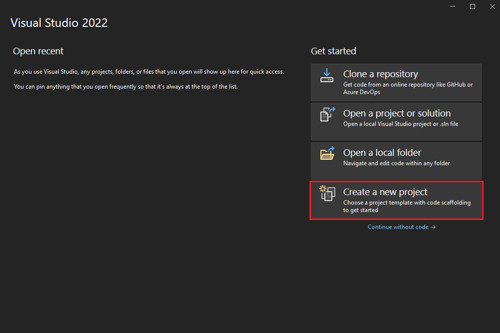

Start Visual Studio and select Create a new project.

In the Create a new project window, type Blazor in the search box and hit Enter.

Select the Blazor Web App template and select Next.

In the Configure your new project window, enter BlazorAppPart4 as the project name and select Next.

In the Additional information window, select .NET 8.0 (Long Term Support) in the Framework drop-down if not already selected and click the Create button.

Build and run the application once so that you have your application url that you can copy from the browser and save it for later.

Add the NuGet package

Open Tools > NuGet Package Manager > Package Manager Console and type the following command:

Install-Package MongoDB.EntityFrameworkCore


This will install all the necessary files needed for accessing northwind database that we created in MongoDb using Entity Framework Core Code First.

Add Model for the customers collection

In the example northwind database, there is already a customers collection with some data in it. We are going to display the first name and last name of each customer. So, let's create a folder in the project root called Model and add a new file called Customer.cs that contains the following class definition.

using MongoDB.Bson;

using MongoDB.Bson.Serialization.Attributes;

using MongoDB.EntityFrameworkCore;

namespace BlazorAppPart4.Model

{

[Collection("customers")]

public class Customer

{

[BsonId]

public int Id { get; set; }

[BsonElement("last_name")]

public string? LastName { get; set; }

[BsonElement("first_name")]

public string? FirstName { get; set; }

}

}


Create Entity Framework Core AppDbContext class

Next, we are going to create a new class called AppDbContext that inherits from the DbContext class of Entity Framework Core. Add another file called AppDbContext.cs in the Model folder and paste the following code.

using Microsoft.EntityFrameworkCore;

namespace BlazorAppPart4.Model

{

public class AppDbContext: DbContext

{

public DbSet<Customer> Customers { get; init; }

public AppDbContext(DbContextOptions options) :

base(options)

{

}

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

base.OnModelCreating(modelBuilder);

modelBuilder.Entity<Customer>();

}

}

}


Create Customer Repository

Next, we want to use repository pattern to ensure data access code is separate and testable. Add another folder in the root of your project called Repositories and add a new cs file called ICustomerRepository.cs with below code:

using BlazorAppPart4.Model;

namespace BlazorAppPart4.Repositories

{

public interface ICustomerRepository

{

Task<List<Customer>> GetAllAsync();

}

}


Once you are done, add one more file called CustomerRepository.cs with the code below:

using BlazorAppPart4.Model;

using Microsoft.EntityFrameworkCore;

namespace BlazorAppPart4.Repositories

{

public class CustomerRepository : ICustomerRepository

{

private readonly AppDbContext _db;

public CustomerRepository(AppDbContext db)

{

_db = db;

}

public async Task<List<Customer>> GetAllAsync()

{

return await _db.Customers.ToListAsync();

}

}

}


Create AppSettings.json settings

The AppDbContext will require the MongoDB connection string and database name. It is recommended to create an application-wide settings section in AppSettings.json. Open your AppSettings.json and add the 'AppConfig' section from below. I'm showing my full AppSettings.json file for your reference:

{

"AppConfig": {

"Database": {

"ConnectionString": "mongodb://localhost:27017",

"DatabaseName": "northwind"

}

},

"Logging": {

"LogLevel": {

"Default": "Information",

"Microsoft.AspNetCore": "Warning"

}

},

"AllowedHosts": "*"

}


Create AppConfig class for settings

Add AppConfig.cs class in your project and then add below code in the class definition:

namespace BlazorAppPart4

{

public class AppConfig

{

public AppConfig() {

Database = new DbConfig();

}

public DbConfig Database { get; set; }

}

public class DbConfig

{

public string? ConnectionString { get; set; }

public string? DatabaseName { get; set; }

}

}


Configure Dependencies in Program.cs

Next, we want to configure dependency injection so that Repository and AppDbContext classes that we created in our project can be injected where required. Here is my Program.cs:

using BlazorAppPart4;

using BlazorAppPart4.Components;

using BlazorAppPart4.Model;

using BlazorAppPart4.Repositories;

using Microsoft.EntityFrameworkCore;

var builder = WebApplication.CreateBuilder(args);

// Add services to the container.

builder.Services.AddRazorComponents()

.AddInteractiveServerComponents();

var appConfig = builder.Configuration.GetSection("AppConfig").Get<AppConfig>();

if (appConfig == null)

{

throw new InvalidOperationException("Db connectionstring not found");

}

builder.Services.AddDbContext<AppDbContext>(options =>

options.UseMongoDB(appConfig.Database.ConnectionString ?? "", appConfig.Database.DatabaseName ?? ""));

builder.Services.AddTransient<ICustomerRepository, CustomerRepository>();

var app = builder.Build();

// Configure the HTTP request pipeline.

if (!app.Environment.IsDevelopment())

{

app.UseExceptionHandler("/Error", createScopeForErrors: true);

// The default HSTS value is 30 days. You may want to change this for production scenarios, see https://aka.ms/aspnetcore-hsts.

app.UseHsts();

}

app.UseHttpsRedirection();

app.UseStaticFiles();

app.UseAntiforgery();

app.MapRazorComponents<App>()

.AddInteractiveServerRenderMode();

app.Run();


Note that the var appConfig = ... statement reads the AppConfig section of AppSettings.json and binds it to an AppConfig object. The AddDbContext call configures the Entity Framework Core MongoDB provider with the connection string and database name. The AddTransient call registers the customer repository for dependency injection. The rest of the file is unchanged.
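One detail worth weighing in Program.cs: the ?? "" fallbacks mean a missing connection string or database name is passed on as an empty string rather than rejected at startup. A fail-fast alternative is sketched below in Python as a language-neutral illustration. It is not part of the C# project, and the function name is hypothetical. The JSON keys are the ones from the AppSettings.json shown earlier.

```python
import json


def load_db_settings(appsettings_text):
    """Return (connection_string, database_name) or raise if either is missing."""
    config = json.loads(appsettings_text)
    # Tolerate a missing or null section so the error below stays uniform.
    database = (config.get("AppConfig") or {}).get("Database") or {}
    connection_string = database.get("ConnectionString")
    database_name = database.get("DatabaseName")
    if not connection_string or not database_name:
        raise ValueError("AppConfig:Database needs ConnectionString and DatabaseName")
    return connection_string, database_name
```

The same idea in the C# code would be to throw when either value is null or empty instead of substituting an empty string.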

Show customers

And finally, open Components/Pages/Home.razor and replace the content of the file with below:

@page "/"

@using BlazorAppPart4.Repositories

@using BlazorAppPart4.Model

@inject ICustomerRepository customerRepo

@rendermode RenderMode.InteractiveServer

<PageTitle>Home</PageTitle>

<h1>Hello, world!</h1>

Welcome to your new app.

@if(customers.Any())

{

<table class="table table-striped table-responsive">

<thead>

<tr>

<th>Name</th>

</tr>

</thead>

<tbody>

@foreach (var cust in customers)

{

<tr>

<td>

@cust.FirstName @cust.LastName

</td>

</tr>

}

</tbody>

</table>

}

else{

<p>No customers found</p>

}

@code {

// Start with an empty list so the first render does not hit a null reference.

List<Customer> customers = new();

protected override async Task OnInitializedAsync()

{

customers = await customerRepo.GetAllAsync();

}

}


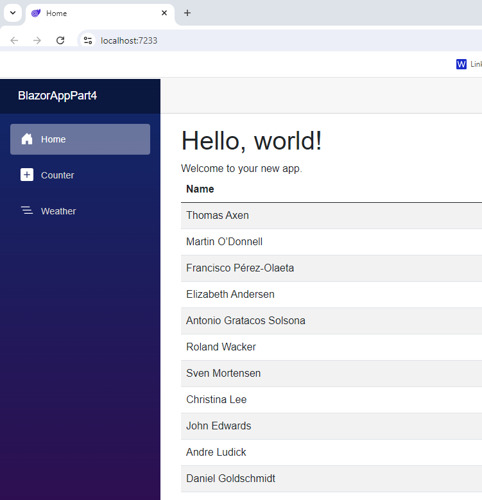

Here is the output of the whole exercise

Conclusion

In this article, we walked step by step through how to use EF Core with MongoDB in a Blazor Server web application, as part of the Blazor web app development series.

Ready to unlock the full potential of Blazor for your product development? Facile Technolab, top Blazor Development Company in India providing Blazor development services can help you bring your vision of product mvp to reality.


Using XSAS to develop multiple XOOPS sites locally

This tutorial provides step-by-step instructions for how to develop multiple XOOPS websites locally using XSAS (XOOPS Stand Alone Server). I use this information pretty much on a daily basis, and I hope you find it helpful, too. As always, I welcome any feedback or suggestions you may have.

(It is assumed that the reader has a basic knowledge of folder structures, permission settings, how to perform basic operations in phpMyAdmin, and of course, how to install XOOPS.)

1. Create a folder on your hard drive called Localhost

2. Run the XSAS Setup program in that folder

3. Create folders in the www root of XSAS to represent the different sites you will be developing (e.g., Clients, Personal)

4. Extract a fresh distro of Xoops in a temp folder

5. Copy the html folder from your Xoops package into the various folders you created in step 3.

6. Rename the html folder to represent the particular site to be developed (e.g., Client1, Site2)

7. Start the XSAS server on your local machine

8. Open PHPMyAdmin from the advanced tab of the XSAS GUI

9. Create a database that has the same name as the database used for your published website (the site on the Internet)

10. Open a browser and navigate to http://localhost and select the folder of the site you want to install (ex: http://localhost/clients/somecoweb/). This will begin the installation of Xoops as usual.

11. Setup Xoops as you normally would

12. Put the database name of the site you want to develop in the appropriate field, but put root as the database username with no password

13. Make sure you change the prefix for the tables to match the database you will import later (if applicable)

14. Complete your installation as usual

15. Export the database from your site that is on the Internet into a text file. (Be sure you export it with complete inserts and add 'drop table'. This will ensure a proper import later.)

16. Open the text file in a text editor and do a find and replace for the URL

(e.g., find the Internet URL that the site uses online and replace it with the local URL. Find: http://yourdomain.com/ Replace with: http://localhost/the_directory_where_you_installed_xoops/) Save your file.

**The copy and paste method works best for the aforementioned step.**

17. Open PHPMyAdmin in XSAS and import the database you just edited.

18. Now test your site out.

**If you will be developing multiple sites, I've found it quite convenient to keep a bookmark of http://localhost and to add a bookmark for each additional site when I begin development (e.g., http://localhost/clients/client1, http://localhost/clients/client2).**

Now, after you've made all the changes you want to your site locally, you only have a few steps to follow to publish your work online.

19. You essentially repeat steps 15-18, but instead, you export from localhost's database, edit the sql file to change the url to the Internet url, and you import the database into the online SQL server.

**It's also important to note that, if you have added any files to your website while developing it locally (e.g., themes, modules, hacks), you'll want to upload those files to your web server before updating your database.**
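The URL swap in steps 16 and 19 can also be scripted instead of done by hand in a text editor. The Python sketch below is one way to do it, under the assumption that the export is a UTF-8 text file. The function names are hypothetical, and the URLs passed in would be the same find and replace values described in step 16.

```python
from pathlib import Path


def swap_site_url(sql_text, old_url, new_url):
    """Return the dump text with old_url replaced, plus the number of replacements."""
    count = sql_text.count(old_url)
    return sql_text.replace(old_url, new_url), count


def rewrite_dump(src_path, dst_path, old_url, new_url):
    """Read a SQL export, swap the site URL, and write the result to a new file."""
    text = Path(src_path).read_text(encoding="utf-8")  # assumption: UTF-8 export
    updated, count = swap_site_url(text, old_url, new_url)
    Path(dst_path).write_text(updated, encoding="utf-8")
    return count
```

Going from online to local, old_url is the Internet URL and new_url the localhost one; for publishing (step 19) the two are swapped. Writing to a separate output file keeps the original export untouched. If any stored values are PHP-serialized strings, a replacement that changes the URL length may invalidate them, so it is worth checking the site after import.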

On another note, if you want to work on your website away from home and you've set up your local server as I've outlined, you can just copy the entire Localhost folder onto a USB pen drive and take it with you. Then all you have to do is execute XSAS directly from the pen drive on any Windows 98 or later system. Since XSAS always creates a virtual w: drive, this method works quite well for portable development and demonstration.

This article mainly focuses on XSAS and XOOPS; however, similar steps can be used for virtually any standalone server software and content management system. These two were used because it is a combination I know to be relatively bug-free and easy to use.

Thanks and regards, Guruji Softwares


How to Create a Temp Table in SQL Server

If you want to create a temp table in SQL Server, the following article provides detailed instructions.


Datagrip create database

Vertica Integration with DataGrip: Connection Guide

About Vertica Connection Guides

Vertica connection guides provide basic instructions for connecting a third-party partner product to Vertica. Connection guides are based on our testing with specific versions of Vertica and the partner product.

Vertica and DataGrip: Latest Versions Tested

Software: Vertica JDBC 9.2.0 included with DataGrip

JetBrains DataGrip is a database IDE for SQL developers. DataGrip is available for Windows, Linux, and macOS. We have tested DataGrip on Windows and Linux.

For details about DataGrip, visit the DataGrip page on the JetBrains website.

A free version of DataGrip is available for a 30-day evaluation period. To install the evaluation version of DataGrip:

Click System requirements to check the system requirements for your platform. On Linux, DataGrip requires the Linux Desktop.

Click Installation Instructions to read the installation instructions for your platform.

Run the installer and follow the on-screen instructions.

DataGrip uses Vertica's JDBC driver to connect to Vertica.

DataGrip 2019.1 includes the Vertica 9.2.0 JDBC driver as a named connector. To obtain the connector, you must download it from the DataGrip server. Follow the instructions in Connect DataGrip to Vertica Using the Named Connector.

If you want to use a later or earlier version of the Vertica JDBC driver, you can specify it as a Custom JAR. Follow the instructions in Connect DataGrip to Vertica Using a Custom JAR.

Connect DataGrip to Vertica Using the Named Connector

Start DataGrip and select File > Data Sources.

On the Data Sources and Drivers page, click the Add icon (+) to create a new data source.

Select Vertica from the list of available data sources and click OK.

Click the Download link to download the Vertica JDBC driver that is included with DataGrip.

When the connection is successful, click OK.

Now you can start using DataGrip with your Vertica database. (The source guide shows an example SQL command and its results in the Vertica connection here.)

For details about using DataGrip, refer to the DataGrip documentation.

Connect DataGrip to Vertica Using a Custom JAR

If you want to use the latest Vertica JDBC driver, or any version other than the one provided with DataGrip, you can specify it as a Custom JAR.

Before establishing the connection in DataGrip, note the location of the Vertica JDBC driver file on your system. If you do not already have the driver, you can download it from the Client Drivers page on the Vertica website.

For details about client and server compatibility, see Client Driver and Server Version Compatibility in the Vertica documentation.

After noting the location of the Vertica JDBC driver file on your system, follow these steps:

On the Data Sources and Drivers page, click the + icon to expand the list of available data sources.

In the Drivers list on the left, select Vertica.

Browse to the Vertica JDBC JAR file that you want to use.

Follow steps 5 - 7 of Connect DataGrip to Vertica Using the Named Connector to provide the connection details and test the connection.

Known limitations

BINARY, VARBINARY, and LONGVARBINARY data types are not supported.

TIME and TIMETZ data types do not support milliseconds.

Temp tables are not included in the DataGrip object tree.

Flex tables are not included in the DataGrip object tree.

Pre-created sequences are not suggested by DataGrip Intellisense.

Connect your PlanetScale databases and their branches to manage and run queries from JetBrains DataGrip in a few small steps.

If you aren’t familiar with DataGrip, it is a popular, cross-platform IDE for databases that works nicely with PlanetScale databases.

Alongside DataGrip, you can manage your database changes easier with branching in PlanetScale. Database Branches™ allow you to branch your database schemas like you branch your code. This enables experimenting with schemas in an isolated environment. Once you are ready to go live, non-blocking schema changes provide a schema change workflow that allows users to update database tables without locking or causing downtime for production databases.

Using DataGrip as a MySQL GUI for PlanetScale

If you have connected to a database with DataGrip as a MySQL GUI before, this experience will likely be pretty familiar to you.


Sql server option recompile

I am lost why execution plan is different if I run query with option recompile to compare to same query (with clean proc cache) without option recompile.

The script is:

Create two tables:

CREATE TABLE H (id INT PRIMARY KEY CLUSTERED IDENTITY(1,1), header CHAR(100))

CREATE TABLE D (id INT PRIMARY KEY CLUSTERED IDENTITY(1,1), idH INT, detail CHAR(100))

INSERT INTO H (header) VALUES ('nonononono')

INSERT INTO D (idH, detail) VALUES 'nonononono')

And simple (7 rows) and actual statistics.

With OPTION (RECOMPILE) it uses the key lookup for the D table, without it uses scan for D table.

With OPTION(RECOMPILE) optimiser knows the value of the variable and essentially generates the plan, as if you wrote: SELECT * …

The generated plan has to be valid for this specific value of the parameter. It does not have to be valid for any other value of the parameter. Also, optimiser knows statistics of the table and usually can make a better decision. If there is only one value in the table that is equal to 1, most likely it will choose a seek. If there are a lot of values in the table that are equal to 1, it would choose a scan.

Without OPTION(RECOMPILE) optimiser has to generate a plan that is valid (produces correct results) for any possible value of the parameter.

As you have observed, this may lead to different plans.

I would recommend to look at both actual execution plans in the free SQL Sentry Plan Explorer tool. Look at details of each operator in the plan and you should see what is going on. You can do it in SSMS as well, but Plan Explorer is much nicer.

It’s been a while since SQL Server has had a real RECOMPILE problem. And if you put it up against the performance problems that you can hit with parameter sniffing, I’d have a hard time telling someone strapped for time and knowledge that it’s the worst idea for them.

Obviously, you can run into problems if you (“you” includes Entity Framework, AKA the Database Demolisher) author the kind of queries that take a very long time to compile. But as I list them out, I’m kinda shrugging.

Here are some problems you can hit with recompile. Not necessarily caused by recompile, but by not re-using plans.

Long compile times: Admittedly pretty rare, and plan guides or forced plans are likely a better option.

No plan history in the cache (only the most recent plan): Sucks if you’re looking at the plan cache. Sucks less if you have a monitoring tool or Query Store.

CPU spikes for high-frequency execution queries: Maybe time for caching some stuff, or getting away from the kind of code that executes like this (scalar functions, cursors, etc.)

But for everything in the middle: a little RECOMPILE probably won’t hurt that bad.

Those are very real problems that I see on client systems pretty frequently. And yeah, sometimes there’s a good tuning option for these, like changing or adding an index, moving parts of the query around, sticking part of the query in a temp table, etc.

But all that assumes that those options are immediately available. For third party vendors who have somehow developed software that uses SQL Server for decades without running into a single best practice even by accident, it’s often harder to get those changes through.

Sure, you might be able to sneak a recompile hint somewhere in the mix even if it’d make the vendor upset.

DBCC FREEPROCCACHE: No, not the whole cache. You can single out troublesome queries to remove specific plans.

Plan Guides: An often overlooked detail of plan guides is that you can attach hints to them, including recompile. Using a plan guide doesn’t interfere with that precious vendor IP that makes SQL Server unresponsive every 15 minutes.

I’m not mad.

And yeah, there’s advances in SQL Server 20 that start to address some issues here, but they’re still imperfect.

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount on to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.


phpMyAdmin is a free software tool written in PHP for administering MySQL through a web interface. It supports a wide range of operations on MySQL and MariaDB. In this article we look at how to install phpMyAdmin on Kali Linux and Debian. The most frequent database operations (managing databases, tables, columns, relations, indexes, users, permissions, etc.) can be performed from the web console, and you can still run any SQL statement directly.

Core Features of phpMyAdmin

- An intuitive web interface
- Support for most MySQL features
- Import data from CSV and SQL
- Export data to various formats: CSV, SQL, XML, PDF, ISO/IEC 26300 (OpenDocument Text and Spreadsheet), Word, LaTeX, and others
- Administering multiple servers
- Creating PDF graphics of your database layout
- Creating complex queries using Query-by-example (QBE)
- Searching globally in a database or a subset of it
- Transforming stored data into any format using a set of predefined functions, like displaying BLOB data as an image or download link

The following procedure installs phpMyAdmin on Kali Linux or any other Debian-based system.

Step 1: Update System

Start by ensuring the system is updated.

    sudo apt update
    sudo apt upgrade

A reboot may be required after kernel updates.

    sudo reboot

Step 2: Install PHP and Apache on Kali Linux

The next step is the installation of PHP, the required modules and the Apache web server.

    sudo apt -y update
    sudo apt -y install wget php php-cgi php-mysqli php-pear php-mbstring libapache2-mod-php php-common php-phpseclib php-mysql

Confirm the installation of PHP by checking the version:

    $ php --version
    PHP 7.4.11 (cli) (built: Oct 6 2020 10:34:39) ( NTS )
    Copyright (c) The PHP Group
    Zend Engine v3.4.0, Copyright (c) Zend Technologies
        with Zend OPcache v7.4.11, Copyright (c), by Zend Technologies

Step 3: Install MariaDB / MySQL database Server

Since you're using phpMyAdmin to administer a MySQL or MariaDB database server, you should have the database server already installed. You can also reference our guides below.

- How To Install MariaDB on Kali Linux
- How To Install MySQL 8.0 on Kali Linux

Once the database server is installed and running, you can proceed to install phpMyAdmin on Kali Linux and Debian.

Step 4: Install PHPMyAdmin on Kali Linux

The phpMyAdmin downloads page lists the latest release. Use the wget command line tool to download the latest version of phpMyAdmin:

    wget https://www.phpmyadmin.net/downloads/phpMyAdmin-latest-all-languages.tar.gz

Extract the downloaded archive using tar:

    tar xvf phpMyAdmin-latest-all-languages.tar.gz

Remove the archive and move the extracted folder to /usr/share/phpmyadmin.

    rm -f phpMyAdmin-latest-all-languages.tar.gz
    sudo mv phpMyAdmin-*/ /usr/share/phpmyadmin

Create a directory for phpMyAdmin temp files.

    sudo mkdir -p /var/lib/phpmyadmin/tmp
    sudo chown -R www-data:www-data /var/lib/phpmyadmin

We also need to create a directory where phpMyAdmin configuration files will be stored.

    sudo mkdir /etc/phpmyadmin/

Copy the sample configuration to config.inc.php in the phpMyAdmin directory.
    sudo cp /usr/share/phpmyadmin/config.sample.inc.php /usr/share/phpmyadmin/config.inc.php

Edit the file /usr/share/phpmyadmin/config.inc.php and set a secret passphrase:

    $ sudo vim /usr/share/phpmyadmin/config.inc.php
    $cfg['blowfish_secret'] = 'H2TxcGXxflSd8JwrXVlh6KW4s2rER63i';

Configure the temp directory by adding this line to the file.

    $cfg['TempDir'] = '/var/lib/phpmyadmin/tmp';

Step 5: Configure Apache web Server

Create a new Apache configuration file for phpMyAdmin.

    sudo vim /etc/apache2/conf-enabled/phpmyadmin.conf

Paste the contents below into the file.

    Alias /phpmyadmin /usr/share/phpmyadmin

    <Directory /usr/share/phpmyadmin>
        Options SymLinksIfOwnerMatch
        DirectoryIndex index.php

        <IfModule mod_php5.c>
            <IfModule mod_mime.c>
                AddType application/x-httpd-php .php
            </IfModule>
            <FilesMatch ".+\.php$">
                SetHandler application/x-httpd-php
            </FilesMatch>

            php_value include_path .
            php_admin_value upload_tmp_dir /var/lib/phpmyadmin/tmp
            php_admin_value open_basedir /usr/share/phpmyadmin/:/etc/phpmyadmin/:/var/lib/phpmyadmin/:/usr/share/php/php-gettext/:/usr/share/php/php-php-gettext/:/usr/share/javascript/:/usr/share/php/tcpdf/:/usr/share/doc/phpmyadmin/:/usr/share/php/phpseclib/
            php_admin_value mbstring.func_overload 0
        </IfModule>
        <IfModule mod_php.c>
            <IfModule mod_mime.c>
                AddType application/x-httpd-php .php
            </IfModule>
            <FilesMatch ".+\.php$">
                SetHandler application/x-httpd-php
            </FilesMatch>

            php_value include_path .
            php_admin_value upload_tmp_dir /var/lib/phpmyadmin/tmp
            php_admin_value open_basedir /usr/share/phpmyadmin/:/etc/phpmyadmin/:/var/lib/phpmyadmin/:/usr/share/php/php-gettext/:/usr/share/php/php-php-gettext/:/usr/share/javascript/:/usr/share/php/tcpdf/:/usr/share/doc/phpmyadmin/:/usr/share/php/phpseclib/
            php_admin_value mbstring.func_overload 0
        </IfModule>
    </Directory>

    # Authorize for setup
    <Directory /usr/share/phpmyadmin/setup>
        <IfModule mod_authz_core.c>
            <IfModule mod_authn_file.c>
                AuthType Basic
                AuthName "phpMyAdmin Setup"
                AuthUserFile /etc/phpmyadmin/htpasswd.setup
            </IfModule>
            Require valid-user
        </IfModule>
    </Directory>

    # Disallow web access to directories that don't need it
    <Directory /usr/share/phpmyadmin/templates>
        Require all denied
    </Directory>
    <Directory /usr/share/phpmyadmin/libraries>
        Require all denied
    </Directory>
    <Directory /usr/share/phpmyadmin/setup/lib>
        Require all denied
    </Directory>

Access can be restricted to specific IP addresses or a network address block with a configuration similar to the one below.

    Require ip 127.0.0.1 192.168.10.0/24

Finally, restart the Apache web server to load the changes.
    sudo systemctl restart apache2

Confirm that the Apache service started without any error:

    $ systemctl status apache2
    ● apache2.service - The Apache HTTP Server
         Loaded: loaded (/lib/systemd/system/apache2.service; disabled; vendor preset: disabled)
         Active: active (running) since Fri 2022-01-22 14:49:54 EST; 11min ago
           Docs: https://httpd.apache.org/docs/2.4/
        Process: 7502 ExecStart=/usr/sbin/apachectl start (code=exited, status=0/SUCCESS)
       Main PID: 7513 (apache2)
          Tasks: 11 (limit: 2274)
         Memory: 57.8M
            CPU: 656ms
         CGroup: /system.slice/apache2.service
                 ├─7513 /usr/sbin/apache2 -k start
                 ├─7515 /usr/sbin/apache2 -k start
                 ├─7516 /usr/sbin/apache2 -k start
                 ├─7517 /usr/sbin/apache2 -k start
                 ├─7518 /usr/sbin/apache2 -k start
                 ├─7519 /usr/sbin/apache2 -k start
                 ├─7751 /usr/sbin/apache2 -k start
                 ├─7757 /usr/sbin/apache2 -k start
                 ├─7758 /usr/sbin/apache2 -k start
                 ├─7759 /usr/sbin/apache2 -k start
                 └─7760 /usr/sbin/apache2 -k start

Step 6: Open phpMyAdmin Web interface

Access the phpMyAdmin web interface at http://[ServerIP|Hostname]/phpmyadmin

Log in with your database credentials (username and password). The root user credentials can also be used to authenticate. The phpMyAdmin dashboard is displayed after a successful login. You can now use phpMyAdmin for all database management tasks in your software development cycle.
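The passphrase shown in the configuration step is only a sample and should not be reused as-is. A minimal sketch for generating your own, assuming a 32-character value (the same length as the sample) made of letters and digits; the helper names `make_blowfish_secret` and `config_line` are made up for this illustration and are not part of phpMyAdmin:

```python
import secrets
import string

# Letters and digits only, so the value needs no escaping
# inside a single-quoted PHP string.
ALPHABET = string.ascii_letters + string.digits


def make_blowfish_secret(length=32):
    """Return a random passphrase of the given length (hypothetical helper)."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def config_line(secret):
    """Format the passphrase as the assignment used in config.inc.php."""
    return f"$cfg['blowfish_secret'] = '{secret}';"


if __name__ == "__main__":
    # Prints a line that can be pasted into config.inc.php.
    print(config_line(make_blowfish_secret()))
```

Running the script prints one ready-to-paste line; `secrets` is used instead of `random` because the value protects cookie encryption.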

0 notes

Text

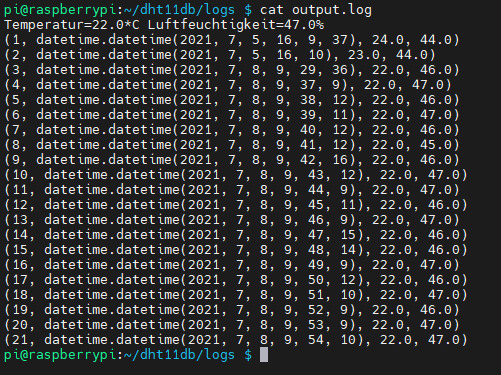

Raspberry PI Tutorial #8: Storing DHT11 sensor data in a MySQL database

In this post I want to show you how to store the data from a DHT11 sensor in a MySQL database using Python on the Raspberry Pi.

In the post on connecting Python to a MySQL database I already showed you how to open a connection to a MySQL database from Python. This post covers how to store the DHT11 sensor data in a table and read it back.

Components required for this project

For this project you need:

- a Raspberry Pi,
- a USB power adapter (make sure it supplies the current your Pi model requires),
- an SD card (with Raspbian OS or a comparable system preinstalled),
- a network cable or WiFi adapter,
- a 170-pin breadboard,
- a DHT11 sensor,
- assorted breadboard jumper wires

Circuit & assembly

I already explained how to build the circuit in the post Raspberry PI Tutorial #4: Controlling the DHT11 sensor (Part 1).

Circuit - Raspberry PI Model B with DHT11 sensor

The DHT11 sensor is available as a bare component or conveniently mounted on a board, which saves you a "complicated" setup on a breadboard.

Variants of the DHT11 sensor

Preparing the MySQL database

Before we can store data in a MySQL database, we have to create the database and at least one table.

SQL statements

Here are the SQL statements for

- creating the database,
- creating a user for the DB,
- granting the user privileges on the DB,
- creating the table,
- inserting data, and
- querying the data

Creating the database

First we need a database, which we create with the "CREATE DATABASE" command.

    CREATE DATABASE sensorTestDb;

Creating a user for the DB

To work with the database we need a user. Users in MySQL are global, i.e. one user can be granted access to several databases (more on that in the next section). Current MySQL and MariaDB versions no longer accept a direct INSERT into mysql.user with the PASSWORD() function, so the user is created with "CREATE USER".

    CREATE USER 'pydbuser'@'localhost' IDENTIFIED BY 'pydbpw';

Granting the user privileges on the database

Once the user and the database exist, the user has to be granted access to the database. In my case I give the user "pydbuser" all privileges on the database.

    GRANT ALL PRIVILEGES ON sensorTestDb.* TO pydbuser@localhost;

After the statement above has run, reloading the grant tables makes sure the server picks up the new privileges.

    FLUSH PRIVILEGES;

Creating the table

Next we create a table with four columns:

- ID - integer, auto-incrementing
- zeitstempel - DateTime
- temperatur - Float (floating-point number)
- luftfeuchtigkeit - Float (floating-point number)

    CREATE TABLE `sensorTestDb`.`sensorvalues` (
      `ID` INT NOT NULL AUTO_INCREMENT,
      `zeitstempel` DATETIME NOT NULL,
      `temperatur` FLOAT NOT NULL,
      `luftfeuchtigkeit` FLOAT NOT NULL,
      PRIMARY KEY (`ID`)
    ) ENGINE = InnoDB;

Inserting data

Data is inserted into an SQL table with the "INSERT INTO" command. Here is what the command looks like in our case:

    INSERT INTO `sensorvalues` (`ID`, `zeitstempel`, `temperatur`, `luftfeuchtigkeit`) VALUES (NULL, CURRENT_TIME(), 23.56, 57.6);

It is important that floating-point numbers use a point as the decimal separator. If a comma (the German format) is used as the separator, the fractional part is dropped.

Querying the data from the table

With the SQL operator "BETWEEN" we can read rows whose timestamp lies between two dates. The date format is YYYY-MM-DD HH:MM:SS.

    SELECT * FROM `sensorvalues` WHERE zeitstempel BETWEEN '2021-01-01 00:00:00' AND '2021-12-31 23:59:59';

Creating the Python script

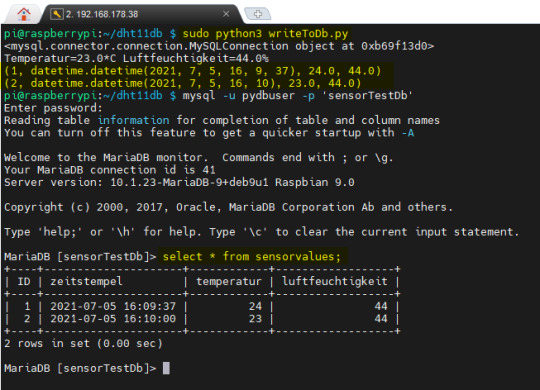

I build the Python script in four steps:

- installing the MySQL connector for Python,
- opening the database connection,
- reading the sensor data,
- inserting the sensor data into the table

The finished Python script is then started on a schedule by a cron job on the Raspberry Pi.

Installing the MySQL connector for Python

Before we can start scripting, we have to install the MySQL connector.

    sudo pip install mysql-connector-python

On my ageing Raspberry Pi this installation takes a while and stalls now and then. Be patient here and do not start pressing key combinations.

    pi@raspberrypi:~/dht11db $ sudo pip install mysql-connector-python
    Looking in indexes: https://pypi.org/simple, https://www.piwheels.org/simple
    Collecting mysql-connector-python
      Downloading https://files.pythonhosted.org/packages/2a/8a/428d6be58fab7106ab1cacfde3076162cd3621ef7fc6871da54da15d857d/mysql_connector_python-8.0.25-py2.py3-none-any.whl (319kB)
         |████████████████████████████████| 327kB 575kB/s
    Collecting protobuf>=3.0.0
      Downloading https://files.pythonhosted.org/packages/d5/e0/20ba06eb42155cdb4c741e5caf9946e4569e26d71165abaecada18c58603/protobuf-3.17.3-py2.py3-none-any.whl (173kB)
         |████████████████████████████████| 174kB 878kB/s
    Collecting six>=1.9
      Downloading https://files.pythonhosted.org/packages/d9/5a/e7c31adbe875f2abbb91bd84cf2dc52d792b5a01506781dbcf25c91daf11/six-1.16.0-py2.py3-none-any.whl
    Installing collected packages: six, protobuf, mysql-connector-python
    Successfully installed mysql-connector-python-8.0.25 protobuf-3.17.3 six-1.16.0
    WARNING: You are using pip version 19.3; however, version 21.1.3 is available.
    You should consider upgrading via the 'pip install --upgrade pip' command.
    pi@raspberrypi:~/dht11db $

Opening the database connection

First we have to open the database connection. For that we need:

- server name,
- user name,
- password,
- database name

    import mysql.connector

    # Open a connection
    db = mysql.connector.connect(
        host="localhost",        # server name
        user="pydbuser",         # user name
        password="pydbpw",       # password
        database="sensorTestDb"  # database name
    )
    # Print the initialised connection object
    print(db)

    sqlStmt = "SHOW TABLES;"
    cursor = db.cursor()
    cursor.execute(sqlStmt)
    for table in cursor:
        print(table)

The output on the shell looks like this:

    pi@raspberrypi:~/dht11db $ sudo python3 writeToDb.py
    ('sensorvalues',)
    pi@raspberrypi:~/dht11db $

Reading the data from the DHT11 sensor

As mentioned, I have already written several posts about the DHT11 sensor on the Raspberry Pi. Here I only want to cover how to store its values in a database, so below is just the small script that reads the DHT11 sensor with the Adafruit library.

    import Adafruit_DHT  # Adafruit library for accessing the DHT sensor

    # Select the DHT11 sensor type.
    # For a DHT22 sensor use Adafruit_DHT.DHT22,
    # for an AM2302 sensor use Adafruit_DHT.AM2302.
    sensor = Adafruit_DHT.DHT11
    # The sensor is connected to GPIO pin 4
    pin = 4
    # Declare the variables and read the values from the sensor in one step
    humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)

    # If the values were read successfully ...
    if humidity is not None and temperature is not None:
        # ... print them, formatted, on the command line
        print('Temperature={0:0.1f}*C Humidity={1:0.1f}%'.format(temperature, humidity))
    else:
        # otherwise print an error message on the command line
        print('Error while receiving the data. Please try again!')

Storing the data in the MySQL database

Now we want to store the values read from the DHT11 sensor in the table "sensorvalues" of the database "sensorTestDb".

    import mysql.connector  # connector for opening the database connection
    import Adafruit_DHT     # Adafruit library for accessing the DHT sensor

    # Open a connection
    db = mysql.connector.connect(
        host="localhost",        # server name
        user="pydbuser",         # user name
        password="pydbpw",       # password
        database="sensorTestDb"  # database name
    )
    # Print the initialised connection object
    print(db)

    sensor = Adafruit_DHT.DHT11
    # The sensor is connected to GPIO pin 4
    pin = 4
    # Declare the variables and read the values from the sensor in one step
    humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)

    # If the values were read successfully ...
    if humidity is not None and temperature is not None:
        # ... print them, formatted, on the command line
        print('Temperature={0:0.1f}*C Humidity={1:0.1f}%'.format(temperature, humidity))
        sqlStatement = "INSERT INTO `sensorvalues` (`ID`, `zeitstempel`, `temperatur`, `luftfeuchtigkeit`) VALUES (NULL, CURRENT_TIME(), {temp:000.2f}, {hum:000.2f});"
        cursor = db.cursor()  # get a cursor from the database connection
        # Run the SQL statement; the sensor values are formatted and
        # substituted for the placeholders "temp" and "hum"
        cursor.execute(sqlStatement.format(temp = temperature, hum = humidity))
        # The commit writes the data to the database
        cursor.execute("COMMIT;")
    else:
        # otherwise print an error message on the command line
        print('Error while receiving the data.')
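The script above builds the INSERT statement with string formatting. As an alternative, the values can be handed to the driver as parameters. The following is a minimal sketch under some assumptions, not the author's script: `save_reading` is a hypothetical helper name, the `ID` column is left to AUTO_INCREMENT, and the timestamp is generated in Python instead of by the database. It only relies on the standard DB-API methods `cursor()`, `execute()` and `commit()`, which a `mysql.connector` connection provides, with `%s` as the placeholder.

```python
from datetime import datetime

# %s placeholders are filled in by the driver, so the values are
# never pasted into the SQL text by hand.
INSERT_SQL = (
    "INSERT INTO `sensorvalues` (`zeitstempel`, `temperatur`, `luftfeuchtigkeit`) "
    "VALUES (%s, %s, %s)"
)


def save_reading(db, temperature, humidity, when=None):
    """Insert one reading through an open DB-API connection (hypothetical helper)."""
    when = when or datetime.now()
    params = (when, round(temperature, 2), round(humidity, 2))
    cursor = db.cursor()
    try:
        cursor.execute(INSERT_SQL, params)
        db.commit()  # make the inserted row permanent
    finally:
        cursor.close()
    return params
```

With the connection object `db` from the script above, a call would look like `save_reading(db, temperature, humidity)`.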

Querying the data from the database

We can now read the stored data from the database with another small Python script:

    import mysql.connector

    db = mysql.connector.connect(
        host="localhost",        # server name
        user="pydbuser",         # user name
        password="pydbpw",       # password
        database="sensorTestDb"  # database name
    )
    cursor = db.cursor()
    cursor.execute("SELECT * FROM sensorvalues;")
    for row in cursor:
        print(row)

Alternatively, you can connect to the database and run the SELECT statement manually.

Running the SELECT statement on the console

Finished Python script

    import mysql.connector
    import Adafruit_DHT  # Adafruit library for accessing the DHT sensor

    # Open a connection
    db = mysql.connector.connect(
        host="localhost",        # server name
        user="pydbuser",         # user name
        password="pydbpw",       # password
        database="sensorTestDb"  # database name
    )
    # Print the initialised connection object
    print(db)

    sensor = Adafruit_DHT.DHT11
    # The sensor is connected to GPIO pin 4
    pin = 4
    # Declare the variables and read the values from the sensor in one step
    humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)

    # If the values were read successfully ...
    if humidity is not None and temperature is not None:
        # ... print them, formatted, on the command line
        print('Temperature={0:0.1f}*C Humidity={1:0.1f}%'.format(temperature, humidity))
        sqlStatement = "INSERT INTO `sensorvalues` (`ID`, `zeitstempel`, `temperatur`, `luftfeuchtigkeit`) VALUES (NULL, CURRENT_TIME(), {temp:000.2f}, {hum:000.2f});"
        cursor = db.cursor()
        cursor.execute(sqlStatement.format(temp = temperature, hum = humidity))
        cursor.execute("COMMIT;")
    else:
        # otherwise print an error message on the command line
        print('Error while receiving the data.')

    cursor = db.cursor()
    cursor.execute("SELECT * FROM sensorvalues;")
    for row in cursor:
        print(row)

The Python script is available for download here:

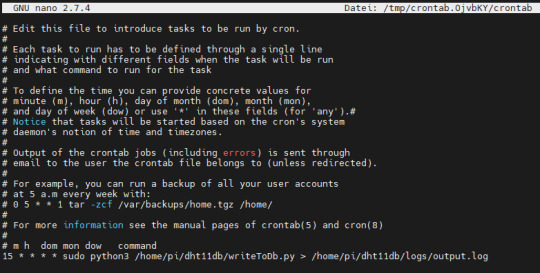

Cron job for starting the script on a schedule

The advantage of a cron job is that the script is controlled and started by the operating system. In particular, when the Pi goes through a hard or soft reset, you do not have to restart the script yourself; the system does it for you. The cron syntax is fairly hard to read and write if you are not used to it, but there are some good websites offering a generator that makes this job a little easier. In my case I want to read and store a new value every 15 minutes, so the entry is:

    */15 * * * * sudo python3 /home/pi/dht11db/writeToDb.py > /home/pi/dht11db/logs/output.log 2>&1

The output of the script is written to "/home/pi/dht11db/logs/output.log", i.e. everything the script prints with "print" ends up in this file. Because of the trailing "2>&1", any exceptions are written to this file as well. We create the cron job with the command "crontab -e".

CronTab in the editor - Nano

As mentioned, the output goes to the file "output.log", which contains the inserted rows.

Output of the log file entries
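The minute field of a cron entry is easy to get wrong: `*/15` matches every 15th minute, whereas a bare `15` matches only minute 15 of each hour. The following small sketch illustrates the difference. `cron_minutes` is a made-up helper that handles only the three simplest forms of the field and is not a full cron parser.

```python
def cron_minutes(field):
    """Expand a cron minute field into the list of minutes (0-59) it matches.

    Supports only "*", "*/N" and a single number.
    """
    if field == "*":
        return list(range(60))
    if field.startswith("*/"):
        step = int(field[2:])
        return list(range(0, 60, step))
    return [int(field)]


if __name__ == "__main__":
    print(cron_minutes("*/15"))  # four runs per hour
    print(cron_minutes("15"))    # one run per hour
```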

0 notes

Text

Monitor deadlocks in Amazon RDS for SQL Server and set notifications using Amazon CloudWatch

Monitoring is an essential part of dealing with performance problems in any relational database management system (RDBMS), and SQL Server is no exception. Many of our customers want to capture certain system and user-defined events for monitoring and troubleshooting problems related to SQL Server. SQL Server logs these events in SQL Server error logs and SQL Server agent logs. Deadlocks are one such event that can be captured in SQL Server error logs.

A deadlock occurs when two or more processes each hold a resource that the other needs, so each process is waiting on the other to complete before moving forward. When this situation occurs, there is no way for these processes to resolve the conflict, so SQL Server automatically chooses one of the processes as the victim of the deadlock and rolls it back, and the other process succeeds. By default, when this occurs, your application may see or handle the error, but nothing is captured in the SQL Server error log or the Windows event log to let you know it occurred. The error message that SQL Server sends back to the client is similar to the following:

    Msg 1205, Level 13, State 51, Line 3
    Transaction (Process ID xx) was deadlocked on {xxx} resources with another process and has been chosen as the deadlock victim. Rerun the transaction

With Amazon RDS for SQL Server, you can now monitor deadlocks and send Amazon Simple Notification Service (Amazon SNS) notifications as soon as a deadlock event occurs on an RDS for SQL Server instance. This can help you automate deadlock reporting and take appropriate actions to resolve deadlock conflicts. This solution isn't limited to capturing deadlock events; you can scale it to monitor other system and user-defined events captured in the error logs and SQL Server agent logs.
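The error message above ends with "Rerun the transaction". On the client side that advice could look roughly like the sketch below. It makes several assumptions: `DeadlockVictim` is a hypothetical exception standing in for whatever error your database driver raises for error 1205, and `run_with_deadlock_retry` is an illustrative helper, not part of any AWS or SQL Server library.

```python
import time


class DeadlockVictim(Exception):
    """Hypothetical stand-in for the driver error that carries SQL Server error 1205."""


def run_with_deadlock_retry(transaction, attempts=3, delay_seconds=0.0):
    """Call transaction(); if it is chosen as the deadlock victim, rerun it."""
    for attempt in range(1, attempts + 1):
        try:
            return transaction()
        except DeadlockVictim:
            if attempt == attempts:
                raise  # give up after the last attempt
            time.sleep(delay_seconds * attempt)  # simple linear back-off
```

The function passed in as `transaction` should contain the whole unit of work, because SQL Server rolls back the victim's entire transaction.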
In this post, we show you how to publish error and agent log events directly to Amazon CloudWatch Logs and set up CloudWatch alarms and SNS notifications for the deadlock events that match the filter pattern that you create.

Solution overview

The following diagram illustrates the solution architecture.

To implement the solution, we walk through the following high-level steps:

1. Enable deadlock detection for Amazon RDS for SQL Server.
2. Publish the SQL Server error logs to CloudWatch.
3. Simulate a deadlock event.
4. Create a filter pattern and CloudWatch alarm.
5. Monitor the solution using Amazon RDS Performance Insights.

Prerequisites

- Amazon RDS for SQL Server
- Access to the AWS Management Console and Amazon CloudWatch
- An email address to receive notifications
- SQL Server Management Studio (SSMS)

Enable deadlock detection for Amazon RDS for SQL Server

To enable deadlock detection, complete the following steps.

1. On the Amazon RDS console, choose Parameter groups in the navigation pane.
2. Choose Create parameter group.
3. For Parameter group family, choose the SQL Server version and edition you are using. For example, SQL Server 2017 Standard Edition uses sqlserver-se-14.0.
4. Enter a Group name and Description.
5. Choose Create.
6. On the Parameter groups page, choose the group that you created in the previous step.
7. Choose Edit parameters, and select 1204 and 1222.
8. Edit the Values for 1204 and 1222 to 1.
9. Choose Preview changes.
10. On the next page, choose Save changes.
11. In the navigation pane, choose Databases.
12. In the DB identifier section, choose your RDS DB instance.
13. Choose Modify.
14. From the Database options section, for DB parameter group, choose the parameter group you created.
DB instances require a manual reboot in the following circumstances:

- If you replace the current parameter group with a different parameter group
- If you modify and save a static parameter in a custom parameter group

Publish the SQL Server error logs to CloudWatch

To publish your SQL Server error logs to CloudWatch, complete the following steps.

1. On the Modify page of the Amazon RDS console, in the Error logs section, choose Error log. This makes sure that the SQL Server error logs are published to CloudWatch Logs.
2. Choose Continue.
3. Enable Performance Insights for Amazon RDS.
4. In the Scheduling of modifications section, choose Apply immediately, and then choose Modify DB Instance.
5. On the Databases page, choose your RDS DB instance, then choose Actions.
6. Reboot the RDS DB instance for the changes to take effect.

Simulate a deadlock event

Simulate a deadlock transaction on your RDS for SQL Server instance by running the following T-SQL code in SQL Server Management Studio (SSMS).

    --Two global temp tables with sample data for demo purposes.
    CREATE TABLE ##Employees (
        EmpId INT IDENTITY,
        EmpName VARCHAR(16),
        Phone VARCHAR(16)
    )
    GO
    INSERT INTO ##Employees (EmpName, Phone)
    VALUES ('Amy', '900-999-1332'), ('Jay', '742-234-2222')
    GO
    CREATE TABLE ##Suppliers(
        SupplierId INT IDENTITY,
        SupplierName VARCHAR(64),
        Location VARCHAR(16)
    )
    GO
    INSERT INTO ##Suppliers (SupplierName, Location)
    VALUES ('ABC', 'New York'), ('Honest Sourcing', 'Boston')
    GO

Next, open two query windows in SSMS. Run the following statements step by step, alternating between the two sessions.

| Step | Session 1 | Session 2 |
|---|---|---|
| 1 | Begin Tran; | Begin Tran; |
| 2 | UPDATE ##Employees SET EmpName = 'Gani' WHERE EmpId = 1; | UPDATE ##Suppliers SET Location = N'Toronto' WHERE SupplierId = 1; |
| 3 | UPDATE ##Suppliers SET Location = N'Columbus' WHERE SupplierId = 1; (Blocked) | |
| 4 | | UPDATE ##Employees SET Phone = N'123-456-7890' WHERE EmpId = 1; (Blocked) |

After you run the code, you can see that one of the transactions is processed (see the following screenshot).
The following screenshot shows that the second transaction is blocked with a deadlock error.

Create a filter pattern and CloudWatch alarm

1. On the CloudWatch console, under Logs, choose Log groups.
2. Choose the SQL Server error logs of your RDS DB instance. The log group name has the format /aws/rds/instance/<db-instance-name>/error.
3. Choose Create metric filter.
4. In the Filter Pattern section, enter deadlock. To monitor a different error, use text from that error as the filter word instead.
5. Choose Assign metric.
6. Enter deadlock in both the Filter Name and Metric Name fields.
7. Set the metric value field to 1.
8. Choose Create Filter.

The following screenshot shows your filter details.

9. After the deadlock filter is created, choose Create alarm.
10. On the Specify metric and conditions page, for Metric name, enter deadlock.
11. For Statistic, choose Minimum.
12. For Period, choose the time period for the alarm, for example, 1 minute.
13. In the Conditions section, for Threshold type, choose Static.
14. For Whenever Deadlock is, choose Greater (> threshold).
15. For Than, enter 0.
16. Choose Next.
17. In the Notification section, for Alarm state trigger, choose In alarm.
18. Select an SNS topic, or choose Create new topic to create an SNS topic using the email address you want to receive alerts.
19. Choose Next.
20. In the Name and description section, enter a name and description for your alarm.
21. Choose Next.
22. On the Preview and create page, review your alarm configuration, then choose Create alarm.
23. Confirm the notification email.

After you follow these steps, simulate a deadlock again. When the alarm has enough data, the status shows as OK. The CloudWatch alarm sends an SNS notification to the email that you specified (see the following screenshot).

Monitor using Performance Insights

Performance Insights is an Amazon RDS feature that can automatically analyze the current workload of a database instance and identify the queries that are slowing it down. For each query, it can show the type of wait it's causing and the user who is causing the slowness or the client machine that's running the query. All this information is made available in a compact, easy-to-understand dashboard, which makes Performance Insights a great tool for troubleshooting. To get this kind of information, Performance Insights queries the RDS instance's internal data structures in memory one time every second. It's not a disk-based operation, so the sampling doesn't put any pressure on the system. Later, we talk about the types of data that are collected during the samplings.

To use Performance Insights for Amazon RDS for SQL Server, complete the following steps.

1. Modify the RDS for SQL Server instance settings to enable Performance Insights.
2. On the Amazon RDS console, choose the database you want to monitor.
3. On the Monitoring tab, choose the Monitoring menu and choose Performance Insights.

You can choose the deadlock metrics and monitor them using Performance Insights as well.

Clean up

When you're finished using the resources in this post, clean up the AWS resources to avoid incurring unwanted charges. Specifically, delete the RDS for SQL Server instance and the CloudWatch logs.

Conclusion

In this post, we showed how to publish error and agent log events directly to CloudWatch Logs and then set up a CloudWatch alarm and SNS notification for deadlock events that match a specific filter pattern. With this solution, you can automate monitoring of and alerting on RDS for SQL Server error log files. This can help you automate deadlock reporting and take appropriate actions to resolve deadlocks. You can use this solution for monitoring other RDS for SQL Server log events and fatal errors. We also showed an example of how to capture deadlock event metrics using Performance Insights.

To learn more about monitoring Amazon RDS for SQL Server, see Monitoring OS metrics using Enhanced Monitoring and Monitoring with Performance Insights on Amazon RDS.
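The metric filter and alarm from the console steps earlier in this post could also be created from a script. The following is a minimal sketch, assuming the `boto3` library and configured AWS credentials. The namespace `Custom/RDS`, the alarm name and the helper function names are assumptions chosen for illustration, while `put_metric_filter` and `put_metric_alarm` are the CloudWatch Logs and CloudWatch API calls that `boto3` exposes.

```python
def metric_filter_params(log_group, namespace="Custom/RDS"):
    """Arguments for logs.put_metric_filter: count log events containing 'deadlock'."""
    return {
        "logGroupName": log_group,
        "filterName": "deadlock",
        "filterPattern": "deadlock",
        "metricTransformations": [
            {
                "metricName": "deadlock",
                "metricNamespace": namespace,  # assumed namespace
                "metricValue": "1",
            }
        ],
    }


def alarm_params(sns_topic_arn, namespace="Custom/RDS"):
    """Arguments for cloudwatch.put_metric_alarm, mirroring the console choices."""
    return {
        "AlarmName": "rds-sqlserver-deadlock",  # assumed name
        "Namespace": namespace,
        "MetricName": "deadlock",
        "Statistic": "Minimum",
        "Period": 60,  # seconds, i.e. the 1-minute period
        "EvaluationPeriods": 1,
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


def create_deadlock_alarm(log_group, sns_topic_arn):
    """Create the metric filter and the alarm (requires AWS credentials)."""
    import boto3  # imported here so the helpers above work without boto3 installed

    boto3.client("logs").put_metric_filter(**metric_filter_params(log_group))
    boto3.client("cloudwatch").put_metric_alarm(**alarm_params(sns_topic_arn))
```

Keeping the parameters in plain dictionaries makes them easy to review before anything is created in the account.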
About the authors

Yogi Barot is a Microsoft Specialist Senior Solution Architect at AWS. She has 22 years of experience working with different Microsoft technologies, and her specialty is SQL Server and other database technologies. Yogi has in-depth AWS knowledge and expertise in running Microsoft workloads on AWS.

Ganapathi Varma Chekuri is a Database Specialist Solutions Architect at AWS. Ganapathi works with AWS customers, providing technical assistance and designing customer solutions on database projects, helping them move their existing databases to the AWS Cloud.

https://aws.amazon.com/blogs/database/monitor-deadlocks-in-amazon-rds-for-sql-server-and-set-notifications-using-amazon-cloudwatch/

0 notes

Text

Resources consumed by idle PostgreSQL connections

PostgreSQL is one of the most popular open-source relational database systems. With more than 30 years of development work, PostgreSQL has proven to be a highly reliable and robust database that can handle a large number of complex data workloads. AWS provides two managed PostgreSQL options: Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Aurora PostgreSQL.

This is a two-part series. In this post, I talk about how PostgreSQL manages connections and the impact of idle connections on memory and CPU resources. In the second post, Performance impact of idle PostgreSQL connections, I discuss how idle connections impact PostgreSQL performance.

Connections in PostgreSQL

When the PostgreSQL server is started, the main process forks to start background maintenance processes. With default configurations, the process tree looks like the following on a self-managed PostgreSQL instance:

    /usr/pgsql-11/bin/postmaster -D /var/lib/pgsql/11/data
     \_ postgres: logger
     \_ postgres: checkpointer
     \_ postgres: background writer
     \_ postgres: walwriter
     \_ postgres: autovacuum launcher
     \_ postgres: stats collector
     \_ postgres: logical replication launcher

You can see this process tree in Amazon RDS and Aurora PostgreSQL by enabling Enhanced Monitoring and looking at the OS Process List page (see the following screenshot). For more information, see Enhanced Monitoring.

These child processes take care of activities such as logging, checkpointing, stats collection, and vacuuming. The process list in Enhanced Monitoring limits the total number of processes that are shown on the console. If you need to view the complete list of processes, consider using the pg_proctab extension to query system statistics. This extension is available in the latest RDS PostgreSQL minor versions.

After initializing the maintenance child processes, the main PostgreSQL process starts waiting for new client connections. When a new connection is received, the main process forks to create a child process to handle this new connection. The main process goes back to wait for the next connection, and the newly forked child process takes care of all activities related to this new client connection. A new child process is started for each new connection received by the database.

The following screenshot shows that a user app_user is connected to the database mydb from a remote host (10.0.0.123).

The max_connections parameter controls the total number of connections that can be opened simultaneously.

Memory used by connections

PostgreSQL uses shared memory and process memory. Shared memory is a chunk of memory used primarily for the data page cache. The shared_buffers parameter configures the size of the shared memory. This shared memory is used by all the PostgreSQL processes. The process memory contains process-specific data. This memory is used for maintaining the process state, data sorting, storing temporary table data, and caching catalog data.

On Linux, when a new process is forked, a copy of the process gets created. As an optimization, the Linux kernel uses the copy-on-write method, which means that initially the new process keeps pointing to the same physical memory that was available in the parent process. This continues until the parent or the child process actually changes the data. At that point, a copy of the changed data gets created. This method reduces some memory overhead when PostgreSQL forks a child process on receiving a new connection. For more information about fork functionality, see the entry in the Linux manual.

Idle connections

Idle connections are one of the common causes of bad database performance. It's very common to see a huge number of connections against the database. A common explanation is that they're just idle connections and not actually doing anything. However, this is incorrect: they're consuming server resources.
To determine the impact of idle PostgreSQL connections, I performed a few tests using a Python script that uses the Psycopg 2 for PostgreSQL connectivity. The tests include the following parameters: Each test consists of 2 runs Each test run opens 100 PostgreSQL connections Depending on the test case, some activity is performed on each connection before leaving it idle The connections are left idle for 10 minutes before closing the connections The second test runs DISCARD ALL on the connection before leaving it idle The tests are performed using Amazon RDS for PostgreSQL 11.6 Although this post shows the results for Amazon RDS for PostgreSQL 11.6 only, these tests were also performed with Aurora PostgreSQL 11.6, PostgreSQL on EC2, and Amazon RDS for PostgreSQL 12 to confirm that we see a similar resource utilization trend. I used the RDS instance type db.m5.large for the test runs, which provides 2 vCPUs and 8GB memory. For storage, I used an IO1 EBS volume with 3000 IOPS. The DISCARD ALL statement discards the session state. It discards all temporary tables, plans, and sequences, along with any session-specific configurations. This statement is often used by connection poolers before reusing the connection for the next client. For each test, a run with DISCARD ALL has been added to see if there is any change in the memory utilization. Connections test #1: Connections with no activity This basic test determines the memory impact of newly opened connection. This test performs the following steps: Open 100 connections. Leave the connections idle for 10 minutes. Close the connections. During the 10-minute wait, check the connection state as follows: postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2; state | query | count -------+-------+------- idle | | 100 (1 row) The second run repeats the same steps but runs DISCARD ALL before leaving the connection idle. 
If you run the preceding query, you get the following output:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state |    query    | count
-------+-------------+-------
 idle  | DISCARD ALL |   100
(1 row)

The following Amazon CloudWatch metrics show the connections count (DatabaseConnections) and memory utilization (FreeableMemory) on an RDS PostgreSQL instance.

The free memory chart shows no significant difference between the run with and without DISCARD ALL. As the connections got opened, the free memory reduced from approximately 5.27 GB to 5.12 GB. The 100 test connections used around 150 MB, which means that on average, each idle connection used around 1.5 MB.

Connections test #2: Temporary tables

This test determines the memory impact of creating temporary tables. In this test, the connections create and drop a temporary table in the following steps:

Open a connection.
Create a temporary table and insert 1 million rows with the following SQL statement:

CREATE TEMP TABLE my_tmp_table (id int primary key, data text);
INSERT INTO my_tmp_table values (generate_series(1,1000000,1), generate_series(1,1000000,1)::TEXT);

Drop the temporary table:

DROP TABLE my_tmp_table;

Commit the changes.
Repeat these steps for all 100 connections.
Leave the connections idle for 10 minutes.
Close the connections.

During the 10-minute wait, check the connections state as follows:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state | query  | count
-------+--------+-------
 idle  | COMMIT |   100
(1 row)

The second run repeats the same step but runs DISCARD ALL before leaving the connections idle.
If you run the same query again, you get the following results:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state |    query    | count
-------+-------------+-------
 idle  | DISCARD ALL |   100
(1 row)

The following chart shows the connections count and the memory utilization on an RDS PostgreSQL instance.

The free memory chart shows no significant difference between the run with and without DISCARD ALL. As the connections got opened, the free memory reduced from approximately 5.26 GB to 4.22 GB. The 100 test connections used around 1004 MB, which means that on average, each idle connection used around 10.04 MB.

This additional memory is consumed by the buffers allocated for temporary tables storage. The parameter temp_buffers controls the maximum memory that can be allocated for temporary tables. The default value for this parameter is set to 8 MB. This memory, once allocated in a session, is not freed up until the connection is closed.

Connections test #3: SELECT queries

This test determines the memory impact of running some SELECT queries. In this test, each connection fetches one row from each of the tables in the PostgreSQL internal schema information_schema. In PostgreSQL 11, this schema has 60 tables and views. The test includes the following steps:

Open a connection.
Fetch the names of all the tables and views in information_schema:

SELECT table_schema||'.'||table_name as relname from information_schema.tables WHERE table_schema='information_schema';

In a loop, run select on each of these tables with LIMIT 1. The following code is an example query:

SELECT * FROM information_schema.columns LIMIT 1;

Repeat these steps for all 100 connections.
Leave the connections idle for 10 minutes.
Close the connections.
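The test procedure used throughout this post (open a batch of connections, optionally run some SQL on each, optionally run DISCARD ALL, leave them idle, then close them) can be sketched in Python roughly as follows. This is not the author's script, which the post does not show. The function name run_idle_test and its parameters are hypothetical, and connect is assumed to be any zero-argument callable that returns a DB-API style connection, for example a small wrapper around psycopg2.connect with your own connection string.

```python
import time


def run_idle_test(connect, n_connections=100, setup_sql=(), idle_seconds=600,
                  discard_all=False, sleep=time.sleep):
    """Open connections, run optional SQL on each, idle, then close them all.

    connect      -- zero-argument callable returning a DB-API connection (assumed)
    setup_sql    -- statements to run and commit on each connection
    discard_all  -- if True, run DISCARD ALL before leaving the connection idle
    Returns the number of connections that were held open during the idle period.
    """
    connections = []
    try:
        for _ in range(n_connections):
            conn = connect()
            connections.append(conn)
            if setup_sql:
                cur = conn.cursor()
                for statement in setup_sql:
                    cur.execute(statement)
                cur.close()
                conn.commit()
            if discard_all:
                # DISCARD ALL cannot run inside a transaction block, so the
                # connection is switched to autocommit before issuing it.
                conn.autocommit = True
                cur = conn.cursor()
                cur.execute("DISCARD ALL")
                cur.close()
        # Leave every connection open and idle for the observation window.
        sleep(idle_seconds)
        return len(connections)
    finally:
        for conn in connections:
            conn.close()
```

For the temporary table test, setup_sql would hold the CREATE TEMP TABLE, INSERT, and DROP TABLE statements shown earlier; for the first test it stays empty. The sleep parameter exists only so the idle period can be shortened or replaced when trying the sketch out.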
During the 10-minute wait, check the connections state as follows:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state | query  | count
-------+--------+-------
 idle  | COMMIT |   100
(1 row)

The second run repeats the same steps but runs DISCARD ALL before leaving the connections idle. Running the query again gives you the following results:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state |    query    | count
-------+-------------+-------
 idle  | DISCARD ALL |   100
(1 row)

The following chart shows the connections count and the memory utilization on an RDS PostgreSQL instance.

The free memory chart shows no significant difference between the run with and without DISCARD ALL. As the connections got opened, the free memory reduced from approximately 5.25 GB to 4.17 GB. The 100 test connections used around 1080 MB, which means that on average, each idle connection used around 10.8 MB.

Connections test #4: Temporary table and SELECT queries

This test is a combination of tests 2 and 3 to determine the memory impact of creating a temporary table and running some SELECT queries on the same connection. The test includes the following steps:

Open a connection.
Fetch the names of all the tables and views in information_schema:

SELECT table_schema||'.'||table_name as relname from information_schema.tables WHERE table_schema='information_schema';

In a loop, run select on each of these tables with LIMIT 1. The following is an example query:

SELECT * FROM information_schema.columns LIMIT 1;

Create a temporary table and insert 1 million rows:

CREATE TEMP TABLE my_tmp_table (id int primary key, data text);
INSERT INTO my_tmp_table values (generate_series(1,1000000,1), generate_series(1,1000000,1)::TEXT);

Drop the temporary table:

DROP TABLE my_tmp_table;

Commit the changes.
Repeat these steps for all 100 connections.
Leave the connections idle for 10 minutes.
Close the connections.

During the 10-minute wait, check the connection state as follows:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state | query  | count
-------+--------+-------
 idle  | COMMIT |   100
(1 row)

The second run repeats the same step but runs DISCARD ALL before leaving the connections idle. Running the preceding query gives the following results:

postgres=> select state, query, count(1) from pg_stat_activity where usename='app_user' group by 1,2 order by 1,2;
 state |    query    | count
-------+-------------+-------
 idle  | DISCARD ALL |   100
(1 row)

The following chart shows the connections count and the memory utilization on an RDS PostgreSQL instance.

The free memory chart shows no significant difference between the run with and without DISCARD ALL. As the connections got opened, the free memory reduced from approximately 5.24 GB to 3.79 GB. The 100 test connections used around 1450 MB, which means that on average, each idle connection used around 14.5 MB.

CPU impact

So far, we have focused on memory impact only, but the metrics show that CPU utilization also goes up when the number of idle connections goes up. The idle connections have minimal impact on the CPU, but this can be an issue if CPU utilization is already high. The following figure shows the connection counts and CPU utilizations with different numbers of idle connections. In this test, the connections were opened and left idle for 10 minutes before closing the connections and waiting another 10 minutes before opening the next batch of connections.

The figure shows that the CPU utilization was around 1% with some small spikes to 2% with no connections. The utilization increased to 2% with 100 idle connections, 3% with 500 idle connections, 5% with 1,000 idle connections, 6% with 1,500 idle connections, and 8% with 2,000 idle connections.
Note that this utilization is for an instance with 2 vCPUs. CPU utilization goes up with the number of connections because PostgreSQL needs to examine each process to check the status. This is required irrespective of whether the connection is active or idle.

Summary

PostgreSQL connections consume memory and CPU resources even when idle. As queries are run on a connection, memory gets allocated. This memory isn’t completely freed up even when the connection goes idle. In all the scenarios described in this post, idle connections result in memory consumption irrespective of DISCARD ALL.

The amount of memory consumed by each connection varies based on factors such as the type and count of queries run by the connection, and the usage of temporary tables. As per the test results shown in this post, the memory utilization ranged from around 1.5 MB to 14.5 MB per connection.

If your application is configured in a way that results in idle connections, it’s recommended to use a connection pooler so your memory and CPU resources aren’t wasted just to manage the idle connections. The following post in this series shows how these idle connections impact your database performance.

About the Author

Yaser Raja is a Senior Consultant with the Professional Services team at Amazon Web Services. He works with customers to build scalable, highly available and secure solutions in the AWS cloud. His focus area is homogenous and heterogeneous migrations of on-premises databases to AWS RDS and Aurora PostgreSQL.

https://aws.amazon.com/blogs/database/resources-consumed-by-idle-postgresql-connections/


Photo

Using a tMSSQLSP component as your source for a SQL result set seems like a pretty straightforward concept, especially if you come from an SSIS background. However, there are a few things that make it not so intuitive.

I’m quite green when it comes to Talend, but have well over a decade of experience in ETL design in the Microsoft stack. Recently, I had the opportunity through my employer to work on this specific issue with a Talend-centric consulting firm, ETL Advisors. If you’re looking to add extra skills to your team, you should check them out.

Back to the topic at hand:

First of all, you’ll need to pass in any parameters to the stored procedure, which you can find various articles for. I won’t go into great detail here.

Second, the result set that is your “select statement” within the stored procedure will need to be schema-defined as an object within Talend, and mapped as a RECORD SET.

Third, on a tParseRecordSet object that you’ll connect to the main row out from your tMSSQLSP object, you’ll need to define the schema of all the columns that come from the stored procedure results. As you have likely encountered in your ETL career, this can sometimes be dozens of columns. If you hate yourself, get 2 monitors and start hand-keying all of the output schema. If you want a possible shortcut, keep reading.

When you are in the output portion of the schema definition screen, you can import from an XML file using the folder icon highlighted below.

Where would you get this XML from? Well, with a little help from your friends, that’s where. SQL Server has a ton of system stored procedures and DMVs that give you all types of information. I’ve enhanced “sp_describe_first_result_set” so that it presents its output as valid XML. You’ll be able to copy the XML column and paste it into an XML file that gets imported above.

This is accomplished with the following stored procedure creation/execution to suit your needs. There is plenty of help on how to use sp_describe_first_result_set natively, if you want to just see it in action without my enhancements.

But in the meantime, here’s my T-SQL if you want to get started on the rest of your life. Keep in mind there are a few data types that are probably not included, mainly because I didn’t see a quick way to map them to Talend equivalents. (Feel free to edit it up!)

--+PROCEDURE TO COMPILE TO A LOCAL DATABASE WHERE USE IS WARRANTED
ALTER PROCEDURE USP_HELPER_INTERROGATE_SP
(
    @PROC AS NVARCHAR(MAX),
    @PARAMS_IN AS NVARCHAR(MAX)
)
AS
BEGIN
    --+author: Radish
    --+date: 3/8/2018
    --+comments: For demonstration purposes only, results will vary with more complex stored procedures