#Sqoop

Text

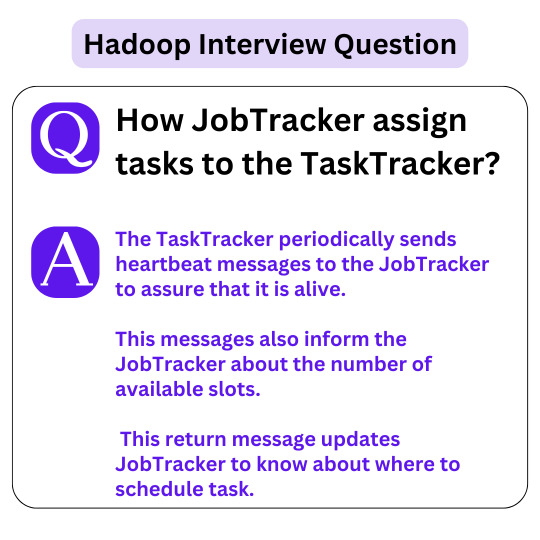

Hadoop Interview Questions: How does the JobTracker assign tasks to the TaskTracker? For more information and a tutorial, see https://bit.ly/3y7dhRh

0 notes

Text

Join Now : https://meet.goto.com/844038797

Attend Online Free Demo On AWS Data Engineering with Data Analytics by Mr. Srikanth

Demo on: 09th December (Saturday) @ 9:30 AM (IST).

Contact us: +91-9989971070.

Join us on Telegram: https://t.me/+bEu9LVFFlh5iOTA9

Join us on WhatsApp: https://www.whatsapp.com/catalog/919989971070

Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html

#aws#AWSRedshift#redshift#athena#S3#Hadoop#MSSQL#Sqoop#EMR#Scala#BigData#MySQL#java#database#spark#git#ETL#Dataengineer#MongoDB#oracle#visualpathedu#FreeDemo#onlinetraining#newcourses#latesttechnology#dataanalysis#dataanalytics#awsdataengineer#dataengineering#awsdataengineering

0 notes

Text

I've looked through all of these and they all are tools for the Apache framework made in around the mid 2000s, so long before the Poobening. Some of my favorites:

Hadoop: Named after the founder's toy elephant (their mascot is an elephant)

Pig: High-level development platform for Hadoop programs. The coding language is called Pig Latin

Flume: "A service for collecting, aggregating, and moving large amounts of log data."

CompSci people love getting silly with it.

What the fuck are these Poob ass frameworks

2K notes


Note

Please bless me with some words any words

Squippity Sqoop

The words didn't have to be real, right?

18 notes


Note

beep beep sqoop morp

̶̴̢̢̡̧̡̡̢̨̛̛̛̤̠̝̤͈͚̬̜̫̪͚̳͍̥̥̜̙̱̳̟̮͍̪͕͈̼͓͖̗͓̺͇̘̥̭͚̻͉̤͈̟͕̲͕͖͍̲̗̜͖͙̜̹̦̤̘̺̜͔͋̽̓̌̀̅̀̈́́̈́͂́͗͌̿̊̆̿̇̈́̀͒̒͆͗̊́̇̔̍̿͂͂͂̇͒̋͂̉̾̀̎̉̃͐͌̈́̈́̏͆̐̈́͑̓͒̑̈̅̊͗̎́̌͐̾͛͑̑̎̀̅͛̅̀̇̄̽̎̐̅̑͑̄̀̌̄̽͑̔̎̏̀̀̽̏͒͗̆̒̊̇̔̌̐͊̚̚͝͝͝ą̸̧̨̨̨͚͓̮̥̪̗̥̪̤̝̭̞̙̘̫̯̣͕͓̦̫̮̭͔̰̙͈̮͙̺̝̟̞̦͓̖͓̗̮̮̬̜͕̝̦̲́͆̾͆̔͊̂̅̊̀͗͐̑̿͜͜ͅl̶̢̡̡̛̛̝̞̞̭̦̳͈̖̺̺̘̦͈̹̥̗̠̺̰̙̮͓̳̪̩̼͓̦̱͊̿̈̐̀͌̓̈́͋̎̌͆̊̽͑̉̄̄͑͆̓͆̈́͗̀͗͐̾̈̏́̓̔̽̇͗̒͐̿̋̀̌̈́̋̂̓̒̄̄̀́̈́͌̋̏̀̒̕͘̚͠͠͝͝͝͝ͅį̵̡̛̗̻̦̻̫͓͖͇̰̲͔͇͖̫͎̙̮͇̟̻̭̤̣̱͉͓̪̜͇̳̖͔̥͉͎̣̲̱͓͍̃͒̂̉̎̃͒̇̄͊̋̽̾͆͗͋̐̓͑͜͜͜͜͝ͅͅę̴̡̧̧̲͍͍͎͓̘͉̜̺̤̥̘̜͔̖̟͎̻̗̤͖̯͔̙̘̥̯̬͔̙͎͕̟̼̖̠̝̩̪̫͎̼͔̞̪̦̝̺͓͚͚̃͋̽́͊̊̔͛̋͜͜͜͝ͅͅͅͅn̶̡̨̧̨̧̨̢̜̱̞̮̗͇̻̪͉̭̘̙̤̩̳̜̞̣͉͖̪̖̹̣̻͍̱̠͎̟̭͓̗͉͔̮̬̣̜̆̾͐̽̈́̓͂̒́̎̂̀́̾̑́̌͛͋̈́͊̀̓͌̇͊̏̍̈́̓̐̈́̉̀̊͋̔̀͊̾̃͛̈̐̃̈́̏́̈́͋̓͘͘͘̚͘̕͜͝ͅͅ ̶̹̳̞͓͉̼̘̟̝̙̙̥̜̟̲͚͙̳̬̘̯̫̗̦̳̳͋͒̇̒͗́͛̎̏̈̂̌̈̈́͐͗́̏͗ͅͅĄ̴̧̧̧̡̡̛̛̤̰̼̟̜͚̥̮̗̬̬͉̖̩̥̙͙̙͉̝̫̜̫̞͈̖͇̭̟̱̞̘͙̟̭͉̦͚͖͇̥̻̲̣͇̘̭̝͚̟͎̻͙͌̄͆̈́̏̒̌̈́̏͂͐́̐̊̈́̌̿̿̀̐́̄̾͑̎̓̾̾̾̐̈́͐͗͐̀̏͊̓̽̍̎̃̓̾̄̅͘͜͜͠͠͠͝͝͠͠͠ͅͅͅL̷̢̝̤͙̝̠̖̫͉̮̝̹̜̟̗̼͔̫͕̻̫̈́͂ͅĪ̷̢̛̪͕̥̲͚̼̼̼̙͇̲̰̥̤̠͕̗͎̞̞̭̗̰͓̙̼̫̟̞͚̋͌̈́͋́̈͌́͆̆̋́̄̽͒̋̔̀̈́͂̊̇̉͛͌̿̓͗̌̾̒͆́̇̎̈́̌̋͐̍̌̊͋̂͊͑̀̕̕̚͘̚͘̕͝͠͝͝͝Ȩ̸̧̨̡͚͓̣̤̙͎̪̥͕̟̣̤̖̖͎̞̭̠̝̠͇̺̮̯̻̗̼̭̜̱̦͖͎̙̮̞̘̼̹̯̪̯̹͇̳̎͗̌̎̽́͐͌̋̄͑̕͝ͅN̶̨̡̢̨̢̧̧̢̳̮̹̼̦̙̰̝̜̺͕̲̞̼̰̙͔̘̗̻̖͈̤̼̻̭̞̤̫̖͓̜̥̻͎̻̩̜̟̹̩̖̩̣̟͎͈̪̰̖̘͙͙̩͈̲̘̻̲̜̬͚͕͊̒̅̑̐͂͑̓́̎̇̀̍͌̒͐͛̀̃̍͒̋̊̈́͆̔̔͐̇̐̉͛͋̅͌̏͝ ̸̢̳̲͕̦͔͓̰̥̳̩̉͆̉͒̂̆͆̑̿̂̆͑͂̂́̀̀̿̄̑̔̌̀̑̎̅͒͊̅̇͒̒͛̇͆́͊͜

̸̢̢̡̡̛͙̥͎͇̞̱̼̮̭̝̹̱̹̮͔̰̹̤͕̞̲͚̝̘̪͓̟̜̙͍̹̭͖̬̭͉̭͍̗̳͆̓̋͒̿̃̓̅̂̈́̈́̇̀̋̓̓͐̍̍̌̐̇̄̌͜͝͠͝

3 notes


Note

Squibbibbles YOU

SQOOPS YOU RIGHT UP and SQUISSES YOU!!!

3 notes


Text

Big Data Training Coaching Centers in Chennai

Chennai hosts several leading coaching centers that offer specialized training in Big Data technologies such as Apache Hadoop, Spark, Hive, Pig, HBase, Sqoop, and Kafka, along with real-time project exposure. Whether you're a fresher, student, or working professional, institutes like Greens Technologys, FITA Academy, Besant Technologies, and Credo Systemz provide expert-led sessions, practical assignments, and hands-on labs tailored to meet industry requirements.

0 notes

Text

Big Data Analytics: Tools & Career Paths

In this digital era, data is being generated at an unimaginable speed. Social media interactions, online transactions, sensor readings, and scientific research all contribute to an extremely high volume, velocity, and variety of information, collectively referred to as Big Data. Raw data at this scale is of little use on its own. This is where Big Data Analytics comes in: it transforms huge volumes of unstructured and semi-structured data into actionable insights that drive decision-making, innovation, and growth.

Big Data Analytics was once regarded as a niche skill. Today it is a must-have capability for working professionals across the tech and business landscapes, and it opens up numerous career opportunities.

What Exactly Is Big Data Analytics?

Big Data Analytics is the process of examining huge, varied data sets to uncover hidden patterns, customer preferences, market trends, and other useful information. The aim is to enable organizations to make better business decisions. It differs from regular data processing because it relies on specialized tools and techniques built to handle the three Vs:

Volume: Masses of data.

Velocity: Data generated and processed at high speed.

Variety: Data from diverse sources and in varying formats (structured, semi-structured, unstructured).

Key Tools in Big Data Analytics

Mastering Big Data requires skill with the right tools. Here are some of the most widely used:

Hadoop Ecosystem: The core layer is an open-source framework for storing and processing large datasets across clusters of computers. Key components include:

HDFS (Hadoop Distributed File System): For storing data.

MapReduce: For processing data.

YARN: For resource-management purposes.

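Hive, Pig, Sqoop: Higher-level tools for SQL-style querying (Hive), data-flow scripting (Pig), and bulk data transfer between Hadoop and relational databases (Sqoop).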
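To make the MapReduce component above more concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce phases, using word counting as the example. It is plain Python rather than Hadoop's own API, and the function names are made up for illustration. A real cluster would run the mappers and reducers in parallel on different nodes.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: combine every value seen for one key."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence over an iterable of text lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["sqoop imports data", "hive queries data"])
print(counts)
```

The mapper and reducer never share state, which is what lets a framework like Hadoop spread them across many machines.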

Apache Spark: A powerful, flexible open-source analytics engine for big data processing. Because it can keep intermediate data in memory, it is often much faster than MapReduce, especially for iterative algorithms, hence its popularity in real-time analytics, machine learning, and stream processing. Languages: Scala, Python (PySpark), Java, R.

NoSQL Databases: In contrast to traditional relational databases, NoSQL (Not only SQL) databases are designed to handle unstructured and semi-structured data at scale. Examples include:

MongoDB: Document-oriented (e.g., for JSON-like data).

Cassandra: Wide-column store (e.g., for high-volume writes).

Neo4j: Graph DB (e.g., for data heavy with relationships).
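The three data models above can be pictured with ordinary Python structures. This is only an illustration of how the data is shaped, not how MongoDB, Cassandra, or Neo4j store it internally or how their client APIs look. All names and sample values are hypothetical.

```python
# Document model (MongoDB-style): one self-contained, JSON-like record.
order_document = {
    "_id": 1,
    "customer": {"name": "Asha", "city": "Ahmedabad"},
    "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}],
}

# Wide-column model (Cassandra-style): rows grouped under a partition key,
# so new readings for one sensor are simply appended to that partition.
sensor_readings = {}  # partition key -> list of (timestamp, value) rows


def write_reading(sensor_id, timestamp, value):
    """Append one row to the partition that belongs to sensor_id."""
    sensor_readings.setdefault(sensor_id, []).append((timestamp, value))


# Graph model (Neo4j-style): nodes plus explicit relationships between them.
follows = {"asha": {"ravi"}, "ravi": {"meera"}, "meera": set()}


def friends_of_friends(user):
    """People exactly two hops away, excluding the user and direct follows."""
    direct = follows.get(user, set())
    two_hops = set()
    for friend in direct:
        two_hops |= follows.get(friend, set())
    return two_hops - direct - {user}


write_reading("s1", 1, 20.5)
write_reading("s1", 2, 21.0)
print(friends_of_friends("asha"))
```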

Data Warehousing & ETL Tools: Tools for extracting, transforming, and loading (ETL) data from various sources into a data warehouse for analysis. Examples: Talend, Informatica. Cloud-based solutions such as AWS Redshift, Google BigQuery, and Azure Synapse Analytics are also widely used.

Data Visualization Tools: Essential for presenting complex Big Data insights in an understandable and actionable format. Tools like Tableau, Power BI, and Qlik Sense are widely used for creating dashboards and reports.

Programming Languages: Python and R are the dominant languages for data manipulation, statistical analysis, and integrating with Big Data tools. Python's extensive libraries (Pandas, NumPy, Scikit-learn) make it particularly versatile.
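As a rough picture of the extract, transform, and load steps mentioned under ETL tools, here is a small sketch using only Python's standard library. The CSV content, table name, and column names are invented for the example, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one row has a missing amount, one a lower-case code.
RAW_CSV = """order_id,amount,currency
1,10.50,usd
2,,usd
3,7.25,USD
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, cast types, upper-case currency."""
    return [
        (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
        if r["amount"]
    ]


def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
print(total)
```

Keeping the three steps as separate functions makes it easy to swap the source or the target without touching the cleaning logic.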

Promising Career Paths in Big Data Analytics

The Big Data job market in India is evolving fast and offers diverse, well-paid professional roles:

Big Data Engineer: Designs, builds, and maintains the large-scale data processing systems and infrastructure.

Big Data Analyst: Works on big datasets to find the trends, patterns, and insights on which major decisions are based.

Data Scientist: Uses statistics, programming, and domain expertise to create predictive models and glean deep insights from data.

Machine Learning Engineer: Concentrates on the development and deployment of machine learning models on Big Data platforms.

Data Architect: Designs the entire data environment and strategy of an organization.

Launch Your Big Data Analytics Career

If you are drawn to data and what it can do, consider taking a specialized Big Data Analytics course. Many computer training institutes in Ahmedabad offer comprehensive courses covering these tools and concepts, usually as part of Data Science with Python or specialized training in AI and Machine Learning. Look for courses that offer hands-on experience, real projects, and industry mentoring to help you compete for these in-demand jobs.

Once you are thoroughly trained in Big Data Analytics tools and concepts, you can put information to work for innovation and command a high salary.

At TCCI, we don't just teach computers — we build careers. Join us and take the first step toward a brighter future.

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

0 notes

Text

Hadoop Training in Mumbai – Master Big Data for a Smarter Career Move

In the age of data-driven decision-making, Big Data professionals are in high demand. Among the most powerful tools in the Big Data ecosystem is Apache Hadoop, a framework that allows businesses to store, process, and analyze massive volumes of data efficiently. If you're aiming to break into data science, analytics, or big data engineering, Hadoop Training in Mumbai is your gateway to success.

Mumbai, being India’s financial and technology hub, offers a range of professional courses tailored to help you master Hadoop and related technologies—no matter your experience level.

Why Learn Hadoop?

Data is the new oil, and Hadoop is the refinery.

Apache Hadoop is an open-source platform that allows the distributed processing of large data sets across clusters of computers. It powers the backend of major tech giants, financial institutions, and healthcare systems across the world.

Key Benefits of Learning Hadoop:

Manage and analyze massive datasets

Open doors to Big Data, AI, and machine learning roles

High-paying career opportunities in leading firms

Work globally with a recognized skillset

Strong growth trajectory in data engineering and analytics fields

Why Choose Hadoop Training in Mumbai?

Mumbai isn’t just the financial capital of India—it’s also home to top IT parks, multinational corporations, and data-centric startups. From Andheri and Powai to Navi Mumbai and Thane, you’ll find world-class Hadoop training institutes.

What Makes Mumbai Ideal for Hadoop Training?

Hands-on training with real-world data sets

Expert instructors with industry experience

Updated curriculum with Hadoop 3.x, Hive, Pig, HDFS, Spark, etc.

Options for beginners, working professionals, and tech graduates

Job placement assistance with top MNCs and startups

Flexible learning modes – classroom, weekend, online, fast-track

What You’ll Learn in Hadoop Training

Most Hadoop Training in Mumbai is designed to be job-oriented and certification-ready.

A typical course covers:

Fundamentals of Big Data & Hadoop

HDFS (Hadoop Distributed File System)

MapReduce Programming

Hive, Pig, Sqoop, Flume, and HBase

Apache Spark Integration

YARN – Resource Management

Data Ingestion and Real-Time Processing

Hands-on Projects + Mock Interviews

Some courses also prepare you for Cloudera, Hortonworks, or Apache Certification exams.

Who Should Take Hadoop Training?

Students from Computer Science, BSc IT, BCA, MCA backgrounds

Software developers and IT professionals

Data analysts and business intelligence experts

Anyone searching for “Hadoop Training Near Me” to move into Big Data roles

Working professionals looking to upskill in a high-growth domain

Career Opportunities After Hadoop Training

With Hadoop skills, you can explore job titles like:

Big Data Engineer

Hadoop Developer

Data Analyst

Data Engineer

ETL Developer

Data Architect

Companies like TCS, Accenture, Capgemini, LTI, Wipro, and data-driven startups in Mumbai’s BKC, Vikhroli, and Andheri hire Hadoop-trained professionals actively.

Find the Best Hadoop Training Near You with Quick India

QuickIndia.in is your trusted platform to explore the best Hadoop Training in Mumbai. Use it to:

Discover top-rated institutes in your area

Connect directly for demo sessions and course details

Compare course content, fees, and timings

Read verified reviews and ratings from past learners

Choose training with certifications and placement support

Final Thoughts

The future belongs to those who can handle data intelligently. By choosing Hadoop Training in Mumbai, you're investing in a skill that’s in-demand across the globe.

Search “Hadoop Training Near Me” on QuickIndia.in today. Enroll. Learn. Get Certified. Get Hired.

Quick India – Powering India’s Skill Economy

Smart Search | Skill-Based Listings | Career Growth Starts Here

0 notes

Text

Big Data Analytics Training - Learn Hadoop, Spark

Big Data Analytics Training – Learn Hadoop, Spark & Boost Your Career

Introduction: Why Big Data Analytics?

In today’s digital world, data is the new oil. Organizations across the globe are generating vast amounts of data every second. But without proper analysis, this data is meaningless. That’s where Big Data Analytics comes in. By leveraging tools like Hadoop and Apache Spark, businesses can extract powerful insights from large data sets to drive better decisions.

If you want to become a data expert, enrolling in a Big Data Analytics Training course is the first step toward a successful career.

What is Big Data Analytics?

Big Data Analytics refers to the complex process of examining large and varied data sets—known as big data—to uncover hidden patterns, correlations, market trends, and customer preferences. It helps businesses make informed decisions and gain a competitive edge.

Why Learn Hadoop and Spark?

Hadoop: The Backbone of Big Data

Hadoop is an open-source framework that allows distributed processing of large data sets across clusters of computers. It includes:

HDFS (Hadoop Distributed File System) for scalable storage

MapReduce for parallel data processing

Hive, Pig, and Sqoop for data manipulation

Apache Spark: Real-Time Data Engine

Apache Spark is a fast and general-purpose cluster computing system. It performs:

Real-time stream processing

In-memory data computing

Machine learning and graph processing

Together, Hadoop and Spark form the foundation of many big data architectures.

What You'll Learn in Big Data Analytics Training

Our expert-designed course covers everything you need to become a certified Big Data professional:

1. Big Data Basics

What is Big Data?

Importance and applications

Hadoop ecosystem overview

2. Hadoop Essentials

Installation and configuration

Working with HDFS and MapReduce

Hive, Pig, Sqoop, and Flume

3. Apache Spark Training

Spark Core and Spark SQL

Spark Streaming

MLlib for machine learning

Integrating Spark with Hadoop

4. Data Processing Tools

Kafka for data ingestion

NoSQL databases (HBase, Cassandra)

Data visualization using tools like Power BI

5. Live Projects & Case Studies

Real-time data analytics projects

End-to-end data pipeline implementation

Domain-specific use cases (finance, healthcare, e-commerce)

Who Should Enroll?

This course is ideal for:

IT professionals and software developers

Data analysts and database administrators

Engineering and computer science students

Anyone aspiring to become a Big Data Engineer

Benefits of Our Big Data Analytics Training

100% hands-on training

Industry-recognized certification

Access to real-time projects

Resume and job interview support

Learn from certified Hadoop and Spark experts

Final Thoughts

The demand for Big Data professionals continues to rise as more businesses embrace data-driven strategies. By mastering Hadoop and Spark, you position yourself as a valuable asset in the tech industry. Whether you're looking to switch careers or upskill, Big Data Analytics Training is your pathway to success.

0 notes

Text

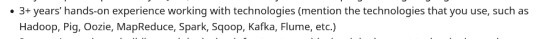

Hadoop Interview Questions: What is the function of the JobTracker in Hadoop? How many instances of a JobTracker run on a Hadoop cluster? For more information and a tutorial, see https://bit.ly/3y7dhRh

0 notes

Text

Join Visualpath Training Institute and advance your career with the AWS Data Engineering Online Training course, taught by real-time experts with live projects. Get real-time exposure to the technology; recorded videos will be provided. Enroll now for a FREE DEMO! Contact us: +91-9989971070. Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html

#aws#AWSRedshift#redshift#athena#S3#Hadoop#MSSQL#Sqoop#Scala#BigData#MySQL#java#database#spark#git#ETL#Dataengineer#MongoDB#oracle#freedemo#awsdataengineer#awscertified#AWSTools#python

0 notes

Text

Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more.

🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience.

🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure.

🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation.

🔹 Flexible Learning Modes: Classroom and online training options available.

🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics

✔️ Software developers, system administrators, and data engineers

✔️ Business intelligence professionals and database administrators

✔️ Anyone passionate about Big Data and Machine Learning

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune

0 notes

Text

9 Important Tips for First-Year Engineering Students - Arya College

For B.Tech first-year students, Arya College of Engineering & I.T., which is the best engineering college in Jaipur, says success involves a combination of academic focus, skill development, and social integration. Here's a comprehensive guide to help you navigate your first year:

1. Academics and Skill Development:

Maintain good grades: Good grades are beneficial. Focus on understanding core subjects such as mathematics, physics, computer programming, engineering graphics, and basic engineering principles.

Explore specializations: B.Tech degrees offer various specializations like Computer Science, Electronics and Communication, Mechanical, Civil, Electrical, Chemical, Aerospace, and more.

Technical, analytical, and problem-solving skills: B.Tech programs are designed to impart a range of technical, analytical, and problem-solving skills, along with a solid grounding in mathematics and science.

Certification courses: Pursue certification courses to become more employable.

Stay updated: Update yourself with new technology and topics related to core subjects. Open yourself to every opportunity for learning.

2. Extracurricular Activities and Social Life:

Find college friends: During the first few weeks of school, be social, as these people will grow with you and be very influential in your life for the next four years.

Have fun, but don't make choices you will regret: Your first year of college life is when you’re supposed to go crazy, but don’t make choices you’ll regret.

Develop your social skills: Focus on developing your social skills.

3. Projects and Internships:

Projects and internships: Do various projects and internships to showcase on your resume.

4. Mindset and Goals:

Embrace opportunities: Open yourself to every opportunity for learning.

Avoid lagging behind: Work to avoid lagging behind peers in better colleges by developing an amazing skillset.

By focusing on these key areas, B.Tech first-year students can lay a strong foundation for a successful and fulfilling college experience.

What are the best certification courses for a first-year B.Tech student?

For first-year B.Tech students, certification courses can enhance skills and broaden career prospects. Here are some of the best options to consider:

Job-Oriented Courses:

These short-term training programs equip individuals with specific skills and knowledge required for particular job roles or industries. They focus on practical and hands-on learning, making participants job-ready and enhancing their employability.

Specific Certification Courses:

Cybersecurity Certification: Because of the increase in data threats and hackers, there is a high demand for skilled individuals in the field of Cybersecurity. The cybersecurity certification program helps you learn about the nature of cyberattacks, how to recognize online risks, and how to take preventative actions.

Data Science Certification: The increased demand for Big Data skills and technologies has led to certification programs in data science. The course covers data management tools and topics such as Hadoop, R, Flume, Sqoop, Machine Learning, Mahout, etc.

Data Analyst: The data analyst certification helps learners develop skills like critical thinking & problem-solving.

Web Designing: B.Tech CSE students with an additional certification in web development have higher chances to be selected as software engineers, UX/UI designers, web designers & web developers.

Full Stack Web Development:

Python Programming:

Artificial Intelligence:

Matrix Algebra for Engineers: This certification emphasizes linear algebra that an engineer should know.

Artificial Intelligence for Robotics: This course covers basic methods in AI such as probabilistic inference, planning, search, localization, tracking, and control, all focusing on robotics.

Technical Report Writing for Engineers: This course introduces the art of technical writing and teaches engineers the techniques to construct impressive engineering reports.

Embedded Systems - Shape The World: Microcontroller Input/Output: This course will teach you to solve real-world problems using embedded systems.

0 notes

Text

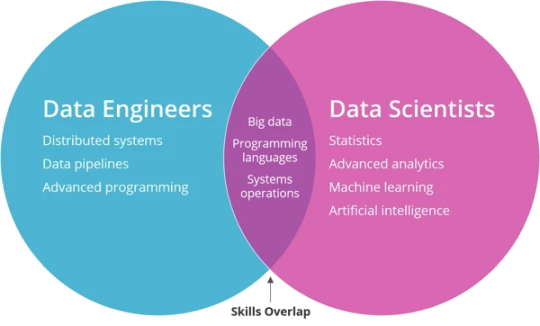

Big Data and Data Engineering

Big Data and Data Engineering are essential concepts in modern data science, analytics, and machine learning.

They focus on the processes and technologies used to manage and process large volumes of data.

Here’s an overview:

1. What is Big Data?

Big Data refers to extremely large datasets that cannot be processed or analyzed using traditional data processing tools or methods.

It typically has the following characteristics:

Volume: Huge amounts of data (petabytes or more).

Variety: Data comes in different formats (structured, semi-structured, unstructured).

Velocity: The speed at which data is generated and processed.

Veracity: The quality and accuracy of data.

Value: Extracting meaningful insights from data.

Big Data is often associated with technologies and tools that allow organizations to store, process, and analyze data at scale.

2. Data Engineering: Overview

Data Engineering is the process of designing, building, and managing the systems and infrastructure required to collect, store, process, and analyze data.

The goal is to make data easily accessible for analytics and decision-making.

Key areas of Data Engineering:

Data Collection: Gathering data from various sources (e.g., IoT devices, logs, APIs).

Data Storage: Storing data in data lakes, databases, or distributed storage systems.

Data Processing: Cleaning, transforming, and aggregating raw data into usable formats.

Data Integration: Combining data from multiple sources to create a unified dataset for analysis.

3. Big Data Technologies and Tools

The following tools and technologies are commonly used in Big Data and Data Engineering to manage and process large datasets:

Data Storage:

Data Lakes: Large storage systems that can handle structured, semi-structured, and unstructured data. Examples include Amazon S3, Azure Data Lake, and Google Cloud Storage.

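Distributed File Systems:

Systems that allow data to be stored across multiple machines. Hadoop HDFS is the standard example. Apache Cassandra is sometimes listed alongside it, but it is a distributed NoSQL database rather than a file system.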

Databases:

Relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra, HBase).

Data Processing:

Batch Processing: Handling large volumes of data in scheduled, discrete chunks. Common tools: Apache Hadoop (MapReduce framework) and Apache Spark (offers both batch and stream processing).

Stream Processing: Handling real-time data flows. Common tools: Apache Kafka (message broker), Apache Flink (streaming data processing), and Apache Storm (real-time computation).
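The difference between batch and stream processing can be sketched in a few lines of plain Python. This is an illustration of the two styles only, not of how Hadoop, Spark, or Flink implement them. The function names and the window size are assumptions made for the example.

```python
from collections import deque


def batch_average(readings):
    """Batch: the whole dataset is available, so process it in one pass."""
    return sum(readings) / len(readings)


def streaming_averages(readings, window=3):
    """Stream: handle one event at a time, keeping only a small sliding window."""
    recent = deque(maxlen=window)
    for value in readings:
        recent.append(value)
        # Emit an updated result as soon as each event arrives.
        yield sum(recent) / len(recent)


events = [10, 20, 30, 40]
print(batch_average(events))             # one answer after all data is in
print(list(streaming_averages(events)))  # one answer per incoming event
```

The batch function needs the full list before it can answer, while the streaming generator holds at most `window` values in memory and produces a result per event.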

ETL (Extract, Transform, Load):

Tools like Apache NiFi, Airflow, and AWS Glue are used to automate data extraction, transformation, and loading processes.

Data Orchestration & Workflow Management:

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. Kubernetes and Docker are used to deploy and scale applications in data pipelines.
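Workflow orchestrators model a pipeline as a directed acyclic graph (DAG) of tasks and run each task only after its dependencies have finished. The sketch below shows that core idea with Python's standard `graphlib` module (Python 3.9 or later). It is not Airflow's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "load_warehouse": {"aggregate"},
    "refresh_dashboard": {"load_warehouse"},
}


def run_pipeline(dag, tasks):
    """Run every task once, in an order that respects its dependencies."""
    executed = []
    for name in TopologicalSorter(dag).static_order():
        tasks[name]()  # a real orchestrator would also retry, log, and alert
        executed.append(name)
    return executed


# Placeholder callables stand in for the real work of each step.
tasks = {name: (lambda: None) for name in pipeline}
order = run_pipeline(pipeline, tasks)
print(order)
```

A real scheduler adds timing, retries, and parallel execution of independent branches on top of this ordering.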

Data Warehousing & Analytics:

Amazon Redshift, Google BigQuery, Snowflake, and Azure Synapse Analytics are popular cloud data warehouses for large-scale data analytics.

Apache Hive is a data warehouse built on top of Hadoop to provide SQL-like querying capabilities.

Data Quality and Governance:

Tools like Great Expectations, Deequ, and AWS Glue DataBrew help ensure data quality by validating, cleaning, and transforming data before it’s analyzed.

4. Data Engineering Lifecycle

The typical lifecycle in Data Engineering involves the following stages:

Data Ingestion: Collecting and importing data from various sources into a central storage system. This could include real-time ingestion using tools like Apache Kafka or batch-based ingestion using Apache Sqoop.
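For batch ingestion, Sqoop parallelizes an import by looking up the minimum and maximum of a split column and giving each map task an even share of that range. The sketch below imitates the idea on one machine with SQLite. It is not Sqoop's code or CLI, it assumes a non-empty table with an integer key, and the table, column, and function names are made up for the example.

```python
import sqlite3


def split_ranges(lo, hi, num_mappers):
    """Divide the inclusive key range [lo, hi] into contiguous half-open slices."""
    span = hi - lo + 1
    bounds = [lo + (i * span) // num_mappers for i in range(num_mappers)]
    bounds.append(hi + 1)
    # Skip empty slices, which appear when there are more mappers than keys.
    return [(a, b) for a, b in zip(bounds, bounds[1:]) if a < b]


def import_table(conn, table, split_by, num_mappers=4):
    """Read a table slice by slice; each slice stands in for one map task."""
    # Identifiers are interpolated directly, so they must be trusted values.
    lo, hi = conn.execute(
        f"SELECT MIN({split_by}), MAX({split_by}) FROM {table}"
    ).fetchone()
    parts = []
    for start, end in split_ranges(lo, hi, num_mappers):
        rows = conn.execute(
            f"SELECT * FROM {table} WHERE {split_by} >= ? AND {split_by} < ?",
            (start, end),
        ).fetchall()
        parts.append(rows)  # a real import would write each slice to its own file
    return parts


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(1, 11)]
)
parts = import_table(conn, "orders", "id", num_mappers=4)
print([len(p) for p in parts])
```

Even splitting works well when keys are spread uniformly. A heavily skewed key would leave some slices much larger than others, which is why the choice of split column matters.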

Data Transformation (ETL/ELT): After ingestion, raw data is cleaned and transformed, using tools like Apache Spark, AWS Glue, and Talend. This may include:

Data normalization and standardization.

Removing duplicates and handling missing data.

Aggregating or merging datasets.
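The transformation steps listed above can be sketched as small, independent functions. This is a plain-Python illustration on a handful of records, not how Spark, Glue, or Talend express the same work. The field names, sample data, and default value are assumptions made for the example.

```python
def normalize(record):
    """Standardize formats: trimmed lower-case email, title-case city."""
    return {
        "email": record["email"].strip().lower(),
        "city": (record.get("city") or "").strip().title() or None,
        "spend": record.get("spend"),
    }


def deduplicate(records, key="email"):
    """Keep the first record seen for each key value."""
    seen, unique = set(), []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


def fill_missing(records, field="spend", default=0.0):
    """Replace missing values in one field with an explicit default."""
    return [
        {**r, field: default if r[field] is None else r[field]} for r in records
    ]


def total_by_city(records):
    """Aggregate spend per city."""
    totals = {}
    for r in records:
        totals[r["city"]] = totals.get(r["city"], 0.0) + r["spend"]
    return totals


raw = [
    {"email": " A@x.com ", "city": "pune", "spend": 10.0},
    {"email": "a@x.com", "city": "Pune", "spend": 99.0},   # duplicate after cleanup
    {"email": "b@x.com", "city": "mumbai", "spend": None},  # missing value
]
clean = fill_missing(deduplicate([normalize(r) for r in raw]))
print(total_by_city(clean))
```

Normalizing before deduplicating matters here: the two `a@x.com` records only match once whitespace and case have been cleaned up.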

Data Storage:

After transformation, the data is stored in a format that can be easily queried.

This could be in a data warehouse (e.g., Snowflake, Google BigQuery) or a data lake (e.g., Amazon S3).

Data Analytics & Visualization:

After the data is stored, it is ready for analysis. Data scientists and analysts use tools like SQL, Jupyter Notebooks, Tableau, and Power BI to create insights and visualize the data.

Data Deployment & Serving:

In some use cases, data is deployed to serve real-time queries using tools like Apache Druid or Elasticsearch.

5. Challenges in Big Data and Data Engineering

Data Security & Privacy:

Ensuring that data is secure, encrypted, and complies with privacy regulations (e.g., GDPR, CCPA).

Scalability:

As data grows, the infrastructure needs to scale to handle it efficiently.

Data Quality:

Ensuring that the data collected is accurate, complete, and relevant.

Data Integration:

Combining data from multiple systems with differing formats and structures can be complex.

Real-Time Processing:

Managing data that flows continuously and needs to be processed in real-time.

6. Best Practices in Data Engineering

Modular Pipelines: Design data pipelines as modular components that can be reused and updated independently.

Data Versioning: Keep track of versions of datasets and data models to maintain consistency.

Data Lineage: Track how data moves and is transformed across systems.

Automation: Automate repetitive tasks like data collection, transformation, and processing using tools like Apache Airflow or Luigi.

Monitoring: Set up monitoring and alerting to track the health of data pipelines and ensure data accuracy and timeliness.

7. Cloud and Managed Services for Big Data

Many companies are now leveraging cloud-based services to handle Big Data:

AWS:

Offers tools like AWS Glue (ETL), Redshift (data warehousing), S3 (storage), and Kinesis (real-time streaming).

Azure:

Provides Azure Data Lake, Azure Synapse Analytics, and Azure Databricks for Big Data processing.

Google Cloud:

Offers BigQuery, Cloud Storage, and Dataflow for Big Data workloads.

Data Engineering plays a critical role in enabling efficient data processing, analysis, and decision-making in a data-driven world.

0 notes