#Two Tier Client Server Database Architecture

Client Server Database Architecture

Client server database architecture consists of two logical components: the client and the server. Clients send requests to the server to perform a specific task. Servers receive the commands sent by clients, perform the task, and send the appropriate result back to the client. An example of a client is a PC, whereas…
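The request and response flow described above can be sketched in a few lines of Python. This is only a minimal illustration, not a production design: the `employees` table, the one-query-per-connection line protocol, and the function names `start_server` and `query` are all assumptions made for the example, and SQLite stands in for whatever database a real server tier would use.

```python
import json
import socket
import socketserver
import sqlite3
import threading


class QueryHandler(socketserver.StreamRequestHandler):
    """Server tier: read one SQL query per connection, reply with JSON rows."""

    def handle(self):
        sql = self.rfile.readline().decode("utf-8").strip()
        # A real server would validate or restrict the query; this sketch
        # executes it as received to keep the flow visible.
        rows = self.server.db.execute(sql).fetchall()
        self.wfile.write((json.dumps(rows) + "\n").encode("utf-8"))


def start_server():
    """Start the server tier on a free localhost port and return it."""
    # check_same_thread=False: the connection is created here but used from
    # the server thread, which handles requests one at a time.
    db = sqlite3.connect(":memory:", check_same_thread=False)
    db.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT)")
    db.executemany("INSERT INTO employees (name) VALUES (?)",
                   [("Ada",), ("Grace",)])
    db.commit()
    server = socketserver.TCPServer(("127.0.0.1", 0), QueryHandler)
    server.db = db  # hypothetical attribute used by QueryHandler
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def query(address, sql):
    """Client tier: send a request and wait for the server's result."""
    with socket.create_connection(address, timeout=5) as sock:
        sock.sendall((sql + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            return json.loads(reader.readline())


if __name__ == "__main__":
    server = start_server()
    print(query(server.server_address, "SELECT id, name FROM employees"))
    server.shutdown()
    server.server_close()
```

The client never touches the database file itself; it only sees what the server chooses to return, which is the defining property of the two-tier split.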

#Client Server Database Architecture#Problems in two tier architecture#Two Tier Client Server Database Architecture

The Debate of the Decade: Which Backend Framework to Choose, Node.js or Ruby on Rails?

New, cutting-edge web development frameworks and tools have been made available in recent years. While this variety is great for developers and company owners alike, it does come with certain drawbacks. It creates a lot of confusion and also slows down development at a time when quick and effective answers are essential. This is why discussions about whether Ruby on Rails or Node.js is superior continue to rage. Which framework is best for which kind of project is a hotly contested question. Nivida Web Solutions is a top-tier web development company in Vadodara. Nivida Web Solutions is the place to go if you want to make a beautiful website that gets people talking.

Identifying the best option for your project is challenging. This article breaks things down for you by comparing and contrasting two widely used web development technologies, Ruby on Rails (RoR) and Node.js. Let's get started with a quick overview of each.

Node.js:

Node.js makes it possible to run JavaScript, traditionally a client-side language, on the server. It is an efficient server-side JavaScript runtime built on Chrome's V8 engine, which compiles JavaScript into machine code that the hardware can execute directly. This compilation step contributes significantly to the performance achievable under normal operating conditions.

There are several open-source libraries available through the Node Package Manager that make the Node.js ecosystem special. Node.js's built-in modules make it suitable for managing everything from computer resources to security information. Are you prepared to make your mark in the online world? If you want to improve your online reputation, team up with Nivida Web Solutions, the best web development company in Gujarat.

Key Features:

· Cross-Platform Interoperability

· V8 Engine

· Microservice Development and Swift Deployment

· Easy to Scale

· Dependable Technology

Ruby on Rails:

The back-end framework Ruby on Rails (RoR) is commonly used for web applications. Developers appreciate the framework because it provides a solid foundation upon which other website elements may be built. A custom-made website can greatly enhance your visibility on the web. If you're looking for a trustworthy web development company in India, look no further than Nivida Web Solutions.

Ruby on Rails' cutting-edge features, such as automatic table generation, database migrations, and view scaffolding, are a big reason for the framework's widespread adoption.

Key Features:

· MVC Structure

· Active Record

· Convention Over Configuration (CoC)

· Automatic Deployment

· The Boom of Mobile Apps

· Sharing Data in Databases

Node.js v/s RoR:

· Libraries:

The Rails package library is called RubyGems. Node.js likewise has the Node Package Manager (npm), which provides libraries and packages to help programmers avoid duplicating their work. Both RubyGems and npm make it easy to publish and install packages with strict version control.

· Performance:

Node.js has been lauded for its speed. It is a go-to choice for projects that must handle many concurrent requests because of its ability to run asynchronous code and the fact that it is powered by Google's V8 engine. Ruby on Rails is claimed to be as much as 20 times less efficient than Node.js, although the gap depends heavily on the workload.

· Scalability:

Ruby's scalability is constrained by comparison to Node.js, largely because of the latter's cluster module. The cluster module lets an application run several worker processes that share the same server port, so the number of CPU cores in use can be matched to the demands of the application.

· Architecture:

The Node.js ecosystem has a wealth of useful components, but JavaScript was not originally designed for backend work, and Node.js prescribes little application structure of its own. Ruby on Rails, in contrast, is an opinionated framework that aims to streamline the process of building out a website's infrastructure by removing common setup and configuration problems.

· The learning curve:

Ruby has a low barrier to entry since it is an easy language to learn. The barrier to entry for Node.js is lower still: JavaScript veterans will have the easiest time, but developers acquainted with other languages should have no trouble either.

Final Thoughts:

Both Node.js and RoR have been tried and tested in real-world scenarios. Ruby on Rails is great for fast-paced development teams, whereas Node.js excels at building real-time web apps and single-page applications.

If you are in need of a back-end developer, Nivida Web Solutions, a unique web development agency in Gujarat, can assist you in creating a product that will both meet and exceed the needs of your target audience.

#web development company in vadodara#web development company in India#web development company in Gujarat#Web development Companies in Vadodara#Web development Companies in India#Web development Companies in Gujarat#Web development agency in Gujarat#Web development agency in India#Web development agency in Vadodara

Dedicated Server Netherlands

Why Dedicated Server Netherlands Outperforms Global Hosting Providers [2025 Tests]

The Amsterdam Internet Exchange (AMS-IX) carries a mind-blowing 8.3 Tbit of data every second, sometimes reaching peaks of 11.3 Tbit. These numbers make dedicated server hosting in the Netherlands a powerful choice when you need top-tier performance. The Netherlands stands proud as Europe's third-largest data center hub with nearly 300 facilities, right behind Germany and the UK.

The country's commitment shows in its 40% renewable energy usage, which leads to eco-friendly and affordable hosting options. Dedicated server hosting in Amsterdam gives you a strategic edge. The country's power supply ranks in the global top ten, which means exceptional performance for audiences in Europe and worldwide. Your business gets complete GDPR compliance and reliable infrastructure, backed by advanced DDoS protection and high-performance servers.

This piece will show you how Netherlands-based servers prove better than global alternatives through performance tests and real-life applications.

Netherlands vs Global Server Performance Tests

Our performance tests show clear benefits of Netherlands-based servers compared to global options. We used a standardized environment with 2 vCPUs, 2 GB of RAM, and 10 Gbit/s network connectivity to ensure fair comparisons.

Test Environment Setup and Methodology

The test framework used ten globally distributed nodes to measure server response times. We managed to keep consistent client loads while tracking key metrics like network throughput and bandwidth usage. The testing environment matched production setups to generate reliable performance data.
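As a rough illustration of how a single test node might collect response times, the sketch below times TCP connection setup, which approximates one network round trip. The post does not describe its actual tooling, so the function names, the sample count, and the choice of TCP connect time as the metric are all assumptions.

```python
import socket
import statistics
import time


def measure_connect_latency_ms(host, port, samples=5, timeout=2.0):
    """Return a list of TCP connect times in milliseconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        # The connection is closed immediately; only setup time is measured.
        with socket.create_connection((host, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        timings.append(elapsed * 1000.0)
    return timings


def summarize(timings_ms):
    """Condense raw samples into the figures a latency table would report."""
    return {
        "min": min(timings_ms),
        "median": statistics.median(timings_ms),
        "max": max(timings_ms),
    }
```

Running `summarize(measure_connect_latency_ms(host, 443))` from each test location against the same server would give comparable per-region figures, provided the client load is held constant as the methodology requires.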

Response Time Comparison Across 10 Global Locations

Amsterdam's server response times showed remarkable consistency. The average latency to UK locations was just 11ms. Tests proved that dedicated server hosting in Amsterdam keeps response times under 100ms in European locations. Google rates this as excellent performance.

| Location | Response Time |
| --- | --- |
| Western Europe | 11-20ms |
| Eastern Europe | 20-40ms |
| US East Coast | 80-90ms |
| US West Coast | 140-170ms |

Network Latency Analysis: 45% Faster Than US Servers

Cross-Atlantic connections add substantial latency, 80-90ms to the US East Coast in these tests. Netherlands-based dedicated servers benefit from direct AMS-IX internet exchange connections, so European users get much faster response times than they would from US-based servers. Tests show that transatlantic connections from London to New York average 73ms. Netherlands-based servers deliver responses to European users in about half that time.

Amsterdam's position as a major internet hub drives this superior performance. Businesses serving European markets get the best response times through Netherlands-based hosting. This advantage becomes crucial for apps that need real-time interactions or database operations.

Technical Infrastructure Deep Dive

The Netherlands' reliable digital infrastructure depends on two critical pillars: the AMS-IX exchange architecture and an exceptionally stable power grid. These elements support dedicated server Netherlands hosting capabilities.

AMS-IX Internet Exchange Architecture

The AMS-IX platform runs on a sophisticated VPLS/MPLS network setup that uses Brocade hardware to manage massive data flows. The system started with a redundant hub-spoke architecture and evolved to include photonic switches with a fully redundant MPLS/VPLS configuration. This advanced setup lets members connect through 1, 10, and 100 Gbit/s Ethernet ports.

The exchange's infrastructure has these key components:

Photonic cross-connects for 10GE customer connections

Redundant stub switches at each location

Core switches with WDM technology integration

The platform delivers carrier-grade service level agreements that ensure optimal performance for dedicated server hosting Amsterdam operations.

Power Grid Reliability: 99.99% Uptime Stats

TenneT's Dutch power infrastructure shows remarkable stability by maintaining 99.99% grid availability. Users experience just 24 minutes without electricity on average over five years.

| Power Grid Metric | Performance |
| --- | --- |
| Core Uptime | 99.99% |
| Annual Downtime | <24 mins |
| Renewable Usage | 86% |

The power infrastructure stands out through:

Advanced monitoring systems for early fault detection

Proactive maintenance protocols

Integration of renewable energy sources

This reliable power infrastructure and AMS-IX architecture make the Netherlands a premier location for dedicated server hosting that offers unmatched stability and performance for mission-critical applications.
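Availability percentages and downtime minutes are two views of the same quantity, and the conversion is simple arithmetic. The sketch below shows it under the assumption of a 365-day year; the function names are made up for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def downtime_minutes_per_year(availability_percent):
    """Minutes of downtime per year implied by an availability percentage."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


def availability_percent(downtime_minutes, years=1.0):
    """Availability implied by a given downtime over a number of years."""
    total_minutes = years * MINUTES_PER_YEAR
    return 100 * (1 - downtime_minutes / total_minutes)
```

With these definitions, 99.99% availability allows about 52.6 minutes of downtime per year, while 24 minutes of downtime in one year corresponds to roughly 99.995% availability. Readers comparing providers may find it easier to convert every quoted figure to minutes per year before comparing.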

Real-World Performance Impact

Dedicated server configurations in the Netherlands show measurable benefits in many use cases. Let's look at some real examples.

E-commerce Site Load Time Improvement

E-commerce websites on Netherlands servers show remarkable performance gains. Sites achieve a 70% reduction in bounce rates as page load times drop from three seconds to one second. The conversion rates jump by 7% with every second saved in load time. A dedicated server setup in Amsterdam provides:

| Metric | Improvement |
| --- | --- |
| Page Load Speed | 2.4x faster than other platforms |
| Average Render Time | 1.2 seconds vs 2.17 seconds industry standard |
| Resource Utilization | 30% reduction in file sizes |

Gaming Server Latency Reduction

The Netherlands' position as a major internet hub benefits gaming applications significantly. Multiplayer gaming servers show excellent performance with:

Ultra-low latency connections maintaining sub-20ms response times across Western Europe

Optimized network paths reducing packet loss through minimal network hops

Advanced routing protocols ensuring stable connections for real-time gaming interactions

Database Query Speed Enhancement

Database operations improve significantly thanks to optimized infrastructure. Query response times drop by 90% with buffer pool optimization. The improved query throughput comes from:

Efficient connection pooling reducing database latency

Advanced caching mechanisms delivering 90% buffer pool hit ratios

Optimized disk I/O operations minimizing data retrieval times
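To make the buffer pool figure concrete, the sketch below computes a hit ratio from two counters and estimates the resulting average read time. The counter names and the timing values are assumptions for illustration; MySQL's InnoDB, for example, exposes comparable counters as `Innodb_buffer_pool_read_requests` and `Innodb_buffer_pool_reads`, but the post does not say which database was tested.

```python
def buffer_pool_hit_ratio(read_requests, disk_reads):
    """Fraction of logical reads served from memory rather than disk."""
    if read_requests <= 0:
        raise ValueError("read_requests must be positive")
    return 1 - disk_reads / read_requests


def average_read_time_ms(hit_ratio, memory_ms, disk_ms):
    """Expected read time given the hit ratio and per-tier read costs."""
    return hit_ratio * memory_ms + (1 - hit_ratio) * disk_ms
```

For instance, 1,000 read requests with 100 disk reads give a hit ratio of 0.9. If a memory read is assumed to take 0.1 ms and a disk read 10 ms, the average read costs about 1.09 ms, so the remaining 10% of misses still dominates the total.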

These examples highlight how dedicated server configurations in the Netherlands deliver clear performance benefits in a variety of use cases.

Cost-Benefit Analysis 2025

A financial analysis shows that dedicated server hosting in the Netherlands offers significant cost advantages for 2025. The full picture of operational expenses reveals clear benefits in power efficiency and bandwidth pricing models.

Power Consumption Metrics

Data centers in the Netherlands show excellent efficiency rates, as they use 86% of their electricity from green sources. Dutch facilities must meet strict energy efficiency standards and maintain PUE ratings below 1.2. Here's how the power infrastructure costs break down:

| Component | Power Usage |
| --- | --- |
| Computing/Server Systems | 40% of total consumption |
| Cooling Systems | 38-40% of total |
| Power Conditioning | 8-10% |
| Network Equipment | 5% |

Amsterdam's dedicated server hosting operations benefit from the Netherlands' sophisticated energy management. Users experience just 24 minutes of downtime over five years. Data centers have cut their consumption by 50% through consolidation and energy-saving protocols.
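Power usage effectiveness (PUE) is defined as total facility energy divided by the energy consumed by IT equipment, so it can be related to a consumption breakdown like the one above. The sketch below assumes that computing and network equipment together count as the IT load; the function names are illustrative only.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total energy per unit of IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def it_share_for_pue(pue_value):
    """Fraction of total energy that reaches IT equipment at a given PUE."""
    return 1 / pue_value
```

Under that assumption, an IT share of 45% (40% computing plus 5% network) corresponds to a PUE of about 2.2, whereas a PUE of 1.2 requires roughly 83% of all energy to reach IT equipment. The breakdown and the PUE target therefore presumably describe different baselines, such as a typical legacy facility versus the efficiency standard Dutch facilities aim for.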

Bandwidth Pricing Comparison

Dedicated server hosting in the Netherlands comes with an attractive bandwidth pricing structure. Many providers have moved away from traditional models and now offer pooled bandwidth allowances from 500 GiB to 11,000 GiB. The costs work like this:

Simple bandwidth packages begin at USD 0.01 per GB for excess usage, far below the global provider rates of USD 0.09-0.12 per GB. Businesses save substantially because internal data transfers between servers within the Netherlands infrastructure come at no extra cost.

Monthly operational costs for dedicated hosting range from USD 129.99 to USD 169.99. Linux-based systems cost about USD 20.00 less per month than Windows alternatives.
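The effect of the per-GB rate on a monthly bill can be worked through with a short calculation. The sketch below is a simplified model that charges only for traffic above the pooled allowance and ignores the small difference between GB and GiB; the usage figures in the note that follows are hypothetical.

```python
def overage_cost_usd(used_gb, included_gb, rate_per_gb):
    """Cost of traffic above the included allowance at a flat per-GB rate."""
    excess_gb = max(0.0, used_gb - included_gb)
    return excess_gb * rate_per_gb
```

For a server that uses 1,500 GB against a 500 GB allowance, the 1,000 GB of excess traffic costs about USD 10 at USD 0.01 per GB, compared with about USD 90 to USD 120 at the quoted global rates of USD 0.09-0.12 per GB.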

Conclusion

The Netherlands leads the global hosting solutions market with its dedicated servers, showing strong growth through 2025 and beyond. Tests show these servers respond 45% faster than their US counterparts. The country's AMS-IX infrastructure provides exceptional European connectivity.

Dutch data centers paint an impressive picture. They maintain 99.99% uptime, and the AMS-IX exchange carries 8.3 Tbit of data every second. Their commitment to green energy shows with 86% renewable power usage. These benefits create real business value. E-commerce sites load 2.4 times faster. Gaming servers keep latency under 20ms. Database queries run 90% faster.

The cost benefits stand out clearly. Power runs efficiently and bandwidth prices start at just USD 0.01 per GB, while global rates range from USD 0.09-0.12. The Netherlands' prime location combines with cutting-edge infrastructure and eco-friendly operations to give businesses superior hosting at competitive rates.

The evidence speaks for itself. Dutch dedicated servers beat global options in speed, reliability, cost, and sustainability. Companies that need top performance and European regulatory compliance will find Netherlands-based hosting matches their digital needs perfectly.

FAQs

Q1. What are the key advantages of dedicated server hosting in the Netherlands? Dedicated server hosting in the Netherlands offers superior performance with 45% faster response times than US-based servers, exceptional connectivity through the AMS-IX internet exchange, 99.99% uptime, and sustainable operations with 86% renewable energy usage.

Q2. How does the Netherlands' server infrastructure compare to other European countries? The Netherlands boasts one of Europe's most advanced digital infrastructures, ranking third in data center presence. Its strategic location and sophisticated AMS-IX architecture enable faster response times and more reliable connections compared to servers in countries like Germany, France, and the UK.

Q3. What real-world benefits can businesses expect from Netherlands-based servers? Businesses can experience significant improvements, including 2.4x faster page load speeds for e-commerce sites, sub-20ms latency for gaming servers across Western Europe, and up to 90% faster database query responses, leading to enhanced user experiences and improved performance.

Q4. Are dedicated servers in the Netherlands cost-effective? Yes, dedicated servers in the Netherlands offer competitive pricing with bandwidth costs starting at $0.01 per GB, compared to global rates of $0.09-$0.12. Additionally, the country's energy-efficient data centers and renewable energy usage contribute to long-term cost savings.

Q5. How does the Netherlands ensure reliable server performance? The Netherlands maintains reliable server performance through its robust power grid with 99.99% uptime, advanced monitoring systems for early fault detection, and proactive maintenance protocols. Users experience an average of only 24 minutes of downtime over five years, ensuring consistent and dependable hosting services.

Mono2Micro Mastery: IBM CIO’s Approach to App Innovation

The path of application modernization being taken by the IBM CIO organization: Mono2Micro

Monolithic applications built on outdated systems are often hard to change, notoriously expensive to fix, and can even endanger the health of a company. Southwest Airlines had to cancel more than 13,000 flights in December 2022 as a consequence of outdated computer systems and technology. The meltdown caused the airline major losses and, in turn, damaged its reputation.

On the other hand, one streaming service was an early adopter of microservices and has since emerged as the industry leader in online viewing. The firm has over two billion customers in more than 200 nations across the world.

During application modernization, developers can build services that are reusable, which ultimately improves performance and speeds up the delivery of new features and capabilities.

In their most recent blog article, the authors provided an overview of their tiered modernization methodology, which begins with runtime and operational modernization, followed by architectural modernization, which involves refactoring monolithic applications into microservices. In this blog, they take an in-depth look at the architectural modernization of Java 2 Platform, Enterprise Edition (J2EE) applications and describe how the IBM Mono2Micro tool sped up the transition.

A typical J2EE architecture of a monolithic application is shown in the following figure. There is a close connection between the various components, which include the user interface (UI) on the client side, the code on the server side, and the logic in the database. The fact that these applications are deployed as a single unit often results in a longer churn time for very minor changes.

Decoupling the client-side user interface (UI) from the server-side components is the first stage of architectural modernization. In addition, the data exchange format should be changed from Java objects to JSON. Backend for Front-End (BFF) services handle the conversion between Java objects and JSON in both directions. Once the front end and the back end are decoupled, each can be modernized and deployed independently.
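The object-to-JSON conversion a BFF layer performs can be illustrated briefly. The sketch below uses a Python dataclass as a stand-in for a server-side Java object, so it shows the idea rather than the J2EE implementation; the `Employee` type and its fields are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Employee:  # hypothetical server-side object
    employee_id: int
    name: str
    department: str


def to_json(employee):
    """Serialize a server-side object into the JSON sent to the front end."""
    return json.dumps(asdict(employee), sort_keys=True)


def from_json(payload):
    """Rebuild the server-side object from JSON received from the front end."""
    return Employee(**json.loads(payload))
```

Because the front end only ever sees the JSON form, the back-end object model can change without forcing a UI redeployment, as long as the JSON contract stays the same.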

As the next phase in the process of architectural modernization, the backend code will be decomposed into macroservices that may be deployed separately.

The IBM Mono2Micro tool sped up the migration of monolithic applications to microservices. IBM Mono2Micro is a semi-automated, AI-based toolkit. It uses novel machine learning techniques and first-of-its-kind code generation technology to assist you throughout the process of refactoring to complete or partial microservices.

The tool analyzes the monolithic application both statically and dynamically, and then recommends how the application might be partitioned into groups of classes that are candidates to become microservices.

During the process of evaluating, redesigning, and developing the microservices architecture, Mono2Micro saved more than 800 hours of valuable human labor. The process of setting up Mono2Micro might take anywhere from three to four hours, depending on how well you understand the various components and how they interact with one another to remodel your monolith. On the other hand, it is worthwhile to spend a few hours in order to save hundreds of hours when changing your monolith into microservices that can be deployed.

In a nutshell, modernization tools such as IBM Mono2Micro and Cloud Transformation Advisor enabled a faster transition and greater cost efficiency. Nonetheless, the main differentiators are as follows:

Platform: managing the infrastructure by transitioning from bloated on-premises virtual machines to cloud-native containers.

People: developing a community of software developers that can work together and establish a culture that is prepared for the future.

In addition to enhancing system security and simplifying data administration, modernization encourages innovation while simultaneously fostering corporate agility. The most essential benefit is that it boosts the productivity of developers while also delivering cost-efficiency, resilience, and an enhanced experience for customers.

Read more on Govindhtech.com

#Mono2Micro#IBMCIO#microservices#IBM#virtualmachines#softwaredevelopers#technews#technology#govindhtech

Systems, Planning, Metrics, Workforce Analysis, & Costs concerning an HRIS

System Deliberations in the Design of an HRIS

For employing any HRIS successfully, the system design requirements are important. It must have scope for customization as the users require. The whole process and the end results expected from the module are analyzed. Implementing an HRIS requires careful planning and a clear definition of goals in a combined approach. A well-designed HRIS will lead to improved organizational productivity, and a badly designed one will do the opposite.

HRIS Customers/Users

The customers or users are both employees and non-employees.

- Employees are managers, data analyzers, potential decision-makers, clerks, system providers, etc. Managers are regular managers, directors, presidents, vice presidents, CEOs, etc.
- The data analyzer collects the relevant data and also filters and examines it. The authentication of the data helps managers in decision-making.
- The technical analysts interpret the data through various programs in an easy language so that the managers can have easy access to it.
- Clerks provide backup support and assistance.
- The non-employee job seekers rely on the job portal for gathering knowledge of the HRIS. They don’t interact directly.

In terms of usage of data and information, the data can be classified into 3 categories:

- Information about the employees.
- Organizational structure, job description, specifications, various positions and jobs.
- The third kind of information is essentially an amalgamation of the above 2.

HRIS Architecture

System architecture has three key features. These are Components, Collaborators, and Connectors. The HRIS architecture can be 2-tiered, 3-tiered, or multi-tiered.

- Two-tier architecture: The two-tier or client-server architecture originated during the 1980s. In the two-tier architecture each and every minute detail of the clients is noted.
- Three-tier architecture: During the 1990s, the server was both a database server and an application server. The three-tier architecture is refined and has additional advantages, besides various limitations.
- N-Tier architecture (introduction of application server-HTML): N-Tier architecture is an improved version of 3-Tier architecture. It has load balancing, worldwide accessibility, extra-savings space, and easy data generation.

HRIS Enactment

It is a planned and integrated approach involving the top management, HR managers, technical consultants, and specialists. Planning is the basis for successful implementation. It offers the outline for the choice of project manager or consultant, choosing project experts, defining reports and management approaches, a decent implementation team, operational spans, budgetary approaches, analyzing and comparing present and upcoming processes, hardware and software determination, customizing updated techniques, and the interaction of the user and software for acceptance.

Planning for System Implementation

Planning is the first step involved in system implementation. It is the basic function that answers the questions of how, where, and when the objectives can be realized, and it serves as a guiding framework. Planning equally involves a careful assessment of the available resources and the challenges which the team might encounter while reaching their business objectives. The key steps involved in the process are given below:

Project Manager or Project Leader: A project manager is responsible for the planning and execution of projects within defined timelines and resources. Certain basic qualities are required in project managers. He should have sufficient knowledge to execute the whole project within the specified time and budget. He should be a good leader and communicator.
The technicalities and project methodologies should be at his fingertips. Project managers can be hired, or consultants can be appointed. Another way is to hire a full-time manager, preferably from a project management institute. For hiring a specialized project manager, an organization must have projects available at present and in the future too. Another way is to select a manager who is already involved in the project execution.

Steering Committee: The project manager is aided by individuals who help with the implementation process. This group is known as the steering committee. It decides the priorities of the business of an organization. It also manages its operations. The key features of a “respectable” steering committee are:

- It should create healthy competition amongst the participants and foster an enabling working environment.
- It should make sure of the mechanisms to get things done.
- It must make sure that the project meets all the expectations.
- It is the final decision-making body to handle legal, technical, cost-related, cultural, and personnel issues.

Project Charter: A project charter is a statement of the scope and objectives. It:

- Aligns the project goals with the organizational goals.
- Provides clarity about the extent of the project.
- Selects a team of individuals and experts.
- Must undertake decision-making.
- Must gather customer feedback.
- Uses project management methods.
- Budgets and highlights the constraints in the project.

Implementation Team: The project manager is supported by functional and technical professionals. They look after the operational and software development needs. The functional team members are from the HR department. The technical professionals have strong technical expertise in integrating HR with information technology.

Project Scope: This is important. Projects should be carefully planned and executed. Knowing the project scope helps.
Project scope makes sure a set course of action is followed. It also defines the resources and the deadlines of the project.

Management Sponsorship: Management sponsorship and project management are mutually interdependent and interlinked. Management is responsible for any change in the project. Senior managers offer themselves as sponsors.

Process Mapping: This highlights the systems and processes involved in the implementation stage. It gives a clear idea about the existing processes and the changes needed. Flowcharts are developed. Besides flowcharts, other tools are also used for mapping.

Software Implementation: First, the hardware is verified. Then the software implementation starts by determining which past data is needed. All steps of all HR processes are matched with the HRIS. Documentation is the last step.

Customization: Customizations provide continuous improvement. They facilitate business goals via robust solutions. Customizations involve software upgrades. They involve huge maintenance costs.

Change Management: This step tells us whether the HR users accept the HRIS. The employees may face trouble in accepting it, so proper training should be given about system processes.

“Go Live!”: This is when the old software is shut down. A new one replaces it. There are training sessions on the software before interaction.

Evaluation of Project: Once the implementation is over, continuous evaluation is required. This identifies the loopholes in the system and develops a plan of action for overcoming these drawbacks.

Potential Pitfalls: This is the final stage where a few things are considered:

- Poor planning and poor scope.
- Unlimited mapping.
- Failure to assess internal and environmental changes.
- Incorrect implementation of the evaluation.

HR Metrics & the Workforce Analysis

This is a useful strategic tool that shares information and evidence about the functioning of the entire system.
This is done by relying on facts and figures instead of assumptions or opinions. Modern HR Metrics are based on quantitative and qualitative measurement methods. They handle large amounts of data simultaneously. Workforce analysis is actually a gap analysis and involves forecasting future business goals and purposes. HR Metrics and Workforce Analysis were conceived during Taylor’s period, when he advocated the idea of scientific management.

HR Metrics & Workforce Analysis play a prime role in sharing vital information on people matters. Moreover, they support this information with vital data, which helps in strategic decision-making at the management level. Because of their strategic role, these techniques have gained importance among HR professionals. HR Metrics and Workforce Analytics are in a state of evolution at present. Modern organizations use metrics for evaluating or auditing their HR initiatives/programs and to measure their success.

Objectives

HR Metrics and workforce analysis link HR objectives to strategic business activities. They make information conveniently available for making proper managerial decisions. In this context, correct HR metrics and analysis should be known. Staff characteristics, business objectives of a strategic kind, HR strategies, talent acquisition, employee wellbeing, productivity details, and diversity targets can be aligned using HR metrics and workforce analytics. The first and foremost step is data gathering. The data can be collected from various sources, which are:

- Employee work tenure
- Recruitment details of all units in an organization
- Surveys done by the departments
- Information gathered by way of interviews
- Information collected via focus groups

The use of HR Metrics & Workforce Analysis

REPORTING: Reporting is integral to decision-making. It involves what and how metrics should be selected and presented, the audience, and the manner of reporting.
Reporting tells you about problems or loopholes which affect performance, motivation, and productivity. One can work on the existing lacunas or loopholes too. Reporting involves interpretation of the information, putting it in the right framework, and endorsing suitable policies for nonstop development.

DASHBOARDS: These are to measure and display the metrics of any organization. Managers can examine metrics at different levels. The metrics recognized are known as key performance indicators (KPIs). Dashboards give a visual representation of information on key areas of HR. They also analyze the key HR metrics or additional details.

BENCHMARKING: This is for comparing the predefined standards against the existing results. It offers insights into achieving an outcome. It redefines goals and forecasts by analyzing the existing realities and limitations on a comparative basis.

DATA MINING: Data mining relies on data patterns from usually large databases. This is for acquiring knowledge and enabling effective decision-making by knowing causal mechanisms. It uses multiple regression and correlation for analyzing the patterns of data and their relationships. The data mining process essentially involves three stages. They are:

- Exploration: Selection of data records, their subsets, and the preparation of data
- Model building: Development of various models and selection of the best model by considering various criteria
- Deployment: The best model is implemented for arriving at effective decisions and meeting the expected outcomes

PREDICTIVE ANALYSES: This is for assessing and predicting future outcomes by evaluating key indicators or processes of an organizational system.

OPERATIONAL EXPERIMENTS: These develop models on which the managers take decisions. They help determine the relevant variables.
WORKFORCE MODELLING: Workforce modeling helps an HR professional understand the changes in the requirements for human capital due to changes in the organizational environment. This can be due to mergers/demergers, acquisitions, divestiture, or changes in demand for products.

HRIS: An Evaluation of its Cost and Benefits

HRIS is usually adopted and implemented for attaining the following goals:

- Improving efficiencies: Computerization in HR reduces dependence on hard copy data. It saves time when recalling records via a user-friendly interface. This improves the overall efficiency of the HR department. The HR professionals can then focus more on strategic decision-making and the progressive functions of HR.
- Mutually beneficial for both the management and the employees: HRIS facilitates transparency in the system. It results in improved employee satisfaction and convenience for the management when responding to people-related matters.
- HR as a strategic partner: With HRIS, HR becomes a strategic partner. HR functions are aligned with the corporate strategy.

An evaluation of HR costs involves calculation of ROI (Return on Investment) on human capital, which generally encompasses an assessment of the benefits or the positive outcomes and also the costs or the negative outcomes of HR-led initiatives/practices. The evaluation of costs and benefits of HRIS can be performed with the help of various techniques:

- Identification of sources of value for costs and benefits of HR-led initiatives: This includes evaluating the business environment, the changing trends, and the strategic course of alternatives.
- Estimating the timing of benefits and costs: This is comparing the HR costs and benefits at different times or measuring the cost-benefits of various programs at different timings. This is important for the policy-making process.
- Calculating the value of indirect benefits: Indirect benefits are the lesser benefits.
By estimating the scale, one can perform a better assessment of the planning process. A proper metric is selected, and then direct estimation, benchmarking, and internal assessment are done. Benchmarking has several rewards to offer. It results in better risk management for large-scale present projects. Internal assessment is of the firm’s own internal metrics. Data transfers are much easier and cost-effective too.

Methods for estimating the value of indirect benefits: These are estimated in dollars. Average Employee Contributions (AECs) are calculated. This is derived by dividing the difference between the net revenue and the cost of goods sold by the total number of employees. In short, AEC = (Net Revenues - Cost of Goods Sold) / number of employees. It contributes to the assessment of employees’ individual differences and production rates.

Avoiding frequently occurring problems

The Cost Benefit Analysis in the HRIS ignores the HR policies and their influence on organizational effectiveness. The calculation of direct and indirect costs is confusing, as sometimes direct costs are calculated as indirect costs. Time-saving tactics lead to a bad analysis of the results of the HR-led initiatives.

Packaging the analysis for decision-makers

This is done by analyzing the organizational goals and objectives. Without a proper Cost Benefit Analysis, decision-makers cannot estimate the costs of investment. Detailed analysis is needed by the decision-maker via proper identification of direct and indirect costs and benefits.

Variance Analysis: Variance analysis is for assessing the indirect benefits. This is an assessment of the financial and operational data for identifying the reason for the variance. In project management, this can be useful for evaluating and reviewing the progress of a project. This helps in maintaining budgetary control by assessing the planned as well as the actual costs of a project.
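The AEC formula and the planned-versus-actual comparison used in variance analysis can be worked through in a short sketch. The figures are invented for illustration only, and the sign convention for the variance (actual minus planned, so a positive value means over budget) is an assumption rather than something the text specifies.

```python
def average_employee_contribution(net_revenue, cost_of_goods_sold, employees):
    """AEC = (net revenue - cost of goods sold) / number of employees."""
    if employees <= 0:
        raise ValueError("employees must be positive")
    return (net_revenue - cost_of_goods_sold) / employees


def cost_variance(planned_cost, actual_cost):
    """Assumed convention: positive result means the project is over budget."""
    return actual_cost - planned_cost


if __name__ == "__main__":
    # Hypothetical company: 5,000,000 revenue, 3,000,000 COGS, 40 employees.
    aec = average_employee_contribution(5_000_000, 3_000_000, 40)
    print(f"AEC per employee: {aec:,.2f}")

    # Hypothetical HRIS project: planned 120,000, actual 135,000.
    print(f"Cost variance: {cost_variance(120_000, 135_000):,}")
```

With these made-up numbers each employee contributes 50,000 on average, and the project runs 15,000 over its planned cost.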
HRIS Benefits

According to Kovach (2002), HRIS implementation has the following advantages:

- Refining organizational competitiveness via enhanced human resource working.
- Provides the chance for shifting from daily operational issues of HR to strategic objectives.
- Employees are instrumental in HRIS implementation and its daily functioning and usage.
- Reengineers or restructures the whole HRD.

HRIS Benefits can be categorized into the following:

- Advantages for the management for improving the decision-making ability, effective cost control, precision of vision and transparency of its operations, and emphasis on the HR strategic objectives.
- Advantages for the HRD for improving the efficiency of the department, reducing dependence on paperwork and manual management. It reduces idleness and transforms HR into a proactive department.
- Provides advantages for the employees through time-saving, convenience in usage and administration, improved decision-making.

Read the full article

Advanced Java is the next level of Java programming after core Java. It typically uses a two-tier architecture, i.e. client and server. “Advanced Java” is a specialization in domains such as web, networking, and database handling. Many of its web packages start with ‘javax.servlet’.

Java is concurrent, class-based, object-oriented and specifically designed to have as few implementation dependencies as possible.

Java technology is used to develop applications for a wide range of environments, from consumer devices to heterogeneous enterprise systems. In this section, get a high-level view of the Java platform and its components.

Java is one of the most popular programming languages used to create Web applications and platforms. It was designed for flexibility, allowing developers to write code that would run on any machine, regardless of architecture or platform.

Topics in Advanced Java include the following.

Advanced: Java Networking, JDBC, Servlets, JSP.

Framework: JSF, Beans, EJB, Web Services.

Advanced Java offers a complete solution for dynamic processing, with many frameworks, design patterns, and server technologies, mainly JSP. Advanced Java applications run on servers; in other words, they are web applications.

TCCI teaches Advanced Java to school, college, and engineering students, or any person who wants to learn and get ahead in the Java field. TCCI teaches this tough subject through both theory and practice.

Course Duration: Daily/2 Days/3 Days/4 Days

Class Mode: Theory With Practical

Learn Training: At student’s Convenience

TCCI computer classes, located in Bopal and on ISCON Ambli Road in Ahmedabad, provide the best training in advanced Java programming through different learning methods and media.

For More Information:

Call us @ +91 9825618292

Visit us @ http://tccicomputercoaching.com

#java language classes in bopal Ahmedabad#Java language classes in ISCON Ambli Road Ahmedabad#java language institute in bopal Ahmedabad#java language institute in ISCON Ambli Road Ahmedabad#java language courses in Ahmedabad

Skills a Cloud Engineer should Learn

Cloud computing's popularity has skyrocketed, and forecasters have given it a thumbs up, suggesting that it is here to stay. No wonder we see a rise in the number of individuals wanting to make a career in this domain. If you have a similar desire, you probably have questions such as which skills you should learn to become a Cloud Engineer. This blog will help you answer these questions, so continue reading!

Skills You Should Learn To Become a Cloud Engineer

As a Cloud Engineer, you will be working with cross-functional teams which are a mix of software, operations, and architecture. This means when it comes to learning these skills, you would have quite a few options in your bag you can choose from. Here are some of the must-have cloud engineer skills:

1. Cloud Service Providers

You cannot get started with cloud computing without understanding how different cloud service providers work. Several providers offer end-to-end services such as compute, storage, databases, ML, and migration. Because they cater to almost everything related to cloud computing, knowing them is a vital cloud engineer skill.

It is important that you choose at least one of the many that are available. AWS and Azure are now the market leaders and compete neck and neck in the cloud market. AWS has the experience of holding the top position in the market and is well established in its niche. Azure, on the other hand, is a Microsoft product, which makes it easier to integrate with almost the entire stack of Microsoft products. GCP and OpenStack have strongholds in the big data and software development markets, respectively. Depending on the business needs, you may be required to choose one or more providers for your job role.

Each of these service providers offers a free usage tier, which is enough to get you started and give you sufficient hands-on practice.

2. Storage

Cloud storage can easily be defined as “Storing data online on the Cloud”, so the company’s data is stored and accessed from multiple distributed and connected resources. Some of the crucial benefits of Cloud Storage are as follows:

Greater accessibility

Reliability

Quick Deployment

Strong Protection

Data Backup and Archival

Disaster Recovery

Cost Optimisation

Depending upon the various needs and requirements of an organization, they may choose from the following types of storage:

Personal Cloud Storage

Public Cloud Storage

Private Cloud Storage

Hybrid Cloud Storage

Because data is now central to cloud computing, it is very important to understand where and how to store it. The measures taken to achieve the benefits mentioned above may vary based on the type and volume of data an organization wants to store and use. Therefore, learning how cloud storage works is an important cloud engineer skill. Cloud service providers offer various popular storage services. To name a few, there are S3 and Glacier in AWS, and Blobs, Queues, and Data Lakes in Azure.

3. Networking

Networking is now closely tied to cloud computing, as centralized computing resources are shared with clients over the cloud. This has spurred a trend of pushing more network management functions into the cloud so that fewer customer devices are needed to manage the network.

The improved Internet access and reliable WAN bandwidth have made it easier to push more networking management functions into the Cloud. This, in turn, has increased the demand for cloud networking, as customers are now looking for easier ways to build and access networks using a cloud-based service.

A Cloud Engineer may also be responsible for designing ways to make sure the network is responsive to user demands by building automatic adjustment procedures. Hence, an understanding of networking fundamentals and virtual networks is a very important cloud engineer skill, as both are central to networking on the cloud.

4. Linux

Linux brings features such as open source code, easy customization, and security, making it a paradise for programmers. Cloud providers are aware of this, and hence we see Linux adopted on different cloud platforms.

Now, if we take into consideration the number of servers that power Azure alone, you would note that around 30% of those are Linux based. So, if you are a professional with skills like architecting, designing, building, administering, and maintaining Linux servers in a cloud environment, you could survive and thrive in the Cloud domain with this single cloud Engineer skill alone.

5. Security and Disaster Recovery

With internet thefts on the rise, cloud security is important for all organizations. Cloud security aims at protecting the data, applications, and infrastructure involved in cloud computing. It’s not much different from the security of on-premises architectures. But because everything is moving to the cloud, it is important to get the hang of it.

In any computing environment, cloud security involves maintaining adequate preventive measures such as:

Knowing that the data and systems are safe.

Tracking the current state of security.

Tracing and responding to unexpected events.

If these operations interest you then let me tell you Security and Disaster Recovery related concepts will help you immensely as a Cloud Engineer or Cloud Admin. These are the methodologies that are central to operating software in the Cloud.

6. Web Services and API

We already know that the underlying foundation is very important to any architecture. Cloud architectures are heavily based on APIs and web services, because web services provide developers with methods of integrating web applications over the Internet. The open standards XML, SOAP, WSDL, and UDDI are used to tag data, transfer data, describe services, and list available services, respectively. You also need APIs to get the required integration done.

Thus, good experience of working on websites and related knowledge would help you build a strong core in developing cloud architectures.
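As a small illustration of XML being used to tag data for exchange between services, the sketch below parses an XML payload and re-emits it as JSON using only the Python standard library. The payload, its element names, and the `/api/items` path are invented for this example and do not come from any real service.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical service description, tagged in XML.
XML_PAYLOAD = """
<service>
  <name>inventory</name>
  <endpoint>/api/items</endpoint>
  <version>2</version>
</service>
"""


def xml_to_dict(xml_text):
    """Turn a flat XML element into {root_tag: {child_tag: text}}."""
    root = ET.fromstring(xml_text.strip())
    return {root.tag: {child.tag: child.text for child in root}}


if __name__ == "__main__":
    data = xml_to_dict(XML_PAYLOAD)
    print(json.dumps(data, indent=2))
```

The helper only handles one level of child elements, which is enough to show the tagging idea; nested or attribute-heavy documents would need a fuller mapping.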

7. DevOps

If you have been a software developer or an operations engineer, you are no stranger to the constant issues these individuals deal with as they work in different environments. DevOps brings the development and operations approaches into one mold, easing their work dependencies and filling the gap between the two teams.

This cloud engineer skill may look a little out of place on this list, but this development approach has made its presence felt among many developers. DevOps gels well with most cloud service providers, AWS in particular, making AWS DevOps a great skill to have.

8. Programming Skills

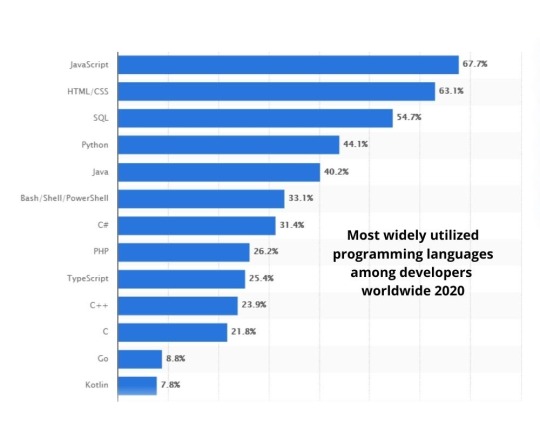

Talking about cloud engineer skills, you cannot ignore the important role developers play in computing. Developers possess the ability to build, deploy, and manage applications quickly. Cloud computing uses this ability to strengthen scalability. Hence, learning appropriate programming languages or frameworks would be a boon. Here is a list of some popular languages and frameworks:

SQL: Very important for data manipulation and processing

Python: lets you create, analyze and organize large chunks of data with ease

XML With Java Programming: Data description

.NET: must-have framework, especially for Azure developers

Stack up these programming skills and you would be an unstoppable Cloud Engineer.

So this is it as we come to an end of this blog on ‘Skills you should learn to become a Cloud Engineer’. If you wish to master Cloud Computing and build a career in this domain, then check out our website Edufyz which comes with instructor-led online training and online courses. Our e-courses training will help you understand Cloud Computing in-depth and help you master various concepts that are a must for a successful Cloud Engineer Career.

What is Netlify CMS, and why should I use this CMS? Pros and Cons of Netlify CMS

More about Netlify CMS:

Netlify is an “all-in-one platform for automating modern web projects”, serving more advanced users, such as website developers.

Netlify CMS is based on Jamstack technology, which was used to build the most popular static site generators. Jamstack is referred to as “the future of development architecture”. It’s built on serverless, headless technology, based on client-side JavaScript, reusable application programming interfaces (APIs), and prebuilt markup. This structure makes it more secure than a server-side CMS like WordPress.

Netlify CMS isn’t a static site generator; it’s a CMS for creating static and headless web projects. Content is stored in your Git repository, alongside your code, for easy editing and updating.

Netlify distributes static sites across its CDN (content delivery network). (Imagine what you can achieve in terms of page load speed when you’re serving pre-built pages from the CDN nodes nearest to visitors.) Because the files are lighter, you can host your site in the cloud and avoid web hosting fees. Most developers find that the Netlify platform’s free tier plan offers more than enough for personal projects.

Don’t get confused: Netlify CMS is different from the Netlify platform, which can be used to automatically build, deploy, serve, and manage your frontend sites and web apps. According to Netlify, Netlify CMS has never been locked to their platform (despite both having the same name).

What are static site generators?

Static site generators convert pages on your website into static versions (simply HTML files). When a user requests a page on the static site, the request is sent to the web server, which finds the corresponding file and returns it to the user (HTML files are served directly, without any database query). This process helps the site perform faster and cache more easily.

This process is also more secure. The static site generator doesn’t rely on databases or other data sources, and it also avoids server-side processing when the website is accessed.
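The core of what a static site generator does can be shown in a few lines. This is a deliberately tiny sketch, not how any particular generator works: the page template, the `build_site` function, and the sample content are all hypothetical. It renders each page to an HTML file once, at build time, so that a web server can later return the files as-is.

```python
import tempfile
from html import escape
from pathlib import Path
from string import Template

# Hypothetical page template shared by every generated page.
PAGE = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>"
)


def build_site(pages, out_dir):
    """Render each page to <slug>.html in out_dir and return the paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for slug, page in pages.items():
        html = PAGE.substitute(
            title=escape(page["title"]), body=escape(page["body"])
        )
        target = out / f"{slug}.html"
        target.write_text(html, encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    content = {"about": {"title": "About", "body": "Hello & welcome"}}
    with tempfile.TemporaryDirectory() as build_dir:
        for path in build_site(content, build_dir):
            print(path.name, "->", path.read_text(encoding="utf-8"))
```

Nothing here runs when a visitor requests a page, which is where the speed and security benefits described above come from.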

Several static site generators can convert existing pages on your WordPress site so that you don’t have to start over from scratch.

However, static site generators do have a few downsides, including:

Incompatibility with page builders. If you don’t have the skills to code, you won’t be able to build a site without the assistance of page builders.

Trouble managing large sites. Websites that have thousands of pages, multiple authors, and regularly published content can pose a problem for a static site development environment. The frequency and quantity of page edits can delay updates, as sites must be rebuilt and tested.

Server-side functionality. Everything comes from the static HTML files, so you can’t ask your users for input (such as allowing users to create a login or post a comment).

Fortunately, many of these static website limitations can be addressed through Netlify.

Before we get into the pros and cons of Netlify, let’s talk about static site generators.

Let’s compare Netlify CMS with WordPress

WordPress and Netlify CMS are two of the most robust CMSs (content management systems) on the market. Both are open-source and free to use, but that’s about where their similarities end.

WordPress is more popular: it powers almost 35% of all websites on the web. This is likely due to the fact that WordPress caters to users who don’t have prior programming experience and are looking for an easy-to-use CMS.

On the other hand, Netlify appeals to developers concerned about website performance. WordPress’s heavy back end can impact a website’s speed and security.

If you ask a developer how to speed up a website, they might recommend converting it to a static site. This is ideal for informational sites that change infrequently and require no user interaction.

For more complex sites, such as those where you want users to create their own accounts, you need a database-driven CMS like WordPress.

Let’s discuss the pros and cons of Netlify CMS

Pros:

Easy to set up a website

Very easy and intuitive UI

Both a CLI (command-line interface) and a web-based interface are available.

Supports custom domains and sets up DNS for your domain automatically.

Supports HTTPS, and it’s very easy to set up.

Can pull updates from Git providers like GitHub, GitLab, etc.

Supports all static site generators

Cons:

Requires an understanding of web programming (React, JavaScript, TypeScript).

Requires an understanding of Markdown (a few tools are available that can convert HTML or Word documents to Markdown).

Need help?

If you want to build your first website, you can hire me on Fiverr. I can create a blog with the Gatsby framework and host it on Netlify.

via LearnPainLess’s RSS Feed


Everything You Need To Know About Mern Stack Development Trends

Suppose you are a beginner who wants to learn about Mern Stack development. Where do you start? To help you find the right information, we have put together a detailed overview of the Mern Stack, an open-source combination of powerful technologies.

Many web development stacks are available on the market. Despite this, the Mern Stack remains popular among developers. Why? Before answering that question, you must first know what the Mern Stack is. Let's see.

What is Mern Stack? How It Is Impacting Web Development Services

Mern Stack is a web development stack that combines four powerful technologies for building fast applications. Developers worldwide use it. It is a JavaScript-based stack for building and deploying full-stack web applications quickly and easily.

Mern stands for MongoDB, Express.js, React.js, and Node.js. Together, these technologies make up the Mern Stack and are used to develop web applications and websites. Let's look at each of them.

M– It refers to MongoDB. It is a NoSQL database management system. It is an alternative to the traditional relational database and works with large sets of distributed data. It handles the storage and retrieval of document-oriented information. MongoDB itself is written in C++, but you do not need C++ expertise to use it.

E– It refers to Express.js. It is a web application framework for Node.js, used to build single-page, multipage, and hybrid web applications. It is free and open-source software released under the MIT license. It is also known as the de facto standard server framework for Node.js.

R– It stands for React.Js. It is a free and open-source front-end JavaScript library. It is used for developing user interfaces based on UI components. It is a flexible and efficient JavaScript library.

N– It stands for Node.js. It is a free, open-source, cross-platform JavaScript runtime environment that runs on the V8 engine. It suits a wide range of projects: developers use it to build single-page applications, video streaming sites, and other I/O-intensive web applications. It is a powerful platform for server-side JavaScript.

The Mern Stack is similar to the Mean Stack. The only difference is that Mern Stack relies on React.js, and Mean Stack uses Angular. Angular is a popular front-end framework that aims to simplify development and testing. React.js is one of the most widely used libraries for building user interfaces. Apart from this one component, the two stacks use the same technologies.

Let's answer a common question: is Mern a Full-Stack Solution?

Yes. The Mern Stack is a full-stack solution that follows the traditional 3-tier architectural pattern. It includes the front-end display tier (React.js), the application tier (Express.js and Node.js), and the database tier (MongoDB).

Advantages of Mern Stack Development

Now that we understand the components of Mern Stack (MongoDB, Express.js, React.js, and Node.js), let's look at its advantages:

There is no context switching:

JavaScript is the only language used to build both the client side and the server side of the application. Developers therefore do not need to switch between languages, which makes building web apps more efficient.

Model-View-Controller Architecture:

The Mern Stack supports a model-view-controller (MVC) architecture, which makes it convenient to structure and develop a web application.

Full Stack:

In addition to avoiding context switching, the technologies in the stack are robust and highly compatible with one another. They work together so that client-side and server-side development is handled efficiently and quickly.

Easy Learning Curve:

To get the benefits of the Mern Stack when developing web apps, developers need only one thing: a solid knowledge of JavaScript and JSON.

Disadvantages of Mern Stack Development

Now let's look at the disadvantages of Mern Stack Development:

Productivity:

React is a library rather than a full framework, so React code relies on many third-party libraries. Integrating them takes extra effort, which can lower developer productivity.

Large-Scale Applications:

The Mern Stack is well suited to single-page applications, but it can be challenging to develop a large project with it.

Preventing Common Coding Errors:

If you are looking for a stack that prevents common coding errors, the Mean Stack may be the better choice. The component that sets it apart from the Mern Stack is Angular, which uses TypeScript and so catches common coding errors while the code is being written. React.js does not provide this by default.

We have seen the advantages and disadvantages of Mern Stack Development. Now for the difference between Mern Stack and Mean Stack: the two are the same except for one component. Mern Stack uses React.js, whereas Mean Stack relies on Angular. This is the significant difference between Mern Stack development and Mean Stack development.

View Original Source:

https://www.dreamsoft4u.com/blog/everything-you-need-to-know-about-mern-stack-development-trends/


What is SAP ERP? How does it work?

SAP ERP is an enterprise resource planning software suite created by SAP SE. ERP software integrates the core business processes of an organization into one system.

ERP systems are made up of modules. Each module focuses on a specific business function such as finance, accounting, human resources management, production, materials management or customer relationship management. Businesses use only the modules required for their particular operations.

Customers can use SAP's ERP products to manage their business processes, including accounting, sales, production and HR. Data from all modules is stored in a central database. Because the SAP ERP components are integrated, this common data store allows information to flow from one component to another without duplicate data entry. It also helps enforce financial, legal, and process controls.

SAP ERP Central Component (SAP ECC), which is used in large and medium-sized American companies, is often implemented on-premises. SAP ERP used to be synonymous with ECC. It now refers to all SAP ERP products, including ECC, S/4HANA and Business One. ECC, SAP's flagship ERP, serves as the foundation for S/4HANA, its next-generation product. However, SAP implementation can be difficult. It is wise to hire SAP consultants who are able to handle all technical aspects of SAP ERP.
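The idea of a common data store can be illustrated with a small Python sketch. This is not SAP code: the `CentralStore`, `SalesModule`, and `FinanceModule` classes and the order fields are hypothetical names chosen only to show two modules sharing one record instead of entering it twice.

```python
from dataclasses import dataclass, field


@dataclass
class CentralStore:
    """Hypothetical stand-in for the single database shared by all modules."""
    orders: dict = field(default_factory=dict)


class SalesModule:
    def __init__(self, store: CentralStore):
        self.store = store

    def record_order(self, order_id: str, customer: str, amount: float) -> None:
        # The order is entered exactly once, here.
        self.store.orders[order_id] = {"customer": customer, "amount": amount}


class FinanceModule:
    def __init__(self, store: CentralStore):
        self.store = store

    def invoice_text(self, order_id: str) -> str:
        # Finance reads the same record; nothing is typed in a second time.
        order = self.store.orders[order_id]
        return f"Invoice {order_id}: {order['customer']} owes {order['amount']:.2f}"


store = CentralStore()
sales = SalesModule(store)
finance = FinanceModule(store)

sales.record_order("SO-1", "Acme", 1200.0)
invoice = finance.invoice_text("SO-1")
print(invoice)
```

Because both modules hold a reference to the same store, a correction made in one place is immediately visible to the other, which is the property the paragraph above describes.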

Why do Organizations Choose SAP ERP?

Many American organizations prefer SAP ERP, for several reasons.

All-Inclusive Solutions

SAP has a wide range of ERP cloud systems and tools that can be tailored to your business, no matter how many employees you have.

Leading Edge Technology

SAP has more than 40 years of experience in enterprise resource planning across all industries and businesses in the USA. Its cloud ERP tools are designed to be future-proof: they use the most recent technology and receive automatic updates.

Adaptability

SAP Cloud ERP applications are easy to use and adaptable. Flexibility is at the heart of SAP implementation.

Management Of Cloud Security

The SAP Business Technology Platform is supported by an advanced technology infrastructure. However, security threats and data protection still have to be managed, typically with help from agencies that offer data migration services.

How did SAP ERP gain importance?

SAP ERP was developed from the earlier SAP R/3 software. SAP R/3, launched on 6 July 1992, comprised a number of programs and applications. All applications ran on the SAP Web Application Server. Extension sets were used to deliver new features while keeping the core stable. The Web Application Server was integrated with SAP Basis.

The architecture changed completely with the introduction of mySAP ERP in 2004. SAP introduced ERP Central Component (SAP ECC) in place of R/3 Enterprise. The 2003 architecture was also modified to support the transition from a service-oriented architecture to an enterprise service architecture.

How does the SAP ERP system work?

S/4HANA is SAP's next-generation product. It is built on ECC, SAP's flagship ERP. There are two types of modules: technical and functional. The following are examples of functional modules:

Human Capital Management (SAP HCM)

Production Planning (SAP PP)

Materials Management (SAP MM)

Project System (SAP PS)

Sales and Distribution (SAP SD)

Plant Maintenance (SAP PM)

SAP ECC is usually deployed as an on-premises ERP solution using a client-server architecture. There are three tiers: the presentation tier, the application tier and the database tier.

The presentation tier provides the user with the SAP GUI. This can be installed on any computer running Microsoft Windows or macOS. The SAP GUI is the interface between the user's computer and ECC.

The application tier is the core of ECC. It executes business logic, processes transactions, runs reports, monitors database access, prints documents, and communicates with other applications.

The database tier stores transaction data and other information.
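The division of labor between the three tiers can be sketched in a few lines of Python. This is only an illustration of the general three-tier pattern, not of SAP's actual interfaces: every class and method name below (including `post_goods_receipt`) is a made-up assumption, and an in-memory dictionary stands in for the database.

```python
class DatabaseTier:
    """Stores transaction data (an in-memory dict stands in for a real database)."""

    def __init__(self):
        self._rows = {}

    def save(self, key, row):
        self._rows[key] = row

    def load(self, key):
        return self._rows[key]


class ApplicationTier:
    """Runs business logic and is the only tier that talks to the database."""

    def __init__(self, db):
        self._db = db

    def post_goods_receipt(self, material, quantity):
        # Business rule lives here, not in the GUI or the database.
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._db.save(material, {"material": material, "stock": quantity})
        return self._db.load(material)


class PresentationTier:
    """Plays the role of the GUI: collects input and displays results."""

    def __init__(self, app):
        self._app = app

    def submit(self, material, quantity):
        row = self._app.post_goods_receipt(material, quantity)
        return f"{row['material']}: {row['stock']} in stock"


gui = PresentationTier(ApplicationTier(DatabaseTier()))
message = gui.submit("BOLT-M8", 500)
print(message)
```

The presentation tier never touches the database directly. Each tier only knows about the one beneath it, which is what allows the tiers to run on separate machines in a client-server deployment.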

SAP Business Suite is a collection of modules that includes supply chain management (SCM), product lifecycle management and ERP. ERP is the core component of SAP Business Suite.

S/4HANA, SAP's in-memory ERP platform, was released in 2015. It is a rewrite of SAP Business Suite designed for the SAP HANA in-memory database. SAP claims that S/4HANA was designed to simplify complex tasks and eventually replace SAP ECC.

An SAP implementation (ECC or S/4HANA) can be a good option for large companies in the USA. Its benefits include:

Standardization of business processes within an organization

Business-wide unified view.

Strong reporting and analytics tools can help with decision-making.

Conclusion

SAP is committed to moving more customers to the cloud and to S/4HANA. By using these platforms to deliver leading-edge technologies such as AI, big data, and advanced analytics, an SAP implementation can increase the productivity of your ERP.

SAP ERP is designed to help customers be more flexible, innovative, and profitable by leveraging AI, ubiquitous networking, and human-centric user experiences. An expert should handle SAP implementation. An experienced agency that offers SAP consulting services can help an organization in the USA drive greater growth and better resource planning.


On-Premises and Cloud data warehouses – Differences

Data warehousing is the process of collecting and managing data from multiple sources so that it can yield meaningful business insights. A data warehouse is designed to enable and support business intelligence activities, especially analytics. Its data is extracted from multiple sources, such as application log files and transaction applications.

Looking at the data warehouse market, the global Data Warehouse as a Service (DWaaS) market is expected to reach $4.7bn in 2021 and to grow at a CAGR of 22.3% from 2021 to 2026. Let's look at data warehouse concepts, which are important to understand when considering working with offshore engineering services companies.

Data Warehouse Concepts

The architecture of a data warehouse is made up of three tiers: top, middle, and bottom. The top tier is the front-end client, which presents results through reporting, analysis, and data mining tools. The middle tier is the analytics engine used to access and analyze the data. The bottom tier is the database server, where the data is loaded and stored. The data in the bottom tier is stored in two ways:

Frequently accessed data is stored in very fast storage, such as SSD drives

Data that is not accessed frequently is stored in a cheaper object store, such as Amazon S3

The data warehouse ensures that frequently accessed data is moved into the fast storage to optimize query speed.
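The following Python sketch shows one simple way such a hot/cold policy could work. It is a toy model under stated assumptions, not how any particular warehouse implements tiering: the `TieredStore` class, the read-count rule, and the `promote_after` threshold are all invented for illustration, and two dictionaries stand in for SSD and object storage.

```python
from collections import Counter


class TieredStore:
    """Toy two-level store: 'hot' stands in for SSD, 'cold' for an object store."""

    def __init__(self, promote_after: int = 3):
        self.hot = {}
        self.cold = {}
        self.reads = Counter()
        self.promote_after = promote_after  # assumed threshold, not a real default

    def load(self, key, value):
        # Newly loaded data lands in cold storage first.
        self.cold[key] = value

    def read(self, key):
        self.reads[key] += 1
        if key in self.hot:
            return self.hot[key]
        value = self.cold[key]
        if self.reads[key] >= self.promote_after:
            # Frequently read: move it to the fast tier for later queries.
            self.hot[key] = self.cold.pop(key)
        return value


store = TieredStore(promote_after=3)
store.load("sales_2021", [10, 20, 30])
store.load("sales_2015", [1, 2, 3])

for _ in range(3):
    store.read("sales_2021")   # read often, so it gets promoted
store.read("sales_2015")       # read once, so it stays in cold storage

print(sorted(store.hot), sorted(store.cold))
```

A real system would also demote data that stops being read and would weigh recency as well as frequency. The sketch leaves that out to keep the promotion step easy to follow.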

On-Premises Data Warehouse

With an on-premises data warehouse, the organization's own team is wholly responsible for deployment and operation. Here are some of the key benefits of an on-premises data warehouse.

Control: With an on-premises deployment, the organization has complete authority over which hardware and software to choose, where to place it, and who can access it. The IT team also has physical access to the hardware if anything fails, and it can go through every layer of software to troubleshoot an issue without depending on third parties.