#another one with so many ideas that i cant cram it all into one image

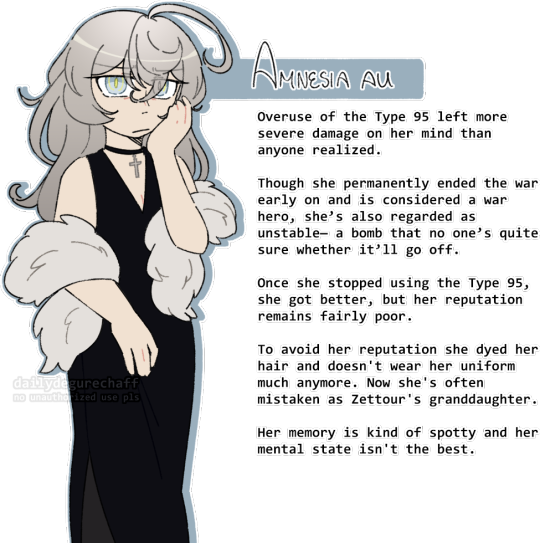

Today's Daily Degurechaff is... Regular Tanya is on vacation day 6 - Amnesia/Memory Loss AU

#this is the last day of AU Degus#dailydegurechaff#youjo senki#alternate universe#amnesia au#another one with so many ideas that i cant cram it all into one image#guys shes so depressed someone help her#the moment she realized she couldnt remember her old name at all it was over for her#she’s not normally in pretty dresses. but I was trying to depict the initial fic scene#alone at a party looking like she hates everyone

106 notes


i yearn for one(1) thing only, and that is to have a nice, simplistic, cartoonish artstyle. an artstyle that doesnt rely on anatomy, but the "movement" of the drawing, if you get what i mean.

i dont want realistic proportions and traditional colors and basic poses and gradient shading, i want funky lil dudes in funky poses with funky styles littering my sketchbook :( but alas i havent figured out how to develop that kind of style yet, my brain wants anatomy to look nice but also i dont want to draw eyes. i dont want to take time out of my day to learn how to draw lips i want to draw a line that extends past the characters face. i dont want all my characters to have pointy chins with curved cheeks i want their heads to be round and friend-like or full of sharp edges depending on their personalities and styles. i want to give them all not-quite human ears, blob feet, simple faces, but at the same time i want enough detail to convey the story or emotion im trying to tell.

ive spent so much time recently agonizing over how to use 3d model websites, using real-life references and tracing over them for practice, color-picking from real images to try and do realism and failing miserably, but you know whats easier than that? funky little dudes. little dudes who do not care if their legs are too long or their hair is too bouncy. i dont want my characters to look human.

ive spent enough time on the artfight website to realize that most people who classify their characters as "human" have the most basic ass designs (no offense to people who like basic human designs its just not my thing) or its like dnd-medieval style outfits which i cant draw for the life of me (ive tried). again no offense to people who actively enjoy and draw characters like that. i just need my dudes to have that certain,,, off-ness to them. tails are cool. wings are swag (especially if they arent even like,, fully attached,, ), elf ears are so wonderful to me no matter how much theyre overused, horns are so much fun to draw, and colors!! i have no knowledge in the color theory department so this works great for me!! the only thing i really know is dont shade with black, other than that i just colorpick from references usually but i dont want to do that!! i want the colors to hurt people's eyes but in a satisfying way. like the character's design is so nice to look at that you dont mind your eyes hurting a bit. like how im enjoying writing this post even though its 2 am and the brightness on my computer wont go any lower.

and then another thing ive noticed from being on the artfight website is that a lot of people classify their characters that are anthro/have anthro features under humanoids/monsters. like i made a google form to find some people to attack and someone sent me in a character with some sort of animal (wolf? idk) arms and legs. like dude!! peak character design i love her. but me personally? i cant draw that shit, its so hard for me. i tried a while back and its just Not my thing. nothing against furries i just. cant. and i dont want to either.

and i got another submission that i accidentally deleted that was like full anthro/wolf-like like my comrade,,, i cannot draw animals what makes you think i can draw an animal who acts like a human lmao. i can do like. very basic tails, and also animal ears but i cant do the arms and legs and such i just dont know the anatomy, and i know i was talking about how i dont want to care about anatomy but i feel like for anthros you really do need to know at least basic animal anatomy so you know how the limbs look and shit and i dont have that knowledge and dont feel like gaining it.

and then there were some submissions that i absolutely adored. there was one that like, was vaguely human shaped but definitely was not a human. they had a dark-ish lavender colored skin and horns and tusks and like goat ears and a sorta fluffy tail with spikes on it and they had wings and such and they were such a pleasure to draw i love them. and they had a fairly simple outfit too, nothing too complicated. and then i also enjoy object head characters, theyre so neato to me. i got one of those and i really wish i had the motivation to work on it cause it looks so fun.

i want to make funky characters but id have nothing to do with them because the only book i ever tried writing (key word tried - never got past planning it out) had strictly human characters in it, and most of the books i read are humans/humans with powers in situations specific to them so id have no idea what lore to make with the dudes. assuming i have the motivation to make lore and backstory because honestly i just really enjoy character designing its super duper fun.

(side note a song about trucks doing the deed came on just now and its interrupted my flow, apologies).

i only have three actual characters right now. one is an original roleplay oc whos design is literally athletic shorts, an oversized long sleeved grey sweatshirt, long purple hair, and demon horns. the second one is my persona whos design is some sorta medieval knight outfit kinda thing? but not ugly it looks really cool (idk one of my friends designed it bc i won some contest from him but the drawing was on a super small scale so idrk the details,,,) with a plague doctor mask and crown, and shoulder length wavy brown hair, dyed bright pink at the end. and then my last one im not too comfortable using other places because theyre a character my friend is using in the story hes writing, and thats really the only place theyve been used. but theyre easily my favorite and im already writing a ton so ill talk about them too.

they're a sorta elf species thing from another planet, with pale green skin and pointed ears. they also have a tail, its like,, super thin, but with a feathery bit at the end. probably not the texture of a feather but i dont know how else to describe it. they have short, curly, almost-draco-malfoy-blonde hair that when it gets too long they can put in a man bun. their eyesight is kinda shitty so when they got to earth, they were exploring some supply closets around the airship. drop off area. thing. like airport but for rocketships and also fancier. yeah. they were exploring that area and found a nice big pair of round glasses with grey frames. and they also found a cowboy-style hat and a sharpie so they wrote their name on the underside of the brim of the hat and stole the hat and glasses (but left the sharpie in the supply closet).

yeah theyre my favorite, my absolute beloved, my child, so cool. i want more characters like them but with maybe a bit more snazzier designs. theyre super cool and all but they could have more pizzazz if they werent in a story where its too late to give them more pizzazz. i just want to be able to give my characters thigh-high boots with a bunch of buckles and fluffy hair with tons of accessories crammed in and abnormally large and long ears that can harbor many piercings and horns that can hold rings on them and special little details on their outfits like who knows what but i dont have any characters to do that too, so i have to make them from scratch, which is always hard especially when you have artblock.

and i also have like 17 characters i need to fully draw, line, and maybe color for artfight before august 1st. so i dont know. i have many things to do and plenty of time to do it but instead i spend my time halfway watching repetitive youtube videos that get boring or sleeping all damn day because i stay up too late doing things like this or i just do nothing at all and its tiring and frustrating but i also feel nothing about it like theres no consequence if i dont do it besides you know. not doing it, not gaining that experience, not making something i enjoy.

so i should do it but i dont for whatever reason, i think its called executive dysfunction but im not sure. this post started out very differently than it ended and i said somewhere up there that i was writing this at 2 am but now its almost 3. this is so many words why couldnt i have put this energy into something productive

#long post#sorry its so messy but like i said its almost 3 am and i dont want to go back and format all this#i might come back and make it look nicer in the morning#maybe not who knows#i just checked and this is 1.5k words what the hell

3 notes


Vanishing point: the rise of the invisible computer

The long read: For decades, computers have got smaller and more powerful, enabling huge scientific progress. But this can't go on for ever. What happens when they stop shrinking?

In 1971, Intel, then an obscure firm in what would only later come to be known as Silicon Valley, released a chip called the 4004. It was the world's first commercially available microprocessor, which meant it sported all the electronic circuits necessary for advanced number-crunching in a single, tiny package. It was a marvel of its time, built from 2,300 tiny transistors, each around 10,000 nanometres (or billionths of a metre) across, about the size of a red blood cell. A transistor is an electronic switch that, by flipping between on and off, provides a physical representation of the 1s and 0s that are the fundamental particles of information.

In 2015 Intel, by then the world's leading chipmaker, with revenues of more than $55bn that year, released its Skylake chips. The firm no longer publishes exact numbers, but the best guess is that they have about 1.5bn to 2bn transistors apiece. Spaced 14 nanometres apart, each is so tiny as to be literally invisible, for they are more than an order of magnitude smaller than the wavelengths of light that humans use to see.

Everyone knows that modern computers are better than old ones. But it is hard to convey just how much better, for no other consumer technology has improved at anything approaching a similar pace. The standard analogy is with cars: if the car from 1971 had improved at the same rate as computer chips, then by 2015 new models would have had top speeds of about 420 million miles per hour. That is roughly two-thirds the speed of light, or fast enough to drive round the world in about a fifth of a second. If that is still too slow, then before the end of 2017 models that can go twice as fast again will begin arriving in showrooms.
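The arithmetic behind the car analogy can be checked with a short sketch. The article does not say how fast the 1971 car was or how many doublings it assumes; the figures below (a 100 mph starting speed and 22 doublings, the count the article gives further on for 1971 to mid-2016) are assumptions chosen because they reproduce the quoted number, and the function name is purely illustrative.

```python
# Physical constants (not from the article).
SPEED_OF_LIGHT_MPH = 670_616_629       # speed of light in miles per hour
EARTH_CIRCUMFERENCE_MILES = 24_901     # equatorial circumference

# Assumptions: the article gives neither figure for the car analogy.
START_SPEED_MPH = 100                  # hypothetical 1971 top speed
DOUBLINGS = 22                         # ticks of Moore's law, 1971 to mid-2016


def speed_after_doublings(start_mph, doublings):
    """Top speed if it doubled `doublings` times from `start_mph`."""
    return start_mph * 2 ** doublings


top_speed_mph = speed_after_doublings(START_SPEED_MPH, DOUBLINGS)
fraction_of_light = top_speed_mph / SPEED_OF_LIGHT_MPH
lap_seconds = EARTH_CIRCUMFERENCE_MILES / top_speed_mph * 3600

print(f"top speed: {top_speed_mph:,} mph")
print(f"fraction of light speed: {fraction_of_light:.2f}")
print(f"time to circle the Earth: {lap_seconds:.2f} s")
```

Under those assumptions the top speed comes out at about 419 million mph, a little under two-thirds of the speed of light, and a lap of the equator takes roughly 0.21 seconds.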

This blistering progress is a consequence of an observation first made in 1965 by one of Intel's founders, Gordon Moore. Moore noted that the number of components that could be crammed onto an integrated circuit was doubling every year. Later amended to every two years, Moore's law has become a self-fulfilling prophecy that sets the pace for the entire computing industry. Each year, firms such as Intel and the Taiwan Semiconductor Manufacturing Company spend billions of dollars figuring out how to keep shrinking the components that go into computer chips. Along the way, Moore's law has helped to build a world in which chips are built in to everything from kettles to cars (which can, increasingly, drive themselves), where millions of people relax in virtual worlds, financial markets are played by algorithms and pundits worry that artificial intelligence will soon take all the jobs.

But it is also a force that is nearly spent. Shrinking a chip's components gets harder each time you do it, and with modern transistors having features measured in mere dozens of atoms, engineers are simply running out of room. There have been roughly 22 ticks of Moore's law since the launch of the 4004 in 1971 through to mid-2016. For the law to hold until 2050 means there will have to be 17 more, in which case those engineers would have to figure out how to build computers from components smaller than an atom of hydrogen, the smallest element there is. That, as far as anyone knows, is impossible.
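A rough sketch of that extrapolation, under assumptions the article does not spell out: one tick every two years, each tick doubling component density so that linear dimensions shrink by a factor of the square root of two, a starting point of the 14 nm spacing quoted for Skylake, and a hydrogen atom roughly 0.1 nm across (about twice the Bohr radius). The function names are illustrative.

```python
import math

YEARS_PER_TICK = 2            # Moore's law as amended: a doubling every two years
HYDROGEN_DIAMETER_NM = 0.106  # assumption: about twice the Bohr radius (0.0529 nm)


def ticks_between(start_year, end_year, years_per_tick=YEARS_PER_TICK):
    """Whole number of doublings that fit between two years."""
    return (end_year - start_year) // years_per_tick


def feature_size_after(size_nm, ticks):
    """Linear feature size after `ticks` density doublings.

    Doubling the density halves the area per component, so each
    tick shrinks linear dimensions by a factor of sqrt(2).
    """
    return size_nm / math.sqrt(2) ** ticks


past_ticks = ticks_between(1971, 2016)     # ticks since the 4004
future_ticks = ticks_between(2016, 2050)   # ticks still needed to reach 2050
size_2050_nm = feature_size_after(14, future_ticks)

print(f"ticks 1971-2016: {past_ticks}")
print(f"ticks 2016-2050: {future_ticks}")
print(f"projected feature size in 2050: {size_2050_nm:.3f} nm")
print(f"smaller than a hydrogen atom: {size_2050_nm < HYDROGEN_DIAMETER_NM}")
```

With these inputs the tick counts match the article's 22 and 17, and the projected 2050 feature size is a little under 0.04 nm, well below the assumed width of a hydrogen atom.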

Yet business will kill Moore's law before physics does, for the benefits of shrinking transistors are not what they used to be. Moore's law was given teeth by a related phenomenon called Dennard scaling (named for Robert Dennard, an IBM engineer who first formalised the idea in 1974), which states that shrinking a chip's components makes that chip faster, less power-hungry and cheaper to produce. Chips with smaller components, in other words, are better chips, which is why the computing industry has been able to persuade consumers to shell out for the latest models every few years. But the old magic is fading.

Shrinking chips no longer makes them faster or more efficient in the way that it used to. At the same time, the rising cost of the ultra-sophisticated equipment needed to make the chips is eroding the financial gains. Moore's second law, more light-hearted than his first, states that the cost of a foundry, as such factories are called, doubles every four years. A modern one leaves little change from $10bn. Even for Intel, that is a lot of money.

The result is a consensus among Silicon Valley's experts that Moore's law is near its end. "From an economic standpoint, Moore's law is dead," says Linley Gwennap, who runs a Silicon Valley analysis firm. Dario Gil, IBM's head of research and development, is similarly frank: "I would say categorically that the future of computing cannot just be Moore's law any more." Bob Colwell, a former chip designer at Intel, thinks the industry may be able to get down to chips whose components are just five nanometres apart by the early 2020s, "but you'll struggle to persuade me that they'll get much further than that".

One of the most powerful technological forces of the past 50 years, in other words, will soon have run its course. The assumption that computers will carry on getting better and cheaper at breakneck speed is baked into people's ideas about the future. It underlies many technological forecasts, from self-driving cars to better artificial intelligence and ever more compelling consumer gadgetry. There are other ways of making computers better besides shrinking their components. The end of Moore's law does not mean that the computer revolution will stall. But it does mean that the coming decades will look very different from the preceding ones, for none of the alternatives is as reliable, or as repeatable, as the great shrinkage of the past half-century.

Moore's law has made computers smaller, transforming them from room-filling behemoths to svelte, pocket-filling slabs. It has also made them more frugal: a smartphone that packs more computing power than was available to entire nations in 1971 can last a day or more on a single battery charge. But its most famous effect has been to make computers faster. By 2050, when Moore's law will be ancient history, engineers will have to make use of a string of other tricks if they are to keep computers getting faster.

There are some easy wins. One is better programming. The breakneck pace of Moore's law has in the past left software firms with little time to streamline their products. The fact that their customers would be buying faster machines every few years weakened the incentive even further: the easiest way to speed up sluggish code might simply be to wait a year or two for hardware to catch up. As Moore's law winds down, the famously short product cycles of the computing industry may start to lengthen, giving programmers more time to polish their work.

Another is to design chips that trade general mathematical prowess for more specialised hardware. Modern chips are starting to feature specialised circuits designed to speed up common tasks, such as decompressing a film, performing the complex calculations required for encryption or drawing the complicated 3D graphics used in video games. As computers spread into all sorts of other products, such specialised silicon will be very useful. Self-driving cars, for instance, will increasingly make use of machine vision, in which computers learn to interpret images from the real world, classifying objects and extracting information, which is a computationally demanding task. Specialised circuitry will provide a significant boost.

However, for computing to continue to improve at the rate to which everyone has become accustomed, something more radical will be needed. One idea is to try to keep Moore's law going by moving it into the third dimension. Modern chips are essentially flat, but researchers are toying with chips that stack their components on top of each other. Even if the footprint of such chips stops shrinking, building up would allow their designers to keep cramming in more components, just as tower blocks can house more people in a given area than low-rise houses.

The first such devices are already coming to market: Samsung, a big South Korean microelectronics firm, sells hard drives whose memory chips are stacked in several layers. The technology holds huge promise.

Modern computers mount their memory several centimetres from their processors. At silicon speeds a centimetre is a long way, meaning significant delays whenever new data need to be fetched. A 3D chip could eliminate that bottleneck by sandwiching layers of processing logic between layers of memory. IBM reckons that 3D chips could allow designers to shrink a supercomputer that currently fills a building to something the size of a shoebox.

But making it work will require some fundamental design changes. Modern chips already run hot, requiring beefy heatsinks and fans to keep them cool. A 3D chip would be even worse, for the surface area available to remove heat would grow much more slowly than the volume that generates it. For the same reason, there are problems with getting enough electricity and data into such a chip to keep it powered and fed with numbers to crunch. IBM's shoebox supercomputer would therefore require liquid cooling. Microscopic channels would be drilled into each chip, allowing cooling liquid to flow through. At the same time, the firm believes that the coolant can double as a power source. The idea is to use it as the electrolyte in a flow battery, in which electrolyte flows past fixed electrodes.
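The surface-versus-volume problem can be illustrated with a toy model. This is only a sketch under made-up assumptions: the chip is treated as a square stack of identical layers, heat generated is taken to be proportional to volume, and the 10 mm footprint and 0.05 mm layer thickness are hypothetical numbers, not figures from IBM or the article.

```python
# Hypothetical dimensions, chosen only for illustration.
SIDE_MM = 10.0    # side of the square footprint
LAYER_MM = 0.05   # thickness of one stacked layer


def stack_volume_mm3(side_mm, layer_mm, layers):
    """Volume of the stack, used as a proxy for the heat it generates."""
    return side_mm * side_mm * layer_mm * layers


def stack_surface_mm2(side_mm, layer_mm, layers):
    """Outer surface of the stack: top and bottom faces plus four sides."""
    height_mm = layer_mm * layers
    return 2 * side_mm * side_mm + 4 * side_mm * height_mm


base_volume = stack_volume_mm3(SIDE_MM, LAYER_MM, 1)
base_surface = stack_surface_mm2(SIDE_MM, LAYER_MM, 1)

for layers in (1, 10, 100):
    volume_ratio = stack_volume_mm3(SIDE_MM, LAYER_MM, layers) / base_volume
    surface_ratio = stack_surface_mm2(SIDE_MM, LAYER_MM, layers) / base_surface
    print(f"{layers:>3} layers: volume x{volume_ratio:.0f}, surface x{surface_ratio:.2f}")
```

In this model a 100-layer stack has 100 times the heat-generating volume of a single layer but not even twice the outer surface through which to shed that heat, which is why cooling channels running through the chip itself become attractive.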

There are more exotic ideas, too. Quantum computing proposes to use the counterintuitive rules of quantum mechanics to build machines that can solve certain types of mathematical problem far more quickly than any conventional computer, no matter how fast or high-tech (for many other problems, though, a quantum machine would offer no advantage). Their most famous application is cracking some cryptographic codes, but their most important use may be accurately simulating the quantum subtleties of chemistry, a problem that has thousands of uses in manufacturing and industry but that conventional machines find almost completely intractable.

A decade ago, quantum computing was confined to speculative research within universities. These days several big firms, including Microsoft, IBM and Google, are pouring money into the technology, all of which forecast that quantum chips should be available within the next decade or two (indeed, anyone who is interested can already play with one of IBM's quantum chips remotely, programming it via the internet).

A Canadian firm called D-Wave already sells a limited quantum computer, which can perform just one mathematical function, though it is not yet clear whether that specific machine is really faster than a non-quantum model.

Like 3D chips, quantum computers need specialised care and feeding. For a quantum computer to work, its internals must be sealed off from the outside world. Quantum computers must be chilled with liquid helium to within a hair's breadth of absolute zero, and protected by sophisticated shielding, for even the smallest pulse of heat or stray electromagnetic wave could ruin the delicate quantum states that such machines rely on.

Each of these prospective improvements, though, is limited: either the gains are a one-off, or they apply only to certain sorts of calculations. The great strength of Moore's law was that it improved everything, every couple of years, with metronomic regularity. Progress in the future will be bittier, more unpredictable and more erratic. And, unlike the glory days, it is not clear how well any of this translates to consumer products. Few people would want a cryogenically cooled quantum PC or smartphone, after all. Ditto liquid cooling, which is heavy, messy and complicated. Even building specialised logic for a given task is worthwhile only if it will be regularly used.

But all three technologies will work well in data centres, where they will help to power another big trend of the next few decades. Traditionally, a computer has been a box on your desk or in your pocket. In the future the increasingly ubiquitous connectivity provided by the internet and the mobile-phone network will allow a great deal of computing power to be hidden away in data centres, with customers making use of it as and when they need it. In other words, computing will become a utility that is tapped on demand, like electricity or water today.

The ability to remove the hardware that does the computational heavy lifting from the hunk of plastic with which users actually interact, known as cloud computing, will be one of the most important ways for the industry to blunt the impact of the demise of Moore's law. Unlike a smartphone or a PC, which can only grow so large, data centres can be made more powerful simply by building them bigger. As the world's demand for computing continues to expand, an increasing proportion of it will take place in shadowy warehouses hundreds of miles from the users who are being served.

This is already beginning to happen. Take an app such as Siri, Apple's voice-powered personal assistant. Decoding human speech and working out the intent behind an instruction such as "Siri, find me some Indian restaurants nearby" requires more computing power than an iPhone has available. Instead, the phone simply records its user's voice and forwards the information to a beefier computer in one of Apple's data centres. Once that remote computer has figured out an appropriate response, it sends the information back to the iPhone.

The same model can be applied to much more than just smartphones. Chips have already made their way into things not normally thought of as computers, from cars to medical implants to televisions and kettles, and the process is accelerating. Dubbed the internet of things (IoT), the idea is to embed computing into almost every conceivable object.

Smart clothes will use a home network to tell a washing machine what settings to use; smart paving slabs will monitor pedestrian traffic in cities and give governments forensically detailed maps of air pollution.

Once again, a glimpse of that future is visible already: engineers at firms such as Rolls-Royce can even now monitor dozens of performance indicators for individual jet engines in flight, for instance. Smart home hubs, which allow their owners to control everything from lighting to their kitchen appliances with a smartphone, have been popular among early adopters.

But for the IoT to reach its full potential will require some way to make sense of the torrents of data that billions of embedded chips will throw off. The IoT chips themselves will not be up to the task: the chip embedded in a smart paving slab, for instance, will have to be as cheap as possible, and very frugal with its power: since connecting individual paving stones to the electricity network is impractical, such chips will have to scavenge energy from heat, footfalls or even ambient electromagnetic radiation.

As Moore's law runs into the sand, then, the definition of "better" will change. Besides the avenues outlined above, many other possible paths look promising. Much effort is going into improving the energy efficiency of computers, for instance. This matters for several reasons: consumers want their smartphones to have longer battery life; the IoT will require computers to be deployed in places where mains power is not available; and the sheer amount of computing going on is already consuming something like 2% of the world's electricity generation.

User interfaces are another area ripe for improvement, for today's technology is ancient. Keyboards are a direct descendant of mechanical typewriters. The mouse was first demonstrated in 1968, as were the graphical user interfaces, such as Windows or iOS, which have replaced the arcane text symbols of early computers with friendly icons and windows. Cern, Europe's particle-physics laboratory, pioneered touchscreens in the 1970s.

Siri may leave your phone and become omnipresent: artificial intelligence will (and cloud computing could) allow virtually any machine, no matter how individually feeble, to be controlled simply by talking to it. Samsung already makes a voice-controlled television.

Technologies such as gesture tracking and gaze tracking, currently being pioneered for virtual-reality video games, may also prove useful. Augmented reality (AR), a close cousin of virtual reality that involves laying computer-generated information over the top of the real world, will begin to blend the virtual and the real. Google may have sent its Glass AR headset back to the drawing board, but something very like it will probably find a use one day. And the firm is working on electronic contact lenses that could perform similar functions while being much less intrusive.

Moore's law cannot go on for ever. But as it fades, it will fade in importance. It mattered a lot when your computer was confined to a box on your desk, and when computers were too slow to perform many desirable tasks. It gave a gigantic global industry a master metronome, and a future without it will see computing progress become harder, more fitful and more irregular. But progress will still happen. The computer of 2050 will be a system of tiny chips embedded in everything from your kitchen counter to your car. Most of them will have access to vast amounts of computing power delivered wirelessly, through the internet, and you will interact with them by speaking to the room. Trillions of tiny chips will be scattered through every corner of the physical environment, making a world more comprehensible and more monitored than ever before. Moore's law may soon be over. The computing revolution is not.

This article is adapted from Megatech: Technology in 2050, edited by Daniel Franklin, published by Economist Books on 16 February

Read more: http://ift.tt/2jrM3vy

from Vanishing point: the rise of the invisible computer

0 notes