#apache spark advantages

Exploring the Boundless World of Java Programming: Your Path to Software Development Excellence

The world of programming is a fascinating and dynamic realm where innovation knows no bounds. In this rapidly evolving landscape, one language has remained a steadfast and versatile companion to developers for many years – Java. As one of the cornerstones of software development, Java is a programming language that continues to shape the digital future. Its ability to create diverse applications, its cross-platform compatibility, and its emphasis on readability have endeared it to developers across the globe.

Java's extensive libraries and its commitment to staying at the forefront of technology have made it a powerhouse capable of addressing a wide spectrum of challenges in the software development arena. The allure of Java programming lies in its versatility and the potential it offers to explore and master this dynamic and ever-evolving world.

Whether you're a seasoned developer or a novice just embarking on your coding adventure, Java has something to offer. It opens doors to countless opportunities and holds the potential to shape your digital future. As you delve into the capabilities of Java programming, you'll discover why it's a valuable skill to acquire and the myriad ways it can impact your journey in the world of software development.

Java in the World of Development

Java programming's influence spans a wide range of application domains, making it a sought-after skill among developers. Let's explore some key areas where Java shines:

1. Web Development

Java's utility in web development is well-established. The Java Enterprise Edition (Java EE, now Jakarta EE) offers a robust set of tools and specifications for building enterprise-level web applications, and popular frameworks such as Spring and JavaServer Faces (JSF) make web development more efficient and structured.

2. Mobile App Development

For mobile app development, Java has long been a primary language for the Android platform. Android Studio, the official Android development environment, supports building Android apps in Java as well as in Kotlin, which Google has promoted as its preferred Android language since 2019. Given the widespread use of Android devices, Java skills remain in high demand, offering lucrative opportunities for developers.

3. Desktop Applications

Java's Graphical User Interface (GUI) libraries, including Swing and JavaFX, allow developers to create cross-platform desktop applications. This means a single Java application can run on Windows, macOS, and Linux without modification, provided a compatible Java runtime is installed. Java's platform independence is a significant advantage in this regard.

4. Game Development

While not as common as some other languages for game development, Java has made its mark in the gaming industry. Frameworks like LibGDX empower developers to create engaging and interactive games, proving that Java's versatility extends to the world of gaming.

5. Big Data and Analytics

In the realm of big data processing and analytics, Java plays a pivotal role. Apache Hadoop is written in Java. Apache Spark is written largely in Scala, but it runs on the Java Virtual Machine (JVM) and provides a Java API. Both frameworks rely on the JVM's multithreading capabilities to process vast datasets. Java's speed and reliability make it a preferred choice in data-intensive applications.

6. Server-Side Applications

Java is a top choice for building server-side applications. Widely used web servers and servlet containers such as Apache Tomcat and Jetty are written in Java, and the language is common in backend service development. Java's scalability and performance make it a strong candidate for server-side tasks.

7. Cloud Computing

The cloud computing landscape benefits from Java's presence. Java applications can be deployed on cloud platforms such as Amazon Web Services (AWS) and Microsoft Azure. Java's robustness and adaptability make it well suited to building cloud-based services and applications.

8. Scientific and Academic Research

Java's readability and maintainability make it a common choice in scientific and academic research. Researchers and scientists find Java suitable for developing scientific simulations, analysis tools, and research applications. Its ability to handle complex computations and data processing is a significant advantage in the research domain.

9. Internet of Things (IoT)

Java is gaining prominence in the Internet of Things (IoT) domain. It serves as a valuable tool for developing embedded systems, particularly in conjunction with platforms like Raspberry Pi. Its cross-platform compatibility ensures that IoT devices can run smoothly across diverse hardware.

10. Enterprise Software

Many large-scale enterprise-level applications and systems rely on Java as their foundation. Its scalability, security features, and maintainability make it an ideal choice for large organizations. Java's robustness and ability to handle complex business logic are assets in the enterprise software domain.

Java Programming: A Valuable Skill

The allure of Java programming lies in its adaptability and its remarkable potential to shape your career in software development. Java is not just a programming language; it's a gateway to a world of opportunities. With an ever-present demand for skilled Java developers, learning Java can open doors to numerous career prospects and professional growth.

As you embark on your journey to master Java programming, it's essential to have the right guidance and training. If you're committed to becoming a proficient Java developer and unlocking the full potential of this versatile language, consider enrolling in the comprehensive Java training programs offered by ACTE Technologies. ACTE Technologies stands as a trusted guide, offering expert-led courses designed to equip you with the knowledge, skills, and practical experience necessary to excel in the world of Java programming. Your future as a proficient Java developer begins here, and the possibilities are limitless.

Java programming is a valuable investment in your career in software development. The multitude of applications and opportunities it offers, coupled with its sustained relevance and demand, make it a skill worth acquiring. Whether you are entering the world of programming or seeking to expand your horizons, mastering Java is a rewarding and transformative journey. So, why wait? Take the first step towards becoming a proficient Java developer with ACTE Technologies and unlock a world of possibilities in the realm of software development. Your journey to success begins here.

Transform Your Career with Our Big Data Analytics Course: The Future is Now

In today's rapidly evolving technological landscape, the power of data is undeniable. Big data analytics has emerged as a game-changer across industries, revolutionizing the way businesses operate and make informed decisions. By equipping yourself with the right skills and knowledge in this field, you can unlock exciting career opportunities and embark on a path to success. Our comprehensive Big Data Analytics Course is designed to empower you with the expertise needed to thrive in the data-driven world of tomorrow.

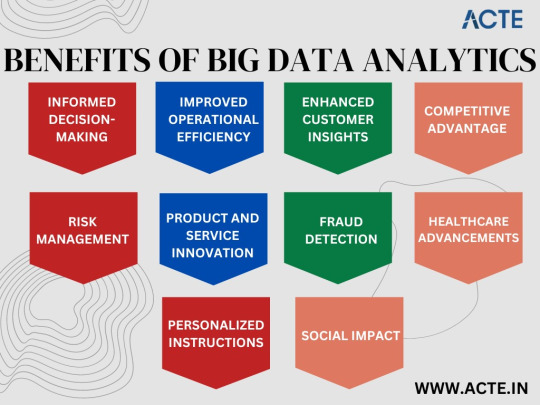

Benefits of Our Big Data Analytics Course

Stay Ahead of the Curve

With the ever-increasing amount of data generated each day, organizations seek professionals who can effectively analyze and interpret this wealth of information. By enrolling in our Big Data Analytics Course, you gain a competitive edge by staying ahead of the curve. Learn the latest techniques and tools used in the industry to extract insights from complex datasets, enabling you to make data-driven decisions that propel organizations into the future.

Highly Lucrative Opportunities

The demand for skilled big data professionals continues to skyrocket, creating a vast array of lucrative job opportunities. As more and more companies recognize the value of harnessing their data, they actively seek individuals with the ability to leverage big data analytics for strategic advantages. By completing our course, you position yourself as a sought-after professional capable of commanding an impressive salary and enjoying job security in this rapidly expanding field.

Broaden Your Career Horizon

Big data analytics transcends industry boundaries, making this skillset highly transferable. By mastering the art of data analysis, you open doors to exciting career prospects in various sectors ranging from finance and healthcare to marketing and e-commerce. The versatility of big data analytics empowers you to shape your career trajectory according to your interests, making for a vibrant and dynamic professional journey.

Ignite Innovation and Growth

In today's digital age, data is often referred to as the new oil, and for a good reason. The ability to unlock insights from vast amounts of data enables organizations to identify trends, optimize processes, and uncover new opportunities for growth. By acquiring proficiency in big data analytics through our course, you become a catalyst for innovation within your organization, driving positive change and propelling businesses towards sustainable success.

What Our Big Data Analytics Course Covers

Advanced Data Analytics Techniques

Our course dives deep into advanced data analytics techniques, equipping you with the knowledge and skills to handle complex datasets. From data preprocessing and data visualization to statistical analysis and predictive modeling, you will gain a comprehensive understanding of the entire data analysis pipeline. Our experienced instructors use practical examples and real-world case studies to ensure you develop proficiency in applying these techniques to solve complex business problems.

Cutting-Edge Tools and Technologies

Staying ahead in the field of big data analytics requires fluency in the latest tools and technologies. Throughout our course, you will work with industry-leading software, such as Apache Hadoop and Spark, Python, R, and SQL, which are widely used for data manipulation, analysis, and visualization. Hands-on exercises and interactive projects provide you with invaluable practical experience, enabling you to confidently apply these tools in real-world scenarios.

Ethical Considerations in Big Data

As the use of big data becomes more prevalent, ethical concerns surrounding privacy, security, and bias arise. Our course dedicates a comprehensive module to exploring the ethical considerations in big data analytics. By understanding the impact of your work on individuals and society, you learn how to ensure responsible data handling and adhere to legal and ethical guidelines. By fostering a sense of responsibility, the course empowers you to embrace ethical practices and make a positive contribution to the industry.

Education and Learning Experience

Expert Instructors

Our Big Data Analytics Course is led by accomplished industry experts with a wealth of experience in the field. These instructors possess a deep understanding of big data analytics and leverage their practical knowledge to deliver engaging and insightful lessons. Their guidance and mentorship ensure you receive top-quality education that aligns with industry best practices, optimally preparing you for the challenges and opportunities that lie ahead.

Interactive and Collaborative Learning

We believe in the power of interactive and collaborative learning experiences. Our Big Data Analytics Course fosters a vibrant learning community where you can engage with fellow students, share ideas, and collaborate on projects. Through group discussions, hands-on activities, and peer feedback, you gain a comprehensive understanding of big data analytics while also developing vital teamwork and communication skills essential for success in the professional world.

Flexible Learning Options

We understand that individuals lead busy lives, juggling multiple commitments. That's why our Big Data Analytics Course offers flexible learning options to suit your schedule. Whether you prefer attending live virtual classes or learning at your own pace through recorded lectures, we provide a range of options to accommodate your needs. Our user-friendly online learning platform empowers you to access course material anytime, anywhere, making it convenient for you to balance learning with your other commitments.

The future is now, and big data analytics has the potential to transform your career. By enrolling in our Big Data Analytics Course at ACTE institute, you gain the necessary knowledge and skills to excel in this rapidly evolving field. From the incredible benefits and the wealth of information provided to the exceptional education and learning experience, our course equips you with the tools you need to thrive in the data-driven world of the future. Don't wait - take the leap and embark on an exciting journey towards a successful and fulfilling career in big data analytics.

Career Opportunities After Completing an Artificial Intelligence Course in Dubai

As the world moves rapidly toward digital transformation, Artificial Intelligence (AI) is no longer a luxury but a necessity across industries. Dubai, a global leader in smart technology adoption, has become a thriving hub for AI innovation and talent. If you're considering taking an Artificial Intelligence course in Dubai, you're making a strategic decision that can open up lucrative and future-proof career paths.

In this article, we'll explore the career opportunities available after completing an Artificial Intelligence course in Dubai and why this city is emerging as a leading AI education and employment destination in 2025.

Why AI in Dubai?

Dubai’s ambitious government-led initiatives—such as the UAE Artificial Intelligence Strategy 2031 and Smart Dubai—are driving AI implementation across sectors. From smart policing and autonomous transport to AI-driven healthcare and finance, Dubai is creating a dynamic environment for AI professionals to flourish.

Completing an Artificial Intelligence course in Dubai equips you with the skills needed to take advantage of these opportunities in a tech-forward economy.

In-Demand AI Career Roles in Dubai

Here are the top job roles you can pursue after earning your AI certification in Dubai:

1. AI Engineer

AI Engineers are the architects behind intelligent systems. They develop algorithms, build neural networks, and optimize AI models for real-world applications. Companies in Dubai’s fintech, logistics, and e-commerce sectors are actively hiring AI Engineers to streamline operations and enhance customer experience.

Key Skills: Python, TensorFlow, Keras, deep learning, machine learning

Average Salary: AED 240,000 – AED 360,000 annually

2. Data Scientist

Data Scientists extract actionable insights from massive datasets using AI-powered tools. With Dubai’s government and private sectors investing in data-driven decision-making, data scientists are in high demand across healthcare, education, and energy sectors.

Key Skills: Data analysis, machine learning, R, Python, SQL, statistics

Average Salary: AED 220,000 – AED 340,000 annually

3. Machine Learning Engineer

These professionals build scalable ML systems that can learn and adapt from data. ML Engineers in Dubai are developing recommendation engines, fraud detection systems, and predictive maintenance tools for smart cities and businesses.

Key Skills: Supervised and unsupervised learning, model deployment, Python, Apache Spark

Average Salary: AED 250,000 – AED 380,000 annually

4. NLP (Natural Language Processing) Specialist

NLP Specialists develop applications that can understand and process human language. In Dubai, the rise of Arabic-language chatbots, voice assistants, and document automation tools has made this role highly valuable.

Key Skills: NLP libraries (spaCy, NLTK), sentiment analysis, text mining

Average Salary: AED 220,000 – AED 300,000 annually

5. AI Project Manager

For those with both technical and managerial skills, this role involves overseeing AI projects from ideation to implementation. Dubai’s public sector projects often require skilled AI project managers to ensure smooth execution.

Key Skills: Agile methodology, team management, data literacy, stakeholder communication

Average Salary: AED 300,000 – AED 450,000 annually

6. Computer Vision Engineer

In sectors like security, autonomous vehicles, and smart retail, computer vision is essential. Dubai’s airport systems, surveillance, and retail analytics are using CV applications extensively.

Key Skills: OpenCV, deep learning, image processing, object detection

Average Salary: AED 240,000 – AED 320,000 annually

7. AI Consultant

AI consultants guide businesses in understanding how AI can solve operational challenges. Consulting firms and innovation labs in Dubai are looking for AI experts who can advise on implementation and ROI.

Key Skills: AI strategy, solution architecture, client management

Average Salary: AED 280,000 – AED 400,000 annually

Top Industries Hiring AI Talent in Dubai

Dubai’s cross-sectoral adoption of AI means that career opportunities aren’t limited to just tech companies. Here are the top industries where AI professionals are in high demand:

Healthcare

From AI-powered diagnostics to hospital management systems, healthcare in Dubai is being transformed. Government and private hospitals are adopting machine learning models for faster and more accurate care.

Finance & Banking

AI is being used for fraud detection, automated credit scoring, algorithmic trading, and customer service. Dubai’s banking sector is particularly active in integrating AI to improve efficiency and reduce risk.

Logistics & Supply Chain

As a global logistics hub, Dubai relies heavily on predictive analytics, route optimization, and intelligent inventory systems—all powered by AI.

Smart Cities & Government

The Smart Dubai initiative aims to digitize every aspect of city living using AI—from transportation and energy to law enforcement and education.

Retail & E-Commerce

Companies like Noon and Carrefour are leveraging AI to personalize shopping experiences, manage inventory, and drive targeted marketing.

Real Estate & Construction

AI is being used in property valuation, risk assessment, and smart building technologies throughout Dubai’s rapidly growing skyline.

Advantages of Studying AI in Dubai

Completing your Artificial Intelligence course in Dubai offers several advantages beyond just academics:

International Exposure

Dubai is home to professionals from over 200 nationalities. Studying here provides a multicultural learning environment and exposure to global best practices.

Industry Collaboration

Many AI programs in Dubai include internships, capstone projects, and industry tie-ups. This gives students a head start in applying AI to real business problems.

Practical, Industry-Driven Curriculum

Institutes offering AI education in Dubai focus heavily on practical skills and hands-on learning—making graduates job-ready from day one.

A Glimpse into a Globally Recognized AI Program in Dubai

One standout program in Dubai is offered by a globally respected AI training institute that emphasizes:

Real-world case studies in healthcare, finance, and smart cities

Tools like Python, TensorFlow, Scikit-learn, and Generative AI platforms

Mentorship by experienced data scientists and AI engineers

Career support services including resume building and interview preparation

Flexibility with weekend, part-time, and online learning options

Their Artificial Intelligence course in Dubai is ideal for students, working professionals, and even entrepreneurs seeking to harness the power of AI in their domain.

Graduates from this institute have landed roles at leading companies across the UAE and globally. The curriculum is continuously updated to reflect the fast-changing AI landscape, including areas like Agentic AI, ethical AI, and AI policy.

Final Thoughts

Dubai is not just a city of the future—it is the present capital of innovation in the Middle East. As AI becomes a cornerstone of every major industry, having the right skills can give you a significant edge in the job market.

By enrolling in an Artificial Intelligence Classroom Course in Dubai, you position yourself at the intersection of technology, opportunity, and growth. Whether you're a beginner or a seasoned professional looking to upskill, the career paths are wide, varied, and lucrative.

And when you choose a globally recognized, practical, and project-oriented AI program—like the one offered by the Boston Institute of Analytics—you're not just learning AI, you're preparing to lead in it.

#Artificial Intelligence Course in Dubai#Artificial Intelligence Classroom Course in Dubai#Data Science Certification Training Course in Dubai#Data Scientist Training Institutes in Dubai

What are the benefits of Amazon EMR? What are the drawbacks of AWS EMR?

Benefits of Amazon EMR

Amazon EMR has many benefits, including the flexibility of AWS and cost savings compared with building on-premises resources.

Cost-saving

Amazon EMR costs depend on the instance type, the number of Amazon EC2 instances, and the Region in which you launch your cluster. On-Demand pricing is already low, but Reserved Instances or Spot Instances can save much more: in some cases, Spot Instances cost as little as a tenth of the On-Demand price.
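The cost arithmetic can be sketched in a few lines of Python. This is a rough estimate under stated assumptions. The hourly prices are hypothetical placeholders, not current AWS prices, and the 70% Spot discount is an arbitrary example. The model counts only the EC2 price plus the per-instance Amazon EMR charge, and ignores storage, data transfer, and other services.

```python
from dataclasses import dataclass


@dataclass
class InstanceGroup:
    """One group of identical instances (hypothetical prices, USD per hour)."""
    name: str
    count: int
    ec2_hourly: float  # EC2 price per instance-hour (On-Demand or Spot)
    emr_hourly: float  # Amazon EMR charge per instance-hour, billed on top of EC2


def group_cost(group: InstanceGroup, hours: float) -> float:
    # instances x hours x (EC2 price + EMR charge); storage and transfer ignored
    return group.count * hours * (group.ec2_hourly + group.emr_hourly)


def cluster_cost(groups: list[InstanceGroup], hours: float) -> float:
    return sum(group_cost(g, hours) for g in groups)


# Placeholder prices for a single instance type; not real AWS pricing.
ON_DEMAND = 0.192
SPOT = ON_DEMAND * 0.3  # assumption: Spot costs 30% of On-Demand here
EMR_FEE = 0.048

mixed = [
    InstanceGroup("primary", 1, ON_DEMAND, EMR_FEE),
    InstanceGroup("core", 4, ON_DEMAND, EMR_FEE),
    InstanceGroup("task", 4, SPOT, EMR_FEE),
]
all_on_demand = [
    InstanceGroup("primary", 1, ON_DEMAND, EMR_FEE),
    InstanceGroup("core", 4, ON_DEMAND, EMR_FEE),
    InstanceGroup("task", 4, ON_DEMAND, EMR_FEE),
]

print(f"mixed:         ${cluster_cost(mixed, 10):.2f}")          # $16.22
print(f"all On-Demand: ${cluster_cost(all_on_demand, 10):.2f}")  # $21.60
```

With these made-up numbers, running the task group on Spot Instances lowers the 10-hour cost from $21.60 to about $16.22. Real savings depend on actual Spot prices and on how often Spot capacity is reclaimed.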

Note

If you use Amazon S3, Amazon Kinesis, or Amazon DynamoDB with your EMR cluster, those services incur charges that are billed separately from your Amazon EMR usage.

Note

Set up Amazon S3 VPC endpoints when you create an Amazon EMR cluster in a private subnet. If your cluster is in a private subnet without Amazon S3 VPC endpoints, S3 traffic is routed through a NAT gateway, and you incur additional NAT gateway charges for that traffic.
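As a minimal sketch, the S3 gateway endpoint can be expressed as the keyword arguments accepted by boto3's EC2 `create_vpc_endpoint` call. The VPC ID, Region, and route table IDs are hypothetical placeholders. The route tables are assumed to be the ones associated with the cluster's private subnet.

```python
def s3_gateway_endpoint_request(vpc_id: str, region: str,
                                route_table_ids: list[str]) -> dict:
    """Keyword arguments for EC2 create_vpc_endpoint (S3 gateway endpoint)."""
    return {
        "VpcEndpointType": "Gateway",                 # S3 supports gateway endpoints
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",  # S3 service name for the Region
        # Route tables of the private subnet: S3 traffic from that subnet is then
        # routed through the endpoint instead of a NAT gateway.
        "RouteTableIds": list(route_table_ids),
    }


request = s3_gateway_endpoint_request("vpc-0example", "us-east-1", ["rtb-0example"])
print(request)
# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("ec2", region_name="us-east-1").create_vpc_endpoint(**request)
```

Gateway endpoints for S3 carry no additional hourly charge. That is why they avoid paying NAT gateway processing fees on S3 traffic.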

AWS integration

Amazon EMR integrates with other AWS services for cluster networking, storage, security, and more. The following list shows many examples of this integration:

Amazon EC2 for the instances that make up the cluster's nodes

Amazon VPC to configure the virtual network in which you launch your instances

Amazon S3 to store input and output data

Amazon CloudWatch to monitor cluster performance and configure alarms

AWS Identity and Access Management (IAM) to configure permissions

AWS CloudTrail to audit requests made to the service

AWS Data Pipeline to schedule and start your clusters

AWS Lake Formation to discover, catalogue, and secure data in Amazon S3 data lakes

Deployment

Your EMR cluster consists of EC2 instances that perform the work you submit. When you launch your cluster, Amazon EMR configures the instances with the frameworks you choose, such as Apache Spark or Apache Hadoop. Choose the instance size and type that best suit your cluster's processing needs: batch processing, low-latency queries, streaming data, or large data storage.

Amazon EMR offers many options for setting up the software on your cluster. For example, you can install an Amazon EMR release with applications such as Hive, Pig, and Spark alongside versatile frameworks like Hadoop. Installing a MapR distribution is another alternative. Because Amazon EMR runs on Amazon Linux, you can also install software on your cluster manually using the yum package manager or by building it from source.
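These software choices map onto fields of the Amazon EMR RunJobFlow API. The sketch below builds that part of a request as a plain Python dictionary. Together with the other required fields, it could be passed to boto3's `emr.run_job_flow(**params)`. The release label, application list, and S3 script path are hypothetical examples. The bootstrap script itself, for instance one that runs `sudo yum install`, is assumed to exist.

```python
def software_config(release_label: str, apps: list[str],
                    bootstrap_scripts: list[str]) -> dict:
    """Software-related fields of a RunJobFlow request (a partial request)."""
    return {
        # An EMR release label pins a tested set of application versions.
        "ReleaseLabel": release_label,
        # Applications are requested by name, e.g. "Hadoop", "Hive", "Spark".
        "Applications": [{"Name": app} for app in apps],
        # Bootstrap actions are scripts (here, in S3) that run on each node
        # before Amazon EMR installs the requested applications; a script
        # could, for example, run `sudo yum install -y <package>`.
        "BootstrapActions": [
            {
                "Name": f"bootstrap-{i}",
                "ScriptBootstrapAction": {"Path": path, "Args": []},
            }
            for i, path in enumerate(bootstrap_scripts)
        ],
    }


# Hypothetical example values.
params = software_config(
    release_label="emr-6.15.0",
    apps=["Hadoop", "Hive", "Spark"],
    bootstrap_scripts=["s3://my-example-bucket/bootstrap/install-tools.sh"],
)
print(params["Applications"])  # [{'Name': 'Hadoop'}, {'Name': 'Hive'}, {'Name': 'Spark'}]
```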

Flexibility and scalability

Amazon EMR lets you scale your cluster as your computing needs vary. Resizing your cluster lets you add instances during peak workloads and remove them to cut costs.

Amazon EMR supports multiple instance groups. This lets you employ Spot Instances in one group to perform jobs faster and cheaper and On-Demand Instances in another for guaranteed processing power. Multiple Spot Instance types might be mixed to take advantage of a better price.
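The sketch below shows this instance-group layout as plain dictionaries. They follow the shape of the RunJobFlow `Instances.InstanceGroups` field and of the ModifyInstanceGroups request used for resizing. The instance type, counts, and instance group ID are hypothetical. This sketch keeps the primary and core groups On-Demand and puts Spot capacity in the task group. Task nodes do not store HDFS data, so a Spot interruption there does not lose data held in HDFS. Mixing several Spot instance types uses instance fleets instead, which this sketch does not cover.

```python
def instance_groups(core_count: int, task_count: int,
                    instance_type: str = "m5.xlarge") -> list[dict]:
    """InstanceGroups layout: On-Demand primary/core, Spot task nodes."""
    def group(name: str, role: str, market: str, count: int) -> dict:
        return {
            "Name": name,
            "InstanceRole": role,  # the API still uses "MASTER" for the primary node
            "Market": market,      # "ON_DEMAND" or "SPOT"
            "InstanceType": instance_type,
            "InstanceCount": count,
        }

    return [
        group("Primary", "MASTER", "ON_DEMAND", 1),
        group("Core", "CORE", "ON_DEMAND", core_count),  # guaranteed capacity, holds HDFS
        group("Task", "TASK", "SPOT", task_count),       # cheaper, interruptible compute
    ]


def resize_request(instance_group_id: str, new_count: int) -> dict:
    """Request shape for ModifyInstanceGroups, e.g. to add task nodes at peak load."""
    if new_count < 0:
        raise ValueError("instance count cannot be negative")
    return {"InstanceGroups": [{"InstanceGroupId": instance_group_id,
                                "InstanceCount": new_count}]}


groups = instance_groups(core_count=2, task_count=4)
print([(g["Name"], g["Market"], g["InstanceCount"]) for g in groups])
# Hypothetical instance group ID; a real one comes from the running cluster.
print(resize_request("ig-EXAMPLE12345", 8))
```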

Amazon EMR lets you use several file systems for input, output, and intermediate data. For example, HDFS running on your cluster's primary and core nodes suits data that you don't need to keep beyond the cluster's lifecycle.

With the EMR File System (EMRFS), applications can use Amazon S3 as their data layer. This decouples compute from storage and keeps data available after the cluster terminates. Because storage and processing are separate, you can scale each independently: Amazon S3 grows with your data, while you resize the cluster to match your processing needs.
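To illustrate the decoupling, the sketch below defines an EMR step that runs a Spark job. The job reads from and writes to `s3://` URIs through EMRFS, so its results outlive the cluster; data under `hdfs://` paths is lost when the cluster terminates. The dictionary follows the shape of a RunJobFlow or AddJobFlowSteps step using `command-runner.jar`. The bucket, the script, and its argument convention (input URI, then output URI) are hypothetical.

```python
def spark_step(name: str, script_uri: str, input_uri: str, output_uri: str) -> dict:
    """One EMR step that runs spark-submit through command-runner.jar."""
    return {
        "Name": name,
        "ActionOnFailure": "CONTINUE",  # move on to the next step if this one fails
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            # The (hypothetical) script is assumed to take input and output URIs.
            "Args": ["spark-submit", "--deploy-mode", "cluster",
                     script_uri, input_uri, output_uri],
        },
    }


def outlives_cluster(uri: str) -> bool:
    """True for S3 (EMRFS) locations; HDFS data is lost when the cluster ends."""
    return uri.startswith("s3://")


step = spark_step(
    "daily-aggregation",
    "s3://my-example-bucket/jobs/aggregate.py",
    "s3://my-example-bucket/raw/",
    "s3://my-example-bucket/curated/",
)
print(step["HadoopJarStep"]["Args"])
print(outlives_cluster("hdfs:///tmp/staging/"))  # False
```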

Reliability

Amazon EMR monitors cluster nodes and shuts down and replaces instances as needed.

Amazon EMR lets you configure automatic or manual cluster termination. With automatic termination, the cluster shuts down after all steps are complete; this is known as a transient cluster. Alternatively, you can configure the cluster to keep running after processing so that you can stop it manually. You can also create a cluster, work with the installed applications directly, and terminate it yourself. These clusters are known as "long-running clusters."

You can also enable termination protection, which prevents cluster instances from shutting down because of errors or issues during processing. With termination protection enabled, you can recover data from the instances before termination. The default settings for these features differ depending on whether you launch your cluster through the console, the CLI, or the API.
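The choice between a transient and a long-running cluster corresponds to two fields in the RunJobFlow `Instances` structure: `KeepJobFlowAliveWhenNoSteps` and `TerminationProtected`. This sketch pairs them in one possible way, enabling protection only for long-running clusters. That pairing is an assumption of the sketch, not an AWS default, and the cluster ID is hypothetical. Lifting protection later goes through the SetTerminationProtection API, whose request shape is also shown.

```python
def lifecycle_flags(long_running: bool) -> dict:
    """Instances-level flags for a transient or a long-running cluster."""
    return {
        # False: terminate automatically after the last step (transient).
        # True: stay up in the WAITING state until someone terminates it.
        "KeepJobFlowAliveWhenNoSteps": long_running,
        # Assumption of this sketch: protect only long-running clusters.
        "TerminationProtected": long_running,
    }


def unprotect_request(cluster_id: str) -> dict:
    """SetTerminationProtection request that lifts protection before shutdown."""
    return {"JobFlowIds": [cluster_id], "TerminationProtected": False}


print(lifecycle_flags(long_running=False))
print(lifecycle_flags(long_running=True))
print(unprotect_request("j-EXAMPLE12345"))  # hypothetical cluster ID
```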

Security

Amazon EMR uses Amazon EC2 key pairs, IAM, and VPC to safeguard data and clusters.

IAM

Amazon EMR uses IAM to manage permissions. IAM policies attached to users or groups determine which resources and actions they can access.

The Amazon EMR service uses an IAM service role, while the cluster's EC2 instances use an EC2 instance profile. These roles let the service and the instances access other AWS services on your behalf. There are default roles for both the Amazon EMR service and the EC2 instance profile. They use AWS managed policies and are created automatically when you launch an EMR cluster from the console and choose the default permissions. The AWS CLI can also create the default IAM roles. To tailor permissions, you can create custom roles for the service and the instance profile.

Security groups

Amazon EMR uses security groups to control inbound and outbound traffic to your EC2 instances. When you launch your cluster, Amazon EMR uses one security group for your primary instance and another that is shared by your core/task instances. Amazon EMR configures the security group rules needed for communication among the cluster instances. Optionally, you can attach additional security groups to your primary and core/task instances for more advanced rules.
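The IAM roles, security groups, and EC2 key pair discussed in this part of the post all appear as fields of a RunJobFlow request. The sketch below assumes the default role names: `EMR_DefaultRole` for the service and `EMR_EC2_DefaultRole` for the instance profile, both of which `aws emr create-default-roles` creates. The key pair, subnet, and security group IDs are hypothetical. The API still names the extra-group fields `AdditionalMasterSecurityGroups` (primary) and `AdditionalSlaveSecurityGroups` (core/task).

```python
DEFAULT_SERVICE_ROLE = "EMR_DefaultRole"          # role assumed by the EMR service
DEFAULT_INSTANCE_PROFILE = "EMR_EC2_DefaultRole"  # profile used by cluster instances


def security_params(key_name: str, subnet_id: str,
                    extra_primary_sgs: tuple = (), extra_core_task_sgs: tuple = (),
                    service_role: str = DEFAULT_SERVICE_ROLE,
                    instance_profile: str = DEFAULT_INSTANCE_PROFILE) -> dict:
    """Security-related fields of a RunJobFlow request (a partial request)."""
    instances = {
        "Ec2KeyName": key_name,    # EC2 key pair used for SSH to the primary node
        "Ec2SubnetId": subnet_id,  # subnet in your VPC where the cluster launches
    }
    # EMR-managed security groups are created automatically; these are extras.
    if extra_primary_sgs:
        instances["AdditionalMasterSecurityGroups"] = list(extra_primary_sgs)
    if extra_core_task_sgs:
        instances["AdditionalSlaveSecurityGroups"] = list(extra_core_task_sgs)
    return {
        "ServiceRole": service_role,
        "JobFlowRole": instance_profile,
        "Instances": instances,
    }


params = security_params("my-example-key", "subnet-0example",
                         extra_primary_sgs=("sg-0exampleadmin",))
print(params)
```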

Encryption

Amazon EMR supports optional server-side and client-side encryption through EMRFS to help protect the data you store in Amazon S3. With server-side encryption, Amazon S3 encrypts your data after you upload it.

With client-side encryption, the EMRFS client on your EMR cluster performs the encryption and decryption. The root keys for client-side encryption can be managed with AWS KMS or with your own key management system.
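Encryption settings are typically grouped into an Amazon EMR security configuration. This is a JSON document created once, for example with boto3's `create_security_configuration`, and then referenced by name when launching clusters. The sketch below builds only the S3 (EMRFS) at-rest portion and has not been validated against the service. The KMS key ARN is a placeholder. It also omits local-disk and in-transit encryption, and the custom key provider mode (`CSE-Custom`) that corresponds to using your own key management system.

```python
import json
from typing import Optional

KMS_MODES = {"SSE-KMS", "CSE-KMS"}


def s3_encryption_config(mode: str, kms_key_arn: Optional[str] = None) -> str:
    """JSON for a security configuration enabling EMRFS at-rest encryption in S3."""
    if mode not in {"SSE-S3"} | KMS_MODES:
        raise ValueError(f"unsupported mode in this sketch: {mode}")
    s3_settings = {"EncryptionMode": mode}
    if mode in KMS_MODES:
        if not kms_key_arn:
            raise ValueError(f"{mode} requires a KMS key ARN")
        s3_settings["AwsKmsKey"] = kms_key_arn
    config = {
        "EncryptionConfiguration": {
            "EnableInTransitEncryption": False,
            "EnableAtRestEncryption": True,
            "AtRestEncryptionConfiguration": {
                # SSE-*: S3 encrypts on write. CSE-*: the EMRFS client encrypts.
                "S3EncryptionConfiguration": s3_settings,
            },
        }
    }
    return json.dumps(config)


# Placeholder key ARN. The string would be passed as SecurityConfiguration=...
# to create_security_configuration, then referenced by name at cluster launch.
print(s3_encryption_config("CSE-KMS", "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE"))
```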

Amazon VPC

Amazon EMR launches clusters in an Amazon VPC. A VPC in AWS lets you manage advanced network settings and control access to your cluster.

AWS CloudTrail

Amazon EMR integrates with CloudTrail to log requests made by or on behalf of your AWS account. This data shows who accessed your cluster, when, and from which IP address.

Amazon EC2 key pairs

To monitor and interact with your cluster, you can open a secure connection between your remote computer and the primary node. This connection can be authenticated with SSH or Kerberos; SSH authentication requires an Amazon EC2 key pair.

Monitoring

You can debug cluster issues such as faults or failures using log files and the Amazon EMR management interfaces. Amazon EMR can archive log files to Amazon S3, so you keep records and can troubleshoot problems after your cluster terminates. The Amazon EMR console also offers a debugging tool for browsing log files by step, job, and task.

Amazon EMR integrates with CloudWatch to monitor cluster and job performance. You can set alarms on metrics such as whether the cluster is idle and the percentage of storage used.
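As an illustration of an idle-state alarm, the sketch below builds keyword arguments for boto3's CloudWatch `put_metric_alarm`. It watches the Amazon EMR `IsIdle` metric, which is 1 while the cluster has no running jobs or tasks. It also shows the RunJobFlow `LogUri` field used to archive logs to S3. The cluster ID, SNS topic ARN, log bucket, and 30-minute window are hypothetical choices. Amazon EMR publishes its CloudWatch metrics at five-minute intervals, so the window is expressed in 300-second periods.

```python
# Hypothetical bucket for archived logs (the RunJobFlow "LogUri" field).
LOG_PARAMS = {"LogUri": "s3://my-example-bucket/emr-logs/"}

EMR_METRIC_PERIOD = 300  # Amazon EMR reports CloudWatch metrics every five minutes


def idle_alarm(cluster_id: str, sns_topic_arn: str, idle_minutes: int = 30) -> dict:
    """put_metric_alarm arguments: notify when the cluster stays idle."""
    return {
        "AlarmName": f"emr-{cluster_id}-idle",
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "IsIdle",  # 1 when no jobs or tasks are running
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": EMR_METRIC_PERIOD,
        # Number of consecutive idle periods before the alarm fires.
        "EvaluationPeriods": max(1, idle_minutes * 60 // EMR_METRIC_PERIOD),
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [sns_topic_arn],
    }


alarm = idle_alarm("j-EXAMPLE12345", "arn:aws:sns:us-east-1:111122223333:emr-alerts")
print(alarm["EvaluationPeriods"])  # 6
```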

Management interfaces

There are numerous Amazon EMR access methods:

The console provides a graphical interface for launching and managing clusters. Using web forms, you can specify the details of clusters to launch, view details of existing clusters, and debug and terminate clusters. The console is the easiest way to use Amazon EMR because it requires no scripting.

You can install the AWS Command Line Interface (AWS CLI) on your computer to connect to Amazon EMR and manage clusters. The broader AWS CLI includes commands specific to Amazon EMR, and you can write scripts that automate cluster launch and administration. Use the AWS CLI if you prefer working from the command line.

The AWS SDKs provide functions that call Amazon EMR to create and manage clusters, so you can build systems that automate cluster creation and management. The SDKs are the best choice for customising Amazon EMR functionality. SDKs that support Amazon EMR are available for Go, Java, .NET (C# and VB.NET), Node.js, PHP, Python, and Ruby.

The web service API lets you call Amazon EMR directly using JSON. Use the API if you want to build a custom SDK that calls Amazon EMR.
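For a taste of the SDK route, the sketch below uses the Python SDK's (boto3) `list_clusters` paginator to summarise active clusters. Only `summarize_clusters` runs without AWS access. `active_cluster_summaries` assumes boto3 is installed and that credentials and a default Region are configured. The chosen set of active states is an example, and the sample response is fake data.

```python
ACTIVE_STATES = ["STARTING", "BOOTSTRAPPING", "RUNNING", "WAITING"]


def summarize_clusters(response: dict) -> list[str]:
    """Format one ListClusters response page as 'id  state  name' lines."""
    return [
        f"{c['Id']}  {c['Status']['State']:<13}  {c['Name']}"
        for c in response.get("Clusters", [])
    ]


def active_cluster_summaries() -> list[str]:
    """Requires boto3, AWS credentials, and a configured default Region."""
    import boto3  # imported here so summarize_clusters works without boto3

    emr = boto3.client("emr")
    lines = []
    # The paginator follows the Marker token across result pages.
    for page in emr.get_paginator("list_clusters").paginate(ClusterStates=ACTIVE_STATES):
        lines.extend(summarize_clusters(page))
    return lines


# Offline check with a response shaped like ListClusters output (fake data).
sample = {"Clusters": [{"Id": "j-EXAMPLE12345", "Name": "nightly-etl",
                        "Status": {"State": "WAITING"}}]}
print(summarize_clusters(sample))
```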

Drawbacks of Amazon EMR

Complexity

EMR cluster setup and maintenance are more involved than with AWS Glue and require knowledge of the underlying frameworks.

Learning curve

Setting up and optimising EMR clusters may require tuning many settings and parameters.

Possible performance issues

Choosing the wrong instance types or under-provisioning a cluster can slow down task execution and degrade overall performance.

Dependence on AWS

Because EMR is deeply integrated with AWS infrastructure, it is less portable than on-premises solutions, despite the flexibility of the cloud.

#AmazonEMR#AmazonEC2#AmazonS3#AmazonVirtualPrivateCloud#EMRFS#AmazonEMRservice#Technology#technews#NEWS#technologynews#govindhtech

Build Your Career with the Best Data Engineering Community Online

In today’s digital-first economy, data engineering is one of the most in-demand and rewarding tech careers. However, mastering this complex and evolving field isn’t just about self-study or online courses. Real growth often happens when you're part of a strong, supportive, and expert-driven community.

That’s exactly what the Big Data Bootcamp Data Engineering Community offers: a thriving ecosystem of professionals, mentors, and learners united by a common goal—to build and elevate careers in data engineering. Whether you’re just starting out or already working in tech, this online community offers the tools, guidance, and connections to help you succeed faster and more confidently.

Let’s explore why joining the right Data Engineering Community is a game-changer, and how Big Data Bootcamp’s platform stands out as the ultimate launchpad for your career in this exciting field.

Why Community Matters in Data Engineering

Learning to become a data engineer is more than following tutorials or earning certifications. The technology stack is wide and deep, involving concepts like distributed systems, data pipelines, cloud platforms, and real-time processing. Keeping up with these tools and practices is easier—and more effective—when you have a network of peers and experts to support you.

A professional community helps by providing:

1. Mentorship and Guidance

Tap into the knowledge of experienced professionals who have walked the path you’re on. Whether you're facing technical challenges or career decisions, mentors can provide direction that accelerates your progress.

2. Collaborative Learning

Communities foster an environment where learning is not just individual but shared. Group projects, open-source contributions, and peer reviews are common in active communities, offering real-world skills you can't gain in isolation.

3. Industry Insights

Staying current in data engineering requires awareness of trends, best practices, and innovations. A connected community can be your real-time feed for what’s happening in the world of big data.

4. Career Opportunities

Networking is one of the fastest ways to land a job in tech. Many community members share job leads, referrals, and insider info that isn't publicly posted.

5. Accountability and Motivation

When you're surrounded by motivated people with similar goals, it keeps you inspired and on track. Sharing progress and celebrating milestones fuels ongoing commitment.

Introducing the Big Data Bootcamp Community

The Big Data Bootcamp Data Engineering Community is more than just a chat group or online forum. It’s an organized, high-impact environment designed to provide real value at every stage of your career journey.

Hosted at BigDataBootcamp.com, the platform combines the best of structured learning, peer support, and professional development. It’s tailored specifically for:

Aspiring data engineers

Bootcamp and college graduates

Career switchers from software development, analytics, or IT

Experienced data professionals looking to level up

Here’s what makes this online community stand out.

What You Get as a Member

1. Access to Expert Mentors

Learn from top-tier professionals who have worked with companies like Google, Amazon, Meta, and cutting-edge startups. These mentors actively guide members through code reviews, project feedback, and one-on-one career advice.

2. Structured Learning Paths

Community members can access exclusive workshops, tutorials, and study groups aligned with in-demand skills like:

Data pipeline design

Apache Spark, Kafka, and Airflow

Cloud data platforms (AWS, GCP, Azure)

Data warehouse tools like Snowflake and BigQuery

Advanced SQL and Python scripting

3. Real-World Projects

Apply your skills in collaborative projects that simulate actual industry challenges. This builds not just your knowledge, but also your portfolio—essential for standing out to employers.

4. Career Acceleration Services

Take advantage of:

Resume and LinkedIn profile reviews

Job interview prep sessions

Access to a private job board

Referrals from alumni and hiring partners

5. Regular Events and Networking

Participate in:

Webinars with industry leaders

AMAs with senior data engineers

Virtual meetups and hackathons

Fireside chats and alumni Q&As

These events keep the community lively and ensure you stay connected with the pulse of the industry.

6. Supportive Peer Network

Exchange ideas, ask questions, and get feedback in a welcoming environment. Whether you’re debugging a pipeline or seeking advice on cloud certification, the community is always there to help.

Proven Success Stories

Here are just a few examples of how the community has changed lives:

Manoj, a mechanical engineer by training, transitioned into a data engineering role at a healthcare company within six months of joining the community.

Ayesha, a computer science graduate, used the community's project-based learning approach to build a portfolio that landed her a job at a fintech startup.

Carlos, an IT administrator, leaned on mentorship and mock interviews to land a role as a data engineer with an international consulting firm.

These success stories aren't exceptions—they're examples of what's possible when you're part of the right support system.

Why Choose Big Data Bootcamp Over Other Communities?

While other online tech communities exist, few offer the blend of quality, focus, and career alignment found at Big Data Bootcamp. Here’s why it stands out:

Focused on Data Engineering – It’s not a generic tech group. It’s built specifically for those in data engineering.

Built by Practitioners – Content and mentorship come from people doing the work, not just teaching it.

Job-Oriented – Everything is aligned with real job requirements and employer expectations.

Inclusive and Supportive – Whether you're just beginning or well into your career, there's a place for you.

Live Interaction – From live workshops to mentor check-ins, it's a dynamic experience, not a passive one.

How to Join

Becoming part of the Big Data Bootcamp Community is simple:

Visit BigDataBootcamp.com

Explore bootcamp offerings and apply for membership

Choose your learning path and start attending community events

Introduce yourself and start engaging

Membership includes lifetime access to the community, learning content, events, and ongoing support.

Final Thoughts

If you're serious about becoming a high-performing data engineer, you need more than just courses or textbooks. You need real connections, honest guidance, and a community that pushes you to grow.

At Big Data Bootcamp, the online data engineering community is built to do just that. It’s where careers are born, skills are refined, and goals are achieved.

Join us today and start building your future with the best data engineering community on the internet.

The tech world moves fast. Move faster with the right people by your side.

Why You Should Hire Scala Developers for Your Next Big Data Project

As big data drives decision-making across industries, the demand for technologies that can handle large-scale processing is on the rise. Scala, is a high-level language that blends object-oriented and functional programming and has emerged as a go-to choice for developing robust and scalable data systems.

It’s no wonder that tech companies are looking to hire software engineers skilled in Scala to support their data pipelines, streaming applications, and real-time analytics platforms. If your next project involves processing massive datasets or working with tools like Apache Spark, Scala could be the strategic advantage you need.

Scalable Code, Powerful Performance, and Future-Ready Solutions

If you are wondering why Scala, let's answer that first.

Scala was designed to be concise, expressive, and scalable, which makes it well suited to big data systems. Because it runs on the Java Virtual Machine (JVM), it interoperates with existing Java libraries, and its expressive syntax makes intricate data transformations and concurrent operations easier to write.

Scala is preferred by many tech businesses because:

It integrates easily with Akka, Kafka, and Apache Spark.

It supports functional programming, which tends to produce more reliable and testable code.

It handles concurrency and parallelism well, thanks to immutable data structures and libraries such as Akka.

Its community is growing, and its ecosystem for data-intensive applications is mature.

Top Reasons to Hire Scala Developers

Hiring Scala developers means bringing in experts who understand both the technical complexity of data and its business implications. They are skilled at building distributed systems that scale effectively and stay dependable under heavy load.

When should you think about recruiting Scala developers?

You currently use Apache Spark or intend to do so in the future.

Your app manages complicated event streaming or real-time data processing.

You want more concise, maintainable code than conventional Java-based solutions typically offer.

You're developing machine learning pipelines that depend on efficient batch or stream processing.

In addition to writing code, a proficient Scala developer will assist in the design of effective, fault-tolerant systems that can grow with your data requirements.

Finding the Right Talent

Finding the right fit can be harder with Scala because it is more specialized than many other programming languages. For this reason, it's critical to define the role explicitly: will the developer work on real-time dashboards, back-end services, or Spark jobs?

Once your needs are clear, you can use a salary benchmarking tool to determine the current market rate. Because of their specialized knowledge and familiarity with big data frameworks, Scala engineers typically command higher salaries. This is why setting a realistic budget early on is essential to attracting qualified applicants.

Have you tried the Uplers salary benchmarking tool? If not, give it a shot: it's free and offers real-time salary insights for tech and digital roles, helping global companies align their compensation with industry benchmarks.

Final Thoughts

Big data projects present unique challenges, ranging from system stability to processing speed. Selecting the appropriate development team and technology stack is crucial. Hiring Scala developers gives your team the resources and know-how they need to create scalable, high-performance data solutions.

Scala is more than simply another language for tech organizations dealing with real-time analytics, IoT data streams, or large-scale processing workloads; it's a competitive edge.

Hiring Scala talent for your next big data project could be the best choice you make, whether you're building a system from the ground up or enhancing an existing one.

Stimulate Your Success with AI Certification Courses from Ascendient Learning

Artificial Intelligence is transforming industries worldwide. From finance and healthcare to manufacturing and marketing, AI is at the heart of innovation, streamlining operations, enhancing customer experiences, and predicting market trends with unprecedented accuracy. According to Gartner, 75% of enterprises are expected to shift from piloting AI to operationalizing it by 2024. However, a significant skills gap remains, with only 26% of businesses confident they have the AI talent required to leverage AI's full potential.

Ascendient Learning closes this skills gap by providing cutting-edge AI certification courses from leading vendors. With courses designed to align with the practical demands of the marketplace, Ascendient ensures professionals can harness the power of AI effectively.

Comprehensive AI and Machine Learning Training for All Skill Levels

Ascendient Learning’s robust portfolio of AI certification courses covers a broad spectrum of disciplines and vendor-specific solutions, making it easy for professionals at any stage of their AI journey to advance their skills. Our training categories include:

Generative AI: Gain practical skills in building intelligent, creative systems that can automate content generation, drive innovation, and unlock new opportunities. Popular courses include Generative AI Essentials on AWS and NVIDIA's Generative AI with Diffusion Models.

Cloud-Based AI Platforms: Learn to leverage powerful platforms like AWS SageMaker, Google Cloud Vertex AI, and Microsoft Azure AI for scalable machine learning operations and predictive analytics.

Data Engineering & Analytics: Master critical data preparation and management techniques for successful AI implementation. Courses such as Apache Spark Machine Learning and Databricks Scalable Machine Learning prepare professionals to handle complex data workflows.

AI Operations and DevOps: Equip your teams with continuous deployment and integration skills for machine learning models. Our courses in Machine Learning Operations (MLOps) ensure your organization stays agile, responsive, and competitive.

Practical Benefits of AI Certification for Professionals and Organizations

Certifying your workforce in AI brings measurable, real-world advantages. According to recent studies, organizations that invest in AI training have reported productivity improvements of up to 40% due to streamlined processes and automated workflows. Additionally, companies implementing AI strategies often see significant gains in customer satisfaction, thanks to enhanced insights, personalized services, and more thoughtful customer interactions.

According to the 2023 IT Skills and Salary Report, AI-certified specialists earn approximately 30% more on average than non-certified colleagues. Further, certified professionals frequently report enhanced job satisfaction, increased recognition, and faster career progression.

Customized Learning with Flexible Delivery Options

Instructor-Led Virtual and Classroom Training: Expert-led interactive sessions allow participants to benefit from real-time guidance and collaboration.

Self-Paced Learning: Learn at your convenience with comprehensive online resources, interactive exercises, and extensive practice labs.

Customized Group Training: Tailored AI training solutions designed specifically for your organization's unique needs, delivered at your site or virtually.

Our exclusive AI Skill Factory provides a structured approach to workforce upskilling, ensuring your organization builds lasting AI capability through targeted, practical training.

Trust Ascendient Learning’s Proven Track Record

Ascendient Learning partners with the industry’s leading AI and ML vendors, including AWS, Microsoft, Google Cloud, NVIDIA, IBM, Databricks, and Oracle. As a result, all our certification courses are fully vendor-authorized, ensuring training reflects the most current methodologies, tools, and best practices.

Take Action Today with Ascendient Learning

AI adoption is accelerating rapidly, reshaping industries and redefining competitive landscapes. Acquiring recognized AI certifications is essential to remain relevant and valuable in this new era.

Ascendient Learning provides the comprehensive, practical, and vendor-aligned training necessary to thrive in the AI-powered future. Don’t wait to upgrade your skills or empower your team.

Act today with Ascendient Learning and drive your career and your organization toward unparalleled success.

For more information, visit: https://www.ascendientlearning.com/it-training/topics/ai-and-machine-learning

What Hadoop Skills Should You Learn?

As big data keeps evolving, Hadoop remains one of the core technologies for storing and processing large datasets. As companies in every sector gather more structured and unstructured data, people with Hadoop skills are in high demand. So what does it take to master Hadoop? Hadoop is a powerful open-source framework, but mastering it requires a combination of technical and analytical capabilities. Whether you are a student pursuing a career in big data, a data professional looking to upskill, or someone changing careers, here is a complete guide to the key skills you need to learn Hadoop.

1. Familiarity with Big Data Concepts

Before jumping into Hadoop, it helps to understand the basics of big data. Hadoop was designed specifically to address big data problems, and knowing those problems explains why Hadoop works the way it does.

• Volume, Variety, and Velocity (the 3Vs): Data today is huge (volume), comes from many different sources (variety), and arrives at high speed (velocity).

• Structured vs. Unstructured Data: Understand the distinction and why Hadoop is well suited to handling both.

• Limitations of Traditional Systems: Know why traditional relational databases struggle with big data and how Hadoop addresses that need.

This foundational knowledge ensures that you are not simply picking up tools, but understanding their context and significance.

2. Fundamental Programming Skills

Hadoop is not plug-and-play. Although higher-level tools hide some of the complexity, a solid understanding of programming is necessary to take full advantage of Hadoop.

• Java: Hadoop is written in Java, and much of its core ecosystem (such as MapReduce) is built on Java APIs. Familiarity with Java is a major plus.

• Python: Popular among data scientists, Python can be used with Hadoop through tools such as Pydoop and mrjob. It is particularly useful when paired with Spark, another big data engine commonly used alongside Hadoop.

• Shell Scripting: Because Hadoop usually runs on Linux systems, knowledge of Bash and shell scripting is useful for automating jobs, moving data, and monitoring processes.

Being comfortable with at least one of these languages will make Hadoop much easier to learn.

3. Familiarity with Linux and the Command Line Interface (CLI)

Most Hadoop deployments run on Linux servers. If you are not familiar with Linux, you will hit roadblocks early on.

• Basic Linux Commands: Navigating the file system, editing files with vi or nano, and managing file permissions are crucial.

• Hadoop CLI: Hadoop has its own collection of command-line utilities. You will use them to copy files between the local filesystem and HDFS (Hadoop Distributed File System), to start and stop processes, and to monitor job execution.

A solid comfort level with Linux is non-negotiable; it is a foundational skill for any Hadoop learner.

4. HDFS Knowledge

HDFS, short for Hadoop Distributed File System, is the heart of Hadoop. It is designed to store very large amounts of data reliably across many machines. You need:

• Familiarity with the HDFS architecture: NameNode, DataNode, and block allocation.

• An understanding of how data is written to and read from HDFS.

• An understanding of data replication, fault tolerance, and scalability.

Understanding how HDFS works gives you confidence when working with data in distributed systems.
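To make block allocation and replication more concrete, here is a minimal Python sketch that simulates how a file might be split into fixed-size blocks and how each block could be stored on several DataNodes. It assumes the common defaults of a 128 MB block size and a replication factor of 3. The round-robin placement and the DataNode names are hypothetical simplifications: real HDFS replica placement is rack-aware and decided by the NameNode, and this code does not talk to HDFS at all.

```python
from dataclasses import dataclass, field

BLOCK_SIZE_MB = 128  # assumption: common HDFS default block size
REPLICATION = 3      # assumption: common HDFS default replication factor


@dataclass
class Block:
    block_id: int
    size_mb: int
    replicas: list[str] = field(default_factory=list)


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[Block]:
    """Split a file into full-size blocks plus one smaller final block if needed."""
    blocks, remaining, block_id = [], file_size_mb, 0
    while remaining > 0:
        size = min(block_size_mb, remaining)
        blocks.append(Block(block_id, size))
        remaining -= size
        block_id += 1
    return blocks


def place_replicas(blocks: list[Block], datanodes: list[str],
                   replication: int = REPLICATION) -> list[Block]:
    """Assign each block to `replication` distinct DataNodes, round-robin.

    Simplified on purpose: real HDFS placement is rack-aware.
    """
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds number of DataNodes")
    for block in blocks:
        start = block.block_id % len(datanodes)
        block.replicas = [datanodes[(start + i) % len(datanodes)] for i in range(replication)]
    return blocks


def surviving_blocks(blocks: list[Block], failed_node: str) -> list[Block]:
    """Blocks that are still readable because a replica lives on another node."""
    return [b for b in blocks if any(node != failed_node for node in b.replicas)]


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]  # hypothetical DataNode names
    blocks = place_replicas(split_into_blocks(300), nodes)
    for b in blocks:
        print(b.block_id, b.size_mb, b.replicas)
    print(len(surviving_blocks(blocks, "dn1")), "of", len(blocks), "blocks still readable")
```

A 300 MB file becomes blocks of 128, 128, and 44 MB; the last block only occupies the space it needs rather than being padded to the full block size. Because every block has copies on three different nodes, losing any single DataNode leaves all blocks readable, which is the essence of HDFS fault tolerance.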

5. MapReduce Programming Knowledge

MapReduce is Hadoop's original data processing engine. Although newer options such as Apache Spark are now more popular for processing, MapReduce is still worth understanding.

• How Map and Reduce Work: Learn the divide-and-conquer technique in which data is processed in two phases, map and reduce, with a shuffle-and-sort step between them that groups intermediate values by key.

• Writing MapReduce Jobs: Get experience writing MapReduce programs, preferably in Java or Python.

• Performance Tuning: Study job chaining, partitioners, combiners, and other optimization techniques.

Even if you eventually favor Spark or Hive, studying MapReduce gives you a strong foundation in distributed data processing.
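The map, shuffle, and reduce phases, together with the combiner and partitioner mentioned under performance tuning, can be illustrated with the classic word-count job. The following is a minimal, single-process Python sketch that only simulates what Hadoop does across many machines; it does not use the Hadoop API, and the function names and the choice of two reducers are illustrative assumptions.

```python
import zlib
from collections import defaultdict
from itertools import chain

NUM_REDUCERS = 2  # hypothetical number of reduce tasks


def map_phase(line):
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def combine(pairs):
    """Combiner: pre-aggregate counts locally on each mapper to cut shuffle traffic."""
    local = defaultdict(int)
    for word, count in pairs:
        local[word] += count
    return list(local.items())


def partition(key, num_reducers=NUM_REDUCERS):
    """Partitioner: send every occurrence of a key to the same reducer.

    Uses CRC32 because Python's built-in hash() of a str varies between runs.
    """
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(mapper_outputs, num_reducers=NUM_REDUCERS):
    """Shuffle and sort: group values by key within each reducer's partition."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for word, count in chain.from_iterable(mapper_outputs):
        partitions[partition(word, num_reducers)][word].append(count)
    return [dict(sorted(p.items())) for p in partitions]


def reduce_phase(word, counts):
    """Reducer: sum all partial counts for one word."""
    return word, sum(counts)


def word_count(lines):
    # Each line stands in for one input split handled by its own mapper.
    mapper_outputs = [combine(map_phase(line)) for line in lines]
    results = {}
    for grouped in shuffle(mapper_outputs):
        for word, counts in grouped.items():
            key, total = reduce_phase(word, counts)
            results[key] = total
    return results


if __name__ == "__main__":
    print(word_count(["big data big ideas", "data pipelines move data"]))
```

The combiner is why the first "mapper" sends ("big", 2) instead of two separate ("big", 1) pairs. Hadoop's default HashPartitioner follows the same principle as `partition` here, hashing the key modulo the number of reduce tasks so that all values for one key meet at one reducer.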

6. Working with Hadoop Ecosystem Tools

Hadoop is not one tool; it is an ecosystem. Knowing how the components interact makes your skills much stronger. Some of the major tools to become acquainted with:

• Apache Pig: A data flow language (Pig Latin) that simplifies the development of MapReduce jobs.

• Apache Sqoop: Transfers data between relational databases and Hadoop in both directions.

• Apache Flume: Collects large volumes of log data and moves it into HDFS.

• Apache Oozie: A workflow scheduler that orchestrates Hadoop jobs.

• Apache ZooKeeper: A coordination service for distributed systems, handling configuration, naming, and synchronization.

Each of these adds useful functionality and makes Hadoop more versatile.

7. Basic Data Analysis and Problem-Solving Skills

Learning Hadoop is not merely about technical expertise; it is also about problem-solving.

• Analytical Thinking: Identify the problem, determine how data can be used to address it, and then decide which Hadoop tools to apply.

• Data Cleaning: Understand how to preprocess and clean large datasets before analysis.

• Result Interpretation: Understand and interpret the output that Hadoop jobs produce.

These soft skills are typically what separate a decent Hadoop user from a great one.

8. Learning Cluster Management and Cloud Platforms

Although most people learn Hadoop locally in pseudo-distributed mode or on sandbox VMs, production Hadoop runs on clusters, either on-premises or in the cloud.

• Cluster Management Tools: Familiarize yourself with tools such as Apache Ambari and Cloudera Manager.

• Cloud Platforms: Learn how Hadoop runs on AWS (through Amazon EMR), Google Cloud (through Dataproc), or Azure HDInsight.

Knowing how to set up, monitor, and debug clusters is crucial for production-level deployments.

9. Willingness to Learn and Curiosity

Last but not least, you will need curiosity. The Hadoop ecosystem is large and dynamic, and new tools, enhancements, and applications appear regularly.

• Follow big data communities and forums.

• Participate in or contribute to open-source projects.

• Keep up with tutorials and documentation.

Your attitude and willingness to experiment will largely determine how well and how quickly you learn Hadoop.

Conclusion

Hadoop opens the door to the world of big data. Learning it can seem intimidating at first, but it becomes manageable when you break it down into skill sets such as programming, Linux, HDFS, SQL, and problem-solving. As you acquire these skills, you will not only learn Hadoop but also gain the confidence to build scalable, intelligent data solutions. Whether you are building data pipelines, analyzing logs, or designing large-scale systems, learning Hadoop opens up a whole universe of possibilities in today's data-driven age. Equip yourself with these key skills and begin your Hadoop journey today.

Website: https://www.icertglobal.com/course/bigdata-and-hadoop-certification-training/Classroom/60/3044

Data Science and Analysis: The Cornerstone of the Digital Age

Introduction

In an age where every click, swipe, and transaction generates data, the ability to derive insights from this massive influx of information has become not just a competitive edge but a necessity. Enter Data Science and Analysis – two closely linked yet distinct disciplines that play a critical role in transforming raw data into actionable intelligence.

Whether you're an entrepreneur, student, marketer, or corporate executive, understanding data science and analysis can revolutionize your approach to decision-making. In this comprehensive guide, we'll explore the foundations, tools, applications, benefits, and real-world implications of data science and analysis, along with a review and FAQs to clarify common doubts.

What is Data Science?

Data Science is an interdisciplinary field that uses scientific methods, algorithms, and systems to extract knowledge and insights from structured and unstructured data. It combines elements of statistics, computer science, domain knowledge, and data engineering.

Key Components of Data Science:

Data Collection: Gathering data from various sources like databases, APIs, sensors, and websites.

Data Cleaning and Preprocessing: Removing inconsistencies, handling missing values, and preparing data for analysis.

Exploratory Data Analysis (EDA): Understanding the patterns, distributions, and relationships in data.

Model Building: Using machine learning and statistical models to make predictions or classify data.

Evaluation and Deployment: Testing model performance and deploying it in real-world applications.
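As a small illustration of the cleaning and exploration components listed above, here is a minimal Python sketch using only the standard library. The customer records are hypothetical, and filling gaps with the column median is just one common imputation choice among several (dropping rows or using the mean are alternatives).

```python
import statistics

# Hypothetical raw records with one duplicate row and two missing values.
RAW = [
    {"customer": "A", "age": 34, "spend": 120.0},
    {"customer": "B", "age": None, "spend": 80.0},
    {"customer": "A", "age": 34, "spend": 120.0},  # exact duplicate of the first row
    {"customer": "C", "age": 51, "spend": None},
    {"customer": "D", "age": 28, "spend": 60.0},
]

NUMERIC_COLUMNS = ("age", "spend")


def clean(records):
    """Drop exact duplicate rows, then fill missing numeric values with the column median."""
    seen, unique = set(), []
    for row in records:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the raw input is left untouched
    for col in NUMERIC_COLUMNS:
        median = statistics.median(r[col] for r in unique if r[col] is not None)
        for r in unique:
            if r[col] is None:
                r[col] = median
    return unique


def explore(records):
    """Exploratory data analysis: a basic distribution summary per numeric column."""
    summary = {}
    for col in NUMERIC_COLUMNS:
        values = [r[col] for r in records]
        summary[col] = {
            "min": min(values),
            "max": max(values),
            "mean": statistics.mean(values),
            "median": statistics.median(values),
            "stdev": statistics.stdev(values),
        }
    return summary


if __name__ == "__main__":
    cleaned = clean(RAW)
    print(len(RAW), "raw rows ->", len(cleaned), "clean rows")
    for col, stats in explore(cleaned).items():
        print(col, stats)
```

Cleaning runs before exploration on purpose: summary statistics computed on the raw rows would double-count customer A and fail on the missing values.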

What is Data Analysis?

Data Analysis is the process of inspecting, cleaning, transforming, and modeling data with the goal of discovering useful information, drawing conclusions, and supporting decision-making.

Types of Data Analysis:

Descriptive Analysis: What happened?

Diagnostic Analysis: Why did it happen?

Predictive Analysis: What is likely to happen?

Prescriptive Analysis: What should we do about it?

While data science is more advanced and predictive in nature, data analysis often focuses on current and historical data to understand trends and patterns.

Difference Between Data Science and Data Analysis

| Feature | Data Science | Data Analysis |
|---|---|---|
| Scope | Broad (includes machine learning, AI) | Narrower (focus on interpretation) |
| Skills Required | Coding, statistics, ML, big data tools | Statistics, Excel, SQL, visualization |
| Outcome | Predictive and prescriptive insights | Descriptive and diagnostic insights |
| Tools Used | Python, R, TensorFlow, Hadoop | Excel, Power BI, Tableau, SQL |

Importance of Data Science and Analysis in Today’s World

Improved Decision-Making: Organizations rely on data to back strategic decisions.

Operational Efficiency: Identifying inefficiencies and optimizing workflows.

Customer Insights: Tailoring products and services to meet user needs.

Innovation and Automation: Powering AI applications like chatbots, recommendation systems, and predictive maintenance.

Competitive Advantage: Leveraging data for market trend forecasting and risk assessment.

Popular Tools Used in Data Science and Analysis

Python and R: Programming languages widely used for statistical computing and machine learning.

SQL: Essential for querying relational databases.

Excel: Still a staple for data wrangling and basic analysis.

Tableau & Power BI: Popular tools for data visualization and reporting.

Jupyter Notebooks: Great for collaborative data science work.

Apache Spark and Hadoop: Big data frameworks for processing massive datasets.

TensorFlow and Scikit-learn: Libraries for building machine learning models.

Real-World Applications of Data Science and Analysis

Healthcare:

Predicting disease outbreaks

Personalized treatment plans

Hospital resource optimization

Finance:

Fraud detection

Credit scoring

Algorithmic trading

Retail and E-Commerce:

Recommendation engines

Customer segmentation

Inventory forecasting

Transportation:

Route optimization

Predictive maintenance of vehicles

Traffic flow analysis

Marketing:

Sentiment analysis

Campaign performance analysis

Lead scoring

Education:

Student performance prediction

Curriculum design based on learning patterns

The Data Science Lifecycle

Define the Problem

Collect the Data

Clean and Prepare the Data

Explore the Data

Model the Data

Interpret and Communicate Results

Deploy the Model and Monitor Performance

Benefits of Learning Data Science and Analysis

High Demand: One of the fastest-growing job sectors globally.

Lucrative Salaries: Data professionals are among the top-paid roles in tech.

Versatility: Applicable across every major industry.

Problem-Solving: Enhance your ability to tackle complex problems logically.

Future-Proof Skill: Data is the language of tomorrow.

Challenges in Data Science and Analysis

Data Privacy and Security

Lack of Quality Data

Interpretability of Models

Skill Gap in Workforce

Tool Overload and Rapid Technological Changes

Review: Is Data Science and Analysis Worth Investing In?

The answer is a resounding yes. With data becoming the cornerstone of innovation, mastering data science and analysis is no longer optional but essential. Whether you're looking to advance your career, grow your business, or simply understand the digital world better, investing time and resources into this domain can yield incredible dividends. It's not just about crunching numbers; it's about telling stories, predicting the future, and making intelligent decisions that drive success.

FAQs About Data Science and Analysis

Do I need a technical background to start in data science?

No, but a willingness to learn statistics, basic programming (like Python), and analytical thinking is key.

How is data analysis different from business analysis?

Business analysis focuses on processes and requirements; data analysis focuses on deriving insights from data.

Which is better for a beginner: R or Python?

Python is generally preferred for beginners due to its simplicity and vast libraries.

Can I become a data scientist without a degree?

Yes. Many data scientists are self-taught or have taken online certifications from platforms like Coursera, edX, or Udemy.

How long does it take to become proficient in data science?

With consistent effort, you can reach an intermediate level in 6-12 months.

What industries hire data scientists the most?

Finance, healthcare, tech, e-commerce, logistics, and marketing are the top recruiters.

Are there any free tools to start with?

Yes. Tools like Google Colab, Jupyter Notebooks, Power BI Free, and Tableau Public are excellent.

What are the future trends in data science?

Automated machine learning (AutoML), Explainable AI (XAI), Edge AI, and ethical data usage are leading trends.

Conclusion

Data is the fuel of the 21st century, and those who know how to harness it are shaping the future. Whether you’re looking to make better decisions, understand customer behavior, or dive into predictive analytics, the combination of Data Science and Analysis provides the tools and frameworks to get you there.

This powerful duo isn't limited to tech companies. From healthcare to agriculture, education to energy – every sector is riding the data wave. So, if you've been thinking about diving into data science or sharpening your analytical skills, there's no better time than now.

For more deep dives into digital innovation, AI, and analytics, stay tuned to diglip7.com.

How to Order TVS Genuine Spare Parts Online from Smart Parts Exports

TVS is one of the most trusted two-wheeler brands in India and globally, known for performance, mileage, and affordability. Whether you're riding a TVS Apache, Jupiter, Ntorq, or XL100, keeping your vehicle in top shape requires TVS genuine spare parts. The good news is that you don’t have to visit multiple stores anymore. You can now order genuine TVS spare parts online easily from Smart Parts Exports, a global supplier of authentic automobile components.

Why Choose TVS Genuine Spare Parts?

TVS vehicles are engineered with precision. To maintain optimal performance and safety, it's important to use genuine OEM parts. Here’s why:

Perfect fit and compatibility

Manufacturer-approved quality

Longer life and durability

Retains original performance and mileage

Keeps your warranty intact

Using counterfeit or low-grade aftermarket parts can lead to poor performance, more frequent breakdowns, and increased long-term costs.

Introduction to Smart Parts Exports

Smart Parts Exports is a leading exporter and distributor of genuine TVS spare parts online. The company specializes in sourcing and supplying high-quality spare parts for all major automobile brands, covering two-wheelers, three-wheelers, and commercial vehicles. With a vast network and global shipping services, Smart Parts Exports serves customers across Asia, Africa, Europe, and Latin America.

Wide Range of TVS Spare Parts Available

Smart Parts Exports stocks a comprehensive range of TVS spare parts online, including but not limited to:

Engine components (cylinders, pistons, crankshafts)

Brake systems (discs, pads, levers)

Electrical parts (spark plugs, wiring harness, switches)

Suspension parts (shock absorbers, forks, linkages)

Transmission parts (clutch plates, chains, sprockets)

Body and frame parts (mudguards, mirrors, side panels)

Lighting (headlights, indicators, tail lamps)

Filters (air, oil, and fuel filters)

Step-by-Step Guide to Ordering TVS Genuine Spare Parts Online

Ordering your required TVS parts online from Smart Parts Exports is a hassle-free process. Here’s a step-by-step breakdown:

Step 1: Visit the Website

Head over to the official website of Smart Parts Exports. The platform is user-friendly and mobile-optimized for quick access from any device.

Step 2: Search for the Spare Part

Use the search bar or browse through the TVS section in the product categories. You can search by model name, part number, or spare part category.

Step 3: Check Product Details

Each product page includes clear images, technical specifications, part numbers, and compatibility information. This helps ensure you’re ordering the correct spare part for your TVS vehicle.

Step 4: Add to Cart

Once confirmed, add the item(s) to your cart. You can continue shopping for additional parts if needed.

Step 5: Submit Your Inquiry or Order

Since Smart Parts Exports also deals in bulk and wholesale orders, you can either:

Complete the inquiry form to get the best quotation.

Or place a direct order for retail purchases, depending on availability.

Their team will respond promptly with pricing, availability, and shipping timelines.

Step 6: Make Secure Payment

Smart Parts Exports offers secure payment options, including international transactions. All payments are processed through encrypted, trustworthy payment gateways.

Step 7: Track Your Shipment

Once your order is dispatched, you’ll receive tracking details so you can monitor the delivery status in real-time.

Benefits of Ordering from Smart Parts Exports

Ordering TVS spare parts online from Smart Parts Exports comes with several advantages:

100% genuine and verified spare parts

Competitive wholesale prices

Global shipping with real-time tracking

Professional customer support

Secure payments and reliable packaging

Availability of spare parts for all TVS models

TVS Models Supported

Whether you own a daily commuter or a performance bike, Smart Parts Exports supports spare parts for a wide range of TVS models, including:

TVS Apache RTR Series

TVS Ntorq 125

TVS Jupiter

TVS Star City Plus

TVS XL100

TVS Radeon

TVS Pep+

TVS Sport

Wholesale Ordering Options for Businesses

Are you a dealer, service center, or reseller looking for spare parts in bulk? Smart Parts Exports offers special wholesale pricing and B2B services. You can request a TVS spare parts catalog and get a custom quote based on your monthly or annual requirements.

Get Expert Assistance

Not sure which part you need? Smart Parts Exports has a knowledgeable support team ready to help you identify the right components using model numbers or even pictures. Whether it’s an engine part, electrical unit, or body frame part—you’re never alone in the process.

Conclusion

If you're looking to buy genuine TVS spare parts online, Smart Parts Exports is your go-to platform. With a wide range of products, an easy ordering process, and worldwide shipping, they offer unmatched convenience for both individual users and automotive businesses.

Avoid the risks of counterfeit parts. Stick with the original. Choose Smart Parts Exports for all your TVS spare parts needs—because your vehicle deserves the best.


Databricks Consulting Services: Accelerating Business Intelligence with Helical IT Solutions

In today’s data-driven world, businesses are increasingly turning to advanced platforms like Databricks to streamline their data engineering, analytics, and machine learning processes. Databricks, built on Apache Spark, allows companies to unify their analytics and AI capabilities in a scalable cloud environment. However, leveraging Databricks to its full potential requires deep expertise, which is where Databricks consulting services come into play.

Helical IT Solutions offers comprehensive Databricks consulting services designed to help organizations maximize the value of Databricks and accelerate their business intelligence initiatives. Whether your organization is just getting started with Databricks or looking to optimize your existing setup, Helical IT Solutions can guide you through every step of the process.

Why Choose Databricks Consulting?

Databricks is a powerful platform that allows companies to handle massive datasets, run complex machine learning models, and perform high-level data analytics. However, like any advanced technology, it can be overwhelming to integrate Databricks into your existing infrastructure without the right expertise. This is where Databricks consulting becomes essential.

Databricks consultants have in-depth knowledge of the platform's capabilities, from data engineering pipelines to machine learning workflows. They can help your organization design, implement, and optimize Databricks solutions that align with your specific business objectives. Consulting services ensure that your team is equipped with the right tools, best practices, and strategies to make the most of Databricks and its ecosystem.

Helical IT Solutions: Your Trusted Partner for Databricks Consulting

Helical IT Solutions has established itself as a trusted provider of Databricks consulting services, offering end-to-end solutions tailored to businesses of all sizes. Their team of experts works closely with clients to understand their unique needs and objectives, ensuring that every Databricks deployment is aligned with their business goals.

Databricks Architecture Design and Setup: Helical IT Solutions begins by assessing your current data infrastructure and designing a robust Databricks architecture. This step involves determining the most efficient way to set up your Databricks environment for your data volumes and specific workloads. Their consultants also integrate Databricks with your other data platforms and systems so that data flows smoothly across your organization.

Data Engineering and ETL Pipelines: Databricks provides powerful tools for building scalable data engineering workflows. Helical IT Solutions’ consultants help businesses create data pipelines that integrate data from various sources, ensuring high-quality and real-time data for reporting and analysis. They design and optimize ETL (Extract, Transform, Load) pipelines that are essential for efficient data processing, enhancing the overall performance of your data infrastructure.
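The extract, transform, and load stages mentioned above can be illustrated with a small sketch. This is a hypothetical, in-memory example in plain Java (16 or later) using only the standard Streams API; it does not use Spark, Databricks, or any Helical IT Solutions tooling, and the sample CSV rows and the `Sale` record are invented for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical in-memory ETL sketch; it does not use Spark or Databricks APIs.
public class EtlSketch {

    // A cleaned sales record produced by the transform step (illustrative type).
    record Sale(String region, double amount) {}

    // Extract: a real pipeline would read from files, databases, or streams.
    static List<String> extract() {
        return List.of(
            "region,amount",
            "north,120.50",
            "South,80.00",
            "north,not-a-number",
            "south,19.50");
    }

    // Transform: skip the header, parse rows, drop malformed ones, normalize region names.
    static List<Sale> transform(List<String> rawLines) {
        return rawLines.stream()
            .skip(1)
            .map(EtlSketch::parse)
            .flatMap(Optional::stream)
            .collect(Collectors.toList());
    }

    // Parses one CSV row; returns empty for rows that fail validation.
    static Optional<Sale> parse(String line) {
        String[] parts = line.split(",");
        if (parts.length != 2) {
            return Optional.empty();
        }
        try {
            String region = parts[0].trim().toLowerCase();
            double amount = Double.parseDouble(parts[1].trim());
            return Optional.of(new Sale(region, amount));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }

    // Load: aggregate totals per region into a sorted map standing in for a target table.
    static Map<String, Double> load(List<Sale> sales) {
        return sales.stream().collect(Collectors.groupingBy(
            Sale::region, TreeMap::new, Collectors.summingDouble(Sale::amount)));
    }

    public static void main(String[] args) {
        System.out.println(load(transform(extract()))); // prints {north=120.5, south=99.5}
    }
}
```

Keeping each stage a separate method mirrors how pipeline steps are usually isolated so they can be tested and replaced independently; in Databricks the same shape would typically be expressed with Spark DataFrame operations instead.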

Advanced Analytics and Machine Learning: With Databricks, businesses can scale their machine learning models and apply advanced analytics techniques to their data. Helical IT Solutions uses Databricks' built-in support for libraries such as Spark MLlib and TensorFlow to design custom machine learning models tailored to your specific business needs. The consultancy also focuses on optimizing the performance of your models and workflows, helping you deploy AI solutions faster and more efficiently.

Cost Optimization and Performance Tuning: One of the key advantages of Databricks is its ability to scale up or down based on your workload. Helical IT Solutions helps businesses optimize their Databricks costs by implementing best practices to manage compute resources and storage efficiently. Their consultants also focus on performance tuning, ensuring that your Databricks infrastructure is running at peak performance without unnecessary overhead.

Ongoing Support and Training: Helical IT Solutions doesn’t stop at implementation. Their Databricks consulting services include continuous support and training so that your team can manage and optimize the platform long after the initial deployment. Their experts offer training sessions and documentation that help your team become proficient in managing data pipelines, analytics, and machine learning models independently.

Conclusion

Incorporating Databricks into your business intelligence strategy can significantly boost your organization’s ability to manage, analyse, and derive insights from data. However, achieving success with Databricks requires expertise and guidance from experienced consultants. Helical IT Solutions stands out as a leading provider of Databricks consulting services, offering a comprehensive suite of solutions that drive results. From architecture design and data engineering to advanced analytics and cost optimization, Helical IT Solutions ensures that your business can unlock the full potential of Databricks. With their expertise, you can accelerate your journey toward data-driven decision-making and maximize the value of your data assets.

0 notes

Text

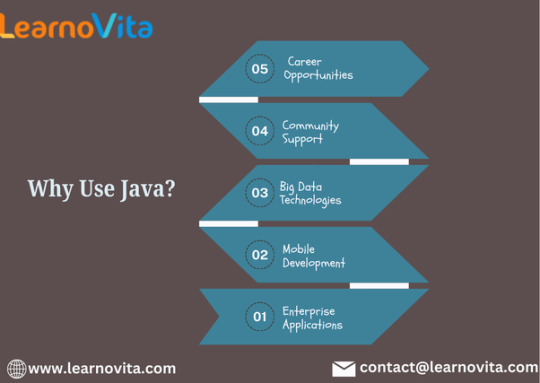

Why Learn Java? Key Benefits and Applications Explained

Java is one of the most popular and widely used programming languages in the world. It powers everything from enterprise applications and mobile apps to cloud computing and big data technologies. Enrolling in a Java training course in Bangalore can significantly enhance one’s ability to use Java’s capabilities effectively.

Whether you are a beginner looking to start coding or an experienced developer seeking to expand your skill set, Java offers a range of benefits that make it a great choice.

High Demand and Career Opportunities

Java remains one of the most in-demand programming languages across various industries. Many top tech companies, including Google, Amazon, and IBM, rely on Java for their applications and services. As a result, Java developers enjoy strong job prospects, competitive salaries, and opportunities in fields such as web development, mobile app development, cloud computing, and software engineering.

Versatility and Wide Applications

Java is a versatile language that can be used to develop a wide range of applications. Some of the most common applications include:

Web Development – Java is widely used for building scalable web applications with frameworks like Spring and server-side technologies such as JSP (JavaServer Pages).

Mobile App Development – Android applications have traditionally been built with Java, making it a valuable skill for mobile developers, although Google now recommends Kotlin for new Android projects.

Cloud Computing – Java is widely used for cloud-based backend services, and major platforms such as AWS, Google Cloud, and Azure provide official Java SDKs.

Big Data and Artificial Intelligence – Java is central to big data processing: Hadoop is written in Java, and Apache Spark runs on the JVM and offers a Java API.

Enterprise Software – Many businesses use Java for financial systems, CRM tools, and supply chain management applications.

Platform Independence

One of Java’s biggest advantages is its cross-platform capability. With the "Write Once, Run Anywhere" (WORA) principle, Java applications can run on any device with a Java Virtual Machine (JVM). This feature makes Java an excellent choice for building applications that work seamlessly across different operating systems.
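A minimal way to see "Write Once, Run Anywhere" in practice is a program that reports the platform it is running on. The class name `PlatformInfo` is hypothetical; the code only reads standard JVM system properties (`os.name`, `os.arch`, `java.version`).

```java
// Hypothetical example: the same compiled bytecode reports whichever platform runs it.
public class PlatformInfo {

    // Builds a short description from standard JVM system properties.
    static String describe() {
        return "Running on " + System.getProperty("os.name")
            + " (" + System.getProperty("os.arch") + ") with Java "
            + System.getProperty("java.version");
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

Compile once with `javac PlatformInfo.java`; the resulting `PlatformInfo.class` can then be run with `java PlatformInfo` on Windows, macOS, or Linux without recompiling, as long as a compatible JVM is installed. Each run prints that machine's own details.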

Object-Oriented Programming (OOP)

Java is based on the object-oriented programming paradigm, which makes code easier to organize, maintain, and scale. OOP principles such as encapsulation, inheritance, and polymorphism allow developers to write reusable, modular code, reducing development time and improving maintainability. Best Online Training & Placement Programs, which provide thorough instruction and job placement support, can make it easier to master Java and advance your career.
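The three OOP principles named above can be shown in one small, illustrative class hierarchy. The `Shape`, `Rectangle`, and `Circle` types are hypothetical teaching examples, not part of any library.

```java
import java.util.List;

// Illustrative shapes hierarchy showing encapsulation, inheritance, and polymorphism.
public class OopDemo {

    // Abstract base class: subclasses inherit the name and must supply area().
    static abstract class Shape {
        private final String name; // encapsulated: readable only through getName()

        Shape(String name) {
            this.name = name;
        }

        String getName() {
            return name;
        }

        abstract double area();
    }

    // Inheritance: Rectangle reuses Shape's name handling.
    static class Rectangle extends Shape {
        private final double width;
        private final double height;

        Rectangle(double width, double height) {
            super("rectangle");
            this.width = width;
            this.height = height;
        }

        @Override
        double area() {
            return width * height;
        }
    }

    static class Circle extends Shape {
        private final double radius;

        Circle(double radius) {
            super("circle");
            this.radius = radius;
        }

        @Override
        double area() {
            return Math.PI * radius * radius;
        }
    }

    // Polymorphism: the same call works for any Shape subclass.
    static double totalArea(List<Shape> shapes) {
        double total = 0;
        for (Shape s : shapes) {
            total += s.area();
        }
        return total;
    }

    public static void main(String[] args) {
        List<Shape> shapes = List.of(new Rectangle(2, 3), new Circle(1));
        for (Shape s : shapes) {
            System.out.printf("%s: %.2f%n", s.getName(), s.area());
        }
        System.out.printf("total: %.2f%n", totalArea(shapes));
    }
}
```

Adding a new shape only requires a new subclass with its own `area()`; `totalArea` keeps working unchanged, which is the reuse and modularity benefit described above.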

Rich Ecosystem of Libraries and Frameworks

Java offers a vast ecosystem of libraries and frameworks that simplify development and accelerate the coding process. Some of the most popular Java frameworks include:

Spring Boot – Used for building enterprise applications and microservices.

Hibernate – Simplifies database management and object-relational mapping.

Struts – Used for developing large-scale MVC web applications.

JavaFX – Used for creating graphical user interfaces for desktop applications.

These frameworks provide pre-built solutions that reduce development complexity and allow developers to focus on core functionalities.

Strong Security Features

Java is known for its robust security features, making it a preferred choice for developing secure applications. It includes built-in security mechanisms such as:

Automatic memory management (garbage collection), which eliminates manual-deallocation errors such as dangling pointers and double frees.

Exception handling to manage errors efficiently.

Secure APIs for encryption and authentication.

Because of its security advantages, Java is widely used in financial institutions, healthcare applications, and government systems.
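As a small illustration of the built-in security APIs and exception handling listed above, the sketch below computes a SHA-256 digest with the standard `java.security.MessageDigest` class. The `HashDemo` class is hypothetical, and `HexFormat` assumes Java 17 or later. Hashing supports integrity checks rather than encryption (Java's `javax.crypto.Cipher` covers encryption), and stored passwords should use a deliberately slow key-derivation function rather than plain SHA-256.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;

// Sketch: hashing with the standard java.security API, with explicit exception handling.
public class HashDemo {

    // Returns the SHA-256 digest of the input as a lowercase hex string.
    static String sha256Hex(String input) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            byte[] hash = digest.digest(input.getBytes(StandardCharsets.UTF_8));
            return HexFormat.of().formatHex(hash);
        } catch (NoSuchAlgorithmException e) {
            // Java SE implementations are required to support SHA-256, so this is not expected.
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }

    public static void main(String[] args) {
        // prints 2cf24dba5fb0a30e26e83b2ac5b9e29e1b161e5c1fa7425e73043362938b9824
        System.out.println(sha256Hex("hello"));
    }
}
```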

Easy to Learn and Beginner-Friendly

Java is relatively easy to learn, thanks to its straightforward syntax and extensive documentation. Beginners can quickly grasp Java's fundamentals and start building real-world applications. Additionally, numerous online tutorials, courses, and coding communities support learners at all levels.

Large and Active Community Support

With millions of Java developers worldwide, there is a vast and active community that offers help, resources, and open-source projects. Whether you are troubleshooting a bug, learning a new framework, or seeking career guidance, you can find support through:

Online forums like Stack Overflow and Reddit.

Open-source projects on GitHub.

Java conferences and meetups.

Final Thoughts

Java continues to be a powerful and versatile programming language that offers numerous career opportunities and development advantages. Whether you want to build web applications, mobile apps, or enterprise software, Java provides the tools, frameworks, and community support needed for success. Learning Java is a smart investment for any aspiring or experienced programmer looking to advance their skills and career in the tech industry.


Combining Hadoop with Machine Learning for Smarter Analytics

In today's digital landscape, businesses generate massive amounts of data every second. Advanced technologies like Hadoop and Machine Learning (ML) are essential to extract meaningful insights from this vast data pool. By integrating these technologies, companies can enhance their analytics capabilities, drive innovation, and improve decision-making processes.

Understanding Hadoop and Its Role in Big Data

Hadoop is an open-source framework for storing and processing large datasets across clusters of machines. It distributes data across multiple nodes so that storage and processing scale horizontally, using HDFS (Hadoop Distributed File System) for storage and MapReduce for processing.
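The MapReduce model can be sketched with the classic word-count example. This is an in-memory simulation in plain Java that mimics the map, shuffle, and reduce phases on one machine; it is not the Hadoop `Mapper`/`Reducer` API, and the `WordCountSketch` class and sample input splits are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory simulation of the MapReduce model (not the Hadoop API).
public class WordCountSketch {

    // Map phase: emit (word, 1) for every word in one input split.
    static List<Map.Entry<String, Integer>> map(String split) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : split.toLowerCase().split("\\W+")) {
            if (!word.isEmpty()) {
                pairs.add(Map.entry(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group all emitted values by key.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            groups.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return groups;
    }

    // Reduce phase: sum the values collected for each key.
    static Map<String, Integer> reduce(Map<String, List<Integer>> groups) {
        Map<String, Integer> counts = new TreeMap<>();
        groups.forEach((word, ones) ->
            counts.put(word, ones.stream().mapToInt(Integer::intValue).sum()));
        return counts;
    }

    static Map<String, Integer> wordCount(List<String> splits) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String split : splits) {
            // On a real cluster, each split would be mapped on a different node.
            pairs.addAll(map(split));
        }
        return reduce(shuffle(pairs));
    }

    public static void main(String[] args) {
        System.out.println(wordCount(List.of("big data needs big tools", "Hadoop handles big data")));
        // prints {big=3, data=2, hadoop=1, handles=1, needs=1, tools=1}
    }
}
```

In Hadoop itself, HDFS supplies the input splits and the framework runs the shuffle between distributed map and reduce tasks; the sketch only shows the data flow.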

Key Benefits of Hadoop:

Scalability – Handles growing data volumes by adding nodes to the cluster.

Cost-Effective – Operates on commodity hardware, reducing infrastructure expenses.

Fault Tolerance – Keeps data available when nodes fail by replicating data blocks across nodes (three copies by default in HDFS).

Flexibility – Handles diverse data formats, including structured and unstructured data.

Hadoop’s ability to store and process large datasets efficiently makes it a go-to solution for businesses dealing with big data. However, organisations need to integrate advanced analytical techniques like Machine Learning to derive valuable insights.

The Role of Machine Learning in Analytics

Machine Learning, a key AI discipline, enables computers to learn patterns from data and use them to make predictions. It is widely used in predictive modeling, anomaly detection, recommendation systems, and automation.
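The "learn from data, then predict" idea can be shown with the simplest predictive model: ordinary least-squares linear regression on a single feature. The class name and the ad-spend/sales numbers below are hypothetical; production systems would use an ML library (for example Spark MLlib on data stored in Hadoop) rather than hand-written code.

```java
// Minimal "learn from data, then predict" sketch: ordinary least squares for one feature.
public class LinearRegressionSketch {

    private final double slope;
    private final double intercept;

    private LinearRegressionSketch(double slope, double intercept) {
        this.slope = slope;
        this.intercept = intercept;
    }

    // Fit y = slope * x + intercept using the closed-form least-squares solution.
    static LinearRegressionSketch fit(double[] x, double[] y) {
        if (x.length != y.length || x.length < 2) {
            throw new IllegalArgumentException("need at least two paired samples");
        }
        int n = x.length;
        double meanX = 0, meanY = 0;
        for (int i = 0; i < n; i++) {
            meanX += x[i];
            meanY += y[i];
        }
        meanX /= n;
        meanY /= n;
        double covXY = 0, varX = 0;
        for (int i = 0; i < n; i++) {
            covXY += (x[i] - meanX) * (y[i] - meanY);
            varX += (x[i] - meanX) * (x[i] - meanX);
        }
        if (varX == 0) {
            throw new IllegalArgumentException("x values must not all be equal");
        }
        double slope = covXY / varX;
        return new LinearRegressionSketch(slope, meanY - slope * meanX);
    }

    // Predict y for a new, unseen x using the learned parameters.
    double predict(double x) {
        return slope * x + intercept;
    }

    public static void main(String[] args) {
        // Hypothetical training data: ad spend (x) vs. sales (y), exactly y = 2x + 1.
        double[] spend = {1, 2, 3, 4, 5};
        double[] sales = {3, 5, 7, 9, 11};
        LinearRegressionSketch model = fit(spend, sales);
        System.out.println(model.predict(6)); // prints 13.0
    }
}
```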

Key Advantages of Machine Learning:

Automation – Eliminates repetitive tasks by learning from data.

Data-Driven Insights – Enhances decision-making through predictive analytics.

Real-Time Analysis – Processes streaming data for instant insights.

Personalization – Adapts recommendations based on user preferences.

Machine Learning models often need large training datasets to achieve good accuracy. Hadoop is well suited to storing and processing data at this scale, which makes integrating the two highly beneficial.

How Hadoop and Machine Learning Work Together