#clientside prediction


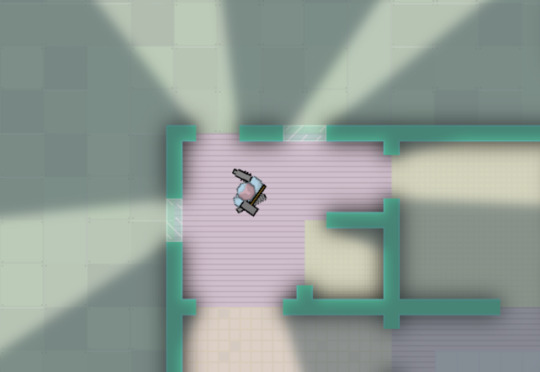

Photo

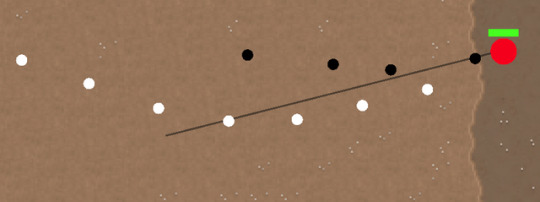

Client predicted projectile @ 300 ms of latency, followed by the server side shot (white is client prediction, black is server-confirmed reality, though seeing it is subject to the latency as well).

Out of the 7 projectiles, the first 4 have been created on the server as far as this client knows. The 4th projectile has a line demonstrating its relationship with its counterpart. The last 3 white dots are projectiles that have also been fired on the client but due to simulated lag have yet to be created on the server and confirmed again on the client (which is the point at which the black dots appear).

Getting these things really deterministic (and therefore consistent) with date/time related math seemed inherently flawed, so I’ve favored a fixed timestep + deterministic simulation approach instead.

Example/process:

When the client is determining if it can fire a projectile, it does so by comparing the weapon cooldown with an accumulator variable, e.g. is our accumulator greater than or equal to our cooldown of 0.500 seconds? Each frame it adds 1/60 of a second to the accumulator.

The server uses the same logic to determine if it should create a projectile -- accumulating 1/60 of a second for each frame it receives from the client.

The result is that the number of frames experienced by the client is the number of frames processed on the server... and the running sum of 1/60s will pass 0.500 on the identical frame for both computers (deterministic).
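The accumulator logic above can be sketched roughly as follows. This is a minimal illustration, not nengi's API: `createWeapon`, `stepWeapon`, and `simulate` are hypothetical names, and resetting the accumulator to zero after each shot is an assumption.

```javascript
// Fixed timestep: every frame advances time by exactly 1/60 s,
// regardless of how long the frame took in wall-clock time.
const TIMESTEP = 1 / 60;
const COOLDOWN = 0.5; // seconds between shots

// Hypothetical weapon state; starts with a full accumulator (ready to fire).
function createWeapon() {
    return { accumulator: COOLDOWN };
}

// Advances the weapon by one frame. Returns true if a shot is fired.
function stepWeapon(weapon, wantsToFire) {
    weapon.accumulator += TIMESTEP;
    if (wantsToFire && weapon.accumulator >= COOLDOWN) {
        weapon.accumulator = 0; // assumption: reset the timer on each shot
        return true;
    }
    return false;
}

// Runs a list of input frames through the weapon logic and records
// the frame numbers on which a shot was fired.
function simulate(frames) {
    const weapon = createWeapon();
    const shots = [];
    frames.forEach((wantsToFire, frame) => {
        if (stepWeapon(weapon, wantsToFire)) shots.push(frame);
    });
    return shots;
}

// The client and the server each process the same frames with the same code.
const inputFrames = new Array(120).fill(true); // fire held for 2 seconds
const clientShots = simulate(inputFrames);
const serverShots = simulate(inputFrames);
```

Because no wall-clock time is involved, `clientShots` and `serverShots` contain the same frame numbers no matter how fast either machine runs.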

The server can also decide if the frames it receives from the client are valid... did they contain actions that are feasible for that player? Are they coming in at a believable framerate?
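A minimal sketch of those two checks, assuming a nominal 60 fps client. The 10% tolerance and the movement-vector rule are illustrative assumptions, and both function names are hypothetical.

```javascript
const EXPECTED_FPS = 60;
const TOLERANCE = 1.1; // assumption: allow 10% above nominal for jitter

// Returns true if the number of frames a client has sent is plausible
// for the wall-clock time the server has observed.
function isBelievableFrameRate(framesReceived, elapsedMs) {
    const maxFrames = (elapsedMs / 1000) * EXPECTED_FPS * TOLERANCE;
    return framesReceived <= maxFrames;
}

// Returns true if a frame only contains actions the player could perform.
// The rule here (a movement vector no longer than 1) is purely illustrative.
function isFeasibleFrame(frame) {
    return Math.hypot(frame.moveX, frame.moveY) <= 1.0001;
}
```

A client that sends 120 frames in one second would fail the first check, which is one way a speed hack shows up under a frame-counting model.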

From there each computer (client and server) can simulate their own highly-similar result for the projectile. The server might determine that the projectile hit another player, and deduct hitpoints. Meanwhile the client, which for cheating reasons does not get to say anything about anyone’s hitpoints, might instead determine that the projectile hit another player and draw a purely aesthetic blood effect.
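That split, where both sides detect the same hit but only the server changes hitpoints, might look like the sketch below. `projectileHits`, `onServerHit`, and `onClientHit` are hypothetical names, and the circle overlap test is a stand-in for whatever collision check the game actually uses.

```javascript
// Shared by client and server: the same deterministic overlap test.
function projectileHits(projectile, player) {
    const distance = Math.hypot(projectile.x - player.x, projectile.y - player.y);
    return distance <= player.radius;
}

// Server: authoritative, so it is allowed to change hitpoints.
function onServerHit(player, damage) {
    player.hp = Math.max(0, player.hp - damage);
}

// Client: cosmetic only. It queues an effect and never touches hitpoints.
function onClientHit(effects, projectile) {
    effects.push({ type: 'blood', x: projectile.x, y: projectile.y });
}
```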

After some polish and lag compensation, I’ll publish a nengi-demo of a client+server combo that can predict and compensate high speed shots at a variety of different latencies while maintaining very reactive controls (16 ms input delay). An early prototype of this demo already exists for hitscan weapons, but has some bugs with its timers (Date.now() math...).

#nengi.js#devlog#network programming#multiplayer programming#clientside prediction#lag compensation#authoritative server design#physics simulation

Photo

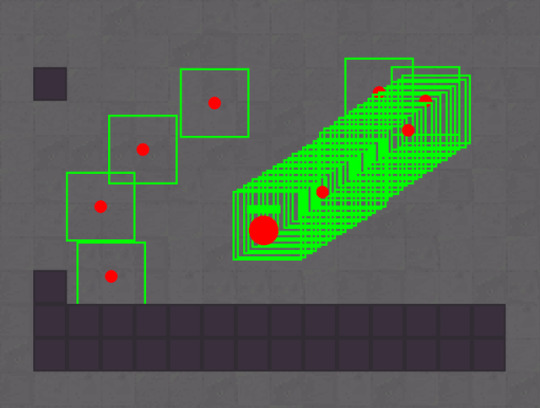

Some visual tools for continuous collision detection, broadphase sweeps, and a raycaster. In this case the green rectangles are showing areas where a spatial structure was accessed in the recent past.

There’s something naturally unintuitive about a game which takes into account both the immutable past (for lag compensation) and the hypothetical future (for clientside prediction). Each tick of the engine occurs in the present... but the state of the present depends on a past of the server as well as the newly discovered pasts of players (things players allegedly did a while ago, but the server hears about just now). The state of the present for the client depends on the past of the server, plus a hypothetical future that the client simulates just ahead of confirmation from the server. So nothing is simple, nor particularly relatable to the physical world (some overlap w/ astronomy and looking at distant objects, maybe).
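One common way to give the server access to "the immutable past" is a short per-entity history of positions, indexed by tick. The sketch below is a generic illustration under that assumption, not nengi's implementation; `PositionHistory` and `rewindTick` are hypothetical names.

```javascript
// Keeps a short history of positions per tick so the server can ask
// "where was this entity at tick N?" when resolving a delayed shot.
class PositionHistory {
    constructor(maxTicks) {
        this.maxTicks = maxTicks;
        this.records = []; // oldest first: { tick, x, y }
    }

    record(tick, x, y) {
        this.records.push({ tick, x, y });
        if (this.records.length > this.maxTicks) this.records.shift();
    }

    // Returns the recorded position at the given tick, or null if that
    // tick is outside the retained history.
    at(tick) {
        return this.records.find(r => r.tick === tick) || null;
    }
}

// Estimates which past tick a player was looking at when they acted,
// given their latency in milliseconds and the server tick rate.
function rewindTick(currentTick, latencyMs, tickRate) {
    return currentTick - Math.round((latencyMs / 1000) * tickRate);
}
```

For example, at tick 100 on a 20 tick server, an action from a player with 100 ms of latency would be checked against positions recorded at tick 98.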

While I’ve been tinkering with these things for quite a long time, I’m near the point where prediction and compensation become part of nengi’s formal api. The challenge isn't in getting them to work; nengi has thought in these terms since its inception. The challenge comes in writing the actual game code in a way that these various temporal states become valuable tools and not an overwhelming mess. (We’ll see about that...) After all, people are often looking to translate game ideas from singleplayer, which exist in a single temporal state, to multiplayer, and then they want the bells and whistles of CSP and lag comp... which exist by constantly reconciling multiple temporal states.

Video

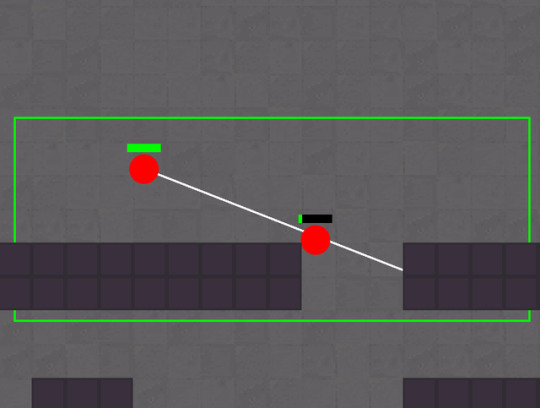

Clientside prediction in nengi, available for download @ https://timetocode.com/nengi

Video is just a quick overview and demonstration for those already familiar with the concept. I’ll work on an explanation of what it is and why to use it when closer to nengi 1.0.0.

I am pretty happy with how the code actually came out.. there’s a certain elegance to using the serverside code on the clientside so that the client can predict what the server would do with the client’s inputs. This is nothing new to the world of network programming (and it is thanks to the writings of many experts that I have even learned this much).. but I’d like to think that I’ve done it in a way that is particularly high-performance and yet generally-applicable to a variety of games... and well.. in JavaScript... which is a bit unique. As this feature has nearly no impact on server performance, I can still add 100-200 players to the mixture (assuming a relatively simple game and a 10 to 30 tick server). We’ll see if I can still hang onto this performance level with lag compensated collisions (I would think those would cost the server some CPU).
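The general pattern of sharing movement code so the client can predict the server looks something like the sketch below. This is the textbook predict-then-reconcile technique, not nengi's actual API; `applyCommand`, `PredictingClient`, and the `SPEED` value are hypothetical.

```javascript
const SPEED = 5; // units moved per command; illustrative value

// Shared movement logic: the same function runs on server and client.
function applyCommand(state, command) {
    return { x: state.x + command.dx * SPEED };
}

class PredictingClient {
    constructor() {
        this.state = { x: 0 };
        this.pending = []; // commands sent but not yet confirmed
        this.nextSeq = 1;
    }

    // Apply the input immediately (prediction) and remember it.
    input(dx) {
        const command = { seq: this.nextSeq++, dx };
        this.pending.push(command);
        this.state = applyCommand(this.state, command);
        return command; // this is what would be sent to the server
    }

    // On an authoritative update: start from the server state, drop the
    // confirmed commands, then replay the ones still in flight.
    reconcile(serverState, lastProcessedSeq) {
        this.pending = this.pending.filter(c => c.seq > lastProcessedSeq);
        this.state = this.pending.reduce(applyCommand, serverState);
    }
}
```

When the server agrees with the prediction, the replay lands on the same position and the player sees nothing. When it disagrees, the client's position shifts by the size of the disagreement while keeping the inputs that are still in flight.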

I’m going to be working on the ultimate combination of clientside prediction, entity interpolation, and lag compensation combined to create extremely crisp controls and hit detection that feels similar from 0 to 250 ms of latency. Reaching and demonstrating this level of performance in a browser has been a goal of mine since the start of nengi. I think it is within grasp now.. in fact I’ve made all of these pieces 3 or more times each in some fairly messy game prototypes.. but it is time to connect them and expose them with a somewhat manageable API via nengi.

The epitome of this test to me is a small shooter with a high-accuracy weapon and rapid player movement. Essentially... fast players + railguns. I’ll be making this 2D initially, though when I start to break nengi into modules (the last thing before the 1.0.0 release) I hope to repeat it again in 3D. This is also going to form the basis for the gunplay in the battleroyale game that I’ve been teasing.

Photo

Active reload for https://bruh.io/. After the player starts reloading, if they can press ‘R’ again at the right time they’ll be rewarded with a fast reload.

This is part of a megapatch we’re working on to deepen the weapon mechanics. Also, did I mention... RELOADING? Also weapon recoil and bullet spread. Still infinite ammo for now tho.

Text

The Peeker’s Compromise: A Fair(er) Netcode Model

Many first person shooters are plagued by a netcode artifact known as the peeker’s advantage. I propose here a technique for correcting this bug, based around normalizing gameplay in such a way that human reflexes and skill decide the outcome of competition (as opposed to network latency or artifacts of varying netcode designs, discussed shortly).

When a player in a first person shooter is moving around the game world, they exist in a position on their own game client that is slightly ahead of their position on the server. This is a solution/side-effect of clientside prediction, which is ubiquitously used in first person shooters to give the player instantaneous movement and controls that feel like a single player game despite controlling a character that is moving around on a remote server.

If we were to visualize the difference between the clientside player position and the serverside player position it would look like two characters chasing each other. How far apart the two characters are depends (in descending order of usual importance) on how fast movement is in the game, the latency of the player, and the tickrate of the server. But how big are these differences in the actual games of the current era? Are the two states of characters practically overlapping? Or is one several meters behind the other? The answer -- which varies by game and by internet connection -- is that the desynchronization between these two positions is significant. Over amazing internet connections in games with slow moving characters the desynchronization is usually on the order of 1 player length. So imagine any fps game character (valorant, cs:go, apex, overwatch, fortnite, cod, etc) -- and then imagine them creeping or walking around slowly. In this scenario the desync between the two states is such that one could picture the character being followed by its clone, touching. If they move *really* slowly then they’ll be overlapping. However as the characters break into a run their clone will trail them by more -- maybe 2-8 player lengths depending how fast characters are in the game. If a player has a high latency the clone will be even farther behind in all scenarios except holding still.
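As a rough back-of-envelope model of that relationship (an assumption for illustration, not a measurement from any of these games), the gap can be estimated as movement speed multiplied by the time the server lags behind the client. Here that time is approximated as one-way latency plus one server tick, and it ignores interpolation delay and other engine buffering.

```javascript
// Rough estimate of how far the serverside position trails the
// clientside position. All three inputs are the factors named above.
// Assumption: lag time = one-way latency + one server tick interval.
function desyncDistance(speedUnitsPerSecond, oneWayLatencyMs, tickRate) {
    const lagSeconds = oneWayLatencyMs / 1000 + 1 / tickRate;
    return speedUnitsPerSecond * lagSeconds;
}
```

Doubling the movement speed doubles the gap, while the tick interval only adds a small constant on a fast server, which fits the ordering of importance given above.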

Now when a player shoots their gun in all of the above games, the game engines will calculate the shot based on where the player perceives themselves. That means that as you play the game what you see is pretty much what you get. You don’t have to manually correct for lag while aiming in modern shooters -- just aim for the head right where you see it. However this introduces the peeker’s advantage. A defender can hold a corner with their crosshair primed to shoot anything that appears, but an attacker (the peeker) who comes around that corner is ahead of their server position and thus they get a bit of extra time to “peek” and shoot at the defender before they themselves are visible to the defender. Depending on the actual amount of lag and the game itself the defender perceives themselves as either having been shot insanely quickly right as the attacker appeared, or maybe if the lag is not as bad they perceive themselves as having gotten to trade shots with the attacker, but ultimately they lost. The attacker perceives nothing special -- they just walked around the corner and shot the defender b/c they were playing aggressively and have superior reflexes (or so they think).

How big is the actual peeker’s advantage? Well it varies by game, but an article put out by Riot Games about Valorant goes into detail about how much the peeker’s advantage affects gameplay, and how their engine attempts to minimize it. It’s a great read: https://technology.riotgames.com/news/peeking-valorants-netcode. But to summarize, using 128 tick servers (very fast), 35 ms of internet latency (fast), and monitor refresh rates of 60 hz (standard) they calculate an advantage of 100 milliseconds after extensive optimization. That’s fast, but is it fast enough? Well, back in 2003 I used to be a competitive Counterstrike 1.6 player at around the same time that I was obtaining a psychology degree with a particular interest in human perception (reaction time, how our eyes work, perception of subliminal images that are shown very quickly). I tested the reflexes of myself and all of my teammates. Competitive gaming didn’t have the same structure back then as it does now (everything has a ladder now -- back then it was private leagues), but by modern standards we were probably top 3% ladder players or something like that. Generally speaking there isn’t much of a speed difference in the whole pool of pro-gamers, at least when compared to new players. They are all pretty fast. Response time for watching a corner and clicking as you see a player (already perfectly lined up) falls into the range of 150-190 ms. Tasks that involve moving the crosshair to react quickly (as opposed to having had perfect placement already) slow that down another 50-150 ms. But generally speaking the competition between two similarly fast players with good crosshair placement comes down to very tiny units of time with even 10 ms producing an advantage that is measurable (both Riot and I agree about this). This means that had the server allowed for a double K.O. (which these games do not) we would find that in fact both players were very good and would’ve killed the other just 20-50 milliseconds apart.

Valorant and CS:GO don’t work like that however, and instead the game essentially deletes the bullets from one of the players and leaves the other alive. Unfortunately this difference in human reflexes amongst competitive gamers is entirely gobbled up by 100 ms of peeker’s advantage -- meaning that at high skill levels the peeker will very often win and the defender will very often lose. So while the Riot article celebrates the success of engineering that allowed Riot to reduce the peeker’s advantage as much as they did, if you read the fine print you’ll find that the peeker’s advantage remains huge.

I don’t mean to pick on Riot, far from it. They’ve clearly done an amazing job. The other games I mentioned earlier are presumably in the same approximate ballpark, though I can tell you from personal experience that some of them are a fair margin worse. Not all of the games I mentioned use a 128 tick server (only one does). They also have longer interpolation delays and other little engine details that slow things down further. Riot is also an insane company that literally owns/builds the internet just to reduce latency for its players -- so if we want to take away a general sense of how bad the peeker’s advantage is in most games we should assume it to be worse than the scenario described above regarding Valorant.

Now that I’ve discussed at length the peeker’s advantage, allow me to present a related netcode model that attempts to solve these problems: The Peeker’s Compromise. If we delay the time of death on the serverside by the timing difference between the attacker and the victim, then we can allow the defender an equal opportunity to shoot the attacker. The server can then determine the winner (and the remaining damage) based on the performance of the human (instead of using the ~100 ms of engine-related advantage and internet latency). So let’s use some numbers for a hypothetical situation. Let’s say our game has a 128 tick server, the players have 35 ms of latency, and 60 hz screens (like the Valorant example from earlier). Right as a player peeks another player they essentially get to shoot 100.6 ms sooner than their victim. As their shots arrive at the server the server might calculate that the victim has died -- but rather than killing the player it will keep them alive for 100.6 ms PLUS their own latency, which in this scenario puts the total at 135.6 ms. If during these 135.6 milliseconds the server receives shots where the defender hits the peeker, it will enter a section of code that attempts to settle this discrepancy. First off, it is entirely possible that after compensating each shot for the difference between the players we find out that one truly was faster than the other -- the game could use this information to decide which one lives and which one dies. It also might make sense to allow damage to legitimately trade kills and to build double K.O. situations into more first person shooters.
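A minimal sketch of the delayed-death window, using the numbers from the example (100.6 ms of advantage plus 35 ms of victim latency). `DuelResolver` and its methods are hypothetical names, and how a contested duel is finally settled is left open, as it is in the text.

```javascript
const PEEKER_ADVANTAGE_MS = 100.6; // figure used in the example above

class DuelResolver {
    constructor() {
        this.pendingDeaths = new Map(); // victimId -> { killerId, resolveAt }
    }

    // Called when a shot would kill `victimId`. Instead of killing at once,
    // keep the victim alive for the advantage plus their own latency.
    registerLethalHit(killerId, victimId, nowMs, victimLatencyMs) {
        const resolveAt = nowMs + PEEKER_ADVANTAGE_MS + victimLatencyMs;
        this.pendingDeaths.set(victimId, { killerId, resolveAt });
        return resolveAt;
    }

    // Called when a shot from `shooterId` hits `targetId`. Returns
    // 'contested' if the shooter is awaiting death at the hands of that
    // target and this shot arrived inside the grace window.
    registerHit(shooterId, targetId, nowMs) {
        const pending = this.pendingDeaths.get(shooterId);
        if (pending && pending.killerId === targetId && nowMs <= pending.resolveAt) {
            return 'contested';
        }
        return 'normal';
    }
}
```

A 'contested' result is the point where the server would compare the compensated timing of both shots and decide who lives, or allow a double K.O.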

Let’s talk about the artifacts of this new and proposed system. In low-latency games with a high tick rate this change would be subtle -- we would just have no more peeker’s advantage. As latency increases all the way up to 200 ms we will have a new artifact. Instead of having a more severe peeker’s advantage we’ll end up with a scenario where it looks as if players are taking 1-3 extra bullets beyond what would normally kill them -- although if you stop shooting early they still end up dead a few milliseconds later. The Vandal in Valorant (similar to the AK in cs:go) fires 9.75 shots per second, which is one bullet per 103 ms. So in a best case scenario the player death is delayed by the time it takes to fire one extra shot at full auto, and in a worse case scenario we add 1 bullet per added ~100 ms of waiting done by the engine. It would also make sense to cut off certain shots from being counted from a laggy player (existing systems already do this in their own way).
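The arithmetic behind those figures, as a small sketch. Rounding the number of extra shots up to the next whole bullet is an assumption about how a player would perceive the delay, and both function names are hypothetical.

```javascript
// Time between consecutive shots for a given rate of fire.
function msPerShot(shotsPerSecond) {
    return 1000 / shotsPerSecond;
}

// Number of additional full-auto shots that appear to be absorbed while
// the server delays a death. Assumption: partial intervals round up.
function extraShots(deathDelayMs, shotsPerSecond) {
    return Math.ceil(deathDelayMs / msPerShot(shotsPerSecond));
}
```

At 9.75 shots per second the interval is about 102.6 ms, so a delay of around 100 ms costs one extra bullet and a delay of around 300 ms costs three.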

Gameplay at lower skill levels wouldn’t really be affected one way or the other. It isn’t affected much by the peeker’s advantage either -- players have to know where to aim and thus be involved in a legitimate reflex test before we’re down to something so close that milliseconds of delay have an effect. If players are oblivious to each other, or place their crosshairs incorrectly as they come around a corner, then the added slow down of the human having to make a new visual-search-decision-plus-adjustment is too slow for any of this to matter. But at higher skill levels there would be some actual changes to gameplay. The most significant change is that players would be able to hold corners -- and if they’re truly faster than the peeker they would win. In such a design it really would make much more sense to allow two players who fire at essentially the same time to kill each other, which if adopted would need to be addressed at a game design level. Also games that had an alternate method of very indirectly addressing peeker’s advantage, such as weapon instability during movement as a major element (arma, h1z1, pubg, tarkov, etc), would have more options, and may need to tune existing timings to get the same feeling back.

The underlying netcode behind the peeker’s advantage affects more than the classic peeking situation. It also affects two players picking up an item at the same time (it decides the winner here). And it also is present when you’re playing a game and you duck behind cover and take damage after you should already have been safe (the peeker and the victim are on slightly different timelines). Neither peeker’s advantage nor my proposed peeker’s compromise actually removes the lag of the underlying network connection or the game engine ticks; both simply *move* delays around such that the controls feel responsive and the latency is suffered elsewhere. There’s a certain physics to the realm of network programming. As I like to half-jokingly say: “Lag is neither created nor destroyed [by compensation techniques.]” So the same problems would still exist, though the Peeker’s Compromise is philosophically different. Where the peeker’s advantage says let the fastest internet and the more aggressive player win, the Peeker’s Compromise says let the more skillful (in terms of accuracy and speed) human win. Outside of a double-K.O.-esque duel however, this is subjective. Who should pick up an item when both players tried to pick it up at the same time? Well the old method says the one with the better internet gets it, the new method suggests perhaps that we should compensate the timing to remove the internet/engine delay and award it to whomever was faster. But what about getting shot after reaching cover? This is really up to the game designer -- is it more impressive to tag someone barely as they run off? Or more impressive to slide behind a barrier right as you get shot? It’s a design decision. It’s also possible via this proposed system to compromise. The engine design I propose has more data in its context with which to make decisions, courtesy of temporarily allowing ties to occur which get addressed after both players take an action. It could say: well, that was an amazing shot, AND it was an amazing dodge. After crunching the numbers the decision is to deal a hit but cut the damage in half as a compromise between the feats of the two players.
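Both ideas can be sketched in a few lines. The field names (`receivedAtMs`, `latencyMs`) and the functions are hypothetical, and subtracting each player's one-way latency is only the simplest possible form of timing compensation.

```javascript
// Estimates when the player actually acted by removing their network
// delay from the time the server received the request.
function compensatedTime(claim) {
    return claim.receivedAtMs - claim.latencyMs;
}

// Awards a contested item to whoever acted first after compensation,
// rather than to whoever's packet arrived first.
function resolvePickup(claimA, claimB) {
    return compensatedTime(claimA) <= compensatedTime(claimB)
        ? claimA.playerId
        : claimB.playerId;
}

// Compromise between a shot that landed and a dodge that succeeded:
// the hit counts, but for half damage.
function compromiseDamage(baseDamage, shotLanded, dodgeSucceeded) {
    if (!shotLanded) return 0;
    return dodgeSucceeded ? baseDamage / 2 : baseDamage;
}
```

In the pickup case, a player on a slow connection whose request arrives second can still win the item if they pressed the button first.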

#netcode#network programming#peeker's advantage#Peeker's Compromise#multiplayer#first person shooter#Riot Games#Valorant#Valve#Counterstrike

Text

Plans for nengi.js 2.0

Hi, this is Alex, the people’s network programmer and developer of nengi.js. Let’s talk about the future.

I consider nengi 1.x to be complete. Of course there are always unfinished items of work -- I wish I had a comprehensive tutorial series on prediction for example -- but really things have been stable and good for a long time.

So as I look towards 2.0, there are no fundamental changes to the library in mind. Instead the future is about improvement, making things easier, and staying open to deeper integrations with other libraries and possibly even with other languages.

One area of intended improvement is the whole process around forming connections both on the client and the server.

On the clientside, client.readNetwork() or equivalent is invoked every frame as the mechanism that pumps the network data into the application. However, this pump also controls the network data related to the connection -- meaning that without spinning the game loop one cannot finish trading the data back and forth that completes the handshake. I’d like to redo this such that we end up with a more normal api, e.g. client.connect(address, successCb, failCb) or equivalent. This presents a clean flow with no ambiguity as to when a connection is open. It’ll also let the clientside of games be a bit tidier as they don’t need to spin the network in anticipation of a connection opening.

On the serverside the whole .on('connect', () => {}) warrants a redo. I have in mind a simpler api where a ‘connectionAttempt’ occurs, and then the user code gets to invoke instance.acceptConnection(client, greeting) or instance.denyConnection(client, reason), thus again providing a nice and clean exact line after which we know what state the connection is in (attempted => connected, or attempted => denied).
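The two transitions described here (attempted => connected, attempted => denied) amount to a very small state machine. The sketch below only illustrates that idea; `ConnectionGate` is a hypothetical stand-in and not part of nengi.

```javascript
// Tracks the connection state of each client by id.
// Valid transitions: attempted -> connected, attempted -> denied.
class ConnectionGate {
    constructor() {
        this.states = new Map();
    }

    attempt(clientId) {
        this.states.set(clientId, 'attempted');
    }

    accept(clientId) {
        this.transition(clientId, 'connected');
    }

    deny(clientId) {
        this.transition(clientId, 'denied');
    }

    // Only a client that is currently 'attempted' may change state.
    transition(clientId, nextState) {
        if (this.states.get(clientId) !== 'attempted') {
            throw new Error('client ' + clientId + ' has no pending attempt');
        }
        this.states.set(clientId, nextState);
    }

    stateOf(clientId) {
        return this.states.get(clientId) || 'unknown';
    }
}
```

Because every client is in exactly one named state, the user code always knows whether a connection is open on the line after it calls accept or deny.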

Another area is TypeScript support and some positive side-effects thereof. Nengi has minimal TypeScript definitions, but I think the actual surface of each api class/function should be rewritten in actual TypeScript. This will be limited, as the actual inner workings of nengi are truly untyped -- it has its own crazy typesystem and fancy optimization of high speed iteration based on object shapes that I should stop talking about now before I accidentally write a dissertation.

Adding TypeScript support brings a major benefit to TypeScript and JavaScript developers alike: the opportunity for some top tier intellisense. The nengi api is small and having some modest documentation pop up right as you type things like .addEntity would be awesome.

The other benefit (ish..) of formally supporting TypeScript is that a few of the processes around how to integrate game logic and nengi could finally be strictly addressed. I used to favor a very laissez-faire approach to integration as I didn’t want to stifle anyone’s style… but as time has gone by it seems that the level at which nengi can be decoupled is not seen as powerful, and instead it just confuses people. I want a better newbie experience, and presenting things like “well you can kinda do anything” isn’t helpful. I wouldn’t necessarily limit nengi itself, and instead may supply this functionality as a layer, but I would like to suggest a much stricter boilerplate for topics such as associating game data with a connected client and any other spot where game and network get glued together.

On that note of making things less open ended, I am *considering* whether nengi should offer an Entity and Message etc as part of the api. Currently entity is a concept or an implied interface -- really it is any object. Too decoupled? Maybe something more explicit would be nice. We’ll see.

More advanced protocols/schemas are also needed in the future. There are a bunch of features that can easily come from having more options on the protocols, but initially I plan to skip over all of these features and just change the api in a hopefully future-proof manner. The plan here is to change things from protocol = { x: Int, y: Int, name: String } to something more like context.defineSchema({ x: Int, y: Int, name: String }). Initially these will do the same thing, but in the future more arguments will be added to defineSchema.

The eventual removal of types from nengiConfig is another dream feature that may or may not make 2.0 but is worth a bit of discussion. NengiConfig.js is that file where every entity, message, command etc is listed out. Removing this would require nengi to be able to explain *in binary* how to *read future binary* and is non-trivial. The benefit however is that the parallel building of client and server code would no longer be a strict requirement. In the end of course a client and server need to be built for one another, but if the relationship were less strict than it is now it may pave the way for eventual nengi clients that aren’t even JavaScript. To me this has always been a bad joke -- who would want such a thing??? But as the years have passed it has become clear that nengi is not just special for being JavaScript, but that it is actually competitive in performance and functionality with the type of technology coming out of AAA multiplayer gaming companies (send money!!). So this may not be a bad direction (though it is worth noting there are at least two other major changes needed on this path).

There would also need to be changes to the current ‘semver’-ish release cycle. As it stands currently nengi version numbers follow the rules of breaking changes on major release (1.0.0), non-breaking changes on minor release (0.1.0), and small patches on patch release (0.0.1). As the current version of nengi is 1.18.0 that means that I’ve managed to add all functionality since release without a single breaking change (send money?!). This is not easy. These new changes described above are deliberately breaking api changes. Given the work cycle that I’m on and the lack of funding, the most efficient way for me to work would be with breaking changes allowed and perhaps a changelog to help the users out. So 2.0.0+ may shift to this type of development, where the ‘2’ is just my arbitrary name for the functionality, and 2.1.0 is a potentially breaking change. Obviously no one has to join me over in the land of nengi 2 until it becomes more stable, but letting me do *whatever* will get everything done faster, which is more important than ever given my limited time.

In the category of “maybe 2.0 things” there are a bunch of other things I’d like to talk about too, but the topics are too involved (and experimental) to go into in detail. Here’s a vague summary of things I’ve put R&D time into:

Experimental rewrites of sections of nengi in Rust, C, C++ with integrations via n-API, wasm transpilation, and some in-memory efforts. Crossing the boundary between JavaScript and anything else has been problematic as a means of solving most obvious problems, but some less-than-obvious problems may yet warrant this approach. I would say that n-API is a dead end for most nengi functionality but has some merit for spreading sockets across threads. WASM, or specifically working on a contiguous block of memory, may have some promise but requires further R&D.

An advanced rewrite of the nengi culler based on spatial chunking (promising!).

A middle api between serving up interpolated snapshots and the nengi client hooks api. This would become a generic replacement for the entire nengi clientside api. Until further typescript support I’m going to leave this one alone as it is very likely that a naturally elegant solution will show itself in the near future.

Multithreaded nengi, specifically the spreading of open connections across threads and the computation of snapshots. True optimal CPU use is opening multiple instances, not giving more threads to an instance, but there are some uses nonetheless.

Multi-area servers that use spatial queries instead of instances or channels (for example creating multiple zones, but not making an instance or channel per zone, instead the client.view just goes to a different space with some spatial math).

So yeah, that’s the plan! Thanks for your support (send money)

https://github.com/sponsors/timetocode

https://www.patreon.com/timetocode

Text

nengi.js roadmap

I’ve recently given tours of nengi to 3 developers. The feedback has been very helpful.

The top requested features/changes so far are:

demo (and api) for multi-instance game worlds

demo of sending tilemaps via nengi

webpack support in demos, build/run scripts, es6 module support

division of demos into client, server, and shared code

code sample for authenticating players

code sample for saving/persisting a game world

more support for 3 dimensional space (z-axis, different ways of calculating entities within view of a player)

a more complete game example that goes beyond nengi features for newer devs to dissect (player, enemies, camera, medium sized world, map data, animations)

Also on the TODO list for the current game that I'm working on are improvements to clientside prediction, serverside lag compensated collisions, and polishing+modularizing the current entity interpolator. These probably won't be seen in the published version of nengi until after I've tested them.

BTW if you're using nengi currently and trying to make use of multiple instances as one game world, a player can travel between instances the same way the player gets into the instance to begin with: via connecting. So it is possible to have a player step into a cave entrance (for example) and then have the server detect this and tell the client to connect to another instance. This is a manual process at the moment. The feature that I may add is a streamlined version of the same idea, perhaps with an api like instance.transferClient(whoToTransfer, instanceToTransferTo).

If you make a multi-instance game in nengi I recommend being very tidy and modular with the game logic, such that it can be used across all relevant instances. Like in a classic mmorpg.. much of the game's core logic applies in all areas of the game world, with only the terrain, creatures, and players varying. Perhaps a few instances are slight exceptions... maybe the big city of the game is optimized for higher populations by having a lower tickrate and disabling the CombatCollisionSystem. Maybe an arena instance is optimized for responsiveness via a higher tickrate, and also carries slightly different rules.

I'm also debating a feature where a client can connect to multiple instances at the same time as a means of hiding the seam between the areas of the world controlled by one server and another.

ETAs are largely unknown. v1 prediction/compensation is going to be released with my new game. I hope to add multi-instance support and do a big code reorg around the same time (6-8 weeks).

I do have one more spot open for the week of Aug 21 2017 if someone would like a tour. Details: http://timetocode.tumblr.com/post/164001115291/offering-nengijs-walk-throughs

#nengi.js#nengi#html5 multiplayer game engine#network engine#network library#javascript#node.js#high performance

Photo

Experimenting with a different aesthetic. I like something about this, although everything is a bit too pastel at the moment. Will keep iterating.

I think only the lavender and the turquoise are too saturated at the moment

I liked things about the previous textures, but ultimately they had two main problems: 1) they were confusingly prominent... I need the players and the bullet-hell stuff to really stick out against the background and 2) the pseudorealism was blocking me from learning more pixel art.

In other news, clientside prediction of movement is working super smoothly up through 500 ms of latency, and prediction of weapon firing is working for hitscan weapons. I have yet to test if fast moving projectiles that can travel the screen in <0.5s can be acceptably implemented as raycasts, but that is my intention. The game is going to have a lot of those, probably. The slow moving projectiles still need work. But given that all of the hard technical challenges are dropping off without a problem, I’m going to focus on aesthetics for a while.
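One way to treat a fast projectile as a raycast is to test the whole segment it travels during a tick against each target, instead of only sampling its position at the end of the tick. The sketch below uses a standard closest-point-on-segment test against a circular target; the function name and the circular hitbox are assumptions.

```javascript
// Returns true if the segment from (x0, y0) to (x1, y1) passes within
// `radius` of the circle centered at (cx, cy).
function segmentHitsCircle(x0, y0, x1, y1, cx, cy, radius) {
    const dx = x1 - x0;
    const dy = y1 - y0;
    const lengthSq = dx * dx + dy * dy;
    // Parameter of the closest point on the segment, clamped to [0, 1].
    let t = lengthSq === 0 ? 0 : ((cx - x0) * dx + (cy - y0) * dy) / lengthSq;
    t = Math.max(0, Math.min(1, t));
    const closestX = x0 + t * dx;
    const closestY = y0 + t * dy;
    return Math.hypot(cx - closestX, cy - closestY) <= radius;
}
```

A projectile that moves 100 units in a single tick would skip straight over a target of radius 5 if only its start and end positions were checked, while the segment test still reports the hit.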
