A blog about game development and procedural content generation. This is the blog section of timetocode.com | @bennettor

Text

How to mix vuex / vue with HTML5 games

Do you want a vue ui mixed with Babylon.js, Three.js, PIXI.js, canvas, Webgl, etc? Or maybe just to store some variables... or make reactive changes? Here's how it works in a few of my games... There are 3 main points of integration. These are mutating state in the store (writing to a variable in the store), reading state from the store (accessing the store variables from other places), and reacting to changes in state (triggering a function when a value in the store changes).

First, how do you expose the store? Well you can literally just export it and then import it. Or be lazy and stick it on window.store. You'll want to have a sensible order of initialization when your game starts up so that you don't try to access the store before it exists. But games already use loaders etc to load up their images and sounds, so there's a place for that. Load up the store first, probably.

For reading state from the store you can use a syntax like store.getters['settings/graphicsMode']. It can be a little simpler than that, but this shows the syntax for a store nested within a store (a namespaced module). In my game for example, I have a gameStore and a settingsStore just because I didn't feel like putting *all* of the properties into one giant vuex config. One can also access the state via store.state.settingsStore.someProperty but this is reading it directly instead of using the getter. You probably want to use the getter, because a getter behaves like a computed property and can return a derived value, but either will work.

For writing state to the store, the key is store.dispatch. Syntax is like store.dispatch('devStore/toggleDeveloperMenu', optionalPayload). This is invoking the *action*, which means you need to create actions instead of just using a setter (a mutation, in vuex terms) with this particular syntax.
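As a rough sketch of the pieces named above, here is what a namespaced settings module could look like. The module name `settings`, the mutation and action names, and the default value are assumptions for illustration, not the actual config from the games described here. The module is a plain object, so it can be handed to Vuex under `modules`.

```javascript
// Hypothetical namespaced module; names and defaults are illustrative assumptions.
const settingsModule = {
  namespaced: true,
  state: () => ({ graphicsMode: 'medium' }),
  getters: {
    // Read from outside via store.getters['settings/graphicsMode']
    graphicsMode: (state) => state.graphicsMode,
  },
  mutations: {
    // The "setter": only mutations change state directly.
    setGraphicsMode(state, mode) {
      state.graphicsMode = mode;
    },
  },
  actions: {
    // Invoked from outside via store.dispatch('settings/setGraphicsMode', 'low')
    setGraphicsMode({ commit }, mode) {
      commit('setGraphicsMode', mode);
    },
  },
};

// With Vuex 4 installed, the module would be registered roughly like this:
//   import { createStore } from 'vuex';
//   const store = createStore({ modules: { settings: settingsModule } });
//   store.dispatch('settings/setGraphicsMode', 'ultra');
//   store.getters['settings/graphicsMode']; // reads the value back
```

Keeping the action as a thin wrapper around the mutation means the game code only ever needs to know about dispatch and getters.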

So that's reading and writing! But what about reactivity? Well, let's say your game logic dispatches some state which enters the store. You'll find that if that state is displayed in your vue ui it is already reactive and working. For example if you store.dispatch('game/updateHitpoints', { hp: 50 }) and there's already ui to draw the hitpoint bar on the hud, that is going to change automagically.

But what if you're pressing buttons in your vue-based settings menu or game ui? How does one get the game itself to react to that? Well there are two somewhat easy ways (among a million homegrown options). The first is to use the window to dispatch an event from the same action that you use to update the state, and be sure that you always use the action instead of directly accessing the setter. This will work fine, and I did it at first, but there is a more elegant and integrated way. That is to use store.watch.

The store.watch(selector, callback) will invoke your callback when the state defined by the selector you provide changes. For example this is how to set up your game to change the rendering settings in Babylon.js (pseudocode) whenever someone changes the graphics level from low | medium | high | ultra:

store.watch(state => state.settings.graphicsMode, () => changeGraphics(store.getters['settings/graphicsMode']));

The first arg is the selector function, which in this case specifies that we're watching graphicsMode for changes. The second function is what gets invoked when it does change. The actual body of my changeGraphics function is skipped b/c it doesn't really matter... for me it might turn off shadows and downscale the resolution or something like that.

Personally I create a function called bindToStore(stateSelector, callback) which sets up the watcher and invokes it once for good measure. This way when my game is starting up, as all the watchers get set up listening for changes, that first invocation also puts the ui into the correct state.

So that's writing, reading, and reacting to state changes across the border between vuex/vue and any html5 app or game engine by integrating with getters, actions via dispatch, and watchers. Good luck and have fun!
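For anyone who wants to see the bindToStore idea spelled out, here is a minimal sketch. It assumes the store is passed in as an explicit first argument (the version described in the post takes only the selector and callback), and it uses a tiny stand-in store so the example runs without Vuex installed. The stand-in is NOT Vuex: it only mimics the `store.watch(selector, callback)` shape and fires synchronously, whereas real Vuex batches watcher callbacks.

```javascript
// Sets up a watcher and invokes the callback once immediately.
// `store` is anything exposing the store.watch(selector, callback) shape.
function bindToStore(store, stateSelector, callback) {
  // Vuex calls the selector with (state, getters) and the callback with (newValue, oldValue).
  const unwatch = store.watch(stateSelector, callback);
  // Initial invocation so the game starts in sync with the store.
  callback(stateSelector(store.state, store.getters), undefined);
  return unwatch;
}

// Hypothetical stand-in store for demonstration only (not part of Vuex).
function createFakeStore(initialState) {
  const watchers = [];
  const store = {
    state: initialState,
    getters: {},
    watch(selector, cb) {
      const w = { selector, cb, last: selector(store.state, store.getters) };
      watchers.push(w);
      return () => {
        const i = watchers.indexOf(w);
        if (i !== -1) watchers.splice(i, 1);
      };
    },
    // Stand-in for dispatching an action that ends up mutating state.
    set(mutator) {
      mutator(store.state);
      for (const w of [...watchers]) {
        const next = w.selector(store.state, store.getters);
        if (next !== w.last) {
          const prev = w.last;
          w.last = next;
          w.cb(next, prev);
        }
      }
    },
  };
  return store;
}

const fakeStore = createFakeStore({ settings: { graphicsMode: 'low' } });
const applied = []; // stands in for calls to changeGraphics(mode)
bindToStore(fakeStore, (state) => state.settings.graphicsMode, (mode) => applied.push(mode));
fakeStore.set((state) => { state.settings.graphicsMode = 'ultra'; });
console.log(applied); // ['low', 'ultra']
```

Returning the unwatch function is optional, but it makes it easy to tear watchers down when a scene or level is disposed.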

#vuex#vue#vuejs#vue2#vue3#html5 game dev#babylon.js#three.js#pixi.js#game development#game design#javascript

Photo

froggy fall, prompt was fortune

Photo

i’ve fallen behind on #froggyfall but maybe i can catch up!

these are herb garden (lol... got lazy), broomstick, and cozy snack prompts

Text

Displacement Profiler for node.js

I get hired to do performance work on node / javascript applications and I’ve had a little trick up my sleeve for about a decade that may be of use to some of y’all. I’ll be writing about node but the concept applies to a great many things. One can even measure across languages...

I discovered this trick partially by accident when I made a node game loop and experimented with setImmediate, nextTick, and setTimeout (nextTick can freeze an application indefinitely btw). The most interesting loop is the one using setImmediate, which in my case just incremented a counter by one and queued up the loop to run itself again infinitely. As I watched the console scroll by counting the number of ticks, I came to a simple realization: these numbers that I was seeing were what it looked like for the event loop to count as fast as it possibly could, using 100% of the CPU resources allocated to it. Looking at the CPU usage confirmed this. setImmediate however *does not lock up the event loop*; all it does is schedule work for the next tick. In fact, I would argue that setImmediate and nextTick are transposed and misnamed in node or v8 or wherever it is that they actually live. I don’t think this is much of an argument; I’m pretty sure this is a known mistake but hopefully I’m not misremembering.

IN ANY CASE; setImmediate spins as fast as it can but doesn’t interfere with other events. So one can make a setImmediate loop and have it counting inside of an application that is already doing its job. What you’ll find is that the speed at which the setImmediate loop runs depends on how much other work is being done in that thread. And there we have it: a displacement profiler. You can change a piece of the application and run this profiler before and after and end up with a “volume” change in the CPU usage -- thus measuring something that was perhaps very elusive before.
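A minimal sketch of such a counting loop might look like the following. The function name, the returned fields, and the injectable `schedule` and `now` options are assumptions added here for illustration and testing; only the setImmediate counting loop itself comes from the description above.

```javascript
// Counts how many setImmediate turns the event loop completes while other work runs.
// `schedule` and `now` are injectable only to make the sketch easy to test.
function startDisplacementProfiler({ schedule = setImmediate, now = Date.now } = {}) {
  let ticks = 0;
  let running = true;
  const startedAt = now();

  const loop = () => {
    if (!running) return;
    ticks += 1;
    schedule(loop); // re-queue; other pending events get to run in between
  };
  schedule(loop);

  return {
    stop() {
      running = false;
      const elapsedMs = now() - startedAt;
      const ticksPerSecond = elapsedMs > 0 ? (ticks * 1000) / elapsedMs : 0;
      return { ticks, elapsedMs, ticksPerSecond };
    },
  };
}

// Usage sketch: run it alongside the real application for a fixed window,
// once before a change and once after, then compare ticksPerSecond.
//   const profiler = startDisplacementProfiler();
//   setTimeout(() => console.log(profiler.stop()), 5000);
```

A lower ticksPerSecond after a change suggests the application is displacing more of the thread's available time; a higher number suggests it freed some up.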

I call the profiler by this name per the famous story about Archimedes. He was given a task about figuring out if a crown was made of solid gold and he wasn’t sure how he was gonna figure it out. At some point he was getting into a bathtub that was full to the brim and when he got in water spilled out everywhere... he realized that the amount of water that spilled out of the tub was equal to the volume of the part of his body that had entered the tub, exclaimed, “Eureka!” and ran through the street naked to tell everyone what he had figured out. He had realized that he could measure the volume of the crown, weigh it, and figure out what material it was made out of -- or at least compare it to solid gold which would be enough to answer the initial question. This same notion of volume or displacement applies to CPU usage and thus provides an alternative method of measuring performance (an alternative to a traditional profiler that measures the timings of functions).

Let me say that the CONCEPT is more interesting than the specifics. The idea of measuring the “space” available on the cpu outside an application’s workload instead of measuring the application workload itself is a potentially very different way of seeing things. I don’t recommend starting with it, do the obvious stuff first. But when things remain obscure after looking at the obvious stuff, consider this approach. A lot of applications these days are asynchronous monstrosities without a clear pipeline of work being done... instead it’s all evented i/o! Using a displacement profiler combined with a stress test one can really get a feel for the capacity of an application. One is not going to get the same answers by counting the milliseconds spent in the hot path functions and multiplying that by the hypothetical number of users at launch time.

ALSO if one expands the displacement to multiple threads (saturate them, ideally!) one can even measure the hidden load of the application -- e.g. what is happening out of the application’s main thread but still nevertheless consumes cpu on the machine, such as filesystem i/o or the cpu component of network i/o. We might not think of node or javascript as traditionally multithreaded, and it isn’t, but there are c++ threads or whatever doing stuff during a lot of the i/o and not measuring that (and only measuring the javascript) is going to cause problems when trying to figure out how much load a heavy i/o program can take.

Another thing to note about this technique is that data collected one day should not be compared to data collected another day or even much later in the same day. We may be measuring the displacement of an application, but we’re also measuring the displacement of *everything* that uses CPU. And this is something that is pretty much constantly changing on most machines.

I hereby write this thing here and release it to the world so that no fool may attempt to patent it. The existence of this concept is ancient; I would hope no one would attempt to own it. And I’m not just talking about Archimedes. Anyone who opened up 10 copies of a video game because they wanted to see how some other program they made behaved in lag was essentially onto the same idea. GOOD LUCK. HAVE FUN

Photo

prompt: old book

Some of you may not know the history of the frog people... fortunate we are to have this old tome.

Photo

ima be doing #froggyfall this october.... so ima be drawing a frog-related prompt every day . GL HF. prompt for today was mushroom but i went all in on the frog instead lul

Photo

Prototype of an engine with a raycast mining laser that can dig through the terrain while the lighting / fog of war is recomputed. The lighting idea here was that the light from the sky should travel pretty far down but only a small distance horizontally when shining into a cave. The “background” tiles affect the light quite a bit, so if one were to punch one of those it would look like opening a window to the sky. It isn’t perfect but it has most of the feel that I was going for. Also the actual tile graphics are being rendered in fairly raw webgl (a PIXI mesh + shaders) and there’s a lot of logic for the world being divided into chunks so that as the meshes deform only a small piece of the whole is actually being updated on the gpu. I’ve written stuff like this a dozen times but this is the first time that this much of it is on the gpu and the performance is pretty great. It does make me wonder about making essentially the same thing in webgpu instead of webgl however...

Photo

was trying to program rigid collisions and torque. it kinda sorta works

Photo

Working on letting people use their own photos for https://jigsawpuzzles.io/

There isn’t much of a user interface yet, but the prototype is finally able to cut a new puzzle from whatever image, provision a server, and start a multiplayer game. It does this all without actually uploading the user’s image to the internet, muhahahaha.

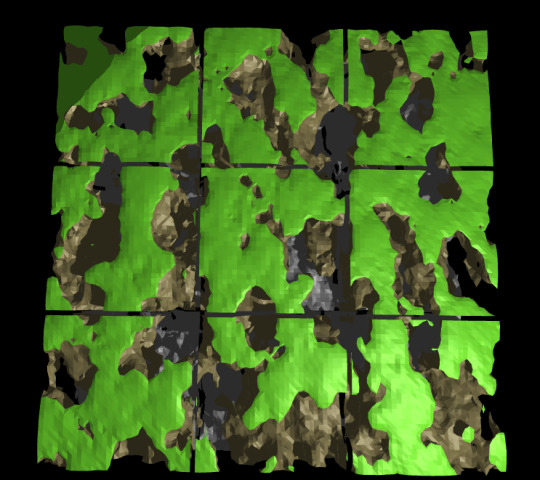

Photo

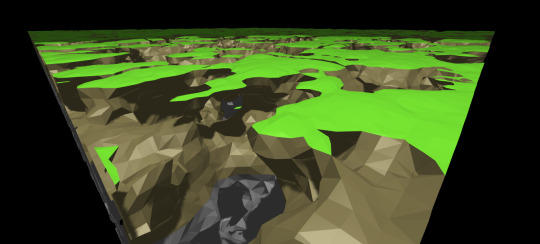

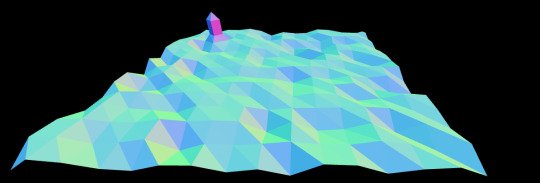

Some more voxel experiments. This is my first time getting one voxel to be a different color than another. Strictly speaking, I did not quite accomplish that. These were colored just by looking at the height of the vertices -- the top layer green, then dirt colored, and finally something rockier down below. It would be much more interesting to step away from this globby noise generator, and then to color the surfaces based on making volumetric chunks of different materials instead of just coloring by height. That’s what I hope to work on next. Also probably some deformation. This marching cubes foray was inspired by Sebastian Lague’s “Coding Adventure: Marching Cubes” https://www.youtube.com/watch?v=M3iI2l0ltbE . His project is in Unity and generates terrain (and noise) right in a compute shader.
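Coloring by vertex height can be sketched in a few lines. The height thresholds and RGB values below are made up for illustration (the post does not give the real ones), and the flat `[x, y, z, ...]` position layout is an assumption that matches how vertex data is commonly handed to webgl.

```javascript
// Hypothetical thresholds (world units) and RGB colors; tune to the terrain's scale.
// Layers are ordered from highest to lowest so the first match wins.
const LAYERS = [
  { minHeight: 8, color: [0.30, 0.65, 0.25] },         // green top layer
  { minHeight: 2, color: [0.45, 0.32, 0.20] },         // dirt
  { minHeight: -Infinity, color: [0.40, 0.40, 0.42] }, // rockier material below
];

function colorForHeight(y) {
  return LAYERS.find((layer) => y >= layer.minHeight).color;
}

// positions is a flat [x, y, z, x, y, z, ...] array.
// Returns a flat [r, g, b, r, g, b, ...] Float32Array with one color per vertex.
function colorsForVertices(positions) {
  const colors = new Float32Array(positions.length);
  for (let i = 0; i < positions.length; i += 3) {
    colors.set(colorForHeight(positions[i + 1]), i);
  }
  return colors;
}
```

Because the color depends only on `y`, every vertex at the same height gets the same color, which is why this approach produces bands rather than distinct materials.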

I took an essentially identical approach but due to the lack of a fancy engine I instead used the cpu to generate vertices, normals, and colors in ye old javascript before handing them to the gpu via webgl. Each chunk here evaluates 32x32x32 voxels and creates a mesh in ~30-40 ms (on a fairly fast computer). That’s too slow for realtime chunk generation on the main thread, though the workload is pretty perfect for using a webworker (it’s all math that produces a float array). After a chunk is generated it’s no problem to render; these particular chunks are so low poly that several of them were able to render at 60 fps on a chromebook with an integrated gpu. I’m pretty new to 3d, and I did not investigate whether Sebastian Lague’s shader could in fact be translated to glsl and run directly on the gpu even in a browser.

Lighting, as always in webgl, remains an ordeal. While it was possible to use a default three.js shadow mapping approach and produce some darkened caverns for the screenshots, ultimately the light looks nothing like what one would get in Unreal or Unity. Naïve/easy lighting is also not performant and instantly fails on my ultra-low-end gpu test case. I think one would have to actually calculate light manually per face via a simplified schema (not a shadow map) or do some shader magic of which I’m unaware (I know nothing of shaders, yet) to stand a chance of getting any sort of visually interesting torch-lit underground aesthetic while still producing something accessible to non-high-end gpus.

#devlog#game development#graphics programming#voxel#procedural content generation#terrain#javascript#webgl

Photo

learning about marching cubes

Photo

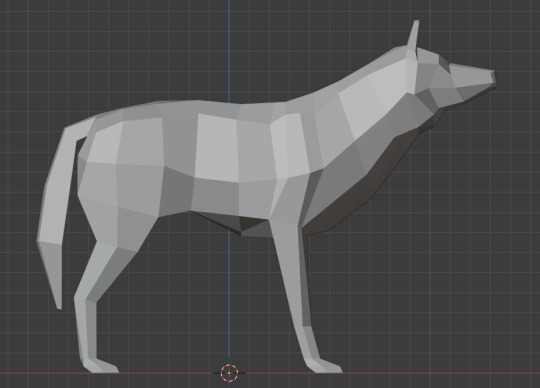

wip low poly wolf. will be pounding rigging and animation tutorials until this guy can sit, run, and howl

Photo

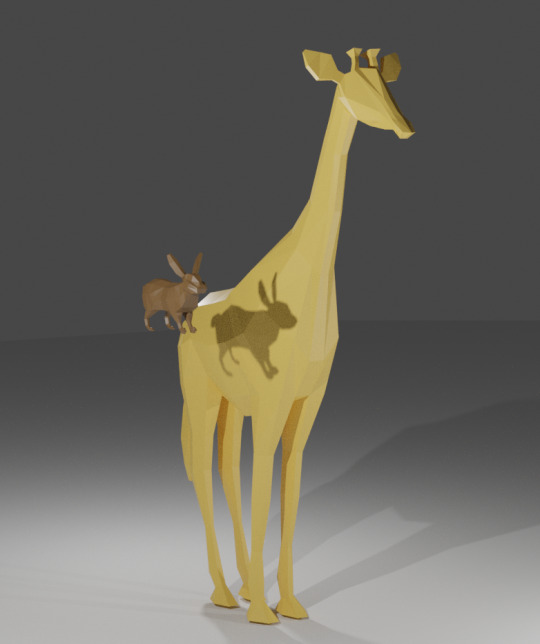

practicing low poly modeling, got a lot of the technique from this video: https://www.youtube.com/watch?v=6mT4XFJYq-4

Photo

practicing low poly trees

Photo

I don’t normally do 3d, but here’s my progression of trying to see if *a lot* of trees could be rendered in webgl. It started with a little cubey twiggy thing that had a little animation in blender (after consuming blender tutorials for hours). Then after reading a lot of three.js code/forum posts and using their nice discord I went from rendering the “tree” naively to using shaders and a large variety of performance techniques. Eventually around the time of making the multi-colored image one of the techniques yielded 120,000 of the little tree things at 144 hz. And then came time to put in some more complicated art, so I followed this tree modeling tutorial: https://www.youtube.com/watch?v=__cqtq6vm_0. That plus the shadows dropped the performance significantly, but there are still thousands of the trees in the scene. What’s next? I’m not sure... probably an effort to make the tree more basic and then push the performance of rendering a variety of different types of vegetation which is a pretty different challenge (performance-wise) than repeating one thing over and over.

Text

The Peeker’s Compromise: A Fair(er) Netcode Model

Many first person shooters are plagued by a netcode artifact known as the peeker’s advantage. I propose here a technique for correcting this bug, based around normalizing gameplay in such a way that human reflexes and skill decide the outcome of competition (as opposed to network latency or artifacts of varying netcode designs, discussed shortly).

When a player in a first person shooter is moving around the game world they exist in a position on their own game client that is slightly ahead of their position on the server. This is a solution/side-effect of clientside prediction which is ubiquitously used in first person shooters giving the player instantaneous movement and controls that feel like a single player game despite controlling a character that is moving around on a remote server.

If we were to visualize the difference between the clientside player position and the serverside player position it would look like two characters chasing each other. How far apart the two characters are depends (in descending order of usual importance) on how fast movement is in the game, the latency of the player, and the tickrate of the server. But how big are these differences in the actual games of the current era? Are the two states of characters practically overlapping? Or is one several meters behind the other? The answer -- which varies by game and by internet connection -- is that the desynchronization between these two positions is significant. Over amazing internet connections in games with slow moving characters the desynchronization is usually on the order of 1 player length. So imagine any fps game character (valorant, cs:go, apex, overwatch, fortnite, cod, etc) -- and then imagine them creeping or walking around slowly. In this scenario the desync between the two states is such that one could picture the character being followed by its clone, touching. If they move *really* slowly then they’ll be overlapping. However as the characters break into a run their clone will trail them by more -- maybe 2-8 player lengths depending how fast characters are in the game. If a player has a high latency the clone will be even farther behind in all scenarios except holding still.

Now when a player shoots their gun in all of the above games, the game engines will calculate the shot based on where the player perceives themselves. That means that as you play the game what you see is pretty much what you get. You don’t have to manually correct for lag while aiming in modern shooters -- just aim for the head right where you see it. However this introduces the peeker’s advantage. A defender can hold a corner with their crosshair primed to shoot anything that appears, but an attacker (the peeker) who comes around that corner is ahead of their server position and thus they get a bit of extra time to “peek” and shoot at the defender before they themselves are visible to the defender. Depending on the actual amount of lag and the game itself the defender perceives themselves as either having been shot insanely quickly right as the attacker appeared, or maybe if the lag is not as bad they perceive themselves as having gotten to trade shots with the attacker, but ultimately they lost. The attacker perceives nothing special -- they just walked around the corner and shot the defender b/c they were playing aggressively and have superior reflexes (or so they think).

How big is the actual peeker’s advantage? Well it varies by game, but an article put out by Riot Games about Valorant goes into detail about how much the peeker’s advantage affects gameplay, and how their engine attempts to minimize it. It’s a great read: https://technology.riotgames.com/news/peeking-valorants-netcode. But to summarize, using 128 tick servers (very fast), 35 ms of internet latency (fast), and monitor refresh rates of 60 hz (standard) they calculate an advantage of 100 milliseconds after extensive optimization.

That’s fast, but is it fast enough? Well back in 2003 I used to be a competitive Counterstrike 1.6 player at around the same time that I was obtaining a psychology degree with a particular interest in human perception (reaction time, how our eyes work, perception of subliminal images that are shown very quickly). I tested the reflexes of myself and all of my teammates. Competitive gaming didn’t have the same structure back then as it does now (everything has a ladder now -- back then it was private leagues), but by modern standards we were probably top 3% ladder players or something like that. Generally speaking there isn’t much of a speed difference in the whole pool of pro-gamers, at least when compared to new players. They are all pretty fast. Response times for watching a corner and clicking as you see a player (already perfectly lined up) fall into the range of 150-190 ms. Tasks that involve moving the crosshair to react quickly (as opposed to having had perfect placement already) slow that down another 50-150 ms. But generally speaking the competition between two similarly fast players with good crosshair placement comes down to very tiny units of time with even 10 ms producing an advantage that is measurable (both Riot and I agree about this).

This means that had the server allowed for a double K.O. (which these games do not) we would find that in fact both players were very good and would’ve killed the other just 20-50 milliseconds apart. Valorant and CS:GO don’t work like that however, and instead the game essentially deletes the bullets from one of the players and leaves the other alive. Unfortunately this difference in human reflexes amongst competitive gamers is entirely gobbled up by 100 ms of peeker’s advantage -- meaning that at high skill levels the peeker will very often win and the defender will very often lose. So while the Riot article celebrates the success of engineering that allowed Riot to reduce the peeker’s advantage as much as they did, if you read the fine print you’ll find that the peeker’s advantage remains huge.

I don’t mean to pick on Riot, far from it. They’ve clearly done an amazing job. The other games I mentioned earlier are presumably in the same approximate ballpark, though I can tell you from personal experience that some of them are a fair margin worse. Not all of the games I mentioned use a 128 tick server (only one does). They also have longer interpolation delays and other little engine details that slow things down further. Riot is also an insane company that literally owns/builds the internet just to reduce latency for its players -- so if we want to take away a general sense of how bad the peeker’s advantage is in most games we should assume it to be worse than the scenario described above regarding Valorant.

Now that I’ve discussed at length the peeker’s advantage, allow me to present a related netcode model that attempts to solve these problems: The Peeker’s Compromise. If we delay the time of death on the serverside by the timing difference between the attacker and the victim, then we can allow the defender an equal opportunity to shoot the attacker. The server can then determine the winner (and the remaining damage) based on the performance of the human (instead of using the ~100 ms of engine-related advantage and internet latency). So let’s use some numbers for a hypothetical situation. Let’s say our game has a 128 tick server, the players have 35 ms of latency, and 60 hz screens (like the Valorant example from earlier). Right as a player peeks another player they essentially get to shoot 100.6 ms sooner than their victim. As their shots arrive at the server the server might calculate that the victim has died -- but rather than killing the player it will keep them alive for 100.6 ms PLUS their own latency, which in this scenario puts the total at 135.6 ms. If during these 135.6 milliseconds the server receives shots where the defender hits the peeker, it will enter a section of code that attempts to settle this discrepancy. First off, it is entirely possible that after compensating each shot for the difference between the players we find out that one truly was faster than the other -- the game could use this information to decide which one lives and which one dies. It also might make sense to allow damage to legitimately trade kills and to build double K.O. situations into more first person shooters.
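To make the delayed-death idea concrete, here is a deliberately simplified sketch of the settling step. Everything about its shape is an assumption: the function names, the parameters, the `tieWindowMs` option for double K.O.s, and the choice to compensate the defender's shot by the peek advantage alone. A real engine would need to estimate these values per player and per shot.

```javascript
// How long the server keeps a "dead" victim alive: peek advantage plus the victim's latency.
function deathDelayMs(peekAdvantageMs, victimLatencyMs) {
  return peekAdvantageMs + victimLatencyMs;
}

// Settles a duel in which the peeker's lethal shot reached the server first.
// Times are server-clock milliseconds; defenderLethalShotAtMs is null if the
// defender never landed a lethal shot. All inputs are hypothetical estimates.
function settleDuel({
  peekerLethalShotAtMs,
  defenderLethalShotAtMs,
  peekAdvantageMs,
  victimLatencyMs,
  tieWindowMs = 0,
}) {
  const deadline = peekerLethalShotAtMs + deathDelayMs(peekAdvantageMs, victimLatencyMs);
  if (defenderLethalShotAtMs === null || defenderLethalShotAtMs > deadline) {
    return 'peeker-wins'; // the delayed death simply goes through
  }
  // Remove the peeker's head start so the comparison reflects human speed.
  const compensatedDefenderMs = defenderLethalShotAtMs - peekAdvantageMs;
  const diff = compensatedDefenderMs - peekerLethalShotAtMs;
  if (Math.abs(diff) <= tieWindowMs) return 'double-ko';
  return diff < 0 ? 'defender-wins' : 'peeker-wins';
}

// Numbers from the hypothetical scenario above: 100.6 ms advantage, 35 ms latency.
const base = { peekerLethalShotAtMs: 1000, peekAdvantageMs: 100.6, victimLatencyMs: 35 };
console.log(settleDuel({ ...base, defenderLethalShotAtMs: 1080 })); // defender-wins
console.log(settleDuel({ ...base, defenderLethalShotAtMs: 1120 })); // peeker-wins
console.log(settleDuel({ ...base, defenderLethalShotAtMs: 1200 })); // peeker-wins (past the deadline)
```

In the first call the defender's shot arrived 80 ms after the peeker's, but once the 100.6 ms head start is removed the defender was actually about 20 ms faster, so the defender wins.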

Let’s talk about the artifacts of this new and proposed system. In low-latency games with a high tick rate this change would be subtle -- we would just have no more peeker’s advantage. As latency increases all the way up to 200 ms we will have a new artifact. Instead of having a more severe peeker’s advantage we’ll end up with a scenario where it looks as if players are taking 1-3 extra bullets beyond what would normally kill them -- although if you stop shooting early they still end up dead a few milliseconds later. The Vandal in Valorant (similar to the AK in cs:go) fires 9.75 shots per second, which is one bullet per 103 ms. So in a best case scenario the player death is delayed by the time it takes to fire one extra shot at full auto, and in a worst case scenario we add 1 bullet per added ~100 ms of waiting done by the engine. It would also make sense to cut off certain shots from being counted from a laggy player (existing systems already do this in their own way).
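The bullet arithmetic above is easy to check. In this small sketch the function names are made up, and rounding up is an assumption that treats any partial shot interval as one more visible bullet, so the result is an upper-bound estimate.

```javascript
// Milliseconds between shots for a weapon firing at a constant rate.
function msPerShot(shotsPerSecond) {
  return 1000 / shotsPerSecond;
}

// Upper-bound estimate of how many extra bullets a victim appears to absorb
// while the server delays their death by delayMs.
function extraBulletsDuringDelay(delayMs, shotsPerSecond) {
  return Math.ceil(delayMs / msPerShot(shotsPerSecond));
}

console.log(Math.round(msPerShot(9.75)));          // 103
console.log(extraBulletsDuringDelay(100.6, 9.75)); // 1
console.log(extraBulletsDuringDelay(300, 9.75));   // 3
```

So at the Vandal's fire rate, a delay of roughly 100 ms shows up as about one extra bullet, and a delay around 300 ms as about three.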

Gameplay at lower skill levels wouldn’t really be affected one way or the other. It isn’t affected much by the peeker’s advantage either -- players have to know where to aim and thus be involved in a legitimate reflex test before we’re down to something so close that milliseconds of delay have an effect. If players are oblivious to each other, or place their crosshairs incorrectly as they come around a corner then the added slowdown of the human having to make a new visual-search-decision-plus-adjustment is too large for any of this to matter. But at higher skill levels there would be some actual changes to gameplay. The most significant change is that players would be able to hold corners -- and if they’re truly faster than the peeker they would win. In such a design it really would make much more sense to allow two players who fire at essentially the same time to kill each other, which if adopted would need to be addressed at a game design level. Also games that had an alternate method of very indirectly addressing peeker’s advantage, such as weapon instability during movement as a major element (arma, h1z1, pubg, tarkov, etc), would have more options, and may need to tune existing timings to get the same feeling back.

The underlying netcode behind the peeker’s advantage affects more than the classic peeking situation. It also affects two players picking up an item at the same time (it decides the winner here). And it also is present when you’re playing a game and you duck behind cover and take damage after you should already have been safe (the peeker and the victim are on slightly different timelines). Neither peeker’s advantage nor my proposed peeker’s compromise actually removes the lag of the underlying network connection or the game engine ticks; both simply *move* delays around such that the controls feel responsive and the latency is suffered elsewhere. There’s a certain physics to the realm of network programming. As I like to half-jokingly say: “Lag is neither created nor destroyed [by compensation techniques.]”

So the same problems would still exist, though the Peeker’s Compromise is philosophically different. Where the peeker’s advantage says let the fastest internet and the more aggressive player win, the Peeker’s Compromise says let the more skillful (in terms of accuracy and speed) human win. Outside of a double-K.O.-esque duel however, this is subjective. Who should pick up an item when both players tried to pick it up at the same time? Well the old method says the one with the better internet gets it, the new method suggests perhaps that we should compensate the timing to remove the internet/engine delay and award it to whoever was faster. But what about getting shot after reaching cover? This is really up to the game designer -- is it more impressive to tag someone barely as they run off? Or more impressive to slide behind a barrier right as you get shot? It’s a design decision.

It’s also possible via this proposed system to compromise. The engine design I propose has more data in its context with which to make decisions, courtesy of temporarily allowing ties to occur which get addressed after both players take an action. It could say well that was an amazing shot, AND it was an amazing dodge. After crunching the numbers the decision is to deal a hit but cut the damage in half as a compromise between the feats of the two players.

#netcode#network programming#peeker's advantage#Peeker's Compromise#multiplayer#first person shooter#Riot Games#Valorant#Valve#Counterstrike
