#entity queue module

Explore tagged Tumblr posts

Text

Sorting node data using the Entity Queue module in Drupal

Want a way to easily reorder nodes, users, taxonomy terms, media, or any entity type in Drupal? Meet Entity Queue - an extremely handy Drupal module that helps you sort items your way. Find out more!

#entity queue#drupal modules#drupal development#content management#sort node data#entity queue module

0 notes

Text

All Systems Red, Chapter 7

(Curious what I'm doing here? Read this post! For the link index and a primer on The Murderbot Diaries, read this one! Like what you see? Send me a Ko-Fi.)

In which there's a fun switcheroo.

The next day, only Mensah and Murderbot fly to the rendezvous. MB expects that by now, GrayCris know approximately the makeup of the PresAux team, and just hopes they don't know how messed up this SecUnit is.

Mensah opens a comm channel and announces arrival. A voice responds that a deal can be made. Mensah says she'll send her SecUnit out. The camp is set up on high platforms over the uneven trees. It's required by the company if you want your camp anywhere there's not open terrain, and it's why MB thinks its plan will work.

As they land and put the controls on standby, Mensah looks like she wants to say something, but is resisting the urge. She simply wishes MB good luck, and MB is unsure how to respond, so it stares awkwardly for a few seconds, then seals its helmet and goes.

It stops several meters from the figures awaiting, four SecUnits and three humans, leaving one SecUnit unaccounted for, as well as at least 27 more humans. It greets them formally, and feels a ping from their equipment, trying to get it to auto-install the update to immobilize. The meeting is so close to their hub because they probably needed equipment that can't be moved from here.

Fortunately, all MB feels is a light tickle.

One of the SecUnits approaches, and MB says it recommends against trying to override it, opening its gun ports. The SecUnits go alert, and the humans are startled. MB says it has an alternate solution.

One of the humans, as skeptical as if the hopper started talking, repeats that back questioningly. MB offers that they weren't the first to hack PresAux's HubSystem. One of the humans says it must be a trick, but MB continues that it doesn't have a governor module, then goes back to lying and says PresAux don't know, and it's open to a compromise that benefits it and GrayCris.

The leader, in blue, asks if PresAux are telling the truth, about knowing why this planet is important. MB says if they want to find out if a real rogue SecUnit has to answer their questions, they're going to get an education.(1) The humans discuss a bit, then ask what MB's compromise is. It asks for them to take it off planet, listed as destroyed inventory, in exchange for very important information. The humans discuss, then the blue leader says they agree. MB knows they intend to install an override in it regardless.

MB asks them to delete it from the PresAux HubSystem inventory first, and then it will give them the information they need. They protest that the HubSystem is still down. MB says they can initiate startup and queue the command; once it sees that the command is in the queue, it will cooperate.

Three minutes later, the comm channel opens, and MB has limited access to the GrayCris feed, to see that the command is in queue.

Since it's been watching the time, it knows the target window is reached, and tells GrayCris that PresAux have sent a group to set off GrayCris's distress beacon. Blue leader says that's impossible as the others look unsure, but MB says the team has an augmented human systems engineer who can do it. Blue asks Yellow if it's possible, and Yellow says, maybe. Blue says they have to go now, and tells MB to have its client come out. MB expected them to leave without asking for Mensah.

(Last night Gurathin had said this was a weak point, that this was where the plan would fall apart. It was irritating that he was right.)

MB can't open its comm without GrayCris knowing, so it tells them Mensah knows they mean to kill her, adding that she's a planetary admin and not stupid. Green asks what entity, and MB points to the name, Preservation.

Yellow says they can't kill her, and Green says they can hold her until the settlement agreement. Blue snaps that won't work, the investigation will be even more thorough if she's missing.

Blue suggests MB go get Mensah, and bring her here. Blue even sends one of the DeltFall units to help.

What I did next was predicated on the assumption that she had told the DeltFall SecUnit to kill me. If I was wrong, we were screwed, and Mensah and I would both die, and the plan to save the rest of the group would fail and PreservationAux would be back to where it started, except minus their leader, their SecUnit, and their little hopper.

MB attacks the DeltFall unit, and manages to overpower it.(2) It feels bad, though, because the DeltFall units did nothing wrong. Not that any SecUnit had a choice about involvement in this.

Mensah bursts out of the bush with the mining tool, and MB tells her she'll have to pretend to be its prisoner. It starts shedding its own identifiable pieces of armour(3) and putting on the DeltFall unit's equivalents.

MB fake-drags Mensah back to the GrayCris compound, Mensah struggling convincingly the whole way. Blue tells Mensah they know someone's trying to trigger their beacon, and if Mensah goes with them, no one will come to harm. Mensah hesitates, not wanting to look like she's giving in too quickly, but needing GrayCris gone. She agrees.

MB has to ride in the cargo container, which would feel nostalgic, if it were its own cargo container. Still, as a company product, MB can access the hopper's feed, if it stays quiet. The GrayCris SecSystem is still recording. MB figures they plan to try to wipe it before they're picked up, but the company's analysts will be on guard for it, and GrayCris might be caught even if PresAux doesn't survive this, which isn't as comforting as it sounds.(4)

MB accesses the live recording, and hears Mensah talking to them about the alien remnants in the unmapped areas. Their properties are weird enough to glitch out the mapping software. They prove that the planet was inhabited in the distant past, which would make it forbidden to mine except by archaeological surveys. But, if you hid them and got the mining rights, you could make a fortune selling the remnants off.

MB is cautiously optimistic about the plan, even with the hitches. Pin-Lee and Gurathin just have to hack the perimeter, avoid the one unaccounted-for SecUnit, and get close enough to the GrayCris hub to access HubSystem to set off their beacon.

The GC hopper lands near the beacon, and MB plays along with the other three SecUnits, making a standard formation around it. One of the GC humans reports no sign of anyone nearby, as the GC units go closer to the beacon to investigate.

MB takes a moment to explain, in an aside, that the beacons are disposable and thus cheap, and their launch can cause quite a lot of damage, which is why they're stored kilos(5) away from the habitat. MB and Mensah shouldn't be this close, and potentially end up toast in the launch process, but there's little choice otherwise.

MB moves toward Mensah, and Yellow notices and says something to Blue. The DeltFall SecUnit attacks, and MB fights with it as Mensah ducks behind the hopper. MB feels a thump in the plateau, as the beacon launch begins. MB runs, taking a hit to the thigh, and tackles Mensah off the edge of the plateau, curling itself to protect her. Then the beacon launches and knocks it offline.

It comes back momentarily, and can hear and see Mensah next to it. She's injured, and talking to Gurathin and Pin-Lee over comms about being careful in their haste. Then MB goes offline again and wakes to Gurathin and Pin-Lee standing over it.

Pin-Lee leaned over me and I said, “This unit is at minimal functionality and it is recommended that you discard it.” It’s an automatic reaction triggered by catastrophic malfunction. Also, I really didn’t want them to try to move me because it hurt bad enough the way it was. “Your contract allows—” “Shut up,” Mensah snapped. “You shut the fuck up. We’re not leaving you.”(6)

MB lingers just this side of a total systems failure, having flashes of consciousness, including Arada holding its hand, and being on the big hopper as the pick-up transport lifts it.

That was a relief. It meant they were all safe, and I let go.(7)

=====

(1) And by education it no doubt means whatever its arm weapons fire.

(2) Any two SecUnits should theoretically be evenly matched, MB all but said so, but MB has advantages like the ability to look up its own tactics education in its spare time, though it's unlikely to have done much of that, and think like a person without fear of punishment by a governor module, which it is most definitely taking advantage of.

(3) Remember when it said it would never leave its armour again? Alas, it was too good to be true.

(4) "They'll avenge us if we die" never is much comfort.

(5) So, I'm giving this some grace because it's a scifi setting, use whatever terms you want in whatever ways you want. But, I can almost always tell when an American writes in metric and doesn't work and think in metric every day, because of tells like this. In the rest of the English-speaking world, we don't use kilos for any kilo-units. Kilos is only kilograms, the weight measure. (Ok not weight but like basically weight don't nitpick me to death I do enough of it to myself.) Using kilos for kilometers is absolutely off limits unless you want to get a comedic double-take.

(6) Murderbot's not the only one who formed an attachment. It's done nothing but protect and help Mensah and her team, and she strikes me as absolutely the kind of person who forms attachments and friendships easily.

(7) A fake-out death like this hits a little less hard when you know this is the first in quite a long series. XD

#the murderbot diaries#murderbot diaries#all systems red#murderbot#secunit#ayda mensah#graycris#gurathin#pin lee#arada

6 notes


Text

Master efficient parallel programming to build powerful applications using Python

Key Features

Design and implement efficient parallel software
Master new programming techniques to address and solve complex programming problems
Explore the world of parallel programming with this book, which is a go-to resource for different kinds of parallel computing tasks in Python, using examples and topics covered in great depth

Book Description

This book will teach you parallel programming techniques using examples in Python and will help you explore the many ways in which you can write code that allows more than one process to happen at once. Starting with introducing you to the world of parallel computing, it moves on to cover the fundamentals in Python. This is followed by exploring the thread-based parallelism model using the Python threading module by synchronizing threads and using locks, mutexes, semaphores, queues, the GIL, and the thread pool.

Next you will be taught about process-based parallelism, where you will synchronize processes using message passing along with learning about the performance of MPI Python modules. You will then go on to learn the asynchronous parallel programming model using the Python asyncio module along with handling exceptions. Moving on, you will discover distributed computing with Python, and learn how to install a broker, use the Celery Python module, and create a worker.

You will also understand Pycsp, the Scoop framework, and disk modules in Python. Further on, you will learn GPU programming with Python using the PyCUDA module along with evaluating performance limitations.

What you will learn

Synchronize multiple threads and processes to manage parallel tasks
Implement message passing communication between processes to build parallel applications
Program your own GPU cards to address complex problems
Manage computing entities to execute distributed computational tasks
Write efficient programs by adopting the event-driven programming model
Explore the cloud technology with Django and Google App Engine
Apply parallel programming techniques that can lead to performance improvements

Who this book is for

Python Parallel Programming Cookbook is intended for software developers who are well versed with Python and want to use parallel programming techniques to write powerful and efficient code. This book will help you master the basics and the advanced aspects of parallel computing.

Publisher: Packt Pub Ltd (29 August 2015)
Language: English
Paperback: 286 pages
ISBN-10: 1785289586
ISBN-13: 978-1785289583
Item Weight: 500 g
Dimensions: 23.5 x 19.1 x 1.53 cm
Country of Origin: India
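As a rough illustration of the thread-based model the description mentions (the threading module, a lock, and a queue), here is a minimal sketch. It is not taken from the book; the function name run_workers and the squaring task are made up for the example.

```python
import queue
import threading


def run_workers(items, num_workers=4):
    """Square each item using worker threads and return the sorted results."""
    tasks = queue.Queue()           # thread-safe queue of pending work
    results = []                    # shared list, protected by the lock below
    results_lock = threading.Lock()

    for item in items:
        tasks.put(item)

    def worker():
        while True:
            try:
                item = tasks.get_nowait()
            except queue.Empty:
                return              # no work left, so the thread exits
            squared = item * item
            with results_lock:      # only one thread appends at a time
                results.append(squared)

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()               # wait for every worker to finish
    return sorted(results)


if __name__ == "__main__":
    print(run_workers(range(5)))
```

Because of the GIL, a CPU-bound task like this one gains little from threads in CPython; the sketch only shows the synchronization pattern.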

0 notes

Text

Your Minecraft Client Dream! Survey

Every time you report someone, you should always report them on the official website!

Watchdog does not tell players which module they were banned for, in an attempt to keep them unsure of the cause. Otherwise the hacked client creators may know exactly what hack is getting players banned and will fix it immediately. Watchdog is advancing slowly because its developers want time to try to overwhelm the hacked client creators, so they can patch a trickle of bypasses rather than a flood. The more players hack, the more players get banned, and the more data Watchdog receives. Applying more data makes it easier to match hacker data against actual legit player data.

At a consistent interval of 30 minutes, Watchdog entities will be released upon all the players present in the queue concurrently, and will circle the player under strictly circumscribed movements. This only happens very rarely. To keep this unfortunate event from ever taking place, please follow the recommendations below.

Recommendation: Safe users who fear getting falsely banned should stay calm and try, as hard as possible, not to hit the Watchdog entity, or to avoid vector contact with it. If you click at a very high pace, please feel free to employ this safety technique.

Recommendation: Safe users who fear getting banned for Blacklisted Modifications should only use approved modifications.

Note: Most of the time, bans that deal with Blacklisted Modifications are permanent on the first offense.

With the new appeal system, you can only appeal one time per punishment. It is easier for the players to make an appeal without having to find the format, not use it, etc. Especially with the Appeals Team getting spammed. Appeals sent in any other way e. We can only deal with appeals for the server in the appeals section on the Hypixel Forums.

This is a community Wiki, hence we have no power over bans or appeals. If you believe you were falsely banned, you can read about appealing here, and then appeal here.

We have trained Watchdog for many months on how to successfully spot hackers, and now it can do it automatically. We can't reveal the exact details of how this works, but it's super cool.

1 note


Text

What you'll learn

Implement message passing communication between processes to build parallel applications
Manage computing entities to execute distributed computational tasks
Master the similarities between thread and process management
Process synchronization and interprocess communication

Are you looking to become well versed in Parallel & Concurrent Programming Using Python? Then this is the perfect course for you!

The terms concurrency and parallelism are often used in relation to multithreaded programs. Parallel programming is not a walk in the park and sometimes confuses even some of the most experienced developers.

This comprehensive 2-in-1 course will take you smoothly through this difficult journey of concurrent programming in Python, including common thread programming techniques and approaches to parallel processing. Similarly, with parallel programming techniques you explore the ways in which you can write code that allows more than one process to happen at once.

After taking this course you will have gained an in-depth knowledge of using threads and processes with the help of real-world examples, along with hands-on experience in GPU programming with Python using the PyCUDA module, and you will be able to evaluate performance limitations.

Contents and Overview

This training program includes 2 complete courses, carefully chosen to give you the most comprehensive training possible.

The first course, Python Parallel Programming Solutions, will teach you parallel programming techniques using examples in Python and help you explore the many ways in which you can write code that allows more than one process to happen at once. Starting with introducing you to the world of parallel computing, we move on to cover the fundamentals in Python. This is followed by exploring the thread-based parallelism model using the Python threading module by synchronizing threads and using locks, mutexes, semaphores, queues, the GIL, and the thread pool. Next you will be taught about process-based parallelism, where you will synchronize processes using message passing and will learn about the performance of MPI Python modules. Moving on, you'll get to grips with the asynchronous parallel programming model using the Python asyncio module, and will see how to handle exceptions. You will discover distributed computing with Python, and learn how to install a broker, use the Celery Python module, and create a worker.

The second course, Concurrent Programming in Python, will help you skill up with techniques related to various aspects of concurrent programming in Python, including common thread programming techniques and approaches to parallel processing. Filled with examples, this course will show you all you need to know to start using concurrency in Python. You will learn about the principal approaches to concurrency that Python has to offer, including libraries and tools needed to exploit the performance of your processor. Learn the basic theory and history of parallelism and choose the best approach when it comes to parallel processing.

About the Authors

Giancarlo Zaccone, a physicist, has been involved in scientific computing projects among firms and research institutions. He currently works in an IT company that designs software systems with high technological content.

BignumWorks Software LLP is an India-based software consultancy that provides consultancy services in the area of software development and technical training. Our domain expertise includes web, mobile, cloud app development, data science projects, in-house software training services, and up-skilling services.

Who this course is for

This course is for software developers who are well versed in Python and want to use parallel programming techniques to write powerful and efficient code. It is also aimed at Python developers who want to learn how to write concurrent applications to speed up the execution of their programs and to provide interactivity for users.

0 notes

Text

The Ultimate Guide to Microsoft Dynamics 365 Certifications

Microsoft announced a 51% increase in Dynamics 365 revenue in the first quarter of 2019. As a result, IT firms that deploy, adapt, and maintain Microsoft Dynamics 365 solutions are seeing an increase in the need for experienced and certified professionals in this product line, including ERP and CRM systems. We've created a guide on Microsoft Dynamics 365 certifications to demonstrate what certifications are presently available, what it takes to become certified, and what benefits organizations and their workers gain from these certificates.

Microsoft Dynamics 365 Product Certifications

There are now 8 Microsoft certifications available for the Microsoft Dynamics 365 product range. They are designed both for those just starting their Microsoft Dynamics 365 careers and for functional consultants who work with these products, and they range in complexity accordingly.

Microsoft Dynamics 365 Certifications

The entry-level certification

The certification route begins with an optional certificate for individuals just starting in their consulting profession. Dynamics 365 Fundamentals is a certificate common to both CRM and ERP applications. It provides an overview of cloud computing principles, the Microsoft Dynamics 365 family of apps, and their licensing, deployment, and release options. It also covers the Microsoft Power Platform, which allows for building custom apps, automating workflows, and designing analytic reports.

Advanced-level certificates

Because the Dynamics 365 Fundamentals certification is not required to earn higher-level certificates, people already familiar with Microsoft Dynamics 365 technology can concentrate on earning the more in-depth Associate-level certifications. These require two years of prior work experience with Microsoft Dynamics 365 solutions and are divided into CRM and ERP applications.

A tier of Microsoft Dynamics CRM certifications includes:

Microsoft Certified: Dynamics 365 Sales Functional Consultant Associate

The certification validates the abilities required to create sales applications, reporting and dashboards, email, Word, and Excel templates, automate sales workflows, and manage sales entities such as leads, prospects, quotations, goods, and sales orders.

Microsoft Certified: Dynamics 365 Marketing Functional Consultant Associate

The certification validates the abilities required for setting up marketing applications, reporting, and dashboards, automating marketing workflows, and managing leads, marketing emails, customer journeys, events, and webinars.

Microsoft Certified: Dynamics 365 for Customer Service Functional Consultant Associate

The certification validates the abilities required to create customer service management settings, reports, and dashboards, automate customer service workflows, maintain cases, case queues, and the knowledge base for agents, and manage entitlements and SLAs.

Microsoft Certified: Dynamics 365 Field Service Functional Consultant Associate

The certification validates the abilities required to deploy field service apps (including mobile apps), track work orders, issues, inventories, and purchase returns.

A tier of Microsoft Dynamics ERP certifications includes:

Microsoft Certified: Dynamics 365 Finance and Operations, Financials Functional Consultant Associate

The certification validates the abilities required for setting up and configuring financial modules, cost and allocation procedures, and taxes, among other things.

Microsoft Certified: Dynamics 365 Finance and Operations, Manufacturing Functional Consultant Associate

The certification validates the abilities required for establishing and testing the production control module, managing production and lean orders, production scheduling, and subcontracting.

Microsoft Certified: Dynamics 365 for Finance and Operations, Supply Chain Management Functional Consultant Associate

The certification validates the abilities required for controlling product and inventory pricing, processing inventory management operations, managing supply chain procedures, and so on.

Requirements for becoming a Microsoft Dynamics 365 specialist

Exam preparations

If a firm is a Microsoft partner, its employees can study for the test by using self-learning resources such as the Microsoft Dynamics Learning Portal. Otherwise, a candidate might use Microsoft Learn, a free online training resource.

A firm that wants to hire qualified Microsoft Dynamics 365 consultants might organize an instructor-led training course for its staff. The course might be provided by a Microsoft learning partner or an independent third-party training provider.

How long are Microsoft Dynamics 365 certificates valid?

Microsoft Dynamics 365 certificates have no stated expiration date. Specific technologies and products, on the other hand, may become obsolete. If they do, Microsoft will retire the certification (as it did with the Microsoft Dynamics GP, NAV, and AX certifications) and replace it with an up-to-date equivalent that can be earned as verification of the most relevant Microsoft Dynamics 365 capabilities.

Is it worth it to go through the trouble of being certified?

The primary benefit of having certified Microsoft Dynamics 365 experts on board for IT service firms is increased client trust. This is also one of the requirements for earning Silver or Gold Microsoft Partner certification. In turn, holding any of these statuses entitles you to preferential placement in Microsoft's list of recommended IT service providers, the ability to interact with more clients through referrals, and other perks.

The most apparent benefit of acquiring Microsoft Dynamics 365 certifications for workers is obtaining valuable evidence of competence, new career prospects, and a competitive edge on the job market.

0 notes

Text

Manage AWS ElastiCache for Redis access with Role-Based Access Control, AWS Secrets Manager, and IAM

Amazon ElastiCache for Redis is an AWS managed, Redis-compliant service that provides a high-performance, scalable, and distributed key-value data store that you can use as a database, cache, message broker, or queue. Redis is a popular choice for caching, session management, gaming, leaderboards, real-time analytics, geospatial, ride-hailing, chat and messaging, media streaming, and pub/sub apps.

You can authenticate in ElastiCache for Redis in one of two ways: via an authentication token or with a username and password via Role-Based Access Control (RBAC) for ElastiCache for Redis 6 and later. Although authentication with a token allows administrators to restrict reads and writes to ElastiCache replication groups via a password, RBAC enhances this by introducing the concept of ElastiCache users and groups. Additionally, with RBAC in Amazon ElastiCache for Redis 6, administrators can specify access strings for each ElastiCache user, further defining which commands and keys they can access.

When configured for RBAC, ElastiCache for Redis replication groups authenticate ElastiCache RBAC users based on the credentials provided when connections are established. Authorization to Redis commands and keys is defined by the access strings (in Redis ACL syntax) for each ElastiCache RBAC user. ElastiCache RBAC users and ACLs, however, aren't linked to AWS Identity and Access Management (IAM) roles, groups, or users. The dissociation between IAM and Redis RBAC means that there is no out-of-the-box way to grant IAM entities (roles, users, or groups) read and write access to Redis.

In this post, we present a solution that allows you to associate IAM entities with ElastiCache RBAC users and ACLs. The overall solution demonstrates how ElastiCache RBAC users can effectively be associated with IAM through the use of AWS Secrets Manager as a proxy for granting access to ElastiCache RBAC user credentials.
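To make the idea of an access string more concrete, the following is a deliberately simplified sketch of how such a string restricts commands and keys. It is not how Redis or ElastiCache evaluates ACLs: it only understands the tokens on, off, +command, +@all, and ~pattern, ignores everything else (removals, other categories), and uses Python's fnmatchcase as an approximation of Redis glob matching. The function names parse_access_string and is_allowed are hypothetical.

```python
from fnmatch import fnmatchcase


def parse_access_string(access_string):
    """Parse a small subset of Redis ACL syntax into a rules dictionary."""
    rules = {
        "enabled": False,       # "on" / "off"
        "all_commands": False,  # "+@all"
        "commands": set(),      # "+get", "+set", ...
        "key_patterns": [],     # "~keys*", "~*", ...
    }
    for token in access_string.split():
        if token == "on":
            rules["enabled"] = True
        elif token == "off":
            rules["enabled"] = False
        elif token == "+@all":
            rules["all_commands"] = True
        elif token.startswith("+") and not token.startswith("+@"):
            rules["commands"].add(token[1:].lower())
        elif token.startswith("~"):
            rules["key_patterns"].append(token[1:])
        # Assumption: any other token is ignored in this sketch.
    return rules


def is_allowed(rules, command, key):
    """Return True if an enabled user may run `command` against `key`."""
    if not rules["enabled"]:
        return False
    command_ok = rules["all_commands"] or command.lower() in rules["commands"]
    key_ok = any(fnmatchcase(key, pattern) for pattern in rules["key_patterns"])
    return command_ok and key_ok


if __name__ == "__main__":
    reader = parse_access_string("on +get ~keys*")
    print(is_allowed(reader, "GET", "keys:1"))  # allowed command and key
    print(is_allowed(reader, "SET", "keys:1"))  # command not granted
```

Under these assumptions, a user whose access string starts with off is rejected regardless of the commands and key patterns that follow.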
Solution overview

To demonstrate this solution, we implement the following high-level steps:

Define a set of ElastiCache RBAC users, each with credentials and ACL access strings. This defines the commands and keys that a user has access to.
Grant IAM entities access to ElastiCache RBAC user credentials stored in Secrets Manager through secret policies and IAM policies.
Configure users, applications, and services with roles or users that can access ElastiCache RBAC user credentials from Secrets Manager so they can connect to ElastiCache Redis by assuming an ElastiCache RBAC user. This also defines which commands and keys they have access to.

Store Redis RBAC passwords in Secrets Manager

You can create ElastiCache RBAC users via the AWS Command Line Interface (AWS CLI), AWS API, or AWS CloudFormation. When doing so, they're specified with a plaintext password and a username. These credentials must then be shared with the actors who access the Redis replication group via ElastiCache RBAC users (human users or applications). The solution we present uses Secrets Manager to generate a password that is used when the ElastiCache RBAC user is created, meaning that no plaintext passwords are exposed and the credentials must be retrieved through Secrets Manager.

Manage access to ElastiCache RBAC user passwords in Secrets Manager with IAM

You can restrict access to the credentials stored in Secrets Manager to specific IAM entities by defining a secret resource policy in addition to IAM policies. IAM entities can then retrieve the credentials by making the appropriate AWS API or AWS CLI call.
See the following code:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::1234567890123:role/producer"
          },
          "Action": [
            "secretsmanager:DescribeSecret",
            "secretsmanager:GetSecretValue"
          ],
          "Resource": "arn:aws:secretsmanager:us-west-2:1234567890123:secret:producerRBACsecret"
        }
      ]
    }

Manage access to ElastiCache for Redis with ElastiCache RBAC, Secrets Manager, and IAM

In essence, we're creating a mapping between IAM roles and ElastiCache RBAC users by defining which IAM roles, groups, and users can retrieve credentials from Secrets Manager. The following diagram demonstrates the flow of the solution.

First, an actor with an IAM role that has permissions to the secret (named Producer Credentials) reads it from Secrets Manager (Steps 1 and 2). Next, the actor establishes a connection with the credentials to an ElastiCache replication group (3). After the user is authenticated (4), they can perform commands and access keys (5); the commands and keys that can be accessed are defined by the ElastiCache RBAC user's access string.

Implementation in AWS Cloud Development Kit

We present the solution to you in the AWS Cloud Development Kit (AWS CDK), which is a software development framework that defines infrastructure through object-oriented programming languages; in our case, TypeScript. You can clone the code from the GitHub repo.
The following is deployed:

One VPC with isolated subnets and one Secrets Manager VPC endpoint
One security group with an ingress rule that allows all traffic in via port 6379
Three ElastiCache RBAC users: default, consumer, producer
Three secrets: default, producer, consumer
One ElastiCache RBAC user group
One ElastiCache subnet group
One ElastiCache replication group
One AWS Key Management Service (AWS KMS) customer master key (CMK) to encrypt the three secrets
One KMS CMK to encrypt the ElastiCache replication group
Three IAM roles: consumer, producer, outsider
One AWS Lambda layer that contains the redis-py Python module
Three Lambda functions: producerFn, consumerFn, outsiderFn

The following diagram illustrates this architecture.

A VPC is created to host the ElastiCache replication group and the Lambda functions. The code snippet defines the VPC with an isolated subnet, which in AWS CDK terms is a private subnet with no routing to the internet. For resources in the isolated subnet to access Secrets Manager, a Secrets Manager VPC interface endpoint is added. See the following code:

    const vpc = new ec2.Vpc(this, "Vpc", {
      subnetConfiguration: [
        {
          cidrMask: 24,
          name: 'Isolated',
          subnetType: ec2.SubnetType.ISOLATED,
        }
      ]
    });

    const secretsManagerEndpoint = vpc.addInterfaceEndpoint('SecretsManagerEndpoint', {
      service: ec2.InterfaceVpcEndpointAwsService.SECRETS_MANAGER,
      subnets: {
        subnetType: ec2.SubnetType.ISOLATED
      }
    });

    secretsManagerEndpoint.connections.allowDefaultPortFromAnyIpv4();

To modularize the design of the solution, a RedisRbacUser class is also created. This class is composed of two AWS CDK resources: a Secrets Manager secret and an ElastiCache CfnUser. These resources are explicitly grouped together because the secret stores the CfnUser password, and as we show later, read and decrypt permissions to the secret are granted to an IAM user. See the following code:

    export class RedisRbacUser extends cdk.Construct {
      ...
      constructor(scope: cdk.Construct, id: string, props: RedisRbacUserProps) {
        super(scope, id);
        ...
        // The secret holds the username and a generated password.
        this.rbacUserSecret = new secretsmanager.Secret(this, 'secret', {
          generateSecretString: {
            secretStringTemplate: JSON.stringify({ username: props.redisUserName }),
            generateStringKey: 'password',
            excludeCharacters: '@%*()_+=`~{}|[]:";\'?,./'
          },
        });

        // The RBAC user takes its password from the secret above.
        const user = new elasticache.CfnUser(this, 'redisuser', {
          engine: 'redis',
          userName: props.redisUserName,
          accessString: props.accessString ? props.accessString : "off +get ~keys*",
          userId: props.redisUserId,
          passwords: [this.rbacUserSecret.secretValueFromJson('password').toString()]
        })
        ...
      }
    }

An IAM role is granted the ability to read the RedisRbacUser's secret. This association means that the IAM role can decrypt the credentials and use them to establish a connection with Redis as the producerRbacUser:

    const producerRole = new iam.Role(this, producerName+'Role', {
      ...
    });

    producerRbacUser.grantSecretRead(producerRole)

The function grantSecretRead in the RedisRbacUser class modifies the role that is passed into it to allow it to perform the actions secretsmanager:GetSecretValue and secretsmanager:DescribeSecret. The same function also modifies the secret by adding a resource policy that allows the same actions and adds the role to the principal list. This prevents unlisted principals from attempting to access the secret after the stack is deployed.
See the following code:

    public grantSecretRead(principal: iam.IPrincipal) {
      if (this.secretResourcePolicyStatement == null) {
        // First grant: create the resource policy statement and attach it to the secret.
        this.secretResourcePolicyStatement = new iam.PolicyStatement({
          effect: iam.Effect.ALLOW,
          actions: ['secretsmanager:DescribeSecret', 'secretsmanager:GetSecretValue'],
          resources: [this.rbacUserSecret.secretArn],
          principals: [principal]
        })
        this.rbacUserSecret.addToResourcePolicy(this.secretResourcePolicyStatement)
      } else {
        // Later grants: add the principal to the existing statement.
        this.secretResourcePolicyStatement.addPrincipals(principal)
      }
      // Also allow the principal itself to read the secret.
      this.rbacUserSecret.grantRead(principal)
    }

A Lambda function uses the IAM role created previously to decrypt the credentials stored in the secret and access the ElastiCache for Redis replication group. The ElastiCache primary endpoint address and port, as well as the secret ARN, are provided via environment variables. See the following code:

    const producerLambda = new lambda.Function(this, producerName+'Fn', {
      ...
      role: producerRole,
      ...
      environment: {
        redis_endpoint: ecClusterReplicationGroup.attrPrimaryEndPointAddress,
        redis_port: ecClusterReplicationGroup.attrPrimaryEndPointPort,
        secret_arn: producerRbacUser.getSecret().secretArn,
      }
    });

Deploy the solution

The infrastructure for this solution is implemented in AWS CDK in TypeScript and can be cloned from the GitHub repository. For instructions on setting up your environment for AWS CDK, see Prerequisites.

To deploy the solution, first install the node dependencies by navigating to the root of the project and running the following command in the terminal:

    $ npm install

Next, build the Lambda .zip files that are used in the Lambda functions. To do so, enter the following command in your terminal:

    $ npm run-script zip

To deploy the solution to your account, run the following command from the root of the project:

    $ cdk deploy

The command attempts to deploy the solution in the default AWS profile defined in either your ~/.aws/config file or your ~/.aws/credentials file.
You can also choose a different profile by appending --profile profile_name to the command.

Test the solution

Three Lambda functions are deployed as a part of the stack:

Producer – Decrypts the producer credentials from Secrets Manager and establishes a connection to Redis with these credentials. After it’s authenticated as the producer RBAC user, the function attempts to set a key (time) with a string representation of the current time. If the function attempts to perform any other commands, it fails because the producer RBAC user only allows it to perform SET operations.

Consumer – Decrypts the consumer credentials from Secrets Manager and establishes a connection to Redis with these credentials. After it’s authenticated as the consumer RBAC user, the function attempts to get the value of the time key that was set by the producer. The function fails if it attempts to perform other Redis commands because the access string for the consumer RBAC user only allows it to perform GET operations.

Outsider – Attempts to decrypt the producer credentials from Secrets Manager and fails because the function’s role doesn’t have permission to decrypt the producer credentials.

Create a test trigger for each function

To create a test event for each function, complete the following steps:

On the Lambda console, navigate to the function and choose Test.

Select Create new test event.

For Event template, choose test.

Use the default JSON object in the body; the test functions don’t read the event contents.

Trigger each test by choosing Test.

Producer function writes to Redis

The producer function demonstrates how you can use an IAM role attached to a Lambda function to retrieve an ElastiCache RBAC user’s credentials from Secrets Manager, and then use these credentials to establish a connection to Redis and perform a write operation. The producer function writes a key time with a value of the current time.
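The restrictions described above (a producer limited to SET, a consumer limited to GET) come from how Redis applies the rules in an access string from left to right. The following TypeScript sketch models only a small subset of that behavior, as an illustration: `on`/`off`, `~` key patterns with an optional trailing `*`, `+@all`/`-@all`, and `+command`/`-command`. The function names are hypothetical, other rule types are ignored, and this is not the Redis implementation.

```typescript
// Simplified model of a Redis ACL user. ASSUMPTION: only a subset of rules is handled.
interface AclUser {
  enabled: boolean;
  keyPatterns: string[];
  allowAllCommands: boolean;
  commandOverrides: Record<string, boolean>;
}

// Hypothetical parser: applies rules left to right, as Redis does.
function parseAccessString(accessString: string): AclUser {
  const user: AclUser = {
    enabled: false,
    keyPatterns: [],
    allowAllCommands: false,
    commandOverrides: {},
  };
  const tokens = accessString.split(/\s+/).filter(function (t) { return t.length > 0; });
  for (let i = 0; i < tokens.length; i++) {
    const token = tokens[i];
    const lower = token.toLowerCase();
    if (lower === "on") {
      user.enabled = true;
    } else if (lower === "off") {
      user.enabled = false;
    } else if (token.charAt(0) === "~") {
      user.keyPatterns.push(token.slice(1));
    } else if (lower === "+@all" || lower === "-@all") {
      // Resetting the baseline discards earlier per-command rules.
      user.allowAllCommands = lower.charAt(0) === "+";
      user.commandOverrides = {};
    } else if (token.charAt(0) === "+" || token.charAt(0) === "-") {
      // Per-command rule; other @categories are stored but never matched here.
      user.commandOverrides[lower.slice(1)] = token.charAt(0) === "+";
    }
  }
  return user;
}

// Only exact keys and prefix patterns ending in "*" are modeled.
function keyMatches(pattern: string, key: string): boolean {
  if (pattern.charAt(pattern.length - 1) === "*") {
    const prefix = pattern.slice(0, -1);
    return key.slice(0, prefix.length) === prefix;
  }
  return pattern === key;
}

// True when the user is enabled, the command is allowed, and some key pattern matches.
function canRun(user: AclUser, command: string, key: string): boolean {
  if (!user.enabled) {
    return false;
  }
  const name = command.toLowerCase();
  const hasOverride = Object.prototype.hasOwnProperty.call(user.commandOverrides, name);
  const commandAllowed = hasOverride ? user.commandOverrides[name] : user.allowAllCommands;
  const keyAllowed = user.keyPatterns.some(function (p) { return keyMatches(p, key); });
  return commandAllowed && keyAllowed;
}

// Access strings taken from the post.
const producerUser = parseAccessString("on ~* -@all +SET");
const consumerUser = parseAccessString("on ~* -@all +GET");
```

Under this model, `canRun(producerUser, "SET", "time")` is true and `canRun(producerUser, "GET", "time")` is false, while the consumer user gets the opposite results.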
The producer function can write to Redis because its IAM role allows it to get and decrypt the Producer credentials in Secrets Manager, and the Producer ElastiCache RBAC user’s access string was defined to allow SET commands to be performed. The producer function can’t perform GET commands because the same access string doesn’t allow GET commands to be performed. See the following code:

    const producerRbacUser = new RedisRbacUser(this, producerName+'RBAC', {
      ...
      accessString: 'on ~* -@all +SET'
    });

Consumer function can read but can’t write to Redis

This function demonstrates the use case in which you allow a specific IAM role to access ElastiCache RBAC credentials from Secrets Manager and establish a connection with Redis, but the actions it can perform are restricted by an access string setting.

The consumer function attempts to write a key time with a value of the current time, and subsequently attempts to read back the key time. The consumer function can’t write to Redis, but it can read from it. Even though the function has an IAM role that permits it to get and decrypt the Consumer credentials in Secrets Manager, the Consumer ElastiCache RBAC user was created with a Redis ACL access string value that only allows the GET command. See the following code:

    const consumerRbacUser = new RedisRbacUser(this, consumerName+'RBAC', {
      ...
      accessString: 'on ~* -@all +GET'
    });

Outsider function can’t read or write to Redis

The outsider function demonstrates the use case in which you specify an IAM role that can’t access Redis because it can’t decrypt credentials stored in Secrets Manager.

The outsider Lambda function attempts to decrypt the Producer credentials from Secrets Manager, then read and write to the Redis cluster. An exception is raised that indicates that it’s not permitted to access the Producer secret.
The IAM role attached to the outsider function doesn’t have the permissions to decrypt the Producer secret, and the secret it’s trying to decrypt has a resource policy that doesn’t list the role in the principals list attribute.

Cost of running the solution

The solution to associate an IAM entity with an ElastiCache RBAC user requires deploying a sample ElastiCache cluster, storing secrets in Secrets Manager, and defining an ElastiCache RBAC user and user group. To run this solution in us-east-1, you can expect the following costs. Please note that costs vary by Region.

Secrets Manager

$0.40 per secret per month, prorated for secrets stored less than a month

$0.05 per 10,000 API calls

Assuming each of the three secrets is called 10 times for testing purposes in one day, the total cost is (3 * $0.40 / 30) + (3 * 10 / 10,000) * $0.05 = $0.04015

ElastiCache

cache.m6g.large node: $0.077 per hour

Assuming that the node is used for one day, the total cost is 24 * $0.077 = $1.848

Lambda function

$0.0000000021 per millisecond of runtime

Assuming that each function is called 10 times for testing purposes in one day and that the average runtime is 400 milliseconds, the total cost is 3 * 10 * 400 * $0.0000000021 = $0.0000252

AWS KMS

$1 per month, per key, prorated to the hour

$0.03 per 10,000 API calls

Assuming that the solution is torn down after 24 hours, the total cost for two keys is 2 * $1 / 31 = $0.06

The total cost of the solution, for 24 hours, assuming that each of the three Lambda functions is called 10 times, is $1.95.

Clean up the resources

To delete all resources from your account, including the VPC, call the following command from the project root folder:

    $ cdk destroy

As in the cdk deploy command, the destroy command attempts to run on the default profile defined in ~/.aws/config or ~/.aws/credentials. You can specify another profile by providing --profile as a command line option.
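The 24-hour estimate in the cost section can be reproduced with a short TypeScript calculation. This is only a sketch: the unit prices are the ones quoted in the post, the constant and function names are hypothetical, the Lambda term counts all 30 invocations (3 functions × 10 calls × 400 ms), and, like the post, it leaves out KMS API call charges.

```typescript
// Unit prices as quoted in the post (us-east-1 at the time of writing).
const SECRET_PRICE_PER_MONTH = 0.40;
const SECRETS_API_PRICE_PER_10K_CALLS = 0.05;
const CACHE_NODE_PRICE_PER_HOUR = 0.077;
const LAMBDA_PRICE_PER_MS = 0.0000000021;
const KMS_KEY_PRICE_PER_MONTH = 1;

// Usage assumptions for a one-day test run, also taken from the post.
const SECRET_COUNT = 3;
const CALLS_PER_SECRET = 10;
const FUNCTION_COUNT = 3;
const INVOCATIONS_PER_FUNCTION = 10;
const AVERAGE_RUNTIME_MS = 400;
const KMS_KEY_COUNT = 2;

interface DailyCost {
  secretsManager: number;
  elastiCache: number;
  lambda: number;
  kms: number;
  total: number;
}

// Hypothetical helper: sums the four line items for a 24-hour deployment.
function estimateDailyCost(): DailyCost {
  // Storage is prorated over a 30-day month; API calls are billed per 10,000.
  const secretsManager =
    (SECRET_COUNT * SECRET_PRICE_PER_MONTH) / 30 +
    ((SECRET_COUNT * CALLS_PER_SECRET) / 10000) * SECRETS_API_PRICE_PER_10K_CALLS;
  // One cache node running for 24 hours.
  const elastiCache = 24 * CACHE_NODE_PRICE_PER_HOUR;
  // Total billed milliseconds across all invocations of all functions.
  const lambda =
    FUNCTION_COUNT * INVOCATIONS_PER_FUNCTION * AVERAGE_RUNTIME_MS * LAMBDA_PRICE_PER_MS;
  // Two keys, prorated over a 31-day month as in the post.
  const kms = (KMS_KEY_COUNT * KMS_KEY_PRICE_PER_MONTH) / 31;
  return {
    secretsManager: secretsManager,
    elastiCache: elastiCache,
    lambda: lambda,
    kms: kms,
    total: secretsManager + elastiCache + lambda + kms,
  };
}
```

Calling `estimateDailyCost().total.toFixed(2)` gives `"1.95"`, which matches the total quoted in the post; the ElastiCache node accounts for nearly all of it.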
Conclusion Although fine-grained access is now possible with the inclusion of Redis Role-Based Access Control (RBAC) users, user groups, and access strings in Amazon ElastiCache for Redis 6.x, there is no out-of-the box ability to associate ElastiCache RBAC users with IAM entities (roles, users, and groups). This post presented a solution that restricted ElastiCache RBAC credentials (username and password) access by storing them in Secrets Manager and granting select IAM entities permissions to decrypt these credentials—effectively linking ElastiCache RBAC users with IAM roles by way of Secrets Manager as a proxy. Additional benefits presented in this solution include: ElastiCache RBAC passwords aren’t defined, stored, or shared in plaintext when ElastiCache RBAC users are created ElastiCache RBAC users and groups can be defined wholly in AWS CDK (and by extension AWS CloudFormation) and included as infrastructure as code You can trace Redis access to IAM users because ElastiCache RBAC credentials are stored and accessed through Secrets Manager and access to these credentials can be traced via AWS CloudTrail From this post, you learned how to authorize access to specific ElastiCache Redis keys and commands through access strings. For more information about how to configure access strings, see Authenticating users with Role-Based Access Control (RBAC). Beyond the scope of this post is also the topic of secret key rotation. It’s recommended to further secure your infrastructure by rotating ElastiCache RBAC user credentials stored in Secrets Manager on a periodic basis. This requires the creation of a custom Lambda function to rotate and set the new password. For more information, see Rotating AWS Secrets Manager Secrets for One User with a Single Password. The custom Lambda function makes API calls to modify the existing user password (in Boto through the modify_user method). About the authors Claudio Sidi is a DevOps Cloud Architect at AWS Professional Services. 
His role consists of helping customers to achieve their business outcomes through the use of AWS services and DevOps technologies. Outside of work, Claudio loves going around the Bay Area to play soccer pickup games, and he also enjoys watching sport games on TV. Jim Gallagher is an AWS ElastiCache Specialist Solutions Architect based in Austin, TX. He helps AWS customers across the world best leverage the power, simplicity, and beauty of Redis. Outside of work he enjoys exploring the Texas Hill Country with his wife and son. Mirus Lu is a DevOps Cloud Architect at AWS Professional Services, where he helps customers innovate with AWS through operational and architectural solutions. When he’s not coding in Python, Typescript or Java, Mirus enjoys playing the guitar, working on his car and playing practical jokes on unsuspecting friends and coworkers. https://aws.amazon.com/blogs/database/manage-aws-elasticache-for-redis-access-with-role-based-access-control-aws-secrets-manager-and-iam/

0 notes

Text

Drupal Architect

Role: Drupal Architect

Location: Chicago IL / Washington DC

Type: Contract

Job Description:

Must have skills:

· Experience in defining & realizing end-to-end Solution Architecture for large & complex systems

· 10+ years of experience in IT including at least 18 months in Architecture roles

· Experience in Architecture consulting engagements is required

· Exposure to emerging data technologies in the content management world

· Evident track record in Drupal community via Open source code contributions, presentations made in conferences etc.

· Strong communication skills and ability to independently and directly communicate with the customers.

· Experience conducting security and performance review audits and workshops

· Experience in providing leadership to teams in following Drupal Coding Standards, code review and the audit process, with attention to detail

· Deep expertise in Drupal 8/9 core/ Symfony concepts, Services, Dependency Injection, etc. (including Twig, Custom Entities, Views, Panels, Blocks/Custom blocks, Drupal Config Management/CMI, layout builder, migrate module, writing custom modules, external integrations)

· Proven ability to design and estimate new end-to-end Drupal systems including integrations with external systems (DAM, IAM/AD, CDN etc), and deep collaboration with infra-teams.

Expertise in key Drupal topics like:

· Cache API

· Caching systems and caching architecture

· Plugin Systems

· Migration

· Render API

· Theming

· Batches and Queues

· DAM

· Module Development:

· Writing new modules from scratch

· Working within already written custom code to enhance or change existing features.

· Troubleshooting bugs, applying security patches, etc.

· Knowledge of debugging tools like XDebug

· Strong knowledge of JavaScript, HTML, and CSS

· Strong fundamental knowledge of data structures and algorithms.

· Experience working in fast paced and mature practice of Agile/Scrum

· Credible practice of following rigorous quality practices such as reviews, code scans, etc.

· Experience working on enterprise-level SAAS solutions where security, stability, and performance are top priorities

· Experience setting up virtualized environment like vagrant or docker

Reference : Drupal Architect jobs from Latest listings added - JobsAggregation http://jobsaggregation.com/jobs/technology/drupal-architect_i10355

0 notes

Text

Best School Management Software for African Countries- Genius Edusoft!

In most African countries, primary education is required for all children until they reach a certain age. In many places, however, only about half of the students who attend primary school finish the entire course of study, and in some areas more than 90 percent of primary school students repeat at least one grade. The World Economic Forum assessed 140 countries, including 38 African countries, to rank the best education systems based on skill development. The report looks at the general skill level of the workforce and the quantity and quality of education in each country. Factors considered include digital literacy, interpersonal skills, and the ability to think critically and creatively.

Insights of Genius Education Management System:

We are a 16-year-old company and a leader in school ERP because we provide a user-friendly, customized school management system that fits the needs and demands of our clients. Our software is an AngularJS-based cloud application, that is, a real-time application as well as web-based software. We provide our service to every kind of entity, whether a school, institute, training centre, or college, irrespective of the size of the organization. We have a fantastic set of clients whose daily use of our system helps us understand its strengths and any loopholes.

Our software is designed with the tagline “Enhanced Education system with limited cost”. We assure each of our clients 100% safety and security of their data. Our software is for every person working with the entity: teachers, students, principals, admins, parents, and other employees, as they are the heart of any organization.

Why should African schools use ERP software?

With school management software, the growing demands of today’s education sector can be met more easily than with traditional education systems. Historically, educational institutions in African countries have struggled to satisfy their clients’ needs because of several barriers, and to overcome them they need a robust school management system. Genius Education software is exactly that: it helps institutions improve their competitiveness and business in the market while meeting their strategic objectives. Every organization, whether small or big, needs automation in its education system because traditional methods cannot satisfy all requirements. This is where Genius Edusoft comes into the picture. It helps African schools, colleges, and every other organization providing education to improve their internal processes, cut their operating costs, and improve their relationships with customers, which in turn improves the organization’s decision-making capacity.

Challenges faced by African countries in implementing ERP software

Implementing ERP software for a school, college, institute, or any educational training entity is not an easy task, because employees need a lot of hand-holding to get used to it and to update the required information every day. In addition, African countries, like other developing countries, face limitations such as low economic capacity, limited human skills, and limited infrastructure, so implementing an ERP in the African context can be difficult. Implementations also often fail when updates, maintenance, consultation, and training are lacking.

How Genius Edusoft helps African countries improve their education system by overcoming these challenges

As discussed, African countries face limitations in many areas. Genius Edusoft overcomes them through automation, which is built into the various modules of our ERP. For example, with our Attendance management module, if an RFID system is installed in the school, students can scan the QR code while entering the campus and their attendance is fetched into the system, sparing class teachers the daily hassle of taking attendance manually.

The long queues that wasted school management’s time when collecting student fees have been reduced by our Admission module, as parents can pay the fees directly through an online token via our payment gateway.

The circulars that a peon or a student used to carry around for events, picnics, sports days, or other news are now automated by our Academic module: admins or teachers can upload circulars for important announcements directly, and parents and students receive a notification on their mobile app.

Our Exam management module also lives up to the tagline “Enhanced Education System”: teachers can add manual exams and assign scores and ranks to students, and students can take online examinations created by teachers by using their login credentials.

The cost of getting ID cards designed can also be reduced with the ID card generator in our software: institutes just need to integrate their printer with our system to print ID cards for students as well as employees.

Genius Edusoft Verdict

This article should have given you a better understanding of how our real-time software helps African countries, including Ghana, Nigeria, Tanzania, Kenya, Madagascar, Rwanda, Namibia, Zimbabwe, Somalia, Tunisia, Malawi, and Botswana, to enhance their education systems efficiently and reduce their costs, with modules designed around the general as well as the special needs of every kind of training centre, such as schools, institutes, and colleges.

Genius Education Management Software

Address: 331-332, 3rd floor, Patel Avenue Nr.Gurudwara, S.G. Road, Thaltej, Ahmedabad, Gujarat, India.

Contact: 8320243119, 9328151561

Email: [email protected]

Website:

www.geniusedusoft.com

#schoolmanagementsystem#schoolmanagementsoftware#schoolsoftware#schoolmanagementapplication#schoolmanagementsystemsoftware#schoolmanagementERPsoftware#schoolmanagementsystemERP#schoolERP#schoolERPsoftware#schoolERPsoftwaredemo#schoolERPsystem#ERPschoolmanagementsoftware#cloudbasedschoolmanagementsystem#cloudschoolsoftware#cloudbasedschoolmanagementsoftware#cloudbasedschoolERP#stateboardschoolmanagementsystem#ERPeducationmanagementsoftware#educationmanagementsoftware#educationmanagementapplication#educationmanagementsystem

0 notes

Photo

New Post has been published on https://warmdevs.com/moving-towards-web3-0-using-blockchain-as-core-tech.html

Moving Towards web3.0 Using Blockchain as Core Tech

The invention of Bitcoin and blockchain technology sets the foundation for the next generation of web applications: applications that will run on a peer-to-peer network model alongside existing networking and routing protocols, where centralized servers become obsolete and data is controlled by the entity to whom it belongs, i.e., the user.

From Web 1.0 to Web 2.0

As we all know, Web 1.0 was the static web, and the majority of the information was static and flat. The major shift happened when user-generated content became mainstream. Projects such as WordPress, Facebook, Twitter, YouTube, and others are known as Web 2.0 sites, where we produce and consume a variety of content such as video, audio, and images.

The problem, however, was not the content; it was the architecture. The centralized nature of the web opens up tons of security threats, data gathering for malicious purposes, privacy intrusion, and extra cost as well.

The invention of Bitcoin and successful use of decentralized, peer to peer, secure network opens up the opportunity to take a step back and redesign the way our web works. The blockchain is becoming the backbone of the new Web, i.e., Web 3.0.

History of blockchain

Blockchain came into the mainstream after the Bitcoin boom of late 2017. Have a look at the graph below; Bitcoin was at its peak around $20,000.

But the technologies that power the blockchain network are not new. These concepts were researched and developed during the ’90s. Have a look at this timeline.

The concepts that power the blockchain, such as proof of work, peer-to-peer networks, public key cryptography, and consensus algorithms for distributed computing, were researched and developed by various universities and computer scientists during the ’90s.

These algorithms and concepts are mature and battle-tested by various organizations. Satoshi Nakamoto combined these technologies and ideas and built a decentralized, immutable, peer-to-peer database primarily used for financial purposes.

Initially, we all thought that blockchain was suitable only for cryptocurrencies and not for other applications. This thought was challenged when Vitalik Buterin developed Ethereum, a cryptocurrency with a new smart contract feature.

Smart contracts changed everything. The ability to code your own application and run on top of blockchain was the answer to critics who thought that blockchain is only for currencies.

“Cryptocurrency is a small subset of the blockchain, like the Email of the internet.”

Smart contracts opened up a wave of new applications. The viral game CryptoKitties showed that a blockchain can handle large applications such as games.

Smart contracts are written in the Solidity language and can be executed on the Ethereum network. The protocol and design of Ethereum inspired engineers to build an open source, blockchain-agnostic platform for building decentralized applications.

DApps protocols

As I have mentioned earlier, Ethereum smart contracts were among the first technologies that could be used to program decentralized applications. The issue was that smart contracts were designed with transactions and money in mind.

Developers need multiple tools to build a full-fledged web application, such as storage (database, files, index, etc.) and message queues or event queues to facilitate communication. Ethereum addresses these problems with the Ethereum Swarm and Ethereum Whisper projects.

As you can see in the diagram above, Ethereum smart contracts are used for writing contracts, and Swarm stores the files that can be associated with those contracts. To make decentralized apps communicate with each other, Whisper can be used. All of these can run inside a decentralized browser such as Dbrowser.

Swarm is a distributed storage platform for the Ethereum stack. The core objective of Swarm is to provide decentralized storage for Ethereum public records. Swarm is supposed to store the DApp code base and the data associated with it.

Swarm allows public participants to pool their storage and bandwidth resources to make the network robust and get paid in Ethereum incentives. Fair deal.

Ethereum Whisper, in a nutshell, is a protocol that lets DApps running on the Ethereum blockchain communicate with each other, similar to message queues or event queues. Whisper provides low-level APIs to support communication between DApps. You can learn more about it here.

However, do you sense the limitations here? The main issue is that this stack is entirely Ethereum-specific, i.e., all apps are written for and run on top of the Ethereum blockchain. This will increase the size of the chain significantly, and scalability will be an issue.

Other than scalability, adaptability will be an issue as well. We need a smooth shift from the centralized web to decentralized web. A shift where masses do not need to change everything for the sake of the decentralized web.

This is where a new protocol stack comes in: the IPFS (InterPlanetary File System) stack by Protocol Labs.

Protocol Labs is dedicated to building a decentralized web that runs in parallel to the TCP/IP stack. This will make the shift from the existing web to Web 3.0 very smooth, and the masses will not need to make a significant change to use Web 3.0.

Here is the stack.

Rings a bell? This stack looks pretty similar to TCP/IP protocol layers. Let’s learn about this in detail.

The IPFS stack is divided into three essential layers:

Networking layer.

Data definition and naming layer.

Data layer.

Let’s learn about each of them in detail.

Networking Layer

One of the core challenges in the decentralized web is the peer to peer network and designing the protocols which work in a peer to peer network in parallel to the centralized system.

The Libp2p project from Protocol Labs addresses this challenge. Libp2p provides a modular stack that you can use to build a peer-to-peer network in conjunction with existing protocols such as WebRTC or any new transport layer protocol. Hence, Libp2p is transport agnostic.

Features of libp2p:

Libp2p is a modular networking stack. You can use all of it or use part of the stack to build your application.

Libp2p provides transport and peer to peer protocols to build large, robust and scalable network application.

Libp2p is transport protocol agnostic. It can work with TCP, UDP, WebRTC, and WebSockets.

Libp2p offers a number of modules such as transport interface, discovery, distributed hash lookup, peer discovery, and routing.

Libp2p offers built-in encryption to prevent eavesdropping.

Libp2p offers built-in roaming features so that your service can switch networks without any intervention and loss of packets.

Libp2p is the solution upon which the networking layer of peer to peer can be built.

Data definition and naming layer

Content addressing through hashes is widely used in a distributed system. We use hash-based content addressing in Git, cryptocurrencies, etc. The same is also used in peer to peer networking.

IPLD provides a unified namespace for all hash-based protocols. Using IPLD, data can be traversed across various protocols to explore and view the data spread across a peer-to-peer network.

IPNS is a system for creating mutable addresses for content stored on IPFS. Mutable addresses are needed because the hash-based address of a piece of content changes every time the content changes.
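The relationship between hash-based content addresses and mutable names can be sketched in a few lines of TypeScript. This is a toy model under loud assumptions: `toyHash` is a simple non-cryptographic hash (djb2) standing in for the cryptographic hashes that real systems use, and the function names and in-memory tables are hypothetical illustrations, not the IPFS or IPNS APIs.

```typescript
// Toy 32-bit hash (djb2). ASSUMPTION: a stand-in for a cryptographic hash such as SHA-256.
function toyHash(content: string): string {
  let hash = 5381;
  for (let i = 0; i < content.length; i++) {
    // hash * 33 + character code, kept within unsigned 32 bits.
    hash = (((hash << 5) + hash) + content.charCodeAt(i)) >>> 0;
  }
  return hash.toString(16);
}

// In-memory stand-ins for the network: address -> content, and name -> address.
const contentStore: Record<string, string> = Object.create(null);
const nameRegistry: Record<string, string> = Object.create(null);

// Content addressing: the address is derived from the content itself.
function putContent(content: string): string {
  const address = toyHash(content);
  contentStore[address] = content;
  return address;
}

function getContent(address: string): string | undefined {
  return contentStore[address];
}

// Mutable naming: a stable name that can be re-pointed at a new address.
function publishName(name: string, address: string): void {
  nameRegistry[name] = address;
}

function resolveName(name: string): string | undefined {
  const address = nameRegistry[name];
  return address === undefined ? undefined : contentStore[address];
}

// Publishing two versions: the content address changes, the name does not.
const firstAddress = putContent("page v1");
publishName("my-site", firstAddress);
const secondAddress = putContent("page v2");
publishName("my-site", secondAddress);
```

After the second publish, `resolveName("my-site")` returns `"page v2"`, while `getContent(firstAddress)` still returns `"page v1"`: the old address keeps pointing at the old content, and only the name moves.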

Data Layer

The topmost layer in this stack is the data layer. Interplanetary file system or IPFS is the peer to peer hypermedia protocol. IPFS provides a way to store and retrieve the data across peer to peer network.

IPFS uses IPNS and Libp2p to create, name and distribute content across peer to peer network.

Anyone can become an IPFS peer and start looking for content using hashes. An IPFS peer does not need to store all the data; it only needs to store the data it created or the data it has looked up in the past.

Features of IPFS:

IPFS provides peer to peer hypermedia protocols for web 3.0.

IPFS can work with existing protocols and browsers. This gives a smooth transition from centralized web to web 3.0.

IPFS uses Libp2p to support peer to peer networking.

IPFS data is cryptographically secure.

IPFS can save tons of bandwidth in streaming services. For in-depth details, read this white paper.

IPFS is under development, and there are some fantastic applications built by developers that are running on top of the IPFS. One of the applications of my choice is d.tube. This is a decentralized version of YouTube with built-in incentive and reward feature provided by Steem blockchain.

IPFS is also trying to solve the incentive issue. We can’t expect every person connected to the internet to act as a peer. To provide an incentive to those who participate and contribute value to the network, IPFS has created a coin called Filecoin, which users can pay to peers in the network.

Conclusion

The decentralized web, or Web 3.0, is the future. We need a robust, secure peer-to-peer network to tackle the issues of the existing web. Ethereum and IPFS are leading the way in building a development suite that developers like us can use to create the core applications needed for a smooth transition from the existing web to the decentralized web.

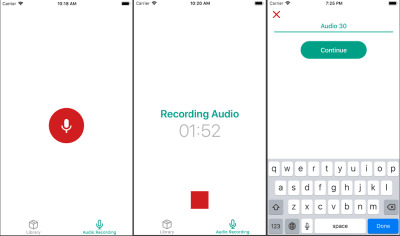

How To Create An Audio/Video Recording App With React Native: An In-Depth Tutorial

Oleh Mryhlod

2018-04-19

React Native is a young technology, already gaining popularity among developers. It is a great option for smooth, fast, and efficient mobile app development. High-performance rates for mobile environments, code reuse, and a strong community: These are just some of the benefits React Native provides.

In this guide, I will share some insights about the high-level capabilities of React Native and the products you can develop with it in a short period of time.

We will delve into the step-by-step process of creating a video/audio recording app with React Native and Expo. Expo is an open-source toolchain built around React Native for developing iOS and Android projects with React and JavaScript. It provides a bunch of native APIs maintained by native developers and the open-source community.

After reading this article, you should have all the necessary knowledge to create video/audio recording functionality with React Native.

Let's get right to it.

Brief Description Of The Application

The application you will learn to develop is called a multimedia notebook. I have implemented part of this functionality in an online job board application for the film industry. The main goal of this mobile app is to connect people who work in the film industry with employers. They can create a profile, add a video or audio introduction, and apply for jobs.

The application consists of three main screens that you can switch between with the help of a tab navigator:

the audio recording screen,

the video recording screen,

a screen with a list of all recorded media and functionality to play back or delete them.

Check out how this app works by opening this link with Expo.

First, download Expo to your mobile phone. There are two options to open the project:

Open the link in the browser, scan the QR code with your mobile phone, and wait for the project to load.

Open the link with your mobile phone and click on “Open project using Expo”.

You can also open the app in the browser. Click on “Open project in the browser”. If you have a paid account on Appetize.io, visit it and enter the code in the field to open the project. If you don’t have an account, click on “Open project” and wait in an account-level queue to open the project.

However, I recommend that you download the Expo app and open this project on your mobile phone to check out all of the features of the video and audio recording app.

You can find the full code for the media recording app in the repository on GitHub.

Dependencies Used For App Development

As mentioned, the media recording app is developed with React Native and Expo.

You can see the full list of dependencies in the repository’s package.json file.

These are the main libraries used:

React-navigation, for navigating the application,

Redux, for saving the application’s state,

React-redux, which are React bindings for Redux,

Recompose, for writing the components’ logic,

Reselect, for extracting the state fragments from Redux.

Let's look at the project's structure:

src/index.js: root app component imported in the app.js file;

src/components: reusable components;

src/constants: global constants;

src/styles: global styles, colors, font sizes and dimensions;

src/utils: useful utilities and recompose enhancers;

src/screens: screen components;

src/store: Redux store;

src/navigation: application’s navigator;

src/modules: Redux modules divided by entities as modules/audio, modules/video, modules/navigation.

Let’s proceed to the practical part.

Create Audio Recording Functionality With React Native

First, it's important to check the documentation for the Expo Audio API, related to audio recording and playback. You can see all of the code in the repository. I recommend opening the code as you read this article to better understand the process.

When launching the application for the first time, you’ll need the user's permission for audio recording, which entails access to the microphone. Let's use Expo.AppLoading and ask permission for recording by using Expo.Permissions (see src/index.js) during startAsync.

await Permissions.askAsync(Permissions.AUDIO_RECORDING);

Audio recordings are displayed on a separate screen whose UI changes depending on the state.

First, you can see the button “Start recording”. After it is clicked, the audio recording begins, and you will find the current audio duration on the screen. After stopping the recording, you will have to type the recording’s name and save the audio to the Redux store.
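The three UI states described above can be sketched as a small pure function. This is only an illustration, not code from the repository: the function name `getRecordingStage` and the stage names `idle`, `recording` and `naming` are hypothetical, and the two boolean flags are assumed to be kept in the screen's state.

```javascript
// Map the recording flags to the stage the screen should render.
// Stage names are hypothetical labels chosen for this sketch.
function getRecordingStage({ isRecording, isDoneRecording }) {
  if (isRecording) {
    return "recording"; // show the running duration and a stop button
  }
  if (isDoneRecording) {
    return "naming"; // ask for the recording's name before saving
  }
  return "idle"; // show the "Start recording" button
}

console.log(getRecordingStage({ isRecording: false, isDoneRecording: false }));
```

Keeping this decision in one function means the screen component only has to switch on a single value.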

My audio recording UI looks like this:

I can save the audio in the Redux store in the following format:

    audioItemsIds: ['id1', 'id2'],
    audioItems: {
      'id1': {
        id: string,
        title: string,
        recordDate: date string,
        duration: number,
        audioUrl: string,
      }
    },
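A reducer that maintains this shape might look like the sketch below. The action types `audio/ADD` and `audio/REMOVE`, the payload shapes, and the name `audioReducer` are assumptions for illustration; the module in the repository may be organized differently.

```javascript
// Hypothetical action types; the real module may use other names.
const ADD_AUDIO = "audio/ADD";
const REMOVE_AUDIO = "audio/REMOVE";

const initialState = { audioItemsIds: [], audioItems: {} };

// Keeps the list of ids and the lookup table of items in sync.
function audioReducer(state = initialState, action) {
  switch (action.type) {
    case ADD_AUDIO: {
      const item = action.payload; // assumed to be a full audio item with an id
      return {
        audioItemsIds: [...state.audioItemsIds, item.id],
        audioItems: { ...state.audioItems, [item.id]: item },
      };
    }
    case REMOVE_AUDIO: {
      const id = action.payload; // assumed to be the id of the item to delete
      const { [id]: removed, ...remaining } = state.audioItems;
      return {
        audioItemsIds: state.audioItemsIds.filter(existing => existing !== id),
        audioItems: remaining,
      };
    }
    default:
      return state;
  }
}
```

Storing the ids separately preserves the order of the recordings, while the lookup table gives direct access to any single item.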

Let’s write the audio logic by using Recompose in the screen’s container src/screens/RecordAudioScreenContainer.

Before you start recording, customize the audio mode with the help of Expo.Audio.setAudioModeAsync(mode), where mode is the dictionary with the following key-value pairs:

playsInSilentModeIOS: A boolean selecting whether your experience’s audio should play in silent mode on iOS. This value defaults to false.

allowsRecordingIOS: A boolean selecting whether recording is enabled on iOS. This value defaults to false. Note: When this flag is set to true, playback may be routed to the phone receiver, instead of to the speaker.

interruptionModeIOS: An enum selecting how your experience’s audio should interact with the audio from other apps on iOS.

shouldDuckAndroid: A boolean selecting whether your experience’s audio should automatically be lowered in volume (“duck”) if audio from another app interrupts your experience. This value defaults to true. If false, audio from other apps will pause your audio.

interruptionModeAndroid: An enum selecting how your experience’s audio should interact with the audio from other apps on Android.

Note: You can learn more about the customization of AudioMode in the documentation.

I have used the following values in this app:

interruptionModeIOS: Audio.INTERRUPTION_MODE_IOS_DO_NOT_MIX (our recording interrupts audio from other apps on iOS),

playsInSilentModeIOS: true,

shouldDuckAndroid: true,

interruptionModeAndroid: Audio.INTERRUPTION_MODE_ANDROID_DO_NOT_MIX (our recording interrupts audio from other apps on Android).

allowsRecordingIOS will change to true before the audio recording and to false after its completion.

To implement this, let's write the handler setAudioMode with Recompose.

    withHandlers({
      setAudioMode: () => async ({ allowsRecordingIOS }) => {
        try {
          await Audio.setAudioModeAsync({
            allowsRecordingIOS,
            interruptionModeIOS: Audio.INTERRUPTION_MODE_IOS_DO_NOT_MIX,
            playsInSilentModeIOS: true,
            shouldDuckAndroid: true,
            interruptionModeAndroid: Audio.INTERRUPTION_MODE_ANDROID_DO_NOT_MIX,
          });
        } catch (error) {
          console.log(error) // eslint-disable-line
        }
      },
    }),

To record the audio, you’ll need to create an instance of the Expo.Audio.Recording class.

const recording = new Audio.Recording();

After creating the recording instance, you will be able to receive the status of the Recording with the help of recordingInstance.getStatusAsync().

The status of the recording is a dictionary with the following key-value pairs:

canRecord: a boolean.

isRecording: a boolean describing whether the recording is currently recording.

isDoneRecording: a boolean.

durationMillis: current duration of the recorded audio.
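Since durationMillis arrives in milliseconds, the UI needs to turn it into something readable. A minimal sketch follows; the helper name `formatDuration` and the mm:ss format are assumptions, not part of the Expo API.

```javascript
// Convert a duration in milliseconds to a zero-padded "mm:ss" string.
function formatDuration(durationMillis) {
  const totalSeconds = Math.floor(durationMillis / 1000);
  const minutes = Math.floor(totalSeconds / 60);
  const seconds = totalSeconds % 60;
  const pad = value => String(value).padStart(2, "0");
  return `${pad(minutes)}:${pad(seconds)}`;
}

console.log(formatDuration(65000)); // prints "01:05"
```

Partial seconds are rounded down, so the display only advances once a full second has been recorded.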

You can also set a function to be called at regular intervals with recordingInstance.setOnRecordingStatusUpdate(onRecordingStatusUpdate).

To update the UI, you will need to call setOnRecordingStatusUpdate and set your own callback.

Let’s add some props and a recording callback to the container.

    withStateHandlers({
      recording: null,
      isRecording: false,
      durationMillis: 0,
      isDoneRecording: false,
      fileUrl: null,
      audioName: '',
    }, {
      setState: () => obj => obj,
      setAudioName: () => audioName => ({ audioName }),
      recordingCallback: () => ({ durationMillis, isRecording, isDoneRecording }) =>
        ({ durationMillis, isRecording, isDoneRecording }),
    }),

The callback setting for setOnRecordingStatusUpdate is:

recording.setOnRecordingStatusUpdate(props.recordingCallback);

onRecordingStatusUpdate is called every 500 milliseconds by default. To make the UI update more smoothly, set a 200-millisecond interval with the help of setProgressUpdateInterval:

recording.setProgressUpdateInterval(200);

After creating an instance of this class, call prepareToRecordAsync to get it ready to record the audio.

recordingInstance.prepareToRecordAsync(options) loads the recorder into memory and prepares it for recording. It must be called before calling startAsync(). This method can only be called if the recording instance has never been prepared.

The parameters of this method include such options for the recording as sample rate, bitrate, channels, format, encoder and extension. You can find a list of all recording options in this document.

In this case, let’s use Audio.RECORDING_OPTIONS_PRESET_HIGH_QUALITY.

After the recording has been prepared, you can start recording by calling the method recordingInstance.startAsync().

Before creating a new recording instance, check whether it has been created before. The handler for beginning the recording looks like this:

    onStartRecording: props => async () => {
      try {
        if (props.recording) {
          props.recording.setOnRecordingStatusUpdate(null);
          props.setState({ recording: null });
        }
        await props.setAudioMode({ allowsRecordingIOS: true });
        const recording = new Audio.Recording();
        recording.setOnRecordingStatusUpdate(props.recordingCallback);
        recording.setProgressUpdateInterval(200);
        props.setState({ fileUrl: null });
        await recording.prepareToRecordAsync(Audio.RECORDING_OPTIONS_PRESET_HIGH_QUALITY);
        await recording.startAsync();
        props.setState({ recording });
      } catch (error) {
        console.log(error) // eslint-disable-line
      }
    },

Now you need to write a handler for the audio recording completion. After clicking the stop button, you have to stop the recording, disable it on iOS, receive and save the local URL of the recording, and set both the onRecordingStatusUpdate callback and the recording instance to null:

    onEndRecording: props => async () => {
      try {
        await props.recording.stopAndUnloadAsync();
        await props.setAudioMode({ allowsRecordingIOS: false });
      } catch (error) {
        console.log(error); // eslint-disable-line
      }
      if (props.recording) {
        const fileUrl = props.recording.getURI();
        props.recording.setOnRecordingStatusUpdate(null);
        props.setState({ recording: null, fileUrl });
      }
    },

After this, type the audio name, click the “continue” button, and the audio note will be saved in the Redux store.

    onSubmit: props => () => {
      if (props.audioName && props.fileUrl) {
        const audioItem = {
          id: uuid(),
          recordDate: moment().format(),
          title: props.audioName,
          audioUrl: props.fileUrl,
          duration: props.durationMillis,
        };
        props.addAudio(audioItem);
        props.setState({
          audioName: '',
          isDoneRecording: false,
        });
        props.navigation.navigate(screens.LibraryTab);
      }
    },

Audio Playback With React Native

You can play the audio on the screen with the saved audio notes. To start the audio playback, click one of the items on the list. Below, you can see the audio player that allows you to track the current position of playback, to set the playback starting point and to toggle the playing audio.

Here’s what my audio playback UI looks like:

The Expo.Audio.Sound objects and Expo.Video components share a unified imperative API for media playback.

Let's write the logic of the audio playback by using Recompose in the screen container src/screens/LibraryScreen/LibraryScreenContainer, as the audio player is available only on this screen.

If you want to display the player at any point of the application, I recommend writing the logic of the player and audio playback in Redux operations using redux-thunk.

Let's customize the audio mode in the same way we did for the audio recording. First, set allowsRecordingIOS to false.

    lifecycle({
      async componentDidMount() {
        await Audio.setAudioModeAsync({
          allowsRecordingIOS: false,
          interruptionModeIOS: Audio.INTERRUPTION_MODE_IOS_DO_NOT_MIX,
          playsInSilentModeIOS: true,
          shouldDuckAndroid: true,
          interruptionModeAndroid: Audio.INTERRUPTION_MODE_ANDROID_DO_NOT_MIX,
        });
      },
    }),

We have created the recording instance for audio recording. As for audio playback, we need to create the sound instance. We can do it in two different ways:

const playbackObject = new Expo.Audio.Sound();

Expo.Audio.Sound.create(source, initialStatus = {}, onPlaybackStatusUpdate = null, downloadFirst = true)