#file handling methods in python

Text

Concept of File Handling in Python

Hello, dear visitor! You are all once again most welcome to bittutech.com. Today we are going to discuss one of the most important concepts of the Python programming language: file handling, including what a file is, types of files, file modes, opening and closing files, working with files, and much more. So let's dive into this amazing concept 👍. Most of the programming languages…

#file handling#file handling in python#file handling methods in python#what is file handling#what is file handling in programming language

Text

piece of code I thought was going to be hellish to write turned out to be relatively simple and actually kinda fun! peace and love on fucking planet earth

#turns out file handling in python isn't a super headache at all#also the built in string methods worked great to handle the lines of said file#now hopefully I've got the info I need to actually get this project done#still much to do but that's a major headache cleared#tales from the phd#chattering

Text

shitGPT

for uni im going to be coding with a chatGPT user, so i decided to see how good it is at coding (sure ive heard it can code, but theres a massive difference between being able to code and being able to code well).

i will complain about a specific project i asked it to make and improve on under the cut, but i will copy my conclusion from the bottom of the post and paste it up here.

-

conclusion: it (mostly) writes code that works, but isnt great. but this is actually a pretty big problem imo. as more and more people are using this to learn how to code, or getting examples of functions, theyre going to be learning from pretty bad code. and then theres what im going to be experiencing, coding with someone who uses this tool. theres going to be easily improvable code that the quote unquote writer wont fully understand going into a codebase with my name on it - a codebase which we will need to present for our degree. even though the code is not the main part of this project (well, the quality of the code at least. you need it to be able to run and thats about it) its still a shitty feeling having my name attached to code of this quality.

and also it is possible to get it to write good (readable, idiomatic, efficient enough) code, but only if you can write this code yourself (and are willing to spend more time arguing with the AI than you would writing the code.) most of the things i pointed out to the AI was stuff that someone using this as a learning resource wont know about. if it never gives you static methods, class methods, ABCs, coroutines, type hints, multi-file programs, etc without you explicitly asking for them then its use is limited at best. and people who think that its a tool that can take all the info they need, and give it back to them in a concise, readable way (which is a surprising lot of people) will be missing out without even knowing about it.

i got it to write tic-tac-toe (the standard babee) in python (the lang i have to use for uni ;-; (held at gunpoint here)). my specific prompt was "write me a python program for tictactoe that is written in an object oriented way and allows for future expansion via multiple files"

it separated it into three files below (which i think would run, but i never actually ran any of this code. just reading and judging)

why does board use display instead of __str__ and __repr__?

why is the board stored as 1d instead of 2d? thats just confusing

why does it never early return aside from check_winner? (not a big issue here but kept on choosing to never early return when i asked it to add more methods)

why is there no handling of non-number user inputs?

why are non-int inputs truncated instead of telling the user that they should input ints only?

why is display implemented like that?

why are so many lines so bloody long (wide)?

why is there a redundant self.check_winner() after the while loop in TicTacToe.play()? and if it wasnt redundant then you could finish the game without there being anything printed telling you that the game is finished?

why is the only comment useless? (this comment wouldnt be useless if it was a doc comment tho, but it aint a doc comment. speaking of, why is there no doc comments?)

these are the more immediate things i saw, but there are other things that are bad here.

whenever i write * this is where it updated the api without changing any usage of the api.

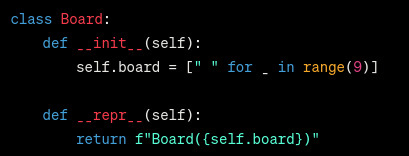

so i ask it to change board.display into __str__ and __repr__, it changes it to __str__*, it does not add a __repr__. asking it to add a __repr__ 1) removes the __str__ and 2) gives me this (the other methods are unchanged)

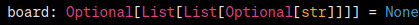

what. the. fuck. this would imply that board takes in an argument for the boardstate, but it clearly doesnt. after 4 more asks it finally has both __str__ and __repr__, without fixing the fact its implying board takes an optional arg, so i get it to add this arg. anything that needs to print the board still calls display btw.

the reasoning it gave for using display over the repr and str magics was this

While using __str__ and __repr__ is a more idiomatic approach in Python, choosing to have a separate display method can still be a valid choice, especially if the display logic is more complex or if you want to keep the __str__ method for a more concise or formal representation of the object.

which, erm what? why would __str__ be for a concise or formal repr when thats what __repr__ is for? who cares about how complex the logic is. youre calling this every time you print, so move the logic into __str__. it makes no difference for the performance of the program (if you had a very expensive func that prints smth, and you dont want it to run every time you try to print the obj then its understandable to implement that alongside str and repr)
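a minimal sketch of the split being argued for here, assuming a hypothetical Board that stores a 3x3 list of one-character strings (the real class is only in the screenshots, so the names and the constructor argument are made up): __str__ carries the display logic, __repr__ gives the unambiguous constructor-like form.

```python
class Board:
    """Hypothetical 3x3 tic-tac-toe board, only here to show the two magics."""

    def __init__(self, cells=None):
        # copy each row so the caller's lists are never shared with the board
        if cells is None:
            self.cells = [[" "] * 3 for _ in range(3)]
        else:
            self.cells = [list(row) for row in cells]

    def __str__(self):
        # human-readable grid: this is what print(board) and str(board) use
        rows = [" | ".join(row) for row in self.cells]
        return "\n---------\n".join(rows)

    def __repr__(self):
        # unambiguous form: this is what the REPL and debuggers show
        return f"Board({self.cells!r})"


board = Board()
board.cells[1][1] = "X"
print(board)        # the grid
print(repr(board))  # the constructor-like form
```

with this in place, anything that used to call display can just print the board.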

it also said the difference between __str__ and __repr__ every damn time, which if youre asking it to implement these magics then surely you already know the difference?

but okay, one issue down and that took what? 5-10 minutes? and it wouldve taken 1 minute tops to do it yourself?

okay next implementing a tic-tac-toe board as a 1d array is fine, but kinda weird when 2d arrays exist. this one is just personal preference though so i got it to change it to a 2d list*. it changed the init method to this

tumblr wont let me add alt text to this image so:

[begin ID: Python code that generates a 2D array using nested list comprehensions. end ID]

which works, but just use [[" "] * 3 for _ in range(3)]. the only advantage listcomps have here over multiplying is that they create new lists, instead of copying the pointers. but if you update a cell it will change that pointer. you only need listcomps for the outermost level.
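a quick sketch of why the outer level still needs the listcomp (variable names are just for illustration): multiplying the outer list copies references to one inner list, so the rows end up aliased.

```python
# outer multiplication: three references to the SAME inner list
aliased = [[" "] * 3] * 3
aliased[0][0] = "X"
print(aliased)  # the X shows up in every row

# listcomp on the outer level: three separate inner lists
board = [[" "] * 3 for _ in range(3)]
board[0][0] = "X"
print(board)    # the X shows up in the first row only
```

multiplying the inner list is safe because strings are immutable: assigning to a cell rebinds that slot instead of mutating a shared object.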

again, this is mainly personal preference, nothing major. but it does show that chatgpt gives u sloppy code

(also if you notice it got rid of the board argument lol)

now i had to explicitly get it to change is_full and make_move. methods in the same damn class that would be changed by changing to a 2d array. this sorta shit should be done automatically lol

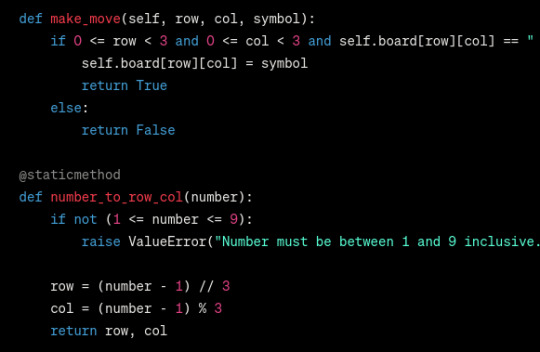

it changed make_move by taking row and col args, which is a shitty decision coz it asks for a pos 1-9, so anything that calls make_move would have to change this to a row and col. so i got it to make a func thatll do this for the board class

what i was hoping for: a static method that is called inside make_move

what i got: a standalone function that is not inside any class that isnt early exited

the fuck is this supposed to do if its never called?

so i had to tell it to put it in the class as a static method, and get it to call it. i had to tell it to call this function holy hell

like what is this?

i cant believe it wrote this method without ever calling it!
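for comparison, a minimal sketch of the static-method version described above. this is not the code chatgpt produced; the Board class, the method name pos_to_row_col and the return values are all assumptions for illustration.

```python
class Board:
    """Hypothetical board that keeps the 1-9 position api on top of a 2d list."""

    def __init__(self):
        self.cells = [[" "] * 3 for _ in range(3)]

    @staticmethod
    def pos_to_row_col(pos):
        """Convert a 1-9 position into zero-based (row, col) indices."""
        if not 1 <= pos <= 9:
            raise ValueError(f"position must be 1-9, got {pos}")
        return divmod(pos - 1, 3)

    def make_move(self, pos, mark):
        """Place mark at pos; return False if the cell is already taken."""
        row, col = self.pos_to_row_col(pos)  # the helper is actually called
        if self.cells[row][col] != " ":
            return False  # early return instead of nesting
        self.cells[row][col] = mark
        return True
```

callers keep passing 1-9, so nothing outside the class has to change when the storage goes 2d.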

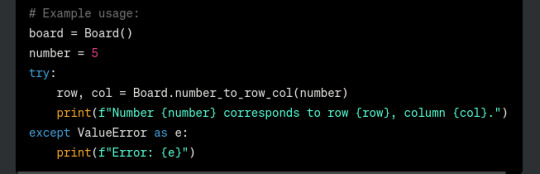

and - AND - theres this code here that WILL run when this file is imported

which, errrr, this files entire point is being imported innit. if youre going to have example usage check if __name__ == "__main__" and dont store vars as globals
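a small sketch of that pattern, assuming the file is a module like board.py (the demo function and its contents are made up for illustration):

```python
def demo():
    """Example usage lives in a function, so no variables leak into module globals."""
    board = [[" "] * 3 for _ in range(3)]
    board[1][1] = "X"
    return board


if __name__ == "__main__":
    # runs for `python board.py`, skipped for `import board`
    for row in demo():
        print(row)
```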

now i finally asked it to update the other classes now that the api has changed (hoping it would change the implementation of make_move to use the static method.) (it didnt.)

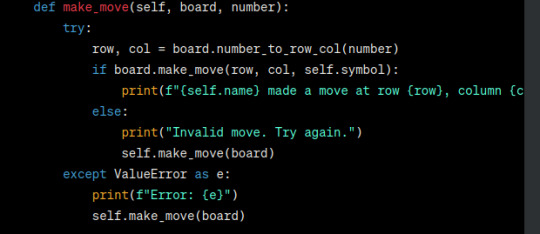

Player.make_move is now defined recursively in a way that doesnt work. yippe! why not propagate the error ill never know.

also why is there so much shit in the try block? its not clear which part needs to be error checked and it also makes the prints go offscreen.
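a minimal sketch of a tighter version, assuming a hypothetical ask_position helper (the name, the prompt text and the injectable read parameter are all made up): the try block wraps only the line that can fail, and non-int input gets a message instead of being truncated.

```python
def ask_position(read=input):
    """Keep asking until the user enters a whole number from 1 to 9."""
    while True:
        raw = read("pick a position (1-9): ")
        try:
            pos = int(raw)  # the only line that can raise ValueError
        except ValueError:
            print("please enter a whole number")
            continue
        if 1 <= pos <= 9:
            return pos
        print("position must be between 1 and 9")
```

a loop also avoids the recursive retry, and int("2.5") raises ValueError, so floats are rejected rather than silently cut down to 2.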

after getting it to fix the static method not being called, and the try block being overcrowded (not getting it to propagate the error yet) i got it to add type hints (if u coding python, add type hints. please. itll make me happy)
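a tiny sketch of what type hints look like on board-style code, assuming python 3.9+ for the builtin generic list[...]. the Grid alias and new_grid function are hypothetical, and since python has no builtin char type a one-character cell is annotated as str here.

```python
from typing import Optional

# alias for a 2d board; each cell is a one-character string
Grid = list[list[str]]


def new_grid(cells: Optional[Grid] = None) -> Grid:
    """Return a copy of cells, or an empty 3x3 grid when none is given."""
    if cells is None:
        return [[" "] * 3 for _ in range(3)]
    return [list(row) for row in cells]
```

Optional only belongs on a parameter that can really be None, like cells here.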

now for the next 5 asks it changed 0 code. nothing at all. regardless of what i asked it to do. fucks sake.

also look at this type hint

what

the

hell

is

this

?

why is it Optional[str]???????? the hell??? at no point is it anything but a char. either write it as Optional[list[list[char]]] or Optional[list[list]], either works fine. just - dont bloody do this

also does anything look wrong with this type hint?

a bloody optional when its not optional

so i got it to remove this optional. it sure as hell got rid of optional

it sure as hell got rid of optional

now i was just trying to make board.py more readable. its been maybe half an hour at this point? i just want to move on.

it did not want to write PEP 8 code, but oh well. fuck it we ball, its not like it again decided to stop changing any code

(i lied)

but anyway one file down two to go, they were more of the same so i eventually gave up (i wont say each and every issue i had with the code. you get the gist. yes a lot of it didnt work)

conclusion: as you probably saw, it (mostly) writes code that works, but isnt great. but this is actually a pretty big problem imo. as more and more people are using this to learn how to code, or getting examples of functions, theyre going to be learning from pretty bad code. and then theres what im going to be experiencing, coding with someone who uses this tool. theres going to be easily improvable code that the quote unquote writer wont fully understand going into a codebase with my name on it - a codebase which we will need to present for our degree. even though the code is not the main part of this project (well, the quality of the code at least. you need it to be able to run and thats about it) its still a shitty feeling having my name attached to code of this quality.

and also it is possible to get it to write good (readable, idiomatic, efficient enough) code, but only if you can write this code yourself (and are willing to spend more time arguing with the AI than you would writing the code.) most of the things i pointed out to the AI was stuff that someone using this as a learning resource wont know about. if it never gives you static methods, class methods, ABCs, coroutines, type hints, multi-file programs, etc without you explicitly asking for them then its use is limited at best. and people who think that its a tool that can take all the info they need, and give it back to them in a concise, readable way (which is a surprising lot of people) will be missing out without even knowing about it.

#i speak i ramble#effortpost#long post#progblr#codeblr#python#chatgpt#tried to add IDs in as many alts as possible. some didnt let me and also its hard to decide what to put in the IDs for code.#like sometimes you need implementation details but others just the broad overview is good enough yknow?#and i also tried to write in a way where you dont need the IDs to follow along. (but with something like this it is hard yknow?)#id in alt#aside from that one where i got cockblocked#codeblocked?#codeblocked.

Text

UNLOCKING THE POWER OF AI WITH EASYLIBPAL 2/2

EXPANDED COMPONENTS AND DETAILS OF EASYLIBPAL:

1. Easylibpal Class: The core component of the library, responsible for handling algorithm selection, model fitting, and prediction generation.

2. Algorithm Selection and Support:

Supports classic AI algorithms such as Linear Regression, Logistic Regression, Support Vector Machine (SVM), Naive Bayes, and K-Nearest Neighbors (K-NN).

It also supports:

- Decision Trees

- Random Forest

- AdaBoost

- Gradient Boosting

3. Integration with Popular Libraries: Seamless integration with essential Python libraries like NumPy, Pandas, Matplotlib, and Scikit-learn for enhanced functionality.

4. Data Handling:

- DataLoader class for importing and preprocessing data from various formats (CSV, JSON, SQL databases).

- DataTransformer class for feature scaling, normalization, and encoding categorical variables.

- Includes functions for loading and preprocessing datasets to prepare them for training and testing.

- `FeatureSelector` class: Provides methods for feature selection and dimensionality reduction.

5. Model Evaluation:

- Evaluator class to assess model performance using metrics like accuracy, precision, recall, F1-score, and ROC-AUC.

- Methods for generating confusion matrices and classification reports.

6. Model Training: Contains methods for fitting the selected algorithm with the training data.

- `fit` method: Trains the selected algorithm on the provided training data.

7. Prediction Generation: Allows users to make predictions using the trained model on new data.

- `predict` method: Makes predictions using the trained model on new data.

- `predict_proba` method: Returns the predicted probabilities for classification tasks.

8. Model Evaluation:

- `Evaluator` class: Assesses model performance using various metrics (e.g., accuracy, precision, recall, F1-score, ROC-AUC).

- `cross_validate` method: Performs cross-validation to evaluate the model's performance.

- `confusion_matrix` method: Generates a confusion matrix for classification tasks.

- `classification_report` method: Provides a detailed classification report.

9. Hyperparameter Tuning:

- Tuner class that uses techniques like Grid Search and Random Search for hyperparameter optimization.

10. Visualization:

- Integration with Matplotlib and Seaborn for generating plots to analyze model performance and data characteristics.

- Visualization support: Enables users to visualize data, model performance, and predictions using plotting functionalities.

- `Visualizer` class: Integrates with Matplotlib and Seaborn to generate plots for model performance analysis and data visualization.

- `plot_confusion_matrix` method: Visualizes the confusion matrix.

- `plot_roc_curve` method: Plots the Receiver Operating Characteristic (ROC) curve.

- `plot_feature_importance` method: Visualizes feature importance for applicable algorithms.

11. Utility Functions:

- Functions for saving and loading trained models.

- Logging functionalities to track the model training and prediction processes.

- `save_model` method: Saves the trained model to a file.

- `load_model` method: Loads a previously trained model from a file.

- `set_logger` method: Configures logging functionality for tracking model training and prediction processes.

12. User-Friendly Interface: Provides a simplified and intuitive interface for users to interact with and apply classic AI algorithms without extensive knowledge or configuration.

13. Error Handling: Incorporates mechanisms to handle invalid inputs, errors during training, and other potential issues during algorithm usage.

- Custom exception classes for handling specific errors and providing informative error messages to users.

14. Documentation: Comprehensive documentation to guide users on how to use Easylibpal effectively and efficiently.

- Comprehensive documentation explaining the usage and functionality of each component.

- Example scripts demonstrating how to use Easylibpal for various AI tasks and datasets.

15. Testing Suite:

- Unit tests for each component to ensure code reliability and maintainability.

- Integration tests to verify the smooth interaction between different components.

IMPLEMENTATION EXAMPLE WITH ADDITIONAL FEATURES:

Here is an example of how the expanded Easylibpal library could be structured and used:

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# DataLoader and Evaluator are defined below, so only these two are imported
from easylibpal import Easylibpal, Tuner


# Example DataLoader
class DataLoader:
    def load_data(self, filepath, file_type='csv'):
        if file_type == 'csv':
            return pd.read_csv(filepath)
        else:
            raise ValueError("Unsupported file type provided.")


# Example Evaluator
class Evaluator:
    def evaluate(self, model, X_test, y_test):
        predictions = model.predict(X_test)
        accuracy = np.mean(predictions == y_test)
        return {'accuracy': accuracy}


# Example usage of Easylibpal with DataLoader and Evaluator
if __name__ == "__main__":
    # Load and prepare the data (the last column is treated as the target)
    data_loader = DataLoader()
    data = data_loader.load_data('path/to/your/data.csv')
    X = data.iloc[:, :-1]
    y = data.iloc[:, -1]
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Scale features: fit on the training set only, then reuse for the test set
    scaler = StandardScaler()
    X_train_scaled = scaler.fit_transform(X_train)
    X_test_scaled = scaler.transform(X_test)

    # Initialize Easylibpal with the desired algorithm
    model = Easylibpal('Random Forest')
    model.fit(X_train_scaled, y_train)

    # Evaluate the model
    evaluator = Evaluator()
    results = evaluator.evaluate(model, X_test_scaled, y_test)
    print(f"Model Accuracy: {results['accuracy']}")

    # Optional: Use Tuner for hyperparameter optimization.
    # GridSearchCV expects a scikit-learn estimator, so this passes the wrapped
    # estimator (model.model), assuming the Easylibpal class shown below.
    tuner = Tuner(model.model, param_grid={'n_estimators': [100, 200], 'max_depth': [10, 20, 30]})
    best_params = tuner.optimize(X_train_scaled, y_train)
    print(f"Best Parameters: {best_params}")
```

This example demonstrates the structured approach to using Easylibpal with enhanced data handling, model evaluation, and optional hyperparameter tuning. The library empowers users to handle real-world datasets, apply various machine learning algorithms, and evaluate their performance with ease, making it an invaluable tool for developers and data scientists aiming to implement AI solutions efficiently.

Easylibpal is dedicated to making the latest AI technology accessible to everyone, regardless of their background or expertise. Our platform simplifies the process of selecting and implementing classic AI algorithms, enabling users across various industries to harness the power of artificial intelligence with ease. By democratizing access to AI, we aim to accelerate innovation and empower users to achieve their goals with confidence. Easylibpal's approach involves a democratization framework that reduces entry barriers, lowers the cost of building AI solutions, and speeds up the adoption of AI in both academic and business settings.

Below are examples showcasing how each main component of the Easylibpal library could be implemented and used in practice to provide a user-friendly interface for utilizing classic AI algorithms.

1. Core Components

Easylibpal Class Example:

```python
class Easylibpal:
    def __init__(self, algorithm):
        self.algorithm = algorithm
        self.model = None

    def fit(self, X, y):
        # Simplified example: Instantiate and train a model based on the selected algorithm
        if self.algorithm == 'Linear Regression':
            from sklearn.linear_model import LinearRegression
            self.model = LinearRegression()
        elif self.algorithm == 'Random Forest':
            from sklearn.ensemble import RandomForestClassifier
            self.model = RandomForestClassifier()
        else:
            # Fail early instead of calling .fit on None
            raise ValueError("Invalid algorithm specified.")
        self.model.fit(X, y)

    def predict(self, X):
        return self.model.predict(X)
```

2. Data Handling

DataLoader Class Example:

```python
import pandas as pd


class DataLoader:
    def load_data(self, filepath, file_type='csv'):
        if file_type == 'csv':
            return pd.read_csv(filepath)
        else:
            raise ValueError("Unsupported file type provided.")
```

3. Model Evaluation

Evaluator Class Example:

```python
from sklearn.metrics import accuracy_score, classification_report


class Evaluator:
    def evaluate(self, model, X_test, y_test):
        predictions = model.predict(X_test)
        accuracy = accuracy_score(y_test, predictions)
        report = classification_report(y_test, predictions)
        return {'accuracy': accuracy, 'report': report}
```

4. Hyperparameter Tuning

Tuner Class Example:

```python
from sklearn.model_selection import GridSearchCV


class Tuner:
    def __init__(self, model, param_grid):
        # model must be a scikit-learn estimator that GridSearchCV can clone
        self.model = model
        self.param_grid = param_grid

    def optimize(self, X, y):
        grid_search = GridSearchCV(self.model, self.param_grid, cv=5)
        grid_search.fit(X, y)
        return grid_search.best_params_
```

5. Visualization

Visualizer Class Example:

```python
import matplotlib.pyplot as plt
import numpy as np  # needed for np.arange below


class Visualizer:
    def plot_confusion_matrix(self, cm, classes, normalize=False, title='Confusion matrix'):
        # Note: the normalize flag is accepted but not used in this simplified example
        plt.imshow(cm, interpolation='nearest', cmap=plt.cm.Blues)
        plt.title(title)
        plt.colorbar()
        tick_marks = np.arange(len(classes))
        plt.xticks(tick_marks, classes, rotation=45)
        plt.yticks(tick_marks, classes)
        plt.ylabel('True label')
        plt.xlabel('Predicted label')
        plt.show()
```

6. Utility Functions

Save and Load Model Example:

```python
import joblib


def save_model(model, filename):
    joblib.dump(model, filename)


def load_model(filename):
    return joblib.load(filename)
```

7. Example Usage Script

Using Easylibpal in a Script:

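```python
# Assuming Easylibpal and the other classes and helpers above have been imported
from sklearn.metrics import confusion_matrix

data_loader = DataLoader()
data = data_loader.load_data('data.csv')
X = data.drop('Target', axis=1)
y = data['Target']

model = Easylibpal('Random Forest')
model.fit(X, y)

# Note: evaluating on the training data, as done here, overstates performance;
# a held-out test split gives a fairer estimate.
evaluator = Evaluator()
results = evaluator.evaluate(model, X, y)
print("Accuracy:", results['accuracy'])
print("Report:", results['report'])

# Evaluator.evaluate does not return a confusion matrix, so compute it here
cm = confusion_matrix(y, model.predict(X))
visualizer = Visualizer()
visualizer.plot_confusion_matrix(cm, classes=['Class1', 'Class2'])

save_model(model, 'trained_model.pkl')
loaded_model = load_model('trained_model.pkl')
```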

These examples illustrate the practical implementation and use of the Easylibpal library components, aiming to simplify the application of AI algorithms for users with varying levels of expertise in machine learning.

EASYLIBPAL IMPLEMENTATION:

Step 1: Define the Problem

First, we need to define the problem we want to solve. For this POC, let's assume we want to predict house prices based on various features like the number of bedrooms, square footage, and location.

Step 2: Choose an Appropriate Algorithm

Given our problem, a supervised learning algorithm like linear regression would be suitable. We'll use Scikit-learn, a popular library for machine learning in Python, to implement this algorithm.

Step 3: Prepare Your Data

We'll use Pandas to load and prepare our dataset. This involves cleaning the data, handling missing values, and splitting the dataset into training and testing sets.

Step 4: Implement the Algorithm

Now, we'll use Scikit-learn to implement the linear regression algorithm. We'll train the model on our training data and then test its performance on the testing data.

Step 5: Evaluate the Model

Finally, we'll evaluate the performance of our model using metrics like Mean Squared Error (MSE) and R-squared.

Python Code POC

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Load the dataset
data = pd.read_csv('house_prices.csv')

# Prepare the data: select the feature columns with a list of names.
# get_dummies one-hot encodes 'location' if it holds text categories,
# because LinearRegression needs numeric inputs.
X = pd.get_dummies(data[['bedrooms', 'square_footage', 'location']])
y = data['price']

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the model
model = LinearRegression()
model.fit(X_train, y_train)

# Make predictions
predictions = model.predict(X_test)

# Evaluate the model
mse = mean_squared_error(y_test, predictions)
r2 = r2_score(y_test, predictions)
print(f'Mean Squared Error: {mse}')
print(f'R-squared: {r2}')
```

Below is an implementation. Easylibpal provides a simple interface to instantiate and utilize classic AI algorithms such as Linear Regression, Logistic Regression, SVM, Naive Bayes, and K-NN. Users can create an instance of Easylibpal with their desired algorithm, fit the model with training data, and make predictions, all with minimal code.

```python
# Import necessary libraries
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.linear_model import LinearRegression
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier


class Easylibpal:
    def __init__(self, algorithm):
        self.algorithm = algorithm

    def fit(self, X, y):
        # Map the algorithm name to the matching scikit-learn estimator
        if self.algorithm == 'Linear Regression':
            self.model = LinearRegression()
        elif self.algorithm == 'Logistic Regression':
            self.model = LogisticRegression()
        elif self.algorithm == 'SVM':
            self.model = SVC()
        elif self.algorithm == 'Naive Bayes':
            self.model = GaussianNB()
        elif self.algorithm == 'K-NN':
            self.model = KNeighborsClassifier()
        else:
            raise ValueError("Invalid algorithm specified.")
        self.model.fit(X, y)

    def predict(self, X):
        return self.model.predict(X)


# Example usage:
# Initialize Easylibpal with the desired algorithm
easy_algo = Easylibpal('Linear Regression')

# Generate some sample data
X = np.array([[1], [2], [3], [4]])
y = np.array([2, 4, 6, 8])

# Fit the model
easy_algo.fit(X, y)

# Make predictions
predictions = easy_algo.predict(X)

# Plot the results
plt.scatter(X, y)
plt.plot(X, predictions, color='red')
plt.title('Linear Regression with Easylibpal')
plt.xlabel('X')
plt.ylabel('y')
plt.show()
```

Easylibpal is an innovative Python library designed to simplify the integration and use of classic AI algorithms in a user-friendly manner. It aims to bridge the gap between the complexity of AI libraries and the ease of use, making it accessible for developers and data scientists alike. Easylibpal abstracts the underlying complexity of each algorithm, providing a unified interface that allows users to apply these algorithms with minimal configuration and understanding of the underlying mechanisms.

ENHANCED DATASET HANDLING

Easylibpal should be able to handle datasets more efficiently. This includes loading datasets from various sources (e.g., CSV files, databases), preprocessing data (e.g., normalization, handling missing values), and splitting data into training and testing sets.

```python
import os

import pandas as pd  # needed for pd.read_csv below
from sklearn.model_selection import train_test_split


class Easylibpal:
    # Existing code...

    def load_dataset(self, filepath):
        """Loads a dataset from a CSV file."""
        if not os.path.exists(filepath):
            raise FileNotFoundError("Dataset file not found.")
        return pd.read_csv(filepath)

    def preprocess_data(self, dataset):
        """Preprocesses the dataset."""
        # Implement data preprocessing steps here
        return dataset

    def split_data(self, X, y, test_size=0.2):
        """Splits the dataset into training and testing sets."""
        return train_test_split(X, y, test_size=test_size)
```

Additional Algorithms

Easylibpal should support a wider range of algorithms. This includes decision trees, random forests, and gradient boosting machines.

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.ensemble import GradientBoostingClassifier


class Easylibpal:
    # Existing code...

    def fit(self, X, y):
        # Existing code (the earlier if/elif branches)...
        elif self.algorithm == 'Decision Tree':
            self.model = DecisionTreeClassifier()
        elif self.algorithm == 'Random Forest':
            self.model = RandomForestClassifier()
        elif self.algorithm == 'Gradient Boosting':
            self.model = GradientBoostingClassifier()
        # Add more algorithms as needed
```

User-Friendly Features

To make Easylibpal even more user-friendly, consider adding features like:

- Automatic hyperparameter tuning: Implementing a simple interface for hyperparameter tuning using GridSearchCV or RandomizedSearchCV.

- Model evaluation metrics: Providing easy access to common evaluation metrics like accuracy, precision, recall, and F1 score.

- Visualization tools: Adding methods for plotting model performance, confusion matrices, and feature importance.

```python
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import GridSearchCV


class Easylibpal:
    # Existing code...

    def evaluate_model(self, X_test, y_test):
        """Evaluates the model using accuracy and classification report."""
        y_pred = self.predict(X_test)
        print("Accuracy:", accuracy_score(y_test, y_pred))
        print(classification_report(y_test, y_pred))

    def tune_hyperparameters(self, X, y, param_grid):
        """Tunes the model's hyperparameters using GridSearchCV."""
        grid_search = GridSearchCV(self.model, param_grid, cv=5)
        grid_search.fit(X, y)
        # Keep the best estimator found by the search (refit on all of X, y)
        self.model = grid_search.best_estimator_
```

Easylibpal leverages the power of Python and its rich ecosystem of AI and machine learning libraries, such as scikit-learn, to implement the classic algorithms. It provides a high-level API that abstracts the specifics of each algorithm, allowing users to focus on the problem at hand rather than the intricacies of the algorithm.

Python Code Snippets for Easylibpal

Below are Python code snippets demonstrating the use of Easylibpal with classic AI algorithms. Each snippet demonstrates how to use Easylibpal to apply a specific algorithm to a dataset.

# Linear Regression

```python
from Easylibpal import Easylibpal

# Initialize Easylibpal with a dataset
# (the instance gets its own name so the class is not overwritten)
easylibpal = Easylibpal(dataset='your_dataset.csv')

# Apply Linear Regression
result = easylibpal.apply_algorithm('linear_regression', target_column='target')

# Print the result
print(result)
```

# Logistic Regression

```python
from Easylibpal import Easylibpal

# Initialize Easylibpal with a dataset
# (the instance gets its own name so the class is not overwritten)
easylibpal = Easylibpal(dataset='your_dataset.csv')

# Apply Logistic Regression
result = easylibpal.apply_algorithm('logistic_regression', target_column='target')

# Print the result
print(result)
```

# Support Vector Machines (SVM)

```python
from Easylibpal import Easylibpal

# Initialize Easylibpal with a dataset
# (the instance gets its own name so the class is not overwritten)
easylibpal = Easylibpal(dataset='your_dataset.csv')

# Apply SVM
result = easylibpal.apply_algorithm('svm', target_column='target')

# Print the result
print(result)
```

# Naive Bayes

```python
from Easylibpal import Easylibpal

# Initialize Easylibpal with a dataset
# (the instance gets its own name so the class is not overwritten)
easylibpal = Easylibpal(dataset='your_dataset.csv')

# Apply Naive Bayes
result = easylibpal.apply_algorithm('naive_bayes', target_column='target')

# Print the result
print(result)
```

# K-Nearest Neighbors (K-NN)

```python
from Easylibpal import Easylibpal

# Initialize Easylibpal with a dataset
# (the instance gets its own name so the class is not overwritten)
easylibpal = Easylibpal(dataset='your_dataset.csv')

# Apply K-NN
result = easylibpal.apply_algorithm('knn', target_column='target')

# Print the result
print(result)
```

ABSTRACTION AND ESSENTIAL COMPLEXITY

- Essential Complexity: This refers to the inherent complexity of the problem domain, which cannot be reduced regardless of the programming language or framework used. It includes the logic and algorithm needed to solve the problem. For example, the essential complexity of sorting a list remains the same across different programming languages.

- Accidental Complexity: This is the complexity introduced by the choice of programming language, framework, or libraries. It can be reduced or eliminated through abstraction. For instance, using a high-level API in Python can hide the complexity of lower-level operations, making the code more readable and maintainable.
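The distinction can be illustrated with the sorting example mentioned above. The following is a minimal sketch, not part of Easylibpal: the `insertion_sort` function and the sample prices are made up for illustration. Deciding that the values must be ordered is the essential part, while the index bookkeeping in the hand-written version is accidental complexity that Python's built-in `sorted` hides.

```python
def insertion_sort(items):
    """Hand-written sort: the index juggling below is accidental complexity."""
    result = list(items)  # work on a copy so the input is left unchanged
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger elements one slot to the right
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


prices = [310, 125, 480, 205]  # illustrative values only

manual = insertion_sort(prices)
builtin = sorted(prices)  # same outcome, bookkeeping hidden behind the API
print(manual == builtin)
```

Both calls express the same requirement; the high-level call simply removes the details that were never part of the problem.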

HOW EASYLIBPAL ABSTRACTS COMPLEXITY

Easylibpal aims to reduce accidental complexity by providing a high-level API that encapsulates the details of each classic AI algorithm. This abstraction allows users to apply these algorithms without needing to understand the underlying mechanisms or the specifics of the algorithm's implementation.

- Simplified Interface: Easylibpal offers a unified interface for applying various algorithms, such as Linear Regression, Logistic Regression, SVM, Naive Bayes, and K-NN. This interface abstracts the complexity of each algorithm, making it easier for users to apply them to their datasets.

- Runtime Fusion: By evaluating sub-expressions and sharing them across multiple terms, Easylibpal can optimize the execution of algorithms. This approach, similar to runtime fusion in abstract algorithms, allows for efficient computation without duplicating work, thereby reducing the computational complexity.

- Focus on Essential Complexity: While Easylibpal abstracts away the accidental complexity, it keeps the essential complexity of the problem domain at the forefront. The implementation details are hidden, but the core logic and algorithmic approach remain accessible and understandable to the user.
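
One way such a unified interface could be wired up is a registry that maps algorithm names to scikit-learn estimators. This is a sketch under assumptions: the `ALGORITHMS` table and the standalone `apply_algorithm` function are hypothetical names chosen to mirror the usage examples above, not a confirmed Easylibpal implementation.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Hypothetical registry: algorithm name -> estimator class
ALGORITHMS = {
    'linear_regression': LinearRegression,
    'logistic_regression': LogisticRegression,
    'svm': SVC,
    'naive_bayes': GaussianNB,
    'knn': KNeighborsClassifier,
}


def apply_algorithm(df, name, target_column):
    """Fit the estimator registered under `name` and return the fitted model."""
    if name not in ALGORITHMS:
        raise ValueError(f"Unknown algorithm: {name!r}")
    X = df.drop(columns=[target_column])
    y = df[target_column]
    return ALGORITHMS[name]().fit(X, y)


# Tiny in-memory dataset so the sketch runs without a CSV file
df = pd.DataFrame({
    'x1': [0, 1, 2, 3, 4, 5],
    'x2': [1, 0, 1, 0, 1, 0],
    'target': [0, 0, 0, 1, 1, 1],
})
model = apply_algorithm(df, 'knn', target_column='target')
print(type(model).__name__)
```

Adding a new algorithm then only means adding one entry to the registry; the calling code stays the same.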

To implement Easylibpal, one would need to create a Python class that encapsulates the functionality of each classic AI algorithm. This class would provide methods for loading datasets, preprocessing data, and applying the algorithm with minimal configuration required from the user. The implementation would leverage existing libraries like scikit-learn for the actual algorithmic computations, abstracting away the complexity of these libraries.

Here's a conceptual example of how the Easylibpal class might be structured for applying a Linear Regression algorithm:

```python

class Easylibpal:
    def __init__(self, dataset):
        # Path to the dataset; a full implementation would load and preprocess it here
        self.dataset = dataset

    def apply_linear_regression(self, target_column):
        # Abstracted implementation of Linear Regression.
        # This method would internally use scikit-learn or another library
        # to perform the actual computation, abstracting the complexity.
        pass

# Usage
pal = Easylibpal(dataset='your_dataset.csv')
result = pal.apply_linear_regression(target_column='target')

```

This example demonstrates the concept of Easylibpal by abstracting the complexity of applying a Linear Regression algorithm. The actual implementation would need to include the specifics of loading the dataset, preprocessing it, and applying the algorithm using an underlying library like scikit-learn.

Easylibpal abstracts the complexity of classic AI algorithms by providing a simplified interface that hides the intricacies of each algorithm's implementation. This abstraction allows users to apply these algorithms with minimal configuration and understanding of the underlying mechanisms.

Easylibpal abstracts the complexity of feature selection for classic AI algorithms by providing a simplified interface that automates the process of selecting the most relevant features for each algorithm. This abstraction is crucial because feature selection is a critical step in machine learning that can significantly impact the performance of a model. Here's how Easylibpal handles feature selection for the mentioned algorithms:

To implement feature selection in Easylibpal, one could use scikit-learn's `SelectKBest` or `RFE` classes for feature selection based on statistical tests or model coefficients. Here's a conceptual example of how feature selection might be integrated into the Easylibpal class for Linear Regression:

```python

import pandas as pd
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression

class Easylibpal:
    def __init__(self, dataset):
        # Load the dataset from a CSV path; preprocessing would also happen here
        self.dataset = pd.read_csv(dataset)

    def apply_linear_regression(self, target_column):
        X = self.dataset.drop(target_column, axis=1)
        y = self.dataset[target_column]

        # Feature selection using SelectKBest (k must not exceed the number of features)
        selector = SelectKBest(score_func=f_regression, k=min(10, X.shape[1]))
        X_new = selector.fit_transform(X, y)

        # Train Linear Regression model on the selected features
        model = LinearRegression()
        model.fit(X_new, y)

        # Return the trained model
        return model

# Usage
pal = Easylibpal(dataset='your_dataset.csv')
model = pal.apply_linear_regression(target_column='target')

```

This example demonstrates how Easylibpal abstracts the complexity of feature selection for Linear Regression by using scikit-learn's `SelectKBest` to select the top 10 features based on their statistical significance in predicting the target variable. The actual implementation would need to adapt this approach for each algorithm, considering the specific characteristics and requirements of each algorithm.
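
Because `SelectKBest` returns a plain array, it is easy to lose track of which columns survived. The sketch below, on a made-up toy DataFrame, shows how `get_support()` can recover the selected column names; the column names and values are assumptions for illustration.

```python
import pandas as pd
from sklearn.feature_selection import SelectKBest, f_regression

# Toy data: 'signal' tracks the target closely, 'noise' does not
df = pd.DataFrame({
    'signal': [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    'noise': [3.0, 1.0, 2.0, 3.0, 1.0, 2.0],
    'target': [2.1, 3.9, 6.2, 8.1, 9.8, 12.1],
})
X = df.drop(columns=['target'])
y = df['target']

selector = SelectKBest(score_func=f_regression, k=1).fit(X, y)

# get_support() returns a boolean mask over the input columns
selected = list(X.columns[selector.get_support()])
print(selected)
```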

To implement feature selection in Easylibpal, one could use scikit-learn's `SelectKBest`, `RFE`, or other feature selection classes based on the algorithm's requirements. Here's a conceptual example of how feature selection might be integrated into the Easylibpal class for Logistic Regression using RFE:

```python

import pandas as pd
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

class Easylibpal:
    def __init__(self, dataset):
        # Load the dataset from a CSV path; preprocessing would also happen here
        self.dataset = pd.read_csv(dataset)

    def apply_logistic_regression(self, target_column):
        X = self.dataset.drop(target_column, axis=1)
        y = self.dataset[target_column]

        # Feature selection using RFE
        rfe = RFE(LogisticRegression(), n_features_to_select=10)
        X_selected = rfe.fit_transform(X, y)

        # Train Logistic Regression model on the selected features only
        model = LogisticRegression()
        model.fit(X_selected, y)

        # Return the trained model
        return model

# Usage
pal = Easylibpal(dataset='your_dataset.csv')
model = pal.apply_logistic_regression(target_column='target')

```

This example demonstrates how Easylibpal abstracts the complexity of feature selection for Logistic Regression by using scikit-learn's `RFE` to select the top 10 features based on their importance in the model. The actual implementation would need to adapt this approach for each algorithm, considering the specific characteristics and requirements of each algorithm.

EASYLIBPAL HANDLES DIFFERENT TYPES OF DATASETS

Easylibpal handles different types of datasets with varying structures by adopting a flexible and adaptable approach to data preprocessing and transformation. This approach is inspired by the principles of tidy data and the need to ensure data is in a consistent, usable format before applying AI algorithms. Here's how Easylibpal addresses the challenges posed by varying dataset structures:

One Type in Multiple Tables

When datasets contain different variables, the same variables with different names, different file formats, or different conventions for missing values, Easylibpal employs a process similar to tidying data. This involves identifying and standardizing the structure of each dataset, ensuring that each variable is consistently named and formatted across datasets. This process might include renaming columns, converting data types, and handling missing values in a uniform manner. For datasets stored in different file formats, Easylibpal would use appropriate libraries (e.g., pandas for CSV, Excel files, and SQL databases) to load and preprocess the data before applying the algorithms.
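
As a rough sketch of this tidying step with pandas: two hypothetical sources record the same kind of observation under different column names and different missing-value conventions (`'N/A'` in one, `-999` in the other). All table names, column names, and sentinel values here are assumptions for illustration.

```python
import pandas as pd

# Two hypothetical sources describing the same kind of observation
sales_2022 = pd.DataFrame({'Customer': ['Ann', 'Bob'], 'Amount': ['10.5', 'N/A']})
sales_2023 = pd.DataFrame({'customer_name': ['Cid'], 'amount': [-999.0]})

# Map each source's column names onto one shared schema
sales_2022 = sales_2022.rename(columns={'Customer': 'customer', 'Amount': 'amount'})
sales_2023 = sales_2023.rename(columns={'customer_name': 'customer'})

combined = pd.concat([sales_2022, sales_2023], ignore_index=True)

# Unify the missing-value conventions and the data type
combined['amount'] = pd.to_numeric(combined['amount'], errors='coerce')   # 'N/A' -> NaN
combined['amount'] = combined['amount'].mask(combined['amount'] == -999)  # sentinel -> NaN
print(combined)
```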

Multiple Types in One Table

For datasets that involve values collected at multiple levels or on different types of observational units, Easylibpal applies a normalization process. This involves breaking down the dataset into multiple tables, each representing a distinct type of observational unit. For example, if a dataset contains information about songs and their rankings over time, Easylibpal would separate this into two tables: one for song details and another for rankings. This normalization ensures that each fact is expressed in only one place, reducing inconsistencies and making the data more manageable for analysis.

Data Semantics

Easylibpal ensures that the data is organized in a way that aligns with the principles of data semantics, where every value belongs to a variable and an observation. This organization is crucial for the algorithms to interpret the data correctly. Easylibpal might use the pandas counterparts of `pivot_longer` and `pivot_wider` from R's tidyverse (`melt` and `pivot`) to reshape the data into a long format, where each row represents a single observation and each column represents a single variable. This format is particularly useful for algorithms that require a consistent structure for input data.
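
In pandas, the closest equivalents are `DataFrame.melt` (wide to long) and `DataFrame.pivot` (long to wide). A minimal sketch on made-up ranking data, where the column names are assumptions:

```python
import pandas as pd

# Wide layout: one column per week (hypothetical ranking data)
wide = pd.DataFrame({
    'track': ['Song A', 'Song B'],
    'wk1': [5, 12],
    'wk2': [3, 15],
})

# melt() reshapes to long format: one row per (track, week) observation
long = wide.melt(id_vars='track', var_name='week', value_name='rank')

# pivot() goes back to the wide layout
wide_again = long.pivot(index='track', columns='week', values='rank')
print(long)
```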

Messy Data

Dealing with messy data, which can include inconsistent data types, missing values, and outliers, is a common challenge in data science. Easylibpal addresses this by implementing robust data cleaning and preprocessing steps. This includes handling missing values (e.g., imputation or deletion), converting data types to ensure consistency, and identifying and removing outliers. These steps are crucial for preparing the data in a format that is suitable for the algorithms, ensuring that the algorithms can effectively learn from the data without being hindered by its inconsistencies.

To implement these principles in Python, Easylibpal would leverage libraries like pandas for data manipulation and preprocessing. Here's a conceptual example of how Easylibpal might handle a dataset with multiple types in one table:

```python

import pandas as pd

# Load the dataset
dataset = pd.read_csv('your_dataset.csv')

# Normalize the dataset by separating it into two tables
song_table = dataset[['artist', 'track']].drop_duplicates().reset_index(drop=True)
song_table['song_id'] = range(1, len(song_table) + 1)

ranking_table = dataset[['artist', 'track', 'week', 'rank']].drop_duplicates().reset_index(drop=True)

# Now, song_table and ranking_table can be used separately for analysis

```

This example demonstrates how Easylibpal might normalize a dataset with multiple types of observational units into separate tables, ensuring that each type of observational unit is stored in its own table. The actual implementation would need to adapt this approach based on the specific structure and requirements of the dataset being processed.
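
For each fact to live in only one place, the ranking table would normally reference songs by key rather than repeat the artist and track. A possible follow-up step, sketched on a small in-memory stand-in for the dataset (the values are made up):

```python
import pandas as pd

# Small stand-in for the combined dataset (hypothetical values)
dataset = pd.DataFrame({
    'artist': ['A', 'A', 'B'],
    'track': ['x', 'x', 'y'],
    'week': [1, 2, 1],
    'rank': [10, 8, 40],
})

song_table = dataset[['artist', 'track']].drop_duplicates().reset_index(drop=True)
song_table['song_id'] = range(1, len(song_table) + 1)

# Replace the repeated artist/track pair with the song_id key
ranking_table = dataset.merge(song_table, on=['artist', 'track'])[['song_id', 'week', 'rank']]
print(ranking_table)
```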

CLEAN DATA

Easylibpal employs a comprehensive set of data cleaning and preprocessing steps to handle messy data, ensuring that the data is in a suitable format for machine learning algorithms. These steps are crucial for improving the accuracy and reliability of the models, as well as preventing misleading results and conclusions. Here's a detailed look at the specific steps Easylibpal might employ:

1. Remove Irrelevant Data

The first step involves identifying and removing data that is not relevant to the analysis or modeling task at hand. This could include columns or rows that do not contribute to the predictive power of the model or are not necessary for the analysis.

2. Deduplicate Data

Deduplication is the process of removing duplicate entries from the dataset. Duplicates can skew the analysis and lead to incorrect conclusions. Easylibpal would use appropriate methods to identify and remove duplicates, ensuring that each entry in the dataset is unique.

3. Fix Structural Errors

Structural errors in the dataset, such as inconsistent data types, incorrect values, or formatting issues, can significantly impact the performance of machine learning algorithms. Easylibpal would employ data cleaning techniques to correct these errors, ensuring that the data is consistent and correctly formatted.

4. Deal with Missing Data

Handling missing data is a common challenge in data preprocessing. Easylibpal might use techniques such as imputation (filling missing values with statistical estimates like mean, median, or mode) or deletion (removing rows or columns with missing values) to address this issue. The choice of method depends on the nature of the data and the specific requirements of the analysis.

5. Filter Out Data Outliers

Outliers can significantly affect the performance of machine learning models. Easylibpal would use statistical methods to identify and filter out outliers, ensuring that the data is more representative of the population being analyzed.

6. Validate Data

The final step involves validating the cleaned and preprocessed data to ensure its quality and accuracy. This could include checking for consistency, verifying the correctness of the data, and ensuring that the data meets the requirements of the machine learning algorithms. Easylibpal would employ validation techniques to confirm that the data is ready for analysis.

To implement these data cleaning and preprocessing steps in Python, Easylibpal would leverage libraries like pandas and scikit-learn. Here's a conceptual example of how these steps might be integrated into the Easylibpal class:

```python

import pandas as pd
from sklearn.impute import SimpleImputer

class Easylibpal:
    def __init__(self, dataset):
        # dataset is expected to be a pandas DataFrame
        self.dataset = dataset

    def clean_and_preprocess(self):
        # Remove irrelevant data
        self.dataset = self.dataset.drop(['irrelevant_column'], axis=1)

        # Deduplicate data
        self.dataset = self.dataset.drop_duplicates()

        # Fix structural errors (example: correct data type)
        self.dataset['correct_data_type_column'] = self.dataset['correct_data_type_column'].astype(float)

        # Deal with missing data (example: imputation)
        imputer = SimpleImputer(strategy='mean')
        self.dataset['missing_data_column'] = imputer.fit_transform(
            self.dataset[['missing_data_column']]
        ).ravel()

        # Filter out data outliers (example: using Z-score)
        # This step requires a more detailed implementation based on the specific dataset

        # Validate data (example: checking for NaN values)
        assert not self.dataset.isnull().values.any(), "Data still contains NaN values"

        # Return the cleaned and preprocessed dataset
        return self.dataset

# Usage
pal = Easylibpal(dataset=pd.read_csv('your_dataset.csv'))
cleaned_dataset = pal.clean_and_preprocess()

```

This example demonstrates a simplified approach to data cleaning and preprocessing within Easylibpal. The actual implementation would need to adapt these steps based on the specific characteristics and requirements of the dataset being processed.
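
The outlier step is left open in the example above. One common option is a Z-score filter, sketched below; the helper name `drop_zscore_outliers` and the threshold of 3 standard deviations are assumptions, and other rules (such as IQR-based filtering) may suit a given dataset better.

```python
import pandas as pd


def drop_zscore_outliers(df, column, threshold=3.0):
    """Keep rows whose value in `column` lies within `threshold` standard deviations of the mean."""
    z = (df[column] - df[column].mean()) / df[column].std()
    return df[z.abs() <= threshold]


# 20 ordinary readings plus one extreme value
df = pd.DataFrame({'value': [10, 11, 9, 10, 12] * 4 + [500]})
filtered = drop_zscore_outliers(df, 'value')
print(len(df), len(filtered))
```

Note that the mean and standard deviation are themselves pulled by the outlier, so on very small samples a Z-score filter may fail to flag anything.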

VALUE DATA

Easylibpal determines which data is irrelevant and can be removed through a combination of domain knowledge, data analysis, and automated techniques. The process involves identifying data that does not contribute to the analysis, research, or goals of the project, and removing it to improve the quality, efficiency, and clarity of the data. Here's how Easylibpal might approach this:

Domain Knowledge

Easylibpal leverages domain knowledge to identify data that is not relevant to the specific goals of the analysis or modeling task. This could include data that is out of scope, outdated, duplicated, or erroneous. By understanding the context and objectives of the project, Easylibpal can systematically exclude data that does not add value to the analysis.

Data Analysis

Easylibpal employs data analysis techniques to identify irrelevant data. This involves examining the dataset to understand the relationships between variables, the distribution of data, and the presence of outliers or anomalies. Data that does not have a significant impact on the predictive power of the model or the insights derived from the analysis is considered irrelevant.

Automated Techniques

Easylibpal uses automated tools and methods to remove irrelevant data. This includes filtering techniques to select or exclude certain rows or columns based on criteria or conditions, aggregating data to reduce its complexity, and deduplicating to remove duplicate entries. Tools like Excel, Google Sheets, Tableau, Power BI, OpenRefine, Python, R, Data Linter, Data Cleaner, and Data Wrangler can be employed for these purposes.

Examples of Irrelevant Data

- Personally Identifiable Information (PII): Data such as names, addresses, and phone numbers is irrelevant for most analytical purposes and should be removed to protect privacy and comply with data protection regulations.

- URLs and HTML Tags: These are typically not relevant to the analysis and can be removed to clean up the dataset.

- Boilerplate Text: Excessive blank space or boilerplate text (e.g., in emails) adds noise to the data and can be removed.

- Tracking Codes: These are used for tracking user interactions and do not contribute to the analysis.

To implement these steps in Python, Easylibpal might use pandas for data manipulation and filtering. Here's a conceptual example of how to remove irrelevant data:

```python

import pandas as pd

# Load the dataset

dataset = pd.read_csv('your_dataset.csv')

# Remove irrelevant columns (example: email addresses)

dataset = dataset.drop(['email_address'], axis=1)

# Remove rows with missing values (example: if a column is required for analysis)

dataset = dataset.dropna(subset=['required_column'])

# Deduplicate data

dataset = dataset.drop_duplicates()

# Return the cleaned dataset

cleaned_dataset = dataset

```

This example demonstrates how Easylibpal might remove irrelevant data from a dataset using Python and pandas. The actual implementation would need to adapt these steps based on the specific characteristics and requirements of the dataset being processed.
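
For free-text columns, the URL and HTML-tag cases listed earlier can be handled with the standard `re` module. The patterns below are deliberately simple assumptions for illustration, not a robust HTML parser, and `strip_noise` is a hypothetical helper name.

```python
import re

TAG_PATTERN = re.compile(r'<[^>]+>')
URL_PATTERN = re.compile(r'https?://\S+')


def strip_noise(text):
    """Remove HTML tags, then URLs, then collapse runs of whitespace."""
    text = TAG_PATTERN.sub('', text)   # tags first, so a URL never swallows a closing tag
    text = URL_PATTERN.sub('', text)
    return re.sub(r'\s+', ' ', text).strip()


print(strip_noise('<p>Great   product!</p> See https://example.com/item?id=1 for details'))
```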

Detecting Inconsistencies

Easylibpal starts by detecting inconsistencies in the data. This involves identifying discrepancies in data types, missing values, duplicates, and formatting errors. By detecting these inconsistencies, Easylibpal can take targeted actions to address them.

Handling Formatting Errors

Formatting errors, such as inconsistent data types for the same feature, can significantly impact the analysis. Easylibpal uses functions like `astype()` in pandas to convert data types, ensuring uniformity and consistency across the dataset. This step is crucial for preparing the data for analysis, as it ensures that each feature is in the correct format expected by the algorithms.

Handling Missing Values

Missing values are a common issue in datasets. Before choosing a strategy, it helps to consult subject matter experts to understand why data might be missing. If the data is missing completely at random (MCAR), Easylibpal might choose to drop the affected rows. In other cases, Easylibpal might employ imputation techniques to fill in missing values, so that the dataset is complete and ready for analysis.

Handling Duplicates

Duplicate entries can skew the analysis and lead to incorrect conclusions. Easylibpal uses pandas to identify and remove duplicates, ensuring that each entry in the dataset is unique. This step is crucial for maintaining the integrity of the data and ensuring that the analysis is based on distinct observations.

Handling Inconsistent Values

Inconsistent values, such as different representations of the same concept (e.g., "yes" vs. "y" for a binary variable), can also pose challenges. Easylibpal employs data cleaning techniques to standardize these values, ensuring that the data is consistent and can be accurately analyzed.

To implement these steps in Python, Easylibpal would leverage pandas for data manipulation and preprocessing. Here's a conceptual example of how these steps might be integrated into the Easylibpal class:

```python

import pandas as pd

class Easylibpal:
    def __init__(self, dataset):
        # dataset is expected to be a pandas DataFrame
        self.dataset = dataset

    def clean_and_preprocess(self):
        # Detect inconsistencies (example: check data types)
        print(self.dataset.dtypes)

        # Handle formatting errors (example: convert data types)
        self.dataset['date_column'] = pd.to_datetime(self.dataset['date_column'])

        # Handle missing values (example: drop rows with missing values)
        self.dataset = self.dataset.dropna(subset=['required_column'])

        # Handle duplicates (example: drop duplicates)
        self.dataset = self.dataset.drop_duplicates()

        # Handle inconsistent values (example: standardize values)
        self.dataset['binary_column'] = self.dataset['binary_column'].map({'yes': 1, 'no': 0})

        # Return the cleaned and preprocessed dataset
        return self.dataset

# Usage
pal = Easylibpal(dataset=pd.read_csv('your_dataset.csv'))
cleaned_dataset = pal.clean_and_preprocess()

```

This example demonstrates a simplified approach to handling inconsistent or messy data within Easylibpal. The actual implementation would need to adapt these steps based on the specific characteristics and requirements of the dataset being processed.
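
A plain `map({'yes': 1, 'no': 0})` turns any other spelling, such as "y" or "Yes", into NaN. A slightly more forgiving sketch normalizes case and whitespace first; the `YES_NO` table and the helper name are assumptions for illustration.

```python
import pandas as pd

# Hypothetical mapping of known spellings to one canonical code
YES_NO = {'yes': 1, 'y': 1, 'true': 1, 'no': 0, 'n': 0, 'false': 0}


def standardize_binary(series):
    """Normalize case and whitespace, then map known variants; unknown values become NaN."""
    cleaned = series.astype(str).str.strip().str.lower()
    return cleaned.map(YES_NO)


raw = pd.Series(['Yes', ' y', 'NO', 'n ', 'maybe'])
standardized = standardize_binary(raw)
print(standardized.tolist())
```

Values that remain NaN after mapping are worth inspecting by hand rather than silently dropping.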

Statistical Imputation

Statistical imputation involves replacing missing values with statistical estimates such as the mean, median, or mode of the available data. This method is straightforward and can be effective for numerical data. For categorical data, mode imputation is commonly used. The choice of imputation method depends on the distribution of the data and the nature of the missing values.

Model-Based Imputation

Model-based imputation uses machine learning models to predict missing values. This approach can be more sophisticated and potentially more accurate than statistical imputation, especially for complex datasets. Techniques like K-Nearest Neighbors (KNN) imputation can be used, where the missing values are replaced with the values of the K nearest neighbors in the feature space.

Using SimpleImputer in scikit-learn

The scikit-learn library provides the `SimpleImputer` class for statistical imputation. `SimpleImputer` can replace missing values with the mean, median, most frequent value (mode), or a constant. It does not perform model-based imputation; for that, scikit-learn offers separate classes such as `KNNImputer` for KNN imputation.

To implement these imputation techniques in Python, Easylibpal might use the `SimpleImputer` class from scikit-learn. Here's an example of how to use `SimpleImputer` for statistical imputation:

```python

from sklearn.impute import SimpleImputer
import pandas as pd

# Load the dataset
dataset = pd.read_csv('your_dataset.csv')

# Initialize SimpleImputer for numerical columns
num_imputer = SimpleImputer(strategy='mean')

# Fit and transform the numerical columns
num_cols = ['numerical_column1', 'numerical_column2']
dataset[num_cols] = num_imputer.fit_transform(dataset[num_cols])

# Initialize SimpleImputer for categorical columns
cat_imputer = SimpleImputer(strategy='most_frequent')

# Fit and transform the categorical columns
cat_cols = ['categorical_column1', 'categorical_column2']
dataset[cat_cols] = cat_imputer.fit_transform(dataset[cat_cols])

# The dataset now has missing values imputed

```

This example demonstrates how to use `SimpleImputer` to fill in missing values in both numerical and categorical columns of a dataset. The actual implementation would need to adapt these steps based on the specific characteristics and requirements of the dataset being processed.
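
For the KNN-based imputation described earlier, scikit-learn ships a separate `KNNImputer` class. A minimal sketch on a small made-up array, where the choice of `n_neighbors=2` is an assumption for illustration:

```python
import numpy as np
from sklearn.impute import KNNImputer

X = np.array([
    [1.0, 2.0],
    [2.0, np.nan],
    [3.0, 6.0],
    [4.0, 8.0],
])

# Each missing entry is filled with the mean of that feature over the
# n_neighbors closest rows (distances use only the features that are present)
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)
print(X_filled)
```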

Model-based imputation techniques, such as Multiple Imputation by Chained Equations (MICE), offer powerful ways to handle missing data by using statistical models to predict missing values. However, these techniques come with their own set of limitations and potential drawbacks:

1. Complexity and Computational Cost

Model-based imputation methods can be computationally intensive, especially for large datasets or complex models. This can lead to longer processing times and increased computational resources required for imputation.

2. Overfitting and Convergence Issues

These methods are prone to overfitting, where the imputation model captures noise in the data rather than the underlying pattern. Overfitting can lead to imputed values that are too closely aligned with the observed data, potentially introducing bias into the analysis. Additionally, convergence issues may arise, where the imputation process does not settle on a stable solution.

3. Assumptions About Missing Data

Model-based imputation techniques often assume that the data is missing at random (MAR), which means that the probability of a value being missing may depend on other observed variables but not on the missing value itself. This assumption may not hold in all cases, leading to biased imputations if the data is missing not at random (MNAR).

4. Need for Suitable Regression Models

For each variable with missing values, a suitable regression model must be chosen. Selecting the wrong model can lead to inaccurate imputations. The choice of model depends on the nature of the data and the relationship between the variable with missing values and other variables.

5. Combining Imputed Datasets

After imputing missing values, there is a challenge in combining the multiple imputed datasets to produce a single, final dataset. This requires careful consideration of how to aggregate the imputed values and can introduce additional complexity and uncertainty into the analysis.

6. Lack of Transparency

The process of model-based imputation can be less transparent than simpler imputation methods, such as mean or median imputation. This can make it harder to justify the imputation process, especially in contexts where the reasons for missing data are important, such as in healthcare research.

Despite these limitations, model-based imputation techniques can be highly effective for handling missing data in datasets where the missingness is MAR and where the relationships between variables are complex. Careful consideration of the assumptions, the choice of models, and the methods for combining imputed datasets is crucial to mitigate these drawbacks and ensure the validity of the imputation process.
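
To make the missing-data assumption more concrete, here is a deterministic toy example using only the standard library. Income is missing whenever the observed age is 50 or more, so the missingness depends on an observed variable (a MAR-style mechanism). The numbers are invented; the point is only that simply dropping incomplete rows biases the estimate, which is the gap a model that uses age could help close.

```python
from statistics import mean

# Deterministic toy data: income rises with age
ages = list(range(20, 70))               # fully observed
incomes = [1000 * age for age in ages]   # true values

# Income is missing whenever the observed age is 50 or more
observed = [inc if age < 50 else None for age, inc in zip(ages, incomes)]

true_mean = mean(incomes)
complete_case_mean = mean(v for v in observed if v is not None)

print(true_mean, complete_case_mean)
```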

USING EASYLIBPAL FOR AI ALGORITHM INTEGRATION OFFERS SEVERAL SIGNIFICANT BENEFITS, PARTICULARLY IN ENHANCING EVERYDAY LIFE AND REVOLUTIONIZING VARIOUS SECTORS. HERE'S A DETAILED LOOK AT THE ADVANTAGES:

1. Enhanced Communication: AI, through Easylibpal, can significantly improve communication by categorizing messages, prioritizing inboxes, and providing instant customer support through chatbots. This ensures that critical information is not missed and that customer queries are resolved promptly.

2. Creative Endeavors: Beyond mundane tasks, AI can also contribute to creative endeavors. For instance, photo editing applications can use AI algorithms to enhance images, suggesting edits that align with aesthetic preferences. Music composition tools can generate melodies based on user input, inspiring musicians and amateurs alike to explore new artistic horizons. These innovations empower individuals to express themselves creatively with AI as a collaborative partner.

3. Daily Life Enhancement: AI, integrated through Easylibpal, has the potential to enhance daily life exponentially. Smart homes equipped with AI-driven systems can adjust lighting, temperature, and security settings according to user preferences. Autonomous vehicles promise safer and more efficient commuting experiences. Predictive analytics can optimize supply chains, reducing waste and ensuring goods reach users when needed.

4. Paradigm Shift in Technology Interaction: The integration of AI into our daily lives is not just a trend; it's a paradigm shift that's redefining how we interact with technology. By streamlining routine tasks, personalizing experiences, revolutionizing healthcare, enhancing communication, and fueling creativity, AI is opening doors to a more convenient, efficient, and tailored existence.

5. Responsible Benefit Harnessing: As we embrace AI's transformational power, it's essential to approach its integration with a sense of responsibility, ensuring that its benefits are harnessed for the betterment of society as a whole. This approach aligns with the ethical considerations of using AI, emphasizing the importance of using AI in a way that benefits all stakeholders.

In summary, Easylibpal facilitates the integration and use of AI algorithms in a manner that is accessible and beneficial across various domains, from enhancing communication and creative endeavors to revolutionizing daily life and promoting a paradigm shift in technology interaction. This integration not only streamlines the application of AI but also ensures that its benefits are harnessed responsibly for the betterment of society.

USING EASYLIBPAL OVER TRADITIONAL AI LIBRARIES OFFERS SEVERAL BENEFITS, PARTICULARLY IN TERMS OF EASE OF USE, EFFICIENCY, AND THE ABILITY TO APPLY AI ALGORITHMS WITH MINIMAL CONFIGURATION. HERE ARE THE KEY ADVANTAGES:

- Simplified Integration: Easylibpal abstracts the complexity of traditional AI libraries, making it easier for users to integrate classic AI algorithms into their projects. This simplification reduces the learning curve and allows developers and data scientists to focus on their core tasks without getting bogged down by the intricacies of AI implementation.

- User-Friendly Interface: By providing a unified platform for various AI algorithms, Easylibpal offers a user-friendly interface that streamlines the process of selecting and applying algorithms. This interface is designed to be intuitive and accessible, enabling users to experiment with different algorithms with minimal effort.

- Enhanced Productivity: The ability to effortlessly instantiate algorithms, fit models with training data, and make predictions with minimal configuration significantly enhances productivity. This efficiency allows for rapid prototyping and deployment of AI solutions, enabling users to bring their ideas to life more quickly.

- Democratization of AI: Easylibpal democratizes access to classic AI algorithms, making them accessible to a wider range of users, including those with limited programming experience. This democratization empowers users to leverage AI in various domains, fostering innovation and creativity.

- Automation of Repetitive Tasks: By automating the process of applying AI algorithms, Easylibpal helps users save time on repetitive tasks, allowing them to focus on more complex and creative aspects of their projects. This automation is particularly beneficial for users who may not have extensive experience with AI but still wish to incorporate AI capabilities into their work.

- Personalized Learning and Discovery: Easylibpal can be used to enhance personalized learning experiences and discovery mechanisms, similar to the benefits seen in academic libraries. By analyzing user behaviors and preferences, Easylibpal can tailor recommendations and resource suggestions to individual needs, fostering a more engaging and relevant learning journey.

- Data Management and Analysis: Easylibpal aids in managing large datasets efficiently and deriving meaningful insights from data. This capability is crucial in today's data-driven world, where the ability to analyze and interpret large volumes of data can significantly impact research outcomes and decision-making processes.

In summary, Easylibpal offers a simplified, user-friendly approach to applying classic AI algorithms, enhancing productivity, democratizing access to AI, and automating repetitive tasks. These benefits make Easylibpal a valuable tool for developers, data scientists, and users looking to leverage AI in their projects without the complexities associated with traditional AI libraries.

2 notes

·

View notes

Text

Introduction: The Evolution of Web Scraping

Traditional Web Scraping involves deploying scrapers on dedicated servers or local machines, using tools like Python, BeautifulSoup, and Selenium. While effective for small-scale tasks, these methods require constant monitoring, manual scaling, and significant infrastructure management. Developers often need to handle cron jobs, storage, IP rotation, and failover mechanisms themselves. Any sudden spike in demand could result in performance bottlenecks or downtime. As businesses grow, these challenges make traditional scraping harder to maintain. This is where new-age, cloud-based approaches like Serverless Web Scraping emerge as efficient alternatives, helping automate, scale, and streamline data extraction.

Challenges of Manual Scraper Deployment (Scaling, Infrastructure, Cost)

Manual scraper deployment comes with numerous operational challenges. Scaling scrapers to handle large datasets or traffic spikes requires robust infrastructure and resource allocation. Managing servers involves ongoing costs, including hosting, maintenance, load balancing, and monitoring. Additionally, handling failures, retries, and scheduling manually can lead to downtime or missed data. These issues slow down development and increase overhead. In contrast, Serverless Web Scraping removes the need for dedicated servers by running scraping tasks on platforms like AWS Lambda, Azure Functions, and Google Cloud Functions, offering auto-scaling and cost-efficiency on a pay-per-use model.

Introduction to Serverless Web Scraping as a Game-Changer

What is Serverless Web Scraping?

Serverless Web Scraping refers to the process of extracting data from websites using cloud-based, event-driven architecture, without the need to manage underlying servers. In cloud computing, "serverless" means the cloud provider automatically handles infrastructure scaling, provisioning, and resource allocation. This enables developers to focus purely on writing the logic of Data Collection, while the platform takes care of execution.

Popular Cloud Providers like AWS Lambda, Azure Functions, and Google Cloud Functions offer robust platforms for deploying these scraping tasks. Developers write small, stateless functions that are triggered by events such as HTTP requests, file uploads, or scheduled intervals—referred to as Scheduled Scraping and Event-Based Triggers. These functions are executed in isolated containers, providing secure, cost-effective, and on-demand scraping capabilities.

The core advantage is Lightweight Data Extraction. Instead of running a full scraper continuously on a server, serverless functions only execute when needed—making them highly efficient. Use cases include:

Scheduled Scraping (e.g., extracting prices every 6 hours)

Real-time scraping triggered by user queries

API-less extraction where data is not available via public APIs

These functionalities allow businesses to collect data at scale without investing in infrastructure or DevOps.

Key Benefits of Serverless Web Scraping

Scalability on Demand

One of the strongest advantages of Serverless Web Scraping is its ability to scale automatically. When using Cloud Providers like AWS Lambda, Azure Functions, or Google Cloud Functions, your scraping tasks can scale from a few requests to thousands instantly—without any manual intervention. For example, an e-commerce brand tracking product listings during flash sales can instantly scale their Data Collection tasks to accommodate massive price updates across multiple platforms in real time.

Cost-Effectiveness (Pay-as-You-Go Model)

Traditional Web Scraping involves paying for full-time servers, regardless of usage. With serverless solutions, you only pay for the time your code is running. This pay-as-you-go model significantly reduces costs, especially for intermittent scraping tasks. For instance, a marketing agency running weekly Scheduled Scraping to track keyword rankings or competitor ads will only be billed for those brief executions—making Serverless Web Scraping extremely budget-friendly.

Zero Server Maintenance

Server management can be tedious and resource-intensive, especially when deploying at scale. Serverless frameworks eliminate the need for provisioning, patching, or maintaining infrastructure. A developer scraping real estate listings no longer needs to manage server health or uptime. Instead, they focus solely on writing scraping logic, while Cloud Providers handle the backend processes, ensuring smooth, uninterrupted Lightweight Data Extraction.

Improved Reliability and Automation

Using Event-Based Triggers (like new data uploads, emails, or HTTP calls), serverless scraping functions can be scheduled or executed automatically based on specific events. This guarantees better uptime and reduces the likelihood of missing important updates. For example, Azure Functions can be triggered every time a CSV file is uploaded to the cloud, automating the Data Collection pipeline.

Environmentally Efficient

Traditional servers consume energy 24/7, regardless of activity. Serverless environments run functions only when needed, minimizing energy usage and environmental impact. This makes Serverless Web Scraping an eco-friendly option. Businesses concerned with sustainability can reduce their carbon footprint while efficiently extracting vital business intelligence.

Ideal Use Cases for Serverless Web Scraping

1. Market and Price Monitoring

Serverless Web Scraping enables retailers and analysts to monitor competitor prices in real time using Scheduled Scraping or Event-Based Triggers.

Example:

A fashion retailer uses AWS Lambda to scrape competitor pricing data every 4 hours. This allows dynamic pricing updates without maintaining any servers, leading to a 30% improvement in pricing competitiveness and a 12% uplift in revenue.

2. E-commerce Product Data Collection

Collect structured product information (SKUs, availability, images, etc.) from multiple e-commerce platforms using Lightweight Data Extraction methods via serverless setups.

Example:

An online electronics aggregator uses Google Cloud Functions to scrape product specs and availability across 50+ vendors daily. By automating Data Collection, they reduce manual data entry costs by 80%.

3. Real-Time News and Sentiment Tracking

Use Web Scraping to monitor breaking news or updates relevant to your industry and feed it into dashboards or sentiment engines.

Example:

A fintech firm uses Azure Functions to scrape financial news from Bloomberg and CNBC every 5 minutes. The data is piped into a sentiment analysis engine, helping traders act faster based on market sentiment—cutting reaction time by 40%.

4. Social Media Trend Analysis

Track hashtags, mentions, and viral content in real time across platforms like Twitter, Instagram, or Reddit using Serverless Web Scraping.

Example:

A digital marketing agency leverages AWS Lambda to scrape trending hashtags and influencer posts during product launches. This real-time Data Collection enables live campaign adjustments, improving engagement by 25%.

5. Mobile App Backend Scraping Using Mobile App Scraping Services

Extract backend content and APIs from mobile apps using Mobile App Scraping Services hosted via Cloud Providers.

Example:

A food delivery startup uses Google Cloud Functions to scrape menu availability and pricing data from a competitor’s app every 15 minutes. This helps optimize their own platform in real time, improving response speed and user satisfaction.

Technical Workflow of a Serverless Scraper

In this section, we’ll outline how a Lambda-based scraper works and how to integrate it with Web Scraping API Services and cloud triggers.

1. Step-by-Step on How a Typical Lambda-Based Scraper Functions

A Lambda-based scraper runs serverless functions that handle the data extraction process. Here’s a step-by-step workflow for a typical AWS Lambda-based scraper:

Step 1: Function Trigger

Lambda functions can be triggered by various events. Common triggers include API calls, file uploads, or scheduled intervals.

For example, a scraper function can be triggered by a cron job or a Scheduled Scraping event.

Example Lambda Trigger Code:

Lambda function is triggered based on a schedule (using EventBridge or CloudWatch).

requests.get fetches the web page.

BeautifulSoup processes the HTML to extract relevant data.
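
The trigger-fetch-parse flow described above might be sketched as follows. This is a minimal illustration, not the original example: it uses the standard library's urllib.request and html.parser in place of requests and BeautifulSoup so that it has no third-party dependencies, and TARGET_URL, TitleParser, and the choice of <h2> elements are hypothetical.

```python
import json
import urllib.request
from html.parser import HTMLParser

TARGET_URL = "https://example.com/products"  # hypothetical target page


class TitleParser(HTMLParser):
    """Collects the text inside every <h2> element (an assumed page layout)."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        if self._in_h2:
            self.titles[-1] += data


def fetch_html(url):
    """Download the page; stands in for requests.get in this sketch."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_titles(html):
    """Parse the HTML and return the cleaned <h2> texts."""
    parser = TitleParser()
    parser.feed(html)
    return [title.strip() for title in parser.titles if title.strip()]


def lambda_handler(event, context, fetch=fetch_html):
    """Entry point invoked by the schedule; `fetch` is injectable for testing."""
    titles = extract_titles(fetch(TARGET_URL))
    return {"statusCode": 200, "body": json.dumps({"titles": titles})}
```

Keeping the fetch step as a replaceable argument lets the parsing logic be exercised locally without network access before the function is deployed.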

Step 2: Data Collection

Once the Lambda function is triggered, the scraper fetches data from the targeted website. Data extraction logic is handled in the function using tools like BeautifulSoup or Selenium.

Step 3: Data Storage/Transmission

After collecting data, the scraper stores or transmits the results:

Save data to AWS S3 for storage.

Push data to an API for further processing.

Store results in a database like Amazon DynamoDB.
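
The S3 option above could look roughly like the sketch below. The bucket name, key layout, and helper names are assumptions for illustration; the only AWS call used is the S3 client's put_object, and boto3 is imported lazily so the helper can be tried locally with a stand-in client.

```python
import json
from datetime import datetime, timezone


def build_object_key(prefix="scrapes", now=None):
    """Build a date-partitioned key such as scrapes/2024/01/02/030405.json."""
    now = now or datetime.now(timezone.utc)
    return f"{prefix}/{now:%Y/%m/%d}/{now:%H%M%S}.json"


def save_to_s3(records, bucket, key, s3_client=None):
    """Serialize scraped records to JSON and upload them to S3."""
    if s3_client is None:
        import boto3  # available by default in the AWS Lambda Python runtime
        s3_client = boto3.client("s3")
    body = json.dumps(records).encode("utf-8")
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        ContentType="application/json",
    )
    return f"s3://{bucket}/{key}"
```

Inside a handler this might be called as save_to_s3(records, "my-scrape-bucket", build_object_key()), where the bucket name is a placeholder.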

2. Integration with Web Scraping API Services

Lambda can be used to call external Web Scraping API Services to handle more complex scraping tasks, such as bypassing captchas, managing proxies, and rotating IPs.

For instance, if you're using a service like ScrapingBee or ScraperAPI, the Lambda function can make an API call to fetch data.

Example: Integrating Web Scraping API Services
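
A hedged sketch of such a call is shown here. It assumes ScrapingBee's HTML endpoint at app.scrapingbee.com/api/v1/ with api_key, url, and render_js query parameters; check the provider's current documentation before relying on these. The SCRAPINGBEE_API_KEY environment variable and the "url" field on the event are hypothetical conventions.

```python
import os
import urllib.parse
import urllib.request

# Assumed endpoint; verify against the provider's documentation.
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_request_url(target_url, api_key, render_js=False):
    """Compose the API request URL, URL-encoding the target page address."""
    params = {
        "api_key": api_key,
        "url": target_url,
        "render_js": "true" if render_js else "false",
    }
    return SCRAPINGBEE_ENDPOINT + "?" + urllib.parse.urlencode(params)


def lambda_handler(event, context):
    """Ask the scraping service to fetch the page named in the event."""
    api_key = os.environ["SCRAPINGBEE_API_KEY"]  # hypothetical variable name
    target_url = event.get("url", "https://example.com")
    request_url = build_request_url(target_url, api_key)
    with urllib.request.urlopen(request_url, timeout=30) as response:
        html = response.read().decode("utf-8", errors="replace")
    # Return only a preview; a real function would parse or store the HTML.
    return {"statusCode": 200, "body": html[:1000]}
```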

In this case, ScrapingBee handles the web scraping complexities, and Lambda simply calls their API.

3. Using Cloud Triggers and Events

Lambda functions can be triggered in multiple ways based on events. Here are some examples of triggers used in Serverless Web Scraping:

Scheduled Scraping (Cron Jobs):

You can use Amazon EventBridge (formerly CloudWatch Events) to schedule your Lambda function to run at specific intervals (e.g., every hour, daily, or weekly).

Example: CloudWatch Event Rule (cron job) for Scheduled Scraping:
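
One way to create such a rule is with boto3's EventBridge client, sketched below. The rule name, target Id, and function ARN are placeholders, and the client is injectable so the helper can be tried without AWS credentials.

```python
def schedule_hourly_scrape(function_arn, rule_name="hourly-scrape", events_client=None):
    """Create (or update) an hourly rule and point it at the scraper Lambda."""
    if events_client is None:
        import boto3  # available by default in the AWS Lambda Python runtime
        events_client = boto3.client("events")
    rule = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 hour)",  # cron(0 * * * ? *) is equivalent
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "scraper-lambda", "Arn": function_arn}],
    )
    return rule["RuleArn"]
```

The Lambda function also needs a resource-based permission that allows events.amazonaws.com to invoke it; that step is omitted from this sketch.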

This will trigger the Lambda function to scrape a webpage every hour.

File Upload Trigger (Event-Based):

Lambda can be triggered by file uploads in S3. For example, after scraping, if the data is saved as a file, the file upload in S3 can trigger another Lambda function for processing.

Example: Trigger Lambda on S3 File Upload:
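
A minimal handler for this kind of trigger might look like the sketch below. It reads the bucket name and object key from the S3 event notification records; the processing step is left as a print statement, and the function names are illustrative.

```python
import urllib.parse


def extract_s3_objects(event):
    """Return (bucket, key) pairs for every record in an S3 notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    """Runs whenever a new scrape result file lands in the bucket."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        # Placeholder for real post-processing (cleaning, loading, alerting).
        print(f"New scrape result: s3://{bucket}/{key}")
    return {"processed": len(objects)}
```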

By leveraging Serverless Web Scraping using AWS Lambda, you can easily scale your web scraping tasks with Event-Based Triggers such as Scheduled Scraping, API calls, or file uploads. This approach ensures that you avoid the complexity of infrastructure management while still benefiting from scalable, automated data collection.

#LightweightDataExtraction#AutomatedDataExtraction#StreamlineDataExtraction#ServerlessWebScraping#DataMining

0 notes

Text

Is Python Training Certification Worth It? A Complete Breakdown

Introduction: Why Python, Why Now?