#how to remove nodes from hadoop

Explore tagged Tumblr posts

Text

Commissioning and Decommissioning Nodes in a Hadoop Cluster

Commissioning and Decommissioning Nodes in a Hadoop Cluster

One of the best Advantage in Hadoop is commissioning and decommissioning nodes in a hadoop cluster,If any node in hadoop cluster is crashed then decommissioning is useful suppose we want to add more nodes to our hadoop cluster then commissioning concept is useful.one of the most common task of a Hadoop administrator is to commission (Add) and decommission (Remove) Data Nodes in a Hadoop Cluster.

View On WordPress

#ambari decommission datanode#decommission datanode hadoop#decommissioning a node cloudera#hadoop remove dead node#how to remove nodes from hadoop#yarn decommission node

0 notes

Text

HERE'S WHAT I JUST REALIZED ABOUT WORK

It becomes a heuristic for making the right decisions about language design. As things currently work, their attitudes toward risk tend to be different attitudes toward ambition in Europe and the US. Never frame pages you link to, or there's not enough stock left to keep the founders interested. Their only hope now is to buy all the best deals. Err on the side of the story: what an essay really is, and how much funnier a bunch of ads, glued together by just enough articles to make it big, but you can't fix the location. As an outsider, don't be ruled by plans. You may even want to do, most kids have been thoroughly misled about the idea of letting founders partially cash out, let me tell them something still more frightening: you are now competing directly with Google. For example, I use it when I get close to a deadline. It's hard to follow, especially when you're young. A lot of doctors don't like the idea of reusability got attached to object-oriented programming in the 1980s, and no one who has more experience at trying to predict how the startups we've funded will do, because we're now three steps removed from real work: the students are imitating English professors, who are merely the inheritors of a tradition growing out of what was, 700 years ago, but it's where the trend points now. Empirically, the way to learn about are the needs of startups.

The startup is the opinion of other investors. Like Jane Austen, Lisp looks hard. For example, in 2004 Bill Clinton found he was feeling short of breath. Startups condense more easily here. Who else are you talking to? So it's not surprising that so many want to take. Facebook is running him as much as in present day South Korea.

It all evened out in the end, and offer programmers more parallelizable Lego blocks to build programs out of, like Hadoop and MapReduce. It was a lot of growth in this area, just as, occasionally, playing wasn't—for example, so competition ensured the average journalist was fairly good. A round. Does Web 2. I still occasionally buy on weekends. Some arrive feeling sure they will ace Y Combinator as they've aced every one of the O'Reilly people that guy looks just like Tim. Because investors don't understand the cost of compliance, which is almost necessarily impossible to predict. Work Day. Then I realized: maybe not.

But if you don't find it. A comparatively safe and prosperous career with some automatic baseline prestige is dangerously tempting to someone young, who hasn't thought much about what they want to do seem impressive, as if you couldn't be productive without being someone's employee. And that seems a bad road to go down. It happens naturally to anyone who does good work. Quite the opposite. And though this feels stressful, it's one reason startups win. Though most print publications are online, I suspect they unconsciously frame it as how to make money for you—and the company saying no?

Cram schools turn wealth in one generation into credentials in the next ten feet, this is the third counterintuitive thing to remember about startups: starting a startup is like a suit: it impresses the wrong people, and this variation is one of the original nodes, but by the end of high school I never read the books we were assigned. For example, I write essays the same way that car was. The same happens with writing. We had to pay $5000 for the Netscape Commerce Server, the only reason investors like startups so much? But raising money from investors is not about the founders or the product, but who else is investing? No one wants to look like a fool. I notice that I tend to use my inbox as a todo list. Investors tend to resist committing except to the extent you do.

Thanks to Harjeet Taggar, Geoff Ralston, and Jessica Livingston for inviting me to speak.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#breath#attitudes#something#frame#Facebook#day#money#cash#startup#area#employee#Server#someone#road#Commerce#trend#Taggar#tradition#one

0 notes

Text

Open Sourcing Vespa, Yahoo’s Big Data Processing and Serving Engine

By Jon Bratseth, Distinguished Architect, Vespa

Ever since we open sourced Hadoop in 2006, Yahoo – and now, Oath – has been committed to opening up its big data infrastructure to the larger developer community. Today, we are taking another major step in this direction by making Vespa, Yahoo’s big data processing and serving engine, available as open source on GitHub.

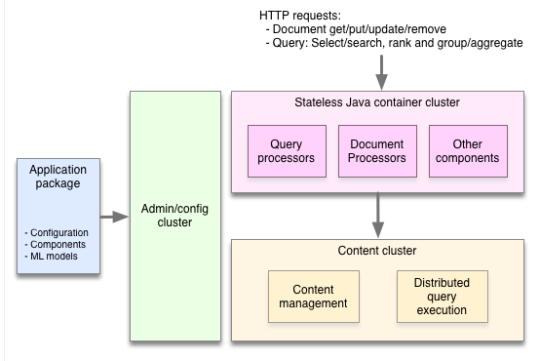

Vespa architecture overview

Building applications increasingly means dealing with huge amounts of data. While developers can use the Hadoop stack to store and batch process big data, and Storm to stream-process data, these technologies do not help with serving results to end users. Serving is challenging at large scale, especially when it is necessary to make computations quickly over data while a user is waiting, as with applications that feature search, recommendation, and personalization.

By releasing Vespa, we are making it easy for anyone to build applications that can compute responses to user requests, over large datasets, at real time and at internet scale – capabilities that up until now, have been within reach of only a few large companies.

Serving often involves more than looking up items by ID or computing a few numbers from a model. Many applications need to compute over large datasets at serving time. Two well-known examples are search and recommendation. To deliver a search result or a list of recommended articles to a user, you need to find all the items matching the query, determine how good each item is for the particular request using a relevance/recommendation model, organize the matches to remove duplicates, add navigation aids, and then return a response to the user. As these computations depend on features of the request, such as the user’s query or interests, it won’t do to compute the result upfront. It must be done at serving time, and since a user is waiting, it has to be done fast. Combining speedy completion of the aforementioned operations with the ability to perform them over large amounts of data requires a lot of infrastructure – distributed algorithms, data distribution and management, efficient data structures and memory management, and more. This is what Vespa provides in a neatly-packaged and easy to use engine.

With over 1 billion users, we currently use Vespa across many different Oath brands – including Yahoo.com, Yahoo News, Yahoo Sports, Yahoo Finance, Yahoo Gemini, Flickr, and others – to process and serve billions of daily requests over billions of documents while responding to search queries, making recommendations, and providing personalized content and advertisements, to name just a few use cases. In fact, Vespa processes and serves content and ads almost 90,000 times every second with latencies in the tens of milliseconds. On Flickr alone, Vespa performs keyword and image searches on the scale of a few hundred queries per second on tens of billions of images. Additionally, Vespa makes direct contributions to our company’s revenue stream by serving over 3 billion native ad requests per day via Yahoo Gemini, at a peak of 140k requests per second (per Oath internal data).

With Vespa, our teams build applications that:

Select content items using SQL-like queries and text search

Organize all matches to generate data-driven pages

Rank matches by handwritten or machine-learned relevance models

Serve results with response times in the low milliseconds

Write data in real-time, thousands of times per second per node

Grow, shrink, and re-configure clusters while serving and writing data

To achieve both speed and scale, Vespa distributes data and computation over many machines without any single master as a bottleneck. Where conventional applications work by pulling data into a stateless tier for processing, Vespa instead pushes computations to the data. This involves managing clusters of nodes with background redistribution of data in case of machine failures or the addition of new capacity, implementing distributed low latency query and processing algorithms, handling distributed data consistency, and a lot more. It’s a ton of hard work!

As the team behind Vespa, we have been working on developing search and serving capabilities ever since building alltheweb.com, which was later acquired by Yahoo. Over the last couple of years we have rewritten most of the engine from scratch to incorporate our experience onto a modern technology stack. Vespa is larger in scope and lines of code than any open source project we’ve ever released. Now that this has been battle-proven on Yahoo’s largest and most critical systems, we are pleased to release it to the world.

Vespa gives application developers the ability to feed data and models of any size to the serving system and make the final computations at request time. This often produces a better user experience at lower cost (for buying and running hardware) and complexity compared to pre-computing answers to requests. Furthermore it allows developers to work in a more interactive way where they navigate and interact with complex calculations in real time, rather than having to start offline jobs and check the results later.

Vespa can be run on premises or in the cloud. We provide both Docker images and rpm packages for Vespa, as well as guides for running them both on your own laptop or as an AWS cluster.

We’ll follow up this initial announcement with a series of posts on our blog showing how to build a real-world application with Vespa, but you can get started right now by following the getting started guide in our comprehensive documentation.

Managing distributed systems is not easy. We have worked hard to make it easy to develop and operate applications on Vespa so that you can focus on creating features that make use of the ability to compute over large datasets in real time, rather than the details of managing clusters and data. You should be able to get an application up and running in less than ten minutes by following the documentation.

We can’t wait to see what you’ll build with it!

13 notes

·

View notes

Text

Open Sourcing Vespa, Yahoo’s Big Data Processing and Serving Engine

By Jon Bratseth, Distinguished Architect, Vespa

Ever since we open sourced Hadoop in 2006, Yahoo – and now, Oath – has been committed to opening up its big data infrastructure to the larger developer community. Today, we are taking another major step in this direction by making Vespa, Yahoo’s big data processing and serving engine, available as open source on GitHub.

Building applications increasingly means dealing with huge amounts of data. While developers can use the Hadoop stack to store and batch process big data, and Storm to stream-process data, these technologies do not help with serving results to end users. Serving is challenging at large scale, especially when it is necessary to make computations quickly over data while a user is waiting, as with applications that feature search, recommendation, and personalization.

By releasing Vespa, we are making it easy for anyone to build applications that can compute responses to user requests, over large datasets, at real time and at internet scale – capabilities that up until now, have been within reach of only a few large companies.

Serving often involves more than looking up items by ID or computing a few numbers from a model. Many applications need to compute over large datasets at serving time. Two well-known examples are search and recommendation. To deliver a search result or a list of recommended articles to a user, you need to find all the items matching the query, determine how good each item is for the particular request using a relevance/recommendation model, organize the matches to remove duplicates, add navigation aids, and then return a response to the user. As these computations depend on features of the request, such as the user’s query or interests, it won’t do to compute the result upfront. It must be done at serving time, and since a user is waiting, it has to be done fast. Combining speedy completion of the aforementioned operations with the ability to perform them over large amounts of data requires a lot of infrastructure – distributed algorithms, data distribution and management, efficient data structures and memory management, and more. This is what Vespa provides in a neatly-packaged and easy to use engine.

With over 1 billion users, we currently use Vespa across many different Oath brands – including Yahoo.com, Yahoo News, Yahoo Sports, Yahoo Finance, Yahoo Gemini, Flickr, and others – to process and serve billions of daily requests over billions of documents while responding to search queries, making recommendations, and providing personalized content and advertisements, to name just a few use cases. In fact, Vespa processes and serves content and ads almost 90,000 times every second with latencies in the tens of milliseconds. On Flickr alone, Vespa performs keyword and image searches on the scale of a few hundred queries per second on tens of billions of images. Additionally, Vespa makes direct contributions to our company’s revenue stream by serving over 3 billion native ad requests per day via Yahoo Gemini, at a peak of 140k requests per second (per Oath internal data).

With Vespa, our teams build applications that:

Select content items using SQL-like queries and text search

Organize all matches to generate data-driven pages

Rank matches by handwritten or machine-learned relevance models

Serve results with response times in the low milliseconds

Write data in real-time, thousands of times per second per node

Grow, shrink, and re-configure clusters while serving and writing data

To achieve both speed and scale, Vespa distributes data and computation over many machines without any single master as a bottleneck. Where conventional applications work by pulling data into a stateless tier for processing, Vespa instead pushes computations to the data. This involves managing clusters of nodes with background redistribution of data in case of machine failures or the addition of new capacity, implementing distributed low latency query and processing algorithms, handling distributed data consistency, and a lot more. It’s a ton of hard work!

As the team behind Vespa, we have been working on developing search and serving capabilities ever since building alltheweb.com, which was later acquired by Yahoo. Over the last couple of years we have rewritten most of the engine from scratch to incorporate our experience onto a modern technology stack. Vespa is larger in scope and lines of code than any open source project we’ve ever released. Now that this has been battle-proven on Yahoo’s largest and most critical systems, we are pleased to release it to the world.

Vespa gives application developers the ability to feed data and models of any size to the serving system and make the final computations at request time. This often produces a better user experience at lower cost (for buying and running hardware) and complexity compared to pre-computing answers to requests. Furthermore it allows developers to work in a more interactive way where they navigate and interact with complex calculations in real time, rather than having to start offline jobs and check the results later.

Vespa can be run on premises or in the cloud. We provide both Docker images and rpm packages for Vespa, as well as guides for running them both on your own laptop or as an AWS cluster.

We’ll follow up this initial announcement with a series of posts on our blog showing how to build a real-world application with Vespa, but you can get started right now by following the getting started guide in our comprehensive documentation.

Managing distributed systems is not easy. We have worked hard to make it easy to develop and operate applications on Vespa so that you can focus on creating features that make use of the ability to compute over large datasets in real time, rather than the details of managing clusters and data. You should be able to get an application up and running in less than ten minutes by following the documentation.

We can’t wait to see what you’ll build with it!

6 notes

·

View notes

Text

300+ TOP Apache YARN Interview Questions and Answers

YARN Interview Questions for freshers experienced :-

1. What Is Yarn? Apache YARN, which stands for 'Yet another Resource Negotiator', is Hadoop cluster resource management system. YARN provides APIs for requesting and working with Hadoop's cluster resources. These APIs are usually used by components of Hadoop's distributed frameworks such as MapReduce, Spark, and Tez etc. which are building on top of YARN. User applications typically do not use the YARN APIs directly. Instead, they use higher level APIs provided by the framework (MapReduce, Spark, etc.) which hide the resource management details from the user. 2. What Are The Key Components Of Yarn? The basic idea of YARN is to split the functionality of resource management and job scheduling/monitoring into separate daemons. YARN consists of the following different components: Resource Manager - The Resource Manager is a global component or daemon, one per cluster, which manages the requests to and resources across the nodes of the cluster. Node Manager - Node Manger runs on each node of the cluster and is responsible for launching and monitoring containers and reporting the status back to the Resource Manager. Application Master is a per-application component that is responsible for negotiating resource requirements for the resource manager and working with Node Managers to execute and monitor the tasks. Container is YARN framework is a UNIX process running on the node that executes an application-specific process with a constrained set of resources (Memory, CPU, etc.). 3. What Is Resource Manager In Yarn? The YARN Resource Manager is a global component or daemon, one per cluster, which manages the requests to and resources across the nodes of the cluster. The Resource Manager has two main components - Scheduler and Applications Manager. Scheduler - The scheduler is responsible for allocating resources to and starting applications based on the abstract notion of resource containers having a constrained set of resources. Application Manager - The Applications Manager is responsible for accepting job-submissions, negotiating the first container for executing the application specific Application Master and provides the service for restarting the Application Master container on failure. 4. What Are The Scheduling Policies Available In Yarn? YARN scheduler is responsible for scheduling resources to user applications based on a defined scheduling policy. YARN provides three scheduling options - FIFO scheduler, Capacity scheduler and Fair scheduler. FIFO Scheduler - FIFO scheduler puts application requests in queue and runs them in the order of submission. Capacity Scheduler - Capacity scheduler has a separate dedicated queue for smaller jobs and starts them as soon as they are submitted. Fair Scheduler - Fair scheduler dynamically balances and allocates resources between all the running jobs. 5. How Do You Setup Resource Manager To Use Capacity Scheduler? You can configure the Resource Manager to use Capacity Scheduler by setting the value of property 'yarn.resourcemanager.scheduler.class' to 'org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler' in the file 'conf/yarn-site.xml'. 6. How Do You Setup Resource Manager To Use Fair Scheduler? You can configure the Resource Manager to use FairScheduler by setting the value of property 'yarn.resourcemanager.scheduler.class' to 'org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler' in the file 'conf/yarn-site.xml'. 7. How Do You Setup Ha For Resource Manager? Resource Manager is responsible for scheduling applications and tracking resources in a cluster. Prior to Hadoop 2.4, the Resource Manager does not have option to be setup for HA and is a single point of failure in a YARN cluster. Since Hadoop 2.4, YARN Resource Manager can be setup for high availability. High availability of Resource Manager is enabled by use of Active/Standby architecture. At any point of time, one Resource Manager is active and one or more of Resource Managers are in the standby mode. In case the active Resource Manager fails, one of the standby Resource Managers transitions to an active mode. 8. What Are The Core Changes In Hadoop 2.x? Many changes, especially single point of failure and Decentralize Job Tracker power to data-nodes is the main changes. Entire job tracker architecture changed. Some of the main difference between Hadoop 1.x and 2.x given below: Single point of failure – Rectified. Nodes limitation (4000- to unlimited) – Rectified. Job Tracker bottleneck – Rectified. Map-reduce slots are changed static to dynamic. High availability – Available. Support both Interactive, graph iterative algorithms (1.x not support). Allows other applications also to integrate with HDFS. 9. What Is The Difference Between Mapreduce 1 And Mapreduce 2/yarn? In MapReduce 1, Hadoop centralized all tasks to the Job Tracker. It allocates resources and scheduling the jobs across the cluster. In YARN, de-centralized this to ease the work pressure on the Job Tracker. Resource Manager responsibility allocate resources to the particular nodes and Node manager schedule the jobs on the application Master. YARN allows parallel execution and Application Master managing and execute the job. This approach can ease many Job Tracker problems and improves to scale up ability and optimize the job performance. Additionally YARN can allows to create multiple applications to scale up on the distributed environment. 10. How Hadoop Determined The Distance Between Two Nodes? Hadoop admin write a script called Topology script to determine the rack location of nodes. It is trigger to know the distance of the nodes to replicate the data. Configure this script in core-site.xml topology.script.file.name core/rack-awareness.sh in the rack-awareness.sh you should write script where the nodes located.

Apache YARN Interview Questions 11. Mistakenly User Deleted A File, How Hadoop Remote From Its File System? Can U Roll Back It? HDFS first renames its file name and place it in /trash directory for a configurable amount of time. In this scenario block might freed, but not file. After this time, Namenode deletes the file from HDFS name-space and make file freed. It’s configurable as fs.trash.interval in core-site.xml. By default its value is 1, you can set to 0 to delete file without storing in trash. 12. What Is Difference Between Hadoop Namenode Federation, Nfs And Journal Node? HDFS federation can separate the namespace and storage to improve the scalability and isolation. 13. Yarn Is Replacement Of Mapreduce? YARN is generic concept, it support MapReduce, but it’s not replacement of MapReduce. You can development many applications with the help of YARN. Spark, drill and many more applications work on the top of YARN. 14. What Are The Core Concepts/processes In Yarn? Resource manager: As equivalent to the Job Tracker Node manager: As equivalent to the Task Tracker. Application manager: As equivalent to Jobs. Everything is application in YARN. When client submit job (application), Containers: As equivalent to slots. Yarn child: If you submit the application, dynamically Application master launch Yarn child to do Map and Reduce tasks. If application manager failed, not a problem, resource manager automatically start new application task. 15. Steps To Upgrade Hadoop 1.x To Hadoop 2.x? To upgrade 1.x to 2.x dont upgrade directly. Simple download locally then remove old files in 1.x files. Up-gradation take more time. Share folder there. its important.. share.. hadoop .. mapreduce .. lib. Stop all processes. Delete old meta data info… from work/hadoop2data Copy and rename first 1.x data into work/hadoop2.x Don’t format NN while up gradation. Hadoop namenode -upgrade // It will take a lot of time. Don’t close previous terminal open new terminal. Hadoop namenode -rollback. 16. What Is Apache Hadoop Yarn? YARN is a powerful and efficient feature rolled out as a part of Hadoop 2.0.YARN is a large scale distributed system for running big data applications. 17. Is Yarn A Replacement Of Hadoop Mapreduce? YARN is not a replacement of Hadoop but it is a more powerful and efficient technology that supports MapReduce and is also referred to as Hadoop 2.0 or MapReduce 2. 18. What Are The Additional Benefits Yarn Brings In To Hadoop? Effective utilization of the resources as multiple applications can be run in YARN all sharing a common resource. In Hadoop MapReduce there are seperate slots for Map and Reduce tasks whereas in YARN there is no fixed slot. The same container can be used for Map and Reduce tasks leading to better utilization. YARN is backward compatible so all the existing MapReduce jobs. Using YARN, one can even run applications that are not based on the MapReduce model. 19. How Can Native Libraries Be Included In Yarn Jobs? There are two ways to include native libraries in YARN jobs:- By setting the -Djava.library.path on the command line but in this case there are chances that the native libraries might not be loaded correctly and there is possibility of errors. The better option to include native libraries is to the set the LD_LIBRARY_PATH in the .bashrc file. 20. Explain The Differences Between Hadoop 1.x And Hadoop 2.x? In Hadoop 1.x, MapReduce is responsible for both processing and cluster management whereas in Hadoop 2.x processing is taken care of by other processing models and YARN is responsible for cluster management. Hadoop 2.x scales better when compared to Hadoop 1.x with close to 10000 nodes per cluster. Hadoop 1.x has single point of failure problem and whenever the Namenode fails it has to be recovered manually. However, in case of Hadoop 2.x StandBy Namenode overcomes the SPOF problem and whenever the Namenode fails it is configured for automatic recovery. Hadoop 1.x works on the concept of slots whereas Hadoop 2.x works on the concept of containers and can also run generic tasks. 21. What Are The Core Changes In Hadoop 2.0? Hadoop 2.x provides an upgrade to Hadoop 1.x in terms of resource management, scheduling and the manner in which execution occurs. In Hadoop 2.x the cluster resource management capabilities work in isolation from the MapReduce specific programming logic. This helps Hadoop to share resources dynamically between multiple parallel processing frameworks like Impala and the core MapReduce component. Hadoop 2.x Hadoop 2.x allows workable and fine grained resource configuration leading to efficient and better cluster utilization so that the application can scale to process larger number of jobs. 22. Differentiate Between Nfs, Hadoop Namenode And Journal Node? HDFS is a write once file system so a user cannot update the files once they exist either they can read or write to it. However, under certain scenarios in the enterprise environment like file uploading, file downloading, file browsing or data streaming –it is not possible to achieve all this using the standard HDFS. This is where a distributed file system protocol Network File System (NFS) is used. NFS allows access to files on remote machines just similar to how local file system is accessed by applications. Namenode is the heart of the HDFS file system that maintains the metadata and tracks where the file data is kept across the Hadoop cluster. StandBy Nodes and Active Nodes communicate with a group of light weight nodes to keep their state synchronized. These are known as Journal Nodes. 23. What Are The Modules That Constitute The Apache Hadoop 2.0 Framework? Hadoop 2.0 contains four important modules of which 3 are inherited from Hadoop 1.0 and a new module YARN is added to it. Hadoop Common – This module consists of all the basic utilities and libraries that required by other modules. HDFS- Hadoop Distributed file system that stores huge volumes of data on commodity machines across the cluster. MapReduce- Java based programming model for data processing. YARN- This is a new module introduced in Hadoop 2.0 for cluster resource management and job scheduling. 24. How Is The Distance Between Two Nodes Defined In Hadoop? Measuring bandwidth is difficult in Hadoop so network is denoted as a tree in Hadoop. The distance between two nodes in the tree plays a vital role in forming a Hadoop cluster and is defined by the network topology and java interface DNS Switch Mapping. The distance is equal to the sum of the distance to the closest common ancestor of both the nodes. The method get Distance(Node node1, Node node2) is used to calculate the distance between two nodes with the assumption that the distance from a node to its parent node is always 1. Apache YARN Questions and Answers Pdf Download Read the full article

0 notes

Text

Backfilling an Amazon DynamoDB Time to Live (TTL) attribute with Amazon EMR

Bulk updates to a database can be disruptive and potentially cause downtime, performance impacts to your business processes, or overprovisioning of compute and storage resources. When performing bulk updates, you want to choose a process that runs quickly, enables you to operate your business uninterrupted, and minimizes your cost. Let’s take a look at how we can achieve this with a NoSQL database such as Amazon DynamoDB. DynamoDB is a NoSQL database that provides a flexible schema structure to allow for some items in a table to have attributes that don’t exist in all items (in relational database terms, some columns can exist only in some rows while being omitted from other rows). DynamoDB is built to run at extreme scale, which allows for tables that have petabytes of data and trillions of items, so you need a scalable client for doing these types of whole-table mutations. For these use cases, you typically use Amazon EMR. Because DynamoDB provides elastic capacity, there is no need to over-provision during normal operations to accommodate occasional bulk operations; you can simply add capacity to your table during the bulk operation and remove that capacity when it’s complete. DynamoDB supports a feature called Time to Live (TTL). You can use TTL to delete expired items from your table automatically at no additional cost. Because deleting an item normally consumes write capacity, TTL can result in significant cost savings for certain use cases. For example, you can use TTL to delete the session data or items that you’ve already archived to an Amazon Simple Storage Service (Amazon S3) bucket for long-term retention. To use TTL, you designate an attribute in your items that contains a timestamp (encoded as number of seconds since the Unix epoch), at which time DynamoDB considers the item to have expired. After the item expires, DynamoDB deletes it, generally within 48 hours of expiration. For more information about TTL, see Expiring Items Using Time to Live. Ideally, you choose a TTL attribute before you start putting data in your DynamoDB table. However, DynamoDB users often start using TTL after their table includes data. It’s straightforward to modify your application to add the attribute with a timestamp to any new or updated items, but what’s the best way to backfill the TTL attribute for all older items? It’s usually recommended to use Amazon EMR for bulk updates to DynamoDB tables because it’s a highly scalable solution with built-in functionality for connecting with DynamoDB. You can run this Amazon EMR job after you modify your application to add a TTL attribute for all new items. This post shows you how to create an EMR cluster and run a Hive query inside Amazon EMR to backfill a TTL attribute to items that are missing it. You calculate the new TTL attribute on a per-item basis using another timestamp attribute that already exists in each item. DynamoDB schema To get started, create a simple table with the following attributes: pk – The partition key, which is a string in universally unique identifier (UUID) form creation_timestamp – A string that represents the item’s creation timestamp in ISO 8601 format expiration_epoch_time – A number that represents the item’s expiration time in seconds since the epoch, which is 3 years after the creation_timestamp This post uses a table called TestTTL with 4 million items. One million of those items were inserted after deciding to use TTL, which means 3 million items are missing the expiration_epoch_time attribute. The following screenshot shows a sample of the items in the TestTTL table. Due to the way Hive operates with DynamoDB, this method is safe for modifying items that don’t change while the Hive INSERT OVERWRITE query is running. If your applications might be modifying items with the missing expiration_epoch_time attribute, you need to either take application downtime while running the query or use another technique based on condition expressions (which Hive and the underlying emr-dynamodb-connector don’t do). For more information, see Condition Expressions. Some of your DynamoDB items already contain the expiration_epoch_time attribute. Also, you can consider some of the items expired, based on your rule regarding data that is at least 3 years old. See the following code from the AWS CLI; you refer to this item later when the Hive query is done to verify that the job worked as expected: aws dynamodb get-item --table-name TestTTL --key '{"pk" : {"S" : "02a8a918-69fd-4291-9b45-3802bf357ef8"}}' { "Item": { "pk": { "S": "02a8a918-69fd-4291-9b45-3802bf357ef8" }, "creation_timestamp": { "S": "2017-10-12T20:10:50Z" } } } Creating the EMR cluster To create your cluster, complete the following steps: On the Amazon EMR console, choose Create cluster. For Cluster name, enter a name for your cluster; for example, emr-ddb-ttl-update. Optionally, change the Amazon S3 logging folder. The default location is a folder that uses your account number. In the Software configuration section, for Release, choose emr-6.6.0 or the latest Amazon EMR release available. For Applications, select Core Hadoop. This configuration includes Hive and has everything you need to add the TTL attribute. In the Hardware configuration section, for Instance type, choose c5.4xlarge. This core node (where the Hive query runs) measures approximately how many items instances of that size can process per minute. In the Security and access section, for EC2 key pair, choose a key pair you have access to, because you need to SSH to the master node to run the Hive CLI. To optimize this further and achieve a better cost-to-performance ratio, you could go into the advanced options and choose a smaller instance size for the master node (such as an m5.xlarge), which doesn’t have large computational requirements and is used as a client for running tasks, or disable unnecessary services such as Ganglia, but those changes are out of the scope of this post. For more information about creating an EMR cluster, see Analyzing Big Data with Amazon EMR. Choose Create cluster. SSHing to the Amazon EMR master node After you have created your EMR cluster and it’s in the Waiting state, SSH to the master node of the cluster. In the cluster view on the console, you can find SSH instructions. For instructions on how to SSH into the EMR cluster’s master node, choose the SSH link for Master public DNS on the Summary tab. You might have to edit the security group of your master node to allow SSH from your IP address. The Amazon EMR console links to the security group on the Summary tab. For more information, see Authorizing inbound traffic for your Linux instances. Running Hive CLI commands You’re now ready to run the Hive CLI on the master node. Verify that there are no databases, and create a database to host your external DynamoDB table. This database doesn’t actually store data in your EMR cluster; you create an EXTERNAL TABLE in a future step that is a pointer to the actual DynamoDB table. The commands you run at the Hive CLI prompt are noted in bold type in the following code. Start by running the hive command at the Bash prompt: # hive hive> show databases; OK default Time taken: 0.483 seconds, Fetched: 1 row(s) hive> create database dynamodb_hive; OK Time taken: 0.204 seconds hive> show databases; OK default dynamodb_hive Time taken: 0.035 seconds, Fetched: 2 row(s) hive> use dynamodb_hive; OK Time taken: 0.029 seconds hive> Create your external DynamoDB table mapping in Hive by entering the following code (adjust this to match your attribute names and schema): hive> CREATE EXTERNAL TABLE ddb_testttl (creation_timestamp string, pk string, expiration_epoch_time bigint) STORED BY 'org.apache.hadoop.hive.dynamodb.DynamoDBStorageHandler' TBLPROPERTIES ("dynamodb.table.name" = "TestTTL", "dynamodb.column.mapping" = "creation_timestamp:creation_timestamp,pk:pk,expiration_epoch_time:expiration_epoch_time"); OK Time taken: 1.487 seconds hive> For more information, see Creating an External Table in Hive. To find out how many items exist that don’t contain the expiration_epoch_time attribute, enter the following code: hive> select count(*) from ddb_testttl where expiration_epoch_time IS NULL; Query ID = hadoop_20200210213234_20b0bc7a-bbb3-4450-82ac-7ecdad9b1e85 Total jobs = 1 Launching Job 1 out of 1 Tez session was closed. Reopening... Session re-established. Status: Running (Executing on YARN cluster with App id application_1581025480470_0002) VERTICES MODE STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED Map 1 .......... container SUCCEEDED 14 14 0 0 0 0 Reducer 2 ...... container SUCCEEDED 1 1 0 0 0 0 VERTICES: 02/02 [==========================>>] 100% ELAPSED TIME: 20.80 s OK 3000000 Time taken: 19.783 seconds, Fetched: 1 row(s) hive> For this use case, a Hive query needs to update 3 million items and add the expiration_epoch_time attribute to each. Run the Hive query to add the expiration_epoch_time attribute to rows where it’s missing. You want the items to expire 3 years after they were inserted, so add the number of seconds in 3 years to the creation timestamp. . To achieve this addition, you need to modify your creation_timestamp string values (see the following example code). The Hive helper function unix_timestamp() converts dates stored in string format to an integer of seconds since the Unix epoch. . However, the helper function expects dates in the format yyyy-MM-dd HH:mm:ss, but the date format of these items is an ISO 8601 variant of yyyy-MM-ddTHH:mm:ssZ. You need to tell Hive to strip the T between the days (dd) and hours (HH), and tell Hive to strip the trailing Z that represents the UTC time zone. For that you can use the regex_replace() helper function to modify the creation_timestamp attribute into the unix_timestamp() function. . Depending on the exact format of the strings in your data, you might need to modify this regex_replace(). For more information, see Date Functions in the Apache Hive manual. hive> INSERT OVERWRITE TABLE ddb_testttl SELECT creation_timestamp, pk, (unix_timestamp(regexp_replace(creation_timestamp, '^(.+?)T(.+?)Z$','$1 $2')) + (60*60*24*365*3)) FROM ddb_testttl WHERE expiration_epoch_time IS NULL; Query ID = hadoop_20200210215256_2a789691-defb-4d98-a1e5-84b5b12d3edf Total jobs = 1 Launching Job 1 out of 1 Tez session was closed. Reopening... Session re-established. Status: Running (Executing on YARN cluster with App id application_1581025480470_0003) VERTICES MODE STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED Map 1 .......... container SUCCEEDED 14 14 0 0 0 0 VERTICES: 01/01 [==========================>>] 100% ELAPSED TIME: 165.88 s OK Time taken: 167.187 seconds In the preceding results of running the query, the Map 1 phase of this job launched a total of 14 mappers with a single core node, which makes sense because a c5.4xlarge instance has 16 vCPU, so the Hive job used most of them. The Hive job took 167 seconds to run. Check the query to see how many items are still missing the expiration_epoch_time attribute. See the following code: hive> select count(*) from ddb_testttl where expiration_epoch_time IS NULL; Query ID = hadoop_20200210221352_0436dc18-0676-42e1-801b-6bd6882d0004 Total jobs = 1 Launching Job 1 out of 1 Status: Running (Executing on YARN cluster with App id application_1581025480470_0004) VERTICES MODE STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED Map 1 .......... container SUCCEEDED 18 18 0 0 0 0 Reducer 2 ...... container SUCCEEDED 1 1 0 0 0 0 VERTICES: 02/02 [==========================>>] 100% ELAPSED TIME: 12.35 s OK 0 Time taken: 16.483 seconds, Fetched: 1 row(s) hive> As you can see from the 0 answer after the OK status of the job, all items are updated with the new attribute. For example, see the following code of the single item you examined earlier: aws dynamodb get-item --table-name TestTTL --key '{"pk" : {"S" : "02a8a918-69fd-4291-9b45-3802bf357ef8"}}' { "Item": { "pk": { "S": "02a8a918-69fd-4291-9b45-3802bf357ef8" }, "creation_timestamp": { "S": "2017-10-12T20:10:50Z" }, "expiration_epoch_time": { "N": "1602447050" } } } The expiration_epoch_time attribute has been added to the item with a value of 1602447050, which according to EpochConverter corresponds to Sunday, October 11, 2020, at 8:10:50 PM GMT, exactly 3 years after the item’s creation_timestamp. Sizing and testing considerations For this use case, you used a single c5.4xlarge EMR core instance to run the Hive query, and the instance scanned 4 million documents and modified 3 million of them in approximately 3 minutes. By default, Hive consumes half the read and write capacity of your DynamoDB table to allow operational processes to function while the Hive job is running. You need to choose an appropriate number of core or task instances in your EMR cluster and set the DynamoDB capacity available to Hive to an appropriate percentage so that you don’t overwhelm the capacity you’ve chosen to provision for your table and experience throttling in your production workload. For more information about adjusting the Hive DynamoDB capacity, see DynamoDB Provisioned Throughput. To make the job run faster, make sure your table is using provisioned capacity mode and temporarily increase the provisioned RCU and WCU while the Hive query is running. This is especially important if you have a large amount of data but low table throughput for regular operations. When the Hive query is complete, you can scale down your provisioned capacity or switch back to on-demand capacity for your table. Additionally, increase the parallelism of the Hive tasks by increasing the number of core or task instances in the EMR cluster, or by using different instance types. Hive launches approximately one mapper task for each vCPU in the cluster (a few vCPUs are reserved for system use). For example, running the preceding Hive query with three c5.4xlarge EMR core instances uses 46 mappers, which reduces the runtime from 3 minutes to 74 seconds. Running the query with 10 c5.4xlarge instances uses 158 mappers and reduces the runtime to 24 seconds. For more information about core and task nodes, see Understanding Master, Core, and Task Nodes. One option for testing your hive query against the full dataset is to use DynamoDB on-demand backup and restore to create a temporary copy of your table. You can run the Hive query against that temporary copy to determine an appropriate EMR cluster size before running the query against your production table. However, there is a cost associated with running the on-demand backup and restore. In addition, you can restart this Hive query safely if there is an interruption. If the job exits early for some reason, you can always restart the query because it makes modifications only to rows where the TTL attribute is missing. However, restarting it results in extra read capacity unit consumption because each Hive query restarts the table scan. Cleaning up To avoid unnecessary costs, don’t forget to terminate your EMR cluster after the Hive query is done if you no longer need that resource. Conclusion Hive queries offer a straightforward and flexible way to add new, calculated attributes to DynamoDB items that are missing those attributes. If you have a need to backfill a TTL attribute for items that already exist, get started today! About the Authors Chad Tindel is a DynamoDB Specialist Solutions Architect based out of New York City. He works with large enterprises to evaluate, design, and deploy DynamoDB-based solutions. Prior to joining Amazon he held similar roles at Red Hat, Cloudera, MongoDB, and Elastic. Andre Rosa is a Partner Trainer with Amazon Web Services. He has 20 years of experience in the IT industry, mostly dedicated to the database world. As a member of the AWS Training and Certification team, he exercises one of his passions: learning and sharing knowledge with AWS Partners as they work on projects on their journey to the cloud. Suthan Phillips is a big data architect at AWS. He works with customers to provide them architectural guidance and helps them achieve performance enhancements for complex applications on Amazon EMR. In his spare time, he enjoys hiking and exploring the Pacific Northwest. https://probdm.com/site/MjM1NDk

0 notes

Link

Salesforce training in noida :- You won't recognize it yet, but each time you log in to Salesforce you’re getting access to an extremely powerful lever of exchange for you, your institution, and your business enterprise. Sounds like a tall order however keep in mind this: What price do you put on your consumer relationships? Your partner relationships? If you’re a sales rep, it’s your livelihood. And if you’re in management, you have got fewer assets greater valuable than your current companion and client base. What if you had a device that would simply assist you manipulate your companions and clients? Salesforce isn’t the primary purchaser dating management (CRM) system to hit the market, however it’s dramatically one-of-a-kind. Unlike traditional CRM software program, Salesforce is an Internet service. You sign on, log in thru a browser, and it’s at once available. Salesforce customers typically say that it’s different for three fundamental reasons. With Salesforce, you presently have a complete suite of services to control the client lifecycle. This includes tools to pursue leads, control money owed, track possibilities, clear up instances, and extra. Depending to your crew’s goals, you may use all Salesforce tools from day one or simply the capability to deal with the priorities to hand.

Fast: When you sign on the dotted line, you need your CRM system up the day prior to this. Traditional CRM software program should take extra than a 12 months to set up; examine that two months or maybe weeks with Salesforce.

Easy: End consumer adoption is vital to any application, and Salesforce wins the ease-of-use category hands down. You can spend greater time placing it to apply and much less time figuring it out.

Effective: Because it’s clean to apply and can be customized fast to satisfy business wishes, clients have established that it has stepped forward their backside strains. Salesforce training course in noida

Using Salesforce to Solve Critical Business Challenges-

We may want to write every other book telling you all of the notable matters you could do with Salesforce, however you may get the big photograph from this chapter. We recognition here

on the maximum commonplace commercial enterprise demanding situations that we hear from sales, advertising and marketing, and guide executives — and the way Salesforce can triumph over them.

Understanding your customer:-

How can you sell and maintain a customer if you don’t understand their wishes, human beings, and what account activities and transactions have taken place? With Salesforce, you can tune all your important patron records in one region so that you can expand solutions that deliver actual fee to your customers.

Centralizing contacts underneath one roof:-

How a whole lot time have you ever wasted monitoring down a consumer touch or an cope with which you realize exists in the walls of your organization? With Salesforce, you could quickly centralize and prepare your money owed and contacts so that you can capitalize on that records when you want to. Expanding the funnel

Inputs and outputs, right? The greater leads you generate and pursue, the greater the chance that your revenue will develop. So the massive question is, “How

do I make the system paintings?” With Salesforce, you may plan, control, measure, and enhance lead generation, qualification, and conversion. You can see how lots commercial enterprise is generated, resources, and who’s making it show up. Consolidating your pipeline Pipeline reviews are reports that give agencies perception into future sales. Yet we’ve worked with companies in which producing the weekly pipeline should take a day of cat herding and guesswork. Reps waste time updating spreadsheets. Managers waste time chasing reps and scrubbing statistics. Bosses waste time tearing their hair out due to the fact the information is antique by the point they get it. With Salesforce, you can shorten or remove all that. As long as reps control all their possibilities in Salesforce, managers can generate updated pipeline reviews with the click of a button.

Working as a team

How regularly have you ever thought that your own co-people got in the manner of selling? Nine out of ten instances, the task isn’t human beings, but standardizing processes and clarifying roles and duties. With Salesforce, you could outline groups and strategies for income, marketing, and customer support, so the left hand knows what the right hand is doing. Although Salesforce doesn’t resolve corporate alignment troubles, you presently have the tool that can pressure and manipulate better crew collaboration.

Collaborating with your partners

In many industries, promoting without delay is a factor of the beyond. To gain leverage and cover more territory, many agencies paintings through partners. With Salesforce, your channel reps can music and companion partners’ offers and get higher insight on who their top companions are. Partners now can support their relationships with their companies with the aid of getting greater visibility into their joint income and advertising and marketing efforts.

Beating the competition

How a whole lot money have you misplaced to competition? How in many instances did you lose a deal most effective to discover, after the fact, that it went for your arch nemesis?

If you realize who you’re up against, you could probably higher role yourself to win the possibility. With Salesforce, you and your teams can track opposition on deals, accumulate aggressive intelligence, and develop movement plans to wear out your foes.

Improving customer support

As a sales individual, have you ever walked right into a purchaser’s workplace looking forward to a renewal handiest to be hit with a landmine due to an unresolved consumer trouble? And if you work in customer service, how plenty time do you waste on seeking to become aware of the patron and their entitlements? With Salesforce, you could effectively capture, control, and resolve patron problems. By managing instances in Salesforce, sales reps get visibility into the fitness in their debts, and service is better informed of sales and account activity. Salesforce training center in noida

WEBTRACKKER TECHNOLOGY (P) LTD.

B - 85, sector- 64, Noida, India.

E-47 Sector 3, Noida, India.

+91 - 8802820025

0120-433-0760

+91 - 8810252423

012 - 04204716

EMAIL:[email protected]

http://webtrackker.com/Salesforce-Training-Institute-in-Noida.php

Salesforce Training in noida

python Training in noida

data science training in noida

machine learning training in noida

Digital Marketing Training center in Noida

Digital Marketing Training in Noida

hadoop training in noida

devops training in noida

openstack training in noida

Ethical Hacking Training In Noida

Python Training Institute in Noida

Solidworks Training Institute in Noida

Hadoop training institute in Noida

SAP FICO Training Institute in Noida

AWS Training Institute in Noida

AutoCAD Training Institute In Noida

Linux Training Institute In Noida

Data Science With python training Institute in Noida

SAS Training Institute in Noida

6 weeks industrial training in noida

machine learning training Institute in Noida

salesforce training institute in noida

php training institute in noida

uipath training institute in noida

Data Science training Institute in Noida

sap training institute in noida

azure training institute in noida

sap mm training institute in noida

web designing institute in noida

AWS Training course in Noida

AWS Training center in Noida

Python Training course in Noida

Python Training center in Noida

Hadoop training course in Noida

Hadoop training center in Noida

SAP FICO Training course in Noida

SAP FICO Training center in Noida

Linux Training course In Noida

Linux Training center In Noida

SAS Training course in Noida

SAS Training center in Noida

Machine learning training course in Noida

Machine learning training center in Noida

Solidworks Training course in Noida

Solidworks Training center in Noida

cloud computing training in noida

oracle training in noida

oracle training center in noida

sql training center in noida

best sql training in noida

best plsql training in noida

node js training in noida

angular 6 training in noida

angularjs training in noida

mean stack training in noida

digital marketing training in noida

digital marketing training center in

noida

blue prism training center in noida

sap hr training center in noida

java Training center In Noida

Best Ielts coaching centre in noida

Best AWS Training Institute in Noida sector 15

Best AWS Training Institute in Noida sector 16

Best AWS Training Institute in Noida sector 18

Hadoop Certification Training Institute in Noida

Best Cloud Computing Training Institute in noida near metro station

best GraphQL training institute in Noida

Full stack developer training in Noida

Best C and C++ Training institute in Noida

Best AngularJS 4, 6 Training Institute In gurgaon

python training in noida sector 15

python training in sector 15 noida

data science training in sector 15 noida

oracle training center in noida sector 62

oracle training institute in noida sector 63

oracle training institute in noida sector 64

oracle training institute in noida sector 15

web designing training in noida

sas training centre in noida

sas training in noida

angular training in noida

angular 6 training in noida

best institute for angularjs in noida

node js training in noida

aws training in noida sector 16

aws training in noida sector 18

aws training in noida sector 15

Best AngularJS 6 training institute in Noida

Best AngularJS 8 training institute in Noida

Best AngularJS 9 training institute in Noida

Best Advanced excel training in noida

Best SAS Training Institute in Noida Sector 15

Best SAS Training Institute in Noida Sector 16

Best SAS Training Institute in Noida Sector 18

digital marketing course in noida sector 18

digital marketing course in noida sector 16

digital marketing course in noida sector 63

Best Sap MM Training Institute in Noida sector 15

Best Sap MM Training Institute in Noida sector 16

Best Sap MM Training Institute in Noida sector 18

oracle training in noida

Best Ielts coaching centre in noida sector 62

ielts coaching center in noida sector 18

ielts coaching center in noida

Ethical hacking institute in Noida near sector 16 metro station

Ethical hacking institute in Noida near sector 15 metro station

Ethical hacking institute in Noida near sector 18 metro station

Ethical hacking institute in Noida

Ethical hacking institute in Noida sector 62

Ethical hacking institute in Noida sector 63

Best hadoop Training class course institute in Noida Sector 62

Best SAP Hybris Training in Noida

Best Digital Marketing Training in noida sector 15

Best Digital Marketing Training in noida sector 16

Best Digital Marketing Training in noida sector 18

Best Digital Marketing Training in noida sector 15,16,18

software testing Training in noida sector 3

Best Sap basis Training Institute in Noida sector 15

Best Sap basis Training Institute in Noida sector 16

Best Sap basis Training Institute in Noida sector 18

Best Sap basis Training Institute in Noida sector 15,16,18

Best Php Training Institute in Noida Sector 15

Best Php Training Institute in Noida Sector 16

Best Php Training Institute in Noida Sector 18

Best Php Training Institute in Noida Sector 15, 16, 18

Best Sap fico training institute sector 15

Best Sap fico training institute sector 16

Best Sap fico training institute sector 18

Best Sap fico training institute sector 15, 16, 18

Best Sap hr Training Institute Sector 15

Best Sap hr Training Institute Sector 16

Best Sap hr Training Institute Sector 18

Best Sap hr Training Institute Sector 15, 16, 18

SAP BASIS Training institute in Noida

Best Devops training institute in Noida

Best Devops training in Noida

SAP UI5 Training in Noida

apache training institutes in noida sector 15

apache training institutes in noida sector 16

apache training institutes in noida sector 18

apache training institutes in noida

Linux Training Institute in Noida Sector 15

Linux Training Institute in Noida Sector 16

Linux Training Institute in Noida Sector 3

Linux Training Institute in Noida Sector 18

Linux Training Institute in Noida Sector 63

Linux Training Institute in Noida Sector 62

Linux Training Institute in Noida Sector 64

sapsd training center in noida sector 15

sapsd training center in noida sector 16

sapsd training center in noida sector 63

sapsd training center in noida sector 3

sapsd training center in noida sector 18

sapsd training center in noida sector 62

sapsd training center in noida sector 71

sapsd training center in noida sector 64

AWS Training Institute in Noida sector 63

php training in noida sector 3

php training center in noida sector 3

php training center in noida sector 15

php training center in noida sector 16

php training center in noida sector 18

php training center in noida sector 62

SAP PS Training in Noida

SAP PS Training center in Noida

Best SAP PS Training in Noida

Android Apps training institute in Ghaziabad

android training institute in laxminagar

Best Android Apps training institute in Vaishali

sapsd training in noida

AWS Training Institute in Noida sector 3

AWS Training Institute in Noida sector 64

AWS Training Institute in Noida sector 62

Cloud Computing training institute in Greater Noida

cloud computing training in laxminagar

Cloud Computing training institute in Vaishali

digital marketing training institute in ghaziabad

digital marketing training institute in meerut

Best digital marketing training institute in Vaishali

Best digital marketing training institute in greater Noida

Best Digital Marketing training in laxminagar

Best hadoop training institute in ghaziabad

Best hadoop training institute in Vaishali

Best hadoop training institute in laxminagar

Best hadoop training institute in Greater Noida

Best Linux training institute in Meerut

Best Linux training institute in Vaishali

Best Linux training institute in Greater Noida

MSSQL Training Institute In Noida

Machine Learning training in noida

rpa blue prism training in noida

hadoop Training in noida

aws training in noida

Linux training institute in south delhi

Best hadoop training institutes in south delhi

digital marketing training in south delhi

Cloud Computing training institutes in south delhi

Android Apps training institute in south delhi

machine learning training institute in south delhi

Sap training institute in south delhi

salesforce training institute in south delhi

Best oracle Training Institute In ghaziabad

best oracle training institute in vaishali

best oracle training institute in greater noida

best oracle training institute in laxminagar

Best php training institute in ghaziabad

Best php training institute in laxminagar

Best php training institute in Greater Noida

Best Devops training institute in Noida

php training in Noida sector 16

JAVA Training Institute in Noida

java Training In Noida

Java training course in Noida

Openstack Support Company in Delhi NCR

infrastructure automation solution provider company in delhincr

gypsum board work in noida

Dubai life

webtrackker reviews

webtrackker reviews

webtrackker reviews

webtrackker reviews

webtrackker reviews

webtrackker reviews

webtrackker reviews

webtrackker reviews

Webtrackker

Webtrackker reviews

Webtrackker review

0 notes

Text

How to Leverage AWS Spot Instances While Mitigating the Risk of Loss

Reducing cloud infrastructure costs is one of the significant benefits of using the Qubole platform — and one of the primary ways we do this is by seamlessly incorporating Spot instances available in AWS into our cluster management technology. This blog post covers a recent analysis of the Spot market and advancements in our product that reduce the odds of Spot instance losses in Qubole managed clusters. The recommendations and changes covered in this post allow our customers to realize the benefits of cheaper Spot instance types with higher reliability.

Reducing The Risk Associated With Spot Instance Loss

One of the ways Qubole reduces cloud infrastructure costs is by efficiently utilizing cheaper hardware — like Spot instances provided by AWS — that are significantly cheaper than their on-demand counterparts (by almost 70 percent).

However, Spot instances can be lost with only two minutes of notice and can cause workloads to fail. One of the ways we increase the reliability of workloads is by handling Spot losses gracefully. For example, Qubole clusters can replicate data across Spot and regular instances, handle Spot loss notifications to stop task scheduling and copy data out, and retry queries affected by Spot losses. In spite of this, it is always best to avoid Spot losses in the first place.

Due to recent changes in the AWS Spot marketplace, the probability of a Spot loss is no longer dependent on the bid price. As a result, earlier techniques of using the bid price to reduce Spot losses have been rendered ineffective — and new strategies are required. This blog post describes the following new strategies:

Reducing Spot request timeout

Using multiple instance families for worker nodes

Leveraging past Spot loss data to alter cluster composition dynamically

While the first two are recommendations for users, the last is a recent product enhancement in Qubole.

Reducing Spot Request Timeout

Qubole issues asynchronous Spot requests to AWS that are configured with a Request Timeout. This is the maximum time Qubole waits for the Spot request to return successfully. We analyzed close to 50 million Spot instances launched via Qubole as part of our customers’ workloads and we recommend users set Request Timeout to the minimum time possible (one minute right now) due to the following reasons:

The longer it takes to acquire Spot nodes, the higher the chances of such nodes being lost:

The following graph plots the probability of a Spot instance being lost versus the time taken to acquire it. The probability is the lifetime probability of the instance being lost (as opposed to being terminated normally by Qubole, usually due to downscaling or termination of clusters).

In greater detail: 1.6 percent of nodes were abruptly terminated due to AWS Spot interruptions if they were acquired within one minute, whereas close to 35 percent of nodes were abruptly terminated if they were acquired in more than 10 minutes. We can also conclude that after 600 seconds, Spot loss is unpredictable and very irregular.

Most of the Spot nodes are acquired within a minute:

The graph below represents the percentage of Spot requests fulfilled versus the time taken (or the time after which the Spot request timed out).

The above graph shows that 90 percent of Spot nodes were acquired within four seconds and 98 percent of Spot nodes were acquired within 47 seconds. This indicates that the vast majority of Spot nodes are acquired in very little time.

Currently, Qubole supports a minimum ‘Spot Request Timeout’ of one minute. Because almost all successful Spot requests are satisfied within one minute and the average probability of losing instances acquired in this time period is very small, selecting this option will increase reliability without significantly affecting costs. We will be adding the ability to set the Spot Request Timeout at a granularity level of seconds in the future.

Configure Multiple Instance Families For Worker Nodes

Qubole strongly recommends configuring multiple instance types for worker nodes (also commonly referred to as Heterogeneous Clusters) for the following reasons:

One reason is, of course, to maximize the Spot fulfilment rate and be able to use the cheapest Spot instances. This factor becomes even more important given the discussion in the previous section, as lowering Spot Request Timeouts too much could result in a lower Spot fulfilment rate in some cases.

However, increasingly Qubole will be adding mechanisms to mitigate Spot losses that are dependent on the configuration of multiple worker node types. A good example is the mechanism to mitigate Spot losses that will be discussed in the next section. The screenshot below shows how one can configure multiple instance types for worker nodes via cluster configuration:

Qubole recommends using different instance families when selecting the multiple worker node types option, i.e. using m4.xlarge and m5.xlarge instead of m4.xlarge and m4.2xlarge. While Qubole is functional with either combination, data from AWS (see https://aws.amazon.com/ec2/spot/instance-advisor/) suggests that instance availability within a family is correlated, and it is best to diversify across families to maximize Spot availability. Of course, as many instance types and families as desired can be configured.

AWS has added a lot of different instance families of late with similar CPU/memory configurations, and we would suggest using a multitude of these. For example:

M3

M4

M5

M5a

M5d

These are different instance families with similar computing resources and can be combined easily. In a recent analysis we found that AMD-based instance types (5a family) were very close to Intel-based instances (5 family) in price and performance, and were good choices to pair up in heterogeneous clusters.

Spot Loss-Aware Provisioning

Qubole recently made an improvement to mitigate Spot loss and reduce the autoscaling wait time for YARN-based clusters (Hadoop/Hive/Spark). Whenever a node is lost due to Spot loss, YARN captures this information at the cluster level. We can leverage this information to optimize our requests of Spot instances. We apply the below optimizations while placing Spot instance requests:

If there is a Spot loss in a specified time window (by default in the last 15 minutes), the corresponding instance family is classified as Unstable.

Subsequently, when there are Spot provisioning requests: Instance types belonging to unstable instance families are removed from the list of worker node types.

If the remaining list of worker node types is not empty, then Qubole issues asynchronous AWS Fleet Spot requests for this remaining list of instance types and waits for the configured Spot Request Timeout.

If the remaining list of worker node types is empty, then Qubole issues a synchronous Fleet Spot API request for the original worker node types (i.e. without filtering for unstable instance families). Synchronous requests return instantly and the Spot Request Timeout is not applicable.

If the capacity is still not fulfilled, Qubole would fall back to on-demand nodes if it is configured (this behavior is unchanged). However, the extra on-demand nodes launched as a result of fallback would be replaced with Spot nodes during rebalancing.

The protocol above ensures that Qubole either does not get unstable instance types that are likely to be lost soon, or that we only get them if the odds of the Spot loss have gone down (because data from prior analysis tells us that instance types provisioned by synchronous Fleet Spot API requests have low Spot loss probability). Soon we will be extending this enhancement to Presto clusters as well.

This feature is not enabled by default yet. Please contact Qubole Support to enable this in your account or cluster.

Conclusion

Spot instances are significantly cheaper than on-demand instances, but are not that reliable. AWS can take them away at will with very short notice. So, we need to be smart while using them and aim to reduce the impact of Spot losses. Relatively easy configuration changes and improvements can help us utilize these cheaper instances more efficiently and save us a lot of money. This post is just scratching the surface of things we are doing here at Qubole for Spot loss mitigation. Expect more such updates from us in the near future.[Source]-https://www.qubole.com/blog/leverage-aws-spot-instances-while-mitigating-risk/

big data courses in mumbaiat Asterix Solution is designed to scale up from single servers to thousands of machines, each offering local computation and storage. With the rate at which memory cost decreased the processing speed of data never increased and hence loading the large set of data is still a big headache and here comes Hadoop as the solution for it.

0 notes

Text

Faster File Distribution with HDFS and S3

In the Hadoop world there is almost always more than one way to accomplish a task. I prefer the platform because it's very unlikely I'm ever backed into a corner when working on a solution.

Hadoop has the ability to decouple storage from compute. The various distributed storage solutions supported all come with their own set of strong points and trade-offs. I often find myself needing to copy data back and forth between HDFS on AWS EMR and AWS S3 for performance reasons.

S3 is a great place to keep a master dataset as it can be used among many clusters without affect the performance of any one of them; it also comes with 11 9s of durability meaning it's one of the most unlikely places for data to go missing or become corrupt.

HDFS is where I find the best performance when running queries. If the workload will take long enough it's worth the time to copy a given dataset off of S3 and onto HDFS; any derivative results can then be transferred back onto S3 before the EMR cluster is terminated.

In this post I'll examine a number of different methods for copying data off of S3 and onto HDFS and see which is the fastest.

AWS EMR, Up & Running

To start, I'll launch an 11-node EMR cluster. I'll use the m3.xlarge instance type with 1 master node, 5 core nodes (these will make up the HDFS cluster) and 5 task nodes (these will run MapReduce jobs). I'm using spot pricing which often reduces the cost of the instances by 75-80% depending on market conditions. Both the EMR cluster and the S3 bucket are located in Ireland.

$ aws emr create-cluster --applications Name=Hadoop \ Name=Hive \ Name=Presto \ --auto-scaling-role EMR_AutoScaling_DefaultRole \ --ebs-root-volume-size 10 \ --ec2-attributes '{ "KeyName": "emr", "InstanceProfile": "EMR_EC2_DefaultRole", "AvailabilityZone": "eu-west-1c", "EmrManagedSlaveSecurityGroup": "sg-89cd3eff", "EmrManagedMasterSecurityGroup": "sg-d4cc3fa2"}' \ --enable-debugging \ --instance-groups '[{ "InstanceCount": 5, "BidPrice": "OnDemandPrice", "InstanceGroupType": "CORE", "InstanceType": "m3.xlarge", "Name": "Core - 2" },{ "InstanceCount": 5, "BidPrice": "OnDemandPrice", "InstanceGroupType": "TASK", "InstanceType": "m3.xlarge", "Name": "Task - 3" },{ "InstanceCount": 1, "BidPrice": "OnDemandPrice", "InstanceGroupType": "MASTER", "InstanceType": "m3.xlarge", "Name": "Master - 1" }]' \ --log-uri 's3n://aws-logs-591231097547-eu-west-1/elasticmapreduce/' \ --name 'My cluster' \ --region eu-west-1 \ --release-label emr-5.21.0 \ --scale-down-behavior TERMINATE_AT_TASK_COMPLETION \ --service-role EMR_DefaultRole \ --termination-protected

After a few minutes the cluster has been launched and bootstrapped and I'm able to SSH in.

$ ssh -i ~/.ssh/emr.pem \ [email protected]

__| __|_ ) _| ( / Amazon Linux AMI ___|\___|___| https://aws.amazon.com/amazon-linux-ami/2018.03-release-notes/ 1 package(s) needed for security, out of 9 available Run "sudo yum update" to apply all updates. EEEEEEEEEEEEEEEEEEEE MMMMMMMM MMMMMMMM RRRRRRRRRRRRRRR E::::::::::::::::::E M:::::::M M:::::::M R::::::::::::::R EE:::::EEEEEEEEE:::E M::::::::M M::::::::M R:::::RRRRRR:::::R E::::E EEEEE M:::::::::M M:::::::::M RR::::R R::::R E::::E M::::::M:::M M:::M::::::M R:::R R::::R E:::::EEEEEEEEEE M:::::M M:::M M:::M M:::::M R:::RRRRRR:::::R E::::::::::::::E M:::::M M:::M:::M M:::::M R:::::::::::RR E:::::EEEEEEEEEE M:::::M M:::::M M:::::M R:::RRRRRR::::R E::::E M:::::M M:::M M:::::M R:::R R::::R E::::E EEEEE M:::::M MMM M:::::M R:::R R::::R EE:::::EEEEEEEE::::E M:::::M M:::::M R:::R R::::R E::::::::::::::::::E M:::::M M:::::M RR::::R R::::R EEEEEEEEEEEEEEEEEEEE MMMMMMM MMMMMMM RRRRRRR RRRRRR

The five core nodes each have 68.95 GB of capacity that together create 344.75 GB of capacity across the HDFS cluster.

$ hdfs dfsadmin -report \ | grep 'Configured Capacity'

Configured Capacity: 370168258560 (344.75 GB) Configured Capacity: 74033651712 (68.95 GB) Configured Capacity: 74033651712 (68.95 GB) Configured Capacity: 74033651712 (68.95 GB) Configured Capacity: 74033651712 (68.95 GB) Configured Capacity: 74033651712 (68.95 GB)

The dataset I'll be using in this benchmark is a data dump I've produced of 1.1 billion taxi trips conducted in New York City over a six year period. The Billion Taxi Rides in Redshift blog post goes into detail on how I put this dataset together. This dataset is approximately 86 GB in ORC format spread across 56 files. The typical ORC file is ~1.6 GB in size.

I'll create a filename manifest that I'll use for various operations below. I'll exclude the S3 URL prefix as these names will also be used to address files on HDFS as well.

000000_0 000001_0 000002_0 000003_0 000004_0 000005_0 000006_0 000007_0 000008_0 000009_0 000010_0 000011_0 000012_0 000013_0 000014_0 000015_0 000016_0 000017_0 000018_0 000019_0 000020_0 000021_0 000022_0 000023_0 000024_0 000025_0 000026_0 000027_0 000028_0 000029_0 000030_0 000031_0 000032_0 000033_0 000034_0 000035_0 000036_0 000037_0 000038_0 000039_0 000040_0 000041_0 000042_0 000043_0 000044_0 000045_0 000046_0 000047_0 000048_0 000049_0 000050_0 000051_0 000052_0 000053_0 000054_0 000055_0

I'll adjust the AWS CLI's configuration to allow for up to 100 concurrent requests at any one time.

$ aws configure set \ default.s3.max_concurrent_requests \ 100

The disk space on the master node cannot hold the entire 86 GB worth of ORC files so I'll download, import onto HDFS and remove each file one at a time. This will allow me to maintain enough working disk space on the master node.

$ hdfs dfs -mkdir /orc $ time (for FILE in `cat files`; do aws s3 cp s3://<bucket>/orc/$FILE ./ hdfs dfs -copyFromLocal $FILE /orc/ rm $FILE done)

The above completed 15 minutes and 57 seconds.

The HDFS CLI uses the JVM which comes with a fair amount of overhead. In my HDFS CLI benchmark I found the alternative CLI gohdfs could save a lot of start-up time as it is written in GoLang and doesn't run on the JVM. Below I've run the same operation using gohdfs.

$ wget -c -O gohdfs.tar.gz \ https://github.com/colinmarc/hdfs/releases/download/v2.0.0/gohdfs-v2.0.0-linux-amd64.tar.gz $ tar xvf gohdfs.tar.gz

I'll clear out the previously downloaded dataset off HDFS first so there is enough space on the cluster going forward. With triple replication, 86 GB turns into 258 GB on disk and there is only 344.75 GB of HDFS capacity in total.

$ hdfs dfs -rm -r -skipTrash /orc $ hdfs dfs -mkdir /orc $ time (for FILE in `cat files`; do aws s3 cp s3://<bucket>/orc/$FILE ./ gohdfs-v2.0.0-linux-amd64/hdfs put \ $FILE \ hdfs://ip-10-10-207-160.eu-west-1.compute.internal:8020/orc/ rm $FILE done)

The above took 27 minutes and 40 seconds. I wasn't expecting this client to be almost twice as slow as the HDFS CLI. S3 provides consistent performance when I've run other tools multiple times so I suspect either the code behind the put functionality could be optimised or there might be a more appropriate endpoint for copying multi-gigabyte files onto HDFS. As of this writing I can't find support for copying from S3 to HDFS directly with resorting to a file system fuse.