#i have a bunch of do loops that create an array and pastes the values of the array into a file. If i print out the array after the loop



my code is cursed again TT

#fortran#i have a bunch of do loops that create an array and pastes the values of the array into a file. If i print out the array after the loop#the values are different than the ones in the file#this has just started occurring spontaneously#it was not there before#i dont know how to make it go away#probably something is not initialized correctly or something?#*sigh* fortran


Random level generation basics

I wanted to look into random level generation as it's something I definitely would like to use in this project. As I mentioned in a previous blog post, I'm using chunks to create the level, so I will be randomizing stuff within these chunks.

Parenting

I'll end up having many different chunks featuring many different things ranging from moving platforms to spinning obstacles. If I were to create a ton of chunk blueprints, some of the code would become tedious. There's a nice workaround for this though - parenting.

In blueprints, a parent can have children. Any blueprint that is a child will contain all of the data from its matching parent. This is especially useful if you want to create a bunch of the same things with some small changes. Any changes you do in the parent blueprint will apply to all of its children.

I will have a parent containing just the boundaries of the chunk and a collision to spawn the next chunk. This blueprint will need no more design or code than this - all of the work will be done in child blueprints. This blueprint is called Chunk_BP.

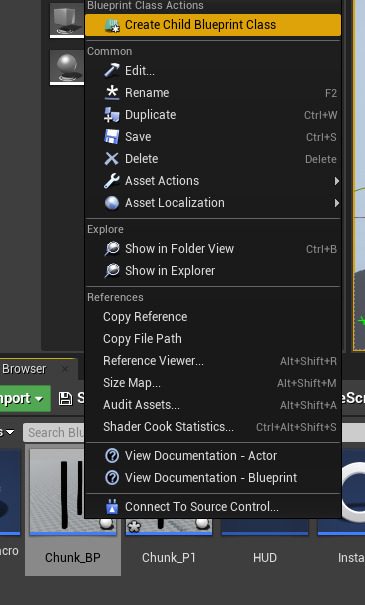

To create a child blueprint, right-click on the blueprint you want as parent and click 'Create child blueprint class'. This will create a child blueprint which is currently identical to the parent.

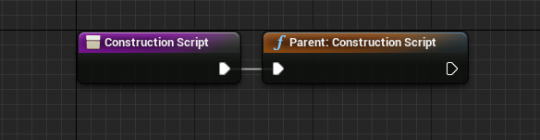

After opening up the child blueprint and going into the construction script, you'll see an orangey box. This is essentially running all of the code in the entire parent blueprint in a single node. No matter how much code is in the parent's event graph, the child's event graph will be completely empty but it will all still run.

Randomly spawning platforms

In the child blueprint, I added 7 platforms evenly spaced up the chunk. I needed to randomise their horizontal position though as currently they're all on top of each other. There's also some logic behind this though - I need to make sure that the gap between the platforms is possible for the player to jump across (it can't be too big).

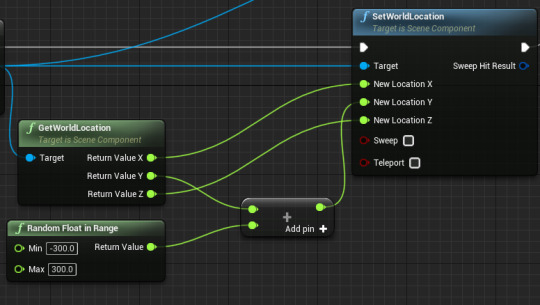

To randomise but limit the horizontal position, I got the platform's location and added a random value between -300 and 300 to the Y-coordinate and set this as the new world location of the platform. A 'random float in range' node will output a random float between a given minimum and maximum.
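Outside of blueprints, the same idea can be sketched in a few lines of Python. This is only an illustration, not the actual blueprint: the function name `randomise_y` and the tuple layout for a location are made up for the example, and `random.uniform` stands in for the 'random float in range' node.

```python
import random

def randomise_y(location, max_offset=300.0, rng=random):
    """Return a copy of (x, y, z) with a random offset added to y.

    Hypothetical stand-in for: get location, add a random float
    in [-300, 300] to the Y-coordinate, set the new world location.
    """
    x, y, z = location
    return (x, y + rng.uniform(-max_offset, max_offset), z)

# Example: a platform that starts at y = 100 ends up somewhere in [-200, 400].
new_location = randomise_y((0.0, 100.0, 50.0))
```

Only the Y value changes, so the platforms stay evenly spaced up the chunk.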

For each loop

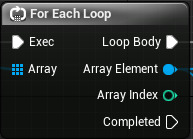

I have 7 platforms, and I don't want to write nor paste this code 6 more times. Instead, I used a 'For Each' loop. A for each loop will run a piece of code for each item in a given array. An array is just a list of stuff.

It has an Exec and array input, the array can be of any type such as actor, float or vector. The loop body is where you put the code you want to run in the loop. Array element is the current element of the array. Array index is the index of the current array element. Completed runs when the loop is done.

I got all 7 of my platforms and searched for a 'Make Array' node to store all of these components in an array. I used this array as the for each loop's input. The 'Array Element' is used as the target for the get and set location nodes, so each pass through the loop adjusts the location of the correct platform. One final thing I did was randomise the width of the platforms by using a random float node to randomise the relative scale of the platform.
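As a rough text version of the for each loop, here is a small Python sketch. Everything in it is an assumption for illustration: the dictionaries stand in for platform components, the scale range of 0.5 to 1.5 is invented (the blueprint's real range isn't given here), and I've treated Y as the width axis.

```python
import random

def randomise_platforms(platforms, max_offset=300.0,
                        scale_range=(0.5, 1.5), rng=random):
    """Run the same randomising code once per platform in the array."""
    for platform in platforms:  # 'platform' plays the role of Array Element
        x, y, z = platform["location"]
        platform["location"] = (x, y + rng.uniform(-max_offset, max_offset), z)
        sx, _, sz = platform["scale"]
        # Assumed: width is the Y component of the relative scale.
        platform["scale"] = (sx, rng.uniform(*scale_range), sz)
    return platforms

# 'Make Array': 7 platforms evenly spaced up the chunk (spacing is made up).
platforms = [
    {"location": (0.0, 0.0, 200.0 * i), "scale": (1.0, 1.0, 1.0)}
    for i in range(7)
]
randomise_platforms(platforms)
```

The loop body is written once and runs for all 7 platforms, which is the whole point of using the array.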

Preview of what the randomly generated platforms look like below. There's still some more logic I could add to them to make the gameplay flow more smoothly, and this is something I will look into in the future.


Max and Leap changes

Once I had stereo sound working, I knew that I simply had to try and get a more immersive sound scape working. Even stereo panning was insanely effective, however, as soon as one turned their head so that both speakers are closer to one ear, the illusion is instantly ruined. Additionally, I thought that an interesting way of exploring my identity could be through recordings in multiple languages, rather than just English (at this point, I was still preparing for recording. Sorry about the inconsistency in chronology, but I was really working on everything simultaneously, so I’m breaking it up by topics for my own sanity).

Initially, I was very scared of even opening up Max. For me, patch-based programming is a bit alien, as it requires knowing what each object does, why each one is better for what, etc., whereas with Processing, even if there is a function for something, as long as I understand the very basics of how a library works, I can write my own functions and code to do what’s needed. Without Jen’s help, I probably would have been unable to get anywhere near the final patch, so Jen, sincerely, thank you. While I understood the basics of what was needed, and was able to find many examples for each of the separate elements of the patch, I was struggling to connect them together, as well as to integrate them with the Leap Motion. As it happens, the main documented method of Leap integration with Max is deprecated for Windows and, as far as I’ve read, difficult to set up, even on Mac.

I was already planning on using both Processing and Max, as I was nowhere near knowledgeable enough in Max to build the visual framework I had in mind with it. I realized that I could simply send messages from Processing to Max with the data it needed.

Simply, he says. First, we start with a whole bunch of data from Processing that gets broken up into bits, as needed. So, we’re sending each hand’s XYZ, and each finger’s XYZ. And scaling all of them.

Two subpatches as below in the main patch (or at least that was the idea, maybe not in this specific version). In these, we take the position data of the hand to pan all of the audio of this hand (i.e. all of the audio of the left-hand-fingers), and set the volume based on the height.

Each of these subpatches has another subpatch, where we actually control the audio. So each finger has its own soundfile, which gets stretched and pitched based on the finger position. Easy:

Did I say one subpatch? Yeah, for testing purposes. Later there will be five of each for each hand. So ten sub-subpatches, in two sub-patches, in one big mess of a main patch.

So in the end, it looks more like this... just for the main patch and hand patches. There’s another ten patches within that, but I’ll skip those for lack of space.

So, as you can imagine, my lack of experience in Max meant that while it actually worked, it was so unbearably slow it might as well not have worked.

I had a lot of optimization to do. In my sketchbook, you’ll find all of the different notes I scribbled while trying to work out the best way of sending messages from Processing into Max. In the end, I first minimized the number of messages I was sending. Rather than sending a message with five values per finger, per hand, per frame (so that’s 5*5*2*60=3000 values to be sorted/second), I began by only sending the messages when they were needed. That is, if there was no hand visible that frame (checked via a trigger), a message containing x 0 y 0 z 0 would get sent to that finger, and then a trigger was set telling Processing it doesn’t need to send that message again. Alright, so now we’re sending only the messages we need to send. Rather than expanding the messages as I had until that point with many route objects (which then required the message to be far more complex, i.e. have variable names before variables, for proper routing), I learnt that I could instead have one very large unpack message. While this helped, it wasn’t really enough.
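The 'only send when needed' logic is easier to see in code than in prose. Below is a minimal Python sketch of it, not the actual Processing code: the class name `FingerSender`, the `send` callback and the message layout are all made up for the example. The idea is simply that the zero message goes out once when the hand disappears, and a flag stops it from being repeated.

```python
ZERO_MESSAGE = ("x", 0, "y", 0, "z", 0)

class FingerSender:
    """Sends a finger's position, but the 'no hand' message only once."""

    def __init__(self, send):
        self.send = send        # hypothetical callback that delivers a message
        self.zero_sent = False  # the 'trigger': has the zero message gone out?

    def update(self, position):
        """Call once per frame; position is (x, y, z) or None if no hand."""
        if position is not None:
            x, y, z = position
            self.send(("x", x, "y", y, "z", z))
            self.zero_sent = False
        elif not self.zero_sent:
            self.send(ZERO_MESSAGE)
            self.zero_sent = True
        # Otherwise: no hand, zero already sent, so nothing to send.

sent = []
sender = FingerSender(sent.append)
for frame_position in [(1, 2, 3), None, None, None]:
    sender.update(frame_position)
# 'sent' now holds two messages: one position and one zero message.
```

With no hand in view, the per-frame cost drops to a single flag check instead of a full message.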

But what are we actually doing with the fingers? At this point, the work had become focused on imagery and storytelling. There was no need for each finger to control its own sound; instead, each hand only needed to control one image in Processing, and one sound in Max. So, now we can cut out many, many more messages. We’re only sending a message per hand, only when the hand is visible. So at most, we’d be sending 120 messages / second (not counting in values anymore, as I was unpacking much more efficiently than previously. If you’re curious, I was sending anywhere between 5 and 12 values; in the latest version there are eight values), which already yields a great performance increase. Additionally, though, we no longer had to deal with 10 different samples, all of which had to be correctly moved around the ‘virtual’ space for the proper illusion of sound moving around the physical space. Instead, it was just two audio files!

For the moment, we can pause on talking about Max, and move over to how the visuals were progressing in Processing as all of this was happening.

Up until this point, I had really only had two versions of visuals based on Processing - simple shapes to provide myself feedback while working with the Leap, and the visual feedback by way of lights. I wasn’t really focused on the visuals at this point, though, and was rather just trying to create a system that would communicate with Max. So, after fourteen code iterations just with this library, the sketch that was sending messages to the first working max patches had the following visuals:

Very conceptual.

Once I actually had the Max messages somewhat sorted out, I actually began integrating the visuals - photos that in some way represented my identity or idea of home, that would get ‘drawn’ on the screen via the viewers’ interaction.

The very first version was actually a bit more of a challenge to make than I had thought. I had never really worked with images in Processing up until this point, only with generative visuals, so while just building something to draw one image in this way was easy, it was rather the thinking ahead and trying to make sure that the system was flexible that was the difficult part.

The first version is primarily based on the classic example sketch “Pattern” that draws ellipses at your mouse, where the size of the ellipse is dependent on the ‘speed’, yet I replaced speed with the y-height of the hand, thinking that might add some interesting interaction (which was a stupid thought, as it just meant that it was easier to draw at the bottom and harder to draw at the top, and was not interesting at all, either interaction-wise or visually). As I had the Max side of things relatively sorted out at this point, I put a lot of work into really pushing this framework that I was building. Initially I was quite hesitant about having the visuals on screen without any sort of fading, where it would be just drawing over the top of everything. So, I set out to build a framework where each pixel was drawn individually, and a few arrays kept track of which images were ‘active’, how many pixels of each image were drawn on the screen at that time, and what the ‘image’ of every pixel was.

I ended up having many, many issues with figuring out how to get it to work, but once I did, I instantly went to adding another function, that would put all of that hard work to use - for every pixel whose ‘image’ value was not one of the two ‘active’ images, the pixel had a probability of getting turned black. Basically, it was a way to fade the screen in the parts where the image was an ‘old’ one, not one being currently drawn.

Now, if you know anything about any of what I said above, you’ll probably already see that the way I was doing this was very, very inefficient. Every single frame, I was not only drawing massive rectangle-shaped bits of images, PIXEL BY PIXEL, but I was then at the end of the draw-loop going through every pixel, and comparing its value to that of the hands’. There’s 1920*1080 pixels. Just over two million pixels, that I was going through one by one, and for each of them asking - is the pixel’s value the same as x or y? Yes? Cool, move to the next pixel. No? Alright, if this random number between zero and one is greater than 0.25, let’s make this pixel black, change the value of the pixel to 0, oh and also, what was the previous value of the pixel? Let’s subtract one from the total count of that value, so we can keep tracking how many pixels each image currently ‘occupies’.
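For anyone who wants to see that per-pixel pass spelled out, here is a rough Python reconstruction. It is a sketch rather than the original Processing code: the names `owner`, `colour`, `counts` and `active` are invented, the arrays are plain lists, and skipping pixels that are already 0 is my own assumption so the counts stay sensible.

```python
import random

def fade_inactive(owner, colour, counts, active, rng=random, threshold=0.25):
    """One fade pass over every pixel, as described above.

    owner[i]  - which image pixel i currently belongs to (0 = none)
    colour[i] - the pixel's colour (0 = black)
    counts    - how many pixels each image currently occupies
    active    - the (up to two) images currently being drawn
    """
    for i in range(len(owner)):
        image = owner[i]
        if image == 0 or image in active:
            continue  # already black (assumed), or belongs to an active image
        if rng.random() > threshold:
            colour[i] = 0       # make the pixel black
            owner[i] = 0        # it no longer belongs to any image
            counts[image] -= 1  # one fewer pixel for the old image

# Tiny 5-pixel 'screen'; at 1920*1080 this loop runs 2,073,600 times a frame.
owner = [1, 2, 3, 3, 0]
colour = [9, 9, 9, 9, 0]
counts = {1: 1, 2: 1, 3: 2}
fade_inactive(owner, colour, counts, active={1, 2})
```

Even in this toy form, the cost is clear: every pixel is visited every frame, whether or not anything changed.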


Yeah, the system was a bit slow. But that wasn’t the real issue. The issue was that I wanted to create such a robust and versatile system, that I didn’t really think about what the system had to do. Yes, the silhouette effect that happens as the image fades is quite cool, but past that - so what? Not only was it jarring to look at, it was also completely unnecessary. There was no conceptual reason for having this, in fact it was quite the opposite.

The whole idea of drawing the images was to layer all of these different places where I had lived and that influenced me one atop the other, and to show the viewer that this physical movement, expressed through their own movement and the movement of sound in the space, doesn’t allow for a single identity, for a single home. And here I was creating visuals where the other places, the other identities simply get removed. And sure, in a way, that is true - I have blocked out a few periods of my life from my memory, but that isn’t the point of the work. So, I made the decision that the whole system I was making had become too complex for its own good.

Rather than just removing those elements, though, I thought it was best to just take everything that I had learnt and write a new version of the program from scratch. Just before doing that, though, I played with adding a few simple calculations - a velocity-based approach to drawing the ‘rectangles’. The size of the image would only be as large as the speed at which the viewer moved their hands, meaning that one had to continue moving their hands to hear and see the story. As I knew the ‘center’ of the hand, and thus of the image, I could then use the velocity as an input for the size of the rectangle, setting one corner as the horizontal center minus one half the horizontal velocity and the vertical center minus one half the vertical velocity, and the other corner as the horizontal center plus one half the horizontal velocity and the vertical center plus one half the vertical velocity. Boom. Rectangle.
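The corner calculation is short enough to write out. This Python sketch only illustrates the arithmetic described above; the function name `velocity_rect` and the tuple layout are assumptions, not the original Processing code.

```python
def velocity_rect(center, velocity):
    """Return two opposite corners of a rectangle centred on the hand.

    Each corner is the centre minus or plus half the velocity on that axis,
    so a faster hand gives a larger rectangle and a still hand gives none.
    """
    cx, cy = center
    vx, vy = velocity
    first_corner = (cx - vx / 2, cy - vy / 2)
    second_corner = (cx + vx / 2, cy + vy / 2)
    return first_corner, second_corner

# A hand at (100, 50) moving at (20, 10) covers a 20 x 10 rectangle.
corners = velocity_rect((100, 50), (20, 10))
```

A negative velocity just swaps which corner comes first; the rectangle covers the same area either way.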

Having tested this, I began completely rewriting the code, including only what needed to be included. This time, I was only building for the present, not trying to future-proof the project. Seven code iterations later, I had a system that worked just as well as the previous one, but was much cleaner, and I was able to start working on all of the various extra elements past the main visuals. I began adding a title scene, rather than just having a blank screen, working on a reset mechanism, ensuring that the same images were not repeated (unless a reset happens), and sending new data to Max! Also, due to the wonderful GSA wi-fi, the Processing sketch began to send the data to some bizarre subnet. Because I did not have a static IP, and the oscP5 IP address function worked in bizarre ways, rather than broadcasting to one single client, I switched to a (deprecated, yet still working) protocol - multicast. In theory, this meant that more than one device could be ‘listening’ to the Processing sketch, and was a sort of insurance for myself, in case I did not receive the equipment I was hoping to get from the EMS for the degree show. This did mean some redesigning in Max as well; however, compared to some other issues, it was really a non-issue.

With a new Processing sketch, I had to redesign the messages I was sending to Max. As the Max patch had also changed a bit, to reflect the overall changes (from finger control to hand control), I had to rethink a few things. The playlist object accepts integers to control which sound in the list should be played; however, every time the message is received, it starts the clip again. So, I could not send the image number all of the time. I mitigated this by placing the image number at the end of the message, and sending the full length message only when the image changed. This way, the message was sent once, and there was no need to complicate both sides by making new types of messages. While this allowed me to keep the Max patch clean, it meant that the sound would play when it wasn’t supposed to: the image, and hence audio file, would only change when the hand was NOT visible (and thus the image and audio should both be off). This then required another value to be sent - a boolean signifying whether the file is alive or dead. So now, every time that the audio file was changed, and began playing, a zero was also sent. That zero was then slightly delayed in Max, and attached to a pause message, meaning that once the audio file had been switched - the audio paused. Problem solved!

The data I was receiving in Max for each hand, every frame, was as follows: alive/dead,pos.x,pos.y,pos.z,vel.x,vel.y,vel.z, image (the velocities could be removed, as they only served a purpose in Processing, but the three extra values don’t actually slow it down, and the messages are so fragile that I would rather keep a working and slightly unoptimized version than risk breaking it for unnoticeable gains).
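To make the message layout concrete, here is a small Python sketch of how such a message could be assembled. It is an illustration only: the function name `build_message` and the `last_image` argument are hypothetical, and the real sketch sent these values from Processing over OSC rather than building Python lists.

```python
def build_message(alive, pos, vel, image, last_image):
    """Build one hand's message for one frame.

    Always seven values: alive/dead, pos.x, pos.y, pos.z, vel.x, vel.y, vel.z.
    The image number is appended as an eighth value only when the image
    has changed, so the playlist is not restarted every frame.
    """
    message = [1 if alive else 0, *pos, *vel]
    if image != last_image:
        message.append(image)
    return message

# Same image as last frame: seven values.
steady = build_message(True, (0.1, 0.5, 0.2), (3.0, -1.0, 0.0), image=4, last_image=4)
# Image just changed (hand not visible, so 'dead'): eight values.
changed = build_message(False, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), image=5, last_image=4)
```

Putting the optional value last means the receiving side can unpack the first seven values the same way every frame.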

From 3000 values being inefficiently processed every second, to seven (the eighth only gets sent once every time you remove a hand) per hand per frame - 840 values per second, yet in such an efficient manner that reducing that number to 112 wasn’t even worth it. I think that speaks volumes for the amount I learned in such a short period of time.
