#nodejs api in ec2

Explore tagged Tumblr posts

Video

youtube

Create EC2 Instance in AWS | Deploy & Run Nodejs JavaScript API in EC2 S... Full Video Link - https://youtu.be/Ctq-WBMtnD0Hi, a new #video on #aws #ec2 #vm #setup & run #nodejs #javascript #api in EC2 instance published on #codeonedigest #youtube channel. @java #java #awscloud @awscloud #aws @AWSCloudIndia #Cloud #CloudComputing @YouTube #youtube #azure #msazure #codeonedigest @codeonedigest #aws #amazonwebservices #ec2 #awscloud #awstutorial #awstraining #awsec2 #awsec2instance #awsec2instancecreation #awsec2deploymenttutorial #awsec2createinstance #awsec2creation #awsec2instanceconnect #awsec2instancedeployment #awsec2instancecreationstepbystep #awsec2instancecreation2023 #ec2instancecreationinaws #ec2instanceconnect #ec2instancecreationinawslinux #nodejsapiproject #nodejsawsec2 #javascriptapiproject #nodejs

1 note

·

View note

Text

Flexpeak - Front e Back - Opções

Módulo 1 - Revisão de JavaScript e Fundamentos do Backend: • Revisão de JavaScript: Fundamentos • Variáveis e Tipos de Dados (let, const, var) • Estruturas de Controle (if, switch, for, while) • Funções (function, arrow functions, callbacks) • Manipulação de Arrays e Objetos (map, filter, reduce) • Introdução a Promises e Async/Await • Revisão de JavaScript: Programação Assíncrona e Módulos • Promises e Async/Await na prática Módulo 2 – Controle de Versão com Git / GitHub • O que é controle de versão e por que usá-lo? • Diferença entre Git (local) e GitHub (remoto) • Instalação e configuração inicial (git config) • Repositório e inicialização (git init) • Staging e commits (git add, git commit) • Histórico de commits (git log) • Atualização do repositório (git pull, git push) • Clonagem de repositório (git clone) • Criando um repositório no GitHub e conectando ao repositório local • Adicionando e confirmando mudanças (git commit -m "mensagem") • Enviando código para o repositório remoto (git push origin main) • O que são commits semânticos e por que usá-los? • Estrutura de um commit semântico: • Tipos comuns de commits semânticos(feat, fix, docs, style, refactor, test, chore) • Criando e alternando entre branches (git branch, git checkout -b) • Trabalhando com múltiplos branches • Fazendo merges entre branches (git merge) • Resolução de conflitos • Criando um Pull Request no GitHub Módulo 3 – Desenvolvimento Backend com NodeJS • O que é o Node.js e por que usá-lo? • Módulos do Node.js (require, import/export) • Uso do npm e package.json • Ambiente e Configuração com dotenv • Criando um servidor com Express.js • Uso de Middleware e Rotas • Testando endpoints com Insomnia • O que é um ORM e por que usar Sequelize? • Configuração do Sequelize (sequelize-cli) • Criando conexões com MySQL • Criando Models, Migrations e Seeds • Operações CRUD (findAll, findByPk, create, update, destroy) • Validações no Sequelize • Estruturando Controllers e Services • Introdução à autenticação com JWT • Implementação de Login e Registro • Middleware de autenticação • Proteção de rotas • Upload de arquivos com multer • Validação de arquivos enviados • Tratamento de erros com express-async-errors Módulo 4 - Desenvolvimento Frontend com React.js • O que é React.js e como funciona? • Criando um projeto com Vite ou Create React App • Estruturação do Projeto: Organização de pastas e arquivos, convenções e padrões • Criando Componentes: Componentes reutilizáveis, estruturação de layouts e boas práticas • JSX e Componentes Funcionais • Props e Estado (useState) • Comunicação pai → filho e filho → pai • Uso de useEffect para chamadas de API • Manipulação de formulários com useState • Context API para Gerenciamento de Estado • Configuração do react-router-dom • Rotas Dinâmicas e Parâmetros • Consumo de API com fetch e axios • Exibindo dados da API Node.js no frontend • Autenticação no frontend com JWT • Armazenamento de tokens (localStorage, sessionStorage) • Hooks avançados: useContext, useReducer, useMemo • Implementação de logout e proteção de rotas

Módulo 5 - Implantação na AWS • O que é AWS e como ela pode ser usada? • Criando uma instância EC2 e configurando ambiente • Instalando Node.js, MySQL na AWS • Configuração de ambiente e variáveis no servidor • Deploy da API Node.js na AWS • Deploy do Frontend React na AWS • Configuração de permissões e CORS • Conectando o frontend ao backend na AWS • Otimização e dicas de performance

Matricular-se

0 notes

Text

How to Deploy Your Full Stack Application: A Beginner’s Guide

Deploying a full stack application involves setting up your frontend, backend, and database on a live server so users can access it over the internet. This guide covers deployment strategies, hosting services, and best practices.

1. Choosing a Deployment Platform

Popular options include:

Cloud Platforms: AWS, Google Cloud, Azure

PaaS Providers: Heroku, Vercel, Netlify

Containerized Deployment: Docker, Kubernetes

Traditional Hosting: VPS (DigitalOcean, Linode)

2. Deploying the Backend

Option 1: Deploy with a Cloud Server (e.g., AWS EC2, DigitalOcean)

Set Up a Virtual Machine (VM)

bash

ssh user@your-server-ip

Install Dependencies

Node.js (sudo apt install nodejs npm)

Python (sudo apt install python3-pip)

Database (MySQL, PostgreSQL, MongoDB)

Run the Server

bash

nohup node server.js & # For Node.js apps gunicorn app:app --daemon # For Python Flask/Django apps

Option 2: Serverless Deployment (AWS Lambda, Firebase Functions)

Pros: No server maintenance, auto-scaling

Cons: Limited control over infrastructure

3. Deploying the Frontend

Option 1: Static Site Hosting (Vercel, Netlify, GitHub Pages)

Push Code to GitHub

Connect GitHub Repo to Netlify/Vercel

Set Build Command (e.g., npm run build)

Deploy and Get Live URL

Option 2: Deploy with Nginx on a Cloud Server

Install Nginx

bash

sudo apt install nginx

Configure Nginx for React/Vue/Angular

nginx

server { listen 80; root /var/www/html; index index.html; location / { try_files $uri /index.html; } }

Restart Nginx

bash

sudo systemctl restart nginx

4. Connecting Frontend and Backend

Use CORS middleware to allow cross-origin requests

Set up reverse proxy with Nginx

Secure API with authentication tokens (JWT, OAuth)

5. Database Setup

Cloud Databases: AWS RDS, Firebase, MongoDB Atlas

Self-Hosted Databases: PostgreSQL, MySQL on a VPS

bash# Example: Run PostgreSQL on DigitalOcean sudo apt install postgresql sudo systemctl start postgresql

6. Security & Optimization

✅ SSL Certificate: Secure site with HTTPS (Let’s Encrypt) ✅ Load Balancing: Use AWS ALB, Nginx reverse proxy ✅ Scaling: Auto-scale with Kubernetes or cloud functions ✅ Logging & Monitoring: Use Datadog, New Relic, AWS CloudWatch

7. CI/CD for Automated Deployment

GitHub Actions: Automate builds and deployment

Jenkins/GitLab CI/CD: Custom pipelines for complex deployments

Docker & Kubernetes: Containerized deployment for scalability

Final Thoughts

Deploying a full stack app requires setting up hosting, configuring the backend, deploying the frontend, and securing the application.

Cloud platforms like AWS, Heroku, and Vercel simplify the process, while advanced setups use Kubernetes and Docker for scalability.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/

0 notes

Text

Day ??? - unresponsive

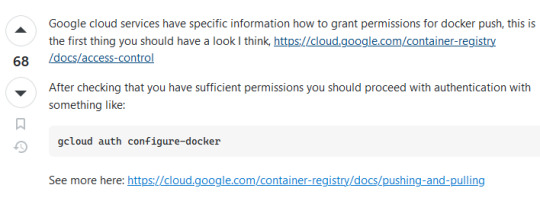

Well today... more like yesterday was the actual demo for the app... it took a while but finally deployed the backend on google cloud run. This video was very helpful

youtube

The initial plan I had for deployment was to just host an ec2 instance however the url link was over http instead of https, since we were using firebase hosting which has a policy against http content. It didn't work, so I had to re improvise and tried a lot of different ways which include:

Getting HTTPS manually using openssl, this didn't work as most browsers don't allow self signed certificates and firebase was no different

Using Netlify to host our NodeJS however their libraries were and documentation were either outdated or no longer working, and they updated their website so I wasn't sure on how exactly to deploy

Using firebase functions, this didn't work because of the way firebase preprocesses requests, it does some stuff to it like turning the request body into like a buffer so I would have to decrypt it on my end by adding like a middleware or switching from using multer to busboy, because my services need to handle image uploads so I gave up on that idea

Cloud Run, this actually worked well! Thanks to the tutorial I sent before, I wouldn't have understood the video if I didn't know much about docker. You can still follow along however it gets tedious, takes about 5-10 minutes to deploy and its effective and you have full control which is amazing! Running a docker container in the cloud is awesome. I did run into an issue during the video, and it was because my docker client wasn't authorized to push to gcr.io, a simple command to fix it was all I Needed thankfully it was fixed with one command

The rest of the day was spent with my team preparing for the presentation, our 3rd one. We were working and I was happy the backend was deployed, I feel like I'm getting more familiar with GCP. We were implementing features left and right, polishing whatever needed to. And when the demo came... well...

Just before the demo, we met up our client who was happy to see us and we were happy to see her, we wanted to show off all the features which took a while to make but yeah. There was a whoopsie, thanks to my miscommunication, I forgot to tell my stallion that we're using a shared variable that stores the apiUrl link and so he hard coded the localhost:8000, so some of his api wasn't getting used because it was trying to access localhost:8000 instead of the deployed links. We couldn't redeploy in time of the demo so we went in nervous.

The demo starts and everyone looks so scary, however my lecturer who I think may not like our team just left. The demo goes on and on, and one of the guys leaves. They ask me questions, I try my best like one was why do RabbitMQ's AMQP for communication between services instead of Restful HTTPS, the question was around security and I panicked and out right said something kinda not true but also true.

It ends, and they say our app was just too simple and needed more AI (not more features but more AI). The faces they gave, it was almost as if they were looking at poor helpless dumb little pups or people who worked last minute to finished. Our client, for the second time said that she was worried about us on whether we can finish the AI.

I'm a bit sad honestly... yeah the app was simple by my design... I'm just so sad... it doesn't help that I'm also going through a repetitive phase in my life where I start liking someone because we're so close and comfortable with each other only to find out that we were just very close friends and nothing romantic was built up.

I'm like really depressed because I can't really seem to catch a break. I want to improve the website but I'm just really sad now. Well... I honestly am trying to lose faith or what not,,,

0 notes

Text

9 lipca 2020

◢ #unknownews ◣

Dziś wyjątkowo w czwartek - zapraszam do lektury :)

1) SQL vs NoSQL - kiedy zdecydować się na który typ bazy? https://dev.to/ombharatiya/when-to-choose-nosql-over-sql-536p INFO: prosta analiza tematu z naciskiem na plusy i minusy rozwiązania NoSQL. Przydatne. 2) Wyszukiwanie tekstu z uwzględnieniem literówek - jak to działa? https://dev.to/akhilpokle/the-algorithm-behind-ctrl-f-3hgh INFO: implementacje z użyciem kilku algorytmów - rozwiązanie naiwne i algorytm Boyera Moorea plus omówienie wspomnienie innych algorytmów, które mogłyby tu pomóc 3) Brython - zastąp JavaScript na swojej stronie za pomocą... Pythona https://brython.info INFO: rzuć okiem na źródło tej strony. Tam po prostu osadzony jest czysty kod Pythona, który się wykonuje. Nie ma tam żadnego backendu! 4) Czy powinieneś używać tzw. 'karuzeli' na swojej stronie? Wyjaśnienie z użyciem karuzeli ;) http://shouldiuseacarousel.com INFO: niczego nie klikaj, po prostu przeczytaj przekaz, aby zrozumieć 5) Jak zbudować 10 popularnych layoutów webowych z użyciem prostego, często jednolinijkowego CSSa? https://web.dev/one-line-layouts/ INFO: autorka nazywa to 'single line CSS layouts', ale nie dlatego, że kod zajmuje jedną linijkę, ale dlatego, że 'jedna linijka z tego kodu realizuje koncepcję' 6) SASS (SCSS) - jak i w jakim celu rozpocząć swoją przygodę z preprocesorem arkuszy CSS https://dzone.com/articles/introduction-of-scss INFO: to znane i często stosowane rozwiązanie. Zobacz, dlaczego może ułatwić Ci prace nad stroną. 7) Jak szybko porównać wszystkie dostępne instancje EC2 w usłudze AWS? Jest do tego narzędzie https://ec2.shop INFO: przydatne zestawienie, które można przeszukiwać także przez command line (via curl) 8) Lekcje wyniesione z "50 days of CSS art" (challenge polegający na codziennym tworzeniu artystycznych obrazków w CSS) https://dev.to/s_aitchison/5-lessons-from-50-days-of-css-art-2ae1 INFO: kilka ciekawych przemyśleń na temat tworzenia bardziej zaawansowanych arkuszy. Warto też rzucić okiem na kody źródłowe z codepena 9) "Prefetching" może bardzo przyspieszyć ładowanie podstron w Twoim serwisie - chyba, że używasz nagłówka 'age', wtedy sprawy się komplikują https://timkadlec.com/remembers/2020-06-17-prefetching-at-this-age/ INFO: dobrze wyjaśniona sprawa wspomnianego nagłówka jak i samego procesu prefetchingu - jak to działa i jak współpracuje z cache przeglądarki 10) PHONK - środowisko do oskryptowania telefonu z androidem z użyciem JavaScriptu https://phonk.app INFO: piszesz kod na komputerze i od razu widzisz efekty na smartfonie. Masz dostęp do wielu API (GPS, sensory itp). 11) Nigdy nie robiłeś pull-requesta na GitHubie i trochę Cie to przeraża? Zacznij od tego. https://github.com/firstcontributions/first-contributions/blob/master/translations/README.pl.md INFO: Repozytorium uczące, w jaki sposób robi się pull-requesty. Prościej się tego wytłumaczyć chyba nie da, a do tego wszystko jest po polsku. 12) Używasz aplikacji Notion do organizowania swojego życia i projektów? Jeśli nie, to zacznij ;) https://dev.to/whoisryosuke/taking-notion-to-the-next-level-2nln INFO: praktyczne przedstawienie modułów dostępnych w Notion wraz z przykładami ich zastosowania w codziennym użyciu 13) Jak zmniejszyć obraz Dockera z aplikacją NodeJS? Przykład redukcji z 1.34GB do 157MB https://dev.to/itsopensource/how-to-reduce-node-docker-image-size-by-10x-1h81 INFO: autor używa lżejszego obrazu z nodeJS (bazującego na Alpine) i stosuje multi-stage build 14) Zaproś Ricka na swoją następną wideokonferencję w pracy... https://inviterick.com INFO: powiedzmy, że jest to soft działający w modelu RaaS (Rickroll as a Service) :D 15) Jedyna koszerna wyszukiwarka internetowa na świecie :o https://jewjewjew.com INFO: zapytania są cachowane we wtorek, a serwery zasilane są z akumulatorów i chłodzone pasywnie, aby wiatraki nie musiały pracować w szabat. 16) Obsługa płatności online z użyciem React + Stripe (pośrednik płatności) https://www.youtube.com/watch?v=MjMh62ZXlOw INFO: integracja ze Stripe (choć nieopłacalna na polskie warunki) może przydać Ci się przy realizacji projektów międzynarodowych, bo jest to standard w branży. 17) Strony internetowe, które wyglądają jak pulpity systemów operacyjnych https://bit.ly/2ZRMUcW INFO: spora kolekcja (74 sztuki) stron. Z tych, które przeglądałem, wszystkie były niezwykle starannie dopracowane. 18) Nauka Basica w 2020 roku? Tak! ale takiego 64-bitowego z opcjami kompilacji na Windowsa, Linuksa i Maca https://www.qb64.org/portal/ INFO: środowisko jest w pełni kompatybilne z oldschoolowym QBasic, ale dostosowano je do współczesnych komputerów. Wrzucam jako ciekawostkę 19) GUIetta - biblioteka do ekstremalnie prostej budowy GUI w Pythonie (QT) https://github.com/alfiopuglisi/guietta INFO: ja wiem, że takich bibliotek są setki, ale jak zobaczysz przykładowy kod źródłowy, to zrozumiesz o co chodzi. WOW! 20) EasyOCR - bardzo prosta (w użyciu) biblioteka do rozpoznawania tekstu na obrazkach (Python) https://github.com/JaidedAI/EasyOCR INFO: obsługuje ponad 40 języków (jest PL!). Całe rozpoznawanie tekstu to 2 linijki kodu (w pierwszej podajesz które języki Cię interesują, a w drugiej, który obrazek chcesz analizować) 21) Gość odzyskuje nagrania ze starych, sprzedawanych na Ebay, kamer policyjnych (body cam) https://twitter.com/d0tslash/status/1278414895487365121 INFO: jak widać, idzie mu to całkiem nieźle ;) 22) Budowa rozbudowanej aplikacji czytającej/zapisującej dane w chmurze, ale bez tworzenia własnego backendu https://dev.to/jiangh/building-feature-rich-app-with-zero-backend-code-2eno INFO: jako backend wykorzystano chmurę LeanCloud z dostępem przez API w JS. Dzięki temu 100% kodu jest we frontendzie. 23) Jeśli nie GitHub, to co? - 13 alternatyw dla tej usługi https://dzone.com/articles/top-13-github-alternatives-in-2020-free-and-paid INFO: każdy zamiennik został opisany z wymienieniem zalet i wad. Część rozwiązań jest płatna - wszystko zależy od tego, czego potrzebujesz. == LINKI TYLKO DLA PATRONÓW == 24) Ebooki dla DevOpsów i SRE - do czytania za darmo online (5 sztuk) https://uw7.org/un_5f0720913c4cf INFO: pierwszy i ostatni wymaga rejestracji w serwisie. Pozostałe są dostępne od ręki. 25) Lista darmowych kursów online dla iOS developerów - Udemy + Pluralsight https://uw7.org/un_5f0720967c0db INFO: w chwili gdy piszę te słowa, tylko jeden kurs z listy nie jest już dostępny (nr 3) 26) Kilkanaście filmów z konferencji, które mocno ukształtują Twoje postrzeganie JavaScriptu (jak to działa i dlaczego tak) https://uw7.org/un_5f07209bc2924 INFO: event loop, promisy, iteratory, generatory, prototypy, nasłuchiwanie eventów i kilka innych == Chcesz, aby Twój link pojawił się tutaj? Po prostu mi go zgłoś. To zupełnie NIC nie kosztuje - dodaję jednak tylko to, co mi przypadnie do gustu. https://mrugalski.pl/suggest.html

-- Zostań patronem: https://patronite.pl/unknow

0 notes

Text

StudySync: Software Engineer

Headquarters: Sonoma, CA URL: http://www.studysync.com

StudySync is the award-winning, flagship product of BookheadEd Learning LLC, a California-based company developing products that leverage forward-thinking designs and technologies to engage students while providing teachers and administrators with a relevant, easy-to-use platform that delivers adaptable, dynamic curriculum solutions. We are looking for a mid-level or senior software engineer with strong foundational HTML/CSS/JavaScript skills to join the StudySync engineering team. You will build browser-based K-12 education software, primarily in JavaScript using React. You have a good eye for UX/UI design and should be comfortable developing on the front-end as well as assisting with the design of the back-end API.

You are comfortable working in a distributed software development team and have the communication skills necessary to thrive in that environment. You also have prior experience building modern applications with examples you are proud to show off. Candidates seeking a full-stack position are welcome to apply.

Must have expertise in: • JavaScript • CSS • HTML • A modern, component-based SPA framework (React, Vue, Angular 2+)

An ideal candidate will have experience with: • React 16.8+ • State management frameworks (Redux preferred) • Web accessibility (WCAG Standards) • JSS/SASS

A full-stack candidate will have in-depth knowledge of: • Application and system architecture • AWS (EC2, ECS, S3, RDS, CloudFormation) • NodeJS • Postgres

The team is currently distributed across the US.

To apply: [email protected]

from We Work Remotely: Remote jobs in design, programming, marketing and more https://ift.tt/381cnTW from Work From Home YouTuber Job Board Blog https://ift.tt/380ylGC

0 notes

Photo

AWS LambdaとHyperledger Fabric SDK for Node.jsを利用してAmazon Managed Blockchainのブロックチェーンネットワークにアクセスする http://bit.ly/2WH8ORP

Amazon Managed BlockchainのブロックチェーンネットワークはVPC内に構築されるため、VPC外からブロックチェーンネットワークへアクセスするにはクライアントとなるアプリなりサービスを開発して経由する必要があります。 AWS LambdaでVPC内に関数を配置するとアクセス可能になるはずなので試してみました。

Amazon VPC 内のリソースにアクセスできるように Lambda 関数を構成する – AWS Lambda https://docs.aws.amazon.com/ja_jp/lambda/latest/dg/vpc.html

前提

Dockerを利用して開発環境を構築します。

AWS Lambdaへのデプロイにはserverlessを利用します。

Serverless – The Serverless Application Framework powered by AWS Lambda, API Gateway, and more https://serverless.com/

AWS Lambdaを利用するのでAWSアカウントや権限も必要となります。

> docker --version Docker version 18.09.2, build 6247962 > docker-compose --version docker-compose version 1.23.2, build 1110ad01 > sls --version 1.43.0

Amazon Managed Blockchainでブロックチェーンネットワークが構築済み

下記の2記事の手順でブロックチェーンネットワークが構築済みでfabcarのサンプルが動作する環境がある前提です。

Amazon Managed BlockchainでHyperledger Fabricのブロックチェーンネットワークを構築してみた – Qiita https://cloudpack.media/46963

Amazon Managed Blockchainで作成したブロックチェーンネットワークにHyperledger Fabric SDK for Node.jsでアクセスしてみる – Qiita https://cloudpack.media/47382

開発環境を構築する

Hyperledger FabricのSDKをAWS Lambda上で利用するにはLinuxでnpm installする必要があったのでDockerを利用して開発環境を構築します。

Dockerコンテナの立ち上げ

> mkdir 任意のディレクトリ > cd 任意のディレクトリ > touch Dockerfile > touch docker-compose.yml

AWS Lambdaで利用できるNode.jsのバージョンは8.10 と10.xとなります。 Hyperledger Fabric SDK for Node.jsは8.x系で動作するのでDockerにも8.xをインストールします。

AWS Lambda ランタイム – AWS Lambda https://docs.aws.amazon.com/ja_jp/lambda/latest/dg/lambda-runtimes.html

Dockerfile

FROM amazonlinux RUN yum update && \ curl -sL https://rpm.nodesource.com/setup_8.x | bash - && \ yum install -y gcc-c++ make nodejs && \ npm i -g serverless

docker-compose.yml

version: '3' services: app: build: . volumes: - ./:/src working_dir: /src tty: true

> docker-compose build > docker-compose run app bash

Node.jsのプロジェクト作成

コンテナが立ち上がったらserverlessでNode.jsのテンプレートでプロジェクトを作成します。

コンテナ内

$ sls create \ --template aws-nodejs \ --path fablic-app $ cd fablic-app $ npm init

Hyperledger Fabric SDK for Node.jsが利用できるようにpackage.jsonを編集してnpm installを実行します。

package.json

{ "name": "fabcar", "version": "1.0.0", "description": "Hyperledger Fabric Car Sample Application", "main": "fabcar.js", "scripts": { "test": "echo \"Error: no test specified\" && exit 1" }, "dependencies": { "fabric-ca-client": "~1.2.0", "fabric-client": "~1.2.0", "fs-extra": "^8.0.1", "grpc": "^1.6.0" }, "author": "", "license": "Apache-2.0", "keywords": [ ] }

コンテナ内

$ npm install

証明書の用意

Hyperledger Fabric SDK for Node.jsでブロックチェーンネットワークへアクセスするのに各種証明書が必要となるためプロジェクトに含めます。こちらはDockerコンテナ外で行います。

コンテナ外

> cd fablic-app > aws s3 cp s3://us-east-1.managedblockchain-preview/etc/managedblockchain-tls-chain.pem ./managedblockchain-tls-chain.pem # EC2インスタンスからhfc-key-storeフォルダを取得 > scp -r -i [EC2インスタンス用のpemファイルパス] [email protected]:/home/ec2-user/fabric-samples/fabcar/hfc-key-store ./hfc-key-store

実装

今回はブロックチェーンネットワークのステートDBから情報を取得する実装を行います。 下記記事でも利用しているquery.jsをAWS Lambdaで実行できるように編集しました。

Amazon Managed Blockchainで作成したブロックチェーンネットワークにHyperledger Fabric SDK for Node.jsでアクセスしてみる – Qiita https://cloudpack.media/47382

handler.jsはquery.jsのメソッドを呼び出し結果を返す実装にしました。

> cd fabric-app > tree -F -L 1 . . ├── handler.js ├── hfc-key-store/ ├── managedblockchain-tls-chain.pem ├── node_modules/ ├── package-lock.json ├── package.json ├── query.js └── serverless.yml

handler.js

var query = require('./query'); module.exports.hello = async (event) => { var result = await query.run(); return { statusCode: 200, body: JSON.stringify({ message: JSON.parse(result), input: event, }, null, 2), }; };

実装のポイントは下記となります。

証明書フォルダhfc-key-store を/tmp にコピーして利用

module.exports.run = async () => {} でhandler.jsから呼び出し可能にする

async/await で同期的に実行する

query.js

'use strict'; /* * Copyright IBM Corp All Rights Reserved * * SPDX-License-Identifier: Apache-2.0 */ /* * Chaincode query */ var Fabric_Client = require('fabric-client'); var path = require('path'); var util = require('util'); var os = require('os'); var fs = require('fs-extra'); // var fabric_client = new Fabric_Client(); // setup the fabric network var channel = fabric_client.newChannel('mychannel'); var peer = fabric_client.newPeer('grpcs://nd-xxxxxxxxxxxxxxxxxxxxxxxxxx.m-xxxxxxxxxxxxxxxxxxxxxxxxxx.n-xxxxxxxxxxxxxxxxxxxxxxxxxx.managedblockchain.us-east-1.amazonaws.com:30003', { pem: fs.readFileSync('./managedblockchain-tls-chain.pem').toString(), 'ssl-target-name-override': null}); channel.addPeer(peer); // var member_user = null; var store_base_path = path.join(__dirname, 'hfc-key-store'); var store_path = path.join('/tmp', 'hfc-key-store'); console.log('Store path:'+store_path); var tx_id = null; // create the key value store as defined in the fabric-client/config/default.json 'key-value-store' setting module.exports.run = async () => { // 証明書ファイルを/tmp ディレクトリにコピーして利用する fs.copySync(store_base_path, store_path); console.log('Store copied!'); return await Fabric_Client.newDefaultKeyValueStore({ path: store_path }).then((state_store) => { // assign the store to the fabric client fabric_client.setStateStore(state_store); var crypto_suite = Fabric_Client.newCryptoSuite(); // use the same location for the state store (where the users' certificate are kept) // and the crypto store (where the users' keys are kept) var crypto_store = Fabric_Client.newCryptoKeyStore({path: store_path}); crypto_suite.setCryptoKeyStore(crypto_store); fabric_client.setCryptoSuite(crypto_suite); // get the enrolled user from persistence, this user will sign all requests return fabric_client.getUserContext('user1', true); }).then((user_from_store) => { if (user_from_store && user_from_store.isEnrolled()) { console.log('Successfully loaded user1 from persistence'); member_user = user_from_store; } else { throw new Error('Failed to get user1.... run registerUser.js'); } // queryCar chaincode function - requires 1 argument, ex: args: ['CAR4'], // queryAllCars chaincode function - requires no arguments , ex: args: [''], const request = { //targets : --- letting this default to the peers assigned to the channel chaincodeId: 'fabcar', fcn: 'queryAllCars', args: [''] }; // send the query proposal to the peer return channel.queryByChaincode(request); }).then((query_responses) => { console.log("Query has completed, checking results"); // query_responses could have more than one results if there multiple peers were used as targets if (query_responses && query_responses.length == 1) { if (query_responses[0] instanceof Error) { console.error("error from query = ", query_responses[0]); } else { console.log("Response is ", query_responses[0].toString()); return query_responses[0].toString(); } } else { console.log("No payloads were returned from query"); } }).catch((err) => { console.error('Failed to query successfully :: ' + err); }); };

serverlessの設定

AWS LambdaでVPC内配置されるようにserverless.yml を編集します。 vpc やiamRoleStatements の定義については下記が参考になりました。 セキュリティグループとサブネットはAmazon Managed Blockchainで構築したブロックチェーンネットワークと同じものを指定します。

ServerlessでLambdaをVPC内にデプロイする – Qiita https://qiita.com/70_10/items/ae22a7a9bca62c273495

serverless.yml

service: fabric-app provider: name: aws runtime: nodejs8.10 iamRoleStatements: - Effect: "Allow" Action: - "ec2:CreateNetworkInterface" - "ec2:DescribeNetworkInterfaces" - "ec2:DeleteNetworkInterface" Resource: - "*" vpc: securityGroupIds: - sg-xxxxxxxxxxxxxxxxx subnetIds: - subnet-xxxxxxxx - subnet-yyyyyyyy functions: hello: handler: handler.hello events: - http: path: hello method: get

AWS Lambdaにデプロイする

> sls deploy Serverless: Packaging service... Serverless: Excluding development dependencies... Serverless: Uploading CloudFormation file to S3... Serverless: Uploading artifacts... Serverless: Uploading service fabric-app.zip file to S3 (40.12 MB)... Serverless: Validating template... Serverless: Updating Stack... Serverless: Checking Stack update progress... .............. Serverless: Stack update finished... Service Information service: fabric-app stage: dev region: us-east-1 stack: fabric-app-dev resources: 10 api keys: None endpoints: GET - https://xxxxxxxxxx.execute-api.us-east-1.amazonaws.com/dev/hello functions: hello: fabric-app-dev-hello layers: None Serverless: Removing old service artifacts from S3...

デプロイができたらエンドポイントにアクセスしてみます。

> curl https://xxxxxxxxxx.execute-api.us-east-1.amazonaws.com/dev/hello { "message": [ { "Key": "CAR0", "Record": { "make": "Toyota", "model": "Prius", "colour": "blue", "owner": "Tomoko" } }, { "Key": "CAR1", "Record": { "make": "Ford", "model": "Mustang", "colour": "red", "owner": "Brad" } }, (略) ], "input": { "resource": "/hello", "path": "/hello", "httpMethod": "GET", (略) } }

はい。 無事にAWS Lambda関数からHyperledger Fabric SDK for Node.jsを利用してブロックチェーンネットワークにアクセスすることができました。

VPC内にLamnbda関数を配置する必要があるため、ENI(仮想ネットワークインターフェース)の利用に伴う制限や起動速度に課題が発生するかもしれませんので、実際に利用する際には負荷検証などしっかりと行う必要がありそうです。

AWS LambdaをVPC内に配置する際の注意点 | そるでぶろぐ https://devlog.arksystems.co.jp/2018/04/04/4807/

参考

Amazon VPC 内のリソースにアクセスできるように Lambda 関数を構成する – AWS Lambda https://docs.aws.amazon.com/ja_jp/lambda/latest/dg/vpc.html

Serverless – The Serverless Application Framework powered by AWS Lambda, API Gateway, and more https://serverless.com/

Amazon Managed BlockchainでHyperledger Fabricのブロックチェーンネットワークを構築してみた – Qiita https://cloudpack.media/46963

Amazon Managed Blockchainで作成したブロックチェーンネットワークにHyperledger Fabric SDK for Node.jsでアクセスしてみる – Qiita https://cloudpack.media/47382

AWS Lambda ランタイム – AWS Lambda https://docs.aws.amazon.com/ja_jp/lambda/latest/dg/lambda-runtimes.html

ServerlessでLambdaをVPC内にデプロイする – Qiita https://qiita.com/70_10/items/ae22a7a9bca62c273495

AWS LambdaをVPC内に配置する際の注意点 | そるでぶろぐ https://devlog.arksystems.co.jp/2018/04/04/4807/

元記事はこちら

「AWS LambdaとHyperledger Fabric SDK for Node.jsを利用してAmazon Managed Blockchainのブロックチェーンネットワークにアクセスする」

June 11, 2019 at 02:00PM

0 notes

Text

Head of Tech job at StyleTribute Singapore

StyleTribute, the company behind StyleTribute.com (live in Oct'13) & KidzStyle.com (live in Feb'15), is a Singapore-based VC-funded start-up that aims at revolutionizing the second-hand fashion industry, combining the premium shopping experience and assortment of Net-a-porter with the convenience and user-friendliness of a marketplace solution like Airbnb.

We are looking for an experienced, highly motivated and hands-on Head of Tech to lead the development of StyleTribute’s tech assets. The person in this role will own critical systems throughout the platform and be responsible for their performance.

You will be responsible in building an off-shore team of techs and leading the implementation amongst others, of new website/mobile features, building B2B APIs, building programs and tools for our in-house team, further improving our IOS application and developing an android application which is at the core of StyleTribute’s vision and which will revolutionize the 2nd hand market.

You will work closely with the Founder and CEO of StyleTribute as well as with the Head on Digital, helping the company to reach its next stage of growth.

Are you passionate about programming? Are you obsessed with solving problems and building things? Are you constantly thinking about new creative ways to solve a programmatic task?

And most importantly, are you looking to step up a role in your career, punching above your weight class, proving you can succeed in an extremely challenging role, with infinite career upside?

If the answer is ‘YES’, we want to hear from you.

RESPONSIBILITIES

· IMPROVE OUR IOS APPLICATION: Our Seller App is a native iOS app written in Objective C.

· DEVELOP OUR ANDROID APPLICATION

· DEVELOP B2B APIs: Further our goal of automating data exchange with our B2B partners.

· ENHANCE OUR B2C DESKTOP AND MOBILE INTERFACES

· IMPLEMENT TOOLS FOR OUR IN-HOUSE OPERATIONS: Designing systems and organizations that are scalable and maintainable, while putting in place agile processes agile processes that enable our teams to deliver high quality products on time.

· BUILD A CRM TOOL

· PROJECT & TEAM MANAGEMENT - Oversee and coordinate the work of our Social Media Manager and Graphic designer

- Work alongside our Tech team to build new marketing tools, additional tracking integrations or new onsite SEO improvements

- Communication; managing expectations with business and product owners.

· SUPPORT DIGITAL MARKETING: Setting up trackers and A/B testing and helping out with SEO requests.

REQUIREMENTS

We are looking for an exceptional talent who aspires at being part of a revolutionary start-up. Someone that:

· Has a minimum of 3-4 years of working experience in a tech team of an online venture (ideally an eCommerce startup).

· You are a coder at heart. You like hard problems, but know how to keep things simple. You would love to do more coding yourself, but accept that you can bring most value by helping others excel.

· You have built large-scale applications with modern web frameworks (Java/Spring, PHP, AngularJS or React, NodeJS).

· You can deal with (and preferably limit) complexity.

· You are a fast thinker, hands-on manager and a strong communicator - you think and act like an entrepreneur.

· You know agile. Not just from books. You know how to do fast release cycles without compromising on quality.

· You possess not only top-notch tech skills, but also keen business skills honed through similar previous roles

· You are both a strategic as well as an operational leader; you can establish a clear tech vision and then lead it to fruition

· You are passionate about the latest stack; you have the full overview of the latest methodologies and innovations, and can choose the best to implement

· Can work independently with minimal supervision and a high level of proactivity

· Demonstrated success driving cross-functional initiatives to completion

· Has a can-do attitude

· Is a hard-working and driven person

· Is genuinely passionate about tech and start ups

TECHNICAL SKILLS

Amazon Web Services (Specifically EB, S3, Lambda, RDS, EC2, SQS)

Continuous Deployment (we are using CircleCI)

Development workflows with git

Serverless Framework

Java/Spring/Springboot

NodeJS

AngularJS (1.5)

PHP, preferably Magento experience

BENEFITS

· Opportunity to lead a fundamental function within the company

· Work hand-in-hand with our CEO

· Being able to directly impact the long term success and growth of the business

· Opportunity to grow long term in a highly ambitious and investors-backed company

· Unparalleled opportunity to gain top-notch marketing experience in one of today’s hottest emerging markets and industries

· Work permit sponsorship for expatriates

· Flexible working environment and hours

· Competitive salary

StartUp Jobs Asia - Startup Jobs in Singapore , Malaysia , HongKong ,Thailand from http://www.startupjobs.asia/job/32354-head-of-tech-it-job-at-styletribute-singapore Startup Jobs Asia https://startupjobsasia.tumblr.com/post/164478949924

1 note

·

View note

Video

youtube

Create EC2 Instance in AWS | Deploy & Run Nodejs JavaScript API in EC2 S...

0 notes

Text

Handling Hawk’s Deluge of Data with AWS

When a user loads a web page, all the associated code runs on their computer. Unless communicated explicitly to a server, what they do with the code is unknown. Choices they make, errors and UX bugs are all locked away on their computer. And then they vanish forever when the user closes the browser window.

This is a problem for developers, who need to know those things.

At Cyber Care, we became dissatisfied with the available solutions to that problem. Most locked you into a funnel paradigm, or required you to interpret complex heatmaps. Some only handled error reporting. We wanted a solution that would do what we needed, without constraining us in ways we weren't committed to.

So we started developing Hawk: our answer to the problem.

One major technical difficulty with Hawk, from the beginning, was data volume. We didn't have the budget to spin up huge, inefficient servers. But as Hawk ran day by day during development, the pile of data mounted higher and higher.

We went through several server paradigms to handle it.

Starting Simple

In our first iteration, our “reporter” script (the JavaScript file that Hawk users included on their websites to facilitate reporting data to Hawk) sent data to REST endpoints on a NodeJS server, which then stored the data in MongoDB. This approach was simple and familiar, and allowed us to easily develop Hawk locally and deploy it in production.

For our production server, we picked AWS Lightsail for its low price, but one of the downsides is that (for the server size we selected), you’re expected to use an average of no more than 10% CPU. When you exceed the limit, the server slows to a crawl. On top of that, disk usage is throttled too.

Hawk’s most important feature was providing a visual recording of what the user actually saw on their screen. Our initial implementation was to send the full HTML serialization of the DOM to the server each time something changed in the DOM. We were also:

capturing details about JS errors and failed XHR and fetch requests,

using WebSockets to send the HTML to site owners logged in on usehawk.com, so they could watch sessions live

using Puppeteer to render the HTML to PNG images to include in email notifications

so it was easy to overwhelm our server.

A Little Lambda

The first optimization we made was moving the storage of HTML snapshots to S3 and the rendering of PNG images into a Lambda function. We still had the data coming into our server, but rather than writing it to disk in our Mongo database, we sent the HTML to S3 and kicked off a Lambda function. The Lambda function loaded the HTML from S3 in Puppeteer, took a screenshot, and saved the PNG into S3.

Lambda was a much better choice for running Puppeteer and rendering HTML to PNGs than our resource-constrained Lightsail server. With Lambda, you’re only charged for the time when your function is running and Amazon handles scaling for you. If 100 requests come in at the same time, you can run 100 instances of your Lambda function concurrently. And if there’s no traffic between midnight and 6:00am, you don’t pay anything during that period. Lambda also allows your function to remain loaded in memory for a period of time after it completes. They don’t charge for this time, but in our case, this meant we didn’t have to wait for Chrome to launch each time our function ran.

This was a good first step, but we still had a lot of data flowing through our humble Lightsail server.

API Gateway, Lambda and S3

We knew that our Lightsail server was the center of our performance problems. We could have upgraded to a larger server (in EC2, for example), but while this might “fix” things in the short-term, it wouldn’t scale well as Hawk grew. After our positive experience with Lambda, we knew that we wanted to move as much as possible off of our server.

We used AWS API Gateway as the new entry point for receiving data from our reporter script. We created an API for each category we needed to handle (snapshot [HTML] data, events, site-specific configuration, etc.). Each API had from one to a handful of REST endpoints that invoked a Lambda function. We unified the APIs under a single domain name using API Gateway Custom Domain feature.

Data Storage

For data storage, we knew that we didn’t want to use our MongoDB server. We considered using AWS’s schemaless database, DynamoDB. However, querying in DynamoDB seemed very limited (dependent on indices, with additional charges for each index). We decided that S3 (although not designed to be a database) would work just as well for our purposes. S3 makes it easy to list files in ascending order, with an optional prefix. So for events (including page navigations and errors), we organized them in S3 by account, then by site, and then using an “inverted timestamp”, for example:

5cd31ad21d581b0071b53abc/5cd31ad21d581b0071b53def/7980-94-90T78:68:…

The timestamp is generated by taking an ISO-8601 formatted date and subtracting each digit from 9; this results in the newest events being sorted at the top, which is the order Hawk wanted to display them in the UI.

Live View

To support live viewing of sessions, we needed a way to get the data from the visitor to the site owner. This involved a couple of Lambda functions and some SQS queues: On the visitor side of things:

Our html-to-s3 Lambda function accepts screenshot data (HTML, window dimensions, etc.) from a site visitor and stores it in S3.

It then checks for the existence of an SQS queue named live-viewers-requests-{siteId}-{sessionId}

If such a queue exists, it reads messages off of that queue. Each message contains the name of a queue that should be notified of new screenshot data for the session.

It sends the name of the screenshot data in S3 to each queue found in step 3.

And then on the viewer side:

Our live-viewer Lambda function takes site and session ids as parameters, and the name of the last screenshot the viewer received.

It queries S3 to see if a newer screenshot is available. If so, it returns that screenshot.

Otherwise, it creates a randomly-named SQS queue, and sends a message containing that random queue name to live-viewers-requests-{siteId}-{sessionId}.

It then waits for a message on the randomly-named queue. If it receives one within 23 seconds, it returns the screenshot contained in the message.

Otherwise, it returns a specific error code to Hawk's front-end to indicate that no new data was available. The front-end can then retry the request.

Keeping Lambda functions small and fast

API Gateway APIs time out after 29 seconds. In order to avoid having our requests time out, and to ensure we didn't slow down our customer's sites by having concurrent, long-running requests, we split some of our endpoints into multiple Lambda functions. For example, the html-to-s3 function we mentioned above is actually split into a couple of pieces. The first accepts the HTML from the client, stores it in S3, asynchronously kicks of the next Lambda function in the process, and returns as quickly as possible. The next Lambda function in the chain deals with SQS, and if necessary, kicks off a final Lambda function to render the HTML to a PNG image.

Authentication

When a Hawk user wanted to retrieve data, our web app used the /signin endpoint on our Lightsail server, which returned a JWT. Subsequent requests were then sent to API Gateway, with the JWT included in a request header. Our Lambda functions could then validate the signature on the JWT and extract the necessary data (eg. the account id) without needing to contact our server.

We're happy with where Hawk ended up. If your team is dealing with a project that produces an overwhelming amount of data, maybe something we did might work for you.

0 notes

Text

持续部署微服务的实践和准则

当我们讨论微服务架构时,我们通常会和Monolithic架构(单体架构 )进行比较。

在Monolithic架构中,一个简单的应用会随着功能的增加、时间的推移变得越来越庞大。当Monoltithic App变成一个庞然大物,就没有人能够完全理解它究竟做了什么。此时无论是添加新功能,还是修复Bug,都是一个非常痛苦、异常耗时的过程。

Microservices架构渐渐被许多公司采用(Amazon、eBay、Netflix),用于解决Monolithic架构带来的问题。其思路是将应用分解为小的、可以相互组合的Microservices。这些Microservices通过轻量级的机制进行交互,通常会采用基于HTTP协议的服务。

��个Microservices完成一个独立的业务逻辑,它可以是一个HTTP API服务,提供给其他服务或者客户端使用。也可以是一个ETL服务,用于完成数据迁移工作。每个Microservices除了在业务独立外,也会有自己独立的运行环境,独立的开发、部署流程。

这种独立性给服务的部署和运营带来很大的挑战。因此持续部署(Continuous Deployment)是Microservices场景下一个重要的技术实践。本文将介绍持续部署Microservices的实践和准则。

实践:

使用Docker容器化服务

采用Docker Compose运行测试

准则:

构建适合团队的持续部署流水线

版本化一切

容器化一切

使用Docker容器化服务

我们在构建和发布服务的时候,不仅要发布服务本身,还需要为其配置服务器环境。使用Docker容器化微服务,可以让我们不仅发布服务,同时还发布其需要的运行环境。容器化之后,我们可以基于Docker构建我们的持续部署流水线:

上图描述了一个基于Ruby on Rails(简称:Rails)服务的持续部署流水线。我们用Dockerfile配置Rails项目运行所需的环境,并将Dockerfile和项目同时放在Git代码仓库中进行版本管理。下面Dockerfile可以描述一个Rails项目的基础环境:

FROM ruby:2.3.3 RUN apt-get update -y && \ apt-get install -y libpq-dev nodejs git WORKDIR /app ADD Gemfile /app/Gemfile ADD Gemfile.lock /app/Gemfile.lock RUN bundle install ADD . /app EXPOSE 80 CMD ["bin/run"]

在持续集成服务器上会将项目代码和Dockerfile同时下载(git clone)下来进行构建(Build Image)、单元测试(Testing)、最终发布(Publish)。此时整个构建过程都基于Docker进行,构建结果为Docker Image,并且将最终发布到Docker Registry。

在部署阶段,部署机器只需要配置Docker环境,从Docker Registry上Pull Image进行部署。

在服务容器化之后,我们可以让整套持续部署流水线只依赖Docker,并不需要为环境各异的服务进行单独配置。

使用Docker Compose运行测试

在整个持续部署流水线中,我们需要在持续集成服务器上部署服务、运行单元测试和集成测试Docker Compose为我们提供了很好的解决方案。

Docker Compose可以将多个Docker Image进行组合。在服务需要访问数据库时,我们可以通过Docker Compose将服务的Image和数据库的Image组合在一起,然后使用Docker Compose在持续集成服务器上进行部署并运行测试。

上图描述了Rails服务和Postgres数据库的组装过程。我们只需在项目中额外添加一个docker-compose.yml来描述组装过程:

db: image: postgres:9.4 ports: - "5432" service: build: . command: ./bin/run volumes: - .:/app ports: - "3000:3000" dev: extends: file: docker-compose.yml service: service links: - db environment: - RAILS_ENV=development ci: extends: file: docker-compose.yml service: service links: - db environment: - RAILS_ENV=test

采用Docker Compose运行单元测试和集成测试:

docker-compose run -rm ci bundle exec rake

构建适合团队的持续部署流水线

当我们的代码提交到代码仓库后,持续部署流水线应该能够对服务进行构建、测试、并最终部署到生产环境。

为了让持续部署流水线更好的服务团队,我们通常会对持续部署流水线做一些调整,使其更好的服务于团队的工作流程。例如下图所示的,一个敏捷团队的工作流程:

通常团队会有业务分析师(BA)做需求分析,业务分析师将需求转换成适合工作的用户故事卡(Story Card),开发人员(Dev)在拿到新的用户故事卡时会先做分析,之后和业务分析师、技术主管(Tech Lead)讨论需求和技术实现方案(Kick off)。

开发人员在开发阶段会在分支(Branch)上进行开发,采用Pull Request的方式提交代码,并且邀请他人进行代码评审(Review)。在Pull Request被评审通过之后,分支会被合并到Master分支,此时代码会被自动部署到测试环境(Test)。

在Microservices场景下,本地很难搭建一整套集成环境,通常测试环境具有完整的集成环境,在部署到测试环境之后,测试人员(QA)会在测试环境上进行测试。

测试完成后,测试人员会跟业务分析师、技术主管进行验收测试(User Acceptance Test),确认需求的实现和技术实现方案,进行验收。验收后的用户故事卡会被部署到生产环境(Production)。

在上述团队工作的流程下,如果持续部署流水线仅对Master分支进行打包、测试、发布。在开发阶段(即:代码还在分支)时,无法从持续集成上得到反馈,直到代码被合并到Master并运行构建后才能得到反馈,通常会造成“本地测试成功,但是持续集成失败”的场景。

因此,团队对仅基于Master分支的持续部署流水线做一些改进。使其可以支持对Pull Request代码的构建:

如上图所示:

持续部署流水线区分Pull Request和Master。Pull Request上只运行单元测试,Master运行完成全部构建并自动将代码部署到测试环境。

为生产环境部署引入手动操作,在验收测试完成之后再手动触发生产环境部署。经过调整后的持续部署流水线可以使团队在开发阶段快速从持续集成上得到反馈,并且对生产环境的部署有更好的控制。

版本化一切

版本化一切,��将服务开发、部署相关的系统都版本化控制。我们不仅将项目代码纳入版本管理,同时将项目相关的服务、基础设施都进行版本化管理。 对于一个服务,我们一般会为它单独配置持续部署流水线,为它配置独立的用于运行的基础设施。此时会涉及两个非常重要的技术实践:

构建流水线即代码

基础设施即代码

构建流水线即代码。通常我们使用Jenkins或者Bamboo来搭建配置持续部署流水线,每次创建流水线需要手动配置,这些手动操作不易重用,并且可读性很差,每次对流水线配置的改动并不会保存在历史记录中,也就是说我们无从追踪配置的改动。

在今年上半年,团队将所有的持续部署流水线从Bamboo迁移到了BuildKite,BuildKite对构建流水线即代码有很好的支持。下图描述了BuildKite的工作方式:

在BuildKite场景下,��们会在每个服务代码库中新增一个pipeline.yml来描述构建步骤。构建服务器(CI Service)会从项目的pipeline.yml中读取配置,生成构建步骤。例如,我们可以使用如下代码描述流水线:

steps: - name: "Run my tests" command: "shared_ci_script/bin/test" agents: queue: test - wait - name: "Push docker image" command: "shared_ci_script/bin/docker-tag" branches: "master" agents: queue: test - wait - name: "Deploy To Test" command: "shared_ci_script/bin/deploy" branches: "master" env: DEPLOYMENT_ENV: test agents: queue: test - block - name: "Deploy to Production" command: "shared_ci_script/bin/deploy" branches: "master" env: DEPLOYMENT_ENV: production agents: queue: production

在上述配置中,command中的步骤(即:test、docker-tag、deploy)分别是具体的构建脚本,这些脚本被放在一个公共的shared_ci_script代码库中,shared_ci_script会以git submodule的方式被引入到每个服务代码库中。

经过构建流水线即代码方式的改造,对于持续部署流水线的任何改动都会在Git中被追踪,并且有很好的可读性。

基础设施即代码。对于一个基于HTTP协议的API服务基础设施可以是:

用于部署的机器

机器的IP和网络配置

设备硬件监控服务(CPU,Memory等)

负载均衡(Load Balancer)

DNS服务

AutoScaling Service(自动伸缩服务)

Splunk日志收集

NewRelic性能监控

PagerDuty报警

这些基础设施我们可以使用代码进行描述,AWS Cloudformation在这方面提供了很好的支持。我们可以使用AWS Cloudformation设计器或者遵循AWS Cloudformation的语法配置基础设施。下图为一个服务的基础设施构件图,图中构建了上面提到的大部分基础设施:

在AWS Cloudformation中,基础设施描述代码可以是JSON文件,也可以是YAML文件。我们将这些文件也放到项目的代码库中进行版本化管理。

所有对基础设施的操作,我们都通过修改AWS Cloudformation配置进行修改,并且所有修改都应该在Git的版本化控制中。

由于我们采用代码描述基础设施,并且大部分服务遵循相通的部署流程和基础设施,基础设施代码的相似度很高。DevOps团队会为团队创建属于自己的部署工具来简化基础设施配置和部署流程。

容器化一切

通常在部署服务时,我们还需要一些辅助服务,这些服务我们也将其容器化,并使用Docker运行。下图描述了一个服务在AWS EC2 Instance上面的运行环境:

在服务部署到AWS EC2 Instance时,我们需要为日志配置收集服务,需要为服务配置Nginx反向代理。

按照12-factors原则,我们基于fluentd,采用日志流的方式处理日志。其中logs-router用来分发日志、splunk-forwarder负责将日志转发到Splunk。

在容器化一切之后,我们的服务启动只需要依赖Docker环境,相关服务的依赖也可以通过Docker的机制运行。

总结

Microservices给业务和技术的扩展性带来了极大的便利,同时在组织和技术层面带来了极大的挑战。由于在架构的演进过程中,会有很多新服务产生,持续部署是技术层面的挑战之一,好的持续部署实践和准则可以让团队从基础设施抽离出来,关注与产生业务价值的功能实现。

持续部署微服务的实践和准则,首发于文章 - 伯乐在线。

1 note

·

View note

Text

July 16, 2020 at 10:00PM - The AWS Certification Training Master Class Bundle (95% discount) Ashraf

The AWS Certification Training Master Class Bundle (95% discount) Hurry Offer Only Last For HoursSometime. Don't ever forget to share this post on Your Social media to be the first to tell your firends. This is not a fake stuff its real.

The AWS SysOps Associate certification training program is designed to give you exposure to the highly scalable Amazon Web Services (AWS) cloud platform. You’ll learn how to analyze various CloudWatch metrics, the major monitoring solution in AWS and learn to build scalable fault-tolerant architecture using ELB, AS and Route 53. You’ll gain a deep understanding of cost-reduction opportunities, tagging in analysis, cloud deployment and provisioning with CloudFormation. You will also learn data management and storage using EC2, EBS, S3, Glacier, and data lifecycle and get an overview of AWS networking and security.

Access 15 hours of high-quality training

Deploy, manage, & operate scalable, highly available and fault-tolerant systems on AWS

Migrate an existing on-premises application to AWS

Ensure data integrity & data security on AWS technology

Select the appropriate AWS service based on compute, data or security requirements

Identify appropriate use of AWS operational best practices

Understand operational cost control mechanisms & estimate AWS usage costs

This AWS Technical Essentials course is designed to train participants on various AWS products, services, and solutions. This course, prepared in line with the AWS syllabus will help you become proficient in identifying and efficiently using AWS services. This course ensures that you are well versed in using the AWS platform and will help you learn how to use the AWS console to create instances, S3 buckets, and more.

Access 7 hours of high-quality e-learning content 24/7

Recognize terminology & concepts as they relate to the AWS platform

Navigate the AWS Management Console

Understand the security measures AWS provides

Differentiate AWS Storage options & create Amazon S3 bucket

Recognize AWS Compute & Networking options and use EC2 and EBS

Describe Managed Services & Database options

Use Amazon Relational Database Service (RDS) to launch an applicaton

Identify Deployment & Management options

This AWS certification training is designed to help you gain an in-depth understanding of Amazon Web Services (AWS) architectural principles and services. You will learn how cloud computing is redefining the rules of IT architecture and how to design, plan, and scale AWS Cloud implementations with best practices recommended by Amazon. The AWS Cloud platform powers hundreds of thousands of businesses in 190 countries, and AWS certified solution architects take home about $126,000 per year.

Access 15 hours of high-quality e-learning content 24/7

Formulate solution plans & provide guidance on AWS architectural best practices

Design & deploy scalable, highly available, & fault tolerant systems on AWS

Identify the lift & shift of an existing on-premises application to AWS

Decipher the ingress & egress of data to and from AWS

Select the appropriate AWS service based on data, computer, database, or security requirements

Estimate AWS costs & identify cost control mechanisms

AWS Database Migration Service is an innovative service that helps you easily migrate your databases to the AWS cloud. This course will demonstrate the key functionality of AWS Database Migration Service and will help you understand how to easily and securely move databases into the AWS cloud platform to take advantage of the cost savings and scalability of AWS.

Access 3 hours of self-paced learning 24/7

Get an overview of AWS DMS

Understand how the AWS Schema Conversion tool works

Review the AWS Database Migration Service

Learn the three types of AWS Database Migration Service

This course will provide an overview of AWS Lambda, its components, the functions, roles, and policies you can create within Lambda. You will learn how to manage, monitor, and debug Lambda functions, review CloudTrail API calls and logs, and use aliases and versions. You will also learn how to deploy Python, NodeJS, and Java codes to Lambda functions, and integrate with other AWS services like S3 and API Gateway.

Access 4 hours of self-paced learning 24/7

Understand the basic security configurations in Lambda

Learn how to integrate Lambda w/ other AWS Services like S3 & API Gateway

Monitor & log Lambda Functions

Understand the steps to review CloudWatch Logs & API Calls in CloudTrail for Lambda and other AWS Services

Create versions & aliases of Lambda Functions

Get an introduction to VPC Endpoints & AWS Mobile SDK

Want complete control over your virtual networking environment and the leeway to launch AWS resources by customizing the IP address range, route tables, and network gateways? Then this Amazon VPC training is the right course to take! This course provides you with details of Amazon Virtual Private Cloud (VPC), which allows the creation of custom cloud-based networks.

Access 1 hours of self-paced learning 24/7

Understand the basic concepts of Amazon VPC

Learn about subnets, internet gateways, route tables, NAT devices, security groups, & implement these methodologies in practical scenarios

Create a custom VPC, Elastic IP address, subnets, & security groups

The cloud is taking the IT industry by storm! Stay ahead of the curve by taking this Amazon EC2 course and utilize EC2 to gain complete control over your computer resources and configure, compute, and scale capacity with minimal friction. This course lays emphasis on providing you with EC2 fundamentals and contains practice modules to help you launch and connect to an EC2 Linux and Windows instance.

Access 2 hours of self-paced learning 24/7

Understand the basic concepts of Amazon EC2

Learn about Amazon Elastic Book Store (EBS), Elastic Load Balancing (ELB) & implement them

Gain knowledge of EC2 best practices & costs

Configure ELB

Grasp AWS Lambda, Elastic Beanstalk, & Command Line Interface terminologies

Launch & connect to ECB Linux & Windows instances

Create an Amazon Machine Image (AMI)

Looking for a reliable, scalable, simple, fast, and cost-effective way to route end users to internet applications? Amazon Route 53 ensures the ability to connect user requests to infrastructure efficiently, running inside and outside of AWS. This course on Route 53 provides users with an overview of the Amazon DNS service. You’ll be introduced to the terminologies and uses of Route 53 to host your own domain names. The course covers everything from a detailed overview of Route 53 to Routing policies, best prices, and cost.

Access 25 minutes of self-paced learning 24/7

Understand the basic concepts of Route 53

Learn about routing policies & best practices

Host your own DNS on Route 53

Use Route 53 to map domain names to your Amazon EC2 instances, Amazon CloudFront distributions, & other AWS resources

Route internet traffic to your domain resources & manage traffic globally across various routing types

Execute DNS health check using Route 53

The need to effortlessly and securely collect, analyze and store data on a massive scale is paramount today. The course starts with an overview of S3 followed by its lifecycle management, best practices, and costs. Integration of Amazon S3 with CloudFront and Import/Export services are clearly explained. In a nutshell, you’ll discover the uses, types, and concepts of Amazon S3.

Access 1 hour of self-paced learning 24/7

Use S3 for media storage for Big Data Analytics & serverless computing applications

Understand the basic concepts of Amazon S3

Learn about Amazon S3 lifecycle management

Integrate S3 w/ CloudFront & Import/Export services

Comprehend Amazon S3 best practices & costs

Create & access a safe S3 buckets and add files to it

Manage & encrypt S3 files w/ free tools

from Active Sales – SharewareOnSale https://ift.tt/2Y21a1m https://ift.tt/eA8V8J via Blogger https://ift.tt/2CK9Kvc #blogger #bloggingtips #bloggerlife #bloggersgetsocial #ontheblog #writersofinstagram #writingprompt #instapoetry #writerscommunity #writersofig #writersblock #writerlife #writtenword #instawriters #spilledink #wordgasm #creativewriting #poetsofinstagram #blackoutpoetry #poetsofig

0 notes

Text

Java Fullstack Developer with AWS

Preferred skills •Experience building front-end applications using HTML5, CSS3, JS, Angular 4/5/6 (NOT AngularJS). •Working understanding of Angular-CLI and NodeJS. •Strong fundamentals of CSS preprocessors - LESS , SASS or SCSS . •Experienced with AWS or other Cloud Services (CloudFormation, Lambda, ECS, EC2, IAM, RDS etc). •Experience with REST- or SOAP-based web services, APIs, XML, JSON, JavaScript, Python/ Node.js/ J2EEE/JAVA, and design patterns •Passionate about learning new things. •Experience with unit testing Angular apps using Karma and Jasmine. Keywords: Core Java, Spring , Hibernate, Collections, Spring Boot, AWS, Angular(Which version used) Reference : Java Fullstack Developer with AWS jobs from Latest listings added - cvwing http://cvwing.com/jobs/technology/java-fullstack-developer-with-aws_i11292

0 notes

Text

Java Fullstack Developer with AWS

Preferred skills •Experience building front-end applications using HTML5, CSS3, JS, Angular 4/5/6 (NOT AngularJS). •Working understanding of Angular-CLI and NodeJS. •Strong fundamentals of CSS preprocessors - LESS , SASS or SCSS . •Experienced with AWS or other Cloud Services (CloudFormation, Lambda, ECS, EC2, IAM, RDS etc). •Experience with REST- or SOAP-based web services, APIs, XML, JSON, JavaScript, Python/ Node.js/ J2EEE/JAVA, and design patterns •Passionate about learning new things. •Experience with unit testing Angular apps using Karma and Jasmine. Keywords: Core Java, Spring , Hibernate, Collections, Spring Boot, AWS, Angular(Which version used) Reference : Java Fullstack Developer with AWS jobs from Latest listings added - LinkHello http://linkhello.com/jobs/technology/java-fullstack-developer-with-aws_i8153

0 notes

Photo

AWSでGCEぽく、Lambdaでインスタンス起動時にDNS自動登録する http://bit.ly/2CZdsic

GCEでインスタンスを起動するときに、先にホスト名を決める必要があります。AutoScaling等の場合は、勝手にプリフィックスがついて名前被りを避けます。これによりAWSのようなIPアドレスベースの使いにくいFQDN(Internal)ではなく、直感的な名前で名前解決ができます。 AWSでもUserData,cloud-initによる機構などで実現することは可能ですが、私の直感として「そんなことはインフラだけで完結しろよ!」と思うので、GCEぽい動きをするLambdaを約2年前に作成していたので��今更蔵出し公開します。

aws lambdaで ec2の local ipを r53へ自動登録. Contribute to h-imaoka/aws-lambda-internaldns development by creating an account on GitHub. h-imaoka/aws-lambda-internaldns - GitHub

github.com

使いたいだけの人は使い方はすべてGitHub上に書いていますので、そちらを見てください。

このエントリでは、今回蔵出ししたこのツールを通して serverlessフレームワークの歴史と使い方について振り返り書きます。書きたいのはこっちですが、タイトル詐欺ではないので許して。

その前にこのツールの意義

前述の通り、このツールは2年前に作成していました。現在と状況が違う点としては、Route53 auto naming (service discovery) が今は存在しますが、一応このツールとは競合していないです。

R53 auto naming との違い

結論から言うと、やっぱり ecs (eksも?) service discoveryとして使うべきものであり、名前解決が面倒だから楽したいという意図で使うべきではないと、一度使った人なら思うはず。

service discovery = サービス(web とか smtp とか)の生死を名前解決に反映させる

本ツール = ただ、内部ホスト間の名前解決を楽したい

のように目的が根本的に違います。そのためservice discovery は見た目こそroute53でHostedZoneも見えるのですが、そのHostedZoneがAWS管轄となり、ユーザーが自力でレコードを一切いじれない となります。

例として、ただ単に名前解決したいと思い、Private Hostedzone .hoge. を作りました。 そこにはすでにいくつかFQDNを登録しています。 その .hoge. に service discovery で追加される分も混ぜることはできないです。

言葉で書いてもピンとこないと思いますが、一発作ればよくわかります、ただ単に名前解決を楽にしたいだけという低い意識に対して service discovery (auto naming) を使うは完全にオーバースペックで悪手です。

UserDataなどを使う方法との違い

本ツールはEC2イベントとLambdaを使いますので、EC2インスタンス側への組み込みは一切不要です。UserData等の場合は、Powershellなのか、Shellなのか、AWSCLIなのか、Powershellのモジュールなのかいろいろ考える必要があります。もしくはGo lang win/linux 共通のバイナリを作るとか。でもそんなのめんどくさくてやってられない。

terraform/Cfnとかでインスタンスと同じタイミングにレコード追加するなどとの違い

上と一緒でめんどくさい。それよりなにより、名前解決ごときでterraformの実行反映がとろいのが嫌。IAMとRoute53のレコードは特に反映までの待ちが長いので、こんなしょうもないこと(ホスト間の名前解決)のためにいちいち待たされんのがいや。それと、AutoScaling等を導入する場合を考慮すると、結局UserDataなどを使わないといけないのでこちらのほうが守備範囲が狭い。

serverless framework(sls)

本エントリの本題に入ります。ダラダラ書きますが、基本は分かっている人向けで、入門的な話は書きません。

概要・存在意義

serverless version 1.x 系のリリースのころから私は始めました。当時の私の認識が間違っていたので、ここでも改めて書きますが

あくまでもLambdaが中心 (API Gatewayが中心じゃない)

プラグイン型にしたことに先見の明あり

nodejs製なので、serverless framework自体のバージョン固定必須

Lambdaを中心として、そのLambdaが必要とするIAMロールと、そのLambdaの導火線(着火剤)となるイベントを定義できます。Webに特化したものではないです、Webにも使えますが。AWSの場合はこの点が非常に重要で、特にインフラ屋が使うツールはたくさんのサービスに対してアクセスすることが多いです。これらを1元管理できるのは便利。 serverlessフレームワークは前身があったはずで、その頃はプラグイン型ではなかったはず。特にAWSのように目まぐるしき新しいサービス・サービス関連系が増えるものは明らかにこのモデルが優秀で、プラグインから本体へ取り込まれたものもいくつかあるはず。 最後に悪い点としてnodejsでできていること。ともかくアップデートのプレッシャーがきつく、nodejs本体のバージョンにも注意が必要。なので、ここはコンテナ(docker)化する。

EC2イベントから slsの本質を知る

2年前の実装をリファインするにあたり、まず最初にいじったのがここ。EC2イベントは当時はsls本体のイベントとしては存在しなかった。じゃあ、どうやって表現していたのか?slsの実装を忖度して、実行時=deploy に生成される Cfnテンプレートをイメージしながら追加リソースを書く となります。なんのとこやらわからないと思うので

# you can add CloudFormation resource templates here resources: Resources: RegisterRule: Type: AWS::Events::Rule Properties: EventPattern: { "source": [ "aws.ec2" ], "detail-type": [ "EC2 Instance State-change Notification" ], "detail": { "state": [ "running" ] } } Name: register-rule Targets: - Arn: Fn::GetAtt: - "RegisterLambdaFunction" - "Arn" Id: "TargetFunc1" RegisterPermissionForEventsToInvokeLambda: Type: "AWS::Lambda::Permission" Properties: FunctionName: Ref: "RegisterLambdaFunction" Action: "lambda:InvokeFunction" Principal: "events.amazonaws.com" SourceArn: Fn::GetAtt: - "RegisterRule" - "Arn" UnRegisterRule: Type: AWS::Events::Rule Properties: EventPattern: { "source": [ "aws.ec2" ], "detail-type": [ "EC2 Instance State-change Notification" ], "detail": { "state": [ "terminated" ] } } Name: unregister-rule Targets: - Arn: Fn::GetAtt: - "UnregisterLambdaFunction" - "Arn" Id: "TargetFunc1" PermissionForEventsToInvokeLambda: Type: "AWS::Lambda::Permission" Properties: FunctionName: Ref: "UnregisterLambdaFunction" Action: "lambda:InvokeFunction" Principal: "events.amazonaws.com" SourceArn: Fn::GetAtt: - "UnRegisterRule" - "Arn"

というふうに書きました。slsはコード以外のデプロイつまりAWSリソースはすべてCfnで行われます。slsの優れているところは、このCfnに対してほぼべた書きでserverless.yml に追記できる点です。これより2年前の私はEC2イベントを実現していました。これは言葉を変えれば、今本体がサポートしていないイベントでも、Cfnがわかる人ならば自分で追加できるということになります。この点は本体slsのマージが遅いならばとっととプラグインとして公開し、みんな早くハッピーになれるので、先見の明と先に書いたのはこの点です。

解説します。

RegisterRule: Type: AWS::Events::Rule

はCloudWatch Events を定義しています、注目ポイントは

Arn: Fn::GetAtt: - "UnregisterLambdaFunction" - "Arn" Id: "TargetFunc1"

で、イベント時に着火するLambdaを指定していますが、この名前は deploy 時に反映されるCfnのテンプレートやリソース名を確認して忖度し適合させています。みなさんがやる場合も生成されるCfnを読んでslsに忖度してあげましょう。

次に

PermissionForEventsToInvokeLambda: Type: "AWS::Lambda::Permission"

の部分です。これはManagementConsoleでしかLambdaをいじったことがないと一生理解できないかもしれないLambda側のパーミッションです。Lambda関数の中で必要となる = APIコールするAWSリソースに対して、Lambdaが権限を持っている必要があるは直感でわかるはfずですが、これは逆に S3やCloudWatch Events のようなものから対象Lambdaが呼ばれてもいいよ という許可設定です。この点は重要なので詳しく書いておきます。 例えばAWSリソース(A)から別のAWSリソース(B)へAPIコールする場合に、AにCredentialなどが持てるのであれば、A -> B の権限を、Aが持っていれば��いとなります。しかし CloudWatch EventsにはCredentialは仕込めません。私はこの手のものを俺用語で公共物と言ってます、具体的にはS3やCloudWatch Events,SQS 。対して、Credentialを仕込ませられるものは占有物と勝手にいい、代表はEC2やLambdaです。 つまり、占有物 -> 公共物ならば 占有物にCredential+権限があればOK。しかし公共物 -> 占有物には Credentialが使えない、「どうしよう、公共物ってことなので、だれでも名前さえ知ってたら呼べちゃう」を解決するのがこのLambda Permission。簡単にいうと、受ける側で送信元を限定する、A -> B の呼び出しをB側で許可するということになります。最近は公共物でもロールを当てることができたりします e.g. Cfnロール

EC2のイベントが本体に取り組まれたため、今回はこんなにスッキリしています。たったこれだけでCloudWatch Eventsの登録 & Lambdaパーミッションの設定ができます。

functions: register: handler: register.handle memorySize: 128 timeout: 30 events: - cloudwatchEvent: event: source: - "aws.ec2" detail-type: - "EC2 Instance State-change Notification" detail: state: - running

nodejs(sls)を固定化させる方法

最近のnpm は lockが可能なので少し立ち位置が微妙ですが yarn(hadoop関係ない方)で固定化させていました。とりあえず今回は npmでlockするので、yarnは無しにしました。 次にnodejs本体のバージョン固定と、ネイティブバイナリのビルドについての考慮です。

nodejs本体のバージョン固定

slsが入ったDockerイメージも誰かが作っているので、これに乗っかるのもありです。が、訳あってこれは使いません。

lambda向けネイティブバイナリビルド

じつは今回公開したLambdaは、ネイティブバイナリのビルドはありません。のでこの機能は必要ないのですが。ネイティブバイナリとは、まあ一般的にはC/C++ で書かれるそのOS/CPUでしか動かないコンパイル済みのバイナリを指します。これはmacでもWindowsでも引っかかる問題です。仮にローカルPCとしてmacでビルドした場合、mac用のバイナリができます。当然これをLinuxに持ち込んでも動かない。 Python使いなら requirements.txt というのを書くと思いますが、これをDocker でビルドして、バイナリをクライアントPCに持ってくるプラグインがあります serverless-python-requirements。 これはrequirements.txtを扱えるだけではなく、Dockerを使ってのクロスコンパイル(というかDockerコンテナがLinuxなのでそこで普通にビルドするだけ)が可能です。 どうせやるならこの点も解消しようと思いました。

slsコマンド本体はDockerでやるのは決めていたので、そのまま何も考えないならば slsのDockerから、更にbuild用Dockerを作りそこでビルドです。ちなみにこれはDinDを使わなくとも一応実現可能ですが、macでしか使えないはずです。今回は採用しませんでしたが、一応残しておくと

slsコンテナに -v で docker.socket(UNIXドメインソケット)を渡す

slsコンテナに dockerコマンドを突っ込む

上記で完璧と思ったが、slsコンテナからビルド用コンテナに行くとき、さらに -v でソース=requirements.ext がある場所をバインドしている。よって -v する mac上のパスと、sls��ンテナのパスを完全に一致させる必要がある。

となります。が、この方法ははっきり言ってアホです。そもそもslsコンテナの時点でLinuxなので、だったらそこで普通にビルドしたら良いだけです。serverless-python-requirementsはDockerを使わないビルドも可能ですので、今回はこちらでやりました。方針としてはslsのblogと同じように、serverless-python-requirementsはグローバルインストールしない、dockerizePip: falseで使うことにします。こちらもpython37/36 だけですが Dockerfile & image を公開しています。

Containers for Serverless framework. Contribute to h-imaoka/sls-builder development by creating an account on GitHub. h-imaoka/sls-builder - GitHub

github.com

https://hub.docker.com/r/himaoka/sls-builder

まとめ・感想

2年たった今でもslsは優秀だなと改めて感じた。プラグイン型や、Cfnによるデプロイは本当に先見の明があり。

ド素人は本体にあるイベントで我慢して

ちょっと分かる人は、Cfnのリソースを直接書き足して(2年前の私)

もっと分かる人は、プラグインにして公開して

プラグインでももうこれは本体に取り込んで良いんじゃないかと思うものは、PRして

こうすることで、素人に優しい環境を提供しつつ、玄人の尊厳も忘れないというOSSの理想の形が体現できているように思う。ま、samは使ったことないんだけどね。

元記事はこちら

「AWSでGCEぽく、Lambdaでインスタンス起動時にDNS自動登録する」

January 09, 2019 at 12:00PM

0 notes

Text

Java Fullstack Developer with AWS

Preferred skills •Experience building front-end applications using HTML5, CSS3, JS, Angular 4/5/6 (NOT AngularJS). •Working understanding of Angular-CLI and NodeJS. •Strong fundamentals of CSS preprocessors - LESS , SASS or SCSS . •Experienced with AWS or other Cloud Services (CloudFormation, Lambda, ECS, EC2, IAM, RDS etc). •Experience with REST- or SOAP-based web services, APIs, XML, JSON, JavaScript, Python/ Node.js/ J2EEE/JAVA, and design patterns •Passionate about learning new things. •Experience with unit testing Angular apps using Karma and Jasmine. Keywords: Core Java, Spring , Hibernate, Collections, Spring Boot, AWS, Angular(Which version used) Reference : Java Fullstack Developer with AWS jobs from Latest listings added - LinkHello http://linkhello.com/jobs/technology/java-fullstack-developer-with-aws_i8153

0 notes