#realtime usecases


MuleSoft Advanced Course

🚀 Unlock the Next Level in Your IT Career with Our MuleSoft Advanced-Level Course! 🌟

Are you ready to take your MuleSoft expertise to the next level? Whether you're an IT professional, developer, or aspiring integration architect, our MuleSoft Advanced-Level Course is designed to empower you with cutting-edge skills and real-time hands-on experience.

🔑 What You’ll Learn

✅ Transaction Management: Master implementations, supported connectors, and scenarios.

✅ Object Store: Explore v1 vs. v2, scenarios, and demos.

✅ Batch Processing: Learn phases, error handling, and practical strategies.

✅ Non-Functional Requirements (NFRs): Discover policies and configurations.

✅ API Policies: Implement authentication, caching, logging, and rate-limiting policies.

✅ MUnit Testing: Build and mock tests, achieve 100% coverage.

✅ CloudHub 2.0: Set up CI/CD pipelines and explore advanced features.

✅ Custom Development: Create custom connectors and policies.

...and much more!

This course covers 25+ in-depth topics, packed with real-time examples, demos, and hands-on projects to make you job-ready.

💡 Why Choose Us?

🔹 Learn from a MuleSoft Certified Integration Architect with 12+ years of integration experience.

🔹 Step-by-step guidance from basics to advanced concepts.

🔹 Get access to real-world projects and use cases.

🔹 Interactive support to help you master even the toughest concepts.

🎯 Who Should Enroll?

👉 Developers looking to become MuleSoft experts.

👉 Freshers and non-IT professionals entering the IT field.

👉 Experienced professionals upgrading their integration skills.

🎉 Limited Time Offer – Enroll Now and Save Big!

⏳ Offer ends soon. Don’t miss out!

📌 Secure your spot today and start your journey toward becoming a MuleSoft pro.

🛠 How to Get Started?

👉 Visit: https://klarttechnologies.com/mulesoft-advanced-course/

and fill in this form: https://forms.gle/arPz3s3ntTMeY7EKA

👉 DM us for exclusive course details or call us at 7981842069.

🚀 Don’t wait! Level up your career with this advanced MuleSoft course and stand out in the competitive IT market.

📢 Share this post with your network to help others unlock their potential!

#MuleSoft #MuleSoftTraining #AdvancedMuleSoft #Integration #ITCareer #APIDevelopment #TechTraining


There are ample Apache Spark use cases. This article walks through some of the best ones.

https://data-flair.training/blogs/spark-use-cases/

#Spark #Screaning #useCases


Deploying and operating a Data Lake

1. Ingest Data (bringing data into the system)

- Points to keep in mind when ingesting data:

+ Ingested data is stored and feeds the downstream services, so it must arrive on time, with a data latency that suits those services.

+ When batch processing takes too long, especially for Recommendation systems that demand Near RealTime latency, Ingest Data is the step where you address it.

⇒ You therefore need a tool that reliably and automatically groups data into batches. Such a tool helps you organize automated Streaming flows. Here you can use the Nifi + Kafka + Spark Streaming stack, and Kinesis Stream to move data to Amazon S3 or Redshift if you are on the Cloud.

- Spark Streaming has matured considerably in capability and simplicity, letting you build Streaming applications. It uses the same APIs as Spark Batch and supports Java, Scala and Python. It can read data from HDFS, Flume, Kafka, …
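The idea of automatically grouping a stream into small batches can be sketched in plain Python. This is only an illustration of micro-batching, not Spark Streaming's or Kafka's actual API; the function name `micro_batches`, the `max_size` parameter and the click events are all hypothetical.

```python
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[dict], max_size: int) -> Iterator[List[dict]]:
    """Group an event stream into batches of at most max_size events."""
    if max_size < 1:
        raise ValueError("max_size must be at least 1")
    batch: List[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == max_size:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


# Hypothetical click events standing in for a Kafka topic or Kinesis stream.
clicks = [{"user": f"u{i}", "item": i % 3} for i in range(7)]
batches = list(micro_batches(clicks, max_size=3))
print([len(b) for b in batches])  # [3, 3, 1]
```

A real streaming engine would also close a batch after a time interval, so that a slow stream does not hold data back indefinitely; that part is left out here to keep the sketch short.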

2. Alerting, monitoring and data management

- When you start building your Data Lake, its internal structure must be clearly defined.

- You need to govern the Data Flows so that the ETL flows are always running, and so that any failure is monitored and raises an alert.
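One simple way to watch ETL flows is a freshness check: each flow records its last successful run, and an alert fires when that run is older than the delay the flow is allowed. The sketch below assumes this approach; `FlowStatus`, `find_alerts` and the flow names are hypothetical and do not come from any particular monitoring tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class FlowStatus:
    name: str
    last_success: Optional[datetime]  # None if the flow has never succeeded
    max_delay: timedelta              # how stale the data is allowed to get


def find_alerts(flows: List[FlowStatus], now: datetime) -> List[str]:
    """Return one alert message per flow that is missing or overdue."""
    alerts = []
    for flow in flows:
        if flow.last_success is None:
            alerts.append(f"{flow.name}: no successful run recorded")
        elif now - flow.last_success > flow.max_delay:
            late_by = now - flow.last_success - flow.max_delay
            alerts.append(f"{flow.name}: overdue by {late_by}")
    return alerts


# Hypothetical flows and timestamps, for illustration only.
now = datetime(2020, 6, 1, 12, 0)
flows = [
    FlowStatus("orders_etl", datetime(2020, 6, 1, 11, 30), timedelta(hours=1)),
    FlowStatus("clicks_etl", datetime(2020, 6, 1, 9, 0), timedelta(hours=1)),
    FlowStatus("crm_etl", None, timedelta(hours=24)),
]
alerts = find_alerts(flows, now)
for alert in alerts:
    print(alert)
```

In practice the messages would be sent to a dashboard, email or paging channel rather than printed.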

a. Monitoring and alerting for the Data Lake

- Monitoring is critical to the success of a Data Lake.

- Today's monitoring systems offer a rich set of services such as dashboards, messages, email, calls, ... to give early notice of anomalies.

- LogicMonitor, Datadog and VictorOps are tools you can look at.

- Our own Data Lake currently uses a tool we developed in-house to fit our needs; see the illustration below:

b. Data management tools

- Data management covers security, information safety, and management of the system's data catalog.

- Apache Ranger handles access authorization: it is a framework for defining policies that govern the permissions of the system's users.

- Apache Atlas is a Low-Level Service in the Hadoop Stack and a tool for managing the data catalog. It supports Data Lineage by helping people understand the Meta Data, lifecycle, formulas and related definitions of the data.

3. Preparing data

- The processing and aggregation step creates value from the data: it adds catalog information and ids, or computes values such as same-period (for example year-over-year) comparisons, ...

- The tool commonly used here is Hive SQL, because it is quick to deploy, a good fit, and easy to use for most users.

- Apache Hive also integrates with Atlas to synchronize the data catalog automatically.

- Hive is designed as a Data Warehouse on top of Hadoop/MapReduce; it compiles SQL into jobs for its execution engine (MapReduce, Tez or Spark).

- The Hive Metastore stores the structure of tables, turning HDFS directories into structured data that can be queried.

- You can use Spark SQL, Presto, Tez, Impala or Drill to work with Hive.
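To make the "directories become structured data" idea concrete: by convention, Hive lays out each partition of a partitioned table as a `column=value` directory under the table's location. The sketch below only mimics that mapping in plain Python; it does not talk to a Metastore, and the table location, file names and helper functions are hypothetical.

```python
from typing import Dict, List, Tuple


def parse_partition_path(path: str, table_root: str) -> Tuple[Dict[str, str], str]:
    """Split a data file path into its partition columns and file name."""
    root = table_root.rstrip("/") + "/"
    if not path.startswith(root):
        raise ValueError(f"{path!r} is not under {table_root!r}")
    *directories, filename = path[len(root):].split("/")
    partitions: Dict[str, str] = {}
    for directory in directories:
        key, sep, value = directory.partition("=")
        if not sep:
            raise ValueError(f"{directory!r} is not a key=value directory")
        partitions[key] = value
    return partitions, filename


def files_for(paths: List[str], table_root: str, **wanted: str) -> List[str]:
    """Keep only the files whose partition values match every wanted column."""
    selected = []
    for path in paths:
        partitions, _ = parse_partition_path(path, table_root)
        if all(partitions.get(col) == val for col, val in wanted.items()):
            selected.append(path)
    return selected


# Hypothetical table location and files, for illustration only.
ROOT = "/warehouse/sales"
PATHS = [
    "/warehouse/sales/dt=2020-05-01/region=north/part-00000",
    "/warehouse/sales/dt=2020-05-01/region=south/part-00000",
    "/warehouse/sales/dt=2020-05-02/region=north/part-00000",
]
print(parse_partition_path(PATHS[0], ROOT))
print(files_for(PATHS, ROOT, dt="2020-05-01"))
```

Selecting files by partition value, as `files_for` does, is the intuition behind partition pruning: a query filtered on `dt` only needs to read the matching directories.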

4. Model and Serve Data

- The machine learning part here can use Spark ML. It is a field of its own, so I will not go deep into it; you can join the Cộng đồng Big Data Analytics group.

- In this part you can implement common customer-management use cases such as Churn, Upsell, Cross Sell, ...

5. Putting the data to use

- BI tools give you the ability to present and explore data effectively.

- For example, Power BI, Tableau and Qlik all let you configure, customize and interact with data effectively. They all support connecting to Impala, Presto, Tez, ...

6. Deployment and automation

- Once you have the processing and aggregation Jobs, the next step is to deploy them so they run periodically on a schedule; that is the Work Flow.

- Popular Open source Work Flow tools include Airflow, Oozie, ...

- In addition, for complex Flows you can use ETL tools that integrate more features, such as Pentaho or Talend.
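At its core, a workflow is a set of jobs plus the dependencies between them, and a scheduler must run each job only after the jobs it depends on. The sketch below shows that ordering step with Python's standard `graphlib` module (Python 3.9+). The job names are hypothetical, and real tools such as Airflow or Oozie add scheduling, retries and monitoring on top.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical jobs: each key may run only after every job in its set is done.
dependencies: Dict[str, Set[str]] = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "join_orders_customers": {"ingest_orders", "ingest_customers"},
    "daily_summary": {"join_orders_customers"},
    "refresh_dashboard": {"daily_summary"},
}


def run_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Return one valid execution order; raises CycleError on circular deps."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
for step, job in enumerate(order, start=1):
    print(step, job)
```

The two ingest jobs do not depend on each other, so either may come first; a real scheduler could run them in parallel.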

Reference link

https://learning.oreilly.com/library/view/operationalizing-the-data/9781492049517/


Pylon Network is the Moonshot of 2020

Pylon Network (PYLNT) has a max supply of only 633,858 tokens and sits at a remarkably low market cap of approximately 390k. It was recently listed on IDEX and has released updated tokenomics. What we are about to witness is the outcome of 4-5 years of hard work.

First, let's understand what the project is about. PYLNT is a blockchain for the energy sector that helps the world save energy and helps consumers cut their energy bills. The team is also working on a P2P energy trading marketplace where companies can trade their energy credits (read up on the Copenhagen summit for this use case). They have built technology that, once implemented, automatically helps companies and people save on energy. In simple terms, the tech automatically handles the diversion of surplus energy, provides real-time data and improves efficiency.

Under the new token model, every company will from now on have to buy tokens on the market and stake them in order to run federated nodes and implement Pylon, so a lot of buy pressure is expected.

The technology is already in use at 4 municipalities and a few private companies, and the team recently won a contract to implement Spain's first renewable energy project.

I feel proud to see the Pylon team working on an inclusive approach where token holders share in the business's success.

Only 500k tokens are in circulation, and the market cap is low. Lots of marketing is coming before month end.

Let's also understand more about the project and its history.

It is a highly technical project that boasts several accolades from individuals and governments. For example, its Chairman won the Engineer of the Year award in 2017. Other positives include, but are not limited to:

Working partnership with Basque Country’s energy cooperative, GoiEner.

Partnership with Denmark-based GreenHydrogen.

Received the prize from Spain Tech Center, being selected unanimously as the most innovative of all finalist startups, to represent Spain in Silicon Valley.

Launched decentralized renewable energy exchange pilot in partnership with Ecooo.

Partnership with the community of Freetown Christiania, Denmark.

The Merchants Association of the San Fernando Market, partner-consumer of the energy cooperative La corriente, joins the Pylon Network pilot test and successfully completes the installation of what is now the first “Metron” in Madrid, Spain.

Partnered with eGEO for the development of a certified energy meter.

Spanish company Mirubee integrates Blockchain technology and Pylon Network open source software in its energy meters.

The only Spanish company invited by the Danish Government to improve public services.

Last October, Pylon Network was selected as a winner of the World Summit Awards (WSA) in the Green Energy & Environment category.

Working partnership with Energisme.

Awarded by WSA (a UN subsidiary).

...and much more.

The list above is very small, and a lot more has been done.

Pylon Network was awarded by the UN and has been featured and heavily referenced in research articles published by scientists and professors from the Institute of Sensors, Signals and Systems, Heriot-Watt University, Edinburgh, UK; the Department of Anthropology, Durham University, Durham, UK; Siemens Energy Management, Hebburn, Tyne and Wear, UK; and the School of Energy, Geoscience, Infrastructure and Society, Heriot-Watt University, Edinburgh, UK. Highest award-winning team in crypto.

The project is widely in use and appreciated by reputed science organizations.

Why should you invest in Pylon?

First, the team has started getting regular work contracts in the EU region, so it has become a revenue-generating project, and token holders earn a percentage of the revenue.

Low supply, low market cap, and the team has confirmed that a lot of marketing is coming once the tokenomics and mainnet details are out next week.

The best part is that Pylon isn't in a speculative or upcoming phase like other projects ("this will happen", "this will come", etc.); it is already live at various municipalities in and around Spain, so that risk factor is gone. The team will be expanding to other regions of the EU and the USA pretty soon.

To make it easier to see what has been achieved, I went through various past articles and collated the following. These are some of the achievements the team has earned to date. This is not an exhaustive list, and there is much more to the project, but it should give you a better picture of the hard work that has gone into it.

August 2017

Chosen as one of the finalist startups in South Summit 2017

Working partnership with Basque Country’s energy cooperative, GoiEner. Interviewed by a Spanish media company, El Referente. Guto Owen, a highly experienced energy & environment consultant for governments and private sector clients in the UK & internationally, joins as Advisor.

September 2017

Cristina Carrascosa, a highly experienced blockchain lawyer educated at the London School of Economics and author of various blockchain books, joins the team. Started discussions with Greenpeace on a possible collaboration. Code audit by Entropy Factory. Partnership with Denmark-based GreenHydrogen.

October 2017

Received the prize from Spain Tech Center, being selected unanimously as the most innovative of all finalist startups, to represent Spain in Silicon Valley. Presented at Bitcoin meetup The Cube, La Ingobernable, Madrid. Article published in Energias Renovables, Spain's most prestigious renewable energy magazine. Partnership with the community of Freetown Christiania, Denmark.

Presented at a series of meet-ups around Spain and Europe, in Copenhagen, Madrid, San Sebastian, Bilbao and others. Finalized details of a pilot project to improve energy distribution and management within Christiania’s micro-grid.

November 2017

Published press releases in various online magazines. Organized a few meetups in the Basque Country.

Launched decentralized renewable energy exchange pilot in partnership with Ecooo. Trip to Silicon Valley and participation in a 2-week accelerator program in San Francisco. Presented at Embarcadero Center in downtown San Francisco.

December 2017

Meetings with Marin Clean Energy and Sonoma Clean Power of California. Visited Blockchain EXPO North America and met with various crypto players. Visited Plug&Play Tech Center. Visited offices of Silicon Valley Power and Palo Alto Public Utility. Introductory talks with E-On Accelerator (DK), Accelerace (DK) and GridVC (FIN).

January 2018

Panel member at European Energy Transition Conference 2018 – Geneva, Switzerland! Presented together with GoiEner at Ateneo de Madrid – Energy & blockchain forum – ICADE, Madrid.

Released screenshots of the platform’s alpha version. Participation in Ateneo (ESP) and European Smart Cities (CH) conferences. Code release for first fully functional blockchain algorithm, designed specifically for the needs of the energy sector.

February 2018

Demo version released for Public. Presented at University of Oxford. Presented at TechHub Swansea, Wales.

March 2018

Installed the first Pylon based “Metron” energy meters at users of the GoiEner energy cooperative, in a real environment.

April 2018

Presented at

EventHorizon (Berlin, GERMANY). EventHorizon is the ONE exclusive annual event centered on energy blockchain solutions for a future based solely on renewable resources. EnergyCities (Rennes, FRANCE): the role of blockchain in the energy transition of cities. NIRIG (Belfast, IRELAND).

May 2018

Installed first “Metron” energy meter in Madrid: The Merchants Association of the San Fernando Market, partner-consumer of the energy cooperative La corriente, joins the Pylon Network pilot test and successfully completes the installation of what is now the first “Metron” in Madrid, Spain.

Partnered with eGEO for the development of a certified energy meter called – METRON- which will integrate blockchain and the Pyloncoin payment method. First version of METRON dApp launched.

June 2018

Presented at

ENTSO-E, Brussels, Belgium.

ENGIE Global Team Representatives, Madrid, Spain. Social Enterprise Leaders Forum (SELF), Seoul, S. Korea. Transactive Energy & Blockchain, Vienna, Austria. RESCoop.eu General Assembly, Milan, Italy.

July 2018

Prosumers and Pyloncoin dynamic payment system integration.

Presented at

Smart Energy Wales, Organised by Renewable UK (Cymru). MARESTON, organised by MARES Madrid. Spanish Foundation for Renewable Energy, Malaga, Spain.

October 2018

Spanish company Mirubee integrates Blockchain technology and Pylon Network open source software in its energy meters.

November 2018

Published the most up-to-date version of its Blockchain Open Source Code and invited energy market players to use it. Pyloncoin Blockchain Explorer released.

January 2019

Presented at Energy Revolution Congress – Valencia, Spain

February 2019

Updated Whitepaper and Tokenomics Released. Interviewed by Sustainable Energy magazine, The Energy Bit.

March 2019

Presented at

GENERA Energia – Madrid, Spain. CTEC – Barcelona, Spain.

May 2019

The only Spanish company invited by the Danish Government to improve public services.

Presented at

Intersolar (Munich, Germany), the largest Solar Energy Conference in the industry. UNEF (organised by the Spanish Union of PV technology) – Madrid, Spain.

June 2019

Launched community reward program. Webinar: the impact of new regulations on the self-consumption landscape in Spain. Belén Gallego, serial entrepreneur, public speaker, energy consultant and founder of ATA Insights, joins the Pylon team.

July 2019

Participated in round table conference, Vinalab – Ruta Hackathon

August 2019

Participated in EPRI event: presented Pylon Network to investors and US utilities who were interested in exploring the blockchain/energy landscape.

September 2019

Presented at The Madrid Energy Conference along with representatives of companies such as IBM and Shell.

October 2019

Presented at International Conference of Energy Communities – Lisbon. Presentation in Plug & Play Europe Event – Berlin. Foro Solar Conference – Madrid. WeekINN Conference – San Sebastian. IoT World Congress – Barcelona.

Formed Partnership with Energisme

November 2019

Presented at ACOCEX Conference. The Unconference. Valencia Startup Week – Barrio La Pinada event.

December 2019

Proudly mentioned in various Spanish media, for delivering energy efficiency impact for local communities: the Valencian Municipality of Canet achieved a 12% reduction on their annual electricity costs, with the support of simple, consumer-centered and consumer-friendly energy services of Pylon Network!

National Winner at WSA Awards by United Nations

January 2020

Started releasing Educational Video Series. Presented at CMES, Barcelona. Interview published by the online media outlet “The Daily Chain”.

February 2020

Presented at GENERA International Trade. Paco Negre assigned as new CFO of Pylon Network. Javier Cervera appointed as chairman of Pylon Network! Javier has been recognized as the engineer of the year for 2017 and as the vice-president of AEE – the Association of Energy Engineers.

March 2020

Listing confirmed on IDEX (trading went live in April). Presented at Effie solar virtual conference.


AWS is just too hard to use, and it's not your fault. Today I'm joining to help AWS build for App Developers, and to grow the Amplify Community with people who Learn AWS in Public.

Muck

When AWS officially relaunched in 2006, Jeff Bezos famously pitched it with eight words: "We Build Muck, So You Don’t Have To". And a lot of Muck was built. The 2006 launch included 3 services (S3 for distributed storage, SQS for message queues, EC2 for virtual servers). As of Jan 2020, there were 283. Today, one can get decision fatigue just trying to decide which of the 7 ways to do async message processing in AWS to choose.

The sheer number of AWS services is a punchline, but is also testament to principled customer obsession. With rare exceptions, AWS builds things customers ask for, never deprecates them (even the failures), and only lowers prices. Do this for two decades, and multiply by the growth of the Internet, and it's frankly amazing there aren't more. But the upshot of this is that everyone understands that they can trust AWS never to "move their cheese". Brand AWS is therefore more valuable than any service, because it cannot be copied, it has to be earned. Almost to a fault, AWS prioritizes stability of their Infrastructure as a Service, and in exchange, businesses know that they can give it their most critical workloads.

The tradeoff was beginner friendliness. The AWS Console has improved by leaps and bounds over the years, but it is virtually impossible to make it fit the diverse usecases and experience levels of over one million customers. This was especially true for app developers. AWS was a godsend for backend/IT budgets, taking relative cost of infrastructure from 70% to 30% and solving underutilization by providing virtual servers and elastic capacity. But there was no net reduction in complexity for developers working at the application level. We simply swapped one set of hardware based computing primitives for an on-demand, cheaper (in terms of TCO), unfamiliar, proprietary set of software-defined computing primitives.

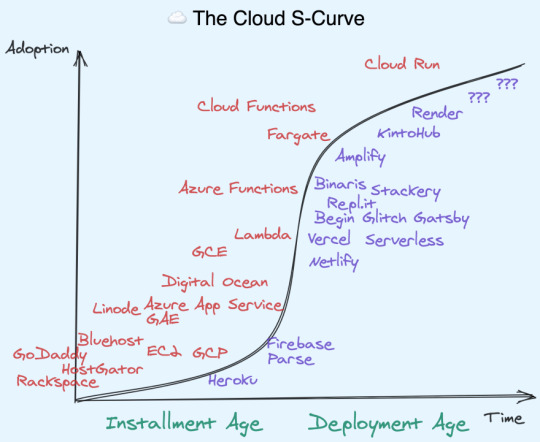

In the spectrum of IaaS vs PaaS, App developers just want an opinionated platform with good primitives to build on, rather than having to build their own platform from scratch:

That is where Cloud Distros come in.

Cloud Distros Recap

I've written before about the concept of Cloud Distros, but I'll recap the main points here:

From inception, AWS was conceived as an "Operating System for the Internet" (an analogy echoed by Dave Cutler and Amitabh Srivastava in creating Azure).

Linux operating systems often ship with user friendly customizations, called "distributions" or "distros" for short.

In the same way, there proved to be good (but ultimately not huge) demand for "Platforms as a Service" - with 2007's Heroku as a PaaS for Rails developers, and 2011's Parse and Firebase as a PaaS for Mobile developers atop AWS and Google respectively.

The PaaS idea proved early rather than wrong – the arrival of Kubernetes and AWS Lambda in 2014 presaged the modern crop of cloud startups, from JAMstack CDNs like Netlify and Vercel, to Cloud IDEs like Repl.it and Glitch, to managed clusters like Render and KintoHub, even to moonshot experiments like Darklang. The wild diversity of these approaches to improving App Developer experience, all built atop AWS/GCP, led me to christen these "Cloud Distros" rather than the dated PaaS terminology.

Amplify

Amplify is the first truly first-party "Cloud Distro", if you don't count Google-acquired Firebase. This does not make it automatically superior. Far from it! AWS has a lot of non-negotiable requirements to get started (from requiring a credit card upfront to requiring IAM setup for a basic demo). And let's face it, its UI will never win design awards. That just categorically rules it out for many App Devs. In the battle for developer experience, AWS is not the mighty incumbent, it is the underdog.

But Amplify has at least two killer unique attributes that make it compelling to some, and at least worth considering for most:

It scales like AWS scales. All Amplify features are built atop existing AWS services like S3, DynamoDB, and Cognito. If you want to eject to underlying services, you can. The same isn't true of third party Cloud Distros (Begin is a notable exception). This also means you are paying the theoretical low end of costs, since third party Cloud Distros must either charge cost-plus on their users or subsidize with VC money (unsustainable long term). AWS Scale doesn't just mean raw ability to handle throughput, it also means edge cases, security, compliance, monitoring, and advanced functionality have been fully battle tested by others who came before you.

It has a crack team of AWS insiders. I don't know them well yet, but it stands to reason that working on a Cloud Distro from within offers unfair advantages over working on one from without. (It also offers the standard disadvantages of a bigco vs the agility of a startup.) If you were to start a company and needed to hire a platform team, you probably couldn't afford this team. If you fit Amplify's target audience, you get this team for free.

Simplification requires opinionation, and on that Amplify makes its biggest bets of all - curating the "best of" other AWS services. Instead of using one of the myriad ways to set up AWS Lambda and configure API Gateway, you can just type `amplify add api` and the appropriate GraphQL or REST resources are set up for you, with your infrastructure fully described as code. Storage? `amplify add storage`. Auth? `amplify add auth`. There's a half dozen more I haven't even got to yet. But all these dedicated services coming together means you don't need to manage servers to do everything you need in an app.

Amplify enables the "fullstack serverless" future. AWS makes the bulk of its money on providing virtual servers today, but from both internal and external metrics, it is clear the future is serverless. A bet on Amplify is a bet on the future of AWS.

Note: there will forever be a place for traditional VPSes and even on-premises data centers - the serverless movement is additive rather than destructive.

For a company famous for having every team operate as separately moving parts, Amplify runs the opposite direction. It normalizes the workflows of its disparate constituents in a single toolchain, from the hosted Amplify Console, to the CLI on your machine, to the Libraries/SDKs that run on your users' devices. And this works the exact same way whether you are working on an iOS, Android, React Native, or JS (React, Vue, Svelte, etc) Web App.

Lastly, it is just abundantly clear that Amplify represents a different kind of AWS than you or I are used to. Unlike most AWS products, Amplify is fully open source. They write integrations for all popular JS frameworks (React, React Native, Angular, Ionic, and Vue) and Swift for iOS and Java/Kotlin for Android. They do support on GitHub and chat on Discord. They even advertise on podcasts you and I listen to, like ShopTalk Show and Ladybug. In short, they're meeting us where we are.

This is, as far as I know, unprecedented in AWS' approach to App Developers. I think it is paying off. Anecdotally, Amplify is growing three times faster than the rest of AWS.

Note: If you'd like to learn more about Amplify, join the free Virtual Amplify Days event from Jun 10-11th to hear customer stories from people who have put every part of Amplify in production. I'll be right there with you taking this all in!

Personal Note

I am joining AWS Mobile today as a Senior Developer Advocate. AWS Mobile houses Amplify, Amplify Console (One stop CI/CD + CDN + DNS), AWS Device Farm (Run tests on real phones), and AppSync (GraphQL Gateway and Realtime/Offline Syncing), and is closely connected to API Gateway (Public API Endpoints) and Amazon Pinpoint (Analytics & Engagement). AppSync is worth a special mention because it is what first put the idea of joining AWS in my head.

A year ago I wrote Optimistic, Offline-first apps using serverless functions and GraphQL sketching out a set of integrated technologies. They would have the net effect of making apps feel a lot faster and more reliable (because optimistic and offline-first), while making it a lot easier to develop this table-stakes experience (because the GraphQL schema lets us establish an eventually consistent client-server contract).
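As a rough illustration of what "optimistic and offline-first" means in code: a write is applied to local state immediately, queued, and only later replayed to the server once a connection exists. The sketch below is a minimal toy under those assumptions. It is not how Amplify DataStore or AppSync is implemented, it has no conflict resolution, and `OptimisticStore` and `push_to_server` are hypothetical names.

```python
from typing import Callable, Dict, List, Tuple

Mutation = Tuple[str, str]  # (key, new value)


class OptimisticStore:
    """Apply writes locally at once; sync them to the server when online."""

    def __init__(self, send: Callable[[Mutation], None]) -> None:
        self.local: Dict[str, str] = {}
        self.pending: List[Mutation] = []
        self.online = False
        self._send = send

    def set(self, key: str, value: str) -> None:
        self.local[key] = value            # optimistic: the UI sees it now
        self.pending.append((key, value))  # remembered until the server has it
        if self.online:
            self.flush()

    def flush(self) -> None:
        while self.pending:
            self._send(self.pending[0])    # if this raises, the write stays queued
            self.pending.pop(0)


# A dict stands in for the remote backend in this sketch.
server: Dict[str, str] = {}


def push_to_server(mutation: Mutation) -> None:
    key, value = mutation
    server[key] = value


store = OptimisticStore(push_to_server)
store.set("title", "Draft")   # offline: only local state changes
store.set("title", "Final")
offline_view = (dict(store.local), dict(server), len(store.pending))

store.online = True           # connection comes back
store.flush()
print(offline_view)
print(server)
```

Replaying the queue in order is what makes the client and server converge eventually; a real system also has to reconcile writes made by other clients in the meantime.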

9 months later, the Amplify DataStore was announced at Re:Invent (which addressed most of the things I wanted). I didn't get everything right, but it was clear that I was thinking on the same wavelength as someone at AWS (it turned out to be Richard Threlkeld, but clearly he was supported by others). AWS believed in this wacky idea enough to resource its development over 2 years. I don't think I've ever worked at a place that could do something like that.

I spoke to a variety of companies, large and small, to explore what I wanted to do and figure out my market value. (As an aside: It is TRICKY for developer advocates to put themselves on the market while still employed!) But far and away the smoothest process, where I was "on the same page" with everyone, was the ~1 month I spent interviewing with AWS. It helped a lot that I'd known my hiring manager, Nader, for ~2yrs at this point, so there really wasn't a whole lot he didn't already know about me (a huge benefit of Learning in Public btw) nor I him. The final "super day" on-site was challenging and actually had me worried I failed 1-2 of the interviews. But I was pleasantly surprised to hear that I had received unanimous yeses!

Nader is an industry legend and personal inspiration. When I completed my first solo project at my bootcamp, I made a crappy React Native boilerplate that used the best UI Toolkit I could find, React Native Elements. I didn't know it was Nader's. When I applied for my first conference talk, Nader helped review my CFP. When I decided to get better at CSS, Nader encouraged and retweeted me. He is constantly helping out developers, from sharing invaluable advice on being a prosperous consultant, to helping developers find jobs during this crisis, to using his platform to help others get their start. He doesn't just lift others up, he also puts the "heavy lifting" in "undifferentiated heavy lifting"! I am excited he is leading the team, and nervous how our friendship will change now he is my manager.

With this move, I have just gone from bootcamp grad in 2017 to getting hired at a BigCo L6 level in 3 years. My friends say I don't need the validation, but I gotta tell you, it does feel nice.

The coronavirus shutdowns happened almost immediately after I left Netlify, which caused complications in my visa situation (I am not American). I was supposed to start as a US Remote employee in April; instead I'm starting in Singapore today. It's taken a financial toll - I estimate that this coronavirus delay and change in employment situation will cost me about $70k in foregone earnings. This hurts more because I am now the primary earner for my family of 4. I've been writing a book to make up some of that; but all things considered I'm glad to have a stable job again.

I have never considered myself a "big company" guy. I value autonomy and flexibility, doing the right thing over the done thing. But AWS is not a typical BigCo - it famously runs on "two pizza teams" (not literally true - Amplify is more like 20 pizzas - but still, not huge). I've quoted Bezos since my second ever meetup talk, and have always admired AWS practices from afar, from the 6-pagers right down to the anecdote told in Steve Yegge's Platforms Rant. Time to see this modern colossus from the inside.


Ultimate MuleSoft Training

We are offering a new MuleSoft training course with real-time project scenarios, taught by industry experts.

Please go through the details below for more information.

What we offer

22+ hours of video classes

Lifetime LMS access

Tutor support

Doubts clarification

Resume building help

Interview Preparation

What you will Learn

How to implement a MuleSoft project on your own

API lifecycle

API-led connectivity

Gather Requirements for REST API

Design your REST API

Implement your REST API

CloudHub deployment

Data transformation

Error Handling

Security Features

API Policies

Database integration

Salesforce Integration

ActiveMQ Integration

...and much more.

Please have a look at our MuleSoft Fundamentals Course Content for detailed information.

Our Approach

Detailed explanation of topics, from the basics to hands-on practice.

Self-paced video tutorials.

Step-by-step explanation of all topics.

Want to see our teaching methodology?

You can go through a couple of videos on our YouTube channel to see how we explain things.

#mulesoft#onlinetraining#realtime usecases#Mulesoft Online Training#mulesoft online training in india#mulesoft training#mulesoft training in hyderabad#mulesoft online training#mulesoft online course#mule esb training#mulesoftdeveloper#mulesoftdevelopers#mulesoftcommunity#mulesoftarchitect#mulesoftdevelope#online#onlinecourse#onlineeducation#Mule ESB Training
