#UseCases

Text

Use Cases on Migrations from Google Workspace to Microsoft 365

Text

Why Do So Many Big Data Projects Fail?

In our business analytics work, we have often been brought in after a big data project has failed in one way or another. The causes vary, but unproven technology is rarely the culprit: we have seen many new projects built on mature, well-established technologies fail too. So why do they fail? Most surveys are quick to blame scope, changing business requirements, or a lack of adequate skills. In our experience, successful big data initiatives share a few key attributes that you should consider carefully before you start a project. Understanding the attributes below should help you avoid the most common pitfalls of big data projects.

Key attributes of successful Big Data projects

Develop a common understanding of what big data means for you

There is often a misconception about what big data actually is. Big data refers not just to the data but also to the methodologies and technologies used to store and analyze it. It is not simply "a lot of data". It's also not the size that counts but what you do with it. Understanding what big data means for your company, and its full scope, is key to avoiding some of the most common errors.

Choose good use cases

Avoid bad use cases by choosing specific, well-defined ones that solve real business problems and that your team already understands well. For example, a good use case could be improving the segmentation and targeting of specific marketing offers.

Prioritize what data and analytics you include in your analysis

Make sure that the data you're collecting is the right data. Launching into a big data initiative with the idea that "We'll just collect all the data we can and work out what to do with it later" often leads to disaster. Start by loading a data source you already understand into your data lake, rather than pouring every possible source into it.

Then layer in one or two additional sources, such as web clickstream data or call centre text, to enrich your analysis. Your cross-functional team can meet quarterly to prioritize and select the right use cases for implementation. Bear in mind that importing, cleaning, and organizing each data source takes considerable effort.

Include non-data science subject matter experts (SMEs) in your team

Non-data science SMEs are the ones who understand their fields inside and out. They provide the context that lets you understand what the data is saying, and they are frequently what holds big data projects together. By offering on-the-job data science training to analysts in your organization who are interested in big data work, you can fill project roles internally far more efficiently than by hiring externally.

Ensure buy-in at all levels and good communication throughout the project

Big data projects need buy-in at every level, including senior leadership, middle management, the nuts and bolts techies who will carry out the analytics, and the workers whose tasks will be affected by the results of the project. Everyone needs to understand what the big data project is doing and why. Not everyone needs to understand the ins and outs of the algorithms that may be running across distributed, unstructured data analyzed in real time. But there should always be a logical, common-sense reason for what you ask each member of the project team to do. Good communication makes this happen.

Trust

All team members, data scientists and SMEs alike, must be able to trust each other. This is all about psychological safety and feeling empowered to contribute.

Summary

Well-executed big data initiatives deliver significant, quantifiable business value to companies that take the extra time to plan, implement, and roll them out. Big data changes the strategy for data-driven businesses by overcoming the barriers to analyzing large volumes of data, many types of unstructured and semi-structured data, and data that requires a quick turnaround on results.

Being aware of the success attributes above is a good start toward making sure your big data project, whether it is your first or your next one, delivers real business value and performance improvements to your organization.

#BigData#BigDataProjects#DataAnalytics#BusinessAnalytics#DataScience#DataDriven#ProjectSuccess#DataStrategy#DataLake#UseCases#BusinessValue#DataExperts

Text

Transform your videos with 10LevelUp! Create short, engaging clips for social media automatically with AI. From highlight selection to auto captions, grow your channel on autopilot.

Learn more: aiwikiweb.com/10levelup

Text

Amazon DataZone provides end-to-end data lineage preview

Amazon DataZone Documentation

Unlock data with integrated governance features across organisational boundaries.

What is Amazon DataZone?

Amazon DataZone is a data management service that makes it faster and easier for customers to catalogue, discover, share, and govern data stored on AWS, on premises, and in third-party sources. Administrators and data stewards who oversee an organisation's data assets can manage and govern access to data with fine-grained controls, which are designed to ensure that access is granted with the right level of privileges and context. Amazon DataZone makes it easier for engineers, data scientists, product managers, analysts, and business users to access data across the organisation so they can discover, use, and collaborate to derive data-driven insights.

Features

Business data catalogue for Amazon DataZone

Find published data, request access, and start working with your data in days rather than weeks.

Projects for Amazon DataZone

Manage and track data assets across projects and collaborate with teams.

Amazon DataZone portal

Get a personalised view of analytics for data assets through a web application or an API.

Governed data sharing in Amazon DataZone

With a governed workflow, you can be sure that the right people access the right data for the right purposes.

Use cases

Utilize the business data catalogue to find data

To search, share, and access catalogued data that is kept on AWS, on-site, or with other providers, use business keywords.

Make analytics access simpler

With the help of a web-based application, discover, prepare, transform, analyse, and visualise data in a personalised way.

Streamline workflows

Boost productivity by working together across teams and by having self-service access to data and analytics tools.

Manage access from a single location

From a single location, control and regulate data access in compliance with your company’s security policies.

An organization’s data producers and consumers can catalogue, find, analyse, exchange, and manage data with the help of Amazon DataZone, a data management service. A unified data portal makes it simple for engineers, data scientists, product managers, analysts, and business users to access data throughout the entire organisation in order to find, use, and work together to extract data-driven insights.

A recent addition to Amazon DataZone is a feature called "data lineage", which helps you visualise and understand data provenance, track changes, investigate root causes when a data problem is reported, and answer questions about how data flows from source to target. The feature offers a comprehensive view of an asset's lineage events, combining events captured automatically from the Amazon DataZone catalogue with events collected programmatically from outside Amazon DataZone.

When you need to verify where data in your organisation came from, you may have to rely on manual documentation or on asking colleagues. This manual approach is slow and potentially inconsistent, which undermines your confidence in the data. Data lineage in Amazon DataZone helps build trust by showing where the data came from, how it changed over time, and how it is consumed. For example, lineage can trace data from the moment it lands as raw files in Amazon Simple Storage Service (Amazon S3), through ETL transformations in AWS Glue, to its use in tools such as Amazon QuickSight.

You can spend less time mapping a data asset and its relationships, creating and debugging pipelines, and enforcing data governance procedures when you use Amazon DataZone’s data lineage. With the use of an API, data lineage enables you to compile all lineage information into one location. From there, it presents the information in a graphical style that helps data users increase productivity, make better data-driven decisions, and locate the source of problems with their data.

Getting started with data lineage in Amazon DataZone

In the preview, you can start hydrating Amazon DataZone with lineage information programmatically in two ways: by sending OpenLineage-compatible events from existing pipeline components to capture data movement or transformations that happen outside Amazon DataZone, or by creating lineage nodes directly with the Amazon DataZone APIs. Amazon DataZone also automatically captures lineage for catalogued assets, covering their states (for example, inventory or published) and their subscriptions. This helps data consumers, such as data analysts or engineers, confirm that they are using the right data for their analysis, and helps data producers, such as data engineers, see who is using the data they produced.

As soon as lineage events arrive, Amazon DataZone maps the identifiers sent through the APIs to assets already in the catalogue and begins populating the lineage model. The model creates new versions as lineage information arrives, so you can view an asset's lineage at a specific point in time and also go back to earlier versions.
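To make the OpenLineage route more concrete, here is a minimal Python sketch that builds an OpenLineage `RunEvent` for a hypothetical job that reads raw files from S3 and writes a curated copy, then hands it to a DataZone client. It assumes the client is `boto3.client("datazone")` and that its `post_lineage_event` call takes a `domainIdentifier` and the serialized event; the job, dataset, producer, and domain names are all hypothetical, and the `schemaURL` version is an assumption you should match to the OpenLineage spec you target.

```python
import json
import uuid
from datetime import datetime, timezone

# Assumption: spec version in the schemaURL; use the one your tooling emits.
SCHEMA_URL = "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent"


def build_run_event(job_namespace, job_name, inputs, outputs, producer,
                    event_type="COMPLETE"):
    """Build a minimal OpenLineage RunEvent as a plain dict.

    `inputs` and `outputs` are lists of (namespace, name) tuples that
    identify datasets, e.g. ("s3://raw-bucket", "orders/").
    """
    return {
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": job_namespace, "name": job_name},
        "inputs": [{"namespace": ns, "name": n} for ns, n in inputs],
        "outputs": [{"namespace": ns, "name": n} for ns, n in outputs],
        "producer": producer,
        "schemaURL": SCHEMA_URL,
    }


def post_to_datazone(datazone_client, domain_id, event):
    """Send one lineage event to an Amazon DataZone domain.

    `datazone_client` is assumed to be boto3.client("datazone"); the event
    payload is sent as UTF-8 encoded JSON bytes.
    """
    return datazone_client.post_lineage_event(
        domainIdentifier=domain_id,
        event=json.dumps(event).encode("utf-8"),
    )
```

Passing the client in, rather than creating it inside the function, keeps the event-building logic testable without AWS credentials. In a real pipeline you would typically emit a `START` event when a job begins and a `COMPLETE` (or `FAIL`) event with the same `runId` when it ends; this sketch sends a single event for brevity.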

Amazon DataZone Pricing

Amazon DataZone uses tiered pricing based on the number of users per month:

First 500 users: $9.00 per user

Next 500 users: $8.10 per user

Users beyond 1,000: $7.20 per user

There are no up-front costs or long-term commitments with this pay-as-you-go model. You are billed monthly based on your usage.
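As a rough illustration of how the tiers combine, here is a minimal Python sketch. It assumes the tiers are graduated, which is how the list above reads: the first 500 users are always billed at $9.00, the next 500 at $8.10, and only users beyond 1,000 at $7.20. It ignores any overage charges, and the function name is hypothetical. Amounts are kept in integer cents to avoid floating-point rounding.

```python
# (users in this tier, price per user in cents); None means "all remaining users".
# Assumption: graduated tiers, as listed in the pricing above.
TIERS = [(500, 900), (500, 810), (None, 720)]


def monthly_user_charge_cents(users: int) -> int:
    """Return the base monthly user charge in cents (overages not included)."""
    if users < 0:
        raise ValueError("users must be non-negative")
    remaining = users
    total = 0
    for size, price in TIERS:
        # Bill as many users as fit in this tier, then move to the next tier.
        in_tier = remaining if size is None else min(remaining, size)
        total += in_tier * price
        remaining -= in_tier
        if remaining == 0:
            break
    return total


if __name__ == "__main__":
    for n in (100, 500, 1000, 1200):
        print(n, f"${monthly_user_charge_cents(n) / 100:,.2f}")
```

Under that assumption, 1,200 users would come to $4,500 + $4,050 + $1,440 = $9,990 per month before any overage.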

Each user's monthly price includes:

20 MB of metadata storage

4,000 requests

0.2 compute units (shared among users in the domain)

If you exceed these limits, you are billed for the additional usage at standard AWS rates.

Read more on govindhtech.com

#amazondatazone#providesend#data#lineagepreview#documentation#datazone#usecases#aws#singlelocation#manageaccess#workflow#datamanagement#dataassets#technology#technews#news#govindhtech

Text

AOOStar GEM10: A Mini PC with Ryzen 7 7840HS, Oculink, and Dual 2.5 GbE LAN Ports

Introduction

The AOOStar GEM10 is an impressive mini PC that combines powerful hardware with advanced connectivity options. With its Ryzen 7 7840HS processor, OCuLink port, and dual 2.5 GbE LAN ports, this compact device offers strong performance and versatility.

Specifications

The AOOStar GEM10 is equipped with an AMD Ryzen 7 7840HS processor, providing high-speed computing power for a wide range of tasks. Its OCuLink port provides a high-bandwidth external PCIe connection, making it well suited to demanding peripherals such as external graphics cards.

Processor

The Ryzen 7 7840HS is an eight-core, 16-thread CPU with a base clock speed of 3.8 GHz and a boost clock speed of up to 5.1 GHz. It offers excellent multi-threaded performance, making it suitable for both professional work and gaming.

Connectivity

The AOOStar GEM10 stands out with its dual 2.5 GbE LAN ports, allowing for fast wired network connections. This feature is particularly beneficial for users who need high-speed data transfers or low-latency connections, such as gamers and content creators.

Storage

This mini PC supports multiple storage options, including M.2 SSDs and 2.5-inch SATA drives. The flexibility to choose between different storage configurations makes it easy to meet specific storage requirements.

Graphics

The AOOStar GEM10 uses the processor's integrated AMD Radeon 780M graphics, which deliver strong visual performance for an integrated GPU. Whether you're editing videos, playing games, or working with graphics-intensive applications, this mini PC can handle a wide range of workloads.

Performance

The AOOStar GEM10 excels in performance, thanks to its powerful Ryzen 7 7840HS processor and advanced connectivity options. Its high-speed data transfers and efficient multitasking keep operation smooth and responsive, even when handling resource-intensive tasks.

Potential Use Cases

The AOOStar GEM10's compact size and powerful specifications make it suitable for various use cases:

Gaming

With its powerful processor and integrated graphics, the AOOStar GEM10 is a good choice for gaming enthusiasts. It can handle many games smoothly, providing an immersive gaming experience.

Content Creation

Content creators can benefit from the AOOStar GEM10's high-performance capabilities. Whether you're editing videos, rendering 3D models, or working with graphic design software, this mini PC offers the power and speed to boost your productivity.

Home Theater PC

The AOOStar GEM10 can be turned into a home theater PC, letting you enjoy your favorite movies and TV shows in stunning detail. Its powerful hardware ensures smooth playback and supports high-resolution content.

Productivity

For office or productivity use, the AOOStar GEM10 provides a compact and efficient solution. Its powerful processor and ample storage options enable seamless multitasking and efficient workflow management.

Conclusion

The AOOStar GEM10 mini PC offers impressive performance and advanced connectivity options, making it a versatile device for various use cases. Whether you're a gamer, content creator, or simply need a compact and powerful PC for productivity, the AOOStar GEM10 is worth considering.

Note

Obviously the ability to turn off anonymous asks is very useful and highly popular, but if tumblr had the option to disallow people sending asks under their blog name and accept only anonymous asks, do you think this feature would be useful to anyone at all? (like, not necessarily you in specific, just, do you think it would be a remotely popular choice with any sort of group on tumblr in general)

turning on "harassment only" mode and going to sleep

#serious answer: not like useful but def funny#id probably enable it sometimes just cause#but like i cant think of much of an actual usecase

Text

there's nothing quite like using ancient code with all the example images broken and for some god forsaken reason they keep putting everything in tables

#writing#rant#html#they're going directly into divs#i dont know why you used a table and i dont care i will not stand for it#im losing my mind over this tho. literally why a table? its the worst structure. yeah its helpful sometimes. i guess.#but literally the only valid usecase imo is for pre-flexbox times#if you're not using it to have an adjustable variable layout what are you even doing with it#literally? just use a div??? what is happening you're not even using the cells theyre just SITTING THERE IN YOUR CODE#benefit of the doubt says they too were working with restrictions and just doing the best they could#judgement says there is no valid explanation for this tomfoolery

Text

this might just be me splitting hairs that dont necessarily need to be split? but i feel like "unaffected" might be a better term than "exempt" (wrt TMA/TME)

#feel free to ignore this#i understand the usecase im just postulating ideas#'exempt' feels too definite for how my brain thinks about it#this isnt even necessarily me saying what the lines are defining has to change#obviously like#people should just get educated about what the terms really mean#but i also feel like improvements to the terminology should be discussed#cause the less friction we have when explaining the better#like if your goal is to communicate ideas there are often better solutions than blaming the person that doesnt understand

Note

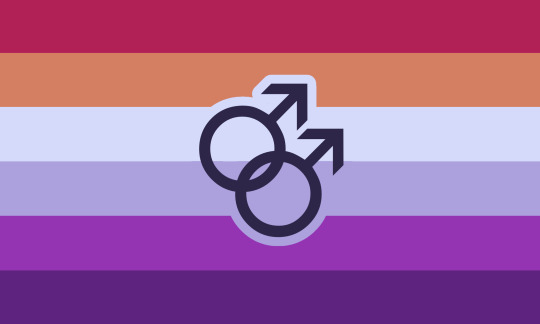

⚣ lesbian : a lesbian that identifies / feels close /whatever to the ⚣ symbol. This flag is used by:

1. gaybians

2. achillean lesbians

3. multigender people

4. butch4butch lesbians

could you design a flag for it? i love making terms but i suck at flags.

[Image ID: Two flags with 6 horizontal stripes and warm, saturated colors. The first has the colors pinkish red, orange, blueish white, lilac, purple, and dark purple. It has a symbol in the centre made of two interlocking male symbols. The second has the colors dark pink, pink, off white, blueish white, blueish purple, and dark purple. /End ID]

⚣ lesbian and ⚢ turian/veldian flags! (i really wanted to complete the set lol)

#this is honestly the most relaxing request ive ever gotten#symbol ✔️#associated colors ✔️#usecases ✔️#name ✔️#⚣ lesbian#⚢ turian#⚢ veldian#suggest a label#mogai#microlabels#mogai coining#orientation

Text

it's gonna be weird as fuck in a couple years when we're all saying "remember the whole ai thing? that shit was wild"

Text

Elevate math practice with AI Math Coach! Generate personalized worksheets, mirror classroom exercises, and track progress to help students master math effortlessly.

Text

Amazon Route 53 Advanced Features for Global Traffic

What is Amazon Route 53

A dependable and economical method of connecting end users to Internet applications

If you manage many accounts and Amazon VPC resources, sharing DNS resources and then associating them with each Amazon Virtual Private Cloud (Amazon VPC) can be very time-consuming. You may even have built your own orchestration layers to distribute DNS configuration across your accounts and VPCs, only to run into sharing and association limits.

Amazon Route 53 Profiles

AWS now provides Amazon Route 53 Profiles, which let you centrally manage DNS for all accounts and VPCs in your organization. With Route 53 Profiles, you can apply a standard DNS configuration to multiple VPCs in the same AWS Region. This configuration includes Amazon Route 53 private hosted zone (PHZ) associations, Resolver forwarding rules, and Route 53 Resolver DNS Firewall rule groups. Profiles let you quickly verify that all of your VPCs share the same DNS setup, saving you the trouble of managing separate Route 53 resources. Managing DNS for many VPCs is now as easy as managing it for a single VPC.

Because Profiles are integrated with AWS Resource Access Manager (RAM), you can share Profiles across accounts or across your AWS Organizations organization. Profiles also integrate with Route 53 private hosted zones: you can create new private hosted zones or add existing ones to your Profile, so that when the Profile is shared across accounts, your organizations have access to the same settings. With AWS CloudFormation, you can use Profiles to apply DNS settings consistently to VPCs as new accounts are provisioned. With this release, you can manage DNS settings for multi-account environments more effectively.

Amazon Route 53 benefits

Automatic scaling and internationally distributed Domain Name System (DNS) servers ensure dependable user routing to your website

Amazon Route 53 uses globally dispersed Domain Name System (DNS) servers to provide dependable and effective end-user routing to your website. By dynamically adapting to changing workloads, automated scaling maximises efficiency and preserves a flawless user experience.

With simple visual traffic flow tools and domain name registration, set up your DNS routing in a matter of minutes

With simple visual traffic flow tools and a fast and easy domain name registration process, Amazon Route 53 simplifies DNS routing configuration. This makes it easier for consumers to manage and direct web traffic effectively by allowing them to modify their DNS settings in a matter of minutes.

To cut down on latency, increase application availability, and uphold compliance, modify your DNS routing policies

Users can customize DNS routing settings with Amazon Route 53 to meet unique requirements including assuring compliance, improving application availability, and lowering latency. With this customization, customers can optimize DNS configurations for resilience, performance, and legal compliance.

How it works

Amazon Route 53 is a highly available and scalable Domain Name System (DNS) web service. Route 53 connects user requests to internet applications running on AWS or on premises.

Use cases

Control network traffic worldwide

Easy-to-use global DNS features let you create, visualize, and scale complicated routing interactions between records and policies.

Build highly available applications

In the event of a failure, configure routing policies to predetermine and automate responses, such as rerouting traffic to different Availability Zones or Regions.
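As one concrete example of such a routing policy, here is a minimal Python sketch that builds a Route 53 `ChangeBatch` with a PRIMARY and a SECONDARY failover `A` record, the structure passed to `change_resource_record_sets` in boto3. The record name, IP addresses, and health-check ID are hypothetical placeholders, and the actual API call is left commented out because it needs AWS credentials and a real hosted zone.

```python
def failover_change_batch(record_name, primary_ip, secondary_ip,
                          primary_health_check_id, ttl=60):
    """Build a Route 53 ChangeBatch with PRIMARY/SECONDARY failover A records."""

    def record(set_id, role, ip, health_check_id=None):
        rrset = {
            "Name": record_name,
            "Type": "A",
            "SetIdentifier": set_id,  # distinguishes records with the same name/type
            "Failover": role,         # "PRIMARY" or "SECONDARY"
            "TTL": ttl,
            "ResourceRecords": [{"Value": ip}],
        }
        if health_check_id is not None:
            # Route 53 answers with SECONDARY when this health check is unhealthy.
            rrset["HealthCheckId"] = health_check_id
        return {"Action": "UPSERT", "ResourceRecordSet": rrset}

    return {
        "Comment": f"Failover records for {record_name}",
        "Changes": [
            record("primary", "PRIMARY", primary_ip, primary_health_check_id),
            record("secondary", "SECONDARY", secondary_ip),
        ],
    }


if __name__ == "__main__":
    batch = failover_change_batch(
        "app.example.com", "192.0.2.10", "198.51.100.10",
        "hypothetical-health-check-id")
    # To apply it (requires boto3, credentials, and a real hosted zone ID):
    # import boto3
    # boto3.client("route53").change_resource_record_sets(
    #     HostedZoneId="Z_PLACEHOLDER", ChangeBatch=batch)
    print([c["ResourceRecordSet"]["Failover"] for c in batch["Changes"]])
```

Only the primary record carries a health check here; a common variation is to health-check the secondary too, or to point each record at resources in a different Availability Zone or Region.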

Configure a private DNS

In your Amazon Virtual Private Cloud (VPC), you can assign and access custom domain names. Use internal AWS resources and servers without exposing DNS data to the public internet.

Which actions can you perform in Amazon Route 53

How Route 53 Profiles work

To start using Route 53 Profiles, open the Route 53 console in the AWS Management Console. There you can create Profiles, add resources to them, and associate them with VPCs. You can then use AWS RAM to share a Profile with another account.

To set up a Profile, choose Profiles in the Route 53 console's navigation pane, and then choose Create profile.

Give the Profile a friendly name, such as MyFirstRoute53Profile, and optionally add tags.

On the Profile's console page, you can add new Resolver rules, private hosted zones, and DNS Firewall rule groups to the Profile, or modify the ones already there.

Next, choose which VPCs to associate with the Profile. You can also add tags, configure recursive DNSSEC validation, and set the failure mode for the DNS Firewall rule groups associated with your VPCs. In addition, you can choose the order in which DNS settings are evaluated: Profile DNS first and then VPC DNS, or VPC DNS first.
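The console steps above can also be scripted. Here is a minimal Python sketch, assuming `profiles_client` is `boto3.client("route53profiles")` and that its `create_profile`, `associate_resource_to_profile`, and `associate_profile` methods correspond to the Route 53 Profiles API operations of the same names. The Profile name, VPC IDs, resource ARNs, and association names are hypothetical, and some resource types (DNS Firewall rule groups, for example) may need extra `ResourceProperties` that this sketch omits.

```python
import uuid


def create_profile_and_associate(profiles_client, name, vpc_ids, resource_arns=()):
    """Create a Route 53 Profile, add resources to it, and associate it with VPCs.

    Assumes `profiles_client` is boto3.client("route53profiles").
    Returns the new Profile's ID.
    """
    # ClientToken makes the CreateProfile request idempotent.
    profile = profiles_client.create_profile(
        Name=name, ClientToken=str(uuid.uuid4()))["Profile"]
    profile_id = profile["Id"]

    # Add existing resources (e.g. private hosted zones, Resolver rules) by ARN.
    for index, arn in enumerate(resource_arns):
        profiles_client.associate_resource_to_profile(
            ProfileId=profile_id, ResourceArn=arn, Name=f"{name}-resource-{index}")

    # Associate the Profile with each VPC.
    for vpc_id in vpc_ids:
        profiles_client.associate_profile(
            ProfileId=profile_id, ResourceId=vpc_id, Name=f"{name}-{vpc_id}")
    return profile_id
```

Usage might look like `create_profile_and_associate(boto3.client("route53profiles"), "MyFirstRoute53Profile", ["vpc-0123456789abcdef0"])`, with a placeholder VPC ID. Taking the client as a parameter keeps the function testable with a stub and lets you pick the account and Region when you build the client.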

Up to 5,000 VPCs can be associated with a single Profile, and each VPC can be associated with only one Profile.
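To make that cardinality concrete, here is a toy in-memory model in Python. It is not an AWS API and does not talk to Route 53; it only enforces the two rules stated above (at most 5,000 VPCs per Profile, at most one Profile per VPC). The class and method names and the IDs used with it are hypothetical.

```python
MAX_VPCS_PER_PROFILE = 5000  # limit stated in the text above


class ProfileAssociations:
    """Toy model of Profile-to-VPC associations (not an AWS API)."""

    def __init__(self):
        self._profile_of_vpc = {}   # vpc_id -> profile_id
        self._vpcs_of_profile = {}  # profile_id -> set of vpc_ids

    def associate(self, profile_id, vpc_id):
        current = self._profile_of_vpc.get(vpc_id)
        if current is not None and current != profile_id:
            # One Profile per VPC: refuse a second, different Profile.
            raise ValueError(f"{vpc_id} is already associated with {current}")
        vpcs = self._vpcs_of_profile.setdefault(profile_id, set())
        if vpc_id not in vpcs and len(vpcs) >= MAX_VPCS_PER_PROFILE:
            raise ValueError(f"{profile_id} already has {MAX_VPCS_PER_PROFILE} VPCs")
        vpcs.add(vpc_id)
        self._profile_of_vpc[vpc_id] = profile_id

    def profile_for(self, vpc_id):
        """Return the Profile associated with `vpc_id`, or None."""
        return self._profile_of_vpc.get(vpc_id)
```

Re-associating a VPC with the Profile it already has is treated as a no-op, while associating it with a different Profile raises an error.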

Profiles also let you control VPC settings for different accounts in your organization. Instead of configuring them per VPC, you can disable reverse DNS rules for every VPC associated with the Profile. (By default, Route 53 Resolver automatically creates rules for reverse DNS lookups on your behalf, so that other services can easily resolve hostnames from IP addresses.) In the DNS Firewall settings, you can choose whether the firewall fails open or fails closed. You can also specify whether to enable recursive DNSSEC validation for the VPCs associated with the Profile, for example if you use DNSSEC signing in Amazon Route 53 or another provider.

Suppose you associate a Profile with a VPC. What happens when a query exactly matches both a PHZ or Resolver rule in the VPC's Profile and one associated with the VPC directly? Which DNS settings take priority: the VPC's local settings or the Profile's? If the Profile includes a PHZ for example.com and the VPC is associated with its own PHZ for example.com, the VPC's local DNS settings are applied first. When the conflicting names differ in specificity (for instance, the VPC is associated with a PHZ named account1.infra.example.com while the Profile has a PHZ for infra.example.com), the most specific name wins.
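The precedence rules above can be modelled in a few lines. This Python sketch is only an illustration of the two rules as described (the most specific matching zone name wins; on an identical name, the VPC's own zone beats the Profile's). It is not how Route 53 Resolver is implemented, it covers only private hosted zones (not Resolver rules), and the function and zone names are hypothetical.

```python
def _labels(name):
    """Split a DNS name into lowercase labels, ignoring a trailing dot."""
    return name.rstrip(".").lower().split(".")


def pick_private_zone(query, vpc_zones, profile_zones):
    """Return (zone_name, source) of the PHZ that would answer `query`, or None.

    Assumed precedence, taken from the text:
      1. the most specific (longest) matching zone name wins;
      2. for the same zone name, the VPC's own zone beats the Profile's.
    """
    q = _labels(query)

    def matches(zone):
        z = _labels(zone)
        # A zone matches if its labels are a suffix of the query's labels.
        return len(z) <= len(q) and q[-len(z):] == z

    candidates = [(zone, 0, "vpc") for zone in vpc_zones if matches(zone)]
    candidates += [(zone, 1, "profile") for zone in profile_zones if matches(zone)]
    if not candidates:
        return None
    # Sort key: more labels first, then VPC (0) before Profile (1).
    zone, _, source = min(candidates, key=lambda c: (-len(_labels(c[0])), c[1]))
    return zone.rstrip(".").lower(), source
```

With the text's examples, a query for `www.example.com` resolves from the VPC's `example.com` zone, while `db.infra.example.com` falls through to the Profile's `infra.example.com` because the VPC's `account1.infra.example.com` zone does not contain it. Comparing whole labels rather than raw string suffixes keeps a name like `badexample.com` from matching `example.com`.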

Using AWS RAM to share Route 53 Profiles between accounts

You can share the Profile you created in the previous section with a second account using AWS Resource Access Manager (RAM).

On the Profile's detail page, choose Share profile. Alternatively, open the AWS RAM console and choose Create resource share.

Give the resource share a name, then in the Resources section search for "Route 53 Profiles" and choose your Profile from the list of resources. Optionally add tags, then choose Next.

Profiles use RAM managed permissions, which let you attach different permissions to different resource types. By default, only the Profile's owner (the network administrator) can modify the resources inside the Profile. Recipients of the Profile (the VPC owners) can only view its contents, in read-only mode. For a recipient to add PHZs or other resources to the Profile, the required permissions must be attached to the resource. Recipients cannot edit or remove any resources that the Profile owner adds to the shared resource.

Keep the default settings to grant access to the second account, and choose Next.

On the next screen, select Allow sharing with anyone, enter the ID of the second account, and choose Add. Then select that account ID under Selected principals and choose Next.

On the Review and create page, choose Create resource share. The resource share is created.

Next, open the RAM console with the second account, the one you shared the Profile with. In the Resource sharing section of the navigation menu, select the resource share you created in the first account, and choose Accept resource share.

And that's it! The Profile that was shared with you now appears on the Amazon Route 53 Profiles page.

You can see the shared Profile's private hosted zones, Resolver rules, and DNS Firewall rule groups, and you can associate the Profile with this account's VPCs. However, you cannot modify or remove any of its resources. Profiles are Regional resources and cannot be shared across Regions.
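The same share-and-accept flow can be scripted with the AWS RAM API. In this minimal Python sketch, `owner_ram` and `recipient_ram` are assumed to be `boto3.client("ram")` instances created with credentials for the owning and receiving accounts respectively; the share name, Profile ARN, and account ID are hypothetical. Setting `allowExternalPrincipals=True` mirrors "Allow sharing with anyone" in the console. Pagination of invitations is ignored for brevity, and when sharing within an AWS Organization that has RAM sharing enabled, there may be no invitation to accept.

```python
def share_profile(owner_ram, share_name, profile_arn, recipient_account_id):
    """Create a RAM resource share for a Route 53 Profile; return the share ARN."""
    resp = owner_ram.create_resource_share(
        name=share_name,
        resourceArns=[profile_arn],
        principals=[recipient_account_id],
        allowExternalPrincipals=True,  # "Allow sharing with anyone" in the console
    )
    return resp["resourceShare"]["resourceShareArn"]


def accept_pending_invitations(recipient_ram, share_arn):
    """Accept pending invitations for `share_arn`; return the accepted invitation ARNs.

    Only the first page of invitations is examined.
    """
    resp = recipient_ram.get_resource_share_invitations(resourceShareArns=[share_arn])
    accepted = []
    for invitation in resp["resourceShareInvitations"]:
        if invitation["status"] == "PENDING":
            recipient_ram.accept_resource_share_invitation(
                resourceShareInvitationArn=invitation["resourceShareInvitationArn"])
            accepted.append(invitation["resourceShareInvitationArn"])
    return accepted
```

Splitting the flow into two functions reflects that each half runs under a different account's credentials.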

Amazon Route 53 availability

Using the AWS Management Console, Route 53 API, AWS CloudFormation, AWS Command Line Interface (AWS CLI), and AWS SDKs, you can quickly get started with Route 53 Profiles.

Route 53 Profiles will be available in every AWS Region except Canada West (Calgary), the AWS GovCloud (US) Regions, and the Amazon Web Services China Regions.

Amazon Route 53 pricing

Please check the Route 53 price page for further information on the costs.

Read more on govindhtech.com

#amazonroute53#globaltraffic#awsregion#usecases#dnsdata#route53#vpc#awsram#ram#aws#awscli#technology#technews#news#govindhtech

Text

am i depressed or is the life i'm living rn just super boring and annoying

#did i get tired of the show i was watching because im having an episode or did the show actually get worse in season 2#is everything i eat tasteless because idc abt food anymore or is it because we haven't been cooking well lately#am i annoyed w my partner bc he's been ignoring me and not telling me things or do i really not enjoy his company anymore#am i tired bc i haven't been sleeping well bc he snores or is my insomnia coming back#do i hate the rs i have to do right now because i can't find a practical usecase for it in the modern world or am i struggling to start complex tasks that have no clear end goal#hard to tell the difference

Text

Screaming. Crying. Every time I'm asked to extend this piece of software I want to tear it and the previous maintainers apart. Who made these decisions. Why is this thing this way. Now a relatively simple change has me on my knees. "Oh but you should be able to support x" YEAH YEAH I SHOULD. BUT YOU SEE. WHERE WERE YOU AND YOUR OPINIONS WHEN THIS THING WAS BEING IMPLEMENTED ORIGINALLY. NOW I GOTTA DEAL WITH THE SIMPLE THING NOT BEING SIMPLE AT ALL.

#evelyn stuff#i tried to be like “ok this sounds simple but it isnt and actually we dont even have a usecase for the complicated version of this so”#“can we skip it or”#and the answer was “no actually this might be used in x case because we want to implement y”#ok ok. yeah sure. I'll make a feature for you to use in 6 months. no prob.#you dug me into a hole and now i gotta dig you out!
