#robots.txt validator w3c


Photo

Free Robots Checker & Tester Tool

Many robots.txt files contain errors hidden to humans. Run your website’s robots file through the tool below and see if it has any errors.

Like all good robot checkers, this tool validates /robots.txt files according to the robots exclusion de-facto standard. The robots.txt validator checks the syntax and structure of the document, is able to spot typos and can be used to test if a specific crawler is permitted access to a given URL.

If no ERROR is listed within your robots.txt file – then you’re all good to go! Otherwise, the tool will outline what ERROR you need to fix.
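As a rough illustration of the "is this crawler permitted to fetch this URL" check described above, here is a minimal Python sketch built on the standard library's `urllib.robotparser`. The robots.txt content, the bot names and the URLs are all made up for the example, and this is only an assumption about how such a check could work, not the tool's actual implementation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if user_agent may fetch url under the given rules."""
    parser = RobotFileParser()
    # parse() accepts the file as an iterable of lines.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "https://example.com/index.html"),
        ("Googlebot", "https://example.com/private/page.html"),
        ("BadBot", "https://example.com/index.html"),
    ]:
        print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

Note that the standard-library parser does plain path-prefix matching, so it will not flag typos or unknown directives the way a dedicated validator would.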

Check Your Robots.txt Now

#SEONINJATOOLS#Free Robots Checker & Tester Tool#robots.txt validator w3c#how to check robots.txt file in website#how to test my robots txt#google robots.txt generator#submit robots.txt to google#search console robots tester#robots.txt syntax#check if your site has robots txt


Text

AI SEO Analyzer helps to identify your SEO mistakes and optimize your web page contents for a better search engine ranking. It also offers side-by-side SEO comparisons with your competitors. The analysis report can also be downloaded as a PDF file for offline use.

In-depth Analysis Report:

Meta Title

Meta Description

Meta Keywords

Headings

Google Preview

Missing Image Alt Attribute

Keywords Cloud

Keyword Consistency

Text/HTML Ratio

GZIP Compression Test

WWW / NON-WWW Resolve

IP Canonicalization

XML Sitemap

Robots.txt

URL Rewrite

Underscores in the URLs

Embedded Objects

Iframe Check

Domain Registration

WHOIS Data

Indexed Pages Count (Google)

Backlinks Counter

URL Count

Favicon Test

Custom 404 Page Test

Page Size

Website Load Time

PageSpeed Insights (Desktop)

Language Check

Domain Availability

Typo Availability

Email Privacy

Safe Browsing

Mobile Friendliness

Mobile Preview Screenshot

Mobile Compatibility

PageSpeed Insights (Mobile)

Server IP

Server Location

Hosting Service Provider

Speed Tips

Analytics

W3C Validity

Doc Type

Encoding

Facebook Likes Count

PlusOne Count

StumbleUpon Count

LinkedIn Count

Estimated Worth

Alexa Global Rank

Visitors Localization

In-Page Links

Broken Links

Enter Your URL. AI SEO Analyzer Will Crawl And Test Your Website To See How Well It’s Optimized For Search Engines.

#search engine optimisation consultants#search engine optimized (seo) articles#seo services#searchengine#seo tips#seo analysis#seo checklist#free seo tools#free seo analysis#free seo audit


Text

Search Engine Optimization (SEO) by Digital Go Market

Introduction to SEO
• What is SERP?
• Types & Techniques of SEO
• How Search Engine Index & Rank Pages
• Crawling, Indexing, and Penalization Policies
• Organic Search Vs Paid Search
• Algorithm & Updates of Google
• Anatomy of Search Results

Keyword Research & Placement

Competitor Analysis & Monitoring

Setup Google Webmaster and Analytics for your Website

Difference Between On-Page & Off-Page Optimization

On-Page Optimization
• Page Naming (URL Structuring)
• Title Optimization
• Meta Tag & HTML Tag Optimization
• Heading Tags {H1 to H6}
• Keyword Optimization and Synonyms
• Internal/External Link Optimization
• Canonicalization, Pagination & Redirection
• Robots.txt & Sitemaps (XML/HTML)
• W3C Validation

Off-Page Optimization
• Link Building & Backlinks
• Difference Between Do-Follow and No-Follow Backlinks
• What is PA & DA?
• Social Bookmarking
• Search Engine Submission
• Classified & Directory Submission
• Article & Blog Submission
• Q & A Submission Techniques
• Local Citation & Business Listing
• Blog Commenting, Forum Posting

Important Factors Influencing Link Popularity

Basics of HTML & CSS

Major SEO Tools Including Google Search Console

SEO Ranking Factors

Do’s and Don’ts of SEO

To know more visit: https://www.digitalgomarket.com/search-engine-optimization/


Text

SEO Audit For A Better Ranking On Search Engines? 2022

An SEO audit is a detailed analysis of how well your website is optimized for search engines. It can help you identify areas where your site needs improvement and provide specific recommendations to help you optimize your website.

What is the purpose of an SEO audit in digital marketing?

SEO audits are an important part of any digital marketing campaign. They help you identify and correct any errors or problems with your website that may be preventing it from ranking as high as it could in search engine results pages (SERPs). Additionally, SEO audits can help you benchmark your website against your competition and measure the effectiveness of your website SEO efforts.

Some of the key benefits of SEO audits include:

· Higher rankings in SERPs
· Increased web traffic
· Greater visibility and exposure for your business online
· Improved ROI on your digital marketing campaigns
· More leads and conversions from website visitors

How often should I run an SEO audit?

There is no one-size-fits-all answer to this question, as the frequency with which you should conduct SEO audits will depend on a variety of factors, including your website’s age, size, and competitiveness. However, it is generally recommended that you audit your website at least once a year or for each post or page you add.

What will you learn from an SEO audit?

A good SEO audit will provide you with a comprehensive report of your website’s current SEO health. This report will include an evaluation of your website’s visibility in search engines, as well as its ranking for relevant keywords. The audit will also identify any issues that might prevent you from ranking as high as you’d like in SERPs. Additionally, the audit may provide specific recommendations on how to improve your website’s SEO.

SEO audits can also help you benchmark your website against your competition. By comparing your website’s visibility and ranking for relevant keywords, you can measure the effectiveness of your website’s SEO efforts.

Key aspects of SEO audits

An SEO audit will typically look at a number of key factors, including:

- Relevancy of content
- Use of keywords and keyword density
- Title tags and meta descriptions
- Headings and subheadings
- Internal linking structure
- Image optimization
- Robots.txt file and sitemap
- Website loading speed

Each of these factors can have a significant impact on your website’s SEO. A good SEO audit will identify any deficiencies and provide specific recommendations on how to address them.

If you’re looking to improve your website’s visibility and ranking in search engines, SEO Analyzer Tool is a completely free SEO tool.

What does the SEO Analyzer Tool provide?

SEO Analyzer Tool helps to identify your SEO mistakes, optimize your web page contents for a better search engine ranking, and instantly analyze your SEO issues.

In-depth Analysis Report:

Meta Title, Meta Description, Meta Keywords, Headings, Google Preview, Missing Image Alt Attribute, Keywords Cloud, Keyword Consistency, Text/HTML Ratio, GZIP Compression Test, WWW / NON-WWW Resolve, IP Canonicalization, XML Sitemap, Robots.txt, URL Rewrite, Underscores in the URLs, Embedded Objects, Iframe Check, Domain Registration, WHOIS Data, Indexed Pages Count (Google), Backlinks Counter, URL Count, Favicon Test, Custom 404 Page Test, Page Size, Website Load Time, PageSpeed Insights (Desktop), Language Check, Domain Availability, Typo Availability, Email Privacy, Safe Browsing, Mobile Friendliness, Mobile Preview Screenshot, Mobile Compatibility, PageSpeed Insights (Mobile), Server IP, Server Location, Hosting Service Provider, Speed Tips, Analytics, W3C Validity, DocType, Encoding, Facebook Likes Count, PlusOne Count, StumbleUpon Count, LinkedIn Count, Estimated Worth, Alexa Global Rank, Visitors Localization, In-Page Links, Broken Links

For more articles related to digital marketing and how to earn money online check our website.

#seo#seo audit#seo optimization#seo analysis#seo report#ranking on google#rank high on search engines


Text

Google Webmaster Guidelines: Everything You Need to Know

New Post has been published on http://tiptopreview.com/google-webmaster-guidelines-everything-you-need-to-know/


What Are the Google Webmaster Guidelines?

Make Sure Your Keywords Are Relevant

Make Sure That Pages on Your Site Can Be Reached by a Link from Another Findable Page

Limit the Number of Links on a Page to a Reasonable Number

Use the Robots.txt File to Manage Your Crawl Budget

Create a Useful, Information-Rich Site & Write Pages That Clearly and Accurately Describe Your Content

Think About the Words Users Would Type to Find Your Pages

Design Your Site to Have a Clear Conceptual Page Hierarchy

Ensure All Website Assets Are Fully Crawlable & Indexable

Make Your Site’s Important Content Visible by Default

Google’s Webmaster Guidelines Are Really Guidelines

Google’s Webmaster Guidelines are fundamental to achieving sustainable SEO results using methods that are in line with Google’s expectations.

If you don’t follow their guidelines, you can expect to experience either an algorithmic devaluation or an outright manual penalty.

In the most severe cases, you can expect to be banned from Google SERPs entirely.

Understanding the guidelines in full is the only way to avoid potential missteps and future harm to your site. Of course, there’s always more than one way to interpret their guidelines.

Fortunately, Google reps such as John Mueller and Gary Illyes are available on Twitter for most questions about these guidelines.

For the most part, the guidelines are cut-and-dried.

However, you do need to review the guidelines regularly because Google does update them. They don’t necessarily announce changes to them, so you need to be on alert.

One of the more recent changes to the guidelines was when Google added a line about making sure you code your sites in valid HTML and CSS, and that it should be validated with the W3C.

This doesn’t necessarily mean that it will help in search (it can from a user experience perspective, thanks to better cross-browser compatibility).

This is a different type of guide than most.

The goal of this guide is to present potential solutions to common problems, so you are armed with actionable advice you can use on your next website challenge.

Doesn’t that sound like fun?

What Are the Google Webmaster Guidelines?

These guidelines are separated into:

Webmaster guidelines.

General guidelines.

Content-specific guidelines.

Quality guidelines.

Webmaster guidelines are more general best practices that will help you build your site so that it’s easier to appear in Google Search.

Other guidelines include those that will prevent your site from appearing in search.

General guidelines are those best practices that will help your site look its best in the Google SERPs (search engine results pages).

Content-specific guidelines are more specific towards the different types of content on your site, like images, video, and others.

Quality guidelines include all those techniques that are prohibited and can get your page banned from the SERPs.

If that weren’t enough, using these techniques can also cause a manual action to be levied against your site.

These guidelines are focused on making sure that you don’t write spammy content and that you write content for humans rather than search engine spiders.

It’s easier said than done, however. Creating websites that adhere to Google’s Webmaster Guidelines is a challenge.

But, by understanding them completely, you have passed the first hurdle.

The next hurdle is applying them to your websites in a way that makes them compliant with these guidelines. But, as with any SEO challenge, practice makes perfect!

Make Sure Your Keywords Are Relevant

Your site should be easily discoverable. One way to determine keyword relevance is to examine the top-ranking sites and see what keywords they are using in their page title and meta description.

Another way is to perform a competitor analysis of the top-ranking sites in your niche. There are many tools that can help you identify how sites are using keywords on-page.

Common Issue

You have a site that has zero keywords. The client has given you a list of keywords, and you want to optimize the site for those keywords. The problem is, you have nothing but branded keywords throughout the text copy, and there was zero thought given to the website optimization.

Solution

The solution here is not quite simple. You would need to perform keyword research and in-depth competitor analysis to find the sweet spot of optimization for keywords based on your target market.

Make Sure That Pages on Your Site Can Be Reached by a Link from Another Findable Page

Google recommends that all pages on your site have at least one link from another page. Links make the World Wide Web, so it makes sense that your primary means of navigation are links. This can be done via your navigation menu, breadcrumbs, or contextual links.

Links should also be crawlable. Making your links crawlable ensures a great user experience, and that Google can easily crawl and understand your site. Avoid using generic anchor text to create these links, and use keyword phrases to describe the outgoing page.

A siloed website architecture is best because this helps reinforce topical relevance of pages on your site and arranges them in a hierarchical structure that Google can understand. It also helps reinforce topical focus.

Common Issue

You run into a site that has orphaned pages everywhere and across the sitemap.

Solution

Make sure at least one link from the site points to every other page on your site. If the page is not part of your site, either delete it entirely or noindex it.
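To make the orphaned-page check concrete, here is a small Python sketch. It assumes you already have two things from a crawl: the set of URLs listed in the sitemap and a map of which page links to which. The function name, the sample paths, and the choice to treat the homepage as the root are illustrative assumptions, not part of Google’s guidelines.

```python
def find_orphan_pages(
    sitemap_urls: set[str],
    links: dict[str, set[str]],
    home: str = "/",
) -> list[str]:
    """Return sitemap URLs that no *other* page links to.

    links maps a source page to the set of pages it links to.
    The homepage is treated as the root and never reported.
    """
    linked_to: set[str] = set()
    for source, targets in links.items():
        for target in targets:
            if target != source:  # a self-link does not make a page findable
                linked_to.add(target)
    return sorted(
        url for url in sitemap_urls if url != home and url not in linked_to
    )


if __name__ == "__main__":
    # Hypothetical crawl data.
    sitemap = {"/", "/a", "/b", "/c"}
    link_map = {"/": {"/a"}, "/a": {"/", "/b"}, "/c": {"/c"}}
    print(find_orphan_pages(sitemap, link_map))
```

In this sample data, `/c` appears in the sitemap but is only linked from itself, so it is reported as orphaned.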

Limit the Number of Links on a Page to a Reasonable Number

In the past, Google is on record saying that you shouldn’t use more than 100 links per page.

It’s better to have links that are useful to the user, rather than sticking to a specific quantity. In fact, sticking to specific quantities can be harmful if they negatively impact the user experience.

Google’s guidelines now state that you can have a few thousand (at most) on a page. It’s not unreasonable to assume that Google uses quantities of links as a spam signal.

If you want to link over and over, do so at your peril. Even then, John Mueller has stated that they don’t care about internal links, and you can do what you want.

Common Issue

You have a site that has more than 10,000 links per page. This is going to introduce problems when it comes to Google crawling your site.

Solution

This depends on the scope and type of site you have. Make sure that you reduce links per page down to fewer than a few thousand if your site needs it.
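If you want a quick way to see how many links a page actually carries, a sketch like the following counts anchor tags with an `href` using only Python’s standard library. The class and function names are made up for this example, and it counts links in the raw HTML only, so links injected by JavaScript would not be included.

```python
from html.parser import HTMLParser


class LinkCounter(HTMLParser):
    """Counts <a> tags that carry a non-empty href attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a" and any(name == "href" and value for name, value in attrs):
            self.count += 1


def count_links(html: str) -> int:
    counter = LinkCounter()
    counter.feed(html)
    counter.close()
    return counter.count


if __name__ == "__main__":
    sample = '<a href="/a">A</a><a>no href</a><a href="/b">B</a>'
    print(count_links(sample))
```

Run against each crawled page, a count like this makes it easy to flag the pages that need their link volume reviewed.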

Use the Robots.txt File to Manage Your Crawl Budget

Crawl budget optimization is an important part of making sure that Google can crawl your site efficiently and easily.

You make it more efficient and easier for Google to crawl your site through this process. You optimize your crawl budget in two ways – the links on your site and robots.txt.

The method that primarily concerns us at this step is using robots.txt for crawl budget optimization. This guide from Google tells you everything you need to know about the robots.txt file and how it can impact crawling.

Common Issue

You run into a site that has the following line in its robots.txt file:

Disallow: /

This means that the robots.txt file is disallowing crawling from the top of the site down.

Solution

Delete that line.
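A blanket disallow is easy to miss on a large site, so it can be worth checking for it automatically. The sketch below is one possible way to do that with Python’s standard `urllib.robotparser`; the function name and the sample rules are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocks_entire_site(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True if user_agent is not allowed to fetch the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


if __name__ == "__main__":
    print(blocks_entire_site("User-agent: *\nDisallow: /\n"))
    print(blocks_entire_site("User-agent: *\nDisallow: /tmp/\n"))
```

An empty `Disallow:` value means "allow everything", so only a rule that covers `/` itself trips the check.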

Common Issue

You run into a site that doesn’t have a sitemap.xml directive in robots.txt. Including one is considered an SEO best practice.

Solution

Make sure you add in a directive declaring the location of your sitemap file, such as the following:

Sitemap: https://www.example.com/sitemap.xml
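To check whether a robots.txt file declares a sitemap, Python’s `urllib.robotparser` exposes the parsed `Sitemap:` lines through `site_maps()` (available from Python 3.8). The sketch below is a minimal example; the helper name and the sample file contents are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
WITH_SITEMAP = """\
User-agent: *
Disallow: /tmp/

Sitemap: https://www.example.com/sitemap.xml
"""


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in robots.txt (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(declared_sitemaps(WITH_SITEMAP))
    print(declared_sitemaps("User-agent: *\nDisallow:\n"))
```

An empty result is the signal that the directive is missing and should be added.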

Create a Useful, Information-Rich Site & Write Pages That Clearly and Accurately Describe Your Content

As their guidelines state, Google prefers information-rich sites. This is dictated by industry, so a competition analysis is critical to finding sites that are considered “information-rich.”

This “information-rich” requirement varies from industry to industry, which is why such a competition analysis is required.

The competition analysis should reveal:

What other sites are writing about.

How they are writing about those topics.

How their sites are structured, among other attributes.

With this data, you will be able to create a site that meets these guidelines.

Common Issue

You have a site that is full of thin, short content that isn’t useful.

Let’s be clear here, though – word count is not the be-all, end-all factor for content. It’s about content quality, depth, and breadth.

Back to our site – you discover that it’s full of thin content.

Solution

A comprehensive content strategy will be necessary in order to overcome this site’s content weaknesses.

Think About the Words Users Would Type to Find Your Pages

When performing keyword research, it’s critical to make sure that you figure out how users search for your site. If you don’t know the words that users are searching for, then all of the keyword research in the world is for naught.

This is where effective keyword research comes into play.

When you do effective keyword research, you must consider things like your potential client’s intent when searching for a phrase.

For example, someone earlier in the buying funnel is more likely focused on research. They would not be searching for the same keywords as someone who is at the end of the buying funnel (e.g., they are just about to buy).

In addition, you must also consider your potential client’s mindset – what are they thinking when they are searching for these keywords?

Once you have concluded the keyword research phase of your project, you must perform on-page optimization. This on-page optimization process usually includes making sure that every page on your site mentions the targeted keyword phrase of that page.

You cannot do SEO without effective keyword research and targeting. SEO doesn’t work that way. Otherwise, you are not doing SEO.

Common Issue

You run into a site that has nothing but branded keyword phrases and hasn’t done all that much to differentiate itself in the marketplace.

Through your research, you find that they haven’t updated their blog all that much with a variety of keyword topics, and instead have only focused on branded posts.

Solution

The solution to this is pretty simple – make sure that you use targeted keyword phrases that are of broader topical relevancy to come up with content, rather than branded keywords.

This goes back to the fundamentals of SEO, or SEO 101: include the keywords that your users would type to find those pages, and make sure your site includes those words on its pages.

This is, in fact, part of Google’s general guidelines for helping users understand your pages.

Design Your Site to Have a Clear Conceptual Page Hierarchy

What is a clear conceptual page hierarchy? This means that your site is organized by topical relevance.

You have the main topics of your site organized as main topics, with subtopics organized beneath the main topics. These are called SEO silos. SEO silos are a great way to organize the pages on your site according to topics.

The deeper the clear conceptual page hierarchy, the better. This tells Google that your site is knowledgeable about the topic.

There are two schools of thought on this guideline. One believes that you should never stray from a flat architecture, meaning any page should not be more than three clicks deep from the homepage.

The other school of thought involves siloing: you should create a clear conceptual page hierarchy that dives deep into the breadth and depth of your topic.

Ideally, you must create a website architecture that makes sense for your topic. SEO siloing helps accomplish this by making sure your site is as in-depth about your topic as possible.

SEO siloing also presents a cohesive organization of topical pages and discussions. Because of this, and the fact that SEO siloing has been observed to create great results, it is my recommendation that most sites pursuing topically dense subjects create a silo architecture appropriate to that topic.
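Whichever school of thought you follow, it helps to know how deep your pages actually sit. The sketch below computes click depth from the homepage with a breadth-first search over an internal link map. The link data, the function name, and the three-click threshold used in the example are illustrative assumptions.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Hypothetical internal link map: page -> pages it links to.
    site = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"]}
    depths = click_depths(site)
    too_deep = sorted(page for page, depth in depths.items() if depth > 3)
    print(depths)
    print(too_deep)
```

Pages missing from the result are unreachable from the homepage by links alone, which is worth investigating in its own right.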

Common Issue

You run into a site that has pages strewn about, without much thought to the organization, linking, or other website architecture. These pages are also haphazardly put together, meaning that they don’t have much of an organizational flow.

Solution

You can fix this issue by creating a siloed website architecture that conforms to what your competitors are doing. The idea behind doing this is that this website architecture will help reinforce your topical focus and, in turn, improve your rankings through entity relationships between your pages and topical reinforcement.

This topical reinforcement then creates greater relevance for your keyword phrases.

Ensure All Website Assets Are Fully Crawlable & Indexable

You may be thinking – why shouldn’t all assets be fully crawlable and indexable?

Well, there are some situations where blocking CSS (Cascading Style Sheets) and JS (JavaScript) files is acceptable.

First, if you were blocking them because they had issues playing nice with each other on the server.

Second, if you were blocking them because of some other conflict. Either way, Google does have guidelines for this also.

Google’s guidelines on this topic state:

“To help Google fully understand your site’s contents, allow all site assets that would significantly affect page rendering to be crawled: for example, CSS and JavaScript files that affect the understanding of the pages. The Google indexing system renders a web page as the user would see it, including images, CSS, and JavaScript files. To see which page assets that Googlebot cannot crawl, use the URL Inspection tool, to debug directives in your robots.txt file, use the robots.txt Tester tool.”

This is important. You don’t want to block CSS and JavaScript.

All elements are critical to ensure that Google fully understands the context of your page.

Most site owners block CSS and JavaScript via robots.txt. Sometimes, this is because of conflicts with other site files. Other times, they present more problems than not when they are fully rendered.

If site files present issues when they are rendered, it is time to create a fully revamped website.

Common Issue

You come across a site that has CSS and JavaScript blocked within robots.txt.

Solution

Unblock CSS and JavaScript in robots.txt. And, if they are presenting that much of a conflict (and a mess in general), you want to have as clean of an online presence as possible.
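Here is a hedged Python sketch of how you might list which CSS and JavaScript URLs a robots.txt file blocks for a given crawler. The rules, the asset URLs and the helper name are invented for the example. Note that the standard-library parser matches plain path prefixes only, so wildcard patterns such as `/*.js$` are not evaluated the way Google evaluates them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules and asset URLs, used only for illustration.
ROBOTS_TXT = "User-agent: *\nDisallow: /assets/js/\n"
ASSETS = [
    "https://example.com/assets/css/site.css",
    "https://example.com/assets/js/app.js",
]


def blocked_assets(
    robots_txt: str, asset_urls: list[str], user_agent: str = "Googlebot"
) -> list[str]:
    """Return the asset URLs that user_agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    print(blocked_assets(ROBOTS_TXT, ASSETS))
```

Anything this returns is a candidate for unblocking. For a definitive answer on how Googlebot treats a URL, the URL Inspection tool mentioned in the quote above remains the reference.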

Make Your Site’s Important Content Visible by Default

Google’s guidelines talk about making sure that your site’s most important content is visible by default. This means that you don’t want buttons, tabs, and other navigational elements to be necessary to reveal this content.

Google also explains that they “consider this content less accessible to users, and believe that you should make your most important information visible in the default page view.”

Tabbed content – yes, this falls under content that is less accessible to users.

Why?

Consider this: you have a tabbed block of content on your page. The first tab is the only one that is fully viewable and visible to users until you click on the tab at the top to go to the second. And so on.

Consider Google – they think this kind of content is less accessible.

While this may be a fairly small consideration, you don’t want to do this egregiously – especially on your homepage.

Make sure that all tabbed content is fully visible.

Common Issue

You get a website assigned to you that has tabbed content. What do you do?

Solution

Recommend that the client create a version of the content that is fully visible.

For example, turn tabbed content into paragraphed content going up and down the page.

Google’s Webmaster Guidelines Are Really Guidelines

For SEOs, Google’s Webmaster Guidelines are just that: guidelines. It can be said that they are just “guidelines” and not necessarily rules.

But, watch out – if you violate them egregiously, you could be outright banned from the SERPs. I prefer remaining on Google’s good side. Manual actions are ugly.

Don’t say we didn’t warn you.

Of course, penalties can range from algorithmic devaluations to outright manual actions. It all depends on the severity of the violation of that guideline.

And, pages and folders can be devalued when it comes to Penguin issues. Don’t forget that real-time Penguin is inherently very granular in this regard.

But, it’s important to note – not all guideline violations will result in penalties. Some result in issues with crawling and indexing, which can also impact your ranking. Others result in major manual actions, such as spammy links back to your site.

When a manual action hits, it’s important to remain calm. You have likely brought this on yourself through link spam or another type of spam on your site.

The best you can do now is investigate and work with Google to remove the manual action.

Generally, if you have spent a lot of time getting into trouble, Google expects the same amount of time to get out of trouble before you get back in their good graces.

Other times, the site is so terrible that the only solution is to nuke it and start over.

Armed with this knowledge, you should be able to identify whether certain techniques have caused you to get into some serious trouble.

As an aside, it’s definitely worth following Google’s guidelines from the start.

While results are slower when compared to other, more aggressive methods, we highly recommend this approach. This approach will help you maintain a more stable online presence, and you don’t have to suffer through a manual action or algorithmic devaluation.

The choice is yours.

Featured Image Credit: Paulo Bobita


Photo

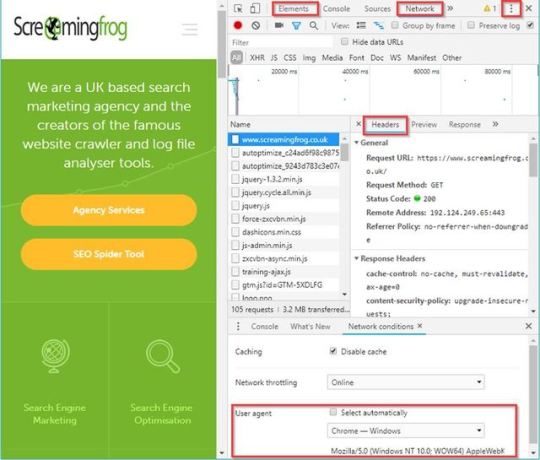

From crawl completion notifications to automated reporting: this post may not have Billy the Kid or Butch Cassidy, instead, here are a few of my most useful tools to combine with the SEO Spider, (just as exciting). We SEOs are extremely lucky—not just because we’re working in such an engaging and collaborative industry, but we have access to a plethora of online resources, conferences and SEO-based tools to lend a hand with almost any task you could think up. My favourite of which is, of course, the SEO Spider—after all, following Minesweeper Outlook, it’s likely the most used program on my work PC. However, a great programme can only be made even more useful when combined with a gang of other fantastic tools to enhance, compliment or adapt the already vast and growing feature set. While it isn’t quite the ragtag group from John Sturges’ 1960 cult classic, I’ve compiled the Magnificent Seven(ish) SEO tools I find useful to use in conjunction with the SEO Spider: Debugging in Chrome Developer Tools Chrome is the definitive king of browsers, and arguably one of the most installed programs on the planet. What’s more, it’s got a full suite of free developer tools built straight in—to load it up, just right-click on any page and hit inspect. Among many aspects, this is particularly handy to confirm or debunk what might be happening in your crawl versus what you see in a browser. For instance, while the Spider does check response headers during a crawl, maybe you just want to dig a bit deeper and view it as a whole? Well, just go to the Network tab, select a request and open the Headers sub-tab for all the juicy details: Perhaps you’ve loaded a crawl that’s only returning one or two results and you think JavaScript might be the issue? 
Well, just hit the three dots (highlighted above) in the top right corner, then click settings > debugger > disable JavaScript and refresh your page to see how it looks: Or maybe you just want to compare your nice browser-rendered HTML to that served back to the Spider? Just open the Spider and enable ‘JavaScript Rendering’ & ‘Store Rendered HTML’ in the configuration options (Configuration > Spider > Rendering/Advanced), then run your crawl. Once complete, you can view the rendered HTML in the bottom ‘View Source’ tab and compare with the rendered HTML in the ‘elements’ tab of Chrome. There are honestly far too many options in the Chrome developer toolset to list here, but it’s certainly worth getting your head around. Page Validation with a Right-Click Okay, I’m cheating a bit here as this isn’t one tool, rather a collection of several, but have you ever tried right-clicking a URL within the Spider? Well, if not, I’d recommend giving it a go—on top of some handy exports like the crawl path report and visualisations, there’s a ton of options to open that URL into several individual analysis & validation apps: Google Cache – See how Google is caching and storing your pages’ HTML. Wayback Machine – Compare URL changes over time. Other Domains on IP – See all domains registered to that IP Address. Open Robots.txt – Look at a site’s Robots. HTML Validation with W3C – Double-check all HTML is valid. PageSpeed Insights – Any areas to improve site speed? Structured Data Tester – Check all on-page structured data. Mobile-Friendly Tester – Are your pages mobile-friendly? Rich Results Tester – Is the page eligible for rich results? AMP Validator – Official AMP project validation test. User Data and Link Metrics via API Access We SEOs can’t get enough data, it’s genuinely all we crave – whether that’s from user testing, keyword tracking or session information, we want it all and we want it now! 
After all, creating the perfect website for bots is one thing, but ultimately the aim of almost every site is to get more users to view and convert on the domain, so we need to view it from as many angles as possible.

Starting with users, there’s practically no better insight into user behaviour than the raw data provided by both Google Search Console (GSC) and Google Analytics (GA), both of which help us make informed, data-driven decisions and recommendations. What’s great about this is you can easily integrate any GA or GSC data straight into your crawl via the API Access menu so it’s front and centre when reviewing any changes to your pages. Just head on over to Configuration > API Access > [your service of choice], connect to your account, configure your settings and you’re good to go.

Another crucial area in SERP rankings is the perceived authority of each page in the eyes of search engines, a major aspect of which is (of course) links, links and more links. Any SEO will know you can’t spend more than 5 minutes at BrightonSEO before someone brings up the subject of links; it’s like the lifeblood of our industry. Whether their importance is dying out or not, there’s no denying that they currently still hold much value within our perceptions of Google’s algorithm.

Well, alongside the previous user data you can also use the API Access menu to connect with some of the biggest tools in the industry such as Moz, Ahrefs or Majestic, to analyse your backlink profile for every URL pulled in a crawl.

For all the gory details on API Access check out the following page (scroll down for other connections): https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/

Understanding Bot Behaviour with the Log File Analyzer

An often-overlooked exercise, nothing gives us quite the insight into how bots are interacting with a site like the server logs themselves.
The trouble is, these files can be messy and hard to analyse on their own, which is where our very own Log File Analyzer (LFA) comes into play (they didn’t force me to add this one in, promise!).

I’ll leave @ScreamingFrog to go into all the gritty details on why this tool is so useful, but my personal favourite aspect is the ‘Import URL data’ tab on the far right. This little gem will effectively match any spreadsheet containing URL information with the bot data on those URLs.

So, you can run a crawl in the Spider while connected to GA, GSC and a backlink app of your choice, pulling the respective data from each URL alongside the original crawl information. Then, export this into a spreadsheet before importing into the LFA to get a report combining metadata, session data, backlink data and bot data all in one comprehensive summary, aka the holy quadrilogy of technical SEO statistics.

While the LFA is a paid tool, there’s a free version if you want to give it a go.

Crawl Reporting in Google Data Studio

One of my favourite reports from the Spider is the simple but useful ‘Crawl Overview’ export (Reports > Crawl Overview), and if you mix this with the scheduling feature, you’re able to create a simple crawl report every day, week, month or year. This allows you to monitor the domain for any drastic changes and alerts you to anything which might be cause for concern between crawls.

However, in its native form it’s not the easiest to compare between dates, which is where Google Sheets & Data Studio can come in to lend a hand. After a bit of setup, you can easily copy over the crawl overview into your master G-Sheet each time your scheduled crawl completes, then Data Studio will automatically update, letting you spend more time analysing changes and less time searching for them.

This will require some fiddling to set up; however, at the end of this section I’ve included links to an example G-Sheet and Data Studio report that you’re welcome to copy.
Essentially, you need a G-Sheet with date entries in one column and unique headings from the crawl overview report (or another) in the remaining columns:

Once that’s sorted, take your crawl overview report and copy out all the data in the ‘Number of URI’ column (column B), being sure to copy from the ‘Total URI Encountered’ until the end of the column.

Open your master G-Sheet and create a new date entry in column A (add this in a format of YYYYMMDD). Then in the adjacent cell, Right-click > ‘Paste special’ > ‘Paste transposed’ (Data Studio prefers this to long-form data):

If done correctly with several entries of data, you should have something like this:

Once the data is in a G-Sheet, uploading this to Data Studio is simple: just create a new report > add data source > connect to G-Sheets > [your master sheet] > [sheet page] and make sure all the heading entries are set as a metric (blue) while the date is set as a dimension (green), like this:

You can then build out a report to display your crawl data in whatever format you like. This can include scorecards and tables for individual time periods, or trend graphs to compare crawl stats over the date range provided (your very own Search Console Coverage report).

Here’s an overview report I quickly put together as an example. You can obviously do something much more comprehensive than this should you wish, or perhaps take this concept and combine it with even more reports and exports from the Spider.
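If the copy and ‘Paste transposed’ step gets tedious, the same reshaping can be sketched in a few lines of Python. This is only a rough sketch and not a Spider feature: it assumes the Crawl Overview has been saved as a two-column CSV (label, count), and the function name overview_to_row plus every sample label other than ‘Total URI Encountered’ are made up for illustration.

```python
import csv
import io
from datetime import date


def overview_to_row(overview_csv, crawl_date, start_label="Total URI Encountered"):
    """Turn a two-column (label, count) export into headings plus one dated row."""
    headings = ["Date"]
    values = [crawl_date.strftime("%Y%m%d")]  # YYYYMMDD, as the master sheet expects
    started = False
    for record in csv.reader(io.StringIO(overview_csv)):
        if len(record) < 2:
            continue  # skip blank lines
        label, count = record[0].strip(), record[1].strip()
        if label == start_label:
            started = True  # copy from this label to the end of the column
        if started and label:
            headings.append(label)
            values.append(count)
    return headings, values


# Hypothetical export contents, for illustration only.
sample = """Crawl Overview,
Total URI Encountered,120
Total Internal URI,100
Total External URI,20
"""

headings, row = overview_to_row(sample, date(2019, 1, 16))
print(headings)
print(row)
```

Each call gives one wide row per crawl, which is the same shape the transposed paste produces, so the row can be appended under the existing headings in the master sheet.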
If you’d like a copy of both my G-Sheet and Data Studio report, feel free to take them from here:

Master Crawl Overview G-Sheet: https://docs.google.com/spreadsheets/d/1FnfN8VxlWrCYuo2gcSj0qJoOSbIfj7bT9ZJgr2pQcs4/edit?usp=sharing

Crawl Overview Data Studio Report: https://datastudio.google.com/open/1Luv7dBnkqyRj11vLEb9lwI8LfAd0b9Bm

Note: if you take a copy, some of the dimension formats may change within Data Studio (breaking the graphs), so it’s worth checking the date dimension is still set to ‘Date (YYYYMMDD)’.

Building Functions & Strings with XPath Helper & Regex Search

The Spider is capable of doing some very cool stuff with the extraction feature, a lot of which is listed in our guide to web scraping and extraction. The trouble with much of this is it will require you to build your own XPath or regex string to lift your intended information.

While simply right-clicking > Copy XPath within the inspect window will usually do enough to scrape, it’s not always going to cut it for some types of data. This is where two Chrome extensions, XPath Helper & Regex Search, come in useful.

Unfortunately, these won’t automatically build any strings or functions, but if you combine them with a cheat sheet and some trial and error you can easily build one out in Chrome before copying into the Spider to bulk across all your pages.

For example, say I wanted to get all the dates and author information of every article on our blog subfolder (https://www.screamingfrog.co.uk/blog/).

If you simply right-clicked on one of the highlighted elements in the inspect window and hit Copy > Copy XPath, you would be given something like:

/html/body/div[4]/div/div[1]/div/div[1]/div/div[1]/p

While this does the trick, it will only pull the single instance copied (‘16 January, 2019 by Ben Fuller’). Instead, we want all the dates and authors from the /blog subfolder.
By looking at what elements the reference is sitting in we can slowly build out an XPath function directly in XPath Helper and see what it highlights in Chrome. For instance, we can see it sits in a class of ‘main-blog--posts_single-inner--text--inner clearfix’, so pop that as a function into XPath Helper:

//div[@class="main-blog--posts_single-inner--text--inner clearfix"]

XPath Helper will then highlight the matching results in Chrome:

Close, but this is also pulling the post titles, so not quite what we’re after. It looks like the date and author names are sitting in a sub <p> tag, so let’s add that into our function:

(//div[@class="main-blog--posts_single-inner--text--inner clearfix"])/p

Bingo! Stick that in the custom extraction feature of the Spider (Configuration > Custom > Extraction), upload your list of pages, and watch the results pour in!

Regex Search works much in the same way: simply start writing your string, hit next and you can visually see what it’s matching as you’re going. Once you’ve got it, whack it in the Spider, upload your URLs then sit back and relax.

Notifications & Auto Mailing Exports with Zapier

Zapier brings together all kinds of web apps, letting them communicate and work with one another when they might not otherwise be able to. It works by having an action in one app set as a trigger and another app set to perform an action as a result.

To make things even better, it works natively with a ton of applications such as G-Suite, Dropbox, Slack, and Trello. Unfortunately, as the Spider is a desktop app, we can’t directly connect it with Zapier. However, with a bit of tinkering, we can still make use of its functionality to provide email notifications or auto mailing reports/exports to yourself and a list of predetermined contacts whenever a scheduled crawl completes.

All you need is to have your machine or server set up with an auto cloud sync directory such as those on ‘Dropbox’, ‘OneDrive’ or ‘Google Backup & Sync’. Inside this directory, create a folder to save all your crawl exports & reports. In this instance, I’m using G-drive, but others should work just as well.

You’ll need to set a scheduled crawl in the Spider (File > Schedule) to export any tabs, bulk exports or reports into a timestamped folder within this auto-synced directory:

Log into or create an account for Zapier and make a new ‘zap’ to email yourself or a list of contacts whenever a new folder is generated within the synced directory you selected in the previous step.
You’ll have to provide Zapier access to both your G-Drive & Gmail for this to work (do so at your own risk). My zap looks something like this:

The above zap will trigger when a new folder is added to /Scheduled Crawls/ in my G-Drive account. It will then send out an email from my Gmail to myself and any other contacts, notifying them and attaching a direct link to the newly added folder and Spider exports.

I’d like to note here that if running a large crawl or directly saving the crawl file to G-drive, you’ll need enough storage to upload (so I’d stick to exports). You’ll also have to wait until the sync is completed from your desktop to the cloud before the zap will trigger, and it checks this action on a cycle of 15 minutes, so it might not be instantaneous.

Alternatively, do the same thing on IFTTT (If This Then That) but set it so a new G-drive file will ping your phone, turn your smart light a hue of lime green or just play this sound at full volume on your smart speaker. We really are living in the future now!

Conclusion

There you have it, the Magnificent Seven(ish) tools to try using with the SEO Spider, combined to form the deadliest gang on the web. Hopefully, you find some of these useful, but I’d love to hear if you have any other suggestions to add to the list.

The post SEO Spider Companion Tools, Aka ‘The Magnificent Seven’ appeared first on Screaming Frog.


SEO Training Courses

What Is SEO?

SEO stands for “search engine optimization.” It is the procedure of gaining traffic from the “free,” “organic,” “editorial” or “natural” search results on search engines. SEO is the working practice of optimizing a web site by improving internal and external aspects in order to increase the traffic the site receives from search engines.

SEO Courses

SEO (search engine optimization) helps raise online traffic while making products and services familiar around the globe. In this era of rapid technological change, many new SEO techniques have been introduced to the corporate world. This has led us to launch SEO courses with an up-to-date curriculum that students can benefit from.

SEO is one of the emerging, job-oriented skills demanded by almost every type of corporate house, which makes students keen to enroll in SEO courses in Nagpur, India. As a web solutions brand, we cover every aspect of SEO training in Nagpur. Our SEO experts and internet marketing analysts prepare students to handle independent SEO projects in the corporate market.

SEO Training Courses

Search Engine Optimization (SEO) training teaches learners how to make a site more visible in search engine results pages (SERPs). SEO training does not just teach you how to make your site visible on search engines; it is about optimizing the site to reach the first page of results.

1. On Page Optimization

2. OFF Page Optimization

SEO On Page Optimization Course

The on-page SEO course teaches students how to plan and implement on-page SEO strategies while targeting the relevant keywords. Students learn how to optimize content and how to make code search engine friendly. Our on-page SEO expert guides students through varied coding techniques as well as robots.txt, sitemap creation, meta tags, keyword proximity, prominence and density within content, and more.

OSKITSOLUTIONS on page optimization course includes:

1. Search Engine Optimization Strategy

2. Website Analysis

3. Competitive Analysis

4. Search Engine Optimization Strategy

5. Competitive Analysis

6. Keyword Research

7. Keyword Finalize

8. Website Structure Optimization

9. Content Optimization

10. URL Mode Rewrite

11. HTML code optimization

12. W3C Validation

13. Meta tag creation & optimization

14. HTML Sitemap creation & optimization

15. Google XML Sitemap Creation & regular optimization

16. Robots.txt creation & optimization

17. Google Analytics Setup & Monitoring

18. On-Page Optimization Report

SEO Off Page Optimization Course

In the off-page SEO course, our SEO experts show students how to promote products and services, that is, how to rank the relevant keywords using varied off-page optimization techniques. The course covers every major aspect of social marketing, including creating accounts for blogs, articles, classifieds, social bookmarking, content sharing and more.

OSKITSOLUTIONS Off page optimization course includes:

1. Link Building (Relevant and Manual)

2. Manual Search Engine Submission

3. Manual Relevant Directory Submission

4. Local Directory Submission

5. Link Exchange (One Way )

6. Article Submission (per article)

7. Blog Creation, updating and promotion

8. Blog Submission

9. Blogs Targeted For Links

10. Forum posting

11. Classified ads Creation & Posting

12. Bookmarking Comments

13. Link wheel

14. Landing Page Optimization

SEO Training:

Learn the art of traffic generation and become a trained SEO professional. As an SEO professional, you will be able to help clients get top spots in search engines like Google for their products and services. Wherever you go as a search engine optimizer, you can proudly say that you are a smart search engine optimizer from the SEO world of OSKITSOLUTIONS.

WHY SEO Training in Nagpur@ OSKITSOLUTIONS?

SEO Training for Career Growth:

These days most companies are appointing more and more SEO experts and digital marketing experts. Companies want individuals with good knowledge and experience in this field, so there is high demand and plenty of career and job opportunities in this domain. As this is a booming industry, choosing a career path in this direction is a sound option. SEO experts with 2-3 years of experience are paid more than people in similar job profiles. Due to the boom in internet marketing, most companies are investing heavily to improve their online presence.

SEO Training for Business Development:

Websites that appear in the top positions on a search engine results page (SERP) usually gain the most clicks and impressions, which results in a significant increase in website traffic. SEO targets users who look for products and services online. Once a website ranks on a search engine, thousands of free hits can follow, which makes SEO a very cost-effective marketing strategy. It is a good marketing investment for a business owner: it leads to more traffic, more leads and thereby more sales. Most companies are growing their business by investing significantly in it. If you want to streamline your business operations and generate high revenue without any other form of push advertising, an SEO marketing strategy is a strong choice. A company's brand is its strongest asset, so building the brand is considered the first concern in any business.

LEARN & BENEFITS OF SEO CLASSES IN NAGPUR @ OSKITSOLUTIONS

OSKITSOLUTIONS specializes in search engine optimization with its PCSEMO (Professional Certified Search Engine Marketing Optimizer) course. OSKITSOLUTIONS offers online marketing training courses to those focused on gaining professional knowledge of internet marketing. We offer training courses for SEO (search engine optimization), SEM (search engine marketing), SMO (social media optimization), SMM (social media marketing, including Facebook and Twitter marketing) and internet marketing (PPC, AdWords).

Our certified courses are designed for people who have basic knowledge of computers and websites and want to learn the SEO process to become SEO and PPC experts. This course covers a large range of topics that help in building your career in the field of SEO/SEM/PPC.

The course develops a clear understanding of how search engines work and builds the SEO skills that support a bright future in the field of digital marketing.

OSK IT SOLUTIONS is one of the best SEO companies in Nagpur, specializing in Search Engine Optimization (SEO). OSK IT SOLUTIONS is a quality- and result-oriented SEO company in Nagpur, helping our clients to properly project their brand and generate targeted traffic and revenue.


Affordable Best SEO Services Company in Jaipur

Search Engine Optimization

Search Engine Optimization (SEO) is the process of getting your web page or website into search engine results pages (SERPs) using a range of techniques and tools. It is the process of improving the visibility of a website or web page in natural, organic results.

Cross Graphic Ideas provides up-to-date, advanced SEO informed by Google algorithm updates (EMD, Hummingbird, Penguin, Panda, etc.). We are experts and train experts, with senior marketing professionals on the team. The sole objective of SEO is to grow the business by achieving top results in SERPs.

SEO Services Company in Jaipur

At Cross Graphic Ideas, we offer an extensive variety of affordable SEO services in Jaipur, focusing on content quality (content is king), link building techniques, on-page optimization and link popularity. We follow individual strategies and new techniques suited to your website, keeping pace with updates such as Panda, Penguin, Hummingbird and EMD.

Our SEO Basics are:-

Search Engines –Google, Bing

Search Engines V/s Directories

How Search Engine Works

Page rank

Website Architecture

Website Designing Basics

Domain and Hosting

Keyword Research

Keyword Research and Analysis

Competitor Analysis

Average Search Analysis

Targeted Keywords

Off-Page Optimization

Social Bookmarking

Directory Submission

Blogging

Forum Posting

Search Engine Submission

Link Baiting

Link Exchange & Cross Linking

Image and Content Sharing

Content Creation and Posting

Video Promotions

Article Submission

Business Reviews

Local Listing

Classified Submission

Social Shopping Network

Questions and Answers

Document Sharing

CSS, W3C & RSS Submission

Profile Linking

Guest Posting

DMOZ Listing

Favicons

Blog Commenting

Info-graphics

PPT Submission

Press Releases

On-Page Optimization

Content Optimization

Meta Title Tag and Meta Tags Optimization

Anchor Texts

Header Tags (H1, H2, H3, H4, H5, H6)

Header and Footer

Robots.txt Validation

XML Sitemap

URL Canonicalization tag

Keyword Density

Google Webmaster Tools

Google Analytics and Tracking

Google Ad-words Keyword Planner Tool

Social Media Optimization

White Hat SEO

Black Hat SEO – not used, as it can get a website penalized by Google

Grey Hat SEO

Latest SEO Updates and Tools

Google Panda

Google Penguin

Google Hummingbird

Google EMD

Google Mobile Friendly Update

Screaming Frog SEO Spider Tool

Woo-Rank Tool

Ahrefs Tool

Google Keyword Planner Tool

Google Analytics Tool

Google Mobile Friendly Test

Google Page-Speed Insights



Top 20 Best Free SEO Tools

There are a lot of tools available on the internet for website SEO, but it is difficult to find out which are the best. That's why we have researched this list of the 20 best free SEO tools for you. These free tools provide auditing data comparable to paid audit tools. Best Free SEO Tools for 2019.

Apart from writing quality content, a blogger needs SEO optimization, such as using keywords in the article, creating quality backlinks for the site, fixing broken links, disavowing bad links, and so on. These SEO audit tools will help you with the search engine optimization of your site, which can boost traffic by up to 200% if you use these tools properly.

Top 20 Free SEO Audit Tools for Bloggers 2019

All these tools are best for 2019, and you can use them to bring your site to the top in Google and stay ahead of your competitors.

Google Search Console - This is the best free SEO tool for bloggers. It helps get your site crawled and indexed in Google search. You can submit your sites and their sitemaps, and see indexed pages, 404 errors and the search queries report.

Google Analytics - You may already use it, but it is useful as part of an SEO toolkit and not just for checking performance reports. If you understand it, it can help a lot with your SEO. I have already covered this in the article "Google Analytics Performance Report Check Karne Ki 7 Tips" (7 tips for checking the Google Analytics performance report).

Google PageSpeed Insights - The Google PageSpeed Insights tool is the best choice for checking your website's speed score. Open this tool, enter the URL of your website and check the analysis report. The total score of your site should be 85+; if not, follow the tool's guidelines to optimize your site and improve the score. It lists every issue along with a solution.

Mobile Friendly Test - Google has added mobile-first indexing to Search Console, so it is now very important for your site to be mobile friendly. The Mobile Friendly Test tool lets you check whether your site opens properly on mobile devices.

Mobile Speed Test - The "Test your mobile website speed and performance" tool checks the mobile performance of your website and tells you how fast it loads on a mobile phone. It rates the result as Excellent, Good, Fair or Poor, and estimates how many visitors you lose at that speed.

Structured Data Testing Tool - The Structured Data Testing Tool helps find structured data issues on your site. It validates markup against Google's structured data guidelines. This is the best tool to detect schema markup errors on your site; you can test by site URL or by pasting the code directly.

Google Keyword Planner - This tool lets bloggers research keywords for their blogs. Although there are many SEO tools like Semrush and Ahrefs for keyword research, they are paid, while Google Keyword Planner is free.

Xenu Link Sleuth - Xenu Link Sleuth is the best free site crawler for finding broken links. It lets you find broken links on your website in a few minutes.

Mobile Web Page Test - If you are unsure about your website design, you can check your site's pages on mobile devices of all sizes with the Mobile Web Page Test tool. It supports all types of mobiles, including Apple iOS, Android and Windows devices. You can test 2 pages side by side and scroll through the entire design of the page.

Mobile SERPs Test - This is also a MobileMoxie tool, but with it you can check keyword search rankings on mobile. It shows a preview for the selected mobile device, which makes the results clear and easy to understand.

Free Blacklist Checker - This UltraTools RBL database lookup tool tells you whether your site has been added to a blacklist or spam list. If your site has been blacklisted, it shows the names of the lists and their contact information so that you can contact them.

Free HTTPS Validator - It is also necessary to check the SSL certificate used on your site. The SSL Labs security tool tests your site's SSL and provides complete information about how secure it is. It also helps you learn what else you need to do to improve your site's HTTPS security.

Siteliner Duplicate Content Checker - The free Siteliner duplicate content checker was created by Copyscape. It scans your site's header, layout, footer content, posts and pages and counts duplicate content. You can find out the percentage of duplicate content on your site; on average it should not be more than 10%.

W3C Validator - The W3C validator checks your site's HTML and states whether it is valid or not. If you use a self-designed theme, this is for you. With it you can easily find mistakes made in the HTML code of your site design and fix them.

Web Page Speed Test - This tool tests website performance. It lets you test real-time speed in different browsers from multiple locations worldwide. You can also check performance reports by cached version or repeat view, and exclude or capture video and images, from its advanced test features.

Website Speed Optimize Tool - GTmetrix is a speed and performance audit tool that provides an easy guide to website speed analysis. It tells you why your site's speed score is low. It generates various benchmark scores and explains in detail the reasons for a low score and what needs to be optimized.

XML Sitemap Generator - A sitemap helps Google index your website properly. With this tool, you can generate a sitemap for your site in both XML and HTML formats.

Robots.txt Generator - A robots.txt file tells Google and other crawlers which parts of your site they may crawl and which they may not. A robots.txt generator is a handy tool for this; with it you can easily generate your own robots.txt for your site.

SEO Analyze Tool - This is my favorite SEO tool to check your site's SEO score. SEO Site Checkup reports the SEO score with very simple, easy-to-understand guidelines. It tells you why your site's SEO score is low and what search engine optimization you need to do to fix it.

Screaming Frog SEO Spider - Screaming Frog is a desktop program that you can install on your PC. It mainly evaluates on-site SEO factors, including server errors, broken links, duplicate pages and blocked URLs. In addition, it crawls your site to analyze titles and meta descriptions for length and relevancy.

Conclusion

These 20 free SEO tools are the most important SEO tools that will help you with site optimization. Once you have used them to take your blog forward, and if you have a budget, you can also use premium SEO tools like Semrush.



A Technical SEO Checklist: Fundamentals for 2018

Keeping up with Google's algorithms is a full-time job. In the past year it's cracked down on ad-heavy content (Fred), shaken up local search results (Possum), and completely changed how websites are indexed (mobile-first indexing).

If you forgot to tend to your technical SEO while you were busy surviving Google algorithm updates, I could hardly blame you. Fortunately, there's no time like the present to double-check your site health and conduct an SEO audit.

This checklist will run you through all the technical SEO fundamentals you need in place in the year ahead.

1. Install essential tools

Your ability to improve your SEO hinges on your tools' ability to find and fix technical problems. Here are the absolute essentials you'll need:

Those tools are the absolute bare minimum for improving your technical SEO.

You won't need anything else to follow along in this article, but if you feel you've reached your limit with Google's free tools and you want to step up your SEO game, I highly recommend you consider tools that will let you dig deeper into your analytics. There are a ton of options, so you're sure to find something that suits your needs.

Consider your needs and your budget, and then look for software that lets you...

Monitor your link health, find and fix broken links, and discover new link-building opportunities.

Correct more errors, such as 404/500 error pages, faulty redirects, W3C validation errors, etc.

Perform deeper keyword analysis and optimize for all rankings, including local and geo-specific.

Discover content errors such as thin content, duplicate content, canonicalization errors, etc.

Spy on your competitors and compare backlinks, anchor text, etc.

2. Improve indexing and crawlability

One of the best ways to find out whether your site has too many duplicate URLs, noncanonical URLs, and URLs that contain a noindex metatag is to check your index status.

To do this, enter site:yourdomain.com into your target search engine, use your favorite SEO crawling software, or log in to Google Search Console and click Google Index > Index Status to see your report.

Ideally, you want your total indexed pages as close to your total number of pages as possible.

Note that while manually reviewing your robots.txt will help you discover some disallowed pages, you're liable to miss many others. I recommend a more dedicated tool that will help you find all blocked pages, including those blocked by robots.txt instructions, noindex metatags, and X-Robots-Tag headers.
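To give a feel for what such a check involves, here is a minimal sketch using Python's standard urllib.robotparser module. It is not a replacement for a dedicated crawler: the helper names blocked_urls and header_blocks_indexing, the example.com URLs and the sample rules are all assumptions for illustration, and noindex metatags in the page HTML are not covered.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network call
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


def header_blocks_indexing(headers):
    """Return True if an X-Robots-Tag response header contains 'noindex'."""
    return "noindex" in headers.get("X-Robots-Tag", "").lower()


# Hypothetical robots.txt rules and URLs, for illustration only.
robots = """User-agent: *
Disallow: /private/
"""
urls = [
    "https://example.com/",
    "https://example.com/private/report.html",
]

blocked = blocked_urls(robots, urls)
print(blocked)
print(header_blocks_indexing({"X-Robots-Tag": "noindex, nofollow"}))
```

Running the same two checks over every URL from a crawl gives a rough list of pages that are kept out of the index by robots.txt rules or response headers.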

3. Improve your 'crawl budget'

We call the number of pages on your website that search engine spiders crawl in a given period of time your "crawl budget." You probably won't have to worry about crawl budget (unless your site is gigantic), but working to improve your crawl budget almost always translates into improving your technical SEO.

To view your approximate crawl budget, log in to Google Search Console and click Crawl > Crawl Stats. Unfortunately, finding a page-by-page breakdown of your crawl stats is a little harder and will require a separate tool.
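One way to approximate a page-by-page breakdown yourself is to count search engine bot requests per URL in your server's access logs. The sketch below assumes logs in the common "combined" format and matches bots by a user-agent substring only; the function name bot_hits_per_path and the sample log lines are made up for illustration, and user agents can be spoofed, so treat the counts as indicative.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent fields
# of a combined-format access log line (an assumed log layout).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def bot_hits_per_path(lines, bot_token="Googlebot"):
    """Count requests per path whose user agent contains bot_token."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and bot_token.lower() in match.group("agent").lower():
            hits[match.group("path")] += 1
    return hits


# Hypothetical log lines, for illustration only.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [16/Jan/2019:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [16/Jan/2019:10:00:05 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    '203.0.113.9 - - [16/Jan/2019:10:01:00 +0000] "GET /contact/ HTTP/1.1" 200 900 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [16/Jan/2019:10:02:00 +0000] "GET /about/ HTTP/1.1" 200 700 "-" "{GOOGLEBOT}"',
]

hits = bot_hits_per_path(sample_log)
for path, count in hits.most_common():
    print(path, count)
```

Pages that never appear in the counts over a long enough period are the ones spiders are not reaching, which is a useful starting point for the internal link audit described below.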

My team recently tested whether it's possible to boost your crawl budget. What we found is a strong correlation between the number of spider hits and external links, which suggests that growing your link profile will also improve your crawl budget.

Building links organically takes time, however. If you want to improve your crawl budget right now while you begin grooming your link profile, I suggest auditing your internal links, fixing broken links, and limiting your use of uncrawlable media-rich files.

Revisiting your URL structure will also improve your crawl budget. Ensure that all of your URLs are as succinct as possible, include 1-2 focused keywords, and easily identify the topic. For example, if this article's URL were "marketingprofs.com/410571591," we might change it to "marketingprofs.com/2018-technical-seo-checklist."
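As a rough illustration of turning a page title into a succinct, keyword-focused URL, here is a small Python sketch. The stop-word list and the six-word cap are assumptions for the example, not rules from any search engine.

```python
import re

# Hypothetical list of filler words to leave out of the URL.
STOP_WORDS = {"a", "an", "the", "your", "of", "and", "to"}

def slugify(title, max_words=6):
    """Lowercase the title, drop punctuation and filler words, join with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    keywords = [word for word in words if word not in STOP_WORDS]
    return "-".join(keywords[:max_words])
```

With these assumptions, slugify("Your 2018 Technical SEO Checklist") produces "2018-technical-seo-checklist", matching the example URL above.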

(For more advice, check out this guide to improving your crawl budget.)

4. Clean up your sitemap

You can also boost your crawlability by improving your sitemap navigation. You can check your sitemap for errors by clicking Crawl > Sitemaps in your Google Search Console.

For the sake of technical SEO, you'll want to make sure that your XML sitemap is...

Uncluttered: Remove all noncanonical URLs, redirects, 400-level pages, and blocked pages from your sitemap.
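Here is a minimal Python sketch of that cleanup step, assuming you already have crawl data for each page. The record keys (url, status, canonical, blocked) are hypothetical; adapt them to whatever your crawler exports.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from crawl records, keeping only clean URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        keep = (
            page["status"] == 200                  # drops redirects and 400-level pages
            and page["canonical"] == page["url"]   # drops noncanonical URLs
            and not page["blocked"]                # drops pages blocked from crawling
        )
        if keep:
            url_element = ET.SubElement(urlset, "url")
            ET.SubElement(url_element, "loc").text = page["url"]
    return ET.tostring(urlset, encoding="unicode")
```

The returned string contains only the urlset element; you would still need to add the XML declaration and write it to sitemap.xml.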

While you're optimizing your XML sitemap for search engines, don't forget to update your HTML sitemaps, too. Doing so will help improve user experience for your site visitors.

5. Optimize metadata

Review your HTML code and improve your meta descriptions and title tags wherever they're missing. Here are some general guidelines you can follow:

Title Tags

The length should be 70-71 characters for desktop, 78 characters for mobile.

Accurately reflect the content on your page.

Lead with your most important keywords, but don't keyword-stuff your title tags.

Insert your brand/company name at the end of your title tags.

Don't duplicate title tags across multiple pages.

Make it different from your H1 headline.

Meta Descriptions

The length should be 200 characters for desktop, 170-172 characters for mobile.

They should be a brief, readable, and unique description of a page's topic.

Naturally insert 1-2 keywords consistent with title tag keywords.

Headline Tags

Tag your main headline with an H1 tag (only one H1 tag per page!).

Use H2 tags for sub-headers or different topics on the same page.

If you nest sub-headers within your sub-headers (for lists, source links, etc.), use H3–H6 tags, in descending order.
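The guidelines above can be turned into a quick automated check. The Python sketch below uses the standard library's HTML parser and the desktop length limits quoted above as defaults; the class and function names are hypothetical, and it checks only length, presence, and the H1 count, not quality or duplication across pages.

```python
from html.parser import HTMLParser

class MetaAudit(HTMLParser):
    """Collects the title text, meta description, and H1 count of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit(html, max_title=71, max_description=200):
    """Return a list of metadata issues found in `html`."""
    parser = MetaAudit()
    parser.feed(html)
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > max_title:
        issues.append("title too long")
    if parser.description is None:
        issues.append("missing meta description")
    elif len(parser.description) > max_description:
        issues.append("meta description too long")
    if parser.h1_count != 1:
        issues.append(f"expected one h1, found {parser.h1_count}")
    return issues
```

An empty list means the page passed these basic checks; pass the mobile limits as max_title and max_description to audit against those instead.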

6. Fix broken links and redirects

After you've performed your website audit, fixing any redirect chains and broken links is among the best bits of technical SEO you can perform. Redirects and broken links eat up valuable crawl budget, slow down your page, result in a poorer user experience, and may ultimately devalue your page. It's in your best interest to fix them ASAP.

Broken Links

Broken internal links are easy enough to fix: Just find the offending page and turn the broken link into a link to an existing page. Try to keep your site structure as shallow as possible so that no page is more than three clicks away from your homepage.
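To check the three-click guideline, you can compute each page's click depth with a breadth-first search over your internal links. This Python sketch assumes you have a mapping of each page to the pages it links to (for example, exported from a crawler); the function names are hypothetical.

```python
from collections import deque

def click_depths(links, homepage="/"):
    """Breadth-first search from the homepage; returns page -> clicks needed."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in sorted(links.get(page, ())):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, homepage="/", max_clicks=3):
    """Pages that take more than `max_clicks` clicks to reach."""
    depths = click_depths(links, homepage)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)
```

Pages that never appear in the result of click_depths cannot be reached from the homepage at all.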

To fix broken external links pointing to your site, you'll have to contact the linking site's webmaster and ask them to fix or remove the link. If that's not possible, you may have to set up a 301 redirect to an active page or disavow the dead link (especially if it's from a disreputable source).

Redirect Chains

Fix chains of three or more redirects first, and do what you can to eliminate redirects altogether. Having many redirects in a row might result in search engine spiders' dropping off before they reach the end of the chain, which might result in unindexed pages.
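As a sketch of how you might find those chains, the Python below follows a source-to-target redirect map (an assumed input, for example built from your crawler's export) and reports chains of three or more redirects. The function names and the hop limit are hypothetical.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow the `redirects` mapping from `start`; returns the URLs visited."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:   # redirect loop: stop following
            break
    return chain

def long_chains(redirects, min_redirects=3):
    """Chains with at least `min_redirects` hops, starting from entry URLs."""
    # Entry URLs are sources that no other redirect points to.
    starts = set(redirects) - set(redirects.values())
    chains = [redirect_chain(start, redirects) for start in sorted(starts)]
    return [chain for chain in chains if len(chain) - 1 >= min_redirects]
```

The usual fix is to point the first URL in each reported chain directly at the last one. Note that a pure redirect loop has no entry URL, so this sketch will not report it.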

Orphan Pages

You may also discover orphan pages during your SEO audit. These are pages that aren't linked to from any other page of your website. Add links to ensure that visitors and search engines can find that page.
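Given the same kind of internal link map (each known page mapped to the pages it links to, an assumed input), orphan pages are simply the pages no other page links to. A minimal Python sketch, with a hypothetical function name:

```python
def find_orphans(links, homepage="/"):
    """Pages in `links` that no other page links to (homepage excluded)."""
    linked_to = set()
    for source, targets in links.items():
        # Ignore self-links: a page linking to itself is still an orphan.
        linked_to.update(target for target in targets if target != source)
    return sorted(page for page in links if page not in linked_to and page != homepage)
```

For this to work, the keys of links need to include every page you know about (from your sitemap or CMS, say), not only the pages a crawler reached by following links.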

7. Use rich snippets and structured data

Schema markup gives users a snapshot of your content in the search results, so they can see thumbnail images, star ratings, and additional product details before clicking on your link.

Rich snippets like this help build consumer trust and often encourage clickthroughs.

Go to Schema.org to learn how to set up structured data for your pages, then use Google's Structured Data Testing Tool to review your snippets.
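For a sense of what structured data looks like, here is a Python sketch that generates a JSON-LD snippet for a product with a star rating, using the Schema.org Product and AggregateRating types. The function name and the sample values are hypothetical, and whether a rich snippet actually appears is up to the search engine.

```python
import json

def product_jsonld(name, image_url, rating, review_count):
    """Return a <script> tag containing Schema.org Product markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": image_url,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data) + "</script>"
```

You would place the returned tag in the page's HTML and then run the page through a testing tool to confirm the markup is read as intended. This sketch does not escape values containing "</script>", so only feed it trusted text.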

8. Optimize site speed for mobile

A recent Google study found that visitors are about three times more likely to bounce when a page's load time grows from 1 to 5 seconds than when it grows from 1 to 3 seconds.

Site speed has always been one of Google's most important ranking signals, and it's one of the most important technical SEO elements related to your conversions as well.

Unfortunately, slow load times are common, especially on mobile sites. Google's study noted that "the majority of mobile websites are slow and bloated with too many elements," and 70% of pages took nearly seven seconds to load their above-the-fold content.

Here's what you can do to improve your site speed and mobile performance:

Optimize your images.

Minify JavaScript and CSS files.

Move files such as images, CSS, and JavaScript to a CDN (content delivery network).
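To show what minification actually does, here is a deliberately naive CSS minifier in Python. It is a sketch for illustration only: it does not understand string literals or every corner of CSS syntax, so in practice you would rely on an established build tool.

```python
import re

def minify_css(css):
    """Strip comments and unnecessary whitespace from a CSS string."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)            # trim around punctuation
    css = re.sub(r":\s+", ":", css)                        # trim after colons
    return css.strip()
```

Comparing len(css) before and after gives a quick estimate of the bytes saved per file.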

If you're looking for technical SEO improvements to make on your pages, this is a good place to start. With Google's recent switch to mobile-first indexing, your site ranking will be largely determined by your mobile site's performance.

9. Look to the future (voice search, RankBrain, and more)

Checklists like this are great if you've fallen behind on your technical SEO, but they don't often help you prepare for upcoming trends. So do yourself a favor and brush up on other innovations you can use to solve customer pain points, such as...

Voice search: Local businesses are increasingly discovered via voice search, through queries such as "I want to buy _____" or "What are _____ businesses near me?" Optimize for voice search to help these customers find you.

RankBrain/AI: RankBrain allows Google to understand what would be the best result for the user's query, based on historical data. The algorithm is focused on quality for the end-user, so optimize the experience for the audience that you want coming to your website.

Accelerated Mobile Pages (AMP), mobile apps, and progressive Web apps (PWA): As mobile usage increases and Google shifts toward mobile-first indexing, developers are working hard to make the mobile Web as efficient and useful as possible.

* * *

Studies show that after performing a major site audit, 39% of SEOs tackle technical SEO first. Improving your technical SEO is great, because it gives you a bedrock upon which to build everything else, but don't forget about other SEO elements in the process.

At the end of the day, you still need great content, you still need to build citations in prominent directories, and you still need to build up a strong social media presence.

Make sure every part of your website is working together to establish you as an authority in your niche and improve your Web presence.


Source

https://www.marketingprofs.com/articles/2017/33236/your-2018-technical-seo-checklist


Industrial SEO Training with live project @ Royals Webtech

Royals Webtech, based in Nagpur, India, is an SEO company that offers quality Search Engine Optimization (SEO) training in Nagpur, SEO courses, and Internet marketing training solutions. Call: 8788447944

Course Duration: 3 months

SEO Training Course Syllabus

• ON PAGE OPTIMIZATION • OFF PAGE OPTIMIZATION

ON PAGE OPTIMIZATION

• Search Engine Optimization Strategy
• Website Analysis
• Competitive Analysis
• Keyword Research
• Keyword Finalize
• Website Structure Optimization
• Content Optimization
• URL Mode Rewrite
• HTML code optimization
• W3C Validation
• Meta tag creation & optimization
• HTML Sitemap creation & optimization
• Google XML Sitemap Creation & regular optimization
• Robots.txt creation & optimization
• Google Analytics Setup & Monitoring
• On-Page Optimization Report

OFF PAGE OPTIMIZATION

• Link Building (Relevant and Manual)
• Manual Search Engine Submission
• Manual Relevant Directory Submission
• Local / Niche Directory Submission
• Link Exchange (One Way)
• Article Submission (per article)
• Blog Creation, updating and promotion
• Blog Submission
• Blogs Targeted For Links
• Forum posting
• Classified ads Creation & Posting
• RSS Submission
• Bookmarking Comments
• Link wheel
• Landing Page Optimization

For corporate SEO training, the course delivery time can be customized to suit your availability. Please contact Royals Webtech at +91-9970619433. The program fee for this course is INR 7,000 plus service tax per candidate.


Turbo Website Reviewer - In-depth SEO Analysis Tool (Miscellaneous)

Turbo Website Reviewer helps you identify your SEO mistakes and optimize your web page content for a better search engine ranking. It also offers side-by-side SEO comparisons with your competitors. The analysis report can also be downloaded as a PDF file for offline use.

In-depth Analysis Report:

Meta Title

Meta Description

Meta Keywords

Headings

Google Preview

Missing Image Alt Attribute

Keywords Cloud

Keyword Consistency

Text/HTML Ratio

GZIP Compression Test

WWW / NON-WWW Resolve

IP Canonicalization

XML Sitemap

Robots.txt

URL Rewrite

Underscores in the URLs

Embedded Objects

Iframe Check

Domain Registration

WHOIS Data

Indexed Pages Count (Google)

Backlinks Counter

URL Count

Favicon Test

Custom 404 Page Test

Page Size

Website Load Time

PageSpeed Insights (Desktop)

Language Check

Domain Availability

Typo Availability

Email Privacy

Safe Browsing

Mobile Friendliness

Mobile Preview Screenshot

Mobile Compatibility

PageSpeed Insights (Mobile)

Server IP

Server Location

Hosting Service Provider

Speed Tips

Analytics

W3C Validity

Doc Type

Encoding

Facebook Likes Count

PlusOne Count

StumbleUpon Count

LinkedIn Count

Estimated Worth

Alexa Global Rank

Visitors Localization

In-Page Links

Broken Links

Main Features:

- Branded PDF Reports (PDF Report Demo)
- User Management System
- OAuth Login Support (Google, Facebook and Twitter)
- Multilingual Support
- Multilingual SEO Friendly URL Support
- Responsive design
- Powerful admin control panel
- Inbuilt Analytics (track your visitor traffic, source of traffic, etc.)
- One-Click Ads integration
- Easy Maintenance Mode
- Captcha Protection (Google reCAPTCHA included)
- Google Analytics support
- Inbuilt Sitemap Generator
- Advanced Mailer for account activation, password reset, etc.
- Supports both SMTP and native PHP mail
- Contact page for visitors to contact you easily
- Create unlimited custom pages
- Supports Theme Customization / Custom Coded Themes
- Add-ons Support
- Shortcode System
- Adblock Detection
- Application Level DDoS Detection / Protection
- Easy Installer Panel

Demo

Front End Demo: http://ift.tt/2rwTCVT

Admin Panel Demo: http://ift.tt/2r1CDHT

(In the admin panel, some features are disabled for security reasons.)

Multilingual SEO Friendly URL http://ift.tt/2rww3g0 http://ift.tt/2r1tcIo http://ift.tt/2rwPmG7 http://ift.tt/2r1SO81

Requirements

- PHP 5.4.0 or above
- PDO and MySQLi extensions
- GD extension
- Mcrypt extension
- Rewrite module
- WHOIS port (TCP 43) must be allowed
- "allow_url_fopen" must be enabled
- SMTP mail server (optional)

Free Screenshot API

Website Preview uses the ProThemes Screenshot API. You don't need to spend money on generating website screenshots. We provide a free website screenshot service with a 99% uptime guarantee, without any watermark of a logo or brand name.