#service mesh and kubernetes

How to Test Service APIs

When you're developing applications, especially when doing so with microservices architecture, API testing is paramount. APIs are an integral part of modern software applications. They provide incredible value, making devices "smart" and ensuring connectivity.

No matter the purpose of an app, it needs reliable APIs to function properly. Service API testing is a process that analyzes multiple endpoints to identify bugs or inconsistencies in the expected behavior. Whether the API connects to databases or web services, issues can render your entire app useless.

Testing is integral to the development process, ensuring all data access goes smoothly. But how do you test service APIs?

Taking Advantage of Kubernetes Local Development

One of the best ways to test service APIs is to use a staging Kubernetes cluster. Local development allows teams to work in isolation in special lightweight environments. These environments mimic real-world operating conditions. However, they're separate from the live application.

Using local testing environments is beneficial for many reasons. One of the biggest is that you can perform all the testing you need before merging, ensuring that your application can continue running smoothly for users. Adding new features and merging code is always a daunting process because there's the risk that issues with the code you add could bring a live application to a screeching halt.

Errors and bugs can have a rippling effect, creating service disruptions that negatively impact the app's performance and the brand's overall reputation.

With Kubernetes local development, your team can work on new features and code changes without affecting what's already available to users. You can create a brand-new testing environment, making it easy to highlight issues that need addressing before the merge. The result is more confident updates and fewer application-crashing problems.

This approach is well suited to testing service APIs. In those lightweight simulated environments, you can perform functionality testing to ensure that the API does what it should, reliability testing to see if it performs consistently, load testing to check that it can handle a substantial number of calls, security testing to confirm that security requirements are met, and more.
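As a rough illustration of the functionality and load checks described above, here is a minimal Python sketch. It is not tied to any particular cluster: the stub server, the `/health` endpoint, and the helper names are all assumptions made up for this example, and in practice the base URL would point at a service running in your test environment.

```python
import json
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class StubHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the service API under test."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_response(404)
            self.send_header("Content-Length", "0")
            self.end_headers()

    def log_message(self, *args):
        pass  # keep test output quiet


def check_health(base_url):
    """Functional check: return the status code and decoded JSON payload."""
    with urllib.request.urlopen(base_url + "/health", timeout=5) as resp:
        return resp.status, json.loads(resp.read())


def load_test(base_url, calls=50, workers=10):
    """Small load check: fire concurrent calls, return (successes, seconds)."""
    def one_call(_):
        status, _payload = check_health(base_url)
        return status == 200

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(one_call, range(calls)))
    return sum(results), time.perf_counter() - started


def run_checks():
    """Start the stub on a free local port, run both checks, then stop it."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), StubHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    try:
        status, payload = check_health(base_url)
        successes, elapsed = load_test(base_url)
    finally:
        server.shutdown()
        server.server_close()
    return {"status": status, "payload": payload,
            "successes": successes, "elapsed": elapsed}


if __name__ == "__main__":
    print(run_checks())
```

The same two helpers could be pointed at a real endpoint by swapping the base URL. Reliability and security testing would need their own checks on top of this.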

#kubernetes local development#opentelemetry and kubernetes#service mesh and kubernetes#what are dora metrics

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As artificial intelligence and machine learning continue to drive innovation across industries, the need for scalable, enterprise-ready platforms for building and deploying models is greater than ever. Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) rises to this challenge by providing a fully integrated, Kubernetes-based environment for end-to-end AI/ML workflows.

In this blog, we’ll explore the essentials of Red Hat’s AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – and how it empowers data scientists and ML engineers to accelerate the path from model development to production.

🎯 What is AI268?

AI268 is a hands-on training course designed by Red Hat to help professionals learn how to use OpenShift AI (available as a self-managed product or as a managed cloud service) to:

Build machine learning models in Jupyter notebooks.

Train and fine-tune models using GPU/CPU resources.

Collaborate with teams in a secure and scalable environment.

Deploy models as RESTful APIs or inference endpoints using OpenShift tools.

Automate workflows using Pipelines and GitOps practices.

This course is ideal for:

Data Scientists

ML Engineers

DevOps/Platform Engineers supporting AI/ML workloads

🚀 Key Capabilities of Red Hat OpenShift AI

Here’s what makes OpenShift AI a game-changer for enterprise ML:

1. Jupyter-as-a-Service

Spin up customized Jupyter notebook environments with pre-integrated libraries like TensorFlow, PyTorch, Scikit-learn, and more. Users can develop, experiment, and iterate on models—all in a cloud-native environment.

2. Model Training at Scale

Access to elastic compute resources including GPUs and CPUs ensures seamless training and hyperparameter tuning. OpenShift AI integrates with distributed training frameworks and supports large-scale jobs.

3. MLOps Integration

Leverage Red Hat OpenShift Pipelines (Tekton) and OpenShift GitOps (Argo CD) to bring CI/CD principles to your ML workflows, ensuring model versioning, automated testing, and deployment consistency.

4. Secure Collaboration

Enable data science teams to collaborate across workspaces, with Role-Based Access Control (RBAC), quotas, and isolated environments ensuring governance and security.

5. Flexible Deployment Options

Deploy trained models as containers, REST endpoints, or even serverless workloads using OpenShift Service Mesh, Knative, and Red Hat OpenShift Serverless.

🛠️ Course Highlights

The AI268 course typically covers:

Setting up and accessing OpenShift AI

Managing projects, notebooks, and data connections

Using Git with Jupyter for version control

Building and deploying models using Seldon or KServe (formerly KFServing)

Creating Pipelines for ML workflows

Monitoring deployed services and gathering inference metrics

The course is lab-intensive and designed around real-world use cases to ensure practical understanding.

💼 Why It Matters for Enterprises

Organizations looking to scale AI initiatives often struggle with fragmented tooling, inconsistent environments, and lack of collaboration. OpenShift AI brings the power of Kubernetes together with Red Hat’s robust ecosystem to create a unified platform for data-driven innovation.

With OpenShift AI and skills from AI268, teams can:

Accelerate time to market for AI solutions

Maintain model reproducibility and traceability

Enable continuous delivery of AI/ML capabilities

Improve collaboration between data science and IT/DevOps teams

📚 Ready to Upskill?

If you're ready to bridge the gap between data science and production deployment, AI268 is your launchpad. It prepares teams to leverage OpenShift AI for building scalable, reproducible, and secure ML applications.

👉 Talk to us at HawkStack Technologies for:

Corporate Training

Red Hat Learning Subscription (RHLS)

AI/ML Training Roadmaps

🔗 Get in touch to learn more about Red Hat AI/ML offerings or to schedule your team's AI268 session. www.hawkstack.com

#RedHat #OpenShiftAI #MachineLearning #DevOps #MLOps #DataScience #AI268 #OpenShift #Kubernetes #RHLS #HawkStack #AITools #EnterpriseAI #CloudNativeAI

Scaling Inference AI: How to Manage Large-Scale Deployments

As artificial intelligence continues to transform industries, the focus has shifted from model development to operationalization—especially inference at scale. Deploying AI models into production across hundreds or thousands of nodes is a different challenge than training them. Real-time response requirements, unpredictable workloads, cost optimization, and system resilience are just a few of the complexities involved.

In this blog post, we’ll explore key strategies and architectural best practices for managing large-scale inference AI deployments in production environments.

1. Understand the Inference Workload

Inference workloads vary widely depending on the use case. Some key considerations include:

Latency sensitivity: Real-time applications (e.g., fraud detection, recommendation engines) demand low latency, whereas batch inference (e.g., customer churn prediction) is more tolerant.

Throughput requirements: High-traffic systems must process thousands or millions of predictions per second.

Resource intensity: Models like transformers and diffusion models may require GPU acceleration, while smaller models can run on CPUs.

Tailor your infrastructure to the specific needs of your workload rather than adopting a one-size-fits-all approach.

2. Model Optimization Techniques

Optimizing models for inference can dramatically reduce resource costs and improve performance:

Quantization: Convert models from 32-bit floats to 16-bit or 8-bit precision to reduce memory footprint and accelerate computation.

Pruning: Remove redundant or non-critical parts of the network to improve speed.

Knowledge distillation: Replace large models with smaller, faster student models trained to mimic the original.

Frameworks like TensorRT, ONNX Runtime, and Hugging Face Optimum can help implement these optimizations effectively.
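To make the quantization idea concrete, here is a small pure-Python sketch of symmetric 8-bit quantization. It is only an illustration of the arithmetic, not how TensorRT, ONNX Runtime, or Optimum implement it. The function names and sample weights are invented for this example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only to show the round trip.
weights = [0.5, -1.27, 0.03, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of four, and the round-trip error stays within half a quantization step. Real toolchains add per-channel scales and calibration data on top of this basic idea.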

3. Scalable Serving Architecture

For serving AI models at scale, consider these architectural elements:

Model servers: Tools like TensorFlow Serving, TorchServe, Triton Inference Server, and BentoML provide flexible options for deploying and managing models.

Autoscaling: Use Kubernetes (K8s) with horizontal pod autoscalers to adjust resources based on traffic.

Load balancing: Ensure even traffic distribution across model replicas with intelligent load balancers or service meshes.

Multi-model support: Use inference runtimes that allow hot-swapping models or running multiple models concurrently on the same node.

Cloud-native design is essential—containerization and orchestration are foundational for scalable inference.
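For a feel of how horizontal autoscaling decides on a replica count, the sketch below follows the scaling rule described in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric). The default bounds and the 10% tolerance band used here are assumptions for the example. Real autoscalers also apply stabilization windows that this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Return the replica count an HPA-style controller would aim for."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Example: 4 replicas at 90% average CPU against a 60% target.
scaled_up = desired_replicas(4, 90, 60)
```

With these inputs the function asks for 6 replicas. A reading of 62% against the same target falls inside the tolerance band and leaves the count at 4.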

4. Edge vs. Cloud Inference

Deciding where inference happens—cloud, edge, or hybrid—affects latency, bandwidth, and cost:

Cloud inference provides centralized control and easier scaling.

Edge inference minimizes latency and data transfer, especially important for applications in autonomous vehicles, smart cameras, and IoT.

Hybrid architectures allow critical decisions to be made at the edge while sending more complex computations to the cloud.

Choose based on the tradeoffs between responsiveness, connectivity, and compute resources.

5. Observability and Monitoring

Inference at scale demands robust monitoring for performance, accuracy, and availability:

Latency and throughput metrics: Track request times, failed inferences, and traffic spikes.

Model drift detection: Monitor if input data or prediction distributions are changing, signaling potential degradation.

A/B testing and shadow deployments: Test new models in parallel with production ones to validate performance before full rollout.

Tools like Prometheus, Grafana, Seldon Core, and Arize AI can help maintain visibility and control.
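One simple way to put a number on drift is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample versus recent traffic. The sketch below is a minimal pure-Python version. The bin count and sample data are assumptions for illustration, and the tools named above use their own methods.

```python
import math


def psi(expected, actual, bins=5):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: a uniform baseline and a sample shifted to the upper half.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
no_drift = psi(baseline, baseline)
drift = psi(baseline, shifted)
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but that threshold is a convention and should be tuned per feature.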

6. Cost Management

Running inference at scale can become costly without careful management:

Right-size compute instances: Don’t overprovision; match hardware to model needs.

Use spot instances or serverless options: Leverage lower-cost infrastructure when SLAs allow.

Batch low-priority tasks: Queue and batch non-urgent inferences to maximize hardware utilization.

Cost-efficiency should be integrated into deployment decisions from the start.
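The batching idea can be sketched in a few lines: non-urgent requests wait in a queue and are sent to the model in groups, so each model call does more work. The class and the `fake_model` function below are hypothetical and exist only for this example.

```python
from collections import deque


class BatchQueue:
    """Collects low-priority requests and releases them in fixed-size batches."""

    def __init__(self, max_batch_size):
        self.max_batch_size = max_batch_size
        self._pending = deque()

    def submit(self, item):
        self._pending.append(item)

    def drain(self):
        """Yield batches of up to max_batch_size items until the queue is empty."""
        while self._pending:
            size = min(self.max_batch_size, len(self._pending))
            yield [self._pending.popleft() for _ in range(size)]


def fake_model(batch):
    # Hypothetical stand-in for one batched inference call.
    return [x * 2 for x in batch]


queue = BatchQueue(max_batch_size=4)
for request in range(10):
    queue.submit(request)

batches = list(queue.drain())
predictions = [y for batch in batches for y in fake_model(batch)]
```

Ten queued requests become three model calls instead of ten. A production version would also flush on a timer so that a partly filled batch does not wait forever.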

7. Security and Governance

As inference becomes part of critical business workflows, security and compliance matter:

Data privacy: Ensure sensitive inputs (e.g., healthcare, finance) are encrypted and access-controlled.

Model versioning and audit trails: Track changes to deployed models and their performance over time.

API authentication and rate limiting: Protect your inference endpoints from abuse.

Secure deployment pipelines and strict governance are non-negotiable in enterprise environments.

Final Thoughts

Scaling AI inference isn't just about infrastructure—it's about building a robust, flexible, and intelligent ecosystem that balances performance, cost, and user experience. Whether you're powering voice assistants, recommendation engines, or industrial robotics, successful large-scale inference requires tight integration between engineering, data science, and operations.

Have questions about deploying inference at scale? Let us know what challenges you’re facing and we’ll dive in.

Cloud Microservice Market Growth Driven by Demand for Scalable and Agile Application Development Platforms

The Cloud Microservice Market: Accelerating Innovation in a Modular World

The global push toward digital transformation has redefined how businesses design, build, and deploy applications. Among the most impactful trends in recent years is the rapid adoption of cloud microservices, a modular approach to application development that offers speed, scalability, and resilience. As enterprises strive to meet the growing demand for agility and performance, the cloud microservice market is experiencing significant momentum, reshaping the software development landscape.

What Are Cloud Microservices?

At its core, a microservice architecture breaks down a monolithic application into smaller, loosely coupled, independently deployable services. Each microservice addresses a specific business capability, such as user authentication, payment processing, or inventory management. By leveraging the cloud, these services can scale independently, be deployed across multiple geographic regions, and integrate seamlessly with various platforms.

Cloud microservices differ from traditional service-oriented architectures (SOA) by emphasizing decentralization, lightweight communication (typically via REST or gRPC), and DevOps-driven automation.

Market Growth and Dynamics

The cloud microservice market is witnessing robust growth. According to recent research, the global market size was valued at over USD 1 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2030. This surge is driven by several interlocking trends:

Cloud-First Strategies: As more organizations migrate workloads to public, private, and hybrid cloud environments, microservices provide a flexible architecture that aligns with distributed infrastructure.

DevOps and CI/CD Adoption: The increasing use of continuous integration and continuous deployment pipelines has made microservices more attractive. They fit naturally into agile development cycles and allow for faster iteration and delivery.

Containerization and Orchestration Tools: Technologies like Docker and Kubernetes have become instrumental in managing and scaling microservices in the cloud. These tools offer consistency across environments and automate deployment, networking, and scaling of services.

Edge Computing and IoT Integration: As edge devices proliferate, there is a growing need for lightweight, scalable services that can run closer to the user. Microservices can be deployed to edge nodes and communicate with centralized cloud services, enhancing performance and reliability.

Key Industry Players

Several technology giants and cloud providers are investing heavily in microservice architectures:

Amazon Web Services (AWS) offers a suite of tools like AWS Lambda, ECS, and App Mesh that support serverless and container-based microservices.

Microsoft Azure provides Azure Kubernetes Service (AKS) and Azure Functions for scalable and event-driven applications.

Google Cloud Platform (GCP) leverages Anthos and Cloud Run to help developers manage hybrid and multicloud microservice deployments.

Beyond the big three, companies like Red Hat, IBM, and VMware are also influencing the microservice ecosystem through open-source platforms and enterprise-grade orchestration tools.

Challenges and Considerations

While the benefits of cloud microservices are significant, the architecture is not without challenges:

Complexity in Management: Managing hundreds or even thousands of microservices requires robust monitoring, logging, and service discovery mechanisms.

Security Concerns: Each service represents a potential attack vector, requiring strong identity, access control, and encryption practices.

Data Consistency: Maintaining consistency and integrity across distributed systems is a persistent concern, particularly in real-time applications.

Organizations must weigh these complexities against their business needs and invest in the right tools and expertise to successfully navigate the microservice journey.

The Road Ahead

As digital experiences become more demanding and users expect seamless, responsive applications, microservices will continue to play a pivotal role in enabling scalable, fault-tolerant systems. Emerging trends such as AI-driven observability, service mesh architecture, and no-code/low-code microservice platforms are poised to further simplify and enhance the development and management process.

In conclusion, the cloud microservice market is not just a technological shift; it's a foundational change in how software is conceptualized and delivered. For businesses aiming to stay competitive, embracing microservices in the cloud is no longer optional; it’s a strategic imperative.

Getting Started with Google Kubernetes Engine: Your Gateway to Cloud-Native Greatness

After spending over 8 years deep in the trenches of cloud engineering and DevOps, I can tell you one thing for sure: if you're serious about scalability, flexibility, and real cloud-native application deployment, Google Kubernetes Engine (GKE) is where the magic happens.

Whether you’re new to Kubernetes or just exploring managed container platforms, getting started with Google Kubernetes Engine is one of the smartest moves you can make in your cloud journey.

"Containers are cool. Orchestrated containers? Game-changing."

🚀 What is Google Kubernetes Engine (GKE)?

Google Kubernetes Engine is a fully managed Kubernetes platform that runs on top of Google Cloud. GKE simplifies deploying, managing, and scaling containerized apps using Kubernetes—without the overhead of maintaining the control plane.

Why is this a big deal?

Because Kubernetes is notoriously powerful and notoriously complex. With GKE, Google handles all the heavy lifting—from cluster provisioning to upgrades, logging, and security.

"GKE takes the complexity out of Kubernetes so you can focus on building, not babysitting clusters."

🧭 Why Start with GKE?

If you're a developer, DevOps engineer, or cloud architect looking to:

Deploy scalable apps across hybrid/multi-cloud

Automate CI/CD workflows

Optimize infrastructure with autoscaling & Spot VMs

Run stateless or stateful microservices seamlessly

Then GKE is your launchpad.

Here’s what makes GKE shine:

Auto-upgrades & auto-repair for your clusters

Built-in security with Shielded GKE Nodes and Binary Authorization

Deep integration with Google Cloud IAM, VPC, and Logging

Autopilot mode for hands-off resource management

Native support for Anthos, Istio, and service meshes

"With GKE, it's not about managing containers—it's about unlocking agility at scale."

🔧 Getting Started with Google Kubernetes Engine

Ready to dive in? Here's a simple flow to kick things off:

Set up your Google Cloud project

Enable Kubernetes Engine API

Install gcloud CLI and Kubernetes command-line tool (kubectl)

Create a GKE cluster via console or command line

Deploy your app using Kubernetes manifests or Helm

Monitor, scale, and manage using GKE dashboard, Cloud Monitoring, and Cloud Logging
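For the "deploy your app using Kubernetes manifests" step, a manifest is just structured data. The sketch below builds a basic Deployment as a Python dictionary and serializes it to JSON, which kubectl accepts alongside YAML. The app name, image reference, and port are placeholders invented for this example, not values from any real project.

```python
import json


def deployment_manifest(name, image, replicas=2, port=8080):
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Placeholder name and image, used only for illustration.
manifest = deployment_manifest("hello-web", "example.com/hello-web:1.0")
manifest_json = json.dumps(manifest, indent=2)
```

Writing `manifest_json` to a file such as `deployment.json` and running `kubectl apply -f deployment.json` against your cluster would create the Deployment, assuming the image actually exists in a registry the cluster can reach.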

If you're using GKE Autopilot, Google manages your node infrastructure automatically—so you only manage your apps.

“Don’t let infrastructure slow your growth. Let GKE scale as you scale.”

🔗 Must-Read Resources to Kickstart GKE

👉 GKE Quickstart Guide – Google Cloud

👉 Best Practices for GKE – Google Cloud

👉 Anthos and GKE Integration

👉 GKE Autopilot vs Standard Clusters

👉 Google Cloud Kubernetes Learning Path – NetCom Learning

🧠 Real-World GKE Success Stories

A FinTech startup used GKE Autopilot to run microservices with zero infrastructure overhead

A global media company scaled video streaming workloads across continents in hours

A university deployed its LMS using GKE and reduced downtime by 80% during peak exam seasons

"You don’t need a huge ops team to build a global app. You just need GKE."

🎯 Final Thoughts

Getting started with Google Kubernetes Engine is like unlocking a fast track to modern app delivery. Whether you're running 10 containers or 10,000, GKE gives you the tools, automation, and scale to do it right.

With Google Cloud’s ecosystem—from Cloud Build to Artifact Registry to operations suite—GKE is more than just Kubernetes. It’s your platform for innovation.

“Containers are the future. GKE is the now.”

So fire up your first cluster. Launch your app. And let GKE do the heavy lifting while you focus on what really matters—shipping great software.

Understanding API Gateways in Modern Application Architecture

In today's world of cloud-native applications and microservices, APIs play a very important role. They allow different parts of an application to communicate with each other and with external systems. As the number of APIs grows, managing and securing them becomes more challenging. This is where API gateways come in.

An API gateway acts as the single entry point for all client requests to a set of backend services. It simplifies client interactions, handles security, and provides useful features like rate limiting, caching, and monitoring. API gateways are now a key part of modern application architecture.

What is an API Gateway?

An API gateway is a server or software that receives requests from users or applications and routes them to the appropriate backend services. It sits between the client and the microservices and acts as a middle layer.

Instead of making direct calls to multiple services, a client sends one request to the gateway. The gateway then forwards it to the correct service, collects the response, and sends it back to the client. This reduces complexity on the client side and improves overall control and performance.
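The routing step can be pictured as a lookup from request path to backend. Here is a minimal sketch that picks the longest matching path prefix. The route table and the internal host names are made up for this example, and real gateways offer far richer matching rules.

```python
# Hypothetical route table: path prefix -> backend base URL.
ROUTES = {
    "/users": "http://users.internal:8080",
    "/orders": "http://orders.internal:8080",
    "/orders/returns": "http://returns.internal:8080",
}


def resolve_backend(path, routes=ROUTES):
    """Return the backend URL for a request path, or None if nothing matches."""
    # A prefix matches only on whole path segments, so "/usersx" is not "/users".
    matches = [p for p in routes if path == p or path.startswith(p + "/")]
    if not matches:
        return None
    best = max(matches, key=len)  # the most specific prefix wins
    return routes[best] + path
```

For instance, `resolve_backend("/orders/returns/7")` goes to the returns service rather than the general orders service, because the longer prefix is more specific.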

Why Use an API Gateway?

There are many reasons why modern applications use API gateways:

Centralized access: Clients only need to know one endpoint instead of many different service URLs.

Security: API gateways can enforce authentication, authorization, and encryption.

Rate limiting: They can prevent abuse by limiting the number of requests a client can make.

Caching: Responses can be stored temporarily to improve speed and reduce load.

Load balancing: Requests can be distributed across multiple servers to handle more traffic.

Logging and monitoring: API gateways help track request data and monitor service health.

Protocol translation: They can convert between protocols, like from HTTP to WebSockets or gRPC.
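Rate limiting from the list above is often explained with the token bucket idea: each client has a bucket that refills at a steady rate, and every request spends one token. The sketch below is one simple way to write it, not the algorithm of any specific gateway product. The injectable `clock` parameter is an assumption added to make the example easy to test.

```python
import time


class TokenBucket:
    """Allows short bursts up to `capacity`, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if the request may pass, spending one token."""
        now = self.clock()
        # Refill based on elapsed time, never exceeding the bucket capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client key (for example per API key) and answer with HTTP 429 when `allow()` returns False.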

Common Features of API Gateways

Authentication and authorization: Ensures only valid users can access certain APIs. The gateway can integrate with identity providers through standards such as OAuth 2.0 and validate tokens such as JWTs.

Routing: Directs requests to the right service based on the URL path or other parameters.

Rate limiting and throttling: Controls how many requests a user or client can make in a given time period.

Data transformation: Changes request or response formats, such as converting XML to JSON.

Monitoring and logging: Tracks the number of requests, response times, errors, and usage patterns.

API versioning: Allows clients to use different versions of an API without breaking existing applications.
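As a small example of data transformation, the sketch below converts a flat XML payload into JSON using only the Python standard library. The `order` payload is invented for illustration. Nested elements, attributes, and repeated tags would need more careful handling than this.

```python
import json
import xml.etree.ElementTree as ET


def xml_to_json(xml_text):
    """Convert a flat XML document (root with simple children) to JSON text."""
    root = ET.fromstring(xml_text)
    # Each child element becomes a key; its text becomes the string value.
    payload = {root.tag: {child.tag: child.text for child in root}}
    return json.dumps(payload)


# Hypothetical backend response in XML.
legacy_response = "<order><id>42</id><status>shipped</status></order>"
converted = xml_to_json(legacy_response)
```

Note that all values come out as strings, so `id` is `"42"` rather than the number 42. A gateway that needs typed output would apply a schema on top.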

Future of API Gateways

As applications become more distributed and cloud-based, the need for effective API management will grow. API gateways will continue to evolve with better performance, security, and integration features. They will also work closely with service meshes and container orchestration platforms like Kubernetes.

With the rise of event-driven architecture and real-time systems, future API gateways may also support new communication protocols and smarter routing strategies.

About Hexadecimal Software

Hexadecimal Software is a trusted expert in software development and cloud-native technologies. We help businesses design, build, and manage scalable applications with modern tools like API gateways, microservices, and container platforms. Whether you are starting your cloud journey or optimizing an existing system, our team can guide you at every step. Visit us at https://www.hexadecimalsoftware.com

Explore More on Hexahome Blogs

For more blogs on cloud computing, DevOps, and software architecture, visit https://www.blogs.hexahome.in. Our blog platform shares easy-to-understand articles for both tech enthusiasts and professionals who want to stay updated with the latest trends.

Cloud Native Storage Market Insights: Industry Share, Trends & Future Outlook 2032

The Cloud Native Storage Market size was valued at USD 16.19 billion in 2023 and is expected to reach USD 100.09 billion by 2032, growing at a CAGR of 22.5% over the forecast period 2024-2032.
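The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes nine compounding years from the 2023 base value to 2032. That period count is an interpretation made for this check and is not stated in the report summary.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in USD billions; nine years is an assumption.
implied_rate = cagr(16.19, 100.09, 2032 - 2023)
```

Under that assumption the implied rate comes out at about 22.4% per year, which is in line with the quoted 22.5%.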

The cloud native storage market is experiencing rapid growth as enterprises shift towards scalable, flexible, and cost-effective storage solutions. The increasing adoption of cloud computing and containerization is driving demand for advanced storage technologies.

The cloud native storage market continues to expand as businesses seek high-performance, secure, and automated data storage solutions. With the rise of hybrid cloud, Kubernetes, and microservices architectures, organizations are investing in cloud native storage to enhance agility and efficiency in data management.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3454

Key Market Players:

Microsoft (Azure Blob Storage, Azure Kubernetes Service (AKS))

IBM (IBM Cloud Object Storage, IBM Spectrum Scale)

AWS (Amazon S3, Amazon EBS (Elastic Block Store))

Google (Google Cloud Storage, Google Kubernetes Engine (GKE))

Alibaba Cloud (Alibaba Object Storage Service (OSS), Alibaba Cloud Container Service for Kubernetes)

VMware (VMware vSAN, VMware Tanzu Kubernetes Grid)

Huawei (Huawei FusionStorage, Huawei Cloud Object Storage Service)

Citrix (Citrix Hypervisor, Citrix ShareFile)

Tencent Cloud (Tencent Cloud Object Storage (COS), Tencent Kubernetes Engine)

Scality (Scality RING, Scality ARTESCA)

Splunk (Splunk SmartStore, Splunk Enterprise on Kubernetes)

Linbit (LINSTOR, DRBD (Distributed Replicated Block Device))

Rackspace (Rackspace Object Storage, Rackspace Managed Kubernetes)

Robin.io (Robin Cloud Native Storage, Robin Multi-Cluster Automation)

MayaData (OpenEBS, Data Management Platform (DMP))

Diamanti (Diamanti Ultima, Diamanti Spektra)

MinIO (MinIO Object Storage, MinIO Kubernetes Operator)

Rook (Rook Ceph, Rook EdgeFS)

Ondat (Ondat Persistent Volumes, Ondat Data Mesh)

Ionir (Ionir Data Services Platform, Ionir Continuous Data Mobility)

Trilio (TrilioVault for Kubernetes, TrilioVault for OpenStack)

UpCloud (UpCloud Object Storage, UpCloud Managed Databases)

Arrikto (Kubeflow Enterprise, Rok (Data Management for Kubernetes))

Market Size, Share, and Scope

The market is witnessing significant expansion across industries such as IT, BFSI, healthcare, retail, and manufacturing.

Hybrid and multi-cloud storage solutions are gaining traction due to their flexibility and cost-effectiveness.

Enterprises are increasingly adopting object storage, file storage, and block storage tailored for cloud native environments.

Key Market Trends Driving Growth

Rise in Cloud Adoption: Organizations are shifting workloads to public, private, and hybrid cloud environments, fueling demand for cloud native storage.

Growing Adoption of Kubernetes: Kubernetes-based storage solutions are becoming essential for managing containerized applications efficiently.

Increased Data Security and Compliance Needs: Businesses are investing in encrypted, resilient, and compliant storage solutions to meet global data protection regulations.

Advancements in AI and Automation: AI-driven storage management and self-healing storage systems are revolutionizing data handling.

Surge in Edge Computing: Cloud native storage is expanding to edge locations, enabling real-time data processing and low-latency operations.

Integration with DevOps and CI/CD Pipelines: Developers and IT teams are leveraging cloud storage automation for seamless software deployment.

Hybrid and Multi-Cloud Strategies: Enterprises are implementing multi-cloud storage architectures to optimize performance and costs.

Increased Use of Object Storage: The scalability and efficiency of object storage are driving its adoption in cloud native environments.

Serverless and API-Driven Storage Solutions: The rise of serverless computing is pushing demand for API-based cloud storage models.

Sustainability and Green Cloud Initiatives: Energy-efficient storage solutions are becoming a key focus for cloud providers and enterprises.

Enquire About This Report: https://www.snsinsider.com/enquiry/3454

Market Segmentation:

By Component

Solution

Object Storage

Block Storage

File Storage

Container Storage

Others

Services

System Integration & Deployment

Training & Consulting

Support & Maintenance

By Deployment

Private Cloud

Public Cloud

By Enterprise Size

SMEs

Large Enterprises

By End Use

BFSI

Telecom & IT

Healthcare

Retail & Consumer Goods

Manufacturing

Government

Energy & Utilities

Media & Entertainment

Others

Market Growth Analysis

Factors Driving Market Expansion

The growing need for cost-effective and scalable data storage solutions

Adoption of cloud-first strategies by enterprises and governments

Rising investments in data center modernization and digital transformation

Advancements in 5G, IoT, and AI-driven analytics

Industry Forecast 2032: Size, Share & Growth Analysis

The cloud native storage market is projected to grow significantly over the next decade, driven by advancements in distributed storage architectures, AI-enhanced storage management, and increasing enterprise digitalization.

North America leads the market, followed by Europe and Asia-Pacific, with China and India emerging as key growth hubs.

The demand for software-defined storage (SDS), container-native storage, and data resiliency solutions will drive innovation and competition in the market.

Future Prospects and Opportunities

1. Expansion in Emerging Markets

Developing economies are expected to witness increased investment in cloud infrastructure and storage solutions.

2. AI and Machine Learning for Intelligent Storage

AI-powered storage analytics will enhance real-time data optimization and predictive storage management.

3. Blockchain for Secure Cloud Storage

Blockchain-based decentralized storage models will offer improved data security, integrity, and transparency.

4. Hyperconverged Infrastructure (HCI) Growth

Enterprises are adopting HCI solutions that integrate storage, networking, and compute resources.

5. Data Sovereignty and Compliance-Driven Solutions

The demand for region-specific, compliant storage solutions will drive innovation in data governance technologies.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-storage-market-3454

Conclusion

The cloud native storage market is poised for exponential growth, fueled by technological innovations, security enhancements, and enterprise digital transformation. As businesses embrace cloud, AI, and hybrid storage strategies, the future of cloud native storage will be defined by scalability, automation, and efficiency.

About Us:

SNS Insider is one of the leading market research and consulting agencies worldwide. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that support confident decisions, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)


Senior Software Engineer - Kubernetes Platform

Senior Software Engineer – Kubernetes Platform Is your passion for Cloud Native Platform? That is, envisioning… our Service Mesh capability, which is a microservice platform based on Kubernetes and Istio. Establish software engineering… Apply Now


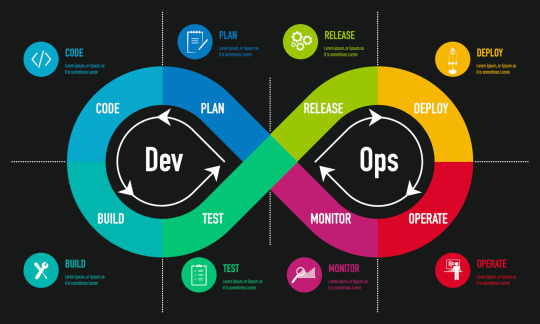

Advanced DevOps Strategies: Optimizing Software Delivery and Operations

Introduction

DevOps bridges the gap between software development and IT operations to make software delivery faster and more dependable. By combining automation, continuous integration (CI), continuous deployment (CD), and monitoring, businesses can increase productivity and reduce deployment errors. Adopting DevOps has become important for businesses that want to improve the scalability, security, and efficiency of their software development lifecycle. Development workflows typically rely on tools such as Docker, Kubernetes, Jenkins, Terraform, and cloud platforms. As AI and machine learning become more integrated into DevOps, businesses are finding new ways to automate, anticipate, and optimize their infrastructure.

Infrastructure as Code (IaC): Automating Deployments

Infrastructure as Code (IaC), one of the fundamental tenets of DevOps, lets teams automate infrastructure administration. With tools like Terraform, Ansible, and CloudFormation, developers describe infrastructure in version-controlled files instead of configuring it by hand. IaC speeds up software delivery by making environments repeatable and consistent and by reducing human error. Automated provisioning of servers, databases, and networking components makes deployments scalable and adaptable. Implementing IaC in DevOps processes gives businesses version-controlled infrastructure, quicker disaster recovery, and effective resource use in both on-premises and cloud settings.
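
To make the declarative idea concrete, here is a minimal sketch in Python. It compares a desired state with the current state and derives the actions needed to reconcile them. The resource names and the `plan` helper are hypothetical; real tools such as Terraform also handle dependency ordering, providers, and state storage.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Return the create/update/delete actions that move `actual` to `desired`."""
    return {
        "create": sorted(k for k in desired if k not in actual),
        "update": sorted(k for k in desired if k in actual and desired[k] != actual[k]),
        "delete": sorted(k for k in actual if k not in desired),
    }


# Hypothetical resources, described as plain data rather than as setup steps.
desired = {
    "web-server": {"size": "small", "count": 3},
    "database": {"size": "large", "count": 1},
}
actual = {
    "web-server": {"size": "small", "count": 2},
    "old-cache": {"size": "small", "count": 1},
}

changes = plan(desired, actual)
print(changes)
```

Running the same plan against an environment that already matches the desired state yields no actions, which is what makes the approach repeatable.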

The Role of Microservices in DevOps

Microservices architecture fits DevOps well because it lets teams build applications as modular, independent services. In contrast to conventional monolithic designs, teams can deploy individual services without affecting the application as a whole. Tools like Docker and Kubernetes simplify the administration of containerized microservices and provide fault tolerance, scalability, and high availability. Service mesh technologies like Istio and Consul improve observability, traffic management, and security in microservices-based systems. Combining microservices with DevOps is a common approach in contemporary software development because it promotes quicker releases, less downtime, and better resource usage.

CI/CD Pipelines: Enhancing Speed and Reliability

Continuous Integration (CI) and Continuous Deployment (CD) are the foundation of DevOps automation, allowing frequent software changes with little or no interruption. Tools like Jenkins, GitLab CI/CD, and GitHub Actions automate code integration, testing, and deployment, which improves software dependability. CI/CD pipelines reduce production failures, accelerate development cycles, and minimize manual intervention. Blue-green deployments, rollback procedures, and automated testing all make deployments safer and more stable. Businesses that use CI/CD best practices see improved time-to-market, smooth upgrades, and high-performance apps in both on-premises and cloud settings.

Conclusion

Mastering DevOps principles helps businesses achieve agility, efficiency, and security in contemporary software development. The combination of IaC, microservices, CI/CD, and automation fuels innovation and operational excellence. For those starting out, a DevOps internship can offer industry exposure and hands-on experience with these technologies and processes.


The Hidden Challenges of 5G Cloud-Native Integration

5G technology is transforming industries, enabling ultra-fast connectivity, low-latency applications, and the rise of smart infrastructures. However, behind the promise of seamless communication lies a complex reality—integrating 5G with cloud-native architectures presents a series of hidden challenges. Businesses and service providers must navigate these hurdles to maximize 5G’s potential while maintaining operational efficiency and security.

Understanding Cloud-Native 5G

A cloud-native approach to 5G is essential for leveraging the benefits of software-defined networking (SDN) and network function virtualization (NFV). Cloud-native 5G architectures use microservices, containers, and orchestration tools like Kubernetes to enable flexibility and scalability. While this approach is fundamental for modern network operations, it introduces a new layer of challenges that demand strategic solutions.

Managing Complex Infrastructure Deployment

Unlike traditional monolithic network architectures, 5G cloud-native networks rely on distributed and multi-layered environments. This includes on-premises data centers, edge computing nodes, and public or private clouds. Coordinating and synchronizing these components efficiently is a significant challenge.

Network Fragmentation – Deploying 5G across hybrid cloud environments requires seamless communication between disparate systems. Network fragmentation can cause interoperability issues and inefficiencies.

Scalability Bottlenecks – Scaling microservices-based 5G networks demands a robust orchestration mechanism to prevent latency spikes and service disruptions.

Security Concerns in a Cloud-Native 5G Environment

Security is a top priority in any cloud-native environment, and integrating it with 5G adds new complexities. With increased connectivity and open architectures, the attack surface expands, making networks more vulnerable to threats.

Data Privacy Risks – Sensitive information traveling through cloud-based 5G networks requires strong encryption and compliance with regulations like GDPR and CCPA.

Container Security – The use of containers for network functions means each service must be secured individually, adding to security management challenges.

Zero Trust Implementation – Traditional security models are insufficient. A zero-trust architecture is necessary to authenticate and monitor all network interactions.

Ensuring Low Latency and High Performance

One of the main advantages of 5G is ultra-low latency, but cloud-native integration can introduce latency if not managed correctly. Key factors affecting performance include:

Edge Computing Optimization – Placing computing resources closer to the end-user reduces latency, but integrating edge computing seamlessly into a cloud-native 5G environment requires advanced workload management.

Real-Time Data Processing – Applications like autonomous vehicles and telemedicine require real-time data analytics. Ensuring minimal delay in data processing is a technical challenge that demands high-performance infrastructure.

Orchestration and Automation Challenges

Efficient orchestration of microservices in a 5G cloud-native setup requires sophisticated automation tools. Kubernetes and other orchestration platforms help, but challenges persist:

Resource Allocation Complexity – Properly distributing workloads across cloud and edge environments requires intelligent automation to optimize performance.

Service Mesh Overhead – Managing service-to-service communication at scale introduces additional networking complexities that can impact efficiency.

Continuous Deployment Risks – Frequent updates and patches are necessary for a cloud-native environment, but improper CI/CD pipeline implementation can lead to service outages.

Integration with Legacy Systems

Many enterprises still rely on legacy systems that are not inherently cloud-native. Integrating 5G with these existing infrastructures presents compatibility issues.

Protocol Mismatches – Older network functions may not support modern cloud-native frameworks, leading to operational inefficiencies.

Gradual Migration Strategies – Businesses need hybrid models that allow for gradual adoption of cloud-native principles without disrupting existing operations.

Regulatory and Compliance Challenges

5G networks operate under strict regulatory frameworks, and compliance varies across regions. When adopting a cloud-native 5G approach, businesses must consider:

Data Localization Laws – Some regions require data to be stored and processed locally, complicating cloud-based deployments.

Industry-Specific Regulations – Telecom, healthcare, and finance industries have unique compliance requirements that add layers of complexity to 5G cloud integration.

Overcoming These Challenges

To successfully integrate 5G with cloud-native architectures, organizations must adopt a strategic approach that includes:

Robust Security Frameworks – Implementing end-to-end encryption, zero-trust security models, and AI-driven threat detection.

Advanced Orchestration – Leveraging AI-powered automation for efficient microservices and workload management.

Hybrid and Multi-Cloud Strategies – Balancing edge computing, private, and public cloud resources for optimized performance.

Compliance-Centric Deployment – Ensuring adherence to regulatory frameworks through proper data governance and legal consultations.


Conclusion

While the promise of 5G is undeniable, the hidden challenges of cloud-native integration must be addressed to unlock its full potential. Businesses that proactively tackle security, orchestration, performance, and regulatory issues will be better positioned to leverage 5G’s transformative capabilities. Navigating these challenges requires expertise, advanced technologies, and a forward-thinking approach.


Original Source: https://software5g.blogspot.com/2025/02/the-hidden-challenges-of-5g-cloud.html


SRE (Site Reliability Engineering) Interview Preparation Guide

Site Reliability Engineering (SRE) is a highly sought-after role that blends software engineering with systems administration to create scalable, reliable systems. Whether you’re a seasoned professional or just starting out, preparing for an SRE interview requires a strategic approach. Here’s a guide to help you ace your interview.

1. Understand the Role of an SRE

Before diving into preparation, it’s crucial to understand the responsibilities of an SRE. SREs focus on maintaining the reliability, availability, and performance of systems. Their tasks include:

• Monitoring and incident response

• Automation of manual tasks

• Capacity planning

• Performance tuning

• Collaborating with development teams to improve system architecture

2. Key Areas to Prepare

SRE interviews typically cover a range of topics. Here are the main areas you should focus on:

a) System Design

• Learn how to design scalable and fault-tolerant systems.

• Understand concepts like load balancing, caching, database sharding, and high availability.

• Be prepared to discuss trade-offs in system architecture.

b) Programming and Scripting

• Proficiency in at least one programming language (e.g., Python, Go, Java) is essential.

• Practice writing scripts for automation tasks like log parsing or monitoring setup.

• Focus on problem-solving skills and algorithms.
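
As a practice example of the log-parsing task mentioned above, the following sketch counts HTTP status codes in access-log lines. It assumes a common "combined"-style log format; the sample lines and the `count_statuses` name are made up for illustration.

```python
import re
from collections import Counter

# Matches the quoted request and the status code that follows it,
# e.g. "GET /health HTTP/1.1" 200
LINE_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3})')


def count_statuses(lines):
    """Count responses per HTTP status code, skipping lines that do not parse."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            counts[match.group("status")] += 1
    return counts


sample = [
    '10.0.0.1 - - [01/Jan/2025:00:00:01 +0000] "GET /health HTTP/1.1" 200 12',
    '10.0.0.2 - - [01/Jan/2025:00:00:02 +0000] "POST /orders HTTP/1.1" 500 87',
    '10.0.0.1 - - [01/Jan/2025:00:00:03 +0000] "GET /orders HTTP/1.1" 200 431',
    "malformed line",
]

counts = count_statuses(sample)
print(counts.most_common())
```

In an interview, be ready to discuss how you would adapt this to large files (streaming line by line) and to malformed input.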

c) Linux/Unix Fundamentals

• Understand Linux commands, file systems, and process management.

• Learn about networking concepts such as DNS, TCP/IP, and firewalls.

d) Monitoring and Observability

• Familiarize yourself with tools like Prometheus, Grafana, ELK stack, and Datadog.

• Understand key metrics (e.g., latency, traffic, errors, saturation) and Service Level Objectives (SLOs).
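
Interviewers often ask how an SLO translates into an error budget. The sketch below shows the arithmetic, assuming a 30-day window and request-based accounting; the function names are illustrative and not taken from any particular tool.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO over the window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction between 0 and 1")
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, good: int, total: int) -> float:
    """Fraction of the error budget still unspent (1.0 means untouched)."""
    if total <= 0:
        raise ValueError("total must be positive")
    allowed_failures = (1 - slo) * total
    actual_failures = total - good
    return 1 - actual_failures / allowed_failures


print(round(error_budget_minutes(0.999), 1))
print(round(budget_remaining(0.999, good=999_500, total=1_000_000), 2))
```

A 99.9% SLO over 30 days leaves about 43.2 minutes of downtime, and 500 failed requests out of a million consume half of the budget for that SLO.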

e) Incident Management

• Study strategies for diagnosing and mitigating production issues.

• Be ready to explain root cause analysis and postmortem processes.

f) Cloud and Kubernetes

• Understand cloud platforms like AWS, Azure, or GCP.

• Learn Kubernetes concepts such as pods, deployments, and services, as well as the service meshes that run on top of them.

• Explore Infrastructure as Code (IaC) tools like Terraform.

3. Soft Skills and Behavioral Questions

SREs often collaborate with cross-functional teams. Be prepared for questions about:

• Handling high-pressure incidents

• Balancing reliability with feature delivery

• Communication and teamwork skills


Service Mesh with Istio and Linkerd: A Practical Overview

As microservices architectures continue to dominate modern application development, managing service-to-service communication has become increasingly complex. Service meshes have emerged as a solution to address these complexities — offering enhanced security, observability, and traffic management between services.

Two of the most popular service mesh solutions today are Istio and Linkerd. In this blog post, we'll explore what a service mesh is, why it's important, and how Istio and Linkerd compare in real-world use cases.

What is a Service Mesh?

A service mesh is a dedicated infrastructure layer that controls communication between services in a distributed application. Instead of hardcoding service-to-service communication logic (like retries, failovers, and security policies) into your application code, a service mesh handles these concerns externally.

Key features typically provided by a service mesh include:

Traffic management: Fine-grained control over service traffic (routing, load balancing, fault injection)

Observability: Metrics, logs, and traces that give insights into service behavior

Security: Encryption, authentication, and authorization between services (often using mutual TLS)

Reliability: Retries, timeouts, and circuit breaking to improve service resilience
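
To illustrate the kind of logic a mesh takes out of application code, here is a minimal sketch of a retry policy with exponential backoff and jitter. In a real mesh this behavior lives in the proxy and is configured rather than coded; the `call_with_retries` helper and its parameters are hypothetical.

```python
import random
import time


def call_with_retries(func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call func(); on an exception, retry with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return func()
        except Exception as exc:  # a real policy would retry only specific errors
            last_error = exc
            if attempt < attempts - 1:
                delay = base_delay * (2 ** attempt)
                # Jitter spreads retries out so clients do not retry in lockstep.
                sleep(delay + random.uniform(0, delay))
    raise last_error
```

Without a mesh, every service would need to carry and tune code like this in each language it is written in.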

Why Do You Need a Service Mesh?

As applications grow more complex, maintaining reliable and secure communication between services becomes critical. A service mesh abstracts this complexity, allowing teams to:

Deploy features faster without worrying about cross-service communication challenges

Increase application reliability and uptime

Gain full visibility into service behavior without modifying application code

Enforce security policies consistently across the environment

Introducing Istio

Istio is one of the most feature-rich service meshes available today. Originally developed by Google, IBM, and Lyft, Istio offers deep integration with Kubernetes but can also support hybrid cloud environments.

Key Features of Istio:

Advanced traffic management: Canary deployments, A/B testing, traffic shifting

Comprehensive security: Mutual TLS, policy enforcement, and RBAC (Role-Based Access Control)

Extensive observability: Integrates with Prometheus, Grafana, Jaeger, and Kiali for metrics and tracing

Extensibility: Supports custom plugins through WebAssembly (Wasm)

Ingress/Egress gateways: Manage inbound and outbound traffic effectively
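
Traffic shifting for a canary deployment comes down to weighted routing. Istio expresses this declaratively as route weights on a VirtualService; the Python below only imitates the effect so the idea is easy to see. The version names and the 90/10 split are assumptions for illustration.

```python
import random


def pick_version(weights: dict, rng: random.Random) -> str:
    """Pick a backend version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


rng = random.Random(42)  # seeded so the simulation is repeatable
weights = {"v1": 90, "v2": 10}  # send roughly 10% of requests to the canary

sample = [pick_version(weights, rng) for _ in range(10_000)]
share_v2 = sample.count("v2") / len(sample)
print(f"share of requests routed to v2: {share_v2:.3f}")
```

Raising the `v2` weight step by step while watching error rates is the essence of a canary rollout.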

Pros of Istio:

Rich feature set suitable for complex enterprise use cases

Strong integration with Kubernetes and cloud-native ecosystems

Active community and broad industry adoption

Cons of Istio:

Can be resource-heavy and complex to set up and manage

Steeper learning curve compared to lighter service meshes

Introducing Linkerd

Linkerd is often considered the original service mesh and is known for its simplicity, performance, and focus on the core essentials.

Key Features of Linkerd:

Lightweight and fast: Designed to be resource-efficient

Simple setup: Easy to install, configure, and operate

Security-first: Automatic mutual TLS between services

Observability out of the box: Includes metrics, tap (live traffic inspection), and dashboards

Kubernetes-native: Deeply integrated with Kubernetes

Pros of Linkerd:

Minimal operational complexity

Lower resource usage

Easier learning curve for teams starting with service mesh

High performance and low latency

Cons of Linkerd:

Fewer advanced traffic management features compared to Istio

Less customizable for complex use cases

Choosing the Right Service Mesh

Choosing between Istio and Linkerd largely depends on your needs:

Choose Istio if you require advanced traffic management, complex security policies, and extensive customization — typically in larger, enterprise-grade environments.

Choose Linkerd if you value simplicity, low overhead, and rapid deployment — especially in smaller teams or organizations where ease of use is critical.

Ultimately, both Istio and Linkerd are excellent choices — it’s about finding the best fit for your application landscape and operational capabilities.

Final Thoughts

Service meshes are no longer just "nice to have" for microservices — they are increasingly a necessity for ensuring resilience, security, and observability at scale. Whether you pick Istio for its powerful feature set or Linkerd for its lightweight design, implementing a service mesh can greatly enhance your service architecture.

Stay tuned — in upcoming posts, we'll dive deeper into setting up Istio and Linkerd with hands-on labs and real-world use cases!


For more details www.hawkstack.com


Understanding Kubernetes Architecture: Building Blocks of Cloud-Native Infrastructure

In the era of rapid digital transformation, Kubernetes has emerged as the de facto standard for orchestrating containerized workloads across diverse infrastructure environments. For DevOps professionals, cloud architects, and platform engineers, a nuanced understanding of Kubernetes architecture is essential—not only for operational excellence but also for architecting resilient, scalable, and portable applications in production-grade environments.

Core Components of Kubernetes Architecture

1. Control Plane Components (Master Node)

The Kubernetes control plane orchestrates the entire cluster and ensures that the system’s desired state matches the actual state.

API Server: Serves as the gateway to the cluster. It handles RESTful communication, validates requests, and updates cluster state via etcd.

etcd: A distributed, highly available key-value store that acts as the single source of truth for all cluster metadata.

Controller Manager: Runs various control loops that drive resources toward their desired state (e.g., the ReplicaSet and Endpoints controllers).

Scheduler: Intelligently places Pods on nodes by evaluating resource requirements and affinity rules.
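
The scheduler's work can be pictured as two steps: filter out nodes that cannot fit the Pod, then score the remaining ones. The sketch below is a deliberately simplified model of that idea. It ignores affinity rules, taints, and everything else the real scheduler considers, and the `Node` fields and scoring rule are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unallocated CPU in millicores
    free_mem_mi: int  # unallocated memory in MiB


def schedule(cpu_m: int, mem_mi: int, nodes: list) -> Optional[str]:
    """Filter nodes that fit the request, then prefer the one with most CPU left."""
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # in Kubernetes the Pod would remain Pending
    # Score: remaining CPU after placement, with remaining memory as tie-breaker.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mi - mem_mi))
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 1000, 256)]
print(schedule(750, 512, nodes))
```

Here only `node-b` has enough CPU and memory for the request, so it is selected.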

2. Worker Node Components

Worker nodes host the actual containerized applications and execute instructions sent from the control plane.

Kubelet: Ensures the specified containers are running correctly in a pod.

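Kube-proxy: Maintains network rules on each node so that traffic sent to a Service is load-balanced across its backing Pods. Name-based service discovery itself is handled by cluster DNS (e.g., CoreDNS).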

Container Runtime: Abstracts container operations and supports image execution (e.g., containerd, CRI-O).

3. Pods

The pod is the smallest unit in the Kubernetes ecosystem. It encapsulates one or more containers, shared storage volumes, and networking settings, enabling co-located and co-managed execution.

Kubernetes in Production: Cloud-Native Enablement

Kubernetes is a cornerstone of modern DevOps practices, offering robust capabilities like:

Declarative configuration and automation

Horizontal pod autoscaling

Rolling updates and canary deployments

Self-healing through automated pod rescheduling
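
Horizontal pod autoscaling follows a simple documented rule: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that rule and clamps the result to a minimum and maximum. The real controller also applies a tolerance band and stabilization windows, which are left out here, and the default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the HPA scaling rule and clamp the result to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target scale out to 6.
print(desired_replicas(4, 90, 60))
```

The same formula scales in: 4 replicas averaging 30% against a 60% target drop to 2.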

Its modular, pluggable design supports service meshes (e.g., Istio), observability tools (e.g., Prometheus), and GitOps workflows, making it the foundation of cloud-native platforms.

Conclusion

Kubernetes is more than a container orchestrator—it's a sophisticated platform for building distributed systems at scale. Mastering its architecture equips professionals with the tools to deliver highly available, fault-tolerant, and agile applications in today’s multi-cloud and hybrid environments.


Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.
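
Rate limiting at a gateway is often explained with the token bucket algorithm. The following is a minimal sketch of that algorithm, not the implementation used by any of the gateways named above; the class name and parameters are illustrative.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill according to the time elapsed since the last check.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller would typically answer with HTTP 429


bucket = TokenBucket(rate=5.0, capacity=10)
print(bucket.allow())
```

A gateway would usually keep one bucket per client or API key so that one noisy caller cannot starve the others.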

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.
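
The three benefits above can be seen in a toy in-memory registry: instances register through heartbeats, stale instances drop out after a time-to-live, and lookups rotate across the healthy ones. This is a sketch under those assumptions, not how Consul, Eureka, or etcd work internally, and all names are hypothetical.

```python
import itertools
import time


class ServiceRegistry:
    def __init__(self, ttl: float = 10.0, clock=time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._instances = {}  # service name -> {address: time of last heartbeat}
        self._counters = {}   # service name -> round-robin counter

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance or refresh its health."""
        self._instances.setdefault(service, {})[address] = self.clock()

    def healthy(self, service: str) -> list:
        """Addresses whose last heartbeat is within the TTL."""
        now = self.clock()
        seen = self._instances.get(service, {})
        return sorted(addr for addr, ts in seen.items() if now - ts <= self.ttl)

    def resolve(self, service: str) -> str:
        """Round-robin over the currently healthy instances."""
        candidates = self.healthy(service)
        if not candidates:
            raise LookupError(f"no healthy instances of {service}")
        counter = self._counters.setdefault(service, itertools.count())
        return candidates[next(counter) % len(candidates)]
```

A caller asks `resolve("orders")` instead of hardcoding an address, so instances can come and go without configuration changes.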

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by deploying new versions alongside old ones.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
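
Blue-green deployment and rollback can be reduced to one idea: keep two environments and move a pointer between them. The sketch below models that pointer. The environment names and the health-check callable are hypothetical, and a real pipeline would switch a load balancer or DNS record rather than a Python attribute.

```python
from typing import Callable


class BlueGreenRouter:
    def __init__(self, live: str, idle: str) -> None:
        self.live = live  # environment currently serving users
        self.idle = idle  # environment holding the new version

    def promote(self, health_check: Callable[[str], bool]) -> bool:
        """Switch traffic to the idle environment only if it passes the check."""
        if not health_check(self.idle):
            return False  # keep serving from the current live environment
        self.live, self.idle = self.idle, self.live
        return True

    def rollback(self) -> None:
        """Point traffic back at the previous environment."""
        self.live, self.idle = self.idle, self.live


router = BlueGreenRouter(live="blue", idle="green")
router.promote(lambda env: True)
print(router.live)
```

Because the old environment stays running after the switch, rolling back is as cheap as promoting.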

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

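NoSQL Databases: Opt for MongoDB or Cassandra for semi-structured or high-volume data.

Event Sourcing: Store state changes as an append-only log of events, using a durable log such as Kafka. Message brokers like RabbitMQ are more commonly used to deliver those events between services.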

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using libraries like Resilience4j (Netflix Hystrix, long a popular choice, is now in maintenance mode).

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
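
A circuit breaker is easier to reason about once you have seen its state machine. The sketch below is a minimal version: closed until a failure threshold is reached, open (failing fast) for a cooling-off period, then half-open for one trial call. It is not how Hystrix or Resilience4j are implemented, and the thresholds are assumptions.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cooling-off period is over: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures or self.opened_at is not None:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast protects the struggling dependency from extra load and keeps callers from tying up threads while they wait on timeouts.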

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.
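
Event sourcing, the last item above, means deriving current state by replaying a log of changes instead of storing only the latest value. A minimal sketch, using a hypothetical account with two made-up event kinds:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrawn"
    amount: int


def replay(events) -> int:
    """Rebuild the account balance by applying every event in order."""
    balance = 0
    for event in events:
        if event.kind == "deposited":
            balance += event.amount
        elif event.kind == "withdrawn":
            balance -= event.amount
        else:
            raise ValueError(f"unknown event kind: {event.kind}")
    return balance


log = [Event("deposited", 100), Event("withdrawn", 30), Event("deposited", 5)]
print(replay(log))      # current state
print(replay(log[:2]))  # state as of the second event, useful for audits
```

Because the log is never rewritten, any past state can be reconstructed by replaying a prefix of it.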

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer, or Kubernetes cost tools such as Kubecost or OpenCost.

Right-Sizing: Adjust resources to match service needs.

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.


Networking in Google Cloud: Build Scalable, Secure, and Cloud-Native Connectivity in 2025

Let’s get real—cloud is the new data center, and Networking in Google Cloud is where the magic happens. After more than 8 years working across cloud and enterprise networking, I can tell you one thing: when it comes to scalability, performance, and global reach, Google Cloud’s networking stack is in a league of its own.

Whether you’re a network architect, cloud engineer, or just stepping into GCP, understanding Google Cloud networking isn’t optional—it’s essential.

“Cloud networking isn't just a new skill—it's a whole new mindset.”

🌐 What Does "Networking in Google Cloud" Actually Mean?

It’s the foundation of everything you build in GCP. Every VM, container, database, and microservice—they all rely on your network architecture. Google Cloud offers a software-defined, globally distributed network that enables you to design fast, secure, and scalable solutions, whether for enterprise workloads or high-traffic web apps.

Here’s what GCP networking brings to the table:

Global VPCs – unlike other clouds, Google gives you one VPC across regions. No stitching required.

Cloud Load Balancing – scalable to millions of QPS, fully distributed, global or regional.

Hybrid Connectivity – via Cloud VPN, Cloud Interconnect, and Partner Interconnect.

Private Google Access – so you can access Google APIs securely from private IPs.

Traffic Director – Google’s fully managed service mesh traffic control plane.

“The cloud is your data center. Google Cloud makes your network borderless.”

👩‍💻 Who Should Learn Google Cloud Networking?

Cloud Network Engineers & Architects

DevOps & Site Reliability Engineers

Security Engineers designing secure perimeter models

Enterprises shifting from on-prem to hybrid/multi-cloud

Developers working with serverless, Kubernetes (GKE), and APIs

🧠 What You’ll Learn & Use

In a typical “Networking in Google Cloud” course or project, you’ll master:

Designing and managing VPCs and subnet architectures

Configuring firewall rules, routes, and NAT

Using Cloud Armor for DDoS protection and security policies

Connecting workloads across projects and networks using Shared VPC and VPC Network Peering

Monitoring and logging network traffic with VPC Flow Logs and Packet Mirroring

Securing traffic with TLS, identity-based access, and VPC Service Controls service perimeters
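
Subnet design is a good place to start practicing, because overlapping IP ranges are a common cause of failed subnet creation and peering. The sketch below uses Python's standard `ipaddress` module to find overlapping ranges in a planned layout. The subnet names and CIDR blocks are invented for illustration.

```python
import ipaddress
from itertools import combinations


def overlapping_subnets(subnets: dict) -> list:
    """Return pairs of subnet names whose CIDR ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


planned = {
    "us-central1-app": "10.0.0.0/20",
    "europe-west1-app": "10.0.16.0/20",
    "us-central1-data": "10.0.8.0/24",  # falls inside 10.0.0.0/20
}

conflicts = overlapping_subnets(planned)
print(conflicts)
```

Running a check like this before creating subnets or peering networks catches address conflicts while they are still cheap to fix.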

“A well-architected cloud network is invisible when it works and unforgettable when it doesn’t.”

🔗 Must-Check Google Cloud Networking Resources

👉 Google Cloud Official Networking Docs

👉 Google Cloud VPC Overview

👉 Google Cloud Load Balancing

👉 Understanding Network Service Tiers

👉 NetCom Learning – Google Cloud Courses

👉 Cloud Architecture Framework – Google Cloud Blog

🏢 Real-World Impact

Streaming companies use Google’s premium tier to deliver low-latency video globally

Banks and fintechs depend on secure, hybrid networking to meet compliance

E-commerce giants scale effortlessly during traffic spikes with global load balancers

Healthcare platforms rely on encrypted VPNs and Private Google Access for secure data transfer

“Your cloud is only as strong as your network architecture.”

🚀 Final Thoughts

Mastering Networking in Google Cloud doesn’t just prepare you for certifications like the Professional Cloud Network Engineer—it prepares you for real-world, high-performance, enterprise-grade environments.

With global infrastructure, powerful automation, and deep security controls, Google Cloud empowers you to build cloud-native networks like never before.

“Don’t build in the cloud. Architect with intention.” – Me, after seeing a misconfigured firewall break everything 😅

So, whether you're designing your first VPC or re-architecting an entire global system, remember: in the cloud, networking is everything. And with Google Cloud, it’s better, faster, and more secure.

Let’s build it right.

Text

Azure DevOps Advanced Course: Elevate Your DevOps Expertise

The Azure DevOps Advanced Course is for people who already have a solid understanding of DevOps and want to deepen their skills within the Microsoft Azure ecosystem. It goes beyond the basics and focuses on advanced concepts and practices for implementing and managing complex DevOps workflows with Azure tools.

Key Learning Objectives:

Advanced Pipelines for CI/CD: Learn how to build highly scalable, reliable CI/CD pipelines with Azure tools such as Azure Pipelines, Azure Artifacts, and Azure Key Vault. Learn about advanced branching, release gates, and deployment strategies across different environments.

Infrastructure as Code (IaC): Master the use of infrastructure-as-code tools like Azure Resource Manager (ARM) templates and Terraform to automate the provisioning and management of Azure resources. This includes best practices for versioning, testing and deploying infrastructure configurations.

Containerization: Learn about containerization with Docker and container orchestration with Kubernetes. Learn how to create, deploy, and manage containerized apps on Azure Kubernetes Service (AKS). Explore concepts such as service meshes and ingress controllers.

Security and Compliance: Understand security best practices across the DevOps lifecycle. Learn how to implement security controls, including code scanning, vulnerability assessment, and secret management, at different stages of the pipeline. Learn how to use Azure DevOps to support compliance with frameworks such as ISO 27001 or SOC 2.

Monitoring & Logging: Acquire expertise in monitoring application performance and health. Use Azure Monitor, Application Insights, and other tools to collect, analyze, and visualize telemetry. Set up alerts so you can detect and troubleshoot problems proactively.

Advanced Debugging and Troubleshooting: Develop advanced skills in troubleshooting to diagnose and solve complex issues with Azure DevOps deployments and pipelines. Learn how to debug code and analyze logs to identify and solve problems.
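As a rough illustration of the code-scanning control mentioned under security, here is a minimal Python sketch of a secret scan that a pipeline step could run against source text before a build proceeds. It is not an Azure DevOps feature or API. The `scan_text` helper and the two patterns are assumptions made for this example, and real scanners use far larger rule sets.

```python
import re

# Illustrative rules only (assumptions for this sketch, not a complete set).
PATTERNS = {
    "hardcoded password": re.compile(
        r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE
    ),
    # Storage connection strings carry a base64 key after "AccountKey=".
    "storage account key": re.compile(r"AccountKey=[A-Za-z0-9+/=]{20,}"),
}


def scan_text(text):
    """Return a (line_number, rule_name) pair for every line matching a rule."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings


# Fake sample input; the key below is a placeholder, not a real credential.
sample = (
    'db_password = "hunter2"\n'
    "timeout = 30\n"
    'conn = "AccountKey=' + "A" * 24 + '"'
)
findings = scan_text(sample)
for number, rule in findings:
    print(f"line {number}: possible {rule}")
```

A pipeline step could fail the build whenever `findings` is non-empty, which keeps secrets out of the repository and pushes them toward a managed store such as Azure Key Vault.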

Who should attend:

DevOps Engineers

System Administrators

Software Developers

Cloud Architects

IT Professionals who want to improve their DevOps skills on the Azure platform

Benefits of taking the course:

Learn advanced DevOps concepts, best practices and more.

Learn how to implement and manage complex DevOps Pipelines.

Learn how to use Azure tools to automate your infrastructure and application deployments.

Learn how to integrate security, compliance and monitoring into the DevOps Lifecycle.

Get a competitive advantage in the job market by acquiring advanced Azure DevOps knowledge.

The Azure DevOps Advanced Course is a comprehensive, practical learning experience that will equip you with the knowledge and skills to excel in today’s dynamic cloud computing environment.
