Enormous amounts of data are available today, and to handle this data effectively, data analysts use a range of advanced data analysis techniques to search, analyze, and extract the specific data they need. But how is such a huge volume of data handled, and how is raw data organized into meaningful, structured information? That is where data analysis comes into the picture. Because data is often complex, analysts can find it challenging to structure, and even with well-established data science algorithms they still run into difficulties.

An effective way to handle complex data is to know the most useful data analysis techniques, such as identifying inconsistencies, bivariate data analysis, and many more. This blog lists techniques that handle data effectively and produce clear output that supports better decisions.

Data Cleaning and Processing Technique:

Several steps are involved in making sure data is accurate and suitable for advanced analytics and decision-making. Data cleaning is the process of identifying and correcting inconsistencies, outliers, and missing values in the data. It also involves standardizing data formats and units. Some of the data cleaning and processing tasks are listed below.

Data Cleaning Techniques:

Missing values: Identifying missing data points and deciding how to handle them, for example by removing the affected records or filling in (imputing) an estimated value.

Outlier detection: An outlier is an observation that differs markedly from the other values. Outlier detection is the process of finding outliers that may distort the analysis.

Duplicate records: Finding and removing duplicate entries that may skew the results.

Standardizing formats and units: Applying consistent data formats and unit conversions so that comparisons are meaningful.
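The four cleaning tasks above can be sketched in plain Python. This is a minimal, illustrative pipeline rather than a prescribed method: the record layout, the `height` field, the unit table `TO_CM`, median imputation, and the 1.5 × IQR outlier rule are all assumptions chosen for the example.

```python
from statistics import median, quantiles

# Assumed unit table: every height is converted to centimetres.
TO_CM = {"cm": 1.0, "m": 100.0, "ft": 30.48}


def standardize_height(text):
    """Convert strings such as '180 cm' or '6 ft' to a float in cm; keep None as missing."""
    if text is None:
        return None
    number, unit = text.split()
    return float(number) * TO_CM[unit.lower()]


def clean(records):
    # 1. Duplicate records: keep only the first copy of each identical record.
    seen, unique = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))

    # 2. Formats and units: put every height on the same scale (cm).
    for rec in unique:
        rec["height"] = standardize_height(rec["height"])

    # 3. Missing values: fill gaps with the median of the observed heights.
    fill = median(r["height"] for r in unique if r["height"] is not None)
    for rec in unique:
        if rec["height"] is None:
            rec["height"] = fill

    # 4. Outlier detection: flag values outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
    q1, _, q3 = quantiles([r["height"] for r in unique], n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    for rec in unique:
        rec["outlier"] = not (low <= rec["height"] <= high)
    return unique


# Hypothetical raw data with a duplicate, a missing value, mixed units, and a likely typo.
raw = [
    {"id": 1, "height": "180 cm"},
    {"id": 2, "height": "6 ft"},
    {"id": 2, "height": "6 ft"},
    {"id": 3, "height": None},
    {"id": 4, "height": "1.7 m"},
    {"id": 5, "height": "175 cm"},
    {"id": 6, "height": "165 cm"},
    {"id": 7, "height": "900 cm"},
]

cleaned = clean(raw)
for rec in cleaned:
    print(rec)
```

The median is used for imputation because, unlike the mean, it is barely affected by the suspicious 900 cm entry; the outlier is flagged rather than deleted so that an analyst can decide what to do with it.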

Data Processing Techniques:

Data processing focuses on transforming raw, unstructured data into a format suitable for analysis. Some common data processing techniques are listed below.

Filtering: Selecting specific subsets of data based on predefined criteria, such as categorical or numerical values.

Sorting: Arranging data in ascending or descending order based on one or more variables.

Aggregating: Combining multiple data points into a single value using functions such as sum, average, or other statistical measures.

Summarizing: Creating summary tables and descriptive statistics that give an overview of the data.
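A minimal sketch of filtering, sorting, aggregating, and summarizing using only the Python standard library. The `sales` records and their fields are hypothetical; in a data-frame library such as pandas the same steps correspond to boolean filtering, sorting, and group-by operations.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales records used only for illustration.
sales = [
    {"region": "North", "product": "A", "amount": 120},
    {"region": "South", "product": "B", "amount": 80},
    {"region": "North", "product": "B", "amount": 200},
    {"region": "East", "product": "A", "amount": 50},
    {"region": "South", "product": "A", "amount": 150},
]

# Filtering: keep rows that meet a categorical and a numerical criterion.
large_a = [r for r in sales if r["product"] == "A" and r["amount"] >= 100]

# Sorting: order by region (ascending), then by amount (descending).
ordered = sorted(sales, key=lambda r: (r["region"], -r["amount"]))

# Aggregating: collapse many rows into one total per region.
totals = defaultdict(int)
for r in sales:
    totals[r["region"]] += r["amount"]

# Summarizing: a small table of descriptive statistics per region.
by_region = defaultdict(list)
for r in sales:
    by_region[r["region"]].append(r["amount"])
summary = {
    region: {"count": len(v), "total": sum(v), "mean": mean(v)}
    for region, v in sorted(by_region.items())
}
for region, stats in summary.items():
    print(f"{region:<6} n={stats['count']} total={stats['total']} mean={stats['mean']:.1f}")
```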

Exploratory Data Analysis (EDA) Technique:

Exploratory Data Analysis (EDA) is the process of analyzing and summarizing the main patterns in a dataset. Analysts use graphical and numerical techniques to gain insights and understand the structure of the data.

Other techniques, such as frequency counts, variance, Pareto analysis, visualization, and data cleaning, are also used in EDA.

Data visualization: Presenting the data as histograms, scatter plots, and box plots makes patterns easier to identify.

Summary statistics: Calculating measures such as the mean, median, mode, and standard deviation to summarize the data.
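The summary statistics and frequency counts mentioned above can be computed with Python's built-in `statistics` module. The delivery-time sample is hypothetical, and the text histogram is only a crude stand-in for a plotted histogram.

```python
from collections import Counter
from statistics import mean, median, mode, stdev

# Hypothetical sample: delivery times in days for ten orders.
delivery_days = [2, 3, 3, 4, 5, 3, 7, 2, 4, 3]

summary = {
    "mean": mean(delivery_days),
    "median": median(delivery_days),
    "mode": mode(delivery_days),
    "stdev": stdev(delivery_days),  # sample standard deviation (divides by n - 1)
}
for name, value in summary.items():
    print(f"{name:>6}: {value:.2f}")

# Frequency count rendered as a text histogram.
frequencies = Counter(delivery_days)
for days in sorted(frequencies):
    print(f"{days} days | {'#' * frequencies[days]}")
```

Here the mean (3.6) sits above the median (3), a hint of right skew that the histogram makes visible through the single 7-day order.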

Statistical Analysis Technique:

Statistical analysis is used to draw conclusions from data. Some of the techniques used in statistical analysis are listed below.

Hypothesis testing: This technique helps data scientists determine whether observed differences between groups are statistically significant or could plausibly have arisen by chance.

Correlation analysis: Correlation analysis measures the strength and direction of the relationship between two variables.

Regression analysis: This technique models the relationship between a dependent variable and one or more independent variables, so that the dependent variable can be predicted.

Machine Learning Technique:

Machine learning is a branch of artificial intelligence in which algorithms learn patterns from data and make decisions without being explicitly programmed for each task. In applied data analysis, ML is commonly used for classification and regression. Commonly used machine learning techniques include Random Forests, Support Vector Machines, K-Nearest Neighbors, and Neural Networks.

Random Forest: An ensemble of decision trees, each trained on a different random sample of the data. Random Forest combines the predictions of all the trees (for example, by majority vote for classification or by averaging for regression) to make the final prediction.

Support Vector Machines: Support vector machines are used for both classification and regression, predicting the class or value of a target variable. For classification, an SVM finds the boundary that separates the classes with the widest possible margin.

K-Nearest Neighbors: K-nearest neighbors (KNN) is a popular classification and regression algorithm in data science. KNN identifies a data point’s “nearest neighbors” based on its distance from other data points in the feature space, then predicts the majority class (or the average value) of those k neighbors.
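Of the algorithms above, KNN is simple enough to sketch in a few lines; Random Forests, SVMs, and neural networks are usually taken from a library such as scikit-learn rather than written by hand. This is a minimal classifier assuming Euclidean distance and a majority vote; `knn_predict` and the training points are hypothetical.

```python
from collections import Counter
from math import dist


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    neighbors = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D training data with two classes.
train = [
    ((1.0, 1.0), "low"), ((1.5, 2.0), "low"), ((2.0, 1.0), "low"),
    ((6.0, 6.0), "high"), ((6.5, 7.0), "high"), ((7.0, 6.0), "high"),
]

print(knn_predict(train, (1.2, 1.4)))
print(knn_predict(train, (6.2, 6.5)))
```

Because KNN relies on raw distances, features measured on very different scales should normally be standardized first; an odd k avoids tied votes between two classes.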

Clustering and Dimensionality Reduction:

Clustering groups similar data points based on what they have in common, which helps reveal similarities and trends in the data. In data analysis, businesses often use clustering to segment customers, detect unusual items, and analyze images. Dimensionality reduction, on the other hand, reduces the number of features or variables in the data while preserving its most important information. Popular dimensionality reduction methods include Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE).
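The paragraph does not name a clustering algorithm, so the sketch below assumes k-means (Lloyd's algorithm), one common choice. For simplicity it uses the first k points as initial centroids, whereas library implementations typically use smarter seeding such as k-means++. The customer points are hypothetical, and dimensionality reduction is not shown.

```python
from math import dist
from statistics import fmean


def k_means(points, k, iterations=100):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    centroids = [tuple(p) for p in points[:k]]  # simplistic initialization
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(fmean(coord) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: no centroid moved
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers described by (visits per month, average spend).
points = [(1, 2), (9, 9), (1.5, 1.8), (8.5, 9.5), (2, 2.2), (9.2, 8.8)]
centroids, clusters = k_means(points, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```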

Natural Language Processing (NLP):

Natural Language Processing (NLP) focuses on the interaction between computers and human language. The discipline covers processing, understanding, and generating human language. NLP is useful in areas such as sentiment analysis, chatbots, text classification, and language translation. With the growing availability of textual data, NLP has become a crucial tool for gaining insights from unstructured data.
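Sentiment analysis, one of the NLP applications listed above, can be illustrated with a tiny lexicon-based scorer. The word lists and reviews are hypothetical and deliberately small; this approach ignores negation ("not good") and context, which is why practical systems rely on curated lexicons or trained models.

```python
import re

# Hypothetical, tiny sentiment lexicon used only for illustration.
POSITIVE = {"good", "great", "excellent", "love", "helpful", "fast"}
NEGATIVE = {"bad", "poor", "slow", "hate", "broken", "rude"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label text 'positive', 'negative' or 'neutral' from lexicon word counts."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great product, fast delivery. I love it!",
    "The app is slow and the support was rude.",
    "It arrived on Tuesday.",
]
for review in reviews:
    print(sentiment(review), "-", review)
```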

Spatial Analysis Technique:

Spatial analysis is another important technique that lets data analysts gain insights from geospatial data and analyze patterns and trends in a spatial context. It is especially useful in fields such as urban planning, environmental science, and transportation.
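A basic building block of spatial analysis is measuring distances between geographic coordinates, for example to find which facilities lie within a given radius of a location. The sketch uses the haversine formula, which treats the Earth as a sphere (mean radius 6371 km); the stop names and coordinates are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; haversine assumes a spherical Earth


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in decimal degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    d_phi = radians(lat2 - lat1)
    d_lambda = radians(lon2 - lon1)
    a = sin(d_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def within_radius(center, places, radius_km):
    """Return the names of places whose distance from `center` is at most `radius_km`."""
    lat0, lon0 = center
    return [
        name
        for name, (lat, lon) in places.items()
        if haversine_km(lat0, lon0, lat, lon) <= radius_km
    ]


# Hypothetical transport stops (name -> latitude, longitude).
stops = {
    "S1": (18.5210, 73.8570),
    "S2": (18.5300, 73.8600),
    "S3": (18.6000, 73.9000),
}
print(within_radius((18.5204, 73.8567), stops, radius_km=2.0))
```

For large datasets, GIS tools add spatial indexes so that a radius query does not have to compute the distance to every point.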

In Conclusion:

Data analysis is at the core of data science, and it uses a wide range of methods to extract useful information from raw data. Turning data into actionable insights requires completing each step, from cleaning and preparing data to machine learning and natural language processing (NLP). As technology improves, so will the methods for analyzing data.

Applied data analysis involves the practical application of various data analysis techniques to specific real-world scenarios. Data analysts utilize statistical methods, machine learning algorithms, and natural language processing (NLP) to draw meaningful conclusions and make data-driven decisions.

Better methods will let data scientists find deeper and more useful insights in ever-growing amounts of data. In this data-driven world, businesses and organizations need to know how to use data analysis techniques to stay competitive and make good decisions.

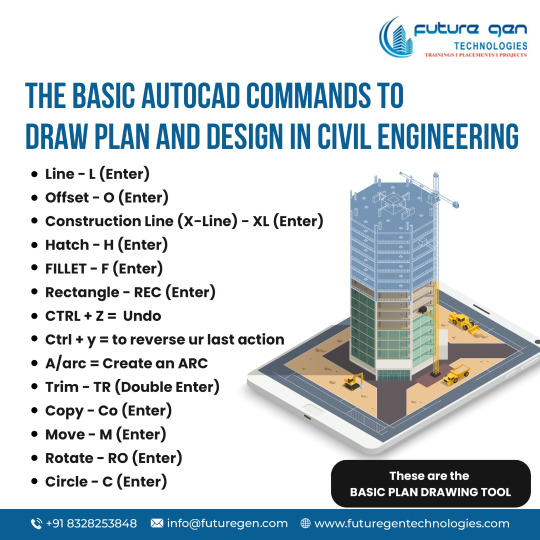

Future Gen provides real-time training in 100% job-oriented courses built around real-time projects. Students trained at Future Gen gain knowledge as good as that of experienced professionals, and Future Gen's training and course syllabus prepare students to succeed in international interviews.

Are you a student or a beginner interested in exploring Salesforce? If so, you have probably come across terms such as Salesforce management, Service Cloud, and Salesforce CRM during your learning journey. But what exactly is Salesforce, and how can you use it to build an excellent custom app? Don’t worry, we’ve got you covered!

Salesforce is not an overly technical term in the IT world; most beginners and learners have heard of it. It is a revolutionary customer relationship management (CRM) system that helps businesses operate and engage with their customers effectively. With Salesforce, organizations can efficiently manage sales pipelines, marketing campaigns, and customer support.

To learn more about Salesforce, read our previous blog, Salesforce Opportunities: A Comprehensive Guide for Beginners.

This blog walks you through the basics of Salesforce and gives a step-by-step guide to creating your very own custom app. Without further delay, let’s start.

How Salesforce Benefits Beginners:

Salesforce offers students and beginners several advantages that help them showcase their skills in the job market.

User-Friendly Interface:

Salesforce provides an intuitive, user-friendly interface, making it easier for beginners to navigate and work with.

Cloud-based platform:

Because Salesforce is cloud-based, there is little to install or maintain, so you can focus on app development.

Vast community support:

There are thousands of Salesforce experts in the industry. Joining the large community of Salesforce developers and experts helps beginners resolve their doubts and challenges.

Customization features:

Salesforce lets you build custom applications tailored to specific business needs, so you can create unique solutions.

Better career opportunities:

Learning Salesforce opens many opportunities in the world of CRM and cloud computing.

Service Cloud in Salesforce:

Salesforce Service Cloud is one of the company’s most important offerings for businesses. It is designed to help businesses provide excellent customer service and support. With Service Cloud, beginners and experts alike can manage customer inquiries, track cases, and provide clear solutions.
