Degree Show Participation

I was part of the web team. We worked from the very beginning to the very end developing and deploying the exhibition site. I was personally in charge of collecting personal and artwork information from all DAC students across years 1-3. In order to facilitate this task I liaised with the Logistics team, creating an online form using Airtable.

The main architecture of the website was provided to us by Julian Burgess and Nathan Adams (MA students responsible for developing Chimera Garden). Although this expedited the process to an extent, we still embarked on major work. We redesigned and improved the whole site (as noted when comparing our site and theirs), changing colours, fonts, placements, streams, and filtering systems, while also adding highlighters, animations, interactive text, and slideshow capabilities, among other features.

Implementing the collected information was not a straightforward process. It required chasing people, separating all the data into two .tsv spreadsheets (one for artworks, one for artists) with 27 combined fields, writing individual .md files for artwork descriptions, and formatting profile and artwork photos to the standard. I carried out this process weekly until the last week, when I started updating the site on a daily basis.
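As a rough illustration of the kind of consistency check this involved (not the actual site code; the column names `artist_id` and `slug` and the sample rows are hypothetical), a minimal Python sketch could cross-reference the two spreadsheets like this:

```python
import csv
import io

# Hypothetical sample data standing in for the two .tsv files.
ARTISTS_TSV = "artist_id\tname\na01\tExample Artist\n"
ARTWORKS_TSV = (
    "slug\ttitle\tartist_id\n"
    "work-one\tWork One\ta01\n"
    "work-two\tWork Two\ta99\n"
)


def read_tsv(text):
    """Parse tab-separated text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text), delimiter="\t"))


def find_orphan_artworks(artworks, artists):
    """Return slugs of artworks whose artist_id has no row in the artists sheet."""
    known = {row["artist_id"] for row in artists}
    return [row["slug"] for row in artworks if row["artist_id"] not in known]


artists = read_tsv(ARTISTS_TSV)
artworks = read_tsv(ARTWORKS_TSV)
orphans = find_orphan_artworks(artworks, artists)
print(orphans)
```

With real files, `read_tsv` would take the file contents instead of the inline strings, and the orphan list would show which artwork rows still needed chasing.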

In the end, all our efforts paid off. The site not only works and exhibits our works, but it does that in a slick and effective way.

Time Is Inherently Cruel

The piece is finished. It was done in time for the exhibition. Everything went according to plan. The work can be seen here: https://www.thomasnoya.com

Update

Everything is still going to plan. I started training my final GAN models last Thursday, April 8th. I ran into some trouble along the way. My first model collapsed after 24h of training. After doing some research and restarting training from a snapshot taken before things went awry, but with different augmentation parameters, I think I discovered what went wrong the first time. I believe the default colour augmentation in the styleGAN2-ada code was the cause of the problems. However, because I was taking snapshots every tick, I was able to capture the collapse in an interesting way. Some of these “broken” models do generate interesting results. Even so, I trained a new model from the beginning, this time using transfer learning from FFHQ (to speed up the process a bit).

In the end I did 5 training runs. I’m very happy with the results: some of my GAN clouds are really smooth and delicate. They might just be the best GAN clouds I’ve seen (in all honesty). I think I managed to achieve my goal of getting non-GAN-looking results. These feel very analog and real.

I then proceeded to generate seeds from a selection of these pkl files. I decided to generate 6000 seeds per pkl file. In total I generated 72000 seeds. I went through all of them, selected the ones I liked the most for each model, and proceeded to generate interpolation videos. I spent 2 days tweaking and perfecting the interpolations, playing with different truncation values, numbers of seeds, timing, and latent spaces (line-z vs line-w).
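To illustrate what these parameters control, here is a minimal NumPy sketch of a straight-line latent walk. It is an illustration under assumptions, not the stylegan2-ada code: the 512-dimensional latent size, the helper names, the seed values, and the frame count are all hypothetical, and the real generator and mapping network are left out. In a line-z walk the interpolation runs between the z vectors drawn from each seed; in a line-w walk the same interpolation would run between the w vectors obtained after passing each z through the mapping network. Truncation pulls a w vector toward the average w by a factor psi.

```python
import numpy as np

Z_DIM = 512  # assumed latent size; check the actual model before relying on it


def seed_to_z(seed, dim=Z_DIM):
    """Draw a reproducible latent vector z from an integer seed."""
    return np.random.RandomState(seed).randn(dim)


def line_interpolate(points, steps_per_segment):
    """Linearly interpolate through a list of latent vectors, one frame per step."""
    frames = []
    for a, b in zip(points[:-1], points[1:]):
        for t in np.linspace(0.0, 1.0, steps_per_segment, endpoint=False):
            frames.append((1.0 - t) * a + t * b)
    frames.append(points[-1])  # finish exactly on the last key point
    return np.stack(frames)


def truncate(w, w_avg, psi):
    """Truncation trick: move w toward the average latent w_avg.

    psi = 1.0 leaves w unchanged; psi = 0.0 collapses it onto w_avg.
    """
    return w_avg + psi * (w - w_avg)


# Hypothetical seeds picked by hand; each would map to one key image.
seeds = [3, 41, 77]
zs = [seed_to_z(s) for s in seeds]

# A line-z walk: 60 frames per segment between consecutive seeds.
frames = line_interpolate(zs, steps_per_segment=60)
print(frames.shape)
```

Each row of `frames` would then be fed to the generator to render one video frame. More seeds or more steps per segment lengthen the video, and a lower psi trades variety for smoother, more average-looking skies.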

I am now in the process of editing my final videos. I have decided to present 5 videos of 2 minutes each instead of one long 10-minute video. After this is done, I still need to finish my behind-the-scenes material, which will be on a dedicated website, and my documentation.

collapsing

it’s broken, but it’s pretty

back at it

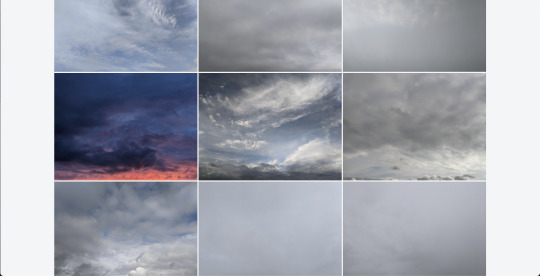

some of the final seeds

DATASET 100%

Done! I made it! 6 months, 186 days, 1888 photos. I managed to take 1 photo of the sky every hour (daylight), every day, for six months. My dataset is now complete. Now I have to crop all the photos and start training my final styleGAN2 models. It was a tough ride, not gonna lie. Massive respect to Tehching Hsieh.

This was the last photo (taken on the Williamsburg bridge on 07/04/21 at 19:04)

All photos are on Flickr and Google Photos. The Flickr album is split across 2 accounts (there’s a limit on free accounts).

Flickr (I/II)

https://www.flickr.com/photos/190500161@N05/

Flickr (II/II)

https://www.flickr.com/photos/191760977@N05/with/50837769478/

Google Photos (all photos together)

https://photos.app.goo.gl/epXQWYBUmTGdq4Ea9

March update

I’ve been thinking a lot about two aspects of my project. First, the more time has passed, the more personal this work has become. The initial idea was to artistically contain time through the use of Machine Learning, by crafting a dataset through a durational process consisting of taking multiple photos of the sky every day for 6 months, and using a styleGAN2 model to generate digital copies of the physical world. A translation, if you will. However, an overlap with my Critical Studies dissertation, where I dealt with nostalgia within a Venezuelan context (I’m from Venezuela, I lived there until August 2015), started sparking feelings and thoughts I had had before, but hadn’t used in any art projects (that I can recall).

These ideas started taking shape: the inherent cruelty of time – time is inherently cruel. Moments pass and disappear never to be seen again. The sky turns out to be a perfect example of this. Always similar, for millions of years, yet never the same. In my case, blue skies symbolise an easier time. My childhood. Life was easier –or at least it seemed–, my worries were simpler. Things were warm and fun.

Slowly, as I ventured into my pre-teen years, the socio-cultural environment where I resided started to change for the worse. This had immediate and long-lasting consequences for me, my family, and millions of others. Growing up surrounded by ultra-violence, injustice, cultural decay, and many other horrors takes a toll on you. It changes you forever. Even if one is able to get out without physical scars, psychological scars are there to stay. Not only does it alter your behaviour –to this day I cannot walk without being aware of who and what is around me, motorcycle sounds and sudden loud noises scare me to death, and any interaction with police or immigration officers makes me feel like I’m about to die– but it creates a perfect storm for an epidemic of nostalgia.

It was –and it is– common to hear comments in favour of the military dictatorship of Pérez Jiménez in the 50s: as bad as that one was, at least there was economic growth and rule of law, or so people said. Other eras came to people’s minds as well, but for me it wasn’t about a previous political era, nor a previous period of social stability. For me it was about my childhood years. Years riddled with adventures, fun, and freedom. This marked me. I have grown up to be a very nostalgic and melancholic person. Having been forced to leave my homeland has created a sort of dissociative state of mind. One makes an effort to bury this, but it permeates one way or another. These are some of my thoughts, my meditations:

Living two lives, one in the past, one in the present. What if? I have murdered my past selves, my past lives; now I have to live with the consequences. I have done everything, yet I haven’t done enough. I judge others. How can they complain? Do they even know? They don’t know shit. Who am I to judge? Who am I to know? I know. Forever an outsider. To inhabit between places. Borrowed words. If you look back you lose, but if you don’t, you forget. We drove around, not knowing it was gonna be the last time. Seeing those fields. Crossing that river. The crux. I have seen moments that are now lost. Webs of innocent decisions with decade-lasting consequences. What if I had turned right. What if I had said yes. What if I had kicked that ball. What if I hadn’t taken that bus. Never-ending adaptation and acculturation. The thing about immigration is that you can never really go back to exactly where you came from because that place no longer exists once enough time has passed. The person you were back in that first home also doesn’t really exist anymore. Your struggles and your triumphs, your experiences and your age have also changed who you are. That ‘you’, who once lived in that place that no longer exists, also no longer exists. How can I detach?

I’ve come to the conclusion that I need to adapt my work slightly for the web. Originally, this was supposed to be an immersive installation with 4 large projections and surround sound. This would’ve facilitated the experience of witnessing subtle changes in my GAN skies. Given that the exhibition will be online, I think I need another way to hook audiences, as I believe seeing a small video of GAN skies will inevitably become boring after some seconds (if I’m lucky, minutes). This is where the previous written thoughts come into play. I’m gonna add on-screen text to my final video. Red subtitles. Sentences, passages, words. I will use them to craft a narrative. It’ll be like a stream of consciousness. I believe these thoughts and experiences are universal among migrants and refugees, among people who were forced to flee. I think it works well with the main concept of using Machine Learning to try to contain time, moments. To recreate them. To give them new infinite life inside the digital world, where things can persist till eternity –or till the servers are disconnected.

Influences: Mohsin Hamid (Exit West), Vahni Capildeo (Measures of Expatriation), Svetlana Boym (The Future of Nostalgia), Arcade Fire (The Suburbs), Terrence Malick (The Tree of Life, Knight of Cups, A Hidden Life, Badlands, Days of Heaven).

The second thing I've been thinking about is the actual structure of my GAN skies. So far my options are:

1) create a whole day, from sunrise to sundown and spread it among x minutes.

2) create several days spread among x minutes, which will create more of a time-lapse vibe, unless I go for something extremely long like 2h (which I don’t want to, I wanna stay around the 12min mark).

3) don’t have a logical structure and have random seeds populate x minutes.

This is where I’m at right now, at least in terms of content and concept. Technically speaking, I feel alright. I have done tests in TensorFlow through Paperspace and it’s just a matter of waiting till I have my final dataset in the first week of April. I still plan to use Max to create my soundtrack. I’ll probably mix the final track in an app that allows binaural mixing, as I think this is a good replacement for my initial 5.1 surround sound idea.

Along these lines:

v1 (YouTube video)

v2 (YouTube video)

12 photos

Now that the days are finally longer, I’m increasing the number of photos I take each day from 10 to 12 (for the remaining days).

February Update

My dataset is going well. By the end of the day I will have 1298 photos.

Prototyping styleGAN2 models using Paperspace is also going well. In fact, I’ve been thinking for the past few weeks how to expand my project, as I’m feeling very confident with time and technicalities. I think I’m gonna use Max to create the soundtrack for the piece, using some sort of generative ambient patch using field recordings (e.g. birds, frogs) from the place I grew up in. This way I can add a personal element to the project and tether the nostalgic element of capturing time via a durational process with my own childhood memories. This patch could also be modified in real time by the video.

I’m not feeling very fond of the idea of doing video processing to create a 3D effect anymore. I guess we’ll see.

I’ve also been doing audio-reactive GAN tests using Hans Brouwer’s Colab notebook. One thing I find interesting about his code is that he uses Network Bending to export videos in 1920x1080. I might borrow this idea if I decide to have my final video in 16:9 instead of 1:1. Although I could do this by cropping in editing, it could be interesting to see how the Network Bending technique would look on GAN skies, where alterations might be more subtle than in FFHQ faces.

Audio Reactive GAN (two YouTube videos)

Linear Interpolations (two YouTube videos)

Audio Reactive GAN Test

Testing the Audio Reactive GAN Colab notebook by Hans Brouwer. I used the styleGAN2 model I trained a few months ago. It’s not the best, but it works as a proof of concept.

(YouTube video)

24th Jan: Work in Progress update

My final project will be an ambient-GAN-living-painting that will be presented as either an installation or an online film.

Since the proposal: I have kept taking photos for my dataset (I’m at 1098). I have successfully trained several styleGAN2 models outside of RunwayML (on Paperspace). I have a clearer technical idea of what I have to do and I feel very confident that I will achieve all my goals.

The piece will probably be exhibited as an online film hosted on the exhibition site (due to covid-19).

The next steps are: Keep building the dataset. Make an updated prototype. Consider using Max to make the soundtrack. Reflect on the piece (what am I doing and why? what does it mean? why am I using ML and not other techniques?). Should I train a second model with a different dataset? If so, which and how?

New Flickr account

I had to create a second Flickr account, as free accounts only allow 1000 photos. I got to 998 yesterday. I decided to create a second account for the rest of my photos rather than paying a monthly subscription for Flickr Pro.

Here’s the new album (II/II):

https://www.flickr.com/photos/191760977@N05/with/50837769478/

Old album (I/II):

https://www.flickr.com/photos/190500161@N05/

First styleGAN2 tests outside of RunwayML

I finally produced images and latent space walk videos with my own styleGAN2 models outside of RunwayML. I used TensorFlow on a Paperspace virtual machine.

I used the stylegan2-ada fork by Derrick Schultz. I trained three models over two days. Two models were trained from scratch, something not possible on RunwayML. I was really curious to see what the first steps looked like when transfer learning is not used. One model was trained using transfer learning from FFHQ.

I used a dataset comprising all the runway photos of Iris van Herpen, Richard Malone, and Richard Quinn. All these photos were found on vogue.com and were scraped by hand.

Reals

Fakes: Trained from scratch

Until eventually I reached this level. This model was trained for 50 ticks.

Fakes: Trained with transfer learning from FFHQ

Until eventually I reached this level. This model was trained for 115 ticks.

Now I have to test generating images and videos using the skies dataset I have been building.

MoMA 1h

Short visit to MoMA on Dec 24th. They closed early and I forgot to check the schedule. I only had time for a quick 1h visit, but I still found some cool visual references.

I really liked this idea of mostly just using “untitled”

I really liked this way of presenting projections

NeurIPS 2020

I’m attending a series of really cool workshops today.

https://neurips2020creativity.github.io

46 Days in – 137 to go: Update

I took the first photo for this project on Wednesday October 7th at 10:00. I have been taking a photo of the sky every hour for 46 days. That is 451 photos as of now (November 21st 10:00).

In that time I have adjusted the start and end times of my photo schedule, as I started taking the first photo at 08:00 and my last one at 17:00, but when the days started getting shorter I changed to 07:00 and 16:00.
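The count of 451 can be reproduced with some quick arithmetic. The sketch below assumes one photo per hour inside each day's window, a first day that only started at 10:00, a current day that has so far covered 08:00 to 10:00 (the missed 07:00 photo is described further down), and no other missed photos; the helper name is hypothetical.

```python
from datetime import date


def photos_in_window(first_hour, last_hour):
    """Number of hourly photos from first_hour to last_hour, both inclusive."""
    return last_hour - first_hour + 1


first_day = date(2020, 10, 7)
today = date(2020, 11, 21)

# Count both the first day and today, hence the +1.
days_elapsed = (today - first_day).days + 1

# Both schedules (08:00-17:00 and 07:00-16:00) give the same daily count.
per_full_day = photos_in_window(8, 17)
assert per_full_day == photos_in_window(7, 16)

full_days = days_elapsed - 2                  # every day except the first and today
first_day_photos = photos_in_window(10, 17)   # started at 10:00 on day one
today_photos = photos_in_window(8, 10)        # 07:00 missed, now 10:00

total = full_days * per_full_day + first_day_photos + today_photos
print(days_elapsed, total)
```

Under those assumptions the two numbers printed match the 46 days and 451 photos quoted above.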

Doing this has had an impact on my life. During the first few days I had a bit of trouble remembering to take the photos, so I started using an alarm that I would update every hour to keep me involved. This worked, but then I changed to having 10 alarms that repeat every day, so I don’t have to waste time setting alarms every hour (and so I don't forget).

When I started waking up at around 06:30 to take my 07:00 photo and then start my work and uni day, I noticed an increased feeling of productivity at the end of my days. It made me do more and feel more accomplished. However, not all is good. Sometimes it’s made me feel really stressed, especially on weekends. Will I wake up on time to take my 07:00? What if I decide to keep sleeping one more hour between photo no. 1 and photo no. 2, will I wake up again? Today I didn’t hear any of my pre-seven alarms and missed my 07:00 photo, which made me feel like shit as soon as I woke up at 08:00. I’m still upset about this 2 hours later. I will compensate for this missed photo by taking one at 17:00, but it’ll be dark and I won’t be happy. I hope this doesn’t happen again.

It’s also been incredible to actually see every day and every hour how much the sky changes, how different every minute and every hour are. It is a canvas in constant flux. That’s made me cement my early view of this project as an evolution of still life painting –a living painting.

I should clarify that even though my alarms are on the hour and I do try my best to take the photos at that precise moment, sometimes I have to take them a few minutes later: maybe I have a work call, I’m doing homework, I’m in class, I’m out, etc.

Anyway, it sounds like an easy thing to do, but when you add up the number of days I’ve done and still have to do, it’s not so easy anymore. I am committed though, and I will keep building my dataset like this. I hope I stop making mistakes, but all I can do when they happen is learn from them.

I’m flying on December 14th and I’m already stressing about being sure I have a window seat so I can take my photos during the flight.

One more thing: a couple of weeks ago I did a test with RunwayML and my short dataset at the time (250 photos) and I realised I had too many greys and blues, but no reds or yellows. Ever since that day I have been trying extra hard to get sunrises and sunsets whenever they are visible.

Pipeline

Here’s the current pipeline I have designed:

1: Dataset.

2: StyleGAN2 processing demos.

3: StyleGAN2 processing final.

4: 3D Displacement shaders.

5: Exhibition Option A.

6: Exhibition Option B.

and here’s a model zenithal view blueprint for Option B.

Prototype 1

Just finished a small quick test. This interpolation video was generated from a model I trained on RunwayML for 3 hours (2500 steps). The dataset only had 250 images, so not great, but this is only like 14% of the images I’ll have by April. Overall, it’s just a small reference.

(YouTube video)
