The final project of my Creative Computing degree at Goldsmiths University, by William Fish


User testing session 2

I ran this session a short while ago but have been busy with the write-up, so I’m only posting about it now.

I rolled the last two sessions into one, mostly due to timing issues. I also found it hard to gather people for the test, so I relied on my housemates being home. I cut a lot of the games short as errors came up. Normally these could have been ignored, but I wanted to check each issue straight away and start thinking about a fix.

The video below ends with a successful game, with some fast-forwarded mechanic testing and debugging at the beginning and in the middle.

youtube


System diagrams

Network diagram

Game logic program flow

Robot circuit diagram


Rotation alterations

The robots now have extra code that checks for another condition to stop the robot rotating. This, in tandem with the previous attempts to stop over-rotation, has pushed the success rate a lot higher. The middle sensors have also been moved, as seen in the picture below.

The more forward-orientated sensors are far better for line following.


QR codes for Robot websites

The two websites are now functional and awaiting players, who can reach them using either the links or the QR codes below.

Robot A

https://www.doc.gold.ac.uk/~wfish001/recogbb/robot_a.html

Robot B

https://www.doc.gold.ac.uk/~wfish001/recogbb/robot_b.html


Speech recognition

I’ve got a basic system in place to turn speech recognition into commands for the robots. I’ve made the UI nice and simple, and can add more instructions post user testing.

The video shows how it works. I’m now just waiting for lost robot parts so I can build robot 2.

youtube
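As a rough illustration of turning recognised speech into robot commands, here is a minimal Python sketch. It is not the project's actual code: the command vocabulary, the number words and the `parse_transcript` name are all assumptions, and the real system may do this step in the browser rather than in Python.

```python
import re

# Hypothetical vocabulary: the post does not list the real voice commands.
COMMANDS = {"forward", "backward", "left", "right", "fire"}
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


def parse_transcript(transcript):
    """Turn a recognised phrase into a list of (command, repeat_count) pairs.

    Unknown words are ignored. A number directly following a command
    (as a digit or a number word) sets how many times it repeats.
    """
    words = re.findall(r"[a-z]+|\d+", transcript.lower())
    parsed = []
    for word in words:
        if word in COMMANDS:
            parsed.append((word, 1))
        elif parsed and (word.isdigit() or word in NUMBER_WORDS):
            count = int(word) if word.isdigit() else NUMBER_WORDS[word]
            command, _ = parsed[-1]
            parsed[-1] = (command, count)
    return parsed
```

Ignoring unknown words keeps the parser tolerant of filler such as "then" or "go", which speech recognition tends to pick up.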


Implementing the input

After re-evaluating my project and reviewing my earlier goals, I’ve decided to implement voice control for the robots first, rather than machine learning, for the time being. While I’d always intended there to be multiple forms of input, user testing session 1 highlighted the need for 2 webcams, and the cost of these fell foul of one of my core design principles: to be cost-effective. Speech recognition still fits my goals: to challenge the standard modes of input and to utilise the power of modern recognition software, be it visual or auditory.

The voice control will run on the players’ phones, which also removes the need for me to purchase whiteboards. I’m going to send the voice data to a Firebase database, which the Python script will pull from and send on to the robots.

I may add machine learning features in the future if I can find a cost-effective way to implement them.


Websockets update

A small code review showing how the Pi Zero robots will receive instructions from the server. It’s simple, and I’m unsure how scalable it is, but it works for this project.

youtube


Working systems

youtube

I made a few changes to the alignment of the sensors and adjusted the rotation code. The rotation can now stop on a second condition, which means the robot may end up heavily misaligned. However, the line adjustment system performs very well and can account for a large variance in rotation from its first move.

Using a hard surface instead of the cardboard has also made a big difference in performance.


Rotation update

The rotation works well for the most part, but sometimes the robot seems to completely misalign itself and not rotate 90°. The cardboard may be causing an issue, so I need to try this on a hard surface like a table top. I’ve added a crude timer based on how long a normal rotation should take: if a rotation takes longer than that, the robot rotates back. This fixes the errors most of the time, but I’d like to diagnose the problem and decide if I can fix it properly before relying on the timer, which has its own issues.

The video shows how many successful rotations it can make in a row without over-rotating (each move rotates 90°; anything more is an error). It’s hard to debug when it is sometimes very accurate.

youtube
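The timer fallback described above could be sketched roughly as follows. This is an illustration under stated assumptions, not the robot's real code: the motor and sensor callbacks (`start_turn`, `stop_turn`, `line_detected`, `reverse_turn`) are hypothetical stand-ins for whatever drives the hardware, and how far the robot rotates back is left to `reverse_turn`.

```python
import time


def rotate_with_timeout(start_turn, stop_turn, line_detected, reverse_turn,
                        expected_seconds, clock=time.monotonic,
                        poll_interval=0.001):
    """Rotate until the line sensor fires; undo the turn if it takes too long.

    All four callbacks are hypothetical hooks into the motor and sensor code.
    Returns True if the line was found in time, False if the fallback ran.
    """
    started = clock()
    start_turn()
    while not line_detected():
        # A normal rotation should be finished by now, so treat this as an
        # over-rotation: stop and rotate back.
        if clock() - started > expected_seconds:
            stop_turn()
            reverse_turn()
            return False
        time.sleep(poll_interval)
    stop_turn()
    return True
```

Passing the clock in as a parameter makes the timeout path easy to exercise without waiting on real hardware.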


Line sensing: rotation

The line sensors now follow a line, but the robot still has difficulty straightening up. The rotation is working and the robot stops automatically. I’m going to try matte tape and make sure I apply it completely straight.

Video demonstrating progress below:

youtube


Chassis prototype

The TCRT5000 sensors work best when they’re placed consistently and won’t move around. I designed a small bracket, mounted underneath the robots, that lets me place them exactly where I need them.

I go over a few of the new additions in the video below:

youtube


Laser cutting: Chassis and Battlefield

The design process for each is shown below.

black cut outs (fill to be removed)

white cut outs (faint lines)

Schematic of robot chassis

Turning into a laser cutting design

Ready to be cut


Testing TCRT5000 sensors

The image and videos below use the TCRT5000.

youtube

The sensors in the above video are not yet wired into the Raspberry Pi, but the LED still changes when the black line is under the sensor. They’re a lot more precise than I had thought, which is a bonus.

Update:

Working with lines

youtube

Update:

The sensors are now mounted centrally underneath the robot, as shown in the video and image below. However, without a proper mounting system and chassis, the sensors move around, which makes debugging difficult. I need to build the chassis so I can mount everything properly.

youtube


Game Logic - Collision

Hopefully this is the last update on game logic for a while. It’s been frustrating getting everything working properly, but it’s now in a state where I can move on.

youtube

Update

The collision detection works with the escape pod, but without implementing some form of pathfinding I won’t be able to return the robot to its home position. I explain the problem in the set of screenshots below.

Setting both robots to the same column lets me demonstrate this more clearly. From this position I make the moves: A = forward x4, ending on A5, and B = right x3 + forward x1, ending on A2.

As you can see, Robot A only knows to go forward. The escape mechanism works by reversing the previous moves. This forward x4 will turn into backward x4 when I activate the escape mechanism.

Now, when I activate the escape mechanism (the right x3 instructions are only there so I didn’t collide again afterwards), Robot A can only go back by doing the opposite of what it has already done, which makes it collide. Robot B just ran itself into the wall to maintain its position.

Without pathfinding, this escape will be impossible to complete, although it may make for interesting strategy if you know you could block a fleeing player.
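The reversal described above, and the way a blocking robot defeats it, can be sketched in Python. This is a hedged illustration and not the game's real code: the move names, the grid coordinates (column, row) and the assumption that left and right are 90° turns on the spot are all mine.

```python
# Assumed move names; each move is undone by its opposite.
INVERSE = {"forward": "backward", "backward": "forward",
           "left": "right", "right": "left"}

# Headings as (column step, row step): up, right, down, left.
HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def escape_moves(history):
    """Return the moves that retrace `history` back to the start."""
    return [INVERSE[move] for move in reversed(history)]


def apply_move(position, heading, move):
    """Apply one move; left/right are assumed to be 90-degree turns on the spot."""
    if move == "left":
        return position, (heading - 1) % 4
    if move == "right":
        return position, (heading + 1) % 4
    col, row = position
    dcol, drow = HEADINGS[heading]
    step = 1 if move == "forward" else -1
    return (col + step * dcol, row + step * drow), heading


def first_blocked_cell(position, heading, moves, blocked):
    """Return the first cell in `blocked` that the moves run into, else None."""
    for move in moves:
        position, heading = apply_move(position, heading, move)
        if position in blocked:
            return position
    return None


# Robot A went forward x4 from A1 to A5; Robot B now sits on A2.
retrace = escape_moves(["forward"] * 4)
collision = first_blocked_cell((0, 4), 0, retrace, blocked={(0, 1)})
```

With column A as 0 and row 1 as 0, `collision` comes out as `(0, 1)`, which is A2: the retraced path runs straight into Robot B, matching the screenshots.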


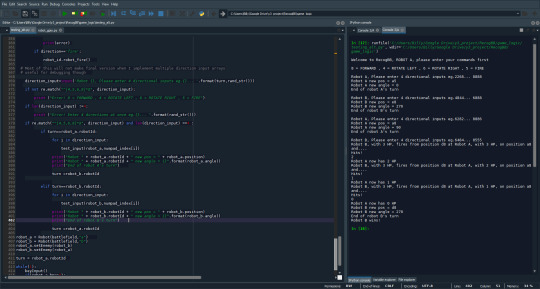

Game Logic - Escape

The primary reason for an escape mechanism was to allow players to get themselves out of bad situations where they’d otherwise have to face death. Each player has 1 ‘escape round’ that will retrace their steps and return them to their starting position and angle. A video explanation is below:

youtube

A sample of the main code is below: [REPLACE WITH COMMENTED VERSION]


QOL - Calibration

The robots might run into issues with placement once certain conditions change, such as battery life. To ensure the robots always stay on the central line of movement and rotate correctly, I’m going to try an array of IR sensors to keep each robot on a black line, as seen below. In initial tests the line will more than likely be made out of tape.

My first iteration will have 3 sensors per robot: two sitting either side of a line to keep the robot moving forward, and 1 central sensor to ensure the robot rotates the correct amount, as seen below.

There are a few options for the sensors...

TCRT5000

Consists of an infrared emitter and a phototransistor, and uses the difference in IR reflectivity to recognize a change between white and black paper. Easy to wire up and should give decent results.

LSS05

Similar to the above but a bit more precise, and the wiring is not too difficult either. However, the product is hard to find and may end up being quite expensive.

QTR-1RC

A bit more expensive, with little documentation online on using them with a Raspberry Pi. Readily available.
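Whichever sensor is chosen, the three-sensor layout described above (two either side of the line, one in the centre) suggests logic along these lines. This is only a sketch under assumptions: readings are taken to be booleans that are True over black tape, and the function names and the "leave the line, then find the next one" rule for rotation are guesses rather than the project's code.

```python
def steering_correction(left_on_line, right_on_line):
    """Decide how to steer from the two side sensors.

    The side sensors normally sit on white, either side of the tape.
    If one of them sees black, the robot has drifted away from that side
    and should steer back towards it.
    """
    if left_on_line and not right_on_line:
        return "steer_left"
    if right_on_line and not left_on_line:
        return "steer_right"
    return "straight"


def rotation_complete(centre_readings):
    """True once the centre sensor has left its line and found another.

    `centre_readings` is the sequence of centre-sensor samples taken
    during the turn, oldest first.
    """
    has_left_line = False
    for on_line in centre_readings:
        if not on_line:
            has_left_line = True
        elif has_left_line:
            return True
    return False
```

For example, `steering_correction(True, False)` returns `"steer_left"`, and `rotation_complete([True, False, False, True])` returns `True`.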


Game Logic 4

The game is now mostly ready for a model to predict an image and create a set of directions for the robots. A lot of small things will need changing in the future, such as ammo and when firing happens, but moving the robots around virtually and physically is now possible.

youtube

The code is designed so I can swap the input from keyboard and mouse to the webcam model with little trouble. Keyboard and mouse will remain as a form of input for debugging and, potentially, a remote gameplay feature.
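One common way to keep an input swappable like this is to have every input source expose the same small interface. The sketch below shows the idea; the class names, the key mapping and the `next_moves` method are hypothetical and not taken from the project.

```python
from typing import Callable, Iterable, List, Protocol


class InputSource(Protocol):
    """Anything that can produce the next list of moves for a robot."""

    def next_moves(self) -> List[str]:
        ...


class KeyboardInput:
    # Hypothetical key bindings, for illustration only.
    KEY_MAP = {"w": "forward", "s": "backward", "a": "left", "d": "right"}

    def __init__(self, keys: Iterable[str]):
        self.keys = list(keys)

    def next_moves(self) -> List[str]:
        # Unmapped keys are ignored.
        return [self.KEY_MAP[k] for k in self.keys if k in self.KEY_MAP]


class ModelInput:
    """Wraps any callable that returns predicted moves, e.g. a webcam model."""

    def __init__(self, predict: Callable[[], Iterable[str]]):
        self.predict = predict

    def next_moves(self) -> List[str]:
        return list(self.predict())


def collect_round(source: InputSource) -> List[str]:
    """The game loop only depends on the shared interface."""
    return source.next_moves()
```

Because `collect_round` never checks which class it was given, keyboard input can stay in place for debugging while a model-backed source is dropped in later.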
