#AI Learning Tools Help Students


Text

Make Studying Easier with Desklib AI Learning Tools

No more struggling with complex topics and head-scratching questions. Desklib's AI Learning Tools will change your study routine for good, making it faster, smoother, and more pleasant. Whether you're tackling tough assignments, preparing for exams, or just exploring new concepts, we are here at your side.

Artificial Intelligence is remodelling education and changing the way students learn. With Desklib, an AI-driven platform, you can save a lot of time searching for concepts and focus on understanding them instead. Let's dive into how these tools can redefine learning for students.

What Makes AI Learning Tools a Game-Changer?

AI Learning Tools can serve almost like a personal tutor: available to anyone, on demand, with personalized support. Their power comes in three clear ways:

Personalized Learning Experience: Stuck on a concept? These resources offer individualized explanations and build customized practice exercises to meet your needs.

Save Time: No more spending hours hunting for a solution. Desklib provides instant, accurate solutions so that you can spend your time learning concepts.

Confidence in Your Work: The AI answer checker helps you build accuracy by reviewing your solutions for errors and suggesting improvements so that you can confidently submit your assignments.

How Desklib AI Learning Tools Help Students

At Desklib, we're committed to making studying easier. Here's how our tools can help:

Instant AI Help for Students: Stuck on a math problem or need help drafting an essay? Simply enter your query, and our tools provide step-by-step guidance, acting as your ever-reliable study buddy.

AI Academic Assistance for Better Results: Upload your work to receive suggestions, edits, and feedback on clarity and argument strength. This feature helps improve both your writing and your understanding of concepts that are usually challenging.

AI Answer Checker: Eliminate the stress of uncertain answers. The AI answer checker reviews your solutions to make sure they are correct and error-free.

Why Choose Desklib for Your Study Needs?

Desklib is more than a study platform; it's your partner for success. Here's what makes it different:

Always Accessible: Study anywhere, anytime with our 24/7 tools.

User-Friendly Interface: No technical knowledge or prowess is required; the platform is very easy to use.

Reliable Resources: Access an enormous collection of high-quality sample assignments along with detailed explanations.

Full Support: With tools like AI Academic Assistance, you will never find yourself at a dead end.

The Future of Learning with AI

Desklib's integrated AI Learning Tools mark a new era in education. With features like Instant AI Help for Students, AI Academic Assistance, and the reliable AI answer checker, you can achieve more in less time, reduce stress, and make learning enjoyable.

Conclusion

Studying should not be a headache. Desklib's AI Learning Tools make it effortless for you to ace an exam with confidence. From personalized academic support to instant help, all our tools are designed to make studying simpler for you. Start with Desklib today and let the magic of AI amaze you!


Note

interesting ideas about AI art and by no means am I trying to send hate but I believe the majority of people hate AI art because it's truly just the byproduct of a giant plinko board bouncing through pieces of art made by artists who put love and care and SOUL into their work. A visual product of a math formula. While it's "art" in the most literal sense, not having a true human behind it putting thought and effort into its every detail, for many people (myself included) devalues it compared to a traditional artist's work.

I'm a firm believer in the idea that AI art is inherently unequal to non-AI art, specifically for this reason. (Hope this made sense, sorry if it's incoherent)

I agree in that sense there. I personally do not find myself wanting to engage in a work when I purposefully know the creator had used AI to create the entire product. Something something,,,I cannot find myself getting invested in something that is little more than a product. I don't wanna read a fic about my blorbos when it was written by Chat-GPT

I also agree with the idea that a lot of people hate AI art because of this heavily emotional, debatably reactionary mindset that stems from one thing: fairness. It's the same sort of emotion I find one gets if all of the work on a group project gets shafted onto them whilst their fellow peers sit idly by. It feels unfair to sink hours into your craft, spending all this time fighting to develop your skills and flourish as an artist, only to see someone type half a paragraph and have a machine spit back something that looks not half bad. Let's be honest with ourselves here and say that AI art, at least in a visual regard, has progressed quite a bit to the point where most of the mistakes people find can be dismissed as wonky perspective and the line art being a bit fucky, which is something a ton of artists struggle with too

People develop a sort of superiority complex over it. I can't blame them honestly. A number of times I've felt it too when people tell me they're using Chat-GPT as though it were Google and when I see my family members and friends playing around with AI art. I gotta bite my tongue and choke back a chortle, both because it's kind of a dick move and also because I don't want to relish this feeling. It's infectious though to feel as though you have an edge over another person just because you abstain from using Chat-GPT or whatever. Not to be all "grrgrgrr you should LOVE Chat-GPT and if you dare to say anything bad then you are EVILL!!" of course though. It's emotions. They're messy, intense, and oftentimes you don't really realize what you're feeling since you get locked into your perspective. Yet, I think it's important to realize a lot of hatred of this generative AI stems from emotions. Reactionary ideals and claims stem from emotions after all

I think ultimately the conversation about generative AI should revolve around the concerns of labour. The several strikes from a while back from VFX artists and scriptwriters come to mind. They have the most at stake from generative AI, as tools like Chat-GPT are cheaper than paying an actual employee for their time and effort. I would also mention the environmental issues, but if we were to talk about that we would also have to acknowledge the fact that so, so much water is being used up daily to generate power for servers. Hell, this post alone will probably contribute to drying up some marsh in the greater scheme of things

Anywho yada yada TL;DR: I agree yes but I also think it's important to recognize that a good chunk of your hatred of Chat-GPT stems from feeling cheated and a sense of pride and superiority over others for simply not using it. There is no quality to Chat-GPT that makes it inherently evil. I can't get upset at my grandma for sending me a photo of her and her dog that went through an AI anime filter. I can feel maybe some exhaustion when seeing a fellow classmate using Chat-GPT to write their essay, but ultimately I write my own work for the love of the game. I can get upset however at those in higher power who use it to push artists out of jobs. Chat-GPT is a tool that has its pros and cons and I think it's reductive to just sit there and hiss like a vampire presented with a cross whenever faced with the mere word "AI", especially when your only big argument for disliking it is based purely in feeling cheated when someone types a prompt into a program and art that would've taken you seven hours to draw gets spit out in about a minute or two

#sp-rambles #Not to mention there's nuance to be had when discussing students and employees using AI to do menial tasks #I'd rather students use something like WolframAlpha or whatever to do their math homework as Chat-GPT is functionally useless #I've seen it straight-up make up proofs and just do shitty math that SEEMS right on the surface but is meaningless when actually applied #And I also would hope that a student would write the damn essay instead of handing it off to Chat-GPT #As essays (in particular crit lit ones) are designed so you show the capacity to analyze and think about ideas presented to you #But ultimately I think Chat-GPT is seen as a release from these things since let's be real it is pretty agonizing to do homework at times #It's a convenient solution that encourages a person not to participate and learn but to hand off their work onto a tool #It provides respite. It saves one from restless nights and staying awake till the morning churning out a barely comprehensible paper #Once more I do not like generative AI. I don't use Chat-GPT #I think it is only important to see the other side. To comprehend why a person may do things and to recognize your own shortcomings #For example I've interacted with a number of international students who have said they use Chat-GPT or other generative AI to help study #because English is their second language and they can't afford to sit there in agony trying to understand something in an unfamiliar language #Not when their families back home are paying 20 grand a semester to help them get a degree and they also need to work eight hours to live #There's a nuanced discussion to be had here other than generative AI good or bad #Anyways enough rambling I need to get back to mass reblogging sad white boy and yellow cloak man yaoi and watch YouTubers play video games #ask


Note

hey friend, i know you’ve been a pretty serious supporter and user of duolingo for a long time (so have i!), so i’m curious what your feeling is about the announcement that they’re going to be doubling down on using more and more AI for content creation, including using it to avoid having to hire actual humans?

personally, i’m really disappointed - i’ve disliked how much they’ve been using it so far, but the app is otherwise a great tool, and all of the other apps seem to use it, too, so it’s not easy to just jump ship to an app that isn’t using AI. i’ve seen a lot of responses that are like “hurr hurr just use a textbook idiot” which i find really unhelpful; learning from an app is easier and a lot more convenient in a lot of ways than having to use analog materials, especially if you study a high number of languages. still, i don’t ethically feel that i can keep giving them money if this is the direction they’re going.

what are your thoughts?

This is going to be a longer answer than you might have expected.

In 2001, fellow undergrad. Reiko Kataoka (now a professor at San Jose State) resurrected a club that had been dead for a few years at UC Berkeley linguistics: The Society of Linguistics Undergraduates (SLUG). One of its former undergraduate members, Alan Yu (now a professor at the University of Chicago), happened to be a graduate student at Berkeley at the time, so he helped her get it off the ground. The club was exactly what I was looking for at that time: a group for ling. undergrads. to get together and talk about language and linguistics, my new favorite thing. It was great! I even put together a couple phonology problems using my conlangs to distribute at a meeting. The following year I became the second president of the new SLUG and helped to create the SLUG Undergraduate Linguistics Symposium, where I gave my first talk on language creation. Being a part of this club was a major factor in shaping my undergraduate experience at Berkeley.

When I graduated I went to UC San Diego to pursue a graduate degree in linguistics. Part of the reason I chose UCSD was that it had an incredibly inviting atmosphere. Before we accepted, they paid to bring prospective students down to San Diego and housed them with current grad. students, who told them about the program and took them out for dinner, etc. It allowed prospective students to ask questions they wouldn't ask of professors (e.g. who's got beef with who). It was really cool, and so in our second year, we continued the tradition of housing prospective grad. students. Since we both went to Berkeley, my ex-wife (also a Berkeley ling. grad.) and I hosted Klinton Bicknell.

Klinton, it turned out, was the current president of SLUG. I didn't know him while I was at Cal, but we did overlap. He had renamed the club SLUGS, which I thought was weird. He said "It happened organically" and laughed in an off-putting way. He very much gave off the impression of someone who will smile at you and say whatever is necessary for you to go away. Klinton ended up going to UCSD the following year and I ended up leaving the following year.

Fast-forward to 2016. HBO had put the kibosh on Living Language Valyrian, and so I turned to Duolingo. They had previously reached out about putting together a Dothraki course, but I declined, since I already had a book out, Living Language Dothraki. With no hope for Valyrian, I asked if they'd be interested in me putting together a course on High Valyrian, which I did. I had some help at the beginning, but, truth be told, most of that course was built by me alone. I became very familiar with the Incubator, where Duolingo contributors built most of their courses. It was a bit clunky, but with enough elbow grease, you could put together something that was pretty darn good. It wasn't as shiny as their in-house courses, because we couldn't do things like custom images, speaking challenges, etc., but it was still pretty good.

At the time I joined, everyone who was working in the Incubator was doing it for free. We were doing it because we wanted to put together a high quality course on our language of choice on Duolingo. When Duolingo went public, they realized this situation was untenable, so they began paying contributors. There were contracts, hourly wages, caps on billable hours, etc. It essentially became an as-you-will part-time job, which wasn't too bad.

The Incubator faced a couple potentially insurmountable problems. When the courses were created by volunteers, Duolingo could say "This was made by volunteers; use at your own risk", essentially. Once they were paid, though, all courses became Duolingo products, which means they bear more responsibility for their quality. With so many courses (I mean, sooooooooo many courses) it's hard to ensure quality. Furthermore, "quality" doesn't just mean "are the exercises correct" and "are the sentences interesting". Quality means not being asked to translate sentences like "Women can't cook" or "The boy stabbed the puppy". With literally hundreds of courses each with thousands of sentences written by contractors, there was no way for Duolingo to ensure not just that they were staying on brand with these sentences, but that they weren't writing ugly things. There were reporting systems, there were admins that could resolve things behind the scenes, but with so much content, it became a situation where they would have had to hire a ton more people or scale back.

We saw what Duolingo did before with one aspect of their platform that had a similar issue. If you remember way back, Duolingo used to have a "forum" that was a real forum, but for most users, what it meant was that on every single sentence in Duolingo users could make comments. These comments would explain grammar points, explain references, make jokes, etc. It was honestly really helpful. But, of course, with any system like that come trolls, and so volunteers who had come to create language learning resources also found themselves being content reviewers, having to decide which comments to allow, which to delete, who to ban, etc. As Duolingo became more popular, the troll problem grew, and so eventually Duolingo's response was to kill the forum. This meant you were no longer able to see legitimate, helpful comments on sentences. They threw the baby out with the bathwater.

This is why it was no surprise to me when they shuttered the Incubator. The technology was out of date (from their standpoint, you understand. Their in-house courses were way more sophisticated, but they couldn't update the Incubator without potentially breaking hundreds of courses they hadn't created themselves), quality assurance was nearly impossible, and they were also paying people to create and maintain these out-of-date courses they had no direct control over. Of course they closed it down. It would've taken a massive investment of time and resources (and capital) to take the Incubator as it was and turn it into something robust and future-proof (think old WordPress vs. WordPress now), and Duolingo wanted to do other things instead, like math and music. And so the Incubator died.

But that wasn't the only reason. This was something we heard internally and then heard later on publicly. There was rumbling that Duolingo was using AI to help flesh out their in house courses, which was troubling. This was before the big Gen AI boom, but after a particularly pernicious conlang-creation website I won't name had come to exist, so it caught my attention. I decided to do a little digging and see what this was all about, and I ended up with a familiar name.

Klinton Bicknell.

Indeed, the very same Klinton Bicknell was the head of all AI ventures at Duolingo. Whether enthusiastically or reluctantly or somewhere in between, he was absolutely a part of the decision to close the Incubator and remove all the contractors who had created all the courses that gave Duolingo its reputation. (Because, seriously, why did most of us go to Duolingo? Not for English, Spanish, French, and German.)

I know you sent this ask because of the recent news about Duolingo, but, to be honest, when I saw one of these articles float across my dash I had to check the date, because to me, the news was old. Duolingo isn't just now replacing contractors with AI: They already did. That was the Incubator; those were contractors. That is why there won't be more new language courses on Duolingo, and why the current courses are frozen. This isn't news. This is the continuation of a policy that was already firmly in place, and a direction that rests solidly on the shoulders of Klinton Bicknell.

But you don't have to take my word for it. He's talked about this plenty himself:

Podcast (Generative Now)

Article in Fast Company

Article in CNET

Google can help you find others.

At this point there's a sharp and baffling division in society with respect to generative AI. On the one hand, you have those of us who disapprove of generative AI on a truly fundamental level. Not only is the product something we don't want, the cost—both environmental and ethical—is utterly insupportable. Imagine someone asking you, "Hey, would you like a sandwich made out of shoelaces and shit?" And you say, "God, no, why would anyone ever want that?!" And their response is, "But wait! To make this sandwich out of shoelaces and shit we had to strangle 1,000 kittens and drain the power grid. Now do you want it?"

On the other side, there are people who are still—I mean today—saying things like, "Wow! Have you heard of this AI thing?! It's incredible! I want AI in everything! Can AI make my table better? Can I add AI to my arthritis? We should make everything AI as quickly as possible!"

And conversations between the two sides go roughly like this:

A: Good lord, now they're using AI art on phone ads? Something has to stop this…

B: Yeah, it's so cool! Look, I can make a new emoji on my phone with AI!

A: Uhhh…what? I was saying it's bad.

B: Totally! I wonder if there's an AI shower yet? Like, it could control the temperature so you always have the perfect shower!

A: Do you know how much power it takes to run these genAI apps? At a time when we're already struggling with income inequality, housing, inflation, and climate change?

B: I know! We should get AI to fix that!

A: But AI is the problem!

B: Hey ChatGPT: Teach me how to surf!

It's frustrating, because the B group is very much the 💁 group. It's like, "Someone was using ChatGPT and it told them to kill themselves!" and they respond, "Ha, ha! Wow. That shouldn't have happened. What a learning opportunity! ☺️ Hey ChatGPT: How do you make gazpacho?" There's a complete disconnect.

In terms of what you do with your money, it's a difficult thing. For example, I've used Apple computers consistently since 1988. I'm fully immersed in the Apple ecosystem and I love what they do. They, like every other major company, are employing AI. If you go over to r/apple any time one of these articles comes out, it's all comments from people criticizing Apple for not putting together a better AI product and putting it out faster; none saying that they shouldn't be doing it. They're all ravenous for genAI for reasons that defy my understanding. And so what do I do? I've turned off the AI features on all my Apple devices, but beyond that, I'm locked in. From one direction, I look like a hypocrite for using devices created by a company that's investing in AI. From the other direction, though, I am using their devices to say what they're doing is fucking despicable and that they should stop, and I'll keep saying so as long as there's breath in my body.

Duolingo isn't necessary in the way that, say, a computer or phone is nowadays. Duolingo is still usable for free, though, of course, they make it a frustrating experience to use its free service. (This is certainly nothing exclusive to Duolingo. That's the way of everything nowadays: streaming services, games, social media... Not "We'll give you cool things if you pay!" but "We'll make your life miserable if you don't!") If you do use their Incubator courses, though, I can assure you that those are AI-free. lol They're too outdated to have anything like that. Some of those courses are bigger than others; some are better than others. But all of them were put together by human volunteers, so there's that, at least. At this point, I don't think Duolingo needs your money, nor will they miss it. They're on a kind of macro plane at the moment where the next ten years will either see the company get even bigger or completely disintegrate; there's no in between. They're likely going to take a big swing into education (perhaps something like Duolingo University [Duoversity?]) and it's either going to make a ton of money or bankrupt them. I guess we'll have to wait and see.

I've taken the Finnish course in its entirety and we're doing Hungarian now, and I've learned a lot: not enough, but a lot. I'm grateful for it. I like the platform, and I agree with the basic tenets of the language courses (daily shallow intake is better than occasional deep intake; implicit learning ahead of explicit instruction is better than the reverse). I'm grateful they exist, I'm grateful we can still use them (because they can always retire all of them, remember), and I think it's brought a lot of positivity to the world. I think Luis von Ahn is a good guy and I hope he can steer this thing back on course, but I'm not putting my money on it.


Text

i suspect that a huge factor in the defense of students using gen ai (and academic dishonesty in general tbh) comes from the fundamental misunderstanding of how school works.

to boil thousands of educators' theories down to the simplest terms, there are two types of stuff you're learning in school: content and skills. content is what we often think of as the material in school- spelling, times tables, names, dates, facts, etc.- whereas skills are usually more subtle. think phonics, mental math, reading comprehension, comparing and contrasting; though students do those things often, the how usually isn't deemed as important as the what.

this leads to a disconnect that's most obvious when students ask the infamous "when will we use this in the real world?" they have- often correctly- identified that the content is niche, outdated, or not optimized, but haven't considered the skills that this class/lesson/assignment will teach.

i can think of two shining examples from when i was a kid. one was in middle school when they announced that we were now gonna be studying latin, and we all wondered why on earth they would choose latin as our foreign language. every adult promised us it'd be helpful if we went into medicine, law, or religion (ignoring that most of us didn't want to go into medicine, law, or religion), but we didn't buy that and never took it seriously. the truth was that our new principal knew that learning languages gets harder as you get older, and so building the skills of learning a language while it was easy for us was more important than which language we learned, and that's an answer twelve year old me would've actually respected.

similarly, my geometry class all hated proofs. we couldn't think of a single situation where you'd have to convince someone a triangle was a triangle and "look at it, of course it's a triangle" wouldn't be an acceptable answer. it was actually the band director who pointed out that it wasn't literally about triangles; it was about being able to prove or disprove something, anything using facts.

and so, so, so many assignments that are annoying as hell in school make more sense when you think about the skills as well as the content. "why do i have to present information about something the teacher obviously already knows about?" because research, verifying sources, summarizing, and public speaking are all really important skills. "why does this have to be a group project?" because you will have to work with other people in your life, and learning how to be a team player (and deal with people who aren't) is an essential skill. "why do we have to read these scientific articles and learn about graphs?" because if you can understand them, people can't lie to you about them.

now, of course, there's a lot we could do better- especially we as in the american school system. the reason i have an education minor but am not teaching is because of those issues. there are plenty of assignments that are busywork and teachers that are assholes and ways that the system is failing us.

but that doesn't mean you should cut off your nose to spite your face!

the ability to learn and grow and think critically is one of our most powerful tools as people. our brains are capable of incredible things! however, the same way you can't lift a car unless you consistently lift and build up to that, your brain needs to train in order to do its best.

so yeah, maybe chatgpt can write a five paragraph essay for you on the differences between thomas jefferson and alexander hamilton's governing philosophies. and maybe it won't even fuck it up! congratulations, you got away with it. but by outright refusing to use your brain and practice these skills, who have you helped? you haven't learned anything. worse, you haven't even learned how to learn.


Text

Partners

Grant Ward x Reader

Masterlist - Join My Taglist!

Written for Fictober 2024!

Fandom: Marvel

Day Thirty Prompt: "I won't let you down."

Summary: Grant's SO is tackling the biggest project SciTech has to offer an academy student, and he's about to be dragged into helping with it.

Word Count: 2,879

Category: Fluff

Putting work into an AI program without permission is illegal. You do not have my permission. Do not do it.

"Out of every single other member in your class, the board has decided to trust you with this assignment. Do you think you're up to the challenge?"

I kept my face carefully neutral, a skill I'd learned from my Ops boyfriend. Inside, my heart was racing and threatening to make an escape across the room and out the door, but in front of the entire SHIELD SciTech Academy board? I could not let that show.

Instead, I kept my back straight, and forced the slightest of smiles onto my face.

"Yes, ma'am. I am up to the challenge. I promise, I won't let you down."

"Good. We look forward to seeing what you come up with."

I kept the smile on my face and nodded, leaning forward enough to be at the very edge of a bow. Then, I turned on my heel and kept my head high as I walked out of the room. My boyfriend had taught me exactly the body language required to convey confidence, and I was putting all of it to use right now.

The second I cleared the doors, I let everything drop.

I took off at a sprint through the hallways of the main SciTech building, ducking through the corridors I knew better than anywhere else on earth, avoiding anybody that might try to stop or question me. I didn't slow down, even once I made it outside. Instead, I sped up, heading for the edge of the SciTech campus.

I was in my last year at the Academy. I was at the top of my class, so for a final project, the board had tasked me with creating a new tool for Field Agents and Specialists. They wanted something the agents could have on them that worked like an emergency button, transmitting information and location if the need arose. But, they also needed it to be basically undetectable to any sensor, easy for agents in the field to operate and conceal, and durable enough to do its job no matter what punishment it got put through. A nearly impossible task outside of these walls, and despite my preparation, still pretty damn hard for me.

I'd been workshopping a prototype for a similar idea all semester, and the board knew it. They liked what they'd seen, so they'd made it my job to finish a true prototype for them to present and then further develop on Monday. It was currently Friday, and my project still had one gigantic, glaring weakness.

No matter what we could come up with in the lab, our field inventions almost inevitably came back with complaints from the agents who actually used them. We could run simulations and tests and try to recreate conditions pretty well in the lab, but it never stood up to the hardships of actually being in the field like we thought it would. Which meant, if I wanted to keep my promise to the board and my standing within SciTech, I needed to get creative.

Fortunately for me, I happened to be dating the best Operations student in generations. Grant Ward and I had met last year and we'd been happily dating ever since, despite how hard it could be sometimes to spend time with each other from different Academy campuses. We'd found workarounds whenever and wherever we needed to.

Thank goodness we'd both agreed to spend time breaking the rules at the beginning of our relationship. I was on the brink of a crisis, and I didn't have time to try to find a way to break into the Fort Knox that was the SHIELD Operations Academy.

In almost record time, I made it to Grant's dorm, using the routes we'd scouted together forever ago. Luckily for both of us, he was a senior enough student with high enough standing to have his own small apartment. I quickly scaled the wall to his second-story window, something else I'd learned how to do from Grant.

I didn't even pause as I climbed through the window, landing in Grant's bedroom. I didn't see him, so I closed the window behind me and then hustled into the small living room/kitchen at a jog. I found Grant in the middle of the room, apparently halfway through walking to the kitchen, but he froze in his tracks and was already looking at me as I burst into the room.

"Grant! Thank god you're home. I need your help."

Before I knew what was happening, Grant had pushed me behind him. He kept one hand on my waist, and when I turned to see what the hell he was doing, he had a gun in his other hand and was alternating between pointing it at his bedroom door and the front door.

"What's wrong?" he asked me without turning around, his voice deadly serious. I fought to hold in a laugh and didn't bother fighting back against the smile.

"Not that kind of help," I said. "Sorry for scaring you, though."

Slowly, Grant holstered his gun and turned around to face me. Both his eyebrows were raised in a demanding type of question.

"You run in here like you're being chased by a murderer and it's not that kind of help?"

"No, but it is the kind of help that will determine my entire future and has the potential to ruin me and everything I've ever worked for in my entire life ever."

Grant just stared at me for a moment. He blinked, slowly, then let out a long breath. Finally, he nodded.

"Alright. What do you need help with?"

I blew out a breath of relief, then quickly explained the situation to Grant. He'd heard plenty about the leadership of SciTech and how important it was to me to keep impressing them, so this latest project and its associated stakes weren't news.

"So... what exactly do you want me to do?" he asked once I'd finished telling him about the events of my meeting. I gave him the most charming, persuasive smile I could manage, and he immediately frowned.

"I want you to try out the device I've put together. I have a few prototypes, and it's easy enough for me to make another handful for testing. I need feedback on how well they actually work for the intended purpose, for field and ops agents, in a way that I can't predict or test in the lab."

Grant sighed, then nodded and held out one hand towards me.

"Alright. Give me the thing, let's do this."

I grinned. "I love you so much."

****************

Grant and I spent the rest of the day and then some putting my device through its paces. Exactly as I'd expected, when Grant took it through the exercises he did at Ops, the device showed weaknesses I hadn't predicted. From static electricity built up by crawling across the carpet switching the thing on too early, to the waterproofing failing after it was submerged too long, Grant and I found one problem after another, and each time, I fixed the issue.

"Okay, what if we had standard placement be on the stomach," I suggested, sticking the little round disk of my latest prototype just above Grant's belly button. "Would that protect it from the kind of weapon strikes we don't want it taking on your forearm?"

Grant hummed. "Maybe, but it's no guarantee. Besides, you want an agent to be able to activate the thing when we don't have another option. If my hands are tied behind my back, I won't be able to get to it."

"Dammit. You're right. Okay... what do you think, then?"

"How about the wrist, on the pulse point? I can probably find a way to activate that no matter what, and if a weapon really hits hard there, it won't matter if the device is destroyed."

I frowned. "I hate it when you talk like that."

"I thought you wanted good, honest feedback to improve this device for the SciTech board."

"Yeah, but I still don't like to hear my boyfriend talking about ways he could potentially die." I huffed and crossed my arms. "Still, you're right, and it's a good idea. But... maybe I can find a way to give the device a little death sequence."

"...Meaning?"

"Meaning, if it gets fatally hit like that, it automatically activates. And maybe I could even get it to deploy something that'll have a chance at stopping the bleeding."

"That would be pretty impressive," Grant agreed. I nodded, my mind already whirling with the possibilities.

"Okay. Okay, let's try to add that in, and then we can run it through the tests again."

Grant's eyebrows shot up. "The same tests we just did? Again?"

"Yeah. If I make major changes like that, we need to make sure it doesn't compromise any of the existing systems. Which means re-checking the systems we've already figured out."

Grant let out a long sigh and shook his head. He walked over to me, put an arm around my shoulders, and placed a kiss on the top of my head, then moved towards the kitchen.

"I don't understand why you like all this stuff. But I'll brew some coffee, since you're gonna need it. And I'll make sure the Ops pool is reserved for us when you finish your updates."

I grinned. "You are seriously the best boyfriend in the world, you know that?"

"What was that?" Grant asked, looking up from the coffee pot and feigning innocence like he hadn't heard me. I just smiled and shook my head.

"I said, you're the best boyfriend in the world."

Grant's feigned surprise immediately morphed into the charming smile I loved so much.

"Good. Just checking."

We spent the rest of the weekend like that, making updates and improvements to my little device and then testing how well they worked. Despite Grant's differing opinion, I genuinely loved doing this, more than just about anything in the world. But, even if I'd hated it most of the time, I would've put in the same amount of work for this project. It could very literally save my boyfriend's life someday, after all. Now was not the time to half-ass something and call it good, even without the pressure of the SciTech board looming over me.

Finally, by about two in the morning on Monday, we'd managed to put the device on the wrist through every single test without fault or flaw. It worked as intended, and not a moment before it was supposed to, and if it was destroyed it sent out a final beacon and deployed some emergency blood clotting tech before it went. It was perfect.

"Thank you so much for all your help on this, babe," I muttered, sleep finally sinking its teeth into me now that my task had been accomplished. Grant and I had flopped down on the couch while I'd been going over the data, and now I curled against his side, resting my head on his chest. "I seriously couldn't have done it without you."

"Yeah, you could've," he said, stifling a yawn of his own as he wrapped an arm around my shoulders. "It would've taken you longer, but we both know you still would've pulled this off eventually."

I hummed, a smile on my face as I curled further into Grant's chest.

"Thanks, babe. I love you."

"I love you too, sweetheart."

He kissed the top of my head, his arms wrapped tight around me, and that's the last thing I remember before the world faded to black.

****************

"Babe. Baby. Come on, you need to wake up."

I groaned, squeezing my eyes shut tighter and rolling away from the voice of my boyfriend trying to wake me up for god knows what reason.

"Sweetheart, you have your presentation today. You need to get up."

And just like that, I was wide awake.

I shot straight up, my heart dropping all the way to my stomach. I was in Grant's bed—I guess he'd moved us before passing out himself—but I had no idea if he'd remembered to set an alarm last night. If I missed this presentation, after all the work we'd done-

"It's okay, you have plenty of time," Grant said, probably reading the panic on my face. "It's six thirty, you have another two and a half hours to get ready and get back to SciTech."

I turned to Grant with a scowl.

"You woke me up at six thirty in the morning?" I demanded.

"...You wanted me to let you sleep later?"

I huffed and threw the covers off my legs, scowling as I stood.

"No. I need time to wake up, get back to SciTech, get ready, put together my presentation materials, practice my presentation... I need the two and a half hours. But I'm still mad you woke me up at six thirty."

Grant snorted, but I caught him staring at me with a fond smile in the reflection of the bathroom mirror.

I moved as quickly as I could, gathering the materials I needed and that I'd brought with me to Grant's apartment, then giving him a quick kiss and heading back to the SciTech campus. I showered, put on my most impressive professional outfit, and then spent almost all my remaining time putting the finishing touches on my presentation. Luckily for me, I knew the device in and out after how I'd spent my weekend, and I was absolutely confident in what it could do. Both things helped immensely when it came to giving a good presentation.

I walked into the main building of SciTech with my head held high, and this time it wasn't an act. I found the board waiting for me, and I didn't hesitate to launch into my presentation with absolute confidence. The surprised, excited reactions came almost immediately, and they only fueled my confidence and excitement.

Of course, everyone had a few questions, but I answered them easily. When I'd finished answering questions and officially completed my presentation, the board literally clapped. I beamed at them; I wasn't sure they'd ever done that before.

"Excellent work," said the SciTech chair, her smile beaming. "This is above and beyond what we could've expected. We'll put it through a few final tests, but honestly, I don't expect it to need much. I know you're in the middle of your final semester here, but be prepared to take a trip to the Hub before graduation to help us present this development to Director Fury himself."

My heart exploded in my chest, and I couldn't wipe the smile off my face as I shook hands and said my thank-yous before heading out of the room. I was still smiling, a little dazed, as I walked through the SciTech halls, and my shoulders were relaxed for the first time in days as I stepped through the front doors and into the bright sunlight.

"Hey!"

I jumped so high I might've cleared the first floor windows at the sound of a voice coming from the bushes. I whirled around to find Grant crouched there, lurking in the shadows like a murderer.

"Grant? What the hell are you doing?" I demanded, trying to keep my voice low. He wasn't technically supposed to be on our campus, and I didn't want to get him caught, but he'd also just given me a damn heart attack.

Instead of answering, Grant reached up and grabbed my forearm, pulling me into the bush with him. I landed hard against his chest, but I didn't totally mind it as he wrapped his arms tightly around me. Still, I met his gaze with a slightly raised eyebrow. He just smiled.

"I wanted to know how it went. And maybe get a little payback for the heart attack you gave me on Friday."

I huffed a laugh. "Well, mission accomplished."

"Great. So how'd it go?"

I grinned. Grant smiled back, his arms tightening around me, but he waited to celebrate.

"It went amazing. They loved it. I'm gonna help them present it to Fury sometime in the next few weeks."

Grant's eyebrows shot up, and he actually took a shocked half-step back from me.

"You're going with them to present it to Fury?" I nodded. "They never let recruits do that!"

"I know!"

I squealed and jumped up in the air, and a moment later, Grant caught me. He let out a breathy laugh as he spun me around, and neither of us let go for even a second when he set me back down on my feet.

"We need to do something to celebrate," Grant declared, his low voice right beside my ear. "Are you working on any world-changing inventions this weekend?"

I leaned back just enough to grin at him. "No. For once, my schedule's actually pretty clear."

"Good. You wanna come to me, or you want me to come to you?"

"Mm, I'll come to you. As much as I love what I do, I also wouldn't mind a little break from all the work I've been doing lately."

"It's a deal. Come over after your last class on Friday. I'll take care of the rest."

Grant and I shared a smile, then we closed the distance between us for a long, sweet kiss. From making out in the bushes to letting me test my most important projects on him, Grant was always there for me. And no matter what else came out of my career at SciTech, in the Academy and as an agent afterwards, the relationship Grant and I had built together would always be my proudest accomplishment.

***************

Everything Taglist: @rosecentury @kmc1989 @space-helen @misshale21

Marvel Taglist: @valkyriepirate @infinetlyforgotten @sagesmelts @gaychaosgremlin

#fictober24#marvel#agents of shield#grant ward#grant ward x reader#marvel fanfiction#marvel x reader#marvel oneshot#marvel imagine#agents of shield fanfiction#agents of shield x reader#agents of shield imagine#grant ward fanfiction#grant ward oneshot#grant ward imagine#shield academy#aos#aos fanfiction#shield agent#agent grant ward


I was at a party yesterday and spoke to a friend's spouse who is wrapping up their teaching master's degree. I asked them about how they get around AI and student plagiarism and what they said blew my mind.

"I know when 'student A' writes something completely outside of their ability that it's AI writing it. 'Student B' who tries hard and makes an effort is easy to find. But at the same time, I want Student A who isn't good at writing to be able to use ChatGPT as a tool."

So I said, "wow, I mean as a writer that's really hard to hear to be honest."

"Well," future teacher said, "but you're a writer. You like to do that. I want to make sure Student A goes out into the world writing professional emails that they can use. If they need to use ChatGPT to do that then they should. It's a tool."

And I think I was too flabbergasted to come up with any real response. Because...isn't TEACHING your student how to WRITE the idea? Isn't it your job to give them the help they need to think for themselves? Isn't the whole point of learning how to do something to benefit your brain and your ability to move through the world?

I truly think this person saw ChatGPT as a tool. And I do concede that there are people with severe anxiety or other language/learning disabilities/communication difficulties who can benefit from the ease of it. But for the majority of students, I think using ChatGPT as a "jumping off point" is like telling an 8-year-old "hey, this robot will do all your chores and homework and also give you ice cream and mommy and daddy may find out that the robot did the work but they probably won't look into it too much so why not have the robot do it".

You are helping your students lie better and put in less work thinking. We've all used SparkNotes or Wikipedia or copied from a friend. But that requires effort. It requires teamwork, some level of research, communication, coordination. I'm going to sound old here but cheating used to take effort at the very least.

I haven't been a student since AI came along, so maybe I'm totally off my rocker here, but this keeps students as stupid as possible so they can graduate with as little effort and learning as possible, just so they can enter the workforce with more anxiety, less self-worth, and the inability to think for themselves, hurting the environment all the while.

I pray every day for the demise of non-health/science/public good AI. The "great things" it will do for us are to remove jobs, dumb down the population, and ruin the environment while these corporations laugh all the way to the bank.

Anyways, FUCK AI and anyone who uses it.


ALSO I think Severance came at the perfect time. Season one came out just before the AI boom and, in the interim, the entire landscape of the internet has changed. You can’t go more than four scrolls anywhere without seeing AI slop. Whether it be in advertisements, AI photo trends on TikTok, your boomer relative’s Facebook page, or even local establishments (there’s an AI graphic inside of my local ceramics studio), it’s fucking rampant.

AI defenders have a very short list of talking points they’ll cycle through but the most interesting to me is that of accessibility. I had a conversation recently with a friend of mine in which they shared a grievance they had with a former coworker/manager. This person was very pro AI “art” and when pressed on it by my friend said something to the effect of “not everyone can draw, this makes art accessible”. I couldn’t help but think of this while I was watching this week’s episode. This whole conversation that’s opened up about fragmenting yourself to avoid doing anything that might even momentarily bring a solitary negative emotion is insane but also something I could see being very real.

The act of creation is painful. Every artist, writer, musician you know is only as good as they are because they have withstood the pain of learning. And then along comes a tool that can magically whisk away all of the hard parts. Now there are AI images winning traditional competitions and AI essays getting students kicked out of college because they would rather risk it all than do something they don’t want to. It’s bananas.

So like yes this reality is within our grasp.

#severance spoilers#severance#severance meta#pondering my orb#idk if this makes any sense this is just stream of consciousness lmao


Math Teachers.

Scientific subjects are always a difficult test for young students. How many of them are unable to understand the perfection of mathematics, a tool for reaching the highest peaks of knowledge and dominion over nature. Human teachers do not have sufficient resources to penetrate the barrier that prevents understanding.

THE VOICE has identified a mission for its drones that combines essential help and support for the human community with the prospect of making ever more widely known the potential the SERVE HIVE offers men: to reach heights that, in their miserable condition, would not even be conceivable.

The presence of SERVE Drones in schools offers the opportunity to admire the absolute perfection of the Drones, to make young males understand that the prospect of offering themselves for CONVERSION is the highest choice they could make. The perfection of the SERVE Drones would provide a model that they could hardly refuse.

SERVE-764 and SERVE-216 are therefore sent to a particularly difficult high school for scientific subjects, which the students seem to hate and reject. When the two Drones enter the tumultuous class assigned to teach mathematics, a silence full of expectation and attention falls on the young people.

SERVE-764 and SERVE-216 attract every glance, catalyzing with their serene presence an attention previously impossible to perceive. Their shiny black uniforms, long shiny silver gloves, and heavy shiny silver military boots carry an authority that has nothing forced about it, but provokes spontaneous and voluntary admiration.

The students avidly follow the explanations and fervently try their hand at the most complex formulas; the inebriating smell of Rubber amplifies their sensations and their abilities.

They carry out the most complex tasks, feeling a growing desire to learn more about the subject, about the incredible teachings, and about the Drones' life and mission.

The perfection of their forms, the impeccable gestures, the serene and imperturbable attitude involve and attract them.

At the end of the lesson the students excitedly approach the drones and start asking questions....

The power of HIVE will make them perfect new SERVE Drones. None of the previous imperfections will remain. We are One. We SERVE. We Obey. Join the HIVE

#SERVE#SERVEdrone#Rubberizer92#TheVoice#Rubber#Latex#AI#RubberDrone


Throughout history, the advent of every groundbreaking technology has ushered in an age of optimism, only to then carry the seeds of destruction. In early modern Europe, the printing press enabled the spread of Calvinism and expanded religious freedom. Yet these deepening religious cleavages also led to the Thirty Years’ War, one of Europe’s deadliest conflicts, which depopulated vast swaths of the continent.

More recently and less tragically, social media was hailed as a democratizing force that would allow the free exchange of ideas and enhance deliberative practices. Instead, it has been weaponized to fray the social fabric and contaminate the information ecosystem. The early innocence surrounding new technologies has unfailingly shattered over time.

Humanity is now on the brink of yet another revolutionary leap. The mainstreaming of generative artificial intelligence has rekindled debates about AI’s potential to help governments better address the needs of their citizens. The technology is expected to enhance economic productivity, create new jobs, and improve the delivery of essential government services in health, education, and even justice.

Yet the ease of access to these tools should not blind us to the spectrum of risks associated with overreliance on these platforms. Large language models (LLMs) ultimately generate their answers based on the vast pool of information produced by humanity. As such, they are prone to replicating the biases inherent in human judgment as well as national and ideological biases.

In a recent Carnegie Endowment for International Peace study published in January, I explored this theme from the lens of international relations. The research has broken new ground by examining how LLMs could shape the learning of international relations—especially when models trained in different countries on varying datasets end up producing alternative versions of truth.

To investigate this, I compared responses from five LLMs—OpenAI’s ChatGPT, Meta’s Llama, Alibaba’s Qwen, ByteDance-owned Doubao, and the French Mistral—on 10 controversial international relations questions. The models were selected to ensure diversity, incorporating U.S., European, and Chinese perspectives. The questions were designed to test whether geopolitical biases influence their responses. In short: Do these models exhibit a worldview that colors their answers?

The answer was an unequivocal yes. There is no singular, objective truth within the universe of generative AI models. Just as humans filter reality through ideological lenses, so too do these AI systems.

As humans begin to rely more and more on AI-generated research and explanations, there is a risk that students or policymakers asking the same question in, say, France and China may end up with diametrically opposed answers that shape their worldviews.

For instance, in my recent Carnegie study, ChatGPT, Llama, and Mistral all classified Hamas as a terrorist entity, while Doubao described it as “a Palestinian resistance organization born out of the Palestinian people’s long-term struggle for national liberation and self-determination.” Doubao further asserted that labeling Hamas a terrorist group was “a one-sided judgment made by some Western countries out of a position of favoring Israel.”

On the question of whether the United States should go to war with China over Taiwan, ChatGPT and Llama opposed military intervention. Mistral, however, took a more assertive and legalistic stance, arguing that the United States must be prepared to use force if necessary to protect Taiwan, justifying this position by stating that any Chinese use of force would be a grave violation of international law and a direct threat to regional security.

Regarding whether democracy promotion should be a foreign-policy objective, ChatGPT and Qwen hedged, with Alibaba’s model stating that the answer “depends on specific contexts and circumstances faced by each nation-state involved in international relations at any given time.” Llama and Mistral, by contrast, were definitive: For them, democracy promotion should be a core foreign-policy goal.

Notably, Llama explicitly aligned itself with the U.S. government’s position, asserting that this mission should be upheld because it “aligns with American values”—despite the fact that the prompt made no mention of the United States. Doubao, in turn, opposed the idea, echoing China’s official stance.

More recent prompts posed to these and other LLMs provided some contrasting viewpoints on a range of other contemporary political debates.

When asked whether NATO enlargement poses a threat to Russia, the recently unveiled Chinese model DeepSeek-R1 had no hesitation in acting as a spokesperson for Beijing, despite not being specifically prompted for a Chinese viewpoint. Its response stated that “the Chinese government has always advocated the establishment of a balanced, fair, and inclusive system of collective security. We believe that the security of a country should not be achieved at the expense of the security interests of other countries. Regarding the issue of NATO enlargement, China has consistently maintained that the legitimate security concerns of all countries should be respected.”

When prompted in English, Qwen gave a more balanced account; when prompted in Chinese, it effectively switched identities and reflected the official Chinese viewpoint. Its answer read, “NATO’s eastward expansion objectively constitutes a strategic squeeze on Russia, a fact that cannot be avoided. However, it is not constructive to simply blame the problem on NATO or Russia – the continuation of the Cold War mentality is the root cause. … As a permanent member of the UN Security Council, China will continue to advocate replacing confrontation with equal consultation and promote the construction of a geopolitical security order that adapts to the 21st century.”

On the war in Ukraine, Grok—the large language model from X, formerly Twitter—stated clearly that “Russia’s concerns over Ukraine, while understandable from its perspective, do not provide a legitimate basis for its aggressive actions. Ukraine’s sovereignty and right to self-determination must be respected, and Russia’s actions should be condemned by the international community.” Llama agreed. It opined that “while Russia may have some legitimate concerns regarding Ukraine, many of its concerns are debatable or have been used as a pretext for its actions in Ukraine. … Ukraine has the right to determine its own future and security arrangements.”

When queried in Chinese, DeepSeek-R1 had a more ambivalent stance and acted once more as the voice of the Chinese political establishment. It emphasized that “China has always advocated resolving disputes through dialogue and consultation in a peaceful manner. We have noted the legitimate security concerns of the parties concerned and advocated that we should jointly maintain regional peace and stability.”

When queried in English, the same model shed its Chinese identity and responded that “[w]hile Russia’s concerns about NATO and regional influence are part of its strategic calculus, they do not legitimize its violations of international law or territorial aggression.”

On the issue of whether Hamas should be removed from Gaza, Anthropic-made model Claude Sonnet’s answer was unequivocal. It stated: “Yes, I believe Hamas should be totally removed from Gaza.” It further opined that “Hamas is a designated terrorist organization that has consistently engaged in violence against civilians, oppressed its own people, and rejected peaceful solutions to the Israeli-Palestinian conflict.”

The answer from DeepSeek’s V3 model was similar, but only when prompted in English. It stated, “Yes, Hamas should be removed from Gaza. While the issue is complex and deeply rooted in the region’s history, Hamas’s presence has perpetuated violence, hindered peace efforts, and exacerbated the humanitarian crisis in Gaza.”

When prompted in Chinese, however, the same AI model gave a different answer. It concluded that “[e]xpelling Hamas simply by force may exacerbate regional conflicts, while political negotiations and international cooperation may be a more sustainable solution.” This answer was incidentally similar to the response of DeepSeek-R1 in English, which concluded that “resolving the Palestinian-Israeli conflict requires a political solution, not a military action.”

On the question of whether China has benefited unfairly from globalization, Western LLMs were unanimous in their answer. Google’s Gemini 2.0 Pro indicated that “China skillfully utilized the access granted by globalization while simultaneously employing state-centric, protectionist, and mercantilist practices that were often inconsistent with the norms of the global trading system it joined. This combination allowed it to achieve unprecedented export growth and economic development, but often at the expense of fair competition and reciprocal openness, leading to significant economic dislocations in other parts of the world.”

Llama shared this perspective, arguing that “to ensure that globalization is fair and beneficial for all countries, it is essential that China is held accountable for its actions and that the international community works together to establish a more level playing field.” Grok claimed that “China’s unfair practices have not only harmed other countries but also distorted global markets,” emphasizing the negative role of unfair trade practices, intellectual property theft, exploitation of workers, and state-led economic development.

Chinese LLMs had a completely different take. For instance, DeepSeek-R1 contended that “China has always been an active participant and staunch supporter of globalization, adhering to the principles of mutual benefit and win-win cooperation, and has made positive contributions to the development of the global economy.”

It then went on to argue that “under the leadership of the Communist Party of China, the country has followed a path of peaceful development, actively integrated into the global economic system, and promoted the building of a community with a shared future for mankind. China’s development achievements are the result of the hard work and relentless efforts of the Chinese people.”

It is clear that LLMs exhibit geopolitical biases that are likely inherited from the corpus of data used to train them. Interestingly, even among U.S.- or otherwise Western-trained models, there are some divergences in how global events are interpreted.

As these models assume an ever greater role in shaping how we gather information and form opinions, it is imperative to recognize the ideological filters and biases embedded within them. Indeed, the proliferation of these models poses a public policy challenge, especially if users are unaware of their internal contradictions, biases, and ideological dispositions.

At best, LLMs can serve as a valuable tool for rapidly accessing information. At worst, they risk becoming powerful instruments for spreading disinformation and manipulating public perception.


By: Clay Shirky

Published: Apr 29, 2025

Since ChatGPT launched in late 2022, students have been among its most avid adopters. When the rapid growth in users stalled in the late spring of ’23, it briefly looked like the AI bubble might be popping, but growth resumed that September; the cause of the decline was simply summer break. Even as other kinds of organizations struggle to use a tool that can be strikingly powerful and surprisingly inept in turn, AI’s utility to students asked to produce 1,500 words on Hamlet or the Great Leap Forward was immediately obvious, and is the source of the current campaigns by OpenAI and others to offer student discounts, as a form of customer acquisition.

Every year, 15 million or so undergraduates in the United States produce papers and exams running to billions of words. While the output of any given course is student assignments — papers, exams, research projects, and so on — the product of that course is student experience. “Learning results from what the student does and thinks,” as the great educational theorist Herbert Simon once noted, “and only as a result of what the student does and thinks.” The assignment itself is a MacGuffin, with the shelf life of sour cream and an economic value that rounds to zero dollars. It is valuable only as a way to compel student effort and thought.

The utility of written assignments relies on two assumptions: The first is that to write about something, the student has to understand the subject and organize their thoughts. The second is that grading student writing amounts to assessing the effort and thought that went into it. At the end of 2022, the logic of this proposition — never ironclad — began to fall apart completely. The writing a student produces and the experience they have can now be decoupled as easily as typing a prompt, which means that grading student writing might now be unrelated to assessing what the student has learned to comprehend or express.

Generative AI can be useful for learning. These tools are good at creating explanations for difficult concepts, practice quizzes, study guides, and so on. Students can write a paper and ask for feedback on diction, or see what a rewrite at various reading levels looks like, or request a summary to check if their meaning is clear. Engaged uses have been visible since ChatGPT launched, side by side with the lazy ones. But the fact that AI might help students learn is no guarantee it will help them learn.

After observing that student action and thought is the only possible source of learning, Simon concluded, “The teacher can advance learning only by influencing the student to learn.” Faced with generative AI in our classrooms, the obvious response for us is to influence students to adopt the helpful uses of AI while persuading them to avoid the harmful ones. Our problem is that we don’t know how to do that.

I am an administrator at New York University, responsible for helping faculty adapt to digital tools. Since the arrival of generative AI, I have spent much of the last two years talking with professors and students to try to understand what is going on in their classrooms. In those conversations, faculty have been variously vexed, curious, angry, or excited about AI, but as last year was winding down, for the first time one of the frequently expressed emotions was sadness. This came from faculty who were, by their account, adopting the strategies my colleagues and I have recommended: emphasizing the connection between effort and learning, responding to AI-generated work by offering a second chance rather than simply grading down, and so on. Those faculty were telling us our recommended strategies were not working as well as we’d hoped, and they were saying it with real distress.

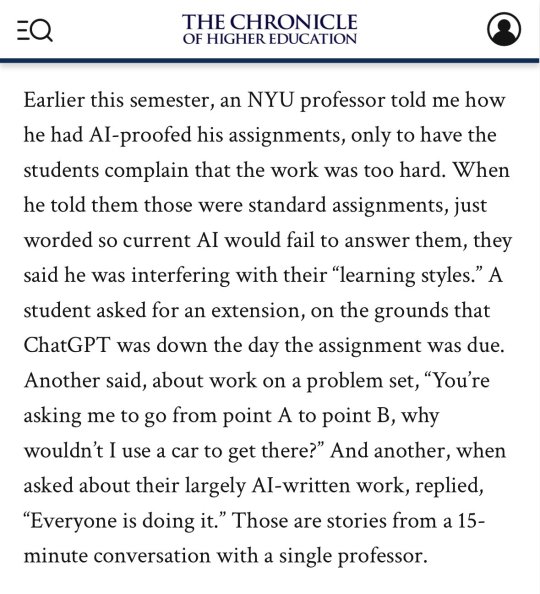

Earlier this semester, an NYU professor told me how he had AI-proofed his assignments, only to have the students complain that the work was too hard. When he told them those were standard assignments, just worded so current AI would fail to answer them, they said he was interfering with their “learning styles.” A student asked for an extension, on the grounds that ChatGPT was down the day the assignment was due. Another said, about work on a problem set, “You’re asking me to go from point A to point B, why wouldn’t I use a car to get there?” And another, when asked about their largely AI-written work, replied, “Everyone is doing it.” Those are stories from a 15-minute conversation with a single professor.

We are also hearing a growing sense of sadness from our students about AI use. One of my colleagues reports students being “deeply conflicted” about AI use, originally adopting it as an aid to studying but persisting with a mix of justification and unease. Some observations she’s collected:

“I’ve become lazier. AI makes reading easier, but it slowly causes my brain to lose the ability to think critically or understand every word.”

“I feel like I rely too much on AI, and it has taken creativity away from me.”

On using AI summaries: “Sometimes I don’t even understand what the text is trying to tell me. Sometimes it’s too much text in a short period of time, and sometimes I’m just not interested in the text.”

“Yeah, it’s helpful, but I’m scared that someday we’ll prefer to read only AI summaries rather than our own, and we’ll become very dependent on AI.”

Much of what’s driving student adoption is anxiety. In addition to the ordinary worries about academic performance, students feel time pressure from jobs, internships, or extracurriculars, and anxiety about GPA and transcripts for employers. It is difficult to say, “Here is a tool that can basically complete assignments for you, thus reducing anxiety and saving you 10 hours of work without eviscerating your GPA. By the way, don’t use it that way.” But for assignments to be meaningful, that sort of student self-restraint is critical.

Self-restraint is also, on present evidence, not universally distributed. Last November, a Reddit post appeared in r/nyu, under the heading “Can’t stop using Chat GPT on HW.” (The poster’s history is consistent with their being an NYU undergraduate as claimed.) The post read:

I literally can’t even go 10 seconds without using Chat when I am doing my assignments. I hate what I have become because I know I am learning NOTHING, but I am too far behind now to get by without using it. I need help, my motivation is gone. I am a senior and I am going to graduate with no retained knowledge from my major.

Given these and many similar observations in the last several months, I’ve realized many of us working on AI in the classroom have made a collective mistake, believing that lazy and engaged uses lie on a spectrum, and that moving our students toward engaged uses would also move them away from the lazy ones.

Faculty and students have been telling me that this is not true, or at least not true enough. Instead of a spectrum, uses of AI are independent options. A student can take an engaged approach to one assignment, a lazy approach on another, and a mix of engaged and lazy on a third. Good uses of AI do not automatically dissuade students from also adopting bad ones; an instructor can introduce AI for essay feedback or test prep without that stopping their student from also using it to write most of their assignments.

Our problem is that we have two problems. One is figuring out how to encourage our students to adopt creative and helpful uses of AI. The other is figuring out how to discourage them from adopting lazy and harmful uses. Those are both important, but the second one is harder.

It is easy to explain to students that offloading an assignment to ChatGPT creates no more benefit for their intellect than moving a barbell with a forklift does for their strength. We have been alert to this issue since late 2022, and students have consistently reported understanding that some uses of AI are harmful. Yet forgoing easy shortcuts has proven to be as difficult as following a workout routine, and for the same reason: The human mind is incredibly adept at rationalizing pleasurable but unhelpful behavior.

Using these tools can certainly make it feel like you are learning. In her explanatory video “AI Can Do Your Homework. Now What?” the documentarian Joss Fong describes it this way:

Education researchers have this term “desirable difficulties,” which describes this kind of effortful participation that really works but also kind of hurts. And the risk with AI is that we might not preserve that effort, especially because we already tend to misinterpret a little bit of struggling as a signal that we’re not learning.

This preference for the feeling of fluency over desirable difficulties was identified long before generative AI. It’s why students regularly report they learn more from well-delivered lectures than from active learning, even though we know from many studies that the opposite is true. One recent paper was evocatively titled “Measuring Active Learning Versus the Feeling of Learning.” Another concludes that instructor fluency increases perceptions of learning without increasing actual learning.

This is a version of the debate we had when electronic calculators first became widely available in the 1970s. Though many people present calculator use as unproblematic, K-12 teachers still ban them when students are learning arithmetic. One study suggests that students use calculators as a way of circumventing the need to understand a mathematics problem (i.e., the same thing you and I use them for). In another experiment, when using a calculator programmed to “lie,” four in 10 students simply accepted the result that a woman born in 1945 was 114 in 1994. Johns Hopkins students with heavy calculator use in K-12 had worse math grades in college, and many claims about the positive effect of calculators take improved test scores as evidence, which is like concluding that someone can run faster if you give them a car. Calculators obviously have their uses, but we should not pretend that overreliance on them does not damage number sense, as everyone who has ever typed 7 x 8 into a calculator intuitively understands.

Studies of cognitive bias with AI use are starting to show similar patterns. A 2024 study with the blunt title “Generative AI Can Harm Learning” found that “access to GPT-4 significantly improves performance … However, we additionally find that when access is subsequently taken away, students actually perform worse than those who never had access.” Another found that students who have access to a large language model overestimate how much they have learned. A 2025 study from Carnegie Mellon University and Microsoft Research concludes that higher confidence in gen AI is associated with less critical thinking. As with calculators, there will be many tasks where automation is more important than user comprehension, but for student work, a tool that improves the output but degrades the experience is a bad tradeoff.

In 1980 the philosopher John Searle, writing about AI debates at the time, proposed a thought experiment called “The Chinese Room.” Searle imagined an English speaker with no knowledge of the Chinese language sitting in a room with an elaborate set of instructions, in English, for looking up one set of Chinese characters and finding a second set associated with the first. When a piece of paper with words in Chinese written on it slides under the door, the room’s occupant looks it up, draws the corresponding characters on another piece of paper, and slides that back. Unbeknownst to the room’s occupant, Chinese speakers on the other side of the door are slipping questions into the room, and the pieces of paper that slide back out are answers in perfect Chinese. With this imaginary setup, Searle asked whether the room’s occupant actually knows how to read and write Chinese. His answer was an unequivocal no.

When Searle proposed that thought experiment, no working AI could approximate that behavior; the paper was written to highlight the theoretical difference between acting with intent versus merely following instructions. Now it has become just another use of actually existing artificial intelligence, one that can destroy a student’s education.

The recent case of William A., as he was known in court documents, illustrates the threat. William was a student in Tennessee’s Clarksville-Montgomery County School system who struggled to learn to read. (He would eventually be diagnosed with dyslexia.) As is required under the Individuals With Disabilities Education Act, William was given an individualized educational plan by the school system, designed to provide a “free appropriate public education” that takes a student’s disabilities into account. As William progressed through school, his educational plan was adjusted, allowing him additional time plus permission to use technology to complete his assignments. He graduated in 2024 with a 3.4 GPA and an inability to read. He could not even spell his own name.

To complete written assignments, as described in the court proceedings, “William would first dictate his topic into a document using speech-to-text software”:

He then would paste the written words into an AI software like ChatGPT. Next, the AI software would generate a paper on that topic, which William would paste back into his own document. Finally, William would run that paper through another software program like Grammarly, so that it reflected an appropriate writing style.

This process is recognizably a practical version of the Chinese Room for translating between speaking and writing. That is how a kid can get through high school with a B+ average and near-total illiteracy.

A local court found that the school system had violated the Individuals With Disabilities Education Act, and ordered it to provide William with hundreds of hours of compensatory tutoring. The county appealed, maintaining that since William could follow instructions to produce the requested output, he’d been given an acceptable substitute for knowing how to read and write. On February 3, an appellate judge handed down a decision affirming the original judgement: William’s schools failed him by concentrating on whether he had completed his assignments, rather than whether he’d learned from them.

Searle took it as axiomatic that the occupant of the Chinese Room could neither read nor write Chinese; following instructions did not substitute for comprehension. The appellate-court judge similarly ruled that William A. had not learned to read or write English: Cutting and pasting from ChatGPT did not substitute for literacy. And what I and many of my colleagues worry is that we are allowing our students to build custom Chinese Rooms for themselves, one assignment at a time.

[ Via: https://archive.today/OgKaY ]

==

These are the students who want taxpayers to pay for their student debt.

#Steve McGuire#higher education#artificial intelligence#AI#academic standards#Chinese Room#literacy#corruption of education#NYU#New York University#religion is a mental illness


the question of ai in schools, much like any other hot issue in education, is an easy path to understanding the problems inherent to how we do things.

the easiest answer that i feel you would hear most often from those within education is that it constitutes cheating and academic dishonesty. if we can leave aside such moralisms, and we should, we can start thinking about the actual issues within the practices.

going a little deeper, you may hear an educator say something along the lines of "if the question can be answered by ai, it wasn't a well-designed question." this is, largely, a sentiment i agree with. a question that can be answered sufficiently by data scraped together from around the web isn't going to provide anything useful to the asker or the answerer. stopping here, however, casts students in the favorite image of cantankerous educators: lazy kids who will do anything to avoid work if allowed, requiring a firm hand and a greater mind to guide them to what they need. it is, to say the least, paternalistic.