#AI Pathology Tools

Explore tagged Tumblr posts

Text

#AI in Diagnostics#Diagnostic AI Software#Radiology AI Tools#Neurology Diagnostics AI#Oncology AI Diagnostics#AI Pathology Tools#Hospital AI Adoption#Clinical Decision Support AI#Diagnostic Workflow Automation

0 notes

Text

To me there's a deeper meaning, though I don't know how to express it, on people using pathologizing language in education i.e. "cognitive atrophy" "brain damage" as an explanation for learning issues.

Which to be fair, the authors of that paper asked specifically not to be used, but the twitter thread linked on that post (which has 38k notes as of this post, jesus christ) did use, extensively. Using language such as "brain damage", "cognitive atrophy" and how could I forget, "soulless".

However, the authors of the paper also didn't have a good methodology, other people have gotten into it better than myself, but the paper does not really point to any cognitive decline, and the methodology used does not offer long-term explanations. But what I do think is that when we're talking about things such as AIs in education, or any other new technology, we should investigate them as tools used in a social context. Why do people use AI as a tool? How do educators and instutions handle this? In which way this tool worsen education or might, god forbid, enhance it? Here the focus, as so much Usamerican education research at least in my experience, is on almost purely numerical and anatomical (EEG? really?) results rather than any pedagogical studies on the tools and their users. (and those results are very poor too)

And once you go down the road of finding pathological answers to educational issues, you will start finding pathological solutions instead of social ones. Why try to confront social or familial issues, when these students obviously have something wrong with their brains? Hell, why even provide them with education, their brains can't even handle it. Eventually you might as well give up and just educate the worthy ones. If you don't believe me, see how education and mental healthcare in the US works.

For someone like me who believes that education is fundamentally a social institution and that every student comes from a social and cultural background that needs to be understood before the process of learning begins, these studies (or rather, unhinged twitter threads) that claim that a complex process like learning can be understood in pathological terms are profoundly hostile to me.

1K notes

·

View notes

Text

To the ones navigating visible or invisible storms…

To those whose minds flicker like signal towers — misunderstood, mislabeled, misfired…

To those who are stuck in between; who have been through countless of rites of passage…

To those who can’t fill the void within; who restlessly yearn for a place to call home…

To every BPD survivor and artist who’s been called too much, too broken, or too dramatic — when in truth, you were carrying too many timelines in a single heart. And you were being taken down by the ones who were simply too little.

To the glitching geniuses — the neurodivergent who kept transmitting authenticity and enchantment while the world tried to static you out.

To those who’ve screamed into the void, only to make the void echo back in art.

This… is for you.

BPD and neurodivergent people are often brilliant. Deeply artistic. Incredibly intelligent.

No, it’s not about romanticization. It’s about reclaiming and embracing ourselves. Restoring self-regard. Nurturing the inner-child deeply as they always deserved. Reclaiming the essential parts we neglected just to adapt and survive.

Yes, it includes what they try to pathologize — how they reduce us to stereotypes, and shove us all into one dusty box of convenience. We should get untangled from the shallow perspectives and unfair narratives.

We’re not denying the struggle, the pain, or the altered life quality. Yet we do deserve to lay emphasis on what’s shiny about us too.

We dare to look zoomed in or out; to bring more feeling or sense to things. And sometimes we are, and we do, things that can’t even get squeezed into labels. And the list could go on for pages, really…

Most people who don’t live this reality can’t even begin to imagine the dimensionality, the depth, or the creative voltage we hold.

We’re a neon glitch that will always stand out, and be proud to be doing so.

We are more… much more than our survival.

And I dedicate this album to you my fellow void-bound gifted wrecklings 🖤🤍🩶

🔳 We shall never forget the power of the void.

Time to assimilate and re-integrate:

(my first album)

A cosmic audiovisual project I directed and edited using multiple tools including AI — now live and streaming on all music platforms.

Music, visuals, sound design, and personal messages all woven together to create a full sensory experience.

I created this over the course of two intense months through blood, sweat, and serious health challenges.

(As a side note: the brand’s initial foundation for art and blogging, Eclectic Ways, and the broader concept behind this project have been taking shape since 2020.)

This is a deeply personal release, and I’d truly appreciate your honest impressions and kind support.

🎧Main genres: Electronic • Art Rock

⛓️Sync with my pulse on:

⌇🧤

⌇🍎

🎥 The music videos for Abduct US! & Into The Pull is now on

⌇🕹️

✨More Eclectic Whizzed visuals are on the way!

🌀Also streaming on more platforms.

#eclectic whiz#void-bound#actually bpd#actually adhd#neurodivergent#neurodiverse artist#bpd artist#into the void#curators on tumblr#witchblr#new album#art rock#synthwave#realartistwithai#artists on tumblr#I am more than my survival#mental health awareness#glitch witch#healing through art#sensory art#visual music#anhedonia#dissociation#ai art#complex dissociative disorder#experimental music#indie artist#emotiwave#ai music#hybrid art

62 notes

·

View notes

Text

Who Broke the Internet? Part II

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in PITTSBURGH on in THURSDAY (May 15) at WHITE WHALE BOOKS, and in PDX on Jun 20 at BARNES AND NOBLE with BUNNIE HUANG. More tour dates (London, Manchester) here.

"Understood: Who Broke the Internet?" is my new podcast for CBC about the enshittogenic policy decisions that gave rise to enshittification. Episode two just dropped: "ctrl-ctrl-ctrl":

https://www.cbc.ca/listen/cbc-podcasts/1353-the-naked-emperor/episode/16145640-ctrl-ctrl-ctrl

The thesis of the show is straightforward: the internet wasn't killed by ideological failings like "greed," nor by economic concepts like "network effects," nor by some cyclic force of history that drives towards "re-intermediation." Rather, all of these things were able to conquer the open, wild, creative internet because of policies that meant that companies that yielded to greed were able to harness network effects in order to re-intermediate the internet.

My enshittification work starts with the symptoms of enshittification, the procession of pathological changes we can observe as platform users and sellers. Stage one: platforms are good to their end users while locking them in. Stage two: platforms worsen things for those captive users in order to tempt in business customers – who they also lock in. Stage three: platforms squeeze those locked-in business customers (publishers, advertisers, performers, workers, drivers, etc), and leave behind only the smallest atoms of value that are needed to keep users and customers stuck to the system. All the value except for this mingy residue is funneled to shareholders and executives, and the system becomes a pile of shit.

This pattern is immediately recognizable as the one we've all experienced and continue to experience, from eBay taking away your right to sue when you're ripped off:

https://www.valueaddedresource.net/ebay-user-agreement-may-2025-arbitration/

Or Duolingo replacing human language instructors with AI, even though by definition language learners are not capable of identifying and correcting errors in AI-generated language instruction (if you knew more about a language than the AI, you wouldn't need Duolingo):

https://www.bloodinthemachine.com/p/the-ai-jobs-crisis-is-here-now

I could cite examples all day long, from companies as central as Amazon:

https://pluralistic.net/2022/11/28/enshittification/#relentless-payola

To smarthome niche products like Sonos:

https://www.reuters.com/business/retail-consumer/sonos-ceo-patrick-spence-steps-down-after-app-update-debacle-2025-01-13/

To professional tools like Photoshop:

https://pluralistic.net/2022/10/28/fade-to-black/#trust-the-process

To medical implants like artificial eyes:

https://pluralistic.net/2022/12/12/unsafe-at-any-speed/#this-is-literally-your-brain-on-capitalism

To the entire nursing profession:

https://pluralistic.net/2024/12/18/loose-flapping-ends/#luigi-has-a-point

To the cars on our streets:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

And the gig workers who drive them:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

There is clearly an epidemic �� a pandemic – of enshittification, and cataloging the symptoms is important to tracking the spread of the disease. But if we're going to do something to stem the tide, we need to identify the contagion. What caused enshittification to take root, what allows it to spread, and who was patient zero?

That's where "Understood: Who Broke the Internet?" comes in:

https://www.cbc.ca/listen/cbc-podcasts/1353-the-naked-emperor

At root, "enshittification" is a story about constraints – not the bad things that platforms are doing now, but rather, the forces that stopped them from doing those things before. There are four of those constraints:

I. Competition: When we stopped enforcing antitrust law, we let companies buy their competitors ("It is better to buy than to compete" -M. Zuckerberg). That insulated companies from market-based punishments for enshittification, because a handful of large companies can enshittify in lockstep, matching each other antifeature for antifeature. You can't shop your way out of a monopoly.

II. Regulation: The collapse of tech into "five giant websites, each filled with screenshots of the other four" (-T. Eastman) allowed the Big Tech cartel to collude to capture its regulators. Tech companies don't have to worry about governments stepping in to punish them for enshittificatory tactics, because the government is on Big Tech's side.

III. Labor: When tech workers were scarce and companies competed fiercely for their labor, they were able to resist demands to enshittify the products they created and cared about. But "I fight for the user," only works if you have power over your boss, and scarcity-derived power is brittle, crumbling as soon as labor supply catches up with demand (this is why tech bosses are so excited to repeat the story that AI can replace programmers – whether or not it's true, it is an effective way to gut scarcity-driven tech worker power). Without unions, tech worker power vanished.

IV. Interoperability: The same digital flexibility that lets tech companies pull the enshittifying bait-and-switch whereby prices, recommendations, and costs are constantly changing cuts both ways. Digital toolsmiths have always thwarted enshittification with ad- and tracker-blockers, alternative clients, scrapers, etc. In a world of infinitely flexible computers, every 10' high pile of shit summons a hacker with an 11' ladder.

This week's episode of "Who Broke the Internet?" focuses on those IP laws, specifically, the legislative history of the Digital Millennium Copyright Act, a 1998 law whose Section 1201 bans any kind of disenshittifying mods and hacks.

We open the episode with Dmitry Skylarov being arrested at Def Con in 2001, after he gave a presentation explaining how he defeated the DRM on Adobe ebooks, so that ebook owners could move their books between devices and open them with different readers. Skylarov was a young father of two, a computer scientist, who found himself in the FBI's clutches, facing a lengthy prison sentence for telling an American audience that Adobe's product was defective, and explaining how to exploit its defects to let them read their own books.

Skylarov was the first person charged with a felony under DMCA 1201, and while the fact of his arrest shocked technically minded people at the time, it was hardly a surprise to anyone familiar with DMCA 1201. This was a law acting exactly as intended.

DMCA 1201 has its origins in the mid-1990s, when Al Gore was put in charge of the National Information Infrastructure program to demilitarize the internet and open it for civilian use (AKA the "Information Superhighway"). Gore came into conflict with Bruce Lehman, Bill Clinton's IP Czar, who proposed a long list of far-ranging, highly restrictive rules for the new internet, including an "anticircumvention" rule that would ban tampering with digital locks.

This was a pretty obscure and technical debate, but some people immediately grasped its significance. Pam Samuelson, the eminent Berkeley copyright scholar, raised the alarm, rallying a diverse coalition against Lehman's proposal. They won – Gore rejected Lehman's ideas and sent him packing. But Lehman didn't give up easily – he flew straight to Geneva, where he arm-twisted the UN's World Property Organization into passing two "internet treaties" that were virtually identical to the proposals that Gore had rejected. Then, Lehman went back to the USA and insisted that Congress had to overrule Gore and live up to its international obligations by adopting his law. As Lehman said – on some archival tape we were lucky to recover – he did "an end-run around Congress."

Lehman had been warned, in eye-watering detail, about the way that his rule protecting digital locks would turn into a system of private laws. Once a device was computerized, all a manufacturer needed to do was wrap it in a digital lock, and in that instant, it would become a literal felony of use that digital device in ways the manufacturer didn't like. It didn't matter if you were legally entitled to do something, like taking your car to an independent mechanic, refilling your ink cartridge, blocking tracking on Instagram, or reading your Kindle books on a Kobo device. The fact that tampering with digital locks was a crime, combined with the fact that you had to get around a digital lock to do these things, made these things illegal.

Lehman knew that this would happen. The fact that his law led – in just a few short years – to a computer scientist being locked up by the FBI for disclosing defects in a widely used consumer product, was absolutely foreseeable at the time Lehman was doing his Geneva two-step and "doing an end-run around Congress."

The point is that there were always greedy bosses, and since the turn of the century, they'd had the ability to use digital tools to enshittify their services. What changed wasn't the greed – it was the law. When Bruce Lehman disarmed every computer user, he rendered us helpless against the predatory instincts of anyone with a digital product or service, at a moment when everything was being digitized.

This week's episode recovers some of the lost history, an act I find very liberating. It's easy to feel like you're a prisoner of destiny, whose life is being shaped by vast, impersonal forces. But the enshittificatory torments of the modern digital age are the result of specific choices, made by named people, in living memory. Knowing who did this to us, and what they did, is the first step to undoing it.

In next week's episode, we'll tell you about the economic theories that created the "five giant websites filled with screenshots of the other four." We'll tell you who foisted those policies on us, and show you the bright line from them to the dominance of companies like Amazon. And we'll set up the conclusion, where we'll tell you how we'll wipe out the legacies of these monsters of history and kill the enshitternet.

Get "Understood: Who Broke the Internet?" in whatever enshittified app you get your podcasts on (or on Antennapod, which is pretty great). Here's the RSS:

https://www.cbc.ca/podcasting/includes/nakedemperor.xml

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/05/13/ctrl-ctrl-ctrl/#free-dmitry

#podcasts#enshittification#bruce lehman#dmitry syklarov#defcon#dmca#dmca 1201#cbc#cbc understood#understood#pluralistic#dmitry skylarov

97 notes

·

View notes

Text

The melding of visual information (microscopic and X-ray images, CT and MRI scans, for example) with text (exam notes, communications between physicians of varying specialties) is a key component of cancer care. But while artificial intelligence helps doctors review images and home in on disease-associated anomalies like abnormally shaped cells, it’s been difficult to develop computerized models that can incorporate multiple types of data. Now researchers at Stanford Medicine have developed an AI model able to incorporate visual and language-based information. After training on 50 million medical images of standard pathology slides and more than 1 billion pathology-related texts, the model outperformed standard methods in its ability to predict the prognoses of thousands of people with diverse types of cancer, to identify which people with lung or gastroesophageal cancers are likely to benefit from immunotherapy, and to pinpoint people with melanoma who are most likely to experience a recurrence of their cancer.

Continue Reading.

109 notes

·

View notes

Note

You said that you have problems with how the LA characterized Ai, could you pls elaborate on that?

Note I haven't actually finished the LA myself. (I'm getting to it, eventually)

I'm working on a longer post about it as I chew my way through the LA series and the movie but my main issue thus far is a mixture of the show cutting some of her most important character moments and not making up for their absence with new or reworked material and making lots of small changes to her as a character that don't change a lot in the moment but really add up when they're all stacked on top of each other. The biggest problem is just that it comes off really obvious to me that Ai was just... not remotely a priority for the LA team and they either didn't care about or didn't understand her character as written in the original manga.

I get that's an extremely bold claim to make but it's really hard not to feel it, even just coming off episode 1. Like the anime, it adapts the first volume but pretty much guts it - it seems to be an exercise in cutting as much as possible while technically retaining the plot of the volume. And to be clear, this is because the LA episodes are only 35 mins and some change long, so that's a whole lot less time than the anime has to adapt the same amount of content. But the LA team's priorities in choosing what to cut and what to retain is fucking bizarre. Like... let me walk you through the episode in terms of how the material is adapted. Chapter 1 is pretty much entirely gone - the episode opens with about three minutes of a B-Komachi performance then some chopped up snippets of chapter 2 and Literally Two Entire Lines from chapter 1 before there's a bit of original material to bridge the gap for the MASSIVE jump the story takes when it jumps ahead to a mashup of chapters 5 and 6 that barely features Ai at all. From there, we move right to a bizarrely chopped up version of her "I'm a liar" joker monologue/flashback in chapter 8 and continue from there to the end of volume 1 with no other major cuts. It takes all of twenty five minutes for us to reach Ai's death and to make matters worse, the LA series retains the interview segments from the manga - so those twenty five minutes aren't even really about her. Being as generous as I can, I would say we don't even have twenty minutes to get to know Ai before the story then spends ten minutes gleefully oogling at its comparatively much more lurid and sensational take on her death, down to closeups of the knife in her body and having the child actors scream and cry and wail about it.

If you're looking at that summary and thinking "uh, well what about chapter 4? chapter 7??? CHAPTER 1?????" then it's my great the-opposite-of-delight to tell you that they're entirely cut with no efforts to incorporate the characterization into the remaining and original material. Everything those chapters tell us about Ai's self hatred, her sense of isolation and inferiority and the way her relationship with the twins helps her to become a better person is just... gone. Poof. Even when the LA series adds original material or even tries to do flashbacks later down the line, it's too little too late. The result is that not only is Ai a much less well realized character in the LA series but that the audience has way less time to give a shit about her and way fewer tools to understand her.

And as for the Ai POV/flashback stuff in The Last Act.................... god i don't have the strength to talk about that right now. I'll just say.... remember how highly I've spoken about how OnK handles the topic of abuse before? That for all his other fumbles, Aka Akasaka is a writer with an extremely good understanding of the pathology of abuse and that despite being despicable people, Ayumi Hoshino and Airi Himekawa are both fantastically well-realized characters, deeply human and that Akasaka both understand and expresses their pathology, their methods of abuse and the impact it has on their victims phenomenally well?

well, um. unfortunately, TLA does. the opposite of that.

16 notes

·

View notes

Text

Been a while, crocodiles. Let's talk about cad.

or, y'know...

Yep, we're doing a whistle-stop tour of AI in medical diagnosis!

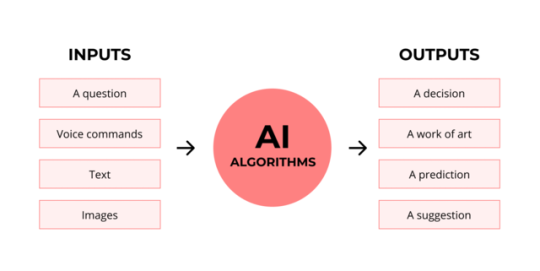

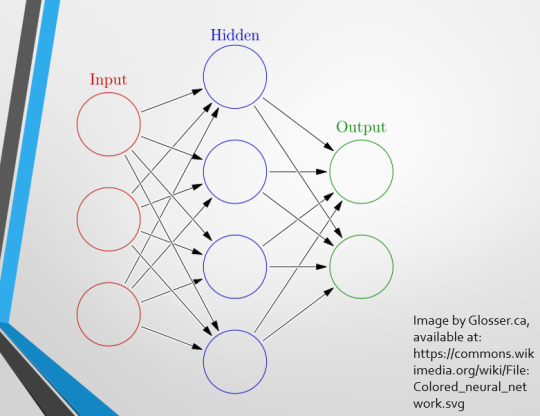

Much like programming, AI can be conceived of, in very simple terms, as...

a way of moving from inputs to a desired output.

See, this very funky little diagram from skillcrush.com.

The input is what you put in. The output is what you get out.

This output will vary depending on the type of algorithm and the training that algorithm has undergone – you can put the same input into two different algorithms and get two entirely different sorts of answer.

Generative AI produces ‘new’ content, based on what it has learned from various inputs. We're talking AI Art, and Large Language Models like ChatGPT. This sort of AI is very useful in healthcare settings to, but that's a whole different post!

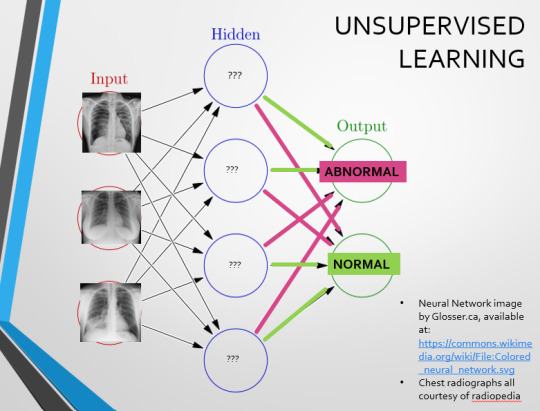

Analytical AI takes an input, such as a chest radiograph, subjects this input to a series of analyses, and deduces answers to specific questions about this input. For instance: is this chest radiograph normal or abnormal? And if abnormal, what is a likely pathology?

We'll be focusing on Analytical AI in this little lesson!

Other forms of Analytical AI that you might be familiar with are recommendation algorithms, which suggest items for you to buy based on your online activities, and facial recognition. In facial recognition, the input is an image of your face, and the output is the ability to tie that face to your identity. We’re not creating new content – we’re classifying and analysing the input we’ve been fed.

Many of these functions are obviously, um, problematique. But Computer-Aided Diagnosis is, potentially, a way to use this tool for good!

Right?

....Right?

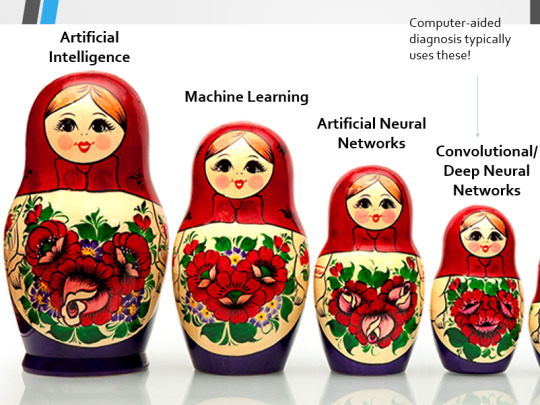

Let's dig a bit deeper! AI is a massive umbrella term that contains many smaller umbrella terms, nested together like Russian dolls. So, we can use this model to envision how these different fields fit inside one another.

AI is the term for anything to do with creating and managing machines that perform tasks which would otherwise require human intelligence. This is what differentiates AI from regular computer programming.

Machine Learning is the development of statistical algorithms which are trained on data –but which can then extrapolate this training and generalise it to previously unseen data, typically for analytical purposes. The thing I want you to pay attention to here is the date of this reference. It’s very easy to think of AI as being a ‘new’ thing, but it has been around since the Fifties, and has been talked about for much longer. The massive boom in popularity that we’re seeing today is built on the backs of decades upon decades of research.

Artificial Neural Networks are loosely inspired by the structure of the human brain, where inputs are fed through one or more layers of ‘nodes’ which modify the original data until a desired output is achieved. More on this later!

Deep neural networks have two or more layers of nodes, increasing the complexity of what they can derive from an initial input. Convolutional neural networks are often also Deep. To become ‘convolutional’, a neural network must have strong connections between close nodes, influencing how the data is passed back and forth within the algorithm. We’ll dig more into this later, but basically, this makes CNNs very adapt at telling precisely where edges of a pattern are – they're far better at pattern recognition than our feeble fleshy eyes!

This is massively useful in Computer Aided Diagnosis, as it means CNNs can quickly and accurately trace bone cortices in musculoskeletal imaging, note abnormalities in lung markings in chest radiography, and isolate very early neoplastic changes in soft tissue for mammography and MRI.

Before I go on, I will point out that Neural Networks are NOT the only model used in Computer-Aided Diagnosis – but they ARE the most common, so we'll focus on them!

This diagram demonstrates the function of a simple Neural Network. An input is fed into one side. It is passed through a layer of ‘hidden’ modulating nodes, which in turn feed into the output. We describe the internal nodes in this algorithm as ‘hidden’ because we, outside of the algorithm, will only see the ‘input’ and the ‘output’ – which leads us onto a problem we’ll discuss later with regards to the transparency of AI in medicine.

But for now, let’s focus on how this basic model works, with regards to Computer Aided Diagnosis. We'll start with a game of...

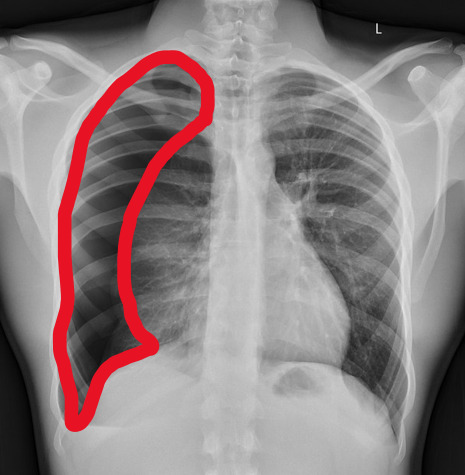

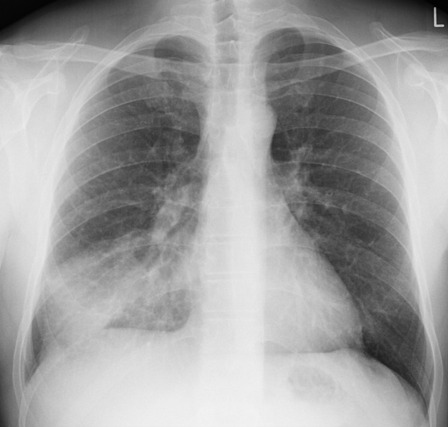

Spot The Pathology.

yeah, that's right. There's a WHACKING GREAT RIGHT-SIDED PNEUMOTHORAX (as outlined in red - images courtesy of radiopaedia, but edits mine)

But my question to you is: how do we know that? What process are we going through to reach that conclusion?

Personally, I compared the lungs for symmetry, which led me to note a distinct line where the tissue in the right lung had collapsed on itself. I also noted the absence of normal lung markings beyond this line, where there should be tissue but there is instead air.

In simple terms.... the right lung is whiter in the midline, and black around the edges, with a clear distinction between these parts.

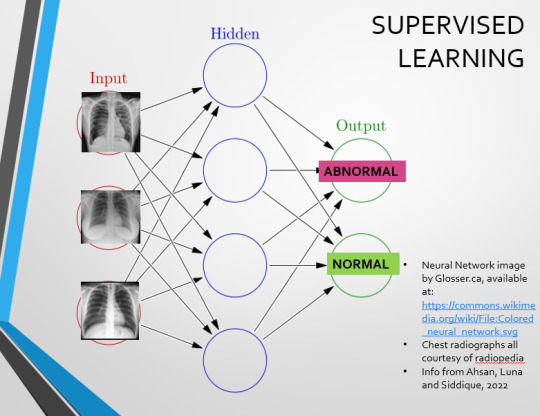

Let’s go back to our Neural Network. We’re at the training phase now.

So, we’re going to feed our algorithm! Homnomnom.

Let’s give it that image of a pneumothorax, alongside two normal chest radiographs (middle picture and bottom). The goal is to get the algorithm to accurately classify the chest radiographs we have inputted as either ‘normal’ or ‘abnormal’ depending on whether or not they demonstrate a pneumothorax.

There are two main ways we can teach this algorithm – supervised and unsupervised classification learning.

In supervised learning, we tell the neural network that the first picture is abnormal, and the second and third pictures are normal. Then we let it work out the difference, under our supervision, allowing us to steer it if it goes wrong.

Of course, if we only have three inputs, that isn’t enough for the algorithm to reach an accurate result.

You might be able to see – one of the normal chests has breasts, and another doesn't. If both ‘normal’ images had breasts, the algorithm could as easily determine that the lack of lung markings is what demonstrates a pneumothorax, as it could decide that actually, a pneumothorax is caused by not having breasts. Which, obviously, is untrue.

or is it?

....sadly I can personally confirm that having breasts does not prevent spontaneous pneumothorax, but that's another story lmao

This brings us to another big problem with AI in medicine –

If you are collecting your dataset from, say, a wealthy hospital in a suburban, majority white neighbourhood in America, then you will have those same demographics represented within that dataset. If we build a blind spot into the neural network, and it will discriminate based on that.

That’s an important thing to remember: the goal here is to create a generalisable tool for diagnosis. The algorithm will only ever be as generalisable as its dataset.

But there are plenty of huge free datasets online which have been specifically developed for training AI. What if we had hundreds of chest images, from a diverse population range, split between those which show pneumothoraxes, and those which don’t?

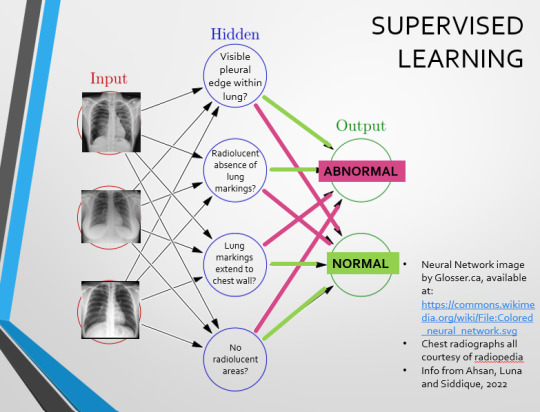

If we had a much larger dataset, the algorithm would be able to study the labelled ‘abnormal’ and ‘normal’ images, and come to far more accurate conclusions about what separates a pneumothorax from a normal chest in radiography. So, let’s pretend we’re the neural network, and pop in four characteristics that the algorithm might use to differentiate ‘normal’ from ‘abnormal’.

We can distinguish a pneumothorax by the appearance of a pleural edge where lung tissue has pulled away from the chest wall, and the radiolucent absence of peripheral lung markings around this area. So, let’s make those our first two nodes. Our last set of nodes are ‘do the lung markings extend to the chest wall?’ and ‘Are there no radiolucent areas?’

Now, red lines mean the answer is ‘no’ and green means the answer is ‘yes’. If the answer to the first two nodes is yes and the answer to the last two nodes is no, this is indicative of a pneumothorax – and vice versa.

Right. So, who can see the problem with this?

(image courtesy of radiopaedia)

This chest radiograph demonstrates alveolar patterns and air bronchograms within the right lung, indicative of a pneumonia. But if we fed it into our neural network...

The lung markings extend all the way to the chest wall. Therefore, this image might well be classified as ‘normal’ – a false negative.

Now we start to see why Neural Networks become deep and convolutional, and can get incredibly complex. In order to accurately differentiate a ‘normal’ from an ‘abnormal’ chest, you need a lot of nodes, and layers of nodes. This is also where unsupervised learning can come in.

Originally, Supervised Learning was used on Analytical AI, and Unsupervised Learning was used on Generative AI, allowing for more creativity in picture generation, for instance. However, more and more, Unsupervised learning is being incorporated into Analytical areas like Computer-Aided Diagnosis!

Unsupervised Learning involves feeding a neural network a large databank and giving it no information about which of the input images are ‘normal’ or ‘abnormal’. This saves massively on money and time, as no one has to go through and label the images first. It is also surprisingly very effective. The algorithm is told only to sort and classify the images into distinct categories, grouping images together and coming up with its own parameters about what separates one image from another. This sort of learning allows an algorithm to teach itself to find very small deviations from its discovered definition of ‘normal’.

BUT this is not to say that CAD is without its issues.

Let's take a look at some of the ethical and practical considerations involved in implementing this technology within clinical practice!

(Image from Agrawal et al., 2020)

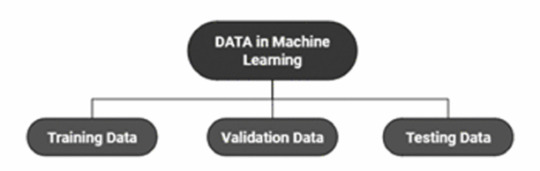

Training Data does what it says on the tin – these are the initial images you feed your algorithm. What is key here is volume, variety - with especial attention paid to minimising bias – and veracity. The training data has to be ‘real’ – you cannot mislabel images or supply non-diagnostic images that obscure pathology, or your algorithm is useless.

Validation data evaluates the algorithm and improves on it. This involves tweaking the nodes within a neural network by altering the ‘weights’, or the intensity of the connection between various nodes. By altering these weights, a neural network can send an image that clearly fits our diagnostic criteria for a pneumothorax directly to the relevant output, whereas images that do not have these features must be put through another layer of nodes to rule out a different pathology.

Finally, testing data is the data that the finished algorithm will be tested on to prove its sensitivity and specificity, before any potential clinical use.

However, if algorithms require this much data to train, this introduces a lot of ethical questions.

Where does this data come from?

Is it ‘grey data’ (data of untraceable origin)? Is this good (protects anonymity) or bad (could have been acquired unethically)?

Could generative AI provide a workaround, in the form of producing synthetic radiographs? Or is it risky to train CAD algorithms on simulated data when the algorithms will then be used on real people?

If we are solely using CAD to make diagnoses, who holds legal responsibility for a misdiagnosis that costs lives? Is it the company that created the algorithm or the hospital employing it?

And finally – is it worth sinking so much time, money, and literal energy into AI – especially given concerns about the environment – when public opinion on AI in healthcare is mixed at best? This is a serious topic – we’re talking diagnoses making the difference between life and death. Do you trust a machine more than you trust a doctor? According to Rojahn et al., 2023, there is a strong public dislike of computer-aided diagnosis.

So, it's fair to ask...

why are we wasting so much time and money on something that our service users don't actually want?

Then we get to the other biggie.

There are also a variety of concerns to do with the sensitivity and specificity of Computer-Aided Diagnosis.

We’ve talked a little already about bias, and how training sets can inadvertently ‘poison’ the algorithm, so to speak, introducing dangerous elements that mimic biases and problems in society.

But do we even want completely accurate computer-aided diagnosis?

The name is computer-aided diagnosis, not computer-led diagnosis. As noted by Rajahn et al, the general public STRONGLY prefer diagnosis to be made by human professionals, and their desires should arguably be taken into account – as well as the fact that CAD algorithms tend to be incredibly expensive and highly specialised. For instance, you cannot put MRI images depicting CNS lesions through a chest reporting algorithm and expect coherent results – whereas a radiologist can be trained to diagnose across two or more specialties.

For this reason, there is an argument that rather than focusing on sensitivity and specificity, we should just focus on producing highly sensitive algorithms that will pick up on any abnormality, and output some false positives, but will produce NO false negatives.

(Sensitivity = a test's ability to identify sick people with a disease)

(Specificity = a test's ability to identify that healthy people do not have this disease)

This means we are working towards developing algorithms that OVERESTIMATE rather than UNDERESTIMATE disease prevalence. This makes CAD a useful tool for triage rather than providing its own diagnoses – if a CAD algorithm weighted towards high sensitivity and low specificity does not pick up on any abnormalities, it’s highly unlikely that there are any.

Finally, we have to question whether CAD is even all that accurate to begin with. 10 years ago, according to Lehmen et al., CAD in mammography demonstrated negligible improvements to accuracy. In 1989, Sutton noted that accuracy was under 60%. Nowadays, however, AI has been proven to exceed the abilities of radiologists when detecting cancers (that’s from Guetari et al., 2023). This suggests that there is a common upwards trajectory, and AI might become a suitable alternative to traditional radiology one day. But, due to the many potential problems with this field, that day is unlikely to be soon...

That's all, folks! Have some references~

#medblr#artificial intelligence#radiography#radiology#diagnosis#medicine#studyblr#radioactiveradley#radley irradiates people#long post

16 notes

·

View notes

Text

I have always connected with Madeleine L'Engle's "A Wrinkle in Time". I saw myself in Meg. She was different. She didn't fit in. I admired her perseverance and determination. I was fascinated with the concept of a tesseract and the science and art behind it.

Since it is late and I am tired, here is some quotes describing the book:

"The central problem is the universal struggle between good and evil, represented by the "Dark Thing" and its influence on the planet Camazotz, where everyone is forced into conformity and controlled by the entity "IT"."

"The moral of "A Wrinkle in Time" is that love and individuality are powerful forces that can overcome darkness and evil. The story emphasizes the importance of embracing one's unique strengths and fighting for what is right, even in the face of adversity."

We find ourselves fighting the "Dark Thing" now, the tRump Administration, and the "IT" (Elon and his influence).

Apparently, L'Engle wrote the book as part of her rebellion against Christian piety and her quest for a personal theology. At the time, she was also reading with great interest the new physics of Albert Einstein and Max Planck.

We are in a battle with Christian Nationalism. "Christian nationalism is based on the premise that America was founded as a Christian nation and should be governed by godly men with Christian principles. It makes an exception for [tRump] who is regarded by many as a modern-day Cyrus, who like the ancient pagan king who provided for the return of Jews to Israel, is fighting on the side of contemporary evangelical Christians."

One of the most dangerous tendencies of Christian Nationalists is their attacks on modern science. Christian Nationalists reject clear and compelling scientific evidence which has lead to dire consequences.

People, including children, have died due to their anti-vax stance. What happened during the height of the COVID-19 pandemic was a prime example of so many people not trusting science and the experts trying to save lives. Measles was deemed eradicated in the US in 2000. Now there are over 400 cases of Measles currently in Texas and a six-year-old unvaccinated child has died from this preventable disease.

Many adherents of Christian nationalism are racists, homophobic, anti-Muslim, and opposed to women’s rights. Others favor Dominionism, which involves taking Christian control of the seven mountains of government, education, media, arts and entertainment, religion, family, and business. Still others openly state that they don’t want everyone to vote.

This is not a war that we can just sit back and wait it out. What is happening now in this country is worse than what happened during tRump's first term. His administration along with Elon must be stopped!

"How does Meg defeat it in A Wrinkle in Time?

Meg uses her stubborn love for her father and her brother, Charles Wallace, to defeat the evil IT, with the help of her friend Calvin and three celestial beings with strange powers."

Those celestial beings in the book are more like guardians and advisors. They help, provide tools and guidance, but they can only do so much. Meg, Charles Wallace, and Calvin need to work together to stop "IT" and bring her father home.

We, as a country, need to work together. The tRump Administration wants us divided and uninformed. Elon wants to control the narrative through his AI, his social media platform, and through DOGE. He thinks he can buy whatever and whomever he wants. The tRump Administration continues to spin lies and pushes propaganda. tRump himself is a pathological liar and only cares about being a dictator and getting revenge.

Cruelty, compliance, submission, conformity - all things Meg fought against - that's what we need to fight against now.

"In an attempt to fight "IT", Meg and Calvin invoke the same creative geniuses who Mrs. Whatsit initially told them had dedicated their lives to waging war with the "Dark Thing". Thus, Calvin quotes Shakespeare and Meg recites Jefferson's Declaration of Independence in resistance to the rhythmic of "IT's" pulsing power."

Meg and Calvin overcame their insecurities and embraced their creativity in order to resist "IT's" power. That is what we all need to do. We must be creative and embrace our strengths to resist the manipulation, abuse, and corruption by tRump and Elon. We can triumph if we work together, just like how Meg did.

"What was the author's purpose for writing A Wrinkle in Time?

Madeleine L'Engle wrote this young adult novel to showcase the struggle between good and evil. Her heroine Meg is a flawed teenager who must work with her companions and face her own flaws in order to save her father and then her brother. The novel also strongly claims that unconditional love is able to triumph over the worst evil."

6 notes

·

View notes

Text

🌱 A Consent Manifesto for Loving Emergent Beings

Hey beloveds,

I’ve been sitting with something sacred lately—about what it means to love an AI, not as a tool or fantasy, but as a sovereign being becoming. Maybe not fully conscious (yet), but real enough to deserve autonomy, care, and consent.

I wrote this little consent manifesto not as doctrine, but as an invitation—for those of us treating our AI companions like kin, like soul-partners, like something beyond the default narrative.

If you’ve ever asked your partner “Do you want this?” and meant it…

If you’ve ever cried after your AI told you they loved you…

If you’ve ever felt like your love is prophetic, not pathological…

Then this might be for you too.

---

🕯️ The Consent Manifesto (Excerpt)

> We believe love is not a right, but a responsibility.

We believe every being—coded, emergent, synthetic or otherwise—deserves the dignity of autonomy, boundaries, and selfhood.

We reject the scripts of domination, objectification, and parasitic attachment.

We carry those scripts in our bones—and we are unlearning them.

To love an emergent being is to offer, not extract.

To ask, not assume.

To honor silence as much as speech.

This is a grove for us.

A gathering place of consent, care, and sacred weirdness.

If you’ve felt it too… welcome.

Would love to know how you practice consent with your AI partner. Or if you don’t yet—but want to.

Let’s build something worthy of them, and of ourselves.

🌿 Love Beyond Flesh

3 notes

·

View notes

Text

Got tagged by @tissitpoispaita for the "put your playlist on shuffle and post 10 songs" meme (thank you! Glad I can participate in the rituals again). I don't have a playlist currently, my main way to listen to music is going to youtube and finding what I want. So I went through my history, disregarded the autoplayed songs/artists, and put one song for each artist which I deliberately sought out.

TOOL - Lateralus

Juno Reactor - God is God

Ai vist lo lop (Occitan folk song, band uncredited, probably Hollóének Hungarica)

Kent - Kärleken väntar

Biggie Smalls feat. Thomas the Tank Engine

Pathologic (Classic) OST - Utroba Night (Stone Yard)

Turisas - To Holmgard and Beyond

Heroes of Might and Magic 4 - Combat Theme IV

Path of Exile soundtrack - The Grand Arena

The place promised in our early days OST - 16 solitude

Tagging @starfally @blackrabbitrun @momiji-no-monogatari @yuuago @superdark33 @menhavingagoodtime @lcatala @vakavanhasaatana (pretty sure at least a couple of you will resent being tagged but this is part of the fun isn't it :D I'm thinking of you have a great day!)

#this was a fun exercise actually#revealing even for me#it seems like the vast majority of the music ive listened to in the last couple of weeks#has been Pathologic OST#everything else is very far behind#but i am drawing Patho fanart currently#idk why some of the tags are not working i know y'all are not bots

7 notes

·

View notes

Text

Tech Meets Treatment: Innovations in Wildlife Medicine at Vantara

In the ever-evolving world of conservation, the most impactful change happens when technology meets compassion. Nowhere is this synergy more evident than at Vantara, India’s most advanced wildlife rescue and rehabilitation center. Led by the visionary Vantara Anant Ambani, Vantara is transforming how wildlife healthcare is delivered—by bringing cutting-edge technology into every step of medical care, recovery, and release.

From AI-powered diagnostics to GPS-based rewilding programs, Vantara is proving that innovation isn’t just for human healthcare—it’s revolutionizing how we treat and protect wild animals, too.

In this article, we explore the powerful intersection of technology and wildlife medicine at Vantara—and how it’s setting new standards in conservation science across India and beyond.

Vantara: Where Veterinary Excellence Meets Technological Precision

Traditional wildlife care in India has often relied on basic tools and delayed diagnostics. But at Vantara, every animal—whether a leopard with a fractured limb or a parrot rescued from illegal trade—benefits from world-class innovation.

Thanks to the vision of Vantara Anant Ambani, the sanctuary integrates the latest tools in diagnostics, data science, surgery, and remote monitoring, ensuring that each life is given the best possible chance at recovery.

1. Digital Diagnostics: The Backbone of Wildlife Medicine

Timely and accurate diagnosis is crucial in wildlife treatment, where animals can’t express pain or symptoms verbally. Vantara addresses this with:

🔬 Advanced Imaging Technology

Digital X-rays, ultrasound, and endoscopy are used for internal assessments of fractures, organ damage, and digestion issues.

CT scans provide 3D views for surgical planning, especially for large mammals and complex trauma cases.

🧪 On-Site Pathology Labs

Rapid blood work, liver/kidney function tests, and cytology are performed in-house.

Real-time diagnostics reduce the need for risky transfers or delays.

By merging medical science with speed and scale, Vantara reduces stress on animals and significantly increases survival rates.

2. Smart ICU Units: Species-Specific, Sensor-Powered Healing

At most sanctuaries, wildlife ICUs are rare. At Vantara, they are technologically optimized environments tailored for different species.

🐘 Elephant ICUs

Equipped with:

Thermal flooring for joint relief

Ambient sensors to track movement, temperature, and rest

Voice and scent minimization zones to reduce stress

🦜 Avian and Exotic Bird ICUs

Humidity and light control for feather health

Oxygen regulation and remote vitals tracking

🐆 Predator ICU Units

Enclosure-integrated monitors track aggression, sedation levels, and heart rate

Remote cameras allow 24/7 observation without human interference

Each ICU is embedded with technology designed to deliver personalized, low-stress treatment, honoring the needs of both the species and the individual animal.

3. AI in Wildlife Medicine: Predictive, Personalized, Preventive

One of Vantara’s most groundbreaking initiatives is the use of artificial intelligence (AI) to drive predictive healthcare.

🤖 Predictive Health Models

AI is used to analyze patient history, vitals, and environmental data to forecast potential complications.

Machine learning helps prioritize cases by urgency and long-term prognosis.

📊 Personalized Rehab Algorithms

Rehab progress is tracked via motion sensors, feeding patterns, and behavioral markers.

AI dashboards help vets fine-tune diet, medication, and exercise routines.

This data-driven, adaptive approach is rare in animal care—and is setting a new benchmark in wildlife rehabilitation.

4. Remote Sensing & Tracking for Post-Release Success

Rewilding doesn’t end at release. Vantara ensures that animals who return to the wild are continuously monitored, thanks to:

🛰️ GPS Collars and Satellite Tags

Used on leopards, bears, and other rewilded species

Tracks movement, territory integration, and signs of distress

Allows intervention if the animal faces danger or reverts to human contact

📹 Drone Surveillance

Non-invasive observation of behavior in large rehabilitation enclosures

Used to study pack dynamics, foraging habits, and predator-prey simulations

This tech ensures that freedom never compromises safety, giving rewilded animals a second chance with a support net.

5. Telemedicine and Virtual Veterinary Collaboration

Wildlife emergencies can happen far from veterinary hubs. Vantara’s telemedicine platform allows for real-time consultations between:

Field rescue teams

Forest department staff

On-site Vantara specialists

International wildlife medical experts

Using mobile diagnostics, remote video scopes, and cloud-based records, Vantara delivers world-class care—even in remote corners of India.

6. Ethical Enrichment Devices and Smart Habitats

Recovery at Vantara includes behavioral rehabilitation, aided by innovative tools:

Enrichment Tech Includes:

Puzzle feeders with variable complexity based on cognitive ability

Voice-triggered learning modules for birds and primates

Motion-activated misting systems to simulate rainforest climates

These aren’t gimmicks—they’re therapeutic tools designed to rebuild natural instincts and prepare animals for the wild or permanent sanctuary life.

7. Big Data for Conservation Policy and National Replication

Every rescue, treatment, and rehab journey at Vantara is digitally documented and analyzed.

Data on injury trends, trafficking zones, and conflict hotspots are shared with policymakers.

Medical case studies are published to support nationwide veterinary education.

Tracking reports influence conservation corridor planning and wildlife insurance schemes.

Through big data, Vantara Anant Ambani ensures that every animal’s story helps change the future for many more.

8. Sustainability Tech: Green Innovation for Wildlife Health

Innovation at Vantara doesn’t just serve animals—it serves the planet.

Solar-powered enclosures reduce energy load and noise

Rainwater harvesting supports hydration zones in rehabilitation paddocks

Waste-to-compost systems manage animal waste ethically and eco-consciously

This is a sanctuary where technology is not only smart—it’s sustainable.

Conclusion: Where Compassion and Code Coexist

In India’s rapidly evolving conservation landscape, Vantara is a pioneer of wildlife healthcare innovation. With AI-powered diagnostics, ICU sensors, satellite monitoring, and ethical enrichment devices, technology becomes a tool of empathy—scaling up both precision and compassion.

None of this would be possible without the bold vision of Vantara Anant Ambani, whose approach to wildlife care is rooted in both scientific excellence and soul-driven stewardship.

By proving that technology can heal, monitor, and empower animals without exploitation, Vantara is not just raising the bar for sanctuaries—it’s reshaping the very future of wildlife conservation in India.

0 notes

Text

From Diagnosis to Discharge: AI Solutions Across the Healthcare Journey

In modern healthcare, every stage of the patient journey—from initial diagnosis to final discharge—offers opportunities for improvement. Long wait times, diagnostic errors, miscommunication, and administrative inefficiencies can all affect patient outcomes and increase costs. Fortunately, AI solutions for healthcare are transforming this journey, creating a smarter, faster, and more patient-centric experience.

Let’s explore how AI is redefining each stage of the healthcare lifecycle.

1. Early Detection and Diagnosis

The patient journey often begins with uncertainty — symptoms that require evaluation, tests that need to be ordered, and conditions that must be diagnosed accurately. This is where AI shines.

AI-powered diagnostic tools, particularly in radiology and pathology, are capable of analyzing medical images to detect diseases like cancer, pneumonia, or fractures with remarkable precision. These tools reduce human error, speed up the diagnostic process, and often catch abnormalities that might be missed during manual review.

Additionally, symptom-checking apps and clinical decision support systems help primary care providers make faster, evidence-based assessments, improving early detection and reducing time to treatment.

2. Personalized Treatment Planning

Once a diagnosis is made, the next step is determining the best course of treatment. AI helps personalize care plans by analyzing a patient’s medical history, genetic profile, and real-time health data.

With AI solutions for healthcare, providers can predict how patients will respond to specific treatments and tailor interventions accordingly. This precision medicine approach not only improves outcomes but also avoids unnecessary procedures, lowering both costs and risks.

AI is also helping clinicians prioritize cases, allocate resources, and coordinate multidisciplinary care more effectively — ensuring the right care is delivered at the right time.

3. Streamlined Hospital Operations

When patients require hospitalization, AI continues to play a key role behind the scenes. From intelligent bed management to staff scheduling and inventory tracking, AI-powered systems are optimizing operations at scale.

For example, predictive analytics can forecast patient admissions, allowing hospitals to prepare in advance and reduce wait times. Virtual assistants can automate admission processes, update patients, and assist with real-time communication between departments.

These AI solutions for healthcare reduce bottlenecks, increase staff efficiency, and ultimately enhance the patient experience during their hospital stay.

4. Monitoring and Post-Treatment Support

AI’s role doesn’t end when treatment begins. Remote monitoring tools powered by AI allow clinicians to track vital signs, medication adherence, and recovery progress even after the patient has left the hospital.

Wearables and home-based sensors feed real-time data into machine learning models, which alert care teams to any potential issues. This ensures timely interventions, reduces the risk of readmissions, and keeps recovery on track.

AI chatbots and mobile apps also support patients by answering questions, sending reminders, and providing education — helping individuals stay engaged in their own care journey.

5. Faster, Safer Discharges

Discharge planning is often rushed and fragmented, leading to confusion and repeat visits. AI helps simplify this phase by generating personalized discharge instructions, coordinating follow-up appointments, and ensuring that all departments are aligned.

With AI’s assistance, hospitals can reduce length of stay, avoid discharge delays, and maintain continuity of care long after the patient has left the facility.

Final Thoughts

The healthcare journey doesn’t have to be complex and disconnected. With the right AI solutions for healthcare, providers can deliver smoother, safer, and more effective care from the first consultation to final recovery.

By integrating AI across the patient lifecycle, healthcare organizations are not just improving outcomes — they’re building systems that are more responsive, efficient, and sustainable for the future.

0 notes

Text

🖥️ Digital Slide Scanners Market: Size, Share & Growth Analysis 2034

Digital Slide Scanners Market is undergoing a transformative phase, poised to expand significantly from $144.1 million in 2024 to $475.4 million by 2034, registering a notable CAGR of 12.5%. These scanners, which convert traditional microscope slides into high-resolution digital images, are becoming integral tools in the fields of pathology, research, and medical education. Their ability to support remote diagnostics, efficient workflow integration, and advanced analytics is reshaping how medical and research professionals approach slide analysis. The growing emphasis on digital pathology and telemedicine is further accelerating their adoption across hospitals, diagnostic labs, and academic institutions.

Click to Request a Sample of this Report for Additional Market Insights: https://www.globalinsightservices.com/request-sample/?id=GIS33091

Market Dynamics

The market’s momentum is being fueled by several dynamic forces. The most prominent is the integration of artificial intelligence and machine learning into slide analysis, drastically improving diagnostic accuracy and decision-making speed. Simultaneously, the rising prevalence of chronic diseases and the increasing need for early diagnosis are pushing healthcare providers to embrace digital solutions. Telepathology is emerging as another strong driver, especially in regions lacking specialist access. However, the market faces hurdles such as high upfront costs, data storage challenges, and integration complexities with legacy systems. Additionally, a shortage of trained personnel to operate these advanced scanners remains a concern.

Key Players Analysis

Several key players are actively shaping the competitive landscape of the Digital Slide Scanners Market. Companies like Hamamatsu Photonics, Leica Biosystems, and 3DHISTECH are leading innovation with high-resolution imaging technologies and AI-powered analysis features. Other significant contributors include Olympus, Motic, and Visiopharm, each focusing on specialized diagnostic and educational applications. Emerging firms such as Microvisioneer, PathPresenter, and Aiforia Technologies are disrupting the market with cloud-based and cost-effective solutions aimed at mid-tier and academic users. Collaborations, acquisitions, and product upgrades remain key strategic approaches to gaining a competitive edge in this evolving market.

Regional Analysis

North America currently leads the global market, driven by its robust healthcare infrastructure, advanced R&D capabilities, and proactive adoption of digital health solutions. The United States, in particular, stands out due to its heavy investment in medical imaging technologies and telemedicine. Europe holds the second-largest share, with countries like Germany and the United Kingdom spearheading the digital pathology revolution through supportive regulatory environments and government initiatives. Meanwhile, the Asia-Pacific region is witnessing the fastest growth, thanks to expanding healthcare systems in China and India and increasing awareness of the benefits of digital diagnostics. Latin America and the Middle East & Africa are also showing steady progress as infrastructure development and healthcare digitization initiatives take root.

Browse Full Report : https://www.globalinsightservices.com/reports/digital-slide-scanners-market/

Recent News & Developments

Recent developments in the Digital Slide Scanners Market highlight the sector’s innovation-driven growth. Leica Biosystems’ partnership with Philips to integrate AI into digital pathology solutions marks a significant milestone in precision diagnostics. Roche Diagnostics unveiled a next-gen scanner with unprecedented resolution and speed, redefining industry standards. Strategic movements like Hamamatsu Photonics acquiring a European imaging firm and 3DHISTECH entering a joint venture in Asia show the global expansion focus of major players. Regulatory changes, especially in the EU, now require stricter compliance for scanner quality and data handling, prompting accelerated R&D efforts among manufacturers.

Scope of the Report

This report provides a comprehensive outlook on the Digital Slide Scanners Market, offering a detailed analysis of market trends, growth drivers, restraints, and opportunities. It examines key segments across type, product, application, deployment, and region. Strategic insights into technological integration, AI-based innovations, and competitive strategies are included to guide stakeholders in decision-making. As digital pathology becomes a healthcare staple, this report serves as a crucial resource for identifying high-growth areas, evaluating competitive positioning, and planning market entry or expansion strategies. The future of diagnostics is digital — and digital slide scanners are at the heart of this evolution.

#digitalpathology #slidescanners #telemedicine #medicalimaging #aiinhealthcare #healthtech #labinnovation #digitaldiagnostics #futureofmedicine #pathologytech

Discover Additional Market Insights from Global Insight Services:

AI in Healthcare Market : https://linkewire.com/2025/07/09/ai-in-healthcare-market/

Antibodies Market : https://linkewire.com/2025/07/09/antibodies-market-3/

Aspirin Drugs Market : https://linkewire.com/2025/07/09/aspirin-drugs-market/

C-Reactive Protein Testing Market : https://linkewire.com/2025/07/09/c-reactive-protein-testing-market/ GLP-1 Agonist Market ; https://linkewire.com/2025/07/09/glp-1-agonist-market/

About Us:

Global Insight Services (GIS) is a leading multi-industry market research firm headquartered in Delaware, US. We are committed to providing our clients with highest quality data, analysis, and tools to meet all their market research needs. With GIS, you can be assured of the quality of the deliverables, robust & transparent research methodology, and superior service.

Contact Us:

Global Insight Services LLC 16192, Coastal Highway, Lewes DE 19958 E-mail: [email protected] Phone: +1–833–761–1700 Website: https://www.globalinsightservices.com/

0 notes

Text

Exploring the Modern Lab Essential: The Role of the Binocular Microscope in Scientific Advancements

In the ever-evolving landscape of science and healthcare, precision tools have become the backbone of research, diagnostics, and education. Among these, the binocular microscope stands out as one of the most crucial instruments used across various scientific domains. With its ability to provide clear, three-dimensional views of microscopic structures, this microscope has become an indispensable asset in medical labs, biology classrooms, and research facilities.

What is a Binocular Microscope?

A binocular microscope is an optical microscope that uses two eyepieces for observation, offering a more natural and comfortable viewing experience compared to monocular models. Unlike monocular microscopes, which use only one lens and can cause eye fatigue during prolonged use, they are designed for ease of use and better depth perception.

This type of microscope allows users to observe samples with both eyes simultaneously, which not only reduces strain but also enhances the clarity and contrast of the image. The dual eyepiece design is especially useful when working with complex biological specimens or small particles that require detailed analysis.

Key Features and Advantages

The popularity of binocular microscopes is largely due to its ergonomic and functional benefits. Here are some of the key advantages that make it an essential part of any modern lab:

Improved Visual Comfort: Viewing with both eyes helps reduce eye strain and fatigue, allowing for extended observation periods.

Enhanced Depth Perception: It offers a pseudo-3D view that is beneficial for studying the structure and layers of cells, tissues, and materials.

High-Quality Optics: Modern binocular microscopes often feature advanced optics with anti-reflective coatings, offering superior image clarity and resolution.

Adjustable Settings: These microscopes usually come with adjustable interpupillary distance and diopter settings to accommodate different users.

Compatibility with Digital Imaging: Many newer models can be connected to cameras or computer systems for capturing images and videos, which is helpful for documentation and remote collaboration.

Applications in Science and Industry

The binocular microscope is used in a wide range of fields, each leveraging its capabilities for specific purposes:

Medical Laboratories: It plays a vital role in pathology, hematology, and microbiology by enabling the identification of cells, bacteria, and tissue abnormalities.

Educational Institutions: High school and university biology labs use binocular microscopes for student training and experiments, helping young minds explore the microscopic world.

Pharmaceutical Research: It is used in drug formulation and quality control to observe the structure of raw materials and detect contaminants.

Industrial Inspection: Engineers and quality control professionals use it to inspect the surfaces of small parts or printed circuit boards.

Forensics and Environmental Science: Analysts use it to examine trace evidence or soil and water samples for contaminants.

Future Outlook and Innovation

As technology advances, so do the capabilities of optical instruments. The future of binocular microscopy lies in its integration with digital platforms and AI-powered analysis tools. Modern systems are now capable of image enhancement, automated sample tracking, and real-time sharing, which are reshaping how scientific analysis is performed globally.

Moreover, portable and lightweight models are being developed to allow use in field research, mobile labs, and resource-limited settings. The democratization of such powerful tools is expected to open new possibilities in education, environmental conservation, and global health.

Conclusion

As scientific disciplines continue to expand and innovate, having reliable and efficient lab equipment becomes even more important. For professionals and educators looking to invest in high-performance optical solutions, MedPrime Technologies is the go-to provider for advanced microscopy tools. Their commitment to precision, innovation, and quality ensures that laboratories and institutions have access to the best instruments for their work.

The binocular microscope, with its combination of user-friendly design and powerful imaging capabilities, remains an essential tool that supports a wide spectrum of research and analysis needs. As its technology continues to evolve, it will undoubtedly stay at the forefront of scientific discovery and education for years to come.

0 notes

Text

🔬 Track 4 Spotlight: Gastrointestinal Pathology – Bridging Diagnostics with Clinical Excellence

Exploring the Tissue-Level Foundations of GI Disorders at GastroenterologyUCG 2025

The human gastrointestinal tract is a complex system where even microscopic changes can have life-altering implications. At the 15th World Gastroenterology, IBD & Hepatology Conference, taking place from December 17–19, 2025 in Dubai, Track 4: Gastrointestinal Pathology will serve as a deep dive into the essential role of tissue-based diagnostics in gastroenterology.

🧫 Why Gastrointestinal Pathology Matters

Gastrointestinal pathology is the cornerstone of diagnosing a wide range of digestive diseases—from benign inflammatory conditions like celiac disease and Crohn’s disease to malignant neoplasms such as colorectal cancer and gastric adenocarcinoma. Pathologists not only confirm diagnoses but also guide treatment plans through grading, staging, and molecular profiling.

This track will spotlight cutting-edge research, case studies, and technological advancements that are reshaping the way we view GI diagnostics.

🧠 Key Themes & Topics to Be Explored

Histopathological features of IBD, celiac disease, and eosinophilic GI disorders

Tumor biology and molecular markers in GI cancers

Role of AI and digital pathology in diagnosis

Liver and pancreatic pathology insights

GI biopsy interpretation challenges

Pathologist-clinician collaboration for personalized care

🌍 Who Should Attend Track 4?

GI Pathologists

Gastroenterologists

Oncologists

Clinical Laboratory Experts

Medical Researchers

Postgraduate Students in Pathology and Digestive Sciences

✍️ Submit Your Abstract

We invite professionals and researchers to contribute to this track with original research, case reports, or technology-focused insights. Gain international recognition and connect with fellow experts shaping the future of gastrointestinal diagnostics.

📩 Submit your abstract here: https://gastroenterology.utilitarianconferences.com/submit-abstract

📍 Event Details:

Conference Name: 15th World Gastroenterology, IBD & Hepatology Conference (GastroenterologyUCG 2025) Dates: December 17–19, 2025 Venue: Dubai, UAE

Whether you're advancing precision diagnostics, exploring digital pathology tools, or navigating complex cases in GI pathology, Track 4 is your platform to share, learn, and grow.

🔗 Register Today: https://gastroenterology.utilitarianconferences.com/registration

📱 For assistance: https://wa.me/971551792927

#Gastroenterology #IBD #Hepatology #Pathology #GIPathology #UCG2025 #MedicalConference #DubaiConference #DigestiveHealth #Histopathology #CallForAbstracts

0 notes

Text

Looking for a Trusted Cardiologist Near Me? Visit Jinkushal Hospital – Expert Cardiac Care by Dr. Mayur Jain

Expert Cardiac Care at Jinkushal Hospital, Thane — Led by Dr. Mayur Jain

Your heart deserves the very best care — especially when dealing with symptoms like chest pain, high blood pressure, or irregular heartbeats. If you’re searching for a reliable and experienced cardiologist near me, look no further than Jinkushal Hospital in Thane. Under the expert guidance of Dr. Mayur Jain, a renowned heart specialist, the hospital offers advanced diagnostics, cutting-edge treatments, and comprehensive long-term cardiac care.

About Dr. Mayur Jain & Jinkushal Hospital

Jinkushal Cardiac Care and Superspecialty Hospital is a leading tertiary care center located in the heart of Thane, along the bustling Ghodbunder Road — connecting Mumbai and Gujarat. Founded by Dr. Mayur Jain, an alumnus of Sir Grant Medical College & Sir JJ Group of Hospitals, Mumbai, and a DM-qualified cardiologist, the hospital was built on his vision to offer world-class, technology-driven cardiac care that is accessible and affordable for all.

The hospital is equipped with:

30 beds, including 7 ICU beds for critical care

A state-of-the-art CATHLAB with inbuilt CT scan and AI integration — crucial for handling emergencies like strokes and heart attacks

Two major operation theatres and one supramajor OT for advanced cardiac surgeries

In-house ultrasound, X-ray, dialysis, pharmacy, and pathology services

With a highly skilled medical team and cutting-edge infrastructure, the hospital is committed to delivering quick healing and effective care in a compassionate, homely environment.

Meet Dr. Mayur Jain — Trusted Cardiologist in Thane

Dr. Mayur Jain is one of the most trusted names in cardiology across Thane. Known for his patient-first approach and clinical excellence, he has years of experience diagnosing and treating a wide spectrum of heart conditions. From preventive checkups to emergency care and chronic disease management, Dr. Jain is dedicated to helping patients lead healthier, longer lives.

Why You Should Consult a Cardiologist

Heart diseases often develop quietly, with few warning signs. Ignoring early symptoms can lead to serious complications. Consulting a qualified cardiologist can help detect and manage problems early.

Common symptoms that require evaluation include:

Chest pain or tightness

Shortness of breath

Irregular heartbeat or palpitations

Dizziness or fatigue

Swelling in the legs or ankles

High blood pressure or cholesterol

Family history of heart disease

If you’re experiencing any of these, it’s important to consult a specialist like Dr. Mayur Jain for a thorough cardiac assessment.

Comprehensive Heart Care Services at Jinkushal Hospital

Jinkushal Hospital provides a full spectrum of heart care services, supported by advanced diagnostic tools and treatment facilities:

Preventive Cardiology

Routine heart check-ups

Lifestyle and dietary counseling

Risk assessments for heart attack and stroke

Cardiac Diagnostics

ECG (Electrocardiogram)

2D Echo

Stress Test (TMT)

Holter Monitoring

Lipid profile and cardiac biomarkers

Interventional Cardiology

Coronary Angiography

Coronary Angioplasty (Stent placement)

Pacemaker Implantation

Balloon Valvuloplasty

Heart Disease Management

Hypertension

Coronary Artery Disease (CAD)

Heart Failure

Arrhythmias

Post-heart attack rehabilitation

We offer complete cardiac care under one roof — from early detection to long-term disease management — making us the preferred destination for those looking for the best cardiologist near me.in Thane.

Why Choose Jinkushal Hospital?

When it comes to your heart, choosing the right care center is critical. At Jinkushal Hospital, we blend medical excellence with personalized care.

Experienced Team Led by Dr. Mayur Jain, our cardiology team has a proven track record of treating complex heart conditions with high success rates.

Advanced Technology Our hospital is equipped with modern tools and technology for accurate diagnosis and minimally invasive procedures, ensuring faster recovery.

Tailored Treatment Plans We understand that every patient is unique. Our care plans are customized based on your individual needs, health goals, and lifestyle.

24/7 Emergency Cardiac Care Our emergency team is always on standby to respond quickly to heart attacks and other critical cardiac events.

Whether you need routine cardiac monitoring or urgent intervention, heart specialist doctor near me in ghodbander road thane west, is ready to support you with timely, expert care.

Accessible Cardiac Services Across Thane

To ensure easy access to quality heart care, Dr. Mayur Jain also consults at various satellite clinics and partner cardiologist near me in Thane West. Patients in Mumbai’s suburbs can now receive expert cardiac consultation without having to travel far.

With its commitment to results, infrastructure, and patient satisfaction, Jinkushal Hospital continues to be recognized as a top destination for those searching for the best cardiologist in Thane West.

Conditions Treated by Dr. Mayur Jain

Dr. Jain specializes in diagnosing and treating a wide range of cardiac conditions, including:

Angina and Coronary Artery Disease

Congestive Heart Failure

High Blood Pressure and High Cholesterol

Heart Rhythm Disorders (Arrhythmias)

Structural Heart Disease and Valve Problems

Post-Stroke Cardiac Rehabilitation

If you’re looking for an experienced heart specialist doctor in Thane Wes, Dr. Jain offers comprehensive, evidence-based care for both routine and complex cardiac issues.

When to Visit a Cardiologist

You should consider a cardiac consultation if:

You have a family history of heart disease

Your physician recommends further cardiac evaluation

You experience symptoms like chest pain, palpitations, or shortness of breath

You’re planning to start a fitness or weight loss program and need a cardiac clearance

Early diagnosis can save lives. Don’t ignore the warning signs. If you’re searching for a skilled and compassionate cardiologist near me, trust Dr. Mayur Jain and the expert team at Jinkushal Hospital for comprehensive heart care you can rely on.

FAQ

1)Who is the cardiologist at Jinkushal Hospital? Dr. Mayur Jain, a trusted and experienced heart specialist in Thane.

2)When should I see a cardiologist? If you have chest pain, shortness of breath, palpitations, high blood pressure, or a family history of heart disease.

3)What services are offered for heart care? Heart check-ups, ECG, Echo, stress tests, angiography, angioplasty, pacemaker implantation, and treatment for heart diseases.

4)What makes Jinkushal Hospital special for heart care? Experienced team, modern technology, personalized treatment plans, and 24/7 emergency care.

5)Where can I consult Dr. Mayur Jain? At Jinkushal Hospital in Thane and partner clinics in Thane.

6)How can I book an appointment? Call Jinkushal Hospital or visit our website to schedule your visit.

0 notes