#AI and HPC server


HexaData HD‑H231‑H60 Ver Gen001 – 2U High-Density Dual‑Node Server

The HexaData HD‑H231‑H60 Ver Gen001 is a 2U, dual-node high-density server powered by 2nd Gen Intel Xeon Scalable (“Cascade Lake”) CPUs. Each node supports up to 2 double‑slot NVIDIA/Tesla GPUs, 6‑channel DDR4 with 32 DIMMs, plus Intel Optane DC Persistent Memory. Features include hot‑swap NVMe/SATA/SAS bays, low-profile PCIe Gen3 & OCP mezzanine expansion, Aspeed AST2500 BMC, and dual 2200 W 80 PLUS Platinum redundant PSUs—optimized for HPC, AI, cloud, and edge deployments. Visit for more details: Hexadata HD-H231-H60 Ver: Gen001 | 2U High Density Server Page

#2U high density server#dual node server#Intel Xeon Scalable server#GPU optimized server#NVIDIA Tesla server#AI and HPC server#cloud computing server#edge computing hardware#NVMe SSD server#Intel Optane memory server#redundant PSU server#PCIe expansion server#OCP mezzanine server#server with BMC management#enterprise-grade server


Diodes debuts 64GT/s PCIe 6.0 ReDriver targeting AI servers and HPC systems

June 16, 2025 /SemiMedia/ — Diodes Incorporated has launched the PI3EQX64904, a 64GT/s PAM4 linear ReDriver that supports PCI Express® 6.0 signaling and maintains backward compatibility with PCIe 5.0, 4.0, and 3.0 protocols. Designed for demanding data-intensive environments, the device addresses connectivity needs in AI data centers, high-performance computing clusters, and 5G…
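As a rough illustration of what the 64GT/s PAM4 figure implies, the sketch below works out the symbol rate and the raw one-direction bandwidth of a link. The x16 lane count is an assumption chosen for the example, the function names are hypothetical, and the result ignores FLIT and FEC overhead, so treat it as an upper bound rather than a datasheet value.

```python
# Illustrative arithmetic only; lane count is an assumption, overhead is ignored.
GT_PER_S = 64          # per-lane transfer rate from the announcement (64 GT/s)
BITS_PER_SYMBOL = 2    # PAM4 encodes 2 bits per symbol


def symbol_rate_gbaud(gt_per_s: float = GT_PER_S) -> float:
    """Symbol rate on the wire: PAM4 halves the baud rate for a given bit rate."""
    return gt_per_s / BITS_PER_SYMBOL


def raw_link_gbytes_per_s(lanes: int, gt_per_s: float = GT_PER_S) -> float:
    """Raw one-direction bandwidth in GB/s, before protocol overhead."""
    return lanes * gt_per_s / 8


if __name__ == "__main__":
    print(f"Symbol rate: {symbol_rate_gbaud():.0f} GBaud per lane")
    print(f"Raw x16 bandwidth: {raw_link_gbytes_per_s(16):.0f} GB/s per direction")
```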

#AI server connectivity#Diodes Incorporated#electronic components news#Electronic components supplier#Electronic parts supplier#high-speed PCIe interface#HPC interconnects#low power linear ReDriver#PAM4 signal integrity#PCIe 6.0 ReDriver


Future Applications of Cloud Computing: Transforming Businesses & Technology

Cloud computing is revolutionizing industries by offering scalable, cost-effective, and highly efficient solutions. From AI-driven automation to real-time data processing, the future applications of cloud computing are expanding rapidly across various sectors.

Key Future Applications of Cloud Computing

1. AI & Machine Learning Integration

Cloud platforms are increasingly being used to train and deploy AI models, enabling businesses to harness data-driven insights. The future applications of cloud computing will further enhance AI's capabilities by offering more computational power and storage.

2. Edge Computing & IoT

With IoT devices generating massive amounts of data, cloud computing ensures seamless processing and storage. The rise of edge computing, a subset of the future applications of cloud computing, will minimize latency and improve performance.

3. Blockchain & Cloud Security

Cloud-based blockchain solutions will offer enhanced security, transparency, and decentralized data management. As cybersecurity threats evolve, the future applications of cloud computing will focus on advanced encryption and compliance measures.

4. Cloud Gaming & Virtual Reality

With high-speed internet and powerful cloud servers, cloud gaming and VR applications will grow exponentially. The future applications of cloud computing in entertainment and education will provide immersive experiences with minimal hardware requirements.

Conclusion

The future applications of cloud computing are poised to redefine business operations, healthcare, finance, and more. As cloud technologies evolve, organizations that leverage these innovations will gain a competitive edge in the digital economy.

🔗 Learn more about cloud solutions at Fusion Dynamics! 🚀

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#cloud backups for business#platform as a service in cloud computing#platform as a service vendors#hpc cluster management software#edge computing services#ai services providers#data centers cooling systems#https://fusiondynamics.io/cooling/#server cooling system#hpc clustering#edge computing solutions#data center cabling solutions#cloud backups for small business#future applications of cloud computing


As this synergy grows, the future of engineering is set to be more collaborative, efficient, and innovative. Cloud computing truly bridges the gap between technical creativity and practical execution. To Know More: https://mkce.ac.in/blog/the-intersection-of-cloud-computing-and-engineering-transforming-data-management/

#engineering college#top 10 colleges in tn#private college#engineering college in karur#mkce college#best engineering college#best engineering college in karur#mkce#libary#mkce.ac.in#Cloud Computing#Data Management#Big Data#Analytics#Cost Efficiency#scalability#High-Performance Computing (HPC)#Artificial Intelligence (AI)#Machine Learning#Automation#Data Storage#Remote Work#Data Security#Global Reach#Cloud Servers#digitaltransformation


NVIDIA HGX H100 Delta-Next 8x 80GB SXM5 GPUs – 935-24287-0000-000

Meet the future of AI and high-performance computing: the NVIDIA HGX H100 Delta Next. With 8x 80GB SXM5 GPUs, this cutting-edge solution is designed to handle the most demanding workloads, from AI training to massive data processing. Discover how it can elevate your data center's capabilities.

Learn More: https://www.viperatech.com/product/nvidia-hgx-h100-delta-next-8x-80gb-sxm5-gpus-935-24287-0000-000/


A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure innovations for an AI-first future

This year, Google Cloud made improvements throughout the AI Hypercomputer stack to increase performance, usability, and cost-effectiveness for customers. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is now available in preview.

A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available in preview next month.

Hypercompute Cluster, Google’s new, highly scalable clustering system, will be available starting with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and to Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is now generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, and scientific breakthroughs like AlphaFold 2, work that recently led to a Nobel Prize. Google Cloud users can now preview Trillium, Google’s sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also keeps investing in its partnership with NVIDIA, combining the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by the A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on servers equipped with the new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 network interface cards (NICs) and is tailored to provide a secure, high-performance cloud experience for AI workloads. When paired with Google’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra offers:

Double the GPU-to-GPU networking bandwidth, supported by Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter.

Up to 2 times higher LLM inferencing performance, thanks to almost twice the memory capacity and 1.4 times the memory bandwidth.

The capacity to scale to tens of thousands of GPUs in a dense, performance-optimized cluster for heavy AI and HPC workloads.
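The memory ratios in the comparison above can be sanity-checked against NVIDIA's published per-GPU figures. The sketch below assumes 80 GB and 3.35 TB/s for the H100 SXM, and 141 GB and 4.8 TB/s for the H200; those numbers are not stated in the post, and the dictionary and function names are purely illustrative.

```python
# Per-GPU figures assumed from NVIDIA's public spec sheets (not from this post).
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def h200_over_h100(key: str) -> float:
    """Ratio of an H200 figure to the matching H100 figure."""
    return H200[key] / H100[key]


if __name__ == "__main__":
    print(f"Memory capacity:  {h200_over_h100('memory_gb'):.2f}x")
    print(f"Memory bandwidth: {h200_over_h100('bandwidth_tb_s'):.2f}x")
```

Under these assumptions the capacity ratio comes out a little under 2x and the bandwidth ratio a little over 1.4x, which is in line with the "almost twice" and "1.4 times" wording.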

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to deploy and manage large numbers of accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with just one API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been verified for efficiency and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be available with A3 Ultra VMs next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances made possible by NVIDIA GB200 NVL72 GPUs and will share more information soon. In the meantime, here is a preview of the racks Google is building to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs are superior at specialized jobs, CPUs are a cost-effective solution for a variety of general-purpose workloads and are frequently used alongside AI workloads to build complex applications. Google announced Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances offered by other leading cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.
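One way to read the 3.2 Tbps figure is as a bundle of 400 Gb/s links, the per-port rate that ConnectX-7 NICs support. The sketch below is only that arithmetic; the post does not say how many NICs or GPUs each VM has, so the 400 Gb/s-per-link split is an assumption and the names are illustrative.

```python
# Illustrative split of the quoted aggregate into per-link rates (assumption).
AGGREGATE_GBPS = 3200   # 3.2 Tbps of GPU-to-GPU traffic, as quoted
LINK_GBPS = 400         # assumed per-link rate (one 400 Gb/s port per link)


def implied_link_count(aggregate_gbps: float = AGGREGATE_GBPS,
                       link_gbps: float = LINK_GBPS) -> float:
    """Number of links needed to reach the aggregate at the assumed link rate."""
    return aggregate_gbps / link_gbps


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


if __name__ == "__main__":
    print(f"Implied links: {implied_link_count():.0f}")
    print(f"Aggregate: {gbps_to_gbytes_per_s(AGGREGATE_GBPS):.0f} GB/s")
```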

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
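The "video conversation for every person on Earth" comparison can be checked with simple division. The sketch below assumes a world population of about 8 billion, which is not a figure from the post, and the function name is hypothetical.

```python
# Back-of-the-envelope check of the per-person share of Jupiter's bandwidth.
BISECTION_BITS_PER_S = 13.1e15   # 13.1 Pb/s, as quoted
WORLD_POPULATION = 8e9           # assumption: roughly 8 billion people


def per_person_mbps(total_bits_per_s: float = BISECTION_BITS_PER_S,
                    people: float = WORLD_POPULATION) -> float:
    """Bandwidth share per person in megabits per second."""
    return total_bits_per_s / people / 1e6


if __name__ == "__main__":
    print(f"{per_person_mbps():.2f} Mb/s per person")
```

That works out to roughly 1.6 Mb/s each, which is in the range a typical video call needs, so the comparison is plausible under this assumption.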

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources effectively utilized, maximizing system-level performance, and remaining economical. Google launched its AI/ML-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

With 1.2 TB/s of aggregate throughput per volume, you can attach up to 2,500 instances to the same volume. That is more than 100 times what major block storage competitors offer.
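To put the aggregate figure in per-instance terms, the sketch below divides the quoted 1.2 TB/s evenly across 2,500 attached instances. An even split is an assumption made for illustration; real throughput per instance depends on the workload and on instance limits that the post does not describe.

```python
# Even-split estimate of per-instance throughput (illustrative assumption).
AGGREGATE_BYTES_PER_S = 1.2e12   # 1.2 TB/s per volume, as quoted
MAX_INSTANCES = 2500             # instances attachable to one volume, as quoted


def per_instance_mb_per_s(total_bytes_per_s: float = AGGREGATE_BYTES_PER_S,
                          instances: int = MAX_INSTANCES) -> float:
    """Throughput per instance in MB/s if the volume is shared evenly."""
    return total_bytes_per_s / instances / 1e6


if __name__ == "__main__":
    print(f"{per_instance_mb_per_s():.0f} MB/s per instance")
```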

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


Exploring the Growing $21.3 Billion Data Center Liquid Cooling Market: Trends and Opportunities

In an era marked by rapid digital expansion, data centers have become essential infrastructures supporting the growing demands for data processing and storage. However, these facilities face a significant challenge: maintaining optimal operating temperatures for their equipment. Traditional air-cooling methods are becoming increasingly inadequate as server densities rise and heat generation intensifies. Liquid cooling is emerging as a transformative solution that addresses these challenges and is set to redefine the cooling landscape for data centers.

What is Liquid Cooling?

Liquid cooling systems utilize liquids to transfer heat away from critical components within data centers. Unlike conventional air cooling, which relies on air to dissipate heat, liquid cooling is much more efficient. By circulating a cooling fluid—commonly water or specialized refrigerants—through heat exchangers and directly to the heat sources, data centers can maintain lower temperatures, improving overall performance.

Market Growth and Trends

The data center liquid cooling market is on a steep growth trajectory. According to industry analysis, the market is projected to reach USD 21.3 billion by 2030, at a compound annual growth rate (CAGR) of 27.6%. This growth is fueled by several key factors, including the increasing demand for high-performance computing (HPC), advancements in artificial intelligence (AI), and a growing emphasis on energy-efficient operations.

Key Factors Driving Adoption

1. Rising Heat Density

The trend toward higher power density in server configurations poses a significant challenge for cooling systems. With modern servers generating more heat than ever, traditional air cooling methods are struggling to keep pace. Liquid cooling effectively addresses this issue, enabling higher density server deployments without sacrificing efficiency.

2. Energy Efficiency Improvements

A standout advantage of liquid cooling systems is their energy efficiency. Studies indicate that these systems can reduce energy consumption by up to 50% compared to air cooling. This not only lowers operational costs for data center operators but also supports sustainability initiatives aimed at reducing energy consumption and carbon emissions.

3. Space Efficiency

Data center operators often grapple with limited space, making it crucial to optimize cooling solutions. Liquid cooling systems typically require less physical space than air-cooled alternatives. This efficiency allows operators to enhance server capacity and performance without the need for additional physical expansion.

4. Technological Innovations

The development of advanced cooling technologies, such as direct-to-chip cooling and immersion cooling, is further propelling the effectiveness of liquid cooling solutions. Direct-to-chip cooling channels coolant directly to the components generating heat, while immersion cooling involves submerging entire server racks in non-conductive liquids, both of which push thermal management to new heights.

Overcoming Challenges

While the benefits of liquid cooling are compelling, the transition to this technology presents certain challenges. Initial installation costs can be significant, and some operators may be hesitant due to concerns regarding complexity and ongoing maintenance. However, as liquid cooling technology advances and adoption rates increase, it is expected that costs will decrease, making it a more accessible option for a wider range of data center operators.

The Competitive Landscape

The data center liquid cooling market is home to several key players, including established companies like Schneider Electric, Vertiv, and Asetek, as well as innovative startups committed to developing cutting-edge thermal management solutions. These organizations are actively investing in research and development to refine the performance and reliability of liquid cooling systems, ensuring they meet the evolving needs of data center operators.

The outlook for the data center liquid cooling market is promising. As organizations prioritize energy efficiency and sustainability in their operations, liquid cooling is likely to become a standard practice. The integration of AI and machine learning into cooling systems will further enhance performance, enabling dynamic adjustments based on real-time thermal demands.

The evolution of liquid cooling in data centers represents a crucial shift toward more efficient, sustainable, and high-performing computing environments. As the demand for advanced cooling solutions rises in response to technological advancements, liquid cooling is not merely an option—it is an essential element of the future data center landscape. By embracing this innovative approach, organizations can gain a significant competitive advantage in an increasingly digital world.

#Data Center#Liquid Cooling#Energy Efficiency#High-Performance Computing#Sustainability#Thermal Management#AI#Market Growth#Technology Innovation#Server Cooling#Data Center Infrastructure#Immersion Cooling#Direct-to-Chip Cooling#IT Solutions#Digital Transformation


HBM2 DRAM Market: Competitive Landscape and Key Players 2025–2032

MARKET INSIGHTS

The global HBM2 DRAM Market size was valued at US$ 2.84 billion in 2024 and is projected to reach US$ 7.12 billion by 2032, at a CAGR of 12.2% during the forecast period 2025-2032.
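The quoted growth rate can be checked against the two market sizes with the standard CAGR formula. The sketch below assumes eight compounding years between the 2024 valuation and the 2032 projection; the function names are illustrative.

```python
# Check the quoted CAGR against the start and end values in the report.
START_USD_BN = 2.84   # 2024 market size, as quoted
END_USD_BN = 7.12     # 2032 projection, as quoted
YEARS = 8             # assumption: compounding from 2024 to 2032


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


if __name__ == "__main__":
    print(f"Implied CAGR: {cagr(START_USD_BN, END_USD_BN, YEARS):.1%}")
    print(f"2.84 at 12.2% for 8 years: {project(START_USD_BN, 0.122, YEARS):.2f}")
```

Under that eight-year assumption the implied rate rounds to the 12.2% the report states.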

HBM2E (High Bandwidth Memory 2E) is an advanced DRAM technology designed for high-performance computing applications. As an extension of the second-generation HBM standard (HBM2), it delivers bandwidth of up to 460 GB/s per stack, supporting configurations of up to 8 dies per stack with 16GB capacity. The technology stacks DRAM dies vertically and connects them with through-silicon vias (TSVs) to achieve high speed and efficiency.
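Per-stack bandwidth figures like these follow from the interface width and the per-pin data rate. The sketch below assumes the 1024-bit per-stack interface defined for HBM2-class memory; the 3.6 and 2.4 Gbps pin rates are assumptions chosen because they reproduce the 460 GB/s and 307 GB/s figures in this report, while 3.2 Gbps is the rate the report itself mentions.

```python
# Per-stack bandwidth from interface width and pin rate (illustrative sketch).
INTERFACE_BITS = 1024   # assumption: 1024 data pins per HBM2/HBM2E stack


def stack_bandwidth_gb_s(pin_rate_gbps: float,
                         interface_bits: int = INTERFACE_BITS) -> float:
    """Peak bandwidth of one stack in GB/s."""
    return interface_bits * pin_rate_gbps / 8


if __name__ == "__main__":
    for rate in (2.4, 3.2, 3.6):
        print(f"{rate} Gbps/pin -> {stack_bandwidth_gb_s(rate):.1f} GB/s per stack")
```

The three rates give roughly 307, 410, and 460 GB/s, matching the standard, Samsung, and SK Hynix figures quoted elsewhere in the report.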

The market growth is primarily driven by increasing demand from artificial intelligence, machine learning, and high-performance computing applications. While servers dominate the application segment with over 65% market share, networking applications are witnessing rapid adoption. Technological advancements by industry leaders including Samsung and SK Hynix, who collectively hold approximately 85% of the market share, continue to push performance boundaries. Recent developments include Samsung's announcement of 8-layer HBM2E with 16GB capacity and 410GB/s bandwidth, while SK Hynix has achieved 460GB/s speeds in its premium offerings.

MARKET DYNAMICS

MARKET DRIVERS

Rising Demand for High-Bandwidth Applications in AI and HPC to Fuel Market Growth

The explosive growth of artificial intelligence (AI) and high-performance computing (HPC) applications is driving unprecedented demand for HBM2E DRAM solutions. These cutting-edge memory technologies deliver bandwidth capabilities exceeding 460 GB/s, making them indispensable for training complex neural networks and processing massive datasets. The AI accelerator market alone is projected to grow at over 35% CAGR through 2030, with data center investments reaching record levels as enterprises embrace machine learning at scale. HBM2E's 3D-stacked architecture provides the necessary throughput to eliminate memory bottlenecks in next-gen computing architectures.

Advancements in Semiconductor Packaging Technologies Create New Opportunities

The development of advanced packaging solutions like 2.5D and 3D IC integration is enabling broader adoption of HBM2E across diverse applications. Major foundries have reported a 50% increase in demand for chiplet-based designs that incorporate HBM memory stacks. This packaging revolution allows HBM2E to be tightly integrated with processors while maintaining signal integrity at extreme bandwidths. The emergence of universal chiplet interconnect standards has further accelerated design cycles, with several leading semiconductor firms now offering HBM2E-integrated solutions for datacenter, networking, and high-end computing applications.

Increasing Data Center Investments Stimulate Demand for Memory Performance

With global hyperscalers allocating over 40% of their infrastructure budgets to AI/ML capabilities, HBM2E is becoming a critical component in next-generation server architectures. The memory's combination of high density (up to 24GB per stack) and exceptional bandwidth significantly reduces data transfer latency in large-scale deployments. This advantage is particularly valuable for real-time analytics, natural language processing, and scientific computing workloads where memory subsystem performance directly impacts total system throughput.

MARKET RESTRAINTS

High Manufacturing Complexity and Cost Constraints Limit Mass Adoption

The sophisticated 3D stacking process required for HBM2E production presents significant manufacturing challenges that restrain market expansion. Each stack involves precisely bonding multiple memory dies with thousands of through-silicon vias (TSVs), a process that currently yields below 70% for premium configurations. This manufacturing complexity translates to costs nearly 3-4× higher than conventional GDDR memory, making HBM2E cost-prohibitive for many mainstream applications. While prices are expected to decline as production scales, the technology remains constrained to premium segments where performance outweighs cost considerations.

Other Restraints

Thermal Management Challenges

The high-density packaging of HBM2E creates substantial thermal dissipation challenges that complicate system design. With power densities exceeding 500mW/mm² in some configurations, effective cooling solutions can add 15-20% to total system costs. These thermal constraints have led to specialized cooling requirements that limit deployment scenarios.

Supply Chain Vulnerabilities

The concentrated supply base for advanced memory packaging creates potential bottlenecks. With only a handful of facilities worldwide capable of high-volume HBM production, any disruption could significantly impact market availability.

MARKET CHALLENGES

Design Complexity Poses Integration Hurdles for System Architects

Implementing HBM2E in real-world systems requires addressing multiple signal integrity and power delivery challenges. The ultra-wide interfaces operating at 3.2 Gbps/pin demand sophisticated PCB designs with tight impedance control and advanced power distribution networks. These requirements have led to development cycles that are typically 30% longer than conventional memory implementations, discouraging adoption among cost-sensitive OEMs. Furthermore, the ecosystem for design tools and IP supporting HBM2E remains nascent, requiring specialized engineering expertise that is in short supply across the industry.

Competition from Alternative Memory Technologies Creates Market Uncertainty

Alternative memory technologies like GDDR6X and CXL-based architectures are beginning to erode HBM2E's performance advantage in certain applications. GDDR6X now offers over 1 TB/s of aggregate bandwidth across a full memory bus in some configurations, at significantly lower cost points, for applications that can tolerate higher latency. Meanwhile, Compute Express Link (CXL) enables disaggregated memory architectures that provide flexibility advantages for certain cloud workloads. While HBM2E maintains superiority in bandwidth-intensive applications, these alternatives are creating market segmentation challenges.

MARKET OPPORTUNITIES

Automotive and Edge AI Applications Present Untapped Growth Potential

The surging demand for autonomous vehicle computing and edge AI processing is creating new markets for high-performance memory solutions. While currently representing less than 5% of HBM2E deployments, automotive applications are projected to grow at nearly 60% CAGR as Level 4/5 autonomous systems require real-time processing of multiple high-resolution sensors. The technology's combination of high bandwidth and power efficiency makes it particularly suitable for in-vehicle AI accelerators where thermal and spatial constraints are paramount.

Next-Gen HBM3 Standard to Drive Refresh Cycles and Upgrade Opportunities

The impending transition to HBM3 technology, offering bandwidth exceeding 800 GB/s, is creating opportunities for OEMs to develop upgrade paths and migration strategies. Early adoption in high-end servers and supercomputing applications demonstrates the potential for performance doubling while maintaining backward compatibility. This transition cycle presents vendors with opportunities to offer value-added design services and memory subsystem co-optimization solutions.

HBM2E DRAM MARKET TRENDS

High-Performance Computing Demand Accelerating HBM2E Adoption

The rapid expansion of artificial intelligence (AI), machine learning, and cloud computing has created unprecedented demand for high-bandwidth memory solutions like HBM2E. With a maximum bandwidth of 460 GB/s per stack, this next-generation memory technology is becoming indispensable for data centers handling complex workloads. Over 65% of AI accelerators are expected to incorporate HBM technology by 2026, driven by its ability to process massive datasets efficiently. The 8G and 16G variants are seeing particular adoption in GPU-accelerated systems, where memory bandwidth directly impacts deep learning performance.

Other Trends

Advanced Packaging Innovations

While HBM2E provides superior bandwidth, its widespread adoption faces challenges in packaging technology. The industry is responding with 2.5D and 3D stacking solutions that enable higher thermal efficiency and yield rates. TSMC's CoWoS (Chip on Wafer on Substrate) packaging, for instance, has proven particularly effective for HBM integration, allowing processors to achieve 50% better power efficiency compared to traditional configurations. These packaging breakthroughs are critical as HBM2E stacks scale to 12 dies per package while managing heat dissipation challenges.

Geopolitical Factors Reshaping Supply Chains

The HBM2E market is experiencing significant shifts due to global semiconductor trade dynamics. With the U.S. accounting for nearly 40% of high-performance computing demand and China rapidly expanding its data center infrastructure, regional supply chains are adapting. Domestic production capabilities are becoming strategic priorities, as evidenced by government investments exceeding $50 billion worldwide in advanced memory manufacturing. SK Hynix and Samsung continue to dominate production, but new fabs in North America and Europe aim to diversify the supply base amid ongoing industry consolidation.

COMPETITIVE LANDSCAPE

Key Industry Players

Semiconductor Giants Drive Innovation in High-Bandwidth Memory Solutions

The HBM2E DRAM market is highly concentrated, dominated by a few major semiconductor manufacturers with strong technological capabilities and production capacities. SK Hynix leads the market with its advanced 16GB HBM2E offering 460 GB/s bandwidth - the highest available bandwidth among commercial solutions as of 2024. The company's strong position comes from its vertical integration strategy and early investments in 3D stacking technology.

Samsung Electronics closely follows with its 410 GB/s HBM2E memory, holding approximately 40% of the global market share. Samsung differentiates itself through its proprietary TSV (Through-Silicon Via) technology and foundry partnerships with major GPU manufacturers. The company's ability to package HBM2E with logic dies gives it an edge in serving AI accelerator markets.

These two Korean memory giants collectively control over 85% of the HBM2E market, creating significant barriers to entry for other players. However, several factors are gradually changing this dynamic:

Growing demand from diverse applications including servers, networking equipment, and high-performance computing

Increasing design wins with FPGA and ASIC manufacturers

Government initiatives supporting domestic semiconductor production in multiple countries

While the market remains oligopolistic, emerging players are making strategic investments to challenge the incumbents. Micron Technology is developing alternative architectures that could compete with HBM2E, though it hasn't yet reached mass production. Meanwhile, Intel has been integrating HBM solutions in its server processors, potentially creating new avenues for competition.

The competitive intensity is expected to increase as bandwidth requirements continue growing across various applications. Both SK Hynix and Samsung have announced development of next-generation HBM solutions with even higher densities and speeds, aiming to maintain their technological leadership positions.

List of Key HBM2E DRAM Companies Profiled

SK Hynix (South Korea)

Samsung Electronics (South Korea)

Micron Technology (U.S.)

Intel Corporation (U.S.)

ChangXin Memory Technologies (China)

Segment Analysis:

By Type

16 G Segment Gains Traction Due to High Demand for High-Performance Computing Applications

The market is segmented based on type into:

8 G

16 G

Subtypes: Standard 16 G and high-bandwidth variants

By Application

Servers Segment Drives Market Growth with Increasing Cloud Computing and Data Center Demand

The market is segmented based on application into:

Servers

Networking

Consumer electronics

Others

By Bandwidth

High-Bandwidth Variants Emerge as Key Differentiators in Performance-Critical Applications

The market is segmented based on bandwidth specifications into:

Standard HBM2E (307 GB/s)

Enhanced variants

SK Hynix (460 GB/s)

Samsung (410 GB/s)
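The per-stack figures listed above follow from simple arithmetic: peak bandwidth is the per-pin data rate multiplied by the interface width. The sketch below assumes the 1024-bit per-stack interface used by HBM2 and HBM2E, and per-pin rates of 2.4, 3.2, and 3.6 Gb/s, which are the publicly quoted speeds that correspond to the 307, 410, and 460 GB/s tiers. The function and variable names are illustrative only.

```python
# Peak per-stack bandwidth = pin rate (Gb/s) * interface width (bits) / 8 bits per byte.
HBM_INTERFACE_BITS = 1024  # assumption: one HBM2/HBM2E stack exposes a 1024-bit bus


def stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Return the peak bandwidth of a single stack in GB/s."""
    return pin_rate_gbps * width_bits / 8


# Assumed per-pin data rates for the bandwidth tiers named in the segmentation.
pin_rates = {
    "Standard (307 GB/s tier)": 2.4,
    "Samsung (410 GB/s tier)": 3.2,
    "SK Hynix (460 GB/s tier)": 3.6,
}

for label, rate in pin_rates.items():
    print(f"{label}: {stack_bandwidth_gbs(rate):.1f} GB/s")
```

An accelerator that carries several stacks would multiply the per-stack result by the stack count, which is why a small difference in pin rate matters at the package level.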

By End-User Industry

AI and HPC Applications Dominate Adoption Due to Intensive Memory Bandwidth Requirements

The market is segmented based on end-user industry into:

Artificial Intelligence

High Performance Computing

Graphics Processing

Enterprise Storage

Regional Analysis: HBM2E DRAM Market

North America

The North American HBM2E DRAM market is characterized by high-performance computing applications and strong demand from data centers. The U.S. dominates the regional market, driven by investments in AI infrastructure and hyperscale cloud computing. SK Hynix and Samsung have established partnerships with major tech firms to supply HBM2E for GPUs and AI accelerators. However, supply chain constraints and complex fabrication processes have led to price volatility, pushing manufacturers to accelerate production capacity expansion. The region benefits from government-funded semiconductor initiatives, such as the CHIPS and Science Act, which indirectly supports memory innovation ecosystems.

Europe

Europe’s HBM2E DRAM adoption is primarily fueled by automotive and industrial applications, particularly in Germany and the Nordic countries. The region emphasizes energy-efficient memory solutions due to strict sustainability regulations under the EU Green Deal. While local production remains limited, collaborative R&D projects between academic institutions and global players like Intel (partnering with SK Hynix for Ponte Vecchio GPUs) are bridging the gap. The aerospace and defense sectors also contribute to niche demand, though higher costs compared to conventional DDR5 solutions restrain mass-market penetration.

Asia-Pacific

As the largest consumer and producer of HBM2E DRAM, Asia-Pacific is fueled by South Korea’s semiconductor leadership and China’s aggressive investments in domestic AI hardware. SK Hynix and Samsung collectively control over 90% of global HBM2E production, leveraging advanced packaging technologies. Japan plays a critical role in supplying key materials like photoresists, while Taiwan focuses on downstream integration with TSMC’s CoWoS platforms. Price sensitivity in Southeast Asia, however, limits adoption to high-margin applications, with most demand concentrated in data center deployments and supercomputing projects.

South America

The region exhibits nascent demand, chiefly driven by Brazil’s developing AI and fintech sectors. Limited local manufacturing forces reliance on imports, subjecting buyers to logistical delays and tariff-related cost fluctuations. Chile and Argentina show sporadic interest in HBM2E for academic research clusters, but budget constraints prioritize cheaper alternatives like GDDR6. The lack of specialized data infrastructure further slows adoption, though partnerships with cloud service providers could unlock incremental growth.

Middle East & Africa

Growth is emerging in Gulf Cooperation Council (GCC) nations, where sovereign wealth funds invest in smart city initiatives requiring high-bandwidth memory. The UAE and Saudi Arabia lead in deploying HBM2E for oil/gas simulations and financial analytics, albeit at small scales. Africa’s market remains largely untapped due to limited AI/ML adoption and underdeveloped semiconductor ecosystems. Long-term potential exists as regional tech hubs like Nigeria and Kenya gradually embrace advanced computing, though scalability depends on improved power infrastructure and cost reductions.

Report Scope

This market research report provides a comprehensive analysis of the Global HBM2E DRAM market, covering the forecast period 2025–2032. It offers detailed insights into market dynamics, technological advancements, competitive landscape, and key trends shaping the high-bandwidth memory industry.

Key focus areas of the report include:

Market Size & Forecast: Historical data and future projections for revenue, unit shipments (Million GB), and market value across major regions and segments. The market was valued at USD 2.1 billion in 2024 and is projected to reach USD 5.8 billion by 2032 at a CAGR of 13.5%.

Segmentation Analysis: Detailed breakdown by product type (8G, 16G), application (Servers, Networking, Consumer, Others), and end-user industries to identify high-growth segments.

Regional Outlook: Insights into market performance across North America, Europe, Asia-Pacific, Latin America, and Middle East & Africa. Asia-Pacific dominates with 62% market share in 2024.

Competitive Landscape: Profiles of leading manufacturers including SK Hynix and Samsung, covering their product portfolios (410-460 GB/s bandwidth variants), R&D investments, and strategic partnerships.

Technology Trends: Analysis of JEDEC standards evolution, 3D stacking advancements (up to 12 dies), and integration with AI/ML workloads requiring >400 GB/s bandwidth.

Market Drivers & Restraints: Evaluation of data center expansion vs. high manufacturing costs and thermal management challenges in HBM architectures.

Stakeholder Analysis: Strategic insights for memory suppliers, foundries, hyperscalers, and investors regarding capacity planning and technology roadmaps.

The research methodology combines primary interviews with industry experts and analysis of financial reports from semiconductor manufacturers to ensure data accuracy.
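The growth rate quoted in the scope can be checked against its own endpoints. The sketch below uses the standard compound annual growth rate formula, (end / start)^(1 / years) - 1, and assumes eight annual compounding periods between 2024 and 2032. The function name is illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the report scope: USD 2.1 billion (2024) to USD 5.8 billion (2032).
# Assumption: 8 annual compounding periods between the two years.
rate = cagr(2.1, 5.8, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate should land close to the 13.5% stated in the scope. The same function can be applied to any other start value, end value, and period in the report.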

FREQUENTLY ASKED QUESTIONS:

What is the current size of the global HBM2E DRAM market?

-> The HBM2E DRAM market was valued at US$ 2.84 billion in 2024 and is projected to reach US$ 7.12 billion by 2032, at a CAGR of 12.2% during the forecast period 2025-2032.

Which companies lead the HBM2E DRAM market?

-> SK Hynix (46% market share) and Samsung (41% share) dominate the market, with their 16GB stacks offering 460GB/s and 410GB/s bandwidth respectively.

What drives HBM2E DRAM adoption?

-> Key drivers include AI accelerator demand (60% of 2024 shipments), HPC applications, and bandwidth requirements for 5G infrastructure.

Which region has the highest growth potential?

-> North America (32% CAGR) leads in AI server deployments, while Asia-Pacific accounts for 78% of manufacturing capacity.

What are key technology developments?

-> Emerging innovations include 12-die stacks, TSV scaling below 5μm, and heterogeneous integration with logic dies for next-gen AI chips.

Related Reports:

https://semiconductorblogs21.blogspot.com/2025/06/ssd-processor-market-segmentation-by.html

https://semiconductorblogs21.blogspot.com/2025/06/semiconductor-wafer-processing-chambers.html

https://semiconductorblogs21.blogspot.com/2025/06/medical-thermistor-market-supply-chain.html

https://semiconductorblogs21.blogspot.com/2025/06/industrial-led-lighting-market.html

https://semiconductorblogs21.blogspot.com/2025/06/fz-polished-wafer-market-demand-outlook.html

https://semiconductorblogs21.blogspot.com/2025/06/fanless-embedded-system-market-regional.html

https://semiconductorblogs21.blogspot.com/2025/06/ceramic-cement-resistor-market-emerging.html

https://semiconductorblogs21.blogspot.com/2025/06/universal-asynchronous-receiver.html

https://semiconductorblogs21.blogspot.com/2025/06/fbg-strain-sensor-market-competitive.html

https://semiconductorblogs21.blogspot.com/2025/06/led-display-module-market-industry-size.html

https://semiconductorblogs21.blogspot.com/2025/06/gain-and-loss-equalizer-market-growth.html


VDO Successfully Delivers a Batch of White-Box GPU Servers for a Large-Scale AI Project

🚚🚚 VDO has successfully delivered the next shipment of white-box GPU servers to a customer running a large-scale technology project in Vietnam.

This is another important milestone in VDO's journey of supporting Vietnamese businesses as they transform their technology infrastructure, particularly in AI, big data, and high-performance computing (HPC) applications.

✅ Systems integrate powerful GPUs in a flexible white-box design

✅ VDO directly configures, assembles, and tunes the systems for performance, using genuine hardware components from brands for which VDO is an official distributor in Vietnam

✅ Meets the demanding requirements of AI, data analytics, machine learning, and image processing applications

✅ Supports configuration customization for each real-world deployment model

-------------

Drawing on its experience with many technology infrastructure projects, VDO has successfully installed white-box GPU server systems for many partners and customers in Vietnam. We are ready to support businesses throughout their AI adoption journey, from solution consulting and equipment supply to deployment, operation, and infrastructure expansion.

👉👉 Contact VDO for advice on the optimal IT infrastructure solution for your business:

☎ 1900 0366

🗂 https://vdo.com.vn | https://vdoecommerce.com

#VDO#VDOdistributor#Luonviban#Alwaysforyou#GPUserver#VDOGPUServer#AIinfrastructure#Datacenter#WhiteBoxServer#ServerChoDoanhNghiep#HạTầngCôngNghệ


In today's fast-paced digital world, businesses and industries rely more than ever on efficient, scalable solutions for managing their infrastructure. One of the most promising innovations is the combination of cloud computing infrastructure and artificial intelligence (AI). Together, they are transforming how we handle infrastructure asset management and optimizing industries such as energy. This blog explains how these technologies work together and the impact they are having across a wide range of sectors, including the US energy markets.

What is Cloud Computing Infrastructure?

Cloud computing infrastructure refers to the systems that serve as the basis for delivering cloud services. This may include virtual servers, storage systems, networking capabilities, and databases. They are offered to businesses and consumers through the internet. Instead of having to hold expensive physical infrastructure, a company can use cloud infrastructure solutions to scale its operations very efficiently.

Businesses no longer need to worry about the capital expense of maintaining and upgrading on-premise infrastructure. Cloud providers also supply management tools that let organizations monitor their digital resources seamlessly. In the energy industry, for example, cloud computing lets companies run simulations and manage the output without buying large, expensive hardware.

Changing the Face of Computing Infrastructure

The Role of AI Technology

Artificial intelligence (AI) refers to computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, and problem-solving. AI is revolutionizing how infrastructure is managed by enabling automated systems to make decisions based on data and real-time analysis.

In the energy industry, for instance, AI can analyze large volumes of data to optimize operations, predict failures, and recommend improvements. This is vital in sectors such as the US energy markets, where AI solutions can predict market fluctuations, optimize energy distribution, and increase overall efficiency.

Artificial Intelligence in Cloud Computing

When AI is combined with cloud computing, the possibilities expand considerably. AI-based cloud solutions let businesses benefit from AI capabilities without investing in dedicated hardware or a specialized team. For example, companies can use cloud-hosted AI to analyze large data sets stored in the cloud, forecast energy demand, or predict equipment failures in real time.

AI and Cloud Computing for Asset Management

One of the main benefits of integrating AI with cloud computing infrastructure is better infrastructure asset management. Managing equipment, machines, and even digital services is complex. AI algorithms help by identifying patterns and predicting when assets will require maintenance or replacement.

#ai infrastructure#cloudstorage#cloud computing#artificial intelligence tools#artificial intelligence


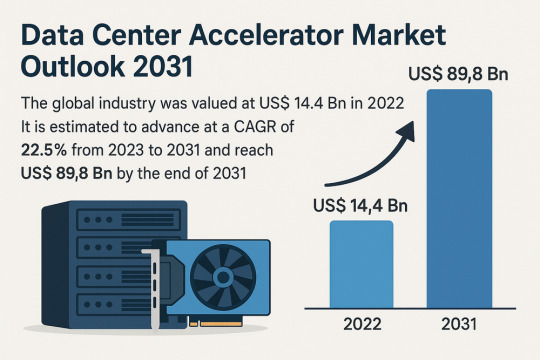

Data Center Accelerator Market Set to Transform AI Infrastructure Landscape by 2031

The global data center accelerator market is poised for exponential growth, projected to rise from USD 14.4 Bn in 2022 to a staggering USD 89.8 Bn by 2031, advancing at a CAGR of 22.5% during the forecast period from 2023 to 2031. Rapid adoption of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) is the primary catalyst driving this expansion.
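To see how the headline figures compound, the sketch below projects the 2022 base year forward at the stated CAGR. It assumes constant growth over nine annual periods (2023 through 2031); the function name and the year-by-year path are illustrative and are not taken from the report, which gives only the endpoints.

```python
def project(start_value: float, annual_rate: float, years: int) -> list[float]:
    """Return the value at the end of each year under constant compound growth."""
    values = []
    value = start_value
    for _ in range(years):
        value *= 1 + annual_rate
        values.append(value)
    return values


# Stated inputs: USD 14.4 Bn in 2022, CAGR of 22.5%.
# Assumption: nine compounding periods, one per year from 2023 to 2031.
path = project(14.4, 0.225, 2031 - 2022)
for year, value in zip(range(2023, 2032), path):
    print(f"{year}: USD {value:.1f} Bn")
```

The final value should come out within rounding distance of the USD 89.8 Bn projection, which suggests the stated CAGR and endpoints are mutually consistent.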

Market Overview: Data center accelerators are specialized hardware components that improve computing performance by efficiently handling intensive workloads. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), which complement CPUs by expediting data processing.

Accelerators enable data centers to process massive datasets more efficiently, reduce reliance on servers, and optimize costs, a significant advantage in a data-driven world.

Market Drivers & Trends

Rising Demand for High-performance Computing (HPC): The proliferation of data-intensive applications across industries such as healthcare, autonomous driving, financial modeling, and weather forecasting is fueling demand for robust computing resources.

Boom in AI and ML Technologies: The computational requirements of AI and ML are driving the need for accelerators that can handle parallel operations and manage extensive datasets efficiently.

Cloud Computing Expansion: Major players like AWS, Azure, and Google Cloud are investing in infrastructure that leverages accelerators to deliver faster AI-as-a-service platforms.

Latest Market Trends

GPU Dominance: GPUs continue to dominate the market, especially in AI training and inference workloads, due to their capability to handle parallel computations.

Custom Chip Development: Tech giants are increasingly developing custom chips (e.g., Meta’s MTIA and Google's TPUs) tailored to their specific AI processing needs.

Energy Efficiency Focus: Companies are prioritizing the design of accelerators that deliver high computational power with reduced energy consumption, aligning with green data center initiatives.

Key Players and Industry Leaders

Prominent companies shaping the data center accelerator landscape include:

NVIDIA Corporation – A global leader in GPUs powering AI, gaming, and cloud computing.

Intel Corporation – Investing heavily in FPGA and ASIC-based accelerators.

Advanced Micro Devices (AMD) – Recently expanded its EPYC CPU lineup for data centers.

Meta Inc. – Introduced Meta Training and Inference Accelerator (MTIA) chips for internal AI applications.

Google (Alphabet Inc.) – Continues deploying TPUs across its cloud platforms.

Other notable players include Huawei Technologies, Cisco Systems, Dell Inc., Fujitsu, Enflame Technology, Graphcore, and SambaNova Systems.

Recent Developments

March 2023 – NVIDIA introduced a comprehensive Data Center Platform strategy at GTC 2023 to address diverse computational requirements.

June 2023 – AMD launched new EPYC CPUs designed to complement GPU-powered accelerator frameworks.

2023 – Meta Inc. revealed the MTIA chip to improve performance for internal AI workloads.

2023 – Intel announced a four-year roadmap for data center innovation focused on Infrastructure Processing Units (IPUs).

Gain an understanding of key findings from our Report in this sample - https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=82760

Market Opportunities

Edge Data Center Integration: As computing shifts closer to the edge, opportunities arise for compact and energy-efficient accelerators in edge data centers for real-time analytics and decision-making.

AI in Healthcare and Automotive: As AI adoption grows in precision medicine and autonomous vehicles, demand for accelerators tuned for domain-specific processing will soar.

Emerging Markets: Rising digitization in emerging economies presents substantial opportunities for data center expansion and accelerator deployment.

Future Outlook

With AI, ML, and analytics forming the foundation of next-generation applications, the demand for enhanced computational capabilities will continue to climb. By 2031, the data center accelerator market will likely transform into a foundational element of global IT infrastructure.

Analysts anticipate increasing collaboration between hardware manufacturers and AI software developers to optimize performance across the board. As digital transformation accelerates, companies investing in custom accelerator architectures will gain significant competitive advantages.

Market Segmentation

By Type:

Central Processing Unit (CPU)

Graphics Processing Unit (GPU)

Application-Specific Integrated Circuit (ASIC)

Field-Programmable Gate Array (FPGA)

Others

By Application:

Advanced Data Analytics

AI/ML Training and Inference

Computing

Security and Encryption

Network Functions

Others

Regional Insights

Asia Pacific dominates the global market due to explosive digital content consumption and rapid infrastructure development in countries such as China, India, Japan, and South Korea.

North America holds a significant share due to the presence of major cloud providers, AI startups, and heavy investment in advanced infrastructure. The U.S. remains a critical hub for data center deployment and innovation.

Europe is steadily adopting AI and cloud computing technologies, contributing to increased demand for accelerators in enterprise data centers.

Why Buy This Report?

Comprehensive insights into market drivers, restraints, trends, and opportunities

In-depth analysis of the competitive landscape

Region-wise segmentation with revenue forecasts

Includes strategic developments and key product innovations

Covers historical data from 2017 and forecast till 2031

Delivered in convenient PDF and Excel formats

Frequently Asked Questions (FAQs)

1. What was the size of the global data center accelerator market in 2022? The market was valued at US$ 14.4 Bn in 2022.

2. What is the projected market value by 2031? It is projected to reach US$ 89.8 Bn by the end of 2031.

3. What is the key factor driving market growth? The surge in demand for AI/ML processing and high-performance computing is the major driver.

4. Which region holds the largest market share? Asia Pacific is expected to dominate the global data center accelerator market from 2023 to 2031.

5. Who are the leading companies in the market? Top players include NVIDIA, Intel, AMD, Meta, Google, Huawei, Dell, and Cisco.

6. What type of accelerator dominates the market? GPUs currently dominate the market due to their parallel processing efficiency and widespread adoption in AI/ML applications.

7. What applications are fueling growth? Applications like AI/ML training, advanced analytics, and network security are major contributors to the market's growth.

Explore Latest Research Reports by Transparency Market Research: Tactile Switches Market: https://www.transparencymarketresearch.com/tactile-switches-market.html

GaN Epitaxial Wafers Market: https://www.transparencymarketresearch.com/gan-epitaxial-wafers-market.html

Silicon Carbide MOSFETs Market: https://www.transparencymarketresearch.com/silicon-carbide-mosfets-market.html

Chip Metal Oxide Varistor (MOV) Market: https://www.transparencymarketresearch.com/chip-metal-oxide-varistor-mov-market.html

About Transparency Market Research

Transparency Market Research, a global market research company registered at Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of Analysts, Researchers, and Consultants use proprietary data sources and various tools & techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact: Transparency Market Research Inc. CORPORATE HEADQUARTER DOWNTOWN, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA Tel: +1-518-618-1030 USA - Canada Toll Free: 866-552-3453 Website: https://www.transparencymarketresearch.com


High-Performance 2U 4-Node Server – Hexadata HD-H252-Z10

The Hexadata HD-H252-Z10 Ver: Gen001 is a cutting-edge 2U high-density server featuring 4 rear-access nodes, each powered by AMD EPYC™ 7003 series processors. Designed for HPC, AI, and data analytics workloads, it offers 8-channel DDR4 memory across 32 DIMMs, 24 hot-swappable NVMe/SATA SSD bays, and 8 M.2 PCIe Gen3 x4 slots. With advanced features like OCP 3.0 readiness, Aspeed® AST2500 remote management, and 2000W 80 PLUS Platinum redundant PSU, this server ensures optimal performance and scalability for modern data centers. For more details, visit the Hexadata Server Page.

#High-density server#AMD EPYC Server#2U 4-Node Server#Data Center Solutions#HPC Server#AI Infrastructure#NVMe Storage Server#OCP 3.0 Ready#Remote Server Management#HexaData servers


Available Cloud Computing Services at Fusion Dynamics

We Fuel The Digital Transformation Of Next-Gen Enterprises!

Fusion Dynamics provides future-ready IT and computing infrastructure that delivers high performance while being cost-efficient and sustainable. We envision, plan and build next-gen data and computing centers in close collaboration with our customers, addressing their business’s specific needs. Our turnkey solutions deliver best-in-class performance for all advanced computing applications such as HPC, Edge/Telco, Cloud Computing, and AI.

With over two decades of expertise in IT infrastructure implementation and an agile approach that matches the lightning-fast pace of new-age technology, we deliver future-proof solutions tailored to the niche requirements of various industries.

Our Services

We decode and optimise the end-to-end design and deployment of new-age data centers with our industry-vetted services.

System Design

When designing a cutting-edge data center from scratch, we follow a systematic and comprehensive approach. First, our front-end team connects with you to draw a set of requirements based on your intended application, workload, and physical space. Following that, our engineering team defines the architecture of your system and deep dives into component selection to meet all your computing, storage, and networking requirements. With our highly configurable solutions, we help you formulate a system design with the best CPU-GPU configurations to match the desired performance, power consumption, and footprint of your data center.

Why Choose Us

We bring a potent combination of over two decades of experience in IT solutions and a dynamic approach to continuously evolve with the latest data storage, computing, and networking technology. Our team constitutes domain experts who liaise with you throughout the end-to-end journey of setting up and operating an advanced data center.

With a profound understanding of modern digital requirements, backed by decades of industry experience, we work closely with your organisation to design the most efficient systems to catalyse innovation. From sourcing cutting-edge components from leading global technology providers to seamlessly integrating them for rapid deployment, we deliver state-of-the-art computing infrastructures to drive your growth!

What We Offer

The Fusion Dynamics Advantage!

At Fusion Dynamics, we believe that our responsibility goes beyond providing a computing solution to help you build a high-performance, efficient, and sustainable digital-first business. Our offerings are carefully configured to not only fulfil your current organisational requirements but to future-proof your technology infrastructure as well, with an emphasis on the following parameters:

Performance density

Rather than focusing solely on absolute processing power and storage, we strive to achieve the best performance-to-space ratio for your application. Our next-generation processors outrival the competition on processing as well as storage metrics.

Flexibility

Our solutions are configurable at practically every design layer, even down to the choice of processor architecture – ARM or x86. Our subject matter experts are here to assist you in designing the most streamlined and efficient configuration for your specific needs.

Scalability

We prioritise your current needs with an eye on your future targets. Deploying a scalable solution ensures operational efficiency as well as smooth and cost-effective infrastructure upgrades as you scale up.

Sustainability

Our focus on future-proofing your data center infrastructure includes the responsibility to manage its environmental impact. Our power- and space-efficient compute elements offer the highest core density and performance/watt ratios. Furthermore, our direct liquid cooling solutions help you minimise your energy expenditure. Therefore, our solutions allow rapid expansion of businesses without compromising on environmental footprint, helping you meet your sustainability goals.

Stability

Your compute and data infrastructure must operate at optimal performance levels irrespective of fluctuations in data payloads. We design systems that can withstand extreme fluctuations in workloads to guarantee operational stability for your data center.

Leverage our prowess in every aspect of computing technology to build a modern data center. Choose us as your technology partner to ride the next wave of digital evolution!

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#hpc cluster management software#cloud backups for business#platform as a service vendors#edge computing services#server cooling system#ai services providers#data centers cooling systems#integration platform as a service#https://www.tumblr.com/#cloud native application development#server cloud backups#edge computing solutions for telecom#the best cloud computing services#advanced cooling systems for cloud computing#c#data center cabling solutions#cloud backups for small business#future applications of cloud computing


ABF Substrate (FC-BGA) Market Growth Analysis 2025

The global market for ABF (FCBGA) substrates was valued at US$ 5.16 billion in 2023 and is projected to reach a revised size of US$ 10.2 billion by 2030, growing at a CAGR of 9.86% during the forecast period 2024-2030.

Get free sample of this report at : https://www.intelmarketresearch.com/download-free-sample/318/abf-substrate-fc-bga

PCBs are a key component of many electronic products. They are used in computers, communications equipment, and a wide range of consumer electronics, and in recent years they have been widely adopted in the automotive, industrial, medical, military, and aerospace industries. The development of this industry is therefore driven by advances in modern science and technology and is closely tied to demand for end products.

ABF (FCBGA) substrates are currently produced mainly in Japan, China Taiwan, South Korea, Southeast Asia, and China Mainland.

The Japan market for ABF (FCBGA) substrates was valued at US$ 1,684 million in 2023 and will reach US$ 2,746 million by 2030, at a CAGR of 7.21% during the forecast period of 2024 through 2030.

The China Taiwan market for ABF (FCBGA) substrates was valued at US$ 2,011 million in 2023 and will reach US$ 3,079 million by 2030, at a CAGR of 6.03% during the forecast period of 2024 through 2030.

The South Korea market for ABF (FCBGA) substrates was valued at US$ 614 million in 2023 and will reach US$ 1,677 million by 2030, at a CAGR of 12.97% during the forecast period of 2024 through 2030.

The China Mainland market for ABF (FCBGA) substrates was valued at US$ 654 million in 2023 and will reach US$ 2,284 million by 2030, at a CAGR of 18.92% during the forecast period of 2024 through 2030.

Key global manufacturers of ABF (FCBGA) substrates include Unimicron, Ibiden, Nan Ya PCB, Shinko Electric Industries, Kinsus Interconnect, AT&S, Semco, Kyocera, and TOPPAN. In 2023, the top five players worldwide held approximately 73% of revenue.

Asia Pacific is the largest market, with a share of about 78%. Key consumers in Asia are China Taiwan, South Korea, Japan, China Mainland, and Southeast Asia.

In terms of products, 4-8 layer ABF substrates are the most commonly used, owing to strong demand from PCs. Over the next few years, ABF substrates with more than 10 layers are expected to see wide use, driven by demand from AI and HPC chips, high-end servers, and 5G.

Key end users include Intel, AMD, Nvidia, Apple, and Samsung. In 2023, these end users intensified their competition to secure capacity from ABF substrate suppliers for their HPC chips through at least 2025. Almost all ABF substrate manufacturers plan to expand production capacity in the next few years, and several companies plan to enter ABF substrate production, such as Anhui Splendid Technology, Aoxin Semiconductor Technology (Taicang), and Keruisi Semiconductor Technology (Dongyang). The global competitive landscape could look very different in two to five years and remains highly uncertain.

Report Scope

This report aims to provide a comprehensive presentation of the global market for FC-BGA, with both quantitative and qualitative analysis, to help readers develop business/growth strategies, assess the market competitive situation, analyze their position in the current marketplace, and make informed business decisions regarding FC-BGA.

The FC-BGA market size, estimations, and forecasts are provided in terms of output/shipments (K Square Meters) and revenue ($ millions), considering 2023 as the base year, with history and forecast data for the period from 2019 to 2030. This report segments the global FC-BGA market comprehensively. Regional market sizes, concerning products by Type, by Application, and by players, are also provided.

For a more in-depth understanding of the market, the report provides profiles of the competitive landscape, key competitors, and their respective market ranks. The report also discusses technological trends and new product developments.

The report will help the FC-BGA manufacturers, new entrants, and industry chain related companies in this market with information on the revenues, production, and average price for the overall market and the sub-segments across the different segments, by company, by Type, by Application, and by regions.

Market Segmentation

By Company

Unimicron

Ibiden

Nan Ya PCB

Shinko Electric Industries

Kinsus Interconnect

AT&S

Semco

Kyocera

Toppan

Zhen Ding Technology

Daeduck Electronics

Shenzhen Fastprint Circuit Tech

Zhuhai Access Semiconductor

LG InnoTek

Shennan Circuit

By Type

4-8 Layers ABF Substrate

8-16 Layers ABF Substrate

Others

By Application

PCs

Server & Data Center

HPC/AI Chips

Communication

Others

Production by Region

China Mainland

Japan

South Korea

China Taiwan

Southeast Asia

Consumption by Region

North America (United States, Canada, Mexico)

Europe (Germany, France, United Kingdom, Italy, Spain, Rest of Europe)

Asia-Pacific (China, India, Japan, South Korea, Australia, Rest of APAC)

The Middle East and Africa (Middle East, Africa)

South and Central America (Brazil, Argentina, Rest of SCA)


Lenovo NVIDIA HGX H200

Unlock new levels of AI and HPC performance with the Lenovo NVIDIA HGX H200. Featuring 8 GPUs, each with 141 GB of memory and a 700 W power rating, it's designed for the most challenging tasks.

GET NOW: https://www.viperatech.com/product/lenovo-nvidia-hgx-h200-141gb-700w-8-gpu/

#Viperatech #AI #HPC #NVIDIA #Lenovo #TechInnovation #Server
