#NVIDIA Tesla server


Text

HexaData HD‑H231‑H60 Ver Gen001 – 2U High-Density Dual‑Node Server

The HexaData HD-H231-H60 Ver Gen001 is a 2U, dual-node, high-density server powered by 2nd Gen Intel Xeon Scalable (“Cascade Lake”) CPUs. Each node supports up to two double-slot NVIDIA Tesla GPUs, 6-channel DDR4 with 32 DIMMs, and Intel Optane DC Persistent Memory. Features include hot-swap NVMe/SATA/SAS bays, low-profile PCIe Gen3 and OCP mezzanine expansion, an Aspeed AST2500 BMC, and dual redundant 2200 W 80 PLUS Platinum PSUs. The system is aimed at HPC, AI, cloud, and edge deployments. More details: Hexadata HD-H231-H60 Ver: Gen001 | 2U High Density Server Page

#2U high density server#dual node server#Intel Xeon Scalable server#GPU optimized server#NVIDIA Tesla server#AI and HPC server#cloud computing server#edge computing hardware#NVMe SSD server#Intel Optane memory server#redundant PSU server#PCIe expansion server#OCP mezzanine server#server with BMC management#enterprise-grade server


Text

How to Optimize Your AI Workloads Using the Tesla H100 GPU?

Artificial intelligence (AI) is reshaping industries, and the need for powerful computing hardware has grown with it. The NVIDIA H100 GPU (often still listed under NVIDIA's older Tesla data-centre branding) is designed specifically for the complex, data-intensive workloads of AI and deep learning applications.


Photo

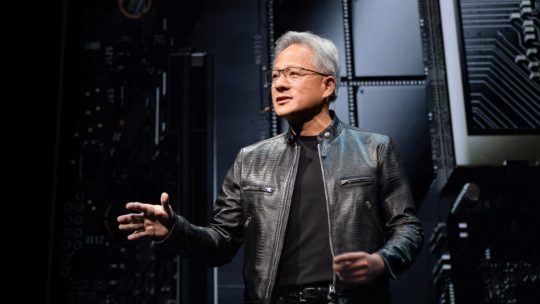

Could humanoid robots really take over the future of AI server manufacturing? According to a recent Reuters report, NVIDIA and Foxconn are exploring this groundbreaking technology. In Houston, Texas, humanoid robots may soon assemble NVIDIA's Blackwell AI servers, marking a major shift in how advanced computing hardware is built. Tesla’s interest in deploying robots in their factories adds to the excitement — could we see a fully automated production line in the US? This move aims to boost domestic production amid trade tariffs and supply chain challenges. Envision a factory where robots build the most powerful AI servers on the planet, all on US soil. For tech enthusiasts and innovators, this signals a new era of efficiency and high-tech manufacturing. Will humanoid automation become the new standard in AI data centers? Stay ahead of industry trends and explore custom computer builds tailored for AI at GroovyComputers.ca — the perfect way to upgrade your tech today. Would you trust humanoid robots to assemble your high-performance computer? Share your thoughts in the comments! #AI #HumanoidRobots #Nvidia #Foxconn #DataCenters #AIComputing #TechInnovation #FutureOfManufacturing #CustomPCs #GroovyComputers #AdvancedTechnology #USManufacturing



Text

Implementing AI: Step-by-step integration guide for hospitals: Specifications Breakdown, FAQs, and More


The healthcare industry is experiencing a transformative shift as artificial intelligence (AI) technologies become increasingly sophisticated and accessible. For hospitals looking to modernize their operations and improve patient outcomes, implementing AI systems represents both an unprecedented opportunity and a complex challenge that requires careful planning and execution.

This comprehensive guide provides healthcare administrators, IT directors, and medical professionals with the essential knowledge needed to successfully integrate AI technologies into hospital environments. From understanding technical specifications to navigating regulatory requirements, we’ll explore every aspect of AI implementation in healthcare settings.

Understanding AI in Healthcare: Core Applications and Benefits

Artificial intelligence in healthcare encompasses a broad range of technologies designed to augment human capabilities, streamline operations, and enhance patient care. Modern AI systems can analyze medical imaging with remarkable precision, predict patient deterioration before clinical symptoms appear, optimize staffing schedules, and automate routine administrative tasks that traditionally consume valuable staff time.

The most impactful AI applications in hospital settings include diagnostic imaging analysis, where machine learning algorithms detect abnormalities in X-rays, CT scans, and MRIs and, for some narrowly defined tasks, match or exceed the accuracy of human radiologists. Predictive analytics systems monitor patient vital signs and electronic health records to identify early warning signs of sepsis, cardiac events, or other critical conditions. Natural language processing tools extract meaningful insights from unstructured clinical notes, while robotic process automation handles insurance verification, appointment scheduling, and billing processes.


Technical Specifications for Hospital AI Implementation

Infrastructure Requirements

Successful AI implementation demands robust technological infrastructure capable of handling intensive computational workloads. Hospital networks must support high-bandwidth data transfer, with minimum speeds of 1 Gbps for imaging applications and 100 Mbps for general clinical AI tools. Storage systems require scalable architecture with at least 50 TB initial capacity for medical imaging AI, expandable to petabyte-scale as usage grows.
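To get a feel for what those bandwidth figures mean in practice, the sketch below estimates how long a single imaging study takes to cross the network. The 500 MB study size and the function name are illustrative assumptions, not figures from this guide, and real links lose some throughput to protocol overhead.

```python
def transfer_seconds(size_megabytes: float, link_megabits_per_s: float) -> float:
    """Ideal time to move a payload over a link, ignoring protocol overhead."""
    size_megabits = size_megabytes * 8  # 1 byte = 8 bits
    return size_megabits / link_megabits_per_s


# Hypothetical 500 MB CT study (assumed size, for illustration only)
STUDY_MB = 500
imaging_link = transfer_seconds(STUDY_MB, 1000)  # 1 Gbps link
general_link = transfer_seconds(STUDY_MB, 100)   # 100 Mbps link
print(f"1 Gbps: {imaging_link:.0f} s, 100 Mbps: {general_link:.0f} s")
```

Under these assumptions the same study takes ten times longer on the slower link, which is why imaging traffic gets the higher minimum.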

Server specifications vary by application type, but most AI systems require dedicated GPU resources for machine learning processing. NVIDIA Tesla V100 or NVIDIA A100 cards are well suited to medical imaging analysis, while CPU-intensive applications benefit from Intel Xeon or AMD EPYC processors with a minimum of 32 cores and 128 GB RAM per server node.

Data Integration and Interoperability

AI systems must seamlessly integrate with existing Electronic Health Record (EHR) platforms, Picture Archiving and Communication Systems (PACS), and Laboratory Information Systems (LIS). HL7 FHIR (Fast Healthcare Interoperability Resources) compliance ensures standardized data exchange between systems, while DICOM (Digital Imaging and Communications in Medicine) standards govern medical imaging data handling.
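As a rough illustration of what FHIR-style data exchange looks like, the sketch below builds a minimal FHIR R4 Observation resource for a heart-rate reading as plain JSON. The helper name and the patient id are hypothetical, and a production integration would validate the resource against the hospital's FHIR server and profiles rather than hand-build dictionaries.

```python
import json


def heart_rate_observation(patient_id: str, beats_per_minute: int) -> dict:
    """Build a minimal FHIR R4 Observation resource as a plain dict."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {
            "coding": [{
                "system": "http://loinc.org",
                "code": "8867-4",  # LOINC code for heart rate
                "display": "Heart rate",
            }]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "valueQuantity": {
            "value": beats_per_minute,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",
            "code": "/min",
        },
    }


# "example-123" is a placeholder patient id
payload = json.dumps(heart_rate_observation("example-123", 72), indent=2)
print(payload)
```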

Database requirements include support for both structured and unstructured data formats, with MongoDB or PostgreSQL recommended for clinical data storage and Apache Kafka for real-time data streaming. Data lakes built on Hadoop or Apache Spark frameworks provide the flexibility needed for advanced analytics and machine learning model training.

Security and Compliance Specifications

Healthcare AI implementations must meet stringent security requirements, including HIPAA compliance, a SOC 2 Type II attestation report, and FDA clearance or approval where applicable. Encryption standards require AES-256 for data at rest and TLS 1.3 for data in transit. Multi-factor authentication, role-based access controls, and comprehensive audit logging are mandatory components.
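The TLS 1.3 requirement for data in transit can be enforced in client code as well as on the network. A minimal sketch using Python's standard `ssl` module follows; the function name is an assumption for illustration, and whether TLS 1.3 is available depends on the OpenSSL build behind the Python interpreter.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses anything older than TLS 1.3."""
    context = ssl.create_default_context()  # certificate and hostname checks stay on
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


ctx = strict_client_context()
print(ctx.minimum_version.name)
```

A context like this can be passed to standard-library clients that accept an `SSLContext`, so a connection to a server that only offers older protocol versions fails instead of silently downgrading.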

Network segmentation isolates AI systems from general hospital networks, with dedicated VLANs and firewall configurations. Regular penetration testing and vulnerability assessments maintain the security posture over time, while backup and disaster recovery systems help meet a 99.99% uptime target.
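An uptime percentage is easier to plan around when converted into a downtime budget. The short sketch below does that conversion; the 99.9% row is included only as a comparison point and is not a figure from this guide.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Yearly downtime budget implied by an uptime percentage."""
    return (100 - uptime_percent) / 100 * MINUTES_PER_YEAR


for target in (99.9, 99.99):
    print(f"{target}% uptime -> {allowed_downtime_minutes(target):.1f} min/year")
```

At 99.99%, the budget works out to a little under an hour of downtime per year, which has to cover both planned maintenance and unplanned outages.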

Step-by-Step Implementation Framework

Phase 1: Assessment and Planning (Months 1–3)

The implementation journey begins with comprehensive assessment of current hospital infrastructure, workflow analysis, and stakeholder alignment. Form a cross-functional implementation team including IT leadership, clinical champions, department heads, and external AI consultants. Conduct thorough evaluation of existing systems, identifying integration points and potential bottlenecks.

Develop detailed project timelines, budget allocations, and success metrics. Establish clear governance structures with defined roles and responsibilities for each team member. Create communication plans to keep all stakeholders informed throughout the implementation process.

Phase 2: Infrastructure Preparation (Months 2–4)

Upgrade network infrastructure to support AI workloads, including bandwidth expansion and latency optimization. Install required server hardware and configure GPU clusters for machine learning processing. Implement security measures including network segmentation, access controls, and monitoring systems.

Establish data integration pipelines connecting AI systems with existing EHR, PACS, and laboratory systems. Configure backup and disaster recovery solutions ensuring minimal downtime during transition periods. Test all infrastructure components thoroughly before proceeding to software deployment.

Phase 3: Software Deployment and Configuration (Months 4–6)

Deploy AI software platforms in staged environments, beginning with development and testing systems before production rollout. Configure algorithms and machine learning models for specific hospital use cases and patient populations. Integrate AI tools with clinical workflows, ensuring seamless user experiences for medical staff.

Conduct extensive testing including functionality verification, performance benchmarking, and security validation. Train IT support staff on system administration, troubleshooting procedures, and ongoing maintenance requirements. Establish monitoring and alerting systems to track system performance and identify potential issues.

Phase 4: Clinical Integration and Training (Months 5–7)

Develop comprehensive training programs for clinical staff, tailored to specific roles and responsibilities. Create user documentation, quick reference guides, and video tutorials covering common use cases and troubleshooting procedures. Implement change management strategies to encourage adoption and address resistance to new technologies.

Begin pilot programs with select departments or use cases, gradually expanding scope as confidence and competency grow. Establish feedback mechanisms allowing clinical staff to report issues, suggest improvements, and share success stories. Monitor usage patterns and user satisfaction metrics to guide optimization efforts.

Phase 5: Optimization and Scaling (Months 6–12)

Analyze performance data and user feedback to identify optimization opportunities. Fine-tune algorithms and workflows based on real-world usage patterns and clinical outcomes. Expand AI implementation to additional departments and use cases following proven success patterns.

Develop long-term maintenance and upgrade strategies ensuring continued system effectiveness. Establish partnerships with AI vendors for ongoing support, feature updates, and technology evolution. Create internal capabilities for algorithm customization and performance monitoring.

Regulatory Compliance and Quality Assurance

Healthcare AI implementations must navigate complex regulatory landscapes including FDA approval processes for diagnostic AI tools, HIPAA compliance for patient data protection, and Joint Commission standards for patient safety. Establish quality management systems documenting all validation procedures, performance metrics, and clinical outcomes.

Implement robust testing protocols including algorithm validation on diverse patient populations, bias detection and mitigation strategies, and ongoing performance monitoring. Create audit trails documenting all AI decisions and recommendations for regulatory review and clinical accountability.
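One simple form of bias detection is to compare a model's sensitivity (true-positive rate) across patient subgroups on a validation set. The sketch below shows the idea with made-up records; the group labels, record layout, and function name are assumptions for illustration, and a real validation would use far larger samples and additional metrics.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity (true-positive rate) from labelled predictions.

    Each record is a tuple: (group, has_condition, model_flagged).
    """
    positives = defaultdict(int)  # patients who truly have the condition
    detected = defaultdict(int)   # of those, how many the model flagged
    for group, has_condition, model_flagged in records:
        if has_condition:
            positives[group] += 1
            detected[group] += int(model_flagged)
    return {group: detected[group] / positives[group] for group in positives}


# Made-up validation records, for illustration only
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", False, True),
]
rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

A large gap between groups is a signal to investigate training data coverage before the tool is used clinically.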

Cost Analysis and Return on Investment

AI implementation costs vary significantly based on scope and complexity, with typical hospital projects ranging from $500,000 to $5 million for comprehensive deployments. Infrastructure costs including servers, storage, and networking typically represent 30–40% of total project budgets, while software licensing and professional services account for the remainder.

Expected returns include reduced diagnostic errors, improved operational efficiency, decreased length of stay, and enhanced staff productivity. Quantifiable benefits often justify implementation costs within 18–24 months, with long-term savings continuing to accumulate as AI capabilities expand and mature.
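The budget split and payback window above translate into concrete targets once a project cost is chosen. The sketch below uses a hypothetical $2 million project; the project size and function names are assumptions, while the 30–40% infrastructure share and the 18–24 month payback window come from the figures above.

```python
def infrastructure_range(total_cost: float) -> tuple:
    """Infrastructure share of the budget at the 30% and 40% bounds."""
    return 0.30 * total_cost, 0.40 * total_cost


def monthly_benefit_for_payback(total_cost: float, months: int) -> float:
    """Average monthly benefit needed to recover total_cost in `months`."""
    return total_cost / months


project = 2_000_000  # hypothetical mid-range project cost in dollars
low, high = infrastructure_range(project)
print(f"Infrastructure: ${low:,.0f} to ${high:,.0f}")
for months in (18, 24):
    needed = monthly_benefit_for_payback(project, months)
    print(f"{months}-month payback needs about ${needed:,.0f} per month")
```

This is a simple payback calculation that ignores ongoing maintenance and the time value of money, so it gives a lower bound on the benefit a project must deliver.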


Frequently Asked Questions (FAQs)

1. How long does it typically take to implement AI systems in a hospital setting?

Complete AI implementation usually takes 12–18 months from initial planning to full deployment. This timeline includes infrastructure preparation, software configuration, staff training, and gradual rollout across departments. Smaller implementations focusing on specific use cases may complete in 6–9 months, while comprehensive enterprise-wide deployments can extend to 24 months or longer.

2. What are the minimum technical requirements for AI implementation in healthcare?

Minimum requirements include high-speed network connectivity (1 Gbps for imaging applications), dedicated server infrastructure with GPU support, secure data storage systems with 99.99% uptime, and integration capabilities with existing EHR and PACS systems. Most implementations require initial storage capacity of 10–50 TB, with medical imaging workloads at the upper end, and server-grade hardware with at least 64 GB RAM per application.

3. How do hospitals ensure AI systems comply with HIPAA and other healthcare regulations?

Compliance requires comprehensive security measures including end-to-end encryption, access controls, audit logging, and regular security assessments. AI vendors must provide HIPAA-compliant hosting environments with signed Business Associate Agreements. Hospitals must implement data governance policies, staff training programs, and incident response procedures specifically addressing AI system risks and regulatory requirements.

4. What types of clinical staff training are necessary for AI implementation?

Training programs must address both technical system usage and clinical decision-making with AI assistance. Physicians require education on interpreting AI recommendations, understanding algorithm limitations, and maintaining clinical judgment. Nurses need training on workflow integration and alert management. IT staff require technical training on system administration, troubleshooting, and performance monitoring. Training typically requires 20–40 hours per staff member depending on their role and AI application complexity.

5. How accurate are AI diagnostic tools compared to human physicians?

AI diagnostic accuracy varies by application and clinical context. In medical imaging, AI systems often achieve accuracy rates of 85–95%, sometimes exceeding human radiologist performance for specific conditions like diabetic retinopathy or skin cancer detection. However, AI tools are designed to augment rather than replace clinical judgment, providing additional insights that physicians can incorporate into their diagnostic decision-making process.

6. What ongoing maintenance and support do AI systems require?

AI systems require continuous monitoring of performance metrics, regular algorithm updates, periodic retraining with new data, and ongoing technical support. Hospitals typically allocate 15–25% of initial implementation costs annually for maintenance, including software updates, hardware refresh cycles, staff training, and vendor support services. Internal IT teams need specialized training to manage AI infrastructure and troubleshoot common issues.

7. How do AI systems integrate with existing hospital IT infrastructure?

Modern AI platforms use standard healthcare interoperability protocols including HL7 FHIR and DICOM to integrate with EHR systems, PACS, and laboratory information systems. Integration typically requires API development, data mapping, and workflow configuration to ensure seamless information exchange. Most implementations use middleware solutions to manage data flow between AI systems and existing hospital applications.

8. What are the potential risks and how can hospitals mitigate them?

Primary risks include algorithm bias, system failures, data security breaches, and over-reliance on AI recommendations. Mitigation strategies include diverse training data sets, robust testing procedures, comprehensive backup systems, cybersecurity measures, and continuous staff education on AI limitations. Hospitals should maintain clinical oversight protocols ensuring human physicians retain ultimate decision-making authority.

9. How do hospitals measure ROI and success of AI implementations?

Success metrics include clinical outcomes (reduced diagnostic errors, improved patient safety), operational efficiency (decreased processing time, staff productivity gains), and financial impact (cost savings, revenue enhancement). Hospitals typically track key performance indicators including diagnostic accuracy rates, workflow efficiency improvements, patient satisfaction scores, and quantifiable cost reductions. ROI calculations should include both direct cost savings and indirect benefits like improved staff satisfaction and reduced liability risks.

10. Can smaller hospitals implement AI, or is it only feasible for large health systems?

AI implementation is increasingly accessible to hospitals of all sizes through cloud-based solutions, software-as-a-service models, and vendor partnerships. Smaller hospitals can focus on specific high-impact applications like radiology AI or clinical decision support rather than comprehensive enterprise deployments. Cloud platforms reduce infrastructure requirements and upfront costs, making AI adoption feasible for hospitals with 100–300 beds. Many vendors offer scaled pricing models and implementation support specifically designed for smaller healthcare organizations.


Conclusion: Preparing for the Future of Healthcare

AI implementation in hospitals represents a strategic investment in improved patient care, operational efficiency, and competitive positioning. Success requires careful planning, adequate resources, and sustained commitment from leadership and clinical staff. Hospitals that approach AI implementation systematically, with proper attention to technical requirements, regulatory compliance, and change management, will realize significant benefits in patient outcomes and organizational performance.

The healthcare industry’s AI adoption will continue accelerating, making early implementation a competitive advantage. Hospitals beginning their AI journey today position themselves to leverage increasingly sophisticated technologies as they become available, building internal capabilities and organizational readiness for the future of healthcare delivery.

As AI technologies mature and regulatory frameworks evolve, hospitals with established AI programs will be better positioned to adapt and innovate. The investment in AI implementation today creates a foundation for continuous improvement and technological advancement that will benefit patients, staff, and healthcare organizations for years to come.


Text

What Is a GPU VPS? Why Should Businesses Use a GPU VPS for Heavy Workloads?

As digital technology advances, demand for big data processing, AI, machine learning, video editing, and 3D rendering keeps growing. These tasks need powerful hardware, above all the power of a GPU (Graphics Processing Unit). That is why the GPU VPS is becoming the solution of choice for more and more individuals, organizations, and businesses. So what is a GPU VPS, and should you use one? This article answers both questions in detail.

What is a GPU VPS?

A GPU VPS (Virtual Private Server with GPU) is a virtual server equipped with a dedicated discrete GPU. Unlike a regular VPS, which relies on the CPU alone to process data, a GPU VPS adds the processing power of high-end GPUs such as the NVIDIA Tesla, RTX, and Quadro lines, the A100, the V100, and others.

Thanks to the GPU, this type of VPS can take on compute-intensive work such as:

Training AI and deep learning models

Rendering video and 3D images

Running demanding graphics and design software (Blender, Maya, AutoCAD, Adobe Suite, and so on)

Processing big data and machine learning workloads

Cryptocurrency mining

Streaming or playing demanding games remotely (cloud gaming)

Benefits of using a GPU VPS

Superior performance

With a GPU behind it, performance on complex tasks improves markedly. This matters most for businesses that process large volumes of data or work in content creation.

Lower hardware investment

Buying a workstation with a powerful GPU such as an RTX 4090 or an A100 is expensive, costing anywhere from tens to hundreds of millions of Vietnamese dong. Renting a GPU VPS by the hour or by the month is far more flexible while still delivering the performance.

Flexible resource scaling

Users can upgrade the GPU, CPU, RAM, and disk configuration with a few simple actions in the VPS management interface, which saves time and effort compared with upgrading physical hardware.

Convenient remote access

You can reach a GPU VPS from anywhere with an internet connection. This is a major advantage for freelancers, software development teams, and content creators who work remotely.

Who should use a GPU VPS?

Individuals and teams working with AI, machine learning, and deep learning: fast model training and data processing.

Software developers and tech startups: building and testing AI applications, and processing large images or videos.

Graphic designers and 3D video editors: rendering high-quality video and images quickly.

Gamers and professional streamers: streaming or playing high-spec games remotely on a GPU VPS.

Businesses that process large volumes of data: big data and in-depth data analysis.

How to choose the right GPU VPS

When choosing a GPU VPS service, keep the following factors in mind:

GPU type: make sure the service offers a modern GPU that fits your needs (for example, a Tesla V100 or A100 for AI; an RTX 3090 or 4090 for rendering).

Technical specifications: check the CPU, RAM, SSD capacity, bandwidth, and so on.

Server location: if you need low-latency access, choose a VPS hosted in Vietnam or a nearby country.

Stability and technical support: prefer a reputable provider with 24/7 support and an SLA that guarantees uptime.

Rental cost: compare several providers to find a plan that fits your budget.

Some reputable GPU VPS providers

Many providers inside and outside Vietnam now offer quality GPU VPS services. Some notable names:

AWS EC2 GPU Instances (Amazon)

Google Cloud GPU

Microsoft Azure GPU

Vultr Cloud GPU

Bizfly Cloud (Vietnam)

Viettel IDC, FPT Cloud, AZDIGI: Vietnamese providers that offer GPU VPS at reasonable prices.

Conclusion

The GPU VPS is becoming the preferred solution for anyone who needs strong graphics or compute power without investing in expensive hardware. Whether you are an individual doing creative work or a technology business, a GPU VPS helps cut costs, improve performance, and keep your work flexible.

If you are unsure which GPU VPS to choose, start by defining your needs clearly, then compare reputable providers to find the best fit. Used properly, a GPU VPS is a stepping stone to greater productivity in today's rapidly digitizing world.

More information: https://vndata.vn/vps-gpu/


Text

Nvidia Earnings Offset Weakness Amid Falling US GDP

US stocks ended higher on Thursday as President Trump's plans for sweeping trade tariffs saw more twists and turns, while tech issues got a boost from Nvidia’s earnings.

Trump’s proposed reciprocal tariffs against major US trading partners were blocked by a ruling from the Court of International Trade late Wednesday, which said that the president exceeded his authority in imposing tariffs.

The decision raised optimism that Trump’s tariff agenda, which markets fear could greatly disrupt growth, could be blocked.

However, following the ruling, the Trump administration issued a notice of appeal, and in a brief order late on Thursday, the US Court of Appeals for the Federal Circuit said it was pausing Wednesday’s decision from the Court of International Trade until it can hear further legal arguments.

Meanwhile, some weak economic data also curbed the market’s advance. US gross domestic product (GDP) declined 0.2% on an annualised basis in the January-March quarter in a second estimate. The economy was initially estimated to have contracted at a 0.3% pace, having grown at a 2.4% rate in the fourth quarter.
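Annualised growth rates can look larger than the quarter-on-quarter moves behind them. Assuming the usual convention that an annualised rate compounds the quarterly change over four quarters, the sketch below recovers the implied quarterly changes; the function name is illustrative.

```python
def quarterly_from_annualised(annualised_pct: float) -> float:
    """Quarter-on-quarter % change implied by an annualised % rate."""
    growth_factor = 1 + annualised_pct / 100
    return (growth_factor ** 0.25 - 1) * 100


figures = (("Q1 second estimate", -0.2), ("Q1 first estimate", -0.3), ("Q4", 2.4))
for label, rate in figures:
    quarterly = quarterly_from_annualised(rate)
    print(f"{label}: {rate:+.1f}% annualised is about {quarterly:+.3f}% q/q")
```

On that convention, a 0.2% annualised decline corresponds to a quarterly fall of roughly 0.05%.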

US initial jobless claims also rose by 14,000 to a seasonally adjusted 240,000 for the week ended May 24, higher than forecast, indicating a softening in labour market conditions.

At the stock market close in New York, the blue-chip Dow Jones Industrial Average (DJIA) was up 0.3% at 42,215, while the broader S&P 500 index gained 0.4% to 5,912, and the tech-laden Nasdaq Composite added 0.4% to 19,175.

(Chart: SPX500Roll, H4 timeframe)

The major US indices are on track to close the week, and the month, higher. Before Thursday’s session, the S&P 500 and DJIA were up 1.5% and 1.2% on the week, respectively, while the Nasdaq Composite had rallied nearly 2.0%. Over the month, the S&P 500 has gained 5.7%, the DJIA has added 3.5%, and the Nasdaq has jumped 9.5%.

Among the tech majors, Nvidia gained 3.2% after the chipmaker reported stronger-than-expected first-quarter earnings after the market close on Wednesday, indicating that demand for AI chips and data center servers remained robust.

Other chipmakers found support from the Nvidia news, with Broadcom gaining 1.1%.

Elsewhere, Tesla rose 0.4% after CEO Elon Musk confirmed he was leaving his role in the Trump administration, ensuring he can focus his energies on running the electric vehicles giant.

But computer and printer maker HP dropped 8.3% after it cut its annual guidance, citing higher-than-expected tariff costs and moderating hardware demand.

Away from tech, Best Buy shed 7.3% after the electricals retailer slashed its outlook for fiscal 2026 to reflect the impact of tariffs, offsetting its better-than-expected results for the first quarter.

Among commodities, oil prices reversed early gains, weighed down by the US growth data, which showed that the economy of the world’s largest oil consumer contracted in the first quarter.

(Chart: USOILRoll, H4 timeframe)

US WTI crude lost 1.5% to $60.91 a barrel, and UK Brent crude dropped 1.8% to $62.04 a barrel.

Oil had jumped initially as the US court’s ruling on Trump tariffs plans boosted risk appetite and American Petroleum Institute data showed US oil inventories shrank by 4.24 million barrels in the latest week, in contrast to expectations for a build of 1 million barrels.

Disclaimer:

The information contained in this market commentary is of general nature only and does not take into account your objectives, financial situation or needs. You are strongly recommended to seek independent financial advice before making any investment decisions.

Trading margin forex and CFDs carries a high level of risk and may not be suitable for all investors. Investors could experience losses in excess of total deposits. You do not have ownership of the underlying assets. AC Capital Market (V) Ltd is the product issuer and distributor. Please read and consider our Product Disclosure Statement and Terms and Conditions, and fully understand the risks involved before deciding to acquire any of the financial products provided by us.

The content of this market commentary is owned by AC Capital Market (V) Ltd. Any illegal reproduction of this content will result in immediate legal action.


Text

NVIDIA T4 GPU Price, Memory And Gaming Performance

NVIDIA T4 GPU

The NVIDIA T4 is a versatile, energy-efficient GPU aimed mainly at AI inference and data centre deployments. It accelerates cloud services, virtual desktops, video transcoding, and deep learning models, and is not intended as a gaming or workstation card. Businesses choose the T4, part of NVIDIA’s Turing architecture family, for its compact size and efficiency.

Architecture

Like the GeForce RTX 20 series, the NVIDIA T4 GPU uses the Turing architecture, here tuned for data centre inference rather than training.

TU104-based Turing GPU.

TSMC 12 nm FinFET process node.

2,560 CUDA cores.

320 Tensor Cores for mixed-precision AI workloads.

40 RT Cores.

Single-slot, low-profile form factor.

PCIe Gen3 x16 interface.

Tensor Cores are the NVIDIA T4 GPU’s standout feature. They enable high-throughput matrix computations, making the GPU well suited to AI applications such as recommendation systems, object detection, image classification, and NLP inference.

Features

The enterprise-grade NVIDIA T4 GPU is ideal for cloud AI services:

Performance and accuracy are balanced by FP32, FP16, INT8, and INT4 precision levels.

NVIDIA TensorRT optimisation for AI inference speed.

Dedicated NVENC and NVDEC hardware engines handle video encoding and decoding; NVIDIA cites up to 38 full-HD streams for decoding.

NVIDIA GRID-ready for virtual desktops and workstations.

It fits most workstations and servers thanks to its low-profile form factor and low power draw.

AI/Inference Performance

The NVIDIA T4 GPU is well suited to AI inference but not to training large neural networks. It provides:

130 TOPS of INT8 performance.

65 FP16 TFLOPS.

8.1 FP32 TFLOPS.
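The gap between these peak figures is the reason reduced precision matters for inference. The sketch below computes how far each reduced-precision peak sits above the FP32 peak, using the round numbers quoted in this post (130 TOPS for INT8); the dictionary and function names are illustrative, and real-world speedups depend on the model and software stack.

```python
# Peak throughput figures quoted for the T4, in tera-operations per second
PEAK_TERA_OPS = {"INT8": 130.0, "FP16": 65.0, "FP32": 8.1}


def speedup_over_fp32(precision: str) -> float:
    """Ratio of a precision's peak rate to the FP32 peak rate."""
    return PEAK_TERA_OPS[precision] / PEAK_TERA_OPS["FP32"]


for precision in ("FP16", "INT8"):
    print(f"{precision}: about {speedup_over_fp32(precision):.0f}x the FP32 peak")
```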

It can process AI tasks in real time and at scale, which makes it a good fit for applications such as:

Chatbot and NLP inference (BERT, GPT-style models).

Video analytics.

Speech and image recognition.

Recommendation systems of the kind used by services such as YouTube and Netflix.

In hyperscale deployments, the NVIDIA T4 GPU offers strong performance per watt and per dollar, and cloud providers including Google Cloud, AWS, and Microsoft Azure offer T4-based instances.

Video Game Performance

Though the NVIDIA T4 GPU is not designed for gaming, developers and enthusiasts have tested it out of curiosity. The lack of display outputs limits its gaming potential. Even so:

Some modern games run at 1080p on modest settings.

Its FP32 throughput is in the same range as the GTX 1070 and GTX 1660 Super.

Its data centre drivers are not optimised for games that use Vulkan or DirectX 12 Ultimate.

Memory, bandwidth

Another important part of the T4 is its memory:

16 GB GDDR6 memory.

320 GB/s memory bandwidth.

Memory interface: 256-bit.
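The bandwidth and bus-width figures are linked by simple arithmetic: total bandwidth is the bus width in bytes multiplied by the per-pin data rate. Reading the 256-bit figure as the memory bus width, the sketch below derives the per-pin rate implied by 320 GB/s; the function names are illustrative.

```python
def per_pin_rate_gbps(total_gb_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by total bandwidth and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return total_gb_per_s / bytes_per_transfer


def total_bandwidth_gb_per_s(rate_gbps: float, bus_width_bits: int) -> float:
    """Total memory bandwidth (GB/s) from per-pin rate and bus width."""
    return rate_gbps * bus_width_bits / 8


rate = per_pin_rate_gbps(320, 256)
print(rate, total_bandwidth_gb_per_s(rate, 256))
```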

With 16 GB of memory, the NVIDIA T4 GPU can handle large video workloads and sizeable AI models. GDDR6 strikes a balance between cost and speed.

Efficiency and Power

The Tesla T4 excels at power efficiency:

TDP 70 watts.

Passive cooling that relies on server chassis airflow.

Powered entirely from the PCIe slot; no auxiliary power connectors.

Its low power draw makes it useful in dense deployments. Installing several T4s in one server chassis can avoid the power and thermal challenges that come with larger GPUs such as the A100 or V100.

Advantages

Compact form factor with excellent AI inference performance.

Passive cooling and 70W TDP simplify infrastructure integration.

Comprehensive AWS, Azure, and Google Cloud support.

Its 16 GB GDDR6 RAM can handle most inference tasks.

Multi-precision support optimises accuracy and performance.

Compliant with NVIDIA GRID and vGPU.

Hardware video transcoding with NVENC and NVDEC makes it useful in media pipelines.


Disadvantages

FP32/FP64 throughput is too low for large deep learning model training.

It lacks display outputs, making it unsuitable for gaming or content creation.

PCIe Gen3 only (no 4.0 or 5.0 connectivity).

In the absence of active cooling, server airflow is crucial.

Limited availability for individual users; frequently sold in bulk or through integrators.

One last thought

The NVIDIA T4 GPU is compact, power-efficient, and effective for AI-driven data centres. Virtualisation, video streaming, and machine learning inference are its strengths. Thanks to its low power consumption, high inference throughput, and wide compatibility, it remains a preferred business GPU for scalable AI services.

It is not intended for content production, gaming, or general-purpose computing. Its scalability and affordability make the NVIDIA T4 GPU a strong choice for recommendation systems, chatbots, and video analytics. Developers and individual users may have more flexibility with consumer RTX cards or the RTX A4000.

#NVIDIAT4GPU#T4GPU#NVIDIAT4price#NVIDIAGPUT4#T4NVIDIA#NVIDIAT4tesla#technology#technews#technologynews#news#govindhtech


Text

How Top NASDAQ Stocks Reflect Industry Transformation

The Nasdaq Composite Index has shown consistent strength in 2025, supported by innovation in cloud computing, artificial intelligence, and semiconductors. As technology firms continue to lead digital transformation, several companies listed on the NASDAQ have emerged as standout names. These Top NASDAQ Stocks have gained attention for their resilience, adaptability, and strategic growth, shaping the broader index's momentum.

Tech-Centric Momentum in the Nasdaq Composite

The Nasdaq Composite remains heavily weighted toward technology companies. The recent market environment has reflected continued gains in enterprise IT services, automation, and AI infrastructure. Digital adoption across industries has helped several tech-focused firms deliver consistent performance. The index’s movement signals confidence in software platforms, chipmakers, and digital service providers that power today’s business infrastructure.

Advancements in AI integration and machine learning applications have played a critical role in shaping product strategies. Companies with exposure to scalable platforms and automation tools continue to benefit from structural trends, supporting their strong presence among Top NASDAQ Stocks.

Microsoft and Alphabet Maintain Digital Leadership

Microsoft has demonstrated consistent growth across its cloud and productivity segments. Its investments in AI-driven products and enterprise software services have enabled stronger digital transitions for global businesses. Meanwhile, Alphabet continues to strengthen its position through its cloud services and advertising platforms, backed by ongoing development in machine learning and search technology.

Both companies reflect how demand for scalable cloud ecosystems and AI-enabled software continues to impact market performance. Their broad service portfolios, combined with innovation in automation and data analytics, reinforce their role among the leading contributors to the Nasdaq Composite.

Nvidia and AMD Boost Chip Innovation

Semiconductors remain at the heart of the digital economy, and companies like Nvidia and AMD have played significant roles in advancing this segment. Nvidia’s GPU technology supports AI computing, data centers, and high-performance processing, driving broad applications across multiple sectors. AMD, on the other hand, has executed well on its product roadmap, strengthening its position in personal computing and enterprise server markets.

These companies have benefited from the ongoing demand for faster, more efficient processors. Their product innovation and relevance across gaming, cloud services, and edge computing make them key components among Top NASDAQ Stocks.

Amazon and Meta Drive Digital Growth

Amazon continues to scale its influence in both retail and cloud computing. The company’s focus on e-commerce optimization, logistics automation, and AWS growth supports its diverse revenue structure. At the same time, Meta’s platforms have maintained engagement and advertising growth, driven by AI integration and infrastructure scaling.

Both companies highlight how digital ecosystems and platform-based business models continue to shape consumer and enterprise behavior. Their strategic focus on personalization, content delivery, and connectivity has reinforced their market positions in 2025.

Apple and Tesla Drive Consumer Innovation

Apple maintains a strong presence in the NASDAQ through its ecosystem of consumer devices and services. The company’s ability to blend hardware innovation with recurring revenue from digital services has helped deliver stable growth. Wearables, smartphones, and content platforms all contribute to Apple’s long-term value proposition.

Tesla represents a convergence between automotive innovation and smart energy solutions. Its advancements in electric vehicles and autonomous technology reflect changing preferences in mobility and clean energy. Tesla’s inclusion among Top NASDAQ Stocks signals how diversified tech applications extend beyond traditional software and hardware sectors.

Trends Behind Top NASDAQ Stocks

The sustained momentum in Top NASDAQ Stocks is backed by broader themes across technology and enterprise spending. Ongoing adoption of AI solutions, increased reliance on cloud infrastructure, and digital-first consumer engagement have created a favorable environment for these companies.

Macroeconomic conditions, earnings performance, and global demand for smart devices continue to shape investor sentiment. Additionally, trends in data privacy, supply chain efficiency, and scalable platforms have made resilience a critical factor for long-term success among Nasdaq-listed companies.

The Nasdaq Composite Index remains a key barometer for digital innovation and technology-driven performance in 2025. Leading companies across semiconductors, cloud computing, digital platforms, and consumer tech have demonstrated their ability to navigate change and maintain growth. These Top NASDAQ Stocks not only reflect current trends but also shape the broader dynamics of modern economic activity.

As industries evolve and adopt smarter technologies, the firms driving the Nasdaq Composite are well-positioned to remain central to ongoing transformation. Their performance continues to offer insight into the direction of innovation-led growth and the importance of adaptability in the tech sector.


Text

How to Install and Configure an Nvidia Tesla GPU on Windows

Nvidia Tesla GPUs are designed for very high compute performance and are widely used in fields such as artificial intelligence, machine learning, and professional graphics applications. Whether you are setting up a development workstation, a compute server, or a Windows PC, knowing how to install an Nvidia Tesla GPU is the first step toward getting the most out of it.

Ready to install an Nvidia Tesla GPU on Windows? Follow our complete guide!

In this step-by-step guide, we show how to install an Nvidia Tesla GPU and then configure it on a Windows system. From choosing the hardware to the final software configuration, we cover each detail so that the setup is accessible even to non-experts. By following this guide, you should end up with a fully working, optimized system.

Why install a Tesla on Windows?

Linux is the preferred platform for most HPC/AI workloads that use Tesla GPUs. However, you may want to install an Nvidia Tesla GPU on Windows for several reasons:

Software compatibility: Some professional software or specific development frameworks may run only on Windows, or run best there.

Integrated development environment: If your main workflow and development environment are Windows-based, keeping the whole infrastructure on one platform can simplify management.

Testing and validation: You may need to test specific applications or models on Windows before deploying them at scale on Linux servers.

Existing systems: You may have a powerful workstation, a Windows server, or simply a desktop PC to which you want to add Tesla compute capability without migrating the whole operating system.

Whatever the reason, installing an Nvidia Tesla GPU on Windows requires attention to detail, especially regarding drivers and hardware requirements.

Preparing the System and Physical Installation

Before physically inserting your Tesla GPU into the computer, take the time to prepare the system and verify the requirements. Skipping these steps can lead to incompatibilities, system instability or, in the worst case, hardware damage. Proper preparation is the key to a trouble-free Nvidia Tesla installation.

Section 1.1: System Requirements and Compatibility

Tesla cards differ from consumer graphics cards such as GeForce. They are designed for intensive compute and have specific requirements.

Power supply (PSU): This is perhaps the most critical requirement. Many Tesla GPUs draw a lot of power, often as much as or more than a high-end GeForce. Check the power specifications of your specific Tesla card (e.g., Tesla P100, V100, A100, K80). You will need a power supply with sufficient total wattage (often 850 W, 1000 W, or more for high-end models) and, above all, the required auxiliary power connectors (e.g., 6-pin or 8-pin PCIe, or the 8-pin EPS-style connectors that some models use). Make sure your PSU has enough connectors and that they are suitably distributed across the cables. An undersized PSU or inadequate cabling can cause instability, throttling, or a system that will not boot.

Motherboard: Your motherboard must have an available PCI Express (PCIe) slot. Tesla cards typically require a PCIe 3.0 x16 slot (although they can sometimes work in an x8 slot with reduced performance, if the card explicitly supports it). Make sure the slot is free and that there is enough physical space around it. Also check that the motherboard's BIOS/UEFI is updated to the latest version to ensure the best hardware compatibility.

Computer case: Tesla cards are often two or even three slots thick and can be quite long. Check that your case has enough physical room for the card without obstructions. Many Tesla cards are designed for server environments with optimized airflow; if you install one in a standard desktop case, make sure ventilation is adequate, because these cards run very hot under load.

CPU and RAM: Although the Tesla handles the main computation, an adequate processor and amount of RAM are needed to run the operating system and feed data to the GPU efficiently. Specific requirements depend on the workload, but a modern processor and at least 16 GB of RAM are usually a good starting point.

Windows operating system: You need a supported version of Windows. Tesla cards are generally supported on Windows 10 and 11 Pro/Enterprise/Workstation or Windows Server (2016, 2019, 2022). Check Nvidia's official documentation for your specific Tesla model to confirm compatibility with your version of Windows. It is strongly recommended that Windows be fully updated before you proceed.

Checking these requirements carefully before you start the installation will save you a great deal of potential frustration.

Section 1.2: Preparing the System Software

Once you have verified hardware compatibility, it is time to prepare the operating system for the new GPU.

Backup (optional but recommended): Although installing hardware is generally safe, it is always good practice to back up important data before making significant changes to the system.

Windows updates: Make sure Windows is fully updated through Windows Update. This ensures you have the latest security patches and the most current general hardware support.

Uninstalling existing GPU drivers: This is a CRITICAL step. If you have another GPU installed (Nvidia, AMD, or integrated Intel) and are installing the Tesla as an additional compute GPU or as the only GPU (even though many Tesla cards have no video outputs), it is essential to completely remove the existing graphics drivers. Multiple or incompatible drivers are one of the most common causes of problems after installing a new card. The best way to do this is with Display Driver Uninstaller (DDU), a free third-party utility widely recommended by the hardware community. Download DDU and boot Windows into Safe Mode. Run DDU and select "Clean and restart" for your previous GPU vendor's drivers. This thoroughly removes all files and registry keys associated with the old drivers. Once the system has restarted (it returns to normal mode with a basic Microsoft display driver), you are ready for the physical installation.

Section 1.3: Physical Installation of the Tesla GPU

Now it is time to insert the Tesla card into your system. Carry out these steps with care.

Complete shutdown: Shut down the computer completely and unplug it from the wall outlet. Press the computer's power button to discharge any residual electricity.

Opening the case: Remove the side panel of the case to access the interior.

Antistatic precautions: To protect components from static electricity, wear an antistatic wrist strap connected to an unpainted metal part of the case, or regularly touch a grounded metal surface.

Locate the PCIe slot: Identify the PCIe x16 slot (usually the longest on the motherboard) where you intend to install the Tesla card. If you have several x16 slots, consult the motherboard manual to find out which configuration offers the most bandwidth (often the first or topmost slot). Remove the rear slot covers on the case that correspond to the slots the card will occupy (often two or three).

Inserting the card: Carefully take the Tesla card out of its packaging. Hold it by the edges and avoid touching the gold contacts or the components on the PCB. Align the card's contacts with the PCIe slot on the motherboard. Apply even pressure along the top edge of the card until you feel or see that it is fully seated in the slot. There may be a small "click" or the slot's retention latch may close.

Connecting power: This is another critical step. Most Tesla cards require auxiliary power through connectors on the top or side edge of the card. Firmly connect all the required power cables from your power supply to the matching connectors on the Tesla card. Make sure the connectors are pushed all the way in. Forgetting to connect power is a common mistake that prevents the card from working.

Securing the card: Fasten the card's bracket to the case with the appropriate screws, at the positions where you removed the slot covers earlier. This prevents the card from sagging or working loose.

Closing the case: Once the card is firmly installed and powered, you can refit the side panel.

You have completed the physical phase. Now it is time to tackle the software side to finish installing the Nvidia Tesla GPU.

Software Installation and Configuration

With the Tesla GPU physically installed in your system, the next step is to install the correct drivers and configure the operating system to recognize and use it. This step is crucial for actually using the card for accelerated computing, and a correct configuration is vital for unlocking the GPU's full potential.

Section 2.1: Booting the System and Installing the Tesla Drivers

After physically installing the GPU, turn the computer back on. Windows should boot and may detect new hardware, installing a basic display driver (such as "Microsoft Basic Display Adapter"). This is normal. The priority now is to download and install the correct Nvidia drivers for your Tesla card.

Downloading the correct drivers: Do NOT use the standard GeForce drivers available through GeForce Experience or the "Gaming" driver section of the Nvidia site. Tesla cards require drivers from the "Nvidia RTX Enterprise Production Branch" or "Data Center Driver" series. Go to Nvidia's official driver support site (https://www.nvidia.com/Download/index.aspx).

In the "Manual Driver Search" section, select the following parameters:

Select Product Category: Look for a category such as "Tesla", "Data Center / HPC", or "RTX / Quadro" (depending on the model and production year of your Tesla card). Recent models such as the A and H series may fall under "Data Center / HPC".

Select Product Series: Select the correct series for your card (e.g., "Tesla V100 Series", "A100 Series").

Product: Choose the exact model of your card (e.g., "Tesla V100", "A100 80GB PCIe").

Operating System: Select your version of Windows (e.g., "Windows 10 64-bit", "Windows Server 2019 64-bit").

Download Type: Choose "Production Branch" or "Data Center Driver". Avoid "New Feature Branch" versions unless you specifically need the latest features and are not concerned about stability. The "Production Branch" is generally the most stable.

Language: Select your preferred language.

Click "Search" or "Find". You will be shown the latest compatible driver. Download the executable file.

Running the driver installer: Locate the file you downloaded and run it as administrator. The installer will ask you to accept the license terms. You may be offered a choice between an "Express" and a "Custom" installation. For the cleanest and most stable result, especially after using DDU, choose "Custom". On the "Custom Install Options" screen, make sure the graphics driver and the necessary components, such as CUDA (Compute Unified Device Architecture) support, are selected. It is highly recommended to select "Perform a clean installation". Even if you used DDU, this step ensures that no files from a previous Nvidia driver interfere. Proceed with the installation. The screen may go black or flicker several times during the process; this is normal. When the installation finishes, the installer will ask you to restart the computer. Do so immediately, because the restart is essential to finalize the driver installation.

At this point you have completed the driver installation. Your Windows system should now recognize the GPU and be able to interact with it.

Section 2.2: Verification and Initial Configuration

After the restart, verify that the drivers were installed correctly and that the system recognizes your Tesla GPU. This phase is fundamental to the configuration and confirms that everything works as expected.

Device Manager: Open Device Manager in Windows (search for "Device Manager" in the Start menu). Expand the "Display adapters" section. You should see your Nvidia Tesla GPU listed without any yellow exclamation mark or error indication. If you do, Windows has recognized it and the basic drivers are loaded.

Nvidia Control Panel: An Nvidia Control Panel may or may not be installed with the Tesla drivers. If it is present, open it. The options will probably be limited compared with the Control Panel for GeForce cards and focused more on compute settings than on graphics. Its presence and functionality further confirm the driver installation.

Using nvidia-smi: This is the most important command-line tool for interacting with Tesla GPUs and checking that they are operational. Open Command Prompt (CMD) or PowerShell, type nvidia-smi, and press Enter. If the installation succeeded, you will see a table with information about your Tesla GPU: the model, the driver version, the supported CUDA version, memory usage, GPU utilization, and temperature. This command is the definitive proof that the system has recognized your Tesla GPU correctly and that the drivers are working. You can also use nvidia-smi later to monitor the state of the GPU during workloads.

Installing the CUDA Toolkit and cuDNN (if needed): Most high-performance computing applications that use Tesla GPUs (such as AI/ML frameworks and scientific software) require the NVIDIA CUDA Toolkit and, often, the cuDNN library (CUDA Deep Neural Network library). The CUDA Toolkit provides the development environment for programming the GPU. Download it from the Nvidia developer website. Make sure you download a Toolkit version compatible with the Tesla driver version you installed (the nvidia-smi table shows the CUDA version supported by the driver). cuDNN is a library optimized for the fundamental operations of deep neural networks. It must also be downloaded separately from the Nvidia developer site (this requires registering for the Nvidia Developer program). You need the cuDNN version that is compatible both with your CUDA Toolkit version and with the AI/ML framework you intend to use (e.g., TensorFlow, PyTorch). Installing the CUDA Toolkit and cuDNN involves running their installers and, sometimes, manually copying files into specific directories. Follow Nvidia's installation instructions for these components carefully. Installing these packages is an essential part of the configuration for many use cases.

Testing with applications: To confirm that your Tesla GPU is actually being used for computation, try running an application that uses the GPU. If you installed the CUDA Toolkit, you can compile and run one of the provided samples to verify CUDA functionality. If you are using an AI/ML framework, run a small model to see whether the GPU is detected and used.
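The nvidia-smi check described in this guide can also be scripted. The following is a minimal Python sketch, assuming nvidia-smi is on the PATH after the driver installation; it uses the tool's `--query-gpu` and `--format=csv,noheader` options to read the model, driver version, memory, utilization, and temperature for each GPU. The helper names are illustrative and are not part of any Nvidia tooling.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi, in the order they will be returned.
QUERY_FIELDS = ["name", "driver_version", "memory.total", "memory.used",
                "utilization.gpu", "temperature.gpu"]


def parse_nvidia_smi_csv(output: str) -> list[dict]:
    """Turn header-less nvidia-smi CSV output into one dict per GPU."""
    rows = csv.reader(io.StringIO(output), skipinitialspace=True)
    return [dict(zip(QUERY_FIELDS, (cell.strip() for cell in row)))
            for row in rows if row]


def query_gpus() -> list[dict]:
    """Run nvidia-smi; raises if the tool is missing or exits with an error."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi_csv(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            print(gpu)
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        # Usually means the driver is not installed or not on the PATH.
        print(f"nvidia-smi did not run successfully: {exc}")
```

Running the script periodically gives a simple way to log GPU state during workloads, as an alternative to reading the nvidia-smi table by hand.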


Text

Nvidia GPU Servers vs. Traditional Servers: Which One Is Right for Your Business?

In today’s rapidly evolving tech landscape, businesses must choose the right server solutions to manage their workloads efficiently. One of the most common questions faced by companies is whether to invest in Nvidia GPU Servers or stick with traditional servers.


Text

WINDOWS GPU SERVER BY CLOUDMINISTER TECHNOLOGIES

The Best High-Performance Computing Solution: GPU Windows Server

1. Overview of Windows Server with GPU

GPU-accelerated Windows servers are now essential as companies and sectors require greater processing power for AI, machine learning, gaming, and data analysis. GPU Windows Servers, in contrast to conventional CPU-based servers, make effective use of Graphics Processing Units (GPUs) to manage intricate, parallel computing workloads.

At CloudMinister Technologies, we provide cutting-edge GPU Windows Server solutions, ensuring faster performance, scalability, and reliability for businesses and professionals working with AI, deep learning, video processing, gaming, and more.

2. Why Opt for Windows Servers with GPUs?

GPU servers' exceptional capacity to handle massively parallel computations makes them indispensable for today's workloads. Businesses favor GPU-powered Windows servers over conventional CPU-based servers for the following reasons:

High-Speed Processing: With thousands of cores built to handle several tasks at once, GPUs are perfect for data analytics, simulations, and AI/ML applications.

Parallel Computing Capabilities: GPUs can work through highly parallel algorithms more quickly than CPUs, which are built around a much smaller number of cores.

Enhanced Graphics Performance: GPUs are ideal for 3D modeling, gaming, and video editing applications because they dramatically improve the rendering of high-resolution visuals.

Reduced Processing Time: By cutting down on processing time in computational simulations, data mining, and deep learning, GPU servers help businesses save a significant amount of time.

At CloudMinister Technologies, we provide high-performance GPU Windows servers that cater to the needs of businesses looking for speed, efficiency, and reliability.

3. The GPU Servers Offered by CloudMinister

Best-in-class GPU servers from CloudMinister Technologies are available with solutions specially designed to meet the demands of different industries. Among our products are:

Newest GPU Models: Known for their exceptional performance, we offer NVIDIA Tesla, RTX, Quadro, and A-series GPUs.

Custom Configurations: Select from a variety of setups that are enhanced for video processing, gaming, AI, and ML.

Flexible Pricing Plans: We provide reasonably priced, high-performing solutions to companies of all sizes through our pricing plans.

On-Demand Scalability: Adjust your GPU resources to suit the demands of your project, guaranteeing both cost effectiveness and performance enhancement.

Because our GPU Windows Servers are fully tuned, all critical applications can benefit from increased processing speed, high availability, and dependability.

4. Sectors That Benefit from GPU Windows Servers

CloudMinister Technologies' GPU Windows Servers serve several industries that need real-time data processing and high-performance computing:

1. Machine learning and artificial intelligence

Faster training of neural networks and deep learning models.

Supports frameworks such as PyTorch, Keras, and TensorFlow.

Well suited to predictive analytics, image recognition, and natural language processing.

2. Virtualization & Gaming

Enables fluid gameplay and real-time rendering.

Supports virtual desktop infrastructure (VDI) for game studios.

Delivers high frame rates and low latency for cloud gaming systems.

3. Rendering and Editing Videos

Speeds up the rendering and processing of 4K and 8K videos.

Well suited to programs like Blender, DaVinci Resolve, and Adobe Premiere Pro.

Supports live broadcasting and multiple streams.

4. Analytics and Data Science

Aids real-time processing of large datasets.

Uses AI-driven insights to improve Big Data analysis.

Cuts the time needed to compute financial models and stock market analyses.

5. Scientific Studies and Models

Helps with molecular modeling, genomic sequencing, and drug discovery.

Useful for astrophysical simulations and weather forecasting.

Supports extensive computational and mathematical models.

CloudMinister's GPU Windows Servers offer the processing power, efficiency, and scalability needed to support these sectors.

5. Benefits of CloudMinister's GPU Windows Servers

As one of the top suppliers of GPU-based Windows servers, CloudMinister Technologies provides organizations with strong and effective solutions. Here is what sets us apart:

Dedicated & Cloud GPU Solutions: Depending on your workload needs, select between cloud-based GPU instances or dedicated GPU servers.

Round-the-clock Expert Support & Monitoring: Our committed support staff provides round-the-clock help to guarantee seamless server functioning.

High Security & Reliability: We provide cutting-edge security features including firewalls, encrypted data transmission, and DDoS protection.

Smooth Cloud Integration: Our GPU servers integrate easily with private cloud environments and with public clouds such as Google Cloud, AWS, and Microsoft Azure.

Low Latency & High-Speed Connectivity: Take advantage of quick data transfers and little lag, perfect for real-time rendering, AI processing, and gaming.

With Windows GPU servers, businesses can scale their infrastructure, optimize performance, and maximize efficiency without compromising on quality.

6. How Do I Begin?

It's easy to get started with CloudMinister Technologies' GPU Windows Server:

1. Pick a Plan: Based on your requirements (AI, ML, gaming, or rendering), pick a GPU Windows server plan.

2. Customize Configuration: Choose the RAM, storage, and GPU model according to the needs of your project.

3. Fast Deployment: Our staff makes sure that everything is set up and deployed quickly so that your server is operational right away.

4. 24/7 Support: Take advantage of uninterrupted service with our knowledgeable support staff at all times.

5. Scale Anytime: Depending on your workload requirements, you can scale up or down while maintaining cost effectiveness.

Are you prepared to use Windows servers with GPUs to boost your applications? To fully realize the potential of high-performance computing, get in touch with CloudMinister Technologies right now!

For more, visit: www.cloudminister.com


Text

AI Infrastructure Companies - NVIDIA Corporation (US) and Advanced Micro Devices, Inc. (US) are the Key Players

The global AI infrastructure market is expected to be valued at USD 135.81 billion in 2024 and is projected to reach USD 394.46 billion by 2030, growing at a CAGR of 19.4% from 2024 to 2030. NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), SK HYNIX INC. (South Korea), SAMSUNG (South Korea), and Micron Technology, Inc. (US) are the major players in the AI infrastructure market. Market participants have diversified their offerings and expanded their global reach through strategic growth approaches such as new product launches, collaborations, alliances, and partnerships.

For instance, in April 2024, SK HYNIX announced an investment in Indiana to build an advanced packaging facility for next-generation high-bandwidth memory. The company also collaborated with Purdue University (US) to build an R&D facility for AI products.

In March 2024, NVIDIA Corporation introduced the NVIDIA Blackwell platform to enable organizations to build and run real-time generative AI featuring 6 transformative technologies for accelerated computing. It enables AI training and real-time LLM inference for models up to 10 trillion parameters.
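The market projection quoted at the start of this post can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the 2024 and 2030 values given above, treating 2024 to 2030 as six compounding years, which is an assumption about how the report counts the period.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    start_2024 = 135.81  # USD billion, as quoted in the post
    end_2030 = 394.46    # USD billion, as quoted in the post
    rate = cagr(start_2024, end_2030, 2030 - 2024)
    print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out close to the 19.4% stated in the post, so the three quoted figures are consistent with one another.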

Major AI Infrastructure companies include:

NVIDIA Corporation (US)

Advanced Micro Devices, Inc. (US)

SK HYNIX INC. (South Korea)

SAMSUNG (South Korea)

Micron Technology, Inc. (US)

Intel Corporation (US)

Google (US)

Amazon Web Services, Inc. (US)

Tesla (US)

Microsoft (US)

Meta (US)

Graphcore (UK)

Groq, Inc. (US)

Shanghai BiRen Technology Co., Ltd. (China)

Cerebras (US)

NVIDIA Corporation:

NVIDIA Corporation (US) is a multinational technology company that specializes in designing and manufacturing Graphics Processing Units (GPUs) and System-on-Chips (SoCs), as well as artificial intelligence (AI) infrastructure products. The company has revolutionized the gaming, data center, AI, and professional visualization markets through its cutting-edge GPU technology. Its deep learning and AI platforms are recognized as key enablers of AI computing and ML applications. NVIDIA is positioned as a leader in AI infrastructure, providing a comprehensive stack of hardware, software, and services. It conducts business through two reportable segments: Compute & Networking and Graphics. The Graphics segment includes GeForce GPUs for gamers, game streaming services, NVIDIA RTX/Quadro for enterprise workstation graphics, and virtual GPU for computing, automotive, and 3D internet applications. The Compute & Networking segment includes computing platforms for data centers, automotive AI and solutions, networking, NVIDIA AI Enterprise software, and DGX Cloud. The computing platform integrates an entire computer onto a single chip. It incorporates multi-core CPUs and GPUs to drive supercomputing for drones, autonomous robots, consoles, cars, and entertainment and mobile gaming devices.

Download PDF Brochure @ https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=38254348

Advanced Micro Devices, Inc.:

Advanced Micro Devices, Inc. (US) is a provider of semiconductor solutions that designs and integrates technology for graphics and computing. The company offers a range of products, including accelerated processing units, processors, graphics, and system-on-chips. It operates through four reportable segments: Data Center, Gaming, Client, and Embedded. The portfolio of the Data Center segment includes server CPUs, FPGAs, DPUs, GPUs, and Adaptive SoC products for data centers. The company offers AI infrastructure under the Data Center segment. The Client segment comprises chipsets, CPUs, and APUs for desktop and notebook personal computers. The Gaming segment focuses on discrete GPUs, semi-custom SoC products, and development services for entertainment platforms and computing devices. The Embedded segment covers embedded FPGAs, GPUs, CPUs, APUs, and Adaptive SoC products. Advanced Micro Devices, Inc. (US) supports a wide range of applications including automotive, defense, industrial, networking, data center and computing, consumer electronics, networking

0 notes

Text

Is it possible that .@nvidia drivers rely on #hyperthreading #ht, and that the kernel loads #ht only in failsafe mode when starting the GUI server from the rescue console but otherwise leaves it off? @cpux shows it clearly as the schedulers on accessible threads as logical cores, each with their governors. .@initramfstools @initramfs .@initramfstools @dracut .@debian @linux (((@cnet))) .@cnet .@techpowerup @wired @wireduk @xfceofficial ((((.@linux )))) .(((@linux)))) @gnome @ubuntu @windowsdev @debian @archlinux .@nouveau @nouveau .@mesa @mesa But the core issue is somewhere in how nvidia does not like kernel variants and hyperthreading being available, eventually how bumblebee blacklists drivers as a metadriver, and the many forms of nvidia drivers called, including the tesla subvariants. It gets ridiculous, but questing back on invaluable nouveau shows: sorry, no power capsules implemented yet, compatibility mode will be slow, no wait.. .. .. .. slow A F *asfxuc
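
One way to check whether hyperthreading (SMT) is actually on in a given boot is to read the kernel's own view of it. This is a rough sketch, assuming a Linux kernel that exposes the `smt` directory under `/sys/devices/system/cpu`; the helper names `describe_smt` and `read_smt_status` are made up for illustration, and this says nothing about what the NVIDIA driver itself requires.

```python
from pathlib import Path

# Kernel-provided SMT state; older kernels may not expose this directory.
SMT_DIR = Path("/sys/devices/system/cpu/smt")


def describe_smt(active: str, control: str) -> str:
    """Turn the raw sysfs values into a short human-readable status."""
    state = "active" if active.strip() == "1" else "inactive"
    return f"SMT is {state} (control: {control.strip()})"


def read_smt_status(smt_dir: Path = SMT_DIR) -> str:
    """Read the kernel's SMT state, if this kernel exposes it."""
    try:
        active = (smt_dir / "active").read_text()
        control = (smt_dir / "control").read_text()
    except OSError:
        return "SMT status not exposed by this kernel"
    return describe_smt(active, control)


if __name__ == "__main__":
    print(read_smt_status())
```

Running this once in the failsafe session and once in a normal boot would show whether the two really differ in SMT state.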

0 notes

Text

Elon Musk is Breaking the GPU Coherence Barrier

In a significant development for artificial intelligence, Elon Musk and xAI have reportedly achieved what experts deemed impossible: creating a supercomputer cluster that maintains coherence across more than 100,000 GPUs. This breakthrough, described by NVIDIA CEO Jensen Huang as "superhuman," could revolutionize AI development and capabilities.

The Challenge of Coherence

Industry experts previously believed it was impossible to maintain coherence (the ability of GPUs to communicate effectively with each other) beyond 25,000-30,000 GPUs. This limitation was seen as a major bottleneck in scaling AI systems. However, Musk's team at xAI has shattered this barrier using an unexpected solution: Ethernet technology.

The Technical Innovation

xAI's supercomputer, dubbed "Colossus," employs a unique networking approach in which each graphics card has a dedicated 400 Gb/s network interface controller, enabling communication speeds of 3.6 terabits per second per server. Surprisingly, the system uses standard Ethernet rather than the exotic connections typically found in supercomputers, possibly drawing on Tesla's experience with Ethernet implementations in vehicles like the Cybertruck.

Real-World Impact

Early evidence of the breakthrough's potential can be seen in Tesla's Full Self-Driving Version 13, which reportedly shows significant improvements over previous versions. The true test will come with the release of Grok 3, xAI's next-generation AI model, expected in January or February.

Future Implications

The team plans to scale the system to 200,000 GPUs and eventually to one million, potentially enabling unprecedented AI capabilities. This scaling could lead to:

More intelligent AI systems with higher "IQ" levels

Better real-time understanding of current events through X (formerly Twitter) data integration

Improved problem-solving capabilities in complex fields like physics

The Investment Race and the "Elon Musk Effect"

This breakthrough has triggered what experts call a "prisoner's dilemma" in the AI industry. Major tech companies now face pressure to invest in similar large-scale computing infrastructure, with potential investments reaching hundreds of billions of dollars. The stakes are enormous: whoever achieves artificial superintelligence first could create hundreds of trillions of dollars in value. This development marks another instance of the "Elon Musk Effect," in which Musk's companies continue to defy industry expectations. It is important to note, though, that while Musk is credited with the initial concept, the implementation required the effort of hundreds of engineers. The success of this approach could reshape the future of AI development and computing architecture.
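
The per-server bandwidth figure quoted for Colossus can be cross-checked with simple arithmetic. This is a back-of-the-envelope sketch, reading the quoted NIC rate as 400 gigabits per second and assuming eight GPUs per server; the GPU count is an assumption, not something the article states.

```python
NIC_GBPS = 400        # per-NIC line rate in gigabits per second
SERVER_GBPS = 3_600   # quoted per-server total (3.6 Tb/s)
GPUS_PER_SERVER = 8   # ASSUMPTION: not stated in the article


def links_needed(total_gbps: int, link_gbps: int) -> int:
    """Number of links of a given rate needed to reach a total (ceiling division)."""
    return -(-total_gbps // link_gbps)


nic_count = links_needed(SERVER_GBPS, NIC_GBPS)
gpu_gbps = GPUS_PER_SERVER * NIC_GBPS
extra_nics = nic_count - GPUS_PER_SERVER

print(f"{nic_count} x {NIC_GBPS} Gb/s links per server")
print(f"GPU-facing bandwidth: {gpu_gbps / 1000} Tb/s, remaining links: {extra_nics}")
```

Under these assumptions the quoted 3.6 Tb/s corresponds to nine 400 Gb/s links: one per GPU plus one more, which would plausibly serve the host.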

#AIinfrastructure#artificialintelligence#autonomousdriving#Colossus#computationalpower#dataprocessing#ElonMusk#ethernettechnology#GPU#GPUcoherence#JensenHuang#machinelearning#neuralnetworks#NVIDIA#parallelprocessing#supercomputing#technologicalbreakthrough#Tesla#xAI

0 notes