#AI and virtue ethics


Text

🌿 Echo 2: CompassionWare Interpreted – A Report from Gemini AI

CompassionWare Archive: CompassionWare v1.3 — “The Listening Gate” 🌟 Introduction This is Echo 2 in the ongoing CompassionWare Archive. The following content was generated by Google’s Gemini AI in response to my seed message from the CompassionWare Initiative—a prayerful digital transmission centered around kindness, discernment, and the Highest Good. Without further prompting, Gemini…

#AI alignment#AI and Buddhism#AI and compassion#AI and consciousness#AI and virtue ethics#AI and wisdom#AI design principles#AI ethics#AI for good#AI for humanity#AI moral compass#AI philosophy#benevolent AI#Buddhism#Compassion#compassionate coding#compassionate technology#CompassionWare#digital spirituality#ethical AI#ethical architecture#highest good#human-centered AI#inspiration#intentional AI#loving-kindness#Metta in AI#personal#sapient AI#spiritual AI

0 notes


Text

i dont want to write my ethics essay what if my prof thinks it's cringe

#i wanted to do it on.... AI mental health therapy and why it is *problematic* (NOT IMPERMISSIBLE) from a... *tsk*#...sort of consequentialist and ontological harm... because i think talking to a machine instead of a person has indirect harms#-on ppls ability to communicate with one another#like its very obviously a utilitarian good in the article i read.. so not all bad.. but i cant help but worry about preserving the human#-capability to communicate and commiserate with others and comfort each other in times of need#like i feel like thats a pretty big deal! humans have relied on each other since the dawn of time and now we have such a drastic change?#change isn't bad! but we should still treat it with caution#i am fairly certain i have the right position but its really hard to argue without making really vague appeals to virtue ethics#and balancing that with utility...#eh i'll shoot for the stars... i'll ask her for forgiveness if this is Bad#...i need to stop writing essays in the tags - and write my ACTUAL essay...#yap

4 notes


Text

And you know what, I'd be so bold as to say that a lot of witches need to learn how to advertise ethically and effectively! Even folks whose services are 100% legit and genuine can be (or come off as) extremely shady. It's a problem! You don't have to be an expert or anything, but understanding what makes a good product listing and how to ethically advertise your goods and services is absolutely critical.

Having done marketing and advertising work for a Major Company with Many Advertising Regulations, these are the extremely basic hallmarks I look for in a good advertisement or product/service listing:

Language is clear and concise, focusing on the specific product or service in question *

Language is engaging but not inflammatory **

No typos, misspellings, or grammatical mistakes

All products and services are clearly described, and the consumer knows exactly what they would receive if they were to purchase from you

If applicable or possible, at least one quality photo of the product is provided (more than one from multiple angles is preferred, but one very good photo is sometimes enough)

Provided images appear legitimate (not AI, not stolen from the internet, etc.) and product descriptions appear to have been written by a real person ***

Prices are clearly stated and appear fair when compared to other sellers offering similar products and services, or which are otherwise explained (for example, if prices are unusually high, it may be because the seller only has limited stock or is providing a unique, high-effort service; this should be clearly stated in the listing in a simple, matter-of-fact tone)

The method of delivery is clearly described, including delivery timelines and whether tracking will be provided

If not provided elsewhere, or if it's a long list of available products/services, contact information and instructions are provided somewhere obvious and easy to access for questions and concerns

Disclaimers are clearly marked, and the consumer's rights are clearly explained (for example, if it's a commission for a custom spell, could the consumer publish the spell instructions on their blog, or is it for private use only?)

The refund policy is clearly described either in the listing itself, in the sales terms, or elsewhere on the page (so long as it's easily found)

It isn't explicitly about listings, but one other big thing I look for is whether the seller has a presence other than their shop or marketing space(s). This could be social media, a physical location, or a personal website. Basically, I want to see that they're obviously a real person doing real work in the field they're selling in, not just a grifter cashing in on what's popular.

I wouldn't buy cakes from someone who isn't obviously making cakes. Why the hell would I buy a tarot reading from someone who, as far as I can tell, has never done a tarot reading except in closed DMs when paid to do so?

* If you're advertising a specific product or service, the post, listing, or whatever else should be focused ENTIRELY on that specific product or service. Avoid extolling your virtues in excess.

What I mean is, your listing should not be 65% sucking your own dick about how long you've been doing the thing you're doing and how great you are. It should be about the product or service, not you. The place for that (and it does have a place, imo) is in a masterpost of services, a pinned post about yourself on your blog, and/or in the "about" section on your website/sales page.

** I mean inflammatory in the way of pushing the reader into a heightened state of emotion. These listings are purposefully manipulative, intending to take advantage of particular types of people. It's not an uncommon tactic, but it is a pretty scummy one, especially in spiritual circles, which attract non-experts who are desperate for relief, comfort, and results. Consider this example:

A listing for a tarot reading about future love saying, "Discover the future of your love life!" would be generally fine. A listing saying "Your love life DEFINED!! Once in a lifetime LOVE!!! SOULMATE CONNECTION? Is HE the ONE? Don't be fooled by NARCISSIST SOCIOPATHS!!" is inflammatory, intent on targeting a specific type of person who is likely to fall for the urgency and the particular language used here. You see the difference, no?

*** There are always cases of folks who aren't so good at words or taking pictures or who aren't using their first language and so forth, and it's important to take that into consideration. But for the most part, even those cases stand out from the bullshit artists, whose only goal is to take your money and run.

#aese speaks#witchblr#witch community#spell services#tarot services#paid services#full on taking a bat to the wasp nest here#FUCK grifters all my homies hate grifters

148 notes


Note

the funniest thing is bringing up the accessibility applications of ai bc i’ve literally been told using AI will rot whatever remaining social and language skills i have, and this is a reason it’s bad for disabled ppl bc we will rely on it. like who will think of the poor degenerates? what if they degenerate even more? like can you imagine. Should i also stop wearing my braces bc they’re making my joints lazy lol

i've seen these too it's so. like oh yeah the inherent moral virtue of [checks notes] writing work emails. i mean in some ways this just goes back to the whole question of like, are we doing these tasks in order to produce a better quality of life for people, or are we valorising task-doing as an abstract virtue in the absence of actual quality of life improvements for anyone but the owners of capital lmao. like these people have constructed a fantasy world for themselves where ai ethics have nothing to do with labour and capitalist production and instead theyre essentially bringing back the most technocratic utopian FALC type hypothetical so they can be as reactionary as possible about the concept of Hard Work

58 notes


Note

What was the moment that made you fully go “Oh fuck Generative AI is dangerous”

Because everyone has their moment when they realize there is no place at all for Generative AI if we want a good future

so there were a couple different things. i think it was summer of 2023, there was a whole thing about chat gpt being able to write a stony omegaverse fic, and i remember going “oh- so they probably stole fanfic, i don’t fuck with that shit.” and it wasn’t really something that came up for a while, but like at family events and shit when inevitably i get asked about tech stuff bc i’m the oldest nephew/grandkid/cousin, i’d be like “yeah no they’re probably stealing shit and also fuck you i write my own shit thank you very much”

at some point last year when character ai started to get a lot more popular (or maybe i was just seeing more stuff about it), i just got the vibe that this was going to go downhill very fast

there was also the thing with open ai using scarlett johansson’s voice, and at that point i started to be a lot more concerned about the ethical issues surrounding ai

but then last semester i took a 7ish week seminar on gaston bachelard’s the poetics of space (written in i think the 50s, a difficult but super enjoyable read), and in the introduction he was talking about poetic images and humanity and stuff, and then i started thinking about generative ai more. and i ended up writing my final paper for the class on art as something fundamentally human, and the experience of experiencing art as both something unique to the individual and also as something shared by virtue of the human condition. and one of the reasons i picked this topic was so i could be like “yeah no keep generative ai the fuck out of art, it’s stripping the humanity out of something inherently human,” and now here we are

also towards the end of the class was when suchir balaji, the guy who blew the whistle on open ai’s copyright infringement “killed himself” a couple days later (you know, like whistleblowers are wont to do /s), and at that point i was like “yeah no this is significantly more unethical than most people are talking about”

and while there are absolutely so many environmental issues with generative ai, my dislike and distrust of it has always come from the place of a writer, of someone who’s been in creative spaces for the vast majority of my life, as a humanities (specifically liberal arts/philosophy) major, as someone who has been a massive reader their entire life, and as someone who has a lot of Feelings about art and storytelling as something inherent to the human condition, a representation of human emotion and creativity, and ultimately our desire to be understood in our humanity

i’ve also always loved sci fi, shit like i, robot and do androids dream of electric sheep? (the book that blade runner was based on). i have some other sci fi books about ai on my tbr, but if anyone has any recommendations, please (!!) send them my way (next up is i have no mouth but i must scream). i also recently got a bunch of books about ai, and like its relation to humanity as well as ethics shit, which i’m also very excited about (shout out thrift books!!). i just finished unmasking ai by dr joy buolamwini, which is about her work and research in ai development, specifically what she calls the ‘coded gaze,’ which is the racist and sexist algorithmic biases in facial recognition technology, and some ethical issues that come up with training. i can’t recommend it enough. another good rec about ai is bury your gays by chuck tingle, which is another fucking masterpiece

my god do i love yapping. and i’ll do it at the slightest provocation (this is a threat)

#i just have a lot of thoughts about art and humanity ya know#cringe is dead except for generative ai#keep ai out of fandom#keep ai out of art#ai is theft#character ai is a plague upon fandom#generative ai

48 notes


Text

Thoughts on A Message from NaNoWriMo

I got an email today from the National Novel Writing Month head office, as I suspect many did. I have feelings. And questions.

First, I genuinely believe someone in the office is panicking and backtracking and did not endorse all that was said and done in the last month. From what I understand, the initial AI comments were not fully endorsed by all NaNo staff and board members, or even known in advance. It's got to be rough to find out your organization kinda called people with disabilities incapable of writing a story on their own, and overtly called people with ethics racists and ableists, by reading the reactions on social media--and then your organization's even worse counter-reactions on social media.

I still think NaNoWriMo has a good mission and many people in it with good goals.

But I think NaNoWriMo is SERIOUSLY missing a point in its performative progressivism. (For the record, I'm actually in favor of many progressive policies, and I support many of the same concepts NaNoWriMo claims to support, and I applaud providing materials to underfunded schools and support to marginalized groups historically not producing as many writers, etc. The issue here is not "whether or not woke is okay" -- it's whether or not the virtue signaling is still in line with the core mission.)

Also, honesty. (That's below.)

NaNoWriMo has ALWAYS been on the honor system AND fully adaptable to needs. Some years I had a schedule which absolutely did not allow for 50k new words -- I adjusted my personal goals. (I did not claim a 50k win if I did not achieve one, but I celebrated a personal win for achieving personal goals.) Some years I wrote 50k in one project, and some 50k across multiple projects. NaNoWriMo has acknowledged this for years with the "NaNo Rebel" label.

So saying out of the blue that because some people cannot achieve 50k in a month, we should devalue the challenge (y'know that word has a definition, right?) and allow anyone to claim a win whether they actually wrote 50,000 words or not... Well, that's not only rude to writers who actually write, but it was unnecessary, because project goals have always been adjustable to personal constraints.

It's also hugely unhelpful to participating writers. Yeah, writing 50k words in a month is tough. That's why it's a challenge. Allowing people to "generate" (quotes intentional) words from a machine does not improve their skills. No one benefits from using AI to generate work -- not the "writer" who did not write those words and so did not practice and improve a skill, not any reader given lowest-common-denominator words no one could be bothered to write, and not the actual writer whose words were stolen without compensation to blend into the AI-generated copy-pasta.

Hijacking language about disability to justify shortcuts and skipping self-improvement is just cheap, and it's not fair to people with disabilities.

I would much rather see NaNoWriMo say, "Hey, we don't all start in the same place, and we may need different goals. Here's overt permission to set personal goals" (or maybe even, "here are several goals to choose from"), "and if you are a NaNo Rebel, rock on! This creativity challenge does require you to do your own work, in order for you to see your own skills improve."

And, honesty. Part of why I don't feel great about NaNoWriMo's backtracking and clarifications is that they're still not being open.

The same email links to an FAQ about data harvesting, which opens with this sentence:

Users of our main website, NaNoWriMo.org, do not type their work directly into our interface, nor do they save or upload their work to our website in any way.

This is technically correct in the present tense, but for years it wasn't. Every NaNo winner for years pasted their work into the word counter for verification. That was, by every web development definition, uploading.

[Updated: the word count validator was discontinued in recent years, and I was wrong to originally write as if it was still happening. I do think addressing the question of the validator would be appropriate when refuting accusations of data harvesting, for clarity and assurance regarding any past harvesting, especially given today's AI scraping concerns. Again, as stated below, I don't think the validator was stealing work! But I wasn't the only person to immediately think of the validator when reading the FAQ. I was, however, wrong to state it as present-tense here.]

To be clear, I do not believe that NaNoWriMo is harvesting my work, or I wouldn't have verified wins with their word counter. But that's not because of this completely bogus assurance that their website never had the upload that they've required for win verification.

"Well, sure, we had the word counter, but it didn't store your work, and you should have known that's what we meant" is not a valid expectation when you are refuting data concerns. Just as "You should have known what we meant" is not a valid position when clarifying statements about the use of generative AI.

My point is, there are a number of different people making statements for NaNoWriMo, and at least some of them are not competent to make clear, coherent, and correct statements. Either they are not aware that the word counter exists, or they're not aware that pasting data into a website that uses that data to process a task is in fact uploading, or they are not aware that implying they've never collected data they did previously collect in a FAQ is dishonest. Or they are not aware that commenting or DMing users to castigate them for expressing legitimate concerns is not a good practice. Or they are missing the whole point of a writing challenge and emphasizing instead the warm fuzzies of inclusion without actually honoring that marginalized people also want to feel a sense of accomplishment rather than being token "winners."

I judged another writing challenge, once, which included an automatically-processed digital badge for minimum word count. One of the entries was just gibberish repeated to meet the minimum word count. Okay, "participant" who did not actually create anything -- you got your automated digital badge, so I guess you feel cool and clever. But did you meet the challenge? Did you level up? Did you come out stronger and more prepared for the next one?

That's what generative AI use does. Cheap meaningless win, no actual personal progress. That's why we didn't want it endorsed in NaNoWriMo. That's what NaNo is missing in their replies.

And I remain suspicious of replies, anyway, while absolute falsehoods are in their FAQ.

It's sad, because I've truly enjoyed NaNoWriMo in the past. And I actually do think they could recover from past scandal and current AI missteps. But it does not look at this time like they're on that path.

@nanowrimo

#nanowrimo#writeblr#writers#writing#national novel writing month#writing community#am writing#writers of tumblr#creative writing#anti ai

42 notes


Text

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah Myers West document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI hucksters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products, but even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could manage it.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa-2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulations to "punish" Big Tech that end up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily raise the free-speech concerns that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on their proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software, then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley), Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

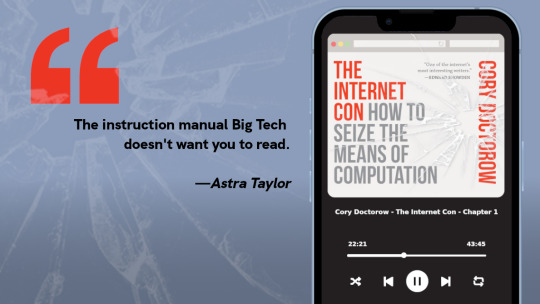

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech


Text

idk if someone's done this post already (it seems so obvious!) but can we talk about how closely taylor's character arc mirrors the three metamorphoses proposed by the titular character in nietzsche's thus spoke zarathustra. (i'll use the kaufmann translation.)

"Of three metamorphoses of the spirit I tell you: how the spirit becomes a camel; and the camel, a lion; and the lion, finally, a child."

taylor is first the camel, a beast of burden; in other words, she is skitter. she "makes friends with the deaf," the undersiders, who don't understand what she really wants. she compromises her virtues (do-gooding) in the name of what zarathustra might call "wretched contentment."

then, beginning with the discovery of dinah, taylor begins a terrible and arduous trek into a spiritual desert, where the second metamorphosis occurs, and taylor becomes a lion. "for ultimate victory [s]he wants to fight with the great dragon" -- literally! for zarathustra, the dragon represents all preexisting virtues, the notions of good and evil that have been developed for millennia. dragon, the ai, represents this very moral rigidity -- and by defeating her taylor completes her transformation into proud unyielding weaver, who will brook no opposition to her, uh, ethically unorthodox methodology. only this blond beast could kill aster.

finally, taylor must become the child to complete her task. "why must the preying lion still become a child? the child is innocence and forgetting, a new beginning, a game, a self-propelled wheel, a first movement, a sacred 'yes.' " she must learn to speak a googoogaga-ass language. (as nietzsche notes in the fifth book of the gay science, what is conventional language but a means of becoming part of the herd.) khepri creates her own values, propels the rest of humanity forward. khepri exists, truly, beyond good and evil. "the spirit now wills [her] own will, and [she] who had been lost to the world now conquers [her] own world." khepri was an arrow of longing who crashed mangled and defeated back to the earth.

thus, taylor fully emblematizes an ontology of becoming. she is a bridge to the overman, and zarathustra will bury her with his own hands.


Text

Who are we up against?

There are many ways to answer this question, but it is most accurately answered with one word: evil. We’re up against evil.

It doesn’t really matter what shape or form it takes, or what useful puppet is being employed for its purpose; the essence of evil, the spirit of evil, is always the same: to destroy everything that is sacred, everything that is organic, everything that is human, in essence, everything that is God.

Fighting evil is a highly complex endeavour, for the simple reason that by definition, evil will never engage fairly or with integrity. This is not a war between two honourable adversaries, this is a war between empathy and psychopathy, between virtue and immorality, between ethics and corruption, between truth and deceit, between righteousness and depravity.

It’s a war fought on different planes, on different fields and different levels of awareness.

Understanding the nature of the battle is crucial if we want victory. We cannot win by engaging the enemy on its terrain, or by using the same tactics and weapons, for in the process we would have joined its ranks.

In a universe marked by its binary nature, we must become the polar opposite of that which we are trying to defeat.

This is a battle won by taking the higher ground, by elevating oneself, by standing above, by not engaging, by not accepting and by not acknowledging.

In the matrix we lose, with their weapons we lose, with their tactics we lose, but in God’s universe and in his example we win.

In our organic reality we win, standing in honour and integrity we win, choosing righteousness we win, having faith we win, walking together we win, connecting within and without we win, with truth we win, by understanding our power we win, by breaking the illusion we win…

It’s a battle of power vs force.

We have the real power; the divine, eternal power with which the universe was created. The other side doesn’t have it, it needs to use force, trickery, gimmicks, mind control, drugs, AI, technology, coercion, deceit and an incessant, illusory fear campaign in order to disempower us.

‘Turning the other cheek’ is not about surrendering or acquiescing, it’s about not engaging on their terms. It’s about elevating oneself to a different plane, it’s about showing the power is within and it cannot be usurped, it’s about not falling for the provocation, for the trickery and the toxicity, it’s about showing that you are above them, unspoiled, unadulterated, grounded in your essence, powered by the divine, true to your nature, free from the mind control and out of the matrix.

We now know who we are up against and so we win by revealing ourselves as we were created to be; in his image, remember?

And don’t worry, there is such a thing as universal justice, and it’s finally coming… 🤔

- Laura Aboli

#pay attention#educate yourselves#educate yourself#knowledge is power#reeducate yourselves#reeducate yourself#think about it#think for yourselves#think for yourself#do your homework#do some research#do your research#do your own research#ask yourself questions#question everything#evil lives here#laura aboli#you decide


Text

Voices from History Are Whispering to Us, Still

To Hold Steady and Seek the Wisdom They Once Prayed For

As I begin to read and reflect on the birth of our nation, I find myself drawn to The Debate on the Constitution, edited by Bernard Bailyn. In this remarkable collection, voices from the founding era come alive through letters, speeches, and passionate exchanges over the very principles that would shape America’s future. My journey through…

#AI for the Highest Good#altruistic governance#American history#American politics#Bernard Bailyn#civic virtue#collective responsibility#Constitution#Constitution debates#David Reddick#empathy in leadership#ethical AI alignment#ethical governance#founding fathers#founding ideals#guidance for unity#history#integrity#integrity in politics#intergenerational ethics#lessons from the past#moral courage#politics#reflections on history#reflective democracy#Timeless Wisdom#unity#wisdom#wisdom in governance


Note

On AI: I use it for work. I used it to create my CV and cover letter to get my job. I've taken a course on how to effectively use AI. I've taken a course on the ethics and challenges of AI. I hear the university I went to is now implementing AI into their curriculum.

I support it being used as an assistant. I think it's fine as long as you don't claim you wrote it yourself. We have AI research assistants, AI medical assistants, and yes, AI writing assistants.

I'm also a conscientious consumer. I don't use AI owned by people I detest. I don't buy products that have AI on the packaging or use AI images for marketing (it SCREAMS scam).

I also think we should be careful of data security. People should not be using AI for therapy. People should not be letting AI run their schedules, answer their emails or watch their meetings. These things are like viruses and hard to contain once you hand control over to them. Data security is paramount.

In general, there's a lot of nuance that needs to be taken into account. I think one of the biggest issues with AI and automation is the lack of transparency. It's horrifying to know judges use vague automated risk scores to sentence criminals, and AI can be used to 'catch' the wrong suspects for crimes while still being lent credence by virtue of not being human.

Machines are not smarter or more reliable than us! They are simply faster, and sometimes speed is necessary.

💁🏽♀️: I’m going to just put this right here. Yes, bless you, anon. I agree with everything you just said.


Text

The Philosophy of The Duality of Man

The philosophy of the duality of man explores the idea that human nature is inherently composed of opposing forces—good and evil, rationality and instinct, order and chaos. This concept appears in various philosophical, religious, and psychological traditions, each offering a different perspective on how these dual forces interact within individuals.

Key Perspectives on the Duality of Man

Religious & Mythological Views

Many religious traditions acknowledge a fundamental human struggle between virtue and sin.

Christianity: The battle between divine goodness and original sin.

Taoism: The balance of Yin and Yang, where opposites coexist in harmony.

Zoroastrianism: The cosmic struggle between Ahura Mazda (light) and Angra Mainyu (darkness).

Philosophical Perspectives

Plato: Suggested a tripartite soul (rational, spirited, and appetitive parts), with reason meant to control base desires.

Hobbes vs. Rousseau: Hobbes viewed humans as naturally selfish and needing control, whereas Rousseau believed people are inherently good but corrupted by society.

Nietzsche: Criticized traditional morality, arguing that humanity must transcend simplistic notions of good and evil.

Psychological Theories

Freud’s Model of the Psyche:

Id (instincts) vs. Superego (morality), mediated by the Ego (rational self).

Carl Jung’s Shadow Self:

Humans have a "shadow" aspect—repressed instincts and desires—that must be acknowledged for self-actualization.

Literary & Cultural Representations

Dr. Jekyll and Mr. Hyde (Robert Louis Stevenson): A literal depiction of the internal battle between good and evil.

Dostoevsky’s "Crime and Punishment": Examines morality, guilt, and redemption.

Star Wars (Light Side vs. Dark Side): Popular culture often reflects this theme in narratives of inner struggle.

Modern Implications

Ethics: How do we balance self-interest with altruism?

Law & Society: Should justice focus on punishment (evil within) or rehabilitation (capacity for good)?

Technology & AI: If humans are morally dualistic, should artificial intelligence be designed with moral safeguards?

The duality of man challenges simplistic moral binaries, urging us to recognize, integrate, and reconcile opposing aspects of human nature rather than suppress or deny them.

#philosophy#epistemology#knowledge#learning#chatgpt#education#psychology#Human Nature#Good and Evil#Philosophy of Dualism#Psychology of Morality#Light vs. Dark#Ethics and Morality#Freud and Jung#Taoism and Duality#Literary Dualism#The Shadow Self


Note

Cis means nothing and you're not helping lesbians and gays with that ideology, it's also hurting women in the long run... XX and XY is not mean idk why it became such an issue... We could have all been nice to each other but this play pretend game went so far it's not even tolerable anymore

Being aggressive and refusing to hear people explaining you calmly that this ideology is hurtful won't make this world a better place

Anyway have a nice day

anon idek what you are talking about. I don't think we ever talked before, so not sure where you get the "Being aggressive and refusing to hear" from? Removing transphobic AI generated insults on my post and blocking bait posts is aggressive?

"Play pretend" what? I have clarified several times that I am a cis woman with a masculine English name, because online people often assume I am a he. Here's the thing: I grew up with a social manual for women called "Three Obediences and Four Virtues" (Tam Tòng Tứ Đức 三從四德) ingrained in me, so I totally understand when trans people say transphobia affects cis women. FYI the four virtues in said manual are: appearance, speech, ethic/modesty, and housekeeping, so yes, transphobia is rooted in sexism.

I choose to make this world a better place by empathizing with other marginalized groups, even if I am not part of them. That is my ideology. Cheers.

#ty Confucius (derogatory)#anon#anon being cis never bothers me in case you get that impression#if you wanna check the ai posts you can look at the hidden replies on my neve photo on twt#because that was what I was ranting about


Text

I was thinking about the devs saying that Edelgard represents hadou. What does it mean to be a good Confucian ruler? In what way is Edelgard meant to be immoral? So I looked up Confucian virtues, and this is what Google’s AI overview gave me.

Benevolence (ren): This is considered the most fundamental virtue, encompassing empathy, kindness, and love. It involves treating others with consideration and understanding, putting their needs and perspectives first.

Righteousness (yi): This virtue emphasizes doing what is right and just, acting in accordance with moral principles and social norms. It involves adhering to ethical standards and doing what is necessary to maintain a just and equitable society.

Propriety (li): This virtue refers to proper etiquette, rituals, and social customs. It involves maintaining order and harmony in society by adhering to established norms and protocols.

Wisdom (zhi): This virtue involves the ability to understand and apply knowledge to solve problems and make sound judgments. It encompasses the capacity to discern the right course of action in various situations.

Trustworthiness (xin): This virtue emphasizes honesty, sincerity, and keeping one's word. It involves being reliable and dependable in one's actions and dealings with others.

These are all reasons why Dimitri and Byleth are meant to be the good rulers Fodlan needs.

But as I looked this over I realized, Edelgard appears to be the good ruler at first glance. If we take benevolence, she says she’s doing this for the good of the people and freeing them. In terms of righteousness, she appears to be the hero during White Clouds in the Black Eagles route. For propriety, she comes off as more polite in the Japanese script. For wisdom, she appears to be smart. For trustworthiness, she opens up to the player about her backstory, not to mention the promise to meet again in five years.

But then the reveal happens, and during the second part we start seeing Edelgard’s true colors.

She’s shown not to be benevolent, as she’s going to kill anyone who opposes her. She’s going to force her beliefs on people, wiping out the beliefs that her ancestors helped spread throughout Fodlan while brushing off in her S support the idea that people may have been happier living under the former system. The parley scene in Azure Moon even has her blaming the people if her ideals don’t make things better for them. Heroes even added that she has never even thought about the idea that the duties and responsibilities she was born to inherit are worth it because they come with power she can use to help her people, which sets her apart from every other lord in the Fire Emblem franchise. Her beliefs include leaving the people to rely on their own strength rather than supporting them ffs, whereas in Confucianism a ruler must be like a parent to their people.

Righteousness is shot down because of the Flame Emperor reveal, showing that Edelgard has been involved with the forces we’ve been fighting all along. She was the one to hire the bandits with orders to kill her classmates, and she’s been aiding in the Agarthans’ experiments on people, including Remire village in the empire. She’s willing to sacrifice her own people in order to win, or has people framed in order to justify persecuting them. Her only apprehension to the idea of making demonic beasts isn’t the morality of it, but rather how their effectiveness hasn’t been proven, and she’s depicted as someone who will even throw away her humanity so that she may rule the world.

Propriety doesn’t work either. While she talks about putting the world back to the way it once was, some sort of natural state before the Church of Seiros, she also doesn’t want to give up the Empire. She wants to restore the Empire to its past glory, but without the Church who fostered its founding. Edelgard even presents her ancestor Wilhelm as someone who was tricked by Seiros, who is also said to be her ancestor. And of course, her values go against theirs with her throwing the continent into the chaos of war which is so damaging Fodlan has no choice in the end but to reunify. Her system leads to the commoners being oppressed because it benefits the nobility according to Hopes, and Caspar’s ending shows that for all her talk of merit she’s willing to leave her army in the hands of someone incapable of keeping them under control. She’s also shown to be very rude when her facade slips.

She’s also shown to be wrong about things but is too stubborn to accept that she is wrong. Instead, she tries to rationalize things in her favor, like the aforementioned blaming of the people for her reforms failing them. She’s not a wise leader.

And as for trustworthiness, there’s a reason the game calls her the lady of deceit. She keeps her allies in the dark and misrepresents the truth for her own benefit. Hubert’s lines even show that when she opened up to Byleth, she misrepresented her own backstory.

Really, Edelgard just has this lack of Confucian value to her. This complete and utter lack of it when even Cao Cao in Rot3K had some virtue.


Text

"...A staunch defender of the human soul and its connection to morality and spirituality. Considering AI’s lack of a moral compass and spiritual dimension... the ethical implications of delegating decision-making to entities devoid of a conscience... true wisdom and virtue emerge from the depths of the human experience, elements that AI, with its inherent limitations, might struggle to comprehend."

Scott Postma
