#AI for pipeline monitoring

Text

Smart Pipeline Monitoring - AI + IoT for Real-Time Fault Detection in Oil Refineries

Ensure operational safety in oil refineries with AI and IoT-powered pipeline monitoring. Learn how Theta Technolabs, a top industrial IoT development company in Dallas, delivers real-time fault detection, predictive maintenance, and AI-based pipeline diagnostics to minimize downtime and optimize refinery performance.

#AI for pipeline monitoring #Artificial Intelligence #Real-time fault detection systems #IoT development company #IoT Solutions #Smart monitoring systems #IoT-based asset monitoring solutions

0 notes

Text

Exciting developments in MLOps await in 2024! 🚀 DevOps-MLOps integration, AutoML acceleration, Edge Computing rise – shaping a dynamic future. Stay ahead of the curve! #MLOps #TechTrends2024 🤖✨

#MLOps #Machine Learning Operations #DevOps #AutoML #Automated Pipelines #Explainable AI #Edge Computing #Model Monitoring #Governance #Hybrid Cloud #Multi-Cloud Deployments #Security #Forecast #2024

0 notes

Text

Site Update - 2/9/2024

Hi Pillowfolks!

Today is the day. Post Queueing & Scheduling is finally here for everyone. Hooray! As always we will be monitoring closely for any unexpected bugs so please let us know if you run into any.

New Features/Improvements

✨ *NEW* Queue & Schedule - One of the most highly requested features has finally arrived at Pillowfort. Users can now effortlessly Queue or Schedule a post for a future time.

Queue helps keep your Pillowfort active by staggering posts over a period of hours or days. Just go to your Settings page to set your queue interval and time period.

How to add a post to your queue:

While creating a new post or editing a draft, click on the clock icon to the right of the “Publish” button and choose “Queue.” Then click “Queue” when you’re ready to submit the post.

Schedule assigns a post a specific publishing time in the future (based on the timezone you’ve selected in Account Settings).

How to schedule a post:

While creating a new post or editing a draft, click on the clock icon to the right of “Publish” and choose “Schedule.” Enter the time you wish to publish your post, click on “Submit” and then click “Schedule.”

How to review your queued & scheduled posts:

On the web, your Queue is available in the user sidebar located on the left side of the screen underneath “Posts.” (On mobile devices, click on the three line icon located on the upper left of your screen to access your user sidebar.)

Note: the “Queue” button will only display if you have one or more queued or scheduled posts.

A CAVEAT: It is not currently possible to queue or schedule posts to Communities. We do intend to add this feature in the future, but during development it was determined that enabling queueing & scheduling to Communities would require additional workflow and use case requirements that would extend development time when this project has already been delayed, and so it was decided to release queue & scheduling for blogs only at the present time. We will add the ability to queue & schedule to Communities soon after the Pillowfort PWA (our next major development project) is complete.

✨ End of Year Fundraiser Reward Badges: End of Year Fundraiser Reward Badges will begin to be distributed today. We'll update everyone when distribution is done.

✨ End of Year Fundraiser Reward Frames: As a special thank you to our community for helping keep Pillowfort online we have released two very special (and cozy!) Avatar Frames for all users.

As for the remaining End of Year Fundraiser Rewards - we will be asking the Community for feedback on the upcoming Light Mode soon.

✨ Valentine’s Day Avatar Frame: A new Valentine’s Day inspired frame is now available!

✨ Valentine’s Day Premium Frames: Alternate colors of the Valentine’s Day frame are available to Pillowfort Premium subscribers.

✨ Site FAQ Update - Our Site FAQ has received a revamp.

Terms of Service Update

As of today (February 9th), we are updating our Terms of Service to prohibit the following content:

Images created through the use of generative AI programs such as Stable Diffusion, Midjourney, and Dall-E.

An explanation of how this policy will be enforced and what exactly that means for you is available here: https://www.pillowfort.social/posts/4317673

Thank you again for your continued support. Other previously mentioned updates (such as the Pillowfort Premium Price increase, Multi Account Management, PWA, and more) will be coming down the pipeline soon. As always, stay tuned for updates.

Best, Staff

#pillowfort.social #pifo #pillowfort #queue #schedule #site update #new release #dev update #valentines #ai policy #tos #premium frames

136 notes

Text

AI & Cybersecurity: Navigating the Future Safely & Ethically

The integration of AI is revolutionizing cybersecurity, empowering organizations with unprecedented capabilities to detect, predict, and respond to threats at scale. From advanced anomaly detection to automated incident response, AI is an indispensable tool in strengthening our digital defenses.

We embrace the transformative power of AI. It offers immense potential to enhance threat hunting, streamline operations, and elevate our collective security posture. However, with great power comes great responsibility. The rapid adoption of AI also introduces new complexities and potential risks that demand a proactive, security-first approach.

How to Be Safe in the AI-Driven Cyber Landscape

Secure AI Development & Deployment: Treat AI models and their underlying data pipelines as critical assets. Implement robust security measures from the initial design phase through deployment, ensuring secure coding practices, vulnerability management, and secure configurations.

Data Privacy & Governance: AI systems are data-hungry. Establish strict data privacy protocols, anonymization techniques, and clear governance frameworks to protect sensitive information used for training and operation.

Continuous Monitoring & Auditing: AI models can evolve and introduce unforeseen vulnerabilities or biases. Implement continuous monitoring, regular audits, and validation processes to ensure AI systems are functioning as intended and not creating new attack surfaces.

Human Oversight & Intervention: AI is a powerful assistant, not a replacement. Maintain strong human oversight in decision-making processes, ensuring that human experts can review, validate, and intervene when necessary, especially in critical security operations.

Building an Ethical Foundation for AI in Cybersecurity

Beyond technical safeguards, an ethical framework is paramount. We believe in an AI Code of Conduct that prioritizes:

Fairness: Ensuring AI systems do not perpetuate or amplify biases that could unfairly target or disadvantage individuals or groups.

Transparency: Striving for explainable AI where possible, so that security decisions driven by AI can be understood and audited.

Accountability: Establishing clear lines of responsibility for the performance and impact of AI systems, ensuring human accountability for AI-driven outcomes.

Beneficial Use: Committing to using AI solely for defensive, protective purposes, and actively preventing its misuse for malicious activities.

The future of cybersecurity is intrinsically linked to AI. By approaching its integration with diligence, a commitment to security, and a strong ethical compass, we can harness its full potential while safeguarding our digital world.

What are your thoughts on building secure and ethical AI in cybersecurity? Share your perspective!

Read More: https://centurygroup.net/the-evolving-threat-of-ai-in-the-public-housing-sector-how-to-protect-your-organization/

#AI #Cybersecurity #AIethics #SecureAI #RiskManagement #Innovation #FutureOfTech #CenturySolutionsGroup

3 notes

Text

Your Guide to Choosing the Right AI Tools for Small Business Growth

In today’s fast-paced world, artificial intelligence (AI) has become a game-changer for businesses of all sizes, especially small businesses that need to stay competitive. AI tools are no longer limited to large enterprises; affordable and accessible solutions now empower small businesses to improve efficiency, enhance customer experience, and boost revenue.

Best AI tools for improving small business customer experience

Here’s a detailed review of the top 10 AI tools that are ideal for small organizations:

1. ChatGPT by OpenAI

Category: Customer Support & Content Creation

Why It’s Useful:

ChatGPT is an AI-powered conversational assistant designed to help with customer service, content creation, and more. Small businesses can use it to generate product descriptions, write blog posts, or respond to customer inquiries accurately.

Key Features:

24/7 customer service via AI chatbots.

Easy integration into websites and apps.

Cost-effective solutions for creating engaging content.

Use Case: A small e-commerce business uses ChatGPT to handle FAQs and automate customer queries, reducing the workload on human staff.

2. Jasper AI

Category: Content Marketing

Why It’s Useful:

Jasper AI specializes in generating high-quality marketing content. It’s ideal for creating blogs, social media posts, ad copy, and more, tailored to your brand’s voice.

Key Features:

AI-powered writing assistance with customizable tones.

Templates for emails, ads, and blogs.

Plagiarism detection and SEO optimization.

Use Case: A small business owner uses Jasper AI to create SEO-friendly blog content, improving their website's visibility and traffic.

3. HubSpot CRM

Category: Customer Relationship Management

Why It’s Useful:

HubSpot CRM uses AI to streamline customer relationship management, making it easier to track leads, manage sales pipelines, and improve customer retention.

Key Features:

Automated lead scoring and follow-ups.

AI insights for personalized customer interactions.

Seamless integration with marketing tools.

Use Case: A startup leverages HubSpot CRM to automate email follow-ups, increasing conversion rates without hiring extra staff.

4. Hootsuite Insights Powered by Brandwatch

Category: Social Media Management

Why It’s Useful:

Hootsuite integrates AI-powered social media insights to help small businesses track trends, manage engagement, and optimize their social media strategy.

Key Features:

Real-time social listening and analytics.

AI suggestions for content timing and hashtags.

Competitor analysis for a competitive edge.

Use Case: A local café uses Hootsuite to schedule posts, track customer feedback on social media, and analyze trending content ideas.

5. QuickBooks Online with AI Integration

Category: Accounting & Finance

Why It’s Useful:

QuickBooks Online automates bookkeeping tasks, expense tracking, and financial reporting using AI, saving small businesses time and reducing errors.

Key Features:

Automated categorization of expenses.

AI-driven financial insights and forecasting.

Invoice generation and payment reminders.

Use Case: A freelance graphic designer uses QuickBooks to simplify tax preparation and keep track of project-based income.

6. Canva Magic Studio

Category: Graphic Design

Why It’s Useful:

Canva Magic Studio is an AI-enhanced design tool that empowers non-designers to create stunning visuals for marketing, social media, and presentations.

Key Features:

AI-assisted design suggestions.

One-click background removal and resizing.

Access to templates, stock photos, and videos.

Use Case: A small bakery uses Canva Magic Studio to create eye-catching Instagram posts and promotional flyers.

7. Grammarly Business

Category: Writing Assistance

Why It’s Useful:

Grammarly Business ensures that all written communications, from emails to reports, are professional and error-free. Its AI improves clarity, tone, and engagement.

Key Features:

AI-powered grammar, spelling, and style corrections.

Customizable tone adjustments for branding.

Team collaboration tools.

Use Case: A marketing agency uses Grammarly Business to make sure client proposals and content are polished and compelling.

8. Zapier with AI Automation

Category: Workflow Automation

Why It’s Useful:

Zapier connects apps and automates workflows without coding. It uses AI to suggest smart integrations, saving time on repetitive tasks.

Key Features:

Automates tasks across 5,000+ apps.

AI-driven suggestions for efficient workflows.

No coding required for setup.

Use Case: A small IT consulting firm uses Zapier to automatically create tasks in their project management tool whenever a new lead is captured.

9. Surfer SEO

Category: Search Engine Optimization

Why It’s Useful:

Surfer SEO uses AI to help small businesses improve their website’s search engine rankings through content optimization and keyword strategies.

Key Features:

AI-driven content audit and optimization.

Keyword research and clustering.

Competitive analysis tools.

Use Case: An online store uses Surfer SEO to optimize product descriptions and blog posts, increasing organic traffic.

10. Loom

Category: Video Communication

Why It’s Useful:

Loom allows small businesses to create video messages quickly, which is useful for team collaboration, client updates, and customer service.

Key Features:

Screen recording with AI-powered editing.

Analytics for viewer engagement.

Cloud storage and easy sharing links.

Use Case: A digital marketing consultant uses Loom to provide video tutorials for clients, improving understanding and reducing in-person meetings.

Why Small Businesses Should Embrace AI Tools

Cost Savings: AI automates repetitive tasks, reducing the need for additional staff.

Efficiency: These tools streamline operations, saving time and increasing productivity.

Scalability: AI allows small businesses to manage growth without significant infrastructure changes.

Improved Customer Experience: From personalized recommendations to 24/7 support, AI tools help small businesses deliver superior customer service.

3 notes

Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes

Text

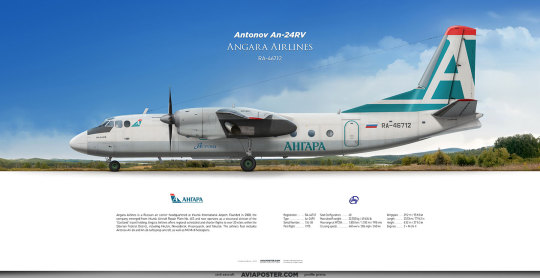

Antonov An-24RV Angara Airlines

Registration: RA-46712

Type: An-24RV

Engines: 2 × AI-24-II

Serial Number: 104-08

First flight: 1975

Angara Airlines is a Russian domestic air carrier in Eastern Siberia, operating regular passenger flights within the Irkutsk region, the Republic of Buryatia, and the Trans-Baikal Territory. Additionally, it offers charter services to various destinations. The airline was established in 2000, originating from the Irkutsk Aircraft Repair Plant No. 403, and is now a part of the Eastland travel holding.

Angara Airlines provides passenger services to all operational airports in the Irkutsk region. In partnership with the Disaster Medicine Service, it conducts flights for emergency medical assistance. The airline collaborates with the Irkutsk Aviation Forest Protection Base for patrol and monitoring missions, supports search and rescue operations, and delivers cargo, mail, and medicines to remote and hard-to-reach areas. Furthermore, it patrols power lines and oil pipelines, and undertakes construction and installation projects.

The airline’s fleet includes An-24, An-26 aircraft, and Mi-8 helicopters.

Poster for Aviators aviaposter.com

5 notes

Text

Edge Computing Market Disruption: 7 Startups to Watch

Edge Computing Market Valuation and Projections

The global edge computing market is undergoing a transformative evolution, with projections estimating an edge computing market size escalation from USD 15.96 billion in 2023 to approximately USD 216.76 billion by 2031, marking a compound annual growth rate (CAGR) of 33.6%. This unprecedented trajectory is being driven by rising demand for real-time data processing, the proliferation of Internet of Things (IoT) devices, and the deployment of 5G infrastructure worldwide.
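The growth figures above can be sanity-checked with the standard compound-growth formula. The sketch below is only an illustration: the function names are made up for this example, and the number of compounding periods is an assumption, since the post does not state its base year. Under that assumption, the quoted 33.6% rate lines up with nine compounding periods, while counting 2023 to 2031 as eight periods implies a higher rate.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or periods <= 0:
        raise ValueError("values and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Compound start_value forward at a fixed annual rate."""
    return start_value * (1 + rate) ** periods


if __name__ == "__main__":
    start, end = 15.96, 216.76  # USD billion, as quoted in the post
    for years in (8, 9):  # assumed period counts; the post does not specify
        print(f"{years} periods -> implied CAGR {implied_cagr(start, end, years):.1%}")
        print(f"{years} periods at 33.6% -> {project(start, 0.336, years):.2f}")
```

Running it for both period counts shows how sensitive the headline rate is to the choice of base year.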

Request Sample Report PDF (including TOC, Graphs & Tables): https://www.statsandresearch.com/request-sample/40540-global-edge-computing-market

Accelerated Demand for Real-Time Data Processing

Edge computing is revolutionizing the digital ecosystem by decentralizing data processing, shifting it from core data centers to the edge of the network—closer to the point of data generation. This architectural transformation is enabling instantaneous insights, reduced latency, and optimized bandwidth usage, which are critical in sectors requiring rapid decision-making.

Industries such as automotive, healthcare, telecommunications, and manufacturing are leading adopters of edge technologies to empower smart operations, autonomous functionality, and predictive systems.

Get up to 30%-40% Discount: https://www.statsandresearch.com/check-discount/40540-global-edge-computing-market

Edge Computing Market Segmentation Analysis:

By Component

Hardware

Edge computing hardware includes edge nodes, routers, micro data centers, servers, and networking gear. These devices are designed to endure harsh environmental conditions while delivering low-latency data processing capabilities. Companies are investing in high-performance edge servers equipped with AI accelerators to support intelligent workloads at the edge.

Software

Software solutions in edge environments include container orchestration tools, real-time analytics engines, AI inference models, and security frameworks. These tools enable seamless integration with cloud systems and support distributed data management, orchestration, and real-time insight generation.

Services

Edge services encompass consulting, deployment, integration, support, and maintenance. With businesses adopting hybrid cloud strategies, service providers are essential for ensuring compatibility, uptime, and scalability of edge deployments.

By Application

Industrial Internet of Things (IIoT)

Edge computing plays a vital role in smart manufacturing and Industry 4.0 initiatives. It facilitates predictive maintenance, asset tracking, process automation, and remote monitoring, ensuring enhanced efficiency and minimized downtime.

Smart Cities

Municipalities are leveraging edge computing to power traffic control systems, surveillance networks, waste management, and public safety infrastructure, enabling scalable and responsive urban development.

Content Delivery

In media and entertainment, edge solutions ensure low-latency content streaming, localized data caching, and real-time audience analytics, thereby optimizing user experience and reducing network congestion.

Remote Monitoring

Critical infrastructure sectors, including energy and utilities, employ edge computing for pipeline monitoring, grid analytics, and remote equipment diagnostics, allowing for proactive threat identification and response.

By Industry Vertical

Manufacturing

Edge solutions in manufacturing contribute to real-time production analytics, defect detection, and logistics automation. With AI-powered edge devices, factories are becoming increasingly autonomous and intelligent.

Healthcare

Hospitals and clinics implement edge computing to support real-time patient monitoring, diagnostic imaging processing, and point-of-care data analysis, enhancing treatment accuracy and responsiveness.

Transportation

The sector is utilizing edge technology in autonomous vehicle systems, smart fleet tracking, and intelligent traffic signals. These systems demand ultra-low latency data processing to function safely and efficiently.

Energy & Utilities

Edge computing enables smart grid optimization, renewable energy integration, and predictive fault detection, allowing utilities to manage resources with greater precision and sustainability.

Retail & Others

Retailers deploy edge devices for personalized marketing, real-time inventory management, and customer behavior analysis, enabling hyper-personalized and responsive shopping experiences.

Key Drivers Behind Edge Computing Market Growth:

1. IoT Proliferation and Data Deluge

With billions of connected devices transmitting real-time data, traditional cloud architectures cannot meet the bandwidth and latency demands. Edge computing solves this by processing data locally, eliminating unnecessary round trips to the cloud.

2. 5G Deployment

5G networks offer ultra-low latency and high throughput, both essential for edge applications. The synergy between 5G and edge computing is pivotal for real-time services like AR/VR, telemedicine, and autonomous navigation.

3. Hybrid and Multi-Cloud Strategies

Enterprises are embracing decentralized IT environments. Edge computing integrates with cloud-native applications to form hybrid infrastructures, offering agility, security, and location-specific computing.

4. Demand for Enhanced Security and Compliance

By localizing sensitive data processing, edge computing reduces exposure to cyber threats and supports data sovereignty in regulated industries like finance and healthcare.
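The local-processing idea from the first driver above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: `EdgeSummary`, `summarize_at_edge`, the sample readings, and the 45 to 55 threshold band are all hypothetical. The point is that an edge node can aggregate a batch of sensor readings and forward only a compact summary plus any out-of-range values, rather than sending every raw reading to the cloud.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass
class EdgeSummary:
    """Compact payload an edge node would forward instead of raw readings."""
    count: int
    mean_value: float
    anomalies: List[float]


def summarize_at_edge(readings: Iterable[float], low: float, high: float) -> EdgeSummary:
    """Aggregate readings locally and keep only out-of-range values verbatim."""
    values = list(readings)
    if not values:
        raise ValueError("at least one reading is required")
    anomalies = [v for v in values if v < low or v > high]
    return EdgeSummary(count=len(values), mean_value=mean(values), anomalies=anomalies)


if __name__ == "__main__":
    # Hypothetical sensor samples; 72.4 falls outside the assumed 45-55 band.
    batch = [50.1, 49.8, 50.3, 72.4, 50.0]
    print(summarize_at_edge(batch, low=45.0, high=55.0))
```

A fixed threshold is the simplest possible rule; a real deployment would likely use something more adaptive, but the bandwidth-saving pattern is the same.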

Competitive Landscape

Leading Players Shaping the Edge Computing Market

Amazon Web Services (AWS) – Offers AWS Wavelength and Snowball Edge for low-latency, high-performance edge computing.

Microsoft Azure – Delivers Azure Stack Edge and Azure Percept for AI-powered edge analytics.

Google Cloud – Provides Anthos and Edge TPU for scalable, intelligent edge infrastructure.

IBM – Offers edge-enabled Red Hat OpenShift and hybrid edge computing solutions for enterprise deployment.

NVIDIA – Powers edge AI workloads with Jetson and EGX platforms.

Cisco Systems – Delivers Fog Computing and edge networking solutions tailored to enterprise-grade environments.

Dell Technologies – Supplies ruggedized edge gateways and scalable edge data center modules.

Hewlett Packard Enterprise (HPE) – Delivers HPE Edgeline and GreenLake edge services for data-intensive use cases.

FogHorn Systems & EdgeConneX – Innovators specializing in industrial edge analytics and data center edge infrastructure respectively.

Edge Computing Market Regional Insights

North America

A mature digital infrastructure, coupled with high IoT adoption and strong cloud vendor presence, makes North America the dominant regional edge computing market.

Asia-Pacific

Driven by rapid urbanization, smart city initiatives, and industrial automation in China, India, and Japan, Asia-Pacific is projected to experience the fastest CAGR during the forecast period.

Europe

The region benefits from strong government mandates around data localization, Industry 4.0 initiatives, and investments in telecom infrastructure.

Middle East and Africa

Emerging adoption is evident in smart energy systems, oilfield monitoring, and urban digital transformation projects.

South America

Growth in agritech, mining automation, and public safety systems is propelling the edge market in Brazil, Chile, and Argentina.

Purchase Exclusive Report: https://www.statsandresearch.com/enquire-before/40540-global-edge-computing-market

Edge Computing Market Outlook and Conclusion

Edge computing is not just an enabler but a strategic imperative for digital transformation in modern enterprises. As we move deeper into an AI-driven and hyperconnected world, the integration of edge computing with 5G, IoT, AI, and cloud ecosystems will redefine data management paradigms.

Businesses investing in edge infrastructure today are setting the foundation for resilient, intelligent, and real-time operations that will determine industry leadership in the years ahead. The edge is not the future—it is the present frontier of competitive advantage.

Our Services:

On-Demand Reports: https://www.statsandresearch.com/on-demand-reports

Subscription Plans: https://www.statsandresearch.com/subscription-plans

Consulting Services: https://www.statsandresearch.com/consulting-services

ESG Solutions: https://www.statsandresearch.com/esg-solutions

Contact Us:

Stats and Research

Email: [email protected]

Phone: +91 8530698844

Website: https://www.statsandresearch.com

1 note

Text

How SRE Certification Prepares You for the Future of IT Operations and Cloud Reliability

Introduction

As IT systems become more complex, ensuring reliability, scalability, and automation has become a top priority for organizations. SRE certification equips IT professionals with the skills to manage modern infrastructure efficiently. A site reliability engineer certification validates expertise in maintaining high-performance, fault-tolerant IT operations.

Key Skills Gained Through SRE Certification

By earning an SRE Foundation Certification, professionals develop expertise in automation, incident management, monitoring, and cloud reliability. A site reliability engineering certification enhances proficiency in CI/CD pipelines, observability, and DevOps best practices.

Benefits of SRE Certification

Holding an SRE certification boosts career growth, improves earning potential, and ensures job security in cloud-driven environments. Certified professionals can optimize system performance, reduce downtime, and implement best-in-class operational strategies.

Job Opportunities After SRE Certification

With an increasing demand for cloud reliability, professionals with SRE certifications can pursue roles such as Site Reliability Engineer, DevOps Engineer, Cloud Operations Manager, and Infrastructure Architect.

Market Demand & Industry Growth

The adoption of cloud computing and DevOps methodologies has accelerated the need for site reliability engineering certification holders. Leading tech companies are actively hiring SRE professionals to enhance system resilience and minimize service disruptions.

Future Trends in IT Operations & Cloud Reliability

The future of SRE revolves around AI-driven monitoring, self-healing systems, and automation-first strategies. Professionals with an SRE Foundation Certification will play a critical role in shaping the next generation of IT operations.

Conclusion & Call-to-Action

Earning an SRE certification positions you as a leader in IT reliability and cloud operations. Take the next step in your career by enrolling in an SRE Foundation Certification program today.

For information visit: -

Contact : +41444851189

#SRECertification #SiteReliabilityEngineer #CloudReliability #ITOperations #SREFoundation #DevOps #InfrastructureAutomation

#sre certification#site reliability engineer certification#SRE Foundation Certification#site reliability engineering certification#sre certifications


Text

Leak Detection and Repair Market Size: Anticipated Growth Prospects Through 2032

The leak detection and repair market was valued at US$ 18.22 billion in 2022 and is projected to grow at a CAGR of 4.1% during the forecast period from 2022 to 2032, reaching an estimated US$ 27.23 billion by 2032.
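
The figures quoted above can be cross-checked with a compound-growth calculation. This is a minimal sketch, assuming the 4.1% rate compounds annually over the ten years from 2022 to 2032; the function name `project_value` is illustrative and does not come from the report.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


# Inputs taken from the paragraph above: US$ 18.22 billion in 2022, 4.1% CAGR.
value_2032 = project_value(start_value=18.22, cagr=0.041, years=10)
print(f"Projected 2032 market size: US$ {value_2032:.2f} billion")
```

The result lands within rounding distance of the US$ 27.23 billion estimate, so the three numbers in the summary are consistent with one another.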

Key Drivers of Market Demand for Leak Detection and Repair

The increasing adoption of machine learning and Artificial Intelligence (AI) is significantly enhancing leak detection and repair (LDAR) capabilities. Many gas emission monitoring companies are leveraging AI-driven algorithms to detect leaks more efficiently by analyzing satellite imagery and ground-level data. These advanced technologies help operators identify patterns and trends over time, enabling data-driven decision-making.

As a result, oil and gas companies are progressively replacing traditional LDAR systems with technologically advanced solutions. Additionally, venture capital firms are actively investing in companies specializing in AI-based LDAR technologies, further fueling market growth and innovation.

𝐅𝐨𝐫 𝐦𝐨𝐫𝐞 𝐢𝐧𝐬𝐢𝐠𝐡𝐭𝐬 𝐢𝐧𝐭𝐨 𝐭𝐡𝐞 𝐌𝐚𝐫𝐤𝐞𝐭, 𝐑𝐞𝐪𝐮𝐞𝐬𝐭 𝐚 𝐒𝐚𝐦𝐩𝐥𝐞 𝐨𝐟 𝐭𝐡𝐢𝐬 𝐑𝐞𝐩𝐨𝐫𝐭: https://www.factmr.com/connectus/sample?flag=S&rep_id=7094

Country-wise Analysis

The United States holds a dominant position in the leak detection and repair (LDAR) market, accounting for 54% of the total market share. This growth is largely driven by stringent government regulations, including mandatory leak detection systems for hazardous liquid pipelines as per U.S. pipeline leak detection regulations.

Additionally, strong government support for the oil and gas sector is fueling industrial expansion, increasing the demand for advanced LDAR solutions. Government subsidies further contribute to the market’s growth, reinforcing the need for efficient leak detection and repair systems across the industry.

Category-wise Insights

The handheld gas detectors segment holds the largest share of the global leak detection and repair (LDAR) market, accounting for over 36% of total revenue. These detectors are widely adopted in the oil and gas sector due to their fast and reliable detection capabilities, compact and lightweight design, and ease of use. They are utilized for detecting various gases, including methanol, carbon dioxide, ammonia, and methane. The rising production of natural gas, along with increasing trade in oil and gas products, continues to drive demand for handheld gas detectors.

The market is segmented into handheld gas detectors, UAV-based detectors, vehicle-based detectors, and manned aircraft detectors. Among these, the UAV-based detectors segment is expected to register the highest CAGR during the forecast period. The ability of UAV-based detectors to navigate hazardous areas enhances safety and operational efficiency, making them increasingly valuable for oil and gas companies. These detectors are used to identify gases such as benzene, ethanol, heptane, octane, and methane, further fueling their adoption in the industry.

Competitive Landscape

The leak detection and repair (LDAR) market is highly competitive, with key players focusing on product innovation, strategic mergers, and acquisitions to strengthen their market presence. Leading companies are expanding their production capacities and global reach to capitalize on opportunities in emerging economies.

In August 2021, Southern California Gas Company (SoCalGas) signed a $12 million agreement with Bridger Photonics, Inc. to enhance methane detection. SoCalGas is utilizing Bridger’s Gas Mapping LiDAR technology to identify, locate, and measure methane emissions across its distribution network.

In June 2021, Clean Air Engineering, Inc. entered into a channel partnership with Picarro. This collaboration integrates Picarro’s industry-leading ethylene oxide (EO) monitoring technology with CleanAir’s advanced services, providing industrial clients with cutting-edge EO measurement and monitoring solutions.

Such strategic initiatives continue to drive advancements in LDAR technologies, ensuring enhanced efficiency and regulatory compliance across industries.

Key Market Segments Covered in Leak Detection and Repair Industry Research

By Component :

Equipment

Services

By Product :

Handheld Gas Detectors

UAV-based Detectors

Vehicle-based Detectors

Manned Aircraft Detectors

By Technology :

Volatile Organic Compounds (VOC) Analyzer

Optical Gas Imaging (OGI)

Laser Absorption Spectroscopy

Ambient/Mobile Leak Monitoring

Acoustic Leak Detection

Audio-Visual-Olfactory Inspection

𝐂𝐨𝐧𝐭𝐚𝐜𝐭:

US Sales Office 11140 Rockville Pike Suite 400 Rockville, MD 20852 United States Tel: +1 (628) 251-1583, +353-1-4434-232 Email: [email protected]


Text

Revolutionize Efficiency with Top AI Productivity & Lead Generation Tools

In a rapidly changing world, artificial intelligence technologies contribute significantly to productivity and lead generation for businesses. These tools use AI-driven analytics to boost productivity and automate processes. Drawing on insights from TechLaugh, this blog covers the best AI tools available today, discussing their advantages and successes, answering common questions, and sharing the experiences of others.

AI Productivity Tools: Increasing ROI by Enhancing Operational Efficiency

AI productivity tools are meant to take over routine daily tasks, manage workflows, and increase efficiency. They leverage AI technologies such as machine learning and natural language processing to enhance business operations. Here are some leading AI productivity tools:

1. Notion AI

Overview: Notion AI is an extension of the Notion workspace that provides intelligent recommendations and automates many actions in the course of project management.

Case Study: A digital marketing agency adopted Notion AI to handle its project processes. Team productivity increased by 35 percent, and the time taken to complete projects dropped substantially.

Testimonial: Notion AI has made a significant impact on how we manage our projects, primarily because the software automates all our tedious procedures and provides intelligent prompts.

2. ClickUp

Overview: ClickUp offers a range of functionality, including task management and AI-assisted scheduling.

Case Study: A tech startup in the United States applied ClickUp's AI features to project management and communication. This led to a 40% increase in task completion rate and a 30% reduction in delayed projects.

Testimonial: "ClickUp's AI tools have increased our efficiency at work; we tackle more projects with less downtime, and the results are better."

3. Monday.com

Overview: Monday.com uses artificial intelligence to support project management, so that teams can plan, monitor, and manage their work effectively.

Case Study: A retail company adopted Monday.com's AI functions to improve control of its inventory and advertising. The organization saw a 25 percent improvement in process efficiency and a 15 percent uplift in marketing return on investment.

Testimonial: "I have been using Monday.com's AI tools to help manage and market our projects, and have recorded impressive results."

AI Lead Generation Tools: Improving Sales Performance

AI lead generation tools are software tools that help find potential leads, then qualify and nurture them until they become customers. They allow businesses to identify a large pool of high-value leads and target them in the most effective way. Here are some top AI lead generation tools:

1. HubSpot AI

Overview: HubSpot uses AI to enhance lead scoring and email follow-ups and to generate relevant recommendations based on customers' behavior.

Case Study: An e-commerce company implemented HubSpot's AI features to sharpen its lead nurturing process and further automate follow-ups. The team raised its lead conversion rate by 50 percent and grew its sales pipeline by 30 percent.

Testimonial: HubSpot's AI integrations have been instrumental in changing how we do lead generation, substantially enhancing our campaigns.

2. ZoomInfo

Overview: ZoomInfo's features include geographic information, contact data, and sales insights generated with artificial intelligence.

Case Study: An up-and-coming financial services firm used ZoomInfo's AI features to improve its lead generation and connect with prospective clients, increasing lead response by 45% and sales leads by 25%.

Testimonial: 'I am glad that, through the application of AI, ZoomInfo has made it easier for us to generate leads, especially potentially valuable ones.' - Emily R., VP of Sales

3. LinkedIn Sales Navigator

Overview: LinkedIn Sales Navigator uses AI to identify potential prospects from LinkedIn profiles and activity.

Case Study: A software company used LinkedIn Sales Navigator to optimize lead prospecting, which resulted in a 35% increase in sales-ready leads and a 20% increase in closed-won deals.

Testimonial: One of the most helpful features of LinkedIn Sales Navigator is its AI-driven recommendations. It has improved our lead generation compared with the previous year and increased our engagement rates.

Frequently Asked Questions (FAQs)

1. What are AI productivity tools? AI productivity tools apply artificial intelligence to different functional areas of a business, supporting processes and activities across the organization to make them more effective and efficient.

2. How do AI lead generation tools work? AI lead generation tools evaluate data to find possible leads, categorize and score them, and then contact them. They help businesses locate and communicate with high-quality potential buyers much more effectively.

3. Can these AI tools be integrated with existing software? Yes, most AI tools are designed to work with existing business applications such as CRMs, project management tools, email marketing tools, and other communication platforms, which makes implementation easier and more effective.

4. Are AI tools relevant for small businesses? Absolutely. AI tools come in different forms with varying capabilities, and many modern ones are designed for small and medium-sized enterprises. They level the playing field in lead generation and task automation.

5. What return on investment (and risk) can be expected from AI tools? The return from adopting AI tools is normally high, since the business is likely to see increased productivity, reduced costs, and higher revenue. The actual ROI depends heavily on which tools the organization chooses and how they are implemented, individually and together, in its business environment.


Text

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
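
To make the auto-scaling idea concrete, here is a minimal sketch of a proportional scaling rule: the instance count is scaled by the ratio of observed to target utilization and then clamped to a range. It is an illustration only and does not call any cloud provider's API; the function name, the integer-percent inputs, and the default bounds are all assumptions.

```python
def desired_instances(current: int, observed_pct: int, target_pct: int,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Scale the instance count in proportion to observed vs. target utilization.

    Utilization is passed as whole percentages so the arithmetic stays exact.
    """
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be positive")
    # Ceiling division: round up so the fleet is never under-provisioned.
    raw = -(-(current * observed_pct) // target_pct)
    return max(min_instances, min(max_instances, raw))


# Four instances running at 90% against a 60% target: scale out.
print(desired_instances(current=4, observed_pct=90, target_pct=60))
# Four instances running at 15% against a 60% target: scale in.
print(desired_instances(current=4, observed_pct=15, target_pct=60))
```

Managed auto-scaling features apply rules of this kind for you, usually with cooldown periods so the fleet does not oscillate.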

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
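
Managed tuning services automate a search loop of roughly the following shape. This is a minimal random-search sketch using only the standard library; `validation_loss` is a made-up stand-in for a real training run, and the search ranges are assumptions chosen for illustration.

```python
import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Toy stand-in for a training run; lowest near lr=0.01, batch_size=64."""
    return (learning_rate - 0.01) ** 2 * 1e4 + ((batch_size - 64) / 64) ** 2


def random_search(n_trials: int, seed: int = 0):
    """Sample hyperparameters at random and keep the best-scoring trial."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, -1),  # log-uniform sample
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(n_trials=50)
print(best_params, round(best_loss, 4))
```

Because each trial is independent, the loop parallelizes naturally across cloud instances, which is what the managed services exploit.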

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging. AWS CloudTrail complements these by recording API activity for auditing.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.
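
One common form of model compression is weight quantization. The sketch below shows the idea in plain Python, assuming a single shared scale factor and symmetric 8-bit integers; real frameworks ship their own quantization tooling, and the helper names here are illustrative only.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# The rounding error per weight is bounded by half of the scale factor.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Storing one byte per weight instead of four shrinks the model and can speed up inference, at the cost of the small rounding error computed above.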

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.


Text

What is Software Testing?

Software testing is the process of evaluating and verifying that a software product or application performs as intended. The benefits of robust testing include identifying and preventing bugs, improving performance, and ensuring the product meets user expectations and requirements.

Goals of Software Testing

The primary goals of software testing include:

Validation: Ensuring the software meets user requirements and expectations.

Verification: Confirming that the software functions correctly as per its design specifications.

Quality Assurance: Improving the reliability, performance, and security of the software.

The Evolution of Software Testing

Modern software testing emphasizes continuous testing, integrating testing processes throughout the software development lifecycle. This approach begins during the design phase, continues during development, and extends into production. Key philosophies in this domain include:

Shift-Left Testing: Incorporating testing earlier in the development process, closer to the design stage.

Shift-Right Testing: Leveraging real-world validation and feedback from end users in production environments.

To support faster delivery cycles, test automation has become essential, allowing repetitive and complex testing processes to be handled efficiently.

The Role of GenQE.ai in AI-Assisted Testing

GenQE.ai, a cutting-edge AI-powered testing tool, revolutionizes the software testing process by introducing intelligent automation and predictive insights. It enhances software quality assurance with features such as:

Automated Test Case Generation: GenQE.ai can create detailed and effective test cases based on application requirements, significantly reducing manual effort.

Predictive Defect Analysis: The AI analyzes historical and current data to predict areas with a high likelihood of defects, enabling focused testing.

Self-Adaptive Test Scripts: GenQE.ai dynamically updates test scripts to reflect changes in application design, ensuring test cases remain accurate.

Continuous Monitoring: It supports shift-left and shift-right testing by integrating seamlessly into CI/CD pipelines, offering real-time insights throughout development and production phases.

Types of Software Testing

There are various types of software testing, each serving a specific purpose:

Acceptance Testing: Verifies whether the entire system works as intended.

Code Review: Ensures adherence to coding standards and best practices.

Integration Testing: Confirms that different components or modules work together seamlessly.

Unit Testing: Validates individual software units (smallest testable components).

Functional Testing: Emulates business scenarios to test application functionalities.

Performance Testing: Evaluates software under different workloads (e.g., load testing).

Regression Testing: Ensures new features do not negatively impact existing functionality.

Security Testing: Identifies vulnerabilities and ensures system security.

Stress Testing: Determines the system's behavior under extreme conditions.

Usability Testing: Assesses how easily users can interact with the system.
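
As an illustration of the unit testing entry in the list above, here is a minimal sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical unit under test, invented for this example.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to a cent."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(999, 0), 999)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(2000, 150)


# Load and run the test case explicitly so the example works in any context.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test checks one behavior of one small unit, which is what keeps failures easy to localize. The same tests double as regression tests when they are rerun after every change.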

Optimizing the Testing Process

A test management plan is critical to prioritizing which tests yield the most value given available time and resources. Exploratory testing complements planned tests by identifying unpredictable scenarios that may lead to errors.

How GenQE.ai Enhances Testing Efficiency

By leveraging AI to optimize test coverage and streamline processes, GenQE.ai reduces testing cycles and improves defect detection rates. It empowers teams to focus on high-priority tasks and deliver higher-quality software in less time.

Incorporating tools like GenQE.ai into your testing strategy ensures that software testing evolves alongside the complexity and speed of modern application development.


Text

Chihuahua state officials and a notorious Mexican security contractor broke ground last summer on the Torre Centinela (Sentinel Tower), an ominous, 20-story high-rise in downtown Ciudad Juarez that will serve as the central node of a new AI-enhanced surveillance regime. With tentacles reaching into 13 Mexican cities and a data pipeline that will channel intelligence all the way to Austin, Texas, the monstrous project will be unlike anything seen before along the U.S.-Mexico border.

And that's saying a lot, considering the last 30-plus years of surging technology on the U.S. side of the border.

The Torre Centinela will stand in a former parking lot next to the city's famous bullring, a mere half-mile south of where migrants and asylum seekers have camped and protested at the Paso del Norte International Bridge leading to El Paso. But its reach goes much further: the Torre Centinela is just one piece of the Plataforma Centinela (Sentinel Platform), an aggressive new technology strategy developed by Chihuahua's Secretaria de Seguridad Pública Estatal (Secretary of State Public Security or SSPE) in collaboration with the company Seguritech.

With its sprawling infrastructure, the Plataforma Centinela will create an atmosphere of surveillance and data-streams blanketing the entire region. The plan calls for nearly every cutting-edge technology system marketed at law enforcement: 10,000 surveillance cameras, face recognition, automated license plate recognition, real-time crime analytics, a fleet of mobile surveillance vehicles, drone teams and counter-drone teams, and more.

If the project comes together as advertised in the Avengers-style trailer that SSPE released to influence public opinion, law enforcement personnel on site will be surrounded by wall-to-wall monitors (140 meters of screens per floor), while 2,000 officers in the field will be able to access live intelligence through handheld tablets.

Texas law enforcement will also have "eyes on this side of the border" via the Plataforma Centinela, Chihuahua Governor Maru Campos publicly stated last year. Texas Governor Greg Abbott signed a memorandum of understanding confirming the partnership.


Text

Azure Data Engineering Tools For Data Engineers

Azure is Microsoft's cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to an organization's specific requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple APIs and data models, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure Database for PostgreSQL is a fully managed open-source database service that lets teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training


Text

Web Development in 2025: The Rise of AI-Driven Innovation

Web development is undergoing a seismic shift driven by advances in artificial intelligence. From automated code generation to personalized user experiences, AI is no longer a future concept; it is an essential toolkit for modern developers and businesses seeking a competitive advantage. This article explains how AI-powered innovation is reshaping every stage of the web development life cycle, the benefits it brings, and the challenges teams have to navigate to exploit its full potential.

1. AI-Augmented Design & Prototyping

Intelligent wireframing: AI-driven tools can now interpret a natural-language brief and generate wireframes within minutes. Instead of sketching the layout by hand, designers describe their vision, and the AI produces a clickable prototype ready for review.

Automatic Style Guide: Machine learning algorithms analyze existing brand assets (logos, color palettes, typography) and automatically construct consistent style guides. This shortens the design phase to days and aligns each page with the corporate identity.

Predictive UX Test: AI-driven analytics platforms analyze user interactions to identify possible usability issues before development begins. By predicting pain points, teams can iteratively refine navigation flow and page layout.

2. Code generation and optimization

Natural Language Coding: In 2025, developers regularly use AI assistants to translate plain-English prompts into production-ready code snippets. Whether the task is a custom form validation or a complex animation sequence, AI speeds up coding by up to 50%.

Performance tuning: Machine learning models monitor real-world site performance and recommend optimizations, such as image compression levels, caching strategies, and lazy-loading thresholds, to ensure pages load in under two seconds.

3. Personalized user experience

Dynamic Content Generation: AI algorithms analyze user behavior, preferences, and demographics to generate personalized headlines, product recommendations, and even entire page layouts on the fly. This level of personalization greatly increases engagement and conversion rates.

Chatbots and Virtual Assistants: Conversational AI embedded directly in websites handles customer questions 24/7, books appointments, and collects feedback. With sentiment analysis, these bots adapt tone and language to match each visitor, delivering a human-like experience at scale.

Adaptive interface: Using real-time data, AI can adjust UI components, such as button size, contrast ratio, and content density, to individual requirements, including accessibility needs. This ensures inclusion without a separate development track.

4. Continuous integration and deployment

DevOps automation: AI-powered pipelines orchestrate tests, security scans, and deployments across many environments. Anomalies found during continuous integration trigger automatic rollbacks or remediation scripts, reducing downtime and manual intervention.

API Orchestration: As microservice architectures grow, AI platforms manage dependency graphs, automatically update service endpoints, and monitor health checks. This ensures that front-end applications always connect to optimal back-end resources.

Cloud Resource Optimization: Machine learning models predict traffic spikes and provision serverless functions or container instances just in time. This reduces cost while maintaining peak performance at scale.

5. Security and compliance

Intelligent Threat Detection: AI systems analyze millions of logs per second to identify unusual patterns, such as DDoS attacks or credential stuffing, often preventing breaches before they affect users.

Automatic Code Auditing: Security-focused AI tools scan new commits for vulnerabilities such as SQL injection or cross-site scripting (XSS). They flag issues in pull requests and even suggest remediated code, saving security teams time on manual reviews.

Regulatory Compliance Monitoring: For industries subject to GDPR, HIPAA, or other regulations, AI monitors data flows and configurations, automatically generates audit reports, and alerts teams to non-compliant changes.
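
To show the kind of weakness a code-auditing tool looks for, here is a minimal sketch of SQL injection and its usual fix, using Python's built-in `sqlite3` module with an in-memory database. The table, the rows, and the malicious input are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "viewer")])

# Attacker-controlled input that closes the string literal and adds a condition.
malicious = "nobody' OR '1'='1"

# Vulnerable pattern: user input concatenated straight into the SQL text.
unsafe_sql = "SELECT name FROM users WHERE name = '" + malicious + "'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safer pattern: a bound parameter is always treated as data, never as SQL.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (malicious,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The concatenated query returns every row in the table, while the parameterized query returns none, because no user is literally named `nobody' OR '1'='1`.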

6. Challenges and considerations

While AI provides transformational benefits, adopting it effectively requires careful planning:

Data privacy: Comprehensive user data collection fuels personalization engines. Organizations should apply strict data governance policies, anonymization protocols, and clear opt-in mechanisms.

Bias mitigation: AI models trained on skewed datasets can perpetuate bias in recommendations or code suggestions. Regular bias audits and diverse training data are essential safeguards.

Skill Development: Teams must be upskilled to work with AI tools, including prompt engineering, understanding model limitations, and validating AI output.

Ethical Use: Transparent AI use builds user trust. Websites should disclose AI-powered features (e.g., chatbots, personalization) and provide a human fallback option.

7. Looking forward

As we move towards the end of 2025, AI's role in web development will only increase. Future innovations may include:

Generative 3D web experiences: Beyond flat pages, AI could automate the creation of immersive 3D interfaces that load instantly in browsers.

Emotion-aware interfaces: Real-time emotion detection through a webcam could adapt content tone and visuals to fit the user's mood.

Self-healing websites: Advanced AI agents could autonomously fix broken links, patch security holes, and update outdated libraries without human intervention.

Conclusion

AI-driven innovation is redefining web development in 2025. From automating routine tasks to crafting hyper-personalized user journeys, artificial intelligence empowers teams to deliver faster, smarter, and more secure websites. By embracing AI responsibly, with attention to privacy, ethics, and continuous learning, development teams can unlock new levels of creativity and efficiency and put their businesses at the forefront of the digital frontier.
